Abstract

We study the robustness of machine learning approaches to adversarial perturbations, with a focus on supervised learning scenarios. We find that typical phase classifiers based on deep neural networks are extremely vulnerable to adversarial perturbations: adding a tiny amount of carefully crafted noise to legitimate examples causes the classifiers to make incorrect predictions at a notably high confidence level. Through the lens of activation maps, we find that some important underlying physical principles and symmetries are not adequately captured even by classifiers with near-perfect performance. This explains why adversarial perturbations that fool these classifiers exist. In addition, we find that after adversarial training the classifiers become more consistent with physical laws and consequently more robust to certain kinds of adversarial perturbations. Our results provide valuable guidance for future theoretical and experimental studies on applying machine learning techniques to condensed matter physics.

1 Introduction

Machine learning is currently revolutionizing many technological areas of modern society, ranging from image/speech recognition to content filtering on social networks and self-driving cars [1, 2]. Recently, its tools and techniques have been adopted to tackle intricate quantum many-body problems [3–14], where the exponential scaling of the Hilbert space dimension poses a notorious challenge. In particular, a number of supervised and unsupervised learning methods have proven effective at identifying phases and phase transitions in various systems [13–32]. Combining deep neural networks with prior knowledge about physical systems has enabled a more accurate characterization of different phases in complex materials. These methods are particularly effective at handling large datasets and extracting patterns that are challenging for traditional analytical methods. Moreover, machine learning has not only sped up phase identification but has also contributed to a deeper understanding of the mechanisms governing phase transitions [13, 16, 17, 20, 22, 23, 25]. Following these approaches, notable proof-of-principle experiments on different platforms [33–36], including electron spins in diamond nitrogen-vacancy (NV) centers [33], doped CuO2 [36], and cold atoms in optical lattices [34, 35], have subsequently been carried out, showing the potential advantages of machine learning approaches over traditional means.

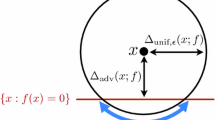

An important question of both theoretical and experimental relevance concerns the reliability of such machine-learning approaches to condensed matter physics: are these approaches robust to adversarial perturbations, which are deliberately crafted to fool the classifiers? In the realm of adversarial machine learning [37–43], it has been shown that machine learning models can be surprisingly vulnerable to adversarial perturbations if the dimension of the data is high enough: one can often synthesize small, imperceptible perturbations of the input data that cause the model to make highly confident but erroneous predictions. A prominent adversarial example that clearly manifests such vulnerability of classifiers based on deep neural networks was first observed by Szegedy et al. [44], where adding a small adversarial perturbation, although unnoticeable to human eyes, causes the classifier to miscategorize a panda as a gibbon with confidence larger than 99%. In this paper, we investigate the vulnerability of machine learning approaches in the context of classifying different phases of matter, with a focus on supervised learning based on deep neural networks (see Fig. 1 for an illustration).

Fig. 1. A schematic illustration of the vulnerability of machine learning phases of matter. For a clean image, such as the time-of-flight image obtained in a recent cold-atom experiment [34], a trained neural network (i.e., the classifier) can successfully predict its corresponding Chern number with nearly unit accuracy. However, if we add a tiny adversarial perturbation (which is imperceptible to human eyes) to the original image, the same classifier will misclassify the resulting image into an incorrect category with nearly unit confidence.

We find that typical phase classifiers based on deep neural networks are likewise extremely vulnerable to adversarial perturbations. This is demonstrated through two concrete examples, which cover different phases of matter (including both symmetry-breaking and symmetry-protected topological phases) and different strategies to obtain the adversarial perturbations. To better understand why these adversarial examples can fool the classifier in the physics context, we open up the neural network and use an idea borrowed from the machine learning community, called the activation map [45, 46], to study how the classifier infers different phases of matter. Further, we show that an adversarial training-based defense strategy improves both the classifiers’ ability to resist specific perturbations and how well they capture the underlying physical principles. Our results shed light on the fledgling field of machine-learning applications in condensed matter physics, and may provide an important paradigm for future theoretical and experimental studies as the field matures.

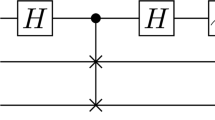

To begin with, we introduce the main ideas of adversarial machine learning, which involves the generation of and defense against adversarial examples [37–40]. Adversarial examples are instances with small, intentionally crafted perturbations that cause the classifier to make incorrect predictions. Under the supervised learning scenario, we have a training data set with labels \(\mathcal{D}_{n}=\{(\boldsymbol{x}^{(1)},y^{(1)}),\ldots ,( \boldsymbol{x}^{(n)},y^{(n)})\}\), a classifier \(h(\cdot ;\theta )\) and a loss function L to evaluate the classifier’s performance. The task of generating adversarial examples can be reduced to an optimization problem: searching for a bounded perturbation that maximizes the loss function (see Sec. I in the Additional file 1):

\[\max_{\delta \in \Delta} L\bigl(h(\boldsymbol{x}^{(i)}+\delta ;\theta ),y^{(i)}\bigr),\]

where \(\Delta \) denotes the set of allowed (bounded) perturbations.

In the machine learning literature, a number of methods have been proposed to solve the above optimization problem, along with corresponding defense strategies [41, 47–50]. We employ some of these methods and one general defense strategy, adversarial training, on two concrete examples: one concerns the conventional paramagnetic/ferromagnetic phases with a two-dimensional classical Ising model [14, 16, 17]; the other involves topological phases with experimental raw data generated by a solid-state quantum simulator [33].

2 Results

2.1 The ferromagnetic Ising model

The first example we consider involves the ferromagnetic Ising model defined on a 2D square lattice: \(H_{\text{Ising}} = -J\sum_{\langle ij\rangle}\sigma _{i}^{z}\sigma _{j}^{z}\), where the Ising variables \(\sigma _{i}^{z}=\pm 1\) and the coupling strength \(J\equiv 1\) is set to be the energy unit. This model features a well-understood phase transition at the critical temperature \(T_{c}=2/\ln (1+\sqrt{2})\approx 2.269\) [51], between a high-temperature paramagnetic phase and a low-temperature ferromagnetic phase. In the context of machine learning phases of matter, different pioneering approaches, including those based on supervised learning [16], unsupervised learning [14], or a confusion scheme combining both [17], have been introduced to classify the ferromagnetic/paramagnetic phases hosted by the above 2D Ising model. In particular, Carrasquilla and Melko first explored a supervised learning scheme based on a fully connected feed-forward neural network [16]. They used equilibrium spin configurations sampled from Monte Carlo simulations to train the network and demonstrated that after training it can correctly classify new samples with notably high accuracy. Moreover, by scanning the temperature, the network can also locate the transition temperature \(T_{c}\) and extrapolate the critical exponents that are crucial in the study of phase transitions.
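As a rough illustration of how such training configurations can be produced, the following sketch evaluates the exact critical temperature and draws spin configurations with single-spin-flip Metropolis updates. It is a minimal sketch under stated assumptions, not the sampling code used for the results in this paper: the function names, the lattice size, the number of sweeps, and the choice of the Metropolis algorithm are all illustrative.

```python
import math
import random

def critical_temperature(J=1.0):
    """Exact T_c of the 2D square-lattice Ising model (k_B = 1)."""
    return 2.0 * J / math.log(1.0 + math.sqrt(2.0))

def metropolis_sweep(spins, T, rng):
    """One sweep of single-spin-flip Metropolis updates (periodic boundaries, J = 1)."""
    L = len(spins)
    for _ in range(L * L):
        i, j = rng.randrange(L), rng.randrange(L)
        neighbors = (spins[(i + 1) % L][j] + spins[(i - 1) % L][j]
                     + spins[i][(j + 1) % L] + spins[i][(j - 1) % L])
        dE = 2.0 * spins[i][j] * neighbors  # energy change if spin (i, j) is flipped
        if dE <= 0 or rng.random() < math.exp(-dE / T):
            spins[i][j] = -spins[i][j]

def magnetization(spins):
    """M = |sum_i sigma_i| / N for a 2D list of +/-1 spins."""
    n = sum(len(row) for row in spins)
    return abs(sum(sum(row) for row in spins)) / n

def sample(L, T, sweeps, seed=0):
    """Return an L x L configuration after `sweeps` sweeps, starting from all spins up."""
    rng = random.Random(seed)
    spins = [[1] * L for _ in range(L)]
    for _ in range(sweeps):
        metropolis_sweep(spins, T, rng)
    return spins
```

For example, `sample(30, 2.0, 500)` would give one configuration on a 30 × 30 lattice below \(T_{c}\); in practice, many more sweeps and decorrelated samples would be needed near the transition.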

To study the robustness of these machine learning approaches to adversarial perturbations, we first train a powerful classifier whose performance is comparable to that of the ones shown in [16]. After training, the network can successfully classify data from the test set with an accuracy larger than 97%. Then we try to obtain adversarial perturbations to attack this seemingly ideal classifier. It is natural to consider a discrete attack in this scenario, since a spin configuration in the Ising model can only be changed discretely through spin flips. We apply the differential evolution algorithm (DEA) [52] to the Monte Carlo sampled spin configurations and obtain the corresponding adversarial perturbations. A concrete example found by DEA is illustrated in Fig. 2(a-b). Initially, the legitimate example shown in (a) is in the ferromagnetic phase, with magnetization \(M=|\sum_{i}^{N}\sigma _{i}|/N=0.791\), and the classifier classifies it into the correct phase with confidence 72%. DEA obtains an adversarial example shown in (b) by flipping only a single spin, which is located in the red circle. This new spin configuration has almost the same magnetization \(M=0.789\) and should still belong to the ferromagnetic phase, but the classifier misclassifies it into the paramagnetic phase with confidence 60%. If we regard \(H_{\text{Ising}}\) as a quantum Hamiltonian and allow the input data to be continuously modified, one can also consider a continuous attack scenario and obtain various adversarial examples, as shown in the Additional file 1.
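The single-spin-flip attack can be mimicked on a toy model. The sketch below is only illustrative: it replaces the DEA by an exhaustive search over all single flips (feasible for one flip on 900 sites), and it replaces the trained network by a hypothetical logistic classifier whose weights and bias are invented so that one site is heavily over-weighted. The configuration has 806 up spins and 94 down spins, chosen to match \(M=0.791\).

```python
import math

def magnetization(spins):
    """M = |sum_i sigma_i| / N for a flat list of +/-1 spins."""
    return abs(sum(spins)) / len(spins)

def ferro_confidence(spins, weights, bias):
    """Toy stand-in classifier: logistic output on a weighted spin sum."""
    z = sum(w * s for w, s in zip(weights, spins)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def single_flip_attack(spins, weights, bias):
    """Exhaustively list sites whose single flip pushes the confidence below 0.5."""
    hits = []
    for i in range(len(spins)):
        flipped = list(spins)
        flipped[i] = -flipped[i]
        if ferro_confidence(flipped, weights, bias) < 0.5:
            hits.append(i)
    return hits

def toy_example(n=900, n_down=94):
    """Hypothetical configuration and classifier parameters (not from the paper)."""
    spins = [1] * (n - n_down) + [-1] * n_down
    weights = [0.002] * n
    weights[0] = 0.5  # one artificially over-weighted site
    return spins, weights, -1.5
```

In this toy setting only the over-weighted site is a successful attack target, although flipping it changes the magnetization by just \(2/N\), the same amount as flipping any other aligned spin.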

Fig. 2. (a) A legitimate sample of the spin configuration in the ferromagnetic phase with \(M=|\sum_{i}^{N}\sigma _{i}|/N=0.791\). (b) An adversarial example obtained by the differential evolution algorithm (DEA), which differs from the original legitimate one only by one flipped spin (in the red circle). (c) The activation map (AM) of the original classifier. Spins at positions with darker colors contribute more to the confidence of being in the ferromagnetic phase. (d) The activation map of the classifier after adversarial training. The map becomes much flatter, and the variance of the activation values across positions becomes much smaller.

To understand why this adversarial example crafted with tiny changes leads to misclassification, we dissect the classifier by estimating each position’s importance to the final prediction, which we call the activation map of the classifier (see Sec. II in the Additional file 1). In Fig. 2(c) we depict the activation map for the ferromagnetic phase. It is evident that the classifier makes predictions mainly based on positions with large activation values (dark colors). The position where the adversarial spin flip happens in Fig. 2(b) has an activation value of 3.28, which is the fourth largest among all 900 positions. We then enumerate all positions with activation values larger than 2.6 and find that single spin flips at these positions, which change the contribution to the ferromagnetic phase from positive to negative, all lead to misclassification. The values of the activation map at different positions are found to be nonuniform, which contradicts the physical knowledge that each spin contributes equally to the order parameter M. This explains why the classifier is vulnerable to these particular spin flips. We remark that in the traditional machine learning realm of classifying daily-life images (such as images of cats and dogs), such an explanation is unattainable due to the absence of a sharply defined “order parameter”.
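The argument can be made concrete in a simplified linear picture. This is an assumption made purely for illustration (the actual definition of the activation map is given in the Additional file 1): suppose the ferromagnetic score \(s\) depends on the spins only through a weighted sum with hypothetical position-dependent weights \(a_{i}\). Flipping the spin at site \(k\) then changes the score by

\[s(\boldsymbol{\sigma}) = \sum_{i=1}^{N} a_{i}\sigma _{i}, \qquad \Delta s_{k} = s(\ldots ,-\sigma _{k},\ldots ) - s(\ldots ,\sigma _{k},\ldots ) = -2a_{k}\sigma _{k}.\]

If all weights are equal, \(a_{i}=a\), the score is \(a\sum_{i}\sigma _{i}\), whose magnitude is \(aNM\), and every single flip shifts it by the same small amount \(2a\). With nonuniform weights, flipping a spin at a site with large \(a_{k}\) shifts the score by \(2a_{k}\), which can be enough to cross the decision boundary even though the magnetization changes by only \(2/N\) (from 0.791 to 0.789 for \(N=900\)).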

2.2 Topological phases of matter

Unlike conventional phases (such as the paramagnetic/ferromagnetic phases discussed above), topological phases do not fit into the paradigm of symmetry breaking [53] and are described by nonlocal topological invariants [54, 55], rather than local order parameters. This makes topological phases harder to learn in general. Notably, a number of different approaches, based on either supervised [15, 23, 56] or unsupervised [22, 57–67] learning paradigms, have been proposed recently, and some of them have been demonstrated in proof-of-principle experiments [33, 34].

Obtaining adversarial examples is more challenging for topological phases, since the topological invariants capture the global properties of the systems and are insensitive to local perturbations. We consider a three-band model for 3D chiral topological insulators (TIs) [68, 69]: \(H_{\text{TI}} = \sum_{\boldsymbol{k}\in \text{BZ}}\Psi _{ \boldsymbol{k}}^{\dagger}H_{\boldsymbol{k}}\Psi _{\boldsymbol{k}}\), where \(\Psi _{\boldsymbol{k}}^{\dagger}=(c_{\boldsymbol{k},1}^{\dagger},c_{ \boldsymbol{k},0}^{\dagger},c_{\boldsymbol{k},-1}^{\dagger})\) with \(c_{\boldsymbol{k},\mu}^{\dagger}\) the fermion creation operator at momentum \(\boldsymbol{k}=(k_{x},k_{y},k_{z})\) in the orbital (spin) state \(\mu =-1,0,1\) and the summation is over the Brillouin zone (BZ); \(H_{\boldsymbol{k}}=\lambda _{1}\sin k_{x}+\lambda _{2}\sin k_{y}+ \lambda _{6}\sin k_{z}-\lambda _{7}(\cos k_{x}+\cos k_{y}+\cos k_{z}+h)\) denotes the single-particle Hamiltonian, with \(\lambda _{1,2,6,7}\) being four traceless Gell-Mann matrices [68]. The topological properties of each band can be characterized by a topological invariant \(\chi ^{(\eta )}\), where \(\eta = l,m,u\) denotes the lower, middle and upper bands, respectively. \(\chi ^{(\eta )}\) can be written as an integral in the 3D momentum space: \(\chi ^{(\eta )} = \frac{1}{4\pi ^{2}} \int _{\text{BZ}}\epsilon ^{\mu \nu \tau}A_{\mu}^{(\eta )} \partial _{k^{\nu}}A_{\tau}^{(\eta )}\,d^{3} \mathbf{k}\), where \(\epsilon ^{\mu \nu \tau}\) is the Levi-Civita symbol with \(\mu ,\nu ,\tau \in \{x,y,z\}\), and the Berry connection is \(A_{\mu}^{(\eta )}=\langle \psi _{\mathbf{k}}^{(\eta )}|\partial _{k^{\mu}}|\psi _{\mathbf{k}}^{(\eta )}\rangle \) with \(|\psi _{\mathbf{k}}^{(\eta )}\rangle \) denoting the Bloch state for the η band. The topological invariants for each band are related as \(\chi ^{(u)} = \chi ^{(l)} = \chi ^{(m)}/4\) for the three-band chiral topological insulator model. One finds \(\chi ^{(m)}=0\), \(1\), and \(-2\) for \(|h|>3\), \(1<|h|<3\), and \(|h|<1\), respectively. Recently, an experiment has been carried out to simulate \(H_{\text{TI}}\) with the electron spins in an NV center, and a demonstration of the supervised learning approach to topological phases has been reported [33]. Using the measured density matrices in the momentum space (which can be obtained through quantum state tomography) as input data, a trained 3D convolutional neural network (CNN) can correctly identify distinct topological phases with exceptionally high success probability, even when a large portion of the experimentally generated raw data is dropped out or inaccessible. Here, we show that this approach is highly vulnerable to adversarial perturbations.
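The phase boundaries at \(|h|=1\) and \(|h|=3\) can be checked directly from the band structure. With the standard Gell-Mann matrices, \(H_{\boldsymbol{k}}\) has eigenvalues \(0\) and \(\pm E_{\boldsymbol{k}}\) with \(E_{\boldsymbol{k}}^{2}=\sin ^{2}k_{x}+\sin ^{2}k_{y}+\sin ^{2}k_{z}+(\cos k_{x}+\cos k_{y}+\cos k_{z}+h)^{2}\), so the gap can close only where all three sines vanish. The sketch below is illustrative (the function names are hypothetical): it evaluates \(E_{\boldsymbol{k}}\) at the eight such momenta and assigns the phase label quoted in the text. It does not evaluate the momentum-space integral for \(\chi ^{(m)}\).

```python
import itertools
import math

def band_energy(k, h):
    """E_k >= 0 such that the three bands of H_k have energies -E_k, 0, +E_k."""
    kx, ky, kz = k
    mass = math.cos(kx) + math.cos(ky) + math.cos(kz) + h
    return math.sqrt(math.sin(kx) ** 2 + math.sin(ky) ** 2
                     + math.sin(kz) ** 2 + mass ** 2)

def min_gap_high_symmetry(h):
    """Smallest E_k over the 8 momenta with k_x, k_y, k_z in {0, pi}."""
    points = itertools.product((0.0, math.pi), repeat=3)
    return min(band_energy(k, h) for k in points)

def chi_middle(h, tol=1e-9):
    """Phase label chi^(m) as quoted in the text; undefined where the gap closes."""
    if min_gap_high_symmetry(h) < tol:
        raise ValueError("gap closes at this h; the invariant is not defined")
    a = abs(h)
    if a > 3:
        return 0
    return 1 if a > 1 else -2
```

The gap at these momenta vanishes exactly when \(h\in \{-3,-1,1,3\}\), which is consistent with the three regimes \(|h|>3\), \(1<|h|<3\) and \(|h|<1\) above.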

We first train a 3D CNN with numerically simulated data. The training curve is shown in Fig. 3(a), and the accuracy on validation data saturates at a high value (\(\approx 99\%\)). After the training, we fix the model parameters of the CNN and use the fast gradient sign method (FGSM) [49], projected gradient descent (PGD) [49], and the momentum iterative method (MIM) [50] to generate adversarial perturbations [70]. Figure 3(b) shows the classification confidence for a sample with \(\chi ^{(m)}=0\) as a function of the number of MIM iterations. \(P(\chi ^{(m)}=0)\) decreases rapidly as the iteration number increases and converges to a small value (\(\approx 2\%\)) after about eight iterations. Meanwhile, \(P(\chi ^{(m)}=1)\) increases rapidly and converges to a large value (\(\approx 98\%\)): the sample, originally from the category \(\chi ^{(m)}=0\), is misclassified as belonging to the category \(\chi ^{(m)}=1\) with a confidence level of \(\approx 98\%\). We note that a direct calculation of the topological invariant through integration confirms that \(\chi ^{(m)}=0\) for this adversarial example, indicating that the tiny perturbation would not affect the traditional methods.
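To illustrate how an iterative gradient-based attack of this kind proceeds, the following minimal sketch implements the momentum iterative update on a toy linear softmax classifier. The classifier, its parameters `W` and `b`, and the defaults for `steps` and `mu` are assumptions for illustration only; they stand in for the trained 3D CNN and are not the settings used for Fig. 3. Each step accumulates the \(\ell _{1}\)-normalized input gradient of the cross-entropy loss into a momentum term, moves along its sign, and projects back into the \(\ell _{\infty}\) ball of radius `eps`.

```python
import math

def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def predict(W, b, x):
    """Class probabilities of a linear softmax classifier (toy stand-in model)."""
    logits = [sum(w * xi for w, xi in zip(row, x)) + bc for row, bc in zip(W, b)]
    return softmax(logits)

def loss_grad(W, b, x, y):
    """Gradient of the cross-entropy loss with respect to the input x."""
    p = predict(W, b, x)
    err = [pc - (1.0 if c == y else 0.0) for c, pc in enumerate(p)]
    return [sum(err[c] * W[c][j] for c in range(len(W))) for j in range(len(x))]

def sign(v):
    return (v > 0) - (v < 0)

def mim_attack(W, b, x, y, eps, steps=10, mu=1.0):
    """Momentum iterative attack inside an L-infinity ball of radius eps."""
    alpha = eps / steps          # step size per iteration
    g = [0.0] * len(x)           # accumulated momentum
    adv = list(x)
    for _ in range(steps):
        grad = loss_grad(W, b, adv, y)
        norm = sum(abs(v) for v in grad) or 1.0
        g = [mu * gj + gr / norm for gj, gr in zip(g, grad)]
        adv = [a + alpha * sign(gj) for a, gj in zip(adv, g)]
        # project back so that |adv_j - x_j| <= eps for every component
        adv = [min(max(a, xj - eps), xj + eps) for a, xj in zip(adv, x)]
    return adv
```

Setting `steps=1` and `mu=0` reduces the loop to a single signed-gradient step, which is the FGSM update; with `mu=0` and several steps it becomes a basic projected iterative attack without random initialization.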

Fig. 3. (a) The average accuracy and loss of the 3D convolutional neural network trained to classify the topological phases. (b) We use the momentum iterative method to obtain the adversarial examples. This plot shows the classification probabilities as a function of the iteration number. After around two iterations, the network begins to misclassify the samples. (c-f) The activation maps (AM) of the sixth kernel in the first convolutional layer under different settings. (c) The average AM over all samples in the test set with \(\chi ^{(m)}=0\). (d) The average AM over all test samples with \(\chi ^{(m)}=1\). (e) The AM obtained by taking the legitimate sample as the input to the topological phase classifier. (f) The AM obtained by taking the adversarial example as the input.

It is more challenging to figure out why the topological phase classifier is so vulnerable to the adversarial perturbation. Since the convolutional kernels are repeatedly applied to different spatial windows, we have limited means to calculate the importance of each location, as we did for the Ising classifier. We therefore study the activation maps of all convolutional kernels in the first convolutional layer. We find that the sixth kernel has totally different activation patterns for topologically trivial and nontrivial phases, which acts as a strong indicator for the classifier to distinguish these phases (see Sec. II in the Additional file 1). Specifically, the activation patterns for the \(\chi ^{(m)}=0,1\) phases are illustrated in Fig. 3(c-d). We then compare the sixth kernel’s activation maps of the legitimate sample and the adversarial example. As shown in Fig. 3(e-f), the tiny adversarial perturbation makes the activation map much more correlated with the \(\chi ^{(m)}=1\) ones, which gives the classifier high confidence to assert that the adversarial example belongs to the \(\chi ^{(m)}=1\) phase. This explains why adversarial examples can deceive the classifier.

The above two examples clearly demonstrate the vulnerability of machine learning approaches in classifying different phases of matter. We mention that, although we have only focused on these two examples, the existence of adversarial perturbations is ubiquitous in learning various phases (independent of the learning model and input data type), and the methods used in the above examples can also be used to generate the desired adversarial perturbations for different phases. From a more theoretical computer science perspective, the vulnerability of the phase classifiers can be understood as a consequence of the strong “No Free Lunch” theorem: there exists an intrinsic tension between adversarial robustness and generalization accuracy [71–73]. The data distributions in the scenarios of learning phases of matter typically satisfy the \(W_{2}\) Talagrand transportation-cost inequality, and thus a phase classifier could be adversarially deceived with high probability [74].

2.3 Adversarial training

In adversarial machine learning, a number of countermeasures against adversarial examples have been developed [75, 76]. Adversarial training, whose essential idea is to first generate a substantial number of adversarial examples and then retrain the classifier with both original data and crafted data, is one of these countermeasures to make the classifiers more robust. Here, in order to study how it works for machine learning phases of matter, we apply adversarial training to the 3D CNN classifier used in classifying topological phases. Partial results are plotted in Fig. 4(a-b). While the classifier’s performance on legitimate examples remains intact, the test accuracy on adversarial examples increases significantly (at the end of the adversarial training, it increases from about 60% to 98%). This result indicates that the retrained classifier is robust against the adversarial examples generated by the corresponding attacks.
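The two-stage procedure described above (craft adversarial examples against the trained classifier, then retrain on the union of legitimate and crafted data) can be sketched on a toy problem. The example below is an illustrative assumption throughout: it uses a two-feature logistic regression and a single-step signed-gradient attack on synthetic data in place of the 3D CNN and the attacks used for Fig. 4, and it is not meant to reproduce the reported accuracies.

```python
import math
import random

def predict(w, b, x):
    """Probability of class 1 for a logistic-regression model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def train(data, epochs=200, lr=0.5):
    """Full-batch gradient descent on the cross-entropy loss."""
    d = len(data[0][0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        gw, gb = [0.0] * d, 0.0
        for x, y in data:
            err = predict(w, b, x) - y
            gw = [g + err * xi for g, xi in zip(gw, x)]
            gb += err
        w = [wi - lr * g / len(data) for wi, g in zip(w, gw)]
        b -= lr * gb / len(data)
    return w, b

def sign(v):
    return (v > 0) - (v < 0)

def fgsm(w, b, x, y, eps):
    """Single-step attack: x + eps * sign(dLoss/dx), with dLoss/dx = (p - y) * w."""
    err = predict(w, b, x) - y
    return [xi + eps * sign(err * wi) for xi, wi in zip(x, w)]

def accuracy(w, b, data):
    return sum((predict(w, b, x) > 0.5) == (y == 1) for x, y in data) / len(data)

def make_data(n, seed=0):
    """Synthetic two-class data: clusters around (+1, +1) and (-1, -1)."""
    rng = random.Random(seed)
    data = []
    for i in range(n):
        y = i % 2
        c = 1.0 if y == 1 else -1.0
        data.append(([c + rng.uniform(-0.3, 0.3), c + rng.uniform(-0.3, 0.3)], y))
    return data

def adversarial_training(data, eps):
    """Train, craft adversarial examples against that model, retrain on both sets."""
    w, b = train(data)
    crafted = [(fgsm(w, b, x, y, eps), y) for x, y in data]
    return train(data + crafted), crafted
```

As the Discussion notes, a classifier retrained in this way is only hardened against examples crafted on the original model; new adversarial examples would have to be regenerated against the retrained model to probe its remaining weaknesses.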

Fig. 4. The effectiveness of the adversarial training. (a) We first numerically generate adequate adversarial examples with the FGSM, and then retrain the CNN with both the legitimate and adversarial data. (b) Similar adversarial training for the defense against the PGD attack. (c) The original classifier’s output representing the confidence of being ferromagnetic. “Configurations” refers to different spin configurations with the same magnetization M. The corresponding temperature (listed in parentheses) of each M is calculated by Onsager’s formula [51]. The original classifier identifies the transition point at \(T=2.255\). (d) The refined classifier’s output after the adversarial training against the BIM attack. The identified transition point changes to \(T=2.268\), which is closer to \(T_{c}=2.269\).
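The conversion between magnetization and temperature mentioned in the caption can be sketched as follows, assuming that the formula meant is the exact spontaneous magnetization of the 2D square-lattice Ising model, \(M(T)=[1-\sinh ^{-4}(2J/T)]^{1/8}\) for \(T<T_{c}\) (with \(J=k_{B}=1\)). The function names are hypothetical.

```python
import math

T_C = 2.0 / math.log(1.0 + math.sqrt(2.0))  # exact critical temperature, J = k_B = 1

def spontaneous_magnetization(T):
    """M(T) = [1 - sinh(2/T)^(-4)]^(1/8) below T_c, and 0 at or above T_c."""
    if T >= T_C:
        return 0.0
    # max() guards against tiny negative values from rounding just below T_c
    return max(0.0, 1.0 - math.sinh(2.0 / T) ** -4) ** 0.125

def temperature_from_magnetization(M):
    """Closed-form inverse of M(T), valid for 0 < M < 1."""
    if not 0.0 < M < 1.0:
        raise ValueError("M must lie strictly between 0 and 1")
    return 2.0 / math.asinh((1.0 - M ** 8) ** -0.25)
```

Because \(M(T)\) rises very steeply just below \(T_{c}\), a wide range of magnetization values maps to temperatures close to the transition.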

We also study how adversarial training can make the classifiers grasp physical quantities more thoroughly. As shown in Fig. 2(d), the activation map of the Ising model classifier becomes much flatter after adversarial training (the standard deviation of the activation values across positions is reduced from 0.88 to 0.20). This indicates that after adversarial training the output of the classifier is more consistent with the physical order parameter of magnetization, to which each spin contributes equally, and hence more robust to adversarial perturbations. This is also reflected by the fact that after adversarial training the classifier can identify the phase transition point more accurately, as shown in Fig. 4(c-d). From the view of activation maps, adversarial training can also help the topological phase classifier make better inferences (see Sec. III in the Additional file 1).

3 Discussion and conclusion

We mention that the adversarial training method is useful only against adversarial examples that are crafted on the original classifier. The defense may not work for black-box attacks [77, 78], where an adversary generates malicious examples on a locally trained substitute model. To deal with such transferred black-box attacks, one may explore the recently proposed ensemble adversarial training method, which retrains the classifier with adversarial examples generated from multiple sources [79]. In the future, it would be interesting and desirable to find other defense strategies to strengthen the robustness of phase classifiers to adversarial perturbations. In addition, an experimental demonstration of adversarial learning of phases of matter together with defense strategies would also be an important step towards reliable practical applications of machine learning in physics.

In summary, we have studied the robustness of machine learning approaches in classifying different phases of matter. Our discussion is mainly focused on supervised learning based on deep neural networks, but its generalization to other types of learning models (such as unsupervised learning or reinforcement learning) and other types of phases is possible. Through two concrete examples, we have demonstrated explicitly that typical phase classifiers based on deep neural networks are extremely vulnerable to tiny adversarial perturbations. We have studied the explainability of adversarial examples and demonstrated that adversarial training significantly improves the robustness of phase classifiers by helping the model learn the underlying physical principles and symmetries. Our results reveal a novel vulnerability aspect for the growing field of machine learning phases of matter, which would benefit future studies across condensed matter physics, machine learning, and artificial intelligence.

Availability of data and materials

Data and code will be made available on reasonable request.

References

LeCun Y, Bengio Y, Hinton G (2015) Deep learning. Nature 521(7553):436–444. https://doi.org/10.1038/nature14539

Jordan M, Mitchell T (2015) Machine learning: trends, perspectives, and prospects. Science 349(6245):255–260. https://doi.org/10.1126/science.aaa8415

Das Sarma S, Deng D-L, Duan L-M (2019) Machine learning meets quantum physics. Phys Today 72:48. https://doi.org/10.1063/PT.3.4164

Carleo G, Troyer M (2017) Solving the quantum many-body problem with artificial neural networks. Science 355:602–606. https://doi.org/10.1126/science.aag2302

Torlai G, Mazzola G, Carrasquilla J, Troyer M, Melko R, Carleo G (2018) Neural-network quantum state tomography. Nat Phys 14(5):447–450. https://doi.org/10.1038/s41567-018-0048-5

Nomura Y, Darmawan AS, Yamaji Y, Imada M (2017) Restricted Boltzmann machine learning for solving strongly correlated quantum systems. Phys Rev B 96:205152. https://doi.org/10.1103/PhysRevB.96.205152

You Y-Z, Yang Z, Qi X-L (2018) Machine learning spatial geometry from entanglement features. Phys Rev B 97:045153. https://doi.org/10.1103/PhysRevB.97.045153

Deng D-L, Li X, Das Sarma S (2017) Machine learning topological states. Phys Rev B 96:195145. https://doi.org/10.1103/PhysRevB.96.195145

Deng D-L (2018) Machine learning detection of Bell nonlocality in quantum many-body systems. Phys Rev Lett 120:240402. https://doi.org/10.1103/PhysRevLett.120.240402

Deng D-L, Li X, Das Sarma S (2017) Quantum entanglement in neural network states. Phys Rev X 7:021021. https://doi.org/10.1103/PhysRevX.7.021021

Gao X, Duan L-M (2017) Efficient representation of quantum many-body states with deep neural networks. Nat Commun 8(1):662. https://doi.org/10.1038/s41467-017-00705-2

Melko RG, Carleo G, Carrasquilla J, Cirac JI (2019) Restricted Boltzmann machines in quantum physics. Nat Phys 15:887–892. https://doi.org/10.1038/s41567-019-0545-1

Ch’ng K, Carrasquilla J, Melko RG, Khatami E (2017) Machine learning phases of strongly correlated fermions. Phys Rev X 7:031038. https://doi.org/10.1103/PhysRevX.7.031038

Wang L (2016) Discovering phase transitions with unsupervised learning. Phys Rev B 94:195105. https://doi.org/10.1103/PhysRevB.94.195105

Zhang Y, Kim E-A (2017) Quantum loop topography for machine learning. Phys Rev Lett 118:216401. https://doi.org/10.1103/PhysRevLett.118.216401

Carrasquilla J, Melko RG (2017) Machine learning phases of matter. Nat Phys 13(5):431–434. https://doi.org/10.1038/nphys4035

Nieuwenburg EP, Liu Y-H, Huber SD (2017) Learning phase transitions by confusion. Nat Phys 13:435–439. https://doi.org/10.1038/nphys4037

Broecker P, Carrasquilla J, Melko RG, Trebst S (2017) Machine learning quantum phases of matter beyond the fermion sign problem. Sci Rep 7:8823. https://doi.org/10.1038/s41598-017-09098-0

Wetzel SJ (2017) Unsupervised learning of phase transitions: from principal component analysis to variational autoencoders. Phys Rev E 96:022140. https://doi.org/10.1103/PhysRevE.96.022140

Hu W, Singh RRP, Scalettar RT (2017) Discovering phases, phase transitions, and crossovers through unsupervised machine learning: a critical examination. Phys Rev E 95:062122. https://doi.org/10.1103/PhysRevE.95.062122

Hsu Y-T, Li X, Deng D-L, Das Sarma S (2018) Machine learning many-body localization: search for the elusive nonergodic metal. Phys Rev Lett 121:245701. https://doi.org/10.1103/PhysRevLett.121.245701

Rodriguez-Nieva JF, Scheurer MS (2019) Identifying topological order through unsupervised machine learning. Nat Phys 15:790–795. https://doi.org/10.1038/s41567-019-0512-x

Zhang P, Shen H, Zhai H (2018) Machine learning topological invariants with neural networks. Phys Rev Lett 120:066401. https://doi.org/10.1103/PhysRevLett.120.066401

Huembeli P, Dauphin A, Wittek P (2018) Identifying quantum phase transitions with adversarial neural networks. Phys Rev B 97:134109. https://doi.org/10.1103/PhysRevB.97.134109

Suchsland P, Wessel S (2018) Parameter diagnostics of phases and phase transition learning by neural networks. Phys Rev B 97:174435. https://doi.org/10.1103/PhysRevB.97.174435

Ohtsuki T, Ohtsuki T (2016) Deep learning the quantum phase transitions in random two-dimensional electron systems. J Phys Soc Jpn 85(12):123706. https://doi.org/10.7566/JPSJ.85.123706

Ohtsuki T, Ohtsuki T (2017) Deep learning the quantum phase transitions in random electron systems: applications to three dimensions. J Phys Soc Jpn 86(4):044708. https://doi.org/10.7566/JPSJ.86.044708

Ohtsuki T, Mano T (2020) Drawing phase diagrams of random quantum systems by deep learning the wave functions. J Phys Soc Jpn 89(2):022001. https://doi.org/10.7566/jpsj.89.022001

Greplova E, Valenti A, Boschung G, Schäfer F, Lörch N, Huber S (2020) Unsupervised identification of topological order using predictive models. New J Phys 22(4):045003. https://doi.org/10.1088/1367-2630/ab7771

Vargas-Hernández RA, Sous J, Berciu M, Krems RV (2018) Extrapolating quantum observables with machine learning: inferring multiple phase transitions from properties of a single phase. Phys Rev Lett 121:255702. https://doi.org/10.1103/PhysRevLett.121.255702

Yang Y, Sun Z-Z, Ran S-J, Su G (2021) Visualizing quantum phases and identifying quantum phase transitions by nonlinear dimensional reduction. Phys Rev B 103:075106. https://doi.org/10.1103/PhysRevB.103.075106

Canabarro A, Fanchini FF, Malvezzi AL, Pereira R, Chaves R (2019) Unveiling phase transitions with machine learning. Phys Rev B 100:045129. https://doi.org/10.1103/PhysRevB.100.045129

Lian W, Wang S-T, Lu S, Huang Y, Wang F, Yuan X, Zhang W, Ouyang X, Wang X, Huang X, He L, Chang X, Deng D-L, Duan L-M (2019) Machine learning topological phases with a solid-state quantum simulator. Phys Rev Lett 122:210503. https://doi.org/10.1103/PhysRevLett.122.210503

Rem BS, Käming N, Tarnowski M, Asteria L, Fläschner N, Becker C, Sengstock K, Weitenberg C (2019) Identifying quantum phase transitions using artificial neural networks on experimental data. Nat Phys 15(9):917–920. https://doi.org/10.1038/s41567-019-0554-0

Bohrdt A, Chiu CS, Ji G, Xu M, Greif D, Greiner M, Demler E, Grusdt F, Knap M (2019) Classifying snapshots of the doped Hubbard model with machine learning. Nat Phys 15(9):921–924. https://doi.org/10.1038/s41567-019-0565-x

Zhang Y, Mesaros A, Fujita K, Edkins S, Hamidian M, Ch’ng K, Eisaki H, Uchida S, Davis JS, Khatami E et al. (2019) Machine learning in electronic-quantum-matter imaging experiments. Nature 570(7762):484. https://doi.org/10.1038/s41586-019-1319-8

Biggio B, Roli F (2018) Wild patterns: ten years after the rise of adversarial machine learning. Pattern Recognit 84:317–331. https://doi.org/10.1016/j.patcog.2018.07.023

Huang L, Joseph AD, Nelson B, Rubinstein BI, Tygar JD (2011) Adversarial machine learning. In: Proceedings of the 4th ACM workshop on security and artificial intelligence, pp 43–58. ACM. https://dl.acm.org/citation.cfm?id=2046692

Vorobeychik Y, Kantarcioglu M (2018) Adversarial machine learning. Synth Lect Artif Intell Mach Learn 12(3):1–169. https://doi.org/10.2200/S00861ED1V01Y201806AIM039

Miller DJ, Xiang Z, Kesidis G (2020) Adversarial learning targeting deep neural network classification: a comprehensive review of defenses against attacks. Proc IEEE 108(3):402–433. https://doi.org/10.1109/JPROC.2020.2970615

Goodfellow I, Shlens J, Szegedy C (2015) Explaining and harnessing adversarial examples. In: International conference on learning representations. http://arxiv.org/abs/1412.6572

Liu N, Wittek P (2020) Vulnerability of quantum classification to adversarial perturbations. Phys Rev A 101:062331. https://doi.org/10.1103/PhysRevA.101.062331

Schmidt L, Santurkar S, Tsipras D, Talwar K, Madry A (2018) Adversarially robust generalization requires more data. In: Advances in Neural Information Processing Systems. https://proceedings.neurips.cc/paper/2018/file/f708f064faaf32a43e4d3c784e6af9ea-Paper.pdf

Szegedy C, Zaremba W, Sutskever I, Bruna J, Erhan D, Goodfellow IJ, Fergus R (2014) Intriguing properties of neural networks. In: International conference on learning representations. http://arxiv.org/abs/1312.6199

Zhou B, Khosla A, Lapedriza À, Oliva A, Torralba A (2015) Object detectors emerge in deep scene CNNs. In: International conference on learning representations. http://arxiv.org/abs/1412.6856

Zhou B, Khosla A, Lapedriza À, Oliva A, Torralba A (2016) Learning deep features for discriminative localization. In: IEEE conference on computer vision and pattern recognition, pp 2921–2929. https://doi.org/10.1109/CVPR.2016.319

Storn R, Price K (1997) Differential evolution–a simple and efficient heuristic for global optimization over continuous spaces. J Glob Optim 11(4):341–359. https://doi.org/10.1023/A:1008202821328

Das S, Suganthan PN (2010) Differential evolution: a survey of the state-of-the-art. IEEE Trans Evol Comput 15(1):4–31. https://doi.org/10.1109/TEVC.2010.2059031

Madry A, Makelov A, Schmidt L, Tsipras D, Vladu A (2018) Towards deep learning models resistant to adversarial attacks. In: International conference on learning representations. https://doi.org/10.48550/arXiv.1706.06083

Dong Y, Liao F, Pang T, Su H, Zhu J, Hu X, Li J (2018) Boosting adversarial attacks with momentum. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 9185–9193. http://openaccess.thecvf.com/content_cvpr_2018/html/Dong_Boosting_Adversarial_Attacks_CVPR_2018_paper.html

Onsager L (1944) Crystal statistics. I. A two-dimensional model with an order-disorder transition. Phys Rev 65:117–149. https://doi.org/10.1103/PhysRev.65.117

Su J, Vargas DV, Sakurai K (2019) One pixel attack for fooling deep neural networks. IEEE Trans Evol Comput. https://doi.org/10.1109/TEVC.2019.2890858

Lifshitz EM, Pitaevskii LP (2013) Statistical physics: theory of the condensed state, vol 9. Elsevier, London

Qi X-L, Zhang S-C (2011) Topological insulators and superconductors. Rev Mod Phys 83:1057–1110. https://doi.org/10.1103/RevModPhys.83.1057

Hasan MZ, Kane CL (2010) Colloquium: topological insulators. Rev Mod Phys 82:3045–3067. https://doi.org/10.1103/RevModPhys.82.3045

Zhang Y, Melko RG, Kim E-A (2017) Machine learning \(Z_{2}\) quantum spin liquids with quasiparticle statistics. Phys Rev B 96:245119. https://doi.org/10.1103/PhysRevB.96.245119

Yu L-W, Deng D-L (2021) Unsupervised learning of non-Hermitian topological phases. Phys Rev Lett 126(24). https://doi.org/10.1103/PhysRevLett.126.240402

Scheurer MS, Slager R-J (2020) Unsupervised machine learning and band topology. Phys Rev Lett 124(22). https://doi.org/10.1103/PhysRevLett.124.226401

Long Y, Ren J, Chen H (2020) Unsupervised manifold clustering of topological phononics. Phys Rev Lett 124(18). https://doi.org/10.1103/PhysRevLett.124.185501

Lidiak A, Gong Z (2020) Unsupervised machine learning of quantum phase transitions using diffusion maps. Phys Rev Lett 125(22). https://doi.org/10.1103/PhysRevLett.125.225701

Che Y, Gneiting C, Liu T, Nori F (2020) Topological quantum phase transitions retrieved through unsupervised machine learning. Phys Rev B 102(13). https://doi.org/10.1103/PhysRevB.102.134213

Fukushima K, Funai SS, Iida H (2019) Featuring the topology with the unsupervised machine learning. https://doi.org/10.48550/arXiv.1908.00281

Schäfer F, Lörch N (2019) Vector field divergence of predictive model output as indication of phase transitions. Phys Rev E 99:062107. https://doi.org/10.1103/PhysRevE.99.062107

Balabanov O, Granath M (2020) Unsupervised learning using topological data augmentation. Phys Rev Res 2(1). https://doi.org/10.1103/PhysRevResearch.2.013354

Alexandrou C, Athenodorou A, Chrysostomou C, Paul S (2020) The critical temperature of the 2D-Ising model through deep learning autoencoders. Eur Phys J B 93(12):226. https://doi.org/10.1140/epjb/e2020-100506-5

Greplová E, Valenti A, Boschung G, Schäfer F, Lörch N, Huber S (2020) Unsupervised identification of topological order using predictive models. New J Phys 22(4):045003. https://doi.org/10.1088/1367-2630/ab7771

Arnold J, Schäfer F, Žonda M, Lode AUJ (2021) Interpretable and unsupervised phase classification. Phys Rev Res 3(3). https://doi.org/10.1103/PhysRevResearch.3.033052

Neupert T, Santos L, Ryu S, Chamon C, Mudry C (2012) Noncommutative geometry for three-dimensional topological insulators. Phys Rev B 86:035125. https://doi.org/10.1103/PhysRevB.86.035125

Wang S-T, Deng D-L, Duan L-M (2014) Probe of three-dimensional chiral topological insulators in an optical lattice. Phys Rev Lett 113:033002. https://doi.org/10.1103/PhysRevLett.113.033002

Papernot N, Faghri F, Carlini N, Goodfellow I, Feinman R, Kurakin A, Xie C, Sharma Y, Brown T, Roy A et al. (2018) Technical report on the CleverHans v2.1.0 adversarial examples library. https://doi.org/10.48550/arXiv.1610.00768

Tsipras D, Santurkar S, Engstrom L, Turner A, Madry A (2019) Robustness may be at odds with accuracy. In: International conference on learning representations. https://openreview.net/forum?id=SyxAb30cY7

Fawzi A, Fawzi H, Fawzi O (2018) Adversarial vulnerability for any classifier. In: Advances in neural information processing systems, pp 1178–1187. http://papers.nips.cc/paper/7394-adversarial-vulnerability-for-any-classifier

Gilmer J, Metz L, Faghri F, Schoenholz SS, Raghu M, Wattenberg M, Goodfellow I (2018) Adversarial spheres. https://doi.org/10.48550/arXiv.1801.02774

Dohmatob E (2019) Generalized no free lunch theorem for adversarial robustness. In: Proceedings of the 36th international conference on machine learning, pp 1646–1654. https://proceedings.mlr.press/v97/dohmatob19a.html

Chakraborty A, Alam M, Dey V, Chattopadhyay A, Mukhopadhyay D (2018) Adversarial attacks and defences: a survey. https://doi.org/10.48550/arXiv.1810.00069

Yuan X, He P, Zhu Q, Li X (2019) Adversarial examples: attacks and defenses for deep learning. IEEE Trans Neural Netw Learn Syst. https://doi.org/10.1109/TNNLS.2018.2886017

Narodytska N, Kasiviswanathan S (2017) Simple black-box adversarial attacks on deep neural networks. In: 2017 IEEE conference on computer vision and pattern recognition workshops (CVPRW), pp 1310–1318. IEEE. https://doi.org/10.1109/CVPRW.2017.172

Papernot N, McDaniel P, Goodfellow I (2016) Transferability in machine learning: from phenomena to black-box attacks using adversarial samples. https://doi.org/10.48550/arXiv.1605.07277

Tramèr F, Kurakin A, Papernot N, Goodfellow I, Boneh D, McDaniel P (2018) Ensemble adversarial training: attacks and defenses. In: International conference on learning representations. https://doi.org/10.48550/arXiv.1705.07204

Acknowledgements

We thank Christopher Monroe, John Preskill, Nana Liu, Peter Wittek, Ignacio Cirac, Roger Colbeck, Yi Zhang, Peter Zoller, Xiaopeng Li, Mucong Ding, Rainer Blatt, Zico Kolter, Juan Carrasquilla, and Peter Shor for helpful discussions.

Funding

This work was supported by the start-up fund from Tsinghua University (Grant No. 53330300319).

Author information

Contributions

SJ and SL performed the theoretical analyses and numerical simulations under the supervision of D-LD. All authors contributed to the discussion of the results and the writing of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

44214_2023_43_MOESM1_ESM.pdf

See Supplemental Material for details on the different methods used to obtain adversarial examples, the derivation and explanation of activation maps, and further numerical results on both adversarial example generation and adversarial training. (PDF 2.0 MB)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jiang, S., Lu, S. & Deng, DL. Adversarial machine learning phases of matter. Quantum Front 2, 15 (2023). https://doi.org/10.1007/s44214-023-00043-z

DOI: https://doi.org/10.1007/s44214-023-00043-z