Abstract

Confidence interval for the difference of two proportions has been studied for decades. Many methods were developed to improve the approximation of the limiting distribution of test statistics, such as the profile likelihood method, the score method, and the Wilson method. For the Wilson interval developed by Beal (Biometrics 43:941, 1987), the approximation of the Z test statistic to the standard normal distribution may be further improved by utilizing the continuity correction, in the observation of anti-conservative intervals from the Wilson interval. We theoretically prove that the Wilson interval is nested in the continuity corrected Wilson interval under mild conditions. We compare the continuity corrected Wilson interval with the commonly used methods with regards to coverage probability, interval width, and mean squared error of coverage probability. The proposed interval has good performance in many configurations. An example from a Phase II cancer trial is used to illustrate the application of these methods.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In a randomized parallel study to compare two treatments, sample sizes for each group are often pre-specified. When the outcome is binary (e.g., response or not), risk difference (RD) is frequently used as the parameter of interest to evaluate the effectiveness of a new treatment as compared to the gold standard. In addition to p-value, confidence interval for RD is always required in the report [1, 2]. This classical and important statistical problem has been studied for decades [3, 4] and many methods were developed to construct confidence intervals for RD between two independent groups [5, 6].

Newcombe [7] evaluated 11 methods to construct confidence intervals for RD between two independent groups. The traditional Wald method utilizes the Z test statistic with a traditional variance estimate of RD. This approach is easy to implement, but its performance highly relies on whether the true distribution of the Z test statistic is close to the normal distribution, which may not be the case with small sample sizes or unbalanced sample sizes or extreme rates. To improve the performance of intervals for RD, other methods were developed, including the profile likelihood method based on the asymptotic limiting distribution of the log-likelihood ratio test [8]. Miettinen and Nurminen [8] developed a score interval by using the estimated rates under the null hypothesis. Beal [9] proposed a Wilson interval by solving the confidence interval from a quadratic equation. Newcombe [7] utilized the individual Wilson intervals from each group to construct a hybrid interval for RD.

When sample size is small, exact methods could be alternatively used to control for the coverage probability. Exact methods compute the confidence interval by using a test statistic to order the sample space which is the collection of all possible samples. For a study with sample sizes of \(n_1\) and \(n_2\) in the first group and the second group, the size of the sample space is \((n_1+1)(n_2+1)\). The estimated RD was used by Santner and Snell [10] to order the sample space. Later, Chan and Zhang [11] proposed using the standardized Z test with a constrained maximum likelihood estimation (MLE) of the variance to order the sample space, and found that their exact approach outperforms the exact interval based on the estimated RD. In addition to RD and the Z test statistic, other measures could be used, such as the asymptotic lower and upper limits. Recently, Wang proposed the exact limit for RD based on a stochastic ordering of the sample size to improve the exact approaches based on existing test statistics. That limit is computationally intensive, although it is associated with good statistical properties. Exact interval is often criticized for its conservativeness with regards to coverage probability and computational resources needed in calculation.

The rest of the article is organized as follows. In Sect. 2, we first give a brief introduction of the existing intervals for the difference of two independent proportions. We then propose the continuity corrected Wilson interval. In Sect. 3, we compare the performance of these intervals using extensive simulations, with regards to coverage probability, interval width, and mean squared error of coverage probabilities. A Phase II study from a cancer trial is used to illustrate the application of these intervals. Lastly, we provide some comments.

2 Methods

Many statistical methods have been developed for confidence intervals for RD between two independent groups with the parameter of interest: \(\delta =p_2-p_1,\) where \(p_i\) is the rate of the i-th group (i=1, 2). We first introduce five commonly used and recommended confidence interval methods to construct a \(100(1-\alpha )\%\) confidence interval, and then propose a new interval based on the Wilson approach with continuity correction for RD [12]. Suppose \(x_i\) is the observed number of responses in the i-th group (\(i=1, 2\)). Then, the response rate in the \(i-\)th group can be estimated as \({\hat{p}}_i=x_i/n_i\).

The Wald confidence interval is calculated from the estimated variance of \({\hat{\delta }}\) as:

where \({\hat{\delta }}={\hat{p}}_2 - {\hat{p}}_1\), and \(z_{\alpha /2}\) is the \((1-\alpha /2)\)th quantile of the standard normal distribution (e.g., \(z_{0.05/2}=1.96\) when \(\alpha =0.05\)). This Wald confidence interval can be computed by using the R function, prop.test.

Newcombe [7] developed a new confidence interval for RD based on the one-sample Wilson confidence intervals using data from each group. Suppose (\(l_i, u_i)\) is the Wilson confidence interval for \(p_i\) in the i-th group (i=1, 2). These individual Wilson intervals for each group can be computed by using the R function scoreci from the R package PropCIs. We refer to this as the Newcombe’s hybrid (NH) interval, which is calculated as:

The third interval is the one proposed by Miettinen and Nurminen [8] (referred to be as the MN interval). The MN method computes interval by inverting the score test as

where \(\lambda =(n_1+n_2)/(n_1+n_2-1)\) and the detailed formulas for \({\tilde{p}}_1\) and \({\tilde{p}}_2\) may be found in Miettinen and Nurminen [8] and Newcombe [7]. Under the null hypothesis with \(\delta =0\), \({\tilde{p}}_2\) is equal to \({\tilde{p}}_1\). The MN interval can be computed by using the R function scoreci from the R package ratesci.

Profile likelihood (PL) confidence interval is also commonly used in practice. It is based on the log-likelihood function as the collection of \(\delta\) satisfying

where \({\tilde{p}}_i\) is a function of \(\delta\), and \({\tilde{p}}_i\) can be obtained from the MN method. We name this interval as the PL interval. This is a one-parameter search problem for each limit, and the R function uniroot is used to calculate the lower limit and the upper limit of the PL interval. It should be noted that \({\hat{p}}_i\) and \(1-{\hat{p}}_i\) are in the denominator of the likelihood function. For that reason, the left part of the equation will not be defined when \({\hat{p}}_i=0\) or 1. To overcome that challenge, Newcombe [7] presented the detailed solutions for these special situations.

Beal [9] proposed a Wilson interval by solving the confidence interval for \(\delta\) from a quadratic equation (referred to be as the WI interval). The confidence interval for \(\delta\) is the collection of \(\delta\) satisfying:

The two parameters \(p_1\) and \(p_2\) can be re-parametrized with \(\delta\) and \(\tau\) (\(\tau =p_2+p_1\)), where \(p_1=(\tau -\delta )/2\) and \(p_2=(\tau +\delta )/2\). Here, \(\tau\) is treated as the nuisance parameter. Equation (1) can be rewritten as:

After some straightforward algebra from a quadratic equation (\(u \delta ^2 + v \delta + w=0\)), the 100(1-\(\alpha\))% WI interval for \(\delta\) is

where

and

where \({\hat{\tau }}={\hat{p}}_2 +{\hat{p}}_1\).

The performance of the WI interval depends on how close is the sampling distribution of the statistic on the left side of Equation (1) to the standard normal distribution. To improve that approximation, we propose adapting the continuity correction [13] to construct a new confidence interval for \(\delta\).

The Wilson confidence interval with continuity correction is defined as the collection of \(\delta\) values satisfying

where \(N=n_1+n_2\) is the total sample size in a study. Similar to the WI interval, the WIC interval is calculated as

where \(v_{1}=v+1/N\), \(w_{1}=w+1/(4 N^2)-({\hat{p}}_2-{\hat{p}}_1)/N\), \(v_{2}=v-1/N\), and \(w_{2}=w+1/(4 N^2)+({\hat{p}}_2-{\hat{p}}_1)/N\). We present the following theorem for the relationship between the WI interval and the WIC interval.

Theorem 2.1

The WI interval (L, U) in Eq (3) is nested in the proposed WIC interval \((L_c,U_c)\) in Eq (4). Specifically, when \(\hat{p}_1\) and \(\hat{p}_2\) do not take boundary values of 0 and 1 and \(z_{\alpha /2} \ge 1\), we have \(L_c< L< U < U_c\).

We start the proof of this theorem with the following Lemma.

Lemma 2.1

Under conditions of Theorem 2.1., we have \(L < {\hat{p}}_2 - {\hat{p}}_1 - \frac{1}{N}\) and \(U > {\hat{p}}_2 - {\hat{p}}_1 + \frac{1}{N}\).

Proof

The Wilson lower limit L and its upper limit U are two solutions of the equation \(u \delta ^2 + v \delta + w=0\), which is equivalent to

Let \(K_1 = z_{\alpha /2} \sqrt{({\hat{\tau }}-L)[2-({\hat{\tau }}-L)]/(4 n_1)+({\hat{\tau }}+L)[2-({\hat{\tau }}+L)]/(4 n_2)}\). By proof of contradiction, we are going to show that \(K_1 > \frac{1}{N}\).

If \(K_1 \le \frac{1}{N}\) can be assumed: \(|L-({\hat{p}}_2-{\hat{p}}_1)| = K_1 \le \frac{1}{N}\), which implies

It is easy to show the bounds of \(\frac{{\hat{\tau }} + L}{2}\) and \(\frac{{\hat{\tau }} - L}{2}\) as

By using the non-boundary value assumption for \({\hat{p}}_1\) and \({\hat{p}}_2\), we have

It is always true that \(\frac{1}{n_2} > \frac{1}{N}\) and \(\frac{1}{n_1} > \frac{1}{N}\). It follows that

From the property of a quadratic equation, we have

This implies that

where the last inequality is satisfied due to the fact that \(4n_1n_2 \le (n_1 + n_2)^2 = N^2\). Because \(\frac{2N-1}{N} = 2 - \frac{1}{N} > 1\) and \(z_{\alpha /2} \ge 1\), we have \(K_1 > \frac{1}{N}\). This proves that, if we assume \(K_1 \le \frac{1}{N}\), it leads to \(K_1 > \frac{1}{N}\). Therefore it is impossible to have \(K_1 \le \frac{1}{N}\). Since \(|L-({\hat{p}}_2-{\hat{p}}_1)| = K_1\), we then have \(L < {\hat{p}}_2 - {\hat{p}}_1 - \frac{1}{N}\).

Finally, we can show that \(K_2 = z_{\alpha /2} \sqrt{({\hat{\tau }}-U)[2-({\hat{\tau }}-U)]/(4 n_1)+({\hat{\tau }}+U)[2-({\hat{\tau }}+U)]/(4 n_2)} > \frac{1}{N}\) using nearly the same argument as above, which implies that \(U > {\hat{p}}_2 - {\hat{p}}_1 + \frac{1}{N}\). \(\square\)

The results from Lemma 2.1. are used to prove Theorem 2.1.

Proof

Let \(f(\delta ) = u \delta ^2 + v_1 \delta + w_1\). Because \(u L^2 + v L + w=0\), we have

where the last inequality follows from the Lemma result. Therefore, \(f(\delta )\) has a zero between \(-\infty\) and L. Since \(L_c\) is the smaller solution of \(f(\delta )=0\), we have \(L_c < L\).

Similarly, we let \(g(\delta ) = u \delta ^2 + v_2 \delta + w_2\). Because \(u U^2 + v U + w=0\), we have

Therefore, \(g(\delta )\) has a zero between U and \(\infty\). Since \(U_c\) is the larger solution of \(g(\delta )=0\), we have \(U < U_c\). \(\square\)

Theorem 2.1 is true under the condition that \(x_i\) is not on the boundary (\(x_i=0\) or \(n_i\)). When \(x_1=0\) and \(x_2=n_2\), the estimated \(U_c\) could be less than U from the WI interval. Similar results are observed when \(x_1=n_1\) and \(x_2=0\).

When \(x_1=x_2=0\), the WI interval can be computed with the lower limit or the upper limit being zero for an unbalanced study, and zeros for both limits when \(n_1=n_2\). In such case, the WIC interval can’t estimated or at least one of the limits is not estimable because a negative value of \(v_{1}^2-4 u w_{1}\) or \(v_{2}^2-4 u w_{2}\). In such cases, the WIC limits are assumed to be the same as the WI limits. Similar arrangements will be made when \(x_1=n_1\) and \(x_2=n_2\). It should be noted that these four cases are very rare in practice.

3 Results

We conduct extensive simulation studies to evaluate the performance of these intervals for a study with sample sizes from 40 to 5000 in each group \(n_i=\)(40, 80, 100, 200, 300, 500, 2000, and 5000), where \(i=1,2\). The nominal level of these intervals is set as 95%. Seven different proportions for the first group are considered: \(p_1=\)0.01, 0.05, 0.1, 0.2, 0.4, 0.6, and 0.8. Six possible \(\delta\) values are studied: 0.05, 0.1, 0.25, 0.5, 0.65, and 0.85. The \(p_1\) values for each \(\delta\) has to meet the constraint such that \(p_1+\delta =p_2<1\). For example, when \(\delta =0.65\), the possible \(p_1\) values are 0.01, 0.05, 0.1, and 0.2.

For a study with design parameters, (\(n_1, n_2, p_1, p_2\)), we simulate \(S=\)20,000 data points \(X_s=(x_{1s}, x_{2s})\), where \(x_{is}\) follows a binomial distribution with parameters \(n_i\) and \(p_i\), and \(s=1,2,\cdots ,S\). The aforementioned six methods are then used to calculate confidence intervals, \(CI(X_s)\), for each simulated data.

3.1 Coverage probability

We first compare the coverage probability (CP) from \(S=20,000\) simulations for each given design parameter (\(n_1, n_2, p_1, p_2\)):

where I() is the index function with \(I()=1\) when \(\delta \in CI(X_s)\), and 0 otherwise. The computed CP can be viewed as the weighted CP because data are simulated from binomial distributions, not enumerated all possible data with equal probability.

The coverage probability comparison is presented in Fig. 1 when \(p_1=10\%\) and 80%. The Wald interval does not have satisfactory coverage probability when sample size is small, or a study with very unbalanced sample sizes (e.g., \(n_2 \gg n_1\)), or \(\delta\) is very large. All other methods have good coverages when \(\delta\) is small. It should be noted that the proposed WIC method often has a higher coverage probability as compared to others when sample size is not too large (e.g., 5000) or too small (e.g. 40). In the majority of these cases, the average coverage probabilities of the WIC method are slightly over 95%. When \(\delta\) is increased to \(85\%\) for the cases in the second column of the figure, the MN method and the NH method have better coverages than others when \(n_2\) is small. The PL method could have the coverage probability below 90% for a study with very unbalanced sample sizes. The WIC method could be slightly conservative as compared with other when sample sizes are small, with the coverage probability being higher than the nominal level. When \(p_1=80\%\), the findings are similar to the cases when \(p_1=10\%\) and \(\delta =25\%\). The proposed WIC method has better coverage probability than the WI interval, except the cases with small sample sizes in both groups where the WIC interval is conservative with the average coverage probability close to 96% while the WI interval has the coverage close to 95%.

We also present the coverage probability in Figure 2 when \(p_1\) is between 20% and 60%. The coverage probability of the Wald interval is closer to 95% as compared the Wald interval in Fig. 1 when \(p_1\) is small, but the Wald interval is still anti-conservative with the actual coverage below the nominal level in many configurations (e.g., a study with unbalanced sample sizes). The proposed WIC interval often has the coverage probability higher than others when sample size is not too large, although it could be slightly anti-conservative. When sample sizes in both groups are large enough, all methods have similar coverage probabilities.

For a given set of (\(p_1, p_2\)), there are a total of 81 combinations of sample sizes \((n_1,n_2)\). Let \(\Omega\) be the space of all combinations of \(n_1\) and \(n_2\). The coverage probabilities of these cases, \(CP(n_1, n_2| p_1, p_2)\), are used to calculate the proportion of guaranteed coverage probability from these 81 cases:

where \(|\Omega |=81\) is the size of \(\Omega\).

A method with a high proportion of guaranteed coverage probability is preferable. In Fig. 3, we show that proportion for each given \((p_1,\delta )\). The Wald interval has the lowest proportion, followed by the PL method and the WI method. The remaining three methods (WIC, MN, and NH) often have higher guaranteed coverage probability than others. The NH method often performs the best when \(p_1\) is small (e.g., 1% and 5%). As \(p_1\) goes up, the proposed WIC method has the highest guaranteed coverage probability. When \(p_1\) is large (e.g., 80%), the NH method could be slightly better than the other two methods.

We plot detailed coverage probability comparison between the NH method and the WIC method in Fig. 4 when \(p_1=0.05\) and \(\delta =0.1\). The average difference of coverage probability between these two methods is 17%. We add the label of the minimum sample size (\(\min (n_1,n_2)\)) in the figure, and find that the 17% difference is mainly due to the cases with small sample sizes (e.g., \(\min (n_1,n_2)=40\)). After studies with \(\min (n_1,n_2)=40\) are removed, their difference is reduced to 4% (73.5% for the WIC interval VS 77.6% for the NH interval). On the right side of the figure, we compare the coverage probability between the WIC method and the MN method when \(p_1=40\%\) and \(\delta =25\%\). As \(p_1\) is medium to large, the WIC method is often the best method with the highest guaranteed coverage probability.

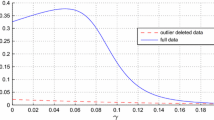

3.2 Mean squared error

Mean squared error (MSE) is also used to compare the performance of these methods:

When a method has a small MSE, its coverage probabilities are generally close to the nominal level. We present the computed MSE in Fig. 5. It can be seen that MSE is much larger for the cases when \(p_1=0.1\%\). The WI method has the largest MSE when \(p_1\) is very small. As \(p_1\) is increased to 5%, the PL method is the worst. When \(p_1\) is medium to large (e.g., between 20% and 80%), the WIC method often has the largest MSE, followed by the PL method, although the difference in MSE between these methods is very small. In such cases, the WIC method has the highest guaranteed coverage probability which leads to conservative confidence intervals in some settings. These conservative cases increase the guaranteed coverage probability, meanwhile they increase the MSE.

3.3 Interval width

We then compare the interval width from S simulations for each (\(n_1, n_2, p_1, p_2\)):

where L() and U() are the lower limit and the upper limit of a confidence interval. In Fig. 6, we show the interval width of the six methods when \(p_1=10\%\), 40%, and 80%, and \(n_1=2000\), 500, and 40. It can be seen that these methods have similar interval widths when sample sizes are not too small in both group. When \(p_1\) is small (e.g., 10%) and \(n_2\) is fewer than \(n_1\), the MN method and the NH method have wider intervals than others. When the sample size in the control group is small (e.g., \(n_1=40\)), as \(n_2\) increases, the WIC interval becomes shorter than the NH interval the MN interval. As \(p_1\) is increased to 40%, the NH method and the MN method are better than others when \(n_1\) is small. When \(p_1\) is very large (e.g., 80%), all methods have similar interval width, except the configurations with small sample sizes in both groups. In such cases, the WI method and the WIC method have slight shorter width than others.

3.4 Examples

A randomized phase II clinical trial is used to illustrate the application of the methods considered in this article. That was a trial to test whether pazopanib has efficacy comparable to doxorubicin in elderly patients with soft tissue sarcoma (STS), where doxorubicin is a standard of care [14]. In that trial, 39 patients were treated with doxorubicin \(n_1=39\), while there were 81 patients in the pazopanib group (\(n_2=81\)). The observed responses were \(x_1=6\) and \(x_2=10\) in the doxorubicin group and the pazopanib group, respectively. The estimated response rates and the computed confidence intervals for \(\delta =p_2-p_1\) are presented in Table 1.

As expected, the WIC interval is slightly wider than the WI interval. The PL interval is similar to the WIC interval. The NH interval has the longest width among these intervals, although the difference is small. The WI interval and the WIC interval have shorter width than the MN interval, the NH interval, and the PL interval. If this was a non-inferiority test problem with the pre-specified margin of -10%, the Wald method is the only method that fails to conclude non-inferiority as its lower limit is less than the non-inferiority margin.

4 Discussion

In the observation of the anti-conservative coverage probability from the WI interval for RD, we propose the continuity corrected Wilson interval to improve the coverage probability. In addition to the coverage probability improvement, the guaranteed coverage probability using the WIC interval is almost doubled as compared to that of the WI interval [15,16,17]. The proposed WIC interval is recommended to be used when a study has the rates from medium to large.

When sample size is small, exact approaches could be used to have guaranteed coverage probability. For exact intervals, the exact lower limit and the exact upper limit are computed separately by using either one test statistic or two different test statistics in the sample space ordering [18,19,20,21]. For a 100(1-\(\alpha\))% two sided interval, the nominal level for a one-sided limit is \(100(1-\alpha /2)\%\). When both lower and upper limits control for the nominal level, the final two-sided intervals could be conservative with the actual coverage probability much above 100(1-\(\alpha\))%. That leads to a high proportion of guaranteed coverage, but the MSE would be large as expected. Alternatively, Bayesian credible intervals may be considered [22,23,24,25]. Under the Bayesian setting, the observed number of responses is assumed to be a random variable that follows a pre-specified prior distribution.

We will extend the WIC method to other test statistics for comparing two treatments with binary outcome: relative risk (RR) and odds ratio (OR) [15, 26,27,28]. In the computation of RR and OR, the test statistic could be undefined when the estimated value in the denominator is zero [16, 29, 30]. In such cases, special arrangements should be utilized to properly compute the intervals for these data points.

Availability of data and materials

Data used in preparation of this article were obtained from the existing publications.

Abbreviations

- RD:

-

Risk difference

- NH interval:

-

Newcombe’s hybrid interval

- MN interval:

-

Miettinen and Nurminen interval

- PL:

-

Profile likelihood

- WI interval:

-

Wilson interval

- WIC interval:

-

Wilson confidence interval with continuity correction

- CP:

-

Coverage probability

- MSE:

-

Mean squared error

References

Agresti, A., Coull, B.A.: Approximate is better than exact for interval estimation of binomial proportions. Am. Stat. 52(2), 119–126 (1998). https://doi.org/10.2307/2685469

Chen, X.: A quasi-exact method for the confidence intervals of the difference of two independent binomial proportions in small sample cases. Stat. Med. 21(6), 943–956 (2002)

Hall, P.: On the bootstrap and continuity correction. J. R. Stat. Soc. Ser. B (Methodol.) 49(1), 82–89 (1987)

Mee, R.W.: Confidence bounds for the difference between two probabilities. Biometrics 40, 1175–1176 (1984)

Casella, G., Berger, R.L.: Statistical Inference, 2nd edn. Thomson Learning, Belmont (2002)

Hirji, K.: Exact Analysis of Discrete Data. Chapman and Hall/CRC, Boca Raton (2005)

Newcombe, R.G.: Interval estimation for the difference between independent proportions: comparison of eleven methods. Stat. Med. 17(8), 873–890 (1998)

Miettinen, O., Nurminen, M.: Comparative analysis of two rates. Stat. Med. 4(2), 213–226 (1985). https://doi.org/10.1002/sim.4780040211

Beal, S.L.: Asymptotic confidence intervals for the difference between two binomial parameters for use with small samples. Biometrics 43(4), 941 (1987)

Santner, T.J., Snell, M.K.: Small-sample confidence intervals for p 1- p 2 and p 1/p 2 in 2 \(\times\) 2 contingency tables. J. Am. Stat. Assoc. 75(370), 386–394 (2012). https://doi.org/10.1080/01621459.1980.10477482

Chan, I.S.F., Zhang, Z.: Test-based exact confidence intervals for the difference of two binomial proportions. Biometrics 55(4), 1202–1209 (1999)

Wilson, E.B.: Probable inference, the law of succession, and statistical inference. J. Am. Stat. Assoc. 22(158), 209–212 (1927). https://doi.org/10.2307/2276774

Fleiss, J.L., Levin, B., Paik, M.C.: Statistical Methods for Rates and Proportions, vol. 46, 3rd edn. Wiley-Interscience, New Jersey (2004). https://doi.org/10.1198/tech.2004.s812

Grünwald, V., Karch, A., Schuler, M., Schöffski, P., Kopp, H.G., Bauer, S., et al.: Randomized comparison of pazopanib and doxorubicin as first-line treatment in patients with metastatic soft tissue sarcoma age 60 years or older: results of a German intergroup study. J. Clin. Oncol. 38, 3555–3564 (2020)

Shan, G., Wilding, G.E., Hutson, A.D., Gerstenberger, S.: Optimal adaptive two-stage designs for early phase II clinical trials. Stat. Med. 35(8), 1257–1266 (2016). https://doi.org/10.1002/sim.6794

Shan, G., Ritter, A., Miller, J., Bernick, C.: Effects of dose change on the success of clinical trials. Contemp. Clin. Trials Commun. 30, 100988 (2022)

Shan, G.: Exact approaches for testing non-inferiority or superiority of two incidence rates. Stat. Prob. Lett. 85, 129–134 (2014). https://doi.org/10.1016/j.spl.2013.11.010

Shan, G.: Improved confidence intervals for the youden index. PLoS ONE 10(7), e0127272 (2015). https://doi.org/10.1371/journal.pone.0127272

Chang, P., Liu, R., Hou, T., Yan, X., Shan, G.: Continuity corrected score confidence interval for the difference in proportions in paired data. J. Appl. Stat. (2022). https://doi.org/10.1080/02664763.2022.2118245

DelRocco, N., Wang, Y., Wu, D., Yang, Y., Shan, G.: New confidence intervals for relative risk of two correlated proportions. Stat. Biosci. (2022). https://doi.org/10.1007/s12561-022-09345-7

Shan, G.: Promising zone two-stage design for a single-arm study with binary outcome. Stat. Methods Med. Res. (2023, in press)

Oleson, J.J.: Bayesian credible intervals for binomial proportions in a single patient trial. Stat. Methods Med. Res. 19(6), 559–574 (2010)

Jaiswal, S., Chaturvedi, A., Bhatti, M.I.: Bayesian inference for unit root in smooth transition autoregressive models and its application to OECD countries. Stud. Nonlinear Dyn. Econom. 26(1), 25–34 (2022)

Cheung, S. F., Pesigan, I. J. A., Vong, W. N.: DIY bootstrapping: getting the nonparametric bootstrap confidence interval in SPSS for any statistics or function of statistics (when this bootstrapping is appropriate). Behav. Res. Methods. 55, 474–490 (2023)

Bhatti, M., Wang, J.: Tests and confidence interval for change-point in scale of two-parameter exponential distribution. J. Appl. Statist. Sci. 14, 45–57 (2005)

Shan, G.: Optimal two-stage designs based on restricted mean survival time for a single-arm study. Contemp. Clin. Trials Commun. 21, 100732 (2021)

Shan, G.: Exact Statistical Inference for Categorical Data, 1st edn. Academic Press, San Diego (2015)

Shan, G.: Accurate confidence intervals for proportion in studies with clustered binary outcome. Stat. Methods Med. Res. 29(10), 3006–3018 (2020). https://doi.org/10.1177/0962280220913971

Shan, G.: Comments on: Two-sample binary phase 2 trials with low type I error and low sample size. Stat. Med. 36(21), 3437–3438 (2017). https://doi.org/10.1002/sim.7359

Shan, G.: Monte Carlo cross-validation for a study with binary outcome and limited sample size. BMC Med. Inf. Decis. Mak. 22(1), 270 (2022). https://doi.org/10.1186/s12911-022-02016-z

Acknowledgements

The authors are very grateful to the Editor, Associate Editor, and Reviewers for their insightful comments that help improve the manuscript.

Funding

Shan’s research is partially supported by grants from the National Institutes of Health: R03CA248006 and R01AG070849.

Author information

Authors and Affiliations

Contributions

The idea for the paper was originally developed by GS. GS, LX, and SW drafted the manuscript and approved the final version.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no competing interests.

Ethical approval and consent to participate

The authors confirm that all methods were performed in accordance with the relevant guidelines and regulations.

Consent to publish

Not applicable

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Shan, G., Lou, X. & Wu, S.S. Continuity Corrected Wilson Interval for the Difference of Two Independent Proportions. J Stat Theory Appl 22, 38–53 (2023). https://doi.org/10.1007/s44199-023-00054-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s44199-023-00054-8