Abstract

Accurate and reliable prediction of porosity forms the foundational basis for evaluating reservoir quality, which is essential for the systematic deployment of oil and gas exploration and development plans. The accuracy and reliability of porosity prediction methods based on gated recurrent units (GRU) diminish when the quality of sample data is low and critical model parameters are determined through subjective experience. To address these problems, a committee-voting ensemble learning (EL) method and an enhanced particle swarm optimization (PSO) algorithm are proposed to optimize the GRU-based porosity prediction model. First, outliers are eliminated through box plots and min–max normalization is applied to enhance data quality. To address issues related to model accuracy and high training costs arising from dimensional complexity, substantial noise, and redundant information in logging data, a committee-voting EL strategy based on four feature selection algorithms is introduced. Following data preprocessing, this approach identifies logging parameters highly correlated with porosity, thereby furnishing the most pertinent data samples for the GRU model and mitigating the constraints imposed by single-feature selection methods. Second, an improved PSO algorithm is proposed to tackle the low convergence accuracy that stems from random population initialization and the risk of missing the global optimum due to overly rapid particle movement during iteration. This algorithm uses a good-point set for population initialization and incorporates a compression factor to devise an adaptive velocity updating strategy, thereby enhancing search efficacy. The enhanced PSO algorithm’s superiority is substantiated through comparison with four alternative swarm intelligence algorithms across 10 benchmark test functions.
Finally, optimal hyper-parameters for the GRU model are determined using the improved PSO algorithm, thereby minimizing the influence of human factors. Experimental findings based on approximately 15,000 logging data points from well A01 in an operational field validate that, relative to three other deep learning methodologies, the proposed model proficiently extracts spatiotemporal features from logging data, yielding enhanced accuracy in porosity prediction. The mean squared error on the test set was \(7.19\times {10}^{-6}\), the mean absolute error was 0.0082, and the coefficient of determination reached 0.99, offering novel insights for predicting reservoir porosity.

1 Introduction

1.1 Research Background

Porosity is a crucial physical parameter in reservoir characterization, essential for guiding the efficient and accurate planning of oil and gas exploration and development, thereby helping to reduce costs. Traditional methods for predicting porosity rely on direct measurement, which offers high accuracy, but is time-consuming and resource-intensive, particularly due to the costs associated with sampling and core analysis across entire working areas. In contrast, indirect estimation methods are simpler, cost-effective, and efficient. However, these methods often depend on empirical formulas and simplified geologic models assuming reservoir homogeneity, limiting their ability to move beyond linear equations, and leading to significant relative errors in calculation results [1].

Moreover, constructing a porosity prediction model from a single well-logging dataset requires a substantial volume of data for fitting, and the model parameters are intricately linked to the logging data, which makes it difficult to ensure accurate results. If the logging features are selected inappropriately, the model also struggles to maintain its accuracy [2]. In addition, with increasing well depth, the pore structure of the formation grows more intricate, thereby presenting significant challenges to the precision of porosity prediction models [3]. In recent years, there has been rapid development and widespread adoption of artificial intelligence technology in the field of oil and gas geology. Traditional machine-learning methods such as multiple linear regression, artificial neural networks, and support vector machines have been extensively used for porosity prediction, yielding satisfactory outcomes [4, 5]. However, with the growing focus on unconventional oil and gas reservoirs in exploration and development, the target media for reservoir prediction exhibit pronounced heterogeneity and anisotropic characteristics. Traditional machine-learning methods have limited capability to capture robust nonlinear mapping relationships and spatial continuity between porosity and well-logging data, thereby restricting their application in the field of engineering.

In the era of exploring complex reservoirs, there is an urgent need to develop advanced deep learning models that can efficiently and accurately predict porosity, providing essential guidance for oil and gas field exploration and development. This study focuses on overcoming current challenges in porosity prediction, including complex datasets, inappropriate logging curve selection, and subpar model performance, through rigorous data preprocessing and model optimization efforts. The goal is to enhance porosity prediction accuracy and reduce costs associated with petroleum development, thereby ensuring reliable reservoir characterization.

1.2 Related Work

Deep learning techniques offer distinct advantages in handling vast, high-dimensional, and complex spatiotemporal geologic data. These methods have shown substantial potential across diverse applications, including well log-curve reconstruction [6], reservoir characterization [7], and permeability prediction in oil and gas reservoirs [8]. Data-driven deep learning approaches have also made significant advancements in predicting porosity. Table 1 provides a comparative review of related research, listing the authors, datasets utilized, preprocessing methods, methodologies, and evaluation indices.

Yang [10, 11] used density (DEN), acoustic (AC), gamma ray (GR), and other parameters to predict porosity using a convolutional neural network (CNN) and a deep neural network (DNN). The prediction results of the model showed strong agreement with the porosity in the original logging data, achieving a correlation coefficient of 0.9725. Nonetheless, CNN models demand a significant quantity of labeled data, presenting difficulties in obtaining sufficient core data for practical engineering applications.

Shao et al. [12] introduced a multitask DNN model to predict porosity, permeability, and water saturation simultaneously, significantly streamlining the prediction process. However, no preprocessing was performed on the logging dataset, whereas our work preprocesses the data through outlier removal, interpolation, and normalization.

Wang et al. [13] used a deep bidirectional recurrent neural network to predict porosity, compensating for the inability of traditional deep networks to provide contextual information and improving the accuracy and stability of porosity prediction. In our study, we used different preprocessing methods and deep learning models.

An et al. [14] utilized long short-term memory (LSTM) neural networks to predict reservoir porosity, with superior performance compared to DNNs. Chen et al. [15] developed a multilayer LSTM that demonstrated efficient and cost-effective porosity prediction, highlighting LSTM’s robustness and accuracy in forecasting logging data with serialized structural features. Wang et al. [16] proposed an LSTM model incorporating domain information enhancement through principal component analysis and the K-means algorithm, effectively improving porosity prediction accuracy in carbonate rocks. Wang et al. [17] established a model linking logging curves and reservoir properties based on a gated recurrent unit (GRU), achieving strong results in porosity prediction. In contrast to these studies, our research focuses on optimizing model parameters to mitigate uncertainties associated with manual parameter tuning.

Song et al. [18] combined a CNN and GRU to develop a porosity prediction model, improving accuracy by adjusting the learning rate during training. While we also explore combined neural network models for accuracy improvement, our methods differ in model optimization.

Wang et al. [19] proposed a transfer DNN for predicting shale total porosity, reducing reliance on logging and core data. While we share similarities in missing value completion methods, our study additionally incorporates box plots for data processing.

Pan et al. [20] addressed poor accuracy in porosity prediction by optimizing extreme gradient boosting parameters using grid search and genetic algorithms, achieving favorable outcomes. Our research parallels theirs in the data analysis module, but we employ four distinct data analysis methods.

Huo et al. [21] proposed an enhanced stacking ensemble learning model for reservoir parameter prediction, improving model generalization ability. Both studies use ensemble learning strategies to improve prediction accuracy, but we introduce the strategy in the feature selection module.

Dai et al. [22] utilized the particle swarm optimization (PSO) algorithm to optimize the relevance vector machine (RVM) model for porosity prediction, thereby reducing model uncertainty and enhancing the precision of the porosity prediction. The coefficient of determination R² between the prediction results and the original values reached 0.969. Both studies use the PSO algorithm to enhance the model’s generalization capacity, but our research further refines the PSO algorithm with a good-point set and an adaptive compression factor.

Moreover, to address the challenge of low accuracy in predicting complex reservoirs, researchers have developed various neural network models based on logging data. These models have been integrated with optimization algorithms such as fruit fly optimization [23], artificial fish swarm [24], firefly [25], gray wolf [26], fireworks [27], PSO [28], shuffled frog leaping [29], etc., to automatically optimize crucial model parameters. The optimized models exhibit enhanced feature learning capabilities, improved generalization adaptability, and achieve higher prediction accuracy and efficiency for porosity compared to standard neural networks. In our study, we employed an enhanced PSO algorithm to optimize GRU model parameters.

1.3 Contributions

The principal contributions of this study are outlined as follows:

First, an optimization method for parameter selection based on ensemble learning was proposed to address the issues of low quality, redundancy, and multicollinearity in logging data.

- Outliers were detected with box plots, treated as missing values, and filled by linear interpolation; min–max normalization was then applied for scaling. This cleans the data, fills missing values, and ensures that the logging attributes are comparable and consistent.

- Following data cleaning, a committee-voting-based ensemble learning (EL) strategy was developed by incorporating random forest (RF), gray relation analysis (GRA), the maximum information coefficient (MIC), and the Spearman coefficient. This strategy selected a subset of logging features highly correlated with porosity to tackle the multiscale coupling issue of high-dimensional logging data with complex space–time dependence. This approach not only avoided the limitations of a single-feature selection algorithm but also simplified data dimensions, provided high-quality samples for deep learning-based porosity prediction models, and enhanced accuracy and efficiency, while reducing computational space and costs.

Second, to address the issues of low model accuracy due to random population initialization in the PSO algorithm and the risk of missing the global optimal solution due to excessively fast particle velocity during iteration, an improved algorithm termed GPSCF-PSO (PSO based on a good-point set and adaptive compression factor) was introduced.

- The population diversity was enhanced through initialization with a good-point set, improving the accuracy of algorithm optimization.

- In addition, a compression factor was introduced to adaptively adjust the particle velocity update method based on the iteration count to prevent the algorithm from being trapped in local optima.

- Experimental comparisons were conducted on 10 benchmark test functions against four swarm intelligence algorithms to validate the superiority of the proposed algorithm.

Finally, an EL-IPSO-GRU porosity prediction model (GRU based on EL and improved PSO) was proposed, considering the complex space–time sequence characteristics of logging data.

- The improved PSO algorithm was utilized to optimize the number of hidden layers, batch size, and learning rate, minimizing the influence of manual empirical values, and thus enhancing model accuracy and reliability.

- The performance of the proposed model was compared with those of LSTM, GRU, and EL-GRU using mean square error (MSE), mean absolute error (MAE), and coefficient of determination (R²) as evaluation metrics in four wells of a working area.

- The experimental findings demonstrated that the proposed model exhibits higher prediction accuracy compared to the other three methods.

1.4 Organization of the Paper

This paper is structured into seven sections:

- Introduction: This section provides an overview of the research background of porosity prediction, evaluates the strengths and weaknesses of prior methodologies, and introduces the EL-IPSO-GRU model adopted in this study.

- Optimal selection of logging data features based on EL: This section details the data-preprocessing tasks and introduces an ensemble learning strategy incorporating four correlation analysis techniques.

- Improved PSO algorithm based on a good-point set and adaptive compression factor: This section presents the fundamental concepts of the PSO algorithm, identifies existing challenges, proposes an enhanced PSO algorithm, and conducts comparative analyses against four distinct PSO algorithms to demonstrate its benefits.

- Optimized GRU for porosity prediction based on the EL and the improved PSO algorithm: This section integrates the approaches from the previous two sections with the GRU to predict porosity and compares the accuracy of the resulting model with that of three other models.

- Discussion: This section interprets, analyzes, and discusses the experimental results.

- Conclusions: This section recapitulates the research objectives and methodologies, underscoring the significance and contributions of this research.

- References: This part catalogs all the references cited throughout the paper.

2 Optimal Selection of Logging Data Features Based on the EL

A single-feature selection method cannot effectively characterize the complex nonlinear mapping relationship between logging curves and porosity. Logging data also exhibit multicollinearity, meaning that two or more logging features are highly correlated, so a model may become overly dependent on one or a few features. Therefore, an optimal selection method for porosity-sensitive logging features was investigated in this study to eliminate multicollinearity. To address issues of logging data quality and integrity, outliers and missing data were first handled using box plots and linear interpolation, followed by min–max normalization. Subsequently, a committee-voting model based on EL was constructed by integrating the Spearman coefficient, RF, GRA, and MIC, and feature subsets highly correlated with porosity were selected from the high-dimensional logging data. This process aims to enhance the computational efficiency and prediction accuracy of GRU-based porosity prediction models by supplying high-quality sample data from which redundancy and multicollinearity have been eliminated.

2.1 Dataset Selection and Preprocessing

2.1.1 Data Selection

The experimental data were sourced from four wells in an oilfield. Well A01 was the main experimental site, covering a depth interval of 2405–4320 m with a sampling interval of 0.125 m. It includes 95 logging curves, totaling approximately 15,000 data points. Because the logging parameters are numerous and differ in their sensitivity to porosity, an initial selection was made on the basis of expert experience and the literature [19] to eliminate redundancy and multicollinearity. In addition to DEPTH and porosity (POR), 15 logging parameters were retained: acoustic (AC), compensated neutron (CNL), density (DEN), deviation (DEV), dip angle (DAZ), gamma ray (GR), natural gamma spectroscopy logging (K, U, and TH), KTH gamma ray without uranium (KTH), resistivity microacoustic (RMA), resistivity (RT), resistivity array (RTA), flushed zone formation resistivity (RXO), and spontaneous potential (SP).

The specific distribution of the data is shown in Table 2, including logging curves, unit, average value, maximum value, and minimum value.

2.1.2 Data Preprocessing

Due to complex geologic conditions, instrumentation, measurement costs, and other factors, logging data often contain missing and abnormal values, which significantly impact the performance of porosity prediction models [30]. Therefore, data preprocessing is essential prior to model training. Logging attributes with more than 50% missing values were removed outright. For attributes with a small number of missing values, mean imputation was employed. In addition, outliers were identified using box plots based on the interquartile range and then replaced by linear interpolation to enhance data quality.

In addition, because the ranges and dimensions of the logging data are inconsistent, neural network training often favors attributes with larger values over those with smaller values, thereby impacting training speed and prediction accuracy. Hence, this study employs min–max normalization to scale logging attribute values to the range [0, 1], as given in Eq. (1):

\[{Z}^{*}=\frac{Z-{Z}_{\min }}{{Z}_{\max }-{Z}_{\min }}\qquad (1)\]

where Z and Z* denote the original and normalized data of a specific logging attribute, respectively, while Zmin and Zmax represent its minimum and maximum original values.

Figure 1 illustrates the distribution of normalized logging data from well A01 before and after outlier processing. In the figure, red denotes outlier data, while blue indicates the median. The integration of box plot and linear interpolation methods effectively eliminates outliers from the logging curve, significantly enhancing the quality of the preprocessed logging data.
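The preprocessing chain described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the whisker multiplier `k = 1.5` is the conventional box-plot value and is not given in the text, gaps at the ends of a curve are filled with the nearest valid sample, and all function names are hypothetical.

```python
import statistics

def iqr_bounds(values, k=1.5):
    """Box-plot whisker bounds [Q1 - k*IQR, Q3 + k*IQR]; k = 1.5 is an assumption."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr

def mask_outliers(values, k=1.5):
    """Replace samples outside the whisker bounds with None (treated as missing)."""
    present = [v for v in values if v is not None]
    low, high = iqr_bounds(present, k)
    return [v if v is not None and low <= v <= high else None for v in values]

def interpolate_linear(values):
    """Fill None gaps by linear interpolation along the depth index."""
    known = [i for i, v in enumerate(values) if v is not None]
    if not known:
        raise ValueError("no valid samples to interpolate from")
    filled = list(values)
    for i, v in enumerate(values):
        if v is not None:
            continue
        left = max((j for j in known if j < i), default=None)
        right = min((j for j in known if j > i), default=None)
        if left is None:            # gap at the top of the curve
            filled[i] = values[right]
        elif right is None:         # gap at the bottom of the curve
            filled[i] = values[left]
        else:
            t = (i - left) / (right - left)
            filled[i] = values[left] + t * (values[right] - values[left])
    return filled

def min_max(values):
    """Scale to [0, 1] as in Eq. (1); a constant curve maps to all zeros."""
    z_min, z_max = min(values), max(values)
    if z_max == z_min:
        return [0.0 for _ in values]
    return [(z - z_min) / (z_max - z_min) for z in values]

def preprocess_curve(values):
    """Outlier masking, interpolation, then normalization for one logging curve."""
    return min_max(interpolate_linear(mask_outliers(values)))
```

Applying `preprocess_curve` to each retained logging curve in turn gives inputs on a common [0, 1] scale; normalizing after outlier removal keeps a single spike from compressing the rest of the curve toward zero.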

2.2 Data Feature Correlation Analysis

To reduce the computational complexity of the model, further feature selection based on the relationship between the prediction target and logging parameters is necessary. Key methods for analyzing these relationships include linear and nonlinear analyses such as the Pearson coefficient, Kendall coefficient, Spearman coefficient, and GRA [32]. Given the temporal characteristics and strong nonlinear relationships inherent in logging data features, this study employed Spearman coefficient, GRA, RF, and MIC to assess the correlation degree among 17 logging features using A01 well-logging data as a case study. Subsequently, a committee-voting strategy was devised to identify a subset of porosity-sensitive logging features for input into the GRU model.

2.2.1 Gray Relation Analysis

The GRA method, rooted in gray system theory, assesses correlation by comparing a reference sequence that reflects system behavior characteristics (X0 = {X0(k) | k = 1, 2, …, n}) with comparison sequences (Xi = {Xi(k) | k = 1, 2, …, n}, i = 1, 2, …, m) that influence system behavior, where m denotes the number of factors and n denotes the number of experiments per factor. The method determines correlation based on the consistency of trends between the reference and comparison sequences: strong correlation exists when trends align, and weak correlation exists when they diverge. Specific steps for GRA are as follows.

Step 1: Normalize the data, as shown in Eq. (2).

Step 2: Find the difference between the comparison sequence and the reference sequence, as shown in Eq. (3).

Step 3: Calculate the two-level maximum difference D and minimum difference d, as shown in Eqs. (4) and (5), respectively.

Step 4: Calculate the correlation coefficient between the comparison sequence and the reference sequence, as shown in Eq. (6), where \({\Delta }_{i}(k)\) is the k-th difference of the i-th factor in the difference sequence matrix.

Step 5: Calculate the gray correlation degree, as shown in Eq. (7).

The logging curves of well A01 were used as input data for the GRA algorithm. For each pair of logging curves, one curve served as the reference sequence and the other as the comparison sequence. Based on Eqs. (2)–(7), the correlation degree between every pair of logging parameters was calculated and plotted as a heat map, as shown in Fig. 2. The GRA results indicate that CNL, K, and SP are strongly correlated with porosity.
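Steps 1 to 5 can be illustrated with the following sketch. It assumes the most common GRA formulation: min–max normalization in Step 1, absolute differences in Step 2, and a resolution coefficient `rho = 0.5` in the correlation coefficient of Step 4. None of these choices is confirmed by the text, so Eqs. (2)–(7) may differ in detail, and the function names are hypothetical.

```python
def min_max(seq):
    """Step 1 (assumed form): scale a sequence to [0, 1]."""
    lo, hi = min(seq), max(seq)
    if hi == lo:
        return [0.0] * len(seq)
    return [(v - lo) / (hi - lo) for v in seq]

def gray_relational_degrees(reference, comparisons, rho=0.5):
    """Return one gray correlation degree per comparison sequence.

    rho is the resolution coefficient; 0.5 is a conventional default,
    not a value taken from the paper.
    """
    x0 = min_max(reference)
    xs = [min_max(c) for c in comparisons]
    # Step 2: absolute differences between each comparison and the reference.
    deltas = [[abs(a - b) for a, b in zip(x0, xi)] for xi in xs]
    # Step 3: two-level maximum (D) and minimum (d) differences.
    d_max = max(max(row) for row in deltas)
    d_min = min(min(row) for row in deltas)
    if d_max == 0:  # every comparison sequence coincides with the reference
        return [1.0] * len(xs)
    degrees = []
    for row in deltas:
        # Step 4: correlation coefficient at each sample k.
        coeffs = [(d_min + rho * d_max) / (delta + rho * d_max) for delta in row]
        # Step 5: gray correlation degree is the mean coefficient.
        degrees.append(sum(coeffs) / len(coeffs))
    return degrees
```

With porosity as the reference sequence and the other logging curves as comparison sequences, a larger returned degree indicates a curve whose trend follows porosity more closely.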

2.2.2 Maximum Information Coefficient

The MIC measures the degree of correlation between two variables X and Y. The mutual information value is transformed into a metric on the interval [0, 1] by employing an optimal discretization method. The main steps are outlined as follows.

Step 1: The data space of X and Y is partitioned using an m × n grid, and the frequency of data points falling within grid cell (x, y) is estimated as P(x, y), as depicted in Eq. (8), where N(x, y) denotes the count of data points within cell (x, y).

Step 2: The grid-partitioning method that maximizes mutual information is selected, and a normalization factor is used to convert the mutual information value into the range [0, 1], as shown in Eqs. (9) and (10). The grid resolution limit is defined as m × n < B, where B is commonly set to the sample size raised to an empirical exponent of 0.6, a value that can be adjusted for specific datasets; I(X, Y) represents the mutual information between X and Y; P(x, y) denotes the joint probability density function of X and Y; and P(x) and P(y) are the marginal probability density functions of X and Y, respectively. Because the maximum possible mutual information on an m × n grid is log(min{m, n}), the MIC can be obtained from Eq. (11).

Based on the above steps, we conducted data correlation analysis using the MIC method. Figure 3 displays the correlation between logging parameters and porosity. The analysis reveals that the DEPTH, GR, and AC curves exhibit significant correlation with porosity.
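The two steps can be made concrete with a simplified sketch. It is an approximation only: the true MIC searches over all grid placements for each resolution, whereas this version fixes equal-frequency bins on both axes and searches only over the resolutions m × n < B. The budget B = N^0.6 (N being the number of samples) follows the usual convention, and the function names are hypothetical.

```python
import math

def equal_frequency_bins(values, bins):
    """Label each sample with a bin index 0..bins-1 by rank (ties split arbitrarily)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    labels = [0] * len(values)
    for rank, idx in enumerate(order):
        labels[idx] = rank * bins // len(values)
    return labels

def mutual_information(x_bins, y_bins):
    """Mutual information (in nats) of two discrete label sequences."""
    n = len(x_bins)
    joint, px, py = {}, {}, {}
    for a, b in zip(x_bins, y_bins):
        joint[(a, b)] = joint.get((a, b), 0) + 1
        px[a] = px.get(a, 0) + 1
        py[b] = py.get(b, 0) + 1
    return sum((c / n) * math.log(c * n / (px[a] * py[b]))
               for (a, b), c in joint.items())

def approx_mic(x, y, exponent=0.6):
    """Largest normalized mutual information over grids with m * n < N ** exponent."""
    budget = len(x) ** exponent
    best = 0.0
    m = 2
    while m * 2 < budget:
        n = 2
        while m * n < budget:
            mi = mutual_information(equal_frequency_bins(x, m),
                                    equal_frequency_bins(y, n))
            # Normalize by the largest mutual information an m x n grid can hold.
            best = max(best, mi / math.log(min(m, n)))
            n += 1
        m += 1
    return best
```

A noiseless monotonic relationship scores close to 1 under this approximation, while independent variables score close to 0; for production use, a dedicated MIC implementation that optimizes the grid placement would be preferable.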

2.2.3 Random Forest

RF is a widely used and robust EL method. Its fundamental approach involves constructing multiple decision trees and aggregating their predictions to enhance the accuracy and resilience of prediction or classification tasks. The RF algorithm determines the final output by averaging the trees' predictions in regression tasks and by majority voting in classification tasks. Figure 4 illustrates the RF model.

The study employs the RF model to analyze the importance of different logging parameters on porosity, with results depicted in Fig. 5. According to Fig. 5, the GR, KTH, and SP curves demonstrate significant correlation with porosity in the RF analysis.

2.2.4 Spearman Coefficient

Logging data exhibit nonlinear characteristics, and the Spearman correlation coefficient handles monotonic nonlinear relationships better than the Pearson coefficient. Hence, this study employs the Spearman correlation coefficient for its data analysis. It assesses the strength of the relationship between two variables by evaluating their monotonicity; it is typically denoted as ρ and is given in Eq. (12),

where x represents the sequence sorted based on n sample data points according to the predicted results, y represents the sequence sorted based on actual scores, and ρ takes values in [−1, 1]. A larger absolute value of ρ indicates a stronger correlation between the two variables.

Unlike the Pearson coefficient, which assumes linear relationships, the Spearman coefficient can capture monotonic nonlinear correlations between the logging parameters and porosity, with results illustrated in Fig. 6. According to Fig. 6, the GR, KTH, CNL, and AC curves exhibit significant correlation with porosity in the Spearman analysis.
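As an illustration, the Spearman coefficient can be computed as the Pearson correlation of the rank sequences, which reduces to the familiar rank-difference form ρ = 1 − 6Σd²/(n(n² − 1)) when there are no ties. The sketch below assumes that Eq. (12) is this standard definition; tied values receive their average rank, and the function names are hypothetical.

```python
import math

def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def spearman_rho(x, y):
    """Spearman coefficient as the Pearson correlation of the two rank sequences."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mean_x) ** 2 for a in rx))
    sy = math.sqrt(sum((b - mean_y) ** 2 for b in ry))
    if sx == 0 or sy == 0:  # a constant sequence has no defined rank correlation
        return 0.0
    return cov / (sx * sy)
```

Because only ranks enter the calculation, any strictly increasing transformation of a logging curve leaves ρ unchanged, which is why the coefficient suits monotonic but nonlinear relationships.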

2.3 Optimal Selection of Logging Features Based on Committee Voting

In traditional research concerning logging-feature selection, a single-feature selection algorithm is typically employed. However, each algorithm is suited to specific scenarios, and different algorithms may yield varying results for the same logging data. To mitigate the limitations of relying on a single-feature method, this paper proposes a logging-feature selection strategy using committee-voting EL.

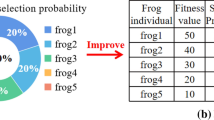

The approach involves several distinct steps. Initially, four feature selection methods were used to evaluate the importance of logging parameters for porosity (Table 3). Subsequently, the results from each method were ranked in descending order. Following this, logging parameters were assigned scores of 4, 2, 1, and 0 points, in a ratio of 3:3:3:1 based on their rankings. The total score for each logging parameter was then computed, as illustrated in Fig. 7. Finally, the top-ten logging parameters were selected as inputs for the porosity prediction model. Figure 8 presents the distribution of the selected logging data.
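The voting step can be sketched as follows. The sketch rests on one reading of the scoring rule that the text does not spell out: within each method's ranking, the leading 30% of features receive 4 points, the next 30% receive 2, the next 30% receive 1, and the final 10% receive 0, which is how the 3:3:3:1 ratio is interpreted here. The function name, the tie-breaking by feature name, and the input layout are hypothetical.

```python
def committee_vote(importances, top_k, points=(4, 2, 1, 0), ratio=(3, 3, 3, 1)):
    """Combine several feature rankings by tiered voting.

    importances: {method_name: {feature_name: importance}}
    Returns (selected_features, total_scores).
    The tier interpretation of `ratio` is an assumption, not the paper's stated rule.
    """
    totals = {}
    for scores in importances.values():
        ranked = sorted(scores, key=scores.get, reverse=True)
        n = len(ranked)
        # Cumulative tier boundaries, e.g. 3, 6, 9, 10 for ten features.
        bounds, acc = [], 0
        for share in ratio:
            acc += share
            bounds.append(round(n * acc / sum(ratio)))
        for pos, feature in enumerate(ranked):
            tier = next(t for t, bound in enumerate(bounds) if pos < bound)
            totals[feature] = totals.get(feature, 0) + points[tier]
    # Highest total first; ties broken alphabetically for reproducibility.
    selected = sorted(totals, key=lambda f: (-totals[f], f))[:top_k]
    return selected, totals
```

Summing tier points rather than raw importance values keeps the four methods on an equal footing, since their scores are not on comparable scales.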

3 Improved PSO Algorithm Based on a Good-Point Set and an Adaptive Compression Factor

3.1 Principles and Existing Problems of PSO

3.1.1 Basic Principles of the PSO Algorithm

The PSO algorithm, a well-known swarm intelligence method, draws inspiration from the study of bird foraging behavior. Through collective information sharing, the swarm aims to locate the optimal destination. Each particle in the swarm searches along a determined direction and keeps track of the location where the highest quantity of food has been found. Furthermore, particles exchange information regarding the location and quantity of food discovered during each iteration. Throughout the search process, the entire swarm adjusts its direction based on the global and individual optimal positions, as depicted in Eqs. (13) and (14).

where vi represents the velocity of the i-th particle; xi denotes the position of the i-th particle; \(\omega \) is the inertia weight; Pi is the best position experienced by the i-th particle; gbest is the best location experienced by all particles in the group; rand(0,1) is a random number uniformly distributed between (0,1); and c1 and c2 are learning factors, typically ranging from 0 to 2.
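For reference, the velocity and position updates of standard PSO are usually written in the following textbook form, expressed in the symbols defined above. The iteration index t is added here as an assumption, and the notation may differ slightly from that of Eqs. (13) and (14).

\[{v}_{i}^{t+1}=\omega {v}_{i}^{t}+{c}_{1}\,\mathrm{rand}(0,1)\,\left({P}_{i}-{x}_{i}^{t}\right)+{c}_{2}\,\mathrm{rand}(0,1)\,\left(gbest-{x}_{i}^{t}\right)\]

\[{x}_{i}^{t+1}={x}_{i}^{t}+{v}_{i}^{t+1}\]

The first term carries the particle's previous velocity forward, the second pulls it toward its own best position, and the third pulls it toward the best position found by the swarm.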

3.1.2 Problems with PSO Algorithm

The PSO algorithm is recognized for its rapid computational speed and dependable outcomes, rendering it appropriate for addressing optimization challenges in multidimensional spaces. Nevertheless, this study identifies limitations, primarily manifested in two areas.

(1) Sensitivity to initial values: The distribution and quality of the initial population notably influence the PSO algorithm. Minor variations in initialization can cause substantial differences in the quality of the ultimate solution. Hence, a random initialization may lead to inadequate diversity and reduced global search capabilities.

(2) Proneness to local optima: Owing to its local search characteristics, the PSO algorithm may become confined near a local optimum and fail to explore the entire space for superior solutions. This inability to escape local optima limits the algorithm’s potential to locate the global optimum.

3.2 GPSCF-PSO Algorithm

To address the deficiencies of the PSO algorithm, an enhanced algorithm named GPSCF-PSO was introduced, utilizing a good-point set and a compression factor. First, to tackle the issues of inadequate population diversity and limited global search capability resulting from random initialization in conventional PSO algorithms, a good-point set is used for population initialization. This enhances the uniformity of particle distribution and consequently improves the algorithm’s ability to search globally. Second, to mitigate the issue of high particle velocity during iterations, which may cause particles to overshoot the global optimum, a compression factor is incorporated into the velocity update formula. This adjustment limits the particle search range and moderates the velocity change, facilitating a faster and more effective convergence to the global optimum. Third, to prevent the algorithm from stagnating at local optima during prolonged iterations, an adaptive position update strategy based on iteration count was devised. This strategy helps the algorithm escape from local extremum points, thus enhancing its robustness.

3.2.1 Population Initialization Based on a Good-Point Set

A good-point set is an effective method for selecting uniformly distributed points [33], and its basic definition is as follows. Let \({G}_{s}\) be the unit cube in s-dimensional Euclidean space and let \(r\in {G}_{s}\). If the point set Pn(k) defined by Eq. (15) has a deviation \(\varphi (n)\) that satisfies Eq. (16), where \(\varepsilon \) is any positive number, then Pn(k) is called a good-point set and \(r\) is called a good point.

Set r = {2cos(2 \(\pi \) k/p)}, where 1 ≤ k ≤ s and p is the smallest prime that satisfies (p − 3)/2 ≥ s, and map the resulting points to the search space as shown in Eq. (17), where \({upper}_{j}\) and \({low}_{j}\) are the upper and lower bounds of the j-th dimension.

This study compares the two-dimensional initial populations created using both the good-point set method and the random method. With a population size set at 100, the individual distributions from the two methods are depicted in Fig. 9. The diagram illustrates that, compared to the random method, the good-point set method produces a more uniform distribution within the search space.

Experimental findings show the spatial pattern generated by the good-point set method remains consistent across different iteration counts, indicating stability. Consequently, this paper adopts the good-point set method for initializing the population in the PSO algorithm, which enables particles to traverse the search space more effectively, thereby enhancing global optimization performance.
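A minimal sketch of this initialization is given below. It assumes the usual construction in which the i-th point has coordinates equal to the fractional parts of r_k · i, which is then scaled linearly into [low_j, upper_j]; Eqs. (15) and (17) are presumed to take this form. The function names are hypothetical.

```python
import math

def smallest_prime_at_least(n):
    """Return the smallest prime p with p >= n."""
    def is_prime(v):
        return v >= 2 and all(v % d for d in range(2, math.isqrt(v) + 1))
    while not is_prime(n):
        n += 1
    return n

def good_point_set(n_points, lower, upper):
    """Generate n_points positions in the box [lower, upper] from a good-point set."""
    s = len(lower)
    p = smallest_prime_at_least(2 * s + 3)          # ensures (p - 3) / 2 >= s
    r = [2 * math.cos(2 * math.pi * k / p) for k in range(1, s + 1)]
    population = []
    for i in range(1, n_points + 1):
        # Fractional part of r_k * i; Python's % maps negatives into [0, 1) as well.
        unit = [(r_k * i) % 1.0 for r_k in r]
        population.append([lo + u * (hi - lo)
                           for u, lo, hi in zip(unit, lower, upper)])
    return population
```

The construction contains no random draws, so repeated calls with the same arguments return the same population, consistent with the stability noted above.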

3.2.2 Introducing a Compression Factor to Adaptively Adjust the Velocity Update Mode

In the early stages of iteration, if an optimal solution is not found, as the global search capability diminishes and local search strengthens, particle swarm convergence increases, and the algorithm is prone to becoming trapped in local optima. Thus, based on population initialization using a good-point set, this study introduces an adaptive position update strategy that incorporates a compression factor, as detailed in Eq. (18). Where, \(\varphi \) represents the compression factor, defined in Eq. (19); flagnum is a flag variable for the position update strategy, initially set at 0.

The algorithm adjusts flagnum depending on changes in the global optimum. If the global optimum remains unchanged after several iterations, a velocity update strategy employing a compression factor is used to reduce particle speed, allowing for more detailed exploration. Each iteration increments flagnum by one. If the global optimum changes, flagnum is reset to zero. However, if flagnum exceeds 200 and the global optimum remains unaltered, the original velocity update strategy without a compression factor is reinstated to facilitate the particles’ escape from local extremum points at increased speeds.

The velocity update with the compression factor balances global search capability in the early iterations with local convergence ability in the later stages. Because it is adjusted adaptively according to the number of iterations for which the global optimum has remained unchanged, it maintains particle diversity, helps prevent the algorithm from becoming stuck at a local optimum, and enhances global search performance.
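The switching logic can be illustrated with a minimal sketch. The threshold of 200 comes from the text; the closed form of the compression factor is the widely used Clerc-Kennedy expression and is an assumption here, because Eq. (19) is not restated in this section. All function names are illustrative.

```python
import math

def constriction_factor(c1, c2):
    """Clerc-Kennedy constriction factor (assumed form; requires c1 + c2 > 4)."""
    c = c1 + c2
    return 2.0 / abs(2.0 - c - math.sqrt(c * c - 4.0 * c))

def next_flagnum(flagnum, gbest_changed):
    """Reset when the global optimum changes, otherwise count one more stalled iteration."""
    return 0 if gbest_changed else flagnum + 1

def uses_compression(flagnum, limit=200):
    """Compression-factor rule up to `limit` stalled iterations, original rule beyond it."""
    return flagnum <= limit

# Trace: 201 stalled iterations followed by one improvement.
flagnum = 0
for changed in [False] * 201 + [True]:
    flagnum = next_flagnum(flagnum, changed)
    rule = "compression" if uses_compression(flagnum) else "original"

phi = constriction_factor(2.05, 2.05)   # about 0.73 for c1 = c2 = 2.05
```

In the trace, the rule switches to the original update only at the 201st stalled iteration and returns to the compression-factor update as soon as the global optimum improves.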

3.2.3 Steps of the GPSCF-PSO Algorithm

The procedure for enhancing the adaptive PSO algorithm via the integration of a good-point set and a compression factor is delineated in Fig. 10.

The steps are outlined below, and the corresponding pseudo-code is given in Algorithm 1.

Step 1: Establish algorithm-related parameters, including population size and the maximum number of iterations.

Step 2: Initialize the population position using the good-point set and set the compression factor.

Step 3: Compute the fitness for each particle’s position and update the individual’s historical optimal position alongside the global optimal position.

Step 4: Verify if there has been a change in the global optimal value.

Step 5: If the global optimal value has remained unchanged for more than a predefined number of iterations, such as 200, advance to step 6; otherwise, proceed to step 7.

Step 6: Employ the standard PSO algorithm for updating velocity and position, as specified in Eqs. (13) and (14).

Step 7: Implement a strategy with a compression factor to update the velocity and position of the particles, as outlined in Eq. (18).

Step 8: Return to step 3 and repeat the procedure until the preset number of iterations is completed or the termination condition is fulfilled.
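The steps above can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the authors' implementation: the good-point construction, the constricted update v = φ(v + attraction terms), the default φ ≈ 0.7298 and the clipping to the search range are all assumed, since Eqs. (13), (14) and (18) are not restated here.

```python
import numpy as np

def good_points(n, dim, lower, upper):
    """Assumed good-point construction: fractional parts of i * 2cos(2*pi*j/p)."""
    p = 2 * dim + 3
    while any(p % d == 0 for d in range(2, int(p ** 0.5) + 1)):
        p += 1                                   # smallest prime >= 2*dim + 3
    r = 2.0 * np.cos(2.0 * np.pi * np.arange(1, dim + 1) / p)
    idx = np.arange(1, n + 1).reshape(-1, 1)
    return lower + np.mod(idx * r, 1.0) * (upper - lower)

def gpscf_pso(f, dim, lower, upper, n=100, iters=1000, c1=2.05, c2=2.05,
              w=0.9, phi=0.7298, limit=200, seed=0):
    rng = np.random.default_rng(seed)
    x = good_points(n, dim, lower, upper)                 # Step 2
    v = np.zeros_like(x)
    pbest = x.copy()                                      # Step 3
    pbest_val = np.apply_along_axis(f, 1, x)
    g = int(np.argmin(pbest_val))
    gbest, gbest_val = pbest[g].copy(), float(pbest_val[g])
    flagnum = 0
    for _ in range(iters):                                # Step 8
        r1, r2 = rng.random((n, dim)), rng.random((n, dim))
        pull = c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)
        if flagnum > limit:                               # Step 5
            v = w * v + pull                              # Step 6: standard rule
        else:
            v = phi * (v + pull)                          # Step 7: compression factor
        x = np.clip(x + v, lower, upper)
        val = np.apply_along_axis(f, 1, x)
        better = val < pbest_val
        pbest[better], pbest_val[better] = x[better], val[better]
        g = int(np.argmin(pbest_val))
        if pbest_val[g] < gbest_val:                      # Step 4
            gbest, gbest_val = pbest[g].copy(), float(pbest_val[g])
            flagnum = 0
        else:
            flagnum += 1
    return gbest, gbest_val

best_x, best_val = gpscf_pso(lambda z: float(np.sum(z ** 2)), dim=2,
                             lower=-10.0, upper=10.0, iters=200)
```

The example call minimizes a two-dimensional sphere function, chosen only because its optimum at the origin is easy to check.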

Algorithm 1: GPSCF-PSO algorithm

3.3 Algorithm Performance Testing

The efficacy of the GPSCF-PSO algorithm was assessed against four other PSO algorithms on ten benchmark test functions of varied types to verify the superiority of the improved algorithm.

3.3.1 Benchmark Functions

Ten benchmark functions, frequently used in CEC2019 [34] and cited earlier [35, 36], were selected for the experimental tests (see https://www.sfu.ca/~ssurjano/optimization.html).

The principal characteristics of these functions are presented in Table 4. They serve to compare and validate the improved algorithm’s superiority over the comparison algorithms. In these functions, x denotes the independent variable; D represents the dimension; Range indicates the range of values for the independent variable; and Xmin denotes the global optimal position of the function.
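As a concrete illustration, two functions that are named later in Sect. 3.3.4, SumSquares and Rastrigin, can be written as below. The formulas are the standard textbook definitions; whether Table 4 uses exactly these forms and ranges is an assumption.

```python
import numpy as np

def sum_squares(x):
    """SumSquares: f(x) = sum_i i * x_i^2, with global minimum 0 at the origin."""
    x = np.asarray(x, dtype=float)
    i = np.arange(1, x.size + 1)
    return float(np.sum(i * x ** 2))

def rastrigin(x):
    """Rastrigin: f(x) = 10*D + sum_i (x_i^2 - 10*cos(2*pi*x_i)), minimum 0 at the origin."""
    x = np.asarray(x, dtype=float)
    return float(10.0 * x.size + np.sum(x ** 2 - 10.0 * np.cos(2.0 * np.pi * x)))
```

SumSquares is unimodal, whereas Rastrigin has many regularly spaced local minima, which is why the pair is useful for separating convergence speed from the ability to escape local optima.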

3.3.2 Compared Algorithms and Parameter Settings

To ensure a rigorous comparison, the standard PSO, Linear Decreasing Inertia Weight PSO (LD-PSO) [37], PSO incorporating compression factors (CF-PSO) [38], and PSO employing good-point sets (GP-PSO) were used as comparison algorithms. All algorithms were configured with identical parameters for fairness in testing. The population size was set at 100, the self-learning factor c1 and the global learning factor c2 were both set at 2.05, and the initial inertia weight was 0.9. For the LD-PSO algorithm, the inertia weight decreased linearly from 0.9 to 0.3, that is, w = 0.9 − 0.6·(t/T), where t is the current iteration count and T is the total number of iterations.
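A linear schedule between the two stated endpoints of the LD-PSO inertia weight can be sketched as follows. The function name and the closed form are illustrative and are derived only from the endpoints 0.9 and 0.3.

```python
def linear_inertia(t, T, w_start=0.9, w_end=0.3):
    """Inertia weight that falls linearly from w_start at t = 0 to w_end at t = T."""
    return w_start - (w_start - w_end) * t / T

# Weights at the start, midpoint and end of a 1000-iteration run.
schedule = [linear_inertia(t, 1000) for t in (0, 500, 1000)]
```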

3.3.3 Comparison with Other Algorithms

Each algorithm was independently executed 50 times on each function, with the maximum number of iterations, T, set at 1000. Evaluation indicators included the best value, mean, and standard deviation (denoted as std). The experimental results of the five algorithms are shown in Table 5.

According to Table 5, within the specified number of iterations, the proposed algorithm reached the theoretical optimal values on six functions. On the remaining four functions, although the theoretical optimal value was not achieved, the proposed algorithm generally found averages closer to the theoretical optimal values than the other algorithms and exhibited lower standard deviations, indicating higher convergence accuracy. However, its optimization performance was suboptimal for certain functions, such as F9 and F10, where it was outperformed by the LD-PSO algorithm. This suggests that the proposed algorithm requires further enhancement to improve its robustness on complex functions.
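The three indicators can be computed from the final objective values of the independent runs as sketched below. `run_once` is a hypothetical callable that takes a seed and returns the final best value of one run, and the use of the population standard deviation is an assumption.

```python
import numpy as np

def summarize_runs(run_once, n_runs=50):
    """Collect the final value of each independent run and report best, mean and std."""
    finals = np.array([run_once(seed) for seed in range(n_runs)], dtype=float)
    return {
        "best": float(finals.min()),
        "mean": float(finals.mean()),
        "std": float(finals.std()),   # population standard deviation (ddof = 0)
    }

# Stand-in for an optimizer: the "result" of run k is simply k.
summary = summarize_runs(lambda seed: float(seed))
```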

3.3.4 Analysis of Convergence Performance

To visually demonstrate the optimization process, search history graphs for each test function were selected, with four graphs per function shown in Fig. 11. These graphs illustrate the initial broad global search by the particle swarm within the defined search space, followed by more focused local searches, culminating in as close a convergence as possible to the global optimal position.

Fig. 11 Two-dimensional diagrams of the iterative optimization history of the GPSCF-PSO algorithm on ten functions: a the first five functions; b the last five functions

In addition, to further assess the convergence of the proposed algorithm, comparisons with four other algorithms were conducted on 10 functions. Owing to space constraints, not all convergence curves can be displayed. The iterative convergence curves for the SumSquares and Rastrigin functions are depicted in Figs. 12 and 13.

The experimental results indicate that, compared to the four other algorithms, the improved PSO algorithm proposed in this study achieves higher accuracy within the same number of iterations. Even with prolonged stagnation in the later stages of iteration, the optimization values continue to decrease significantly and the curve becomes smoother. This demonstrates that the improvements to the algorithm, through the integration of a good-point set and an adaptive compression factor, have resulted in greater stability, improved convergence accuracy, and an enhanced ability to escape local optima.

4 Optimized GRU for Porosity Prediction Based on the EL and Improved PSO Algorithm

The EL-IPSO-GRU model was constructed for porosity prediction. The logging data selected by the EL strategy based on committee voting were used as input for the model. The GPSCF-PSO algorithm was used to obtain the optimal hyper-parameters of the model. Then, the porosity was predicted using the parameters GR, AC, etc. By comparison with the LSTM, GRU, and EL-GRU models, the prediction performance of the proposed model was verified.

4.1 EL-IPSO-GRU Model

Key parameters of the traditional GRU are typically set from personal experience, and repeated manual adjustment often results in suboptimal accuracy and reliability. Consequently, the GPSCF-PSO algorithm was employed to determine optimal values for the number of hidden layers, batch size, and learning rate to minimize human intervention and enhance accuracy and reliability. The hierarchical structure of the EL-IPSO-GRU model is shown in Fig. 14.

The EL-IPSO-GRU model was implemented as follows.

Step 1: Define the structural parameters and hyper-parameters of the GRU that need to be optimized, set the range for parameter optimization, and identify the target for the GPSCF-PSO algorithm.

Step 2: Establish the fitness function, utilizing the Mean Squared Error (MSE) as the evaluation criterion for each iteration, where a smaller MSE value indicates a better position.

Step 3: Initiate the population using a good-point set to determine the initial hyper-parameter combinations for the GRU neural network.

Step 4: Assess the fitness of the initial population, adjust particle positions based on fitness values, and cyclically feed the newly optimized individuals back into the GRU, until the termination condition is met.

Step 5: Employ the optimally generated individuals by the GPSCF-PSO algorithm as the ideal hyper-parameters for the GRU neural network, culminating in the trained EL-IPSO-GRU model.
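Steps 1 and 2 can be made concrete with a small sketch that maps a particle position to a hyper-parameter combination and scores it by MSE. The search ranges, the log-scale treatment of the learning rate, and the `train_and_predict` callable are hypothetical placeholders; the actual ranges and training routine are not given in this section.

```python
import numpy as np

# Hypothetical search ranges, chosen only for illustration.
BOUNDS = {
    "hidden_layers": (1, 4),
    "batch_size": (16, 256),
    "learning_rate": (1e-4, 1e-1),
}

def decode(position):
    """Map a particle in [0, 1]^3 to a GRU hyper-parameter combination."""
    u = np.clip(np.asarray(position, dtype=float), 0.0, 1.0)
    lo, hi = BOUNDS["hidden_layers"]
    layers = int(round(lo + u[0] * (hi - lo)))
    lo, hi = BOUNDS["batch_size"]
    batch = int(round(lo + u[1] * (hi - lo)))
    lo, hi = BOUNDS["learning_rate"]
    lr = float(lo * (hi / lo) ** u[2])          # log-uniform between the bounds
    return {"hidden_layers": layers, "batch_size": batch, "learning_rate": lr}

def fitness(position, train_and_predict, y_val):
    """MSE of the predictions produced with the decoded hyper-parameters (smaller is better)."""
    params = decode(position)
    y_pred = np.asarray(train_and_predict(params), dtype=float)
    return float(np.mean((y_pred - np.asarray(y_val, dtype=float)) ** 2))

# Stub in place of real GRU training: always predicts the same two values.
score = fitness([0.5, 0.5, 0.5], lambda params: [1.0, 2.0], [1.0, 4.0])
```

In a full pipeline, `train_and_predict` would train a GRU with the given settings and return its predictions on held-out data; the optimizer only ever sees the scalar returned by `fitness`.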

The pseudo-code of the EL-IPSO-GRU model for porosity prediction is shown in Algorithm 2.

Algorithm 2: Computing model for porosity prediction

4.2 Porosity Prediction Process

Initially, due to the complex relationship between reservoir porosity and various logging parameters, the committee-voting EL strategy was utilized to identify features significantly correlated with porosity for model input. Subsequently, the enhanced PSO algorithm was employed to determine the hyper-parameters of the GRU. Porosity was then predicted using the EL-IPSO-GRU model, as illustrated in Fig. 15.

- Data preprocessing and logging-feature selection: To address the quality and integrity of logging data, box plots and linear interpolation were initially applied to detect outliers and impute missing values. This was followed by min–max normalization. Integration of Spearman, RF, GRA, and MIC facilitated the development of a committee-voting EL model, enabling the selection of sensitive and critical logging features as inputs for the porosity prediction model based on the GRU. The selected data were divided into training and testing sets at an 8:2 ratio.

- Model construction and training: Logging data from the training set were used as input with porosity as the output. Concurrently, settings for relevant parameters were established. The GPSCF-PSO algorithm was then applied to optimize the number of hidden layers, batch size, and learning rate for the GRU neural network. The model’s performance was evaluated using MSE, MAE and R2, with the final trained EL-IPSO-GRU model achieved after multiple training iterations.

- Porosity prediction and model evaluation: The trained neural network model was utilized to predict porosity in the test set. The performances of LSTM, GRU, and EL-GRU models were assessed by comparing their prediction results with actual values.
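The preprocessing and selection stage can be sketched as follows. Several details are assumptions made for illustration: the 1.5 × IQR box-plot rule, filling gaps before outlier detection, a top-k majority vote as the committee rule, and an unshuffled 8:2 split. The score arrays passed to `committee_vote` are hypothetical stand-ins for the outputs of Spearman, RF, GRA and MIC.

```python
import numpy as np

def fill_linear(x):
    """Impute NaN gaps in a log curve by linear interpolation over the sample index."""
    x = np.asarray(x, dtype=float).copy()
    idx = np.arange(x.size)
    missing = np.isnan(x)
    x[missing] = np.interp(idx[missing], idx[~missing], x[~missing])
    return x

def iqr_mask(x, k=1.5):
    """Box-plot rule: True for samples inside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, q3 = np.percentile(x, [25, 75])
    iqr = q3 - q1
    return (x >= q1 - k * iqr) & (x <= q3 + k * iqr)

def min_max(x):
    """Scale values to the interval [0, 1]."""
    return (x - x.min()) / (x.max() - x.min())

def committee_vote(scores, top_k, min_votes):
    """Each method votes for its top_k features; keep features with at least min_votes."""
    n_features = len(next(iter(scores.values())))
    votes = np.zeros(n_features, dtype=int)
    for s in scores.values():
        votes[np.argsort(s)[::-1][:top_k]] += 1
    return np.flatnonzero(votes >= min_votes)

def split_8_2(X, y):
    """First 80% of the samples for training, the remaining 20% for testing."""
    cut = len(X) * 8 // 10
    return X[:cut], X[cut:], y[:cut], y[cut:]

curve = fill_linear([1.0, np.nan, 3.0, 4.0, 100.0])
clean = min_max(curve[iqr_mask(curve)])
selected = committee_vote(
    {"spearman": [0.9, 0.1, 0.8], "rf": [0.7, 0.2, 0.9],
     "gra": [0.1, 0.9, 0.8], "mic": [0.6, 0.5, 0.4]},
    top_k=2, min_votes=3)
```

In the toy example, the value 100.0 is rejected by the box-plot rule, and features 0 and 2 are retained because three of the four methods rank them in their top two.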

4.3 Experimental Results and Analysis

4.3.1 Model Comparison and Parameter Settings

Following preprocessing, 15,000 logging data points from well A01 were input into the deep learning models. LSTM and GRU are established models for sequence prediction tasks, including time series forecasting, and therefore provide a baseline for evaluating the EL-IPSO-GRU model. Comparing the proposed model with these established models shows whether it outperforms existing methods. Thus, the EL-IPSO-GRU model was compared with the LSTM, GRU, and EL-GRU models in predicting porosity, as detailed in Table 6.

4.3.2 Model Performance Verification

Each method was independently run ten times on the training and testing sets, using MSE, MAE, and R2 as the evaluation metrics. Tables 7 and 8 display the errors and determination coefficients for the four methods.

It is evident from Tables 7 and 8 that, compared to the other three methods, the proposed model exhibits the smallest error, the highest data fitting degree, and superior prediction performance for porosity on both the training and testing sets.
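For reference, the three metrics can be computed as in the sketch below, which uses the standard definitions of MSE, MAE and the coefficient of determination; the function name is illustrative.

```python
import numpy as np

def regression_metrics(y_true, y_pred):
    """Return (MSE, MAE, R2) for predicted versus measured values."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_pred - y_true
    mse = float(np.mean(err ** 2))
    mae = float(np.mean(np.abs(err)))
    ss_res = float(np.sum(err ** 2))
    ss_tot = float(np.sum((y_true - y_true.mean()) ** 2))
    return mse, mae, 1.0 - ss_res / ss_tot

mse, mae, r2 = regression_metrics([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 5.0])
```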

In addition, the error-iteration curves of the four methods are depicted in Fig. 16. The loss of the proposed model decreases most rapidly and converges to the optimal result earliest. Its convergence speed and accuracy are significantly better than those of the other three methods, indicating superior performance, high convergence accuracy, and stability.

A violin plot displays the distribution and probability density of multiple sets of data. It combines the characteristics of box plots and density plots and conveys the density of the data more clearly than a box plot alone. Therefore, violin plots were used to show the distribution of MSE and MAE for the four models over ten runs on a test set comprising 12,000 samples, as depicted in Fig. 17.

The experimental results in Fig. 17 show that the MSE of the LSTM model ranges from 2.89E–05 to 6.80E–05, with a mean of 5.88E–05, and its MAE ranges from 0.0155 to 0.025, with a mean of 0.021. The MSE of the GRU model ranges from 1.91E–05 to 8.04E–05, with a mean of 2.27E–05, and its MAE ranges from 0.01 to 0.022, with a mean of 0.0155. The MSE of the EL-GRU model ranges from 8.22E–06 to 2.96E–05, with a mean of 1.07E–05, and its MAE ranges from 0.008 to 0.017, with a mean of 0.0092. The MSE of the proposed model ranges from 4.56E–06 to 1.15E–05, with a mean of 7.19E–06, and its MAE ranges from 0.007 to 0.01, with a mean of 0.0082. Compared to the other three methods, the proposed model demonstrates the highest accuracy, with decreases in mean MSE of 32% to 87% and in mean MAE of 11% to 61%, and an improvement in R2 of 0.7% to 3.4%. The predicted porosity values are relatively close to the actual values, and the errors for the majority of samples fall within a narrow range, suggesting that the proposed model demonstrates good stability and high prediction accuracy.
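The ends of the quoted reduction ranges can be checked from the mean values reported above, taking EL-GRU as the closest baseline and LSTM as the furthest. The helper name is illustrative.

```python
def relative_reduction(baseline, proposed):
    """Percentage decrease of `proposed` relative to `baseline`."""
    return 100.0 * (baseline - proposed) / baseline

# Mean test-set values quoted in the text.
mse_vs_el_gru = relative_reduction(1.07e-5, 7.19e-6)   # about 32.8 %
mse_vs_lstm = relative_reduction(5.88e-5, 7.19e-6)     # about 87.8 %
mae_vs_el_gru = relative_reduction(0.0092, 0.0082)     # about 10.9 %
mae_vs_lstm = relative_reduction(0.021, 0.0082)        # about 61.0 %
```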

4.3.3 Analysis of the Porosity Prediction Results

To further assess the effectiveness and accuracy of the proposed method, the porosity predicted by the proposed model for 3000 test samples was compared with the actual values, as depicted in Fig. 18. Figure 18 shows a minimal discrepancy between the predicted and true porosity values and a strong correlation between the predicted and actual curves, with a determination coefficient of 0.99.

In addition, a visual analysis of the absolute error between the predicted porosity results of the four algorithms and the actual values on the test set was conducted, as shown in Fig. 19. Due to space constraints, the prediction results on the training set are not shown.

The comparison reveals that the absolute error of the proposed model is lower than that of the other three methods, and the overall error between the predicted and actual values is within a small range.

5 Discussion

The experimental results indicate that the committee-voting EL strategy employed in this paper for optimal logging-feature selection successfully eliminates data redundancy and multicollinearity while facilitating dimensionality reduction. This approach provides the simplest and most relevant sample data for the model, enhancing computational efficiency and prediction accuracy. The comparison shows that EL-GRU achieves lower MSE and MAE and a higher R2 than the GRU model.

Furthermore, the PSO algorithm was enhanced by integrating the good-point set and compression factor and was used to derive the optimal hyper-parameters for the GRU. A comparison with four other PSO algorithms on 10 benchmark test functions demonstrated that the improved PSO algorithm achieves higher convergence accuracy and enhanced stability.

The final evaluation shows that the proposed method improves the accuracy and reliability of the model, yielding superior porosity prediction results in actual work areas. The MSE on the test set was 7.19 × 10⁻⁶, the MAE was 0.0082, and R2 reached 0.99. This not only addresses the challenge of obtaining comprehensive porosity distribution characteristics of the entire work area in the absence of continuous coring but also markedly improves the efficiency and accuracy of porosity prediction, contributing to the reduction of costs in oil and gas exploration and development.

6 Conclusions

To address issues of redundant and nonlinear logging data in machine-learning-based reservoir porosity prediction, as well as the effects of empirical hyper-parameter values in neural networks on model accuracy and reliability, a logging parameter selection method based on EL and committee voting was introduced. Enhancements were applied to the PSO algorithm to secure the optimal hyper-parameter combination for the GRU model. Subsequently, the porosity was predicted using the optimized GRU model, yielding the following results.

(1) The optimal logging parameter selection method, utilizing committee voting and EL, effectively addresses the nonlinear mapping problem between logging data and reservoir parameters, eliminates redundant information and multicollinearity, reduces dimensionality, overcomes the limitations of single-feature selection methods, provides simple, high-quality data input for machine-learning-based porosity prediction models, improves prediction accuracy, and reduces computational space and costs.

(2) The PSO algorithm was enhanced by integrating good-point sets, a compression factor, and an adaptive strategy, which resolved the issues of poor global search capability due to random initialization of the population and the tendency to converge to local optima in the later iterations. A comparison with four other PSO algorithms on 10 benchmark test functions demonstrated that the improved PSO algorithm achieves higher convergence accuracy and enhanced stability.

(3) By refining the PSO algorithm to obtain the optimal hyper-parameter combination of the GRU model, the complexity and uncertainty of manual parameter adjustment are minimized. The porosity prediction model, based on the optimized GRU, has shown promising performance in actual work areas, not only addressing the difficulty of obtaining complete porosity distribution characteristics under limited coring and high testing costs but also significantly improving the efficiency, accuracy, and reliability of porosity prediction. This offers valuable guidance for oil and gas exploration and development. Because the long-term dependency of the GRU was not considered in this study, other methods such as attention mechanisms can be introduced in future work to address this issue. Moreover, future research will focus on the generalization ability of the model in combination with its practical applications in real work areas, providing insights into its engineering applicability.

Availability of Data and Material

All available data are present in the manuscript. All data, models, and code generated or used during the study appear in the submitted article.

Abbreviations

- GRU: Gated recurrent unit
- LSTM: Long short-term memory
- EL: Ensemble learning
- PSO: Particle swarm optimization
- DNN: Deep neural network
- CNN: Convolutional neural network
- RF: Random forest
- GRA: Grey relational analysis
- GPSCF-PSO: PSO based on Good-Point Set and adaptive Compression Factor
- EL-IPSO-GRU: GRU based on EL and Improved PSO

References

Liu, S.Y., Qu, F.L., Zhou, F., et al.: Deep learning reservoir parameter prediction based on seismic attribute reduction: take ledong area of yinggehai basin as an example. CT Theory Appl. 31(5), 577–586 (2022)

Yihao, W.U., Shuangfang, L.U., Fangwen, C.H.E.N., et al.: Quantitative characterization of organic pores in muddy shale reservoirs. Spec. Oil Gas Reserv. 22(5), 65–68 (2015)

Zhong, G., Chen, L., Liao, M., et al.: A comprehensive logging evaluation method of shale gas reservoir quality. Nat. Gas Ind. 40(2), 54–60 (2020)

Otchere, D.A., Arbi Ganat, T.O., Gholami, R., et al.: Application of supervised machine learning paradigms in the prediction of petroleum reservoir properties: comparative analysis of ANN and SVM models. J. Pet. Sci. Eng. 200, 108182 (2021)

Zhang, Q.G., Zhang, Y.W., Lin, X.D.: Application of PCR and LM-BP Neural Network in porosity prediction. Inner Mong. Petrochem. Ind. 1, 1–7 (2022)

Zhang, J.C., Deng, J.G., Tan, Q., et al.: Reconstruction of well logs based on XG-Boost. Oil Geophys. Prospect. 57(03), 97–705+496 (2022)

Korjani, M., Popa, A., Grijalva, E., et al.: A New approach to reservoir characterization using deep learning neural networks. (2016). https://doi.org/10.2118/180359-MS

Liu, H., Xu, Y., Luo, Y.Q., et al.: Permeability prediction of porous media using deep-learning method. J. Mech. Eng. 58(14), 328–336 (2022)

An, P., Yang, X., Zhang, M.: Porosity prediction and application with multiwell-logging curves based on deep neural network. SEG Technical Program Expanded Abstracts, pp. 819–823 (2018)

Yang, L.Q., Zha, B., Chen, W.: Prediction method of reservoir porosity based on deep neural network. China Science Paper 15(1), 73–80 (2020)

Yang, L.Q., Chen, W., Zha, B.: Prediction and application of reservoir porosity by convolutional neural network. Prog. Geophys. 34(4), 1548–1555 (2019)

Shao, R.B., Xiao, L.Z., Liao, G.Z., et al.: A reservoir parameters prediction method for geophysical logs based on transfer learning. Chin. J. Geophys. 65(02), 796–808 (2022)

Wang, J., Cao, J.X., Zhou, X.: Reservoir porosity prediction based on deep bidirectional recurrent neural network. Prog. Geophys. 37(01), 267–274 (2022)

An, P., Cao, D.P., Zhao, B.Y., et al.: Reservoir physical parameters prediction based on LSTM recurrent neural network. Prog. Geophys. 34(5), 1849–1858 (2019)

Chen, W., Yang, L.Q., Zha, B., et al.: Deep learning reservoir porosity prediction based on multilayer long short-term memory network. Geophysics 85(4), WA213–WA225 (2020)

Wang, X., Jiang, T., Zhou, M., et al.: Application of neighborhood information-enhanced MLSTM in reservoir parameter prediction: a case study of heterogeneous carbonate reservoirs. Progress Geophys. (2024). https://kns-cnki-net.webvpn.nepu.edu.cn/kcms/detail/11.2982.P.20231103.1753.051.html. Accessed 06 Nov 2023

Wang, J., Cao, J.X., You, J.C., et al.: Prediction of reservoir porosity permeability and saturation based on a gated recurrent unit neural network. Geophys. Prospect. Pet. 59(4), 616–627 (2020)

Song, H., Chen, W., Li, M.J., et al.: A method to predict reservoir parameters based on convolutional neural network-gated recurrent unit. Pet. Geol. Recov. Eff. 26(05), 73–78 (2019)

Wang, M., Yang, T., Tang, H.M., et al.: Prediction for total porosity of shale based on transfer deep neural network. J. Southwest Pet. Univ. (Science & Technology Edition) 45(06), 69–79 (2023)

Pan, S., Zheng, Z., Guo, Z., et al.: An optimized XGBoost method for predicting reservoir porosity using petrophysical logs. J. Pet. Sci. Eng. 208, 109520 (2022)

Huo, F., Li, Q., Dong, H., et al.: Prediction method of reservoir evaluation parameters based on improved stacking fusion model. Progress Geophys. (2024). https://kns-cnki-net.webvpn.nepu.edu.cn/kcms/detail/11.2982.p.20240612.0920.002.html. Accessed 12 Jun 2024

Dai, S., Li, M., Tang, J., et al.: Seismic and well logs integration for reservoir parameter prediction and uncertainty evaluation based on relevance vector machine optimized by particle swarm optimization. Oil Geophys. Prospect. 58(6), 1436–1445 (2023)

Wei, X.X., Tao, D., Huang, H.J.: Optimizing BP neural network to predict gasoline octane number by improved fruit fly algorithm. J. Shandong Univ. (Engineering Science). 23, 20–28 (2023)

Xie, J.B., Jiang, F., Du, J.W., et al.: Stock price prediction based on an improved artificial fish swarm algorithm and RBF neural network. Comput. Eng. Sci. 44(11), 11 (2022)

Zhu, X.H., Shen, G.J., Xia, P.F., et al.: Model based on spirally evolution glowworm swarm optimization and back propagation neural network and its application in PPP financing risk prediction. Comput. Sci. 49(S1), 667–674 (2022)

Cao, K., Tan, C., Liu, H., et al.: Data fusion algorithm of wireless sensor network based on BP neural network optimized by improved grey wolf optimizer. J. Univ. Chin. Acad. Sci. 39(2), 232–239 (2022)

Zhang, J., Hao, Q.N.: Forecast of photovoltaic power generation based on firework algorithm optimized BP neural network. Comput. Technol. Dev. 031(010), 146–153 (2021)

Xiong, C.H., Zhao, F., Qin, B.C., et al.: Application of particle swarm optimization support vector machine in reservoir prediction. Adv. Geosci. 10(1), 18–26 (2020)

Miaomiao, L., Dan, Y., Jingfeng, G., et al.: An optimized neural network prediction model for reservoir porosity based on improved shuffled frog leaping algorithm. Int. J. Comput. Intell. Syst. 15, 37 (2022). https://doi.org/10.1007/s44196-022-00093-6

Xiong, Z.M., Guo, H.Y., Wu, Y.X.: Review of missing data processing methods. Comput. Eng. Appl. 57(14), 27–38 (2021). https://doi.org/10.3778/j.issn.1002-8331.2101-0187

Dong, M.C., Chai, R.K., Xin, J., et al.: Study on prediction of dynamic permeability based on improved BP neural network. J. Shaanxi Univ. Sci. Technol. 36(1), 90–95 (2018)

Zhou, W., Zhao, H.H., Jiang, Y.F., et al.: Logging data reconstruction based on cascade bidirectional long short-term memory neural network. Oil Geophys. Prospect. 57(6), 1473–1480 (2022)

Jia, S., Lin, S., Yang, M., et al.: Short-time traffic flow prediction on narrow roads based on improved whale-optimized GRU. Comput. Eng. (2024). https://kns-cnki-net.webvpn.nepu.edu.cn/kcms/detail/31.1289.tp.20240417.1519.005.html. Accessed 19 Apr 2024

Liang, J.J., Qu, B.Y., Gong, D.W., et al.: Problem definitions and evaluation criteria for the CEC 2019 special session on multimodal multiobjective optimization. Wellington-New Zealand, (2019).

Liu, J., Li, D., Wu, Y., et al.: Lion swarm optimization algorithm for comparative study with application to optimal dispatch of cascade hydropower stations. Appl. Soft Comput. J. (2020). https://doi.org/10.1016/j.asoc.2019.105974

Wang, Y., Yu, Y., Gao, S., et al.: A hierarchical gravitational search algorithm with an effective gravitational constant. Swarm Evol. Comput. (2019). https://doi.org/10.1016/j.swevo.2019.02.004

Choudhary, S., Sugumaran, S., Belazi, A., et al.: Linearly decreasing inertia weight PSO and improved weight factor-based clustering algorithm for wireless sensor networks. J. Ambient Intell. Humaniz. Comput. 14(6), 6661–6679 (2023)

Wang, P.F., Ren, L.J., Gao, Y.: PSO algorithm based on improved constraction factor. Electron. Sci. Technol. 35(05), 14–18+46 (2022)

Guo, Q.H., Li, Y., Yang, D.S.: Convergence analysis and application of an improved sparrow search algorithm. Control and Decis (2024). https://doi.org/10.13195/j.kzyjc.2023.1065

Funding

This work was supported by the Natural Science Foundation of Hebei Province (D2023107002), Natural Science Foundation of Xinjiang Uygur Autonomous Region (2022D01A59), Special Project of Northeast Petroleum University Characteristic Domain Team (2022TSTD-03), Postdoctoral Scientific Research Development Fund of Heilongjiang Province (LBH-Q20073), Excellent Young and Middle-aged Innovative Team Cultivation Foundation of Northeast Petroleum University (KYCXTDQ202101), Basic Research Support Plan for Excellent Young Teachers in Heilongjiang Province (YQJH2023073), Northeast Petroleum University Guided Innovation Fund (YJSKCSZ_202303).

Contributions

Liu MM and Xu HR contributed to the algorithm design and performed the experiments, and wrote the manuscript. Jia Y and Xi JH helped design the algorithm and revised the manuscript. Zhao FD and Zhang Q helped complete simulation experiments and analysis and proofread the manuscript.

Ethics declarations

Conflict of interest

All authors declare that they have no competing interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liu, M., Xu, H., Zhao, F. et al. Porosity Prediction Based on Ensemble Learning for Feature Selection and an Optimized GRU Improved by the PSO Algorithm. Int J Comput Intell Syst 17, 189 (2024). https://doi.org/10.1007/s44196-024-00600-x
