Abstract

Object tracking is a significant and rapidly developing subfield of image processing and machine vision, and its many potential applications have attracted considerable attention in recent years. The effectiveness of detecting and tracking moving targets depends directly on the quality of the motion detection algorithms. This paper presents a new method for estimating object trajectories by linearizing them. Estimating the movement paths of objects in dynamic and complex environments is a fundamental challenge in fields such as surveillance systems, autonomous navigation, and robotics. Existing methods, such as the Kalman filter and the particle filter, each have strengths and weaknesses. The Kalman filter is suitable for linear systems but less effective for nonlinear systems, while the particle filter handles nonlinearity better but requires more computation. The main goal of this research is to improve the accuracy and efficiency of trajectory estimation by combining path linearization techniques with existing advanced methods. In this method, the nonlinear model of the object's path is first transformed into a simpler linear model using linearization techniques. The Kalman filter is then used to estimate the states of the linearized system. This approach simplifies the calculations while increasing the estimation accuracy. In the subsequent step, a particle filter-based method is employed to manage noise and sudden changes in the object's trajectory. Combining the two methods leverages the advantages of both, resulting in a more accurate and robust estimate. Experimental results show that the proposed method performs better than traditional methods, achieving higher accuracy in various conditions, including those with high noise and sudden changes in the movement path.
Specifically, the proposed approach improves movement forecasting accuracy by about 12% compared to existing methods. In conclusion, this research demonstrates that object trajectory linearization can be an effective tool for improving object tracking estimation. Combining this technique with existing advanced methods can enhance the accuracy and efficiency of tracking systems. Consequently, the results of this research can be applied to the development of advanced surveillance systems, self-driving cars, and other applications.

1 Introduction

Real-time object tracking is one of the most rapidly evolving fields in computer vision [1, 2]. The objective of object tracking is to estimate the location and motion parameters of a target in a video sequence based on its initial position in the first frame. Research in object tracking significantly enhances our understanding of object motion and structure [3,4,5]. Object tracking has numerous industrial and scientific applications, including behavior recognition, traffic monitoring, and driving automation [6]. However, some tracking applications may face difficulties if there is insufficient prior information about the object's appearance, scene settings, irregularities, and ambient lighting conditions [7]. To address this issue, researchers have developed various object trackers that improve accuracy by training on motion trajectory databases before real-time tracking [8,9,10].

Objects can generally be classified into three primary categories: rigid, non-rigid, and articulated [11]. Rigid bodies move through translation and rotation, while articulated objects consist of multiple rigid parts connected by joints [12]. Most video applications, including surveillance, entertainment, and data retrieval, typically treat all objects as rigid [13].

Image acquisition systems capture a two-dimensional representation of a scene or object in the three-dimensional world [14,15,16]. When an object moves, its path can be estimated as a two-dimensional trajectory based on spatial–temporal changes in the image [17]. These changes can be categorized into major and minor changes. Major changes may result from camera movement or significant lighting alterations, while minor changes can be caused by body movements, local lighting variations, and environmental noise such as wind or tree movement [18,19,20]. Motion estimation techniques are used to estimate the apparent motion of objects based on the motion itself or its effects, such as changes in sound and light [21]. The aim of motion estimation is to assign a motion vector (displacement or velocity) to each pixel in the image [22]. This process often relies on assumptions about the object's characteristics, motion, or image properties tailored to the specific application [23]. Issues such as unwanted camera movement, occlusion, noise, missing texture, and brightness changes can pose significant challenges for motion estimation.

To enhance motion estimation performance, researchers have developed variable-size block matching algorithms for motion estimation, object tracking, comparison, and video data compression. A detailed analysis of motion assessment using block matching algorithms, as presented in reference [24], shows that this approach is frequently used in video encoding applications. However, block matching is also recognized as an efficient and reliable technique for various motion estimation tasks.

An approach to tracking was put forth in [25], which combined a histogram of oriented gradients (HOG) descriptor with a convolutional neural network (CNN) to improve the detection rate [26]. To lower the number of false positives, the authors cleaned and binarized the 120 × 32 images used to train the system. In the trials, the background was removed from each frame, after which morphological and Laplacian of Gaussian (LoG) operations were applied. To further decompose the structural information of the objects in the collected video frames, features were also extracted from the images using the HOG descriptor [27]. The appearance features were saved in an array before being sent to the CNN for additional processing. The system was deployed and tested for real-time multi-object tracking on several metropolitan streets using the EPFL multi-camera pedestrian data set. The results showed that the proposed strategy improved data transfer and detection rates [28, 29]. It also outperformed an advanced online technique, achieving the highest specificity and accuracy. A multi-camera, multi-person tracking framework for live-streaming video, suitable for both overlapping and non-overlapping views, is presented in [30, 31]. A time-based motion model is proposed to account for the precise time intervals between captured frames. Additionally, because the orientations and exposures of the cameras vary, tracked pedestrians crossing camera boundaries can produce misleading matches. The tracking identity of a tracked person is likely to change when they re-enter the same camera view, because their motion model is disabled while they are outside the camera's field of view [32, 33].

In modern object tracking systems, one of the main challenges is to accurately estimate the movement path of objects in dynamic and complex environments. This challenge is especially important in sensitive applications, such as surveillance systems, automatic navigation and robotics. Existing methods for estimating the trajectory of objects, including the Kalman filter and the particle filter, each have limitations. The Kalman filter works by assuming that the system is linear and the noise is Gaussian, whereas many real systems are nonlinear and have non-Gaussian noise. On the other hand, the particle filter, despite its higher accuracy in non-linear environments, is less efficient due to the need for many calculations.

The existing gap in the current research in the field of object trajectory estimation is due to the limitations of the existing methods. The Kalman filter loses its efficiency when faced with nonlinear systems and non-Gaussian noise, and the particle filter is not suitable for many real-time applications due to its high computational complexity. This gap indicates the need to develop a method that can combine the advantages of both methods while addressing their limitations.

The main research question is how the accuracy and efficiency of estimating the movement paths of objects in dynamic and complex environments can be improved by combining existing methods. In other words, is it possible to achieve a more accurate and efficient estimate by linearizing the movement path of objects and combining this technique with advanced methods?

To address this gap and answer the research question, this paper proposes a new method for estimating object trajectories that linearizes the movement path and combines the result with the Kalman filter and the particle filter. In this method, the nonlinear model of the object's path is first transformed into a simpler linear model using linearization techniques. Then, the Kalman filter is used to estimate the states of the linearized system. To manage noise and sudden changes in the movement path, a particle filter-based method is also used. Combining the two methods makes it possible to use the advantages of both and, as a result, provides a more accurate and robust estimate.

This paper is written with the aim of improving the accuracy and efficiency in estimating the tracking of objects in complex and dynamic environments. In many practical applications, such as surveillance systems and self-driving cars, accurate estimation of the trajectory of objects is of great importance. However, there are many challenges, such as sudden changes in the trajectory and the presence of noise in the data, which can reduce the estimation accuracy. The main motivation of the authors is to provide a method that can more accurately predict the movement path of objects and at the same time is resistant to noise and sudden changes using object path linearization methods.

The following sections review sources that survey the available methods for object tracking estimation. These methods include the Kalman filter, the particle filter, and machine learning-based techniques. Each has its own strengths and weaknesses; for example, the Kalman filter is suitable for linear systems but performs worse on nonlinear systems, while the particle filter copes better with nonlinearity but requires heavier computation.

The proposed approach is inspired by the analysis and review of these sources and tries to achieve an optimal solution by combining path linearization techniques with existing methods. In this approach, the advantages of linearization methods are used to simplify complex models and advanced techniques are used to manage noise and sudden changes. Therefore, the existing studies serve as a foundation for the development of the new approach and show how to achieve improved accuracy and efficiency in object tracking estimation by combining different ideas.

The main contributions in this article are summarized as follows:

- Improving the tracking of moving targets by adjusting the Kalman filter's state model.
- Using multiple parallel Kalman filters, one for each moving target, to better track moving targets.
- Using neural networks to combine information and make decisions when identifying targets.

This article is organized as follows: Sect. 2 provides a brief description of the basic principles and the methods used in the research, including motion estimation, the k-means algorithm, and fuzzy logic. Section 3 presents the proposed scheme for motion estimation in two-dimensional space, followed by a brief graphical analysis. Experimental results obtained in two data sets are provided in Sect. 4, and conclusions are presented in Sect. 5.

2 Background

Using the target's prior position and the present observation, the target position is estimated for each input frame during the tracking process. In this study, the k-means algorithm is used to cluster moving objects based on their position and properties so that they can be distinguished from each other. In addition, fuzzy logic is used to define a series of membership functions for estimating the next state of a linear discrete-time system based on prior information. Functioning as an estimator, this fuzzy system helps predict the trajectory of the tracked object.

2.1 Motion Estimation

Motion-based video frame interpolation has produced simple yet efficient approaches. Existing motion-based interpolation techniques often use a pre-built optical flow model or a pyramidal U-Net network for motion estimation, both of which suffer from very large model sizes or an inability to handle a wide range of difficult motion cases [34]. Under the image sequence model, the motion estimation problem is the challenge of estimating the displacement d of every pixel located on the moving object. For ease of use, the image sequence model is expressed as follows:
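A form of the image sequence model that is common in the motion estimation literature is given here as a sketch. It is an assumption rather than a quotation: the symbols I_n (intensity of frame n) and x (pixel position) are introduced for illustration, while d and e(x) follow the surrounding text.

```latex
I_n(\mathbf{x}) = I_{n-1}\bigl(\mathbf{x} + \mathbf{d}(\mathbf{x})\bigr) + e(\mathbf{x}) \tag{1}
```

Under this reading, each pixel of the current frame is explained by a displaced pixel of the previous frame plus an error term that absorbs noise and modeling mismatch.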

Solving this equation amounts to solving a large optimization problem. To choose d for all frames, an appropriate error e(x) must be chosen. The value of d is typically chosen so that the displaced frame difference (DFD) is minimized:
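The displaced frame difference is usually defined as the residual between the current frame and the motion-compensated previous frame. The expressions below are a sketch under that assumption; I_n denotes the intensity of frame n, x a pixel position, and B a block of pixels over which the residual is accumulated (all three are illustrative notation, not taken from the text).

```latex
\mathrm{DFD}(\mathbf{x}, \mathbf{d}) = I_n(\mathbf{x}) - I_{n-1}(\mathbf{x} + \mathbf{d}),
\qquad
\hat{\mathbf{d}} = \arg\min_{\mathbf{d}} \sum_{\mathbf{x} \in \mathcal{B}} \bigl|\mathrm{DFD}(\mathbf{x}, \mathbf{d})\bigr|
```

The absolute value could be replaced by a squared residual; the choice of norm is a design decision that the text leaves open.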

The fact that the motion variable d is an argument of the image function in Eq. (1) makes this problem more difficult to solve. It is generally difficult to recover motion information because the image function cannot be described as an explicit function of position [35].

Many motion estimators use the idea of minimizing some DFD functions. However, the only framework from which all motion estimators can be generated is the Bayesian framework. Since Bayesian analysis is outside the scope of this article, this subject will not be explored here.

Because the motion equation above has two unknowns, the x component and the y component of motion, it cannot be solved in a single frame. Thus, at least two equations are needed to solve it. In other words, at least two coordinate frames are needed to describe the motion [36].
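One common way to obtain enough equations for the two unknowns is to linearize the image model with a first-order Taylor expansion and collect one equation per pixel over a neighborhood, then solve in the least-squares sense. The sketch below illustrates that idea on a synthetic image; the function names, the test pattern, and the model I_n(x) = I_{n-1}(x + d) are assumptions made for illustration and are not the authors' implementation.

```python
import numpy as np

def estimate_displacement(prev, curr):
    """Least-squares estimate of a single global displacement (dx, dy).

    Linearized model: curr - prev ~= gx * dx + gy * dy, one equation per pixel.
    """
    gy, gx = np.gradient(prev)            # derivatives along rows (y) and columns (x)
    inner = (slice(1, -1), slice(1, -1))  # skip borders, where gradients are one-sided
    A = np.stack([gx[inner].ravel(), gy[inner].ravel()], axis=1)
    b = (curr - prev)[inner].ravel()
    d, *_ = np.linalg.lstsq(A, b, rcond=None)
    return d                              # array([dx, dy])

def synthetic_frame(shift_x=0.0, shift_y=0.0, size=64):
    """Smooth test pattern sampled at positions shifted by (shift_x, shift_y)."""
    y, x = np.mgrid[0:size, 0:size].astype(float)
    x = x + shift_x
    y = y + shift_y
    return np.sin(0.2 * x) + np.cos(0.15 * y) + np.sin(0.1 * (x + y))

prev = synthetic_frame()
curr = synthetic_frame(0.3, -0.2)         # curr(x) = prev(x + d) with d = (0.3, -0.2)
print(estimate_displacement(prev, curr))
```

The linearization only holds for small displacements on smooth images, which is why the example uses a sub-pixel shift.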

Different motion estimators can be categorized into multiple types based on how they solve the image sequence model of Eq. (1) for the displacement variable d [37]. A summarized description of these types is presented below:

- Comprehensive search: the most straightforward approach is to use a comprehensive search, i.e., try all possible d values to determine which one minimizes DFD.
- Linearization with Taylor series: this method involves using the Taylor series to linearize the motion equation, making Eq. (1) an explicit function of d.
- Pel-recursive techniques: these recursive methods work by incrementally improving the motion vector estimate over the course of several iterations.
- Optical flow motion estimators: these estimators use additional constraints (for rotation) to enhance motion estimation.
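The comprehensive search in the first item can be sketched as a block matching routine that tries every displacement in a search window and keeps the one with the smallest summed absolute DFD. The block size, search radius, sum-of-absolute-differences cost, and function name are illustrative assumptions.

```python
import numpy as np

def exhaustive_search(prev, curr, top, left, block=8, radius=4):
    """Return the displacement (dy, dx) minimizing the summed absolute DFD.

    The block at (top, left) in `curr` is compared with every candidate block
    at (top + dy, left + dx) in `prev`, i.e. curr(x) ~= prev(x + d).
    """
    target = curr[top:top + block, left:left + block].astype(float)
    best_cost, best_d = np.inf, (0, 0)
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            r, c = top + dy, left + dx
            # Skip candidates that fall outside the previous frame.
            if r < 0 or c < 0 or r + block > prev.shape[0] or c + block > prev.shape[1]:
                continue
            candidate = prev[r:r + block, c:c + block].astype(float)
            cost = np.sum(np.abs(target - candidate))
            if cost < best_cost:
                best_cost, best_d = cost, (dy, dx)
    return best_d

rng = np.random.default_rng(0)
prev = rng.random((64, 64))
curr = np.roll(prev, shift=(2, -3), axis=(0, 1))  # curr[y, x] = prev[y - 2, x + 3]
print(exhaustive_search(prev, curr, top=20, left=20))
```

The cost grows with the square of the search radius, which is the main reason the other estimator families in the list exist.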

This paper presents a new hybrid method based on linearizing object motion in two-dimensional space, predicting the direction and velocity of motion, and finally predicting the next position of the object. Object tracking is the process of extracting a trajectory from a visual sequence by detecting how the object moves in that sequence. Typical examples include determining a robot's position from a sequence of images and tracking hand movements in a human–computer interaction system. For effective motion tracking, the motion estimator should have prior information about the expected characteristics of the motion along a trajectory between frames. Although all of the motion estimators covered in this work can be used for tracking, they might require some post-processing of the estimated motion to guarantee that the target object is tracked correctly from frame to frame. It should be noted that the cases considered in this study are those involving the complete displacement of the object. Thus, motion estimation scenarios are restricted to these cases.

2.2 K-Means Clustering

A quick explanation of the k-means clustering algorithm is given in this section. The proposed method in this work uses the k-means clustering algorithm as a detection filter. The most popular technique for tracking based on color characteristics involves grouping pixel colors into a certain number of clusters (k), and treating the quantity of each color within each cluster as a feature [38]. The k-means algorithm can be used prior to the motion estimate phase for improved tracking. For all of these clusters (k clusters), the center vector can be written as follows [39, 40]:

The Euclidean distance between each pixel and each cluster center is then computed, and every pixel is assigned to the nearest cluster center, as follows [41, 42]:

In this equation, p is the pixel value and n is the total number of pixels in each image. Based on the average gray scale value, each pixel is assigned to the cluster with the closest cluster center. Then, the new cluster centers are compared with the old ones. This process is repeated until the old and newly calculated centers become identical.
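The iteration described above can be sketched as follows for gray scale values. The initialization (centers spread evenly between the minimum and maximum value), the iteration cap, and the function name are assumptions made for illustration.

```python
import numpy as np

def kmeans_gray(pixels, k, max_iter=100):
    """Cluster gray scale values into k groups by nearest cluster center."""
    pixels = np.asarray(pixels, dtype=float).ravel()
    # Assumed initialization: centers spread evenly over the value range.
    centers = np.linspace(pixels.min(), pixels.max(), k)
    labels = np.zeros(pixels.size, dtype=int)
    for _ in range(max_iter):
        # Assign every pixel to the closest center (1-D Euclidean distance).
        labels = np.argmin(np.abs(pixels[:, None] - centers[None, :]), axis=1)
        # Recompute each center as the mean of its members; keep empty clusters as-is.
        new_centers = np.array([
            pixels[labels == j].mean() if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):  # old and new centers coincide
            break
        centers = new_centers
    return centers, labels

centers, labels = kmeans_gray([10, 12, 11, 200, 198, 202], k=2)
print(centers, labels)
```

For color or position features the same loop applies with vector-valued pixels and a vector norm in place of the absolute difference.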

The features used in the method of this study include the position of the pixels at the edges of the moving object and the color characteristics extracted from those pixels. The number of clusters is determined from the number of holes created inside the masks resulting from the background subtraction, which are detected by highlighting those holes.

3 Methodology

Object tracking with motion estimation has a wide range of applications in today’s world [43, 44]. Object tracking with motion estimation is a very common approach for estimating distance vectors between images taken by a camera. Motion estimation involves determining/estimating motion vectors that describe the change from one image to another with minimum possible temporal redundancy. This motion estimation process can be implemented on a pixel basis. The pixel block-based approach has a major drawback in that it depends on the threshold value at which pixel-to-pixel changes appear, but it tends to be less computationally expensive than many other approaches [45]. Block matching algorithms are simple and robust motion estimation schemes operating based on finding the best-matched blocks, which are widely used in video tracking and compression.

Simply identifying a moving object in the scene is what motion detection in video images entails. Motion detection in video surveillance applications refers to the surveillance system's capacity to identify motion and capture events. When a motion is detected, a motion detector typically uses a software-based monitoring algorithm to instruct a CCTV camera to begin recording. Another name for this procedure is activity detection [46]. To evaluate if the operator needs to be warned, an advanced motion detection system can examine the type of motion. In this study, it is assumed that monitoring is done by fixed cameras placed in an outdoor space, which are supposed to detect any motion that crosses the displacement threshold and falls outside the tolerance range. In addition to the inherent usefulness of splitting video streams into a still background and a moving foreground, this approach facilitates motion detection, classification, and analysis and improves the efficiency of subsequent processes, where only moving pixels must be taken into account. There are three common methods for detecting a moving object: optical flow, background subtraction, and frame difference. While the frame difference method is more suitable for dynamic environments, it generally extracts all eligible pixels. Although the background subtraction method offers the most comprehensive feature data, it is particularly susceptible to indirect events and changes in dynamic light [47]. Even when the camera is moving, the optical flow approach can be utilized to find independently moving objects. However, the majority of optical flow techniques require specialized hardware and are computationally challenging when used to process full-frame video streams in real time.

The method of this paper can accurately track an object through a simple procedure with no delay, which makes it suitable for real-time motion estimation. Figure 1 shows the block diagram of the proposed object tracking method based on the motion estimation technique. First, the background subtraction method is used to detect changes and motion of objects. Then, after finding the edges of the objects in the image and extracting various features from their pixels, the detection operation is done with the help of the K-means clustering algorithm based on the number of shadows created from the background subtraction [48, 49]. This process helps achieve a better classification of the pixels of the objects. Finally, the center of gravity of the pixels of each cluster is defined as the location of the object, and its displacements over different frames are observed and assessed. The interesting innovation made in this paper is the estimation of the location of the object in different frames with the proposed hybrid technique based on prior information on the position and velocity of the object. The proposed motion estimation technique is described in the following.
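The first and last stages of this pipeline, background subtraction and locating an object by the center of gravity of its pixels, can be sketched as below. The threshold value and function names are illustrative assumptions, and the clustering stage is omitted so that the mask is treated as a single object.

```python
import numpy as np

def foreground_mask(frame, background, threshold=25):
    """Mark pixels whose absolute difference from the background exceeds a threshold."""
    diff = np.abs(frame.astype(float) - background.astype(float))
    return diff > threshold

def centroid(mask):
    """Center of gravity (x, y) of the foreground pixels, or None if there are none."""
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        return None
    return float(xs.mean()), float(ys.mean())

background = np.zeros((50, 50), dtype=np.uint8)
frame = background.copy()
frame[10:20, 30:40] = 255            # a bright object on a static background
print(centroid(foreground_mask(frame, background)))
```

With several objects in the scene, the same centroid function would be applied to the pixels of each cluster separately.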

3.1 Proposed Motion Estimation Technique

This section presents a new linearization method for discretizing the displacement of object positions across the frames of a video. To linearize nonlinear trajectories, we use a technique called point-to-point linearization. In this technique, the governing equations for each segment of the object's motion are formulated from basic motion concepts, and a slope component, set by a fuzzy logic algorithm, is used to linearize the motion. The resulting linearization function is then used to analyze the motion along the trajectory. In each iteration, the linearization algorithm decomposes the original problem into a number of pairs of estimated and real positions, and then uses fuzzy logic to solve each sub-problem in two-dimensional space to determine the direction of motion. In essence, the proposed linear model has a controlled slope coefficient: it calculates the position estimation error and the change in this error over previous frames at different time intervals, and then determines the slope of the next motion estimate accordingly. The proposed fuzzy system for determining the rate of the linear model consists of a fuzzy PI control structure that reduces the error between the estimated position and the real position of the object [50]:

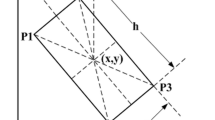

The proposed linearization algorithm is a fast trajectory-based motion estimation method that will be usable for real-time systems. In this algorithm, to solve each nonlinear sub-problem, it is converted through linear approximation to a sequence of linear complementary sub-problems, each of which is solved by the fuzzy method to a certain precision. To demonstrate the performance of the proposed algorithm, it has been applied to several examples with different object displacements. The block diagram of the proposed scheme is shown in Fig. 2. In this structure, the slope coefficient for the motion trajectory is the same as Eq. (5). Object tracking often refers to object feature extraction, the appropriate matching algorithm used to determine object position, and other spatiotemporal change information to monitor objects in a video sequence. Figure 3 shows an estimate of the position of the object in two-dimensional space. In this scheme, object motion estimation and monitoring are done on a frame-to-frame basis. Although changing the number of frames used for sampling the position of the object from one to two or more may decrease the accuracy of estimation and tracking, it will have a positive effect on the tracking speed of the system, making it more suitable for real-time applications. These characteristics are compared frame by frame and assigned to a series of moving object images over a period of time. This information leads to the identification and tracking of the position of each object in the image. In addition, the stored information helps in the process of removing noise in object tracking, which without it leads to irregular tracking of the object.
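The idea of a linear predictor whose slope is corrected from the estimation error can be sketched with a plain PI update. This is a simplified stand-in, not the authors' algorithm: the fixed gains kp and ki replace the gains that the fuzzy system would supply, and the update rule and function name are assumptions.

```python
import numpy as np

def pi_linear_tracker(observations, kp=0.2, ki=0.5):
    """Predict each position as the previous observation plus a PI-corrected slope."""
    obs = np.asarray(observations, dtype=float)
    dims = obs.shape[1]
    slope = np.zeros(dims)       # per-frame displacement used for the next prediction
    integral = np.zeros(dims)    # accumulated prediction error (integral term)
    predictions = [obs[0].copy()]
    errors = [np.zeros(dims)]
    for k in range(1, len(obs)):
        pred = obs[k - 1] + slope             # straight-line step from the last point
        err = obs[k] - pred                   # real position minus estimated position
        integral += err
        slope = kp * err + ki * integral      # fixed gains stand in for fuzzy gains
        predictions.append(pred)
        errors.append(err)
    return np.array(predictions), np.array(errors)

track = [[2.0 * k, -1.0 * k] for k in range(40)]   # constant-velocity test path
predictions, errors = pi_linear_tracker(track)
print(np.linalg.norm(errors[-1]))
```

On a constant-velocity path the integral term settles at the value that makes the slope equal to the true per-frame displacement, so the prediction error decays toward zero.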

Theoretically, four 2D point correspondences are needed to estimate the homography for camera calibration, since they yield the eight linear equations required to solve for it. In practice, reliable camera calibration based on random sample consensus (RANSAC) requires at least eight points to reduce the calibration error, as shown in Fig. 4.
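The eight linear equations can be written out with the direct linear transform (DLT), in which each correspondence contributes two rows. The sketch below solves the minimal four-point case with NumPy; the function names and test values are illustrative, and coordinate normalization and the RANSAC loop are omitted.

```python
import numpy as np

def homography_dlt(src, dst):
    """Estimate a 3x3 homography from point correspondences (x, y) -> (u, v)."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence yields two linear equations in the nine entries of H.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    H = vt[-1].reshape(3, 3)      # null-space vector of the equation matrix
    return H / H[2, 2]            # fix the free scale

def apply_homography(H, pts):
    """Map 2D points through H using homogeneous coordinates."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]

H_true = np.array([[1.2, 0.1, 5.0], [-0.05, 0.9, -3.0], [0.001, 0.002, 1.0]])
src = [[0, 0], [100, 0], [100, 100], [0, 100]]
dst = apply_homography(H_true, src)
print(homography_dlt(src, dst))
```

With more than four points the same SVD gives a least-squares solution, which is what a RANSAC-based procedure would refit on its inlier set.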

3.2 Constructing the Suggested Kalman Filter (S-KF)

The online tracking procedure makes use of the probability diagram discussed in the preceding section. In online mode, a new target is introduced in the same environment, and S-KF is used to track it [51]. S-KF uses point detection for object detection. The user chooses a point on the target in the first frame, and S-KF tracks that point.

The stages of S-KF are shown in Fig. 5. As the figure shows, after the target is identified in the first frame, the two steps of the Kalman filter are performed; these form the prediction part of S-KF.

The flowchart depicts the structure of S-KF for a single frame. The Kalman filter is first used to predict and update the next position. In the subsequent stage, the initialization step of S-KF, the graph node most similar to the Kalman prediction is located. The suggested algorithm then determines the target's most likely path as its future positions. Finally, the graph is modified (graph update step) based on the learning rate. This procedure is applied to every frame of the video.

The estimated point serves as the input to the Kalman filter of S-KF, which uses an estimate of subsequent moves from the graph to provide a refined estimate. S-KF has three stages: initializing S-KF, determining the most likely path using the suggested technique, and updating the graph. The details of these stages are provided below.

3.2.1 Initialization

The target prediction xi and probability diagram produced by the Kalman filter are available. Two factors are taken into account to determine the target's most likely future course. The similarity between graph nodes and xi is the first criterion. The second criterion, which chooses the node with the highest sum of weights from the internal edges to that node, is taken into account if more than one node shares the same similarity with xi. The second phase of the Viterbi algorithm uses the chosen node, also known as the winning node, as one of its inputs.
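The two selection criteria can be sketched as follows. Similarity is taken here to be the negative Euclidean distance between a node position and the prediction, which is an assumption; the data layout (dictionaries keyed by node name) and the function name are also hypothetical.

```python
import numpy as np

def select_winning_node(prediction, node_positions, incoming_weights, tol=1e-9):
    """Pick the node closest to the prediction; break ties by incoming edge weight."""
    prediction = np.asarray(prediction, dtype=float)
    dists = {
        name: float(np.linalg.norm(np.asarray(pos, dtype=float) - prediction))
        for name, pos in node_positions.items()
    }
    best = min(dists.values())
    # First criterion: all nodes that are (numerically) equally similar.
    tied = [name for name, d in dists.items() if d - best <= tol]
    # Second criterion: the largest sum of weights on edges entering the node.
    return max(tied, key=lambda name: sum(incoming_weights.get(name, [])))

nodes = {"a": (0.0, 0.0), "b": (2.0, 0.0), "c": (5.0, 5.0)}
incoming = {"a": [0.2, 0.1], "b": [0.5], "c": [0.9]}
print(select_winning_node((1.0, 0.0), nodes, incoming))
```

In the example, nodes a and b are equally close to the prediction, so the incoming weights decide the winner.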

3.2.2 Finding the Potential Route Using the Suggested Algorithm

The suggested approach functions as a decoder to determine a sequence's highest joint likelihood. In this study, a set of nodes that roughly represent the target's future journey are found using the suggested technique. It calls for a series of states that are a subset of object nodes, together with the starting node that is supplied at initialization. According to Fig. 5, Algorithm 1 depicts the entire S-KF process in the suggested system.
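A decoder of this kind can be sketched as a Viterbi-style dynamic program over the probabilistic graph: at each step, only the most probable path into every reachable node is kept. The graph representation, the fixed number of steps, and the absence of an observation term are simplifying assumptions made for illustration.

```python
def most_likely_path(transitions, start, steps):
    """Return (probability, path) of the most probable path of `steps` edges.

    `transitions` maps a node to a dict {successor: edge probability}.
    """
    best = {start: (1.0, [start])}
    for _ in range(steps):
        nxt = {}
        for node, (prob, path) in best.items():
            for succ, weight in transitions.get(node, {}).items():
                cand = prob * weight
                # Keep only the best path reaching each successor.
                if succ not in nxt or cand > nxt[succ][0]:
                    nxt[succ] = (cand, path + [succ])
        if not nxt:          # dead end: no outgoing edges from any current node
            break
        best = nxt
    return max(best.values(), key=lambda item: item[0])

graph = {
    "A": {"B": 0.6, "C": 0.4},
    "B": {"D": 0.5, "E": 0.5},
    "C": {"D": 0.9, "E": 0.1},
}
print(most_likely_path(graph, "A", steps=2))
```

The example shows why joint likelihood matters: the locally best first move is to B, yet the most probable two-step path goes through C.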

The S-KF (Suggested Kalman Filter) algorithm is a method for tracking moving objects in videos, which is introduced in this article. This algorithm consists of several key steps, which are detailed below:

Initial target detection in the first frame: in the first frame of the video, the user selects a point on the desired target. This point is considered as the initial tracking point.

Kalman filter initial settings: Kalman filter initial parameters (such as H and F matrices) are set.

Kalman filter prediction: Kalman filter predicts the target's future position based on the current motion model.

Kalman filter correction: the Kalman filter corrects its prediction using the observed data to obtain a better estimate of the target position.

Initial settings: the S-KF algorithm performs its initial settings based on the data obtained from the Kalman filter.

Selection of graph nodes: in this step, the algorithm selects the graph nodes that most likely represent the future path of the target. This selection is based on the similarity criteria of the nodes to the predicted position (xi) and the sum of the weights of the internal edges to that node.

Finding the possible path using the proposed algorithm: the proposed algorithm acts as a decoder to identify the possible path of the target with the highest joint probability.

Graph update: based on the probabilistic path determined in the previous step, the graph is updated to reflect the new information. This update includes reweighting of edges and setting of new nodes.

Iterative steps: the steps of prediction, correction, initialization, probabilistic path finding, and graph update are repeated for each video frame to continuously track the target throughout the video.
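The Kalman prediction and correction steps listed above can be sketched with a standard constant-velocity model. The state layout [x, y, vx, vy], the noise levels q and r, and the function names are illustrative assumptions; the graph-based stages of S-KF are not included.

```python
import numpy as np

def make_cv_model(dt=1.0, q=1e-3, r=1e-2):
    """Constant-velocity model: state [x, y, vx, vy], measurement [x, y]."""
    F = np.array([[1, 0, dt, 0],
                  [0, 1, 0, dt],
                  [0, 0, 1, 0],
                  [0, 0, 0, 1]], dtype=float)   # state transition matrix
    H = np.array([[1, 0, 0, 0],
                  [0, 1, 0, 0]], dtype=float)   # measurement matrix
    return F, H, q * np.eye(4), r * np.eye(2)

def kalman_step(x, P, z, F, H, Q, R):
    """One prediction followed by one correction with measurement z."""
    x_pred = F @ x                               # prediction
    P_pred = F @ P @ F.T + Q
    S = H @ P_pred @ H.T + R                     # innovation covariance
    K = P_pred @ H.T @ np.linalg.inv(S)          # Kalman gain
    x_new = x_pred + K @ (z - H @ x_pred)        # correction
    P_new = (np.eye(len(x)) - K @ H) @ P_pred
    return x_new, P_new

F, H, Q, R = make_cv_model()
x, P = np.zeros(4), 100.0 * np.eye(4)            # uncertain initial state
for k in range(1, 31):
    x, P = kalman_step(x, P, np.array([2.0 * k, -1.0 * k]), F, H, Q, R)
print(x)
```

Running one such filter per target is one way to realize parallel tracking of several targets, with the per-frame loop matching the iterative steps above.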

3.3 Fundamental Guidelines and Membership Capabilities of the Fuzzy Controller

The high-level fuzzy system of the proposed control structure encodes operator knowledge as IF–THEN rules for choosing gain factors according to the current trend of the control process. The fuzzy control rules of the proposed hybrid controller were created using publicly available information on the configuration of conventional controllers [52, 53]. Table 1 illustrates how changes in the gain parameters affect the rise time, overshoot, and settling time of a fuzzy PI controller. The gain resulting from each rule and the fuzzy rule structure are also provided in the table. In this system, the inputs are the error and the change in error, and the outputs are the proportional and integral gains obtained from the rules. The fuzzy coordinated PI control structure uses 49 linguistic fuzzy rules, which are listed in Table 1. The membership functions partition the universe of discourse into a number of adjacent intervals based on the linguistic values of each fuzzy input and output variable. Each membership function is assigned a fuzzy variable with an acronym such as NB (large negative) or NM (medium negative). The membership function maps the value to the normalized interval [−1, 1]. In the proposed controller, triangular and trapezoidal membership functions are used in this stage.

Figures 6, 7, and 8 display triangular membership functions with 50% overlap for input and output fuzzy variables. To achieve the desired results, it is crucial to use a carefully considered system configuration. The fuzzy inference is implemented with the minimum intersection function with the center average method [54] used for defuzzification (obtaining the crisp values of the outputs). The effectiveness of fuzzy controllers of the PI type has been researched, and the coefficient of linearization algorithm is being improved, to show the performance of the suggested control method.
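The inference scheme described here (triangular membership functions with 50% overlap, minimum as the intersection operator, and center-average defuzzification) can be sketched as below. The seven labels on a normalized range of -1 to 1 give 49 rules, but the rule table used in the code is hypothetical and does not reproduce Table 1; the function names are also assumptions.

```python
import numpy as np

LABELS = ["NB", "NM", "NS", "ZE", "PS", "PM", "PB"]
CENTERS = np.linspace(-1.0, 1.0, len(LABELS))   # peaks of the triangular sets
SPACING = CENTERS[1] - CENTERS[0]               # half-width, giving 50% overlap

def memberships(value):
    """Degree of membership of a normalized value in each of the seven sets."""
    v = np.clip(value, -1.0, 1.0)
    return np.maximum(0.0, 1.0 - np.abs(v - CENTERS) / SPACING)

# Hypothetical 7 x 7 rule table (49 rules): entry [i, j] is the index of the
# output label for error label i and change-of-error label j.
RULES = np.clip(np.add.outer(np.arange(7), np.arange(7)) - 3, 0, 6)

def fuzzy_output(error, delta_error):
    """Min inference followed by center-average defuzzification."""
    mu_e = memberships(error)
    mu_de = memberships(delta_error)
    strength = np.minimum.outer(mu_e, mu_de)     # firing strength of each rule
    return float(np.sum(strength * CENTERS[RULES]) / np.sum(strength))

print(fuzzy_output(0.25, -0.1))
```

In a gain-scheduling setup, one such table per output would supply the proportional and integral gains from the current error and its change.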

The rule base and membership functions of the fuzzy PI controller were created using practical filter expertise. The basis of the controller design for motion estimation is that as the estimator output moves away from the real value, the slope coefficient increases to minimize the output deviation and error according to the fuzzy rules. This smart fuzzy controller improves the estimation power of the method in tracking the location of objects. The input and output characteristics of the proposed fuzzy system are shown in Fig. 8.

4 Simulation Settings

This section presents and discusses the simulation results of the proposed object tracking scheme. Results are given for two samples in Figs. 9, 10 and 11, which show the outputs of background subtraction, object edge selection, feature extraction from the selected pixels, object position calculation, identification by clustering of the edge pixels, and finally object position tracking in two-dimensional space. Figures 12 and 13 compare the exact trajectory of the object with the trajectory estimated by the proposed method.
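The first and fourth stages of this pipeline (background subtraction and object position calculation) can be sketched on a toy grayscale frame. The threshold value and the helper names `foreground_mask` and `centroid` are assumptions made for illustration; edge selection, feature extraction, and clustering are omitted.

```python
def foreground_mask(frame, background, threshold=30):
    """Mark pixels whose intensity differs from the background by more
    than the (assumed) threshold."""
    return [
        [abs(p - b) > threshold for p, b in zip(frame_row, bg_row)]
        for frame_row, bg_row in zip(frame, background)
    ]


def centroid(mask):
    """Object position as the mean (x, y) of the foreground pixels."""
    points = [(x, y) for y, row in enumerate(mask)
              for x, on in enumerate(row) if on]
    if not points:
        return None                      # no moving object detected
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


if __name__ == "__main__":
    background = [[0] * 4 for _ in range(4)]
    frame = [row[:] for row in background]
    for y in (1, 2):                     # a bright 2 x 2 object
        for x in (2, 3):
            frame[y][x] = 200
    print(centroid(foreground_mask(frame, background)))
```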

This section also reports tests on video data sets and compares the proposed approach with existing trackers in various tracking tasks. Prior to online tracking, the stored trajectories are represented as a probabilistic graph.

Because the graph is governed by probabilistic principles, the incoming and outgoing edge probabilities of each node must each sum to one. The graph represents the routes that moving objects could follow in this setting, and the likelihood that a path is taken is inversely related to the weight of its edges.
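One way to build such a graph from stored trajectories is sketched below. The sketch assumes that each trajectory is a sequence of discrete node labels and that transition counts are normalized so the outgoing probabilities of every node sum to one. It stores probabilities directly rather than edge weights, and the function names are hypothetical.

```python
from collections import defaultdict


def build_transition_graph(trajectories):
    """Count node-to-node transitions and normalize them per source node."""
    counts = defaultdict(lambda: defaultdict(int))
    for path in trajectories:
        for src, dst in zip(path, path[1:]):
            counts[src][dst] += 1
    graph = {}
    for src, outgoing in counts.items():
        total = sum(outgoing.values())
        graph[src] = {dst: n / total for dst, n in outgoing.items()}
    return graph


def most_likely_next(graph, node):
    """Return the most probable successor of node, or None at a dead end."""
    outgoing = graph.get(node)
    if not outgoing:
        return None
    return max(outgoing, key=outgoing.get)


if __name__ == "__main__":
    stored = [["A", "B", "C"], ["A", "B", "D"], ["A", "C"]]
    g = build_transition_graph(stored)
    print(g["A"], most_likely_next(g, "A"))
```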

The proposed tracker is applied to three videos without occlusion, and the results are shown in Table 2. The top row of the table shows a few selected frames from each video when S-KF is used. The second row shows the Euclidean distance between the ground truth and the S-KF estimate.
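The error measure in the second row of Table 2 can be computed per frame as in the following sketch. It assumes that the ground truth and the estimates are given as equally long lists of (x, y) positions; the function name is hypothetical.

```python
import math


def tracking_errors(ground_truth, estimates):
    """Per-frame Euclidean distance between true and estimated positions."""
    if len(ground_truth) != len(estimates):
        raise ValueError("both tracks must cover the same frames")
    return [
        math.hypot(gx - ex, gy - ey)
        for (gx, gy), (ex, ey) in zip(ground_truth, estimates)
    ]


if __name__ == "__main__":
    truth = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0)]
    estimate = [(0.0, 0.0), (0.0, 0.0), (6.0, 7.0)]
    errors = tracking_errors(truth, estimate)
    print(errors, sum(errors) / len(errors))
```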

To compare the performance of S-KF with other trackers, we applied the Extended Kalman Filter (EKF) and K-Nearest Neighbor (KNN) to the Ped_1 and Ped_2 data sets. Table 3 reports the F-scores for the two targets with and without occlusion. Without occlusion, EKF and KNN perform comparably to S-KF. As expected, S-KF outperforms EKF and KNN when targets are occluded, because it incorporates data from prior targets in these settings.
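For reference, the F-score is the harmonic mean of precision and recall. The sketch below computes it from counts of true positives, false positives, and false negatives; how a detection is matched to the ground truth is not specified here and is left to the caller.

```python
def f_score(true_pos, false_pos, false_neg):
    """F-score (F1): harmonic mean of precision and recall."""
    if true_pos == 0:
        return 0.0                       # no correct detections at all
    precision = true_pos / (true_pos + false_pos)
    recall = true_pos / (true_pos + false_neg)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    print(f_score(true_pos=8, false_pos=2, false_neg=2))
```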

The M-60 data set, which contains numerous occlusion spots, was chosen to compare S-KF with additional trackers. Table 4 reports the precision for EKF [55], EKF Pose [56], CSCO [57], CSL_PB [58], CoHOG [59], and S-KF.

5 Conclusion

The main goal of this paper is to present a new tracking method based on object path linearization, which can significantly improve object tracking based on motion estimation. The method is built on the S-KF algorithm. The proposed algorithm first uses background subtraction to determine the location of the moving object accurately, then identifies the object and predicts its future path with the k-means technique. It uses a novel hybrid approach to motion estimation that combines fuzzy logic and trajectory linearization. The approach relies on a linear model whose slope is calculated by a Mamdani fuzzy logic system trained with information about the paths of the objects of interest. The method can also track a target with unusual behavior, which makes it suitable for applications such as traffic monitoring and robot control. Future studies can extend and improve on the proposals of this article in various ways. For example, an adaptive neuro-fuzzy inference system (ANFIS) could be used instead of fuzzy controllers to determine the slope of the trajectory line or to estimate the most likely path, an approach that may provide more reliable results than existing tracking methods.

The main findings of this research show that, compared with existing methods, the proposed method significantly improves the tracking of objects against the background by estimating their speed and direction of movement from information on their normal speed and direction of motion. The system runs at a speed comparable to video frame rates (24 to 40 frames per second). Specifically, the results show that the proposed motion estimation technique is an effective tool for reducing the difference between the observed and predicted object positions, and, relative to previous studies, our method improves object motion prediction by about 12%.

These findings matter because additional observations, when available, are used to obtain more accurate estimates of the object's next position, which in turn yields better tracking performance. In particular, a solution that handles object tracking based on motion estimation more effectively addresses current needs in object tracking and opens new avenues for future research.

This work is also motivated by the complexities and challenges of object movement, which call for new and improved approaches that increase efficiency and accuracy. The proposed method meets these requirements, and the positive simulation results indicate a high potential for real-world application.

Data Availability

The data used to support the findings of this study are available from the corresponding author upon request.

References

Jung, J., Yoon, I., Paik, J.: Object occlusion detection using automatic camera calibration for a wide-area video surveillance system. Sensors 16, 982 (2016)

Luo, W., Xing, J., Milan, A., Zhang, X., Liu, W., Kim, T.K.: Multiple object tracking: a literature review. Artif. Intell. 293, 103448 (2021)

Datondji, S.R.E., Dupuis, Y., Subirats, P., Vasseur, P.: A survey of vision-based traffic monitoring of road intersections. IEEE Trans. Intell. Transp. Syst. 17, 2681–2698 (2016)

Drobnitzky, M., Friederich, J., Egger, B., Zschech, P.: Survey and systematization of 3D object detection models and methods. Vis. Comput. 40(3), 1867–1913 (2024)

Zhao, X., Dawson, D., Sarasua, W.A., Birchfield, S.T.: Automated traffic surveillance system with aerial camera arrays imagery: macroscopic data collection with vehicle tracking. J. Comput. Civ. Eng. 31, 04016072 (2017)

Gao, B., Spratling, M.W.: Explaining away results in more robust visual tracking. Vis. Comput. 39(5), 2081–2095 (2023)

Griffith, E.J., Mishra, C., Ralph, J.F., Maskell, S.: A system for the generation of synthetic wide area aerial surveillance imagery. Simulat. Model. Pract. Theor. 84, 286–308 (2018)

Abu Arqub, O., Mezghiche, R., Maayah, B.: Fuzzy M-fractional integrodifferential models: theoretical existence and uniqueness results, and approximate solutions utilizing the Hilbert reproducing kernel algorithm. Front. Phys. 11, 1252919 (2023)

Fakhri, P.S., Asghari, O., Sarspy, S., Marand, M.B., Moshaver, P., Trik, M.: A fuzzy decision-making system for video tracking with multiple objects in non-stationary conditions. Heliyon 9(11), e22156 (2023)

Zhang, H., Zou, Q., Ju, Y., Song, C., Chen, D.: Distance-based support vector machine to predict DNA N6-methyladenine modification. Curr. Bioinform. 17(5), 473–482 (2022)

Cao, C., Wang, J., Kwok, D., Zhang, Z., Cui, F., Zhao, D., Li, M.J., Zou, Q.: webTWAS: a resource for disease candidate susceptibility genes identified by transcriptome-wide association study. Nucleic Acids Res. 50(D1), D1123–D1130 (2022)

Xia, L.X., Xiao, Y.Y., Jiang, W.J., Yang, X.Y., Tao, H., Mandukhail, S.R., Qin, J.F., Pan, Q.R., Zhu, Y.G., Zhao, L.X., Huang, L.J., Li, Z., Yu, X.Y.: Exosomes derived from induced cardiopulmonary progenitor cells alleviate acute lung injury in mice. Acta Pharmacol. Sin. (2024). https://doi.org/10.1038/s41401-024-01253-4

Wu, Q., Zou, S., Liu, W., Liang, M., Chen, Y., Chang, J., Liu, Y., Yu, X.: A novel onco-cardiological mouse model of lung cancer-induced cardiac dysfunction and its application in identifying potential roles of tRNA-derived small RNAs. Biomed. Pharmacother. 165, 115117 (2023). https://doi.org/10.1016/j.biopha.2023.115117

Lei, X., Li, Z., Zhong, Y., Li, S., Chen, J., Ke, Y., Lv, S., Huang, L., Pan, Q., Zhao, L., Yang, X., Chen, Z., Deng, Q., Yu, X.Y.: Gli1 promotes epithelial-mesenchymal transition and metastasis of non-small cell lung carcinoma by regulating snail transcriptional activity and stability. Acta Pharm. Sin. B. 12(10), 3877–3890 (2022). https://doi.org/10.1016/j.apsb.2022.05.024

Khosravi, M., Trik, M., Ansari, A.: Diagnosis and classification of disturbances in the power distribution network by phasor measurement unit based on fuzzy intelligent system. J. Eng. 2024(1), e12322 (2024)

Wang, R., Zhang, Q., Zhang, Y., Shi, H., Nguyen, K.T., Zhou, X.: Unconventional split aptamers cleaved at functionally essential sites preserve biorecognition capability. Anal. Chem. 91(24), 15811–15817 (2019)

Trik, M., Akhavan, H., Bidgoli, A.M., Molk, A.M.N.G., Vashani, H., Mozaffari, S.P.: A new adaptive selection strategy for reducing latency in networks on chip. Integration 89, 9–24 (2023)

Yin, Y., Guo, Y., Su, Q., Wang, Z.: Task allocation of multiple unmanned aerial vehicles based on deep transfer reinforcement learning. Drones 6(8), 215 (2022). https://doi.org/10.3390/drones6080215

Xu, X., Wei, Z.: Dynamic pickup and delivery problem with transshipments and LIFO constraints. Comput. Ind. Eng. 175, 108835 (2023). https://doi.org/10.1016/j.cie.2022.108835

Trik, M., Molk, A.M.N.G., Ghasemi, F., Pouryeganeh, P.: A hybrid selection strategy based on traffic analysis for improving performance in networks on chip. J. Sens. 2022(1), 3112170 (2022)

Abu Arqub, O., Singh, J., Alhodaly, M.: Adaptation of kernel functions-based approach with Atangana–Baleanu–Caputo distributed order derivative for solutions of fuzzy fractional Volterra and Fredholm integrodifferential equations. Math. Methods Appl. Sci. 46(7), 7807–7834 (2023)

Xiao, Z., Fang, H., Jiang, H., Bai, J., Havyarimana, V., Chen, H., Jiao, L.: Understanding private car aggregation effect via spatio-temporal analysis of trajectory data. IEEE Trans. Cybern. 53(4), 2346–2357 (2023). https://doi.org/10.1109/TCYB.2021.3117705

Wang, Z., Jin, Z., Yang, Z., Zhao, W., Trik, M.: Increasing efficiency for routing in internet of things using binary gray wolf optimization and fuzzy logic. J. King Saud Univ.-Comput. Inf. Sci. 35(9), 101732 (2023)

Xiao, Z., Li, H., Jiang, H., Li, Y., Alazab, M., Zhu, Y., Dustdar, S.: Predicting urban region heat via learning arrive-stay-leave behaviors of private cars. IEEE Trans. Intell. Transp. Syst. 24(10), 10843–10856 (2023). https://doi.org/10.1109/TITS.2023.3276704

Sun, G., Zhang, Y., Liao, D., Yu, H., Du, X., Guizani, M.: Bus-trajectory-based street-centric routing for message delivery in urban vehicular Ad Hoc networks. IEEE Trans. Veh. Technol. 67(8), 7550–7563 (2018). https://doi.org/10.1109/TVT.2018.2828651

Khezri, E., Yahya, R.O., Hassanzadeh, H., Mohaidat, M., Ahmadi, S., Trik, M.: DLJSF: data-locality aware job scheduling IoT tasks in fog-cloud computing environments. Results Eng. 21, 101780 (2024)

Ding, C., Li, C., Xiong, Z., Li, Z., Liang, Q.: Intelligent identification of moving trajectory of autonomous vehicle based on friction nano-generator. IEEE Trans. Intell. Transp. Syst. 25(3), 3090–3097 (2024). https://doi.org/10.1109/TITS.2023.3303267

Wang, G., Wu, J., Trik, M.: A novel approach to reduce video traffic based on understanding user demand and D2D communication in 5G networks. IETE J. Res. (2023). https://doi.org/10.1080/03772063.2023.2278696

He, H., Chen, Z., Liu, H., Liu, X., Guo, Y., Li, J.: Practical tracking method based on best buddies similarity. Cyborg Bionic Syst. 4, 50 (2023). https://doi.org/10.34133/cbsystems.0050

Zhang, L., Hu, S., Trik, M., Liang, S., Li, D.: M2M communication performance for a noisy channel based on latency-aware source-based LTE network measurements. Alex. Eng. J. 99, 47–63 (2024)

Yu, J., Dong, X., Li, Q., Lü, J., Ren, Z.: Adaptive practical optimal time-varying formation tracking control for disturbed high-order multi-agent systems. IEEE Trans. Circuits Syst. I Regul. Pap. 69(6), 2567–2578 (2022). https://doi.org/10.1109/TCSI.2022.3151464

Khezri, E., Zeinali, E., Sargolzaey, H.: SGHRP: secure greedy highway routing protocol with authentication and increased privacy in vehicular ad hoc networks. PLoS ONE 18(4), e0282031 (2023)

Mou, J., Duan, P., Gao, L., Pan, Q., Gao, K., Singh, A.K.: Biologically inspired machine learning-based trajectory analysis in intelligent dispatching energy storage system. IEEE Trans. Intell. Transp. Syst. 24(4), 4509–4518 (2023). https://doi.org/10.1109/TITS.2022.3154750

Ding, X., Yao, R., Khezri, E.: An efficient algorithm for optimal route node sensing in smart tourism urban traffic based on priority constraints. Wirel. Netw. (2023). https://doi.org/10.1007/s11276-023-03541-z

Xiao, L., Cao, Y., Gai, Y., Khezri, E., Liu, J., Yang, M.: Recognizing sports activities from video frames using deformable convolution and adaptive multiscale features. J. Cloud Comput. 12(1), 167 (2023)

Zhu, J., Hu, C., Khezri, E., Ghazali, M.M.M.: Edge intelligence-assisted animation design with large models: a survey. J. Cloud Comput. 13(1), 48 (2024)

Zheng, C., An, Y., Wang, Z., Wu, H., Qin, X., Eynard, B., Zhang, Y.: Hybrid offline programming method for robotic welding systems. Robot. Comput.-Integr. Manuf. 73, 102238 (2022). https://doi.org/10.1016/j.rcim.2021.102238

Zheng, C., An, Y., Wang, Z., Qin, X., Eynard, B., Bricogne, M., Zhang, Y.: Knowledge-based engineering approach for defining robotic manufacturing system architectures. Int. J. Prod. Res. 61(5), 1436–1454 (2023). https://doi.org/10.1080/00207543.2022.2037025

Ding, Y., Zhang, W., Zhou, X., Liao, Q., Luo, Q., Ni, L.M.: FraudTrip: taxi fraudulent trip detection from corresponding trajectories. IEEE Internet Things J. 8(16), 12505–12517 (2021). https://doi.org/10.1109/JIOT.2020.3019398

Lu, C., Gao, R., Yin, L., Zhang, B.: Human-robot collaborative scheduling in energy-efficient welding shop. IEEE Trans. Industr. Inf. 20(1), 963–971 (2024). https://doi.org/10.1109/TII.2023.3271749

Yin, L., Zhuang, M., Jia, J., Wang, H.: Energy saving in flow-shop scheduling management: an improved multiobjective model based on grey wolf optimization algorithm. Math. Probl. Eng. 2020, 9462048 (2020). https://doi.org/10.1155/2020/9462048

Yin, L., Li, X., Gao, L., Lu, C., Zhang, Z.: Energy-efficient job shop scheduling problem with variable spindle speed using a novel multi-objective algorithm. Adv. Mech. Eng. 9(4), 755449641 (2017). https://doi.org/10.1177/1687814017695959

Mohammadzadeh, A., Taghavifar, H., Zhang, C., Alattas, K.A., Liu, J., Vu, M.T.: A non-linear fractional-order type-3 fuzzy control for enhanced path-tracking performance of autonomous cars. IET Control Theory Appl. 18(1), 40–54 (2024). https://doi.org/10.1049/cth2.12538

Chen, B., Hu, J., Zhao, Y., Ghosh, B.K.: Finite-time observer based tracking control of uncertain heterogeneous underwater vehicles using adaptive sliding mode approach. Neurocomputing 481, 322–332 (2022). https://doi.org/10.1016/j.neucom.2022.01.038

Chen, B., Hu, J., Ghosh, B.K.: Finite-time tracking control of heterogeneous multi-AUV systems with partial measurements and intermittent communication. Sci. China Inf. Sci. 67(5), 152202 (2024). https://doi.org/10.1007/s11432-023-3903-6

Jiang, Y., Yang, Y., Xu, Y., Wang, E.: Spatial-temporal interval aware individual future trajectory prediction. IEEE Trans. Knowl. Data Eng. (2023). https://doi.org/10.1109/TKDE.2023.3332929

Zhao, J., Song, D., Zhu, B., Sun, Z., Han, J., Sun, Y.: A human-like trajectory planning method on a curve based on the driver preview mechanism. IEEE Trans. Intell. Transp. Syst. 24(11), 11682–11698 (2023). https://doi.org/10.1109/TITS.2023.3285430

Zhang, Y., Li, S., Wang, S., Wang, X., Duan, H.: Distributed bearing-based formation maneuver control of fixed-wing UAVs by finite-time orientation estimation. Aerosp. Sci. Technol. 136, 108241 (2023). https://doi.org/10.1016/j.ast.2023.108241

Wang, X., Zhang, R., Miao, Y., An, M., Wang, S., Zhang, Y.: PI2-based adaptive impedance control for gait adaption of lower limb exoskeleton. IEEE/ASME Trans. Mechatron. (2024). https://doi.org/10.1109/TMECH.2024.3370954

Wu, J., Wang, Y., Yin, C.: Curvilinear multilane merging and platooning with bounded control in curved road coordinates. IEEE Trans. Veh. Technol. 71(2), 1237–1252 (2022). https://doi.org/10.1109/TVT.2021.3131751

Shen, J., Sheng, H., Wang, S., Cong, R., Yang, D., Zhang, Y.: Blockchain-based distributed multi-agent reinforcement learning for collaborative multi-object tracking framework. IEEE Trans. Comput. (2023). https://doi.org/10.1109/TC.2023.3343102

Wang, S., Sheng, H., Yang, D., Zhang, Y., Wu, Y., Wang, S.: Extendable multiple nodes recurrent tracking framework with RTU++. IEEE Trans. Image Process. 31, 5257–5271 (2022). https://doi.org/10.1109/TIP.2022.3192706

Yang, D., Cui, Z., Sheng, H., Chen, R., Cong, R., Wang, S., Xiong, Z.: An occlusion and noise-aware stereo framework based on light field imaging for robust disparity estimation. IEEE Trans. Comput. (2023). https://doi.org/10.1109/TC.2023.3343098

Hou, X., Zhang, L., Su, Y., Gao, G., Liu, Y., Na, Z., Chen, T.: A space crawling robotic bio-paw (SCRBP) enabled by triboelectric sensors for surface identification. Nano Energy 105, 108013 (2023). https://doi.org/10.1016/j.nanoen.2022.108013

Hanumegowda, A., Dewangan, S., Bhupala, S., Gruson, F., Steinhauser, D.: Extended object tracking with IMM filter for automotive pre-crash safety applications. In: 2021 18th European Radar Conference (EuRAD), pp. 177–180. IEEE (2022)

Liu, Q., Chen, D., Chu, Q., Yuan, L., Liu, B., Zhang, L., Yu, N.: Online multi-object tracking with unsupervised re-identification learning and occlusion estimation. Neurocomputing 483, 333–347 (2022)

Bhatnagar, B.L., Xie, X., Petrov, I.A., Sminchisescu, C., Theobalt, C., Pons-Moll, G.: Behave: dataset and method for tracking human object interactions. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 15935–15946 (2022)

Maayah, B., Arqub, O.A.: Uncertain M-fractional differential problems: existence, uniqueness, and approximations using Hilbert reproducing technique provisioner with the case application: series resistor-inductor circuit. Phys. Scr. 99(2), 025220 (2024)

Abu Arqub, O., Singh, J., Maayah, B., Alhodaly, M.: Reproducing kernel approach for numerical solutions of fuzzy fractional initial value problems under the Mittag-Leffler kernel differential operator. Math. Methods Appl. Sci. 46(7), 7965–7986 (2023)

Funding

The authors received no financial support for the research, authorship, and/or publication of this article.

Author information

Contributions

Mohammad Mehdi Yousefi developed the original idea, analyzed the data, and wrote the manuscript. Saleh Mohseni prepared the related works and the system model. Hadi Dehbovid carried out the implementation and simulation of the idea. Reza Ghaderi re-analyzed the training and test data sets and critically reviewed this version for important intellectual content.

Ethics declarations

Conflict of Interest

The authors declare that they have no conflicts of interest.

Consent for Publication

The manuscript does not contain individual data.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yousefi, S.M.M., Mohseni, S.S., Dehbovid, H. et al. A Practical Approach to Tracking Estimation Using Object Trajectory Linearization. Int J Comput Intell Syst 17, 175 (2024). https://doi.org/10.1007/s44196-024-00579-5
