Abstract

Heart disease is one of the leading causes of death worldwide in the current century. An estimated 17.5 million deaths occur due to heart disease worldwide. More than 75% of people with an average income level are observed to suffer from heart disease and its complications, so there is a need to predict heart disease and its related complications. Data mining is the process of converting raw data into useful information, and its tools allow existing data to be used to predict future trends. Data mining concepts have mainly been applied to heart disease datasets to draw intricate inferences from them. Much current research in healthcare engineering uses mining and prediction techniques. This investigation aims to identify significant features in a heart disease dataset and to apply ensembling techniques to improve prediction accuracy. Prediction models are developed using two ensembling techniques, stacking and voting. For the experiments, the Z-Alizadeh Sani dataset, which is available in the UCI machine learning repository, is used. Stacking with the significant features achieves the maximum accuracy of 86.79% on the Z-Alizadeh Sani dataset. The test results show that the prediction model built with features selected by the firefly algorithm and a stacking-based classifier has higher prediction accuracy than the other techniques. Furthermore, this study presents a comparative analysis with prior works, showing the capability of the firefly algorithm in optimizing feature selection, which is crucial for improving the accuracy of heart disease predictions.

1 Introduction

Heart disease is the leading cause of death all over the world. The World Health Organization estimated that about 17 million lives were lost worldwide in 2015 due to heart-related ailments. Cardiovascular diseases (CVDs) are the main cause of death internationally [1], and more people die annually from CVDs than from other diseases. However, if heart disease can be predicted and prevention mechanisms provided, many deaths can be prevented. Data exploration and the mining of useful information bring a different dimension to the study of heart disease. With little effort and feedback from the patient, the various methods of data mining help to extract useful information from a clinical dataset [2]. Over the past years, scientists have explored different ways of applying data mining to health data to obtain precise observations about heart disease. The effectiveness of data mining depends on the type of methods used and the features chosen [3]. Health datasets are often redundant and inconsistent, and repeated data and missing values in a dataset affect the prediction outcome of the algorithms. It is therefore difficult to apply data mining concepts without proper prior planning. The outcome of data mining methods used to predict heart-related illness is strongly affected by the absence of significant features and by inappropriate use of data mining concepts. So, there is a need to select significant features and to use a good combination of algorithms to improve performance. Predictive modeling of heart risk has gained major significance in medical research and patient care. Timely and accurate health risk assessment calls for a commitment to scientific and computational health risk evaluation methods [4].

Several influential features are related, directly or indirectly, to the diagnosis of cardiac ailments [5]. Patients undergo extensive assessments, such as blood pressure, glucose, vital signs, chest pain, electrocardiograms, maximum heart rate, and elevated sugar levels. The bright side is that effective treatment is feasible if the disease is detected and predicted early. However, treatment for cardiac patients depends on medical studies, the patient history, and the patient's responses to questions [6]. Therefore, the quest for suitable approaches to analyze CVD data, and thus to devise analytical and prognostic methods that prevent CVD complications, is a continuing one. For these reasons, developing predictive models for cardiovascular risk assessment has become a major challenge in healthcare informatics [7].

Artificial intelligence-based prediction mechanisms can be broadly divided into two classes: supervised learning and unsupervised learning. Supervised learning models classify samples with respect to their class labels, and the success of any supervised learning model depends on the availability of a large amount of labeled data [8]. In a conventional supervised learning algorithm, the system receives all the training samples and builds a model from them. In the modern world, cheap unlabeled data are abundant, and most of them still need to be analyzed. An active learner, in contrast to a passive one, not only trains its model but also interacts with the unlabeled data to pick the most suitable samples, which make the model more accurate in its classification ability when it is trained on such significant features [9]. An active model starts with a modest amount of training data and expands it at each step of the algorithm. It is believed that this selection reduces the developed model's need for large amounts of labeled data. Supervised learning, on the other hand, deals with prediction from labeled data [10].

For heart disease prediction, significant features and data mining techniques are identified [11]. The heart disease dataset is taken from the UCI machine learning repository, which is maintained by the University of California, Irvine. The Z-Alizadeh Sani dataset was chosen because it is frequently used by investigators and its records are reported to be highly accurate. The firefly algorithm (FA), a nature-inspired meta-heuristic based on the social flashing behavior of fireflies, is used to select significant features. Ensembling techniques are meta-algorithms that combine several algorithms into one predictive model to improve predictions [12]. The ensembling techniques stacking and voting are used to build prediction models for this experiment on a structured dataset. Experimental results show that the eight major attributes selected by FA, combined with a stacking technique built from three methods (Naive Bayes, support vector machine, and logistic regression), yield a prediction model with a high accuracy of 86.79% compared to other techniques.

2 Literature Survey

In the recent decade, various studies have focused on cardiovascular disease prediction [12]. They applied different data mining concepts to detect heart disease, achieved different accuracies with different data mining methods, and sought efficient and accurate methods for identifying heart disease. This paper aims to analyze various ensembling techniques that have been used for heart disease detection [13]. Researchers have been examining the use of data mining ideas to support accurate prediction. Such methods and huge information bases are of less use for data-driven research. Advances in neuroimaging, genomics, motion tracking, eye tracking, and many other technology-based data collection methods have produced numerous datasets, which frequently have few samples. Small sample sizes are common because experimental protocols that maximally discriminate between conditions are still under development and because of the costs of data collection involving human participants. For instance, in our work with mentally unbalanced adults, running an experiment to produce one sample of high-dimensional data might require 1.5–4 h of experimentation time (including setup and teardown) and 3.5–6 h of participant time (including travel time) [14]. It is challenging to recruit large numbers of such adults because of difficulties in reaching participants and encouraging participation. Collecting samples from thousands of subjects is, hence, not possible with the resources available for early-stage work [15]. There is a basic need for robust and reliable machine learning (ML) strategies that work with these smaller datasets [16].

Data mining with a random forest classifier has been adopted to obtain better prediction accuracy and to examine different events connected to coronary heart disease [17]. Such a model can help clinicians detect coronary heart disease and its different occurrences and understand how it relates to various segments of the population. Coronary heart disease is a frequent ailment of the heart and a significant cause of early death. In medical science, data mining is used in determining a range of metabolic syndromes [18]. An ensembling technique based on voting (a fusion of regression and Bayes) was applied to the dataset. The output shows that nine important attributes with the three leading data mining techniques (Naive Bayes, SVM, voting) give better accuracy than the others. To confirm the findings proposed by the researchers, the Statlog dataset was analyzed in the study. The results show that the model developed with the nine important features and a voting-based data mining algorithm reached an accuracy of 87.4% [19].

Data scientists usually investigate several datasets to find valuable patterns in a dataset irrespective of the domain [20]. One approach begins with a preprocessing stage in which the most relevant features are chosen using the correlation matrix; three data analytics methods (neural networks, SVM, and KNN) are then applied to datasets of different dimensions to assess the accuracy and capability of each algorithm. Neural networks were simpler to construct and produced a good result (accuracy of 93%). Another ensemble-based technique aims to discover important features by applying machine learning methods, improving the accuracy of CVD prediction. The prediction model is introduced with various combinations of features and several known classification techniques. This yields an improved performance level, with an accuracy of 88.7%, through the prediction model for heart disease based on the ensemble of random forest with a linear model (HRFLM) [21].

Pearson correlation, recursive feature selection, Lasso regularization, and nature-inspired feature selection methods, applied with machine learning classification algorithms such as M5P, random forest, and reduced error pruning with a random forest ensemble, are commonly used standard steps in prediction tasks [17]. In this analysis, the authors evaluated classification accuracy, precision, sensitivity, and ROC [22]. Using a UCI repository dataset with 1025 instances and 14 attributes, researchers were able to identify whether or not a person suffers from a heart problem [23].

Drawing on the different ensemble-based approaches to heart disease prediction, we propose a firefly-based optimized feature selection algorithm combined with ensemble-based classification [24]. This paper uses a dataset from the UCI machine learning repository and applies the firefly algorithm for feature selection. Two ensembling techniques, stacking and voting, are analyzed [25].

This research not only identifies crucial gaps such as the need for more effective predictive algorithms in heart disease identification but also introduces a novel combination of the firefly algorithm with ensemble learning techniques to address these gaps. The aim is to enhance the robustness and accuracy of predictive models.

Within the realm of ensemble methods, our research specifically contrasts the performance of stacking and voting techniques in heart disease classification. While both methods enhance the robustness of predictions by aggregating different models, stacking tends to outperform voting in scenarios where model diversity is high, as it leverages a meta-classifier to integrate predictions more effectively. This nuanced understanding aids in selecting the appropriate ensemble strategy based on the specific characteristics of the dataset.

3 Proposed System

In the field of predictive analytics, various classifiers are employed, and the one that yields the best prediction is selected on the basis of its accuracy. Data are sometimes misclassified by the various classifiers, and machine learning-based classifiers do not guarantee the prediction outcome [26]. This has been taken into account by various classifiers that deal with real-time data, which can be used to give better prediction results [27]. These predictions have increased researchers' interest in collecting data from several sources, which leads to data curation [28]. The classifiers depend on independent features to predict the dependent feature [29], and each independent feature is then ranked by its contribution to predicting the dependent parameter. Different classifier combination schemes have been proposed, and it has been experimentally shown that some of them consistently outperform the best single classifier [30]. This paper compares which ensembling technique, stacking or voting, has the higher accuracy in predicting heart disease. The firefly algorithm is employed for feature selection to identify significant features, which improves accuracy and reduces model-building time. The Z-Alizadeh Sani heart disease dataset is used. The dataset contains 303 patient records from the Shaheed Rajaei Cardiovascular, Medical and Research Center in Tehran, and the dataset description is shown in Table 1.

In addressing heart disease classification, this research introduces a novel approach by integrating the firefly algorithm with ensemble learning techniques—stacking and voting. Unlike conventional models, this combination leverages the optimization capabilities of the firefly algorithm to refine feature selection, thereby enhancing the effectiveness and accuracy of the ensemble models. This methodological innovation is poised to set a new benchmark in predictive accuracy and model efficiency in the domain of medical diagnostics.

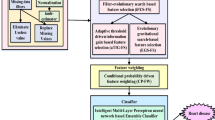

Each record is described by 54 features. The features are grouped into four classes: demographic, symptom and examination, ECG, and laboratory and echo features. The class label indicates either a positive diagnosis of coronary artery disease (CAD) or a normal condition. If a patient's diameter narrowing is greater than or equal to 50%, the patient is categorized as CAD, otherwise as Normal. The Z-Alizadeh Sani dataset has no missing values, so the table lists only a selected set of input variables. The overall steps involved in the proposed system are shown in Fig. 1. The correlation between the independent variables is shown in Fig. 2. The ensemble techniques are summarized in Fig. 3.

Steps involved in proposed system [18]

3.1 Preprocessing the Records

The data must be preprocessed after being downloaded from the data repository. The class label contains the nominal values ‘CAD’ and ‘Normal’, which are converted into 1 and 0, respectively. The first step is to convert all the nominal values in the dataset into numbers using the Python numpy package. Figure 4 shows that age is not evenly distributed across the data and that all the features in the dataset need proper preprocessing. Figure 5 shows the data distribution across the independent features present in the dataset. This kind of extensive data representation is needed to understand the data better. The prediction task can be performed effectively only if the underlying algorithms capture the data distribution. The preprocessing steps include techniques for managing missing values and normalizing data distributions, which are critical for ensuring the integrity and usability of the model inputs. Missing values in the dataset were identified and treated using techniques such as imputation or exclusion of incomplete records. Normalization techniques such as Min–Max scaling and Z-score standardization were applied to bring the features onto comparable scales, so that each feature contributes equally to the model training process.
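The label encoding and the two normalization schemes mentioned above can be sketched as follows. This is a minimal illustration using only the Python standard library rather than the authors' numpy-based code; the four toy records and the column names `Age`, `BMI`, and `Cath` are hypothetical stand-ins for the 303-row dataset.

```python
from statistics import mean, pstdev

# Hypothetical toy records; the real dataset has 303 rows and 54 features.
records = [
    {"Age": 53, "BMI": 29.4, "Cath": "CAD"},
    {"Age": 67, "BMI": 26.0, "Cath": "CAD"},
    {"Age": 54, "BMI": 24.5, "Cath": "Normal"},
    {"Age": 40, "BMI": 31.2, "Cath": "Normal"},
]

LABEL_MAP = {"CAD": 1, "Normal": 0}


def encode_labels(rows, column="Cath"):
    """Map the nominal class label to 1 (CAD) or 0 (Normal)."""
    return [LABEL_MAP[row[column]] for row in rows]


def min_max_scale(values):
    """Rescale values linearly to the interval [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def z_score(values):
    """Standardize values to zero mean and unit (population) variance."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


labels = encode_labels(records)
ages = [row["Age"] for row in records]
ages_minmax = min_max_scale(ages)
ages_z = z_score(ages)
```

Min–Max scaling keeps the shape of the original distribution but is sensitive to outliers, whereas the Z-score is less bounded but centers every feature at zero; which one suits a given classifier is a design choice this sketch does not settle.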

3.2 Feature Selection

Feature selection (FS) is also known as variable selection. FS is used to select significant features, which improves the accuracy of heart disease prediction and reduces data volume. It removes irrelevant and redundant data. Selecting the right subset allows machine learning methods to train more easily, improves model accuracy, reduces model complexity, makes the model easier to interpret, and reduces overfitting.

Feature selection methods are as follows:

(i) Filter approach: a statistical measure is applied to score each feature and assign it a value.

(ii) Wrapper approach: a predictive model is used to test a combination of attributes and to score it based on model accuracy.

(iii) Embedded method: combines the strengths of the filter and wrapper approaches.
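As an illustration of the filter approach listed above, the sketch below scores each feature by the absolute Pearson correlation with the class label and ranks the features by that score. The choice of Pearson correlation as the statistical measure, the function names, and the toy feature columns are assumptions made for this example, not the procedure used in this paper.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences (0.0 if one is constant)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / sqrt(var_x * var_y)


def filter_rank(features, labels):
    """Rank features by |correlation with the label|, strongest first."""
    scores = {name: abs(pearson(column, labels)) for name, column in features.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical toy columns, one value per patient.
features = {
    "informative": [0.9, 0.8, 0.2, 0.1, 0.85, 0.15],
    "noisy": [0.5, 0.1, 0.4, 0.9, 0.3, 0.6],
    "constant": [1.0] * 6,
}
labels = [1, 1, 0, 0, 1, 0]

ranking = filter_rank(features, labels)
```

A wrapper approach would replace the correlation score with the cross-validated accuracy of a classifier trained on each candidate subset, which is more expensive but accounts for interactions between features.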

In this research, we used the firefly algorithm (FA) to select significant features in the dataset. FA is a nature-inspired meta-heuristic, a class of algorithms that is effective for optimization problems [8]. In this algorithm, flashing light helps fireflies find their mates. Fireflies move toward brighter positions, where the light intensity is obtained from the objective function, in order to reach promising solutions. For the basic formulation of FA, the following three rules are adopted.

Every firefly is unisex, so every firefly is attracted to every other firefly regardless of sex.

(i) Attractiveness is proportional to brightness, and both decrease as the distance between two fireflies increases. Thus the less bright firefly moves toward the brighter one. If a firefly cannot see a brighter one, it moves randomly to any position.

(ii) A firefly's brightness is determined by the objective or fitness function.

(iii) If the firefly is clueless, it will terminate.

Initializing the firefly positions: the fitness (objective) function β(i) is used to initialize the firefly positions and is evaluated for all fireflies. The firefly with the maximum accuracy is the brightest firefly. The distance between any two fireflies i and j at positions xi and xj can be calculated using Eq. (1):

where xfirefly is the tth component of the firefly’s spatial coordinate x, and xm is the number of dimensions. All fireflies move toward the firefly with the maximum accuracy. The position of a firefly can be calculated using Eq. (2):

where LB = 0.5 and UB = 1.
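For orientation, the distance and movement rules are commonly written as below in the standard firefly algorithm. This is a sketch under the assumption that Eqs. (1) and (2) follow that standard form; the symbols d (number of dimensions), β0 (attractiveness at zero distance), γ (light absorption coefficient), α (step size), and ε (random vector) are not defined in this paper, and the bounds LB and UB may enter the authors' position update in a way this form does not show.

```latex
% Distance between fireflies i and j in a d-dimensional search space
r_{ij} = \lVert x_i - x_j \rVert = \sqrt{\sum_{k=1}^{d} \left( x_{i,k} - x_{j,k} \right)^2}

% Movement of firefly i toward a brighter firefly j at iteration t
x_i^{t+1} = x_i^{t} + \beta_0 e^{-\gamma r_{ij}^{2}} \left( x_j^{t} - x_i^{t} \right) + \alpha\, \epsilon_i^{t}
```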

The steps involved in the firefly algorithm are summarized in Fig. 6, and the function that executes the firefly algorithm is shown in Fig. 7. This method yields eight significant features: age, length, systolic murmur, typical chest pain, exertional CP, poor R progression, region RWMA, and Cath (class label). To determine the optimal number of features, the firefly algorithm was used to balance underfitting against overfitting, so that the selected features contribute most to model performance.

Flowchart of Firefly algorithm [31]

Firefly algorithm [16]
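The firefly-based feature search can be illustrated with the minimal sketch below. It is not the function shown in the figure: it assumes a common binary variant in which each firefly holds a position in the unit hypercube and a feature counts as selected when its coordinate exceeds 0.5. The parameter names (`beta0`, `gamma`, `alpha`) and the toy fitness function are hypothetical; in this paper the fitness would be the accuracy of a classifier trained on the selected features.

```python
import math
import random


def firefly_feature_selection(fitness, n_features, n_fireflies=8, n_iter=20,
                              beta0=1.0, gamma=1.0, alpha=0.3, seed=0):
    """Return (best_mask, best_fitness) found by a simple binary firefly search."""
    rng = random.Random(seed)

    def to_mask(position):
        # A feature counts as selected when its coordinate exceeds 0.5.
        return tuple(value > 0.5 for value in position)

    def random_step(value):
        # Small uniform perturbation, clipped to the unit interval.
        return min(1.0, max(0.0, value + alpha * (rng.random() - 0.5)))

    swarm = [[rng.random() for _ in range(n_features)] for _ in range(n_fireflies)]
    light = [fitness(to_mask(position)) for position in swarm]
    best_index = max(range(n_fireflies), key=lambda i: light[i])
    best_mask, best_light = to_mask(swarm[best_index]), light[best_index]

    for _ in range(n_iter):
        for i in range(n_fireflies):
            moved = False
            for j in range(n_fireflies):
                if light[j] > light[i]:
                    # Attraction decays with the squared distance to firefly j.
                    dist_sq = sum((a - b) ** 2 for a, b in zip(swarm[i], swarm[j]))
                    beta = beta0 * math.exp(-gamma * dist_sq)
                    swarm[i] = [random_step(a + beta * (b - a))
                                for a, b in zip(swarm[i], swarm[j])]
                    light[i] = fitness(to_mask(swarm[i]))
                    moved = True
            if not moved:
                # No brighter firefly in sight: take a purely random step.
                swarm[i] = [random_step(a) for a in swarm[i]]
                light[i] = fitness(to_mask(swarm[i]))
            if light[i] > best_light:
                best_mask, best_light = to_mask(swarm[i]), light[i]
    return best_mask, best_light


# Toy fitness (an assumption): reward three "useful" features, penalize extras.
RELEVANT = {0, 2, 5}


def toy_fitness(mask):
    hits = sum(1 for k, on in enumerate(mask) if on and k in RELEVANT)
    extras = sum(1 for k, on in enumerate(mask) if on and k not in RELEVANT)
    return hits - 0.5 * extras


mask, score = firefly_feature_selection(toy_fitness, n_features=8)
selected = [k for k, on in enumerate(mask) if on]
```

The best mask is tracked separately from the swarm, so a random step that lowers a firefly's brightness never discards the best subset seen so far.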

Comparatively, while traditional ensemble methods focus on model aggregation to improve prediction, our approach uniquely optimizes the input features using the firefly algorithm before model aggregation. This preemptive optimization enhances the individual model contributions within the ensemble, ensuring that the aggregation yields more precise and reliable predictions. Such optimization is rarely addressed in current literature, positioning our methodology at the forefront of predictive analytics in healthcare.

3.3 Classification Modeling Using Data Mining Techniques

After features are selected using the firefly algorithm, the stacking and voting ensembling techniques are used to train models with machine learning algorithms. Tenfold cross-validation is used for training and evaluation. This method divides the complete dataset into 10 subsets and runs 10 times: in each run, 9 subsets are used for training and the remaining subset is used for testing. Finally, the results are summarized by averaging over the 10 repetitions. The subsets are created using stratified sampling, so every subset has approximately the same class ratio as the full dataset.
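The stratified tenfold procedure described above can be sketched in plain Python as follows. The helper name `stratified_k_fold` and the toy label vector (70 positives, 30 negatives) are assumptions for illustration and do not reflect the class counts of the actual dataset.

```python
import random
from collections import defaultdict


def stratified_k_fold(labels, k=10, seed=0):
    """Yield (train_indices, test_indices) pairs with class ratios preserved."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    # Deal the shuffled indices of each class round-robin into the k folds.
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for position, index in enumerate(indices):
            folds[position % k].append(index)

    # Each fold serves once as the test set; the other k - 1 form the training set.
    for held_out in range(k):
        test_idx = sorted(folds[held_out])
        train_idx = sorted(index
                           for fold_id, fold in enumerate(folds) if fold_id != held_out
                           for index in fold)
        yield train_idx, test_idx


# Toy labels (assumption): 70 positive and 30 negative samples.
labels = [1] * 70 + [0] * 30
splits = list(stratified_k_fold(labels, k=10))
```

With these toy labels every test fold holds 7 positive and 3 negative samples, matching the 70:30 ratio of the full set; a per-fold score would then be averaged over the 10 folds.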

The rationale for employing Naive Bayes, logistic regression, and SVM in our ensemble models stems from their complementary predictive strengths. Naive Bayes offers a robust probabilistic foundation, ideal for initial data interpretation. Logistic regression provides excellent binary classification capabilities, making it invaluable for binary outcomes like heart disease presence or absence. Lastly, SVM is incorporated for its ability to model complex, high-dimensional data spaces, refining the final classification boundary. Together, these models cover a broad spectrum of data characteristics, ensuring that our ensemble approach is not only versatile but also highly accurate.

3.3.1 Ensembling Techniques

Ensemble methods create multiple models and then combine them into a single model with better results. These methods produce more accurate solutions than a single model:

(i) Stacking: two or more classification or regression models are combined by means of a meta-classifier or a meta-regressor. The base-level models are trained on the whole dataset, and the meta-model is trained on the outputs of the base-level models, used as features. The base classifiers are Naive Bayes and logistic regression, and the meta-classifier is a support vector machine (SVM).

(ii) Voting: different machine learning classifiers are combined, using either a hard vote or a soft vote to predict the target class. In hard (majority) voting, the class label predicted for a test sample is the label predicted by the majority of the individual classifiers. Soft voting predicts the class label with the maximum class probability, averaged over all the individual classifiers. This technique combines Naive Bayes, logistic regression, and SVM.
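The two ensembles described above can be assembled with scikit-learn roughly as follows. This is a hedged sketch, not the authors' code: the data are a synthetic stand-in generated with `make_classification` (303 rows and seven predictors, assumed here because the eight selected features include the class label), `GaussianNB` is assumed as the Naive Bayes variant, and soft voting is an arbitrary choice between the two voting modes. Note also that scikit-learn's `StackingClassifier` trains the meta-classifier on cross-validated base predictions, which may differ from the procedure used in this paper.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import StackingClassifier, VotingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.naive_bayes import GaussianNB
from sklearn.svm import SVC

# Synthetic stand-in data (assumption): NOT the Z-Alizadeh Sani dataset.
X, y = make_classification(n_samples=303, n_features=7, n_informative=4,
                           n_redundant=1, random_state=0)

# Stacking: Naive Bayes and logistic regression feed an SVM meta-classifier.
stacking = StackingClassifier(
    estimators=[("nb", GaussianNB()),
                ("lr", LogisticRegression(max_iter=1000))],
    final_estimator=SVC(),
    cv=5,
)

# Voting: the three classifiers vote; soft voting averages class probabilities.
voting = VotingClassifier(
    estimators=[("nb", GaussianNB()),
                ("lr", LogisticRegression(max_iter=1000)),
                ("svm", SVC(probability=True, random_state=0))],
    voting="soft",
)

# Stratified tenfold cross-validation, averaged over the ten folds.
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=0)
scores = {
    name: cross_val_score(model, X, y, cv=cv, scoring="accuracy").mean()
    for name, model in [("stacking", stacking), ("voting", voting)]
}
```

Because the data are synthetic, the resulting accuracies say nothing about the 86.79% reported here; the sketch only shows how the base learners and the meta-classifier are wired together.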

3.4 Performance Computation

The predictions of the models were evaluated using three performance measures: accuracy, F-measure, and precision. Accuracy is the share of correctly predicted cases among all instances; F-measure is the harmonic mean of precision and recall; and precision is the proportion of positive predictions that are correct.
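These measures can be computed directly from the counts of true and false positives and negatives, as in the sketch below. The function name and the ten toy predictions are assumptions for illustration; recall is included because the F-measure depends on it.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F-measure for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F-measure: harmonic mean of precision and recall.
    f_measure = (2 * precision * recall / (precision + recall)
                 if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f_measure": f_measure}


# Toy labels and predictions (assumption): 1 = CAD, 0 = Normal.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 1, 0]
y_pred = [1, 1, 1, 0, 0, 0, 1, 0, 1, 0]

metrics = classification_metrics(y_true, y_pred)
```

In this toy case there are 4 true positives, 4 true negatives, 1 false positive, and 1 false negative, so all four measures equal 0.8.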

4 Results and Discussion

The experiments were run multiple times on the provided heart dataset, and the mean accuracy and the standard deviation were recorded for each of the datasets. Because the heart disease dataset is highly varied, the repeated splitting of the dataset to obtain the best accuracy with the cross-validation approach took more than 13000 ms. For each of the heart disease datasets, the proposed strategy was then applied by choosing 10, 50, and 100 elements in the firefly algorithm, respectively. The feature selection criterion used here is mutual information. The G parameter obtained for the individual firefly settings is 10, 50, and 100 elements. The other parameters are k = 5 and a maximum number of labeled samples of max_queried = 60 for the heart disease dataset and max_queried = 30 for all remaining datasets. In all experiments, 80% of the dataset is used for training and 20% is used for testing. Although precision was not the key interest here, the proposed strategy still shows reasonably good results with a relatively small number of training samples.

Table 2 presents the performance measures (accuracy, precision, recall, and F-measure) of the stacking and voting techniques without feature selection.

The features selected for classification are age, length, systolic murmur, typical chest pain, exertional CP, poor R progression, region RWMA, and Cath (class label). Table 3 presents the output metrics of the stacking and voting techniques.

Our analysis further explores how the selected features specifically impact the predictive model’s performance, with a detailed discussion on the implications of these features in clinical settings. The discussion also addresses computational limitations and suggests potential optimizations for enhancing algorithm efficiency.

Based on the analysis of these two tables, stacking with eight significant features has the highest accuracy of 86.79%. It is inferred that stacking achieved the highest accuracy, precision, recall, and F-measure. Figure 8 shows the ROC curve for the stacking classifier, and it can be seen that the stacking classifier produced the best prediction accuracy. The confusion matrix is given in Fig. 9.

Table 4 shows the situations in which the proposed ensemble-based classification with firefly-optimized features produced the best prediction accuracy, together with its probabilities. Table 5 summarizes the overall parameter settings of the firefly algorithm with stacking and voting classification. Table 6 summarizes the performance comparison of FA initialization, FA ending design, FA without parameter setting, FA with parameter setting, normalized FA, FA voting, and FA stacking. The optimized firefly algorithm after parameter setting is shown in Fig. 10. The proposed stacking-based classification strategy with features selected using the optimized firefly algorithm not only beats the other ensemble models in the majority of cases but also shows considerable consistency in performance across different situations. Classifiers that fit poorly on the dataset could mislead the final classification decision and thereby degrade the prediction accuracy. The weighted classifier, on the other hand, computes weighted parameters for the classifiers, thereby giving less importance to the decisions of the classifiers having a background marked by several attributes such as train/test split, cross-validation, nesting, and feature selection, as shown in Fig. 11.

In comparison to existing methods, our model not only utilizes common ensemble techniques but also incorporates the firefly algorithm to strategically optimize feature selection. Previous studies have demonstrated the efficacy of ensemble methods; however, they often do not address the potential overfitting and feature redundancy. By integrating the firefly algorithm, our approach mitigates these issues, leading to more robust predictions. This comparative analysis underscores the enhanced accuracy of our model against benchmarks set by conventional ensemble methods without optimized feature selection.

Our work can be compared with other ensemble methods commonly used in heart disease classification, such as random forest, gradient boosting machines (GBM), and bagging techniques. Comparing our stacked ensemble with optimized firefly feature selection against these methods in terms of classification accuracy, interpretability, computational efficiency, and robustness against overfitting can provide a comprehensive understanding of its performance relative to existing methods.

The effect of sample size on how close the classification result is to the theoretical chance level was analyzed. The sample size was varied from 20 to 1000, as shown in Fig. 12. To assess the classification results of K-fold, nested, train/test split, and ensembled classifiers, models with some degree of hyperparameter tuning were used. Figure 11 shows the results of using both a complex algorithm, in which the ensemble method's selections were combined with an ensemble classifier, and a simpler algorithm, with a t-test and a strategic modeled classifier. Although the strategic modeled results increase from this point, the predictions given by K-fold cross-validation were considerably higher than the theoretical chance level of 50%. The highest sample deviation was seen with higher sample sizes; nonetheless, the difference was at the sample size of N = 1000. Interestingly, the prediction distributions produced by nested CV and the train/test split differ significantly from the 50% chance level with SVM and strategic modeled algorithms at 96.5% accuracy (p ranged from 4.3 × 10⁻⁴ to 0.997; a moderate sample size is normalized by chance at the 95% confidence level). This is most likely because dynamic learning keeps the number of training samples to a minimum and eliminates irrelevant samples. Additionally, new and relevant samples are added after every iteration to reduce the chance of overfitting the classifier. Also, the limitations of one classifier are compensated by other classifiers, which are often able to capture complementary information, thereby improving the correlation of the information provided by several correlation-finding strategies. Accordingly, it can safely be said that the train/test split is a good evaluation strategy for improving the performance of the classifiers.

Because labeled data for disease classification are scarce, conventional supervised machine learning algorithms may produce errors during the training stage. As a solution to this problem, we propose an ensemble-based dynamic learning model to obtain improved prediction outcomes with fewer features, selected using the firefly algorithm. Using an ensemble of classifiers becomes important when the number of samples is small; it lessens the likelihood of picking less correlated information, as its members are trained in a non-identical manner. The aim of the technique is to maintain accuracy with the limited amount of data that is available. The ways of selecting the partitions are tested on the range of ideal features present in the given data sample. In this paper, we apply a pool-based dynamic feature sampler, in which a large pool of unlabeled data samples is available to the classifier and selections can be made only from this pool.

5 Conclusion

The healthcare industry holds enormous amounts of data from people suffering from different ailments. Finding a system to turn such raw data into useful information would help keep most people healthy. Data mining concepts can be used to interpret the raw data and make accurate predictions, giving insights that serve the goal of disease prevention. Heart disease is the main cause of death at present. In this study, significant features were chosen using the firefly algorithm, and the best-performing classification models, built with ensemble techniques that improve the accuracy of heart disease prediction, are proposed. First, an experiment was conducted using the UCI Z-Alizadeh Sani dataset to identify the important features, and the same standard process was improved using feature selection and ensemble techniques. The eight major features selected in this study are age, length, systolic murmur, typical chest pain, exertional CP, poor R progression, region RWMA, and Cath (class label). Stacking and voting techniques were applied to the dataset. Stacking with the significant features has the maximum accuracy of 86.79% on the Z-Alizadeh Sani dataset.

This study can be improved in several ways that would address its limitations. It can be extended by repeating the experiments on large-scale, real-time data. Further work could test different combinations of data mining or machine learning techniques for heart disease prediction. Additionally, new feature selection methods could be explored to gain a more detailed view of the important attributes and further improve prediction accuracy.

Data Availability

Data used for the findings will be shared by the corresponding author upon request.

References

World Health Organization (WHO): Cardiovascular diseases (CVDs)—Key Facts (2017). http://www.who.int/news-room/fact-sheets/detail/cardiovascular-diseases-(cvds)

Srinivas, K., Rao, G.R., Govardhan, A.: Analysis of coronary heart disease and prediction of heart attack in coal mining regions using data mining techniques. In: 5th International Conference on Computer Science and Education (ICCSE), Hefei, pp. 1344–1349 (2010)

Dey, A., Singh, J., Singh, N.: Analysis of supervised machine learning algorithms for heart disease prediction with reduced number of attributes using principal component analysis. Int. J. Comput. Appl. 140(2), 27–31 (2016)

Idris, N.M., Chiam, Y.K., Varathan, K.D., Wan Ahmad, W.A., Chee, K.H., Liew, Y.M.: Feature selection and risk prediction for patients with coronary artery disease using data mining. Med. Biol. Eng. Comput. 58(12), 3123–3140 (2020)

Banati, H., Bajaj, M.: Fire fly based feature selection approach. Int. J. Comput. Sci. Issues (IJCSI) 8(4), 2 (2011)

Sharma, A., Zaidi, A., Singh, R., Jain, S., Sahoo, A.: Optimization of SVM classifier using Firefly algorithm. In: IEEE Second International Conference on Image Information Processing (ICIIP-2013), pp. 198–202. IEEE (2013)

Belloufi, A., Assas, M., Rezgui, I.: Intelligent selection of machining parameters in multipass turnings using firefly algorithm. Model. Simul. Eng. 2014, 1 (2014)

Pramudita, B.A., Wibirama, S., Izhar, L.I., Setiawan, N.A.: EEG motor imagery signal classification using firefly support vector machine. In: 2018 International Conference on Intelligent and Advanced System (ICIAS), pp. 1–6. IEEE (2018, August)

Sangeetha, R., Sathappan, S.: Preprocessing using attribute selection in data stream mining. In: 2018 3rd International Conference on Communication and Electronics Systems (ICCES), pp. 431–438. IEEE (2018)

Abhinaya, P.M., Nivethitha, V.: Detection of novel attacks by anomaly intrusion detection system using classifiers. Int. J. Eng. Technol. 7, 54–58 (2017)

Abdullah, A.S., Rajalaxmi, R.: A data mining model for predicting the coronary heart disease using random forest classifier. In: International Conference in Recent Trends in Computational Methods, Communication and Controls, pp. 22–25 (2012)

Ganesan, M., Sivakumar, N.: IoT based heart disease prediction and diagnosis model for healthcare using machine learning models. In: IEEE International Conference on System, Computation, Automation and Networking (ICSCAN), pp. 1–5. IEEE (2019)

Mohan, S., Thirumalai, C., Srivastava, G.: Effective heart disease prediction using hybrid machine learning techniques. IEEE Access 7, 81542–81554 (2019)

Yadav, D.C., Pal, S.: Prediction of heart disease using feature selection and random forest ensemble method. Int. J. Pharmaceut. Res. 12(4), 56–66 (2020)

Sharma, S.: Heart diseases prediction using hybrid ensemble learning. Dissertation—Dublin Business School Excellence through Learning (Jan. 2020)

Yang, X.S., He, X.S.: Why the firefly algorithm works? In: Nature-Inspired Algorithms and Applied Optimization, pp. 245–259. Springer, Cham (2018)

Ezhilarasi, K., Hussain, D.M., Sowmiya, M., Krishnamoorthy, N.: Crop information retrieval framework based on LDW-ontology and SNM-BERT techniques. Inform. Technol. Control 52(3), 731–743 (2023)

Zhenya, Q., Zhang, Z.: A hybrid cost-sensitive ensemble for heart disease prediction. BMC Med. Inform. Decis. Mak. 21, 1–18 (2021)

Krishnamoorthy, N., Nirmaladevi, K., Shanthi, S., Karthikeyan, N.: Investigation and comparison of different CNN architectures on tomato leaf disease prediction using deep learning. AIP Conf. Proc. 2387, 1 (2021)

Mahesh, T.R., Sivakami, R., Manimozhi, I., Krishnamoorthy, N., Swapna, B.: Early predictive model for detection of plant leaf diseases using MobileNetV2 architecture. Int. J. Intell. Syst. Appl. Eng. 11(2), 46–54 (2023)

Chinthamu, N., Gooda, S.K., Shenbagavalli, P., Krishnamoorthy, N., Selvan, S.T.: Detecting the anti-social activity on Twitter using EGBDT with BCM

De, R., Chakraborty, A., Chatterjee, A., Sarkar, R.: A weighted ensemble-based active learning model to label microarray data. Med. Biol. Eng. Comput. 58(10), 2427–2441 (2020)

Krishnamoorthy, N., Asokan, R., Jones, I.: Classification of malignant and benign micro calcifications from mammogram using optimized cascading classifier. Curr. Signal Transduct. Ther. 11(2), 98–104 (2016)

Devi, K.N., Krishnamoorthy, N., Jayanthi, P., Karthi, S., Karthik, T., Kiranbharath, K.: Machine learning based adult obesity prediction. In: 2022 International Conference on Computer Communication and Informatics (ICCCI), pp. 1–5. IEEE (Jan. 2022)

Deepika, D., Balaji, N.: Effective heart disease prediction with Grey-wolf with Firefly algorithm-differential evolution (GF-DE) for feature selection and weighted ANN classification. Comput. Methods Biomech. Biomed. Engin. 25(12), 1409–1427 (2022)

Kumar, P.R., Ravichandran, S., Narayana, S.: Ensemble classification technique for heart disease prediction with meta-heuristic-enabled training system. Bio-Algorithms Med-Syst. 17(2), 119–136 (2020)

Krishnamoorthy, N., Ramya, K.S., Pavithra, K., Naveenkumar, D.: TV shows popularity and performance prediction using CNN algorithm. J. Adv. Res. Dyn. Control Syst 12, 1541–1550 (2020)

Wang, J., Liu, C., Li, L., Li, W., Yao, L., Li, H., Zhang, H.: A stacking-based model for non-invasive detection of coronary heart disease. IEEE Access 8, 37124–37133 (2020)

Abdar, M., Książek, W., Acharya, U.R., Tan, R.S., Makarenkov, V., Pławiak, P.: A new machine learning technique for an accurate diagnosis of coronary artery disease. Comput. Methods Programs Biomed. 179, 104992 (2019)

Kapila, R., Saleti, S.: Optimizing fetal health prediction: Ensemble modeling with fusion of feature selection and extraction techniques for cardiotocography data. Comput. Biol. Chem. 2023, 107973 (2023)

Kumar, R., Talukdar, F.A., Dey, N., Balas, V.E.: Quality factor optimisation of spiral inductor using firefly algorithm and its application in amplifier. Int. J. Adv. Intell. Parad. 11(3–4), 299–314 (2018)

Acknowledgements

The authors extend their appreciation to the Deanship of Research and Graduate Studies at King Khalid University for funding this work through Small Research Project under grant number RGP1/139/45.

Funding

This research receives no funding from any organization.

Author information

Contributions

K.N and V.K.V took care of the review of literature and methodology. M.A and N.K have done the formal analysis, data collection, and investigation. M.T.R has done the initial drafting and statistical analysis. N.K, E.M, and S.G have supervised the overall project. All the authors of the article have read and approved the final article.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Consent for Publication

Not applicable as the work is carried out on publicly available dataset.

Ethics Approval and Consent to Participate

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Natarajan, K., Vinoth Kumar, V., Mahesh, T.R. et al. Efficient Heart Disease Classification Through Stacked Ensemble with Optimized Firefly Feature Selection. Int J Comput Intell Syst 17, 174 (2024). https://doi.org/10.1007/s44196-024-00538-0

DOI: https://doi.org/10.1007/s44196-024-00538-0