Abstract

Deep learning for identifying abnormalities in medical images has rapidly become a significant area of interest across medical diagnostic applications. The automated recognition of Posterior Fossa Tumors (PFT) in Magnetic Resonance Imaging (MRI) plays a vital role, as it provides essential information about abnormal tissue that is needed for treatment planning. Manual examination by radiologists has traditionally been the standard approach for identifying abnormalities in brain MRI, but it does not scale to large volumes of data. Automated PFT detection techniques are therefore being developed to reduce radiologists' workload. In this paper, posterior fossa tumors are detected and classified in brain MRI using Convolutional Neural Network (CNN) algorithms, and the results and accuracy obtained from each algorithm are explained. A dataset of 300,000 images from approximately 500 patients from the Children's Cancer Hospital Egypt (CCHE) was used. The CNN algorithms investigated to classify the PFT were VGG16, VGG19, and ResNet50. Moreover, the behavior of the networks was explained using three different techniques: LIME, SHAP, and ICE. Overall, the results showed that VGG16 was the best model, with accuracy rates of 95.33%, 93.25%, and 87.4% for VGG16, VGG19, and ResNet50, respectively.

1 Introduction

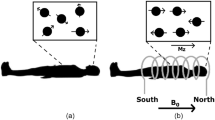

Posterior fossa tumors in children (PFTC) are among the most prevalent tumors in children. Medulloblastoma, astrocytoma, and ependymoma are the most common types of posterior fossa tumors in children [1], whereas brain stem gliomas and atypical teratoid/rhabdoid tumors are uncommon. Because space in the posterior fossa is limited, tumors in this area produce symptoms early and demand rapid treatment to avert significant mortality and morbidity [2]. Timely identification and diagnosis of these tumors, along with immediate consultation with a neurosurgeon, are critical for the best possible management of pediatric infratentorial brain tumors. Oncologists typically use medical imaging techniques such as Magnetic Resonance Imaging (MRI) for the initial evaluation of brain tumors [3]. This modality produces highly detailed images of brain anatomy, allowing alterations to be detected. However, if the doctor suspects a posterior fossa tumor and requires further information about its type, a specialist's examination of the suspicious tissue (the tumor) is important for a detailed prognosis. Over the last few decades, imaging of posterior fossa tumor tissue has improved in resolution and contrast enhancement, allowing radiologists to detect smaller tumors and hence reach more reliable diagnoses [4,5,6]. The precision of posterior fossa tumor detection can be improved further by merging images obtained from different imaging methods and by exploiting recent advances in artificial intelligence (AI) for computer vision: AI can be combined with these imaging techniques to create a computer-aided diagnostic system.

These methods can assist clinicians in improving the efficiency of tumor detection in the early stages. Several AI approaches, like CNN, have recently been used to identify and recognize posterior fossa cancers [7].

CNNs are among the most significant recent advances in deep learning and are widely used for diagnosing disease from medical images such as MRI. They have become popular in medical image classification and analysis because they do not require hand-crafted feature extraction prior to training [8]. In general, CNNs are designed to minimize or eliminate data pre-processing steps and are typically applied to raw images. A CNN comprises convolutional layers, pooling layers, and fully connected layers. The power of machine learning lies in its ability to make decisions based on features learned during training. The accuracy of a model is an important factor in determining how far it can be trusted, but a model whose decisions cannot be explained remains unreliable [9].

To build this trust, a model must not only produce accurate results but also explain them. The foundation of XAI is interactive machine learning (IML), which is all about adding humans into the loop of information processing. Visual analytics is then built on top of IML and supports the workflow by making the user more aware of the data, adding interactivity and visualization to machine learning. The overall focus is to make the doctor aware of the features that are responsible for any given result [10, 11]. These explanations can give doctors an understanding of how the system makes decisions, which is a fundamental element in building trust in and reliance on AI systems.

The main contribution of this paper is the classification, explanation, and ablation study of pediatric posterior fossa tumors using the best convolutional neural network algorithms. These techniques are being established to minimize radiologists' time.

This paper is structured into six parts. The first section serves as an introduction. Section 2 provides an overview of previous research related to PFT classification using MRI, deep learning, and machine learning techniques. Section 3 outlines the framework of our work. Section 4 reveals the outcomes. Sections 5 and 6, respectively, delve into the conclusion and future endeavors.

2 Literature Review

Recently, CNN and Explainable Artificial Intelligence (XAI) methods have been widely used for the detection and explainable grading of PFT using several imaging techniques, particularly MRI. This section presents the latest and most pertinent research studies related to the paper's subject matter.

Alexandra I. Korda et al. [12] used explainable artificial intelligence to identify texture abnormalities in brain MRI of first-episode psychosis (FEP) and clinical high-risk subjects. The proposed framework improves classification decisions for FEP, CHR_NT, and HC subjects, with accuracy greater than 72% in both analyses, validates diagnosis-relevant features, and may help to identify structural biomarkers of psychosis in addition to changes in brain volume.

Toygar Tanyel et al. [13] introduced a technique that uses MRI characteristics to decipher machine learning outcomes when differentiating between PFT types. The methodology comprises data analysis using kernel density estimation with Gaussian distributions to investigate individual MRI characteristics, an investigation of the correlations between these characteristics, and a full analysis of the ML model's behavior. The findings show that, in the absence of very large datasets, a simplified strategy produces substantially more prominent and explainable results.

Alves et al. [14] introduced a diagnostic method for pediatric posterior fossa tumors (PFT). With excellent sensitivity and specificity, the modified process diagram provides a structured tool to assist in the adjunct diagnosis of pediatric PFT.

Moran Artzi et al. [15] proposed a new convolutional neural network technique, using a 2D ResNet50 to classify posterior fossa tumors. They achieved an accuracy rate of 88%.

Hugo Oliveira et al. [16] presented an automated segmentation of posterior fossa structures in pediatric brain MRI using a U-Net CNN. The dataset used in this model consists of 32 T2-weighted MRI scans, and the results were validated with fivefold cross-validation.

Morteza Esmaeili et al. [17] proposed an XAI system for human–machine interaction in the localization of brain tumors. They applied a widely recognized explainable AI algorithm to assess the effectiveness of three deep-learning models (DenseNet, GoogleNet, and MobileNet) in pinpointing tumor areas in the brain. The reported classification accuracies were 92% for DenseNet, 87% for GoogleNet, and 88.9% for MobileNet.

In [18], Angelov et al. described a high-level ontology of XAI approaches with two main categories: opaque models (ensemble methods, support vector machines, convolutional neural networks) and transparent models (decision trees, K-nearest neighbors, linear regression).

Catherine Pringle et al. [19] discussed the role of artificial intelligence in pediatric neuroradiology, covering machine learning techniques including artificial neural networks, support vector machines, decision trees, naïve Bayes, and CNNs, applied to MRI images from the open-source OpenNEURO.org datasets. The experimental results show accuracies of 93% for SVM, 94% for decision trees, 93% for naïve Bayes, and 90% for CNN.

Jacob T. et al. [20] described present AI capabilities, upcoming possibilities, and ethical issues in oncology, including the use of a CNN to detect tumors automatically.

Juan M. Corchado et al. [21] described explainable artificial intelligence innovations and edge computing applications that use XAI and AI models together. The aim is to turn black-box models into support systems by employing data treatments that can describe to specialists how the AI algorithms process knowledge.

Authors in [22] introduced pediatric PFT diagnosis with machine assistance. The best diagnostic performance was obtained through two phases, each with its unique set of image characteristics and classifier algorithm. The proposed method offers a high-performance prediction of pediatric PFT across a large scale.

According to the findings of these studies, different techniques for segmentation were identified, including region of interest, feature extraction, and classifier training. Difficulties in segmenting tumors and extracting features effectively led to poor accuracy in tumor detection and classification. Moreover, the classifiers used for learning features were found to be insufficiently efficient. An examination of existing techniques for segmenting and categorizing brain tumors reveals several constraints on their advancement. These include sensitivity to the local structure of the data, memory constraints, complexity, susceptibility to inaccuracies, instability, convoluted stochastic procedures, absence of uncertainty quantification, limited adaptability, and long training times.

3 Materials and Methods

3.1 Dataset Used and Implementation Setup

In this study, the experiments were conducted using a real dataset from the Children's Cancer Hospital Egypt (CCHE). This dataset was divided into two parts: training and testing files. Each of the training and testing sets included MRI images of three tumor types: Astrocytoma, Medulloblastoma, and Ependymoma. The dataset comprised approximately 500 patients with 300,000 MRI images. Figure 1 shows samples of the tumor classes in the dataset.

All proposed work stages are coded in Python 3.8.10 using the Google Colab platform. TensorFlow version 2.9.2 is utilized. The Google Colab platform makes use of a professional graphics card, the NVIDIA GeForce GTX 1080 Ti GPU, 16 GB of RAM, and an Intel Core i7 (2.8 GHz) CPU (Table 1).

3.2 Methodology

Existing PFT classification methods often lack interpretability, making it difficult for healthcare professionals to understand the reasoning behind a diagnosis. The proposed model addresses this gap by explaining its classifications, which fosters trust and transparency in decision-making and supports adoption in clinical practice.

To address the shortcomings of prior works, this research presents an explainable AI model for pediatric posterior fossa tumors. The primary emphasis of this research lies in the classification and explanation of brain tumors, with the aim of reducing mortality and improving longevity. The proposed methodology is a three-stage workflow, as described below.

The first stage classifies PFT using convolutional neural network algorithms, and the second stage applies post hoc explanation methods to explain the predictions of the image classification models. The third stage is the ablation study for the proposed work. Figure 2 shows the block diagram of the proposed methodology.

3.2.1 Classifying PFT Using Convolutional Neural Network (CNN)

CNNs are the most extensively used deep feed-forward neural networks and can handle a variety of data inputs, including 2D images and 1D signals. Overall, a CNN is made up of several layers, including an input layer, a convolution layer, a ReLU layer, a pooling layer, a fully connected layer, a classification layer, and an output layer [23, 24]. CNNs rely fundamentally on two processes: convolution with trainable filters, whose weights are adjusted during the training phase to achieve high accuracy, and down-sampling [9, 25].
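The two core operations can be illustrated with a minimal, framework-free sketch. It is not the authors' implementation: the 6 × 6 toy image and the hand-picked vertical-edge kernel are assumptions chosen for illustration, whereas in a real CNN the kernel weights are learned during training.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` with stride 1 and no padding.

    As in deep-learning frameworks, this is a cross-correlation
    (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Replace negative responses with zero."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool_2x2(feature_map):
    """Down-sample by keeping the maximum of each non-overlapping 2x2 window."""
    h = len(feature_map) // 2 * 2
    w = len(feature_map[0]) // 2 * 2
    return [
        [
            max(feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1])
            for c in range(0, w, 2)
        ]
        for r in range(0, h, 2)
    ]


# Toy image (assumption): dark left half, bright right half.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]

# Hand-picked vertical-edge filter (assumption); a CNN would learn such weights.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

features = relu(conv2d_valid(image, kernel))  # 4x4 map, strong response at the edge
pooled = max_pool_2x2(features)               # 2x2 map after down-sampling
```

The feature map responds only where the dark-to-bright transition falls inside the kernel window, and pooling keeps that response while halving each spatial dimension.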

At this stage, the cropped PFT images are saved as a dataset, and three folders are formed, each with images for a different tumor: Astrocytoma, Ependymoma, and Medulloblastoma. The dataset is divided into training and testing data, with 70% of the data used in training and the remainder used in testing. The classification stage in this paper is implemented and evaluated using the VGG16, VGG19, and ResNet-50 CNN algorithms as described below.
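A 70/30 split over three class folders could be sketched as follows. The file names are hypothetical, and the paper does not state whether the split was made per image or per patient; this sketch splits per image within each class, so a patient-level split would need an extra grouping step.

```python
import random


def split_per_class(files_by_class, train_fraction=0.7, seed=0):
    """Shuffle each class separately and cut it into train/test parts."""
    rng = random.Random(seed)
    train, test = [], []
    for label, files in sorted(files_by_class.items()):
        shuffled = list(files)
        rng.shuffle(shuffled)
        cut = int(round(train_fraction * len(shuffled)))
        train += [(path, label) for path in shuffled[:cut]]
        test += [(path, label) for path in shuffled[cut:]]
    return train, test


# Hypothetical file listing standing in for the three tumor folders.
files_by_class = {
    "Astrocytoma": [f"astrocytoma_{i}.png" for i in range(10)],
    "Ependymoma": [f"ependymoma_{i}.png" for i in range(20)],
    "Medulloblastoma": [f"medulloblastoma_{i}.png" for i in range(30)],
}

train, test = split_per_class(files_by_class)
```

Splitting inside each class keeps the class proportions of the training and testing sets close to those of the full dataset.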

3.2.1.1 VGG16

Visual Geometry Group VGG16 [26] is a CNN model with 16 weight layers and is regarded as one of the most effective architectures available. Rather than relying on a large number of hyperparameters, the VGG16 architecture consistently uses convolutional layers with a 3 × 3 kernel size. The model is significant because its pre-trained weights are publicly available online and can be integrated into individual systems and applications, and it stands out for its simplicity compared with more sophisticated models. It takes an input image of 224 × 224 pixels with three channels. The image is processed by the convolutional and pooling layers, in which each neuron's activation is obtained by applying an activation function to the weighted sum of its inputs. This is the fundamental principle of the VGG16 model.
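To make the layer structure concrete, the following sketch traces a 224 × 224 × 3 input through the standard VGG16 configuration and counts parameters. It assumes the published architecture (13 convolutional layers with 3 × 3 kernels and "same" padding, five 2 × 2 max-pools, and three fully connected layers); whether the authors kept the original 4096-unit fully connected layers for their three-class head is not stated, so the three-class figure is only illustrative.

```python
# Output channels of each 3x3 convolution; "M" marks a 2x2 max-pool (stride 2).
VGG16_CONFIG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
                512, 512, 512, "M", 512, 512, 512, "M"]


def trace_vgg16(height=224, width=224, channels=3, num_classes=1000):
    """Return the final feature-map shape and the conv / fully connected parameter counts."""
    conv_params = 0
    for item in VGG16_CONFIG:
        if item == "M":
            # Pooling halves the spatial size and has no parameters.
            height, width = height // 2, width // 2
        else:
            # 3x3 kernel weights plus one bias per output channel.
            conv_params += 3 * 3 * channels * item + item
            channels = item
    feature_shape = (height, width, channels)

    fc_params = 0
    inputs = height * width * channels
    for units in (4096, 4096, num_classes):
        fc_params += inputs * units + units
        inputs = units
    return feature_shape, conv_params, fc_params


weight_layers = sum(1 for item in VGG16_CONFIG if item != "M") + 3
shape, conv_params, fc_params = trace_vgg16()
_, _, fc_params_3_classes = trace_vgg16(num_classes=3)
```

With the ImageNet head the total is about 138 million parameters, most of them in the first fully connected layer, which is why transfer-learning setups often replace the head with a smaller one.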

3.2.1.2 VGG19

VGG19 [26] is a popular CNN architecture with 19 layers, 3 × 3 convolution filters, and a stride of one, designed to achieve good accuracy in large-scale image applications. VGG19 served in our framework as a large-scale image feature extractor whose results are compared with those of the other CNN models.

Several deep-learning classifiers were combined with the VGG19 extractor to achieve maximum brain tumor classification accuracy.

3.2.1.3 ResNet50

ResNet50 [27] is an image recognition CNN model pre-trained on the extensive ImageNet dataset. It encompasses numerous layers, including convolutional, pooling, and fully connected layers. This model can extract features for the brain tumor identification task: its lower layers learn generic image features that can be reused to detect brain tumors. To identify and classify brain tumors specifically, a new set of fully connected layers replaces the final layers of the ResNet50 model (Table 2).

By learning abstract representations with high semantic levels, the three CNN models that have been explained excel at picture analysis. They are particularly well suited for texture analysis as they are designed to use weight sharing and local connections in filter banks, enabling them to identify patterns across the entire image. The first convolutional layer of a CNN is dedicated to detecting basic features, primarily consisting of edges. Subsequent layers then progress to identifying larger and more intricate features through combinations of these simple nonlinear features. However, as the complexity and level of abstraction of the features, as well as the receptive fields of the neurons, increase in deeper layers, CNNs begin to recognize basic texture patterns, global structures and shapes, as shown in Fig. 3.

The PFT classification stage ends after implementing the three models and collecting their results, which are compared later to determine the most accurate model. The proposed models' performance is evaluated using the main performance indicators: Accuracy, Precision, Recall, and F1 score. The performance is evaluated by comparison with pre-trained models, and the validation loss is also monitored during training. All these aspects were taken into account to determine which model performed best on the given dataset.

3.2.2 PFT Post Hoc Explanation (Table 3)

To gain more trust, existing XAI methods were applied to posterior fossa tumor classification at this stage. Explainable AI (XAI) is a field that develops methodologies for explaining CNN predictions, addressing the black-box nature of AI model decisions, which has resulted in a lack of accountability and trust in those decisions. The PFT explanation phase was implemented using three different methods, as described below.

3.2.2.1 Local Interpretable Model-Agnostic Explanations (LIME)

LIME assists deep-learning practitioners in explaining any machine learning classifier by emphasizing the key input properties that are responsible for a prediction.

LIME generates an explanation by approximating a black-box model with an interpretable model. An interpretable representation for images is a binary vector that denotes the presence or absence of a series of similar contiguous pixels (also known as superpixels) [28].

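Equation 1 presents the explanation supplied by LIME:

\[ \xi \left( x \right) = \underset{g \in G}{\arg \min } \; \mathcal{L}\left( {f,g,\pi_{x} } \right) + \Omega \left( g \right) \tag{1} \]

where the explanation \(\xi \left( x \right)\) is a model \(g\) chosen from the explanation family \(G\), the fidelity function \(\mathcal{L}\) measures how poorly \(g\) approximates the black-box model \(f\) in the neighborhood \(\pi_{x}\) of the instance \(x\), and \(\Omega \left( g \right)\) is the complexity measure of \(g\).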
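The LIME procedure can be sketched without any library as a locally weighted linear fit over binary superpixel masks. This is an illustrative simplification rather than the `lime` package or the authors' code: the toy black box, the distance measure, and the kernel width are assumptions, and the complexity term of LIME is omitted, so every superpixel receives a coefficient.

```python
import math
import random


def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def lime_explain(black_box, num_superpixels, num_samples=500,
                 kernel_width=0.75, seed=0):
    """Fit a weighted linear surrogate around the unperturbed image (all ones)."""
    rng = random.Random(seed)
    d = num_superpixels
    # Accumulate the normal equations (X^T W X) beta = X^T W y.
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xtwy = [0.0] * (d + 1)
    for _ in range(num_samples):
        z = [rng.randint(0, 1) for _ in range(d)]   # 1 = superpixel kept
        distance = 1.0 - sum(z) / d                 # fraction switched off
        weight = math.exp(-(distance ** 2) / kernel_width ** 2)
        row = [1.0] + [float(v) for v in z]         # leading 1 = intercept
        y = black_box(z)
        for i in range(d + 1):
            xtwy[i] += weight * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += weight * row[i] * row[j]
    beta = solve_linear_system(xtwx, xtwy)
    return beta[0], beta[1:]


def toy_model(z):
    """Hypothetical class score that depends on superpixels 1 and 3 only."""
    return 0.2 + 0.7 * z[1] + 0.1 * z[3]


intercept, coefficients = lime_explain(toy_model, num_superpixels=4)
```

Because the toy score is itself linear in the mask, the surrogate recovers its weights, and superpixel 1 comes out as the most influential region. For a real CNN, `black_box` would render the masked image and return the predicted class probability.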

3.2.2.2 Shapley Additive Explanations (SHAP)

The SHAP technique is a game-theoretic way to understand any machine learning model's output [29]. The Shapley values are associated with the properties of each tumor type. It is difficult to interpret the CNN model directly from its mathematical behavior, and SHAP allows us to explain in detail how each input attribute affects the model's output. The SHAP technique computes the Shapley value for each input feature, providing a measure of the contribution of each feature to the model output. For an original model \(f\left( x \right)\), the explanation model \(g\left( {x^{\prime}} \right)\) with simplified input \(x^{\prime}\) is obtained from

\[ f\left( x \right) = g\left( {x^{\prime}} \right) = \phi_{0} + \sum\limits_{i = 1}^{M} {\phi_{i} x_{i}^{\prime} } \tag{2} \]

where \(M\) represents the number of input features, \(\phi_{i}\) is the Shapley value of feature \(i\), and \(\phi_{0}\) represents the constant value when all inputs are missing.
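For a handful of features, Shapley values can be computed exactly by enumerating all feature subsets. The sketch below does this for a hypothetical three-feature value function; it illustrates the definition rather than the approximations that SHAP libraries use for images, and it is not the authors' code.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, num_features):
    """Exact Shapley values.

    `value_fn` maps a frozenset of present feature indices to the model
    output obtained when only those features are present.
    """
    m = num_features
    phi = [0.0] * m
    for i in range(m):
        others = [j for j in range(m) if j != i]
        for size in range(m):
            # Weight of a coalition of this size in the Shapley formula.
            weight = factorial(size) * factorial(m - size - 1) / factorial(m)
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi


def toy_value(present):
    """Hypothetical model output with an interaction between features 0 and 2."""
    score = 10.0                      # output when all inputs are missing
    if 0 in present:
        score += 4.0
    if 1 in present:
        score += 2.0
    if 0 in present and 2 in present:
        score += 6.0
    return score


phi_0 = toy_value(frozenset())
phi = shapley_values(toy_value, 3)
full_output = toy_value(frozenset({0, 1, 2}))
```

The interaction bonus of 6 is shared equally between features 0 and 2, and the constant plus all Shapley values adds up to the full model output, matching the additive form of Eq. 2 with every simplified input set to 1.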

3.2.2.3 Individual Conditional Expectation (ICE)

ICE plots are used in our work as a visualization technique for understanding the relationship between a specific input variable and the output of a machine learning model. They show one line per individual instance, indicating how that instance's prediction varies when a feature changes while the other feature values are held fixed at the instance's own values. Breakdown plots, in turn, display feature attributions: the prediction is decomposed into contributions that can be assigned to individual interpretable features. Starting from the average predicted value over the whole dataset, such a plot is produced by adding or removing each feature contribution one by one [30]. An ICE plot thus shows the predicted values of the output variable for different values of a single input variable while keeping the values of all other input variables constant.
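The construction of ICE curves can be sketched in a few lines. The toy predictor and the two instances are hypothetical; the paper does not specify which input feature is varied for the MRI models, so this only shows the mechanics.

```python
def ice_curves(predict, instances, feature_index, grid):
    """One curve per instance: vary one feature over `grid`, keep the rest fixed."""
    curves = []
    for x in instances:
        curve = []
        for value in grid:
            modified = list(x)
            modified[feature_index] = value
            curve.append(predict(modified))
        curves.append(curve)
    return curves


def toy_predict(x):
    """Hypothetical model in which the effect of x[0] depends on x[1]."""
    return x[0] * x[1] + 0.5


instances = [[0.0, 1.0], [0.0, -1.0]]
grid = [0.0, 1.0, 2.0]

curves = ice_curves(toy_predict, instances, feature_index=0, grid=grid)

# Averaging the curves pointwise gives the partial dependence curve.
partial_dependence = [
    sum(curve[k] for curve in curves) / len(curves) for k in range(len(grid))
]
```

Here one curve rises and the other falls, while their average is flat. This is the kind of instance-level heterogeneity that ICE plots expose and that an averaged curve hides.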

Considerations for PFT explanation:

- Interpretability: All three methods can offer valuable insights, but LIME might be preferable due to its ability to generate human-understandable image attributions, which are crucial for explaining PFT classifications to healthcare professionals.

- Reliability: SHAP can be a good choice due to its theoretical foundation in game theory, potentially leading to more robust explanations. However, for PFT classification tasks, extensive evaluation is needed to assess the reliability of explanations generated by all three methods.

3.3 Performance Evaluation

To evaluate the performance of the proposed CNN architecture in the classification and explanation of the PFT in MRI images, the confusion matrix was produced for all instances, and an evaluation was conducted by comparing the outputs of the employed CNNs techniques with their corresponding original image labels, utilizing the generated confusion matrices. The criteria used to scrutinize the study's outcomes encompassed specificity, sensitivity, f1-score, and accuracy. The confusion matrix was used to calculate these metric values to measure how precisely the Posterior Fossa tumors are being graded (Table 4).

Four statistical quantities, namely true positive (TP), false positive (FP), false negative (FN), and true negative (TN), are computed from the confusion matrix and used to assess the performance of the proposed classification system [31], as described in the following subsections.

3.3.1 Accuracy

Accuracy is the fraction of the test images that have been correctly classified, as given by Eq. 3:

\[ \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{3} \]

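3.3.2 F1-Score

According to Eqs. 4, 5, and 6, the F1-score is defined as the harmonic mean of precision and recall. Its best value is 1 and its worst value is 0. It is a useful metric for imbalanced datasets because it is not dominated by the majority class in the way accuracy can be. First, we define Precision and Recall as follows:

\[ \text{Precision} = \frac{TP}{TP + FP} \tag{4} \]

\[ \text{Recall} = \frac{TP}{TP + FN} \tag{5} \]

Then, we can calculate the F1-score from Eq. 6:

\[ \text{F1-score} = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{6} \]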
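As a rough illustration of how these metrics follow from a three-class confusion matrix, the sketch below derives TP, FP, FN, and TN for one class in a one-vs-rest fashion. The matrix values are made up for the example and are not results from this study.

```python
def per_class_metrics(confusion, class_index):
    """One-vs-rest metrics; confusion[i][j] counts true class i predicted as j."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    tp = confusion[class_index][class_index]
    fn = sum(confusion[class_index]) - tp
    fp = sum(confusion[r][class_index] for r in range(n)) - tp
    tn = total - tp - fn - fp
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "tp": tp, "fp": fp, "fn": fn, "tn": tn,
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def overall_accuracy(confusion):
    """Fraction of all samples that lie on the diagonal."""
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / total


labels = ["Astrocytoma", "Ependymoma", "Medulloblastoma"]

# Illustrative counts only (rows = true class, columns = predicted class).
confusion = [
    [40, 5, 5],
    [4, 30, 6],
    [6, 5, 49],
]

astrocytoma = per_class_metrics(confusion, labels.index("Astrocytoma"))
accuracy = overall_accuracy(confusion)
```

In a multi-class setting the per-class accuracy of Eq. 3 and the overall accuracy generally differ, so it is worth stating which of the two a reported figure refers to.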

4 Results Analysis

In this section, the classification, explanation, and ablation results are presented in order, together with the confusion matrices of the CNN algorithms VGG16, VGG19, and ResNet-50. The assessment of the proposed model relies on accuracy and the number of incorrect predictions. The figures and tables below illustrate the outcomes of the CNNs (Table 5).

4.1 Classification Results

Table 6 displays our experimental results for the different models, tumor classes, and CNN classifiers. For an input image size of (224, 224), accuracy rates of 95.33%, 93.25%, and 87.4% were obtained for VGG16, VGG19, and ResNet50, respectively, as illustrated in Fig. 4.

4.1.1 Results of Confusion Matrix for VGG16

Figure 5 depicts the training and validation losses over 50 epochs. The VGG-16 loss curve shows a rapid drop in training and validation loss up to 20 epochs. After that, only a minor decrease in loss is observed, and the model is almost stable after 40 epochs. We used the confusion matrix in Fig. 6 to describe and visualize the performance of the PFT classification model. For an input MRI image size of 224 × 224, the accuracy rate is 95.33%.

4.1.2 Results of Confusion Matrix for VGG19

The confusion matrix and the training and validation loss of VGG19 over 50 epochs are illustrated in Figs. 7 and 8. On the test data, the model reached a loss of 0.20% and an accuracy of 93.25%.

4.1.3 Results of Confusion Matrix for ResNet-50

The confusion matrix and the accuracy and loss curves (red and blue lines) for the ResNet50 model are shown in Figs. 9 and 10.

4.2 Explanation Results

The explanations generated by LIME, SHAP, and ICE are presented here to help users understand the predictions of the complex models.

4.2.1 LIME

LIME interpretations for PFT classifications are presented as two images. The first is a PFT sample from the test images. The second shows the superpixels created with quick-shift segmentation, which are used to generate perturbed images; they play a crucial role in understanding the model's decision-making process and offer valuable insights into specific characteristics of a PFT, as shown in Figs. 11, 12, and 13. This empowers healthcare professionals not only to rely on the model's output but also to understand the reasoning behind it, fostering trust and collaboration in the diagnostic process.

4.2.2 SHAP

The following figures show the SHAP explanation results for the VGG16, VGG19, and ResNet50 CNN models, in that order.

Directly interpreting the CNN models from their mathematical behavior is difficult. With SHAP, the effect of each input feature on the model output can be explained, as illustrated in Figs. 14, 15, and 16. Red pixels show positive SHAP values, which increase the likelihood of the class. The higher the positive value, the stronger the feature's influence in pushing the model's prediction toward that particular tumor class. Blue pixels show negative SHAP values, which diminish the likelihood of the class. The larger the magnitude of the negative value, the stronger the feature's influence in pushing the model's prediction away from that particular tumor class.

4.2.3 ICE

The ICE plot reveals a distinct pattern: the original observation is drawn as a blue line, while the modified one is drawn in orange. The plot shows that the variable on the horizontal axis is related to the target variable, but that this relationship varies significantly among individuals. Typically, ICE plots draw one curve for each data point in the training dataset, which can lead to information overload for large datasets. The number of curves shown can be controlled through methods such as sampling or clustering. Figures 17, 18, and 19 show the visualizations for the CNN models on the MRI dataset.

4.3 Ablation Study

We performed an ablation study to confirm which CNN model performs best in terms of accuracy and to find out which loss function and training approach are best suited to our proposed model architecture. In general, an ablation study [32] is a series of experiments in which one of the elements of a deep-learning model is removed or replaced to assess the impact of that element on the model's performance. Removing the last convolutional block serves as a controlled experiment to assess the impact of model complexity on performance and interpretability for PFT classification. By comparing the model's accuracy and the interpretability of explanations with and without the final block, researchers can gain insights into the optimal balance between these factors for their specific task.

The ablation study was performed with respect to layer configurations and activation functions. We removed the last convolutional block from all models, froze the layers of the pre-trained VGG16, VGG19, and ResNet50 CNNs up to the specified block, and added custom classification layers. The modified models were compiled and compared with the original ones. Figure 20 and Table 7 present the results of the ablation study.

The testing results in Table 7 show that the three modified CNN models outperformed the original VGG16, VGG19, and ResNet50 in terms of precision, recall, F1 score, and accuracy.
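What removing the last convolutional block means structurally can be sketched for VGG16 with a simple accounting of layers and parameters. The layer names follow the Keras VGG16 naming scheme (`block<k>_conv<j>`, `block<k>_pool`); the cut point `block4_pool` and the small classification head are assumptions for illustration, since the exact configuration of the modified models is not given in the text.

```python
# (block number, number of 3x3 convolutions, output channels) for VGG16.
VGG16_BLOCKS = [(1, 2, 64), (2, 2, 128), (3, 3, 256), (4, 3, 512), (5, 3, 512)]


def build_layers(blocks, in_channels=3):
    """List (layer name, parameter count) for the convolutional base."""
    layers = []
    channels = in_channels
    for block, num_convs, out_channels in blocks:
        for j in range(1, num_convs + 1):
            params = 3 * 3 * channels * out_channels + out_channels
            layers.append((f"block{block}_conv{j}", params))
            channels = out_channels
        layers.append((f"block{block}_pool", 0))
    return layers


def ablate_after(layers, last_kept):
    """Keep everything up to and including `last_kept`; drop the rest."""
    names = [name for name, _ in layers]
    return layers[: names.index(last_kept) + 1]


def feature_map_side(layers, input_side=224):
    """Each 2x2 max-pool halves the spatial size."""
    pools = sum(1 for name, _ in layers if name.endswith("_pool"))
    return input_side // 2 ** pools


full = build_layers(VGG16_BLOCKS)
ablated = ablate_after(full, last_kept="block4_pool")  # assumed cut point

full_params = sum(params for _, params in full)
frozen_params = sum(params for _, params in ablated)   # kept and frozen

# Hypothetical head: global average pooling over 512 channels, then 3 outputs.
head_params = 512 * 3 + 3
```

Under these assumptions the ablated base keeps roughly half of the convolutional parameters and produces a 14 × 14 feature map instead of 7 × 7, so only the small head has to be trained.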

The use of AI models in pediatric medicine, while promising, raises ethical concerns. Bias in training data can lead to models that perform poorly for certain patient groups, potentially exacerbating healthcare disparities. Additionally, the "black box" nature of traditional AI models undermines trust, so clear, actionable explanations from XAI models are crucial for building trust with healthcare professionals, especially when making critical decisions about a child's health. Finally, robust data security measures and clear guidelines regarding patient privacy and data ownership are essential to protect vulnerable pediatric patients.

5 Conclusion

In this study, we presented pediatric posterior fossa tumor (PFT) classification with explainable artificial intelligence. We used three pre-trained CNN models, VGG16, VGG19, and ResNet-50, to classify PFT using a real MRI dataset from the Children's Cancer Hospital Egypt (CCHE). For the proposed classification and explanation work, the PFT dataset is categorized into three tumor classes: astrocytoma, ependymoma, and medulloblastoma. The implemented CNN classification models achieved accuracy rates of 95.33%, 93.25%, and 87.4%, respectively. The behavior of the models was explained using three different techniques: LIME, SHAP, and ICE. The experimental results reveal that VGG16 achieves the highest accuracy among the implemented CNN models. In future work, we plan to develop a new hybrid CNN model, guided by an ablation study, that obtains the maximum accuracy while maintaining the lowest complexity, which will be valuable in future research and real-world tumor decision support systems.

Data Availability

All the datasets and material can be provided upon request from the corresponding author.

References

Mengide, J.P., Berros, M.F., Turza, M.E., Liñares, J.M.: Posterior fossa tumors in children: An update and new concepts. Surg. Neurol. Int. (2023). https://doi.org/10.25259/SNI_43_2023

Sridhar, K., Sridhar, R., Venkatprasanna, G.: Management of posterior fossa gliomas in children. J. Pediatr. Neurosci. 6(Suppl1), S72 (2011). https://doi.org/10.4103/1817-1745.85714

Fleming, A.J., Chi, S.N.: Brain tumors in children. Curr. Probl. Pediatr. Adolesc. Health Care 42(4), 80–103 (2012)

Huang, J., Shlobin, N.A., Lam, S.K., DeCuypere, M.: Artificial intelligence applications in pediatric brain tumor imaging: a systematic review. World Neurosurgery 157, 99–105 (2022). https://doi.org/10.1016/j.wneu.2021.10.068

Quon, J.L., Bala, W., Chen, L.C., Wright, J., Kim, L.H., Han, M., Shpanskaya, K., Lee, E.H., Tong, E., Iv, M., Seekins, J., Lungren, M.P., Braun, R.M., Poussaint, T.Y., Laughlin, S., Taylor, M.D., Lober, R.M., Vogel, H., Fisher, P.G., Yeom, K.W.: Deep learning for pediatric posterior fossa tumor detection and classification: a multi-institutional study. AJNR Am. J. Neuroradiol. 41(9), 1718–1725 (2020). https://doi.org/10.3174/ajnr.A6704

Yearley, A.G., Blitz, S.E., Patel, R.V., Chan, A., Baird, L.C., Friedman, G.K., Arnaout, O., Smith, T.R., Bernstock, J.D.: Machine learning in the classification of pediatric posterior fossa tumors: a systematic review. Cancers (2022). https://doi.org/10.3390/cancers14225608

Xie, Y., Zaccagna, F., Rundo, L., Testa, C., Agati, R., Lodi, R., Tonon, C.: Convolutional neural network techniques for brain tumor classification (from 2015 to 2022): Review, challenges, and future perspectives. Diagnostics. 12(8), 1850 (2022)

Lundervold, A.S., Lundervold, A.: An overview of deep learning in medical imaging focusing on MRI. Z. Med. Phys. 29(2), 102–127 (2019)

Razzak, M.I., Naz, S., Zaib, A.: Deep learning for medical image processing: Overview, challenges and the future. Classif. BioApps Autom. Deci. Mak. (2018). https://doi.org/10.1007/978-3-319-65981-7_12

Arrieta, A.B., Díaz-Rodríguez, N., Del Ser, J., Bennetot, A., Tabik, S., Barbado, A., Herrera, F.: Explainable artificial intelligence (XAI): concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. fusion. 58, 82–115 (2020)

Das, A., Rad, P.: Opportunities and challenges in explainable artificial intelligence (XAI): a survey. arXiv preprint arXiv:2006.11371 (2020)

Korda, A.I., Andreou, C., Rogg, H.V., Avram, M., Ruef, A., Davatzikos, C., Koutsouleris, N., Borgwardt, S.: Identification of texture MRI brain abnormalities on first-episode psychosis and clinical high-risk subjects using explainable artificial intelligence. Transl. Psychiatry 12(1), 1–12 (2022). https://doi.org/10.1038/s41398-022-02242-z

Tanyel, T., Nadarajan, C., Duc, N.M., Keserci, B.: Deciphering machine learning decisions to distinguish between posterior fossa tumor types using MRI features: what do the data tell us? Cancers 15(16), 4015 (2023). https://doi.org/10.3390/cancers15164015

Alves, C.A.P.F., et al.: A diagnostic algorithm for posterior fossa tumors in children: a validation study. Am. J. Neuroradiol. 42(5), 961–968 (2021). https://doi.org/10.3174/ajnr.a7057

Artzi, M., et al.: Classification of pediatric posterior fossa tumors using convolutional neural network and tabular data. IEEE Access 9, 91966–91973 (2021). https://doi.org/10.1109/access.2021.3085771

Oliveira, H., Penteado, L., Maciel, J.L., Ferraciolli, S.F., Takahashi, M.S., Bloch, I., Junior, R.C.: Automatic segmentation of posterior fossa structures in pediatric brain MRIs. In: 2021 34th SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), pp. 121–128. IEEE (2021)

Esmaeili, M., Vettukattil, R., Banitalebi, H., Krogh, N.R., Geitung, J.T.: Explainable artificial intelligence for human-machine interaction in brain tumor localization. J. Pers. Med. 11(11), 1213 (2021)

Angelov, P.P., Soares, E.A., Jiang, R., Arnold, N.I., Atkinson, P.M.: Explainable artificial intelligence: an analytical review. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 11(5), e1424 (2021)

Pringle, C., Kilday, P., Kamaly-Asl, I., Stivaros, S.M.: The role of artificial intelligence in paediatric neuroradiology. Pediatr. Radiol. 52(11), 2159–2172 (2022). https://doi.org/10.1007/s00247-022-05322-w

Shreve, J.T., Khanani, S.A., Haddad, T.C.: Artificial intelligence in oncology: current capabilities, future opportunities, and ethical considerations. Am. Soc. Clin. Oncol. Educ. Book 42, 842–851 (2022). https://doi.org/10.1200/edbk_350652

Corchado, J.M., Ossowski, S.: Advances in explainable artificial intelligence and edge computing applications. Electronics 11(19), 3111 (2022). https://doi.org/10.3390/electronics11193111

Zhang, M., Wong, S.W., Wright, J.N., Toescu, S., Mohammadzadeh, M., Han, M., Lummus, S., Wagner, M.W., Yecies, D., Lai, H., Eghbal, A., Radmanesh, A., Nemelka, J., Stephen Harward, I., Malinzak, M., Laughlin, S., Perreault, S., Braun, K.R.M., Vossough, A., Yeom, K.W.: Machine assist for pediatric posterior fossa tumor diagnosis: a multinational study. Neurosurgery 89(5), 892–900 (2021). https://doi.org/10.1093/neuros/nyab311

Yamashita, R., Nishio, M., Do, R.K., Togashi, K.: Convolutional neural networks: an overview and application in radiology. Insights Imaging 9(4), 611–629 (2018). https://doi.org/10.1007/s13244-018-0639-9

Malek, S., Melgani, F., Bazi, Y.: One-dimensional convolutional neural networks for spectroscopic signal regression. J. Chemom. 32(5), e2977 (2018)

Alzubaidi, L., Zhang, J., Humaidi, A.J., Duan, Y., Santamaría, J., Fadhel, M.A., Farhan, L.: Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 8(1), 1–74 (2021). https://doi.org/10.1186/s40537-021-00444-8

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556 (2014)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. arXiv preprint arXiv:1512.03385 (2015)

Ribeiro, M.T., Singh, S., Guestrin, C.: "Why should I trust you?" Explaining the predictions of any classifier. arXiv preprint arXiv:1602.04938 (2016)

Ribeiro, M.T., Singh, S., Guestrin, C.: "Why should I trust you?" Explaining the predictions of any classifier. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 1135–1144 (2016)

Goldstein, A., Kapelner, A., Bleich, J., Pitkin, E.: Peeking inside the black box: visualizing statistical learning with plots of individual conditional expectation. J. Comput. Graph. Stat. 24(1), 44–65 (2015)

Grandini, M., Bagli, E., Visani, G.: Metrics for multi-class classification: an overview. arXiv preprint arXiv:2008.05756 (2020)

Meyes, R., Lu, M., de Puiseau, C.W., Meisen, T.: Ablation studies in artificial neural networks. arXiv preprint arXiv:1901.08644 (2019)

Acknowledgements

The authors would like to thank the Children’s Cancer Hospital Egypt 57357 for providing the MRI dataset of the pediatric posterior fossa tumors and making it available for this study.

Funding

Not applicable.

Author information

Contributions

ER carried out the experiments and drafted the manuscript; FM, YM, and MH participated in performing the analysis. All authors contributed to writing the study, reviewed the results, and approved the final version of the manuscript.

Ethics declarations

Conflict of Interest

The authors declare that they have no relevant financial or non-financial competing interests related to this manuscript.

Consent for Publication

Miss Eman Ragab drafted the manuscript, and Dr. Fahima A. Maghraby, Dr. Yasser M. Abd El-Latif, and Dr. Mohamed Hagag participated in all experiments. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ashry, E.R., Maghraby, F.A., El-Latif, Y.M.A. et al. Pediatric Posterior Fossa Tumors Classification and Explanation-Driven with Explainable Artificial Intelligence Models. Int J Comput Intell Syst 17, 166 (2024). https://doi.org/10.1007/s44196-024-00527-3

DOI: https://doi.org/10.1007/s44196-024-00527-3