Abstract

Ballistic missile defense systems require accurate target recognition, for which effective feature extraction is crucial. Deep convolutional neural networks (CNNs) have proven effective for recognizing high-resolution range profiles (HRRPs) of ballistic targets, as they excel at perceiving local features and extracting robust features. However, the fully connected channel structure of the standard CNN results in high computational complexity, which makes it unsuitable for deployment in real-time missile defense systems with stringent performance requirements. To reduce the computational complexity of HRRP recognition based on the standard one-dimensional CNN (1DCNN), we propose a lightweight network called group-fusion 1DCNN with layer-wise auxiliary classifiers (GFAC-1DCNN). GFAC-1DCNN employs group convolution (G-Conv) instead of standard convolution to reduce model complexity. Simply using G-Conv, however, may decrease recognition accuracy because no information flows between the feature maps generated by different groups of the G-Conv. To overcome this limitation, we introduce a linear fusion layer to combine the output features of the G-Convs, thereby improving recognition accuracy. Additionally, besides the main classifier at the deepest layer, we construct layer-wise auxiliary classifiers for features at different hierarchical levels, and the results from all classifiers are fused for comprehensive target recognition. Extensive experiments demonstrate that GFAC-1DCNN, with these simple and effective techniques, achieves higher overall testing accuracy than state-of-the-art ballistic target HRRP recognition models while significantly reducing model complexity. It also achieves a higher recall rate for warhead recognition than other methods. Based on these results, we believe this work is valuable for reducing the workload and enhancing the missile interception rate of missile defense systems.


1 Introduction

Efficient ballistic target recognition is a key issue for ballistic missile defense systems; in particular, quickly and reliably recognizing missile warheads among numerous decoys is a very challenging task. For ballistic target recognition, the infrared radiation [1,2,3,4,5], micro-Doppler [6,7,8], radar cross-section [9,10,11,12], and high-resolution range profile (HRRP) [13,14,15,16] of ballistic targets have all been considered. Among them, HRRP represents the radial distribution of target scattering centers along the radar line of sight; because it carries rich structural information about the target and is easy to obtain, it has become an effective feature for radar automatic target recognition (RATR) [13, 17].

Efficient feature extraction is a critical step in improving ballistic target recognition. In recent years, machine learning techniques have been widely applied to HRRP-based RATR because they can perform efficient automatic feature extraction in a data-driven manner; representative methods include k-nearest neighbor [18], support vector machine [19, 20], decision tree [21], and linear discriminant analysis [22, 23]. However, since these algorithms extract shallow features, they show deficiencies in robustness and generalization performance [24]. Compared with shallow machine learning techniques, deep learning (DL) can extract deep features from datasets, and representative models such as auto-encoders (AE) [25,26,27,28,29], extreme learning machines [30], long short-term memory (LSTM) [31], gated recurrent unit (GRU) [32], convolutional neural network (CNN) [33, 34], and their variants have been widely applied in HRRP recognition research.

Among the aforementioned DL-based methods, the one-dimensional CNN (1DCNN) can automatically extract deep features and has better local feature perception capability and robustness than other deep neural networks [13, 17]; consequently, it has been widely applied in HRRP target recognition. For example, Pan et al. [34] combine CNN and attention mechanisms to effectively extract envelope features and physical structural features from HRRP, achieving high robustness to small translations of test samples and to noise. Chen et al. [32] use a 1DCNN as the feature extractor to mine abundant local structural features of the data and combine it with a bidirectional GRU (Bi-GRU) network to model the sequential relationships among different regional features in HRRP. Xiang et al. [17] incorporate the global best leading artificial bee colony algorithm to automatically and heuristically search for the optimal channel number of the 1DCNN, avoiding rule-of-thumb design of 1DCNNs for HRRP recognition.

However, the standard 1DCNN is fully connected across channels: every output feature map of the previous layer is convolved with each convolution kernel of the current layer. This leads to high computational complexity [35] and hinders the rapid recognition of ballistic targets. In this paper, we study ballistic target recognition based on a lightweight 1DCNN and propose a method based on group-fusion 1DCNN with layer-wise auxiliary classifiers (GFAC-1DCNN). To address the high computational complexity of the standard 1DCNN, as shown in Fig. 2, we propose a novel lightweight convolution, namely group-fusion convolution (GF-Conv), which first splits the standard 1D convolution filters and the input feature maps equally into the largest possible number of groups; each convolution filter then convolves only the input feature maps in its own group, avoiding the computational cost of the fully connected structure. Simply replacing the standard one-dimensional convolution with group convolution (G-Conv) degrades recognition performance, mainly because no information flows between groups. Therefore, a linear fusion layer is constructed after the G-Conv to enable effective information exchange between groups. Moreover, different convolution layers extract features at different levels of abstraction, so we use features from multiple levels for comprehensive target recognition: besides the main classifier at the deepest layer, layer-wise auxiliary classifiers are built for features at different hierarchical levels, and their results are fused for the final recognition.
In addition, the traditional mini-batch stochastic gradient descent (SGD) algorithm [36] updates all parameters with equal-sized steps, which can result in poor convergence. In this paper, we apply the diffGrad algorithm [37] to optimize the training of GFAC-1DCNN; diffGrad leverages the short-term change of each parameter's gradient to adjust its step size, so that parameters whose gradients change faster receive larger steps, and vice versa.

Compared with other HRRP recognition methods based on deep learning, the proposed GFAC-1DCNN in this paper has the following advantages.

1. Compared with the standard 1DCNN, GFAC-1DCNN greatly reduces the total number of model parameters while maintaining high recognition accuracy. This is useful for reducing the workload of the missile defense system and improving recognition speed.

2. The recognition performance of 1DCNN for ballistic targets is effectively improved by using layer-wise auxiliary classifiers to fuse the outputs of different convolution modules. This indicates that integrating intermediate features from different levels with the output features can effectively enhance target recognition performance.

3. Compared with other deep neural networks, GFAC-1DCNN achieves higher overall recognition accuracy and, in particular, the highest recall rate for warhead recognition. A higher warhead recall rate reduces missed detections of warheads, which can help missile defense systems improve the interception rate.

The remainder of this paper is organized as follows. Section 2 briefly introduces ballistic target HRRP recognition and the state-of-the-art (SOTA) models for it. Section 3 presents the proposed GFAC-1DCNN for radar HRRP target recognition and the diffGrad-based training optimizer. Section 4 describes the measured data of five ballistic targets, the experimental settings, and the detailed experimental results, and Sect. 5 concludes the paper.

2 Related Works

2.1 Introduction to Ballistic Target HRRP Recognition

HRRP is an effective 1D radar signature used for target recognition. HRRP captures the projection of target scattering centers onto the radar line of sight (RLOS), using wideband waveforms. It represents the range distribution of the complex returned signals from target scatterers. With wide bandwidths, modern radars can achieve high down-range resolution, resulting in fine-grained HRRP signatures that reveal micro-Doppler and structural characteristics of targets. The amplitude component of HRRP is most useful, as the phase is sensitive to aspect angles. HRRP combines high information content and noise robustness, enabling the classification and discrimination of targets based on unique range profile features extracted from their high-fidelity scattering responses. Therefore, HRRP has emerged as an efficient 1D basis for ballistic target HRRP recognition [38].

HRRP signals are obtained by projecting the sum of echoes from target scattering points onto the RLOS using wideband radar signals. The range resolution \(\Delta r\) is inversely proportional to the radar bandwidth \(B\), i.e., \(\Delta r=c/(2B)\), where \(c\) is the speed of light; therefore, wideband radars can acquire HRRP signals with higher resolution [35]. Along the RLOS, the target is divided into several range cells of width \(\Delta r\), and the echo of each range cell is the sum of the echoes from all scatterers within that cell. The echo of the \(i\)th range cell is defined as
\[x(i)=\sum_{k=1}^{{N}_{i}}{a}_{i,k}\,{e}^{-j2\pi f{\tau }_{i,k}}=I(i)+jQ(i) \tag{1}\]
where \({N}_{i}\) is the number of target scatterers in the \(i\)th range cell, \({a}_{i,k}\) denotes the scattering intensity of the \(k\)th scatterer in the \(i\)th range cell, and \({\tau }_{i,k}\) denotes the time for the radar wave to reach the \(k\)th scatterer in the \(i\)th range cell. \(f\) is the frequency of the incident wave, \(I(i)\) and \(Q(i)\) are the real and imaginary parts of the echo, and \(j\) is the imaginary unit, i.e., \(j=\sqrt{-1}\).

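Since the phase information of HRRP is sensitive to target azimuth and elevation while the amplitude information is relatively stable, the amplitude of HRRP is used as the basis for target recognition, which is defined as
\[{\varvec{X}}=\left[\sqrt{{I}^{2}(1)+{Q}^{2}(1)},\sqrt{{I}^{2}(2)+{Q}^{2}(2)},\dots ,\sqrt{{I}^{2}(E)+{Q}^{2}(E)}\right] \tag{2}\]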
where vector \({\varvec{X}}\) denotes the HRRP signal, and \(E\) denotes the dimension of the HRRP.
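The range-cell echo and amplitude definitions above can be illustrated with a minimal pure-Python sketch. It assumes ideal point scatterers with known delays and a single carrier frequency; the function names, the `(cell, a, tau)` tuple layout, and the numbers in the example are hypothetical and do not come from the measured data used in this paper.

```python
import cmath
import math

C_LIGHT = 299_792_458.0  # speed of light in m/s


def range_resolution(bandwidth_hz):
    """Range resolution Delta r = c / (2B), in metres, for a bandwidth B in Hz."""
    return C_LIGHT / (2.0 * bandwidth_hz)


def hrrp_amplitude(scatterers, num_cells, freq_hz):
    """Amplitude HRRP of a target made of ideal point scatterers.

    scatterers: iterable of (cell, a, tau) tuples, where `cell` is a 0-based
    range-cell index, `a` the scattering intensity and `tau` the delay in s.
    """
    echoes = [0j] * num_cells
    for cell, a, tau in scatterers:
        # coherent sum I(i) + jQ(i) over all scatterers in the same cell
        echoes[cell] += a * cmath.exp(-2j * math.pi * freq_hz * tau)
    # the phase is discarded; only the magnitude of each cell is kept
    return [abs(x) for x in echoes]


# Hypothetical example: 1 GHz bandwidth and a 10 GHz carrier
print(round(range_resolution(1e9), 4))  # 0.1499
# The two scatterers in cell 0 have phases differing by pi and cancel out.
profile = hrrp_amplitude([(0, 1.0, 0.0), (0, 1.0, 0.05e-9), (2, 0.5, 1e-9)], 3, 10e9)
print([round(v, 6) for v in profile])  # [0.0, 0.0, 0.5]
```

The cancellation in cell 0 shows why the coherent sum makes HRRP amplitude sensitive to small changes in scatterer geometry, even though the phase itself is discarded.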

2.2 SOTA Models of Ballistic Target HRRP Recognition

In recent years, CNN has become a predominant technique for ballistic target HRRP recognition. Several CNN-related innovations in elaborated architecture design, robust HRRP representation learning, and advanced training methodology have pushed the state-of-the-art in this field.

Elaborated Architecture Design Many sophisticated CNN architectures have been proposed for efficient and accurate ballistic target HRRP recognition. The stacked CNN-BiRNN [34] architecture with attention mechanism enables the model to exploit the envelope, local structure, and translation invariance characteristics of HRRP for robust recognition. By replacing regular convolutions with multi-scale G-Convs and pointwise convolutions, MSGF-1DCNN [35] can reduce parameters while capturing target details at multiple scales. This improves feature learning and reduces complexity simultaneously. DSFCNN [38] utilizes depthwise and pointwise convolutions to reduce computational complexity compared to standard CNNs while improving recognition accuracy. TACNN [32] uses Bi-GRU to generate attention weights so that it can focus on HRRP target regions and filter out backgrounds to improve recognition performance. Introducing channel attention modules enables 1DCNN to recalibrate channel features for improved HRRP feature extraction [17]. Similar to MSGF-1DCNN, our proposed GFAC-1DCNN also employs G-Conv. However, the two approaches apply G-Conv in different ways to achieve complementary goals of efficiency and representation learning. GFAC-1DCNN focuses on optimizing efficiency by maximizing the number of groups, whereas MSGF-1DCNN focuses on enhancing feature extraction using multiple G-Conv scales.

Robust HRRP Representation Learning Robust feature extraction from HRRP is another important area of focus. The two-stream fusion network architecture with separate VAE and CNN branches [33] extracts and fuses probabilistic and visual features from HRRP and SAR data, respectively, for enhanced SAR target recognition. The AE-based network architecture enables 1D ELM-LRF-AE [30] to learn hierarchical local receptive field representations of HRRP in an unsupervised manner, allowing more efficient and robust feature extraction. Converting HRRP to binary images enables DCNNs to extract visual patterns and spatial features from the images, enhancing local feature learning compared with using 1D HRRP directly [14]. In contrast to the above methods, which aim to enhance feature extraction from HRRP, our proposed GFAC-1DCNN focuses on fusion. Specifically, GFAC-1DCNN introduces a linear fusion layer to combine the output features of group convolutions, enabling information aggregation across groups. Furthermore, layer-wise auxiliary classifiers perform decision-level fusion using the predictions of multiple network layers. This combination of cross-group feature fusion and multi-level decision fusion provides a more comprehensive target representation than improving feature learning at individual layers alone, and it complements existing HRRP recognition approaches that augment single-layer feature learning.

Advanced Training Methodology Advanced CNN training methodologies have also emerged. The snapshot ensemble technique [39] allows 1DCNN to improve HRRP recognition performance without additional training costs. AdamW optimization enables faster convergence for the snapshot ensemble training process and taking models at different minima promotes diversity. The cost-sensitive pruning technique [13] based on the artificial bee colony algorithm can automatically find a compact 1DCNN architecture optimized for lower misclassification cost and complexity. The automated lottery ticket hypothesis-based neural architecture search technique can significantly reduce 1DCNN's complexity and promote overall recognition accuracy [17]. SFCD loss [38] optimizes sample fitting and between-class separability, enhancing 1DCNN recognition performance with limited training data. The cost-sensitive cross-entropy loss [13] focuses 1DCNN's training on minimizing the cost of misclassifying expensive categories. Unlike the advanced training methodologies mentioned previously, this paper applies the diffGrad algorithm [37] to optimize GFAC-1DCNN training.

While the above-mentioned methods have advanced the SOTA in specific tasks, most still employ fully connected convolutional architectures, whose high complexity makes them unsuitable for real-time applications. Our proposed GFAC-1DCNN introduces GF-Conv to reduce complexity while improving accuracy compared with previous HRRP recognition models, and its layer-wise auxiliary classifiers for hierarchical feature fusion further enhance recognition performance.

3 Ballistic Target HRRP Recognition Based on GFAC-1DCNN

The ballistic target recognition method based on GFAC-1DCNN is shown in Fig. 1, which includes the training phase and the testing phase. In the training phase, the one-dimensional HRRP samples of ballistic targets are fed into the GFAC-1DCNN network, the output of the Softmax classifier with the corresponding labels is fed into the cross-entropy loss function, and the model parameters of the GFAC-1DCNN are updated using the mini-batch SGD algorithm [36]. In the testing phase, the HRRP samples are fed into the trained GFAC-1DCNN, and the prediction results of the samples are obtained by the Softmax classifier by maximizing the posterior probability.

3.1 Overall Structure of GFAC-1DCNN

The overall structure of GFAC-1DCNN is shown in Fig. 2. GFAC-1DCNN consists of an input layer, one-dimensional convolution (1D-Conv) layers, batch normalization (BN) layers, non-linear activation layers, global max-pooling layers, flatten layers, and fully connected layers. A 1D-Conv layer, a BN layer, and a non-linear activation layer form a convolution module, and multiple stacked convolution modules are mainly used for feature extraction. A global max-pooling layer, a flatten layer, and a fully connected layer form a classifier, and a classifier is connected after each convolution module. As shown in Fig. 2, the last classifier is the main classifier, while the classifiers attached to the intermediate convolution modules serve as auxiliary classifiers.

3.2 Convolution Module

In this paper, the specific settings and roles of the convolution module are as follows.

1D-Conv Layer The 1D-Conv layer plays a pivotal role in the 1DCNN architecture as it utilizes fixed-size windows and 1D-Conv filters to extract features from input data. This layer incorporates a parameter-sharing mechanism, where each channel's features undergo convolution operations with the same 1D-Conv filters in a sliding manner. Such an approach effectively mitigates the significant increase in the number of parameters as the input data dimension expands. By stacking multiple 1D-Conv layers, local features are extracted progressively, allowing earlier layers to capture more finely grained details while later layers extract more global features. In our proposed method (as shown in Fig. 2), we replace the standard 1D-Conv with a lightweight group-fusion 1D-Conv (GF-Conv) within the 1D-Conv layer. This modification aims to concurrently reduce model complexity and enhance feature extraction.

BN Layer The BN layer is applied after the 1D-Conv layer to normalize the features over each mini-batch. This normalization contributes to faster convergence and improved training stability of the DCNN. The BN layer normalizes the output of the 1D-Conv layer to a distribution with a mean of 0 and a standard deviation of 1. Specifically, let the output of the 1D-Conv layer be denoted as \({\varvec{x}}=[{{\varvec{x}}}^{(1)},{{\varvec{x}}}^{(2)},...,{{\varvec{x}}}^{(d)}]\), where \(d\) represents the dimension of \({\varvec{x}}\). Each dimension of \({\varvec{x}}\) is then normalized to
\[{\widehat{{\varvec{x}}}}^{(k)}=\frac{{{\varvec{x}}}^{(k)}-{\mathbb{E}}({{\varvec{x}}}^{(k)})}{\sqrt{{\text{Var}}({{\varvec{x}}}^{(k)})+\varepsilon }} \tag{3}\]
where \({\mathbb{E}}(\cdot )\) and \({\text{Var}}(\cdot )\) denote the mean and variance computed over each mini-batch, respectively, and \(\varepsilon\) is a small positive constant that prevents the denominator from being 0. The normalized values are then scaled and shifted by the learnable parameters \({\gamma }^{(k)}\) and \({\beta }^{(k)}\):
\[{{\varvec{y}}}^{(k)}={\gamma }^{(k)}{\widehat{{\varvec{x}}}}^{(k)}+{\beta }^{(k)} \tag{4}\]
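A minimal sketch of the training-time BN computation for one feature dimension follows: normalize with the mini-batch mean and variance, then scale and shift. It assumes the biased (divide-by-\(n\)) variance and \(\varepsilon = 10^{-5}\); the function name and default values are illustrative, and the running statistics that a framework would use at test time are omitted.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Training-mode BN of one feature dimension x^(k) over a mini-batch.

    Normalizes with the mini-batch mean and (biased) variance, then scales by
    gamma and shifts by beta (both learnable in a real network).
    """
    n = len(batch)
    mean = sum(batch) / n
    var = sum((v - mean) ** 2 for v in batch) / n
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in batch]


out = batch_norm([1.0, 2.0, 3.0, 4.0])
print([round(v, 3) for v in out])  # [-1.342, -0.447, 0.447, 1.342]
```

With the default \(\gamma = 1\) and \(\beta = 0\) the output has zero mean and (almost exactly, because of \(\varepsilon\)) unit variance; learned values of \(\gamma\) and \(\beta\) let the network undo the normalization where that helps.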

Non-linear Activation Layer To enhance the model's ability to fit non-linear features, neural networks commonly incorporate non-linear activation functions. In this study, we use the Mish function [17] as the non-linear activation function, which is given by Eq. (5):
\[\text{Mish}(x)=x\cdot \tanh \left(\ln \left(1+{e}^{x}\right)\right) \tag{5}\]
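For reference, Mish can be evaluated with the following sketch. Writing the softplus term \(\ln(1+e^{x})\) as \(\max(x,0)+\ln(1+e^{-|x|})\) is an implementation choice assumed here to avoid overflow for large inputs; it is mathematically identical to the direct formula.

```python
import math


def softplus(x):
    """ln(1 + e^x), rewritten so that e^x never overflows for large x."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x):
    """Mish activation: x * tanh(softplus(x))."""
    return x * math.tanh(softplus(x))


print([round(mish(v), 4) for v in (-2.0, 0.0, 1.0)])  # [-0.2525, 0.0, 0.8651]
```

Unlike ReLU, Mish is smooth and lets small negative values through, which is one of the reasons it is used as an alternative activation.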

3.3 Group-Fusion 1D-Conv Layer

Let the total number of convolution modules be \(\mathcal{L}\). The multi-channel input of the \(l\)th (\(l\in \{0,1,\dots ,\mathcal{L}-1\}\)) standard 1D-Conv layer is represented by the tensor \({{\varvec{X}}}^{(l)}\in {\mathbb{R}}^{{D}^{(l)}\times {C}^{(l)}}\), where each channel corresponds to a 1D feature map, and \({D}^{(l)}\) and \({C}^{(l)}\) denote the length of a single 1D feature map and the number of channels, respectively. The output feature map of the 1D-Conv layer is denoted by the tensor \({{\varvec{X}}}^{(l+1)}\in {\mathbb{R}}^{{D}^{(l+1)}\times {C}^{(l+1)}}\), where \({D}^{(l+1)}\) and \({C}^{(l+1)}\) likewise denote its length and number of channels. Let \({H}^{(l)}\) denote the kernel size of the \(l\)th standard 1D-Conv layer, and let the weight tensor \({{\varvec{W}}}^{(l)}\in {\mathbb{R}}^{{H}^{(l)}\times {C}^{(l)}\times {C}^{(l+1)}}\) represent the convolution filters. The \(l\)th standard 1D-Conv convolves the multi-channel input \({{\varvec{X}}}^{(l)}\) with all the 1D-Conv filters \({{\varvec{W}}}^{(l)}\) to yield an output \({{\varvec{X}}}^{(l+1)}\) with \({C}^{(l+1)}\) channels. Specifically, each convolution filter is convolved with all the input feature maps, so that the 1D feature map generated by the \(k\)th (\(k\in \{1,2,\dots ,{C}^{(l+1)}\}\)) 1D-Conv filter \({{\varvec{W}}}_{k}^{(l)}\) and all input feature maps \({{\varvec{X}}}^{(l)}\) is
\[{{\varvec{X}}}_{k}^{(l+1)}={{\varvec{W}}}_{k}^{(l)}*{{\varvec{X}}}^{(l)}+{{\varvec{b}}}_{k}^{(l)} \tag{6}\]
where the operator \(*\) denotes the convolution operation, \({{\varvec{b}}}^{(l)}\in {\mathbb{R}}^{{C}^{(l+1)}}\), and \({{\varvec{b}}}_{k}^{(l)}\) denotes the bias parameter of the \(k\)th convolution filter. The input feature maps of each layer are padded with zeros: let the number of zeros padded on each side of an input feature map in the \(l\)th layer be \({P}^{(l)}\); the length of the input 1D feature map then becomes \({D}^{(l)}+2{P}^{(l)}\), so that \({{\varvec{X}}}^{(l)}\in {\mathbb{R}}^{({D}^{(l)}+2{P}^{(l)})\times {C}^{(l)}}\). The convolution is then performed with a stride of \({S}^{(l)}\), and the value of the \(k\)th output feature map at position \(i\) (\(i\in \{1,2,...,{D}^{(l+1)}\}\)) is
\[{X}_{i,k}^{(l+1)}=\sum_{c=1}^{{C}^{(l)}}\sum_{h=1}^{{H}^{(l)}}{W}_{h,c,k}^{(l)}\,{X}_{(i-1){S}^{(l)}+h,\,c}^{(l)}+{b}_{k}^{(l)} \tag{7}\]
where \(i\)∈\(\{1,2,...,{D}^{(l+1)}\}\), \(k\)∈\(\{1,2,...,{C}^{(l+1)}\}\). Therefore, the length of the output feature map is
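\[{D}^{(l+1)}=\left\lfloor \frac{{D}^{(l)}+2{P}^{(l)}-{H}^{(l)}}{{S}^{(l)}}\right\rfloor +1 \tag{8}\]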
where \(\lfloor \cdot \rfloor \) indicates rounding-down operation.
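The index arithmetic of Eqs. (7) and (8), zero padding on both sides, a stride, and a sum over every input channel, can be traced with the pure-Python sketch below. The nested-list layout (positions as rows, channels as columns; kernel indexed as \(H \times C_{\text{in}} \times C_{\text{out}}\)) and the function names are assumptions made for illustration; like most CNN libraries, the sketch computes a cross-correlation.

```python
def output_length(d_in, h, p, s):
    """Output length floor((D + 2P - H) / S) + 1 of a 1D convolution."""
    return (d_in + 2 * p - h) // s + 1


def conv1d(x, w, b, p=0, s=1):
    """Standard multi-channel 1D convolution (cross-correlation, as in CNNs).

    x: D x C_in nested list; w: H x C_in x C_out; b: C_out biases.
    Every output channel k sums over *all* C_in input channels.
    """
    d_in, c_in = len(x), len(x[0])
    h, c_out = len(w), len(w[0][0])
    pad = [[0.0] * c_in for _ in range(p)]
    xp = pad + [list(row) for row in x] + pad  # zero padding (rows are only read)
    d_out = output_length(d_in, h, p, s)
    y = []
    for i in range(d_out):
        row = []
        for k in range(c_out):
            acc = b[k]
            for j in range(h):          # kernel tap
                for c in range(c_in):   # all input channels: the costly part
                    acc += w[j][c][k] * xp[i * s + j][c]
            row.append(acc)
        y.append(row)
    return y


# One input channel, a 3-tap difference kernel, P = 1, S = 1
x = [[1.0], [2.0], [3.0], [4.0]]
w = [[[1.0]], [[0.0]], [[-1.0]]]
print(conv1d(x, w, [0.0], p=1, s=1))  # [[-2.0], [-2.0], [-2.0], [3.0]]
```

The loop over all `c_in` channels for every output channel is the fully connected channel structure that makes the standard 1D-Conv expensive.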

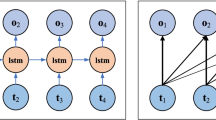

As we can see from Eq. (7) and Fig. 3a, the standard 1D-Conv layer, in which each convolution filter is computed with all the input feature maps, resembles a fully connected model, so the standard 1D-Conv is computationally intensive. To reduce the computational complexity of the standard 1D-Conv, we first use group convolution (G-Conv) in place of the standard 1D-Conv. Both the input feature map \({{\varvec{X}}}^{(l)}\) and the weight tensor \({{\varvec{W}}}^{(l)}\) are divided into \({\Omega }^{(l)}\) groups along the channel dimension, in channel order, so \({\Omega }^{(l)}\) must divide both the number of input channels \({C}^{(l)}\) and the number of output channels \({C}^{(l+1)}\). Let the input feature map, weight tensor and output feature map of the \(g\)th (\(g\in \{1,2,...,{\Omega }^{(l)}\}\)) group be \({\widetilde{{\varvec{X}}}}^{(g,l)}\), \({\widetilde{{\varvec{W}}}}^{(g,l)}\) and \({\widetilde{{\varvec{X}}}}^{(g,l+ 1 )}\), respectively; then \({\widetilde{{\varvec{X}}}}^{(g,l)}\in {\mathbb{R}}^{{D}^{(l)}\times ({C}^{(l)}/{\Omega }^{(l)})}\), \({\widetilde{{\varvec{W}}}}^{(g,l)}\in {\mathbb{R}}^{{H}^{(l)}\times ({C}^{(l)}/{\Omega }^{(l)})\times ({C}^{(l+ 1 )}/{\Omega }^{(l)})}\), and \({\widetilde{{\varvec{X}}}}^{(g,l+ 1 )}\in {\mathbb{R}}^{{D}^{(l+ 1 )}\times ({C}^{(l+ 1 )}/{\Omega }^{(l)})}\). Keeping the stride and the number of padded zeros of each G-Conv the same as those of the standard 1D-Conv, the output feature map of the G-Conv is

where \(\mathit{mod}(k,{\Omega }^{(l)})\) denotes the remainder of \(k\) divided by \({\Omega }^{(l)}\), \(ceil(\cdot )\) denotes rounding-up operation, and \({\widetilde{{\varvec{X}}}}^{(l+ 1 )}\) denotes all feature maps generated by G-Conv operation, then \({\widetilde{{\varvec{X}}}}^{(l+ 1 )}\)∈\({\mathbb{R}}^{{D}^{(l+ 1 )}\times {C}^{(l+ 1 )}}\) and \({D}^{(l+1)}\) can still be calculated via Eq. (8).
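A group convolution can be sketched by restricting the channel sum of the standard convolution to the input channels of one group. This pure-Python sketch assumes that channels are split into contiguous blocks; the paper's own index mapping, written with \(mod\) and \(ceil\), may order the channels differently. The function name and nested-list layout are hypothetical, and with a single group the function reduces to the standard convolution.

```python
def group_conv1d(x, w_groups, b, p=0, s=1):
    """Group 1D convolution with G = len(w_groups) groups.

    x: D x C_in nested list; w_groups[g]: H x (C_in/G) x (C_out/G) kernel of
    group g; b: C_out biases. Channels are split into contiguous blocks (an
    assumption), and the filters of group g only see the inputs of group g.
    """
    groups = len(w_groups)
    d_in, c_in = len(x), len(x[0])
    if c_in % groups:
        raise ValueError("the number of groups must divide C_in")
    h = len(w_groups[0])
    cg_in, cg_out = c_in // groups, len(w_groups[0][0][0])
    pad = [[0.0] * c_in for _ in range(p)]
    xp = pad + [list(row) for row in x] + pad  # zero padding (rows are only read)
    d_out = (d_in + 2 * p - h) // s + 1
    y = [[0.0] * (groups * cg_out) for _ in range(d_out)]
    for g in range(groups):
        for i in range(d_out):
            for k in range(cg_out):
                acc = b[g * cg_out + k]
                for j in range(h):
                    for c in range(cg_in):  # only the C_in / G channels of group g
                        acc += w_groups[g][j][c][k] * xp[i * s + j][g * cg_in + c]
                y[i][g * cg_out + k] = acc
    return y


# Two groups, kernel size 1: output channel 0 only sees input channel 0, etc.
x = [[1.0, 10.0], [2.0, 20.0]]
print(group_conv1d(x, [[[[2.0]]], [[[3.0]]]], [0.0, 0.0]))  # [[2.0, 30.0], [4.0, 60.0]]
```

Each group holds \(H\cdot (C_{\text{in}}/\Omega)\cdot (C_{\text{out}}/\Omega)\) weights, so the layer stores \(H C_{\text{in}} C_{\text{out}}/\Omega\) weights in total, a factor of \(\Omega\) fewer than the standard convolution; the example also shows that no output channel receives information from the other group.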

As shown in Fig. 3, in the G-Conv the convolution operation occurs only within each group, avoiding the fully connected structure; the standard 1D-Conv is the special case of the G-Conv with \(\Omega \) = 1. However, because convolution occurs only within each group, feature information is exchanged within a group but not between groups. Therefore, to promote information exchange between groups, the G-Conv output features are linearly fused by a pointwise convolution (PW-Conv) placed after the G-Conv. The G-Conv followed by PW-Conv feature fusion is hereinafter termed group-fusion convolution (GF-Conv).

Using the tensor \({{\varvec{W}}}^{{\prime}(l)}\in {\mathbb{R}}^{1\times {C}^{(l+1)}\times {C}^{(l+1)}}\) and \({{\varvec{b}}}^{{\prime}(l)}\in {\mathbb{R}}^{{C}^{(l+1)}}\) to denote the weight and bias parameters of the PW-Conv filters, respectively, with the number of padded zeros \({P}^{{\prime}(l)}=0\) and stride \({S}^{{\prime}(l)}=1\), the feature fusion process using PW-Conv is
\[{{\varvec{X}}}_{k}^{{\prime}(l+1)}={{\varvec{W}}}_{k}^{{\prime}(l)}*{\widetilde{{\varvec{X}}}}^{(l+1)}+{{\varvec{b}}}_{k}^{{\prime}(l)},\quad k\in \{1,2,\dots ,{C}^{(l+1)}\} \tag{10}\]
where \({{\varvec{X}}}^{{\prime}(l+ 1 )}\in {\mathbb{R}}^{{D}^{(l+ 1 )}\times {C}^{(l+ 1 )}}\) is the final output feature map of the GF-Conv layer. Since \({{\varvec{X}}}^{{\prime}(l+ 1 )}\) has the same dimensions as the output of the standard 1D-Conv given by Eqs. (7) and (8), GF-Conv can directly replace the standard 1D-Conv.
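The fusion step of Eq. (10) is a convolution with kernel size 1, i.e., the same linear map across channels applied independently at every position. The self-contained sketch below (hypothetical function name; positions as rows, channels as columns) shows how it mixes channels that a G-Conv produced in different groups.

```python
def pointwise_conv1d(x, w, b):
    """Pointwise convolution (kernel size 1, stride 1, no padding).

    x: D x C nested list; w: C x C_out (the 1 x C x C_out kernel with its
    unit axis dropped); b: C_out biases. Each output channel is a linear
    combination of *all* input channels at the same position.
    """
    c_out = len(w[0])
    return [
        [b[k] + sum(row[c] * w[c][k] for c in range(len(row))) for k in range(c_out)]
        for row in x
    ]


# G-Conv output whose channel 0 came from group 1 and channel 1 from group 2
g_out = [[2.0, 30.0], [4.0, 60.0]]
fused = pointwise_conv1d(g_out, [[1.0, 1.0], [1.0, -1.0]], [0.0, 0.0])
print(fused)  # [[32.0, -28.0], [64.0, -56.0]]
```

After fusion, every output channel depends on both groups, while the sequence length is unchanged because the kernel covers a single position.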

3.4 Complexity Analysis of GF-Conv

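The number of parameters is an important metric of model complexity: the more parameters there are, the more computation is required in both the training and testing phases. To compare the model complexity of the proposed GF-Conv with that of the standard 1D-Conv, we count the parameters of the same layer in the model structure shown in Fig. 2. For the standard CNN, the total parameter number of its \(l\)th layer is
\[{N}_{\text{std}}^{(l)}={H}^{(l)}{C}^{(l)}{C}^{(l+1)}+{C}^{(l+1)} \tag{11}\]

For GF-1DCNN, the total parameters of the \(l\)th GF-Conv layer consist of two parts, i.e., the G-Conv parameters and the PW-Conv parameters. Thus, the total parameter number of the GF-Conv layer is
\[{N}_{\text{GF}}^{(l)}=\underbrace{{\Omega }^{(l)}{H}^{(l)}\frac{{C}^{(l)}}{{\Omega }^{(l)}}\frac{{C}^{(l+1)}}{{\Omega }^{(l)}}+{C}^{(l+1)}}_{\text{G-Conv}}+\underbrace{{C}^{(l+1)}{C}^{(l+1)}+{C}^{(l+1)}}_{\text{PW-Conv}}=\frac{{H}^{(l)}{C}^{(l)}{C}^{(l+1)}}{{\Omega }^{(l)}}+{\left({C}^{(l+1)}\right)}^{2}+2{C}^{(l+1)} \tag{12}\]

From Eqs. (11) and (12), we obtain
\[\frac{{N}_{\text{GF}}^{(l)}}{{N}_{\text{std}}^{(l)}}=\frac{{H}^{(l)}{C}^{(l)}/{\Omega }^{(l)}+{C}^{(l+1)}+2}{{H}^{(l)}{C}^{(l)}+1}\approx \frac{1}{{\Omega }^{(l)}}+\frac{1}{{H}^{(l)}}\quad \left(\text{for }{C}^{(l)}={C}^{(l+1)}\text{, neglecting the bias terms}\right) \tag{13}\]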
where the convolution kernel size \({H}^{(l)}\) is generally a positive odd number and the group number \({\Omega }^{(l)}\) is a positive integer that divides both \({C}^{(l)}\) and \({C}^{(l+1)}\). When \(\Omega \) = 1, GF-1DCNN has about \(1+1/{H}^{(l)}\) times as many parameters as the standard 1DCNN, i.e., a fraction of about \(1/{H}^{(l)}\) more. As \({\Omega }^{(l)}\) increases, and in particular when \({H}^{(l)}\ll {\Omega }^{(l)}\) so that \({H}^{(l)}/{\Omega }^{(l)}\approx 0\), the standard 1DCNN has about \({H}^{(l)}\) times as many parameters as GF-1DCNN. Hence, with other conditions unchanged, the larger the group number of GF-1DCNN, the lower the complexity of the model. The group number is therefore maximized, i.e., set to the greatest common divisor of \({C}^{(l)}\) and \({C}^{(l+1)}\), for the largest computation reduction.
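The parameter comparison can be checked numerically. The sketch below assumes that the counts of Eqs. (11) and (12) include the bias vectors of both the G-Conv and the PW-Conv; the layer sizes in the example (\(H=3\), 64 input and output channels) are hypothetical. It also computes the largest admissible group number, the greatest common divisor of the channel counts, since \({\Omega }^{(l)}\) must divide both.

```python
import math


def params_standard(h, c_in, c_out):
    """Weights (H * C_in * C_out) plus C_out biases of a standard 1D-Conv layer."""
    return h * c_in * c_out + c_out


def params_gf(h, c_in, c_out, groups):
    """G-Conv weights and biases plus PW-Conv weights (C_out * C_out) and biases."""
    if c_in % groups or c_out % groups:
        raise ValueError("groups must divide both C_in and C_out")
    g_conv = groups * h * (c_in // groups) * (c_out // groups) + c_out
    pw_conv = c_out * c_out + c_out
    return g_conv + pw_conv


def max_groups(c_in, c_out):
    """Largest admissible group number: gcd(C_in, C_out)."""
    return math.gcd(c_in, c_out)


for g in (1, 4, max_groups(64, 64)):
    ratio = params_standard(3, 64, 64) / params_gf(3, 64, 64, g)
    print(g, params_gf(3, 64, 64, g), round(ratio, 2))
# prints: 1 16512 0.75 / 4 7296 1.69 / 64 4416 2.8
```

With one group, the GF-Conv layer holds about \(1+1/H\) times as many parameters as the standard layer (16512 vs. 12352); at the maximum group number the standard layer holds about 2.8 times as many, approaching \(H=3\).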

3.5 Fusion of Layer-Wise Auxiliary Classifiers

Figure 2 illustrates that each convolution module captures features at different depths, signifying varying levels of abstraction for the target. Based on this observation, we propose utilizing features from multiple levels for target recognition. In addition to the main classifier positioned at the deepest layer, layer-wise auxiliary classifiers are established to leverage hierarchical features, and their results are combined to achieve comprehensive recognition. The components of each classifier are outlined as follows.

Global Max-Pooling Layer Global pooling reduces each channel of a 1D feature map of length \({D}^{(l)}\) to a single value, decreasing the number of parameters required by the subsequent fully connected layer. There are generally two kinds of pooling operations, i.e., max-pooling and average-pooling. Here, we use global max-pooling, which extracts the maximum value of each feature map. Eq. (10) gives the output feature map of the GF-Conv, \({{\varvec{X}}}^{{\prime}(l+ 1 )}\in {\mathbb{R}}^{{D}^{(l+ 1 )}\times {C}^{(l+ 1 )}}\), to which we apply BN and non-linear activation, i.e.,
\[{{\varvec{X}}}^{(l+1)}=\text{Mish}\left({\text{BN}}\left({{\varvec{X}}}^{{\prime}(l+1)}\right)\right) \tag{14}\]
where \({\text{BN}}(\cdot )\) denotes the batch normalization operation and \(\text{Mish}(\cdot )\) the Mish activation. The global max-pooling operation is then described by Eq. (15):
\[{X}_{1,k}^{*(l+1)}=\underset{1\le i\le {D}^{(l+1)}}{\max }\,{X}_{i,k}^{(l+1)},\quad k\in \{1,2,\dots ,{C}^{(l+1)}\} \tag{15}\]
where \({{\varvec{X}}}^{*(l+1)}\)∈\({\mathbb{R}}^{1\times {C}^{(l+ 1 )}}\) denotes the output of the global max-pooling layer.

Flatten Layer and Softmax Classifier The main purpose of the flatten layer is to restructure the output of the global max-pooling layer \({{\varvec{X}}}^{*(l+1)}\) into a one-dimensional vector \({{\varvec{u}}}^{(l+1)}\)∈\({\mathbb{R}}^{{C^{{(l{ + 1})}} }}\), namely
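\[{{\varvec{u}}}^{(l+1)}=\text{Flatten}\left({{\varvec{X}}}^{*(l+1)}\right)={\left[{X}_{1,1}^{*(l+1)},{X}_{1,2}^{*(l+1)},\dots ,{X}_{1,{C}^{(l+1)}}^{*(l+1)}\right]}^{T} \tag{16}\]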
where \({{\varvec{u}}}^{(l+1)}\) contains the extracted features in the \(l\)th layer that are then passed on to the fully connected layer.
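Eq. (15) and the flatten and fully connected steps that follow can be sketched as below; the function names, feature values, and classifier weights are hypothetical. Because pooling removes the length axis, the classifier input has \({C}^{(l+1)}\) entries regardless of \({D}^{(l+1)}\), which is what allows a small classifier to be attached after every convolution module.

```python
def global_max_pool_flatten(x):
    """Global max-pooling over the length axis, then flattening.

    x: D x C feature map (after BN and activation). Returns the length-C
    vector whose c-th entry is the maximum of channel c over all D positions.
    """
    return [max(column) for column in zip(*x)]


def fully_connected(u, w, b):
    """Logits z_q = u^T W_q + b_q for a C x Q weight matrix w and Q biases b."""
    return [b[q] + sum(u[c] * w[c][q] for c in range(len(u))) for q in range(len(b))]


feat = [[0.1, -1.0], [0.7, 0.2], [0.3, 0.9]]  # D = 3 positions, C = 2 channels
u = global_max_pool_flatten(feat)
print(u)  # [0.7, 0.9]
z = fully_connected(u, [[1.0, 0.0, -1.0], [0.0, 1.0, 1.0]], [0.0, 0.0, 0.0])  # Q = 3 logits
```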

The fully connected layer acts as a Softmax classifier, which is essentially a single-layer neural network, whose number of neurons is equal to \(\mathcal{Q}\), the total number of target classes to be classified. It utilizes the Softmax function to activate and classify based on the maximization of posterior probabilities.

Let the parameters of the Softmax classifier after the \(l\)th convolution module be \({\widehat{{\boldsymbol{\theta}}}}^{(l+1)}=\{{\widehat{{\varvec{W}}}}^{(l+1)},{\widehat{{\varvec{b}}}}^{(l+1)}\}\), where \({\widehat{{\varvec{W}}}}^{(l+1)}\)∈\({\mathbb{R}}^{{C}^{(l+ 1 )}\times \mathcal{Q}}\), \({\widehat{{\varvec{b}}}}^{(l+1)}\)∈\({\mathbb{R}}^{\mathcal{Q}}\). The output of the \(l\)th fully connected layer is
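\[{{\varvec{z}}}^{(l+1)}=\left[{{\varvec{z}}}_{1}^{(l+1)},{{\varvec{z}}}_{2}^{(l+1)},\dots ,{{\varvec{z}}}_{\mathcal{Q}}^{(l+1)}\right] \tag{17}\]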
where \({{\varvec{z}}}_{q}^{(l+1)}={{\varvec{u}}}^{(l+1)T}\times {\widehat{{\varvec{W}}}}_{q}^{(l+1)}+{\widehat{{\varvec{b}}}}_{q}^{(l+1)}\), \(q\in \{1,2,\dots ,\mathcal{Q}\}\).

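To fuse the results of multiple classifiers, we first sum the outputs of the fully connected layers at all depths, which gives
\[{\varvec{z}}=\sum_{l=0}^{\mathcal{L}-1}{{\varvec{z}}}^{(l+1)} \tag{18}\]

Then, the output of the \(q\)th neuron of the fused Softmax classifier represents the probability of predicting the sample as the \(q\)th class, which is
\[P(y=q|{\varvec{x}})=\frac{\exp ({{\varvec{z}}}_{q})}{\sum_{{q}^{\prime}=1}^{\mathcal{Q}}\exp ({{\varvec{z}}}_{{q}^{\prime}})} \tag{19}\]
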
The prediction process of the sample \({{\varvec{x}}}^{(k)}\) is to maximize the posterior probability, namely,
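\[\widetilde{y}=\underset{q\in \{1,2,\dots ,\mathcal{Q}\}}{\arg \max }\,P(y=q|{{\varvec{x}}}^{(k)}) \tag{20}\]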
where \(\widetilde{y}\) is the predicted label.
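The decision-level fusion (sum the fully connected outputs of all classifiers, apply Softmax, and take the class with the maximum posterior probability) is sketched below with made-up logits. The example is chosen so that the main classifier alone would predict class 1 while the fused decision is class 2, illustrating how the auxiliary classifiers can change the outcome.

```python
import math


def softmax(z):
    """Numerically stable softmax."""
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def fused_prediction(logits_per_classifier):
    """Sum the logits of all classifiers (auxiliary and main), apply softmax,
    and return the probabilities and the 1-based label of the maximum."""
    q = len(logits_per_classifier[0])
    z = [sum(logits[j] for logits in logits_per_classifier) for j in range(q)]
    p = softmax(z)
    label = max(range(q), key=lambda j: p[j]) + 1  # classes are numbered 1..Q
    return p, label


# Hypothetical logits from two auxiliary classifiers and the main classifier (Q = 3)
aux1, aux2, main = [0.0, 3.0, 0.0], [1.0, 0.0, 0.0], [1.0, 0.0, 0.0]
probs, label = fused_prediction([aux1, aux2, main])
print(label)  # 2: the summed logits are [2.0, 3.0, 0.0]
```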

3.6 Model Training

The training of the network is the process of updating the network parameters using the training samples, which first requires the construction of a loss function indicating the difference between the output of the neural network and the ground-truth labels. Let \({\mathbb{D}}^{\text{train}}\) and \({\mathbb{D}}^{\text{test}}\) denote the training dataset with \({\mathcal{N}}^{\text{train}}\) samples and the testing dataset with \({\mathcal{N}}^{\text{test}}\) samples, and the tuple (\({\varvec{x}}\), \(y\))∈\({\mathbb{D}}^{\text{train}}\) denotes the input features of a sample in the training dataset and its corresponding ground-truth label, respectively, where \(y\)∈{\(1,2,...,\mathcal{Q}\)}, and \(\mathcal{Q}\) denotes the total number of target classes, then the cross-entropy loss function over \({\mathbb{D}}^{\text{train}}\) can be constructed as
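\[J({\boldsymbol{\theta}})=-\frac{1}{{\mathcal{N}}^{\text{train}}}\sum_{({\varvec{x}},y)\in {\mathbb{D}}^{\text{train}}}\sum_{q=1}^{\mathcal{Q}}\mathbb{I}(y=q)\log P(y=q|{\varvec{x}};{\boldsymbol{\theta}}) \tag{21}\]
where \({\boldsymbol{\theta}}\) denotes all trainable parameters, \(\mathbb{I}(\cdot )\) is the indicator function, and \(P(y=q|{\varvec{x}};{\boldsymbol{\theta}})\) is the \(q\)th output of the Softmax classifier, indicating the probability that the input feature \({\varvec{x}}\) is predicted as the \(q\)th class.

Eq. (21) is called the empirical risk loss function, and training the neural network essentially amounts to minimizing it, i.e.,
\[{{\boldsymbol{\theta}}}^{*}=\underset{{\boldsymbol{\theta}}}{\arg \min }\,J({\boldsymbol{\theta}}) \tag{22}\]
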
When \({\mathcal{N}}^{\text{train}}\) is large, memory and computing constraints make it impossible to feed all samples into the network at once to update the parameters. The mini-batch SGD algorithm [36] is therefore generally used, which randomly selects \(\mathcal{B}\) samples at a time to update the network parameters. Accordingly, the whole training dataset is randomly divided into \(\mathcal{T}=\lceil{\mathcal{N}}^{\text{train}}/\mathcal{B}\rceil\) mini-batches, i.e., \({\mathbb{D}}^{\text{train}}=\{{\mathbb{D}}_{t}^{\text{train}}{\}}_{t=1}^{\mathcal{T}}\). The cross-entropy loss function over the \(t\)th (\(t\in \{1,2,\dots ,\mathcal{T}\}\)) mini-batch dataset \({\mathbb{D}}_{t}^{\text{train}}\) is

The \(\mathcal{T}\) mini-batches are fed successively into GFAC-1DCNN and trained with the SGD algorithm; at the \(t\)th iteration, the parameters are updated as

where \(\alpha \) is the learning rate and \({{\varvec{g}}}_{t}\) is the gradient of the loss function w.r.t. the parameter \({{\boldsymbol{\theta}}}_{t}\), that is

In Eq. (24), the choice of the learning rate \(\alpha \) strongly affects training convergence. If \(\alpha \) is too large, training may oscillate around the optimum or even diverge from it; if it is too small, training may converge too slowly. To address this issue, the Adam optimizer [40] combines the first-order and second-order moment estimates of the gradient to adjust the learning rate dynamically. At the \(t\)th iteration, the first-order moment estimate \({{\varvec{m}}}_{t}\) and the second-order moment estimate \({{\varvec{u}}}_{t}\) of the gradient \({{\varvec{g}}}_{t}\) are calculated as

where \({\beta }_{1}\), \({\beta }_{2}\in[0,1)\) are the decay coefficients of \({{\varvec{m}}}_{t}\) and \({{\varvec{u}}}_{t}\), respectively.

Then, the biases of \({{\varvec{m}}}_{t}\) and \({{\varvec{u}}}_{t}\) are corrected as

Finally, the parameter update equation of the Adam optimizer is

where \(\varepsilon \) is a small positive constant that prevents the denominator from being 0, and \(\odot \) denotes element-wise multiplication.
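The three Adam steps above (moment estimates, bias correction, and update) can be sketched for a parameter vector stored as a Python list. The defaults \({\beta }_{1}=0.9\), \({\beta }_{2}=0.999\), and \(\varepsilon =10^{-8}\) are the common Adam defaults and are assumptions here, not values reported in this paper; \(\alpha =10^{-3}\) follows Section 4.2. Replacing the update with \({{\boldsymbol{\theta}}}_{t}-\alpha {{\varvec{g}}}_{t}\) would recover the plain SGD rule of Eq. (24).

```python
import math
from typing import List, Tuple


def adam_step(theta: List[float], g: List[float], m: List[float], u: List[float],
              t: int, alpha: float = 1e-3, beta1: float = 0.9,
              beta2: float = 0.999, eps: float = 1e-8
              ) -> Tuple[List[float], List[float], List[float]]:
    """One Adam update at iteration t (t starts at 1); returns (theta, m, u)."""
    new_theta, new_m, new_u = [], [], []
    for th, gi, mi, ui in zip(theta, g, m, u):
        mi = beta1 * mi + (1 - beta1) * gi          # first-order moment m_t
        ui = beta2 * ui + (1 - beta2) * gi * gi     # second-order moment u_t
        m_hat = mi / (1 - beta1 ** t)               # bias-corrected moments
        u_hat = ui / (1 - beta2 ** t)
        new_theta.append(th - alpha * m_hat / (math.sqrt(u_hat) + eps))
        new_m.append(mi)
        new_u.append(ui)
    return new_theta, new_m, new_u


# First step from zero moments: the move is about alpha for any gradient scale.
theta, m, u = adam_step([1.0, 1.0], [0.5, -100.0], [0.0, 0.0], [0.0, 0.0], t=1)
```

Both parameters move by almost exactly \(\alpha\) on the first step even though their gradients differ by a factor of 200, which illustrates how the second-moment term normalizes the step size per parameter.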

The Adam optimizer is widely used to train neural networks. Building on Adam, the diffGrad optimizer [37] introduces the short-term gradient change into the parameter update equation. The idea is that parameters whose gradients change more should use a larger learning rate to accelerate convergence, whereas parameters whose gradients change less should use a smaller learning rate to prevent training from oscillating around or even moving away from the optimum. In diffGrad, the short-term gradient change is characterized with the AbsSig function, which is

where \(\Delta {{\varvec{g}}}_{t}={{\varvec{g}}}_{t}-{{\varvec{g}}}_{t-1}\).

As shown in Fig. 4, the AbsSig function maps its input into [0.5, 1) and is defined as

When the AbsSig function is used to adjust the learning rate dynamically according to the short-term gradient change, Eq. (30) becomes

In summary, the GFAC-1DCNN training optimization algorithm based on diffGrad is shown in Algorithm 1, where \(Acc({{\boldsymbol{\theta}}}_{\mathcal{T}};{\mathbb{D}}^{\text{test}})\) denotes the testing accuracy over \({\mathbb{D}}^{\text{test}}\) with parameters \({{\boldsymbol{\theta}}}_{\mathcal{T}}\) after \(\mathcal{T}\) iterations.
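A minimal sketch of one diffGrad update follows, in a list-based style. It scales each element's Adam step by the friction coefficient AbsSig(\(\Delta {{\varvec{g}}}_{t}\)). The \({\beta }_{1}\), \({\beta }_{2}\), and \(\varepsilon \) defaults are assumptions borrowed from common Adam practice rather than values stated in this paper, the previous gradient is passed in explicitly (it is commonly initialized to zero at \(t=1\)), and the function names are illustrative. The outer loop of Algorithm 1 (epochs, mini-batches, and testing accuracy) is omitted.

```python
import math
from typing import List, Tuple


def abs_sig(x: float) -> float:
    # AbsSig(x) = 1 / (1 + exp(-|x|)); the output lies in [0.5, 1).
    return 1.0 / (1.0 + math.exp(-abs(x)))


def diffgrad_step(theta: List[float], g: List[float], g_prev: List[float],
                  m: List[float], u: List[float], t: int, alpha: float = 1e-3,
                  beta1: float = 0.9, beta2: float = 0.999, eps: float = 1e-8
                  ) -> Tuple[List[float], List[float], List[float]]:
    """One diffGrad update at iteration t (t starts at 1); returns (theta, m, u)."""
    new_theta, new_m, new_u = [], [], []
    for th, gi, gp, mi, ui in zip(theta, g, g_prev, m, u):
        mi = beta1 * mi + (1 - beta1) * gi
        ui = beta2 * ui + (1 - beta2) * gi * gi
        m_hat = mi / (1 - beta1 ** t)
        u_hat = ui / (1 - beta2 ** t)
        xi = abs_sig(gi - gp)  # friction from the short-term gradient change
        new_theta.append(th - alpha * xi * m_hat / (math.sqrt(u_hat) + eps))
        new_m.append(mi)
        new_u.append(ui)
    return new_theta, new_m, new_u


# An unchanged gradient gives xi = 0.5, i.e. half of the Adam step;
# a large gradient change gives xi close to 1, i.e. nearly the full Adam step.
slow, _, _ = diffgrad_step([1.0], [0.5], [0.5], [0.0], [0.0], t=1)
fast, _, _ = diffgrad_step([1.0], [0.5], [-20.0], [0.0], [0.0], t=1)
```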

4 Experiments and Analysis

4.1 Dataset

To validate the proposed GFAC-1DCNN-based HRRP recognition method for ballistic targets, the physical optics method of electromagnetic scattering calculation is used to simulate five types of midcourse ballistic targets, namely warheads, high-imitated decoys, simple decoys, spherical decoys, and motherships; thus, \(\mathcal{Q}\) = 5. The physical parameters of the five types of ballistic targets are shown in Fig. 5. The simulation parameters are set as follows: the radar center frequency is 10 GHz, the polarization mode is horizontal, the azimuth angle range is 0°–180°, and the azimuth step (simulation accuracy) is 0.05°. For each type of target, 3601 HRRP samples with different azimuth angles are obtained, and each HRRP sample has 256 range cells; thus, \(E=256\). For each class of targets, 80% of the samples are randomly selected as the training dataset \({\mathbb{D}}^{\text{train}}\), and the remaining samples form the testing dataset \({\mathbb{D}}^{\text{test}}\).
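The sample count and the per-class split can be checked with a short sketch. An inclusive 0.05° grid over 0°–180° gives 180/0.05 + 1 = 3601 azimuths per class, matching the text. Rounding 80% of 3601 down to 2880 training samples per class is our assumption, since the rounding rule is not stated; the function names and the fixed random seed are illustrative.

```python
import random
from typing import Dict, List, Tuple


def azimuth_count(start_deg: float, stop_deg: float, step_deg: float) -> int:
    # Inclusive grid; round() absorbs floating-point error in the division.
    return round((stop_deg - start_deg) / step_deg) + 1


def stratified_split(labels: List[int], train_frac: float = 0.8,
                     seed: int = 0) -> Tuple[List[int], List[int]]:
    """Randomly assign train_frac of each class to training, the rest to testing."""
    rng = random.Random(seed)
    by_class: Dict[int, List[int]] = {}
    for i, y in enumerate(labels):
        by_class.setdefault(y, []).append(i)
    train, test = [], []
    for idx in by_class.values():
        rng.shuffle(idx)
        n_train = int(train_frac * len(idx))  # floor (assumed rounding rule)
        train += idx[:n_train]
        test += idx[n_train:]
    return sorted(train), sorted(test)


n_per_class = azimuth_count(0.0, 180.0, 0.05)                 # 3601
labels = [q for q in range(1, 6) for _ in range(n_per_class)]  # Q = 5 classes
train_idx, test_idx = stratified_split(labels)
```

Under this rounding, the training set holds 14400 HRRPs and the testing set holds 3605.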

4.2 Experimental Setup

The hyper-parameters of GFAC-1DCNN are set as follows: the number of convolution modules \(\mathcal{L}\) = 4, the zero-padding size of all convolution kernels \({P}^{(l)}=0\), the convolution stride \({S}^{(l)}=1\), and the kernel size \(H\) of all convolutions except PW-Conv is chosen from {\(3,5,7,9\)}. Let a 1D array \(C=[{C}^{(1)},{C}^{(2)},\dots ,{C}^{(\mathcal{L})}]\) denote a channel setting of the 1D-Conv layers. To evaluate the generality of the proposed method under different channel settings, six channel settings are considered in the experiments, i.e., \({C}_{1}\) = [8, 16, 32, 64], \({C}_{2}\) = [16, 32, 64, 128], \({C}_{3}\) = [32, 64, 128, 256], \({C}_{4}\) = [64, 128, 256, 512], \({C}_{5}\) = [128, 256, 512, 1024], and \({C}_{6}\) = [256, 512, 1024, 2048]. According to Eq. (13), the group number of G-Conv is set to its maximum under each channel setting to minimize the computational complexity; thus, the group numbers of the four layers under the six channel settings are [1, 8, 16, 32], [1, 16, 32, 64], [1, 32, 64, 128], [1, 64, 128, 256], [1, 128, 256, 512], and [1, 256, 512, 1024], respectively. To perform ablation studies on GFAC-1DCNN and verify the effectiveness of each component, we refer to GFAC-1DCNN without auxiliary classifiers as GF-1DCNN. For comparison, we also remove the PW-Conv used for G-Conv feature fusion in GF-1DCNN and refer to the resulting model as G-1DCNN. The models with different components used in the ablation studies are summarized in Table 1.
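To make the complexity trade-off concrete, the sketch below counts convolution weights (biases ignored) for a standard 1D-Conv stack, a G-Conv stack, and a G-Conv stack with a PW-Conv fusion layer. It does not reproduce Eq. (13). It assumes that the group number of each layer equals its number of input channels, which reproduces the group numbers listed above given a single-channel HRRP input, and that the fusion PW-Conv maps \({C}^{(l)}\) channels to \({C}^{(l)}\) channels only in layers with more than one group. Both assumptions, and the function names, are ours.

```python
from typing import List


def conv1d_weights(c_in: int, c_out: int, k: int, groups: int = 1) -> int:
    # Each of the c_out filters sees c_in / groups input channels over k taps.
    assert c_in % groups == 0 and c_out % groups == 0
    return (c_in // groups) * c_out * k


def group_numbers(channels: List[int]) -> List[int]:
    # Assumed rule G^(l) = C^(l-1), with C^(0) = 1 for the HRRP input.
    return [1] + channels[:-1]


def stack_weights(channels: List[int], k: int, mode: str) -> int:
    """mode: 'standard' (1D-Conv), 'group' (G-Conv) or 'group_fusion'
    (G-Conv followed by a C^(l) x C^(l) PW-Conv when groups > 1)."""
    total = 0
    c_prev = 1
    for c, g in zip(channels, group_numbers(channels)):
        groups = 1 if mode == "standard" else g
        total += conv1d_weights(c_prev, c, k, groups)
        if mode == "group_fusion" and groups > 1:
            total += conv1d_weights(c, c, 1)  # pointwise (kernel size 1) fusion
        c_prev = c
    return total


C1 = [8, 16, 32, 64]
counts = {mode: stack_weights(C1, 3, mode)
          for mode in ("standard", "group", "group_fusion")}
```

For \({C}_{1}\) with \(H=3\), this gives 8088, 360, and 5736 weights, respectively: the fusion layer restores cross-group mixing at a cost that stays below that of the standard convolution. These figures exclude the classifiers and are only indicative; Table 3 reports the actual parameter counts.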

In addition, the number of training epochs is set to \(T\) = 200, the batch size to \(\mathcal{B}\) = 64, and the learning rate to \(\alpha \) = \(10^{-3}\). In this study, Python 3.9 and the open-source deep learning framework PyTorch 2.0.1 LTS [42] were used to construct and train the deep learning models, and network training was accelerated with CUDA 11.1.
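The mini-batch partition of Section 3.6 with \(\mathcal{B}\) = 64 can be sketched as follows. The training-set size of 14400 assumes 2880 training HRRPs per class (80% of 3601, rounded down), which is our assumption; under it, each epoch has \(\mathcal{T}=\lceil 14400/64\rceil =225\) iterations, or 45000 parameter updates over 200 epochs. The helper name is illustrative.

```python
import math
import random
from typing import List


def minibatches(n_samples: int, batch_size: int, seed: int = 0) -> List[List[int]]:
    """Shuffle sample indices and cut them into ceil(N / B) mini-batches;
    the last batch keeps the remainder when B does not divide N."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    return [idx[i:i + batch_size] for i in range(0, n_samples, batch_size)]


n_train = 14400   # assumed: 2880 training samples per class x 5 classes
batch_size = 64
epochs = 200
batches = minibatches(n_train, batch_size)
iters_per_epoch = math.ceil(n_train / batch_size)
total_updates = epochs * iters_per_epoch
```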

To further compare GFAC-1DCNN with other state-of-the-art deep learning (DL)-based ballistic target HRRP recognition methods, we also use PyTorch to implement the stacked denoising sparse autoencoder (sDSAE) [26], bidirectional LSTM (Bi-LSTM) [31], CNN1D-CA [17], MSGF-1DCNN [35], and Bi-GRU [32]. Meanwhile, to analyze the recognition performance for each target over \({\mathbb{D}}^{\text{test}}\) in more detail, the precision \({F}_{P}\), the recall \({F}_{R}\), and the F1-measure \({F}_{M}\) are calculated for each HRRP recognition method, while the overall recognition performance is still evaluated by the overall testing accuracy over \({\mathbb{D}}^{\text{test}}\). \({F}_{P}\), \({F}_{R}\), and \({F}_{M}\) are given by Eq. (34), where \({F}_{P}\) is the proportion of samples recognized as positive that are actually positive, \({F}_{R}\) is the proportion of actually positive samples that are correctly recognized as positive, and \({F}_{M}\) is the harmonic mean of \({F}_{P}\) and \({F}_{R}\), combining precision and recall into a single score.

where \(\mathrm{TP}\) (true positives) is the number of positive examples classified correctly, \(\mathrm{FP}\) (false positives) is the number of actual negative examples classified as positive, \(\mathrm{TN}\) (true negatives) is the number of negative examples classified correctly, and \(\mathrm{FN}\) (false negatives) is the number of actual positive examples classified as negative.
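The per-class metrics of Eq. (34) follow directly from a confusion matrix by treating each class in turn as positive (one-vs-rest). The sketch below assumes rows are true classes and columns are predicted classes; the two-class matrix in the usage lines is a made-up toy example, not data from this study, and the function names are illustrative.

```python
from typing import List, Tuple


def per_class_metrics(cm: List[List[int]]) -> List[Tuple[float, float, float]]:
    """cm[i][j] = number of samples of true class i predicted as class j.
    Returns (F_P, F_R, F_M) for each class, treating it as the positive class."""
    num_classes = len(cm)
    results = []
    for q in range(num_classes):
        tp = cm[q][q]
        fp = sum(cm[i][q] for i in range(num_classes)) - tp  # predicted q, truly not q
        fn = sum(cm[q]) - tp                                 # truly q, predicted otherwise
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        # F1: harmonic mean of precision and recall.
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results.append((precision, recall, f1))
    return results


def overall_accuracy(cm: List[List[int]]) -> float:
    correct = sum(cm[q][q] for q in range(len(cm)))
    return correct / sum(sum(row) for row in cm)


toy_cm = [[8, 2],
          [1, 9]]
metrics = per_class_metrics(toy_cm)   # class 1: F_P = 8/9, F_R = 0.8, F_M = 16/19
acc = overall_accuracy(toy_cm)        # 17/20 = 0.85
```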

4.3 Ablation Study

To examine the impact of different hyper-parameters on model performance and to conduct ablation studies of feature fusion, six networks, i.e., 1DCNN, AC-1DCNN, G-1DCNN, GAC-1DCNN, GF-1DCNN, and GFAC-1DCNN, were trained and tested with different kernel sizes and channel settings. Table 2 reports the testing accuracy of these models over \({\mathbb{D}}^{\text{test}}\) for each kernel size and channel setting. To evaluate the computational complexity of the models, we also count their numbers of parameters, as shown in Table 3. From Tables 2 and 3, the following conclusions can be drawn.

1. The Impact of Kernel Size. As shown in Table 2, when the six models share the same 1D-Conv channel setting, increasing the kernel size improves recognition performance in most cases. Table 3 shows that increasing the kernel size also increases the number of parameters. Hence, a larger kernel size enhances recognition performance but also raises computational complexity.

2. The Impact of 1D-Conv Channel Settings. As shown in Table 2, for the same kernel size, increasing the number of channels generally improves recognition performance for most models. However, the performance of some models may decrease when the number of channels becomes too large. The optimal 1D-Conv channel settings for each kernel size are as follows: \({C}_{5}\) for 1DCNN, \({C}_{3}\) for AC-1DCNN, and \({C}_{6}\) for G-1DCNN, GAC-1DCNN, GF-1DCNN, and GFAC-1DCNN. Note that increasing the number of channels also increases the number of parameters, as shown in Table 3.

3. The Impact of G-Conv (1DCNN vs G-1DCNN, AC-1DCNN vs GAC-1DCNN). Table 2 shows that replacing the standard 1D-Conv with G-Conv decreases recognition performance, although Table 3 shows that it significantly reduces complexity. This is because G-Conv breaks the full channel connectivity of the standard 1D-Conv, which hinders information flow between channel groups.

4. The Impact of GF-Conv (G-1DCNN vs GF-1DCNN, GAC-1DCNN vs GFAC-1DCNN, 1DCNN vs GF-1DCNN, AC-1DCNN vs GFAC-1DCNN). Compared with G-Conv, GF-Conv improves recognition performance by using PW-Conv to fuse the grouped features from G-Conv. Although adding PW-Conv after G-Conv increases computational complexity, the complexity remains lower than that of the standard 1D-Conv. Under the channel settings \({C}_{1}\), \({C}_{2}\), \({C}_{3}\), and \({C}_{4}\), GF-Conv yields lower testing accuracy than the standard 1D-Conv; under \({C}_{5}\) and \({C}_{6}\), however, GF-Conv significantly improves recognition performance. We attribute this to the standard 1D-Conv being over-parameterized and GF-Conv being somewhat under-parameterized, as can be seen in Table 3. As the number of channels increases, the standard 1D-Conv becomes increasingly over-parameterized, whereas GF-Conv becomes better parameterized. Consequently, the recognition performance of 1DCNN and AC-1DCNN decreases after \({C}_{4}\) and \({C}_{3}\), respectively, whereas that of GF-1DCNN and GFAC-1DCNN increases approximately monotonically from \({C}_{1}\) to \({C}_{6}\).

5. The Impact of Layer-Wise Auxiliary Classifiers (1DCNN vs AC-1DCNN, G-1DCNN vs GAC-1DCNN, GF-1DCNN vs GFAC-1DCNN). Table 2 shows that, for certain kernel sizes and 1D-Conv channel settings, adding layer-wise auxiliary classifiers improves recognition performance; in particular, the recognition performance of 1DCNN and GF-1DCNN is significantly enhanced. However, in some cases the auxiliary classifiers bring no improvement, which calls for further in-depth research on making them more effective. Table 3 shows that the auxiliary classifiers only slightly increase the computational complexity, so the resulting models remain suitable for missile defense systems.

6. The Impact of Combining GF-Conv and Layer-Wise Auxiliary Classifiers (1DCNN vs GFAC-1DCNN). According to Table 2, GFAC-1DCNN achieves higher testing accuracy than 1DCNN under the 1D-Conv channel settings \({C}_{5}\) and \({C}_{6}\). In addition, Fig. 6 depicts the testing accuracy curves of GFAC-1DCNN and 1DCNN, which show that GFAC-1DCNN converges faster and reaches a higher asymptote. Moreover, GFAC-1DCNN has fewer parameters, further confirming the effectiveness of the proposed method.

Figure 7 displays the receiver operating characteristic (ROC) curves and the corresponding area under the curve (AUC) values of various HRRP recognition methods, with warheads as positive samples and all other targets as negative samples, where \(\mathrm{TPR}=\mathrm{TP}/(\mathrm{TP}+\mathrm{FN})\) and \(\mathrm{FPR}=\mathrm{FP}/(\mathrm{FP}+\mathrm{TN})\). GFAC-1DCNN performs particularly well on warheads, achieving an AUC of 0.9972. This high AUC indicates that GFAC-1DCNN separates warheads well from the other targets, a capability that is critical in anti-missile missions.
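The ROC construction can be sketched by sweeping a decision threshold over a warhead score, applying the TPR and FPR definitions above at each threshold, and integrating with the trapezoidal rule. Using the fused Softmax probability of the warhead class as the score is our assumption, the four scores in the usage lines are toy values, and the function names are illustrative.

```python
from typing import List, Tuple


def roc_points(scores: List[float], is_warhead: List[bool]) -> List[Tuple[float, float]]:
    """(FPR, TPR) pairs from sweeping a threshold down through the scores.
    Samples with equal scores are added together, so ties give one diagonal step."""
    pos = sum(is_warhead)
    neg = len(is_warhead) - pos
    assert pos > 0 and neg > 0, "need both positive and negative samples"
    ranked = sorted(zip(scores, is_warhead), key=lambda p: p[0], reverse=True)
    tp = fp = 0
    points = [(0.0, 0.0)]
    i = 0
    while i < len(ranked):
        s = ranked[i][0]
        while i < len(ranked) and ranked[i][0] == s:
            if ranked[i][1]:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos))  # FPR = FP/(FP+TN), TPR = TP/(TP+FN)
    return points


def auc(points: List[Tuple[float, float]]) -> float:
    # Trapezoidal rule over consecutive ROC points.
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


scores = [0.9, 0.8, 0.7, 0.6]         # toy warhead probabilities
labels = [True, False, True, False]   # True = warhead
area = auc(roc_points(scores, labels))
```

The toy value of 0.75 equals the fraction of (warhead, non-warhead) pairs in which the warhead receives the higher score, which is the usual probabilistic reading of the AUC.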

Based on the above experimental results, the proposed GF-Conv reduces computational complexity and enhances feature extraction by breaking away from the fully connected manner of standard 1D-Conv. Furthermore, the layer-wise auxiliary classifiers serve as a lightweight module for enhancing recognition performance. Notably, our methods are particularly effective for over-parameterized 1DCNN models, especially those with larger numbers of channels: the improvement in recognition performance is more significant under the 1D-Conv channel settings \({C}_{5}\) and \({C}_{6}\).

4.4 Comparison with Other Deep Learning Methods

Table 4 presents the recognition results of the deep neural network-based HRRP recognition methods. The recognition outcomes of each method vary across targets. Notably, all methods achieve 100% precision (\({F}_{P}\)) and recall (\({F}_{R}\)) for spherical decoys, which can be attributed to their distinct physical characteristics depicted in Fig. 5: spherical decoys are clearly distinguishable from the other targets, resulting in superior recognition performance. However, several methods exhibit relatively low \({F}_{R}\) (< 95%) for warhead recognition, namely Bi-LSTM, sDSAE, Bi-GRU, CNN1D-CA, 1DCNN, AC-1DCNN, GAC-1DCNN, GF-1DCNN, and G-1DCNN, whereas GFAC-1DCNN achieves the highest \({F}_{R}\) for warhead recognition.

Figure 8 provides a detailed view of the recognition accuracy for different targets across the 11 compared models. GFAC-1DCNN exhibits excellent recognition performance for warheads and simple decoys, along with superior accuracy over all samples. However, its recognition accuracy for warheads still lags behind that for the other targets, which calls for further research to improve warhead recognition.

It is worth mentioning that GFAC-1DCNN exhibits a slightly lower \({F}_{P}\) for warhead recognition than Bi-LSTM, Bi-GRU, and GF-1DCNN, which may lead to a higher false alarm rate in anti-missile systems. Nevertheless, its higher \({F}_{R}\) can enhance missile interception rates and reduce losses.

To analyze the recognition performance of the CNN-based methods in more detail, we plot the confusion matrix of each method over \({\mathbb{D}}^{\text{test}}\), as shown in Fig. 9. Fig. 9 shows that all CNN-based models, namely 1DCNN, AC-1DCNN, CNN1D-CA, G-1DCNN, GAC-1DCNN, GF-1DCNN, MSGF-1DCNN, and GFAC-1DCNN, misclassify a significant proportion of warhead samples as high-imitated decoys. Similarly, all eight models misidentify a large number of high-imitated decoy samples as warheads. This is consistent with Fig. 5, which shows that the physical characteristics of warheads and high-imitated decoys are remarkably similar; distinguishing between the two is therefore challenging, leading to poor recognition performance for this pair. Furthermore, 1DCNN, AC-1DCNN, CNN1D-CA, G-1DCNN, GAC-1DCNN, GF-1DCNN, and MSGF-1DCNN misclassify mothership and simple decoy samples as warheads at a higher rate. GFAC-1DCNN effectively overcomes this limitation, demonstrating its superiority.

5 Conclusions and Future Scope

To address the high computational complexity of the standard 1DCNN for ballistic target HRRP recognition, this paper proposes a lightweight GFAC-1DCNN. The contributions are as follows.

1. The proposed GFAC-1DCNN architecture replaces standard convolutions with G-Conv and maximizes the group number, which significantly reduces computational complexity and model size. This makes real-time deployment in missile defense systems more feasible.

2. The linear fusion layer after G-Conv promotes information flow between groups, overcoming the loss of inter-group connections in naive G-Conv. This allows multi-group features to be aggregated to enhance representation learning.

3. Layer-wise auxiliary classifiers are designed to leverage hierarchical features from different network depths. Fusing their outputs improves accuracy by combining multi-level information, complementing the representation learned by the deepest classifier.

Experimental results demonstrate that GFAC-1DCNN achieves higher overall testing accuracy and a higher recall rate for warhead targets than other state-of-the-art deep learning methods while maintaining lower computational complexity. This highlights the potential of GFAC-1DCNN to reduce the computational burden of ballistic missile defense systems and enhance interception rates, making it valuable in engineering applications.

However, the hyper-parameters of GFAC-1DCNN are currently selected by rules of thumb, which may not suit real-life scenarios. In the future, we plan to incorporate neural architecture search methods to obtain proper hyper-parameter settings for various real-life scenarios. In addition, auxiliary classifiers do not consistently improve recognition across all kernel sizes and 1D-Conv channel settings. Therefore, further in-depth research is needed to fully understand and enhance the interpretability, versatility, and scalability of the proposed auxiliary classifiers.

Data Availability

The datasets generated and analyzed during the current study are available from the corresponding author on reasonable request.

References

Sun, F., Wang, H.: Research on detection mission scheduling strategy for the LEO constellation to multiple targets. J. Defense Model. Simul. Appl. Methodol. Technol. JDMS 18, 87–104 (2021)

Xu, M., Bu, X., Chen, X., Song, Y.: Rotating missile self-infrared radiation interference compensation for dual-band infrared attitude measurement. J. Appl. Phys. 128, 1–7 (2020)

Lu, L., Yan, S., Zou, J., Sheng, W.: Performance analysis of infrared detection system based on near space platform. In: Conference on Infrared, Millimeter-Wave, and Terahertz Technologies VI. Hangzhou, People’s Republic of China (2019)

Lu, L., Sheng, W., Jiang, W., Jiang, F.: Estimating detection range of ballistic missile in infrared system based on near space platform. In: Conference on Infrared, Millimeter-Wave, and Terahertz Technologies V. Beijing, People’s Republic of China (2018)

Liu, J., Huang, S., Zhao, W., Pang, C., Huang, D.: Analysis on demand of anti-missile operational ability of space-based infrared low-orbit satellites. Syst. Eng. Electron. 40, 1777–1785 (2018)

Zhu, N., Xu, S., Li, C., Hu, J., Fan, X., Wu, W., Chen, Z.: An improved phase-derived range method based on high-order multi-frame track-before-detect for warhead detection. Remote Sens. 14, 1–29 (2022)

Choi, I.-O.H., Park, S.-H., Kim, M., Kang, K.-B., Kim, K.-T.: Efficient discrimination of ballistic targets with micromotions. IEEE Trans. Aerosp. Electron. Syst. 56, 1243–1261 (2020)

Choi, I., Jung, J., Kim, K., Park, S.: Novel parameter estimation method for a ballistic warhead with micromotion. J. Electromagn. Eng. Sci. 20, 262–269 (2020)

Niu, L., Xie, Y., Zhang, C., Wu, D.: Detection simulation of AEGIS combat system for ballistic missile in electronic warfare environment. Syst. Eng. Electron. 41, 1195–1201 (2019)

Kim, Y., Choi, Y.-J., Choi, I.-S.: Separation of dynamic RCS using Hough transform in multi-target environment. J. Korean Inst. Inf. Technol. 17, 91–97 (2019)

Chen, J., Xu, S., Chen, Z.: Convolutional neural network for classifying space target of the same shape by using RCS time series. IET Radar Sonar Navig. 12, 1268–1275 (2018)

Hua, H.-Q., Jiang, Y.-S., He, Y.-T.: High-frequency method for scattering from coated targets with extremely electrically large size in terahertz band. Electromagnetics 35, 321–339 (2015)

Xiang, Q., Wang, X., Song, Y., Li, R., Lai, J., Zhang, G.: Ballistic target recognition based on cost-sensitively pruned convolutional neural network. J. Beijing Univ. Aeronaut. Astronaut. 47, 2387–2398 (2021)

Xiang, Q., Wang, X., Li, R., Lai, J., Zhang, G.: HRRP image recognition of midcourse ballistic targets based on DCNN. Syst. Eng. Electron. 42, 2426–2433 (2020)

Choi, I.-O., Jung, J., Kim, K., Park, S.-H.: Effective discrimination between warhead and decoy in mid-course phase of ballistic missile. J. Korean Inst. Electromagn. Eng. Sci. 31, 468–477 (2020)

Persico, A.R., Ilioudis, C.V., Clemente, C., Soraghan, J.J.: Novel classification algorithm for ballistic target based on HRRP frame. IEEE Trans. Aerosp. Electron. Syst. 55, 3168–3189 (2019)

Xiang, Q., Wang, X., Song, Y., Lei, L., Li, R., Lai, J.: One-dimensional convolutional neural networks for high-resolution range profile recognition via adaptively feature recalibrating and automatically channel pruning. Int. J. Intell. Syst. 36, 332–361 (2021)

Liu, W., Yuan, J., Zhang, G., Shen, Q.: HRRP target recognition based on kernel joint discriminant analysis. J. Syst. Eng. Electron. 30, 703–708 (2019)

Zhang, J., Li, J., Li, Y.: TOPSIS missile target selection method supported by the posterior probability of target recognition. Appl. Math. Nonlinear Sci. 7, 713–720 (2022)

Jasinski, T., Brooker, G., Antipov, I.: W-band multi-aspect high resolution range profile radar target classification using support vector machines. Sensors-Basel 21, 2385 (2021)

Wang, S., Li, J., Wang, Y., Li, Y.: Radar HRRP target recognition based on gradient boosting decision tree. In: 9th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), pp. 1013–1017. Datong, People’s Republic of China (2016)

Chen, J., Liao, L., Zhang, W., Du, L.: Mixture factor analysis with distance metric constraint for dimensionality reduction. Pattern Recognit. 121, 108156 (2022)

Liu, J., Su, M., Xu, Q., Fang, N., Wang, B.F.: Multi-scale feature vector reconstruction for aircraft classification using high range resolution radar signatures. J. Electromagn. Wave 35, 1843–1862 (2021)

Wan, J.W., Chen, B., Xu, B., Liu, H.W., Jin, L.: Convolutional neural networks for radar HRRP target recognition and rejection. EURASIP J. Adv. Signal Process. (2019). https://doi.org/10.1186/s13634-019-0603-y

Du, C., Chen, B., Xu, B., Guo, D., Liu, H.: Factorized discriminative conditional variational auto-encoder for radar HRRP target recognition. Signal Process. 158, 176–189 (2019)

Guo, C., Wang, H., Jian, T., Xu, C., Sun, S.: Method for denoising and reconstructing radar HRRP using modified sparse auto-encoder. Chin. J. Aeronaut. 33, 1026–1036 (2020)

Li, C., Du, L., Deng, S., Sun, Y., Liu, H.: Point-wise discriminative auto-encoder with application on robust radar automatic target recognition. Signal Process. 169, 107385 (2020)

Liao, L., Du, L., Chen, J.: Class factorized complex variational auto-encoder for HRR radar target recognition. Signal Process. 182, 107932 (2021)

Zhao, F.X., Liu, Y.X., Huo, K.: Radar target recognition based on stacked denoising sparse autoencoder. J. Radars 6, 149–156 (2017)

Wang, X., Li, R., Wang, J., Lei, L., Song, Y.: One-dimension hierarchical local receptive fields based extreme learning machine for radar target HRRP recognition. Neurocomputing 418, 314–325 (2020)

Xu, B., Chen, B., Liu, J.Q., Wang, P.H., Liu, H.W.: Radar HRRP target recognition by the bidirectional LSTM model. J. Xidian Univ. 46, 29–34 (2019)

Chen, J., Du, L., Guo, G., Yin, L., Wei, D.: Target-attentional CNN for radar automatic target recognition with HRRP. Signal Process. 196, 108497 (2022)

Du, L., Li, L., Guo, Y., Wang, Y., Ren, K., Chen, J.: Two-stream deep fusion network based on VAE and CNN for synthetic aperture radar target recognition. Remote Sens. 13, 4021 (2021)

Pan, M., Liu, A., Yu, Y., Wang, P., Li, J., Liu, Y., Lv, S., Zhu, H.: Radar HRRP target recognition model based on a stacked CNNBi-RNN with attention mechanism. IEEE Trans. Geosci. Remote Sens. 60, 1–14 (2022)

Xiang, Q., Wang, X.D., Lai, J., Song, Y.F., Li, R., Lei, L.: Multi-scale group-fusion convolutional neural network for high-resolution range profile target recognition. IET Radar Sonar Navig. 16, 1997–2016 (2022)

Budin, M.A.: Stochastic approximation method. IEEE Trans. Syst. Man Cybern. 3, 396–402 (1972)

Dubey, S.R., Chakraborty, S., Roy, S.K., Mukherjee, S., Singh, S.K., Chaudhuri, B.B.: diffGrad: an optimization method for convolutional neural networks. IEEE Trans. Neural Netw. Learn. Syst. 31, 4500–4511 (2020)

Xiang, Q., Wang, X., Lai, J., Lei, L., Song, Y., He, J., Li, R.: Quadruplet depth-wise separable fusion convolution neural network for ballistic target recognition with limited samples. Expert Syst. Appl. 235, 121182 (2024)

Xiang, Q., Wang, X., Lai, J., Song, Y., He, J., Lei, L.: Snapshot ensemble one-dimensional convolutional neural networks for ballistic target recognition. In: 2023 6th World Conference on Computing and Communication Technologies (WCCCT), pp. 187–193. IEEE, Chengdu, China (2023)

Cortinas-Lorenzo, B., Perez-Gonzalez, F.: Adam and the ants: on the influence of the optimization algorithm on the detectability of DNN watermarks. Entropy 22, 1379 (2020)

He, K., Zhang, X., Ren, S., Sun, J.: Delving deep into rectifiers: surpassing human-level performance on imagenet classification. In: 2015 IEEE international conference on computer vision (ICCV), pp. 1026–1034. IEEE, Santiago, Chile (2015)

Paszke, A., Gross, S., Massa, F., Lerer, A., Bradbury, J., Chanan, G., Killeen, T., Lin, Z., Gimelshein, N., Antiga, L., Desmaison, A., Kopf, A., Yang, E., DeVito, Z., Raison, M., Tejani, A., Chilamkurthy, S., Steiner, B., Fang, L., Bai, J., Chintala, S.: PyTorch: an imperative style, high-performance deep learning library. In: Wallach, H., Larochelle, H., Beygelzimer, A., Buc, F.D.T.A., Fox, E., Garnett, R. (eds.) Advances in Neural Information Processing Systems 32, pp. 8026–8037. Curran Associates, Inc., Red Hook (2019)

Acknowledgements

The authors are grateful to the editor and the anonymous reviewers for their constructive comments.

Funding

This research was funded by the National Natural Science Foundation of China, grant numbers 61806219, 61876189, 61503407, 61703426, and 61273275; the Young Talent Fund of University Association for Science and Technology in Shaanxi, China, grant numbers 20190108 and 20220106; and the Innovation Talent Supporting Project of Shaanxi, China, grant number 2020KJXX-065.

Author information

Contributions

QX: conceptualization, methodology, writing—original draft, writing—review and editing, software. XW: funding acquisition, methodology, project administration, supervision. YS: funding acquisition, project administration, supervision. LL: funding acquisition, software, supervision. RL: project administration, resources, software. JL: resources, software, validation.

Ethics declarations

Conflict of Interest

The authors declare no conflict of interest.

Ethical Approval

Not applicable.

Consent to Participate

Not applicable.

Consent for Publication

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xiang, Q., Wang, X., Lai, J. et al. Group-Fusion One-Dimensional Convolutional Neural Network for Ballistic Target High-Resolution Range Profile Recognition with Layer-Wise Auxiliary Classifiers. Int J Comput Intell Syst 16, 190 (2023). https://doi.org/10.1007/s44196-023-00372-w

DOI: https://doi.org/10.1007/s44196-023-00372-w