Abstract

Redundant nodes in a kernel incremental extreme learning machine (KI-ELM) increase ineffective iterations and reduce learning efficiency. To address this problem, this study established a novel improved hybrid intelligent deep kernel incremental extreme learning machine (HI-DKIELM), which is based on a hybrid intelligent algorithm and a KI-ELM. First, a hybrid intelligent algorithm was established based on the artificial transgender longicorn algorithm and multiple population gray wolf optimization methods to reduce the parameters of hidden layer neurons and then to determine the effective number of hidden layer neurons. The learning efficiency of the algorithm was improved through the reduction of network complexity. Then, to improve the classification accuracy and generalization performance of the algorithm, a deep network structure was introduced to the KI-ELM to gradually extract the original input data layer by layer and realize high-dimensional mapping of data. The experimental results show that the number of network nodes of HI-DKIELM algorithm is obviously reduced, which reduces the network complexity of ELM and greatly improves the learning efficiency of the algorithm. From the regression and classification experiments, its CCPP can be seen that the training error and test error of the HI-DKIELM algorithm proposed in this paper are 0.0417 and 0.0435, which are 0.0103 and 0.0078 lower than the suboptimal algorithm, respectively. On the Boston Housing database, the average and standard deviation of this algorithm are 98.21 and 0.0038, which are 6.2 and 0.0003 higher than the suboptimal algorithm, respectively.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Conventional single hidden layer feed forward neural networks (SLFNs) are based on ineffective training algorithms with weak learning abilities. All parameters of such networks should be updated in their learning algorithms. Huang et al. [1] have presented an extreme learning machine (ELM) in recent years. Unlike in conventional neural networks, In ELM, the weight and bias values of each hidden node adopt the random initialization before being determined with no effort-intensive iterative tuning. To ensure good network learning performance and generalization performance of the network structure, the ELM has considerably improved sample training speeds, fewer local minima, and low convergence speeds [2,3,4,5].

In conventional ELM, gain stronger learning ability, higher dimensional network structures are always utilized. Finding the ideal hidden layer node count and the scale of the control models, however, is complicated. Due to this, Huang et al. [6,7,8] established an incremental extreme learning machine (I-ELM) by adopting an incremental algorithm that adaptively selects the number of hidden layer nodes and updates the output weights in real time. By optimizing the I-ELM algorithm, Huang developed an enhanced incremental extreme learning machine (EI-ELM) [9], which effectively selects the hidden layer nodes to build the network model by making the network less complex. However, when the network scale is exceedingly high, the cycles’ number of EI-ELM considerably increases, thereby affecting its capability of generalization. Yang and other academics [10] introduced the Barron-optimized convex incremental extreme learning machine, subsequently named it as the CI-ELM, which, to boost the convergence rate, calculates the hidden layer’ output weights when more nodes were added. Furthermore, in [11], a hybrid incremental extreme learning machine was put forward, adjusting the hidden layer nodes’ weight and bias values to minimize the error via the chaos optimization algorithm. Nonetheless, the existing I-ELM has certain limitations that should be overcome desperately. The intricateness of the network architecture may increase because of redundant nodes, thereby reducing the efficient of studying. The low convergence rate gives rise to the amount of hidden layer nodes exceeding the amount of training samples. The model updating effect is poor and has higher sensitivity to new data. Combining these factors is essential for the ELM as it affects the ELM’s accuracy as well as the rate of convergence. Hence, several studies have focused on optimizing the combination of these parameters. Intelligent optimization algorithms based on bionics technologies have been applied to improve ELM variables based on bionics methods to improve learning speed and accuracy. To find the ideal parameters, a brand new hybrid approach was proposed [12]. Furthermore, the settings of the hidden layer nodes were optimized using an adaptable DE algorithm, and then, the output weights were determined using the MP generalized inverted approach [13]. A more effective technique for particle swarm optimization is employed to adjust the hidden layer nodes’ settings [14]. A hybrid intelligent extreme learning machine was put forth, which packaged DE and PSO together to optimize network parameters [15]. However, the following two issues arise with this form of intelligent optimization algorithm: PSO may do local search but moves slowly, while DE has a high ability to find the global optimum but will experience premature convergence.

To improve the parameter performance of ELM, the combination of deep learning and ELM has been applied by several studies owing to deep learning's superior feature extraction abilities. A multilayer ELM was established to effectively improve the parameter performance and training ability of ELM due to the excellent feature extraction ability of deep learning [16]. An ELM with deep kernels [17] is proposed as a means of enhancing accuracy and has been successfully applied to detect flaws in aero engine components.

Based on the aforementioned studies, a deep incremental extreme learning machine on the basis of a deep kernel that is hybrid intelligent was established in this study. First, we associated the courtship search behavior of longhorn with the objective function to be optimized and designed a new swarm intelligence optimization algorithm, namely the artificial transgender longicorn algorithm (ATLA). Then, the algorithm was combined with the multi-population gray wolf optimization. Therefore, a new hybrid intelligent optimized algorithm, namely the artificial transgender longicorn multi-population gray wolf optimization (ATL-MPGWO) method, was established to optimize the output weight using a hybrid intelligent method. The proposed mixed intelligence algorithm combines the MPGWO algorithm's global search capability with its local search capability of the ATLA to achieve the ideal output weights to increase the I-ELM's training efficiency and classification precision.

This study’s significant contributions are summarized below:

-

(1)

The ATLA was combined with the MPGWO algorithm to establish a novel mixed intelligence optimization algorithm, which optimizes the parameters of hidden nodes to obtain an optimal output weight.

-

(2)

A deep network structure was incorporated into a KI-ELM to realize a high-dimensional spatial mapping classification of data through deep kernel incremental kernel extreme learning, thereby improving the classification accuracy and generalization performance of the algorithm.

2 ATL-MPGWO Method

2.1 ATLA

To address the nonlinear optimization of pressure vessels, a longicorn herd algorithm was proposed by simulating longicorn foraging [18, 19].

The suggested ATLA algorithm is demonstrated in Fig. 1. The population is assumed to be composed entirely of male longicorn beetles. As indicated by the optimal search results, male longicorn beetles transform into female longicorn beetles. During the transformation, the longicorn beetles release sex pheromones and attract male longicorn beetles within a certain concentration range to approach them. The source of sex pheromones is situated in the circle's center, where the transsexual longicorn beetles are also located. The male longicorn beetles in the circle approach the transsexual longicorn beetles, whereas the beetles outside the circle search freely.

The steps involved in the ALTA are as follows:

-

(1)

Attraction of the opposite sex: After the male longicorn beetles transform into female longicorn beetles, they attract the male longicorn beetles within the concentration range. Thus, the moving direction of the male longicorn beetles is as follows:

In formula (1), \(x_{i}^{t}\) denotes the position of the \(i\)th male longicorn beetle in the \(t\)th iteration, and \(x_{{{\text{best}}}}^{t}\) denotes the optimal value of the current iteration. When the male longicorn beetles identify the moving direction, they change their position as follows [20]:

where \(r_{i}\) denotes the fitness of the \(i\)th male longicorn beetle in the current iteration; with the increase in the value, the gap between the current individual and the current optimal value decreases, and the value of the moving step \(s^{t}\) thus decreases.

(2) Levy flight random search: In the population of longicorn beetles, male longicorn beetles outside the concentration range are randomly searched, as expressed in the following formula for Levy flight:

The advantage of the ALTA is that male longicorn beetles approach female longicorn beetles when the fitness of the male longicorn beetles is greater than the set threshold; otherwise, they perform Levy flight random search. In addition to ensuring that the male longicorn beetles can find the optimal mate with the sex pheromone concentration range, a small number of male longicorn beetles are allowed to move randomly outside the range. As a result, the ALTA is kept from reaching a local optimum. According to the description above, the ALTA algorithm is depicted in Table 1.

2.2 MPGWO Algorithm

MPGWO is an intelligent optimization algorithm based on GWO [21, 22]. The multi-population algorithm involves simultaneously diversifying the populations and search spaces using the gray wolf algorithm. Multiple populations are used to search independently in different search spaces through an elite mechanism. The information sharing and communication among populations can improve the diversity of populations and can ensure a better solution. The specific implementation steps of the algorithm are described in Table 2.

2.3 ATL-MPGWO Methods

Through this study, an improved hybrid intelligent optimization algorithm was established, on the basis of the artificial modified longicorn beetle optimization algorithm and the MPGWO algorithm. The algorithm is inspired by the memetic evolution mechanism of the shuffled frog-leaping algorithm—the ATL-MPGWO algorithm. To utilize the complementary advantages of the two algorithms to improve the performance of the hybrid algorithm, the specific implementation steps of the algorithm are described in Table 3.

3 Improved Hybrid Intelligent Extreme Learning Machine

3.1 KI-ELM

The I-ELM is less similar to the original incremental neural network. The latter just could employ a particular category of excitation functions. On the contrary, the former could not only adopt continuous but also piecewise continuous functions. Under the premise of the same performance index, the operation efficiency of I-ELM is higher than that of SVM algorithm and BP algorithm. Some improved incremental extreme learning machines have been proposed successively, just like EI-ELM, PC-ELM, and OP-ELM, which are primarily improved the hidden layer node optimization comparing with the I-ELM [23]. The model are stated as follows:

The output function of the K-ELM can be converted as follows:

In formula (5), suppose \(A = \left[ {\frac{1}{C} + K_{ELM} } \right]\). Then, at the moment \(t\)

At the moment \(t + 1\)

Formula (7) is simplified and set as follows:

By a finite number of transformations, Formula (9) is equal to Formula (10)

With new data

where \(C_{t} = D_{t} - U_{t}^{T} A_{t}^{ - 1} U_{t}\). For test data \(X_{{{\text{text}}}} = \left[ {x_{{{\text{test}}1}} ,x_{{{\text{test}}2}} , \cdots x_{{{\text{test}}M}} } \right]\), the output value \(\mathop Y\limits^{ \wedge }_{test}\) can be estimated online, as follows:

3.2 Proposed Algorithm

Based on the KI-ELM, this paper proposes a DKIELM. During the training phase, an artificially transgendered beetle MPGWO algorithm and a parameter optimization algorithm were applied to enhance the robustness of the algorithm.

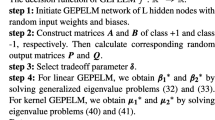

Key components of the proposed HI-DKIELM are multiple hidden layers, an input layer, and an output layer, as illustrated in Fig. 2. The steps involved in the algorithm are mentioned in Table 4:

The HI-DKIELM deals with the input data in layer-by-layer extraction and finds effective features, thereby facilitating the differentiation of the types. These abstract features are summaries and summaries of the original datum. Calculations on kernel functions are utilized to replace internal high-dimensional spaces. Furthermore, product operation improves classification accuracy. After the original datum is abstracted by \(k\) hidden layer, the input feature can be obtained, and then, the kernel function can be used to map the input feature \(X^{k}\).

4 Experiment Analysis

To test the effectiveness and robustness, the optimization performance of the hybrid intelligent algorithm was evaluated. The specifications of the selected data set are listed into Table 5. The system used in the experiments was a PC running on Windows 7 with an Intel (r) Xeon (r) CPU E3-1231v3 @ 3.40 GHz and 16 GB of memory.

4.1 Testing the Performance of the ATLA

The performance optimization of the ATLA was evaluated using the Schaffer, Michalewicz, and Step functions. The experimental results are summarized in Figs. 3, 4, and 5.

The experimental results indicated that the three test functions contained a large number of local extremum points except the global extremum points; the functions satisfied some requirements for the algorithm's optimization ability. Moreover, the proposed ATLA can be used to effectively determine the global optimum solution. These results were near the position of the global extremum reference values, manifesting as good optimization ability.

4.2 Performance Test of the Proposed Hybrid Optimization Algorithm

First, the hybrid intelligent optimization algorithm—the ATL-MPGWO algorithm—was compared with the BSO algorithm, MPGWO algorithm, and DEPSO algorithm [24]. Ten typical optimization functions were selected, as listed in Table 6. In the parameter settings, the dimensions were all set to 30, and the value ranges of the solutions were \(F_{n6} :[ - 100,100]\) and \(F_{n9} :[ - 500,500]\). The remaining eight functions were \([ - 30,30]\).

According to the optimization results in Table 7, the four optimization methods proposed in this paper can achieve good optimization results when the \(F_{n2}\) optimization function is used. For the other nine optimization functions, the proposed ATL-MPGWO hybrid optimization algorithm has higher optimization accuracy than the other three optimization algorithms. By contrast, the MPGWO algorithm may fall into the minimum point without jumping out of the extremum, whereas the BSO algorithm and other algorithms in the literature [24] have a certain degree of optimization ability and can converge to a more accurate solution. However, the ATL-MPGWO hybrid optimization algorithm can constantly jump out of the minimum during the iterations of the algorithm and has good searching ability.

4.3 HI-DKIELM Parameter Settings

In the HI-DKIELM, setting the scale of hidden layers exerts a great influence on the performance in different networks. In view of the Abalone data set, it is assumed that the layers range from 1 to 6 in the hidden layers and the quantities of nodes are set into 20 in each layer. By testing ten times in each network structure, Fig. 6 illustrates the relation between the scale of hidden layers and the network structure. The testing accuracy fails to increase simultaneously with the increasing number of layers. The HI-DKIELM algorithm succeeds to perform the best with three hidden layers. Once the number of layers increases, the testing accuracy goes declining. Therefore, setting the hidden layers of the network structure is 3 in the following regression and classification test.

In the HI-DKIELM, the kernel function parameter \(\gamma\) and regularization parameter \(C\) are easy to change the performance of algorithm, and are mostly chosen through cross-validation measures. The values are taken within \(10^{0} \sim 10^{10}\), and the corresponding test precision was evaluated during the test. The test accuracy and the values of \(\gamma\) and \(C\) are plotted as a curved surface as displayed in Fig. 7. When the regularization parameter \(C\) is not large, the algorithm exhibits poor performance, which fluctuates up and down with the kernel function. Furthermore, the performance of the algorithm is little fluctuation with the increase in the regularization parameter, and the algorithm has the highest accuracy.

4.4 HI-DKIELM Regression Test

In this paper, we conducted regression problem test on HI-DKIELM and the commonly used CI-ELM, EI-ELM, ECI-ELM, and DCI-KELM, with the aim of evaluating how well the HI-DKIELM generalizes. For the comparison, the neural-network hidden layer neurons started out with 1, and the number autoincreased by 1 with each training iteration. The amount of iterations and hidden layer neurons were identical across all five ELM. The contrast of test error and training error is revealed in Table 8 during the regression test, and Table 9 shows the contrast in network complexity and training time.

As listed in the experimental data in Table 8, on the accuracy of the regression, the HI-DKIELM algorithm observably improved. Compared with other algorithms, the proposed algorithm has lower error in training and testing, which compared with the others. With the CCPP database as an example, RMSE = 0.052 is the error termination condition, and the maximum number of hidden layer nodes is set to 100, the training error of the HI-DKIELM algorithm in was 0.0417, and the testing error was 0.0435, whereas the training error of DCI-KELM algorithm was 0.0535 and the testing error was 0.0604. In terms of training error and test error, the proposed HI-DKIELM algorithm is obviously better than others.

Table 9 analyzes the algorithm performance of the regression problem. From the experimental data, a great quantity of nodes for HI-DKIELM decreased significantly, which compared with the other four ELM algorithms. Similarly, with CCPP database as an example, when the stop condition was 0.052, the required nodes for the proposed HI-DKIELM algorithm were 27.06 and the training time was 1.0277 s. By contrast, the required nodes for the DCI-KELM algorithm were 45.35 and the training time was 2.0743 s, and the required nodes for the ECI-KELM algorithm were 11.92 and the training time was 3.0178 s. The HI-DKIELM algorithm is significantly better than the others, from the complexity of the network and the training time. Compared with other algorithms, the proposed algorithm is superior in network complexity and training time.

4.5 HI-DKIELM Classification Test

In this paper, we conducted classification problem test on HI-DKIELM and the commonly used CI-ELM, EI-ELM, ECI-ELM, and DCI-KELM, with the aim of evaluating how well the HI-DKIELM generalizes. For the comparison, the neural-network hidden layer neurons started out with 1, and autoincreased by 1 with each training iteration. The amount of iterations and hidden layer neurons were identical across all five ELM. The contrast of in average value and standard deviation is revealed in Table 10 during the classification problem test, and Table 11 shows the contrast in network complexity and training time. The value of the error termination condition RMSE is given in parentheses.

As can be seen from the experimental data in Table 10, the classification accuracy of the HI-DKIELM algorithm was significantly improved in the comparative analysis of all five ELM. As an illustration, considering the Boston Housing data set, we put RMSE = 0.1 as the error termination condition. The average and standard deviation of the proposed HI-DKIELM algorithm were 98.21 and 0.0038, while those of DCI-KELM algorithm were 93.01 and 0.0041, and ECI-KELM algorithm were 84.82 and 0.0072, respectively. In terms of the above analysis, the HI-DKIELM algorithm proposed outperforms others by a large margin.

Table 11 illustrates a comparison of the performances of different algorithms in the classification problem. The data suggested that the HI-DKIELM performed the best among the all five algorithms. We compared the experimental results, and found that the number of nodes of neural network was considerably reduced. Similarly, with Boston Housing data set as an example, when the RMSE is 0.1, the nodes and training time of HI-DKIELM algorithm were only 19.42 and 0.0772 s, while the DCI-KELM algorithm is 22.06 and 0.0942 s, and the ECI-KELM algorithm is 39.17 and 0.1168 s, respectively.. In terms of the above analysis, the HI-DKIELM algorithm proposed outperforms others by a large margin. Compared with other algorithms, the proposed algorithm is superior to other algorithms in terms of mean and standard deviation.

4.6 Summary

According to the above experimental results of regression and classification, it can be seen that: in the regression test, the training and testing errors of the HI-DKIELM algorithm are significantly better than those of the other algorithms (Table 8), and the number of nodes and the training time are much less than those of the other algorithms (Table 9); in the classification test, compared with the other four ELM algorithms, the classification accuracy of the HI-DKIELM algorithm has been significantly improved (Table 10), and the number of nodes and training time of the network are significantly reduced (Table 11).

It can be seen that compared with other algorithms, the number of network nodes of the HI-DKIELM algorithm is significantly reduced, which reduces the network complexity of the ELM and greatly improves the learning efficiency of the algorithm; and it has a more compact and efficient network structure, and has better prediction and generalization capabilities. However, adding deep networks to the algorithm may lead to overfitting problems.

5 Conclusions

This paper first summarizes the algorithm of extreme learning machine, then introduces the ATL-MPGWO Method, then introduces the improved hybrid intelligent extreme learning machine, and finally the simulation experiment and conclusion. This study successfully addressed the problem of ineffective iteration and low learning efficiency in K-ELM due to redundant nodes in the neural network. A DKIELM with hybrid intelligence and a deep network structure was established. First, a novel hybrid intelligent optimization algorithm was designed by combining the ATLA and the MPGWO algorithm. The designed algorithm was applied to optimize the hidden layer node parameters, which not only improved network stability but also reduced the network complexity of the ELM and improved the learning efficiency of model parameters. Second, a deep network structure was applied to the KI-ELM, which extracted the input data layer by layer and improved the classification accuracy and generalization abilities. From the perspective of network complexity and training time, the results of regression test and classification test show that the proposed HI-DKELM algorithm has more compact and effective network structure, and has better prediction and generalization ability.

The deep network is added to the deep kernel extreme learning machine of hybrid intelligence, which may lead to the overfitting problem. Considering the appropriate number of network layers will be the future research direction.

Availability of Data and Materials

The data for this work are available upon request. Please direct all inquiries to the relevant author.

Code Availability

Part of the code can be obtained from the author. For details, please contact the relevant author.

References

Huang, G.B., Zhu, Q.Y., Siew, C.K.: Extreme learning machine : theory and applications. Neurocomputing 70(1), 489–501 (2006). https://doi.org/10.1016/j.neucom.2005.12.126

Yu, X., Zhao, Y., Gao, Y., et al.: MaskCOV: a random mask covariance network for ultra-fine-grained visual categorization. Pattern Recognit. 119(7553), 108067 (2021). https://doi.org/10.1016/j.patcog.2021.108067

Sanjoy, D., Charles, F.S.: A neural algorithm for a fundamental computing problem. Science 358(63), 793–796 (2017). https://doi.org/10.1126/science.aam9868

Yi, Z., Xu, T., Shang, W., Li, W., Wu, X.: Genetic algorithm-based ensemble hybrid sparse ELM for grasp stability recognition with multimodal tactile signals. IEEE Trans. Ind. Electron. 70(3), 2790–2799 (2023). https://doi.org/10.1109/TIE.2022.3170631

Huang, G.B., Chen, L.: Enhanced random search based incremental extreme learning machine. Neurocomputing 71(1), 3460–3468 (2008). https://doi.org/10.1016/j.neucom.2007.10.008

Huang, G.B., Chen, L.: Convex incremental extreme learning machine. Neurocomputing 70(1), 3056–3062 (2007). https://doi.org/10.1016/j.neucom.2007.02.009

Cai, W., Yang, J., Yu, Y., et al.: PSO-ELM: a hybrid learning model for short-term traffic flow forecasting. IEEE access 8, 6505–6514 (2020). https://doi.org/10.1109/ACCESS.2019.2963784

Lian, Z., Duan, L., Qiao, Y., et al.: The improved ELM algorithms optimized by bionic WOA for EEG classification of brain computer interface. IEEE Access 9, 67405–67416 (2021). https://doi.org/10.1109/ACCESS.2021.3076347

Wang, Z., Wang, N., Zhang, H., et al.: Segmentalized mRMR features and cost-sensitive ELM with fixed inputs for fault diagnosis of high-speed railway turnouts. IEEE Trans. Intell. Transp. Syst. 24(5), 4975–5498 (2023). https://doi.org/10.1109/TITS.2023.3239636

Chen, Y.T., Chuang, Y.C., Chang, L.S., et al.: S-QRD-ELM: scalable QR-decomposition-based extreme learning machine engine supporting online class-incremental learning for ECG-based user identification. IEEE Trans. Circuits Syst. 70(6), 2342–2355 (2023). https://doi.org/10.1109/TCSI.2023.3253705

Huang, F., Lu, J., Tao, J., et al.: Research on optimization methods of ELM classification algorithm for hyperspectral remote sensing images. IEEE Access 7, 108070–108089 (2019). https://doi.org/10.1109/ACCESS.2019.2932909

Cao, J., Lin, Z., Huang, G.B.: Self-adaptive evolutionary extreme learning machine. Neural Process. Lett. 36, 285–305 (2012). https://doi.org/10.1007/s11063-012-9236-y

Wu, W., Lu, S.: Remaining useful life prediction of lithium-ion batteries based on data preprocessing and improved ELM. IEEE Trans. Instrum. Meas. 72, 1–14 (2023). https://doi.org/10.1109/TIM.2023.3267362

Abu Arqub, O., Abo-Hammour, Z.: Numerical solution of systems of second-order boundary value problems using continuous genetic algorithm. Inf. Sci. 279, 396–415 (2014). https://doi.org/10.1016/j.ins.2014.03.128

Abo-Hammour, Z., Alsmadi, O., Momani, S., Abu Arqub, O.: A genetic algorithm approach for prediction of linear dynamical systems. Math. Probl. Eng. 2013, 1–12 (2013). https://doi.org/10.1155/2013/831657

Abo-Hammour, Z., Abu Arqub, O., Momani, S., Shawagfeh, N.: Optimization solution of Troesch’s and Bratu’s problems of ordinary type using novel continuous genetic algorithm. Discrete Dyn Nat Soc (2014). https://doi.org/10.1155/2014/401696

Abu Arqub, O., Abo-Hammour, Z., Momani, S., Shawagfeh, N.: Solving singular two-point boundary value problems using continuous genetic algorithm. Abstr. Appl. Anal. 2012, 205391 (2012). https://doi.org/10.1155/2012/205391

Jones, A.J.: New tools in non-linear modeling and prediction. Comput. Manag. Sci. 1(2), 109–149 (2004). https://doi.org/10.1007/s10287-003-0006-1

Balsa-Canto, E., Bandiera, L., Menolascina, F.: Optimal experimental design for systems and synthetic biology using amigo2. Synth. Gene Circuits (2021). https://doi.org/10.1007/978-1-0716-1032-9_11

Wu, D., Qu, Z.S., Guo, F.J., et al.: Hybrid intelligent deep kernel incremental extreme learning machine based on differential evolution and multiple population grey wolf optimization methods. Automatika 60(1), 48–57 (2019). https://doi.org/10.1080/00051144.2019.1570642

Wu, D., Qu, Z., Guo, F., et al.: Multilayer incremental hybrid cost-sensitive extreme learning machine with multiple hidden output matrix and subnetwork hidden nodes. IEEE Access 7, 118422–118434 (2019). https://doi.org/10.1109/ACCESS.2019.2936856

Sulaiman, M.H., Mustaffa, Z., Mohamed, M.R., et al.: Using the gray wolf optimizer for solving optimal reactive power dispatch problem. Appl. Soft Comput. 32, 286–292 (2015). https://doi.org/10.1016/j.asoc.2015.03.041

Song, X., Tang, L., Zhao, S., et al.: grey wolf Optimizer for parameter estimation in surface waves. Soil Dyn. Earthq. Eng. 75(8), 147–157 (2015). https://doi.org/10.1016/j.soildyn.2015.04.004

Di, W., Ting, L., Qin, W.: A hybrid deep kernel incremental extreme learning machine based on improved coyote and beetle swarm optimization methods. Complex Intell. Syst. 7, 3015–3032 (2021). https://doi.org/10.1007/s40747-021-00486-8

Acknowledgements

This work were supported by National Natural Science Foundation of China (Grant No. 62006075), National Natural Science Foundation of Hunan Province of China (Grant No. 2022JJ30198), Hunan Provincial Science and Technology Department (CN) (Grant No. 21A0460) and the science and technology innovation program of Hunan Province (Grant No. 2020RC5019). Any opinions, findings, and conclusions expressed in this work are those of the authors and do not necessarily reflect the views of the funding.

Funding

This work were supported by National Natural Science Foundation of China (Grant No. 62006075), National Natural Science Foundation of Hunan Province of China (Grant No. 2022JJ30198), Hunan Provincial Science and Technology Department (CN) (Grant No. 21A0460), and the science and technology innovation program of Hunan Province (Grant No. 2020RC5019).

Author information

Authors and Affiliations

Contributions

Methodology, DW and YX; data curation, DW and YX; code and resources, DW and YX; writing—original draft preparation, DW and YX; writing–review and editing, DW and YX.

Corresponding author

Ethics declarations

Conflict of Interest

All authors declare that they have no competing interests.

Ethical Approval and Consent to Participate

Not applicable.

Consent for Publication

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wu, D., Xiao, Y. A Novel Deep Kernel Incremental Extreme Learning Machine Based on Artificial Transgender Longicorn Algorithm and Multiple Population Gray Wolf Optimization Methods. Int J Comput Intell Syst 16, 182 (2023). https://doi.org/10.1007/s44196-023-00323-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s44196-023-00323-5