Abstract

Many complex real-world problems have been resolved using similarity operators under intuitionistic fuzzy sets (IFSs). Numerous authors have developed intuitionistic fuzzy similarity operators (IFSOs), but these operators have setbacks, including imprecise results, omission of hesitation information, misleading interpretations, and outright violations of the axioms of a similarity operator. To address these setbacks, this article presents a new similarity operator under IFSs. To establish the validity of the new similarity operator, we discuss its properties in line with the axioms of similarity. In addition, we apply the new similarity operator to complex decision-making situations: car purchase selection via a multiple criteria decision-making (MCDM) technique, and pattern recognition and emergency management via the recognition principle. Finally, comparative studies are presented to justify the new similarity operator. In short, the novelty of this work comprises the evaluation of existing IFSOs to isolate their fault lines, the development of a new IFSO technique capable of resolving these fault lines, the elaboration of some properties of the new IFSO, and its application to disaster control, pattern recognition, and car selection for purchase based on the recognition principle and MCDM.

1 Introduction

Decision making is the art of making choices based on gathering information and appraising alternative resolutions. An elaborate decision-making procedure supports more thoughtful and deliberate decisions by establishing pertinent information and defining alternatives. Decision making can be tactical, strategic, or operational, depending on the aims and alternatives. The decision-making process can be carried out based on the recognition principle or multiple criteria decision making (MCDM) by using information measures such as distance operators, correlation coefficients, and similarity operators. Most often, decision making is hampered by indeterminacies, which necessitate the deployment of fuzzy sets and/or fuzzy logic [53] to resolve the indeterminacies interwoven with the decision-making process and attain consistent ends. Fuzzy systems, though resourceful, are limited because they do not consider the hesitation degree of the alternatives under consideration. Because of this setback, Atanassov [1] introduced the construct of intuitionistic fuzzy sets (IFSs), which expands the scope and modelling capability of fuzzy sets. Mathematically, an IFS is described by grades of membership and non-membership, together with a hesitation margin, all taking values in the closed interval \(I=[0,1]\). While every fuzzy set can be described as an IFS, IFSs differ from fuzzy sets in that the grades of membership and non-membership are not necessarily complementary.

Pertinent applications of IFSs have been discussed in the solution of real-world problems such as medical diagnosis [12, 41]. Chen et al. [10] deliberated on fuzzy query processing via intuitionistic fuzzy social relational networks, and Liu and Chen [33] discussed a decision-making process using Heronian aggregation operators under IFSs. Similarly, some information measures have been discussed with applications to decision-making problems and diagnostic analysis [20]. A clustering algorithm using IFSs was developed in [51]. Boran [3] deliberated on facility location selection by means of an IFS approach, and an attribute selection using Hellwig's algorithm was presented using IFSs [42]. Xu and Yager [52] discussed some intuitionistic fuzzy preference relations for the assessment of group agreement. Belief and plausibility measures for IFSs were developed with application to belief-plausibility TOPSIS [45], and a hybridized correlation coefficient technique was developed under IFSs with application to the classification process [16]. Numerous practical problems have been solved based on various correlation approaches [13, 17,18,19, 21, 43].

To enlarge the application spectrum of IFSs, the concept of intuitionistic fuzzy distance operators (IFDOs) has been extensively discussed. Burillo and Bustince [5] pioneered the concept of IFDOs and extended it to interval-valued fuzzy sets. Szmidt and Kacprzyk [40] improved on the IFDOs in [5] by incorporating all the describing parameters of IFSs to enhance accuracy. Various IFDOs based on the Hausdorff metric were deliberated on in [7, 23], and a wide-ranging overview of IFDOs was given in [50]. Hatzimichailidis et al. [24] presented a new IFDO and applied it to pattern recognition. Wang and Xin [44] discussed a novel IFDO and its weighted variant with application to a pattern recognition problem, and Davvaz and Sadrabadi [11] revised certain existing IFDOs and discussed their application in medical diagnosis.

The intuitionistic fuzzy similarity operator (IFSO) is one of the most widely used techniques in data analysis, machine learning, pattern recognition, and related decision-making problems. An IFSO quantifies the degree to which two IFSs are alike. Many authors have worked on IFSOs because the concept is highly applicable to real-world problems. Boran and Akay [4] developed an IFSO from a biparametric approach and applied it to pattern recognition. An IFSO was developed from a transformation approach and used to discuss pattern recognition [8], and Xu [49] applied certain IFSO techniques in MCDM. Other IFSOs were developed and used to discuss pattern recognition [39, 48]. IFSOs based on the Hausdorff distance [26] and the Lp metric [27] have been studied. In [29], some new IFSOs based on upper, lower, and middle fuzzy sets were developed, and Ye [47] developed an IFSO using the cosine function and applied it to mechanical design schemes. Some IFSOs using set pair analysis were developed in [22]. Numerous practical problems have been solved based on various IFSO approaches [9, 14, 30, 32].

Furthermore, Chen [6] developed an IFSO technique based on a biparametric approach for estimating the similarity of IFSs. This technique is unreliable because the hesitation margins of the IFSs concerned are omitted. Similarly, Hong and Kim [25] developed an IFSO technique akin to that in [6], with the same limitation. Mitchell [36] developed an IFSO technique to augment the Dengfeng–Chuntian similarity approach. In addition, Li et al. [31] developed an IFSO based on an IFDO in [5] by considering an incomplete set of the parameters of IFSs. Ye [46] developed a cosine-based IFSO, and Hung and Yang [28] presented several IFSO techniques; however, neither [28] nor [46] considers the hesitation margins of IFSs. In [38], the IFSO of [46] was modified by including all the descriptive parameters of IFSs and applied to turbine fault diagnosis. Luo and Ren [34] developed an IFSO using a biparametric approach and used it to discuss multiple attribute decision making (MADM). In [37], a new IFSO was introduced and used to discuss various applications. Most recently, a new IFSO was developed and applied to an emergency control problem [15]. Although this technique [15] includes all the describing parameters of IFSs, it lacks accuracy, which can lead to misinterpretation in applications.

The interpretations from the existing IFSO techniques cannot be relied upon because
(i) some omit the hesitation margins of IFSs in their computations,
(ii) some cannot measure the similarity between two comparable IFSs,
(iii) the IFSO technique in [37] violates the axiomatic description of a similarity operator, and
(iv) all the existing IFSO techniques yield outcomes that are not reliable, and so give misleading interpretations.

These drawbacks motivate the present work, which is designed to overcome the drawbacks of the existing IFSOs. The aim of the work, which also constitutes the contributions of the study, is achieved by
(i) evaluating the existing IFSOs to isolate their fault lines,
(ii) developing a new IFSO technique capable of resolving the drawbacks of the existing techniques,
(iii) elaborating some properties of the new IFSO to show its agreement with the notion of a similarity operator, and
(iv) applying the new IFSO to the solution of a disaster control problem, pattern recognition, and the process of car selection for purchase based on the recognition principle and MCDM.

The rest of the work is organized as follows: Sect. 2 presents some preliminaries of IFSs and recaps some existing IFSO techniques; Sect. 3 presents the development of the new IFSO and discusses its properties; Sect. 4 discusses application examples based on the recognition principle and the MCDM approach; and Sect. 5 summarizes the findings and suggests directions for future work.

2 Intuitionistic Fuzzy Sets and Their Similarity Operators

This section elucidates the preliminaries of IFSs and reiterates some hitherto similarity operators for IFSs.

2.1 Intuitionistic Fuzzy Sets

Throughout this article, X denotes a classical set. We first recall the definition of a fuzzy set.

Definition 2.1

A fuzzy set C in X is described by

\(C=\lbrace \langle x, \beta _C(x)\rangle :x\in X\rbrace ,\)

where \(\beta _C(x)\) is the degree of membership of x in X, defined by the function \(\beta _C: X\rightarrow [0,1]\) [53].

Definition 2.2

An intuitionistic fuzzy set \(\Re\) in X is described by

\(\Re =\lbrace \langle x, \beta _{\Re }(x), \eta _{\Re }(x) \rangle :x\in X\rbrace ,\)

where \(\beta _{\Re }(x)\) and \(\eta _{\Re }(x)\) are the grades of membership and non-membership of x in X, defined by the functions \(\beta _{\Re }: X\rightarrow [0,1]\) and \(\eta _{\Re }: X\rightarrow [0,1]\), with the property that \(\beta _{\Re }(x)+ \eta _{\Re }(x)\le 1\) [1].

The hesitation margin of an IFS \({\Re }\) in X, denoted by \(\psi _{\Re }(x)\in [0,1]\), expresses the degree of indeterminacy as to whether x belongs to \({\Re }\) or not, and is defined by \(\psi _{\Re }(x)= 1-\beta _{\Re }(x)-\eta _{\Re }(x)\). For simplicity, we write an IFS \({\Re }=\lbrace \langle x, \beta _{\Re }(x), \eta _{\Re }(x) \rangle :x\in X\rbrace\) as \({\Re }=\Big (\beta _{\Re }(x), \eta _{\Re }(x)\Big )\).
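To make Definition 2.2 and the hesitation margin concrete, the following minimal Python sketch stores one intuitionistic fuzzy value per element and validates the constraint \(\beta +\eta \le 1\). The class name `IFV` and the helper `from_fuzzy` are hypothetical names introduced only for illustration; the helper also shows that an ordinary fuzzy set embeds as an IFS with zero hesitation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IFV:
    """Hypothetical container for one intuitionistic fuzzy value <beta, eta>."""

    beta: float  # grade of membership
    eta: float   # grade of non-membership

    def __post_init__(self) -> None:
        # Definition 2.2: both grades lie in [0, 1] and beta + eta <= 1.
        if not (0.0 <= self.beta <= 1.0 and 0.0 <= self.eta <= 1.0):
            raise ValueError("grades must lie in [0, 1]")
        if self.beta + self.eta > 1.0 + 1e-12:  # small tolerance for rounding
            raise ValueError("beta + eta must not exceed 1")

    @property
    def psi(self) -> float:
        """Hesitation margin psi = 1 - beta - eta."""
        return 1.0 - self.beta - self.eta


def from_fuzzy(beta: float) -> IFV:
    """Hypothetical helper: embed a fuzzy grade as an IFV with eta = 1 - beta."""
    return IFV(beta, 1.0 - beta)


if __name__ == "__main__":
    x = IFV(0.5, 0.3)
    print(round(x.psi, 10))       # 0.2
    print(from_fuzzy(0.7).psi)    # 0.0 for an ordinary fuzzy set
```

An IFS on a finite universe \(X=\lbrace x_1,\ldots ,x_N\rbrace\) can then be held as a list of such values, one per element.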

Next, we recap certain operations on IFSs like equality, inclusion, complement, union, and intersection.

Definition 2.3

Assume \(\Re _1\) and \(\Re _2\) are IFSs in X. Then the following hold [2]:
(i) \(\Re _1=\Re _2\) \(\Leftrightarrow\) \(\beta _{\Re _1}(x)=\beta _{\Re _2}(x)\) and \(\eta _{\Re _1}(x)=\eta _{\Re _2}(x)\), \(\forall x\in X\),
(ii) \(\Re _1\subseteq \Re _2\) \(\Leftrightarrow\) \(\beta _{\Re _1}(x)\le \beta _{\Re _2}(x)\) and \(\eta _{\Re _1}(x)\ge \eta _{\Re _2}(x)\), \(\forall x\in X\),
(iii) \(\Re _1\preceq \Re _2\) \(\Leftrightarrow\) \(\beta _{\Re _1}(x)\le \beta _{\Re _2}(x)\) and \(\eta _{\Re _1}(x)\le \eta _{\Re _2}(x)\), \(\forall x\in X\),
(iv) \(\overline{\Re }_1=\lbrace \langle x, \eta _{\Re _1}(x), \beta _{\Re _1}(x)\rangle :x\in X\rbrace\), \(\overline{\Re }_2=\lbrace \langle x, \eta _{\Re _2}(x), \beta _{\Re _2}(x)\rangle :x\in X\rbrace\),
(v) \(\Re _1\cup \Re _2=\lbrace \langle x, \max \big \lbrace \beta _{\Re _1}(x),\beta _{\Re _2}(x)\big \rbrace , \min \big \lbrace \eta _{\Re _1}(x),\eta _{\Re _2}(x)\big \rbrace \rangle :x\in X\rbrace\),
(vi) \(\Re _1\cap \Re _2=\lbrace \langle x, \min \big \lbrace \beta _{\Re _1}(x),\beta _{\Re _2}(x)\big \rbrace , \max \big \lbrace \eta _{\Re _1}(x),\eta _{\Re _2}(x)\big \rbrace \rangle :x\in X\rbrace\).
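The set operations of Definition 2.3 translate directly into code. The minimal Python sketch below, with hypothetical function names, represents an IFS on a finite universe as a list of \((\beta ,\eta )\) pairs and implements complement, union, intersection, and inclusion; the complement swaps the two grades, which is the standard IFS complement of Atanassov [1]. The data in the usage example are hypothetical.

```python
from typing import List, Tuple

# An IFS over X = {x_1, ..., x_N} stored as [(beta(x_j), eta(x_j)), ...]
IFS = List[Tuple[float, float]]


def _pairs(a: IFS, b: IFS):
    # Both IFSs must be defined on the same finite universe X.
    if len(a) != len(b):
        raise ValueError("IFSs must be defined on the same universe")
    return zip(a, b)


def complement(a: IFS) -> IFS:
    # Swap membership and non-membership grades.
    return [(eta, beta) for beta, eta in a]


def union(a: IFS, b: IFS) -> IFS:
    # Max of memberships, min of non-memberships.
    return [(max(b1, b2), min(e1, e2)) for (b1, e1), (b2, e2) in _pairs(a, b)]


def intersection(a: IFS, b: IFS) -> IFS:
    # Min of memberships, max of non-memberships.
    return [(min(b1, b2), max(e1, e2)) for (b1, e1), (b2, e2) in _pairs(a, b)]


def is_subset(a: IFS, b: IFS) -> bool:
    # beta_a <= beta_b and eta_a >= eta_b at every x_j.
    return all(b1 <= b2 and e1 >= e2 for (b1, e1), (b2, e2) in _pairs(a, b))


if __name__ == "__main__":
    r1 = [(0.2, 0.6), (0.5, 0.4)]  # hypothetical data
    r2 = [(0.4, 0.3), (0.3, 0.5)]
    print(union(r1, r2))         # [(0.4, 0.3), (0.5, 0.4)]
    print(intersection(r1, r2))  # [(0.2, 0.6), (0.3, 0.5)]
    print(is_subset(intersection(r1, r2), union(r1, r2)))  # True
```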

2.2 Some Intuitionistic Fuzzy Similarity Operators

Some existing techniques for calculating the similarity between IFSs are recapped before the new IFSO technique is developed. First, the definition of an IFSO is presented as in [40].

Definition 2.4

Suppose \(\Re _1\) and \(\Re _2\) are IFSs in \(X=\lbrace x_1,\ldots , x_N\rbrace\). A similarity measure between IFSs is a function \(\mathbb {S}\) that assigns to each such pair a value \(\mathbb {S}(\Re _1,\Re _2)\) and satisfies the following conditions:
(i) \(0\le \mathbb {S}(\Re _1,\Re _2)\le 1\),
(ii) \(\mathbb {S}(\Re _1,\Re _1)=1\),
(iii) \(\mathbb {S}(\Re _1,\Re _2)=1\) \(\Leftrightarrow\) \(\Re _1=\Re _2\),
(iv) \(\mathbb {S}(\Re _1,\Re _2)=\mathbb {S}(\Re _2,\Re _1)\),
(v) \(\mathbb {S}(\Re _1,\Re _3)\le \mathbb {S}(\Re _1,\Re _2)+\mathbb {S}(\Re _2,\Re _3)\), where \(\Re _3\) is an IFS in X with \(\Re _1\subseteq \Re _2\subseteq \Re _3\).

A value of \(\mathbb {S}(\Re _1,\Re _2)\) close to 1 indicates that \(\Re _1\) and \(\Re _2\) are strongly similar, while a value close to 0 indicates that they are weakly similar. In particular, \(\mathbb {S}(\Re _1,\Re _2)=0\) means that \(\Re _1\) and \(\Re _2\) are not similar, and \(\mathbb {S}(\Re _1,\Re _2)=1\) means that they are perfectly similar.
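Conditions (i), (ii), (iv), and (v) of Definition 2.4 can be spot-checked numerically before an operator is trusted. The Python sketch below is a hypothetical helper, not part of the original method: it samples random nested triples \(\Re _1\subseteq \Re _2\subseteq \Re _3\) (the setting of Theorem 3.1) and, purely as a stand-in, tests the Hong and Kim operator [25] in the form in which it is usually quoted in the literature, which is an assumption here because that formula is not reproduced in this section. A failed check disproves a condition; passing checks do not prove it.

```python
import random
from typing import Callable, List, Tuple

IFS = List[Tuple[float, float]]          # [(beta(x_j), eta(x_j)), ...]
Similarity = Callable[[IFS, IFS], float]


def hong_kim(a: IFS, b: IFS) -> float:
    # Stand-in operator: Hong and Kim [25] as usually quoted (assumption).
    total = sum(abs(b1 - b2) + abs(e1 - e2) for (b1, e1), (b2, e2) in zip(a, b))
    return 1.0 - total / (2 * len(a))


def random_chain(n: int, rng: random.Random) -> Tuple[IFS, IFS, IFS]:
    """Hypothetical sampler of nested IFSs R1 ⊆ R2 ⊆ R3 over n elements."""
    r1, r2, r3 = [], [], []
    for _ in range(n):
        b1, b2, b3 = sorted(rng.random() for _ in range(3))  # b1 <= b2 <= b3
        e3 = rng.random() * (1 - b3)                          # e3 <= 1 - b3
        e2 = e3 + rng.random() * ((1 - b2) - e3)              # e3 <= e2 <= 1 - b2
        e1 = e2 + rng.random() * ((1 - b1) - e2)              # e2 <= e1 <= 1 - b1
        r1.append((b1, e1))
        r2.append((b2, e2))
        r3.append((b3, e3))
    return r1, r2, r3


def spot_check(sim: Similarity, trials: int = 1000, n: int = 4,
               seed: int = 0, tol: float = 1e-12) -> List[str]:
    """Return the labels of the conditions that failed on at least one sample."""
    rng = random.Random(seed)
    failed = set()
    for _ in range(trials):
        r1, r2, r3 = random_chain(n, rng)
        s12, s13, s23 = sim(r1, r2), sim(r1, r3), sim(r2, r3)
        if not -tol <= s12 <= 1 + tol:
            failed.add("(i) boundedness")
        if abs(sim(r1, r1) - 1.0) > tol:
            failed.add("(ii) reflexivity")
        if abs(s12 - sim(r2, r1)) > tol:
            failed.add("(iv) symmetry")
        if s13 > s12 + s23 + tol:
            failed.add("(v) on nested triples")
    return sorted(failed)


if __name__ == "__main__":
    print(spot_check(hong_kim))          # []
    print(spot_check(lambda a, b: 2.0))  # boundedness and reflexivity fail
```

Any candidate operator with the same signature, including a new one, can be passed to `spot_check` in place of `hong_kim`.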

The following are some of the existing techniques for measuring similarity concerning IFSs:

2.2.1 Chen [6]

This similarity operator omits the hesitation information, which undermines its credibility. In addition, it yields results with low precision, as seen in Tables 2 and 3.

2.2.2 Hong and Kim [25]

This similarity operator modifies the operator in [6] by using absolute values of the parametric differences. Like [6], it excludes the hesitation information and so lacks credibility. It also yields results with low precision, as seen in Tables 2 and 3.
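The formulas of [6] and [25] are not reproduced in this section. The Python sketch below assumes the forms in which they are usually quoted in the literature: Chen's operator compares only the score \(\beta -\eta\) at each element, and Hong and Kim's operator compares \(\beta\) and \(\eta\) through absolute differences. The hypothetical one-element IFSs \(A=(0.3, 0.3)\) and \(B=(0, 0)\) show why ignoring hesitation matters: their hesitation margins are 0.4 and 1, yet Chen's operator returns 1, which also conflicts with condition (iii) of Definition 2.4.

```python
from typing import List, Tuple

IFS = List[Tuple[float, float]]  # [(beta(x_j), eta(x_j)), ...]


def chen(a: IFS, b: IFS) -> float:
    """Chen [6] as usually quoted (assumption): compares only beta - eta."""
    n = len(a)
    diff = sum(abs((b1 - e1) - (b2 - e2)) for (b1, e1), (b2, e2) in zip(a, b))
    return 1.0 - diff / (2 * n)


def hong_kim(a: IFS, b: IFS) -> float:
    """Hong and Kim [25] as usually quoted (assumption): |d beta| + |d eta|."""
    n = len(a)
    diff = sum(abs(b1 - b2) + abs(e1 - e2) for (b1, e1), (b2, e2) in zip(a, b))
    return 1.0 - diff / (2 * n)


if __name__ == "__main__":
    # Hypothetical one-element IFSs: A has psi = 0.4, B has psi = 1.
    a = [(0.3, 0.3)]
    b = [(0.0, 0.0)]
    print(chen(a, b))      # 1.0, although A != B
    print(hong_kim(a, b))  # about 0.7
```

Neither formula contains \(\psi\) explicitly, which is the exclusion criticized in Sects. 2.2.1 and 2.2.2.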

2.2.3 Mitchell [36]

The similarity operators in [36] improved the operators developed in [6, 25], but the limitation of the exclusion of the hesitation information remains. Similarly, the similarity values from these operators have low precision as seen in Tables 2 and 3.

2.2.4 Li et al. [31]

This similarity operator is similar to (6), and hence has the same limitation as the operator proposed in [36].

2.2.5 Hung and Yang [28]

These similarity operators are based on the extreme values of the parameters and the absolute values of parametric differences, unlike the aforementioned operators. However, their outcomes cannot be relied on because the hesitation information is omitted. Also, the results from these operators have low precision and accuracy, as seen in Tables 2 and 3.

2.2.6 Ye [46]

This similarity operator has three deficiencies, namely, (i) it does not include the hesitation information and so lacks credibility, (ii) it yields results with low precision, as seen in Tables 2 and 3, and (iii) it violates the metric condition of the similarity operator. To see (iii), consider the following example.

Example 2.5

Assume IFSs \(\Re _1\) and \(\Re _2\) in \(X=\lbrace x_1,x_2\rbrace\) are given by

By applying (11), we have

which infringes on the metric condition of the similarity operator.
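The cosine formula is not reproduced in this section. The Python sketch below assumes the form in which Ye's operator [46] is usually quoted, \(C(\Re _1,\Re _2)=\frac{1}{N}\sum _{j=1}^{N}\frac{\beta _{\Re _1}(x_j)\beta _{\Re _2}(x_j)+\eta _{\Re _1}(x_j)\eta _{\Re _2}(x_j)}{\sqrt{\beta _{\Re _1}^2(x_j)+\eta _{\Re _1}^2(x_j)}\sqrt{\beta _{\Re _2}^2(x_j)+\eta _{\Re _2}^2(x_j)}}\). It uses a hypothetical pair with proportional grades, (0.1, 0.2) and (0.3, 0.6), which is not the data of Example 2.5: the cosine is 1 although the two IFSs differ, contradicting condition (iii) of Definition 2.4. The operator is also undefined wherever \(\beta =\eta =0\).

```python
import math
from typing import List, Tuple

IFS = List[Tuple[float, float]]  # [(beta(x_j), eta(x_j)), ...]


def ye_cosine(a: IFS, b: IFS) -> float:
    """Ye's cosine operator [46] as usually quoted (assumption)."""
    total = 0.0
    for (b1, e1), (b2, e2) in zip(a, b):
        norm = math.hypot(b1, e1) * math.hypot(b2, e2)
        if norm == 0.0:
            # beta = eta = 0 at some x_j: the cosine is undefined there.
            raise ZeroDivisionError("cosine undefined when beta = eta = 0")
        total += (b1 * b2 + e1 * e2) / norm
    return total / len(a)


if __name__ == "__main__":
    # Hypothetical IFSs (not the data of Example 2.5) with proportional grades.
    a = [(0.1, 0.2)]
    b = [(0.3, 0.6)]
    print(ye_cosine(a, b))  # approximately 1, although A != B
```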

2.2.7 Shi and Ye [38]

This similarity operator improves the operator in [46] by including the hesitation information. Its deficiencies are that (i) it yields results with low precision, as seen in Tables 2 and 3, and (ii) it violates the metric condition of the similarity operator. To see (ii), we apply this operator to the IFSs of Example 2.5 and obtain

which violates the metric condition of the similarity operator.

2.2.8 Luo and Ren [34]

where

Similarly, this similarity operator does not include the hesitation information, and so it lacks credibility due to the exclusion, and yields results with low precision as seen in Tables 2 and 3.

2.2.9 Quynh et al. [37]

This similarity operator has three deficiencies, namely, (i) it does not include the hesitation information, (ii) it violates the metric condition of the similarity operator by yielding results outside the closed interval [0, 1] (see Table 3), and (iii) it yields results with low precision, as seen in Tables 2 and 3.

2.2.10 Ejegwa and Ahemen [15]

This similarity operator includes the hesitation information and satisfies the metric condition of the similarity operator, but it yields results with lower precision than the new similarity operator, as seen in Tables 2 and 3.

3 New Similarity Operator for IFSs

Each of the similarity methods for IFSs reviewed above has at least one of the following setbacks: (1) omission of the indeterminate parameter, (2) inability to produce valid similarity values, and (3) violation of the axioms of the similarity operator. To resolve these setbacks, we develop a new similarity operator under IFSs. The new similarity operator for IFSs F and G in \(S=\lbrace s_1, s_2, \ldots , s_N\rbrace\) is given by:

where

for \(\alpha =1, 2\).

We see that (16) includes the indeterminate parameter (hesitation information), produces valid similarity values (as seen in Tables 2 and 3), and satisfies the axioms of the similarity operator, unlike the similarity operators discussed above.

3.1 Some Properties of the New IFSO

Some of the properties of the new similarity operator under IFSs are presented in this subsection to validate the new method. We observe that

Substituting (17) into (16), we have

Theorem 3.1

Suppose \(\Re _1\subseteq \Re _2\subseteq \Re _3\) are IFSs in \(X=\lbrace x_1, x_2, \ldots , x_n\rbrace\). Then
(i) \(\tilde{\mathbb {S}}(\Re _1,\Re _3)\le \tilde{\mathbb {S}}(\Re _1,\Re _2)\),
(ii) \(\tilde{\mathbb {S}}(\Re _1,\Re _3)\le \tilde{\mathbb {S}}(\Re _2,\Re _3)\),
(iii) \(\tilde{\mathbb {S}}(\Re _1,\Re _3)\le \min \big \lbrace \tilde{\mathbb {S}}(\Re _1,\Re _2), \tilde{\mathbb {S}}(\Re _2,\Re _3)\big \rbrace\).

Proof

Since \(\Re _1\subseteq \Re _2\subseteq \Re _3\), we have \(\beta _{\Re _1}(x_j)\le \beta _{\Re _2}(x_j)\le \beta _{\Re _3}(x_j)\) and \(\eta _{\Re _1}(x_j)\ge \eta _{\Re _2}(x_j)\ge \eta _{\Re _3}(x_j)\) \(\forall x_j\in X\). Consequently,

which implies that

Hence, \(\tilde{\mathbb {S}}(\Re _1,\Re _3)\le \tilde{\mathbb {S}}(\Re _1,\Re _2)\) by (18), which proves (i). Similarly, (ii) follows. From (i) and (ii), (iii) follows. \(\square\)

Theorem 3.2

Suppose \(\Re _1\subseteq \Re _2\subseteq \Re _3\) are IFSs in \(X=\lbrace x_1, x_2, \ldots , x_n\rbrace\). Then
(i) \(0\le \tilde{\mathbb {S}}(\Re _1,\Re _2)\le 1\),
(ii) \(\tilde{\mathbb {S}}(\Re _1,\Re _1)=1\), \(\tilde{\mathbb {S}}(\Re _2,\Re _2)=1\),
(iii) \(\tilde{\mathbb {S}}(\Re _1,\Re _2)=1\) \(\Leftrightarrow\) \(\Re _1=\Re _2\),
(iv) \(\tilde{\mathbb {S}}(\Re _1,\Re _2)=\tilde{\mathbb {S}}(\overline{\Re }_1,\overline{\Re }_2)\),
(v) \(\tilde{\mathbb {S}}(\Re _1,\Re _2)=\tilde{\mathbb {S}}(\Re _2,\Re _1)\),
(vi) \(\tilde{\mathbb {S}}(\Re _1,\Re _3)\le \tilde{\mathbb {S}}(\Re _1,\Re _2)+\tilde{\mathbb {S}}(\Re _2,\Re _3)\),
(vii) \(\tilde{\mathbb {S}}(\Re _1\cap \Re _2, \Re _1\cup \Re _2)= \tilde{\mathbb {S}}(\Re _1,\Re _2)\).

Proof

To show (i), we establish that (a) \(\tilde{\mathbb {S}}(\Re _1,\Re _2)\ge 0\), and (b) \(\tilde{\mathbb {S}}(\Re _1,\Re _2)\le 1\). It is easy to see (a) because

To prove (b), we assume that

Substituting (19) into (18), we have

Then

Hence, \(\tilde{\mathbb {S}}(\Re _1,\Re _2)-1\le 0 \Rightarrow \tilde{\mathbb {S}}(\Re _1,\Re _2)\le 1\), which proves (i). The proofs of (ii)–(iv) are straightforward.

We prove (v) as follows:

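which shows that \(\tilde{\mathbb {S}}(\Re _1,\Re _2)=\tilde{\mathbb {S}}(\Re _2,\Re _1)\). Property (vi) follows from Theorem 3.1(i) and property (i), since \(\tilde{\mathbb {S}}(\Re _1,\Re _3)\le \tilde{\mathbb {S}}(\Re _1,\Re _2)\le \tilde{\mathbb {S}}(\Re _1,\Re _2)+\tilde{\mathbb {S}}(\Re _2,\Re _3)\).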

By incorporating the intersection and union of IFSs based on the new similarity operator, we have

which proves (vii). \(\square\)

4 Application Examples

The applications of the new similarity operator in real-world problems based on recognition principle and MCDM technique are discussed in this section.

4.1 Recognition Principle

The recognition principle involves classifying an unidentified pattern \(\Re\) against known patterns \(\Re _j\) (\(j=1,2,\ldots , N\)) with the aid of an information measure, in order to decide which class \(\Re\) belongs to. Using the new similarity operator, we assign \(\Re\) to the known pattern \(\Re _{k}\) given by

\(k=\arg \max _{1\le j\le N} \tilde{\mathbb {S}}(\Re _j,\Re ). \quad (20)\)
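The recognition principle assigns \(\Re\) to the known pattern with the greatest similarity, i.e. an arg-max. Because the formula of the new operator is not reproduced in this section, the Python sketch below takes the similarity function as a parameter and, purely for illustration, plugs in the Hong and Kim operator [25] in its usually quoted form (an assumption). The function name `recognize` and the patterns are hypothetical; they are not the data of Table 1 or [35].

```python
from typing import Callable, Dict, List, Tuple

IFS = List[Tuple[float, float]]          # [(beta(x_j), eta(x_j)), ...]
Similarity = Callable[[IFS, IFS], float]


def hong_kim(a: IFS, b: IFS) -> float:
    # Stand-in similarity (Hong and Kim [25] as usually quoted); NOT the new operator.
    total = sum(abs(b1 - b2) + abs(e1 - e2) for (b1, e1), (b2, e2) in zip(a, b))
    return 1.0 - total / (2 * len(a))


def recognize(unknown: IFS, patterns: Dict[str, IFS],
              sim: Similarity) -> Tuple[str, Dict[str, float]]:
    """Assign `unknown` to the known pattern with the greatest similarity."""
    scores = {name: sim(p, unknown) for name, p in patterns.items()}
    best = max(scores, key=scores.get)  # ties go to the first pattern listed
    return best, scores


if __name__ == "__main__":
    # Hypothetical patterns over two features (not the data of Table 1).
    patterns = {
        "P1": [(0.1, 0.8), (0.2, 0.7)],
        "P2": [(0.5, 0.4), (0.5, 0.3)],
        "P3": [(0.8, 0.1), (0.9, 0.0)],
    }
    unknown = [(0.7, 0.2), (0.8, 0.1)]
    best, scores = recognize(unknown, patterns, hong_kim)
    print(best)  # P3
```

Keeping the similarity as a parameter makes it straightforward to rerun the same classification with each operator, as in the comparisons of Sect. 4.1.3.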

4.1.1 Application 1

Emergency control is the management of responsibilities and resources for dealing with a disaster in order to avert or lessen its harmful effects. Because the occurrence of disasters is uncertain, it is sensible to manage them with approaches that handle uncertainty. We deploy an IFSO for disaster control because IFSs can handle hesitation.

Suppose emergency rescue workers have documented three typical emergency rescue situations, represented as IFSs \(\tilde{D}_1\), \(\tilde{D}_2\), and \(\tilde{D}_3\) and described by rescue difficulty (\(s_1\)), scale of people affected (\(s_2\)), traffic conditions (\(s_3\)), and emergency supplies (\(s_4\)). Assume a new emergency situation, denoted by the IFS \(\tilde{E}\), has occurred and is described by the same four attributes. To control the present emergency, the rescue workers match it with the past emergencies, classify it, and adopt the corrective measures of the matching past emergency. The disaster situation data used in this application are taken from [35].

First, the similarity indexes of \(\tilde{E}\) and \(\tilde{D}_j\) (\(j=1,2,3\)) are computed using (18) as follows:

and

By applying (20), the current emergency \(\tilde{E}\) is found to be most associated with emergency \(\tilde{D}_3\). Hence, the rescue team should apply the control measures used for emergency \(\tilde{D}_3\) to the current disaster \(\tilde{E}\). This approach to disaster control is significant in practice because an emergency brings apprehension and confusion that must be brought under control as quickly as possible. With this approach, disasters can be adequately controlled provided that intuitionistic fuzzy datasets for past emergencies are available.

4.1.2 Application 2

The process of pattern recognition or classification is often enmeshed with uncertainties, so IFSO-based pattern recognition is valuable for obtaining dependable outcomes. Suppose there are four classes of building material represented by IFSs \(\tilde{P}_1\), \(\tilde{P}_2\), \(\tilde{P}_3\), and \(\tilde{P}_4\) and described by the feature set \(\ell =\lbrace \ell _1, \ell _2,\ldots , \ell _{12}\rbrace\). Assume there is an unknown building material represented by an IFS \(\tilde{C}\) defined on the same features. The recognition principle associates \(\tilde{C}\) with one of the known patterns using the new similarity operator. The patterns are given in Table 1 [44].

The similarity indexes of \(\tilde{C}\) and \(\tilde{P}_j\) (\(j=1,2,3,4\)) are computed using (18) as follows:

and

Using (20), we see that the unknown \(\tilde{C}\) can be classified with pattern \(\tilde{P}_4\), since their similarity index is the greatest. The unknown pattern is thus categorized unambiguously by the greatest similarity value. Because vagueness is inevitable in pattern recognition, this IFSO could be of significant help in applications such as eye-tracking algorithms using a multi-model Kalman filter and human action recognition in movies.

4.1.3 Comparison of the IFSOs Based on Recognition Principle

Next, we justify the validity of the newly developed similarity operator by comparison with the other similarity operators [6, 15, 25, 28, 31, 34, 36,37,38, 46], and present the results in Tables 2 and 3.

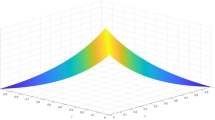

Plot of Table 2

From Table 2 and Fig. 1, we see that all the IFSOs give the same classification. However, the newly developed similarity operator yields the greatest similarity values compared with the methods in [6, 15, 25, 28, 31, 34, 36,37,38, 46]. The developed IFSO therefore gives the most accurate results, which makes it reliable and appropriate for MCDM problems.

Fig. 2 Plot of Table 3

From Table 3 and Fig. 2, we see that the newly developed IFSO gives the best similarity indexes and is highly reliable. It is observed that the IFSO in [37] violates a condition of the similarity measure by yielding values outside [0, 1]. Nonetheless, all the similarity operators in [6, 15, 25, 28, 31, 34, 36, 38, 46] and the new similarity operator give the same classification.

4.2 Multiple Criteria Decision Making

The multiplicity of objectives in most human decision-making cases necessitates the use of multiple criteria decision-making (MCDM) approaches. MCDM is the branch of operations research that explicitly assesses numerous conflicting criteria in decision making. Fuzzy logic offers an effective way to carry out MCDM under uncertainty.

4.2.1 Application 3

Suppose a car buyer wants to buy an effective car for private use, and there are five cars, represented by IFSs \(\tilde{C}_j\) \((j=1,2,3,4,5)\), to select from. The five cars are evaluated on the following factors (E): fuel consumption, degree of friction, price, comfort, design, and security.

4.2.2 Algorithm for the MCDM

Step 1. Formulate the intuitionistic fuzzy decision matrix (IFDM) \(\tilde{C}_k=\lbrace E_i (\tilde{C}_j)\rbrace _{(m\times n)}\) for \(i=1,\ldots , m\) and \(j=1,\ldots , n\). The information on the cars is represented by the IFDM based on the selection factors in Table 4.

Among the criteria in Table 4, price is the cost criterion (a lower value is preferable), and the other criteria are benefit criteria.

Step 2. Find the normalized IFDM \(\tilde{D}=\langle \beta _{\tilde{C}^*_{j}}(E_i), \eta _{\tilde{C}^*_{j}}(E_i)\rangle _{m\times n}\) for \(\tilde{C}_k\), where \(\langle \beta _{\tilde{C}^*_{j}}(E_i), \eta _{\tilde{C}^*_{j}}(E_i)\rangle\) are intuitionistic fuzzy values, and \(\tilde{D}\) is defined by

Table 5 contains the information of the normalized IFDM.
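The normalization formula of Step 2 is not reproduced in this section. A common convention in intuitionistic fuzzy MCDM, assumed here rather than taken from the paper, leaves benefit criteria unchanged and swaps the membership and non-membership grades of cost criteria (here, price). A minimal Python sketch with a hypothetical function name, storing the matrix with one row per alternative:

```python
from typing import List, Sequence, Tuple

IFV = Tuple[float, float]   # (beta, eta)
Matrix = List[List[IFV]]    # matrix[j][i]: alternative j under criterion i


def normalize(matrix: Matrix, is_cost: Sequence[bool]) -> Matrix:
    """Assumed convention: keep benefit criteria, swap (beta, eta) for cost criteria."""
    return [
        [(e, b) if is_cost[i] else (b, e) for i, (b, e) in enumerate(row)]
        for row in matrix
    ]


if __name__ == "__main__":
    # Hypothetical row: criterion 0 is a benefit, criterion 1 is a cost (e.g. price).
    print(normalize([[(0.6, 0.3), (0.7, 0.2)]], [False, True]))
    # [[(0.6, 0.3), (0.2, 0.7)]]
```

After this step, a larger membership grade is preferable under every criterion, which is what the ideal solutions of Step 3 rely on.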

Step 3. Compute the positive ideal solution (PIS) and the negative ideal solution (NIS), given by

where

The information for the NIS and PIS is contained in Table 6.
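The PIS and NIS formulas are not reproduced in this section. The Python sketch below assumes the usual construction on a normalized matrix: for each criterion, the PIS takes the largest membership and the smallest non-membership over all alternatives, and the NIS takes the reverse. Both remain valid intuitionistic fuzzy values: the largest \(\beta\) comes from some alternative k with \(\beta _k+\eta _k\le 1\), and the smallest \(\eta\) is at most \(\eta _k\); the NIS case is symmetric. The function name and data are hypothetical.

```python
from typing import List, Tuple

IFV = Tuple[float, float]   # (beta, eta)
Matrix = List[List[IFV]]    # normalized: matrix[j][i], alternative j, criterion i


def ideal_solutions(matrix: Matrix) -> Tuple[List[IFV], List[IFV]]:
    """Assumed PIS/NIS: per criterion, best/worst grades over all alternatives."""
    columns = list(zip(*matrix))  # one tuple of IFVs per criterion
    pis = [(max(b for b, _ in col), min(e for _, e in col)) for col in columns]
    nis = [(min(b for b, _ in col), max(e for _, e in col)) for col in columns]
    return pis, nis


if __name__ == "__main__":
    m = [[(0.6, 0.3), (0.2, 0.7)],   # hypothetical alternative A1
         [(0.4, 0.5), (0.5, 0.4)]]   # hypothetical alternative A2
    pis, nis = ideal_solutions(m)
    print(pis)  # [(0.6, 0.3), (0.5, 0.4)]
    print(nis)  # [(0.4, 0.5), (0.2, 0.7)]
```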

Step 4. Calculate the similarity indexes \(\tilde{\mathbb {S}}(\tilde{C}_{j}, \tilde{C}^-)\) and \(\tilde{\mathbb {S}}(\tilde{C}_{j}, \tilde{C}^+)\) using the new method for \(\alpha =1,2\). Applying (18) to Tables 4 and 6, we obtain Tables 7 and 8.

Step 5. Calculate the closeness coefficient of each car \(\tilde{C}_j\) using

By applying (25), we get Table 9.

Step 6. The car with the greatest closeness coefficient is the most suitable for the buyer. From Table 9, car \(\tilde{C}_2\) is the appropriate car to buy because of its economical fuel consumption, commensurate price, comfort, good design, and security.
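Formula (25) is not reproduced in this section. The Python sketch below assumes one plausible closeness coefficient for similarity-based ranking, \(CC_j=\tilde{\mathbb {S}}(\tilde{C}_j,\tilde{C}^+)/\big (\tilde{\mathbb {S}}(\tilde{C}_j,\tilde{C}^+)+\tilde{\mathbb {S}}(\tilde{C}_j,\tilde{C}^-)\big )\), so that a larger value means closer to the PIS relative to the NIS. It uses the Hong and Kim operator [25], in its usually quoted form, as a stand-in for the new operator. The function name `rank_by_closeness` and the data are hypothetical and are not those of Tables 4 to 9.

```python
from typing import Callable, Dict, List, Tuple

IFS = List[Tuple[float, float]]          # [(beta(x_i), eta(x_i)), ...] per criterion
Similarity = Callable[[IFS, IFS], float]


def hong_kim(a: IFS, b: IFS) -> float:
    # Stand-in similarity (Hong and Kim [25] as usually quoted); NOT the new operator.
    total = sum(abs(b1 - b2) + abs(e1 - e2) for (b1, e1), (b2, e2) in zip(a, b))
    return 1.0 - total / (2 * len(a))


def rank_by_closeness(alts: Dict[str, IFS], pis: IFS, nis: IFS,
                      sim: Similarity) -> List[Tuple[str, float]]:
    """Assumed CC_j = S(C_j, PIS) / (S(C_j, PIS) + S(C_j, NIS)), sorted descending."""
    cc = {}
    for name, c in alts.items():
        s_pos, s_neg = sim(c, pis), sim(c, nis)
        if s_pos + s_neg == 0.0:
            raise ZeroDivisionError(f"closeness undefined for {name}")
        cc[name] = s_pos / (s_pos + s_neg)
    return sorted(cc.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    alts = {"A1": [(0.6, 0.3), (0.2, 0.7)],   # hypothetical normalized data
            "A2": [(0.4, 0.5), (0.5, 0.4)]}
    pis = [(0.6, 0.3), (0.5, 0.4)]
    nis = [(0.4, 0.5), (0.2, 0.7)]
    print(rank_by_closeness(alts, pis, nis, hong_kim)[0][0])  # A2
```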

For the sake of comparison, we deploy the existing similarity operators [6, 15, 25, 28, 31, 34, 36,37,38, 46] and our new similarity operator on the data in Tables 4 and 6. By following Steps 4–6, we get the orderings in Table 10.

Table 10 shows that the MCDM technique yields the same choice with all the discussed similarity operators except those in [6, 25]. With the similarity operators in [6, 25], the MCDM approach gives the ordering \(\tilde{C}_4\succ \tilde{C}_3\succ \tilde{C}_2\succ \tilde{C}_5\succ \tilde{C}_1\). With the similarity operator in [46] and \(\mathbb {S}_6\) in [28], it gives \(\tilde{C}_2\succ \tilde{C}_3\succ \tilde{C}_5\succ \tilde{C}_1\succ \tilde{C}_4\). With the similarity operators in [15, 38], it gives \(\tilde{C}_2\succ \tilde{C}_4\succ \tilde{C}_5\succ \tilde{C}_3\succ \tilde{C}_1\). Finally, with the similarity operators in [28, 31, 34, 36, 37] and the new similarity operator, it gives \(\tilde{C}_2\succ \tilde{C}_4\succ \tilde{C}_3\succ \tilde{C}_5\succ \tilde{C}_1\).

The new similarity method has some overriding advantages:
(i) it produces more precise and reliable similarity values than the other similarity methods,
(ii) it incorporates all the parameters of IFSs to enhance performance rating and avoid the exclusion error witnessed in [6, 25, 28, 31, 34, 36, 37, 46], and
(iii) it fully satisfies the axioms of a similarity operator, unlike the method in [37].

5 Conclusion

The place of the similarity operator in real-world decision-making problems under indeterminate environments cannot be overemphasized. Accordingly, similarity operators have been studied by many authors using intuitionistic fuzzy information. In this paper, a generalized similarity operator is developed which produces more precise and reliable similarity values than the other similarity methods. Besides fully satisfying the axioms of a similarity operator, unlike the method in [37], it incorporates all the descriptive features of IFSs to enhance performance rating, unlike the methods in [6, 25, 28, 31, 34, 36, 37, 46]. Some theoretical results for the generalized similarity operator were discussed. In addition, the generalized similarity operator was used to solve real-world decision-making problems based on the recognition principle and the MCDM approach. Comparative studies were carried out to justify the development of the generalized similarity operator for IFSs. For future work, the generalized similarity operator could be extended, with marginal modifications, to other uncertain environments besides IFSs.

Data Availability

This work has no associated data.

References

Atanassov, K.T.: Intuitionistic fuzzy sets. Fuzzy Set Syst. 20, 87–96 (1986)

Atanassov, K.T.: Intuitionistic Fuzzy Sets: Theory and Applications. Physica-Verlag, Heidelberg (1999)

Boran, F.E.: An integrated intuitionistic fuzzy multi criteria decision making method for facility location selection. Math. Comput. Appl. 16(2), 487–496 (2011)

Boran, F.E., Akay, D.: A biparametric similarity measure on intuitionistic fuzzy sets with applications to pattern recognition. Inf. Sci. 255(10), 45–57 (2014)

Burillo, P., Bustince, H.: Entropy on intuitionistic fuzzy sets and on interval-valued fuzzy sets. Fuzzy Set Syst. 78, 305–315 (1996)

Chen, S.M.: Similarity measures between vague sets and between elements. IEEE Trans. Syst. Man Cybern. 27(1), 153–158 (1997)

Chen, T.Y.: A note on distances between intuitionistic fuzzy sets and/or interval-valued fuzzy sets based on the Hausdorff metric. Fuzzy Set Syst. 158, 2523–2525 (2007)

Chen, S.M., Chang, C.H.: A novel similarity measure between Atanassov’s intuitionistic fuzzy sets based on transformation techniques with applications to pattern recognition. Inf. Sci. 291, 96–114 (2015)

Chen, S.M., Randyanto, Y.: A novel similarity measure between intuitionistic fuzzy sets and its applications. Int. J. Pattern Recognit. Artif. Intell. 27(7), 1350021 (2013)

Chen, S.M., Randyanto, Y., Cheng, S.H.: Fuzzy queries processing based on intuitionistic fuzzy social relational networks. Inf. Sci. 327, 110–124 (2016)

Davvaz, B., Sadrabadi, E.H.: An application of intuitionistic fuzzy sets in medicine. Int. J. Biomath. 9(3), 1650037 (2016)

De, S.K., Biswas, R., Roy, A.R.: An application of intuitionistic fuzzy sets in medical diagnosis. Fuzzy Set Syst. 117(2), 209–213 (2001)

Ejegwa, P.A.: Novel correlation coefficient for intuitionistic fuzzy sets and its application to multi-criteria decision-making problems. Int. J. Fuzzy Syst. Appl. 10(2), 39–58 (2021)

Ejegwa, P.A., Agbetayo, J.M.: Similarity-distance decision-making technique and its applications via intuitionistic fuzzy pairs. J. Comput. Cogn. Eng. 2(1), 68–74 (2022)

Ejegwa, P.A., Ahemen, S.: Enhanced intuitionistic fuzzy similarity operators with applications in emergency management and pattern recognition. Granul. Comput. 8, 361–372 (2022)

Ejegwa, P.A., Ajogwu, C.F., Sarkar, A.: A hybridized correlation coefficient technique and its application in classification process under intuitionistic fuzzy setting. Iran. J. Fuzzy Syst. (2023). https://doi.org/10.22111/IJFS.2023.7549

Ejegwa, P.A., Onyeke, I.C.: Intuitionistic fuzzy statistical correlation algorithm with applications to multi-criteria based decision-making processes. Int. J. Intell. Syst. 36(3), 1386–1407 (2021)

Ejegwa, P.A., Onyeke, I.C.: A novel intuitionistic fuzzy correlation algorithm and its applications in pattern recognition and student admission process. Int. J. Fuzzy Syst. Appl. (2022). https://doi.org/10.4018/IJFSA.285984

Ejegwa, P.A., Onyeke, I.C., Kausar, N., Kattel, P.: A new partial correlation coefficient technique based on intuitionistic fuzzy information and its pattern recognition application. Int. J. Intell. Syst. (2023). https://doi.org/10.1155/2023/5540085

Ejegwa, P.A., Onyeke, I.C., Terhemen, B.T., Onoja, M.P., Ogiji, A., Opeh, C.U.: Modified Szmidt and Kacprzyk’s intuitionistic fuzzy distances and their applications in decision-making. J. Niger. Soc. Phys. Sci. 4, 175–182 (2022)

Garg, H., Kumar, K.: A novel correlation coefficient of intuitionistic fuzzy sets based on the connection number of set pair analysis and its application. Sci. Iran. 25(4), 2373–2388 (2018)

Garg, H., Kumar, K.: An advanced study on the similarity measures of intuitionistic fuzzy sets based on the set pair analysis theory and their application in decision making. Soft Comput. 22, 4959–4970 (2018)

Grzegorzewski, P.: Distances between intuitionistic fuzzy sets and/or interval-valued fuzzy sets based on the Hausdorff metric. Fuzzy Set Syst. 148, 319–328 (2004)

Hatzimichailidis, A.G., Papakostas, A.G., Kaburlasos, V.G.: A novel distance measure of intuitionistic fuzzy sets and its application to pattern recognition problems. Int. J. Intell. Syst. 27, 396–409 (2012)

Hong, D.H., Kim, C.: A note on similarity measures between vague sets and between elements. Inf. Sci. 115(1), 83–96 (1999)

Hung, W.L., Yang, M.S.: Similarity measures of intuitionistic fuzzy sets based on Hausdorff distance. Pattern Recognit. Lett. 25, 1603–1611 (2004)

Hung, W.L., Yang, M.S.: Similarity measures of intuitionistic fuzzy sets based on Lp metric. Int. J. Approx. Reason. 46, 120–136 (2007)

Hung, W.L., Yang, M.S.: Similarity measures between intuitionistic fuzzy sets. Int. J. Intell. Syst. 23, 364–384 (2008)

Hwang, C.M., Yang, M.S.: New construction for similarity measures between intuitionistic fuzzy sets based on lower, upper and middle fuzzy sets. Int. J. Fuzzy Syst. 15(3), 371–378 (2013)

Iqbal, M.N., Rizwan, U.: Some applications of intuitionistic fuzzy sets using new similarity measure. J. Ambient Intell. Hum. Comput. (2019). https://doi.org/10.1007/s12652-019-01516-7

Li, Y., Olson, D.L., Qin, Z.: Similarity measures between intuitionistic fuzzy (vague) sets: a comparative analysis. Pattern Recognit. Lett. 28(2), 278–285 (2007)

Liang, Z., Shi, P.: Similarity measures on intuitionistic fuzzy sets. Pattern Recognit. Lett. 24, 2687–2693 (2003)

Liu, P., Chen, S.M.: Group decision making based on Heronian aggregation operators of intuitionistic fuzzy numbers. IEEE Trans. Cybern. 47(9), 2514–2530 (2017)

Luo, L., Ren, H.: A new similarity measure of intuitionistic fuzzy set and application in MADM problem. AMSE J. 2016 Ser. Adv. A 53(1), 204–223 (2016)

Mo, X., Zhou, X., Song, Y.: Emergency scenario similarity measures in emergency rescue planning based on intuitionistic fuzzy sets. Syst. Eng. Procedia 5, 168–172 (2012)

Mitchell, H.B.: On the Dengfeng–Chuntian similarity measure and its application to pattern recognition. Pattern Recognit. Lett. 24(16), 3101–3104 (2003)

Quynh, T.D., Thao, N.X., Thuan, N.Q., Dinh, N.V.: A new similarity measure of IFSs and its applications. In: Proceedings of the 12th International Conference on Knowledge and Systems Engineering, Vietnam (2020)

Shi, L.L., Ye, J.: Study on fault diagnosis of turbine using an improved cosine similarity measure for vague sets. J. Appl. Sci. 13(10), 1781–1786 (2013)

Song, Y., Wang, X., Lei, L., Xue, A.: A new similarity measure between intuitionistic fuzzy sets and its application to pattern recognition. Abstract Appl. Anal. (2014). https://doi.org/10.1155/2014/384241

Szmidt, E., Kacprzyk, J.: Distances between intuitionistic fuzzy sets. Fuzzy Set Syst. 114, 505–518 (2000)

Szmidt, E., Kacprzyk, J.: Intuitionistic fuzzy sets in some medical applications. Notes IFS 7(4), 58–64 (2001)

Szmidt, E., Kacprzyk, J., Bujnowski, P.: Attribute selection via Hellwig’s algorithm for Atanassov’s intuitionistic fuzzy sets. In: Koczy, L.T., et al. (eds.) Computational Intelligence and Mathematics for Tackling Complex Problems, Studies in Computational Intelligence 819, pp. 81–90. Springer Nature Switzerland AG (2020)

Thao, N.X.: A new correlation coefficient of the intuitionistic fuzzy sets and its application. J. Intell. Fuzzy Syst. 35(2), 1959–1968 (2018)

Wang, W., Xin, X.: Distance measure between intuitionistic fuzzy sets. Pattern Recognit. Lett. 26, 2063–2069 (2005)

Yang, M.S., Hussain, Z., Ali, M.: Belief and plausibility measures on intuitionistic fuzzy sets with construction of belief-plausibility TOPSIS. Complexity (2020). https://doi.org/10.1155/2020/7849686

Ye, J.: Cosine similarity measures for intuitionistic fuzzy sets and their applications. Math. Comput. Model. 53, 91–97 (2011)

Ye, J.: Similarity measures of intuitionistic fuzzy sets based on cosine function for the decision making of mechanical design schemes. J. Intell. Fuzzy Syst. 30, 151–158 (2016)

Yen, P.C.P., Fan, K.C., Chao, H.C.J.: A new method for similarity measures for pattern recognition. Appl. Math. Model. 37, 5335–5342 (2013)

Xu, Z.S.: Some similarity measures of intuitionistic fuzzy sets and their applications to multiple attribute decision making. Fuzzy Optim. Decis. Mak. 6, 109–121 (2007)

Xu, Z.S.: An overview of distance and similarity measures of intuitionistic fuzzy sets. Int. J. Uncertain. Fuzzy Knowl. Based Syst. 16(4), 529–555 (2008)

Xu, Z.S., Chen, J., Wu, J.: Clustering algorithm for intuitionistic fuzzy sets. Inf. Sci. 178, 3775–3790 (2008)

Xu, Z.S., Yager, R.R.: Intuitionistic and interval-valued intuitionistic fuzzy preference relations and their measures of similarity for the evaluation of agreement within a group. Fuzzy Optim. Decis. Mak. 8, 123–139 (2009)

Zadeh, L.A.: Fuzzy sets. Inf. Control 8, 338–353 (1965)

Acknowledgements

We would like to express our profound gratitude to the editor and all the reviewers for their insights, suggestions, and contributions.

Funding

The work received no external funding.

Author information

Contributions

YZ supervised and provided funding for the work. PAE designed the work and wrote the manuscript. ESJ did the computational analysis.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest regarding the publication of this work.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhou, Y., Ejegwa, P.A. & Johnny, S.E. Generalized Similarity Operator for Intuitionistic Fuzzy Sets and its Applications Based on Recognition Principle and Multiple Criteria Decision Making Technique. Int J Comput Intell Syst 16, 85 (2023). https://doi.org/10.1007/s44196-023-00245-2

DOI: https://doi.org/10.1007/s44196-023-00245-2