Abstract

In recent years, computer vision and convolutional neural networks have been gradually applied in embedded devices. However, due to the limitation of hardware, the inference speed of many high-precision algorithms is very slow, which requires high performance hardware. In this study, a lightweight network called LightCSPNet is proposed for image classification and object detection. LightCSPNet is built by stacking four identical modules, each of which has adopted an improved CSP (Cross-Stage-Partial-connections) structure for channel number expansion. The special inverse residual structure is constructed for feature extraction, and the transformer modules are added in the proposed model. In this study, the typical defect detection in industry is adopted as testing platform, and a defect dataset consisting of 12 categories including cloth, road, bridge, steel and etc., was constructed for image classification. Compared with MobileNetV3, our model has almost the same accuracy, but the number of parameters and GFLOPs (Giga Floating-point Operations Per Second) have been, respectively, reduced to 88% and 36% for ImageNet100 and the dataset we built. In addition, compared with MobileNetV2 and MobileNetV3 for VOC2012 dataset in object detection, LightCSPNet obtained 0.4% and 0.6% mAP (Mean Average Precision) improvement respectively, and the inference speed on CPU was twice as fast.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

At present, the research on computer vision has gradually shifted from the laboratory to the embedded devices. Although many algorithms can achieve high accuracy, they usually have a large number of parameters and computational costs and need high-performance hardware. Many chips have special convolutional acceleration modes, but they are relatively expensive. Therefore, the use of a lightweight network is an effective way to balance performance and speed.

The advantages of the lightweight convolutional neural network (CNN) model are as follows:

-

1.

The lightweight model has fewer parameters and less data exchange during training. Given this perspective, it can achieve better results in a shorter training time, and can significantly reduce training time.

-

2.

The model with fewer parameters and less computation is more suitable for embedded devices. The embedded devices have relatively smaller size, so the heat dissipation and hardware performance are greatly different from the counterpart of laboratory machines. Therefore, compared to the model with high accuracy and slow operation, the model with general accuracy and fast operation is more suitable for the embedded devices.

-

3.

At present, transformer model of architecture achieves better accuracy than CNN based on huge training time and parameters, while the convolutional hardware optimization method of CNN is mature at present, so the speed of CNN can be improved more easily compared with the transformer structure.

Lightweight models usually consist of knowledge distillation [1], pruning [2] and quantification [3], or modifying network structures. There are many similar researches on network structure optimization. AlexNet used group convolution to reduce parameters and computation [4]. AlexNet's team adopted group convolution by two GPUs to reduce training time for the low performance of the device at the time. Group convolution can significantly reduce the number of parameters and training time, and increase the diagonal correlation of the convolution kernel, making the network difficult to overfit [5]. Iandola et al. constructed SqueezeNet network, which used 1 × 1 convolution kernel to reduce the number of channels and added multi-scale convolution parallel computing to preserve more information [6]. SqueezeNet has only one fiftieth of AlexNet's parameters but similar performance. MobileNet series networks adopt the deepwise separable convolution, which greatly reduces the number of parameters and enables channel and region separation to better integrate the features of different channels through separate operation for each channel [7]. ShuffleNet proposed four criteria for network design, and through the shuffle of group convolution, information can circulate inside of groups to avoid information isolation [8, 9]. However, the number of parameters and computational costs of the above networks are still too much, so a novel model is proposed based on the characteristics of the previous lightweight models in this study, of which number of parameters and computational costs are, respectively 12% and 64% of the counterparts of MobileNetV3. The traditional dataset and the defect dataset built in this study are both tested by the novel algorithm proposed in this study, which further verifies the effect under actual conditions.

For image classification tasks, structure optimization can significantly improve the network speed or performance, while the target detection task needs to consider the speed of different parts. For example, a common object detection model is selected to calculate inference time for different components, and the results are shown in Fig. 1.

In Fig. 1, (a) represents the required inference time for each part of model, and (b) represents the proportion of computation (Multiply Add) for each part. Backbone, Neck and Head represent the corresponding structures of model, NMS represents non-maximum suppression, and other represents hardware such as model loaded into GPU and data memory throughput.

From Fig. 1, it can be concluded that a large amount of inference time and computational costs in the object detection model are occupied by Backbone. Therefore, it is a good method to optimize backbone. The neck part usually adopts the Feature Pyramid structure [10]. The optimization for FPN structure needs to consider the impact of each branch, and the optimization for Backbone is more direct.

2 Materials and Methods

The purpose of the lightweight network is to minimize the number of parameters and size of the network without reducing accuracy too much. Although the number of parameters in MobileNetV3 is small enough, there are still about 4 million training parameters. A large number of parameters occupy a large amount of video memory in the prediction stage. For embedded devices, the less video memory an algorithm consumes, the more images it can process in the same time. Such algorithms have a higher upper limit of optimization. Many improvements have been made to reduce the size of network. We mainly use deepwise conv (deepwise separable convolution), as shown in Fig. 2. Deepwise conv is used by many lightweight networks because it can significantly reduce the number of parameters and increase speed [7, 11].

Compared with ordinary convolution, depthwise separable convolution does not add in the channel direction after convolution but directly outputs the elements in the channel direction.

2.1 The Model Proposed in This Study

Our network is similar to the CSP structure, which is called LightCSPNet because it expands the number of channels through convolution [12]. Four CSPlayer modules are connected to be used in our network. CSPlayer module includes two structures: Down layer and Extraction layer for expansion of channel number and feature extraction respectively. Our lightweight CSP structure is used by down-layer as shown in Fig. 3. This structure is stacked with feature layers of deepwise conv, similar to GhostNet but used to expand the number of channels [13]. Depthwise represents depthwise separable convolution, Conv represents convolution normalization and activation functions, and Concat represents concatenation in channel direction.

If ordinary convolution is used on the residual edge, many redundant information will be generated as shown in Fig. 4. According to reference [13], these redundant features can be reduced by using depthwise separable convolution, and overfitting can be avoided by reducing the number of parameters and computational costs. The feature map is processed by Down layer, of which the width and height then become half, and the number of channels increases twice. Down sampling can improve the speed of network operation.

Redundant features are represented by boxes with the same color, and one feature can be derived from another by a simple transformation. It provides little information but takes up memory.

The extraction layer is shown in Fig. 5. The inversed residual structure is used in the extraction layer, which is present in many networks, mainly because it can expand the number of channels so that less information is reduced through the activation function [14, 15].

The channel magnification of center part of the inverse residual is a hyperparameter, and we choose 4 × magnification here. Therefore, the number of channels in the middle layer is 1024. Increasing the number of channels by convolution can decompose the features of the middle layer. Afterwards, reducing the number of channels compresses the features of the middle layer, thus preserving more distinct parts.

In Fig. 5, Left: inversed residuals, Right: Resmax structure. 256,1 × 1Conv,1024 indicates that the convolution is used to change the number of channels from 256 to 1024, and 1 × 1 Conv represents that the size of convolution kernel is 1.

On the residual edge, the Resmax structure is used, as shown in Fig. 6, which consists of two branches. One branch gets the most obvious feature of the current feature layer, and the other branch obtains the weight factor of each channel. Max represents that obtaining the maximum value of all values in the channel direction, and adaptive max pooling indicates that obtaining the maximum value of all pixels in the width and height direction. Linear represents the full connection. Resmax controls the feature map ratio of each channel by adaptive parameters. This structure can screen out more obvious network features and better distinguish foreground and background.

Compared with direct linking, Resmax structure adds the capability of feature filtering and achieved better results in experimental test. Three different CSPlayer approaches are tested, which are shown in Fig. 7, (a): Resmax; (b): Direct addition; (c): Direct connection of Resmax module.

The test results are shown in Table 1, (a) structure using Resmax proposed in this study has a better effect than the counterpart without using Resmax. Although Resmax structure can extract features that are significantly different from the background, it also removes objects that are similar to the background. If the direct connection way like (c) structure is adopted, a large number of similar features will be removed, which can result in performance degradation. However, if the direct connection way is used as residual edge, the above disadvantage can be effectively overcome, because of the trunk containing a wealth of similar features [16, 17]. That's the reason why structure (c) is selected in this study.

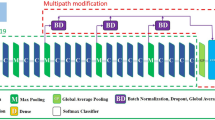

Top 1 and Top 5 are indicators to measure the prediction accuracy of model. ImageNet100 was selected as the experimental dataset, of which 120 thousand images are part of ImageNet1k. The network structure is shown in Fig. 8.

Down layer and extraction layer in Fig. 8, is described in Figs. 3 and 4. Conv means convolution normalization and activation function.

2.2 Additional Improvements

At present, transformer model has been proved to be very effective in the CV (Computer Vision) field [18,19,20]. The MHSA (Multi-head self-attention) structure in Transformer model has been tried to be added into the improved network proposed in this study. MHSA structure as shown in Fig. 9, was first used in the NLP (natural language processing) field to improve accuracy by enabling networks to select useful information themselves [21]. MHSA structure has been proved to be very suitable for image classification and segmentation target detection tasks. The combination of CNN and Transformer models has been tried by many scholars [22, 23]. Based on the above improvements, the innovations are put forward in this study that the extraction layer in the last CSPlayer is replaced with MHSA structure.

In this paper, four heads are used in fact, and only one head is shown in Fig. 9 for simplicity. q, k and v, respectively represent query (to match others), key (to be matched) and information to be extracted. P is the relative position code. ⊕ and ⊗ , respectively represents sum and matrix multiplication. \(W^{q}\), \(W^{k}\) and \(W^{v}\) are all implemented using 1 × 1 conv [24].

The Softmax operation in Fig. 9 is shown in Eq. (1):

\(x_{i} = \{ x_{1} ,x_{2} ,...,x_{i} \}\).

Softmax generates a series of weight coefficients in the range of [0,1], and can be regarded as network normalization operations, by which the network can increase the weight proportion of foreground part and reduce the weight proportion of background part.

The MHSA structure is shown in Eq. (2):

where Q, K, V and P in Eq. (2) represent the tensors of the lowercase letters in Fig. 9.

The addition of MHSA structure reduces the size of model further, but the structure needs a lot of training time to fit. Finally, the accuracy of the classification network after adding MHSA structure decreases partly but the structure achieves good results in the target detection network.

2.3 Dataset and Hyperparameter Setting

Defect detection is a common task of computer vision in industry but most defect datasets have too few categories, resulting in easy to overfit for models. For this disadvantage, in this paper, a special defect dataset was built for image classification task, which includes 12 varieties of defect types such as cloth, concrete, bridge, steel, etc. [25,26,27]. One picture of a portion of dataset is shown in Fig. 10.

The dataset comes from the work of many previous scholars. Different models are adopted for defect detection, most of which are not as lightweight as ours [28,29,30]. A variety of defects have not only similar characteristics but also fundamental differences, so training together is conducive to network convergence. For example, whether or not the cracks and defects is from bridge, ground or concrete cracks is similar to the difference between a cat and a dog, they have some of the same features but not quite the same details. Putting multiple defects in one dataset is equivalent to adding negative samples, which can better improve the generalization ability of the model. Moreover, the model of multiple categories can be used as the pre-training weight to continue training the target of fewer categories. This is similar to the distillation of knowledge using large models to train small models. The pseudo-code for training the LightCSPNet algorithm is as follows:

The function in Algorithm 1 is shown in Figs. 3 and 5. The hyperparameters of LightCSPNet are set according to experimental experience. The epoch is set to 200 to facilitate the convergence of the algorithm. Adam and Cross Entropy are adopted as the gradient descent algorithm and Loss function, respectively. The cosine annealing method is used to automatically adjust the learning rate, and the initial learning rate is set to 0.001.

The classification capacity of the novel model proposed in this paper was tested for ImageNet100 dataset. In addition, the novel model as backbone is introduced into the object detection model to obtain AP50 that is compared with the counterparts of MobileNetV2 and MobileNetV3.

3 Results

Some small optimizations were made to further modify our model, such as EL (increasing the number of Extraction layers) using dropout and MHSA. The Extraction Layer 3 in Fig. 8 are recycled three times, for the module ratio in the network was 1:1:3:1 [31], but the effect was not good. The dropout is also used in MobileNetV2, MobileNetV3 and LightCSPNet proposed in this study, by which the accuracy is improved to a certain extent [32]. The experimental condition is that (224,224) input and CPU: Intel(R) Xeon(R) Platinum 8255C and GPU: RTX 2080 Ti and RTX 3090. The ablation experiments shown in Table 2 were done to verify the improvement effect. The experimental results for two datasets are shown in Table 3.

It can be seen from Table 3 that our model reduces a large number of parameters and computational costs while the accuracy remains almost unchanged. Although the addition of MHSA structure results in much performance degradation in the classification`, the MHSA structure achieves better performance in the object detection model.

Compared to the other lightweight algorithms, the number of parameters of LightCSPNet is reduced by 75%. In addition, the performance of LightCSPNet is almost the same as the counterparts of other lightweight networks after using these skills. LightCSPNet is combined with the MHSA, the number of parameters of LightCSPNet is further reduced. In addition, the Vision Transformer algorithm, which consists of multiple MHSA structures, was used for our tests. In fact, the vgg16 and Vision Transformer networks in the experiments were so large that it was difficult to converge for the GTX3090. Therefore, pre-training weights for large models are used to accelerate the convergence of the algorithms. Finally, it can be seen from the ablation experiment in Table 2 that our improvement is effective.

LightCSPNet as backbone was put into the target detection model and further compared with MobileNetV2 and V3. The backbone is tested on two algorithms: anchor-base and anchor-free, which are YOLOv4 [33] and YOLOX [34] respectively, both of which structures are very similar and uses the same PAN (Path Aggregation Network) structure [35] and is shown in Fig. 11. The difference of the above structures is whether anchor is used.

The detection results are shown in Table 4. The image size of the input model is (416,416), and the dataset is VOC2012 that consists of 10,000 images from 20 classes. The pre-training weights from ImageNet100 are used by all models in this paper.

For the VOC dataset, the AP of LightCSPNet was improved by 0.4% and 0.6%, respectively. Although LightCSPNet has a significant reduction in the number of parameters and computational costs, the inference time on the GPU does not decrease much. The reason is that the Nvidia GPU used in PC has hardware optimizations and frameworks for ordinary convolution and continuous data such as CUDNN, but not optimizations for depthwise separable convolution and discrete data. It can be concluded that the inference time of LightCSPNet is only half of the counterpart of MobileNetV2 on CPU without optimization mechanism, which means that the performance of LightCSPNet can perform better on the embedded device without CUDNN.

4 Conclusion

For the relatively poor computing power of embedded devices, a more lightweight and better performing network that LightCSPNet is proposed to accommodate low performance devices. The dataset including multiple defects was constructed to test the algorithm in the defect detection target. Two international public datasets that ImageNet100 and VOC2012, were used to train and test the algorithms in the areas of image classification and target detection, respectively. The main conclusions are as follows:

-

1.

The proposed Down layer and Extraction layer structures combining deepwise separable convolution and CSP structures reduce the computation and the number of parameters without degrading the performance.

-

2.

For the classification task, LightCSPNet obtained better results for the ImageNet100 and defect datasets. Compared to the other algorithms, LightCSPNet has fewer parameters and less computation and owns almost the same performance.

-

3.

The new dataset constructed in this paper consisting of 12 categories can be better used for defect detection tasks.

-

4.

LightCSPNet adopted some skills, such as adding drop out and MHSA structures, and achieves a better performance improvement.

-

5.

For the target detection task, LightCSPNet is used to replace the backbone part, which increases the mAP for the VOC2012 dataset. In addition, the running time of LightCSPNet is only half of the counterpart of MobileNetV2 on CPU. LightCSPNet proposed in this paper can be better applied in embedded devices that owns only CPU or GPU with low performance.

Data Availability

The dataset can be obtained from https://image-net.org/ and http://host.robots.ox.ac.uk/pascal/VOC/ (accessed on 7 March 2022).

References

Hinton, G., Vinyals, O.: Distilling the Knowledge in a Neural Network. In: NIPS Deep Learning and Representation Learning Workshop. (2015).

Liu, Z. Li, J., Shen, Z., Huang, G., Yan, S., Zhang, C.: Learning Efficient Convolutional Networks through Network Slimming. In: 2017 IEEE International Conference on Computer Vision (ICCV). pp. 2755–2763 IEEE, Venice (2017). https://doi.org/10.1109/ICCV.2017.298.

Gholami, A., Kim, S., Dong, Z., Yao, Z., Mahoney, M.W.: A Survey of Quantization Methods for Efficient Neural Network Inference, http://arxiv.org/abs/2103.13630, (2021).

Krizhevsky, A., Sutskever, I., Hinton, G.E.: ImageNet classification with deep convolutional neural networks. Commun. ACM. 60(6), 84–90 (2017). https://doi.org/10.1145/3065386

Singh, P., Verma, V.K., Rai, P., Namboodiri, V.P.: HetConv: Heterogeneous Kernel-Based Convolutions for Deep CNNs. In: 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). pp. 4830–4839 (2019). https://doi.org/10.1109/CVPR.2019.00497.

Iandola, F.N., Han, S., Moskewicz, M.W., Ashraf, K., Dally, W.J., Keutzer, K.: SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size, http://arxiv.org/abs/1602.07360, (2016).

Howard, A.G., Zhu, M., Chen, B., Kalenichenko, D., Wang, W., Weyand, T., Andreetto, M.: MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications, http://arxiv.org/abs/1704.04861, (2017).

Zhang, X., Zhou, X., Lin, M., Sun, J.: ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices, http://arxiv.org/abs/1707.01083, (2017).

Ma, N., Zhang, X., Zheng, H.-T., Sun, J.: ShuffleNet V2: Practical Guidelines for Efficient CNN Architecture Design. In: Ferrari, V. et al. (eds.) Computer Vision – ECCV 2018. pp. 122–138 Springer International Publishing, Cham (2018). https://doi.org/10.1007/978-3-030-01264-9_8.

Lin, T.-Y. Dollár, P., Girshick, R., He, K., Hariharan, B., Be-longie, S.: Feature Pyramid Networks for Object Detection. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 936–944 (2017). https://doi.org/10.1109/CVPR.2017.106.

Chollet, F.: Xception: Deep Learning with Depthwise Separable Convolutions. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 1800–1807 IEEE, Honolulu, HI (2017). https://doi.org/10.1109/CVPR.2017.195.

Wang, C.-Y., Mark Liao, H.-Y., Wu, Y.-H., Chen, P.-Y., Hsieh, J.-W., Yeh, I.-H.: CSPNet: A New Backbone that can Enhance Learning Capability of CNN. In: 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW). pp. 1571–1580 (2020). https://doi.org/10.1109/CVPRW50498.2020.00203.

Han, K. Wang, Y., Tian, Q., Guo, J., Xu, C., Xu, C.: GhostNet: More Features From Cheap Operations. In: 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). pp. 1577–1586 (2020). https://doi.org/10.1109/CVPR42600.2020.00165.

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A., Chen, L.-C.: MobileNetV2: Inverted Residuals and Linear Bottlenecks. In: 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 4510–4520 (2018). https://doi.org/10.1109/CVPR.2018.00474.

Howard, A., Sandler, M., Chen, B., Wang, W., Chen, L.-C., Tan, M., Chu, G., Vasudevan, V., Zhu, Y., Pang, R., et al.: Searching for MobileNetV3. In: 2019 IEEE/CVF International Conference on Computer Vision (ICCV). pp. 1314–1324 (2019). https://doi.org/10.1109/ICCV.2019.00140.

He, K., Zhang, X., Ren, S., Sun, J.: Deep Residual Learning for Image Recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 770–778 (2016). https://doi.org/10.1109/CVPR.2016.90.

Xie, S., Girshick, R., Dollár, P., Tu, Z., He, K.: Aggregated Residual Transformations for Deep Neural Networks. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). pp. 5987–5995 (2017). https://doi.org/10.1109/CVPR.2017.634.

Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn, D., Zhai, X., Unterthiner, T., Dehghani, M., Minderer, M., Heigold, G., Gelly, S., et al.: An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. Presented at the International Conference on Learning Representations September 28 (2020).

Liu, Z., Lin, Y., Cao, Y., Hu, H., Wei, Y., Zhang, Z., Lin, S., Guo, B.: Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In: 2021 IEEE/CVF International Conference on Computer Vision (ICCV). pp. 9992–10002 (2021). https://doi.org/10.1109/ICCV48922.2021.00986.

Carion, N., Massa, F., Synnaeve, G., Usunier, N., Kirillov, A., Zagoruyko, S.: End-to-End Object Detection with Transformers, http://arxiv.org/abs/2005.12872, (2020).

Vaswani, A. Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, Ł., Polosukhin, I.: Attention is All you Need. In: Advances in Neural Information Processing Systems. Curran Associates, Inc. (2017).

Liu, Y., Wu, Y.-H., Sun, G., Zhang, L., Chhatkuli, A., Van Gool, L.: Vision Transformers with Hierarchical Attention, http://arxiv.org/abs/2106.03180, (2022).

Jing, Y., Ren, Y., Liu, Y., Wang, D., Yu, L.: Automatic Extraction of Damaged Houses by Earthquake Based on Improved YOLOv5: A Case Study in Yangbi. Remote Sensing. 14, 2, 382 (2022). https://doi.org/10.3390/rs14020382.

Srinivas, A., Lin, T.-Y., Parmar, N.: Bottleneck Transformers for Visual Recognition. In: 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). pp. 16514–16524 (2021). https://doi.org/10.1109/CVPR46437.2021.01625.

Silvestre-Blanes, J., Albero-Albero, T., Miralles, I., Pérez-Llorens, R., Moreno, J.: A public fabric database for defect detection methods and results. Autex Research Journal. 19(4), 363–374 (2019). https://doi.org/10.2478/aut-2019-0035

Bianchi, Eric., Hebdon, Matthew.: Trained Model for the Semantic Segmentation of Concrete Cracks (Conglomerate). University Libraries, Virginia Tech. Software (2021). https://doi.org/10.7294/16628596.v1.

Shi, Y., Cui, L., Qi, Z., Meng, F., Chen, Z.: Automatic road crack detection using random structured forests. IEEE Trans. Intell. Transp. Syst. 17(12), 3434–3445 (2016). https://doi.org/10.1109/TITS.2016.2552248

Huang, Y., Qiu, C., Wang, X., Wang, S., Yuan, K.: A Compact Convolutional Neural Network for Surface Defect Inspection. Sensors. 20, 7, 1974 (2020). https://doi.org/10.3390/s20071974.

Bao, Y., Song, K., Liu, J., Wang, Y., Yan, Y., Yu, H., Li, X.: Triplet-graph reasoning network for few-shot metal generic surface defect segmentation. IEEE Trans. Instrum. Meas. 70, 1–11 (2021). https://doi.org/10.1109/TIM.2021.3083561

Song, K., Yan, Y.: A noise robust method based on completed local binary patterns for hot-rolled steel strip surface defects. Appl. Surf. Sci. 285, 858–864 (2013). https://doi.org/10.1016/j.apsusc.2013.09.002

Liu, Z., Mao, H., Wu, C.-Y.: A ConvNet for the 2020s, http://arxiv.org/abs/2201.03545, (2022).

Srivastava, N., Hinton, G., Krizhevsky, A., Sutskever, I., Sala-khutdinov, R.: Dropout: a simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 15(1), 1929–1958 (2014)

Bochkovskiy, A., Wang, C.-Y., Liao, H.-Y.M.: YOLOv4: Optimal Speed and Accuracy of Object Detection, http://arxiv.org/abs/2004.10934, (2020).

Ge, Z., Liu, S., Wang, F., Li, Z., Sun, J.: YOLOX: Exceeding YOLO Series in 2021, http://arxiv.org/abs/2107.08430, (2021).

Liu, S., Qi, L., Qin, H., Shi, J., Jia, J.: Path Aggregation Network for Instance Segmentation. In: 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 8759–8768 (2018). https://doi.org/10.1109/CVPR.2018.00913.

Acknowledgements

We thank all editors and reviewers for handling and reviewing our paper.

Funding

This work is supported by Shandong Provincial Key Laboratory of Precision Manufacturing and Non-traditional Machining and SDUT&Zhangdian District Integration Development Project (Grant No. 2021JSCG0021).

Author information

Authors and Affiliations

Contributions

Conceptualization, QL and YSL; methodology, QL; software, CW; validation, CW and MWG; formal analysis, QL; investigation, CW; resources, CW; writing—original draft preparation, CW; writing—review and editing, QL; visualization, YSL; supervision, MWG; project administration, QL. All authors have read and agreed to the published version of the manuscript.

Corresponding author

Ethics declarations

Conflict of Interest

Chuan Wang, Qiang Liu, Yusheng Li and Mingwang Gao declare that they have no conflict of interest.

Ethics Approval

Not applicable.

Consent for Publication

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, C., Liu, Q., Li, Y. et al. LightCSPNet: A Lightweight Network for Image Classification and Objection Detection. Int J Comput Intell Syst 16, 46 (2023). https://doi.org/10.1007/s44196-023-00226-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s44196-023-00226-5