Abstract

Scene text recognition (STR) has been widely applied in industrial and commercial fields. However, existing methods still struggle with text images that suffer from defects such as low contrast, blur, low resolution, and insufficient illumination. Such defects are common in practice because of diverse text backgrounds in natural scenes and limited shooting conditions. To address these challenges, we propose a novel network for noise-robust scene text recognition (NRSTRNet), which suppresses noise in all three critical stages of STR. Specifically, in the text feature extraction stage, NRSTRNet enhances text-related features along the channel and spatial dimensions and suppresses disturbances from non-text areas, reducing noise and redundancy in the input image. In the context encoding stage, fine-grained feature coding is proposed to reduce the influence of preceding noisy temporal features on the current temporal feature, while sharing the contextual encoding parameters reduces the impact of local noise on the overall encoding. In the decoding stage, a self-attention module is added to strengthen the connections between different temporal features, thereby leveraging global information to obtain noise-resistant features. Through these designs, NRSTRNet enhances local semantic information while taking global semantic information into account. Experimental results show that NRSTRNet improves the representation of text images, remains stable under noise, and achieves superior recognition accuracy. In particular, our model outperforms state-of-the-art (SOTA) STR models on irregular text recognition benchmarks by 2% on average and is highly robust on noisy images.

1 Introduction

Scene text recognition is an important research topic in computer vision and has been widely used in applications such as human–computer interaction, autonomous driving, road sign recognition, and industrial automation. Owing to diverse text backgrounds in natural scenes and limited shooting conditions, text images often contain defects such as low contrast, blur, low resolution, and insufficient illumination. Traditional machine learning methods depend on diverse hand-crafted features, which are difficult to design and have limited capacity for high-level representation. As a result, traditional text recognition methods [1,2,3] cannot obtain satisfactory recognition results.

Unlike traditional text recognition methods, deep learning methods [5,6,7,8,9,10,11] adopt a top-down approach that recognizes words or text lines directly and have shown more promising results. Recent approaches [4, 12] treat STR as a sequence prediction problem, which increases precision while eliminating the need for character-level annotation. However, the performance of existing methods drops significantly when blurred, occluded, or incomplete characters appear in the image, as shown in Fig. 1. For example, in the second sub-image of Fig. 1, the last character ‘i’ is not correctly recognized because of noise. In the fourth sub-image of Fig. 1, the second character ‘r’ is recognized as ‘im’ because of blurring. In both examples, noise in the global context causes errors in local semantic information. More specifically, most existing frameworks simply apply BiLSTM-based encoders to the entire image, encoding each temporal feature according to the preceding temporal features. If the preceding temporal features contain noise, the current temporal feature is affected as well, so noise in one region can propagate through the whole encoding.

Fig. 1 Comparison of recognition results between the proposed NRSTRNet and the best model presented in [4]. The first column shows examples of noisy text images, including image blurring, image ghosting, and background interference. The second column shows the recognition results of the model proposed in [4]. The third column shows the prediction results of the model proposed in this paper. Red and green characters indicate wrong and correct results, respectively.

To address these challenges, we propose a novel network for noise-robust scene text recognition (NRSTRNet), which suppresses noise in all three stages of STR, namely, text feature extraction, context encoding, and label decoding. Specifically, in the text feature extraction stage, the convolutional block attention module (CBAM) [13] is introduced to assign larger weights to text regions and suppress disturbances from non-text areas, reducing noise and redundancy in the input image. In the context encoding stage, fine-grained feature coding is proposed to reduce the influence of preceding noisy temporal features on the current temporal feature, while sharing the contextual encoding parameters reduces the impact of local noise on the overall encoding. In the decoding stage, a self-attention module is added to strengthen the connections between different temporal features, thereby leveraging global information to obtain noise-resistant features. Together, these designs enhance local semantic information while taking global semantic information into account. As a result, NRSTRNet is more stable under noise and achieves superior recognition accuracy, as shown in Fig. 1.

The main contributions of this paper are as follows:

(1) We propose NRSTRNet for scene text recognition, which suppresses noise in all three primary stages of STR, namely, text feature extraction, context encoding, and label decoding. NRSTRNet enhances local semantic information while considering global semantic information, thereby mitigating the influence of noise.

(2) Extensive experiments on a variety of public scene text benchmarks show that the proposed framework achieves state-of-the-art performance, notably in irregular text recognition, and is highly robust on noisy images.

The rest of this paper is organized as follows. Section 2 reviews related work. The framework of the proposed model is described in detail in Sect. 3. The experimental analysis of the proposed model is given in Sect. 4. Section 5 concludes the paper.

2 Related Works

Existing methods for scene text recognition can be roughly divided into two categories [14]: traditional and deep learning-based methods. This section analyzes and summarizes the research on both categories.

2.1 Traditional Text Recognition Methods

Traditional text recognition methods mainly work bottom-up, from the local to the whole: characters are first detected and classified, and the recognized characters are then assembled into words or text lines using heuristic rules, language models, and dictionaries. Typically, various hand-crafted features are designed, and feature classifiers are trained on them using support vector machines or other methods. For instance, Neumann et al. [1] used features such as the text aspect ratio and the whole-area ratio for classification. Wang et al. [2] applied histogram of oriented gradients (HOG) features within a set of sliding windows for classification. Yao et al. [3] classified characters from HOG features using a random forest. Because traditional methods rely on hand-crafted features, they require complex and repetitive pre-processing and post-processing steps. Owing to the limited representational power of such features, these methods struggle with natural scene text against complex backgrounds.

2.2 Deep Learning-Based Text Recognition Methods

Unlike traditional text recognition methods, most deep learning-based text recognition methods work top-down, from the whole to the local. The general procedure is to extract the visual features of an image with a model and then classify these features, which converts the text recognition problem into an image classification problem [5]. With the development of deep learning, research on scene text recognition has achieved promising results. Such methods include three main steps: text feature extraction, context encoding, and label decoding. Effective extraction of text features strongly affects the recognition results, so a well-designed network structure and depth are needed to improve the feature discriminability of text images. VGG [15], RCNN [16], and ResNet can all serve as text feature extraction networks. VGG consists of multiple convolutional layers followed by several fully connected layers. RCNN is a CNN variant that recursively adjusts its receptive field according to the character shape. ResNet is a CNN with residual connections. Context feature encoding also has an important effect on the recognition result. Baek et al. [4] used a two-layer bidirectional long short-term memory (BiLSTM) network to encode context features. However, because this method encodes the entire text line, which contains more noise than a single character, the model is highly susceptible to noise.

The existing decoding methods can be roughly divided into two types: connectionist temporal classification (CTC) [17] and attention (Attn) [18] methods. Among CTC-based models, Hu et al. [19] used a convolutional neural network (CNN) and a recurrent neural network (RNN) to encode sequence features and CTC as the character decoder. Among Attn-based models, Yu et al. [20] used a CNN and a bidirectional LSTM (BiLSTM) to encode sequence features and added an auxiliary network to correct prediction errors after decoding. Lee et al. [8] used a recursive CNN to capture longer contextual dependencies and the Attn method to decode the generated sequence. Baek et al. [4] used ResNet to represent deep text features and the Attn method to decode them. This method improved the model’s ability to represent text, but it was less robust and could not handle noisy text images well. Li et al. [21] and Sheng et al. [22] combined visual and context features and added an attention mechanism to both the encoder and the decoder, demonstrating the effectiveness of attention in scene text recognition. Zhong et al. [9] added a balance module to the decoder, which can reduce the impact of noise on characters. Huang et al. [10] used the results of text detection to correct the text recognition loss and achieved better recognition performance. He et al. [11] used a segmentation network to segment the characters, built the corresponding graph of nodes from the segmentation map, and finally used a graph neural network for recognition. These methods can suppress noise to some extent; however, they cannot handle text images containing heavy noise because they do not suppress noise in the encoder. Lu et al. [23] borrowed ideas from natural language processing (NLP) and adopted the transformer architecture for encoding and decoding. This method cuts the text image into many small patches for processing, which greatly improves the decoding ability of the model, but it is inevitably affected by patches that contain noise. Most of the aforementioned methods extract contextual feature information from text images using an RNN in the encoder, which captures the contextual dependencies of text features well. These methods have achieved significant improvement over traditional methods on regular text datasets.

However, existing methods do not suppress noise in all three key stages of STR, namely, feature extraction, context encoding, and label decoding, which leads to suboptimal performance.

3 Proposed Algorithm Framework

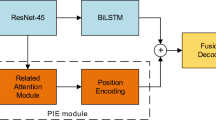

The structure of the proposed text recognition network is shown in Fig. 2. The network mainly consists of a feature extraction layer, an encoding layer, and a decoding layer. The network takes text blocks directly as input, normalizes the image, and rectifies curved text. Next, features are extracted by a residual network with convolutional block attention modules. The encoding layer consists of two bidirectional LSTMs. To reduce the influence of noise, we divide the extracted sequence features into multiple feature subsets and perform bidirectional LSTM context encoding on each subset. The feature subsets are learned iteratively with shared encoding-layer parameters, which reduces the impact of noise on the prediction results. The decoding layer consists of a 1D self-attention block and an Attn decoder, which decodes the encoded information and outputs the corresponding character sequence. The encoding and decoding layers are detailed in Sects. 3.3 and 3.4.

3.1 Residual Network Feature Extraction Based on Convolutional Block Attention Module

In this paper, a residual network based on the convolutional block attention module is used to extract features from text images. The residual network incorporates CBAM as the network depth increases, which enhances its ability to represent text images and extract text information effectively. Compared with a conventional residual network, the residual network with CBAM refines the visual features of an image and makes the model focus on the text characters. In this study, the Grad-CAM method [24] is used to visualize the heat maps of the two residual networks, and the results are shown in Fig. 3. In this figure, the first row shows the sample images, the second row shows the visualization results obtained by Baek’s model, and the third row shows the visualization results after CBAM is introduced into Baek’s model. The heat map comparison shows that the network attends more strongly to the text characters after CBAM is introduced.

3.2 Convolutional Block Attention Module

The structure of the residual block is shown in Fig. 4. CBAM includes two modules: channel domain attention and spatial attention. The channel domain attention module uses average pooling and maximum pooling to extract \({F}_{avg}^{c}\in {R}^{C\times 1\times 1}\) and \({F}_{max}^{c}\in {R}^{C\times 1\times 1}\) from the feature layer. Both descriptors are fed into a shared network containing a multilayer perceptron (MLP) [25], which generates the attention map \({M}_{c}\in {R}^{C\times 1\times 1}\). To reduce the number of parameters, we set the hidden layer size to \({R}^{\frac{C}{r}\times 1\times 1}\), where \(r\) is the reduction ratio, set to 16 in this study. The hidden layer is activated by a ReLU function, and finally, a convolution layer is used to obtain \({M}_{c}\). The output of the channel domain attention module satisfies the following relationship:

\[ {M}_{c}\left(F\right)=\sigma \left({W}_{1}\,\mathrm{ReLU}\left({W}_{0}{F}_{avg}^{c}\right)+{W}_{1}\,\mathrm{ReLU}\left({W}_{0}{F}_{max}^{c}\right)\right) \]

where \(\sigma\) is the sigmoid function, \({W}_{0}\in {R}^{\frac{C}{r}\times C}\) and \({W}_{1}\in {R}^{C\times \frac{C}{r}}\) are the shared MLP weights, and \(F\) is the input feature map.
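The channel attention equation can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: it processes a single \(C\times H\times W\) feature map without a batch axis, treats \(W_1\) as a plain matrix (equivalent to a \(1\times 1\) convolution), omits biases, and uses hypothetical random weights and feature-map sizes.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(F, W0, W1):
    """Channel attention map M_c for one feature map F of shape (C, H, W).

    W0: (C // r, C) first MLP layer; W1: (C, C // r) second layer.
    Returns M_c with shape (C,) and the refined map M_c * F.
    """
    f_avg = F.mean(axis=(1, 2))   # F^c_avg: average pooling over H and W -> (C,)
    f_max = F.max(axis=(1, 2))    # F^c_max: max pooling over H and W -> (C,)

    def mlp(v):
        # shared MLP: W1 ReLU(W0 v); the same weights serve both descriptors
        return W1 @ np.maximum(W0 @ v, 0.0)

    m_c = sigmoid(mlp(f_avg) + mlp(f_max))
    return m_c, F * m_c[:, None, None]   # broadcast M_c over H and W


rng = np.random.default_rng(0)
C, H, W, r = 64, 4, 26, 16               # hypothetical sizes; r = 16 as in the text
F = rng.standard_normal((C, H, W))
W0 = 0.1 * rng.standard_normal((C // r, C))
W1 = 0.1 * rng.standard_normal((C, C // r))
m_c, F_refined = channel_attention(F, W0, W1)
print(m_c.shape, F_refined.shape)        # (64,) (64, 4, 26)
```

Because the same \(W_0\) and \(W_1\) are applied to both pooled descriptors, the sketch keeps the MLP weights shared, as the equation requires.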

Spatial attention focuses on location information and complements channel domain attention. It is computed on the feature map refined by the channel domain attention \({M}_{c}\). Similar to the channel domain attention module, the spatial attention module uses average pooling and maximum pooling, here along the channel axis, to extract \({F}_{avg}^{S}\in {R}^{1\times H\times W}\) and \({F}_{max}^{S}\in {R}^{1\times H\times W}\). The spatial attention map \({M}_{S}\) is obtained by concatenating these two maps and passing them through a convolutional layer. The output of the spatial attention module satisfies the following condition:

\[ {M}_{S}\left(F\right)=\sigma \left({f}^{7\times 7}\left(\left[{F}_{avg}^{S};{F}_{max}^{S}\right]\right)\right) \]

where \(\sigma\) is the sigmoid function, \(\left[\cdot ;\cdot \right]\) denotes concatenation along the channel dimension, and \({f}^{7\times 7}\) denotes a convolution with a \(7\times 7\) kernel. For convenience, we set the parameters according to [6] and [10], whose settings have been shown in extensive experiments to give satisfactory results in different computer vision tasks. On this basis, we add channel attention and spatial attention to the network. The entire residual network structure and its parameter settings are given in Table 1.
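A matching NumPy sketch of the spatial attention module is shown below. As with any such sketch, the sizes and random kernel are hypothetical; the convolution is implemented as a zero-padded, same-size cross-correlation (the operation CNN layers compute) with one output channel and no bias, and the input is assumed to be a single channel-refined \(C\times H\times W\) map.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def conv2d_same(x, kernel):
    """'Same'-size, zero-padded cross-correlation with one output channel,
    as computed by a CNN convolution layer (bias omitted).
    x: (C_in, H, W); kernel: (C_in, k, k) with odd k. Returns (H, W)."""
    _, kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    xp = np.pad(x, ((0, 0), (ph, ph), (pw, pw)))
    H, W = x.shape[1:]
    out = np.zeros((H, W))
    for i in range(kh):
        for j in range(kw):
            # weight every input channel at this kernel offset and accumulate
            out += np.tensordot(kernel[:, i, j], xp[:, i:i + H, j:j + W], axes=1)
    return out


def spatial_attention(F_prime, kernel7):
    """M_S for a channel-refined feature map F' of shape (C, H, W)."""
    f_avg = F_prime.mean(axis=0)                 # F^S_avg: pool over channels -> (H, W)
    f_max = F_prime.max(axis=0)                  # F^S_max -> (H, W)
    pooled = np.stack([f_avg, f_max])            # [F^S_avg; F^S_max] -> (2, H, W)
    m_s = sigmoid(conv2d_same(pooled, kernel7))  # f^{7x7} then sigmoid -> (H, W)
    return m_s, F_prime * m_s[None, :, :]        # broadcast M_S over channels


rng = np.random.default_rng(0)
F_prime = rng.standard_normal((64, 4, 26))       # hypothetical channel-refined map
kernel7 = 0.1 * rng.standard_normal((2, 7, 7))   # hypothetical 7x7 conv weights
m_s, F_out = spatial_attention(F_prime, kernel7)
print(m_s.shape, F_out.shape)                    # (4, 26) (64, 4, 26)
```

Applying `spatial_attention` to the output of a channel attention step, rather than to the raw feature map, reproduces the sequential channel-then-spatial ordering described in the text.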

The CBAM residual network takes a fixed-size text image as input. The input passes through two \(3\times 3\) convolutional layers and then a max-pooling layer, producing 64 feature maps with half the height and width of the original image. After each convolutional layer, batch normalization and non-linear activation are applied by the BN and ReLU layers, respectively. The max-pooling layer mainly serves to extract geometric features of text edges in the image. These geometric features are used as the input of the CBAM-based residual network.

Compared with the conventional residual network, the main feature of this network is that after the convolutions of each block, the signal also passes through the channel attention and spatial attention modules. The number of feature-map channels increases with the block index, such that each block has twice as many feature maps as the previous one. The blocks are designed so that the output feature sequence is aligned with the predicted text length before it goes through the decoding layer for label prediction. The output \(y\) of each residual unit satisfies

\[ y=\widetilde{F}+x \]

where \(x\) is obtained from the identity mapping, and \(\widetilde{F}\) represents the output of each unit after two convolutional layers and CBAM.

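The output \({\widetilde{F}}^{l}\) of each unit is calculated by

\[ {F}^{l}=f\left(\sum_{i\in M}{x}_{i}^{l-1}*{W}_{i}^{l}+{b}^{l}\right),\qquad {\widetilde{F}}^{l}={M}_{s}\left({M}_{c}\left({F}^{l}\right)\otimes {F}^{l}\right)\otimes \left({M}_{c}\left({F}^{l}\right)\otimes {F}^{l}\right) \]

where \({x}_{i}^{l-1}\) denotes the input feature map; \({F}^{l}\) is the output of the \(l\)th convolutional layer before attention; \({\widetilde{F}}^{l}\) is the output of the \(l\)th convolutional and CBAM layers; \(M\) represents the set of input feature maps; \({W}_{i}^{l}\) is the convolution weight of the convolutional layer; \(*\) denotes convolution and \(\otimes\) element-wise multiplication with broadcasting; \(f\left(x\right)\) represents the ReLU activation function; \({b}^{l}\) is the bias; and \({M}_{c}\) and \({M}_{s}\) denote the channel domain attention and spatial attention functions, respectively. The output of the final CBAM layer is passed through two convolutional layers with \(2\times 2\) kernels, yielding the final output \({F}^{\prime}\).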

3.3 Sequence Context Feature Encoding

Most existing frameworks simply apply BiLSTM-based encoders to the entire image, encoding the current temporal feature according to the preceding temporal features. If the preceding temporal features contain noise, the current temporal feature is affected as well. As a result, existing frameworks produce poor recognition results when noise, punctuation, or touching characters appear in the image, as shown in Fig. 5.

To reduce the influence of preceding temporal features (which may contain noise) on the current temporal feature, we combine the BiLSTM encoder with fine-grained feature coding, as shown in Fig. 6. Specifically, a two-layer BiLSTM is used to learn the long-term dependencies of text sequences. In addition, we propose a fine-grained feature coding method to minimize the noise in text-line features. The method severs the connections between different column vectors of the feature map by encoding the column vectors separately, so that column feature vectors containing noise do not affect nearby feature vectors. More specifically, the fine-grained feature coding method divides the text features into adjacent subsets according to the length of the predicted text and then encodes each subset. To reduce the impact of local noise on the overall encoding, the encoding modules of all subsets share their weights. Finally, the \(T\) encoded features are concatenated to form a single encoding feature. Encoding fine-grained features separately effectively reduces the impact of noise in a single character on the surrounding characters, which makes the encoding layer focus on text information and improves the recognition accuracy on noisy images. The pseudo-code of the feature encoding combined with fine-grained learning is given in Algorithm 1.

Algorithm 1 Implementation of feature coding combined with fine-grained learning.

Note: in Algorithm 1, the stacking operator concatenates \(BiLSTM\left({F}^{\prime}\left(batch,i,512\right)\right)\) and \(v\) along the width dimension.

Algorithm 1 consists of the following steps:

(1) The input features are divided into columns, each of which is a 512-channel feature vector. A total of 26 feature vectors are generated, ordered by column index.

(2) Each feature vector is fed into the BiLSTM encoder, yielding 26 encoded vectors of 256 channels.

(3) The 26 encoded vectors of 256 channels are combined into a 256-channel, 26-column output.
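Since the pseudo-code of Algorithm 1 is not reproduced here, the following NumPy sketch illustrates the idea under stated assumptions: the features of a single image are given as a \(26\times 512\) array of column vectors; each BiLSTM layer concatenates its forward and backward hidden states and projects them to 256 channels with a linear layer (an assumed design); the weights are random and hypothetical; and `subset_size` is the number of adjacent columns encoded together (the subset size \(P_{divisions}\) of Sect. 4.2). With `subset_size=1`, each column is encoded separately by the same shared two-layer BiLSTM, as in steps (1) to (3).

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def make_bilstm(in_dim, hidden, out_dim, rng):
    """Hypothetical random parameters for one BiLSTM layer with a linear output projection."""
    def lstm():
        return (rng.standard_normal((4 * hidden, in_dim)) / np.sqrt(in_dim),   # input weights
                rng.standard_normal((4 * hidden, hidden)) / np.sqrt(hidden),   # recurrent weights
                np.zeros(4 * hidden))                                          # bias
    return {"fwd": lstm(), "bwd": lstm(),
            "proj": rng.standard_normal((out_dim, 2 * hidden)) / np.sqrt(2 * hidden)}


def lstm_pass(xs, Wx, Wh, b):
    """Unidirectional LSTM over xs of shape (L, D); returns hidden states (L, hidden)."""
    h = np.zeros(Wh.shape[1])
    c = np.zeros(Wh.shape[1])
    states = []
    for x in xs:
        i, f, o, g = np.split(Wx @ x + Wh @ h + b, 4)   # input, forget, output gates, candidate
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
        states.append(h)
    return np.stack(states)


def bilstm(xs, p):
    fwd = lstm_pass(xs, *p["fwd"])
    bwd = lstm_pass(xs[::-1], *p["bwd"])[::-1]          # run backwards, then re-align in time
    return np.concatenate([fwd, bwd], axis=1) @ p["proj"].T


def fine_grained_encode(columns, layers, subset_size=1):
    """Encode the (T, C) column features of one image in consecutive subsets.

    Every subset is encoded independently by the SAME stacked BiLSTM layers
    (shared weights), so no recurrent state crosses a subset boundary.
    subset_size=1 encodes each column separately; subset_size=T reduces to
    an ordinary BiLSTM over the whole sequence.
    """
    encoded = []
    for start in range(0, len(columns), subset_size):
        x = columns[start:start + subset_size]
        for layer in layers:
            x = bilstm(x, layer)
        encoded.append(x)
    return np.concatenate(encoded, axis=0)              # stack along the width (column) axis


rng = np.random.default_rng(0)
layers = [make_bilstm(512, 256, 256, rng), make_bilstm(256, 256, 256, rng)]  # two layers
columns = rng.standard_normal((26, 512))     # F'(i, 512), i = 0..25, for one image
encoded = fine_grained_encode(columns, layers, subset_size=1)
print(encoded.shape)                         # (26, 256)
```

Because no recurrent state crosses a subset boundary, perturbing one column changes only that column's encoding when `subset_size=1`, whereas with `subset_size=26` the same perturbation spreads to neighbouring columns through the recurrence.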

3.4 Enhancement and Transcription of Text Features

Using a fine-grained encoder reduces the influence of preceding features (which may contain noise) on the current feature. However, this encoding also weakens the connections between different temporal features, so the model cannot leverage global information to obtain more noise-resistant features. To solve this problem, we use 1D self-attention [21] to enhance the local features: the attention map is computed by a fully connected layer, and the attention features are obtained by multiplying the feature layer with the attention map, as shown in Fig. 7. These attention features are more robust to noise. The Attn transcription mechanism [5] does not require the complete input sequence to be encoded as a fixed-length vector. Instead, a different part of the input is attended to at each output step, and each transcription step is conditioned on the input sequence and the content generated so far. The details of the Attn transcription mechanism are shown in Fig. 8.
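The exact form of the 1D self-attention block is not given here. One plausible reading of "the attention map is computed by a fully connected layer" is standard scaled dot-product self-attention whose query and key projections are fully connected layers; the sketch below assumes that form, uses hypothetical dimensions and random weights, and multiplies the attention map by the feature layer itself.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)     # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention_1d(Hs, Wq, Wk):
    """Hs: (T, D) encoded features; Wq, Wk: (D, d) fully connected projections.
    Returns the attention features (T, D) and the (T, T) attention map."""
    Q, K = Hs @ Wq, Hs @ Wk
    A = softmax(Q @ K.T / np.sqrt(K.shape[1]), axis=1)   # row t: weights over all T steps
    return A @ Hs, A                                      # multiply the map with the features


rng = np.random.default_rng(0)
Hs = rng.standard_normal((26, 256))          # hypothetical fine-grained encoder output
Wq = rng.standard_normal((256, 64)) / 16.0   # hypothetical projection weights
Wk = rng.standard_normal((256, 64)) / 16.0
out, A = self_attention_1d(Hs, Wq, Wk)
print(out.shape, A.shape)                    # (26, 256) (26, 26)
```

Unlike the fine-grained encoder, every output step here depends on all 26 input steps, which is one way global context can be reintroduced after the encoder has isolated the columns.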

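The decoder hidden state \({S}_{t}\) is given by

\[ {S}_{t}=f\left({y}_{t-1},{S}_{t-1},{c}_{t}\right),\qquad {c}_{t}=\sum_{j}{\alpha }_{t,j}{h}_{j} \]

where \({y}_{t-1}\) is the label value at time \(t-1\); \({S}_{t-1}\) is the previous decoder state; \(f\) denotes an LSTM network; \({h}_{j}\) is the \(j\)th input of the Attn transcription; \({c}_{t}\) is the context vector; and \({\alpha }_{t,j}\) is a weight computed from \({e}_{t,j}\) as follows:

\[ {\alpha }_{t,j}=\frac{\exp \left({e}_{t,j}\right)}{\sum_{k}\exp \left({e}_{t,k}\right)},\qquad {e}_{t,j}={w}^{T}\tanh \left(W{S}_{t-1}+V{h}_{j}+b\right) \]

where \({w}^{T}\), \(W\), and \(V\) are the weights of three different fully connected layers, and \(b\) is the bias of the fully connected layer corresponding to \({h}_{j}\).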
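A single decoding step can be sketched in NumPy as follows, using the additive-attention form \(e_{t,j}=w^{T}\tanh(WS_{t-1}+Vh_j+b)\) implied by the weights listed above. Assumptions: \(f\) is a standard LSTM cell whose input is the concatenation of the context vector \(\sum_j \alpha_{t,j}h_j\) and a one-hot encoding of \(y_{t-1}\); the charset size of 38, the index of "[GO]", and all weights are hypothetical.

```python
import numpy as np


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def attention_weights(S_prev, Hs, w, W, V, b):
    """alpha_{t,j} = softmax_j(e_{t,j}),  e_{t,j} = w^T tanh(W S_{t-1} + V h_j + b)."""
    e = np.tanh(W @ S_prev + Hs @ V.T + b) @ w      # scores for all j at once -> (T,)
    return softmax(e)


def decoder_step(y_prev_onehot, S_prev, C_prev, Hs, att, lstm):
    """One step S_t = f(y_{t-1}, S_{t-1}, sum_j alpha_{t,j} h_j), f an LSTM cell."""
    alpha = attention_weights(S_prev, Hs, *att)
    context = alpha @ Hs                             # sum_j alpha_{t,j} h_j
    x = np.concatenate([context, y_prev_onehot])     # LSTM input (assumed layout)
    Wx, Wh, bias = lstm
    i, f, o, g = np.split(Wx @ x + Wh @ S_prev + bias, 4)
    C = sigmoid(f) * C_prev + sigmoid(i) * np.tanh(g)   # LSTM memory cell
    S = sigmoid(o) * np.tanh(C)                         # new decoder state S_t
    return S, C, alpha


rng = np.random.default_rng(0)
T, dh, ds, da, K = 26, 256, 256, 256, 38     # K (charset size incl. [GO], [s]) is hypothetical
Hs = rng.standard_normal((T, dh))            # h_j: encoder outputs
att = (rng.standard_normal(da) / 16,         # w
       rng.standard_normal((da, ds)) / 16,   # W
       rng.standard_normal((da, dh)) / 16,   # V
       np.zeros(da))                         # b
lstm = (rng.standard_normal((4 * ds, dh + K)) / 16,
        rng.standard_normal((4 * ds, ds)) / 16,
        np.zeros(4 * ds))
y_go = np.eye(K)[0]                          # one-hot [GO]; index 0 is an assumption
S, C, alpha = decoder_step(y_go, np.zeros(ds), np.zeros(ds), Hs, att, lstm)
print(S.shape, alpha.shape)                  # (256,) (26,)
```

Repeating `decoder_step` up to the maximum length, feeding back the previous prediction each time, yields the full transcription.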

After obtaining the label sequence with the maximum probability by transcription, the starting character “[GO]” and the ending character “[s]” are removed, and the text recognition label is obtained.
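The post-processing described above can be sketched as follows. Truncating at the first "[s]", rather than only deleting it, is an assumption made because an attention decoder typically keeps emitting tokens up to the maximum length.

```python
def strip_special_tokens(tokens, go="[GO]", eos="[s]"):
    """Turn a decoded token sequence into the final text label.

    Drops "[GO]" and stops at the first "[s]" (tokens after it are treated as padding).
    """
    chars = []
    for tok in tokens:
        if tok == eos:
            break
        if tok != go:
            chars.append(tok)
    return "".join(chars)


print(strip_special_tokens(["[GO]", "h", "o", "u", "s", "e", "[s]", "e", "[s]"]))  # house
```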

3.5 Loss Function

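The loss function of the end-to-end network is a cross-entropy cost function, defined as

\[ L=-\sum_{\left({X}_{i},{l}_{i}\right)}\log p\left({l}_{i}\mid {X}_{i}\right),\qquad p\left({l}_{i}\mid {X}_{i}\right)=\prod_{t}{Y}_{i,t}\left({l}_{i,t}\right) \]

where \({X}_{i}\) is an input training image, \({Y}_{i}\) is the sequence of probability distributions obtained after \({X}_{i}\) passes through the network, \({Y}_{i,t}\left({l}_{i,t}\right)\) is the probability that the \(t\)th distribution assigns to the \(t\)th ground-truth character, and \({l}_{i}\) denotes the true labels of the training images.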
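The loss amounts to the negative log-probability of each ground-truth character, summed over time steps and images. The sketch below assumes each \(Y_i\) is given as a \(T_i\times K\) array of per-step probability distributions and each \(l_i\) as integer class indices; `eps` is an added safeguard against \(\log 0\).

```python
import numpy as np


def sequence_cross_entropy(probs, labels, eps=1e-12):
    """L = -sum_i log p(l_i | X_i), with p(l_i | X_i) = prod_t Y_{i,t}(l_{i,t}).

    probs:  list of (T_i, K) arrays, the per-step distributions Y_i
    labels: list of length-T_i integer sequences, the ground-truth labels l_i
    """
    total = 0.0
    for Y, l in zip(probs, labels):
        l = np.asarray(l)
        picked = Y[np.arange(len(l)), l]          # probability of each true character
        total -= np.sum(np.log(picked + eps))     # log of a product = sum of logs
    return total


Y = np.array([[0.5, 0.5], [0.25, 0.75]])
print(round(sequence_cross_entropy([Y], [[0, 1]]), 4))   # 0.9808
```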

4 Experimental Analysis

Comparative experiments are conducted to verify the effectiveness of the proposed NRSTRNet. For a fair comparison, we use the same training set as Baek et al. [4] and other studies, which contains approximately 14.4 million text samples.

4.1 Datasets

When training a scene text recognition model, synthetic datasets have been mostly used instead of real-world datasets because of the requirement for a large training dataset. In this study, the following synthetic datasets are used for training:

(1) MJSynth (MJ) [26]. This synthetic dataset contains about 8.9 million natural scene text images rendered in complex scenes.

(2) SynthText (ST) [27]. This synthetic scene text dataset contains about 5.5 million images.

To test the performance of the proposed model in scene text recognition, we use the test images of the following real-world datasets as test data:

(1) IIIT5K [28]. The IIIT5K dataset was obtained from Google search results and includes billboards, door numbers, movie posters, and signboards. It contains 2000 training images and 3000 test images.

(2) SVT [29]. The SVT dataset contains street images collected from Google Street View. Its images are noisy, blurred, and of low resolution, and it includes 257 training images and 647 test images.

(3) ICDAR2003 (IC03) [30]. This dataset is obtained by filtering out non-alphanumeric characters and images with fewer than three characters from the IC03 dataset. It includes a total of 867 test images.

(4) ICDAR2013 (IC13) [31]. This dataset is obtained by filtering out non-alphanumeric characters from the IC13 dataset. It includes 857 training images and 1,015 test images.

(5) ICDAR2015 (IC15) [32]. The IC15 dataset was mainly captured with Google Glass during the wearer’s activities, so it contains considerable noise and many low-resolution images. This dataset has two versions: one has 1811 images and is obtained after removing non-alphanumeric characters, and the other has 2077 images. We use the latter version for testing.

(6) SVT Perspective (SVTP) [33]. Like SVT, this dataset is collected from Google Street View. Many of its images contain perspective distortion, and it contains a total of 645 test images.

(7) CUTE80 (CUTE) [34]. Most images in this dataset contain curved text. A total of 288 test images are available.

4.2 Network Structure and Parameter Settings

We first present the implementation details of NRSTRNet (Fig. 2). Each LSTM unit has 256 hidden nodes. Training images are resized to \(100\times 32\) pixels before being fed to the convolutional layers for feature extraction. The training data are randomly shuffled during training, and the batch size is 128. To increase the diversity of training samples, each batch contains 64 samples from the MJ dataset and 64 samples from the ST dataset. The network is trained using the AdaDelta algorithm with a decay rate of \(\rho =0.95\).
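For reference, the AdaDelta update rule (Zeiler, 2012) with decay rate \(\rho\) is sketched below in NumPy. The values of \(\epsilon\) (1e-6) and the learning-rate multiplier (1.0) are assumptions, since only \(\rho=0.95\) is specified here, and the toy quadratic objective is purely illustrative.

```python
import numpy as np


class AdaDelta:
    """AdaDelta with decay rate rho; eps and lr are assumed values."""

    def __init__(self, rho=0.95, eps=1e-6, lr=1.0):
        self.rho, self.eps, self.lr = rho, eps, lr
        self.avg_sq_grad = None    # running average E[g^2]
        self.avg_sq_delta = None   # running average E[dx^2]

    def step(self, x, grad):
        if self.avg_sq_grad is None:
            self.avg_sq_grad = np.zeros_like(x)
            self.avg_sq_delta = np.zeros_like(x)
        rho, eps = self.rho, self.eps
        self.avg_sq_grad = rho * self.avg_sq_grad + (1 - rho) * grad ** 2
        # per-parameter step: RMS of past updates divided by RMS of gradients
        delta = -np.sqrt(self.avg_sq_delta + eps) / np.sqrt(self.avg_sq_grad + eps) * grad
        self.avg_sq_delta = rho * self.avg_sq_delta + (1 - rho) * delta ** 2
        return x + self.lr * delta


opt = AdaDelta(rho=0.95)
x = np.array([5.0])
for _ in range(200):
    x = opt.step(x, 2 * x)         # gradient of the toy objective f(x) = x^2
```

The ratio of running RMS values acts as an adaptive per-parameter step size, which is why AdaDelta does not require a hand-tuned learning-rate schedule.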

To further explore the factors that influence the recognition accuracy of the model, we vary the network structure and the fine granularity as follows.

(1) Influence of network structure on recognition results

To verify the influence of the network structure on recognition results, we use only the IC03 and SVTP test sets in this evaluation, where IC03 is a regular dataset and SVTP is an irregular dataset. Table 2 lists the recognition results on the two test sets after adding different modules, where CBAM denotes the convolutional block attention module, FGI the fine-grained feature coding module, and SA the self-attention module. As shown in Table 2, after adding CBAM to the residual network, the accuracy improves by 0.2% on IC03 and remains unchanged on SVTP. After adding CBAM and fine-grained coding, the accuracy improves by 0.3% on IC03 and by 0.8% on SVTP. After adding CBAM and self-attention, the accuracy improves by 0.4% on IC03 and by 1.4% on SVTP. Finally, after adding CBAM, fine-grained coding, and self-attention, the accuracy improves by 0.6% on IC03 and by 2.8% on SVTP.

Figure 9 shows the loss curves of the five models on the MJSynth and SynthText training datasets. As shown in Fig. 9, after CBAM is added, the loss of the model decreases faster than that of the baseline model, which indicates that CBAM effectively extracts the text features of an image. After the fine-grained coding module is added, the number of learned parameters increases significantly, so the loss decreases more slowly than for the previous model but still faster than for the baseline model. After the self-attention module is added, the loss decreases significantly.

(2) Influence of fine granularity on recognition results

We also examine the influence of fine granularity on the recognition results during training. The recognition accuracy of the proposed model on the test sets under different fine-grained strategies is shown in Fig. 10. The fine granularity is defined as

\[ {F}_{g}=\frac{T}{{P}_{divisions}} \]

where \({P}_{divisions}\) is the size of the fine-grained features in each batch of data during training, and \(T = 26\) is the maximum prediction length of each batch of data during training. \({F}_{g}=1\) means that the model does not use fine-grained coding; that is, the model is the Baek-CBAM-SA model.

As shown in Fig. 10, as the fine granularity gradually increases, the accuracy of the proposed model on the regular text datasets (IIIT5K, SVT, IC03, and IC13) does not change much, but its accuracy on the irregular text datasets (IC15, SVTP, and CUTE) decreases. This result indicates that an appropriate granularity enables the proposed model to achieve better recognition performance on complex and low-quality images.

4.3 Experimental Comparison of Different Methods

To determine the recognition performance of the proposed method more intuitively, we compare NRSTRNet with different methods from the literature. The results are given in Table 3, where the boldfaced values denote the best-obtained result, and the underlined values represent the second-best result. In the comparison process, the training dataset used for each model is consistent with that in the literature.

As shown in Table 3, the proposed method outperforms the other methods on several standard datasets. Compared with mainstream methods such as R2AM, STARNet, GRCNN, Rosetta, SAFE, and ViTSTR, the proposed algorithm obtains better recognition results on several test datasets. The framework of Baek et al. [4] is similar to that of the proposed method: both use ResNet as the basic feature extraction network and perform text transcription with Attn, but the character predictions of Baek’s method are strongly affected by noise. Comparing our results with those reported by Baek et al. [4] shows that the proposed method improves robustness to a certain extent, especially on the irregular datasets.

To further demonstrate the noise robustness of NRSTRNet, we add noise and blur to the regular datasets (i.e., IIIT5K, SVT, IC03, and IC13). Figure 11 shows some samples after salt-and-pepper noise is added, and Fig. 12 shows some samples after Gaussian blur is added. As shown in Tables 4 and 5, compared with the work of Baek et al. [4], the accuracy improvement of NRSTRNet on the noisy and blurred datasets is larger than that on the original datasets, indicating that NRSTRNet is a promising STR method for reducing the impact of noise. A comparison of Tables 4 and 5 also shows that the accuracy gap between NRSTRNet and the original model is larger on the noisy datasets, which indicates that NRSTRNet is more robust to noise.
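The noise levels used to build the noisy and blurred test sets are not stated in this section, so the following NumPy sketch only illustrates how salt-and-pepper noise and a separable Gaussian blur can be applied to a grayscale uint8 image; the noise fraction `amount` and the blur `sigma` are hypothetical parameters.

```python
import numpy as np


def add_salt_pepper(img, amount, rng):
    """Set a random fraction `amount` of pixels to 0 (pepper) or 255 (salt)."""
    noisy = img.copy()
    hit = rng.random(img.shape[:2]) < amount
    salt = rng.random(img.shape[:2]) < 0.5
    noisy[hit & salt] = 255
    noisy[hit & ~salt] = 0
    return noisy


def gaussian_blur(img, sigma):
    """Separable Gaussian blur of a 2-D grayscale uint8 image (edge padding)."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    k /= k.sum()                                             # normalise to preserve brightness
    f = img.astype(np.float64)
    H, W = f.shape
    p = np.pad(f, ((radius, radius), (0, 0)), mode="edge")
    f = sum(k[i] * p[i:i + H] for i in range(len(k)))        # vertical pass
    p = np.pad(f, ((0, 0), (radius, radius)), mode="edge")
    f = sum(k[i] * p[:, i:i + W] for i in range(len(k)))     # horizontal pass
    return np.clip(np.rint(f), 0, 255).astype(np.uint8)


rng = np.random.default_rng(0)
img = np.full((32, 100), 128, dtype=np.uint8)    # stand-in for a 100 x 32 text image
noisy = add_salt_pepper(img, amount=0.05, rng=rng)
blurred = gaussian_blur(img, sigma=1.0)
```

Because the Gaussian kernel is symmetric, filtering rows and columns separately is equivalent to a full 2-D Gaussian convolution at a fraction of the cost.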

To further illustrate the robustness of NRSTRNet, we test images that deviate substantially from regular text. Figure 13 shows such examples: in some, the contrast between the text and the background is low; others contain special characters that were not seen during training. The test results show that, compared with the method of Baek et al. [4], NRSTRNet handles these large deviations better and is more robust.

5 Conclusion

This paper proposes NRSTRNet to comprehensively address noise interference in scene text recognition. The experimental results support the following conclusions:

(1) NRSTRNet was thoroughly evaluated on regular and irregular datasets. The results demonstrate that NRSTRNet improves the representation of text images, is more stable under noise, and achieves superior text recognition accuracy. NRSTRNet outperforms SOTA STR models on irregular text recognition benchmarks by 2% on average and is exceptionally robust on noisy images.

(2) Detailed ablation studies show that all components of NRSTRNet are important and complementary. Fine-grained coding effectively extracts local information and slightly increases accuracy, and the self-attention module effectively integrates global information. Accuracy improves substantially when the model extracts both local and global information.

(3) The proposed fine-grained coding, which encodes each column vector of the feature map separately, effectively improves the performance of the encoder. This coding method could also be applied to NLP, speech recognition, and other fields.
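Items (2) and (3) describe the local/global design only at a high level. The following NumPy sketch illustrates the two ideas under stated assumptions; it is not the NRSTRNet implementation. The hypothetical `fine_grained_encode` treats each column of a (C, H, W) feature map as one time step and encodes it with a single shared affine map plus tanh (an assumed stand-in for the paper's coding layer), so a corrupted column cannot leak into the encodings of its neighbors. `self_attention` is standard single-head scaled dot-product self-attention, used here as an assumed stand-in for the SA module, so that every time step can draw on all others. All sizes and weights are random placeholders.

```python
from typing import Tuple

import numpy as np


def fine_grained_encode(feat: np.ndarray, w: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Encode every column of a (C, H, W) feature map on its own.

    Hypothetical stand-in for fine-grained coding: each column is flattened to a
    C*H vector and passed through the SAME affine map + tanh, so the encoding of
    column j never depends on any other column (no recurrent state).
    Returns a (W, D) sequence, one row per column / time step.
    """
    c, h, n_cols = feat.shape
    columns = feat.reshape(c * h, n_cols).T    # (W, C*H)
    return np.tanh(columns @ w + b)            # weights shared by all columns


def self_attention(x: np.ndarray, wq: np.ndarray, wk: np.ndarray,
                   wv: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Single-head scaled dot-product self-attention over a (T, D) sequence."""
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(k.shape[-1])        # (T, T) pairwise similarities
    scores -= scores.max(axis=-1, keepdims=True)   # numerically stable softmax
    attn = np.exp(scores)
    attn /= attn.sum(axis=-1, keepdims=True)       # each row sums to 1 over time steps
    return attn @ v, attn                          # every output mixes all time steps


# Usage with random (hypothetical) sizes and weights.
rng = np.random.default_rng(0)
C, H, W, D = 4, 2, 8, 16
feat = rng.standard_normal((C, H, W))
w_enc, b_enc = 0.1 * rng.standard_normal((C * H, D)), np.zeros(D)
wq, wk, wv = (0.1 * rng.standard_normal((D, D)) for _ in range(3))

local = fine_grained_encode(feat, w_enc, b_enc)    # local, per-column features
context, attn = self_attention(local, wq, wk, wv)  # globally mixed features

# Corrupting only column 3 changes only row 3 of the local encoding.
noisy = feat.copy()
noisy[:, :, 3] += 5.0
changed = ~np.all(np.isclose(local, fine_grained_encode(noisy, w_enc, b_enc)), axis=1)
print(np.flatnonzero(changed))                     # prints [3]
```

Because the encoding weights are shared across columns, the number of encoder parameters does not depend on the text length W, and the attention step is where information from the whole sequence is combined.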

In summary, NRSTRNet is a promising approach to scene text recognition and is exceptionally robust on noisy images. This study provides new insight into noise-robust scene text recognition and contributes to improving text recognition accuracy. In future work, we plan to incorporate LSTMs with skip connections into the framework to exploit additional semantic information.

Data Availability

The datasets used in this research are openly accessible at https://www.robots.ox.ac.uk/~vgg/data/text/ and https://www.robots.ox.ac.uk/~vgg/data/scenetext/.

Abbreviations

- STR: Scene text recognition
- NRSTR: Noise-robust scene text recognition
- CBAM: Convolutional block attention module
- HOG: Histogram of oriented gradients
- VGG: Visual geometry group
- RCNN: Regions with CNN features
- CNN: Convolutional neural network
- LSTM: Long short-term memory
- BiLSTM: Bidirectional LSTM
- CTC: Connectionist temporal classification
- Attn: Attention
- RNN: Recurrent neural network
- NLP: Natural language processing
- Grad-CAM: Gradient-weighted class activation mapping
- MLP: Multilayer perceptron
- BN: Batch normalization
- ReLU: Rectified linear unit
- FGI: Fine-grained feature coding module
- SA: Self-attention module

References

[1] L. Neumann, J. Matas, Real-time scene text localization and recognition. In 2012 IEEE Conference on Computer Vision and Pattern Recognition, IEEE, 2012, pp. 3538–3545

[2] K. Wang, S. Belongie, Word spotting in the wild. In European Conference on Computer Vision, Springer, 2010, pp. 591–604

[3] C. Yao, X. Bai, B. Shi, W. Liu, Strokelets: a learned multi-scale representation for scene text recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2014, pp. 4042–4049

[4] J. Baek et al., What is wrong with scene text recognition model comparisons? Dataset and model analysis. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, pp. 4715–4723

[5] B. Shi, X. Bai, C. Yao, An end-to-end trainable neural network for image-based sequence recognition and its application to scene text recognition. IEEE Trans. Pattern Anal. Mach. Intell. 39(11), 2016, pp. 2298–2304

[6] Z. Cheng, F. Bai, Y. Xu, G. Zheng, S. Pu, S. Zhou, Focusing attention: towards accurate text recognition in natural images. In Proceedings of the IEEE International Conference on Computer Vision, 2017, pp. 5076–5084

[7] S. K. Ghosh, E. Valveny, A. D. Bagdanov, Visual attention models for scene text recognition. In 2017 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), vol. 1, IEEE, 2017, pp. 943–948

[8] C.-Y. Lee, S. Osindero, Recursive recurrent nets with attention modeling for OCR in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 2231–2239

[9] D. Zhong et al., SGBANet: semantic GAN and balanced attention network for arbitrarily oriented scene text recognition. In European Conference on Computer Vision, Springer, 2022, pp. 464–480

[10] M. Huang et al., SwinTextSpotter: scene text spotting via better synergy between text detection and text recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022, pp. 4593–4603

[11] Y. He et al., Visual semantics allow for textual reasoning better in scene text recognition. In Proceedings of the AAAI Conference on Artificial Intelligence 36(1), 2022, pp. 888–896

[12] B. Shi, X. Wang, P. Lyu, C. Yao, X. Bai, Robust scene text recognition with automatic rectification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 4168–4176

[13] S. Woo, J. Park, J.-Y. Lee, I. S. Kweon, CBAM: convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), 2018, pp. 3–19

[14] X. Ma, K. He, D. Zhang, D. Li, PIEED: position information enhanced encoder-decoder framework for scene text recognition. Appl. Intell. 51(10), 2021, pp. 6698–6707

[15] M. Yaseliani, A. Z. Hamadani, A. I. Maghsoodi, A. Mosavi, Pneumonia detection proposing a hybrid deep convolutional neural network based on two parallel visual geometry group architectures and machine learning classifiers. IEEE Access 10, 2022, pp. 62110–62128

[16] S. Kido, Y. Hirano, N. Hashimoto, Detection and classification of lung abnormalities by use of convolutional neural network (CNN) and regions with CNN features (R-CNN). In 2018 International Workshop on Advanced Image Technology (IWAIT), IEEE, 2018, pp. 1–4

[17] L. Chao, J. Chen, W. Chu, Variational connectionist temporal classification. In European Conference on Computer Vision, Springer, 2020, pp. 460–476

[18] Y. Wu et al., Sequential alignment attention model for scene text recognition. J. Vis. Commun. Image Represent. 80, 2021, 103289

[19] W. Hu, X. Cai, J. Hou, S. Yi, Z. Lin, GTC: guided training of CTC towards efficient and accurate scene text recognition. In Proceedings of the AAAI Conference on Artificial Intelligence 34(07), 2020, pp. 11005–11012

[20] A. K. Bhunia, P. N. Chowdhury, A. Sain, Y.-Z. Song, Towards the unseen: iterative text recognition by distilling from errors. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021, pp. 14950–14959

[21] H. Li, P. Wang, C. Shen, G. Zhang, Show, attend and read: a simple and strong baseline for irregular text recognition. In Proceedings of the AAAI Conference on Artificial Intelligence 33(01), 2019, pp. 8610–8617

[22] F. Sheng, Z. Chen, B. Xu, NRTR: a no-recurrence sequence-to-sequence model for scene text recognition. In 2019 International Conference on Document Analysis and Recognition (ICDAR), IEEE, 2019, pp. 781–786

[23] N. Lu et al., MASTER: multi-aspect non-local network for scene text recognition. Pattern Recognit. 117, 2021, 107980

[24] L. Chen, J. Chen, H. Hajimirsadeghi, G. Mori, Adapting Grad-CAM for embedding networks. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, 2020, pp. 2794–2803

[25] C. Zhang et al., A hybrid MLP-CNN classifier for very fine resolution remotely sensed image classification. ISPRS J. Photogramm. Remote Sens. 140, 2018, pp. 133–144

[26] M. Jaderberg, K. Simonyan, A. Vedaldi, A. Zisserman, Synthetic data and artificial neural networks for natural scene text recognition. arXiv preprint arXiv:1406.2227, 2014

[27] A. Gupta, A. Vedaldi, A. Zisserman, Synthetic data for text localisation in natural images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 2315–2324

[28] A. Mishra, K. Alahari, C. Jawahar, Scene text recognition using higher order language priors. In BMVC (British Machine Vision Conference), BMVA, 2012

[29] K. Wang, B. Babenko, S. Belongie, End-to-end scene text recognition. In 2011 International Conference on Computer Vision, IEEE, 2011, pp. 1457–1464

[30] S. M. Lucas et al., ICDAR 2003 robust reading competitions: entries, results, and future directions. IJDAR 7(2), 2005, pp. 105–122

[31] D. Karatzas et al., ICDAR 2013 robust reading competition. In 2013 12th International Conference on Document Analysis and Recognition, IEEE, 2013, pp. 1484–1493

[32] D. Karatzas et al., ICDAR 2015 competition on robust reading. In 2015 13th International Conference on Document Analysis and Recognition (ICDAR), IEEE, 2015, pp. 1156–1160

[33] T. Q. Phan, P. Shivakumara, S. Tian, C. L. Tan, Recognizing text with perspective distortion in natural scenes. In Proceedings of the IEEE International Conference on Computer Vision, 2013, pp. 569–576

[34] A. Risnumawan, P. Shivakumara, C. S. Chan, C. L. Tan, A robust arbitrary text detection system for natural scene images. Expert Syst. Appl. 41(18), 2014, pp. 8027–8048

[35] W. Liu, C. Chen, K.-Y. K. Wong, Z. Su, J. Han, STAR-Net: a spatial attention residue network for scene text recognition. BMVC 2, 2016, p. 7

[36] J. Wang, X. Hu, Gated recurrent convolution neural network for OCR. Advances in Neural Information Processing Systems 30, 2017

[37] F. Borisyuk, A. Gordo, V. Sivakumar, Rosetta: large scale system for text detection and recognition in images. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, 2018, pp. 71–79

[38] R. F. Ghani, Robust character recognition for optical and natural images using deep learning. In 2019 IEEE Student Conference on Research and Development (SCOReD), IEEE, 2019, pp. 152–156

[39] R. Yan, L. Peng, S. Xiao, G. Yao, Primitive representation learning for scene text recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 284–293

Acknowledgements

The authors would like to thank the editors and the anonymous referees for their valuable time and efforts in handling and reviewing this paper.

Funding

This work was supported in part by the Characteristic Innovation Projects of Colleges and Universities of Guangdong Province under Grant 2022KTSCX091, Grant 2021KTSCX136, in part by the Guangdong Basic and Applied Basic Research Foundation under Grant 2020A1515111154, Grant 2020A1515110458, in part by the Teaching Quality and Reform Project of Guangdong University of Education under Grant 2022jxgg09, in part by the Jiangmen Science and Technology Project under Grant 2021030100050004285, and in part by the Joint Research and Development Fund of Wuyi University, Hong Kong and Macau under Grant 2021WGALH19.

Author information


Contributions

HY: conceptualization, methodology, writing (original draft), writing (review and editing), and funding acquisition. YH: data curation, methodology, software, visualization, and writing (original draft). CMV: investigation and formal analysis. YJ: resources and formal analysis. ZZ: validation. MY: validation. CC: funding acquisition, writing (review and editing), and supervision.


Ethics declarations

Conflict of Interest

The authors declare that they have no competing interests.

Ethical Approval

Not applicable.

Consent to Participate

Not applicable.

Consent for Publication

Not applicable.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yue, H., Huang, Y., Vong, CM. et al. NRSTRNet: A Novel Network for Noise-Robust Scene Text Recognition. Int J Comput Intell Syst 16, 5 (2023). https://doi.org/10.1007/s44196-023-00181-1
