Abstract

To show that an algorithm is superior to benchmark algorithms, statistical hypothesis tests are commonly applied to experimental results on a number of datasets. Some statistical hypothesis tests yield more conservative results than others, yet it has not been possible to characterize quantitatively the degree of conservativeness of such a test. Building on existing nonparametric statistical tests, this paper proposes a new statistical test for multiple comparison, named the t-Friedman test, which combines the t test with the Friedman test. The confidence level of the t test is adopted as a measure of the conservativeness of the proposed t-Friedman test: a higher confidence level implies a higher degree of conservativeness, and vice versa. Based on synthetic results generated by Monte Carlo simulations with predefined distributions, the performance of several state-of-the-art multiple comparison tests and post hoc procedures is first qualitatively analyzed. The influences of the type of predefined distribution, the number of benchmark algorithms and the number of datasets are explored in the experiments. The conservativeness measure of the proposed method is also validated in the experiments. Finally, some suggestions for the application of these nonparametric statistical tests are provided.

1 Introduction

In computer science and its applications, when a new algorithm or an improved version of an existing one (both referred to as the control algorithm in this paper) is proposed, it is important to compare it with existing algorithms (referred to as benchmark algorithms in this paper) on a number of datasets, so as to show that the control algorithm performs better than the benchmark ones, i.e. that the results of the control algorithm are statistically superior to those of the benchmark algorithms on the considered datasets. Let X1 represent the control algorithm, and X2, X3, X4, …, XK represent the \(\left( {K - 1} \right)\) benchmark algorithms. The challenge lies in choosing a test that reliably judges whether the control algorithm X1 has a significant advantage over the benchmark algorithms on the experimental datasets. Due to the diversity of datasets and various random factors in the training and testing processes, the control algorithm rarely performs better on all experimental datasets. Therefore, it is difficult to draw a meaningful conclusion without a statistical hypothesis test to support the decision process [21].

Statistical hypothesis tests can be divided into two categories, as shown in Table 1: parametric statistical tests and nonparametric statistical tests [4, 14, 23]. Parametric statistical tests assume that the result data come from a known type of probability distribution and make inferences about the parameters of that distribution [14]. In contrast, nonparametric statistical tests usually make no prior assumption about the distribution of the result data and are therefore applicable under a wider range of circumstances. Most nonparametric statistical tests use rankings instead of raw data: a transformation procedure converts the results of the control and benchmark algorithms into rankings [4, 23]. The choice of statistical test depends on the specific application, the data characteristics, and, in particular, the preferences of the researchers.

Parametric statistical tests often make a number of assumptions about the characteristics of the data being compared. For example, analysis of variance (ANOVA) requires the data to meet three conditions: independence, normality and homogeneity of variance [14]. When the data meet these assumptions, parametric statistical tests are usually more effective [14]. Otherwise, they may lead to biased conclusions. In practical applications, it is rarely possible to verify that the results obtained by the algorithms on different datasets satisfy these assumptions. Therefore, nonparametric statistical tests are commonly considered [4, 8].

When selecting a nonparametric statistical test, it is also necessary to distinguish between pairwise comparison and multiple comparison. For pairwise comparison, nonparametric statistical tests include the Wilcoxon signed rank test [7], among others. For multiple comparison, nonparametric statistical tests include the Friedman test, the Multiple Sign-test [29], and Contrast Estimation based on medians [9]. Some popular nonparametric statistical tests for multiple comparison are shown in Fig. 1.

Nonparametric Statistical Tests [14]

Although nonparametric statistical tests have been widely adopted, some questions remain open: (1) the selection of a proper nonparametric statistical test from all the candidates, (2) the minimum number of datasets for a fixed number of algorithms, and (3) the trustworthiness of the conclusion given by a nonparametric statistical test. These questions are not independent of one another. For example, the trustworthiness of the conclusion depends on the adopted nonparametric statistical test and the number of datasets, while the minimum number of datasets in turn depends on the adopted nonparametric statistical test. For the same datasets and algorithms, different nonparametric statistical tests do not necessarily draw conclusions with the same conservativeness.

Considering the above questions, this work analyzes qualitatively the conservativeness of different nonparametric statistical tests, gives an empirical minimum number of datasets for drawing trustworthy comparison results, and proposes a new multiple comparison test with a quantitative measure of conservativeness. This work does not cover all state-of-the-art nonparametric statistical tests, but focuses on variants of the Friedman test (namely the Friedman test and the Aligned Ranks Friedman test), since they have been widely adopted in published papers [3, 6, 26, 28]. The Friedman test judges whether a significant difference exists among the considered algorithms by converting the experimental results into rankings according to the achieved performance. If a significant difference exists, a post hoc procedure is needed to adjust the p-values so that they express the probability of a Type I error across the multiple comparisons, with a controlled Type II error. These post hoc procedures are also listed in Fig. 1. Among the nonparametric statistical tests mentioned above, the Friedman test is more effective and is widely used by many scholars [20, 25].

Some similar work has been reported on the analysis of nonparametric statistical tests for multiple comparison. O’Gorman [22] compared the F-test, the Friedman test and several Aligned Friedman tests with Monte Carlo simulation, taking the percentage of rejections of the null hypothesis in the simulation as the performance measure. Pereira et al. [24] used the same performance measure and compared various Friedman tests and post hoc procedures. García et al. (2010) used adjusted p-values (APVs) as the performance measure to compare the Friedman test, two of its variants, and eight post hoc procedures. However, these works do not specifically address the questions raised in the preceding paragraphs.

To quantify the conservativeness of a statistical test for multiple comparison, this paper proposes a new method named the t-Friedman test, which combines the t test and the Friedman test to make the test more flexible and trustworthy. Instead of deciding the ranks from the absolute values of the experimental results, the ranks in the t-Friedman test are given by a t test on the results of a cross-validation process. Experiments have been carried out with Monte Carlo simulations. The experimental results show the t-Friedman test to be an interesting alternative to state-of-the-art statistical tests. Some suggestions are given based on the experimental results.

The remainder of this paper is organized as follows. Section 2 introduces the related work on multiple comparison tests and post hoc procedures, as well as the proposed t-Friedman test. The experimental process and results are analyzed in Sect. 3, with some empirical suggestions and guidance. Finally, Sect. 4 summarizes this paper and gives some perspectives on future work.

2 Multiple Comparison Tests and Post Hoc Procedures

Drawing a quantitative conclusion by multiple comparison generally takes two steps. The first step is to determine whether a significant difference exists among the considered algorithms. If it does, the second step is a pairwise comparison via a post hoc procedure, which controls the probability of making a Type I error.

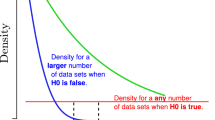

When comparing the differences among different algorithms, it is assumed that the total number of algorithms considered, including the control algorithm, is K (K ≥ 3) and the number of datasets is N. The null hypothesis is that there is no significant difference among the performances of the K algorithms on these N datasets, which is usually represented by H0. The alternative hypothesis is that significant difference exists, which is usually represented by H1.

This section first introduces the related work of multiple comparison methods, mainly the widely used Friedman test and its variant Aligned ranks Friedman test. Then, the related work of popular post hoc procedures is briefly explained. Finally, the proposed method t-Friedman test in this paper is explained in detail.

2.1 Related Work

2.1.1 Multiple Comparison Tests

This section introduces the most popular multiple comparison method Friedman test and its variant Aligned ranks Friedman test in detail.

2.1.1.1 Friedman Test

The Friedman test [12, 13] ranks the K algorithms on each dataset according to the absolute value of the results given by these algorithms. The algorithm with the highest performance is ranked 1, and the one with the lowest performance is ranked K. Then the value of the statistic based on all rankings is calculated as shown in Eqs. (1) and (2), with \({r}_{i}^{j}\) being the ranking of the performance of the j-th algorithm on the i-th dataset. This statistic follows the chi-square distribution with K-1 degrees of freedom.
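For reference, the standard form of the Friedman statistic from the literature is given below. It is assumed here that Eqs. (1) and (2) denote the average rank and the statistic, respectively; the symbols \(R_j\) and \(\chi_F^2\) are introduced for illustration.

```latex
% Assumed standard form; R_j and \chi_F^2 are illustrative symbol names.
R_j = \frac{1}{N}\sum_{i=1}^{N} r_i^j , \qquad j = 1,\dots,K

\chi_F^2 = \frac{12N}{K(K+1)}\left[\sum_{j=1}^{K} R_j^2 - \frac{K(K+1)^2}{4}\right]
```

Under the null hypothesis every \(R_j\) is expected to equal \((K+1)/2\), in which case the bracketed term vanishes.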

Iman and Davenport [5] argued that this statistic is overly conservative and, as an extension, proposed the statistic shown in Eq. (3).
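The Iman and Davenport correction is usually written as follows in the literature. It is assumed here that Eq. (3) has this form, with \(\chi_F^2\) denoting the Friedman chi-square statistic and \(F_F\) the corrected statistic (written FF in the running text).

```latex
% Assumed standard form of the Iman-Davenport statistic.
F_F = \frac{(N-1)\,\chi_F^2}{N(K-1) - \chi_F^2}
```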

The statistic FF shown in Eq. (3) follows the F distribution with K-1 and (K-1)(N-1) degrees of freedom. The critical value at the specified significance level (usually α = 0.05 or 0.01) can be obtained from the F distribution table. If the value of FF calculated with Eq. (3) is greater than this critical value, the null hypothesis is rejected, indicating that there are significant differences among the K algorithms. One may then carry out a post hoc procedure to further analyze whether the control algorithm is significantly better than each benchmark algorithm in the experiments. Conversely, if the value is less than or equal to the critical value, the null hypothesis is not rejected, indicating that no significant difference among the K algorithms has been detected.

As an alternative, the p-value can be calculated from the value of the statistic FF obtained with Eq. (3) and its distribution. The p-value is the probability, under the null hypothesis, of obtaining a result at least as extreme as the observed one. When the p-value is less than the specified significance level α, the null hypothesis can be rejected at this significance level. Otherwise, the null hypothesis is not rejected.
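The computation described in this subsection can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function names `rank_descending` and `friedman_statistics` are hypothetical, the formulas are the standard textbook forms (assumed to match Eqs. (1) to (3)), tied results share their average rank, and no tie correction is applied to the statistic. The p-value lookup is left out because it requires the F distribution, which the standard library does not provide.

```python
import math


def rank_descending(values):
    """Rank values so that the largest gets rank 1; ties share the average rank."""
    order = sorted(range(len(values)), key=lambda j: values[j], reverse=True)
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the block while the next value equals the current one (a tie).
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        avg = (pos + end) / 2 + 1  # average of the 1-based positions pos+1 .. end+1
        for idx in order[pos:end + 1]:
            ranks[idx] = avg
        pos = end + 1
    return ranks


def friedman_statistics(results):
    """results[i][j]: performance of algorithm j on dataset i (higher is better).

    Returns (average ranks, chi-square statistic, Iman-Davenport F_F).
    """
    n = len(results)      # number of datasets N
    k = len(results[0])   # number of algorithms K
    rank_rows = [rank_descending(row) for row in results]
    avg_ranks = [sum(row[j] for row in rank_rows) / n for j in range(k)]
    chi2 = 12 * n / (k * (k + 1)) * (
        sum(r * r for r in avg_ranks) - k * (k + 1) ** 2 / 4
    )
    denom = n * (k - 1) - chi2
    # F_F is unbounded when all datasets produce exactly the same ranking.
    f_f = (n - 1) * chi2 / denom if denom > 0 else math.inf
    return avg_ranks, chi2, f_f


# Hypothetical accuracies of K = 3 algorithms on N = 4 datasets.
example = [
    [0.90, 0.80, 0.70],
    [0.85, 0.80, 0.60],
    [0.95, 0.90, 0.70],
    [0.80, 0.85, 0.70],
]
avg_ranks, chi2, f_f = friedman_statistics(example)
```

The resulting `f_f` would then be compared with the critical value of the F distribution with K-1 and (K-1)(N-1) degrees of freedom.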

2.1.1.2 Aligned Ranks Friedman Test

The Friedman test ranks the considered K algorithms on each dataset separately. This process ignores the performance differences across datasets [14]. The Aligned Ranks Friedman test calculates the mean performance of all algorithms on each dataset and then subtracts this mean from the result of each algorithm on that dataset to obtain an aligned difference. The N × K aligned differences from all N datasets are then ranked jointly, where the result with the highest difference is ranked 1 and the one with the lowest difference is ranked N × K.

Finally, the value of the statistic shown in Eq. (4) is calculated according to the obtained ranking values:

where Ri· is the sum of the rankings of all algorithms on the i-th dataset and R·j is the sum of the ranks of the j-th algorithm on all datasets.
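In the standard form found in the literature, the aligned ranks statistic reads as follows. It is assumed here that Eq. (4) has this form, with \(R_{i\cdot}\) and \(R_{\cdot j}\) as defined above.

```latex
% Assumed standard form of the Aligned Ranks Friedman statistic.
T = \frac{(K-1)\left[\sum_{j=1}^{K} R_{\cdot j}^{2} - \frac{K N^{2}}{4}\,(KN+1)^{2}\right]}
         {\frac{KN(KN+1)(2KN+1)}{6} - \frac{1}{K}\sum_{i=1}^{N} R_{i\cdot}^{2}}
```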

The statistic T shown in Eq. (4) follows a chi-square distribution with K-1 degrees of freedom. The critical value at the specified significance level can be obtained from the chi-square distribution table. The null hypothesis is rejected if the value of T is greater than the critical value, and vice versa.
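The alignment and joint ranking steps can be illustrated with a short Python sketch. It is a sketch under assumptions: the function names are hypothetical, the statistic uses the standard form from the literature (assumed to match Eq. (4)), and tied aligned differences share their average rank.

```python
import math


def rank_descending(values):
    """Rank values so that the largest gets rank 1; ties share the average rank."""
    order = sorted(range(len(values)), key=lambda j: values[j], reverse=True)
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        avg = (pos + end) / 2 + 1
        for idx in order[pos:end + 1]:
            ranks[idx] = avg
        pos = end + 1
    return ranks


def aligned_friedman_statistic(results):
    """results[i][j]: performance of algorithm j on dataset i.

    Returns (aligned rank matrix, statistic T).
    """
    n = len(results)
    k = len(results[0])
    # Step 1: subtract the per-dataset mean from every result on that dataset.
    aligned = []
    for row in results:
        row_mean = sum(row) / k
        aligned.extend(value - row_mean for value in row)
    # Step 2: rank all N*K aligned differences jointly (rank 1 = largest).
    flat_ranks = rank_descending(aligned)
    ranks = [flat_ranks[i * k:(i + 1) * k] for i in range(n)]
    # Step 3: rank totals per dataset (rows) and per algorithm (columns).
    row_totals = [sum(row) for row in ranks]
    col_totals = [sum(ranks[i][j] for i in range(n)) for j in range(k)]
    numerator = (k - 1) * (
        sum(c * c for c in col_totals) - (k * n * n / 4) * (k * n + 1) ** 2
    )
    denominator = (
        k * n * (k * n + 1) * (2 * k * n + 1) / 6
        - sum(r * r for r in row_totals) / k
    )
    t_stat = numerator / denominator if denominator > 0 else math.inf
    return ranks, t_stat


# Tiny hypothetical example with N = 2 datasets and K = 2 algorithms.
ranks, t_stat = aligned_friedman_statistic([[1.0, 3.0], [2.0, 6.0]])
```

In this toy example the aligned differences are -1, 1, -2 and 2, so the joint ranks are 3, 2, 4 and 1.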

2.1.2 Post Hoc Procedures

The multiple comparison tests described in Sect. 2.1.1, i.e. the Friedman test and the Aligned Ranks Friedman test, can determine whether there are significant differences among the algorithms considered in the experiments. However, they cannot identify which algorithm is significantly better than the others. Therefore, once the null hypothesis is rejected by these multiple comparison tests, it is usually necessary to adopt a post hoc procedure for pairwise comparison. In this work, the objective is to judge whether there is a significant difference between the control algorithm and each benchmark algorithm, i.e. the control algorithm needs to be compared with each of the other K-1 benchmark algorithms.

As explained in Sect. 2.1.1.1, rejection of the null hypothesis can be justified by comparing the p-value with the specified significance level α. If the p-value of one paired comparison is smaller than the significance level, the probability of making an error by rejecting the null hypothesis is considered tolerable, so the null hypothesis can be rejected.

For multiple comparisons, there are K-1 pairs of algorithms to test. Assuming that each paired test has a probability α of making a Type I error when rejecting the null hypothesis, the probability of not making a Type I error is \(1-\alpha\). Therefore, treating the tests as independent, the probability that none of the K-1 paired tests makes a Type I error is \({\left(1-\alpha \right)}^{K-1}\), and the probability that one or more of them make a Type I error is \(1-{\left(1-\alpha \right)}^{K-1}\). When the specified significance level α is 0.05 and the number of algorithms K is 10, the probability of making one or more Type I errors across all the statistical tests is \(1-{\left(1-0.05\right)}^{10-1}=0.3698\) [14]. Clearly, the probability of making an error in multiple comparison is much greater if no additional processing is performed.
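The growth of this probability with the number of algorithms is easy to check numerically. The helper name `familywise_error_rate` below is hypothetical, and the formula assumes independent tests, as in the derivation above.

```python
def familywise_error_rate(alpha, k):
    """Probability of at least one Type I error in K-1 independent paired tests."""
    return 1 - (1 - alpha) ** (k - 1)


# Evaluate the rate for a few algorithm counts at alpha = 0.05.
rates = {k: round(familywise_error_rate(0.05, k), 4) for k in (3, 5, 10)}
```

For K = 10 this reproduces the value 0.3698 quoted above.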

A post hoc procedure adjusts the significance level α or the p-value associated with each pair of algorithms to reduce the probability of making Type I errors. The adjusted p-values are denoted APVs for short. Before launching a post hoc procedure, the unadjusted p-value of each paired comparison must be calculated. The calculation proceeds as follows.

The first step is to calculate the test statistic z for each paired algorithm.

Friedman test:

where R0 and Rj are, respectively, the average rankings given by the Friedman test to the control algorithm and to the j-th benchmark algorithm over all datasets.

Aligned Ranks Friedman test:

where R0 and Rj are, respectively, the average rankings given by the Aligned Ranks Friedman test to the control algorithm and to the j-th benchmark algorithm over all datasets.

The z-values given by Eqs. (5) and (6) approximately follow the standard normal distribution N(0,1). Therefore, the corresponding p-values can be calculated from the z-values and the normal distribution, as shown in Eqs. (7) and (8).
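A minimal Python sketch of this step is given below. It relies on assumptions: the z statistics use the standard forms from the literature, \(z=(R_0-R_j)/\sqrt{K(K+1)/(6N)}\) for the Friedman test and \(z=(R_0-R_j)/\sqrt{K(N+1)/6}\) for the Aligned Ranks Friedman test, which are assumed to match Eqs. (5) and (6); the p-value is taken as two-sided, which may differ from the convention of Eqs. (7) and (8). All function names are hypothetical.

```python
import math


def friedman_z(r0, rj, k, n):
    """z statistic for control vs. benchmark j from Friedman average ranks."""
    return (r0 - rj) / math.sqrt(k * (k + 1) / (6 * n))


def aligned_friedman_z(r0, rj, k, n):
    """z statistic for control vs. benchmark j from aligned average ranks."""
    return (r0 - rj) / math.sqrt(k * (n + 1) / 6)


def two_sided_p_value(z):
    """Two-sided p-value under N(0, 1): 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2))."""
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical average ranks: control 1.25, benchmark 3.0, with K = 3 and N = 4.
z = friedman_z(1.25, 3.0, k=3, n=4)
p = two_sided_p_value(z)
```

A negative z here means that the control algorithm has the smaller (better) average rank.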

Once the p-values are obtained, they can be adjusted by a post hoc procedure. The post hoc procedures can be divided into four categories according to the adjustment process, and the APVs are calculated as follows [11, 14, 16].

Suppose that \({p}_{1},{p}_{2}...{p}_{K-1}\) are the p values when the control algorithm is compared with the K-1 benchmark algorithms, and they are arranged in ascending order, that is, \({p}_{1}\le {p}_{2}\le ...\le {p}_{K-1}\). \({H}_{01},{H}_{02}...{H}_{0(K-1)}\) are the corresponding null hypotheses.

a. One-step: Bonferroni–Dunn procedure [10]

Bonferroni–Dunn procedure:

\(AP{V}_{i}=\mathrm{min}\left\{{v}_{i},1\right\}\), where \({v}_{i}=\left(K-1\right){p}_{i}\)

b. Step-down: Holm procedure [17], Holland procedure [16], Finner procedure [11]

Holm procedure:

\(AP{V}_{i}=\mathrm{min}\left\{{v}_{i},1\right\}\), where \({v}_{i}=\mathrm{max}\left\{\left(K-j\right){p}_{j}:1\le j\le i\right\}\)

Holland procedure:

\(AP{V}_{i}=\mathrm{min}\left\{{v}_{i},1\right\}\), where \({v}_{i}=\mathrm{max}\left\{1-{\left(1-{p}_{j}\right)}^{K-j}:1\le j\le i\right\}\)

Finner procedure:

\(AP{V}_{i}=\mathrm{min}\left\{{v}_{i},1\right\}\), where \({v}_{i}=\mathrm{max}\left\{1-{\left(1-{p}_{j}\right)}^{\left(K-1\right)/j}:1\le j\le i\right\}\)

c. Step-up: Hochberg procedure [15], Hommel procedure [18], Rom procedure [27]

Hochberg procedure:

\(AP{V}_{i}=\mathrm{min}\left\{{v}_{i},1\right\}\), where \({v}_{i}=\mathrm{min}\left\{\left(K-j\right){p}_{j}:\left(K-1\right)\ge j\ge i\right\}\)

The calculation of APVs for the Hommel procedure and the Rom procedure is more complicated, so it is not described in detail here. For details, please refer to [18, 27].

d. Two-step: Li procedure [1]

Li procedure:

\(AP{V}_{i}=\mathrm{min}\left\{{v}_{i},1\right\}\), where \({v}_{i}=\frac{{p}_{i}}{{p}_{i}+1-{p}_{K-1}}.\)

After the APVs are obtained from a post hoc procedure, they are compared with the specified significance level α. If APVi < α, the corresponding null hypothesis \({H}_{0i}\) can be rejected. If all K-1 null hypotheses are rejected, it can be concluded that there are significant differences between the control algorithm and every benchmark algorithm.
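The one-step, step-down and two-step formulas listed above translate directly into code. The following Python sketch implements the Bonferroni–Dunn, Holm, Holland, Finner and Li adjustments exactly as written in this section; the step-up procedures are omitted. The function name `adjusted_p_values` and the dictionary keys are hypothetical, and the input is assumed to hold the K-1 unadjusted p-values in any order.

```python
def adjusted_p_values(p_values, k):
    """Return APVs per procedure, in the same order as the input p-values.

    p_values: the K-1 unadjusted p-values (control vs. each benchmark).
    k: total number of algorithms K, including the control algorithm.
    """
    m = k - 1
    if len(p_values) != m:
        raise ValueError("expected K-1 p-values")
    # Sort ascending so that p[0] <= p[1] <= ... corresponds to p_1 <= p_2 <= ...
    order = sorted(range(m), key=lambda i: p_values[i])
    p = [p_values[i] for i in order]

    def running_max(values):
        """v_i = max over j <= i of values[j], then capped at 1."""
        out, current = [], 0.0
        for value in values:
            current = max(current, value)
            out.append(min(current, 1.0))
        return out

    def li(p_i):
        denom = p_i + 1 - p[-1]  # p[-1] is the largest p-value, p_{K-1}
        return min(p_i / denom, 1.0) if denom > 0 else 0.0

    sorted_apvs = {
        "bonferroni_dunn": [min(m * p_i, 1.0) for p_i in p],
        "holm": running_max([(k - j) * p[j - 1] for j in range(1, k)]),
        "holland": running_max([1 - (1 - p[j - 1]) ** (k - j) for j in range(1, k)]),
        "finner": running_max([1 - (1 - p[j - 1]) ** ((k - 1) / j) for j in range(1, k)]),
        "li": [li(p_i) for p_i in p],
    }
    # Map the APVs back to the order in which the p-values were supplied.
    result = {}
    for name, values in sorted_apvs.items():
        restored = [0.0] * m
        for position, original_index in enumerate(order):
            restored[original_index] = values[position]
        result[name] = restored
    return result


# Hypothetical p-values for K = 4 (one control, three benchmarks).
apvs = adjusted_p_values([0.04, 0.01, 0.02], k=4)
rejected = {name: [v < 0.05 for v in values] for name, values in apvs.items()}
```

In this example the Bonferroni–Dunn adjustment rejects only one of the three null hypotheses at α = 0.05, while the other four procedures reject all three, which illustrates the different conservativeness of the procedures.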

2.2 Proposed Method t-Friedman Test

The existing multiple comparison tests have some drawbacks in how they rank the algorithms. Cross validation is widely adopted in experiments to reduce the influence of randomness. However, the Friedman test and the Aligned Ranks Friedman test rank the performance of all algorithms according to the mean value of the cross-validation results without considering the variance. As a result, two algorithms with similar distributions may be given different ranks because of a slight difference in their mean values. Taking the results in Table 2 as an example, the mean values of algorithm A and algorithm B are different, but the difference is very small. Considering only the mean values, the Friedman test and the Aligned Ranks Friedman test will judge that algorithm B is superior to algorithm A and assign them different rankings. In fact, there may be no significant difference between the two algorithms.

The t test, also known as Student's t test, is a hypothesis test that uses the t distribution to judge whether the difference between the mean values of two distributions is significant. Take the results in Table 2 again as an example. When the t test is applied to the performance of algorithm A and algorithm B, the calculated p-value is 0.3352. It can therefore be judged that there is no significant difference between the two algorithms at the significance level αt = 0.05.

In addition, the credibility of the existing multiple comparison tests is difficult to measure. Based on these concerns, this paper proposes a new multiple comparison method, the t-Friedman test. The new method applies the t test to the ranking process of the Friedman test. The framework of the t-Friedman test is shown in Fig. 2. For algorithms that are adjacent in the Friedman ranking, a t test is performed to identify whether the distributions of their cross-validation results differ. If the null hypothesis cannot be rejected at the specified significance level αt of the t test, it is considered that there is no significant difference between the two algorithms on this dataset, so the rankings of the two algorithms are treated as equal.

It can be found that the t-Friedman test considers both the mean value and the variance of algorithms’ performance in the cross validation for ranking the algorithms on each dataset. In addition, the significance level of t test in the proposed t-Friedman test may be adopted as a conservativeness measure, which will be described in detail in the experiment section. The subsequent calculation of statistics and post hoc procedures of t-Friedman test are the same as Friedman test.

The pseudo-code of the proposed multiple comparison method t-Friedman test is shown as follows:
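As an illustration of the ranking step described in this section, the following Python sketch ranks the algorithms on a single dataset. It is a sketch under stated assumptions, not the authors' listing: an independent two-sample Student's t test with pooled variance is assumed; the decision compares |t| with a two-sided critical value `t_crit` supplied by the caller (for example 2.447 for two samples of four folds at αt = 0.05); chains of adjacent, not significantly different algorithms are merged into one group; and tied algorithms receive the average of the ranks they span. The names `t_statistic` and `t_friedman_ranks` are hypothetical.

```python
import math
from statistics import mean, variance


def t_statistic(a, b):
    """Two-sample Student's t statistic with pooled variance (assumed variant)."""
    na, nb = len(a), len(b)
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    std_err = math.sqrt(pooled * (1 / na + 1 / nb))
    diff = mean(a) - mean(b)
    if std_err == 0:
        return 0.0 if diff == 0 else math.copysign(math.inf, diff)
    return diff / std_err


def t_friedman_ranks(cv_results, t_crit):
    """cv_results[j]: cross-validation results of algorithm j on one dataset.

    Returns one rank per algorithm; rank 1 is the best (highest mean).
    """
    # Classical Friedman order: sort algorithms by mean performance, best first.
    order = sorted(range(len(cv_results)),
                   key=lambda j: mean(cv_results[j]), reverse=True)
    # Merge adjacent algorithms whose difference is not significant.
    groups = [[order[0]]]
    for previous, current in zip(order, order[1:]):
        t_value = t_statistic(cv_results[previous], cv_results[current])
        if abs(t_value) < t_crit:
            groups[-1].append(current)   # treated as equal to its neighbour
        else:
            groups.append([current])     # significant difference: new rank group
    # Give every member of a group the average of the ranks the group spans.
    ranks = [0.0] * len(cv_results)
    next_rank = 1
    for group in groups:
        shared = next_rank + (len(group) - 1) / 2
        for j in group:
            ranks[j] = shared
        next_rank += len(group)
    return ranks


# Hypothetical four-fold results for three algorithms on one dataset.
alg_a = [0.90, 0.92, 0.91, 0.93]
alg_b = [0.91, 0.90, 0.92, 0.92]
alg_c = [0.70, 0.72, 0.71, 0.73]
tied_ranks = t_friedman_ranks([alg_b, alg_c, alg_a], t_crit=2.447)
plain_ranks = t_friedman_ranks([alg_b, alg_c, alg_a], t_crit=0.0)
```

Passing `t_crit = 0.0` treats every nonzero difference as significant, which reproduces the classical Friedman ranking when no two means coincide; this corresponds to the limiting case αt = 1. The resulting per-dataset ranks would then be fed into the same statistic and post hoc procedures as in the Friedman test.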

3 Experiments

This section compares experimentally the performance, and especially the conservativeness, of the Friedman test, the Aligned Ranks Friedman test and the proposed t-Friedman test in combination with different post hoc procedures. An empirical study of the minimum number of datasets for a specified number of algorithms is also conducted in this section. Based on the results in [7], only three post hoc procedures (i.e. the Bonferroni–Dunn, Holm, and Finner procedures) are considered in this work, since they show different power in the reported experiments. In addition, this section also investigates the performance of the proposed t-Friedman test at different significance levels αt of the t test.

3.1 Experimental Process

The experimental flowchart is shown in Fig. 3. It mainly includes experimental data generation, hypothesis testing, and analysis of the experimental results.

This paper uses Monte Carlo simulation to generate experimental data with predefined probability distributions representing the performance of an algorithm on a specific dataset. Suppose that there are K algorithms, where the first algorithm (j = 1) is the control algorithm, and that the number of datasets is N. The value Xij is the performance result of the j-th algorithm on the i-th dataset. The value of the algorithm performance is randomly generated according to Eq. (9) [24]:

where μ is the overall median and is set to 0 in the experiments, \({\beta }_{i}\) is the unknown effect of the i-th dataset, \({\tau }_{j}\) is the unknown effect of the j-th algorithm, \({\varepsilon }_{ij}\) is the random effect of i-th dataset and j-th algorithm.
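Given the four terms defined above, the additive model commonly used for this kind of simulation is written as follows. It is assumed here that Eq. (9) has this form.

```latex
% Assumed additive form of the data-generating model.
X_{ij} = \mu + \beta_i + \tau_j + \varepsilon_{ij}, \qquad i = 1,\dots,N, \quad j = 1,\dots,K
```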

When generating data, two types of probability distribution are considered, i.e. the normal distribution and the Poisson distribution. The mean value of \(\tau_{j}\) for the control algorithm is larger than that for the benchmark algorithms, so that the control algorithm performs better than the benchmark algorithms by construction. Table 3 shows the different parameter combinations of Eq. (9) used in the experiments, which are named experimental settings i–x.

The main objective is to verify, under the influence of the randomness caused by the training data (i.e. \({\varepsilon }_{ij}\)), the performance (conservativeness) of different nonparametric statistical tests in identifying a significant difference between the control algorithm and the benchmark algorithms. By comparing the results of the first eight experimental settings, one may draw some empirical conclusions on the factors that influence the minimum number of datasets needed for a maximal Type I error of 5% given by the statistical tests. The performance of the algorithms follows normal distributions in experimental settings i–iv and Poisson distributions in settings v–viii. Settings iv and viii differ slightly from the others in that the performance of the benchmark algorithms does not follow a common distribution. Experimental settings ix and x serve to verify the conservativeness of the proposed statistical test at different significance levels of the t test under normal and Poisson distributions, respectively.

In addition, the performance value Xij used for the statistical tests is the average of ten values randomly generated with Eq. (9), representing the tenfold cross-validation process that is commonly adopted in the experiments of published work [2, 19].
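The data generation for the normal-distribution settings can be sketched as follows. This is a sketch under assumptions, since the parameter values of Table 3 are not reproduced here: the additive model \(X_{ij}=\mu+\beta_i+\tau_j+\varepsilon_{ij}\) is assumed, the dataset effect is drawn once per dataset, the algorithm effect and the random effect are redrawn for each of the ten folds, and all parameter names and default values (`tau_means`, `tau_sd`, `beta_sd`, `eps_sd`) are hypothetical.

```python
import random
from statistics import mean


def simulate_performance(n_datasets, tau_means, tau_sd=1.0, beta_sd=1.0,
                         eps_sd=1.0, folds=10, mu=0.0, seed=None):
    """Return a table X where X[i][j] is the fold-averaged performance
    of algorithm j on dataset i (normal-distribution case only)."""
    rng = random.Random(seed)
    table = []
    for _ in range(n_datasets):
        beta_i = rng.gauss(0.0, beta_sd)          # effect of the i-th dataset
        row = []
        for tau_mean in tau_means:
            fold_values = [
                mu + beta_i
                + rng.gauss(tau_mean, tau_sd)     # effect of the j-th algorithm
                + rng.gauss(0.0, eps_sd)          # random effect
                for _ in range(folds)
            ]
            row.append(mean(fold_values))         # average over the folds
        table.append(row)
    return table


# Hypothetical setting: control algorithm (mean 0.5) and three benchmarks (mean 0).
table = simulate_performance(n_datasets=20, tau_means=[0.5, 0.0, 0.0, 0.0], seed=42)
```

Repeating this generation together with the hypothesis test many times and averaging the resulting APVs gives the Monte Carlo estimate used in the experiments.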

With the generated data, nine variants of statistical tests for multiple comparison are applied, with the APV as the performance indicator. The APV represents the probability of making an error when rejecting the null hypothesis. Under the same experimental settings, the smaller the APV, the less conservative the statistical test.

To reduce the influence of random factors, the process of data generation and hypothesis testing described in the previous paragraphs is repeated 1000 times in a Monte Carlo simulation. The average of the APVs over the 1000 replications is taken as the final performance of a statistical test.

By analyzing the results, this work tries to draw some empirical conclusions on the influence of the following parameters on the performance of different statistical tests, including the significance level of the t test αt, the unknown effect of the algorithm \({\tau }_{j}\), the random effect of the dataset and the algorithm, the number of datasets N and the number of algorithms K.

3.2 Experimental Results and Analysis

For simplicity, abbreviations are adopted for the different combinations of multiple comparison tests and post hoc procedures, as shown in Table 4. Figures 4 and 5 show the experimental results of setting i with the normal distribution and setting v with the Poisson distribution, respectively. In these figures, the abscissa is the number of datasets N, and the ordinate is the average APV over 1000 Monte Carlo replications. With the same numbers of algorithms K and datasets N, the average APV reflects the conservativeness of a statistical test. A higher average APV indicates that the corresponding statistical test decides more rigorously on the existence of a significant difference between the performance of the control algorithm and that of the benchmark algorithms. Note that a significant difference is identified when the APV is smaller than 0.05 in the experiments. Since the results of the different experimental settings are quite coherent, the results of experimental settings ii–iv and vi–viii are shown in Figs. 7, 8, 9, 10, 11, 12 in the Appendix. Note that in these figures, the significance level of the t test in the proposed t-Friedman test is fixed at 0.05. Figure 6 shows the evolution of the average APVs of the proposed t-Friedman test with different significance levels αt of the t test under experimental setting ix. The result of experimental setting x is shown in Fig. 13 in the Appendix.

From Figs. 4, 5 or Figs. 7, 8, 9, 10, 11, 12, one may observe that the relative order of the average APVs of the different statistical tests in Table 4 is quite similar across settings. Among the multiple comparison tests considered in the experiments, i.e. the Friedman test, the Aligned Friedman test and the proposed t-Friedman test, the t-Friedman test with a significance level of 0.05 achieves the highest average APVs in all the experimental settings, indicating that it is the most conservative one for decision-making. It is more likely than the other two multiple comparison tests to retain the null hypothesis. The Aligned Friedman test is the least conservative in the experiments and, with the same number of algorithms, it may conclude that a significant difference exists with a much smaller number of datasets.

Similarly, the relative conservativeness of the post hoc procedures combined with the same multiple comparison test is consistent across the experimental settings. The Bonferroni–Dunn procedure is the most conservative one, with the highest average APVs achieved in the experiments. The Finner procedure is the least conservative one.

Assuming that the significance level of the statistical test is α = 0.05, the null hypothesis can be rejected when APV < α, i.e. there is a significant difference between the control algorithm and the benchmark algorithms, with a probability of making a Type I error smaller than the significance level α. Tables 5 and 6 list the minimum number of datasets needed to reject the null hypothesis according to the experimental results. In the experimental settings of Table 5, i.e. settings i–iii and v–vii, the performance of all benchmark algorithms follows the same distribution. The results in Table 6 are from experimental settings iv and viii. Since the degree of conservativeness of the t-Friedman test is adjustable, the minimum number of datasets needed differs with the degree of conservativeness, so the t-Friedman test is not listed in Tables 5 and 6.

These two tables again reflect the conservativeness of the different multiple comparison tests and post hoc procedures. The larger the number in Tables 5 and 6, the more conservative the corresponding test. Besides the adopted statistical test, Tables 5 and 6 also show other factors that influence the minimum number of datasets required for rejecting the null hypothesis, i.e. the difference in performance and the number of benchmark algorithms.

The difference in performance means the absolute difference between the performance of the control algorithm and that of the benchmark algorithms, i.e. the degree to which the control algorithm is better than the benchmark algorithms. In the experiments, it is reflected in the difference between the mean values of the distributions \({\tau }_{j}\) in the experimental settings. When the difference is larger, far fewer datasets are required. For example, the minimum number of datasets required by F_bonf is 27 with a mean value difference of 0.5 in normal distributions with 4 algorithms, while this number is only 4 with a mean value difference of 2 in normal distributions with 4 algorithms. When the mean difference is much larger than the standard deviation of the distributions \({\tau }_{j}\), the influence of a further increase in the mean difference diminishes, as the overlap between the distributions approaches zero. More datasets are required as the number of benchmark algorithms increases.

The previous experiments show that the proposed t-Friedman test, with a significance level αt of 0.05 in the adopted t test, is more conservative than the Friedman test and the Aligned Friedman test. A rejection of the null hypothesis by the t-Friedman test is therefore quite trustworthy for a decision maker who relies on a more conservative statistical test. Due to practical constraints, one may not always be able to adopt the most conservative statistical test. However, it is still useful to characterize quantitatively the conservativeness level of the adopted statistical test. One advantage of the proposed t-Friedman test is that the significance level αt is adjustable. Figures 6 and 13 show how the performance of the t-Friedman test changes with αt. These figures show that as the significance level αt of the t test increases, that is, as the confidence level 1-αt of the t test decreases, the degree of conservativeness of the t-Friedman test becomes lower and approaches that of the classical Friedman test. Therefore, the confidence level of the t test \(1 - \alpha_{t}\) can be adopted as a measure of the conservativeness of the proposed t-Friedman test. A higher confidence level of the t test implies a higher degree of conservativeness of the t-Friedman test. In other words, the t-Friedman test can flexibly adjust its own degree of conservativeness through the significance level αt of the t test.

Considering the fact that the proposed t-Friedman test reduces to the classical Friedman test when αt equals 1, the authors recommend the t-Friedman test to researchers in related fields for their future work. Researchers can then report the conservativeness of their statistical test results. One main benefit is that readers of their work may assess to what degree the reported results can be trusted.

3.3 Suggestions for the Application of These Statistical Tests

Through the analysis of the experimental results, the following suggestions are given:

1. Since the performance difference between the control algorithm and the benchmark algorithms is not exactly known in real applications, using more datasets is always desirable.

2. A more conservative statistical test is recommended for rejecting the null hypothesis. The Friedman test and the t-Friedman test are recommended over the Aligned Friedman test.

3. Considering the fact that the classical Friedman test is a special case of the proposed t-Friedman test when αt equals 1, the authors recommend the t-Friedman test to researchers for their future work. Researchers can then report the conservativeness of their statistical test results. One main benefit is that readers of their work can assess to what degree the reported results can be trusted.

4. For the commonly adopted five- or tenfold cross-validation, one may repeat the cross-validation process several times. Owing to the randomness of the cross-validation procedure itself, more performance values can be obtained, which helps to satisfy the normality assumption of the t test.
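Suggestion 4 can be sketched as follows. This is a minimal illustration under stated assumptions: `score_fn` is a hypothetical user-supplied function that trains on the training indices and returns a performance value on the test indices, and the function names and default parameters are illustrative rather than taken from this paper. Repeating a five-fold cross-validation three times with different shuffles yields 15 performance values per algorithm and dataset instead of 5.

```python
import random


def repeated_kfold_indices(n_samples, n_folds=5, n_repeats=3, seed=0):
    """Yield (train_idx, test_idx) pairs for n_repeats reshuffled runs of n_folds-fold CV."""
    rng = random.Random(seed)
    for _ in range(n_repeats):
        idx = list(range(n_samples))
        rng.shuffle(idx)  # a fresh shuffle gives a different partition in each repeat
        for f in range(n_folds):
            test = idx[f::n_folds]  # every n_folds-th shuffled sample forms fold f
            test_set = set(test)
            train = [i for i in idx if i not in test_set]
            yield train, test


def collect_scores(n_samples, score_fn, n_folds=5, n_repeats=3, seed=0):
    """Return n_folds * n_repeats performance values from the hypothetical score_fn."""
    return [
        score_fn(train, test)
        for train, test in repeated_kfold_indices(n_samples, n_folds, n_repeats, seed)
    ]
```

The resulting list of scores for each algorithm on each dataset is the kind of sample a t test would then be applied to.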

4 Conclusions

Nonparametric statistical tests (combinations of a nonparametric multiple comparison test and a post hoc procedure) for multiple comparison have been widely adopted to identify significant differences between the performance of a control algorithm and that of the benchmark ones. These nonparametric statistical tests draw conclusions with different degrees of conservativeness. A decision maker prefers a more conservative statistical test, which gives higher trust in the rejection of the null hypothesis. Besides the adopted statistical test itself, other factors influence the trustworthiness of a statistical test, such as the number of datasets adopted in the experiment and the number of benchmark algorithms considered. State-of-the-art work also lacks a quantitative measure of conservativeness. Thus, following an analysis of the popular Friedman test and one of its variants, the t-Friedman test is proposed in this work by integrating the classical Friedman test and the t test. Rigorous experiments have been designed to verify qualitatively the conservativeness of the multiple comparison tests and of the post hoc procedures. The minimum number of datasets for rejecting the null hypothesis with a maximal Type I error of 0.05 is also discussed, and some empirical suggestions are given. The experimental results show that the confidence level of the t test in the proposed t-Friedman test may be adopted as the conservativeness measure. Considering that the Aligned Friedman test is the least conservative and that the proposed t-Friedman test approaches the Friedman test as the significance level of the t test approaches one, researchers in related fields are recommended to adopt the proposed multiple comparison test and to report the conservativeness level along with the APVs given by the different post hoc procedures.

In addition, this paper aims to propose the idea and framework of integrating a pairwise comparison test into a multiple comparison test. This paper combines the t test with the Friedman test, and the effectiveness of the combination is verified in the experiments. It is therefore reasonable to expect that combinations of other methods may also be feasible. For example, future work may combine the U test or the F test with the Aligned Ranks Friedman test. Similarly, only the commonly adopted normal and Poisson distributions are used in the experiments to generate the simulation data for verifying the effectiveness of the proposed t-Friedman test. Nevertheless, it is believed that the t-Friedman test is likely to be effective for data following other types of distribution as well.

Availability of Data and Material

The data that support the findings of this study are available from the corresponding author upon request.

Abbreviations

- t-Friedman test: The proposed method combines t test with Friedman test for multiple comparison test

- APVs: Adjusted p-values

Funding

This work was supported in part by National Natural Science Foundation of China (No.52005027).

Author information

Contributions

JL proposed the methodology and revised manuscript; YX implemented the algorithm and wrote initial manuscript; all authors read and approved the final manuscript.

Ethics declarations

Conflict of Interest

The authors have no competing interests to declare that are relevant to the content of this article.

Ethics Approval

Not applicable.

Consent to Participate

Not applicable.

Consent for Publication

Not applicable.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liu, J., Xu, Y. T-Friedman Test: A New Statistical Test for Multiple Comparison with an Adjustable Conservativeness Measure. Int J Comput Intell Syst 15, 29 (2022). https://doi.org/10.1007/s44196-022-00083-8

DOI: https://doi.org/10.1007/s44196-022-00083-8