Abstract

Algorithmic technologies are widely applied in organizational decision-making today. They can improve resource allocation and decision-making coordination, increasing the accuracy and efficiency of decision-making within and across organizations. However, algorithmic controls also introduce and amplify organizational inequalities: female workers, people of color and other marginalized populations, and workers with low skills, limited education, or low technology literacy can be disadvantaged and discriminated against due to the lack of transparency, explainability, objectivity, and accountability in these algorithms. Through a systematic literature review, this study compares three types of organizational controls (technical, bureaucratic, and algorithmic) to identify the advantages and disadvantages of algorithmic controls. It then discusses and summarizes the literature on organizational inequality related to the use of algorithmic controls. Finally, we explore the potential of trustworthy algorithmic controls and participatory development of algorithms to mitigate the organizational inequalities associated with algorithmic controls. Our findings raise awareness of the potential organizational inequalities associated with algorithmic controls and endorse developing future generations of hiring and employment algorithms through trustworthy and participatory approaches.

1 Background

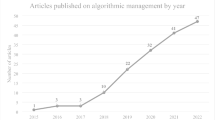

Algorithmic technologies, defined as “computer-programmed procedures that transform input data into desired outputs in ways that tend to be more encompassing” [1], have gained popularity in organizations in recent decades. Many technologies used in employment and hiring now rely on automated processes and decision-making. While many researchers believe that algorithms can improve resource allocation and decision-making coordination [2], increase the transparency and efficiency of decision-making within and across organizations [3], and enhance organizational learning [4], algorithmic decision-making is subject to data and developer biases. Artificial intelligence (AI) systems used in employment contexts (e.g., recruiting, performance evaluation, and related activities) are considered “high risk” for people’s lives under the European AI Act [5]. When organizations rely on algorithmic controls more heavily than on conventional technical or bureaucratic controls, transparency and objectivity can be limited [6], and privacy can be compromised [7, 8].

Algorithmic control is a term used to describe automated labor management practices in the contemporary digital economy [9]. In this study, we discuss algorithmic controls that employ AI technologies to collect data on workers’ behavior, physiology, and emotions in order to model, predict, and modify their organizational behavior. The widespread use of algorithmic controls in organizations can challenge organizational equality. Various organizational inequalities, such as gender, class, and racial inequalities, have been studied in recent decades [10, 11]. Where algorithmic controls are employed, the literature shows that workers can be more constrained [6], confused and frustrated [12], voiceless [13], non-cooperative [1], resistant [14, 15], and discriminated against [16, 17]. However, research on the organizational inequalities associated with algorithmic controls is still significantly lacking [1, 18]. This gap may reflect the underdeveloped literature on managing the risks and social impacts of AI. More research is needed to understand how algorithmic controls differ from traditional controls, how they reproduce organizational inequalities, and how these inequalities can be mitigated in organizations.

Literature on trustworthy AI is emerging that addresses the lawfulness, ethics, and responsibility of AI systems [19,20,21,22,23]. Developing trustworthy AI technologies contributes to the accountability [19, 20], explainability (National Institute of Standards and Technology, NIST [24]), and transparency [19] of algorithms, and helps ensure non-discrimination against end users [21] and the privacy of the individuals whose data are collected [24]. Trustworthy AI also establishes requirements for each stage of the AI life cycle (e.g., data sanitization, robust algorithms, and anomaly monitoring) to support robustness, generalization, explainability, transparency, reproducibility, fairness, privacy preservation, and accountability [25]. Although many regulatory entities have published guidance on developing trustworthy AI [24, 26], such trustworthiness may be assured only through adequate internal controls [22] or external compliance and ethics-based audits [19, 27, 28]. Through a systematic literature review, we examine whether and how trustworthy algorithmic controls can mitigate relevant organizational inequalities.

Participatory AI, also known as inclusive and equitable AI or co-creative AI, is a field that has emerged in recent years [29]. It allows various stakeholders to participate in the design, development, and decision-making processes of AI systems [29]. Unlike traditional approaches, in which AI systems are developed and controlled primarily by a small group of experts, participatory AI seeks to democratize technology by actively engaging individuals and communities who may be affected by its applications or who have valuable insights into them [30]. Participatory AI involves various participatory processes, including co-design, public consultation, citizen science initiatives, and ongoing collaboration among researchers, developers, and the community. Through these processes, participants collectively define the problem, identify data sources, create algorithmic models, establish evaluation metrics, and shape the AI system’s deployment and governance framework [29].

Participatory AI recognizes that the influence of AI is not limited to technical aspects but also includes ethical and sociocultural contexts. It can help identify potential biases AI can produce in sensitive areas such as healthcare and criminal justice [31]. By incorporating diverse and inclusive perspectives from marginalized groups and communities, domain experts, policymakers, and civil society organizations, participatory AI aims to combat bias and inequality and address the needs and values of a diverse population. This systematic review also explores the potential of using participatory AI to combat biases and inequalities in algorithmic controls.

The remainder of this article is organized as follows. It first reviews three well-known types of controls (technical, bureaucratic, and algorithmic) to identify what makes algorithmic controls unique. Second, it explains the methodology used for the systematic literature review. Third, it summarizes what the review revealed about how algorithmic controls can promote organizational inequalities and how trustworthy characteristics and participatory AI can mitigate these inequalities. Finally, the article concludes with the major takeaways of this research.

1.1 Technical, bureaucratic, and algorithmic controls

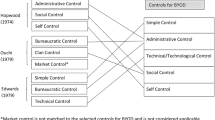

Organizational controls are dialectical processes in which employers continuously innovate to maximize the value obtained from workers. In other words, control refers to any process that managers use to direct and motivate employees to meet organizational expectations in desired ways. The literature has studied two broad types of controls: normative and rational. Under normative controls, employers earn employees’ trust [32] to obtain the desired work performance. In contrast, rational controls appeal to workers’ self-interest to compel the desired behavior [33]. This study focuses on one type of rational control: algorithmic controls.

To illustrate how rational controls work, it helps to recall that the rational model of choice assumes that human behavior is purposeful. In this model, actors enter decision situations with known objectives, which help them determine the value of the possible consequences of an action [34]. In rational control systems, worker behavior is directed by well-designed tasks, clear objectives, and reasonable incentives.

Within rational control systems, technical controls rely on machines or computer software to substitute for the presence of a supervisor; historically, they have been employed in the physical and technological aspects of production [33]. Bureaucratic controls rely on standardized rules and procedures to guide worker behavior [35]; they reduce the time and effort supervisors spend managing their subordinates. Algorithmic controls use algorithms to collect and model workers’ data in order to predict and modify their behavior, thereby improving, substituting for, or supplementing traditional means of organizational control (i.e., technical and bureaucratic controls) [18]. Each of these three types of control is discussed in detail below. The mechanisms, procedures, benefits, adverse effects, and resistance actions associated with the three types of control are compared and summarized in Tables 1 and 2.

1.1.1 Technical controls

Technical controls are exercised through physical devices that replace direct supervision [1]. Early in the twentieth century, as assembly lines were developed, employers began to set a machine-driven pace and to limit workers’ workspace [36]. Technical controls made it possible for employers to direct workers through external devices (e.g., a machine can set the pace of production). To evaluate their workers, employers record the frequency and duration of work tasks, worker productivity, accuracy, and response time [37]. If workers do not cooperate or follow the directives and goals set by their employers, secondary workers are recruited to replace them [36]. Employers expect workers to perceive themselves as under constant surveillance, which leads workers to police their own behavior in compliance with set directions [38]. However, employees may sabotage machines and related equipment [39] or collectively withhold effort [40] to resist managerial objectives.

Technical controls may also have a negative impact on worker motivation. For example, they can make workers feel alienated because workers may be deprived of the right to see themselves as commanding their own actions [41]. This alienation is evidenced by workers’ various individual and collective actions to resist technical controls, such as sabotaging machines and related equipment, stealing supplies, loafing on the job, and developing alternative technical procedures, among other measures.

1.1.2 Bureaucratic controls

Bureaucratic controls emerged in the years following World War II. Unlike technical controls, bureaucratic controls direct employees through narratives, such as job descriptions, rules, and checklists. In the bureaucratic model, decisions are made by people with both power and competency who interpret master plans; these master plans provide the rules and procedures that govern contingencies, performance expectations, and individual behavior in organizational decision-making [35]. The bureaucratic model therefore applies when organizations have stable domains and the cost of developing master plans remains under control.

Under bureaucratic controls, incentives and penalties are used to discipline employees [42]. Organizational leaders may direct worker behavior by creating rule systems (e.g., employee handbooks) that detail how to perform specific tasks and make decisions and how members’ compliance with organizational directives is evaluated. However, bureaucratic controls can create a rigid, dehumanized work environment for workers [43]. The resulting sense of lost freedom and autonomy may lead employees to resist in ways similar to those discussed for technical controls, for example through strikes. Additional forms of resistance may include cynicism, workarounds, or pro forma compliance [44].

1.1.3 Algorithmic controls

In recent decades, algorithmic controls have become a significant force that allows employers to reconfigure employer-worker relationships within and across organizations. Gillespie [45] defines algorithms as computer-programmed procedures for transforming input data into the desired output; algorithmic controls can therefore be understood as the use of these procedures to collect, analyze, and model workers’ behavioral data in order to predict and modify workers’ organizational behavior.

Scholars have identified multiple economic benefits of algorithmic controls. For example, algorithmic controls such as algorithmic recommendations in the workplace can enable individual workers to make more accurate decisions than before. Algorithmic controls also save large amounts of human labor in organizational decision-making by removing humans partially or almost entirely from the process; for example, algorithms can scan many resumes in seconds, making the recruiting process more efficient. Algorithmic controls also deliver prompt insights and feedback. In addition, they can automate coordination processes, which helps employers maximize economic value.

Team coordination, “a process that involves the use of strategies and patterns of behavior aimed to integrate actions, knowledge, and goals of interdependent members” [46], is used to achieve common goals, and automating coordination processes has been shown to provide economic efficiency [47]. Employers can also use algorithmic controls to automate organizational learning and thereby create economic value. Studies have shown how employers have used algorithmic controls to identify and learn from user patterns across individuals and then responsively change system behavior in real time [3]. Moreover, some emerging literature suggests that algorithmic controls exercised through platforms (e.g., Uber) can enhance gig workers’ motivation and enjoyment through a sense of “being your own boss” and opportunities to connect with others [48]. As mundane, repetitive, and tedious jobs are automated under algorithmic controls, workers can take on higher-value work and pursue retraining and reskilling opportunities, thus leading to higher job satisfaction [49].

Compared with technical and bureaucratic controls, algorithmic controls can constrain worker activities more tightly because they lack transparency in how they direct, evaluate, and discipline workers. That is, workers often do not fully understand how algorithms are being used to direct, evaluate, and discipline them [6]. By drawing on interactive and crowd-sourced data and procedures, these controls can also tighten managers’ power over workers and remove employees’ discretion. In the public sector, Borry and Getha-Taylor [50] found that algorithmic controls resulted in elevated gender and racial inequalities with regard to job categories and job functions. For example, under algorithmic controls, women were more highly represented in administrative support and paraprofessional roles but less represented among technicians, skilled craft, and service maintenance categories. White employees also outnumbered non-white employees in the public sector; in particular, they were significantly more represented than non-white employees in the officials and administration, professionals, and protective services categories. Employees can also come under high pressure when algorithmic controls are in place because they may fear being replaced by automation, which increases their risk of mental health issues [49].

As under the other types of control, workers resist tighter employer controls and defend their autonomy and individuality [1]. Under algorithmic controls, workers may engage in noncooperation; however, because algorithms are instantaneous and interactive, the tactics of noncooperation differ from those used under conventional controls. First, workers resist controls by ignoring recommendations or rewards provided by the algorithms. For example, web journalists and legal professionals have been found to abandon algorithmic risk evaluations, obtain desired risk scores by manipulating the input data, and claim that the rules and computational analyses behind the algorithmic systems were problematic [51]. Second, workers engage in noncooperation by disrupting algorithmic recording. For instance, Uber drivers may turn off driver mode in particular areas, stay only in residential areas, and frequently log off to avoid unwanted trips [9]. Third, workers have been shown to leverage algorithms to resist controls. For example, some Airbnb hosts try to identify the characteristics or behaviors that may affect their ratings by studying all accessible information (e.g., online forums, the company’s technical documentation, and competitors’ profiles and ratings) [15]. Finally, workers resist algorithmic controls by negotiating personally with clients to bypass or alter algorithmic ratings; such personal negotiation can explain the inflated ratings of workers (e.g., Uber drivers and Airbnb hosts) in online labor markets [14].

Overall, algorithmic controls exhibit greater efficiency than technical and bureaucratic controls. While algorithmic controls have the potential to overcome some negative impacts of traditional organizational controls (e.g., the lack of comprehensive analyses of factors causing poor performance), they also amplify existing negative impacts of traditional controls (e.g., worker frustration) and introduce new ones (e.g., loss of employee privacy). Table 1 summarizes the mechanisms by which technical, bureaucratic, and algorithmic controls direct, evaluate, and discipline worker behavior, and Table 2 compares the benefits, negative impacts, and worker resistance actions associated with the three types of organizational control.

2 Methods

2.1 Literature search

2.1.1 Database search

A comprehensive search strategy was employed to identify relevant articles on organizational inequalities associated with algorithmic controls. An initial search of ScienceDirect returned 1,094 records. The search was limited to scientific research articles written in English that were accessible through ScienceDirect, openly accessible on Google Scholar, or obtainable through university interlibrary loans, and that addressed organizational inequalities and/or power asymmetry in the context of algorithmic controls. Filtering by article type, language, and subject area in this step yielded 372 records.

2.1.2 Supplementary search

An additional search of Google Scholar was performed to augment the initial findings; it identified 35 relevant records. The inclusion criterion for this search was open access: only articles openly accessible on Google Scholar were included.

2.2 Screening process

2.2.1 Title and abstract screening

A systematic screening process was then applied to the combined 407 records (372 from the database search and 35 from the supplementary search), in which titles, keywords, and abstracts were read in full. This stage excluded 354 records, primarily because they were not relevant to the research topic or did not meet the inclusion criteria. The screening aimed to identify articles addressing organizational inequalities and power asymmetry in the context of algorithmic controls.

2.2.2 Full-text review

Subsequently, the remaining records underwent a full-text review. Articles were thoroughly examined during this phase for appropriateness to the research focus. Duplications were identified and removed, resulting in a final set of 53 articles for detailed analysis.
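The record counts reported in Sections 2.1 and 2.2 can be reconciled with a short tally, which is also what the PRISMA flow diagram should reflect. The sketch below uses only the numbers stated above; the function and argument names are our own.

```python
def tally_prisma_flow(database: int, supplementary: int, excluded_at_screening: int) -> dict:
    """Combine the two searches, then subtract title/abstract exclusions."""
    screened = database + supplementary
    return {
        "screened": screened,
        "after_title_abstract_screening": screened - excluded_at_screening,
    }


# Counts as reported: 372 database records, 35 supplementary records, 354 exclusions.
counts = tally_prisma_flow(database=372, supplementary=35, excluded_at_screening=354)
print(counts)  # {'screened': 407, 'after_title_abstract_screening': 53}
```

With the counts as reported, the 53 records left after title and abstract screening equal the final set of 53 articles.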

2.3 Data extraction and analysis

2.3.1 Data extraction

Data were extracted from the selected articles, including information on study design, methodologies employed, key findings, and relevance to organizational inequalities associated with algorithmic controls.

2.3.2 Synthesis and analysis

A qualitative synthesis approach was adopted to analyze the extracted data. Patterns, themes, and commonalities across studies were identified to gain insights into reducing organizational inequalities in the context of algorithmic controls.

2.4 Quality assessment

A quality assessment of the included articles was conducted to evaluate the rigor and validity of the studies. This assessment considered study design, sampling methods, and reporting transparency.

2.5 Reporting

The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [82] were followed to ensure transparent reporting of the systematic review process. The PRISMA flow diagram (Fig. 1) illustrates the step-by-step inclusion and exclusion of articles.

2.6 Limitations

Potential limitations of this systematic review include the initial restriction to 372 records based on article type, language, and subject area, the exclusion of non-English articles, and the reliance on available databases. These limitations are revisited at the end of the review to provide a comprehensive overview of the study’s scope.

3 Results

3.1 Organizational inequality in algorithmic controls

Inequality is defined as “systematic disparities between participants in power and control over goals, resources, and outcomes” [83]. Over the past few decades, various types of organizational inequality, including gender [84,85,86], class [52], and racial inequalities [87, 88], have been studied. Organizational inequality encompasses all possible forms of divergence in the treatment of and opportunities for workers within the same organization. Compared with male, upper-class, and white workers, female, lower-class, and non-white workers may be paid less, have fewer opportunities for promotion, be excluded from important meetings, receive fewer benefits, and be less likely to be hired and more likely to be fired, among other disadvantages. From the late 1990s through the 2000s, specific organizational processes that produce inequalities were identified and studied, including job allocation [11], performance evaluation, and wage setting [10].

As algorithmic controls have become popular, they have also introduced new sources of organizational inequality. According to Eubanks [12], poor and working-class people are targeted by many algorithmic controls across governments and corporations, with negative consequences. For example, more personal information is collected from poor and working-class people, and these data are less likely to be kept private or secure. Worse still, predictive models and algorithms label these groups as risky investments, which makes them vulnerable when claiming equal resources and rights. These new systems have been shown to have the most destructive effects on low-income communities and people of color, yet the growth of automated decision-making affects the quality of democracy for us all. Eubanks [12] argued that the use of automated decision-making algorithms “shatters the social safety net, criminalizes the poor, intensifies discrimination, and compromises our deepest national values” (p. 12). In other words, in the age of algorithms, social decisions about who we are and what we want to achieve have been reframed as systems engineering problems. In addition, societal biases against people of color may be perpetuated when AI-powered algorithmic controls are trained on biased data [89].

Recent research has analyzed organizational inequality using Kellogg et al.’s [1] “6Rs” model of algorithmic controls [18]. Kellogg et al. [1] proposed six algorithmic control mechanisms that employers use to control workers: direction mechanisms (i.e., restricting and recommending), evaluation mechanisms (i.e., recording and rating), and discipline mechanisms (i.e., replacing and rewarding). Algorithmic recommending provides suggestions to workers so that their decisions fall within managers’ preferences. Algorithmic restricting helps managers control the type and amount of information to which workers have access and limit worker behavior in certain ways, while algorithmic recording is used to monitor, aggregate, process, and report worker behavior. Algorithmic rating is used to identify patterns in the recorded data that are relevant to worker performance measures. Algorithmic replacing is used to automatically fire low-performing workers and replace them with qualified candidates. Finally, algorithmic rewarding is used to determine and distribute professional and material rewards among workers in order to modify their behavior. We summarize the research findings on organizational inequalities associated with algorithmic controls in Table 3.
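When coding reviewed studies by mechanism, the 6Rs taxonomy described above can be kept as a simple lookup table that maps each mechanism to the control function it serves. This is a hypothetical sketch: the enum, dictionary, and function names are ours, not Kellogg et al.’s.

```python
from enum import Enum


class ControlFunction(Enum):
    """The three functions of control in Kellogg et al.'s framework."""
    DIRECTION = "direction"
    EVALUATION = "evaluation"
    DISCIPLINE = "discipline"


# Each of the six "R" mechanisms mapped to the function it serves.
SIX_RS = {
    "restricting": ControlFunction.DIRECTION,
    "recommending": ControlFunction.DIRECTION,
    "recording": ControlFunction.EVALUATION,
    "rating": ControlFunction.EVALUATION,
    "replacing": ControlFunction.DISCIPLINE,
    "rewarding": ControlFunction.DISCIPLINE,
}


def mechanisms_for(function: ControlFunction) -> list:
    """Return the mechanisms that serve a given control function."""
    return [name for name, served in SIX_RS.items() if served is function]


def function_of(mechanism: str) -> ControlFunction:
    """Look up the function of a mechanism named in a study (case-insensitive)."""
    return SIX_RS[mechanism.strip().lower()]


print(mechanisms_for(ControlFunction.EVALUATION))  # ['recording', 'rating']
print(function_of("Rewarding").value)  # discipline
```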

3.2 How trustworthy AI-powered algorithmic controls reduce organizational inequalities

Research on trustworthy AI, which has emerged in the past few years, generally examines AI accountability, transparency, explainability, interpretability, fairness, safety, privacy, and security [19, 20, 22, 23, 110,111,112,113]. Researchers have advocated compliance and ethics-based audits [19, 27, 28] as well as impact assessments [114] for hiring and employment algorithms to ensure AI trustworthiness. Based on our review of the literature, we suggest that developing accountable, transparent, explainable, fair, and privacy-enhanced AI-powered algorithmic controls may mitigate the organizational inequalities associated with algorithms.

3.2.1 Accountability

Accountability fundamentally refers to the answerability of actors for outcomes to auditors, users, regulators, and other key stakeholders [110]. In the context of AI systems, accountability is achieved when organizations keep records along the dimensions of time, information, and action so that misbehavior in a protocol can be attributed to a specific party; to achieve accountability, organizations also need to reveal partial information about the contents of these records to affected people or to oversight entities [20]. AI-powered algorithmic controls should be developed following modern accountability approaches (e.g., creating logs and audit trails that explain each step of decision-making and maintaining contact lists of key actors involved in AI development and management) [19, 22, 113]. Organizations may require third-party audits to examine algorithmic accountability, and they may also demand continuous audits during AI deployment and use [19]. As lawmakers, businesses, and the general public express increasing concern about incompetent, unethical, and misused AI-powered algorithmic controls, algorithmic audits are increasingly demanded, whether by law or at clients’ request. While an algorithm is in use, continued internal monitoring and governance should also be in place [22]. In addition, it is important to provide processes through which workers can access and challenge algorithmic decisions, because workers most often have no procedures for doing so [115].
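The record-keeping described above (time, information, and action, attributable to a specific party, with partial disclosure to affected people) can be illustrated with a tamper-evident, hash-chained log. This is a minimal sketch under our own assumptions: the class and field names, the SHA-256 chaining, and the example actors are hypothetical and are not taken from [19], [20], [22], or [113].

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # Canonical JSON so the same entry always hashes to the same value.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditTrail:
    """Append-only log in which each entry commits to the one before it."""

    def __init__(self):
        self.entries = []

    def record(self, actor: str, action: str, information: dict) -> dict:
        body = {
            "time": datetime.now(timezone.utc).isoformat(),
            "actor": actor,              # who (a model version or a person) acted
            "action": action,            # what was done
            "information": information,  # inputs and context; must be JSON-serializable
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return False if an entry was altered or the chain was broken."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

    def disclose_to(self, worker_id: str) -> list:
        """Partial disclosure: when, who, and what, for entries about one worker."""
        return [
            {"time": e["time"], "actor": e["actor"], "action": e["action"]}
            for e in self.entries
            if e["information"].get("worker") == worker_id
        ]


trail = AuditTrail()
trail.record("scheduling-model-v2", "reduce_shifts", {"worker": "W-17", "reason": "rating below 4.2"})
trail.record("hr-reviewer-03", "override", {"worker": "W-17", "note": "rating disputed"})
print(trail.verify())                 # True
print(len(trail.disclose_to("W-17")))  # 2
```

Because each hash covers the previous one, editing any recorded entry invalidates the chain; removing the most recent entries is detected only if the latest hash is also stored elsewhere (for example, with an external auditor).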

3.2.2 Transparency

Transparency reflects the extent to which information about an AI system is available to individuals; it is usually compromised in “black box” models. Unlike earlier instantiations of bureaucratic controls, algorithmic controls rely on rules embedded in code that are inaccessible to the public or to end users. For example, employers using algorithmic rewarding often keep their algorithms secret to discourage manipulation and rating inflation, which leaves workers with limited transparency. According to Burrell [6], algorithms can be opaque for three major reasons: intentional secrecy, the technical literacy required to understand them, and machine-learning opacity. All of these factors may increase organizational inequality and delay its detection. Algorithms should be implemented with comprehensive transparency measures covering AI commissioning, model building, decision-making, and investigation [19].

In addition to algorithmic transparency, the training datasets used should also be made transparent. Training data can already be biased in ways that allow algorithms to inherit the prejudices of prior decision-makers [93]. Gebru et al. [116] proposed data transparency measures such as datasheets, which include a question about whether the dataset identifies any subpopulations (e.g., by race, age, or gender). Auditability and traceability measures, such as logging mechanisms, are also common solutions to transparency issues. Therefore, the system must have interfaces that enable auditing, as well as policies that allow effective access to those interfaces [20].
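Datasheets of the kind proposed by Gebru et al. [116] can be maintained as structured metadata alongside a training set, so that the subpopulation question is answered with counts rather than left blank. The sketch below is hypothetical: the fields are a small subset chosen for illustration, not the full datasheet template, and the records are placeholders rather than real data.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Datasheet:
    """A small, illustrative subset of datasheet fields for a training set."""
    name: str
    motivation: str
    collection_process: str
    subpopulation_attributes: list  # attributes by which the data identify subpopulations
    subpopulation_counts: dict = field(default_factory=dict)


def document_subpopulations(sheet: Datasheet, records: list) -> Datasheet:
    """Record how many training examples fall into each subpopulation.

    Missing values are counted as "unrecorded" rather than silently dropped,
    so gaps in demographic coverage stay visible to reviewers.
    """
    for attribute in sheet.subpopulation_attributes:
        sheet.subpopulation_counts[attribute] = dict(
            Counter(record.get(attribute, "unrecorded") for record in records)
        )
    return sheet


# Hypothetical placeholder records, not real data.
records = [
    {"gender": "F", "age_band": "25-34"},
    {"gender": "M", "age_band": "35-44"},
    {"gender": "F"},
    {"age_band": "25-34"},
]
sheet = Datasheet(
    name="hypothetical-hiring-history",
    motivation="Train a resume-screening model (illustrative).",
    collection_process="Past applications (illustrative).",
    subpopulation_attributes=["gender", "age_band"],
)
print(document_subpopulations(sheet, records).subpopulation_counts)
```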

Traceability means that the outputs of a computer system can be understood through the processes of its design and development. To achieve traceability, sufficient records must be maintained during development and operation so that the algorithm’s outputs can be reproduced, and this evidence should also be amenable to review [20]. Traceability measures may mitigate the organizational inequalities associated with opaque predictive algorithmic controls [18]. Therefore, building auditable and traceable algorithms, and maintaining this auditability and traceability during deployment and use, can effectively enhance algorithmic transparency and thereby contribute to mitigating inequality.
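One way to keep the records that traceability requires is to store, next to each batch of outputs, fingerprints of the exact inputs, parameters, and model version that produced them; a reviewer can then re-run the computation and confirm that the outputs are reproduced. The sketch below assumes a deterministic model; the linear scoring function, feature names, and manifest fields are hypothetical.

```python
import hashlib
import json


def fingerprint(obj) -> str:
    """Stable SHA-256 fingerprint of any JSON-serializable object."""
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode("utf-8")).hexdigest()


def score(features: dict, weights: dict) -> float:
    # Hypothetical stand-in for a deployed model: a deterministic linear score.
    return round(sum(w * features.get(name, 0.0) for name, w in weights.items()), 6)


def run_with_manifest(inputs: list, weights: dict, model_version: str):
    """Produce outputs together with the records needed to reproduce them."""
    outputs = [score(x, weights) for x in inputs]
    manifest = {
        "model_version": model_version,
        "weights_hash": fingerprint(weights),
        "inputs_hash": fingerprint(inputs),
        "outputs_hash": fingerprint(outputs),
    }
    return outputs, manifest


def reproduces(manifest: dict, inputs: list, weights: dict) -> bool:
    """Re-run from archived inputs and weights; True only if every field matches."""
    _, rerun = run_with_manifest(inputs, weights, manifest["model_version"])
    return rerun == manifest


weights = {"tasks_completed": 0.5, "late_arrivals": -1.0}
inputs = [{"tasks_completed": 40, "late_arrivals": 2}, {"tasks_completed": 35}]
outputs, manifest = run_with_manifest(inputs, weights, model_version="v1.3")
print(outputs)                                                        # [18.0, 17.5]
print(reproduces(manifest, inputs, weights))                          # True
print(reproduces(manifest, inputs, {**weights, "late_arrivals": -2.0}))  # False
```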

3.2.3 Explainability

Explainability means that an algorithm and its output can be explained in a way that a human being can understand. Many organizational inequality issues associated with algorithmic controls result from a lack of explainability. For instance, because of the large information asymmetry between the algorithm and workers, algorithms can easily nudge workers to make decisions in the manager’s best interest [18]. Additionally, the objective function and limitations of the algorithm are often unknown to workers, who must therefore rely on their own heuristics to decide whether to accept algorithmic recommendations [18]. Workers may become suspicious of and frustrated by opaque and unclear guidelines on how they are evaluated and rewarded [109].

To mitigate the explainability issues of algorithms in the workplace, the algorithmic controls that organizations acquire and use must be equipped with explainability measures that make them understandable not only to technical experts but also to all workers, including non-technical ones. NIST [24] proposed that the risk arising from a lack of explainability may be managed by describing how models work, with descriptions tailored to individual differences such as the user’s knowledge and skill level. Developers of management algorithms should therefore consider the knowledge and skill levels of intended users when designing and implementing explainability measures. In addition, providing workers with the training necessary to understand algorithmic decision-making is an essential step in mitigating workplace inequality.
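For a simple scoring model, the same decision can be described at different levels of detail, in line with the suggestion that descriptions be tailored to the user’s knowledge and skill level. The sketch below assumes a hypothetical linear rating model (the feature names and weights are invented for illustration). For linear models the weight-times-value decomposition is exact; non-linear models would need post hoc attribution methods, which are not shown.

```python
def contributions(features: dict, weights: dict) -> dict:
    """For a linear score, each feature's contribution is weight * value."""
    return {name: w * features.get(name, 0.0) for name, w in weights.items()}


def explain(features: dict, weights: dict, audience: str = "worker", top_n: int = 2):
    """Explain one score at a level of detail suited to the audience."""
    contrib = contributions(features, weights)
    if audience == "technical":
        # Full decomposition for auditors or developers.
        return {"score": sum(contrib.values()), "contributions": contrib}
    # Plain-language summary of the largest effects, for workers.
    ranked = sorted(contrib.items(), key=lambda item: abs(item[1]), reverse=True)[:top_n]
    phrases = []
    for name, value in ranked:
        effect = "raised" if value > 0 else "lowered" if value < 0 else "did not change"
        phrases.append(f"your {name.replace('_', ' ')} {effect} your score")
    return "The main factors: " + "; ".join(phrases) + "."


weights = {"on_time_rate": 2.0, "customer_rating": 1.0, "cancellations": -0.5}
driver = {"on_time_rate": 0.9, "customer_rating": 4.5, "cancellations": 3}
print(explain(driver, weights))
print(explain(driver, weights, audience="technical"))
```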

Toreini et al. [23] also proposed using explainability technologies to enhance the explainability of AI algorithms. These technologies focus on explaining and interpreting outcomes for stakeholders, including end users, in a manner that humans can readily understand. Overall, implementing explainable algorithmic controls is an essential step in mitigating the workplace inequality introduced by algorithmic controls.
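To illustrate how a single explanation can be tailored to different audiences, as NIST [24] suggests, the Python sketch below assumes a hypothetical linear scoring model for worker performance. `LinearScorer`, `explain`, the feature names, and the "typical worker" baseline are illustrative assumptions, not an existing tool or the method of [23]. For a linear model, each feature's contribution relative to a baseline is exactly its weight times the difference from the baseline value, and these contributions add up to the difference in scores. Many deployed algorithms are not linear, and attribution methods for such models only approximate this decomposition.

```python
from dataclasses import dataclass


@dataclass
class LinearScorer:
    """Hypothetical transparent model: score = bias + sum(weight * feature value)."""
    weights: dict
    bias: float = 0.0

    def score(self, features: dict) -> float:
        return self.bias + sum(w * features[name] for name, w in self.weights.items())

    def contributions(self, features: dict, baseline: dict) -> dict:
        # Exact for a linear model: the contributions sum to
        # score(features) - score(baseline).
        return {name: w * (features[name] - baseline[name]) for name, w in self.weights.items()}


def explain(model: LinearScorer, features: dict, baseline: dict, audience: str = "worker") -> str:
    contrib = model.contributions(features, baseline)
    ranked = sorted(contrib.items(), key=lambda kv: abs(kv[1]), reverse=True)
    if audience == "expert":
        # Technical view: signed numeric contributions, largest effect first.
        return "; ".join(f"{name}: {value:+.2f}" for name, value in ranked)
    # Plain-language view for non-technical workers: direction only, largest effect first.
    sentences = []
    for name, value in ranked:
        if value == 0:
            continue  # features that made no difference are omitted
        direction = "raised" if value > 0 else "lowered"
        sentences.append(
            f"Your {name.replace('_', ' ')} {direction} your score compared with a typical worker."
        )
    return "\n".join(sentences)


# Usage with made-up weights and values.
model = LinearScorer(weights={"on_time_rate": 2.0, "customer_rating": 1.5, "shifts_declined": -0.5})
typical = {"on_time_rate": 0.9, "customer_rating": 4.5, "shifts_declined": 2}
worker = {"on_time_rate": 0.8, "customer_rating": 4.8, "shifts_declined": 4}
print(explain(model, worker, typical, audience="expert"))
print(explain(model, worker, typical))
```

Both views are generated from the same contributions, so the plain-language version simplifies the presentation without telling workers anything different from what a technical reviewer would see.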

3.2.4 Fairness

Fairness generally concerns freedom from bias and discrimination. NIST [24] has identified three major categories of AI bias to be considered and managed: systemic (e.g., biases present in training datasets and in the organizational norms, practices, and processes of developing and using AI), computational (e.g., biases stemming from systematic errors due to non-representative samples), and human (e.g., biases in how individuals or groups perceive AI system information when using it to support decisions). Each of these can arise in the data used to train the algorithm, in the algorithm itself, in how the algorithm is used, or in people’s interaction with it. Many social and racial inequalities can be reinforced through algorithmic restricting, recommending, recording, rating, rewarding, and replacing [12, 69, 91, 92, 101,102,103, 106,107,108].

Fairness audits are perhaps the most effective way to detect bias in algorithms. Fairness audit procedures have been established by governments [117] and proposed in the literature [27, 28], and debiasing tools and techniques that assist in bias detection are also well established [21]. Fairness assessments may be conducted continuously or periodically while algorithmic controls are in use, to prevent biases from developing through reinforcement learning or a lack of monitoring.
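A basic fairness audit of a hiring or promotion algorithm compares outcomes across groups. The Python sketch below computes each group's selection rate and its ratio to the highest group's rate, flagging ratios below 0.8, the "four-fifths" rule of thumb from the US Uniform Guidelines on Employee Selection Procedures. It is a minimal illustration under that assumption, not the procedure of [117] or the toolkits in [27, 28], and a real audit would examine several metrics rather than this one. The group labels and counts in the usage lines are hypothetical. Rerunning it on each period's decisions is one simple way to support the continuous or periodic assessment described above.

```python
from collections import Counter


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs, where selected is a bool."""
    totals, selected = Counter(), Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(decisions, threshold=0.8):
    """Ratio of each group's selection rate to the highest group's rate.

    threshold=0.8 mirrors the four-fifths rule of thumb; it is a screening
    heuristic that signals where to look more closely, not a legal finding.
    """
    rates = selection_rates(decisions)
    best = max(rates.values())
    report = {}
    for group, rate in sorted(rates.items()):
        ratio = rate / best if best > 0 else 1.0  # no one selected: no disparity to measure
        report[group] = {"rate": rate, "ratio": ratio, "flagged": ratio < threshold}
    return report


# Usage with hypothetical outcomes for one review period.
decisions = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 25 + [("group_b", False)] * 75
)
for group, row in impact_ratios(decisions).items():
    status = "FLAG" if row["flagged"] else "ok"
    print(f"{group}: rate={row['rate']:.2f} ratio={row['ratio']:.2f} {status}")
```

A flag marks a disparity worth investigating; whether it reflects unlawful or unfair treatment still requires human review of the job requirements and the data behind the decisions.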

3.2.5 Privacy

Privacy refers broadly to the norms and practices that help to safeguard human autonomy, identity, and dignity [24]. The use of algorithms in the workplace poses privacy challenges. For instance, data are sometimes collected outside the workplace and may not be job-related, and organizations may draw inferences about workers at the individual or group level without their knowledge [100]. Consequently, organizations that use algorithmic controls may be more vulnerable to data breaches, the resulting legal liabilities, and non-compliance with privacy regulations [18]. To protect workers’ privacy, privacy should be addressed during the design phase. Many organizations follow established privacy laws and standards (e.g., the General Data Protection Regulation (GDPR)) or internally developed rules to enforce privacy measures in algorithms. Privacy is an important principle in many AI audit standards and frameworks [24]. It may be audited and strengthened before an organization deploys an algorithm, and auditing should continue after deployment. Workers’ own awareness is also critical for protecting their personal privacy [117], and it can be improved through continuous organizational training.
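Privacy by design can start with data minimization and pseudonymization, both of which the GDPR names as safeguards. The Python sketch below assumes a hypothetical whitelist of job-related fields (`JOB_RELATED_FIELDS`) agreed in advance; everything else, such as data collected outside the workplace, is dropped before a record reaches the algorithm, and the worker identifier is replaced with a keyed hash (HMAC-SHA256). The function names and fields are illustrative assumptions, and this is not a compliance recipe: pseudonymized data generally remains personal data under the GDPR, so the technique reduces rather than removes privacy risk.

```python
import hashlib
import hmac

# Hypothetical whitelist agreed with workers: only these fields reach the algorithm.
JOB_RELATED_FIELDS = {"role", "tasks_completed", "hours_logged"}


def pseudonymize(worker_id: str, key: bytes) -> str:
    # Keyed hash (HMAC-SHA256): the same worker always maps to the same reference,
    # so records can still be linked, but the mapping cannot be recomputed without
    # the key, which should be stored separately from the data.
    return hmac.new(key, worker_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, key: bytes) -> dict:
    """Keep only whitelisted fields and replace the direct identifier."""
    cleaned = {k: v for k, v in record.items() if k in JOB_RELATED_FIELDS}
    cleaned["worker_ref"] = pseudonymize(record["worker_id"], key)
    return cleaned


# Usage with a made-up record; the key would normally come from a secrets store.
key = b"example-key-held-outside-the-dataset"
raw = {
    "worker_id": "W-1042",
    "role": "picker",
    "tasks_completed": 118,
    "hours_logged": 7.5,
    "home_location": "52.52,13.40",            # collected outside work: dropped
    "social_media_handle": "@example_worker",  # not job-related: dropped
}
print(minimize(raw, key))
```

Using a whitelist rather than a blacklist means that any new data source is excluded by default until someone deliberately justifies it as job-related, which makes the privacy decision explicit and auditable.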

3.3 How participatory AI-powered algorithmic controls reduce organizational inequalities

Participatory algorithmic controls have the potential to reduce organizational inequality by involving a diverse range of stakeholders in the design, development, and decision-making processes of algorithms. By actively including individuals and communities who may be affected by algorithmic systems, participatory approaches can help mitigate biases, address power imbalances, and ensure that algorithms are developed and deployed fairly and equitably. The following subsections describe several ways in which participatory algorithmic controls can help reduce organizational inequality.

3.3.1 Diverse perspectives and contextual knowledge

Participatory development is an approach that prioritizes the inclusion of diverse perspectives and contextual knowledge in the development of algorithms. It acknowledges that stakeholders from a range of backgrounds can provide insights and experiences that are instrumental in identifying and addressing biases, blind spots, and potentially discriminatory outcomes in algorithms [23]. By including voices often excluded from decision-making processes, participatory algorithmic controls can mitigate biases and discriminatory effects in areas such as hiring, management, and employment. Collaborative efforts bring together individuals from marginalized communities, end users, domain experts, and civil society organizations to ensure that a diverse range of perspectives and contextual knowledge is considered [118]. For example, participatory AI that involves domain experts in algorithm design can address algorithmic biases by aligning models and decision-making criteria with specific domain needs and by mitigating explicit and implicit biases in algorithms [119, 120]. Participatory AI can also empower marginalized communities by actively involving them in the design and decision-making processes of algorithmic systems, which can lead to more equitable outcomes, help bridge the digital divide, address systemic inequalities, democratize AI, promote social justice, and give these communities a sense of ownership and control over the technologies that affect them [23, 118, 121].

3.3.2 Co-design and co-creation

In the context of algorithmic controls, participatory approaches that involve co-design and co-creation are crucial. In such approaches, stakeholders work together to design and develop algorithms, recognizing that each person brings unique insights and experiences. By involving stakeholders in defining problems, shaping models, and establishing evaluation metrics, participatory algorithmic controls promote collective problem-solving and help ensure that algorithms align with shared values and aspirations [122].

Research by Dekker et al. [123] emphasized the importance of co-design in algorithmic decision-making because involving diverse stakeholders in the design process can help identify ethical concerns, assess potential impacts, and explore alternative solutions. By actively involving stakeholders through co-design and co-creation, organizations can benefit from diverse perspectives and collective intelligence, ensuring that algorithmic systems are more responsive to the needs and values of different stakeholders [122]. For example, involving workers in the co-design and co-creation of algorithms used in hiring processes can help mitigate biases and power imbalances. Workers can contribute their insights and experiences to shape algorithms that reduce discrimination, promote fairness, and consider a broader range of qualifications beyond traditional metrics. Similarly, in performance management systems, involving workers in algorithm design can ensure that the evaluation criteria are aligned with their specific job requirements, thereby providing a more accurate and equitable assessment of their performance. This participatory approach empowers workers and gives them a sense of ownership and control over the technologies that affect them. It also mitigates the potential for algorithms to perpetuate or exacerbate power imbalances and inequalities in the workplace.

3.3.3 Accountability and transparency

Participatory algorithm development also prioritizes accountability and transparency, recognizing the importance of making the algorithm’s decision-making process more visible and comprehensible. By involving stakeholders in the development and implementation of algorithms, organizations can enhance transparency, encourage scrutiny, and address biases and unfair outcomes [124, 125]. More specifically, participatory approaches enhance transparency by making decision-making criteria, data sources, and algorithmic models accessible to stakeholders. This transparency enables individuals to gain insight into the decision-making process, understand the factors that influence algorithmic outcomes, and identify the underlying rules, assumptions, and biases embedded in the algorithms [126]. Stakeholders can therefore scrutinize algorithmic systems, validate their fairness and effectiveness, and detect potential biases, discriminatory effects, or unintended consequences.

3.3.4 Algorithmic education and awareness

Algorithmic education and awareness programs are essential for fostering a deeper understanding of algorithmic systems. Brisson-Boivin and McAleese [127] highlighted the significance of providing educational opportunities that extend beyond technical knowledge and encompass broader societal perspectives. Participatory approaches foster a culture of learning and collaboration, where stakeholders can contribute their unique perspectives and experiences. Involving stakeholders from diverse backgrounds allows for a knowledge exchange that bridges the gap between technical experts and non-experts. This inclusive approach facilitates stakeholders’ comprehension of the underlying principles, processes, and implications of algorithms, thereby enabling them to engage in a more informed and critical manner with algorithmic decision-making. Through education, stakeholders develop a critical awareness of the potential harms, unintended consequences, and ethical dilemmas associated with algorithmic decision-making [127, 128].

Tsamados et al. [129] emphasized the importance of empowering stakeholders to actively identify and challenge biases. By providing workshops, training sessions, and dialogues, organizations that employ algorithmic controls can cultivate a shared understanding of how these controls work. Workers can then better understand how algorithms make recommendations, restrict their activities, rate and reward them, and drive other related activities. Such programs empower workers to recognize and challenge biases and unfair outcomes in algorithms.

Overall, participatory algorithmic controls contribute to the reduction of organizational inequality through their focus on diversity, inclusion, transparency, accountability, and empowerment. By actively engaging stakeholders in design and decision-making, organizations can tap into a wider range of perspectives, address biases, and develop algorithmic management systems that better serve the needs and values of a diverse population of workers. Through this inclusive approach, participatory algorithmic controls promote more equitable and responsible organizational practices.

4 Conclusion

This study provides an overview of the differences among technical, bureaucratic, and algorithmic controls employed in organizational management, the organizational inequalities that algorithmic controls can introduce, and approaches to mitigating those inequalities. Our findings revealed various types of organizational inequality that correspond to the six mechanisms of algorithmic control that Kellogg et al. [1] identify employers using to control workers (i.e., restricting, recommending, recording, rating, replacing, and rewarding). To mitigate these inequalities, we propose the development of trustworthy algorithmic controls and participatory algorithmic controls.

Trustworthy algorithmic controls give workers ways to understand how a particular decision is made, thus enhancing the transparency and accountability of algorithmic recommendations and evaluations. Through effective internal review and external assurance of the algorithm, workers’ data privacy can be better protected. Because algorithms used in employment contexts are classified as high risk (e.g., under the European AI Act), they must undergo conformity assessment under applicable laws and standards to ensure accuracy, fairness, privacy, transparency, human oversight, and data governance. Future generations of algorithmic controls should be made lawful and ethical through both the conformity and compliance audits required by law and voluntary audits and assessments that enhance workers’ trust and acceptance.

Participatory development of algorithms has several implications for promoting fairness, equity, and inclusivity within organizations. By involving diverse stakeholders in algorithm design, development, and decision-making processes, organizations can address biases, enhance transparency, integrate ethical considerations, empower stakeholders through education, and continuously improve algorithms based on feedback. These practices help build trust, legitimacy, and accountability while ensuring that algorithms align with societal values and reduce organizational inequality. Ultimately, participatory approaches foster responsible, human-centric algorithmic controls that benefit workers in organizations.

Data availability

Data sharing is not applicable to this article, as no datasets were generated or analyzed during the current study.

Code availability

Not applicable.

References

Kellogg KC, Valentine MA, Christin A. Algorithms at work: the new contested terrain of control. Acad Manag Ann. 2020;14(1):366–410. https://doi.org/10.5465/annals.2018.0174.

Rodgers W, Murray JM, Stefanidis A, Degbey WY, Tarba SY. An artificial intelligence algorithmic approach to ethical decision-making in human resource management processes. Hum Resour Manag Rev. 2023;33(1): 100925. https://doi.org/10.1016/j.hrmr.2022.100925.

Liu M, Huang Y, Zhang D. Gamification’s impact on manufacturing: enhancing job motivation, satisfaction and operational performance with smartphone-based gamified job design. Hum Factors Ergon Manuf Serv Ind. 2018;28(1):38–51. https://doi.org/10.1002/hfm.20723.

Liu YE, Mandel T, Brunskill E, Popovic Z. Trading off scientific knowledge and user learning with multi-armed bandits. In EDM, London, United Kingdom; 2014. pp. 161–168.

European Commission. Proposal for regulation of the European parliament and of the council—Laying down harmonised rules on artificial intelligence (artificial intelligence act) and amending certain Union legislative acts. 2021, April 21. https://digital-strategy.ec.europa.eu/en/library/proposal-regulation-laying-down-harmonised-rules-artificial-intelligence. Accessed 7 December 2023.

Burrell J. How the machine ‘thinks’: understanding opacity in machine learning algorithms. Big Data Soc. 2016;3(1):1–12. https://doi.org/10.1177/2053951715622512.

Anteby M, Chan CK. A self-fulfilling cycle of coercive surveillance: workers’ invisibility practices and managerial justification. Organ Sci. 2018;29(2):247–63. https://doi.org/10.1287/orsc.2017.1175.

Fourcade M, Healy K. Seeing like a market. Soc Econ Rev. 2016;15(1):9–29. https://doi.org/10.1093/ser/mww033.

Lee MK, Kusbit D, Metsky E, Dabbish L. Working with machines: The impact of algorithmic and data-driven management on human workers. In Proceedings of the 33rd annual ACM conference on human factors in computing systems, Seoul, Republic of Korea; 2015. pp. 1603–1612. https://doi.org/10.1145/2702123.2702548.

Castilla EJ. Gender, race, and meritocracy in organizational careers. Am J Sociol. 2008;113(6):1479–526. https://doi.org/10.1086/588738.

Petersen T, Saporta I. The opportunity structure for discrimination. Am J Sociol. 2004;109:852–901.

Eubanks V. Automating inequality: how high-tech tools profile, police, and punish the poor. New York: St. Martin’s Press; 2018.

Askay DA. Silence in the crowd: the spiral of silence contributing to the positive bias of opinions in an online review system. New Media Soc. 2015;17(11):1811–29. https://doi.org/10.1177/1461444814535190.

Filippas A, Horton JJ, Golden J. Reputation inflation. In Proceedings of the 2018 ACM Conference on Economics and Computation; 2018. pp. 483–484.

Jhaver S, Karpfen Y, Antin J. Algorithmic anxiety and coping strategies of Airbnb hosts. In Proceedings of the 2018 CHI conference on human factors in computing systems, Montreal, QC, Canada; 2018. pp. 1–12. https://doi.org/10.1145/3173574.3173995.

Kittur A, Smus B, Khamkar S, Kraut RE. Crowdforge: Crowdsourcing complex work. In Proceedings of the 24th annual ACM symposium on User interface software and technology, Santa Barbara, California, USA; 2011. pp. 43–52. https://doi.org/10.1145/2047196.2047202.

Retelny D, Robaszkiewicz S, To A, Lasecki WS, Patel J, Rahmati N, et al. Expert crowdsourcing with flash teams. In Proceedings of the 27th annual ACM symposium on User interface software and technology, Honolulu, Hawaii, USA; 2014. pp. 75–85. https://doi.org/10.1145/2642918.2647409.

Barati M, Ansari B. Effects of algorithmic control on power asymmetry and inequality within organizations. J Manag Control. 2022;33(4):525–44. https://doi.org/10.1007/s00187-022-00347-6.

Cobbe J, Lee MSA, Singh J. Reviewable automated decision-making: A framework for accountable algorithmic systems. Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency (FAccT '21). Canada; 2021. pp. 598–609.

Kroll JA. Outlining traceability: a principle for operationalizing accountability in computing systems. In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency (FAccT ‘21), Canada; 2021. pp. 758–771.

Liu H, Wang Y, Fan W, Liu X, Li Y, Jain S, et al. Trustworthy AI: a computational perspective. ACM Trans Intell Syst Technol. 2022;14(1):1–59. https://doi.org/10.1145/3546872.

Raji ID, Smart A, White RN, Mitchell M, Gebru T, Hutchinson B, Smith-Loud J, Theron D, Barnes P. Closing the AI accountability gap: defining an end-to-end framework for internal algorithmic auditing. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency (FAT* ‘20), Barcelona, Spain; 2020. pp. 33–44.

Toreini E, Aitken M, Coopamootoo K, Elliott K, Zelaya CG, van Moorsel A. The relationship between trust in AI and trustworthy machine learning technologies. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency (FAT* ‘20), Barcelona, Spain, 2020. pp. 272–283.

NIST. AI Risk Management Framework: Second Draft. 2022. https://www.nist.gov/system/files/documents/2022/08/18/AI_RMF_2nd_draft.pdf. Accessed 2 July 2023.

Li B, Qi P, Liu B, Di S, Liu J, Pei J, Yi J, Zhou B. Trustworthy AI: from principles to practices. ACM Comput Surv. 2023;55(9):1–46. https://doi.org/10.1145/3555803.

de Almeida PGR, dos Santos CD, Farias JS. Artificial intelligence regulation: a framework for governance. Ethics Inf Technol. 2021;23(3):505–25. https://doi.org/10.1007/s10676-021-09593-z.

Saleiro P, Kuester B, Hinkson L, London J, Stevens A, Anisfeld A, et al. Aequitas: A bias and fairness audit toolkit. 2018. arXiv preprint arXiv:1811.05577.

Yan T, Zhang C. Active fairness auditing. International Conference on Machine Learning. PMLR; 2022. pp. 24929–24962.

Zhang A, Walker O, Nguyen K, Dai J, Chen A, Lee MK. Deliberating with AI: improving decision-making for the future through participatory AI design and stakeholder deliberation. Proc ACM Hum-Comput Interact. 2023;7(CSCW1):1–32. https://doi.org/10.1145/3579601.

Inie N, Falk J, Tanimoto S. Designing participatory AI: Creative professionals’ worries and expectations about generative AI. In Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery, Hamburg, Germany; 2023. https://doi.org/10.1145/3544549.3585657.

Gerdes A. A participatory data-centric approach to AI Ethics by design. Appl Artif Intell. 2022;36(1): e2009222. https://doi.org/10.1080/08839514.2021.2009222.

Osnowitz D. Occupational networking as normative control: collegial exchange among contract professionals. Work Occupations. 2006;33(1):12–41. https://doi.org/10.1177/0730888405280160.

Wu Z, Schrater P, Pitkow X. Inverse rational control: Inferring what you think from how you forage. 2018. arXiv preprint arXiv:1805.09864. https://doi.org/10.48550/arXiv.1805.09864.

Zilincik S. Emotional and rational decision-making in strategic studies: moving beyond the false dichotomy. J Strategic Security. 2022;15(1):1–13.

Yeh CP. Social control or bureaucratic control? The effects of the control mechanisms on the subsidiary performance. Asia Pac Manag Rev. 2021;26(2):67–77. https://doi.org/10.1016/j.apmrv.2020.08.004.

Rogers B. The law and political economy of workplace technological change. Harvard Civil Rights-Civil Liberties Law Review. 2020;55(2):531–84.

Aiello JR, Svec CM. Computer monitoring of work performance: extending the social facilitation framework to electronic presence. J Appl Soc Psychol. 1993;23(7):537–48. https://doi.org/10.1111/j.1559-1816.1993.tb01102.x.

Sewell G, Barker JR, Nyberg D. Working under intensive surveillance: when does ‘measuring everything that moves’ become intolerable. Human Relations. 2012;65(2):189–215. https://doi.org/10.1177/0018726711428958.

Mars G. Work place sabotage. Routledge; 2019.

Hodson R. Worker resistance: an underdeveloped concept in the sociology of work. Econ Ind Democr. 1995;16(1):79–110. https://doi.org/10.1177/0143831X950160010.

Atkinson RD, Wu JJ. False alarmism: technological disruption and the US labor market, 1850–2015. Information Technology & Innovation Foundation. 2017. https://doi.org/10.2139/ssrn.3066052.

McLoughlin IP, Badham RJ, Palmer G. Cultures of ambiguity: design, emergence and ambivalence in the introduction of normative control. Work Employ Soc. 2005;19(1):67–89. https://doi.org/10.1177/0950017005051284.

West WF, Raso C. Who shapes the rulemaking agenda? Implications for bureaucratic responsiveness and bureaucratic control. J Public Adm Res Theory. 2013;23(3):495–519. https://doi.org/10.1093/jopart/mus028.

Gill MJ. The significance of suffering in organizations: understanding variation in workers’ responses to multiple modes of control. Acad Manag Rev. 2019;44(2):377–404. https://doi.org/10.5465/amr.2016.0378.

Gillespie T. The relevance of algorithms. In: Media technologies: essays on communication, materiality, and society. Cambridge MA: The MIT Press; 2014. p. 167.

Rico R, Sánchez-Manzanares M, Gil F, Alcovery CM, Tabernero C. Coordination processes in work teams. Papeles del Psicólogo. 2011;32(1):59–68. http://www.cop.es/papeles.

Puranam P. The microstructure of organizations. Oxford: OUP; 2018.

Norlander P, Jukic N, Varma A, Nestorov S. The effects of technological supervision on gig workers: organizational control and motivation of Uber, taxi, and limousine drivers. Int J Hum Resour Manage. 2021;32(19):4053–77. https://doi.org/10.1080/09585192.2020.1867614.

Hardy S, Brougham D. Intelligent automation in New Zealand: adoption scale, impacts, barriers and enablers. N Z J Hum Resour Manag. 2022;22(1):15–31.

Borry EL, Getha-Taylor H. Automation in the public sector: efficiency at the expense of equity? Public Integrity. 2019;21(1):6–21. https://doi.org/10.1080/10999922.2018.1455488.

Christin A. Algorithms in practice: comparing web journalism and criminal justice. Big Data Soc. 2017;4(2):1–14. https://doi.org/10.1177/2053951717718855.

Burawoy M. Manufacturing consent: changes in the labor process under monopoly capitalism. Chicago: University of Chicago Press; 1979.

Robbins B. Governor Macquarie’s job descriptions and the bureaucratic control of the convict labour process. Labour Hist. 2009;96:1–18.

Pronovost P, Vohr E. Safe patients, smart hospitals: how one doctor’s checklist can help us change health care from the inside out. London: Penguin; 2010.

Lange D. A multidimensional conceptualization of organizational corruption control. Acad Manag Rev. 2008;33(3):710–29. https://doi.org/10.5465/amr.2008.32465742.

Cram WA, Wiener M. Technology-mediated control: case examples and research directions for the future of organizational control. Commun Assoc Inf Syst. 2020;46(1):4. https://doi.org/10.17705/1CAIS.04604.

Long CP, Bendersky C, Morrill C. Fairness monitoring: linking managerial controls and fairness judgments in organizations. Acad Manag J. 2011;54(5):1045–68. https://doi.org/10.5465/amj.2011.0008.

Hallonsten O. Stop evaluating science: a historical-sociological argument. Soc Sci Inf. 2021;60(1):7–26. https://doi.org/10.1177/053901842199220.

Gossett LM. Organizational control theory. Encyclopedia Commun Theory. 2009;1:706–9.

Sanford AG, Blum D, Smith SL. Seeking stability in unstable times: COVID-19 and the bureaucratic mindset. COVID-19. Routledge; 2020. pp. 47–60.

Jacobs A. The pathologies of big data. Commun ACM. 2009;52(8):36–44.

Katal A, Wazid M, Goudar RH. Big data: issues, challenges, tools and good practices. In 2013 Sixth international conference on contemporary computing (IC3). IEEE; 2013. pp. 404–409.

Cambo SA, Gergle D. User-centred evaluation for machine learning. In: Zhou J, Chen F, editors. Human and machine learning. Human-computer interaction series. Cham: Springer; 2018. https://doi.org/10.1007/978-3-319-90403-0_16.

Lix K, Goldberg A, Srivastava S, Valentine M. Expressly different: interpretive diversity and team performance. Working Paper. Stanford University; 2019.

Ahmed SI, Bidwell NJ, Zade H, Muralidhar SH, Dhareshwar A, Karachiwala B, et al. Peer-to-peer in the workplace: a view from the road. In Proceedings of the 2016 CHI conference on human factors in computing systems, San Jose, California, USA; 2016. pp. 5063–5075. https://doi.org/10.1145/2858036.2858393.

Bailey D, Erickson I, Silbey S, Teasley S. Emerging audit cultures: data, analytics, and rising quantification in professors’ work. Boston: Academy of Management; 2019.

King KG. Data analytics in human resources: a case study and critical review. Hum Resour Dev Rev. 2016;15(4):487–95. https://doi.org/10.1177/1534484316675818.

Sundararajan A. The sharing economy: the end of employment and the rise of crowd-based capitalism. Cambridge, MA: MIT Press; 2016.

Valentine M, Hinds R. Algorithms and the org chart. Working Paper. Stanford University; 2019.

Trinidad JE. Teacher response process to bureaucratic control: individual and group dynamics influencing teacher responses. Leadersh Policy Sch. 2019;18(4):533–43. https://doi.org/10.1080/15700763.2018.1475573.

Alvesson M, Karreman D. Unraveling HRM: identity, ceremony, and control in a management consulting firm. Organ Sci. 2007;18(4):711–23. https://doi.org/10.1287/orsc.1070.0267.

Moreo PJ. Control, bureaucracy, and the hospitality industry: an organizational perspective. J Hosp Educ. 1980;4(2):21–33. https://doi.org/10.1177/109634808000400203.

Bensman J, Gerver I. Crime and punishment in the factory: the function of deviancy in maintaining the social system. Am Sociol Rev. 1963;28(4):588–98. https://doi.org/10.2307/2090074.

Pollert A. Girls, wives, factory lives. London: Macmillan Press; 1981.

Bolton SC. A simple matter of control? NHS hospital nurses and new management. J Manage Stud. 2004;41(2):317–33. https://doi.org/10.1111/j.1467-6486.2004.00434.x.

Hodgson DE. Project work: the legacy of bureaucratic control in the post-bureaucratic organization. Organization. 2004;11(1):81–100. https://doi.org/10.1177/1350508404039659.

Lipsky M. Street-level bureaucracy: dilemmas of the individual in public service. New York: Russell Sage Foundation; 2010.

Rahman H. Reputational ploys: reputation and ratings in online labor markets. Working Paper. Stanford University; 2017.

Curchod C, Patriotta G, Cohen L, Neysen N. Working for an algorithm: power asymmetries and agency in online work settings. Adm Sci Q. 2020;65(3):644–76. https://doi.org/10.1177/0001839219867024.

Wood AJ, Graham M, Lehdonvirta V, Hjorth I. Good gig, bad gig: autonomy and algorithmic control in the global gig economy. Work Employ Soc. 2019;33(1):56–75. https://doi.org/10.1177/0950017018785616.

Tufekci Z. Twitter and tear gas: the power and fragility of networked protest. New Haven, CT: Yale University Press; 2017.

Chiarioni G, Popa SL, Ismaiel A, Pop C, Dumitrascu DI, Brata VD, et al. Herbal remedies for constipation-predominant irritable bowel syndrome: a systematic review of randomized controlled trials. Nutrients. 2023;15(19):4216. https://doi.org/10.3390/nu15194216.

Acker J. Inequality regimes: gender, class, and race in organizations. Gend Soc. 2006;20(4):441–64. https://doi.org/10.1177/0891243206289499.

Acker J. Hierarchies, jobs, and bodies: a theory of gendered organizations. Gend Soc. 1990;4:139–58. https://doi.org/10.1177/089124390004002002.

Ferguson KE. The feminist case against bureaucracy. Philadelphia: Temple University Press; 1984.

Kanter RM. Men and women of the corporation. New York: Basic Books; 1977.

Brown MK, Carnoy M, Currie E, Duster T, Oppenheimer DB, Shultz MM, Wellman D. White-washing race: the myth of a color-blind society. Berkeley: University of California Press; 2003.

Royster DA. Race and the invisible hand: how white networks exclude Black men from blue-collar jobs. Berkeley: University of California Press; 2003.

Miller SM, Keiser LR. Representative bureaucracy and attitudes toward automated decision making. J Public Adm Res Theory. 2020;31(1):150–65. https://doi.org/10.1093/jopart/muaa019.

Martin D, Hanrahan BV, O’Neill J, Gupta N. Being a Turker. In Proceedings of the 17th ACM conference on Computer supported cooperative work & social computing, Portland, Oregon, USA; 2014. pp. 224–235. https://doi.org/10.1145/2531602.2531663.

Angwin J, Larson J, Mattu S, Kirchner L. Machine bias: There’s software used across the country to predict future criminals. And it’s biased against blacks. ProPublica. 2016, May 23. https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing. Accessed 2 July 2023.

Harcourt BE. Against prediction: profiling, policing, and punishing in an actuarial age. Chicago: University of Chicago Press; 2007.

Barocas S, Selbst AD. Big data’s disparate impact. California Law Rev. 2016;104:671.

Cappelli P, Tambe P, Yakubovich V. Can data science change human resources? In: Canals J, Heukamp F, editors. The future of management in an AI world. Cham: Springer; 2020. p. 93–115.

Johnson BAM, Coggburn JD, Llorens JJ. Artificial intelligence and public human resource management: questions for research and practice. Public Personal Manage. 2022;51(4):538–62. https://doi.org/10.1177/00910260221126498.

Bodie MT, Cherry MA, McCormick ML, Tang J. The law and policy of people analytics. Univ Colorado Law Rev. 2017;88(1):961–1042.

Angwin J. Dragnet nation: a quest for privacy, security, and freedom in a world of relentless surveillance. New York: Henry Holt; 2014.

Miller CC. Can an algorithm hire better than a human? The New York Times; 2015 (June 25). https://www.nytimes.com/2015/06/26/upshot/can-an-algorithm-hire-better-than-a-human.html. Accessed 2 July 2023.

Gal U, Jensen T, Stein MK. Breaking the vicious cycle of algorithmic management: a virtue ethics approach to people analytics. Inf Organ. 2020;30: 100301.

Schafheitle SD, Weibel A, Ebert IL, Kasper G, Schank C, Leicht-Deobald U. No stone left unturned? Towards a framework for the impact of datafication technologies on organizational control. Acad Manag Discoveries. 2020;6(3):455–87. https://doi.org/10.5465/amd.2019.0002.

Greenwood B, Adjerid I, Angst CM. How unbecoming of you: gender biases in perceptions of ridesharing performance. In Proceedings of the Hawaii International Conference on System Sciences (HICSS); 2019. pp. 1–11.

Levy K, Barocas S. Designing against discrimination in online markets. Berkeley Technol Law J. 2017;32:1183.

Rosenblat A, Levy KE, Barocas S, Hwang T. Discriminating tastes: Uber’s customer ratings as vehicles for workplace discrimination. Policy Internet. 2017;9(3):256–79. https://doi.org/10.1002/poi3.153.

Chan J, Wang J. Hiring preferences in online labor markets: evidence of a female hiring bias. Manage Sci. 2018;64(7):2973–94. https://doi.org/10.1287/mnsc.2017.2756.

Edelman B, Luca M, Svirsky D. Racial discrimination in the sharing economy: evidence from a field experiment. Am Econ J Appl Econ. 2017;9(2):1–22. https://doi.org/10.1257/app.20160213.

Engler A. Auditing employment algorithms for discrimination. Policy Commons; 2021. https://policycommons.net/artifacts/4143733/auditing-employment-algorithms-for-discrimination/4952263/.

Wood AJ, Lehdonvirta V, Graham M. Workers of the internet unite? Online freelancer organization among remote gig economy workers in six Asian and African countries. N Technol Work Employ. 2018;33(2):95–112. https://doi.org/10.1111/ntwe.12112.

Giermindl LM, Strich F, Christ O, Leicht-Deobald U, Redzepi A. The dark sides of people analytics: reviewing the perils for organisations and employees. Eur J Inf Syst. 2021. https://doi.org/10.1080/0960085X.2021.1927213.

Rahman H. From iron cages to invisible cages: algorithmic evaluations in online labor markets. Working Paper. Stanford University; 2019.

Hutchinson B, Smart A, Hanna A, Denton E, Greer C, Kjartansson O, Barnes P, Mitchell M. Towards accountability for machine learning datasets: practices from software engineering and infrastructure. In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency (FAccT ’21), Canada; 2021. pp. 560–575.

Knowles B, Richards JT. The sanction of authority: promoting public trust in AI. In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency (FAccT ’21), Canada; 2021. pp. 262–271.

Miceli M, Yang T, Naudts L, Schuessler M, Serbanescu D, Hanna A. Documenting computer vision datasets: an invitation to reflexive data practices. In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency (FAccT ’21), Canada; 2021. pp. 161–172.

Wieringa M. What to account for when accounting for algorithms: a systematic literature review on algorithmic accountability. In Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency (FAT* ’20), Barcelona, Spain; 2020. pp. 1–18.

Yam J, Skorburg JA. From human resources to human rights: impact assessments for hiring algorithms. Ethics Inf Technol. 2021;23(4):611–23. https://doi.org/10.1007/s10676-021-09599-7.

Wexler R. The odds of justice: code of silence: how private companies hide flaws in the software that governments use to decide who goes to prison and who gets out. Chance. 2018;31(3):67–72. https://doi.org/10.1080/09332480.2018.1522217.

Gebru T, Morgenstern J, Vecchione B, Vaughan JW, Wallach H, Daumé H III, Crawford K. Datasheets for datasets. Commun ACM. 2021;64(12):86–92.

Shin D, Kee KF, Shin EY. Algorithm awareness: why user awareness is critical for personal privacy in the adoption of algorithmic platforms? Int J Inf Manage. 2022;65: 102494. https://doi.org/10.1016/j.ijinfomgt.2022.102494.

Adensamer A, Gsenger R, Klausner LD. “Computer says no”: algorithmic decision support and organisational responsibility. J Responsible Technol. 2021;7–8: 100014. https://doi.org/10.1016/j.jrt.2021.100014.

Crawford K, Calo R. There is a blind spot in AI research. Nature. 2016;538:311–3. https://doi.org/10.1038/538311a.

Simkute A, Luger E, Jones B, Evans M, Jones R. Explainability for experts: a design framework for making algorithms supporting expert decisions more explainable. J Responsible Technol. 2021;7–8: 100017. https://doi.org/10.1016/j.jrt.2021.100017.

Rakova B, Chowdhury R. Human self-determination within algorithmic sociotechnical systems. arXiv preprint arXiv:1909.06713. 2019. https://doi.org/10.48550/arXiv.1909.06713.

Bondi E, Xu L, Acosta-Navas D, Killian JA. Envisioning communities: a participatory approach towards AI for social good. In Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society. 2021. https://doi.org/10.1145/3461702.3462612.

Dekker R, Koot P, Birbil SI, van Embden Andres M. Co-designing algorithms for governance: ensuring responsible and accountable algorithmic management of refugee camp supplies. Big Data Soc. 2022. https://doi.org/10.1177/20539517221087855.

Ananny M, Crawford K. Seeing without knowing: limitations of the transparency ideal and its application to algorithmic accountability. New Media Soc. 2018;20(3):973–89.

Kossow N, Windwehr S, Jenkins M. Algorithmic transparency and accountability. Transparency International. 2021.

Lepri B, Oliver N, Letouze E, Pentland A, Vinck P. Fair, transparent, and accountable algorithmic decision-making processes: the premise, the proposed solutions, and the open challenges. Philos Technol. 2018. https://doi.org/10.1007/s13347-017-0279-x.

Nassar A, Kamal M. Ethical dilemmas in AI-powered decision-making: a deep dive into big data-driven ethical considerations. Int J Responsible Artif Intell. 2021;11(8):1–11.

Brisson-Boivin K, McAleese S. Algorithmic awareness: Conversations with young Canadians about artificial intelligence and privacy. MediaSmarts: Canada’s Centre for Digital and Media Literacy; 2021. https://priv.gc.ca/en/opc-actions-and-decisions/research/funding-for-privacy-research-and-knowledge-translation/completed-contributions-program-projects/2020-2021/p_2020-21_04/?wbdisable=true. Accessed 7 December 2023.

Tsamados A, Aggarwal N, Cowls J, Morley J, Roberts H, Taddeo M, Floridi L. The ethics of algorithms: key problems and solutions. AI Soc. 2021. https://doi.org/10.1007/s00146-021-01154-8.

Funding

Not applicable.

Author information

Contributions

Both authors have made substantial contributions to the design of the work and acquisition, analysis, and interpretation of data; drafted the work and revised it critically for important intellectual content; approved the version to be published; and agree to be accountable for all aspects of the work in ensuring that questions related to the accuracy and integrity of any part of the work are appropriately investigated and resolved.

Ethics declarations

Competing interests

All authors certify that they have no affiliations with or involvement in any organization or entity with any financial or non-financial interest in the subject matter or materials discussed in this manuscript.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, Y., Xiang, B. Reducing organizational inequalities associated with algorithmic controls. Discov Artif Intell 4, 36 (2024). https://doi.org/10.1007/s44163-024-00137-0

DOI: https://doi.org/10.1007/s44163-024-00137-0