Abstract

We consider the numerical solution of the optimal transport problem between densities that are supported on sets of unequal dimension. Recent work by McCann and Pass reformulates this problem into a non-local Monge-Ampère type equation. We provide a new level-set framework for interpreting this nonlinear PDE. We also propose a novel discretisation that combines carefully constructed monotone finite difference schemes with a variable-support discrete version of the Dirac delta function. The resulting method is consistent and monotone. These new techniques are described and implemented in the setting of 1D to 2D transport, but they can easily be generalised to higher dimensions. Several challenging computational tests validate the new numerical method.

1 Introduction

The problem of optimal transport [1] involves finding a mapping \(T:X\rightarrow Y\) that rearranges a density f supported on X into a density g supported on Y while minimising a given cost function

Here \(T\#f\) denotes the pushforward of a density f, which satisfies

for every measurable set \(E \subseteq Y\).

This problem has been widely studied, both theoretically and numerically, in the setting where the sets X and Y lie in the same ambient space (e.g. \(X,Y \subset \mathbb {R}^n\)). However, in many important problems, this simplifying assumption is not valid. In this article, we consider the problem of optimal transport between densities that are supported on sets of different dimension (\(X \subset \mathbb {R}^n\), \(Y\subset \mathbb {R}^m\), \(n<m\)). This setting is critical to applications such as economics and game theory (\(m \ge n \ge 1\)) [2,3,4], simulation of atmosphere and ocean dynamics (\(2 \le n \le 3 = m\)) [5], and Generative Adversarial Networks (\(m \ne n\)) [6].

Despite the importance of this problem, almost nothing is known about its numerical solution except in a couple of special cases. An algorithm exists that exploits the simplified structure of a one-dimensional target (\(n=1\)) combined with significant extra structure on the problem data [2]. The problem can also be solved in the special case of a quadratic cost (\(c(x,y) = \Vert x-y\Vert ^2\)) by making use of powerful techniques developed for semi-discrete optimal transport [7]. Utilising the linear programming structure of the optimal transport problem is theoretically possible but computationally infeasible, as it requires the discretisation of an \((m+n)\)-dimensional domain.

In this article, we propose the first PDE-based approach to the problem of optimal transportation between unequal dimensions. The new method we propose relies on a reformulation in terms of a non-local Monge-Ampère type equation that was recently proposed by McCann and Pass [8]:

Here \(m \ge n \ge 1\), \(H_k\) denotes the k-dimensional Hausdorff measure, u is a scalar potential, \(\partial _cu\) is the c-subgradient of u that determines the desired (inverse) mapping \(T^{-1}\), and the matrix-valued function A and scalar-valued function \(\psi \) encode information about the cost and density functions.

While the numerical solution of the Monge-Ampère equation has received a great deal of attention in recent years [9,10,11,12,13,14,15,16,17,18,19,20], nothing is known about non-local extensions. We propose a novel numerical method for (2) that is inspired by recent developments in the numerical analysis of fully nonlinear elliptic equations [12, 21, 22] but requires significant new ideas in order to couple the unequal dimensions. Because the techniques we utilise are motivated by existing convergence theory, there is great reason to hope that a proof of convergence of this method will soon be developed.

As a proof-of-concept, the new method is implemented in the 1D to 2D case. However, we emphasise that this method employs techniques that are designed to easily generalise to higher dimensions. A range of computational examples demonstrate that this is an effective approach for solving a very challenging problem.

2 Numerical Solution of Monge-Ampère Equations

While nothing is known about the numerical solution of general non-local Monge-Ampère equations of the form (2), there has been a great deal of progress in the simpler case resulting from optimal transport between equal dimensions (\(m=n\)) with quadratic cost (\(c(x,y) = \frac{1}{2}\left| x-y\right| ^2\)). In this setting, the PDE reduces to the second boundary value problem for the Monge-Ampère equation:

The Monge-Ampère equation is an example of a second-order fully nonlinear elliptic PDE. We use the convention that \(M \ge N\) indicates that \(M-N\) is positive semi-definite and define degenerate ellipticity as follows.

Definition 1

(Degenerate elliptic) The operator \(F:X\times \mathbb {R}\times \mathbb {R}^n\times \mathcal {S}^n\rightarrow \mathbb {R}\) is degenerate elliptic if

whenever \(u \le v\) and \(M \ge N\).

Much of the recent progress in the design of provably convergent methods for the Monge-Ampère equation is inspired by the convergence framework of Barles and Souganidis [21]. This foundational work demonstrated that a consistent, monotone, stable numerical method will converge to the weak (viscosity) solution of the PDE, provided that the underlying PDE satisfies a strong comparison principle (subsolutions always lie below supersolutions) and that the approximation scheme admits a solution.

This framework analyses approximation schemes defined on a subset \(\mathcal {G}^h\subset \bar{X}\) of the original domain X and its boundary.

Definition 2

(Consistency) The scheme \(F^h(x,u(x),u(x)-u(\cdot )) = 0\) is consistent with the PDE

if for any smooth function \(\phi \in C^\infty \) and \(x\in {\Omega }\),

Definition 3

(Monotonicity) The operator \(F^h(x,u(x),u(x)-u(\cdot ))\) is monotone if \(F^h\) is a non-decreasing function of its final two arguments.

Definition 4

(Stability) The operator \(F^h(x,u(x),u(x)-u(\cdot ))\) is stable if there exists a constant \(M\in \mathbb {R}\), independent of h, such that if \(u^h\) is any solution to the scheme \(F^h(x,u^h(x),u^h(x)-u^h(\cdot )) = 0\) then \(\Vert u^h\Vert _\infty \le M\) for all sufficiently small \(h>0\).

We cannot expect a general approximation scheme to admit a solution without some continuity assumptions.

Definition 5

(Continuity) The operator \(F^h(x,u(x),u(x)-u(\cdot ))\) is continuous if it is continuous with respect to its final two arguments.

A nice property of continuous, monotone schemes is that it is generally very easy to ensure the existence of a (stable) solution by including, at most, a small perturbation to make the scheme proper.

Definition 6

(Proper) The operator \(F^h(x,u(x),u(x)-u(\cdot ))\) is proper if there exists some \(C>0\) such that if \(u \ge v\) then \(F^h(x,u,p)-F^h(x,v,p) \ge C(u-v)\).

Any monotone scheme \(F^h\) can be perturbed to a proper scheme via

where \(\epsilon ^h(x) \rightarrow 0\) as \(h\rightarrow 0\). A typical choice, which lends itself to nice stability properties, is to let \(\epsilon ^h\) scale like the truncation error of \(F^h\).
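The effect of this perturbation can be illustrated with a minimal sketch. It assumes the additive form \(F^h + \epsilon ^h u\), which matches the term \((h_Y^2+h_X/h_Y)u_i\) used later in the article; the helper names `make_proper` and `neg_second_difference` and the toy scheme itself are hypothetical and chosen only for illustration.

```python
import numpy as np

def make_proper(scheme, eps):
    """Return the perturbed scheme u -> scheme(u) + eps * u (assumed additive form)."""
    def proper_scheme(u):
        return scheme(u) + eps * u
    return proper_scheme

def neg_second_difference(u, h=0.1):
    """Toy monotone scheme that sees u only through the differences u_i - u_{i+-1}."""
    out = np.zeros_like(u)
    out[1:-1] = ((u[1:-1] - u[:-2]) + (u[1:-1] - u[2:])) / h**2
    return out

u = np.linspace(0.0, 1.0, 11) ** 2
shift = 3.0
F = neg_second_difference
G = make_proper(F, eps=1e-2)

# Shifting u by a constant leaves F unchanged (so F is not proper),
# whereas the perturbed scheme G grows by eps * shift at every grid point.
unchanged = np.allclose(F(u + shift), F(u))
growth = G(u + shift) - G(u)
```

In this toy setting the perturbed scheme satisfies the inequality of Definition 6 with \(C = \epsilon ^h\).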

Theorem 1

(Existence and uniqueness [23, Theorem 8]) Let \(F^h\) be a continuous, proper, monotone scheme. Then \(F^h(x,u(x),u(x)-u(\cdot )) = 0\) has a unique solution.

The Barles-Souganidis convergence framework has inspired many different monotone discretisations of the Monge-Ampère equation [9,10,11,12,13, 15, 20]. Typically, these discretisations involve a PDE operator that incorporates the convexity constraint (\(D^2u(x) \ge 0\)) through the use of a modified determinant satisfying

where r(M) can be any function that is non-positive whenever the condition \(M\ge 0\) is violated. Monotone discretisations can then be constructed for the modified Monge-Ampère equation

The Barles-Souganidis convergence framework does not immediately apply to the second boundary value problem for Monge-Ampère (3) since it does not satisfy the required comparison principle. However, this powerful framework has recently been extended to include this important PDE [24, 25]. By adding mild additional structure to the numerical methods, these works establish the convergence of consistent, monotone methods. Moreover, these works demonstrate that as long as the interior problem is set up carefully, there is a great deal of flexibility in the choice of numerical boundary conditions. Indeed, even boundary conditions that are inconsistent with (3) can nevertheless yield a numerical solution that converges to the true solution in \(L^\infty (\Omega )\) for any compact subset \(\Omega \subset X\).

A similar convergence result was recently established by one of the authors for a class of more general Monge-Ampère type equations of the form

posed on the 2-sphere \(\mathcal {S}^2\) [22]. In particular, this work showed that consistent, monotone methods converge with the addition of mild additional structure (which is easily incorporated into the numerical method).

With these results in mind, a reasonable starting point for the numerical solution of non-local Monge-Ampère type equations (2) is to design consistent, monotone methods. Given recent progress in the numerical analysis of other Monge-Ampère type equations, there is great reason to hope that it will soon be possible to produce a proof of convergence for such methods.

3 PDE Formulation

The starting point of our numerical method for optimal transport between unequal dimensions is the PDE formulation (2) proposed by McCann and Pass. We begin this section with a formal overview of the derivation of the PDE, referring to [8] for complete details. We then propose a local level-set formulation that highlights the elliptic structure of the equation and will lead into the numerical method introduced in section 4.

To formulate the optimal transport problem, we begin with a density function f supported on a set \(X\subset \mathbb {R}^n\), a density g supported on a set \(Y\subset \mathbb {R}^m\), and a cost function \(c:X\times Y \rightarrow \mathbb {R}\). We require the data to satisfy the mass balance constraint

We assume here that \(n<m\) and seek a mapping \(T^*:Y\rightarrow X\) that minimises the cost

We remark here that, under appropriate conditions on the data, we can hope for a well-defined mapping from the higher-dimensional space Y to the lower-dimensional space. However, we expect that any “mapping” from X to Y would have to be multi-valued as points in the lower-dimensional space must “spread” to fill the higher-dimensional space.

3.1 Feasibility

In a traditional Optimal Transport problem (\(n=m\)), the pushforward condition \(T\#g = f\) can be expressed via the change of variables theorem as

We now seek a similar representation of the pushforward condition for the unequal dimension setting (\(n<m\)).

We begin by expressing the condition \(T\#g = f\) in the form

for every \(\Phi \in L^1(X)\).

Now we recall the coarea formula:

for every \(r\in L^1(Y)\) where \(H_k\) denotes the k-dimensional Hausdorff measure and

Given some \(\Phi \in L^1(X)\), we now make the particular choice of

to formally obtain

We notice that if \(y\in T^{-1}(x)\) then \(T(y) = x\), which allows us to rewrite the final integral as

for every \(\Phi \in L^1(X)\). From this, we conclude that almost everywhere,

We note that in the special case of equal dimensions (\(m=n\)), this coincides with the usual result of the change of variables formula (9).

3.2 Optimality

Having characterised the feasibility condition \(T\#g =f\), we now seek a representation of the optimal mapping. In the simplest case of equal dimensions (\(m=n\)) and a quadratic cost (\(c(x,y) = \frac{1}{2}\left| x-y\right| ^2\)), optimality is achieved by a mapping that can be expressed as the gradient of a convex function.

Our starting point in the current setting is the usual dual formulation of the Optimal Transport problem [1]. This leads us to seek a c-convex dual pair u(x), v(y) satisfying

with equality when \(x = T(y)\).

Equivalently, we require the inverse optimal mapping (which may be set-valued) to be contained in the c-subgradient of the function u, which we write as

From here, we formally obtain the following first- and second-order optimality conditions:

Differentiating the first-order optimality condition (15) leads to the expression

Under a slightly stronger version of the second-order optimality condition (16) (assuming positive definiteness instead of merely positive semi-definiteness), we can obtain the following expression for the Jacobian of the optimal mapping:

along with the equality \(T^{-1}(x) = \partial _cu(x)\).

We can now combine this with the feasibility condition (13). We first compute the relevant Jacobian determinant (12):

Finally, we substitute this into the pushforward condition (13) and simplify to obtain the non-local Monge-Ampère type equation

We recall that this equation is coupled to the (strict) c-convexity constraint (16),

which plays the role of the convexity constraint (\(D^2u(x) > 0\)) in the traditional Monge-Ampère equation.

3.3 Local Formulation

As written, equations (18)-(19) are non-local since the domain of integration involves the c-subgradient of u, which relies on global information (14). However, in many settings, it is possible to replace this global definition with a local construction derived from the first- and second-order optimality conditions [8].

To accomplish this, we first define sets on which the first- and second-order optimality conditions (15)-(16) hold:

These are necessary conditions for optimality in (14), which leads to the containment

However, when the problem has sufficient regularity (i.e. \(C^2\) solutions), this containment becomes an equality up to \(H_{m-n}\)-negligible sets [8]. In this setting, (18) simplifies to

subject to the c-convexity condition (19).

This simplification of the domain of integration reduces the problem to a local PDE. However, given the dependence of the domain of integration on second-order information, it is not at all obvious that this equation is actually elliptic and that the arguments developed in section 2 are reasonable.

To re-express this in a way that elucidates the elliptic structure of this equation, we recall that the c-convexity constraint (19) ensures that

where \({\det }^+\) is the particular choice of modified determinant given by

This allows us to absorb the c-convexity constraint into the PDE and reformulate (19) and (23) into the single equation

Following the arguments of [24, Lemma 2.6], we emphasise that solutions of this equation are automatically c-convex on the domain X since the density function f is positive and f and g are required to satisfy the mass balance constraint (7).

This observation makes a further simplification possible. We notice that for any \(x\in X\) and \(y\in Y_1(x,\nabla u(x))\), either \(y\in Y_2(x,\nabla u(x),D^2u(x))\) (if \(D^2u(x) + D^2_{xx}c(x,y) \ge 0\)) or \({\det }^+\left( D^2u(x) + D^2_{xx}c(x,y)\right) = 0\). Thus we can replace the domain of integration in (24) with the set of points satisfying the first-order optimality conditions to obtain

Importantly, equation (25) is now a degenerate elliptic Monge-Ampère type equation since the only second-order term is \({\det }^+\left( D^2u(x) + D^2_{xx}c(x,y)\right) \), while all coefficients of this term are non-negative.

3.4 Level-Set Formulation

While Monge-Ampère type equations (and their numerical solution) have received a great deal of attention in recent years, it is less clear how to deal with their integration over a manifold \(Y_1(x,\nabla u(x))\) that itself depends on the solution of the equation. Here, we propose a level-set formulation of (25) that transfers all of the u-dependence into the integrand.

First, we note that we can easily enlarge the domain of integration by introducing for each \(x\in X\) a Dirac delta function \(\delta \) supported on the \(m-n\) dimensional manifold \(Y_1(x,\nabla u(x))\). To make this precise, we let \(d_{Y_1(x,\nabla u(x))}(y)\) denote the Euclidean distance between y and the manifold \(Y_1(x,\nabla u(x))\). This allows us to rewrite (25) as

Next, we seek an easier representation of the delta function since the distance function may not be easy to compute in practice. To facilitate this, for each \(x\in X\), we define the n level-set functions

We notice immediately that the domain of integration in (25) is equivalent to the intersection of the zero level sets of the \(\phi ^x_i\). That is,

Following [26], we can rewrite equation (26) in terms of these level-set functions, though the specific form is dependent on the smaller dimension n. When \(n=1\), the equation is equivalent to

When \(n=2\), the PDE becomes

where \(P_v w\) denotes a projection of the vector w onto the subspace orthogonal to the vector v.
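As a small illustration of the projection used here, the following sketch computes \(P_v w\) with the standard formula \(w - \frac{w\cdot v}{v\cdot v}v\). The function name `project_orthogonal` and the sample vectors are hypothetical; only the definition of \(P_v w\) is taken from the text.

```python
import numpy as np

def project_orthogonal(v, w):
    """Project w onto the subspace orthogonal to the non-zero vector v."""
    v = np.asarray(v, dtype=float)
    w = np.asarray(w, dtype=float)
    return w - (np.dot(w, v) / np.dot(v, v)) * v

v = np.array([1.0, 2.0, 2.0])
w = np.array([3.0, 0.0, 1.0])
p = project_orthogonal(v, w)

# By construction p is orthogonal to v, and w - p is parallel to v.
inner = np.dot(p, v)
```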

For higher values of the dimension n, this same procedure can be continued using additional orthogonal projections.

Finally, we note that the gradients of these level-set functions are given by

which do not depend on the potential function u.

4 Numerical Method

In this section, we introduce a numerical method for solving the optimal transport problem between unequal dimensions, starting from the level-set PDE formulation introduced in section 3. For simplicity of the exposition, we will describe the method in the low-dimensional setting (\(n=1\), \(m=2\)). However, all of the techniques we propose can be extended to higher dimensions.

Our goal here is to produce a consistent, monotone discretisation of the ODE operator in (29), which now takes the form

Here we let

which is a known function with no dependence on the potential u.

Since to date little is known about this equation except in the classical setting, we will focus our numerical analysis on the setting in which \(C^2\) solutions exist. However, we expect that with ongoing development of the theory of weak solutions, we will be able to extend the numerical analysis to the non-smooth setting using the techniques of [24].

In particular, we make the following assumptions.

Hypothesis 1

(Hypotheses on problem)

- (H1) The cost function \(c\in C^4(\bar{X}\times \bar{Y})\).

- (H2) The mixed partial derivatives of the cost \(\sqrt{\left( \frac{\partial ^2c}{\partial x\partial y_1}(x,y)\right) ^2 + \left( \frac{\partial ^2c}{\partial x\partial y_2}(x,y)\right) ^2}\) are bounded away from zero.

- (H3) The positive density functions \(f\in C^0(\bar{X})\), \(g\in C^2(\bar{Y})\) are bounded away from zero and infinity.

- (H4) The solution of (32) is \(u \in C^2(X)\cap C^1(\bar{X})\).

4.1 Discretisation of Domains

We begin by discretising the sets \(X\subset \mathbb {R}\) and \(Y\subset \mathbb {R}^2\). Since Y need only contain the support of the density g, we may assume that Y is a square.

To discretise \(X=[x_0,x_{max}]\), we choose an integer \(N\in \mathbb {N}\) and introduce the grid spacing \(h_X = (x_{max}-x_0)/N\). Then grid points in the domain are given by \(x_i = x_0+ih_X\) for \(i = 0, \ldots , N\).

To discretise \(Y = [y_0,y_{max}]^2\), we choose an integer \(M\in \mathbb {N}\) and introduce the grid spacing \(h_Y = (y_{max}-y_0)/M\). Then grid points in the domain are given by \(y_{jk} = (y_0+jh_Y,y_0+kh_Y)\) for \(j,k=0,\ldots ,M\).

We will require that asymptotically, \(M\ll N\). That is, the one-dimensional set X needs to be more highly resolved than the two-dimensional set Y.

Throughout, we use the convention that the values of a function u defined on the one-dimensional grid can be represented as \(u_i = u(x_i)\).
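The two grids described above can be sketched as follows. The function name `make_grids` and the sample values of \(N\), \(M\) and the interval end points are illustrative assumptions; the formulas for \(h_X\), \(h_Y\), \(x_i\) and \(y_{jk}\) are those given in the text.

```python
import numpy as np

def make_grids(x0, xmax, N, y0, ymax, M):
    """Build the 1D grid on X = [x0, xmax] and the 2D grid on Y = [y0, ymax]^2."""
    h_X = (xmax - x0) / N
    h_Y = (ymax - y0) / M
    x = x0 + h_X * np.arange(N + 1)            # x_i = x0 + i h_X, i = 0..N
    y1d = y0 + h_Y * np.arange(M + 1)
    # Y1[j, k] = y0 + j h_Y and Y2[j, k] = y0 + k h_Y, so y_jk = (Y1[j, k], Y2[j, k])
    Y1, Y2 = np.meshgrid(y1d, y1d, indexing="ij")
    return x, h_X, Y1, Y2, h_Y

# Hypothetical sizes, chosen so that X is much more finely resolved than Y.
x, h_X, Y1, Y2, h_Y = make_grids(0.0, 1.0, 64, -1.0, 1.0, 4)
```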

4.2 Discretisation of the ODE

We seek a finite difference discretisation of (32) of the form

for \(i = 1, \ldots , N-1\). Here \(\delta ^{h_X,h_Y}(u_i-u_{i-1},u_i-u_{i+1})\) is chosen to generate a consistent approximation of the Dirac delta function and the term \((h_Y^2+h_X/h_Y) u_i\) is introduced to make the scheme proper, with \(h_X \ll h_Y\) being a necessary condition for consistency. For \(F^{h_X,h_Y}\) to be monotone, it must be a non-decreasing function of all of its arguments. This, in turn, is guaranteed if each term appearing in the summation is non-negative and a non-increasing function of the differences \(u_i-u_{i-1}\) and \(u_i-u_{i+1}\).

The first term in the summation is easily approximated using standard centred differences via

This trivially has the required monotonicity properties, which are preserved in the operations of addition with a given grid function and restriction to the positive part.
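A minimal sketch of this term, written directly in the differences \(u_i-u_{i-1}\) and \(u_i-u_{i+1}\), makes the monotonicity visible. The names `d_minus`, `d_plus` and `c_xx` (a given value of \(\partial ^2 c/\partial x^2\) at a fixed pair \((x_i, y_{jk})\)) are hypothetical labels, and the code assumes the standard three-point centred difference.

```python
import numpy as np

def second_difference(d_minus, d_plus, h_X):
    """Centred second difference, with d_minus = u_i - u_{i-1} and d_plus = u_i - u_{i+1}."""
    return -(d_minus + d_plus) / h_X**2

def positive_part_term(d_minus, d_plus, h_X, c_xx):
    """Positive part of (u'' + c_xx): non-negative and non-increasing in both differences."""
    return np.maximum(second_difference(d_minus, d_plus, h_X) + c_xx, 0.0)

# Example with u(x) = x^2 at x_i = 0.5 and h_X = 0.1, where u'' = 2.
h_X = 0.1
u_prev, u_mid, u_next = 0.4**2, 0.5**2, 0.6**2
value = positive_part_term(u_mid - u_prev, u_mid - u_next, h_X, c_xx=0.0)

# Increasing one of the differences can only decrease the term.
smaller = positive_part_term(u_mid - u_prev + 0.01, u_mid - u_next, h_X, c_xx=0.0)
```

Adding the given value `c_xx` and taking the positive part do not alter the direction of the dependence on the differences, which is the property used in the text.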

Discretisation of the delta function, which is supported on a curve in \(\mathbb {R}^2\), is much more delicate. Indeed, naive extensions of typical discretisations of one-dimensional delta functions can lead to \(\mathcal {O}(1)\) errors in the approximation [27].

We take as a starting point an approximate one-dimensional delta function defined in terms of the linear hat function via

Typically, the support of this discrete delta function is chosen to be proportional to the underlying grid spacing, that is \(\epsilon \sim h_Y\).
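For concreteness, the sketch below uses the commonly cited form of the linear hat function, \(\delta _\epsilon (t) = \frac{1}{\epsilon }\max \left( 1-\frac{|t|}{\epsilon },0\right) \). This form is an assumption about the formula intended above, and the name `hat_delta`, the grid and the choice \(\epsilon = 2h\) are illustrative only.

```python
import numpy as np

def hat_delta(t, eps):
    """Linear hat approximation of the 1D Dirac delta with support [-eps, eps]."""
    return np.maximum(1.0 - np.abs(t) / eps, 0.0) / eps

h = 0.01
eps = 2.0 * h                     # support proportional to the grid spacing
t = h * np.arange(-100, 101)      # uniform grid on [-1, 1] containing t = 0

# Riemann sum of the discrete delta; it should reproduce unit mass.
mass = h * np.sum(hat_delta(t, eps))
```

On a grid aligned with the origin, the hat function has unit discrete mass whenever \(\epsilon \) is an integer multiple of the grid spacing. This is the one-dimensional property that naive extensions to curves in \(\mathbb {R}^2\) can fail to inherit.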

It is tempting at this point to define the discrete delta function in (34) by

where \(\mathcal {D}_x^{h_X}u_i\) denotes some finite difference approximation of \(u'(x_i)\) and \(m\in \mathbb {N}\) is some fixed positive integer. However, there are two problems with this: (1) fixing the value of \(\epsilon \) in relation to the grid parameter \(h_Y\) can lead to \(\mathcal {O}(1)\) errors when the zero level sets of the level-set function are not well aligned with the Cartesian grid [27] and (2) because of the nonlinearity, we cannot expect the overall approximation to be monotone even if \(\mathcal {D}_x^{h_X}u_i\) is itself monotone.

Fortunately, the first problem can be resolved by allowing \(\epsilon \) to vary in space [28]. We begin by discretising the delta function in the y variable only, allowing x to be continuous. Then we can introduce the approximate delta function

where the support parameter in the linear hat approximation to the delta function is given by

We note that since the cost c is a given function, this spatially varying parameter can be computed a priori.

To achieve a monotone discretisation of this, we need to exploit the structure of the linear hat function. To this end, we notice that

This is automatically non-negative. We can also produce a fully discrete approximation with the required monotonicity properties by utilising a careful combination of forward and backward differences. This leads us to propose

with \(\epsilon \) allowed to vary according to (38).

4.3 Boundary Conditions

While Optimal Transport PDEs such as (25) typically do not require traditional boundary conditions [24], the corresponding finite difference approximation (34) will require some additional conditions to be supplied at the boundary grid points \(x_0\) and \(x_N\).

We have a great deal of freedom in the choice of condition to enforce at these boundary points. For the usual second boundary value problem for the Monge-Ampère equation, it was shown in [24] that even enforcing a possibly inconsistent boundary condition such as

would lead to a convergent numerical method. However, this type of artificial boundary condition will result in a boundary layer in the computed solution.

To find a more natural boundary condition, we recall that the integrand in (29) is supported on the set of points y for which

In particular, at the boundary point \(x_i\), there must be some \(y_i\in Y\) such that

The cost function c and its partial derivatives are all known functions. However, the relevant values of y are not obvious.

We choose to proceed using a monotonicity assumption and enforce the Neumann boundary conditions

These can easily be discretised in a consistent, monotone way by defining the approximations

4.4 Consistency and Monotonicity

We now verify the consistency and monotonicity of the approximation scheme described by (34)-(40).

First, we note that monotonicity was built into the approximation scheme by design.

Lemma 1

(Monotonicity) Under the assumptions of Hypothesis 1, the approximation scheme (34)-(40) is monotone.

Next, we verify consistency of the scheme. Because of the interplay between the finite difference discretisations and the approximation of the Dirac measure, this is a more delicate calculation that requires a careful balance between the discretisation parameters \(h_X\) and \(h_Y\).

Lemma 2

(Consistency) Under the assumptions of Hypothesis 1, consider the approximation scheme (34)-(40) where the grid parameters are chosen so that \(h_X/h_Y\rightarrow 0\) as \(h_Y\rightarrow 0\). Then the scheme is consistent with the ODE (32). Moreover, the formal truncation error is given by \(\mathcal {O}(h_Y^2+h_X/h_Y)\).

Before proving this lemma, we introduce some notation designed to simplify the representation of the semi- and fully discrete versions of the approximation.

Denote the coefficient of the delta distribution in (32) by

and let

be the discretised version.

We recall that the level-set function used to locate the curve \(Y_1(x,u'(x))\) is given by

while its discretised version satisfies

Using this notation, the integral appearing in equation (32) takes the form

while its semi- and fully discrete approximations are

Proof of Lemma 2

We begin by noting that the width of the support of the approximate delta function can be bounded in terms of the spatial discretisation parameter \(h_Y\). Let

Then \(\epsilon (h_X,\nabla \phi ^x(y)) \le Kh_Y\) for every x, y.

We also notice that many of the terms in the sum of (46) are zero. To identify the non-zero terms, we define

Since \(\phi ^x(y)\) is uniformly continuous in both x and y and its zero level set defines a curve in Y, the cardinality of this set will be

We can also try to identify the terms that are non-zero in the fully discrete sums in (47). To this end, we set

Any point (j, k) in this set will satisfy

for some \(y \in \mathcal {Z}^{h_Y}(x_i)\). This means that for each such y, we need to consider \(\mathcal {O}\left( \dfrac{h_X+h_Y}{h_Y}\right) = \mathcal {O}(1)\) possible neighbouring grid points that satisfy the conditions in (49). Adding up all possible points \(y\in I^{h_Y}(x_i)\) leads to the bound

Using the results of [28], we know that the discretisation of the Dirac delta function satisfies

since the integrand G(x, y) is \(C^2\) in the y-variable.

Finally, we can consider the approximation accuracy of the fully discrete version of the integral (47).

In the last step, we notice that the error term will only be non-zero at indices where the summand is non-zero in either the semi- or fully discrete approximation of (46)-(47).

We can further simplify this to

Finally, we recall that \(h_Y \le \epsilon = \mathcal {O}(h_Y)\) so that

By hypothesis, \(h_X \ll h_Y\) so that the approximation of the integral is consistent with a formal truncation error of \(\mathcal {O}(h_Y^2 + h_X/h_Y)\). Since the remaining terms in the approximation (34) share the same consistency error, this completes the proof. \(\square \)

We also make the remark here that the formal consistency error in the scheme can achieve an optimal value of \(\mathcal {O}(h_X^{2/3})\) if we make the scaling choice \(h_Y = \mathcal {O}(h_X^{1/3})\). In particular, this means that the two-dimensional set Y can (and should) have a much lower resolution than the one-dimensional set X. We also note that typical convergence analysis for Monge-Ampère equations in optimal transport involves compactness arguments without error bounds; actual computational error need not coincide with the formal truncation error.
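The scaling choice in this remark follows from balancing the two terms of the formal truncation error. As a short worked calculation (the exponent \(\alpha \) is introduced here purely for illustration), suppose \(h_Y = h_X^{\alpha }\) with \(0<\alpha <1\). Then

$$\begin{aligned} h_Y^2 + \frac{h_X}{h_Y} = h_X^{2\alpha } + h_X^{1-\alpha } = \mathcal {O}\left( h_X^{\min \{2\alpha ,\,1-\alpha \}}\right) , \end{aligned}$$

and the exponent \(\min \{2\alpha ,1-\alpha \}\) is largest when the two terms balance:

$$\begin{aligned} 2\alpha = 1-\alpha \quad \Longrightarrow \quad \alpha = \frac{1}{3}, \qquad h_Y^2 + \frac{h_X}{h_Y} = \mathcal {O}\left( h_X^{2/3}\right) . \end{aligned}$$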

4.5 Normalisation

Finally, we notice that solutions of the ODE (32) are only determined up to additive constants. The particular constant is not typically important to applications, as the information about the transport mapping is encoded in \(u'(x)\).

For the purposes of this article, we will seek to approximate the mean-zero solution of (32). There is no particular reason that the approximate solution we obtain from (34)-(40) should have mean zero, or even that the mean need be the same given different values of the discretisation parameters \(h_X, h_Y\). This is typical of Monge-Ampère equations in optimal transport, and an easy solution is to simply shift the approximate solution [22].

Thus we propose the following two-step procedure:

1. Let \(v^{h_X,h_Y}\) be the unique solution of

$$\begin{aligned} F^{h_X,h_Y}_i(v_i^{h_X,h_Y}(x),v_i^{h_X,h_Y}(x)-v^{h_X,h_Y}_{i-1},v_i^{h_X,h_Y}(x)-v^{h_X,h_Y}_{i+1}) = 0 \end{aligned}$$

for all \(i = 0, \ldots , N\).

2. Normalise to

$$\begin{aligned} u^{h_X,h_Y}_i = v^{h_X,h_Y}_i - \frac{1}{N}\sum \limits _{j=1}^Nv^{h_X,h_Y}_j.\end{aligned}$$
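The normalisation step can be sketched in a few lines. The function name `normalise` and the sample values are hypothetical; the shift itself follows the formula above, averaging over the indices \(j = 1, \ldots , N\).

```python
import numpy as np

def normalise(v):
    """Shift grid values v_0..v_N by the discrete mean (1/N) * sum_{j=1}^{N} v_j."""
    v = np.asarray(v, dtype=float)
    N = v.size - 1
    return v - np.sum(v[1:]) / N

v = np.array([5.0, 1.0, 2.0, 3.0])   # illustrative values with N = 3
u = normalise(v)
```

Since the shift is a constant, the differences \(u_i - u_{i\pm 1}\), and hence the approximation of \(u'\), are unaffected.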

5 Computational Results

Finally, we validate our proposed numerical method on several computational examples.

In each example, we take as our domain \(X = [0,1]\), which is discretised using N grid points. The computational target set is taken to be the smallest possible square that encloses the actual target Y.

Following the discussion about optimising formal consistency error at the end of subsection 4.4, we expect that the optimal formal consistency error is \(\mathcal {O}(h_X^{2/3})\). To this end, the grid spacing parameters are chosen to satisfy \(h_Y \approx h_X^{1/3}\), with slight deviations from equality used to ensure that this leads to an integer M number of grid points along each dimension of Y. We note that this leads to the scaling \(M \approx N^{1/3}\). Perhaps counterintuitively, the result is a two-dimensional set that is discretised with only \(M^2\approx N^{2/3}\) grid points, which is fewer than the number of grid points in the one-dimensional domain.

Evaluating the approximation scheme (34) at each of the N grid points in the domain involves evaluating a double sum containing \((M+1)^2 \approx N^{2/3}\) terms. In the current implementation, this is computed using a brute force approach. The overall cost of evaluating the discretisation scheme at all grid points then scales as \(\mathcal {O}(N^{5/3})\).
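The parameter choice and the resulting operation count can be sketched as follows. The function names, the rounding rule used to obtain an integer \(M\), and the unit side length assumed for the computational square are illustrative assumptions rather than details taken from the implementation.

```python
def choose_target_resolution(N, x_length=1.0, y_length=1.0):
    """Pick M so that h_Y is approximately h_X^(1/3), rounding to an integer."""
    h_X = x_length / N
    M = max(1, round(y_length / h_X ** (1.0 / 3.0)))
    h_Y = y_length / M
    return M, h_X, h_Y

def evaluation_cost(N, M):
    """Number of summands in one brute-force evaluation of the scheme at N grid points."""
    return N * (M + 1) ** 2

M, h_X, h_Y = choose_target_resolution(1000)
cost = evaluation_cost(1000, M)
```

With \(N = 1000\) this gives \(M = 10\), so the two-dimensional grid has far fewer points than the one-dimensional grid, in line with the scaling \(M \approx N^{1/3}\).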

The resulting systems of nonlinear equations were solved using Newton’s method.
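The article does not describe the Newton iteration in detail. As a generic sketch only, the code below applies Newton's method to a toy monotone finite difference scheme, \(-u'' + u^3 - 1 = 0\) with zero boundary values. The toy equation, the function names and the tolerances are all assumptions and are not the scheme (34).

```python
import numpy as np

def residual(u, h):
    """Toy scheme: (2u_i - u_{i-1} - u_{i+1}) / h^2 + u_i^3 - 1 at interior points."""
    padded = np.concatenate(([0.0], u, [0.0]))   # zero boundary values
    return (2.0 * padded[1:-1] - padded[:-2] - padded[2:]) / h**2 + u**3 - 1.0

def jacobian(u, h):
    """Exact Jacobian of the toy residual: tridiagonal part plus a diagonal term."""
    n = u.size
    J = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2
    return J + np.diag(3.0 * u**2)

def newton(u0, h, tol=1e-10, max_iter=50):
    """Plain Newton iteration; stops once the max-norm residual drops below tol."""
    u = u0.copy()
    for _ in range(max_iter):
        r = residual(u, h)
        if np.max(np.abs(r)) < tol:
            break
        u = u - np.linalg.solve(jacobian(u, h), r)
    return u

h = 1.0 / 20
u = newton(np.zeros(19), h)
final_residual = np.max(np.abs(residual(u, h)))
```

For the actual scheme, the Jacobian would have to account for the piecewise-defined positive part and hat function, so a damped or semi-smooth variant may be needed in practice.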

5.1 Rectangular Target

The first example we consider involves a rectangular domain

Note that this domain is rotated, so it will not line up with the grid used to discretise the y variables.

We solve (1) using the density functions

together with the quadratic cost function

See Fig. 1 for a visual of the problem data.

We can easily verify that the exact mean-zero solution of the resulting Monge-Ampère equation is

The resulting error is displayed in Fig. 2 and scales approximately as \(\mathcal {O}(h_X^{1/4})\). We note that this convergence rate is worse than the formal rate of \(\mathcal {O}(h_X^{2/3})\). This is not unexpected since typical convergence analysis for Monge-Ampère type equations (particularly with Optimal Transport type boundary conditions) does not guarantee convergence rates.
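An observed rate of this kind is typically estimated by a least-squares fit in log-log coordinates. The sketch below shows one way to do this; the function name `observed_order` is hypothetical, and the error values are synthetic data generated to follow \(h_X^{1/4}\) exactly, not the errors reported in Fig. 2.

```python
import numpy as np

def observed_order(h, err):
    """Slope of the least-squares line through (log h, log err)."""
    slope, _ = np.polyfit(np.log(h), np.log(err), 1)
    return slope

h = np.array([1 / 64, 1 / 128, 1 / 256, 1 / 512])
err = 0.3 * h ** 0.25          # synthetic errors, for illustration only
rate = observed_order(h, err)
```

With real data the fitted slope is only indicative, particularly when the error does not decrease monotonically.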

We also notice that for this particular example, the error decreases, but not monotonically. It is well known that monotone finite difference schemes do not have to yield monotonic convergence. In this case, it appears to be the simplicity of the geometry that leads to the oscillations in error. This is because for some choices of discretisation parameters \(M, h_Y\), the grid is by chance quite well aligned with the relevant curve \(Y_1(x,u'(x))\), the contours of g, and the boundaries of the target Y. This, in turn, can lead to artificially low error for certain values of the discretisation parameters.

5.2 Vanishing Densities

The next example involves another rectangular domain

and the quadratic cost

as in the previous example. This time, we use density functions that are allowed to vanish to zero at the boundaries of X and Y. This setting is known to lead to a loss of uniform ellipticity in the Monge-Ampère equation, which is often a challenge for numerical methods. We choose the particular density functions

See Fig. 3 for a visual of the problem data.

In this case, the exact solution is the constant function

Although the exact solution is constant, we cannot expect to recover it exactly, as would happen with a typical finite difference discretisation of a standard Monge-Ampère equation. This is because the approximation scheme still involves (approximate) integration of a spatially varying function over a non-trivial curve, which introduces numerical errors.

The error obtained for this example is displayed in Fig. 4. In this example, the error appears to decrease monotonically, likely because the contours of g are no longer straight lines that can align with the grid. Despite the degeneracy of this example, there is no difficulty in computing this solution. In fact, the observed convergence rate of \(\mathcal {O}(h_X^{1/2})\) is better than that observed in the non-degenerate example. This is likely due to the fact that the density function g is now continuous in the extended computational domain.

5.3 Curved Target

The next example we consider involves the curved domain

We solve (1) using the density functions

These densities are also allowed to vanish along part of the boundary. In this example, we use the cost function

See Fig. 5 for a visual of the problem data.

The exact mean-zero solution of the resulting Monge-Ampère equation is

The resulting error is displayed in Fig. 6 and scales approximately like \(\mathcal {O}(h_X^{2/5})\). Once again, we observe clear monotonic convergence. The more complicated geometry does not pose any difficulty for the numerical method.

5.4 Disc Target

The final example we consider involves the disc

We solve (1) using the constant density functions

In this example, the cost function is taken to be the Euclidean distance

which assumes that the line segment \(X = [0,1]\) is embedded in \(\mathbb {R}^2\) with \(x_2 = 0\).

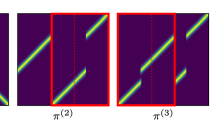

To illustrate the resulting optimal transport map, we split the one-dimensional domain into several line segments \(X_1, \ldots , X_5\), which are each assigned a colour. We then use our numerical method to estimate which portion of the disc each line segment is mapped onto. This is accomplished by looking at the support of the approximate delta function and defining

Each \(Y_k\) lying in the disc Y is then assigned the same colour as the corresponding \(X_k\).
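One plausible way to realise this colouring step is sketched below. It is an assumption-laden illustration, not the procedure used to produce Fig. 7: the level-set function `phi`, the support half-width `eps`, the sampling of each segment, and the function name `colour_target` are all hypothetical, and the toy `phi` in the example simply sends each x to a vertical line in the target.

```python
def colour_target(phi, segments, y_points, eps, samples=20):
    """Label each target point with the index k of the first segment X_k
    whose approximate delta support contains it, or None if none does.

    phi(x, y) is a user-supplied level-set function; a point y is taken
    to lie in the support for x when |phi(x, y)| < eps.
    """
    labels = []
    for y in y_points:
        label = None
        for k, (a, b) in enumerate(segments):
            # Sample the segment X_k = [a, b] at its cell midpoints.
            xs = [a + (b - a) * (s + 0.5) / samples for s in range(samples)]
            if any(abs(phi(x, y)) < eps for x in xs):
                label = k
                break
        labels.append(label)
    return labels


# Toy stand-in (not from the article): x is mapped to the line y_1 = 2x - 1.
toy_phi = lambda x, y: y[0] - (2.0 * x - 1.0)
segments = [(0.0, 0.2), (0.2, 0.4), (0.4, 0.6), (0.6, 0.8), (0.8, 1.0)]
labels = colour_target(toy_phi, segments, [(-0.9, 0.0), (0.0, 0.0), (0.9, 0.0)], eps=0.05)
```

In this toy setting, points farther to the left in the target receive the label of a segment farther to the left in X.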

We illustrate the results in Fig. 7. As expected given the relatively simple geometry and cost function, we observe a certain level of monotonicity in the computed mapping, with points farther to the left in the domain X being mapped into sections of the disc Y that are also farther to the left.

6 Conclusions

In this article, we have proposed a numerical method for a Monge-Ampère type equation arising in the problem of optimal transport between unequal dimensions. In addition to the strong nonlinearity of this problem, the equation involves an extra layer of complexity because it requires integrating the equation over a manifold that itself depends on the solution of the Monge-Ampère equation.

We have proposed a level-set formulation of this PDE, which allows the integral to be posed over a much simpler domain. However, the resulting equation still involves (1) a fully nonlinear elliptic operator of Monge-Ampère type and (2) integration against a Dirac delta distribution whose support is dependent on the solution gradient.

We have proposed a novel method for numerically approximating the resulting equation. For simplicity, we focus on the setting of optimal transport from 1D to 2D. However, all of the techniques we have proposed can be easily extended to higher dimensions. The numerical method utilises monotone finite difference approximations of the Monge-Ampère type operator. These are combined with a carefully constructed discrete version of the Dirac delta function, which is allowed to have a spatially varying support width in order to preserve consistency. The level-set function that is input into this discrete delta function is discretised using a careful combination of forward and backward differences in order to preserve monotonicity.
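To illustrate why a spatially varying support width matters, the sketch below uses one common choice from the level-set literature [28]: a piecewise-linear (hat) delta function whose half-width is \(\varepsilon = h\,\Vert \nabla \phi \Vert _1\). This is an assumption made for illustration and may differ from the discrete delta function used in scheme (34). The names `hat_delta` and `curve_length`, the midpoint grid on the unit square, and the exact gradients passed in are likewise hypothetical.

```python
import math


def hat_delta(t, eps):
    """Piecewise-linear approximation of the Dirac delta, supported on [-eps, eps]."""
    return max(0.0, 1.0 - abs(t) / eps) / eps


def curve_length(phi, grad_phi, n):
    """Approximate the length of {phi = 0} inside the unit square.

    Uses the identity length = integral of delta(phi) * |grad phi| dy,
    evaluated on an n-by-n grid of cell midpoints.
    """
    h = 1.0 / n
    total = 0.0
    for i in range(n):
        for j in range(n):
            y1 = (i + 0.5) * h
            y2 = (j + 0.5) * h
            g1, g2 = grad_phi(y1, y2)
            # Support width varies with the 1-norm of the gradient.
            eps = h * (abs(g1) + abs(g2))
            total += hat_delta(phi(y1, y2), eps) * math.hypot(g1, g2) * h * h
    return total


# Vertical line y_1 = 0.3: exact length 1.
length_vertical = curve_length(lambda y1, y2: y1 - 0.3, lambda y1, y2: (1.0, 0.0), 20)
# Diagonal y_1 + y_2 = 1: exact length sqrt(2).
length_diagonal = curve_length(lambda y1, y2: y1 + y2 - 1.0, lambda y1, y2: (1.0, 1.0), 200)
```

For the diagonal line, the remaining error comes from truncating the support at the boundary of the square and decreases as the grid is refined.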

The resulting numerical method is both consistent and monotone. For more well-understood Monge-Ampère type equations in optimal transport, consistency and monotonicity have proven to be the key ingredients in proofs of convergence [22, 24, 25]. With ongoing development of the theory for the non-standard Monge-Ampère equation considered in this article, there is great reason to hope that a convergence proof can be constructed for the method proposed here. A key ingredient that is currently missing is a robust theory of viscosity solutions for this form of generalised Monge-Ampère type equations. This will be an avenue of continued research. Once a robust theory is available for the development and analysis of monotone numerical methods, future implementations will also seek to improve accuracy through the design of filtered numerical methods [29].

We have performed computational tests using several challenging examples, which validate the performance of the new method.

A natural avenue for future work is to extend this numerical method to higher dimensions. A challenge here is that the level-set approach must be extended to a more complicated setting that requires multiple level-set functions (28). A second key issue that must be addressed in the move to higher dimensions is computational cost. As discussed in section 5, the current implementation uses a simple brute force approach to evaluate the double summation in the approximation scheme (34). This is reasonable in the one-to-two dimensional setting, but quickly becomes infeasible in higher dimensions. Future work will improve the computational cost by devising better strategies to estimate the support of the discrete delta function, allowing for a great reduction in the cost of the numerical quadrature. We expect the resulting method to be easy to implement, while having a computational cost equivalent to integrating over an \((m-n)\)-dimensional manifold (instead of the full m-dimensional target set) at each point in the lower-dimensional domain X.

Data availability

No datasets were generated or analysed during the current study.

References

1. Villani, C.: Topics in Optimal Transportation, vol. 58. American Mathematical Society, Providence (2021)

2. Chiappori, P., McCann, R.J., Pass, B.: Multi-to one-dimensional optimal transport. Commun. Pure Appl. Math. 70(12), 2405–2444 (2017)

3. Galichon, A.: Optimal Transport Methods in Economics. Princeton University Press, Princeton (2016)

4. Nenna, L., Pass, B.: Variational problems involving unequal dimensional optimal transport. J. Math. Pures Appl. 139, 83–108 (2020)

5. Cullen, M.J.P.: A Mathematical Theory of Large-Scale Atmosphere/Ocean Flow. World Scientific, London (2006)

6. Lin, J.Y., Guo, S., Xie, L., Xu, G.: Multi-projection of unequal dimension optimal transport theory for Generative Adversary Networks. Neural Networks 128, 107–125 (2020)

7. Mérigot, Q., Meyron, J., Thibert, B.: An algorithm for optimal transport between a simplex soup and a point cloud. SIAM J. Imaging Sci. 11(2), 1363–1389 (2018)

8. McCann, R.J., Pass, B.: Optimal transportation between unequal dimensions. Arch. Ration. Mech. Anal. 238(3), 1475–1520 (2020)

9. Froese, B.D., Oberman, A.M.: Convergent finite difference solvers for viscosity solutions of the elliptic Monge-Ampère equation in dimensions two and higher. SIAM J. Numer. Anal. 49(4), 1692–1714 (2011)

10. Feng, X., Lewis, T.: A narrow-stencil finite difference method for approximating viscosity solutions of Hamilton-Jacobi-Bellman equations. SIAM J. Numer. Anal. 59(2), 886–924 (2021)

11. Chen, Y.-Y., Wan, J., Lin, J.: Monotone mixed finite difference scheme for Monge-Ampère equations. J. Sci. Comput. 76, 1839–1867 (2018)

12. Oberman, A.M.: Wide stencil finite difference schemes for the elliptic Monge-Ampère equation and functions of the eigenvalues of the Hessian. Discrete Contin. Dyn. Syst. Ser. B 10(1), 221–238 (2008)

13. Benamou, J.-D., Collino, F., Mirebeau, J.-M.: Monotone and consistent discretization of the Monge-Ampère operator. Math. Comput. 85(302), 2743–2775 (2016)

14. Feng, X., Jensen, M.: Convergent semi-Lagrangian methods for the Monge-Ampère equation on unstructured grids. SIAM J. Numer. Anal. 55(2), 691–712 (2017)

15. Nochetto, R., Ntogkas, D., Zhang, W.: Two-scale method for the Monge-Ampère equation: convergence to the viscosity solution. Math. Comput. 837, 637–664 (2018)

16. Dean, E.J., Glowinski, R.: Numerical methods for fully nonlinear elliptic equations of the Monge-Ampère type. Comput. Methods Appl. Mech. Eng. 195(13–16), 1344–1386 (2006)

17. Prins, C.R., Beltman, R., ten Thije Boonkkamp, J.H.M., IJzerman, W.L., Tukker, T.W.: A least-squares method for optimal transport using the Monge-Ampère equation. SIAM J. Sci. Comput. 37(6), 937–961 (2015)

18. Feng, X., Neilan, M.: Vanishing moment method and moment solutions for fully nonlinear second order partial differential equations. J. Sci. Comput. 38(1), 74–98 (2009)

19. Brenner, S.C., Gudi, T., Neilan, M., Sung, L.-Y.: \({C}^0\) penalty methods for the fully nonlinear Monge-Ampère equation. Math. Comput. 80(276), 1979–1995 (2011)

20. Hamfeldt, B.F., Lesniewski, J.: Convergent finite difference methods for fully nonlinear elliptic equations in three dimensions. J. Sci. Comput. 90, 35 (2022)

21. Barles, G., Souganidis, P.E.: Convergence of approximation schemes for fully nonlinear second order equations. Asymptotic Anal. 4(3), 271–283 (1991)

22. Hamfeldt, B.F., Turnquist, A.G.R.: A convergence framework for optimal transport on the sphere. Numer. Math. 151, 627–657 (2022)

23. Oberman, A.M.: Convergent difference schemes for degenerate elliptic and parabolic equations: Hamilton-Jacobi equations and free boundary problems. SIAM J. Numer. Anal. 44(2), 879–895 (2006)

24. Hamfeldt, B.F.: Convergence framework for the second boundary value problem for the Monge-Ampère equation. SIAM J. Numer. Anal. 57(2), 945–971 (2019)

25. Bonnet, G., Mirebeau, J.-M.: Monotone discretization of the Monge-Ampère equation of optimal transport. ESAIM 56(3), 815–865 (2022)

26. Cheng, L.-T.: The level set method applied to geometrically based motion, materials science, and image processing. PhD thesis, University of California, Los Angeles (2000)

27. Tornberg, A.-K., Engquist, B.: Numerical approximations of singular source terms in differential equations. J. Comput. Phys. 200(2), 462–488 (2004)

28. Engquist, B., Tornberg, A.-K., Tsai, R.: Discretization of Dirac delta functions in level set methods. J. Comput. Phys. 207(1), 28–51 (2005)

29. Froese, B.D., Oberman, A.M.: Convergent filtered schemes for the Monge-Ampère partial differential equation. SIAM J. Numer. Anal. 51(1), 423–444 (2013)

Acknowledgements

The second author was partially supported by NSF DMS-2308856.


Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cassini, M.A., Hamfeldt, B.F. Numerical Optimal Transport from 1D to 2D Using a Non-local Monge-Ampère Equation. La Matematica 3, 509–535 (2024). https://doi.org/10.1007/s44007-024-00092-3


DOI: https://doi.org/10.1007/s44007-024-00092-3