Abstract

To combine the benefits of testing Advanced Driver Assistance Systems (ADAS) in simulation and in reality, this paper presents a new approach for using Augmented Reality (AR) in a vehicle for ADAS testing. Our procedure provides a link between simulation and reality and should enable a faster development process for future, increasingly complex ADAS tests and future mobility solutions. Test fields for ADAS offer only a small number of orientation points. Furthermore, these must be detected and processed at high vehicle speeds. That requires high computational power both for developing our method and for its subsequent use in testing. Using image segmentation (IS), artificial intelligence (AI) for object recognition, and visual simultaneous localization and mapping (vSLAM), we aim to create a three-dimensional model with accurate information about the test site. Using AI and IS is expected to significantly improve performance, in terms of computational speed and accuracy, for AR applications in automobiles.


1 Introduction

Advanced Driver Assistance Systems (ADAS) such as the active lane departure warning (LDW) system and traffic sign recognition support the driver, offer comfort, and take responsibility for increasing road safety. These complex systems undergo an extensive testing phase, which offers optimization potential regarding quality, reproducibility, and costs. ADAS of the future will support ever-larger proportions of driving situations in increasingly complex scenarios. Because vehicle communication is becoming more complex and these systems are increasingly expected to function reliably and safely even in complex environments, the test scenarios for ADAS are continuously developed further and adapted to higher requirements. The European New Car Assessment Programme (Euro NCAP) has introduced a series of new safety tests for ADAS into its program and created a road map until the year 2025 [1, 22].

Today’s test methods can be separated into two categories: testing ADAS with the help of virtual worlds, and testing in reality on the test track using physical objects. The central idea of the virtual test procedure is to transfer vehicle behavior to virtual test drives as realistically as possible. Virtual tests benefit from the advantages of simulation in terms of reproducibility, flexibility, and reduction of effort. In this way, specifications and the solutions derived from them can be tested and evaluated at an early stage of the development process. Using suitable simulation methods enables the efficient design, development, and application of vehicles and vehicle components. However, virtual development methods cannot yet replace real-life driving tests in all respects. Because of the complex physical conditions to which a vehicle is exposed when testing ADAS, real-life driving tests are currently still necessary. For example, the weather, the surface texture of the road, and other influencing parameters play a decisive role in the evaluation process of ADAS test drives [13, 14].

However, the effort for testing and evaluation correlates with the complexity of an ADAS. The more complex the system, the higher the testing effort. The robustness, functional safety, and reliability of upcoming ADAS must be verified in increasingly dynamic, complex, and chaotic traffic situations. That also includes interacting with different road users, each with their natural movements, as well as the interaction of road users with each other. Maurer and Stiller said: “If testing and assessment methods cannot keep pace with this functional growth, they will become the bottleneck of the introduction of Advanced Driver Assistance Systems to the market.” [1] Thus, new and efficient testing methods are required to pave the way for future ADAS [25]. A newer approach, Vehicle in the Loop (VIL), combines the advantages of simulation and reality. The approach in this paper is a new method alongside existing VIL approaches.

2 Advanced driver assistance systems in automotive vehicles

This chapter is intended to give the reader an overview of how ADAS works and how it is subdivided. The relevance of ADAS for future mobility solutions is highlighted as well, and the functionality of different ADAS sensors and their advantages and disadvantages are listed. Furthermore, there is a focus on sensor data fusion to combine the benefits of individual sensors for desired ADAS functions.

2.1 Overview and function

ADAS support the driver in operating a vehicle. Depending on the type, they provide more driving comfort, improve safety, reduce energy consumption, or enable a more efficient traffic flow. The systems use sensors to record the driving situation, process the collected information with powerful computers, and provide the driver with visual, acoustic, or haptic feedback. In some cases, they intervene automatically, semi-autonomously, or autonomously in the control and operation of the vehicle, for example by operating the accelerator, brake, signaling, or steering. These interventions can extend all the way to fully autonomous driving. Essential requirements for ADAS are fast processing of data in near real-time and high system reliability [16, 28].

2.2 Subdivisions of advanced driver assistance systems

Driver assistance systems can be divided into three categories, according to their functionality [11]:

- Category A (informing function): These systems inform the driver about events within the vehicle’s environment. They thus expand the possibilities for receiving information necessary for the safe guidance of an automobile. Human-machine interfaces (HMIs) provide the information. These can be symbols on the speedometer, the exterior rearview mirror, or the head-up display. Acoustic signals such as warning tones are included as well. Category A functions have an “indirect” effect on the driver [11].

- Category B (continuously acting automating functions): These systems operate over a long period and intervene directly in driving the vehicle. This category includes, for example, Adaptive Cruise Control (ACC), which automatically maintains the preset speed while monitoring the distance to the vehicle in front. Within this category, the driver and assistance system share the driving task. The driver is always responsible for the car [11].

- Category C (intervening emergency systems): In emergencies, automated systems can often react faster than humans. For such cases, the automotive industry has developed so-called emergency systems, some of which are already mandatory by law or will become mandatory for new cars within the next few years. Examples are the emergency brake assistant (EBA) or an automatic avoidance system [11].

2.3 Advanced driver assistance systems in future mobility

For future mobility solutions, great attention is paid to autonomous driving systems. ADAS functions play a significant role on the road to autonomous vehicles. The classification of the Society of Automotive Engineers (SAE) has established itself worldwide. The SAE document J3016 divides the different driving modes (automation levels) into six levels [18]:

- Level 0 stands for no automation. That means that the human driver is in complete control of the vehicle. Driver assistance systems only provide support in the form of acoustic signals, such as the parking aid or the blind-spot warning system [18].

- Level 1 already describes the first support functions. The driver is actively supported when steering, accelerating, or braking. Examples are the Lane Departure Warning (LDW) system or an adaptive speed assistant with distance control [18].

- Level 2 links steering intervention with speed control. Systems in this class can accelerate or brake a vehicle and keep it on track simultaneously [18].

With the systems mentioned so far, humans must always keep an eye on their surroundings. In addition, the driver must perform all remaining aspects of the dynamic driving task—such as a lane change or an overtaking maneuver. In levels 3 through 5, the vehicle’s systems monitor the environment. Drivers are allowed to turn their attention away from the driving task; for example, they may read, sleep or watch television. Level 3 has a particular feature: the system can ask the driver to take control of the car or truck within a specific time [18, 28].

- A system fulfills Level 3 when it completely takes control of the car in a delimited, strictly defined situation. One example is the traffic jam pilot. When the traffic jam clears, the driver must take over the wheel again [18].

- Level 4 is for highly automated vehicles. Intervention by the occupants is no longer necessary. However, vehicles in this category still have pedals and a steering wheel that can be used for manual steering if desired [18].

- Level 5 is identical to Level 4 in terms of the system, but vehicles in this category no longer have a steering wheel or pedals. Therefore, the vehicle must be able to drive autonomously everywhere it travels [18].

To achieve the respective levels, different ADAS functions are relevant that take over the specific tasks. For this purpose, vehicle manufacturers use various sensor technologies to implement the distinct driving function [18]. The following section provides an overview of the sensors used for selected ADAS.

2.4 Sensors for advanced driver assistance systems

A growing number of environmental sensors such as radar, camera, ultrasonic, and lidar sensors are enabling the use of ADAS in modern vehicles and the associated functions for autonomous driving. At the same time, each sensor is very limited in its respective scope of application and cannot provide all the information on the vehicle environment required for safety functions. Only combining the data from different sensors (sensor fusion) results in a complete environment model—a fundamental requirement for the reliability and safety of driver assistance systems and autonomous driving [6]. In the first step, the individual environment sensors are described below, followed by a more detailed discussion of sensor data fusion.

2.4.1 Camera

Video sensors, also known as camera sensors or image sensors, are so-called image-based sensors. They provide essential optical information about the vehicle environment. Video sensors differ from simple cameras. Simple cameras passively display the image to the driver (for example, for reverse parking), who must evaluate the situation and react himself. Video sensors, on the other hand, are more intelligent and analyze image content fully automatically, including distance measurement and objective evaluation. That enables assistance systems to autonomously detect an impending collision and perform braking and steering maneuvers to avoid an accident. The core of today’s highly automated emergency brake assistants relies on video sensors [20].

2.4.2 Radar

Radar is the abbreviation for “Radio Detection and Ranging”. The radar sensor is beam-based. It is used to detect objects such as other vehicles and pedestrians and measure their distance from the vehicle and their relative speeds. The sensor information forms the basis for the operation of numerous safety systems which are designed to prevent accidents with appropriate warnings and vehicle interventions. These include distance control systems, lane change assistance systems, and collision warning and avoidance systems [27].

Short-range radar sensors are used in a range starting at about 5 metres. Medium-range radar sensors are used at distances of approximately 160 metres in front and up to 100 metres in the rear. Long-range radar sensors are mainly used at distances up to about 250 metres. Radar sensors can also provide essential 360-degree environment information for automated/autonomous driving cars at a distance of up to 250 metres as one of several sensor principles [27].

2.4.3 Lidar

Light Detection and Ranging (Lidar) is a beam-based sensor that uses light waves to measure distances and speeds. Like the radar sensor, the lidar sensor also emits electromagnetic waves, only in a different frequency range. As with radar sensors, the sensor data form the basis for numerous driver assistance and safety systems designed to prevent accidents with appropriate warnings and vehicle interventions. The lidar sensor is used, for example, in EBA or distance control systems [21].

2.4.4 Ultrasonic sensors

Ultrasonic sensors are beam-based sensors as well. They operate with sound waves at frequencies above the range perceivable by human hearing (above 16 kHz). In parking assistance systems, ultrasonic sensors are integrated into the front and back of the vehicle. Depending on the manufacturer and system, the distance to obstacles is indicated to the driver either acoustically or both visually and acoustically [17].

Ultrasonic sensors are required for autonomous/automated driving as well, primarily for environment detection in the close range of up to six metres and at low speeds. Due to the low frequency and relatively large wavelength, ultrasonic technology is less demanding and thus relatively inexpensive. The inherent motion of the vehicle and the dependence on air as the carrier medium are disadvantages, limiting distance measurement to small distances and low driving speeds [17].

2.4.5 Sensor data fusion in advanced driver assistance systems

The primary goal of data fusion is to combine data from individual sensors so that strengths are profitably combined and/or individual weaknesses are reduced. There are driver assistance systems that are based exclusively on single-sensor solutions. Examples include ACC, which uses a radar or laser sensor, and LDW, which is mostly camera-based. As described in the previous sections, the respective sensor technologies have specific advantages and disadvantages. For example, a radar sensor can be used to determine the longitudinal distance and speed of a vehicle in front with sufficient accuracy for the ACC application. However, due to the limited lateral resolution, ambiguities in the signal evaluation, and a lack of lane marking detection, the object that is relevant for keeping the distance can only be selected with limited precision, so that disturbances caused by vehicles in adjacent lanes have to be accepted during operation of the system. In addition, a classification of the detected object is only possible to a limited extent, so usually only moving objects are included in the control. The missing information can be provided, for example, by camera data. Lane marking detection supplies information that can be used for lane assignment. Classification algorithms can be used to distinguish vehicles in the video image from other objects, and image processing techniques can be used to determine the position of vehicles in the video image [2, 7, 8].
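The complementary roles of radar and camera described above can be illustrated with a minimal sketch. It assumes a hypothetical data layout (`RadarObject`, `CameraLane`, lateral offsets in metres with positive values to the right) that is not taken from any of the cited systems: the camera's lane boundaries gate the radar objects, and the nearest remaining object becomes the ACC target.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class RadarObject:
    """Hypothetical radar detection (names are illustrative assumptions)."""
    distance_m: float        # longitudinal distance, accurate for radar
    lateral_offset_m: float  # lateral offset from the vehicle centre line
    rel_speed_mps: float     # relative speed, accurate for radar


@dataclass
class CameraLane:
    """Hypothetical ego-lane boundaries from camera lane-marking detection."""
    left_m: float
    right_m: float


def select_acc_target(radar_objects: List[RadarObject],
                      lane: CameraLane) -> Optional[RadarObject]:
    """Keep only radar objects inside the camera-detected ego lane and
    return the closest one, or None if the lane ahead is free."""
    in_lane = [o for o in radar_objects
               if lane.left_m <= o.lateral_offset_m <= lane.right_m]
    return min(in_lane, key=lambda o: o.distance_m, default=None)
```

Without the camera lane information, the nearest radar object would be selected even if it drives in an adjacent lane, which is the kind of disturbance mentioned above.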

3 Visual simultaneous localization and mapping

Simultaneous Localization and Mapping (SLAM) is a technique for estimating the 3D structure of an unknown environment together with the motion of the sensor within it. The technique was originally proposed in robotics to achieve autonomous control of robots [4]. SLAM-based applications have since become widespread, for example online 3D modeling based on computer vision, augmented reality (AR)-based visualization, and self-driving cars. Early SLAM algorithms integrated several types of sensors, such as laser range sensors, rotary encoders, inertial sensors, Global Positioning System (GPS) receivers, and cameras. In recent years, SLAM algorithms using only cameras have been discussed intensively because the sensor configuration is simple and the technical difficulties are greater than with other sensor configurations. Because the input to such a SLAM consists of only visual information, the technique is specifically referred to as visual SLAM (vSLAM). vSLAM algorithms are widely used in computer vision, robotics, and AR [5]. They are particularly useful for camera pose estimation in AR systems, as the configuration of the systems can be as simple as camera-mounted tablets or smartphones. An important requirement for AR systems is a real-time response in order to merge real and virtual objects seamlessly and interactively. To achieve this response with limited computational resources on a lightweight portable device, various low-computational vSLAM algorithms have been proposed in the literature. The application of such vSLAM algorithms is not limited to AR systems. It is also valuable, for example, for unmanned autonomous vehicles (UAV) in robotics [9]. Most vSLAM approaches are divided into five technical modules: three basic modules and two additional modules, which are briefly presented below.

3.1 Basic modules of visual simultaneous localization and mapping

The basic modules of vSLAM are Initialization, Tracking, and Mapping. They are briefly presented in the following:

- Initialization: The fundamental step in using vSLAM is to define a specific coordinate system for camera position estimation and 3D reconstruction in an unknown environment. Therefore, the global coordinate system is defined first during initialization. A part of the environment is then reconstructed as an initial map in the global coordinate system [26].

- Tracking: After the initialization process, tracking and mapping are performed. Tracking involves following the reconstructed map in the image to continuously estimate the camera position relative to the map. For this purpose, distinctive matches between the captured image and the created map are first determined by feature matching or feature tracking in the image [26].

- Mapping: The mapping process expands the map by calculating the 3D structure of the environment when the camera observes unknown regions that have not been mapped before [26].

3.2 Additional modules of visual simultaneous localization and mapping

The basic modules of vSLAM are supplemented by the additional modules Relocalization and Global Map Optimization:

- Relocalization: When tracking has failed, relocalization is required. Reasons for this can be, among others, fast camera movements. In this case, relocalization makes it possible to recompute the current camera position relative to the reconstructed map [26].

- Global Map Optimization (including Loop Closing): The map usually contains a cumulative estimation error that grows with the distance of the camera movement. To eliminate this error, Global Map Optimization is usually performed. In this method, the map is refined considering the consistency of the whole map information. If previously recorded map elements are recognized, loops are closed, and the cumulative estimation error can be corrected from the beginning to the present. Loop Closing is a method for obtaining this reference information. To close a loop, a closed loop is first searched for by comparing the current image with previously acquired images. Generally, relocalization is utilized to recover the camera position, and loop detection is used to obtain a geometrically consistent map. Pose Graph Optimization is widely used to suppress the cumulative error by optimizing the camera positions. Bundle Adjustment (BA) is also used to minimize the map reprojection error by optimizing the map and the camera positions. In large environments, this optimization method is used to efficiently minimize estimation errors. In small environments, BA can be performed without loop closure as the cumulative error is small [26].
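The effect of closing a loop on the cumulative error can be illustrated with a deliberately simplified sketch. It is not Pose Graph Optimization or BA as used in vSLAM systems: it only assumes 2D positions without orientation and spreads the position error observed at the loop closure evenly over the trajectory. The function name and the data layout are illustrative assumptions.

```python
from typing import List, Tuple

Point2D = Tuple[float, float]


def distribute_loop_error(poses: List[Point2D],
                          loop_target: Point2D) -> List[Point2D]:
    """Spread the drift observed at a loop closure linearly along the path.

    poses:       estimated camera positions, first to last
    loop_target: position the last pose should have according to the
                 recognized, previously mapped location
    """
    n = len(poses) - 1
    if n < 1:
        return list(poses)
    # Accumulated error at the end of the trajectory.
    ex = poses[-1][0] - loop_target[0]
    ey = poses[-1][1] - loop_target[1]
    # Pose i receives the fraction i/n of the total correction, so the
    # first pose stays fixed and the last pose lands on the loop target.
    return [(x - ex * i / n, y - ey * i / n)
            for i, (x, y) in enumerate(poses)]
```

A real pose graph additionally optimizes orientations and weights each constraint by its uncertainty; the sketch only shows why a single recognized location can correct the whole trajectory back to its start.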

For the use of vSLAM in automotive vehicles, with the associated conditions such as fast scene changes and low texturing of the environment, various vSLAM algorithms are available, which can be found in [3]. In [3], various vSLAM approaches are compared in terms of accuracy and robustness, among other criteria. The following section describes a particular version of vSLAM, which is necessary for our research work.

3.3 Oriented FAST and rotated BRIEF (ORB) for visual simultaneous localization and mapping

The Oriented FAST and Rotated BRIEF (ORB)-SLAM algorithm was first presented in 2015 and is the current state of the art, as it has higher accuracy than comparable SLAM algorithms [15]. ORB-SLAM is a complete SLAM system for monocular, stereo, and Red Green Blue-Depth (RGB-D) cameras. The system operates in real time and achieves remarkable results in terms of accuracy and robustness in a variety of different environments. ORB-SLAM is used for indoor sequences, drones, and cars driving through a city. ORB-SLAM consists of three main parallel threads: Tracking, Local Mapping, and Loop Closing. It is possible to create a fourth thread to execute the BA after a loop has been closed. The algorithm is a feature-based approach that represents the detected points in a three-dimensional point cloud [3].
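Feature-based tracking relies on matching descriptors between images. ORB descriptors are binary strings, which are compared with the Hamming distance. The following minimal sketch illustrates such a brute-force match with a cross-check. It is not code from ORB-SLAM: descriptors are assumed to be stored as Python integers, and the function names and the `max_distance` threshold are illustrative assumptions.

```python
from typing import List, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def match_descriptors(query: List[int], train: List[int],
                      max_distance: int = 64) -> List[Tuple[int, int, int]]:
    """Brute-force matching with cross-check.

    Returns (query_index, train_index, distance) for every pair that is
    mutually the nearest neighbour and closer than max_distance.
    """
    if not query or not train:
        return []

    def nearest(descriptor: int, candidates: List[int]) -> int:
        return min(range(len(candidates)),
                   key=lambda j: hamming(descriptor, candidates[j]))

    matches = []
    for i, descriptor in enumerate(query):
        j = nearest(descriptor, train)
        distance = hamming(descriptor, train[j])
        # Cross-check: the train descriptor must also pick this query.
        if nearest(train[j], query) == i and distance <= max_distance:
            matches.append((i, j, distance))
    return matches
```

In repetitive, low-texture scenes many descriptors look alike, so mutual nearest neighbours become ambiguous; this is one way to see why additional distinctive landmarks help the matching.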

4 Testing of advanced driver assistance systems

Simulative test procedures during the development process and test procedures in reality are used to evaluate the functionality of individual ADAS sensors and their joint interaction in ADAS-relevant scenarios. In the early concept stage, all components of the road test are still virtual and are characterized by test procedures such as:

- Model in the Loop (MiL)

- Software in the Loop (SiL), or

- Hardware in the Loop (HiL)

Through the various stages of development, virtual test components are gradually exchanged for their physical equivalents. By the end, reality replaces the simulated elements completely [12]. Table 1 gives an overview of the respective possibilities and the advantages and disadvantages of testing in simulation and in reality.

4.1 Testing advanced driver assistance systems in simulation

The guiding idea of the virtual road test is to transfer the actual road test as realistically as possible into the virtual world. The aim is to benefit from the characteristic strengths of simulation in terms of reproducibility, flexibility, and effort reduction and to establish a test and evaluation facility for specifications and solutions derived from them early in the vehicle development process. Using suitable simulation methods enables more efficient design, development, and application of vehicles and vehicle components. These methods bridge and shorten the time until real-world vehicle prototypes are available. With actual driving tests and the reliability of real test results as a template, using simulation techniques is an optimization task. Modeling, parameterization, and the simulation effort must be in balance with the efficiency gained. The methods used for this approach are taken mainly from the repertoire of embedded mechatronic system development. SiL, MiL, and HiL methods come into question here [13].

4.2 Testing advanced driver assistance systems in reality

Despite their complexity and wide range of variants, vehicle dynamic control systems can still be validated at great expense in actual driving tests alone. However, this is no longer economically feasible for driver assistance systems with environment perception due to the system complexity, the complexity of the test cases, and the necessary scope of testing. Even if the tests are performed the same way, it is practically impossible to test under the same conditions due to numerous potential and unknown or unobserved influences. Thus, the reproducibility of results is not ensured. On one side, function-relevant features may involve the necessary interaction of several road users, and on the other, they may be subject to a complex interplay of general conditions, such as glare from the low sun and simultaneous reflection on a wet road surface at a certain angle. The functions of current ADAS access information about the environment, which is sometimes collected by several sensors with different roles and processed in an environment representation [14, 23].

The literature indicates that Euro NCAP is used as a standard. Euro NCAP is a standardized European test procedure for actual ADAS driving tests. The focus here is on the behavior and reaction of the vehicle in safety-critical situations. Dummies in the form of cars driving ahead, pedestrians, and cyclists are used in staged dangerous situations to evaluate the functionality of ADAS. In order to increase road safety, the requirements for ADAS are being raised. For this reason, the Euro NCAP test procedures will also include increasingly complex test scenarios in the future. Thus, the roadmap up to 2025 includes additional road users such as scooters, motorcycles, and wild animals [10]. Figure 1 shows a test setup for pedestrian detection, in which a pedestrian (a child dummy) crosses the road behind a parked car. The test vehicle has to detect the pedestrian in time and brake so that no personal injury or material damage occurs [19].

Fig. 1 Test setup for pedestrian detection, in which a pedestrian (a child dummy) crosses the road behind a parked car, in a Euro NCAP scenario for testing AEB [19]

Tests using prototypes in real road traffic with test persons are rarely possible for legal and safety reasons. However, the situations needed to validate numerous safety-critical functions, such as autonomous emergency braking (AEB) for pedestrians, can only be inadequately replicated even on a test track within the framework of NCAP test scenarios. With the increasing complexity of the driving situation in which the assistance system under test acts, it becomes more and more difficult to realistically and reliably evaluate the interaction of system behaviour and the driver’s experience and behaviour. VIL closes the gap between driving simulation and actual tests. Through the virtual visual representation on the one hand and the haptics, kinaesthetics, and acoustics experienced through the real vehicle movement on the other hand, VIL offers a new procedure based on augmented reality to develop and evaluate ADAS efficiently and safely [14]. This approach is described in the following section.

4.3 Vehicle in the loop

VIL is a newer method that meaningfully complements and improves development according to the V-model for driver assistance systems. It addresses the fact that many driver assistance functions require elaborate driving tests and place high demands on functional safety. This group of driver assistance functions will increasingly gain in importance and scope. One main reason is the ever-growing number of vehicle derivatives in which driver assistance functions are offered, and the accompanying ever-higher level of automation and networking. The VIL method allows the operation of the test vehicle in a virtual environment. The coupling between the vehicle and the virtual environment can be done in two ways. One way is to replace the real sensor system with an interface. At this interface, the simulation environment feeds in simulated sensor signals which correspond to the sensor response from a physical environment. The second way is to retain the real sensor technology and stimulate it artificially, as is feasible, for example, with ultrasonic sensors that are exposed to artificially generated response signals via ultrasonic transducers [24]. In both variants, the physical test vehicle reacts to features and events in the virtual environment. Critical driving manoeuvres towards obstacles or objects on a collision course can thus be tested safely and reproducibly. The interface can also be used to generate the sensor signals as they would occur with a changed sensor position in a vehicle derivative or with different tolerances. Thus, this method makes it possible to test corresponding derivatives or tolerances with a single test vehicle.

In addition to the considerably safer test operation, this allows effective testing and application of driver assistance functions. That results in a considerable economic potential for driving tests in the field of driver assistance. The use of virtual integration in conjunction with the VIL method allows efficient application of the customer function. The efficiency and reproducibility of the test cases required for this can thus increase significantly. Figure 2 illustrates the general operating principle of the VIL by the architecture as well as the flow of information [28].

Fig. 2 VIL architecture and flow of information [28]

5 Approach for using augmented reality for testing advanced driver assistance systems

To utilize the individual advantages of the test procedures in simulation as well as in reality, our approach is intended to be a crucial link alongside existing VIL approaches. In this chapter, the approach, the expected advantages, the associated challenges, and related considerations are presented.

5.1 Approach of using augmented reality in advanced driver assistance systems

Focusing on the camera-based ADAS sensors, the environment of the test area shall be recorded as shown in Fig. 3. The path between the sensor fusion module and the eventual Advanced-Driver-Assistance-Systems-Electronic-Control-Unit (ADAS-ECU), which causes the vehicle to intervene, is to be interrupted and followed by a new path via the Augmented-Reality-Electronic-Control-Unit (AR-ECU). Within the AR-ECU, the recorded data of the environment is augmented by virtual objects such as traffic signs, cyclists, pedestrians, or road markings. The aim here is to achieve a realistic and consistent behavior of the ADAS-ECU compared to test approaches with physical objects.

For the final augmentation of the virtual objects into the real sensor image, a detailed 3D model of the test environment must be created first. For this purpose, a vSLAM approach is chosen. The approach uses only visual inputs to perform localization and mapping. That means no vehicle sensors other than the vehicle’s camera system are needed to create an environment model, which makes this approach more flexible than approaches based on lidar, radar, or ultrasonic sensors.

5.2 Technical steps to use augmented reality in advanced driver assistance systems

To use AR in ADAS, the steps are carried out as in Fig. 4. The individual steps are explained below.

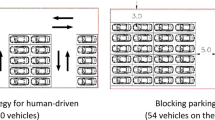

A stereo camera and the vSLAM approach ORB-SLAM3 are used to map the environment. The detected feature points are stored in a three-dimensional point cloud. The test drives are carried out on corresponding NCAP-suitable test areas. The low texture of the environment must be taken into account here. As the scene images are repetitive, pylons are used along the test track. These are positioned at intervals of 20 metres in varying numbers to the left and right of the test track. That ensures that feature matching and successive orientation in the 3D map are possible. As is typical for cars, the camera is mounted at the level of the rear-view mirror at the top of the windscreen.

Figure 5 shows the recorded scene of the test track and the feature points detected by the ORB-SLAM algorithm as green rectangles. The created point cloud can be seen in Fig. 6. The point cloud represents a straight roadway with pylons, road markings, and other elements such as trees on the left side or a hill on the right.

The next step is to extract the relevant feature points from the point cloud, which are needed for plane detection. As the first step, we use the random sample consensus (RANSAC)-algorithm, which makes it possible to calculate a plane on the road surface. This plane is used to ensure that the virtual objects can be realistically augmented at the correct height and at the correct position in the scene image. NCAP-relevant objects such as traffic signs, pedestrians, or road signs are created with the software Blender. Figure 7 shows a created cyclist for our approach.
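The plane detection step can be sketched as follows. This is a minimal, pure-Python illustration of RANSAC plane fitting under stated assumptions, not the implementation used in our pipeline: the point cloud is assumed to be a list of (x, y, z) tuples in metres, and the function names, the inlier `threshold`, the number of `iterations`, and the fixed `seed` are illustrative choices.

```python
import math
import random
from typing import List, Optional, Tuple

Point3D = Tuple[float, float, float]
Plane = Tuple[float, float, float, float]  # a*x + b*y + c*z + d = 0


def plane_from_points(p1: Point3D, p2: Point3D, p3: Point3D) -> Optional[Plane]:
    """Plane through three points with unit normal; None if collinear."""
    ux, uy, uz = (p2[i] - p1[i] for i in range(3))
    vx, vy, vz = (p3[i] - p1[i] for i in range(3))
    nx, ny, nz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    norm = math.sqrt(nx * nx + ny * ny + nz * nz)
    if norm < 1e-9:
        return None
    a, b, c = nx / norm, ny / norm, nz / norm
    return a, b, c, -(a * p1[0] + b * p1[1] + c * p1[2])


def ransac_plane(points: List[Point3D], threshold: float = 0.05,
                 iterations: int = 200, seed: int = 0):
    """Return (plane, inliers) for the plane supported by the most points."""
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        a, b, c, d = plane
        # Points closer to the candidate plane than the threshold are inliers.
        inliers = [p for p in points
                   if abs(a * p[0] + b * p[1] + c * p[2] + d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers
```

Because the road surface contributes by far the most coplanar points on a test track, the plane with the largest inlier set is expected to be the road, while points on pylons or vegetation are rejected as outliers.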

OpenGL is used to insert the virtual objects from Blender and performs the rendering process. Figure 8 shows the augmentation of the scene by the virtual cyclist. It can also be seen from the grid displayed on the ground that the cyclist stands on the recognised plane.
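Why the recognised plane matters for placing an object at the correct height and position can be illustrated with a plain pinhole camera model. The sketch below is a conceptual stand-in and not the OpenGL rendering pipeline: the camera intrinsics, the camera height, and the function names are assumed example values, and camera coordinates are taken as x to the right, y downwards, and z forwards.

```python
from typing import Optional, Tuple


def project_point(point_cam: Tuple[float, float, float],
                  fx: float, fy: float,
                  cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Project a 3D point in camera coordinates to pixel coordinates.

    Returns None for points at or behind the image plane.
    """
    x, y, z = point_cam
    if z <= 0.0:
        return None
    return fx * x / z + cx, fy * y / z + cy


def ground_anchor(lateral_m: float, distance_m: float,
                  camera_height_m: float) -> Tuple[float, float, float]:
    """Foot point of a virtual object standing on a flat road plane that
    lies camera_height_m below the camera (y axis points downwards)."""
    return lateral_m, camera_height_m, distance_m
```

With assumed intrinsics `fx = fy = 1000`, `cx = 640`, `cy = 360` and a camera 1.3 m above the road, the foot point of an object 20 m straight ahead projects to pixel (640, 425). The same object at 40 m projects closer to the horizon, which is the depth cue that makes the augmentation look consistent.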

The last step in our AR-ADAS pipeline is to drive through the test scene again with the physical vehicle. Here, ORB-SLAM is not used again in mapping-mode, but in the localization-mode. That will result in a higher vehicle speed due to the lower computational effort. The real camera images are now enhanced with the virtual objects used.

5.3 Results so far

ORB-SLAM creates a point cloud of the environment in stereo mode. Figure 6 shows the NCAP environment. The first tests showed problems with orientation due to the low texture and the constantly repeating environment. To solve this problem, pylons are used to give the algorithm a clear orientation. Pylons are allowed on the roadway during NCAP tests, so they remain usable for later evaluations. These pylons are placed at different distances, see Fig. 5. The point cloud shows that the mapping is of high quality. However, the scale is not yet correct. For example, the distance between two pylons, which is 160 m in reality, is 147 m in the point cloud, a deviation of 8.125%. This difference must be minimised in the further course of the research. At the current stage, it is caused by incorrectly recognised feature points in the sky and on the bonnet of the test vehicle. For this reason, image preprocessing and image segmentation are required. Initial approaches have already been implemented.
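The reported deviation can be reproduced with a short calculation. The scale factor shown alongside it is only an illustrative assumption (a uniform rescaling from one known pylon distance), not a correction method described in this work.

```python
def relative_deviation(true_m, measured_m):
    """Relative error of a measured distance with respect to the true distance."""
    return abs(true_m - measured_m) / true_m

def scale_factor(true_m, measured_m):
    """Uniform factor that would map the measured distance onto the true one."""
    return true_m / measured_m

deviation = relative_deviation(160.0, 147.0)
factor = scale_factor(160.0, 147.0)
print(f"deviation: {deviation:.3%}, scale factor: {factor:.4f}")
```

The deviation evaluates to 13/160, i.e. 8.125%, matching the value stated in the text.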

Image segmentation using artificial intelligence (AI) is intended to increase the accuracy and to overlay the point cloud created by ORB-SLAM with the recognized objects and surfaces. Figure 9 shows the object recognition. The left side shows the original image of the vehicle's front view. Since Euro NCAP tests are performed on unique test areas with low texture and therefore little information (cf. Figs. 1 and 5), the recognized classes for our approach are divided into pylons, own vehicle (engine hood), terrain, vegetation, road, and sky. Further classes can be added as the project progresses.

The aim is to derive planes from the point cloud, detect the road surface, and augment the objects realistically into the virtual map. Another focus of this work is the realism of the augmentation, such as occlusion, reflection, and shadow. The localization mode of the vSLAM will be used to augment the individual camera pixels in order to present the vehicle with a realistic, dangerous situation.

Furthermore, the virtual objects must be correctly inserted into the scene. The focus here is on the surface and material properties of the test objects. Figure 8 shows the virtual cyclist without material properties such as colour and shadows. In addition, the cyclist must be animated to make a realistic representation possible.
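One way the segmentation result could be used to suppress misleading feature points is to discard every keypoint whose pixel belongs to the sky or the engine hood. The following is a sketch under stated assumptions: the class IDs, the function name `filter_keypoints`, and the toy mask are hypothetical and not taken from this work.

```python
import numpy as np

# Hypothetical integer IDs for the six classes named in the text.
CLASSES = {"pylon": 0, "ego_vehicle": 1, "terrain": 2,
           "vegetation": 3, "road": 4, "sky": 5}
REJECTED = [CLASSES["sky"], CLASSES["ego_vehicle"]]

def filter_keypoints(keypoints_xy, class_mask, rejected=REJECTED):
    """Keep keypoints whose pixel does not belong to a rejected class.

    keypoints_xy: (N, 2) array of (x, y) pixel coordinates.
    class_mask:   (H, W) integer array with one class ID per pixel.
    """
    xy = np.round(keypoints_xy).astype(int)
    cols = np.clip(xy[:, 0], 0, class_mask.shape[1] - 1)
    rows = np.clip(xy[:, 1], 0, class_mask.shape[0] - 1)
    keep = ~np.isin(class_mask[rows, cols], rejected)
    return keypoints_xy[keep]

# Toy 6x8 mask: sky in the top two rows, engine hood in the bottom row.
mask = np.full((6, 8), CLASSES["road"])
mask[:2, :] = CLASSES["sky"]
mask[5, :] = CLASSES["ego_vehicle"]

keypoints = np.array([[1.0, 0.0],   # in the sky
                      [3.0, 3.0],   # on the road
                      [4.0, 5.0],   # on the engine hood
                      [7.0, 2.0]])  # on the road
kept = filter_keypoints(keypoints, mask)
print(kept)
```

Only the two road keypoints survive in this toy example. In a real pipeline the mask would come from the segmentation network and the keypoints from the ORB feature extractor.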

5.4 Advantages through our approach

Our approach is intended to combine the specific advantages of testing in simulation (reproducibility, flexibility, and reduction of effort) and in reality (complexity of the vehicle and the environment) and thus to form a link between the two testing methods. It is meant to make it possible to test more complex scenarios and thus increase safety for road users.

Existing VIL approaches from the industry show the need for such an approach. In contrast to conventional VIL methods, in which the dynamics of the vehicle correspond to reality but the sensors are only stimulated by a virtual environment, our approach represents a further step towards realistic vehicle testing. Using AR, our approach allows us to use the real environment with all its characteristics. Simplifications of the environment through simulation are eliminated. Figure 10 shows the differences between the approach of existing VIL methods on the left and our approach on the right.

Figure 10: Existing VIL approach on the left [28] and our approach of using augmented reality in advanced driver assistance systems on the right

The superimposition of lanes allows, for example, the testing of a lane departure warning system independent of the testing ground. Scenarios such as the appearance of temporary lane markings or the absence of sections can be tested on the same test area. Lane narrowing and widening can be represented as well as international differences between lane markings. Vehicles ahead can be superimposed on the camera image to test congestion assistance systems. In the first phase of testing, second vehicles including drivers can thus be dispensed with, reducing the costs of the tests and increasing the safety of the test engineers. Furthermore, test cases with traffic signs as well as pedestrians and cyclists can be augmented situationally and quickly. The combination of different test situations is also possible. The unlimited variety of test scenarios allows a significant increase in the depth of testing at an early stage of development. That increases the quality of the testing and thus of the overall system. Due to the increasing number of driver assistance systems and the constant development toward autonomous driving, the application area of the software program can be expanded at will.

5.5 Challenges for our approach

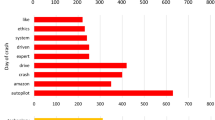

Various advancements and improvements in terms of accuracy, robustness, and runtime can be found in the further developments based on ORB-SLAM (ORB-SLAM2 [15] and ORB-SLAM3 [3]). While the performance of ORB-SLAM is impressive in well-structured sequences, errors can occur in poorly structured sequences such as the Euro NCAP test scenarios, or when feature points temporarily disappear, e.g., due to motion blur [29]. In addition to accuracy, the runtime of the overall algorithm is also of great importance. Current camera systems work with a frame rate of 30 to 60 frames per second (fps). The resulting maximum overall runtime for handling one frame can be found in Table 2.
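The per-frame time budget follows directly from the frame rate, since one frame must be processed before the next one arrives. A minimal sketch, in which the function name `frame_budget_ms` is an assumption:

```python
def frame_budget_ms(fps):
    """Maximum processing time per frame in milliseconds at a given frame rate."""
    return 1000.0 / fps

budgets = {fps: frame_budget_ms(fps) for fps in (30, 60)}
for fps, budget in budgets.items():
    print(f"{fps} fps -> {budget:.2f} ms per frame")
```

At 30 fps this leaves roughly 33 ms per frame, and at 60 fps roughly 17 ms, for the complete chain of localization, rendering, and image output.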

For a successful evaluation of ADAS test scenarios, the AR system must be able to orient itself in the environment very accurately [15]. One reason is the missing feedback about the impact intensity when test dummies are hit. It is therefore necessary to know the exact position of the car on the test track in order to calculate the intensity of the impact from the braking distance. In Euro NCAP test scenarios, velocities up to \(130\,\frac{km}{h}\) are tested [10]. The AR algorithm must process each frame faster than the camera system delivers it. The distance \(d\) the vehicle covers within one frame at a given velocity \(v\) and framerate \(f\) can be calculated by:

\[ d = \frac{v}{f} \]

At a speed of 130 \(\frac{km}{h}\) and a camera framerate of 30 fps, the vehicle travels

\[ d = \frac{130\,\frac{km}{h}}{30\,\frac{1}{s}} = \frac{36.11\,\frac{m}{s}}{30\,\frac{1}{s}} \approx 1.204\,m \]

per frame. Accordingly, for a framerate of 60 fps at the same speed, a distance of

\[ d = \frac{36.11\,\frac{m}{s}}{60\,\frac{1}{s}} \approx 0.602\,m \]

is covered. A delay of one frame therefore means a deviation of the test results of 0.602 to 1.204 metres. Given the high speed of the car and the high precision required in object placement, it is clear that the requirements for this application of AR are far stricter than for the usual applications with human users.
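The distance travelled per frame can be checked numerically. The sketch below assumes only the unit conversion from km/h to m/s (division by 3.6); the function name `distance_per_frame_m` is chosen for illustration.

```python
def distance_per_frame_m(speed_kmh, fps):
    """Distance in metres covered between two consecutive camera frames."""
    speed_ms = speed_kmh / 3.6  # convert km/h to m/s
    return speed_ms / fps

d_30 = distance_per_frame_m(130.0, 30)
d_60 = distance_per_frame_m(130.0, 60)
print(f"30 fps: {d_30:.3f} m, 60 fps: {d_60:.3f} m")
```

The results, about 1.204 m at 30 fps and 0.602 m at 60 fps, agree with the deviation range quoted in the text.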

5.6 Further thoughts about using augmented reality for testing advanced driver assistance systems

In the first step, our approach will be applied to camera-based sensors. As highlighted in the previous chapters, only a few ADAS functions, such as traffic sign recognition or lane departure warning, rely solely on the camera. To evaluate further tests and to achieve the same behavior of the ADAS ECU (cf. Fig. 3) with AR as in reality, further sensor technology such as radar must be integrated. It should also be mentioned that, according to the current state, Euro NCAP test scenarios only take place under ideal conditions (sun position at noon with no or only a few shadows and reflections, no other road users, no rain, …) [10]. Our approach is intended to further increase the complexity and realism of Euro NCAP test scenarios. Another aspect is the visualization of the augmentation for the driver. Here, the user's acceptance of AR is an important factor. Further investigations into a visualization for the user are being pursued as part of this project.

6 Conclusion

In this paper, we have proposed an approach for using AR in automotive vehicles. The use of AR in ADAS is intended to combine the advantages of simulative test procedures, such as reproducibility and cost savings, with the advantages of test procedures in reality (complexity of the entire vehicle and the environment). We modeled the problem of creating an urban environment in which AR can be used for testing ADAS at high speeds. Our approach is based on combining a vSLAM algorithm with AI-based object detection. This should yield better overall performance in terms of computing speed and accuracy. Creating a virtual 3D environment with a superior understanding of the individual objects should, in a further step, make it possible to augment other sensors, such as the car's radar and lidar, with objects in addition to the camera data. This should once again increase the overall performance of the entire system. In addition to providing a link between virtual and real test procedures, this approach is intended to increase the complexity of potential test procedures, accelerate the development of ADAS functions, and improve safety for future mobility solutions.

Availability of data and materials

Not applicable.

Code availability

Not applicable.

References

K. Bengler, K. Dietmayer, B. Farbe et al., Three decades of driver assistance systems: review and future perspectives. IEEE Intell. Transp. Syst. Mag. 6, 6–22 (2014)

R.R. Brooks, S.S. Iyengar, Multi-Sensor Fusion: Fundamentals and Applications with Software (Prentice Hall, Hoboken, 1998). https://search.library.wisc.edu/catalog/999904353602121. ISBN 978-0-13-901653-0

C. Campos, R. Elvira, J.J.G. Rodríguez et al., ORB-SLAM3: an accurate open-source library for visual, visual-inertial, and multimap SLAM. IEEE Trans. Robot. 37, 1–17 (2021). https://doi.org/10.1109/TRO.2021.3075644

R. Chatila, J. Laumond, Position referencing and consistent world modeling for mobile robots, in Proceedings. 1985 IEEE International Conference on Robotics and Automation, vol. 2 (1985), pp. 138–145. https://doi.org/10.1109/ROBOT.1985.1087373

R. Chatila, J.-P. Laumond, Position referencing and consistent world modeling for mobile robots, in Proceedings. 1985 IEEE International Conference on Robotics and Automation, vol. 2 (1985), pp. 138–145. https://doi.org/10.1109/ROBOT.1985.1087373

M. Darms, Eine Basis-Systemarchitektur zur Sensordatenfusion von Umfeldsensoren für Fahrerassistenzsysteme (TU Darmstadt, Düsseldorf, 2007). PhD thesis, Techn. Univ. Darmstadt, 2007. http://tubiblio.ulb.tu-darmstadt.de/35385/. ISBN 978-3-18-365312-6

M. Darms, Eine Basis-Systemarchitektur zur Sensordatenfusion von Umfeldsensoren für Fahrerassistenzsysteme. PhD thesis, Technische Universität Darmstadt (2007)

M. Darms, Fusion umfelderfassender Sensoren, in Handbuch Fahrerassistenzsysteme: Grundlagen, Komponenten und Systeme für aktive Sicherheit und Komfort, ed. by H. Winner, S. Hakuli, G. Wolf (Vieweg+Teubner, Wiesbaden, 2012), pp. 237–248. https://doi.org/10.1007/978-3-8348-8619-4_18. ISBN 978-3-8348-8619-4

J.J. Engel, J. Sturm, D. Cremers, Camera-based navigation of a low-cost quadrocopter, in 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems (2012), pp. 2815–2821

R. Fredriksson, M.G. Lenné, S. van Montfort et al., European NCAP program developments to address driver distraction, drowsiness and sudden sickness. Front. Neuroergon. 2, 786674 (2021). https://doi.org/10.3389/fnrgo.2021.786674. https://www.frontiersin.org/article/10.3389/fnrgo.2021.786674

T.M. Gasser, A. Seeck, B. Walker Smith, Rahmenbedingungen für die Fahrerassistenzentwicklung, in Handbuch Fahrerassistenzsysteme: Grundlagen, Komponenten und Systeme für aktive Sicherheit und Komfort, ed. by H. Winner et al. (Springer, Wiesbaden, 2015), pp. 27–54. https://doi.org/10.1007/978-3-658-05734-3_3. ISBN 978-3-658-05734-3

S. Hakuli, M. Krug, Virtuelle Integration, in Handbuch Fahrerassistenzsysteme: Grundlagen, Komponenten und Systeme für aktive Sicherheit und Komfort, ed. by H. Winner et al. (Springer, Wiesbaden, 2015), pp. 125–138. https://doi.org/10.1007/978-3-658-05734-3_8

B.-J. Kim, S.-B. Lee, A study on the evaluation method of autonomous emergency vehicle braking for pedestrians test using monocular cameras. Appl. Sci. 10, 4683 (2020). https://doi.org/10.3390/app10134683. https://www.mdpi.com/2076-3417/10/13/4683

C. Miquet, New test method for reproducible real-time tests of ADAS ECUs: “vehicle-in-the-loop” connects real-world vehicle with the virtual world, in 5th International Munich Chassis Symposium 2014 (Springer, Wiesbaden, 2014), pp. 575–589. https://doi.org/10.1007/978-3-658-05978-1_40

R. Mur-Artal, J. Montiel, J. Tardos, ORB-SLAM: a versatile and accurate monocular SLAM system. IEEE Trans. Robot. 31, 1147–1163 (2015). https://doi.org/10.1109/TRO.2015.2463671

M. Nagai, Research into ADAS with autonomous driving intelligence for future innovation, in 5th International Munich Chassis Symposium 2014, ed. by P.E. Pfeffer (Springer, Wiesbaden, 2014), pp. 779–793. ISBN 978-3-658-05978-1

M. Noll, P. Rapps, Ultraschallsensorik, in Handbuch Fahrerassistenzsysteme: Grundlagen, Komponenten und Systeme für aktive Sicherheit und Komfort, ed. by H. Winner, S. Hakuli, G. Wolf (Vieweg+Teubner, Wiesbaden, 2009), pp. 110–122. https://doi.org/10.1007/978-3-8348-9977-4_12. ISBN 978-3-8348-9977-4

On-Road Automated Driving (ORAD) Committee, Taxonomy and definitions for terms related to driving automation systems for on-road motor vehicles. Technical report (SAE International). https://doi.org/10.4271/J3016_202104. https://www.sae.org/content/j3016_202104 (visited on 05/30/2022)

AEB Pedestrian, Euro NCAP. https://www.euroncap.com:443/en/vehicle-safety/the-ratings-explained/vulnerable-road-user-vru-protection/aeb-pedestrian/ (visited on 05/30/2022)

M. Punke, S. Menzel, B. Werthessen et al., Automotive camera (hardware), in Handbook of Driver Assistance Systems: Basic Information, Components and Systems for Active Safety and Comfort, ed. by H. Winner et al. (Springer, Cham, 2016), pp. 431–460. https://doi.org/10.1007/978-3-319-12352-3_20. ISBN 978-3-319-12352-3

T. Raj, F.H. Hashim, A.B. Huddin et al., A survey on LiDAR scanning mechanisms. Electronics 9, 741 (2020). https://doi.org/10.3390/electronics9050741. https://www.mdpi.com/2079-9292/9/5/741

F. Schuldt, F. Saust, B. Lichte et al., Effiziente systematische Testgenerierung für Fahrerassistenzsysteme in virtuellen Umgebungen (2013). https://doi.org/10.24355/dbbs.084-201307101421-0. https://publikationsserver.tu-braunschweig.de/receive/dbbs_mods_00052570

P. Seiniger, A. Weitzel, Testverfahren für Verbraucherschutz und Gesetzgebung, in Handbuch Fahrerassistenzsysteme: Grundlagen, Komponenten und Systeme für aktive Sicherheit und Komfort, ed. by H. Winner et al. (Springer, Wiesbaden, 2015), pp. 167–182. https://doi.org/10.1007/978-3-658-05734-3_11. ISBN 978-3-658-05734-3

M. Sieber, G. Berg, I. Karl et al., Validation of driving behavior in the vehicle in the loop: steering responses in critical situations, in 16th International IEEE Conference on Intelligent Transportation Systems (ITSC 2013) (2013), pp. 1101–1106. https://doi.org/10.1109/ITSC.2013.6728379

J.E. Stellet, M.R. Zofka, J. Schumacher et al., Testing of advanced driver assistance towards automated driving: a survey and taxonomy on existing approaches and open questions, in 2015 IEEE 18th International Conference on Intelligent Transportation Systems (2015), pp. 1455–1462. https://doi.org/10.1109/ITSC.2015.236

T. Taketomi, H. Uchiyama, S. Ikeda, Visual SLAM algorithms: a survey from 2010 to 2016. IPSJ Trans. Comput. Vis. Appl. 9, 16 (2017). https://doi.org/10.1186/s41074-017-0027-2

H. Winner, Radarsensorik, in Handbuch Fahrerassistenzsysteme: Grundlagen, Komponenten und Systeme für aktive Sicherheit und Komfort, ed. by H. Winner, S. Hakuli, G. Wolf (Vieweg+Teubner, Wiesbaden, 2012), pp. 123–171. https://doi.org/10.1007/978-3-8348-8619-4_13. ISBN 978-3-8348-8619-4

H. Winner, S. Hakuli, F. Lotz et al., Handbuch Fahrerassistenzsysteme: Grundlagen, Komponenten und Systeme für aktive Sicherheit und Komfort (Springer, Berlin, 2015). ISBN 978-3-658-05734-3

C. Yu, Z. Liu, X. Liu et al., DS-SLAM: a semantic visual SLAM towards dynamic environments, in CoRR (2018). arXiv:1809.08379

Funding

We thank our partner EVOMOTIV GmbH, an automotive company in Germany, and the German Federal Ministry of Economics and Energy for supporting this project financially.

Author information

Contributions

MW, TW, FG and RK contributed to the design and implementation of the research, to the analysis of the results and to the writing of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Weber, M., Weiss, T., Gechter, F. et al. Approach for improved development of advanced driver assistance systems for future smart mobility concepts. Auton. Intell. Syst. 3, 2 (2023). https://doi.org/10.1007/s43684-023-00047-5

DOI: https://doi.org/10.1007/s43684-023-00047-5