Abstract

Machine learning and in particular deep learning techniques have demonstrated the most efficacy in training, learning, analyzing, and modelling large complex structured and unstructured datasets. These techniques have recently been commonly deployed in different industries to support robotic and autonomous system (RAS) requirements and applications ranging from planning and navigation to machine vision and robot manipulation in complex environments. This paper reviews the state-of-the-art with regard to RAS technologies (including unmanned marine robot systems, unmanned ground robot systems, climbing and crawler robots, unmanned aerial vehicles, and space robot systems) and their application for the inspection and monitoring of mechanical systems and civil infrastructure. We explore various types of data provided by such systems and the analytical techniques being adopted to process and analyze these data. This paper provides a brief overview of machine learning and deep learning techniques, and more importantly, a classification of the literature which have reported the deployment of such techniques for RAS-based inspection and monitoring of utility pipelines, wind turbines, aircrafts, power lines, pressure vessels, bridges, etc. Our research provides documented information on the use of advanced data-driven technologies in the analysis of critical assets and examines the main challenges to the applications of such technologies in the industry.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

There has been considerable literature concerning the deterioration of critical systems and infrastructure around the world, and the resulting health and safety implications, whether these are roads, bridges, or energy related infrastructure. As reported by [1], there are at least 150,000 bridges in the United States alone that have lost their structural integrity and are no longer fit for purpose. Mechanical systems and civil infrastructure deemed critical assets by both government and industry, are vulnerable to damage mechanisms, which can adversely affect social services and the overall productivity of an economy.

This has ensured that regular inspection and maintenance is now standard practice. The operation and maintenance (O&M) costs, resulting from standardized inspection and maintenance practices, have been quite considerable for government and industry. O&M cost accounts for a large proportion of lifecycle costs in critical systems; for instance, the O&M expenditure in the wind energy industry amounts to 25%-30% of total costs [2]. Challenges to conventional maintenance and inspection practices of civil infrastructure and mechanical systems involves the fact that most methods and protocols employed are bureaucratic and labour intensive. The inspection and monitoring of assets are usually undertaken manually, with technicians and operators having to travel sometimes to distant locations hundreds of miles away. In some cases, operators and technicians must work in environments which are subject to intensive conditions caused by heat, cold, noise, wetness, dryness, etc. In other cases, the location may be inaccessible to human technicians, as in the case of large storage tanks or underground pipelines.

Technological advancements and emergence of robotics and autonomous systems (RAS) have begun to revolutionize monitoring and inspection of mechanical systems and civil infrastructure. This revolution has provided an interest and demand for the use of RAS technologies to support the monitoring, inspection and maintenance of offshore wind farms, gas and utility pipelines, power lines, bridges, railways, high rise buildings, vessels, storage tanks, underwater infrastructure, etc., in order to mitigate the current health and safety risks that human operators currently experience while inspecting or monitoring such infrastructure within the energy, transport, aerospace and manufacturing sectors [3]. There is a drive in both industry and government for the development and availability of RAS technologies that can be deployed to provide data on the condition of assets and help technicians undertake actions deemed necessary, based on the information provided by RAS. This information can be signals provided by hardware instruments, or images taken by cameras from damaged, shadowed, rough, or rusty surfaces.

Robot inspections have been proven to be more efficient and faster than human inspections. For instance, the inspection of wind turbines using unmanned aerial vehicles (UAVs) take considerably shorter time than that using conventional visual inspection [3]. As indicated in a case study reported by [4], the traditional rope access method can inspect only one wind turbine per day, whereas a UAV can inspect up to three wind turbines in a day. Vast amount of data with diverse formats (such as, audio, video, or digital codes) can be collected by numerous RAS technologies that are deployed to monitor and inspect infrastructure. However, it will be quite time-consuming, if not impossible for human operators to analyze this volume of incoming data using conventional computing models. Machine learning (ML) techniques provide advanced computational tools to process and analyze all the data provided by RAS technologies efficiently, speedily, and accurately. The evolution from teleoperated robot systems which require remote human control to autonomous systems, which when pre-programmed can operate without human intervention has helped in the maturity and ascendance of RAS technologies which remove the need for travel, bureaucratic paperwork requirements, etc. While there might still be a number of RAS technologies that work offline, there is a growing number of RAS technologies that are wireless, remotely transferring data, e.g., images of structures, materials, etc. to a control office through inter-networks for analysis.

The aim of this paper is to provide an academic contribution by reporting on literature and research related to the use of ML in RAS-based inspection and monitoring of mechanical systems and civil infrastructure. It also proposes a classification and analysis of different ML techniques used for the analysis of data yielded from RAS-based inspections. This means that the research in this paper investigates and identifies which study, in which literature, has used which ML technique, to support different RAS technologies deployed for inspection purposes. To achieve this aim, we identify the relevant literature with keywords including: robotics, inspection, machine learning, maintenance, mechanical engineering, civil infrastructure, and asset. We will also provide a review and classification of ML techniques; the types of damage mechanisms being considered, e.g., corrosion, erosion, fatigue, cracks, etc.; the types of inspections; and robotic platforms that have been used to support both industry and academic research. In addition, a review will be conducted on the characteristics of datasets collected during RAS inspections of civil and mechanical infrastructure, including: sources of data (public or non-public); types of data (e.g., image, video, documents etc.); size of data; velocity or rate of data generation and transmission; and the variety of data (structured or unstructured). Following on from this, there will be an evaluation of the results and findings. Finally, there will an exploration of potential development in RAS for inspection and monitoring of future assets.

The rest of this paper is organized as follows. Section 2 reviews different types of RAS technologies that have been proposed and designed to support the inspection and monitoring of mechanical systems and civil infrastructure. Section 3 reviews the characteristics of the data collected by RAS systems for inspection and monitoring purposes. Section 4 reviews various types of ML techniques that can and have been used to process and analyze data from RAS inspections. Section 5 discusses the findings of the literature review undertaken in this research and then finally, Section 6 reviews some of the current technology gaps and challenges in the application of ML techniques for RAS based inspection of mechanical systems and civil infrastructure. The organization of this literature review is schematically illustrated in Fig. 1.

2 RAS technologies for monitoring and inspection

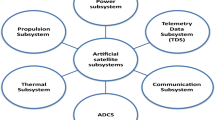

Today, there are a variety of robotic and autonomous systems being developed and deployed in various industries, including aerospace, manufacturing, energy, transport, agriculture, healthcare, etc. RAS systems are widely used to support monitoring, maintenance and inspection of mechanical systems and civil infrastructure. These technologies are provided with artificial intelligence (AI), sometimes referred to as machine learning (ML), to enable and complete complex tasks, as well as process vast amounts of data. The mechanical design for an RAS system used to support inspection, monitoring and maintenance purposes, can be categorized by specific locomotion and adhesion mechanisms. The adoption of an inspection robot’s locomotion and adhesion mechanisms is sometimes offset against task or application specific requirements such as payload, power requirements, velocity, and mobility [5, 6].

Locomotion in robotics specifically refers to directional movement that makes it possible for an object to move from location to another, the mechanism that makes a robot capable of moving in its environment. The literature states that there are four main types of locomotion that a robot system could be fitted with, depending on the task and environment they are being built to support [5]. These four locomotion types include: arms and legs, wheels and chains, sliding frame, and wires and rails. Considering the pros and cons of each type, arm and legged robots are better suited for maneuver around obstacles in the environment when compared to other locomotion systems. Conversely, wheels and chain-driven locomotion are best suited to environments with a flat and even surface and ill-suited for navigating obstacles in the environment. The sliding frame locomotion mechanism comprises of a mechanical design that has two frames which move against one another in rotation. This mechanical design however provides for low speeds. Finally, locomotion involving wires and rails comprises of a simple system where the robot is held in place by wires and rails [5]. Adhesion in robotics refers to the mechanism by which robot systems can attach or cling to surfaces in their environment. Common adhesion mechanisms for RAS systems that support inspection tasks ranging from magnetic adhesion and pneumatic adhesion (whether that be the passive suction cups type or active suction chambers type or vortex thrust systems type) to vacuum sucker, propeller, and dry adhesion.

In the following subsections, we review RAS-based inspection systems that are currently being used in different industry sectors. These systems range from platforms operating below sea level to those operating within the troposphere (ground level to about 10-20 km above sea level) and the ones purposed to operate in the thermosphere and exosphere (space and beyond). We therefore suggest five robot categories, including: unmanned marine robots, ground-based robots, climbing and crawler inspection robots, unmanned aerial robots, and space inspection robot systems.

2.1 Unmanned marine robot systems

Some literature use the term ‘unmanned marine vehicles (UMV)’ as an umbrella term for unmanned surface vehicles (USV) and unmanned underwater vehicles (UUV) respectively [6]. UUVs however can be further classified as either autonomous underwater vehicles (AUV) or remotely operated vehicles (ROV) [7].

AUVs are unmanned, pre-programmed robot vehicles, purposed and deployed into the ocean depth, autonomously without the support of cabling and human intervention. When the AUV completes its task, it returns to a pre-programmed location, where its data can be retrieved, downloaded, processed, and analyzed. An ROV is an unmanned robot which is deployed into ocean depths; however, the difference is that an ROV is connected to a ship by cables. An operator located on the ship pilots the ROV. The cables attached to an ROV are used to transmit commands and data between the operator and robot. AUVs can be deployed to support inspection of hazardous objects, surveying and mapping wrecks, and deep underwater infrastructure (e.g., subsea cables). ROVs are usually deployed into dangerous or challenging deep water environments for human divers. Therefore, both AUV and ROV robot systems are fitted with and supported by a variety of sensors to collect data. The data provided may be used for military or civilian surveys, inspections, surveillance, and exploration purposes. AUVs and ROVs are usually equipped with cameras for obtaining video images underwater. ROVs use cameras to transmit video telemetry to human operators for analysis and decision-making. Sound navigation ranging (sonar) and fiber optic gyros (FOG) support object detection, obstacle avoidance and navigation. ROVs might also be fitted with robotic arms for collecting underwater samples [8].

2.2 Unmanned ground robot systems

Unmanned ground-based robots operate autonomously on ground surfaces. They are sometimes referred to as mobile robots in some academic literature, alternatively they are otherwise referred to as unmanned ground vehicles (UGV) or land-based robots. Ground-based robots in literature are also sometimes categorized based on their locomotion, which among other criteria is based on the environment it is deployed into, which are usually even, stable environments. A ground-based robot has the advantage of being able to support and carry maximum amounts of payload where appropriate, however the disadvantage of these robot types is their lack of mobility with uneven terrain [6].

Unmanned ground-based robots can be categorized as wheeled robots, walking (or legged) robots, tracked robots, or robots with a hybrid of either wheeled, legged or tracking [9]. Wheeled robots navigate on the ground using motorized wheels to propel themselves [9]. Literature states that there are four types of wheeled robot, which can be differentiated by the number of degrees of freedom (DOF) they hold. DOF is defined as the number of independent variables that can define the motion or position of an object (or mechanism) in space. These four types include the fixed standard wheel; the castor wheel; Swedish wheel, and finally the ball or spherical wheel. There are also several types of wheeled robots, including the single-wheeled robot, two-wheeled robot, three-wheeled robot, and so on, each with their unique mobility feature or characteristic [9].

Legged (or walking) robots, unlike wheeled robots, navigate on both even and uneven surfaces, hard and soft surfaces, and can detect obstacles in their path or environment. Legged robots can be classified as one-legged (hoppers), two-legged (humanoid), three-legged, four legged (quadruped), five-legged, six-legged (hexapod), and so on [9]. Hybrid ground-based robots are robots that combine legged, wheel and track locomotion systems in any given configuration [9].

The applications of unmanned ground robots are numerous, and they range from the nuclear industry, where human operators are replaced by robots to operate in radioactive environments, to military operations for surface repairs, navigating minefields, explosive ordinance disposal (EOD), carrying and transporting payload, etc. Other state-of-the-art applications include reconnaissance, surveillance, and target acquisition operations; and space exploration as in the case of NASA’s planetary rovers [10]. Unmanned ground-based vehicles are also fitted with array of sensor payload options to support autonomous operations, navigation through the environment and data collection. Cameras are used to scan the robot’s environment and support calculation of its position. Furthermore, motion detectors, infrared (IR) sensors, temperature and contact sensors support object detection, obstacle and collision avoidance and obstacle localization. Laser range finder sensors which use a laser beam to generate distance measurements, producing range data, also support object detection and obstacle avoidance [8].

2.3 Climbing and crawler inspection robots

Wall climbing and crawler robots were developed for movement on vertical plane environments for the inspection and maintenance of a range of assets such as storage tanks, nuclear power facilities, and high-rise buildings [11, 12]. Oil refineries consist of storage tanks that require cleaning; along with a requirement for routine inspection and non-destructive testing (NDT) of these tanks to check for cracks and leaks. The traditional and manual implementation of these routine inspection and maintenance tasks results in very high labor and financial costs. The development and deployment of climbing and crawling robot systems can help with automating these tasks [12].

Climbing robots adopt an adhesion mechanism, based on the type of the environment they are deployed into [6]. These robots employ magnetic adhesion or pneumatic or negative pressure (depending on the suction or thrust type) adhesion. According to literature, climbing robot systems are usually fitted with arm and leg locomotion mechanism systems. The number of arms and legs can vary from two to eight legs, albeit eight legged robots are not as common. Alternatively, climbing and crawler robots can otherwise be fitted with wheels of chain-driven locomotion [5]. The adhesion mechanisms available today make climbing and crawling robots capable of attaching to structures and materials, while also providing a reliable platform for attached payload and tools [6].

Literature reports some information about the velocity, and mobility of the climbing and crawler robots. Climbing robot systems might need to reach high velocity on a vertical plane, for optimal movement between inspection locations. With respect to mobility, climbing robot systems which are fitted with arms and legs can navigate uneven surface, steps, and other objects in the environment [5]. When considering the payload requirements for climbing and crawler robots, some sensors such as ultrasonic sensors, gravity sensors, acceleration sensors etc. are used to measure and provide data about the distance of objects or obstacles in front [5, 6, 11–13]. The literature provides a varied advice on the weight of the payload capacity, ranging between 10 kg and 30 kg. Obviously, the weight requirements will be dependent on the tasks that the robot system is deployed to complete [5, 11, 12]. However, robotics engineers must engage with the problem that climbing and crawling robots will need to suitably offset the take up of heavy payloads, while securing adhesion on the challenging surfaces that they are designed for [6].

2.4 Unmanned aerial robot systems (or drones)

Unmanned aerial robot systems are interchangeably referred to as unmanned aerial vehicles (UAVs), and more commonly referred to as drones in the literature. Various multidisciplinary disciplines, ranging from environmental monitoring to civil engineering are increasingly deploying drones to support with various inspection type applications [14]. This is because research has continuously shown that the use of drones for inspection-based tasks reduce the need for human actors, their risk and exposure to injury and fatality, maintenance, and downtime costs [14–16]. Seo et al. [14] indicates that the selection of a drone for a particular application is based on some criteria including the mission duration, battery life, camera and video resolution, payload capacity, GPS and collision avoidance and cost performance [14]. Locomotion in drones is provided by propellers, or as referred to rotors in certain literature. The term propellers and rotors are used interchangeably in the literature, although technically, a rotor could be considered a horizontal propeller (such as those mounted on a helicopter), while propeller could technically be the vertical rotor mounted on an airplane. Nevertheless, they are identical objects seen from different angles. A propeller propels an object, using the thrust as the force for horizontal movement and lift for vertical movement – providing for a vertical take-off and landing (VTOL) capability. Motors provide power to propellers and spin them at high speeds. These high speeds in turn create the thrust or lift which provide the drone with the required locomotion [15]. Lattanzi et al. [6] contended that drones compromise with having reduced stability and how much payload they can support, with the advantage of increased movement and mobility [6]. In literature, flight time or duration of operation is inextricably linked to battery life or number of batteries available in a drone. The battery provides the electricity to power the drone, and most drones are only capable of providing enough power to support 20-30 minutes of flight time [14].

Drones are described in literature as technology platforms that can support a variety of applications and carry a variety of sensor payloads. Sensor payloads can vary from thermal and infrared to optical cameras. Light detection and ranging (LIDAR) is used to measure and provide data on the distance of objects, and with radar, the angle and velocity of objects as well [16]. Literature indicates that the higher the number of propellers (or rotors) supporting the drone, the greater the drone’s payload capacity. Although there are caveats to this guidance, one of which is that increased payload results in a decrease in the drone’s flight time and range capacity [17]. Drones can support payload from 150 g to 830 g, depending on battery life [17]. Literature and research that have deployed drones for infrastructure inspection have indicated the use of commercial cameras with resolutions of 12 to 18 megapixel, with each 15-minute flight instance providing over 1200 images [18].

2.5 Space inspection robot systems

RAS systems have increasingly become a critical aspect of space technology, supporting a variety of space missions. Space robotics can be classified as either small or large manipulators, or humanoid robots [19]. Space robots, also referred to as space manipulators in some literature, meet decreased and applied gravitational forces on them. In most cases, these robots rotate and hover and glide in orbit [20]. Literature demonstrates two types of applications for space-based robotics, these include on-orbit assembly and on-orbit maintenance (OMM). OMM applications involve repair, refueling, debris removal, inspection, etc.

Since the scope of this paper is concerned with inspection purposed platforms, we review only those space robot systems that are developed to support inspection tasks. This includes the development of the orbiter boom sensor system (OBSS) by the Canadian Space Agency (CSA). The OBSS was deployed to inspect the façade of the thermal protection system of space shuttles [19]. Space robots can be deployed to ferry varied payloads of kilograms to tons on space installations [21].

[22] provided a description of the teleoperated robotic flying camera called the Autonomous Extra Vehicular Robotic Camera (AERCam), that is used to support astronauts by providing them with a way to inspect and monitor the shuttle and space station. The first version of the robot was called AERCam Sprint and was deployed on a shuttle in 1997. The AERCam Sprint was fitted with a ring of twelve infrared detectors and two color cameras to enable vision capability [22]. [23] provided a report on a space inspection robot developed by NASA, called Tendril. The Tendril is a manipulator robot type purposed to support space missions by inspecting difficult to reach locations, e.g. fissures, craters, etc. Nishida et al. [24] provided a report on a prototype model for what they describe as an ‘end-effector’ for an inspection and diagnosis space robot. Pedersen et al. [25] mentioned a space robot designed to inspect the Mir station called Inspector, indicating however that it failed while in flight.

3 Features of data collected from RAS-based inspections

This section reviews the characteristics of data collected by RAS technologies, which are then processed and analyzed by analytical methods and techniques to support the monitoring and inspection of mechanical systems and civil infrastructure. We will explore the characteristics of input data collected by RAS systems using the four Vs data model [26–29]. Literature indicates that data can be categorized through its volume, which refers to the quantity of data that can be generated and stored; veracity, which refers to the quality of data collected as input; velocity, which refers to the speed at which data can be produced; and variety, which refers to the type and format of data collected. These four characteristics are briefly described below:

3.1 Volume (quantity or size of data)

Meyrowitz et al. [8] advised that there is a direct relationship between the type of sensor fitted to a robotic autonomous system and the volume of data produced. This position considers that certain sensors by default generate larger quantities of data compared to others, e.g., cameras which produce video data can generate millions of bits of data.

3.2 Variety (type and format of data)

Literature review showed that most research papers that have deployed climbing and crawler robots fit them with an array of sensors to collect a variety of data types. The data types include sound waves and their distance to an object, using ultrasonic sensors; acceleration and velocity using accelerometers and gravity sensors [13]. Climbing and crawling robots also collect image and video data using cameras [11, 13]. Literature review of the types of data collected by UAVs have demonstrated that they have been purposed to collect image and video data [15, 18, 30–32]. In a study by Alharam et al. [33], the UAVs have also been purposed to collect data on gas leakage, specifically methane (CH4) from oil and gas pipelines. The types of data collected by UUVs, specifically ROVs for underwater inspection, include image and video data; angular, velocity, orientation, depth, and pressure data, collected by optical and gyro sensors [7, 13]. The types of data collected by unmanned ground robots (UGRs) vary from images and videos collected by cameras; distance measurements collected by range finder sensors; and sound wave data collected by ultrasonic sensors [10].

3.3 Velocity (speed of data generation)

While the literature indicates that certain types of sensors produce higher volumes of data compared to others, it is also indicated that the speed of data generation and transmission has a direct correlation with the transmission medium or link used, and sometimes the environment the data is transferred within [8]. Except for on-board RAS data processing and analysis, data rates are slowed depending on their environment, Meyrowitz et al. [8] demonstrated that, with current technology, underwater RAS systems operate in an environment that reduce the rate of data transmission [8].

3.4 Veracity (quality and accuracy of data)

Literature indicates that the quality and accuracy of data can be directly linked to the frequency and type of transmission link used for data on RAS systems. In the instance of UUVs, Meyrowitz et al. [8] provided the instance of sonar imaging, where the combination of low frequency and bad transmission links result in reduced resolution and lots of interference that will require cleaning in image and acoustic data [8]. This has led to research into the development of better, high performance data transmission links, ranging from fibre optics to laser links. Alternatively, RAS systems with ML technology on-board, can process and analyze data with greater veracity because the data has not yet been subject to the degradation that is directly linked to transmitting the input data.

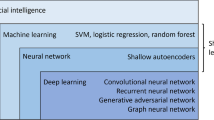

4 Machine learning techniques

A review of literature provides a distinct categorization between different ML algorithms. These algorithms are referred to as either supervised learning, unsupervised learning, or reinforcement learning algorithms. This section provides an overview of some of the popular ML techniques documented in literature and used to process and analyze data collected from RAS-based inspection operations.

4.1 Supervised learning

There are supervised learning techniques that are used to provide prediction-based solutions for problems that can either be categorized as classification or regression. These techniques require vast amounts of labelled data as input. In this approach to ML, the outputs (sometimes referred to as targets in literature) are pre-determined and directed towards interpretation and prediction. The dataset provided is separated into a training set and test set respectively, and then it is labelled with features of interest. When trained, the system can identify and apply these labels to new data, e.g., when taken through the supervised ML process, a system can be trained to identify new images of an object. Therefore, given input (x) and output (y), a supervised learning algorithm can learn the mapping function y = f(x); so that given input (x), this can lead to a prediction of output (y) for the given data [34–37].

There are two types of supervised learning approaches to ML. The first type is the regression learning process, where a model predicts a continuous quantity based on its input variables. Regression predicts continuous values such as height, weight etc., these values are referred to as continuous because they reside within an infinite number of possibilities, e.g., weight is a continuous value because there is an infinite number of possible values for a person’s weight [38, 39]. The other type refers to the classification-based supervised learning process, where the output or target is categorical (discrete or finite number of distinct groups or classes). Classification refers to the process of identifying a model that takes a given dataset and sorts the data within it into distinct or separate classes or labels. Classification models in supervised machine learning are often described as a technique for predicting a class or label [34–37, 40, 41].

While most ML algorithms can be applied to solve both classification and regression problems, algorithms best suited to support classification-based problems include the K-nearest neighbors (KNN), logistic regression, support vector machine (SVM), decision tree, naive bayes, random forest, and artificial neural network (ANN). Conversely, algorithms best suited to support with regression-based problems include linear regression as well as random forest [39]. These techniques are briefly reviewed in the following.

4.1.1 Linear regression

Linear regression is a supervised model or algorithm used to predict the value of a variable based on the value of another variable. The model assumes that linear relationship between the input variables (x) and a single output (y), and that the output (y) can be calculated from a linear combination of the input variable (x). This is referred to as a simple linear regression. Literature describes multiple input variables as multiple linear regression. Linear regression models fit a straight line to a dataset, to describe the relationship between two variables.

4.1.2 Support vector machine (SVM)

SVM is a supervised machine learning algorithm that can be applied to both classification and regression approaches. SVM algorithm identifies a “decision boundary” or “hyper plane” to separate a dataset into two distinct classifications. The algorithm attempts to maximize the distance between the nearest data points of the two classes within the dataset. Support vectors are the data points nearest to the decision boundary; and a change in the position of the support vectors will result in the change in the position of the decision boundary. The greater the distance the data points are from the decision boundary, the more concrete their classification. The distance between the decision boundary and the nearest data point is called the margin. SVM is very accurate and works very well with small datasets. However, with large datasets it usually results in longer training times [33, 35, 42–44].

4.1.3 Decision trees (DT)

Decision Trees (DTs) are a supervised ML algorithm, that builds classification or regression models. DTs take the form of a tree diagram, breaking down a dataset into smaller subsets, to facilitate the development of a tree with a root node, a decision node, with each outward branch of the node representing a possible decision, outcome, or reaction. The decision tree comprises of decision nodes and leaf nodes, with leaf nodes representing a classification or a decision. DTs are typically used to determine a statistical probability or more simply, a course of action for complex problems. DTs provide a visual output of a given decision-making process and they can process both numerical and categorical data. However, DTs are susceptible to unbalanced datasets which generate biased models. DTs are also susceptible to overfitting - which occurs when a model fits too closely to the training data and is less accurate when introduced to new and previously unseen data [33–35, 42–44].

4.1.4 Random forest (RF)

Random forest is a supervised ML algorithm that can be applied to both classification and regression-based problems. It grows multiple individual decision trees for a given problem and merges them together to make a more accurate prediction. The RF technique uses randomness and ensemble learning to produce uncorrelated forests of decision trees. Ensemble learning is a method that combines various classifiers such as decision trees, and takes the aggregation of their predictions to provide solutions. The most commonly known ensemble methods are bagging and boosting. Bagging creates a different subset from the training data, with the final output based on majority voting. Boosting on the other hand is a method (e.g., ADA Boost, XG Boost) that combines “weak learners” into “strong learners” by creating sequential models so that the final model generated delivers the highest accuracy. Random forests, unlike DTs, are not susceptible to over-fitting, however, they are a time-consuming and resource-intensive technique [33].

4.1.5 XGBoost (Extreme Gradient Boosting)

XGBoost is short for ‘Extreme Gradient Boosting’. The XGBoost is a supervised ML algorithm implementing the gradient boosting decision tree framework. The algorithm can be applied to solve classification, regression, and prediction problems. It creates and works to optimize (through a boosting technique) each upcoming decision tree, so that the errors of each following decision tree are reduced compared to the previous tree that came before it. The boosting technique involves a process where there is a gradual learning from data, resulting in improved prediction for building subsequent decision iterations.

4.1.6 K-Nearest Neighbor (K-NN)

The KNN algorithm is a supervised ML algorithm, best suited to classification models. The algorithm makes an estimation of the probability that a new data point belongs to a particular group. This process involves looking at the data points in proximity and then identifying which data points have similar features to the new data point. The new data point is then assigned to the group which has most data points with similar features close the new data point. The KNN algorithm is very easy to implement and fast to execute. However, KNN does not classify data points very well and the accuracy of the algorithm is dependent on the quality of the dataset [35, 42–44].

4.1.7 Naive Bayes

Naive Bayes is a supervised ML technique used to solve classification problems, and is based around counting and conditional probability. It uses the Bayes theorem to classify data. The Naive Bayes algorithm naively assumes that all characteristics of a data point are independent of one another. The Bayes’ theorem is based on the understanding that the probability of an event may require to be updated as new data becomes available. The algorithm seems to perform much better with categorical data (for example, it works well when applied to document classification and spam filtering) than with numerical data [34, 35, 42].

4.1.8 Logistic regression

Logistic regression is a supervised learning and classification algorithm for predicting a binary outcome, where an event occurs (True) or does not occur (False). The algorithm is used to distinguish between two distinct classes. It is considered a supervised ML algorithm because it has X input features and a y target value, and uses labels on the dataset for training. The algorithm works to find the logistic function of best fit to describe the relationship between X and y. Logistic regression algorithm is similar to the linear regression algorithm, except that the linear regression works with continuous target variables (numbers within a range) while the logistic regression is used when the target variable is categorical. The algorithm transforms its output using the sigmoid function to return a value which is then mapped to two or more discrete classes. Binary regression, multinominal logistic regression and ordinal regression are the three main types of logistic regression. Binary regression is used to process Boolean values, multinomial is used to process n≥3 values, and ordinal logistic regression processes n≥3 ordered classes. Logistic regression has been used to support various applications from medical diagnosis to fraud detection in banking [34, 42].

4.1.9 Artificial Neural Network (ANN)

Artificial Neural Networks (ANNs), which can solve both regression and classification problems, are modelled on the neural networks in the human brain. Like the human brain that contains billions of neuron cells that are connected and distribute signals in the human brain, ANNs are made up of artificial neurons, called units, grouped into three different layers. The first layer is called the ‘input layer’ which receives data and then forwards the data received to the second layer called the ‘hidden layer’. The hidden layer performs mathematical computations on the data received from the input layer. The last layer is the output layer, which returns data as output. Deep neural networks (sometimes called deep learning in academic literature) refers to neural networks that contain multiple hidden layers [35, 42–46].

4.1.10 Convolutional Neural Network (CNN)

Convolutional Neural Network (CNN) is a type of ANN that detects patterns and helps with processing of vision-based tasks. CNN is made up of an ML unit algorithm, called perceptions. A CNN can make predictions by analysing an image, check to identify features, and classify the images based on this analysis. CNN consists of multiple layers that process and extract features from data. These layers include the Convolutional Layer, Rectified Linear Unit (ReLU), Pooling Layer, and Fully Connected Network (FCN). The Convolutional Layer contains filters that perform the convolution operation, while the ReLU layer performs operations on elements and outputs a rectified feature map. The Pooling Layer takes the rectified feature map as input, and performs a ‘down-sampling’ operation that reduces the dimensions of the feature map. The pooling layer then converts the two-dimensional array output from the pooled feature map into a linear vector by flattening it. FCN layer takes the flattened matrix from the pooling layer as input and then proceeds to classify and identify the images [45–48].

4.2 Unsupervised learning

Literature tells us that unsupervised learning is where algorithms identify patterns within a given dataset. Unsupervised learning process involves searching for similarities that can be used to group data. Some of the most used unsupervised learning algorithms include the K-means clustering algorithm, Hierarchical clustering, Anomaly detection, principle component analysis (PCA), Independent Component Analysis, Apriori algorithm, singular value decomposition [34–37].

4.3 Reinforcement learning

Reinforcement learning (RL) takes an alternative approach to supervised and unsupervised learning. RL does not require the system to learn from data, instead learning is the result of feedback and reward. This involves a series of trial and error by a software agent. Some of the most common unsupervised learning algorithms include: SARSA – Lambda algorithm; Deep Q Network (DQN) algorithm; Deep Deterministic Policy Gradient (DDPG) and the Asynchronous Advantage Actor-Critic algorithm (A3C) [35–37].

4.4 Deep learning

The term deep learning refers to a subset of ML techniques that requires vast amounts of data to train models to output values, interpretations, or predictions. Deep learning methods are ANNs with more than one hidden layer and they can be supervised or unsupervised. Applications that require the application of deep learning techniques as supervised learning, include image classification, object detection and face recognition. Alternatively, applications that require the deep learning techniques as unsupervised learning are usually instances where there is no labelled data and for clustering problems, e.g., image encoding and word embedding.

4.4.1 Deep Neural Network (DNN)

A deep neural network (DNN) are ANNs that have more than one hidden layer (therefore the term “deep”), that are trained with vast amounts of data. Each hidden layer comprises of neurons that map a function to input to provide an output. DNNs are trained through the adjustment of its neurons, biases and weight features. These types of neural networks are also supported by various techniques such as the back-propagation algorithm and optimization methods such as stochastic gradient descent. Three types of deep neural networks include multi-layer perceptrons (MLP), convolutional neural networks (CNN) and recurrent neural networks (RNN). DNN features support speech recognition systems, and translation systems like Google Translate [49, 50].

4.4.2 Deep Belief Networks (DBNs)

Deep belief networks (DBNs) consist of unsupervised networks, that comprise of a stack and sequence of connected restricted Boltzmann machines (RBMs). The DBN trains each of the Boltzmann machine layers until they converge. The value of the output layer of a Boltzmann machine is input into the next Boltzmann machine in the sequence, then again trained until convergence is reached. This process is repeated with each Boltzmann machine until the whole network has been successfully trained. Applications of DBNs vary from generating of images to video sequences and motion capture [51–53].

4.4.3 Recurrent Convolutional Neural Networks (RCNN)

Recurrent Convolutional Neural Networks (RCNN) algorithms detect and localize objects in an image. This is done by drawing rectangular boundary like boxes around objects contained within an image, placing a label on, or categorizing each defined box in an image, extracting features in the image using the SVM algorithm, and then processing the features using a pre-trained CNN. The last stage in the process brings separate regions together to obtain the original image with the identification of the objects within the image [47, 54–58].

4.4.4 Fast R-CNN

An iteration or evolution and improvement of the R-CNN model can be found with the Fast R-CNN algorithm. Fast R-CNN model takes the image as a whole and passes it to its neural network to output, the output is then sliced into region of interests (ROI).

4.4.5 Faster R-CNN

A further evolution of the R-CNN model is the Faster R-CNN algorithm [59]. Faster R-CNN is a better performing and faster algorithm than R-CNN and Fast R-CNN, because it only uses CNNs and does not use SVMs, and provides a single feature extraction of an image, instead of region-by-region extractions of an image like R-CNN. According to literature, this results in Faster R-CNN training networks at least nine times faster, with more accuracy than R-CNN [59, 60]. However, what makes Faster R-CNN distinct to its predecessor Fast R-CNN is the use of the Region Proposal Network (RPN) technique [60].

4.4.6 Mask R-CNN

The Mask R-CNN is an extension of the Faster R-CNN technique. Literature describes the Mask R-CNN technique as an advanced image segmentation method, which takes a digital image and breaks it down into segments or pixels, and then categorizes the segments. For example, a single image is segmented and categorized to identify multiple objects in the image [61].

4.4.7 R-FCN

Literature describes the R-FCN model as being based on region proposal. The difference between the R-FCN and R-CNN techniques (which is also based on region proposal) is that R-FCN applies the selective pooling technique that extracts features for prediction on the last layer of its network [62].

4.4.8 Single Shot Detector (SSD)

The Single Shot Detector (SSD) is an ML technique that breaks down an image into a grid of cells. In turn each cell has the function of detecting objects, by predicting the category and location of objects in the region where the images are located within. Literature indicates that the SSD model is faster than the Faster R-CNN model. However, when the object size is small, the model’s performance decreases [63].

4.4.9 You Only Look Once (YOLO)

The YOLO (You Only Look Once) algorithm uses CNNs to detect and recognize objects in a picture in real-time. YOLO first takes an entire image as input, divides the image into grids (this is different to R-CNN that uses regions to localize objects in an image), image classification and localization are applied to each grid. The algorithm then predicts the rectangular (bounding) boxes and their associated classes. The YOLO model does however find it difficult to localize objects properly compared with R-CNN [37, 64].

4.4.10 Recurrent Neural Networks (RNNs)

Classic neural networks are described as ‘feed forward’ networks because they channel information in a single forward direction, through a series of mathematical operations performed at the nodes of the network. Data is fed through each node as input, never visiting a node more than once, before being processed and converted into an output. Feed forward networks only perceive the current sample data that has been provided in present time and have no facility for memory with respect to previous data samples processed. In other words, classic neural networks do not have the facility for data persistence.

RNNs are a type of deep neural network, and unlike classic neural networks they take both the current data sample and the previously received samples as input. RNNs can process data from the first input to the last output and initiate feedback loops throughout the entire computation process, enabling the loop of data back into the network. RNNs are distinct from feed forward networks by the feedback loop connected to their past decisions. RNNs allow previous outputs to be provided as inputs, while also having hidden states. RNN models are commonly used in the natural language processing (NLP) and speech recognition domain [65, 66].

4.4.11 Long Short-Term Memory Networks (LSTMs)

LSTMs are a special type of RNN that help preserve the error that can be back propagated through layers and time. LSTMs provide recurrent networks with the ability to learn over time. This is made possible in large part to LSTMs’ gated cell, from which data can be written to, read from and stored into, all external to the back and forth of the recurrent network [67].

4.4.12 Generative Adversarial Networks (GANs)

GANs are described as generative deep learning unsupervised learning algorithms. The technique was introduced in 2014 by Ian Goodfellow. The premise of GANs involves a neural called a generator, which produces fake data samples. The generator works in concert with another network called the discriminator which has to differentiate between two different input data samples. The first being the original data samples and the second being the fake data samples being created and output by the generator. The discriminator has to evaluate, learn and make decisions as to which data sample is from the actual training set and which are form the generator [68, 69].

4.4.13 Multilayer Perceptrons (MLPs)

A perceptron is an input layer and an output layer that are fully connected; and comprise of input values, weights and bias, net sum, and an activation function. A fully connected neural network with multiple layers is called Multilayer Perceptron (MLP). MLP is a supervised learning feed forward deep neural network that connects multiple layers in a directed graph, in other words, where the signal path is through a single direction through the nodes, between the input and output layers. In this network, every node, with the exception of the input nodes, contains a non-linear activation function. MLPs can be used to build speech-recognition, image-recognition, and machine-translation applications [70, 71].

4.4.14 Restricted Boltzmann Machines (RBMs)

Boltzmann machines are non-deterministic, generative deep learning models with only two types of nodes - hidden and visible nodes. There are no output nodes, which provides them with the non-deterministic feature. Boltzmann Machine has connections among the input nodes, with all nodes connected to all other nodes including input or hidden nodes. This allows universal information sharing of parameters, patterns and correlations of the data. Restricted Boltzmann Machine (RBM) are a special class of Boltzmann Machines. RBM is an unsupervised two-layered (visible layer and hidden layer) neural network. RBM is characterized by restrictions where every node in the visible layer is connected to every node in the hidden layer but no two nodes in the same group are connected to each other [51–53].

4.4.15 Autoencoders

Autoencoders are an unsupervised type of neural network that can be used to detect patterns or structure within data to learn a compressed representation of data provided as input. The autoencoder learns how to compress the data based on its attributes during training. An autoencoder is a feed forward neural network where the input is the same as the output. It is made up of encoder and decoder models. The encoder compresses the input, and the decoder works to re-create the input from the compressed version of the input that was provided by the encoder. Applications of autoencoders vary from anomaly detection, data denoising (audio and images), dimensionality reduction etc. [72–76].

Table 1 reviews the advantages and disadvantages of different ML techniques reviewed in Section 4 of this paper.

4.5 Performance evaluation metrics for machine learning techniques

In this section, we examine the academic conventions in literature for measuring the performance of machine learning techniques in the detection of material defects and equipment failures. Current academic literature has provided methods for evaluating the performance of computer vision and especially machine learning methods and techniques, when applied to extract, process and analyze datasets. The literature indicates that while ML techniques can be used to extract and analyze data, these techniques however can also generate false results due to misclassification or misinterpretation of data collected. This is the reason for performance measurement metrics, which evaluate the performance of ML methods and the ratio of correct predictions or classifications to misclassification or incorrect predictions.

Classifiers or classification-based ML techniques in literature usually use the confusion matrix, accuracy/error, precision, recall, F1 measure (or F-measure), ROC, AUC, Hypothesis test (t-test, Wilcoxon signed-rank test, Kappa test) as the metric system or evaluation measurements [78]. Regression based problems use MSE (mean squared error), MAE (mean absolute error), MAPE (mean absolute percentage error), RMSE (root mean squared error) which is the square root of the average distance between the actual score and the predicted score, and quantiles error for evaluating the performance of machine learning methods applied as solutions [79].

4.5.1 Confusion matrix

Given that classification is when the output data is one or more discrete labels, regression however is when the output data or prediction of the model is a continuous quantity or value. Binary classification is where there are only two class categories of vector y (target label) (e.g., True or False, 1 or 0, etc.) in the dataset. Conversely, multi-class classification is when there are three or more classes or categories of the vector y (target label) in the dataset. The performance measure of ML techniques, specifically for classification tasks (e.g., binary classification, multi-class classification) are commonly analyzed using a confusion matrix, which is a two-dimensional matrix that measures the False Negative (FN), True Negative (TN), False Positive (FP), and True Positive (TP) for each model used. TP is in reference to the positive points that are correctly labelled by the classifier. TN refers to the negative points that were correctly labelled by the classifier. FP are the negative points that were incorrectly labelled as positive, and FN are the positive points that we mislabeled as negative.

These measurements are then used to calculate the performance metrics: recall, F1 score, accuracy, and precision. The accuracy is a percentage of predictions that are correct (TP + TN). The precision measures how accurate the model being used is for predicting values. The recall measures the sensitivity of the model to predict positive outcomes. The F-measure is a combination of the precision score and recall score [33, 80].

4.5.2 Mean Square Error (MSE) and Mean Absolute Error (MSD)

The performance measure of a regression model is commonly analyzed using either the mean square error (MSE) or the mean absolute error (MSD). The MSE measures the average of the squares of the errors, that is the average squared difference between the predicted value and the target value. The lower the MSE, the better. The MSD measures the average of the absolute differences between model prediction and target value.

4.5.3 Mean Average Precision (MAP)

Some studies have recommended the use of the evaluation metric called the mean average precision (MAP) to analyse the performance measure of object detection (localization and classification) models such as SSD, R-CNN and Faster RCNN and YOLO [31]. The MAP is also commonly applied to analyze the performance of computer vision models and image segmentation problems. In MAP, an object (the predicted value) is taken as accurate on the condition it overlaps with what is labelled as the ground truth (the original damage annotated by human inspectors) that is greater than a given threshold. This is calculated using the Intersection over Union measure, which is expressed as the area overlap (predicted value overlap with the target value) over the area of union (area of the union of the predicted value and target value) [81].

5 Findings of literature review

This section provides an overview of the findings of the literature review in terms of types of mechanical systems and civil infrastructure assets. We then proceed to examine different types of damage mechanisms that can be found on these assets. This is followed by a review of our findings regarding the application of ML techniques used to support RAS-based monitoring and inspection.

5.1 Types of assets under inspection

We begin the review of our findings with discussing the types of various mechanical systems and civil infrastructure assets that literature indicate are subject to routine inspection and monitoring due to their vulnerability to catastrophic damage.

5.1.1 Pipelines

Pipelines in the energy industry support the transport and distribution of water, oil, and gas. Similar to most infrastructure, pipelines are subject to internal and external mechanical stresses. Such stresses can lead to damage mechanisms ranging from corrosion, cracks scale formation etc. This phenomenon can be mitigated through regular monitoring, inspection, and maintenance. There has been some research aimed at methods and techniques to support the inspection of pipes or pipelines more efficiently, this research ranges from Mohamed et al. [82], who looked at the use of mobile in-pipe inspection robots (IPIR) for inspection of corrosion and cracks in pipelines, using NDT sensors, e.g., ultrasonic. There is also the work carried out by Bastian et al. [30], who applied CNN architecture-based techniques to detect corrosion from pipeline images and DNN to extract features from those images.

5.1.2 Wind turbines

Wind turbines are another part of the energy industry and are generally located in remote environments, which are subject to extreme external stresses, e.g., wind, water, heat, etc. Similarly, just like pipelines, wind turbine infrastructure is also subject to mechanical stresses resulting in damage mechanisms such as erosion, cracks, etc. This is the reason for the emphasis and importance placed on regular inspection, monitoring and maintenance in industry planning and cost allocation. While wind turbine inspection is generally carried out using traditional methods, involving manual climbing of heights by technical inspection teams, in sometimes hazardous conditions, the last decade has seen a lot of research into the development of cost effective and safe methods and techniques that support the inspection and monitoring of asset infrastructure. This research has provided the alternative use of robot platforms, which vary from magnetic climbing robots to unmanned aerial drones. Current research has also seen the development of artificial intelligence-based algorithms and techniques that will analyze the vast amount of data collected by robot platforms, during inspection. Wang and Zhang [2] has researched the use of Haar-like feature algorithms for the automatic detection of surface crack images collected by UAVs; while Frank et al. [83] proposed applying a deep convolutional neural network (DCNN) technique on images from a wind turbine, taken by a multi-robot system. Shihavuddin et al. [31] carried out research using convolutional neural network (CNN) techniques to extract feature descriptors from images of wind turbines taken by drones; and the Faster R-CNN technique to train for object detection.

5.1.3 Aircraft fuselage

In the aerospace industry, the aircraft fuselage is one of the core and most important regular inspection tasks performed by maintenance technicians. The process involves deploying platforms that elevate technicians so that they can reach and inspect the external surface of the aircraft’s fuselage, searching for damage mechanisms or defects. The main damage or defect mechanism found on aircraft surfaces is corrosion. Corrosion is a main cause of fuselage fatigue. Alongside checks for corrosion, technicians also look for rust, cracks, and deformation of the aircraft surface during their inspections. This process has traditionally involved a painstaking, methodical task, undertaken by a technician, equipped with a flashlight and mobile elevation platform [84]. Research into alternative methods of inspection have been published over recent years. This includes Malekzadeh et al. [85] work which applied deep learning techniques: SURF, AlexNet and VGG-F to images of aircraft fuselage, taken by a custom-made platform. Miranda et al. [86] did similar work when they applied a CNN based application, along with an SVM model to images of an aircraft fuselage, taken by UAVs. The most recent work is research carried out by Brandoli et al. [84] which involved applying CNN models: DenseNet and SqueezeNet to detect corrosion pillowing in images taken from an aircraft fuselage.

5.1.4 Power lines

In the energy industry, power transmission lines act as connections between the source of power (the power plants) and endpoints (the consumers) [87]. The regular inspection of power transmission lines is considered vital in ensuring uninterrupted power supply, as damage to this part of the electrical infrastructure through rusted conductors etc. can result in downtime and power interruption [88]. As indicated by Titov et al. [88], the traditional operation for power line inspection involves a process characterized by high cost and safety risk, where human technicians manually taking images from the ground or from above with the support of a helicopter. There has been research in recent years that have demonstrated the use of UAVs (drones) to support the collection of image data. Jalil et al. [87] carried out work on power lines, employing a drone, fitted with image capture equipment to collect data, which is then passed on to a neural network model for detection and analysis of damage or defects from the image dataset. Research undertaken by Titov et al. [88] employed UAVs to take images of power lines for detection and analysis of cracks on concrete poles, missing or dirty insulator plates etc. using the YOLO version 3 deep learning technique.

5.1.5 Vessels

The maintenance of vessels, especially maritime transport ships such as oil tankers or very large crude carriers (VLCC) require regular monitoring and inspection schedules [89, 90]. These vessels are subject to typical internal and external phenomenon such as cracks, corrosion etc. Current inspection procedures for vessels today require are expensive and the vessel to dock at a shipyard, where inspectors, with the support of all sorts of mobile platforms undertake visual assessment of the structural health and condition of the vessel. Recent research over the years have studied the use of robot platforms in support of vessel inspections [89, 90]. This research has been accompanied with the development of various image processing and damage (e.g., corrosion and cracks) detection algorithms to analyze image datasets. However, there is evidence of very little to no research of the use of deep learning (neural network) based techniques.

5.1.6 Bridges

Bridges are typical civil infrastructures that are subject to external phenomenon such as wind, heat, water, and vibrations. The inspection of bridges currently requires manual visual inspection by human inspectors, working at different levels of elevation, with various levels of risk associated, to access and view parts of the bridge. This manual approach requires high time durations, in some cases road closures, high costs and due to the vast number of bridges in cities today, a lot of manpower. The last decade has seen quite a significant amount of research into the use of robot and autonomous systems (RAS) to support bridge inspections. These robot platforms are fitted with sensors ranging from infrared (IR) cameras to ultrasonic sensors [14, 91]. This has been accompanied with research into computer vision and image processing techniques that detect damage mechanisms, defects, and features (e.g., cracks) [1]. This research has recently moved towards the use of deep learning methods to analyze images and detect cracks [49, 92, 93].

5.1.7 Automotive vehicles (cars)

Currently most literature available has concentrated on the analysis of image datasets have been focused on vehicle make and model classification in support of the transport and security industry [94] defect and damage detection and analysis in support of the automotive industry and the insurance sector that supports it [95, 96]. Most automotive inspection required are focused on analysis of vehicular accidents, requiring image analysis of damages such as bumper dents, door dents, glass shutters, broken head lamps and tail lamps, as well as scratches etc. [95–97]. Recent years has produced research in the development of computer vision and neural network techniques for image analysis of car damage [95–99].

5.2 Damage mechanisms on mechanical systems and civil infrastructure

Corrosion and cracks are damage mechanisms associated with bridges, roads, rail, and levees in literature [1, 49, 93, 100]. Most of the current literature focus on the inspection of wind turbines on surface damage, specifically, leading edge erosion, surface cracks, damaged lightning receptors and damaged vortex receptors [2, 31, 83]. In the case of pipelines, literature focus inspection of cracks, corrosion, or erosion [30, 33, 37]. Literature discussions of aircraft inspection consistently focuses on the fuselage, for damage mechanisms such as corrosion pillowing and insulator surface cracks. Some literature on aircraft inspection however do not specify damage mechanisms, and instead uniformly refer to defect regions [84–86].

Power lines and transmission lines in literature, are associated with cracks on concrete poles, identification of missing or dirty insulator plates, rusted conductors, broken cables, insulator damage, conductor corrosion and cracks on insulator surfaces [87, 88, 101, 102]. The concentration on the inspection of vessels or ships in literature surrounds the detection, localization and classification of cracks, corrosion, or coating breakdown, pitting, and buckling [89, 90, 103].

While there could be a case for the monitoring and inspection of automotive vehicles during servicing and checkups for damage mechanisms from internal and external phenomenon (e.g., wind, water, heat), such as corrosion, fatigue etc. there seems to be little to no literature on such research available at this time. The literature available is mostly focused on damage to cars because of vehicular accidents [95–99]. A review of literature shows that corrosion and cracks are the most addressed damage mechanism, while erosions and fatigue seem to be the least addressed damage mechanisms. Table 2 demonstrates the typical research into damage mechanisms on mechanical infrastructure, that machine learning techniques have been used to detect, classify, and model.

Based on the literature reviewed, manual visual, data collection is still the norm for bridge inspection, however, in research where a robot platform is deployed to collect image datasets of damage mechanisms on bridges, most papers have reported the deployment of UAVs [49, 93, 100]. Likewise, literature on wind turbine damage inspection showed a majority preference for drones to support with data collection [17, 31, 83].

In the case of pipelines, the literature shows a mixed picture, with a balanced preference for deploying drones for some, and a preference for the use of mobile in-pipe inspection (IPIR) robots for others [33, 37]. The literature, however, provides a different landscape for the use of RAS systems in aircraft inspections. While some literature has demonstrated the use of UAVs in recent years, the literature however, indicates a preference for the use of D-Sight Aircraft inspection system (DAIS) platforms. These are portable non-destructive devices, that support the visual analysis of aircraft surface areas of the fuselage [84–86].

While in some cases, piloted helicopters are still used to gather data, however, literature demonstrates that power lines and transmission lines inspection overwhelmingly deploy UAVs for data collection of damage mechanisms [87, 88, 101, 102]. Literature regarding vessels or ships, present a very mixed preference for the use of semi-autonomous micro-aerial vehicles (MAVs) and more recently, climber or UAV robot platforms for inspection and data collection of damage mechanisms [89, 90, 103]. Literature review of automotive vehicles for cars, has unfortunately provided very little to no research with respect to the use of robot and autonomous system platforms for damage inspection.

5.3 Application of machine learning techniques for RAS inspection

This section reviews the application of machine learning techniques used to support RAS system inspection of civil and mechanical infrastructure (wind turbines, pipelines, rail, aircraft fuselage, power lines, vessels, and automobiles) in literature and performance evaluations of their use where documented. Gopalakrishnan et al. [49] looked at the processing and analysis of images of cracks on civil and mechanical infrastructure, obtained through UAVs (or drones). They applied deep convolutional neural networks (DCNN) models to process and analyze the image data and lauded the efficacy of DCNN as the more efficient technique for processing and analyzing both images and video type data [49]. Nguyen et al. [104] reviewed the use of various deep learning techniques in support of RAS systems inspection of power line infrastructure and indicated that Region-Based Convolutional Neural Networks (R-CNN) and the You Only Look Twice (YOLO) techniques as the optimal techniques for object detection for inspection tasks by RAS systems.

Shihavuddin et al. [31] explored the use of drones to obtain image data of damage on wind turbines (WT), for processing, analysis and classification using deep learning techniques. The research paper indicates that the research used Convolutional Neural Networks (CNN) as the backbone framework for processing and extracting features from image data obtained from WT using drones. Shihavuddin report their research proceeded to then use the faster R-CNN technique to train their models for object detection. They report achieving high accuracy results compared to other deep learning type algorithms used to train the models during their research. Shihavuddin et al. [31] also indicated that employing the technique called advanced image augmentation, allows you to expand your dataset, as this technique creates additional images for the training model, by altering existing image data obtained and fed into training sets. The utility of this technique is invaluable, as the larger the dataset, the more efficient the training model.

Franko et al. [83]’s research provieded findings in the use of a combined, multiple RAS platform, ranging from climbing and multicopter robots, fitted with LiDAR, RGB and ZED cameras, ultrasonic, radar and other vision type sensors, to inspect and detect corrosion and welding line damage mechanisms on the tower surfaces of WTs [83]. Alharam et al. [33] provided a case study in the use of UAVs to provide inspection of oil and gas pipelines in Bahrain. The UAVs are fitted with GPS, thermal cameras, and gas detectors to obtain image and methane (CH4) readings from gas and oil pipelines. The research looked at the use of the Decision Tree (DT), Support Vector Machine (SVM) and Random Forest (RF) techniques to process and analyze the data obtained from the drones. Franko et al. [83] reported that the RF technique provided 93% accuracy and much better performance than other classification techniques used in their research [33].

Bastian et al. [30] studied the external corrosion on pipelines and used deep neural networks to process and analyze the image and video data obtained from inspections. In their research paper, they proposed the use of a DNN technique, based on the CNN architecture, to extract and distinguish between images with corrosion and those without, from image data taken from pipelines by the UAV. They boast in their paper that CNNs have the most optimal results in terms of object detection and image classification.

Table 3 shows us that the literature demonstrates that the DCNN architecture provides up to 90% accuracy for the detection of cracks in civil infrastructure [49]; 92% accuracy in defect detection in rail infrastructure [105]. Table 1 also indicates that while the Random Forest algorithm is the best performing algorithm when compared to the Decision Tree and Support Vector Machine algorithms for detection of cracks, corrosion, and erosion on pipelines, with SVM yielding the least precision and accuracy between them [33]. However, custom CNN architectures have been reported to provide over 93% with respect to precision, accuracy, and other metrics within the confusion matrix [30]. Table 3 also advises that research on the RAS inspection and monitoring of aircraft fuselage has demonstrated that CNNs can provide up to 92% accuracy in the detection of surface and joints corrosion [84], while DNNs can provide a better performing accuracy of 96% [85]. In the case of power lines, Table 1 shows us that there is a preference for the use of custom -CNNs, Faster R-CNN or the YOLO v3 technique for extracting, analyzing, and classifying data collected from the RAS inspection of power lines; with these techniques having been reported to provide over 90% in their precision or accuracy in classifying image data [30, 88, 104]. There seems to currently be very little literature on the use machine learning techniques in the support of RAS inspection of vessels, one of the exceptions is research documented by Ortiz et al. [103], where the ANN technique has been used to extract, classify, and analyze corrosion, cracks and coating breakdown from image data collected by a micro-aerial vehicle [103].

Most of the limited literature that examines the application of machine learning techniques supporting the RAS inspection of automobiles focuses on the damage classification of damaged vehicles, because of accidents and associated insurance claims. The research shows a preference for CNNs or Mask R-CNN object recognition or damage detection of automobiles, with CNNs providing accuracy as high as 87% and the Mask R-CNN as high as 94% [95, 96].

Figure 2 provides an illustration of our findings regarding the frequency of use of popular ML techniques to process, analyze and model damage mechanisms on mechanical systems and civil infrastructure.

Table 3 lists and maps the machine learning methods used in the robot inspection of mechanical systems and infrastructure that have been reviewed in this paper. Also, following on from the performance evaluation metrics discussed in Section 4 of this paper, Table 3 provides us with performance evaluation figures and results of the machine learning techniques deployed to process and analyze data collected by RAS platforms for civil mechanical infrastructure in reviewed papers.

6 Technology gaps and challenges

This section reviews technology gaps and challenges in the application of machine learning techniques for robotic inspection of mechanical systems and civil infrastructure.

6.1 Challenges of small object detection for deep learning techniques

Object detection of small (perhaps even undetectable to the human eye) damage mechanisms on mechanical and civil infrastructure has invaluable application to industries ranging from aerospace (for detecting cracks on aircraft) to energy and utility (for detecting erosion or corrosion). A small object has been defined by [106, 107] as a 32 × 32 pixels object within an image. Current literature acknowledges that while object detection of medium to large size objects in image data is now a proven technology, accurate detection of small objects has not yet been mastered and it remains a challenge for researchers [106, 108–111].

The reasons for this research gap are a result of several realities and constraints of current state of the art object detection technology. The first is that small objects are challenging to detect because high-level resolution-based feature maps, that are characteristic of CNN architectures, used to identify large objects in images, do not support the identification of small objects in images. This is because small objects in images are mostly in low resolution. The second is due to currently limited context data; there is significantly less pixels associated with small objects, resulting in little to nothing for the detection algorithms to identify. Furthermore, there is a class imbalance in the datasets that are currently being used to train deep learning models. Current image datasets usually comprise of large to medium sized objects, this results in an imbalance in the groups of object sizes in images available to deep learning models for training.

There is a gap in the research and development of deep learning techniques or models that could provide the higher precision required for accurate localization for small objects in images; as well as an on-going race between researchers in the improvement of current object detection deep learning algorithms for small objects in image datasets [106, 108–111].

6.2 Evaluating accuracy and performance of machine learning techniques

Following this paper’s review of the methods and metrics used in academic literature to evaluate the performance of machine learning methods, techniques and models when trained on datasets to output values, interpretations, or predictions; this section briefly reviews critics of these metrics.

There are arguments in literature that contend that the current methods (such as the confusion matrix, accuracy, precision, MAP, RSME and quantile error) used for evaluating the performance and utility of machine learning techniques when applied as solutions to extract or analyze data, et al., can only be understood and applied by subject matter experts in statistics, computer science, artificial intelligence (AI), etc. [78, 112].

Both Shen et al. [112] and Beauxis-Aussalet et al. [78] contended that non-subject matter experts do not always have the background knowledge to understand terminology such as true negative (TN) or false positive (FP), that form part of the underlying metric framework for evaluating machine learning techniques. Furthermore, Shen et al.’s [112] research found that non-experts found it challenging to both use and relate some of the evaluation metrics back to the problem that the techniques are being applied to solve. Beauxis-Aussalet et al. [78] underscored the fact that some of the evaluation metrics that exist can even be misunderstood, misinterpreted, or even deployed incorrectly to case studies by non-subject matter experts [78]. It is therefore a contention in literature that there is a gap or requirement for more accessible methods for evaluating the performance of machine learning techniques, that can be understood and used by both subject matter experts in AI and their lay colleagues.

6.3 Machine learning challenges with unstructured data