Abstract

Autonomous driving has attracted significant research interests in the past two decades as it offers many potential benefits, including releasing drivers from exhausting driving and mitigating traffic congestion, among others. Despite promising progress, lane-changing remains a great challenge for autonomous vehicles (AV), especially in mixed and dynamic traffic scenarios. Recently, reinforcement learning (RL) has been widely explored for lane-changing decision makings in AVs with encouraging results demonstrated. However, the majority of those studies are focused on a single-vehicle setting, and lane-changing in the context of multiple AVs coexisting with human-driven vehicles (HDVs) have received scarce attention. In this paper, we formulate the lane-changing decision-making of multiple AVs in a mixed-traffic highway environment as a multi-agent reinforcement learning (MARL) problem, where each AV makes lane-changing decisions based on the motions of both neighboring AVs and HDVs. Specifically, a multi-agent advantage actor-critic (MA2C) method is proposed with a novel local reward design and a parameter sharing scheme. In particular, a multi-objective reward function is designed to incorporate fuel efficiency, driving comfort, and the safety of autonomous driving. A comprehensive experimental study is made that our proposed MARL framework consistently outperforms several state-of-the-art benchmarks in terms of efficiency, safety, and driver comfort.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Autonomous driving has received significant research interest in the past two decades due to its many potential societal and economical benefits. Compared to traditional vehicles, autonomous vehicles (AVs) not only promise fewer emissions [1] but are also expected to improve safety and efficiency. However, there exists a huge challenge in the task of high-level decision-making in AVs due to the complex and dynamic traffic environment, especially in mixed traffic co-existing with other road users. Lane changing is one of the largest challenges in the high-level decision-making of AVs, which has significant influences on traffic safety and efficiency [2, 3].

The considered lane-changing scenario is illustrated in Fig. 1, where AVs and HDVs co-exist on a one-way highway with two lanes. The AVs aim to safely travel through the traffic while making necessary lane changes to overtake slow-moving vehicles for improved efficiency. Furthermore, in the presence of multiple AVs, the AVs are expected to collaboratively learn a policy to adapt to HDVs and enable safe and efficient lane changes. As HDVs bring unknown/uncertain behaviors, planning, and control in such mixed traffic to realize safe and efficient maneuvers is a challenging task [4].

Recently, reinforcement learning (RL) has emerged as a promising framework for autonomous driving due to its online adaptation capabilities and the ability to solve complex problems [5, 6]. Several recent studies have explored the use of RL in AV lane-changing [4, 7, 8], which consider a single AV setting where the ego vehicle learns a lane-changing behavior by taking all other vehicles as part of the driving environment for decision making. While this single-agent approach is completely scalable, it will lead to unsatisfactory performance in the complex environment like multi-AV lane-changing in mixed traffic that requires close collaboration and coordination among AVs [9].

On the other hand, multi-agent reinforcement learning (MARL) has been greatly advanced and successfully applied to a variety of complex multi-agent systems such as games [10], traffic light control [11] and fleet management [12]. The MARL algorithms have also been applied to autonomous driving [13–16], with the objective of accomplishing autonomous driving tasks cooperatively and reacting timely to HDVs. In particular, the MARL methods [15, 17] have been applied to highway lane change tasks and show promising and scalable performance, in which AVs learn cooperatively via sharing the same objective (i.e., reward/cost function) that considers safety and efficiency. However, those reward designs often ignore the passengers’ comfort, which may lead to sudden acceleration and deceleration that can cause ride discomfort. In addition, they assume that the HDVs follow unchanged, universal human driver behaviors, which is clearly oversimplified and impractical in the real world as different human drivers tend to behave quite differently. Learning algorithms should thus work with different human driving behaviors, e.g., aggressive or mild behaviors.

To address the above issues, we develop a multi-agent reinforcement learning algorithm by employing a multi-agent advantage actor-critic network (MA2C) for multi-AV lane-changing decision making, featuring a novel local reward design that incorporates the safety, efficiency, and passenger comfort as well as a parameter sharing scheme to foster inter-agent collaborations. The main contributions and the technical advancements of this paper are summarized as follows.

-

1.

We formulate the multi-AV highway lane changing in the mixed traffic as a decentralized cooperative MARL problem, where agents cooperatively learn a safe and efficient driving policy.

-

2.

We develop a novel, efficient, and scalable multi-agent advantage actor-critic network model, by introducing a parameter-sharing mechanism and effective reward function design.

-

3.

We conduct a comprehensive empirical study on three different traffic densities and two levels of drivers’ behavior modes and compare with other state-of-the-art models to demonstrate the driving safety, efficiency, and driver comfort of our models.

The rest of the paper is organized as follows. Section 2 reviews the state-of-the-art dynamics-based and RL/MARL algorithms for autonomous driving tasks. The preliminaries of RL and the proposed MARL algorithm are introduced in Sect. 3. Experiments, results, and discussions are presented in Sect. 4. Finally, we summarize the paper and discuss future work in Sect. 5.

2 Related work

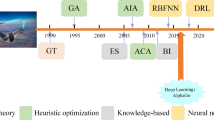

In this section, we survey the existing literature on decision-making tasks in autonomous driving, which can be mainly classified into two categories: non-data-driven and data-driven methods.

2.1 Non-data-driven methods

Conventional rule-based or model-based approaches [18–20] rely on hard-coded rules or dynamical models to construct predefined logic mechanisms to determine the behaviors of ego vehicles under different situations. For instance, lane changing guidance can be realized by establishing virtual trajectory references for every vehicle, and a safe trajectory is then planned by considering the trajectories of other vehicles [18]. In the previous literature [19], a low-complexity lane-changing algorithm was developed by following heuristic rules such as keeping appropriate inter-vehicle traffic gaps and time instances to perform the maneuver. After that, an optimization-based lane change approach was proposed [20], which formulates the trajectory planning problem as coupled longitudinal and lateral predictive control problems and is then solved via Quadratic Programs under specific system constraints. However, the rules and optimization criteria for real-world driving problems may become too complex to be explicitly formulated for all scenarios. Such a problem is more serious in mixed-traffic scenarios with unknown or stochastic behaviors of drivers.

2.2 Data-driven methods

Recently, data-driven methods, such as reinforcement learning (RL), have received great attention and have been widely explored for autonomous driving tasks. Particularly, a model-free RL approach based on deep deterministic policy gradient (DDPG) was proposed in the recent literature [5] to learn a continuous control policy efficient lane changing. Afterward, a safe RL framework [4] was presented by integrating a lane-changing regret model into a safety supervisor based on an extended double deep Q-network (DDQN). In the significant literature [21], a hierarchical RL algorithm was developed to learn lane-changing behaviors in dense traffic by applying the designed temporal and spatial attention strategies, and promising performance is demonstrated in the open racing car simulator (TORCS) under various lane change scenarios. However, the aforementioned methods are designed for the single-agent (i.e., one ego vehicle) scenarios, treating all other vehicles as part of the environment, which makes them implausible for the considered multi-agent lane-changing setting where collaboration and coordination among AVs are required.

On the other hand, multi-agent reinforcement learning (MARL) algorithms have also been explored for autonomous driving tasks [13, 14, 22, 23]. An MARL algorithm with hard-coded safety constraints [13] was proposed to solve the double-merge problem. In such a framework, a hierarchical temporal abstraction method was applied to reduce the effective horizon and the variance of the gradient estimation error. In the recent literature [23], an MARL algorithm was delivered to solve the on-ramp merging problem with safety enhancement by a novel priority-based safety supervisor. In addition, a novel MARL approach [22] was realized with the combination of Graphic Convolution Neural Network (GCN) [24] and Deep Q Network (DQN) [25] to better fuse the acquired information from collaborative sensing, showing promising results on a 3-lane freeway containing 2 off-ramps highway environment. While these MARL algorithms only consider the efficiency, and safety in their designed reward function, another important factor, the passenger comfort, is not considered in their reward function design. Furthermore, those approaches assume the HDVs follow a constant, universal driving behavior, which has limited implications for real-world applications as different human drivers may behave totally differently.

In this paper, we formulate the decision-making of multiple AVs on highway lane changing as an MARL problem, where a multi-objective reward function is proposed to simultaneously promote safety, efficiency, and passenger comfort. A parameter-sharing scheme is exploited to foster inter-agent collaborations. Experimental results on three different traffic densities with two levels of driver aggressiveness show the proposed MARL performs well on different lane change scenarios.

3 Problem formulation

In this section, we review the preliminaries of RL and formulate the considered highway lane-changing problem as a partially observable Markov decision process (POMDP). Then we present the proposed multi-agent actor-critic algorithm, featuring a parameter-sharing mechanism and efficient reward function design, to solve the formulated POMDP.

3.1 Preliminary of RL

In the standard RL setting, the agent aims to learn an optimal policy \(\pi ^{*}\) to maximize the accumulated future reward \(R_{t} = \sum_{k=0}^{T} \gamma ^{k} r_{t+k}\) from the time step t with discount factor \(\gamma \in (0,1]\) by continuous interacting with the environment. Especially, at time step t, the agent receives a state \(s_{t} \in \mathcal{R}^{n}\) from the environment and selects an action \(a_{t} \in \mathcal{A}^{m}\) according to its policy \(\pi :\,\mathcal{S}\rightarrow \Pr (\mathcal{A})\). As a result, the agent receives the next state \(s_{t+1}\) and receives a scalar reward \(r_{t}\). If the agent can only observe a part of the state \(s_{t}\), the underlying dynamics becomes a POMDP [26] and the goal is then to learn a policy that maps from the partial observation to an appropriate action to maximize the rewards.

The action-value function \(Q^{\pi }(s, a) = E[R_{t}{\mid }{s=s_{t}}, a]\) is defined as the expected return obtained by selecting an action a in state \(s_{t}\) and following policy π afterwards. The optimal Q-function is given by \(Q^{*}(s,a) = \max_{\pi } Q^{\pi }(s,a)\) for state s and action a. Similarly, the state-value function is defined as \(V^{\pi }(s_{t}) = E_{\pi }{[R_{t}{\mid }{s=s_{t}}]}\) representing the expected return for following the policy π from state \(s_{t}\). In model-free RL methods, the policy is often represented by a neural network denoted as \(\pi _{\theta }(a_{t}{\mid }s_{t})\), where θ is the learnable parameters. In actor-critic (A2C) algorithms [27], a critic network, parameterized by ω, learns the state-value function \(V_{\omega }^{\pi _{\theta }}(s_{t})\) and an actor network \(\pi _{\theta }(a_{t}{\mid }s_{t})\) parameterized by θ is applied to update the policy distribution in the direction suggested by the critic network as follows:

where the advantage function \(A_{t}= Q^{\pi _{\theta }}(s,a) - V_{\omega }^{\pi _{\theta }}(s_{t})\) [27] is introduced to reduce the sample variance. The parameters of the state-value function are then updated by minimizing the following loss function:

where \(\mathcal{B}\) is the experience replay buffer that stores previously encountered trajectories and \(\omega '\) denotes the parameters of the target network [25].

3.2 Lane changing as MARL

In this subsection, we develop a decentralized, MARL-based approach for highway lane-changing of multiple AVs. Discontinuous evaluation is a very common way to design in the autonomous driving field, which is widely used by many papers [28–32]. In particular, we model the mixed-traffic lane-changing environment as a multi-agent network: \(\mathcal{G} = (\nu , \varepsilon )\), where each agent (i.e., ego vehicle) \(i \in \nu \) communicates with its neighbors \(\mathcal{N}_{i}\) via the communication link \(\varepsilon _{ij}\in \varepsilon \). The corresponding POMDP is characterized as \((\{\mathcal{A}_{i}, \mathcal{O}_{i}, \mathcal{R}_{i}\}_{i\subseteq \nu }, \mathcal{T})\), where \(\mathcal{O}_{i} \in \mathcal{S}_{i}\) is the partial description of the environment state as stated in [33]. In a multi-agent POMDP, each agent i follows a decentralized policy \(\pi _{i}: \mathcal{O}_{i} \times \mathcal{S}_{i} \rightarrow [0, 1]\) to choose the action \(a_{t}\) at time step t. The described POMDP is defined as:

-

1.

State Space: The state space \(\mathcal{O}_{i}\) of Agent i is defined as a matrix \(\mathcal{N}_{N_{i}}\times \mathcal{F}\), where \(\mathcal{N}_{N_{i}}\) is the number of detected vehicles, and \(\mathcal{F}\) is the number of features, which is used to represent the current state of vehicles. It includes the longitudinal position x, the lateral position y of the observed vehicle relative to the ego vehicle, the longitudinal speed \(v_{x}\), and the lateral speed \(v_{y}\) of the observed vehicle relative to the ego vehicle.

-

2.

Action Space: The action space \(\mathcal{A}_{i}\) of agent i is defined as a set of high-level control decisions, including speed up, slow down, cruising, turn left, and turn right. The action space combination for AVs is defined as \(\mathcal{A}=\mathcal{A}_{1}\times \mathcal{A}_{2}\times \cdots \times \mathcal{A}_{N}\), where N is the total number of vehicles in the scene.

-

3.

Reward Function: Multiple metrics including safety, traffic efficiency, and passenger’s comfort are considered in the reward function design:

-

safety evaluation \(r_{s}\): The vehicle should operate without collisions.

-

headway evaluation \(r_{d}\): The vehicle should maintain a safe distance from the preceding vehicles during driving to avoid collisions.

-

speed evaluation \(r_{v}\): Under the premise of ensuring safety, the vehicle is expected to drive at a high and stable speed.

-

driving comfort \(r_{c}\): Smooth acceleration and deceleration are expected to ensure safety and comfort. In addition, frequent lane changes should be avoided.

As such, a multi-objective reward \(r_{i,t}\) at the time step t is defined as:

$$ r_{i,t}=\omega _{s}r_{s}+ \omega _{d}r_{d}+\omega _{v}r_{v}- \omega _{c}r_{c}, $$(3)where \(\omega _{s}\), \(\omega _{d}\), \(\omega _{v}\) and \(\omega _{c}\) are the weighting coefficients. We set the safety factor \(\omega _{s}\) to a large value, because safety is the most important criterion during driving. The details of the four performance measurements are discussed next:

-

(1)

If there is no collision, the collision evaluation \(r_{s}\) is set to 0, otherwise, \(r_{s}\) is set as −1.

-

(2)

The headway evaluation is defined as

$$ r_{d}=\log \frac{d_{\text{headway}}}{v_{t}t_{d}}, $$(4)where \(d_{\text{headway}}\) is the distance to the preceding vehicle, and \(v_{t}\) and \(t_{d}\) are the current vehicle speed and time headway threshold, respectively.

-

(3)

The speed evaluation \(r_{v}\) is defined as

$$ r_{v}=\min \biggl\{ \frac{v_{t}-v_{\min }}{v_{\max }-v_{\min }},1 \biggr\} , $$(5)where \(v_{t}\), \(v_{\min }\) and \(v_{\max }\) are the current, minimum, and maximum speeds of the ego vehicle, respectively. Within the specified speed range, higher speed is preferred to improve the driving efficiency.

-

(4)

The driving comfort \(r_{c}\) is defined as

$$ r_{c}=r_{a}+r_{lc}, $$(6)where

$$ r_{a}=\textstyle\begin{cases} -1,& \vert a_{t} \vert \geq a_{th}, \\ 0,& \vert a_{t} \vert < a_{th} \end{cases} $$is the penalty term of rapid acceleration and deceleration than a given threshold \(a_{th}\). Here \(a_{t}\) presents the acceleration at time t.

$$ r_{lc}=\textstyle\begin{cases} -1,&\text{change lane},\\ 0,&\text{keep lane} \end{cases} $$is defined as the lane change penalty. Excessive lane changes can cause discomfort and safety issues. Note that this lane-changing penalty term is to avoid frequent, unnecessary lane changes while necessary lane changes (i.e., to maintain safety and efficiency) are still promoted through the safety and speed evaluation terms.

-

-

4.

Transition Probability: The transition probability \(T(s^{,}\mid s,a)\) characterizes the transition from one state to another. Since our MARL algorithm is a model-free design, we do not assume any prior knowledge about transition probability.

3.3 MA2C for AVs

In this paper, we extend the actor-critic network [27] to the multi-agent setting as a multi-agent actor-critic network (i.e., MA2C). MA2C improves the stability and scalability of the learning process by allowing certain communication among agents [33]. To take the advantage of homogeneous agents in the considered MARL setting, we assume all the agents share the same network structure and parameters, while they are still able to make different maneuvers according to different input states. The goal in cooperative MARL setting is to maximize the global reward of all the agents. To overcome the communication overhead and the credit assignment problem [34], we adopt the local reward design [23] as follows:

where \(\mid \nu _{i} \mid \) denotes the cardinality of a set containing the ego vehicle and its close neighbors. Compared to the global reward design previously used in [22, 35], the designed local reward design mitigates the impact of remote agents.

The backbone of the proposed MA2C network is shown in Fig. 2, in which states separated by physical units are first processed by separate 64-neuron fully connected (FC) layers. Then all hidden units are combined and fed into the 128-neuron FC layer. Then the shared actor-critic network will update the policy and value networks with the extracted features. As mentioned in the recent literature [23], the adopted parameter sharing scheme [12] between the actor and value networks can greatly improve the learning efficiency.

The architecture of the proposed MA2C network with shared actor-critic network design, where x and y are the longitudinal and lateral position of the observed vehicle relative to the ego vehicle, and \(v_{x}\) and \(v_{y}\) are the longitudinal and lateral speed of the observed vehicle relative to the ego vehicle

The pseudo-code of the proposed MA2C algorithm is shown in Algorithm 1. The hyperparameters include the (time)-discount factor γ, the learning rate η, the politeness coefficient p and the epoch length T. Specifically, the agent receives the observation \(O_{i,t}\) from the environment and updates the action by its policy (Lines 3-6). After each episode is completed, the network parameters are updated accordingly (Lines 9-11). If an episode is completed or a collision occurs, the “DONE” signal is released and the environment will be reset to its initial state to start a new epoch (Lines 13-14).

4 Experiments and discussion

In this section, we evaluate the performance of the proposed MARL algorithm in terms of training efficiency, safety, and driving comfort in the considered highway lane changing scenario shown in Fig. 1.

4.1 HDV models

In this experiment, we assume that the longitudinal control of HDVs follows the Intelligent Driver Model (IDM) [36], which is a deterministic continuous-time model describing the dynamics of the position and speed of each vehicle. It takes into account the expected speed, distance between the vehicles and the behavior of the acceleration/deceleration process caused by the different driving habits. In addition, Minimize Overall Braking Induced By Lane Change model (MOBIL) [37] is adopted for the lateral control. It takes vehicle acceleration as the input variable of the model and can work well with most car-following models. The acceleration expression is defined as follows:

where \(\tilde{a}_{n}\) is the acceleration of the new follower after the lane change, and \(b_{\text{safe}}\) is the maximum braking imposed to the new follower. If the inequality in Eqn. (8) is satisfied, the ego vehicle is able to change lanes. The incentive condition is defined as:

where a and ã are the accelerations of the ego vehicle before and after the lane change, respectively, \(\Delta a_{th}\) is the threshold that determines whether to trigger the lane change or not, and p is a politeness coefficient that controls how much effect we want to take into account for the followers, where \(p=1\) represents the most considerate drivers whose decision on change lanes may give way to the following blocked vehicles whereas \(p=0\) characterizes the most aggressive drivers where the HDV makes selfish lane-changing decisions by only considering their own speed gains and ignoring other vehicles. The performance evaluation of different p values is discussed in Sect. 4.3.3.

4.2 Experimental settings

The simulation environment is modified from the gym-based highway-env simulator [38]. We set the highway road length to 520 m, and the vehicles beyond the road are ignored. The vehicles are randomly spawned on the highway with different initial speeds 25–30 m/s (56 mph–67 mph). The vehicle control sampling frequency is set as the default value of 5 Hz. The motions of HDVs follow the IDM and MOBIL model, where the maximum deceleration for safety purposes is limited by \(b_{\text{safe}}=-9~\text{m/s}^{2}\), politeness factor p is 0, and the lane-changing threshold \(\Delta a_{th}\) is set as 0.1 m/s2. To evaluate the effectiveness of the proposed methods, three traffic density levels are employed, which correspond to low, middle, high levels of traffic congestion, respectively. The number of vehicles in different traffic modes is shown in Table 1.

We train the MARL algorithms for 1 million steps (10,000 epochs) by applying two different random seeds and the same random seed is shared among agents. We evaluate each model 3 times every 200 training episodes. The parameters γ and learning rate η are set as 0.99 and \(5 \times 10^{-4}\), respectively. The weighting coefficients in the reward function are set as \(\omega _{s}=200\), \(\omega _{d}=4\), \(\omega _{v}=1\) and \(\omega _{c}=1\), respectively. These experiments are conducted on a macOS server with a 2.7 GHz Intel Core i5 processor and 8 GB of memory.

4.3 Results & analysis

4.3.1 Local v.s. Global reward designs

Figure 3 shows the performance comparison between the proposed local reward and the global reward design [22, 35] (with shared actor-critic parameters). In all three traffic modes, the local reward design consistently outperforms the global reward design in terms of larger evaluation rewards and smaller variance. Although the global reward design outperforms the local reward design before 2000 epochs, the variance of the global reward is relatively large. In addition, the performance gaps are enlarged as the number of vehicles increases. This is due to the fact that the global reward design is more likely to cause credit assignment issues as mentioned in the significant monograph [34].

4.3.2 Sharing v.s. Separate actor-critic network

Figure 4 shows the performance comparison between strategies with or without sharing the actor-critic network parameters during training. Obviously, sharing an actor-critic network has better performance than without sharing. Specifically, sharing actor-critic parameters in all three modes results in higher rewards and lower variance. The reason is that, in separate actor-critic networks, the critic network can only guide the actor network to the correct training direction until the critic network is well-trained which may take a long time to achieve. In contrast, the actor network can benefit from the shared state representation via the critic network in a shared actor-critic network [23, 39].

4.3.3 Verification of driving comfort

In this subsection, we evaluate the effectiveness of the proposed multi-objective reward function with the driving comfort in Eqn. (3). Figure 5 shows the acceleration and deceleration of the AV with or without the comfort measurement defined in Eqn. (6). It is clear that the proposed reward design with the comfort measurement has a low variance (average deviation: 0.455 m/s2) and is more smooth than the reward design without comfort term (average deviation: 0.582 m/s2), which shows the proposed reward design presents good driving comfort.

4.3.4 Adaptability of the proposed method

In this subsection, we evaluate the proposed MA2C under different HDV behaviors, which is controlled by the politeness coefficient p denoted in Eqn. (9), in which \(p=0\) means the most aggressive behavior while \(p=1\) represents the most polite behavior. Figure 6 shows the training performance of two different HDV models (i.e., aggressive or politeness) under different traffic densities. It is clear that the proposed algorithm achieves scalable and stable performance whenever the HDVs take aggressive or courteous behaviors.

4.3.5 Comparison with the state-of-the-art benchmarks

In order to demonstrate the performance of the proposed MARL approach, we compared it with several state-of-the-art MARL methods:

-

1.

Multi-agent Deep Q-Network (MADQN) [40]: This is the multi-agent version of Deep Q-Network (DQN) [25], which is an off-policy RL method by applying a deep neural network to approximate the value function and an experience replay buffer to break the correlations between samples to stabilize the training.

-

2.

Multi-agent actor-critic using Kronecker-Factored Trust Region (MAACKTR): This is the multi-agent version of actor-critic using Kronecker-Factored Trust Region (ACKTR) [41], which is an on-policy RL algorithm by optimizing both the actor and the critic using Kronecker-factored approximate curvature (K-FAC) with trust region.

-

3.

Multi-agent Proximal Policy Optimization (MAPPO) [42]: This is a multi-agent version of Proximal Policy Optimization (PPO) [43], which improves the trust region policy optimization (TRPO) [44] by using a clipped surrogate objective and adaptive KL penalty coefficient.

-

4.

The Proposed MA2C: This is our proposed method with the designed multi-objective reward function design, parameter sharing, and local reward design schemes.

Table 2 shows the average return for the MARL algorithms during the evaluation. Obviously, the proposed MA2C algorithm shows the best performance under the density 1 scenario than other MARL algorithms. It also shows promising results on the density 2 and density 3 scenarios and outperforms MAACKTR and MAPPO algorithms. Note that even though MADQN shows a better average reward than the MA2C algorithm, it shows larger reward deviations which may cause unstable training and safety issues. MADQN depends on the current state during calculation, and cannot draw specific control strategies, and is not suitable for complex control with large state gaps. Indeed, MA2C appears more robust performances, which shows a very clear increasing and plateauing tendency. Similarly, the evaluation curves during the training process are shown in Fig. 7. As expected, the proposed MA2C algorithm outperforms other benchmarks in terms of evaluation reward and reward standard deviations.

4.3.6 Policy interpretation

In this subsection, we attempt to interpret the learned AVs’ behavior. Figure 8 shows the snapshots during testing at time steps 20, 28, and 40. As shown in Fig. 8(a), ego vehicle ➅ attempts to make a lane change to achieve a higher speed. To make a safe lane change, ego vehicle ➅ and ego vehicle ➆ are expected to work cooperatively. Specially, the ego vehicle ➆ should slow down to make space for the ego vehicle ➅ to avoid collisions, which is also represented in Fig. 9, where the ego vehicle ➆ starts to slow down at about 20-time steps. Then the ego vehicle ➅ begins to speed up to make the lane change as shown in Fig. 8(b) and Fig. 9. Meanwhile, the ego vehicle ➆ continues to slow down to ensure a safe headway distance with ego vehicle ➅ as shown in Fig. 9. Figure 8(c) shows the completed lane changes, at which time the ego vehicle ➆ starts to speed up. This demonstration shows the proposed MARL framework learns a reasonable and cooperative policy for ego vehicles.

5 Conclusion

In this paper, we formulated the highway lane-changing problem in mixed traffic as an on-policy MARL problem, and extended the A2C into the multi-agent setting, featuring a novel local reward design and parameter sharing schemes. Specifically, a multi-objective reward function is proposed to simultaneously promote the driving efficiency, comfort, and safety of autonomous driving. Comprehensive experimental results, conducted on three different traffic densities under different levels of HDV aggressiveness, show that our proposed MARL framework consistently outperforms several state-of-the-art benchmarks in terms of efficiency, safety, and driver comfort.

Availability of data and materials

Not applicable.

References

B. Paden, M. Cáp, S.Z. Yong, D.S. Yershov, E. Frazzoli, A survey of motion planning and control techniques for self-driving urban vehicles. IEEE Trans. Intell. Veh. 1(1), 33–55 (2016)

D. Desiraju, T. Chantem, K. Heaslip, Minimizing the disruption of traffic flow of automated vehicles during lane changes. IEEE Trans. Intell. Transp. Syst. 16(3), 1249–1258 (2015)

T. Li, J. Wu, C.-Y. Chan, M. Liu, C. Zhu, W. Lu, K. Hu, A cooperative lane change model for connected and automated vehicles. IEEE Access 8, 54940–54951 (2020)

D. Chen, L. Jiang, Y. Wang, Z. Li, Autonomous driving using safe reinforcement learning by incorporating a regret-based human lane-changing decision model, in American Control Conference (ACC) (2020), pp. 4355–4361

P. Wang, H. Li, C.-Y. Chan, Continuous control for automated lane change behavior based on deep deterministic policy gradient algorithm, in IEEE Intelligent Vehicles Symposium (IV) (2019), pp. 1454–1460

C. Xi, T. Shi, Y. Wu, L. Sun, Efficient motion planning for automated lane change based on imitation learning and mixed-integer optimization, in 23rd International Conference on Intelligent Transportation Systems (ITSC) (2020), pp. 1–6

G. Wang, J. Hu, Z. Li, L. Li, Harmonious lane changing via deep reinforcement learning. IEEE Trans. Intell. Transp. Syst. PP(99), 1–9 (2021)

R. Du, S. Chen, Y. Li, J. Dong, P.Y.J. Ha, S. Labi, A cooperative control framework for CAV lane change in a mixed traffic environment (2020). CoRR. arXiv:2010.05439

C.-J. Hoel, K. Wolff, L. Laine, Automated speed and lane change decision making using deep reinforcement learning, in 21st International Conference on Intelligent Transportation Systems (ITSC), ed. by W.-B. Zhang, A.M. Bayen, J.J. Sánchez Medina, M.J. Barth (2018), pp. 2148–2155

O. Vinyals, I. Babuschkin, W.M. Czarnecki, M. Mathieu, A. Dudzik, J. Chung, D.H. Choi, R. Powell, T. Ewalds, P. Georgiev et al., Grandmaster level in starcraft ii using multi-agent reinforcement learning. Nature 575(7782), 350–354 (2019)

T. Chu, J. Wang, L. Codecà, Z. Li, Multi-agent deep reinforcement learning for large-scale traffic signal control. IEEE Trans. Intell. Transp. Syst. 21(3), 1086–1095 (2020)

K. Lin, R. Zhao, Z. Xu, J. Zhou, Efficient large-scale fleet management via multi-agent deep reinforcement learning, in Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining (2018), pp. 1774–1783

S. Shalev-Shwartz, Shaked Shammah, and Amnon Shashua. Safe, multi-agent, reinforcement learning for autonomous driving (2016). CoRR. arXiv:1610.03295

J. Wang, T. Shi, Y. Wu, L. Miranda-Moreno, L. Sun, Multi-agent graph reinforcement learning for connected automated driving, in Proceedings of the 37th International Conference on Machine Learning (ICML) (2020), pp. 1–6

P. Young Joun Ha, S. Chen, J. Dong, R. Du, Y. Li, S. Labi, Leveraging the capabilities of connected and autonomous vehicles and multi-agent reinforcement learning to mitigate highway bottleneck congestion (2020). CoRR. arXiv:2010.05436

P. Palanisamy, Multi-agent connected autonomous driving using deep reinforcement learning, in International Joint Conference on Neural Networks (IJCNN) (2020), pp. 1–7

S. Chen, J. Dong, P. Ha, Y. Li, S. Labi, Graph neural network and reinforcement learning for multi-agent cooperative control of connected autonomous vehicles. Comput.-Aided Civ. Infrastruct. Eng. 36(7), 838–857 (2021)

M.L. Ho, P.T. Chan, A.B. Rad, Lane change algorithm for autonomous vehicles via virtual curvature method. J. Adv. Transp. 43(1), 47–70 (2009)

J. Nilsson, J. Silvlin, M. Brannstrom, E. Coelingh, J. Fredriksson, If, when, and how to perform lane change maneuvers on highways. IEEE Intell. Transp. Syst. Mag. 8(4), 68–78 (2016)

J. Nilsson, M. Brännström, E. Coelingh, J. Fredriksson, Lane change maneuvers for automated vehicles. IEEE Intell. Transp. Syst. Mag. 18(5), 1087–1096 (2016)

Y. Chen, C. Dong, P. Palanisamy, P. Mudalige, K. Muelling, J.M. Dolan, Attention-based hierarchical deep reinforcement learning for lane change behaviors in autonomous driving, in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops (2019), pp. 137–145

J. Dong, S. Chen, P. Young Joun Ha, Y. Li, S. Labi, A drl-based multiagent cooperative control framework for CAV networks: a graphic convolution Q network (2020). CoRR. arXiv:2010.05437

D. Chen, Z. Li, Y. Wang, L. Jiang, Y. Wang, Deep multi-agent reinforcement learning for highway on-ramp merging in mixed traffic (2021). CoRR. arXiv:2105.05701

T.N. Kipf, M. Welling, Semi-supervised classification with graph convolutional networks, in 5th International Conference on Learning Representations (ICLR) (2017)

V. Mnih, K. Kavukcuoglu, D. Silver, A. Graves, I. Antonoglou, D. Wierstra, M.A. Riedmiller, Playing atari with deep reinforcement learning (2013). CoRR. arXiv:1312.5602

M.T. Spaan, Partially observable Markov decision processes, in Reinforcement Learning (Springer, Berlin, 2012), pp. 387–414

V. Mnih, A.P. Badia, M. Mirza, A. Graves, T. Lillicrap, T. Harley, D. Silver, K. Kavukcuoglu, Asynchronous methods for deep reinforcement learning, in International Conference on Machine Learning (ICML) (2016), pp. 1928–1937

M. Kaushik, N. Singhania, K.M. Krishna, Parameter sharing reinforcement learning architecture for multi agent driving behaviors (2018). CoRR. arXiv:1811.07214

L. Schester, L.E. Ortiz, Longitudinal position control for highway on-ramp merging: a multi-agent approach to automated driving, in 22nd IEEE Intelligent Transportation Systems Conference (ITSC) (2019), pp. 3461–3468

P. Palanisamy, Multi-agent connected autonomous driving using deep reinforcement learning, in International Joint Conference on Neural Networks (IJCNN) (2020), pp. 1–7

P. Wang, H. Li, C.-Y. Chan, Continuous control for automated lane change behavior based on deep deterministic policy gradient algorithm, in IEEE Intelligent Vehicles Symposium (IV) (2019), pp. 1454–1460

A. Mavrogiannis, R. Chandra, D. Manocha, B-GAP: behavior-guided action prediction for autonomous navigation (2020). CoRR. arXiv:2011.03748

T. Chu, S. Chinchali, S. Katti, Multi-agent reinforcement learning for networked system control, in 8th International Conference on Learning Representations (ICLR) (2020), pp. 1–17

R.S. Sutton, A.G. Barto, Reinforcement Learning: An Introduction (MIT press, Cambridge, 2018)

M. Kaushik, S. Phaniteja, K.M. Krishna, Parameter sharing reinforcement learning architecture for multi agent driving behaviors (2018). CoRR. arXiv:1811.07214

M. Treiber, A. Hennecke, D. Helbing, Congested traffic states in empirical observations and microscopic simulations. Phys. Rev. E. 62, 1805–1824 (2000)

A. Kesting, M. Treiber, D. Helbing, Connectivity statistics of store-and-forward intervehicle communication. IEEE Trans. Intell. Transp. Syst. 11(1), 172–181 (2010)

E. Leurent, An environment for autonomous driving decision-making (2018). https://github.com/eleurent/highway-env

L. Graesser, W.L. Keng, Foundations of Deep Reinforcement Learning: Theory and Practice in Python (Addison-Wesley, Reading, 2019)

G. Ji, J. Yan, J. Du, W. Yan, J. Chen, Y. Lu, J. Rojas, S.S. Cheng, Towards safe control of continuum manipulator using shielded multiagent reinforcement learning. IEEE Robot. Autom. Lett. 6(4), 7461–7468 (2021)

Y. Wu, E. Mansimov, R.B. Grosse, S. Liao, J. Ba, Scalable trust-region method for deep reinforcement learning using Kronecker-factored approximation, in Isabelle Guyon, Ulrike Von Luxburg, Samy Bengio, ed. by H.M. Wallach, R. Fergus, S.V.N. Vishwanathan, R. Garnett. Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems (NeurIPS) (2017), pp. 5279–5288

J. Schulman, F. Wolski, P. Dhariwal, A. Radford, O. Klimov, Proximal policy optimization algorithms (2017). CoRR. arXiv:1707.06347

J. Schulman, F. Wolski, P. Dhariwal, A. Radford, O. Klimov, Proximal policy optimization algorithms (2017). CoRR. arXiv:1707.06347

J. Schulman, S. Levine, P. Abbeel, M. Jordan, P. Moritz, Trust region policy optimization, in International Conference on Machine Learning (ICML) (2015), pp. 1889–1897

Acknowledgements

The authors are grateful for the efforts of our colleagues in the Sino-German Center of Intelligent Systems, Tongji University. We are grateful for the suggestions on our manuscript from Dr. Qi Deng.

Funding

Jun Yan is supported by the National Natural Science Foundation of China under Grant No. 61701348 hosted by Pro. Huilin Yin. Jun Yan, Prof. Huilin Yin, and Prof. Wancheng Ge are grateful for the generous support of TÜV SÜD. Wei Zhou is supported by the DAAD scholarship for a dual-degree program between Tongji University and Technical University of Munich.

Author information

Authors and Affiliations

Contributions

WZ, DC, JY, and ZL participated in the framework design and manuscript writing, and WZ implemented experiments inspired by the encouragement and guidance of DC. HY helped revise the manuscript. WG is the master supervisor of WZ in Tongji University which provides the opportunity to complete the research work. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Code availability

Not applicable.

Wei Zhou and Dong Chen contributed equally to this work.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhou, W., Chen, D., Yan, J. et al. Multi-agent reinforcement learning for cooperative lane changing of connected and autonomous vehicles in mixed traffic. Auton. Intell. Syst. 2, 5 (2022). https://doi.org/10.1007/s43684-022-00023-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s43684-022-00023-5