Abstract

Unmanned aerial vehicles (UAVs) have become significantly important in air combat, where intelligent swarms of UAVs will be able to tackle tasks of high complexity and dynamics. The key to giving UAVs this capability is autonomous maneuver decision making. In this paper, an autonomous maneuver strategy for UAV swarms in beyond-visual-range air combat based on reinforcement learning is proposed. First, based on the process of air combat and the constraints of the swarm, the motion model of the UAV and the multi-to-one air combat model are established. Second, a two-stage maneuver strategy based on air combat principles is designed, which includes inter-vehicle collaboration and target-vehicle confrontation. Then, a swarm air combat algorithm based on the deep deterministic policy gradient (DDPG) is proposed for online strategy training. Finally, the effectiveness of the proposed algorithm is validated by multi-scene simulations. The results show that the algorithm is suitable for UAV swarms of different scales.

1 Introduction

Unmanned aerial vehicles (UAVs), with their low cost, strong mobility, high concealment and no need for an onboard pilot, are increasingly used in place of manned aircraft to perform military tasks such as detection, monitoring and target strike, and are a typical representative of “non-contact” combat equipment [1]. Because the mission and combat capability of a single UAV are limited, swarming and intelligent unmanned combat have become research hotspots in recent years. With the increase of the operating range of airborne detection equipment, the scope of modern air combat has gradually developed from within visual range to beyond visual range [2]. Swarm beyond-visual-range air combat involves situation assessment [3–5], environment awareness [6, 7] and maneuver strategy [8] of UAVs through sensing or detection equipment, and maneuver strategy is the basis of the above tasks.

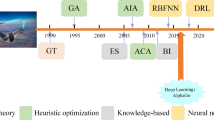

The existing air combat maneuver strategies can be divided into two categories: rule-based strategies and learning-based strategies. Rule-based strategies select actions according to given behavior rules in air combat and do not need online training or optimization. They include the matrix game algorithm [9, 10], the expert system [11], the influence diagram method [12, 13], the differential game method [14], etc. The matrix game method tends to give a poor overall strategy. On the one hand, the score function used in this method is difficult to design and cannot accurately describe actual air combat. On the other hand, this method suffers from reward delay in sequential decision making and therefore lacks the ability of long-term planning. Expert experience can hardly cover all air combat situations, so it is very complex to establish the rule base and constraint conditions of an expert system. Moreover, UAVs cannot make decisions independently with the expert system method: when a UAV cannot find an appropriate strategy in the rule base, human intervention must be introduced. In [15] and [16], the multi-level influence diagram realizes multi-to-one air combat, but it is only suitable for small-scale UAV swarms. Moreover, the influence diagram method relies on prior knowledge, and its reasoning process is cumbersome, so it cannot meet the requirements of real-time and highly dynamic air combat. The two methods introduced in [14] cannot be applied to air combat scenes without a model or with incomplete environment information, because they need to accurately model and describe the strategy model. In short, rule-based strategies are monotonous and rigid; they cannot adapt to complex and highly dynamic air combat scenarios and cannot meet the requirements of intelligent operations.

Learning-based strategies optimize the model and structural parameters by means of online learning or training data. They mainly include the artificial immune system, genetic algorithm, heuristic learning algorithm, neural network method, deep reinforcement learning (DRL), etc. The artificial immune system [17] uses training data to enable the UAV’s maneuver system to deal with different air combat situations, but its convergence is slow. The fuzzy tree proposed in [18] is too complex to be used in beyond-visual-range air combat. According to the characteristics of beyond-visual-range air combat, the University of Cincinnati has built the “alpha” intelligent air combat system [19], which uses input data of more than 150 dimensions in a two-to-four air combat scene. The data dimension will increase further if the control of airborne sensors is considered in the future. Therefore, the processing and application of high-dimensional massive data are the main difficulty in multi-UAV autonomous air combat.

Deep learning (DL) performs well in data processing [20–23], and reinforcement learning (RL) is widely used in autonomous maneuvering [24–26]. With the successful application of deep reinforcement learning (DRL) to complex sequential decision making problems such as Alpha-Go [27] and Alpha-Go Zero [28], it has become possible to solve air combat maneuver strategy problems by using reinforcement learning. Reinforcement learning is a learning method that uses “trial and error” to interact with the environment [29], which makes it a feasible method for autonomous decision making in UAV intelligent air combat maneuver strategy. The application of reinforcement learning in air combat is mainly based on value function search [30, 31] and strategy search [32, 33]. The deep Q network (DQN) algorithm is improved in [32] to realize close-range one-to-one UAV air combat, but the algorithm uses discrete state and motion spaces, which makes the results of air combat quite different from reality. The Actor-Critic (A-C) framework is used in [34] to realize a continuous expression of the UAV maneuver strategy in state space, but the algorithm is only effective in two-dimensional space. In [35], the deep deterministic policy gradient (DDPG) is applied to air combat decision making. However, the design of the reward and punishment function is relatively simple, and it is difficult for agents to learn complex tactics. In general, the above studies improve the effect and ability of air combat maneuver strategy algorithms to a certain extent, but ignore the generalization ability of the algorithm. That is, the existing research on UAV air combat based on reinforcement learning mainly focuses on specific scenarios. Whether for one-to-one air combat or multi-UAV cooperation, the number of UAVs on both sides must be fixed, so compatibility is poor.

To sum up, the strategy of swarm autonomous maneuver in a dynamic environment is an urgent problem to be solved. Because the number of UAVs constantly changes during combat, the amount of information on both sides changes dynamically. The maneuver module should therefore have good compatibility, that is, it should be suitable for swarms of different sizes.

In this paper, an autonomous maneuver strategy for swarm combat in beyond-visual-range air combat based on reinforcement learning is proposed. With this strategy, the autonomous maneuver decision and cooperative operation of UAVs in a swarm are realized, and the scalability of the algorithm is improved. The main contributions are as follows. First, the overall framework of the autonomous maneuver strategy for a UAV swarm is designed. Second, the swarm beyond-visual-range air combat model is established, the target entry angle and target azimuth are defined, and the situation assessment function is designed. Third, a two-stage maneuver decision strategy is proposed to avoid dimension explosion. Two maneuver actions are designed in the first stage to realize rapid collision avoidance and good communication in the swarm. Multi-to-one air combat is transformed into one-to-one air combat in the second stage, which avoids dealing directly with high-dimensional swarm information. Fourth, based on the basic principle of reinforcement learning and the requirements of swarm control, the Actor-Critic network framework is designed, the state space and action strategy are given, and a distance-based reward function is designed to realize rapid convergence of the algorithm. Fifth, based on the memory bank and target network, an algorithm is designed and the A-C network is trained to obtain an autonomous cooperative maneuver strategy for the UAV swarm. Simulation experiments verify that the algorithm can be applied to UAV swarms of different scales.

The rest of this paper is organized as follows: Section 2 gives the algorithm design framework and establishes the air combat model. Section 3 designs the swarm combat maneuver decision and gives the algorithm flow of swarm air combat based on DDPG. Section 4 conducts the simulation analysis. Section 5 concludes the paper.

2 Swarm air combat model

2.1 Overall framework

The process of UAV swarm combat includes three modules [36]: environment awareness, situation assessment and maneuver decision, as shown in Fig. 1. Each UAV obtains the current battlefield information through the environment awareness module via data links or airborne sensors, which mainly includes the states of the enemy target and other UAVs. Through the situation assessment module, each UAV assesses its current situation, such as “advantageous” or “disadvantageous”, based on which the decision on how to maneuver at the next time step is made. The three modules form a closed loop, which ultimately achieves inter-aircraft cooperation and the air combat tasks.

2.2 Air combat model

In this paper, a multi-to-one air combat is studied as shown in Fig. 2, where multiple UAVs are deployed to monitor and attack one enemy target. In the ground coordinate system \(Oxyz\) shown in Fig. 3, the \(Ox\) axis points east, the \(Oy\) axis north, and the \(Oz\) axis vertically. Denote the \(i\)-th UAV by \(\mathrm{UAV}_i\) and its position at time \(t\) by \(P_{i,t}\), where \(t\) is the discrete time index under a fixed sampling period \(T\). The way-point motion model of the UAVs is given by

\[P_{i,t+1} = P_{i,t} + v_{\mathrm{U}_{i},t}, \qquad (1)\]

where \(v_{\mathrm{U}_{i},t}\) is the vector from \(P_{i,t}\) to \(P_{i,t+1}\), which is seen as the control input to be designed in the following parts. The position of the target is denoted by \(P_{T,t}\).

Remark 1

In general, the actual position \(P_{i,t+1}\) at time \(t+1\) may not be exactly equal to \(P_{i,t} + v_{\mathrm{U}_{i},t}\) due to flight disturbances or model uncertainties, even if \(v_{\mathrm{U}_{i},t}\) is within the physical control limitations. Minimizing the error \(\left\|P_{i,t+1}^{*}-P_{i,t+1}\right\|\) given an approximate UAV flight model is a classical control problem that can be solved by conventional PID or robust control methods, where \(P_{i,t+1}^{*}=P_{i,t} + v_{\mathrm{U}_{i},t}\) is the desired next-time position. This problem is not the focus of our research, and in this paper it is assumed that \(P_{i,t}=P_{i,t}^{*}\) holds at all times. The control limitations will be considered in practice to make \(v_{\mathrm{U}_{i},t}\) applicable to a specific type of UAV.

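In the same coordinate system, a multi-to-one air combat model is established as shown in Fig. 4. Denote by \(\varphi_{\mathrm{U}_{i},t}\) the angle between the vectors \(P_{T,t}-P_{i,t}\) and \(v_{\mathrm{U}_{i},t}\), which is named the target azimuth, and similarly by \(\varphi_{T,t}\) the angle between the vectors \(P_{i,t}-P_{T,t}\) and \(v_{T,t}\), which is named the target entry angle. They can be computed in real time as follows, where “\(\top\)” denotes the transpose operation:

\[\varphi_{\mathrm{U}_{i},t} = \arccos \frac{(P_{T,t}-P_{i,t})^{\top} v_{\mathrm{U}_{i},t}}{\left\|P_{T,t}-P_{i,t}\right\| \left\|v_{\mathrm{U}_{i},t}\right\|}, \qquad (2)\]

\[\varphi_{T,t} = \arccos \frac{(P_{i,t}-P_{T,t})^{\top} v_{T,t}}{\left\|P_{i,t}-P_{T,t}\right\| \left\|v_{T,t}\right\|}. \qquad (3)\]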

In the situation assessment module, we use the situation assessment function to evaluate the real-time situation of each UAV. Based on the attack model [34] and evaluation function [37], the effective attack range of a UAV in air combat is a cone with its axis in the direction of \(v_{\mathrm{U}_{i},t}\) and an angle of \(\varphi_m\), truncated by a ball of radius \(R_W\) as shown in Fig. 4, where \(R_W\) represents the attack range of the weapons. Similarly, we can define the cone-shaped attack range of the target. Heuristically, if \(\mathrm{UAV}_i\) is in the attack range of the target, \(\mathrm{UAV}_i\) is said to be in the “disadvantageous” situation; if the target is in the attack range of \(\mathrm{UAV}_i\) while \(\mathrm{UAV}_i\) is not in the attack range of the target, \(\mathrm{UAV}_i\) is said to be in the “advantageous” situation; otherwise, \(\mathrm{UAV}_i\) is said to be in the “balance” situation. The three situation assessment results are denoted by “-1”, “1” and “0” respectively, which are defined as follows,

where \(R_{iT,t}=\left\|P_{i,t}-P_{T,t}\right\|\).
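The angle definitions and the three-valued assessment described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the paper's situation assessment function: it assumes that \(\varphi_m\) is measured from the cone axis, that the range and angle tests are non-strict, and that positions are distinct and velocities nonzero. The names `angle_between` and `situation` are hypothetical.

```python
import numpy as np

def angle_between(u, v):
    """Angle in radians, in [0, pi], between two nonzero vectors."""
    u, v = np.asarray(u, float), np.asarray(v, float)
    cos = u @ v / (np.linalg.norm(u) * np.linalg.norm(v))
    return float(np.arccos(np.clip(cos, -1.0, 1.0)))

def situation(p_i, v_i, p_t, v_t, phi_m, r_w):
    """Return -1 (disadvantageous), 1 (advantageous) or 0 (balance) for one UAV.

    Assumption: a point lies in an attack cone when it is within range r_w
    and within angle phi_m of the attacker's velocity direction.
    """
    p_i, p_t = np.asarray(p_i, float), np.asarray(p_t, float)
    r_it = np.linalg.norm(p_i - p_t)        # distance UAV-target
    phi_u = angle_between(p_t - p_i, v_i)   # target azimuth
    phi_t = angle_between(p_i - p_t, v_t)   # target entry angle
    in_range = r_it <= r_w
    if in_range and phi_t <= phi_m:         # UAV is inside the target's cone
        return -1
    if in_range and phi_u <= phi_m:         # target is inside the UAV's cone
        return 1
    return 0

# UAV directly behind a target that flies away from it.
k = situation([0, 0, 0], [1, 0, 0], [0.3, 0, 0], [1, 0, 0],
              phi_m=np.pi / 6, r_w=0.5)
```

Checking the target's cone first reproduces the priority in the text: a UAV inside the target's attack range is rated disadvantageous even if the target is also inside its own cone.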

3 Maneuver strategy optimization based on deep reinforcement learning

3.1 Maneuver strategy for air combat

A two-stage maneuver decision strategy is proposed in this paper. In the first stage, each UAV only considers the interactions between vehicles in the swarm by checking the stable communication and safety constraints. If all the constraints are satisfied, then the UAV moves to the second stage of maneuver decision, in which the UAV only considers the situation between the target and itself. In the following part, we will discuss the two stages separately.

In the first stage, we consider two basic principles [37]: 1) UAVs should keep a safe maneuver distance to avoid inter-vehicle collisions; 2) UAVs should stay in a closed ball to ensure stable communication. Thus, we define two maneuver actions: separating and gathering.

Separating

If the distance between two UAVs is less than a predefined range \(d_{\min}\), there is a potential threat of collision. That is, if \(\left\|P_{j,t}-P_{i,t}\right\|<d_{\min}\), we set

where \(\lambda_1\) and \(\lambda_2\) are positive weights, which are selected such that \((\lambda_1\left\|P_{i,t}-P_{j,t}\right\|)/(\lambda_2\left\|v_{i,t-1}\right\|)\) is small and \(\left\|v_{i,t}\right\|\) satisfies the speed limitations.

Gathering

The swarm should be constrained in a ball of a predefined radius \(R_m\). For each \(\mathrm{UAV}_i\), if the condition of separating is not satisfied, it computes the center of the ball as the average of all UAV positions excluding its own, i.e.,

\[P_{ci,t} = \frac{1}{n-1} \sum_{j \ne i} P_{j,t}, \qquad (6)\]

where \(n\) is the total number of UAVs. If the distance between \(\mathrm{UAV}_i\) and \(P_{ci,t}\) is greater than \(R_m\), it needs to approach the center by making a small maneuver. Thus, we set

where \(\lambda_3\) and \(\lambda_4\) are positive weights, and their selection rules are similar to those for \(\lambda_1\) and \(\lambda_2\).
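A rough sketch of the first-stage check is given below. The update laws for separating and gathering are not reproduced in this text, so the linear forms used here (a weighted sum of a repulsive or attractive offset and the previous velocity) are assumptions suggested only by the weight-ratio condition above. The sketch also assumes that gathering is triggered when a UAV is farther than \(R_m\) from the center of the other UAVs. The function name and default weights are hypothetical.

```python
import numpy as np

def first_stage_velocity(i, positions, v_prev, d_min, r_m,
                         lam=(0.1, 1.0, 0.1, 1.0)):
    """Return a stage-one velocity for UAV i, or None if no constraint is active.

    Assumed forms (not taken from the paper):
      separating: lam1 * (P_i - P_j) + lam2 * v_prev
      gathering:  lam3 * (P_c - P_i) + lam4 * v_prev
    Requires at least two UAVs.
    """
    positions = np.asarray(positions, float)
    v_prev = np.asarray(v_prev, float)
    lam1, lam2, lam3, lam4 = lam
    p_i = positions[i]
    others = np.delete(positions, i, axis=0)

    # Separating: react to the nearest neighbour if it is closer than d_min.
    dists = np.linalg.norm(others - p_i, axis=1)
    j = int(np.argmin(dists))
    if dists[j] < d_min:
        return lam1 * (p_i - others[j]) + lam2 * v_prev

    # Gathering: the center is the mean position of all other UAVs.
    center = others.mean(axis=0)
    if np.linalg.norm(p_i - center) > r_m:
        return lam3 * (center - p_i) + lam4 * v_prev

    return None  # both constraints hold: continue with the second stage

swarm = [[0.0, 0.0, 0.0], [0.5, 0.0, 0.0], [5.0, 0.0, 0.0]]
v_sep = first_stage_velocity(0, swarm, [0.0, 1.0, 0.0], d_min=1.0, r_m=10.0)
```

Returning `None` when neither condition fires keeps the hand-over to the second stage explicit in the caller.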

If the UAV does not need to execute the first-stage maneuver actions, it enters the second stage. In the second stage, we consider two principles for combat against the target: 1) the UAV should escape from the attack range of the target if the situation is disadvantageous; 2) the UAV should approach the target if the situation is advantageous. Swarm air combat is converted into one-to-one air combat in this stage; we design a DDPG algorithm to realize it, which is explained in detail in the following section.

3.2 One-to-one Maneuver decision algorithm design

A reinforcement learning algorithm obtains the optimal action strategy \(\pi^{*}\) by finding the optimal action value function [38].

where \(\gamma\) is the discount factor, which controls the proportion of future rewards in the cumulative reward. Equation (9) finds the optimal strategy in each state by table lookup, so it is only suitable for discrete spaces. In order to solve the autonomous decision making problem in a continuous air combat environment, we add neural networks and use the DDPG algorithm to realize continuous motion control of the UAV.

DDPG uses an Actor-Critic network to fit the action strategy \(\pi\) and the action value \(Q_{\pi}\), with parameters \(\theta^{\mu}\) and \(\theta^{Q}\) respectively. The Actor network generates the maneuver strategy \(\pi(s,a,\theta^{\mu})\), the Critic network outputs the action value \(Q_{\pi}(s,a,\theta^{Q})\) to evaluate \(\pi(s,a,\theta^{\mu})\), and \(\pi(s,a,\theta^{\mu})\) is optimized by optimizing \(Q_{\pi}(s,a,\theta^{Q})\).

Network Structure

The input \(s_t\) of the Actor network is the vector from \(P_{i,t}\) to \(P_{T,t}\), and the output \(O_a\) is the desired velocity of the UAV, \(v_{\mathrm{U}_{i},t}^{*}\).

\(O_a\) and \(s_t\) are used as the inputs of the Critic network, and \(O_c\) is the evaluation of \(O_a\). The DDPG network structure is shown in Fig. 5, and the parameters are given in the simulation part.
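To make the input and output shapes concrete, the following NumPy sketch runs one forward pass through a small Actor and Critic. It is only an illustration under stated assumptions: the hidden sizes 400 and 800 are borrowed from the simulation setup, while the layer arrangement, the ReLU and tanh activations, the concatenation of state and action at the Critic input, and the bound `V_MAX` are guesses that the text does not confirm. The networks are untrained.

```python
import numpy as np

rng = np.random.default_rng(0)

def init_mlp(sizes):
    """Create (weight, bias) pairs for consecutive layer sizes."""
    return [(rng.normal(0.0, 1.0 / np.sqrt(m), (m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def forward(params, x, out_act=None):
    """Fully connected forward pass with ReLU on the hidden layers."""
    for k, (w, b) in enumerate(params):
        x = x @ w + b
        if k < len(params) - 1:
            x = np.maximum(x, 0.0)
    return out_act(x) if out_act else x

STATE_DIM = ACTION_DIM = 3   # s_t and the velocity command are 3-D vectors
V_MAX = 1.0                  # hypothetical bound on each velocity component

actor = init_mlp([STATE_DIM, 400, 800, ACTION_DIM])
critic = init_mlp([STATE_DIM + ACTION_DIM, 800, 400, 1])

def actor_out(s):
    """O_a: bounded velocity command for state s."""
    return V_MAX * forward(actor, s, np.tanh)

def critic_out(s, a):
    """O_c: scalar evaluation of action a in state s."""
    return forward(critic, np.concatenate([s, a], axis=-1))

s_t = np.array([3.0, -1.0, 0.5])   # vector from the UAV to the target
o_a = actor_out(s_t)
o_c = critic_out(s_t, o_a)
```

Because the state is only the relative vector to the target, its dimension does not grow with the number of UAVs, which is what lets the same trained network be reused by every member of the swarm.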

One-to-one Maneuver Design

Maneuver \(a_t\) realizes swarm air combat by controlling \(v_{\mathrm{U}_{i},t}\), which is generated by the Actor network:

In order to realize the agent’s exploration of the environment and the optimization of \(a_t\), the noise \(N\) generated by an Ornstein–Uhlenbeck (OU) process [39] is added to \(O_a\) to synthesize \(a_{t}^{\prime}\), which participates in network training. \(N\) and \(a_{t}^{\prime}\) are given by (13) and (14).

where \(\theta\) and \(\sigma\) are weights, \(\mu\) is the mean value, and \(W\) is Gaussian noise. \(\xi\) is an exploration coefficient that decreases as the number of training rounds increases. The OU process can make exploration more efficient.
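The exploration step can be sketched as follows, using the usual discrete-time form of an OU process with unit time step. The values of `theta`, `sigma`, the initial coefficient `xi0` and the geometric decay are illustrative assumptions, not settings reported in this paper.

```python
import numpy as np

class OUNoise:
    """Discrete-time Ornstein-Uhlenbeck process (unit time step assumed)."""

    def __init__(self, dim, mu=0.0, theta=0.15, sigma=0.2, seed=0):
        self.mu = mu * np.ones(dim)
        self.theta = theta
        self.sigma = sigma
        self.rng = np.random.default_rng(seed)
        self.state = self.mu.copy()

    def sample(self):
        # Drift pulls the state back to mu; the Gaussian term W perturbs it.
        w = self.rng.standard_normal(self.state.shape)
        self.state = (self.state
                      + self.theta * (self.mu - self.state)
                      + self.sigma * w)
        return self.state

def explore(o_a, noise, episode, xi0=1.0, decay=0.995):
    """Add OU noise scaled by a coefficient xi that shrinks every episode."""
    xi = xi0 * decay ** episode
    return o_a + xi * noise.sample()

noise = OUNoise(dim=3)
a_prime = explore(np.zeros(3), noise, episode=0)
```

Successive OU samples are correlated in time, so the perturbed velocity commands drift smoothly instead of jittering, which suits a vehicle with inertia.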

Reward Function

The reward function designed in this paper is as follows:

When the UAV approaches \(P_{T,t}\), that is, \(\left\|s_{t+1}\right\| < \left\|s_{t}\right\|\), a positive reward is given; otherwise a penalty proportional to the distance is given. Using (15) as the reward function, regardless of the initial positions of the UAVs and the enemy, the UAVs will eventually fly to the rear of the target to attack it.
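The verbal rule above can be written as a short function. The constants are placeholders: the size of the positive reward `r_pos` and the penalty slope `k` are assumptions, since the exact reward expression is not reproduced in this text.

```python
import numpy as np

def distance_reward(s_t, s_next, r_pos=1.0, k=0.1):
    """Distance-based reward for one step.

    s_t and s_next are the UAV-to-target vectors before and after the move.
    r_pos and k are hypothetical constants chosen for illustration.
    """
    d_t = np.linalg.norm(s_t)
    d_next = np.linalg.norm(s_next)
    if d_next < d_t:
        return r_pos           # the UAV moved closer to the target
    return -k * d_next         # penalty grows with the remaining distance

closer = distance_reward([3.0, 0.0, 0.0], [2.0, 0.0, 0.0])
farther = distance_reward([2.0, 0.0, 0.0], [4.0, 0.0, 0.0])
```

A dense reward of this kind gives the agent feedback at every step, which is consistent with the fast convergence the authors aim for.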

The algorithm flow is shown in Algorithm 1. According to \(P_{i,t}\) and \(P_{T,t}\), the current state \(s_t\) is determined. The online Actor network generates \(O_a\) according to the network parameter \(\theta^{\mu}\). The OU process generates \(N\), which is added to \(O_a\) to synthesize the action \(a_t\). The UAV moves to the next state and gets a reward \(r_t\). The tuple \((s_t,a_t,r_t,s_{t+1})\) is stored in the memory bank. The UAV repeats the above steps many times to collect a large number of samples. When the number of stored samples meets the requirement, a batch of samples \(\{(s_i,a_i,r_i,s_{i+1})_m \mid m=1,2,\ldots,M\}\) is randomly selected from the memory bank to train the A-C network and to calculate the target value \(y_i\).

where \(\gamma\) is the decay rate, with the same meaning as in (12), \(O^{\prime}_{a}(s_{i+1};\theta^{\mu^{\prime}})\) is the output of the target Actor network, representing the target action, \(Q^{\prime}(s_{i+1},O^{\prime}_{a}(s_{i+1};\theta^{\mu^{\prime}});\theta^{Q^{\prime}})\) is the output of the target Critic network, representing the expected value of taking action \(O^{\prime}_{a}(s_{i+1})\) in state \(s_{i+1}\), and the parameters of the target Actor and Critic networks are \(\theta^{\mu^{\prime}}\) and \(\theta^{Q^{\prime}}\). Update target network parameters:

Gradient descent is used to minimize (17) and optimize the parameters of the Critic network. (18) and (19) are the loss function and the parameter optimization equation of the Actor network. The parameters of the neural network are updated by (20). DDPG uses a soft update to change the network parameters: each time, the parameters are updated only slightly, which makes the learning process more stable. As the number of training rounds increases, the agent’s maneuver selection in different states tends to the optimum.
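The bookkeeping around the gradient steps (memory bank, target values, Critic loss and soft update) can be sketched without a deep learning framework. This follows the standard DDPG recipe rather than the exact equations of this paper; the class and function names, the buffer capacity, `gamma` and `tau` are illustrative assumptions, and terminal-state handling is omitted.

```python
import random
from collections import deque

import numpy as np

class MemoryBank:
    """Fixed-size store of (s, a, r, s_next) transitions."""

    def __init__(self, capacity, seed=0):
        self.buffer = deque(maxlen=capacity)   # oldest samples drop out first
        self.rng = random.Random(seed)

    def store(self, s, a, r, s_next):
        self.buffer.append((s, a, r, s_next))

    def ready(self, batch_size):
        return len(self.buffer) >= batch_size

    def sample(self, batch_size):
        return self.rng.sample(list(self.buffer), batch_size)

def td_targets(r, q_next, gamma=0.99):
    """y_i = r_i + gamma * Q'(s_{i+1}, O'_a(s_{i+1}))."""
    return np.asarray(r, float) + gamma * np.asarray(q_next, float)

def critic_loss(y, q):
    """Mean squared error between target values and Critic outputs."""
    return float(np.mean((np.asarray(y, float) - np.asarray(q, float)) ** 2))

def soft_update(target_params, online_params, tau=0.01):
    """Move each target parameter a small step toward its online counterpart."""
    return [tau * w + (1.0 - tau) * w_t
            for w_t, w in zip(target_params, online_params)]

bank = MemoryBank(capacity=3)
for step in range(5):
    bank.store(step, 0.0, 1.0, step + 1)
batch = bank.sample(2)
```

With a small `tau` the target networks lag behind the online networks, so the target values change slowly between updates, which is the stabilizing effect described above.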

3.3 Multi-to-one Maneuver strategy design

When (17) is close to 0 or no longer changes noticeably, training should be stopped. After the trained neural network is saved, the one-to-one air combat maneuver decision can be obtained. In the following part, we use the DDPG algorithm and the two maneuver actions to realize swarm multi-to-one air combat, and the process is given in Algorithm 2.

In Algorithm 2, \(R_{ij,t}\), \(P_{T,t}\), \(K_{i,t}\) and \(P_{ci,t}\) are used to determine whether the UAV needs to execute “separating” or “gathering”. If so, \(v_{\mathrm{U}_{i},t}\) is obtained through (5) and (7); otherwise, Algorithm 1 is entered to obtain \(v_{\mathrm{U}_{i},t}\).

Limited by the UAV’s maneuverability, the \(v_{\mathrm{U}_{i},t}\) given by the two maneuver actions or the neural network may be unreachable for the UAV. Assume that the angle between \(v_{\mathrm{U}_{i},t}\) and \(v_{\mathrm{U}_{i},t-1}\) is \(\phi\), and that the maximum turning angle of the UAV is \(\alpha\) as shown in Fig. 3. The maximum speed of the UAV is \(V_{\max}\), and the minimum speed is \(V_{\min}\). The actual \(v_{\mathrm{U}_{i},t}\) is as shown in (22). Then we use (1) to get \(P_{i,t+1}\).

When \(\phi \le \alpha\), \(v_{\mathrm{U}_{i},t}\) is given by (21) and (22) to meet \(V_{\min} \le \left\|v_{\mathrm{U}_{i},t}\right\| \le V_{\max}\). When \(\phi > \alpha\), \(v_{\mathrm{U}_{i},t+1}\) is calculated by (22) and (23), where \(\beta\) is the complement of the angle between \(v_{\mathrm{U}_{i},t}\) and \(v_{\mathrm{U}_{i},t}-v_{\mathrm{U}_{i},t-1}\).
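One way to enforce the speed and turning limits is sketched below. It is not the paper's construction, which works with the angle \(\beta\): instead, this sketch clamps the commanded speed to \([V_{\min}, V_{\max}]\) and, when the commanded turn exceeds \(\alpha\), rotates the previous heading toward the command by exactly \(\alpha\) in their common plane. It assumes 3-D vectors and a nonzero previous velocity; the function name is hypothetical.

```python
import numpy as np

def limit_velocity(v_cmd, v_prev, v_min, v_max, alpha):
    """Clamp speed to [v_min, v_max] and the turn from v_prev to at most alpha."""
    v_cmd = np.asarray(v_cmd, float)
    v_prev = np.asarray(v_prev, float)
    n_cmd = np.linalg.norm(v_cmd)
    speed = float(np.clip(n_cmd, v_min, v_max))
    d_prev = v_prev / np.linalg.norm(v_prev)
    d_cmd = v_cmd / n_cmd if n_cmd > 0 else d_prev
    phi = np.arccos(np.clip(d_prev @ d_cmd, -1.0, 1.0))
    if phi <= alpha:
        return speed * d_cmd                    # direction is reachable

    # Component of the command perpendicular to the previous heading.
    ortho = d_cmd - (d_cmd @ d_prev) * d_prev
    if np.linalg.norm(ortho) < 1e-12:
        # Command is exactly opposite: any perpendicular direction will do.
        helper = np.eye(3)[np.argmin(np.abs(d_prev))]
        ortho = np.cross(d_prev, helper)
    ortho = ortho / np.linalg.norm(ortho)
    # Rotate the previous heading by alpha toward the commanded direction.
    return speed * (np.cos(alpha) * d_prev + np.sin(alpha) * ortho)

v_new = limit_velocity([0.0, 2.0, 0.0], [1.0, 0.0, 0.0],
                       v_min=0.5, v_max=1.0, alpha=np.pi / 3)
```

In the usage line a 90° turn at twice the maximum speed is requested, and the result is a unit-speed velocity turned by 60° from the previous heading.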

4 Simulation

4.1 Simulation setup

The default parameters of this simulation experiment are shown in Table 1. The velocity of our UAV and of the target refers to the displacement per unit step time in the simulation system. The enemy plane moves in a straight line or along a curve at a constant speed, while our UAV moves at a variable speed determined by the output of the neural network. The positions of our UAVs are initialized randomly. The structure of the A-C network is shown in Fig. 5. The Actor network consists of two layers with 400 and 800 nodes. In the Critic network, the common layer that receives the input information has 800 nodes, and the other layers have 400. After unit conversion, a real combat environment of 50 km × 50 km × 50 km is simulated. The maximum turning angle of the UAV is 60°. The maximum speed is 180 km/h. The maximum communication radius is 20 km, and the maximum attack distance is 500 m.

To test the effectiveness of the proposed algorithm, we define the following three metrics: compatibility, \(\varphi_{\mathrm{U}_{i},t}\) and \(R_{iT,t}\). Compatibility refers to whether the algorithm can be extended from one-to-one air combat to multi-to-one combat; \(\varphi_{\mathrm{U}_{i},t}\) measures the change of \(K_{iT,t}\) and the rationality of the UAV’s maneuvering in air combat; \(R_{iT,t}\) reflects whether the algorithm converges.

4.2 Simulation results

The UAV fixed-point arrival capability is simulated and verified, as shown in Fig. 6. Given the target point, whether in a single-UAV or a multi-aircraft environment, our aircraft can independently plan a path to reach the target point through the DDPG algorithm.

For a dynamic target, the combat effect of a single UAV is verified first, with the enemy plane moving in uniform straight-line or circular motion. As shown in Fig. 7, our UAV can monitor the position of the target in real time and accurately track it. The change of the distance and angle between the UAV and the target is shown in Fig. 8.

In the early stage of the simulation, the angle between the enemy and the UAV is obtuse, so the UAV is in an inferior situation. The UAV is far away from the enemy and quickly adjusts its azimuth, so its situation changes from inferior to advantageous. Then the UAV constantly approaches the target and adjusts the azimuth angle in real time to keep it within the maximum attack angle. Finally, the UAV can lock the target for more than 2 s to complete the tracking and attacking of the target.

Next, the effect of multi-aircraft cooperative combat is verified, with the target again in uniform linear or circular motion. The results of swarm cooperative combat are shown in Figs. 9 and 10.

Compared with Fig. 8, because factors such as gathering and separating must be considered in the swarm, the UAVs cannot all approach the target point at the same time, so their distances to the target vary. In the process of tracking, each UAV constantly adjusts its azimuth angle, and the azimuth angles of the aircraft close to the target are kept within the maximum attack range, showing a dominant situation. It can also be seen from Fig. 10 that all UAVs are kept within the communication range from the swarm center.

In the above simulation process, each simulation step corresponds to one decision, which is converted to 1 s of the real environment. Therefore, the simulation steps on the abscissa in Figs. 8 and 10 can be regarded as time.

5 Conclusion

A maneuver strategy based on the DDPG algorithm is proposed to realize UAV swarm combat. Based on the swarm framework, the air combat model and behavior set are designed for UAVs to realize autonomous decision making. According to the characteristics of the DDPG algorithm and the task requirements, the distance between the UAV and the target point and the velocity of the UAV are taken as the input and output of the Actor network, and the reward function is constructed from the relative distance to train the neural network parameters, so that the network converges quickly. A visual simulation environment is built to verify the application effect of the algorithm. The results show that the swarm maneuver strategy based on deep reinforcement learning can complete the attack on the target on the premise of clear tasks. Whether the target moves in a straight line or along a curve, the strategy performs well in simulation: our UAVs can lock the target within the attack range and hold it for a certain period of time, which gives the strategy a certain practical value.

6 Nomenclature

DDPG: Deep Deterministic Policy Gradient; DL: Deep Learning; DRL: Deep Reinforcement Learning; RL: Reinforcement Learning; UAV: Unmanned Aerial Vehicle

Availability of data and materials

Not applicable.

Code availability

Not applicable.

References

Y. Li, X. Qiu, X. Liu, Q. Xia, Deep reinforcement learning and its application in autonomous fitting optimization for attack areas of UCAVs. J. Syst. Eng. Electron. 31(4), 734–742 (2020).

D. Hu, R. Yang, J. Zuo, Z. Zhang, Y. Wang, Application of deep reinforcement learning in maneuver planning of beyond-visual-range air combat. IEEE Access. PP(99), 1–1 (2021).

A. Xu, X. Chen, Z. W. Li, X. D. Hu, A method of situation assessment for beyond-visual-range air combat based on tactical attack area. Fire Control Command Control. 45(9), 97–102 (2020).

Z. H. Hu, Y. Lv, A. Xu, A threat assessment method for beyond-visual-range air combat based on situation prediction. Electron. Opt. Control. 27(3), 8–1226 (2020).

W. H. Wu, S. Y. Zhou, L. Gao, J. T. Liu, Improvements of situation assessment for beyond-visual-range air combat based on missile launching envelope analysis. Syst. Eng. Electron. 33(12), 2679–2685 (2011).

H. Luo, Target detection method in short coherent integration time for sky wave over-the-horizon radar. Sadhana. 45(1) (2020).

T. Liu, R. W. Mei, in Proceedings of 2019 International Conference on Computer Science, Communications and Multimedia Engineering (CSCME 2019), Shanghai, China. Over-the-horizon radar impulsive interference detection with pseudo-MUSIC algorithm, (2019). Computer Science and Engineering (ISSN 2475-8841).

H. Wu, H. Li, R. Xiao, J. Liu, Modeling and simulation of dynamic ant colony’s labor division for task allocation of UAV swarm. Phys. A Stat. Mech. Appl., 0378437117308166 (2017). https://doi.org/10.1016/j.physa.2017.08.094.

F. Austin, G. Carbone, H. Hinz, M. Lewis, M. Falco, Game theory for automated maneuvering during air-to-air combat. J. Guid. Control Dyn. 13(6), 1143–1149 (1990).

J. S. Ha, H. J. Chae, H. L. Choi, A stochastic game-theoretic approach for analysis of multiple cooperative air combat. Am. Autom. Control Counc., 3728–3733 (2015). https://doi.org/10.1109/acc.2015.7171909.

R. P. Wang, Z. H. Gao, Research on decision system in air combat simulation using maneuver library. Flight Dyn. 27(6), 72–75 (2009).

V. Kai, T. Raivio, R. P. Hämäläinen, Modeling pilot’s sequential maneuvering decisions by a multistage influence diagram. J. Guidance Control Dyn. 27(4), 665–677 (2004).

K. Virtanen, J. Karelahti, T. Raivio, Modeling air combat by a moving horizon influence diagram game. J. Guidance Control Dyn. 29(5), 5 (2004).

H. Ehtamo, T. Raivio, On applied nonlinear and bilevel programming for pursuit-evasion games. J. Optim. Theory Appl. 108(1), 65–96 (2001).

L. Zhong, M. Tong, W. Zhong, Application of multistage influence diagram game theory for multiple cooperative air combat. J. Beijing Univ. Aeronaut. Astronaut. 33(4), 450–453 (2007).

Z. Liu, A. Liang, C. Jiang, Q. X. Wu, Application of multistage influence diagram in maneuver decision-making of UCAV cooperative combat. Electron. Opt. Control. 33(4), 450–453 (2010).

J. Kaneshige, K. Krishnakumar, in Proceedings of SPIE - The International Society for Optical Engineering, 6560:656009. Artificial immune system approach for air combat maneuvering, (2007).

N. Ernest, D. Carroll, C. Schumacher, M. Clark, G. Lee, Genetic fuzzy based artificial intelligence for unmanned combat aerial vehicle control in simulated air combat missions. J. Defense Manag. 06(1) (2016).

N. Ernest, D. Carroll, C. Schumacher, M. Clark, G. Lee, Genetic fuzzy based artificial intelligence for unmanned combat aerial vehicle control in simulated air combat missions. J. Defense Manag. 06(1), 1–7 (2016).

L. Fallati, A. Polidori, C. Salvatore, L. Saponari, A. Savini, P. Galli, Anthropogenic marine debris assessment with unmanned aerial vehicle imagery and deep learning: A case study along the beaches of the Republic of Maldives. Sci. Total Environ. 693, 133581 (2019).

B. Neupane, T. Horanont, N. D. Hung, Deep learning based banana plant detection and counting using high-resolution red-green-blue (RGB) images collected from unmanned aerial vehicle (UAV). PLoS ONE. 14(10), 0223906 (2019).

Z. Jiao, C. G. Jia, C. Y. Cai, A new approach to oil spill detection that combines deep learning with unmanned aerial vehicles. Comput. Ind. Eng. 135, 1300–1311 (2018).

X. Zhao, Y. Yuan, M. Song, Y. Ding, F. Lin, D. Liang, D. Zhang, Use of unmanned aerial vehicle imagery and deep learning UNet to extract rice lodging. Sensors (Basel, Switzerland). 19(18) (2019). https://doi.org/10.3390/s19183859.

C. Qu, W. Gai, M. Zhong, J. Zhang, A novel reinforcement learning based grey wolf optimizer algorithm for unmanned aerial vehicles (UAVs) path planning. Appl. Soft Comput. J. 89, 106099 (2020).

Z. X, Q. Zong, B. Tian, B. Zhang, M. You, Fast task allocation for heterogeneous unmanned aerial vehicles through reinforcement learning. Aerosp. Sci. Technol. 92 (2019). https://doi.org/10.1016/j.ast.2019.06.024.

J. Yang, Y. X, G. Wu, M. M. Hassan, A. Almogren, J. Guna, Application of reinforcement learning in UAV cluster task scheduling. Futur. Gener. Comput. Syst. 95, 140–148 (2019).

S. D, A. Huang, C. J. Maddison, A. Guez, L. Sifre, G. Driessche, J. Schrittwieser, I. Antonoglou, V. Panneershelvam, M. Lanctot, Mastering the game of Go with deep neural networks and tree search. Nature. 529(7587), 484–489 (2016).

D. Silver, J. Schrittwieser, K. Simonyan, I. Antonoglou, D. Hassabis, Mastering the game of Go without human knowledge. Nature. 550(7676), 354–359 (2017).

Y. Ma, W. Zhu, M. G. Benton, J. Romagnoli, Continuous control of a polymerization system with deep reinforcement learning. J. Process Control. 75, 40–47 (2019).

Q. Zhang, R. Yang, L. X. Yu, T. Zhang, Z. J, BVR air combat maneuvering decision by using Q-network reinforcement learning. J. Air Force Eng. Univ. (Nat. Sci. Ed.) 19(6), 8–14 (2018).

C. U. Chithapuram, A. K. Cherukuri, Y. V. Jeppu, Aerial vehicle guidance based on passive machine learning technique. Int. J. Intell. Comput. Cybern. 9(3), 255–273 (2016).

X. Zhang, G. Liu, C. Yang, W. Jiang, Research on air combat maneuver decision-making method based on reinforcement learning. Electronics. 7(11), 279 (2018).

B. Kurniawan, P. Vamplew, M. Papasimeon, R. Dazeley, C. Foale, in AI 2019: Advances in Artificial Intelligence, 32nd Australasian Joint Conference, Adelaide, SA, Australia, December 2–5, 2019, Proceedings. An empirical study of reward structures for actor-critic reinforcement learning in air combat manoeuvring simulation (Springer, 2019), pp. 2–5.

Q. Yang, J. Zhang, G. Shi, J. Hu, Y. Wu, Maneuver decision of UAV in short-range air combat based on deep reinforcement learning. IEEE Access. PP(99), 1–1 (2019).

Q. Yang, Y. Zhu, J. Zhang, S. Qiao, J. Liu, in 2019 IEEE 15th International Conference on Control and Automation (ICCA). UAV air combat autonomous maneuver decision based on DDPG algorithm, (2019), pp. 16–19. https://doi.org/10.1109/icca.2019.8899703.

H. C. Tien, A. Battad, E. A. Bryce, J. Fuller, A. Simor, Multi-drug resistant Acinetobacter infections in critically injured Canadian forces soldiers. BMC Infect. Dis. 7(1), 1–6 (2007).

R. Z. Xie, J. Y. Li, D. L. Luo, in 2014 11th IEEE International Conference on Control and Automation (ICCA). Research on maneuvering decisions for multi-UAVs air combat (IEEE, 2014).

M. Volodymyr, K. Koray, S. David, A. A. Rusu, V. Joel, M. G. Bellemare, G. Alex, R. Martin, A. K. Fidjeland, O. Georg, Human-level control through deep reinforcement learning. Nature. 518(7540), 529–533 (2015).

T. P. Lillicrap, J. J. Hunt, A. Pritzel, N. Heess, T. Erez, Y. Tassa, D. Silver, D. Wierstra, Continuous control with deep reinforcement learning. Comput. Sci. 8(6), 187–200 (2015).

Funding

This work is supported by National Natural Science Foundation of China under Grant 61803309, the Key Research and Development Project of Shaanxi Province under Grant 2020ZDLGY06-02, the Aeronautical Science Foundation of China under Grant 2019ZA053008, the Open Foundation of CETC Key Laboratory of Data Link Technology under Grant CLDL-20202101, the China Postdoctoral Science Foundation under Grant 2018M633574.

Author information

Contributions

The authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, L., Hu, J., Xu, Z. et al. Autonomous maneuver strategy of swarm air combat based on DDPG. Auton. Intell. Syst. 1, 15 (2021). https://doi.org/10.1007/s43684-021-00013-z

DOI: https://doi.org/10.1007/s43684-021-00013-z