Abstract

In design-oriented biomedical engineering courses, some instructors teach need-driven methods for health technology innovation that use a “need statement” to reflect a student team’s hypothesis about the most fruitful direction for their project. While need statements are of the utmost importance to the projects, we were not aware of any comprehensive rubric for helping instructors evaluate them. Leveraging resources such as the Biodesign textbook along with input from faculty teaching health technology design at our university, we created a rubric for evaluating the construction of need statements. We then introduced the rubric to undergraduate students in a 3-week intersession course in fall 2023. Afterward, we used the rubric to compare the de-identified final need statements from 2023 to the de-identified final need statements from students in the course in 2022 and 2021. We had assumed that need statements from 2023 would score better against the rubric than those from previous years, but this proved not to be the case. Nevertheless, we gleaned valuable lessons about the role of rubrics in supporting student learning and increasing alignment among faculty, as well as insights about rubric development and areas for future study. In this article, we also share the initial version of the rubric so that other instructors can adapt and improve upon it for their own courses.

Challenge Statement

In design-oriented biomedical engineering courses, some instructors have adopted need-driven methods like the biodesign innovation process for teaching health technology innovation. At the heart of this type of approach is the need statement, which describes in one sentence the problem or health-related dilemma that requires attention, the population most affected by the problem, and the targeted change in outcome that is most vital and against which all potential solutions to the need will eventually be evaluated [1]. The need statement is dynamic, reflecting at any given moment a student team’s hypothesis about the most fruitful direction for their project. Students learn to scope and refine their need statement over time based on increasingly in-depth primary and secondary research.

Directional information about how to write a need statement, including the standard format it takes and common pitfalls to avoid in drafting one, is available in resources like the Biodesign textbook [1] and the online Student Guide to Biodesign [2]. However, while rubrics are a key tool in biomedical engineering education to assess a range of outcomes and assignments [3], we were unaware of any comprehensive rubric for helping instructors systematically evaluate student need statements or directly aiding students in learning how to write them. One study provided students with a series of worksheets to lead them in the construction of a need statement, but the emphasis of this effort was on assessing whether they had identified a “problem worth solving” according to criteria set forth by the university office of technology transfer rather than evaluating the need statement itself [4].

The education community generally defines a rubric as outlining the expectations for an assignment by providing (1) the criteria that will be used for assessment; (2) detailed descriptions of different quality levels for each criterion (e.g., excellent to poor); and (3) a rating scale that can be used to provide a score for each criterion [5]. Multiple studies find that undergraduate and graduate students consider rubrics valuable to their learning because they clarify key targets to focus on for an assignment; allow for self-evaluation and improvement before assignment submission; and align the expectations of students and instructors around quality standards and corresponding grades [5, 6]. As for instructors, the literature suggests that they value rubrics for the role they play in enabling expeditious, objective, and accurate grade assignment [5].
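
As an illustration only, the three-part definition above (criteria, quality-level descriptions, and a rating scale) can be sketched as a small data structure. The class names `Criterion` and `Rubric`, the 0 to 2 scale, and the level descriptions are hypothetical placeholders chosen for this sketch; only the two criterion questions are taken from the rubric described in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    question: str           # (1) what is being assessed
    levels: dict[int, str]  # (2) rating -> description of that quality level


@dataclass(frozen=True)
class Rubric:
    criteria: tuple[Criterion, ...]

    def max_score(self) -> int:
        # (3) the rating scale: the best rating available on every criterion
        return sum(max(c.levels) for c in self.criteria)

    def score(self, ratings: list[int]) -> int:
        """Total score for one need statement, given one rating per criterion."""
        if len(ratings) != len(self.criteria):
            raise ValueError("one rating per criterion is required")
        for rating, criterion in zip(ratings, self.criteria):
            if rating not in criterion.levels:
                raise ValueError(f"rating {rating} is not on the scale")
        return sum(ratings)


# Hypothetical scale and level descriptions (NOT the wording of Table 1).
HYPOTHETICAL_LEVELS = {0: "poor", 1: "adequate", 2: "excellent"}

example_rubric = Rubric(
    criteria=(
        Criterion(
            "Is there a temporal and causal linkage between the problem and outcome?",
            HYPOTHETICAL_LEVELS,
        ),
        Criterion(
            "Is the outcome objectively measurable within a timeframe that's "
            "reasonable given the magnitude of the problem?",
            HYPOTHETICAL_LEVELS,
        ),
    )
)

total = example_rubric.score([2, 1])
```

Keeping each criterion's level descriptions next to its rating values mirrors how a rubric is usually laid out as a table, with one row per criterion and one column per quality level.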

Motivated by the potential to enhance the student learning experience, we decided to create a rubric for evaluating need statements. We deemed this work especially important because (1) need statements play such a foundational role in setting the direction for student projects and (2) need statement development has been described as more “art” than science [1]. With regard to this second point, conversations with individuals teaching across our undergraduate, graduate, and post-graduate programs quickly revealed that our faculty did not share a common point of view on what constitutes a high-quality need statement. Even those teaching in the same courses had differing factors they looked for when assessing a need statement. As a result, we determined that a clear and objective rubric would be a useful tool to facilitate greater alignment across our faculty as they interact with and provide feedback to students on their needs. It also could serve as a learning aid that students use to help demystify the “art” of need statement creation.

Novel Initiative

We took an iterative approach, using input from approximately a dozen faculty members who teach undergraduate, graduate, and fellowship-level health technology design courses/programs at our university, to define assessment criteria, performance levels, and a scoring construct for need statement evaluation. This work resulted in a set of six objective and semi-objective factors that any instructor could apply when evaluating a need statement. These six criteria primarily assess the construction of a need statement, with a focus on the presence of a problem, population, and outcome, as well as the alignment and interplay between those aspects (see Table 1). The construct we propose explicitly separates need statement construction from need statement content (e.g., whether the need statement will be compelling to key stakeholders in the need area or if it represents a promising innovation project) because content assessment is far more subjective and accurate judgment often requires comprehensive expertise in the clinical need area itself. Considering the number of different clinical specialties addressed in any given course or program, most faculty members will not have sufficient depth of knowledge to fully assess the content of each need. The proposed approach uses the rubric for need statement construction to assign scores/grades. With regard to content, instructors can gauge and comment on the level of “fluency” the team has achieved in the need area, encourage the students to share what they believe to be unique insights uncovered through their research, and involve relevant subject matter experts to lend their input to the evaluation of content.

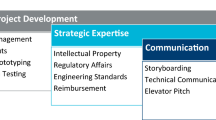

We introduced the initial version of the rubric to students participating in a 3-week intersession course that provides a total of 12 rising sophomores per year with the opportunity to practice the earliest stages of the biodesign innovation process. Specifically, they receive didactic instruction on performing clinical observations and then gain access to the hospital and clinics to conduct clinical shadowing in pairs over 3 days. Then, in parallel with lectures on each of the following topics, individual students draft preliminary need statements from their observations, perform research in each need area, and use what they have learned to scope and refine their need statements before filtering to a lead project. For the fall 2023 offering of the course, we shared the rubric with all 12 students and provided it to the instructors to use as a guide when coaching the students through need statement development and refinement.

After the course concluded, our course manager compiled and de-identified the final need statements for each student’s top project in the current (2023) cohort, along with the final need statements from students enrolled in the same course in fall 2021 and 2022 (prior to the rubric’s development and use). Students in all 3 years of the class had consented to have their work anonymized and reviewed for the purposes of educational research and publication (under IRB approval, protocol ID 56713). The de-identified need statements were “shuffled” across years and made available to three instructors who independently assigned retrospective scores to each one using the rubric. The three instructors had deep familiarity with need statements, as well as experience teaching in undergraduate health technology design courses.

Our assumption was that the need statements from 2023 would score better because the rubric was available to students and the instructors during the course (note that the instructors were the same and student demographics were similar across all 3 years the class was offered). However, analysis of the 12 final student need statements from each of 2021, 2022, and 2023 (36 need statements in total) revealed that the highest scoring need statements were split across all 3 class years (within one standard deviation of each other), as shown in Table 2. A Wilcoxon rank-sum test found no statistically significant difference among the cohorts. The results also were characterized by a high level of inter-rater variability in the scores assigned to each need statement, as shown in Table 3.
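
For readers who want to run a similar comparison, the following is a minimal sketch of a two-sample rank-sum test using the normal approximation, written with the Python standard library only. The scores are invented placeholders, not the study data, and the function names `average_ranks` and `rank_sum_test` are illustrative. The sketch omits tie and continuity corrections and compares only two cohorts at a time, so it may differ from the analysis behind Table 2.

```python
from math import sqrt
from statistics import NormalDist


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions are 0-based, ranks 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def rank_sum_test(a, b):
    """Return (W, z, two-sided p) for samples a and b.

    W is the sum of the ranks of sample a in the pooled data. The p-value
    uses the normal approximation without tie or continuity correction.
    """
    n1, n2 = len(a), len(b)
    ranks = average_ranks(list(a) + list(b))
    w = sum(ranks[:n1])
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return w, z, p


# Hypothetical rubric totals for two cohorts (NOT the study data).
cohort_with_rubric = [9, 7, 10, 8, 6, 9]
cohort_without_rubric = [8, 7, 9, 10, 6, 8]

w, z, p = rank_sum_test(cohort_with_rubric, cohort_without_rubric)
```

With cohorts as small as 12 students, an exact test or an established statistics package would usually be preferable to this approximation.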

Reflection

While these results do not support the assumption that using the rubric would directly improve student-created need statements, we are sharing this work because we believe there is a great deal to be learned from this first attempt at rubric development, implementation, and evaluation. We hope that other instructors who teach need statement development in their design-oriented biomedical engineering courses will find the rubric (Table 1) interesting and adapt and improve upon it for their own use. We also would like to highlight the following lessons.

First, this experiment convinced us of the value that rubrics can provide in aiding student learning. Anecdotally, students told us that the rubric clarified, in more concrete terms than the didactic instruction and need statement examples they were shown in lectures and readings, what constituted a well-constructed need statement. Additionally, as the literature suggested, they liked that they could use the rubric to “self-check” and improve their work. Table 4 shows the first draft and final need statements of two students from the 2023 cohort as subjective examples of how students applied the criteria in the rubric to produce stronger need statements as they evolved from “first draft” to “final.”

Second, the teaching team felt more aligned as a result of having the rubric to collectively refer to, and we believe that it helped us deliver a more unified approach when coaching students 1:1 to fine-tune their needs. We also found the distinction between need statement construction and content to be helpful. We often explain to students that there is no “right answer” when considering unmet clinical needs. Separating need statement construction from content allowed us to firmly hold students accountable for gaining skills in constructing need statements while taking a more exploratory, co-learning approach to need statement content.

Third, the experience uncovered improvements we can make to the rubric itself. For example: (1) The performance levels for the first criterion (“Does the need statement include a problem, population, and outcome, with each element clearly and singularly articulated?”) focus on whether or not the problem, population, and outcome are all clearly and singularly articulated, but they do not take into account whether a student may have failed to include one of these important parts, which is a common problem with need statement construction. (2) The way that criterion 4 is stated (“Is there a temporal and causal linkage between the problem and outcome?”) refers to the connection between the problem and the outcome, but more accurately should reference the link between the problem if solved and the desired outcome. (3) Criterion 5 (“Is the outcome objectively measurable within a timeframe that’s reasonable given the magnitude of the problem?”) has a compound focus: objective measurability and the required timeframe to perform the measurement. These may be better evaluated by two separate criteria. We are eager to revise the rubric to address these and other insights gleaned through trialing the preliminary version.

Fourth, we have the opportunity to proactively develop a robust study protocol that will strengthen our understanding of the rubric’s effectiveness. This approach should include a clear methodology for introducing and testing the rubric with students, evaluating the work they produce using the tool, and capturing their perceptions of its value through surveys. Our approach also should be informed by best practices in rubric development and implementation. For example, a frequent measure of rubric effectiveness is the consistency of grading between different raters [6]. However, as shown in Table 3, we had high inter-rater variability in the scores assigned to each need statement. A debrief with the members of the teaching team who evaluated the need statements revealed inconsistencies in how we understood the criteria and performance levels and, accordingly, how we assigned our scores. An effective rubric depends on clear, understandable language that is consistently interpreted and applied by raters and students alike [5], so we should seek input from students and faculty when updating the rubric and then allocate time to training raters on the revised rubric, using sample need statements to achieve greater score alignment, before initiating a formal study.
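
As a hedged illustration of how rater consistency might be summarized during such training, the sketch below computes the per-statement spread of scores across raters and the share of statements on which all raters agree exactly. The scores, statement labels, and function names (`rater_spread`, `exact_agreement_rate`) are hypothetical and do not reproduce Table 3.

```python
from statistics import mean, stdev


def rater_spread(scores_by_statement):
    """Map each statement ID to (mean score, sample SD) across its raters."""
    return {
        statement_id: (mean(scores), stdev(scores))
        for statement_id, scores in scores_by_statement.items()
    }


def exact_agreement_rate(scores_by_statement):
    """Fraction of statements on which every rater gave the same score."""
    agreed = sum(1 for s in scores_by_statement.values() if len(set(s)) == 1)
    return agreed / len(scores_by_statement)


# Hypothetical total scores from three raters (NOT the study data).
hypothetical_scores = {
    "statement_A": [8, 8, 8],
    "statement_B": [6, 9, 12],
}

spread = rater_spread(hypothetical_scores)
agreement = exact_agreement_rate(hypothetical_scores)
```

Statements with a large spread are natural candidates for discussion in a rater calibration session, since they point to criteria or performance levels that raters interpret differently.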

In addition to these improvements, we see other interesting opportunities for future investigation. For instance, given advances in generative AI, we have had discussions about what role this technology could play in applying rubrics to student work. Designing a study to compare and contrast how instructors and generative AI evaluate a common set of need statements against a rubric could be a fascinating experiment in further exploring inter-rater variability, as well as the capabilities of tools such as ChatGPT (OpenAI, San Francisco, CA). Another idea is to explore one of the most common criticisms of rubrics: that they can promote “instrumentalism” if students do only the minimum necessary to receive their desired grade rather than completing more thoughtful and creative work [7]. As we design future studies, we hope to do so in a way that provides insights into this possibility, perhaps through the use of a control group to enable direct comparisons. Finally, we see great opportunity to develop additional rubrics to help clarify other key tools in the biodesign innovation process, such as need criteria (the requirements students create as a culmination of their need research to enable ideating and screening solution concepts). As with need statements, we are not aware of any objective rubric for guiding the development or evaluation of need criteria. We look forward to sharing the outcomes of future studies as our efforts continue.

Data Availability

Not applicable.

Code Availability

Not applicable.

References

1. Yock PG, et al. Biodesign: the process of innovating medical technologies. 2nd ed. Cambridge: Cambridge University Press; 2015.

2. A student guide to biodesign. https://biodesignguide.stanford.edu. Accessed 28 May 2024.

3. Higbee S, Miller S. Tracking capstone project quality in an engineering curriculum embedded with design. In: IEEE frontiers in education conference. 2020. https://ieeexplore.ieee.org/document/9273929. Accessed 28 May 2024.

4. Cash HL, DesJardins J, Przestrzelski B. The DMVP (detect, measure, valuate, propose) method for evaluating identified needs during a clinical and technology transfer immersion program. In: American Society for Engineering Education Conference. 2018. https://peer.asee.org/31087. Accessed 28 May 2024.

5. Reddy Y, Andrade H. A review of rubric use in higher education. Assessment & Evaluation in Higher Education. 2010. https://www.researchgate.net/publication/248966170_A_review_of_rubric_use_in_higher_education. Accessed 28 May 2024.

6. Cockett A, Jackson C. The use of assessment rubrics to enhance feedback in higher education: an integrative literature review. Nurse Education Today. 2018. https://www.sciencedirect.com/science/article/pii/S0260691718302764. Accessed 28 May 2024.

7. Torrance H. Assessment as learning? How the use of explicit learning objectives, assessment criteria and feedback in post-secondary education and training can come to dominate learning. Assess Educ. 2007. https://doi.org/10.1080/09695940701591867. Accessed 28 May 2024.

Acknowledgments

Our appreciation goes to all of the faculty who provided input to the rubric, as well as the students who participated in the course, applied the rubric to their work, and consented to have their work anonymized and reviewed as part of this and other studies. The authors also would like to thank Meghana Nerurkar for her assistance with background research, as well as Kevin Bui for lecturing in the course and participating in need statement evaluation.

Funding

Course activities were supported in part by the Stanford University Sophomore College program. Research reported in this publication was supported in part by the National Institutes of Health under Award Number R25EB02938703. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Author information

Contributions

All authors contributed to the development of the preliminary rubric. LD and RP evaluated need statements against the rubric (along with another instructor). JT led data analysis. LD prepared the manuscript. RP, KS, JT, and RV reviewed and provided revisions.

Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

Consent to Participate

Informed consent was obtained from all individual students to have their work anonymized and reviewed for this and other studies.

Consent for Publication

Informed consent for the publication of results related to this course was obtained from all participating students.

Ethical Approval

This study was conducted under Stanford University IRB approval (protocol ID 56713, March 2022).

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Denend, L., Venook, R., Pamnani, R.D. et al. Lessons from Developing a Rubric for Evaluating Need Statements on Health Technology Innovation Projects. Biomed Eng Education 4, 437–442 (2024). https://doi.org/10.1007/s43683-024-00153-7

DOI: https://doi.org/10.1007/s43683-024-00153-7