Abstract

With the advancement of DNNs into safety-critical applications, testing approaches for such models have gained more attention. A current direction is the search for and identification of systematic weaknesses that put safety assumptions based on average performance values at risk. Such weaknesses can take the form of (semantically coherent) subsets or areas in the input space where a DNN performs systematically worse than its expected average. However, it is non-trivial to attribute the reason for such observed low performance to the specific semantic features that describe the subset. For instance, inhomogeneities within the data w.r.t. other (non-considered) attributes might distort results. Yet taking into account all (available) attributes and their interactions is often computationally highly expensive. Inspired by counterfactual explanations, we propose an effective and computationally cheap algorithm to validate the semantic attribution of existing subsets, i.e., to check whether the identified attribute is likely to have caused the degraded performance. We demonstrate this approach on an example from the autonomous driving domain using highly annotated simulated data, where we show for a semantic segmentation model that (i) performance differences among the different pedestrian assets exist, but (ii) only in some cases is the asset type itself the reason for this reduction in performance.

1 Introduction

Recently, there has been great interest in deploying deep neural networks (DNNs) for computer vision tasks in safety-critical applications like autonomous driving [37] or medical diagnostics [20]. However, rigorous testing for verification and validation (V&V) of DNNs is still an open problem. Without sufficient V&V, using DNNs for such safety-critical applications can lead to dangerous situations. While a large body of work has focused on improving robustness [18], defending against adversarial attacks [1, 4], and improving domain generalization [32, 42], only recently have a few works focused on identifying systematic weaknesses of DNNs [10, 13, 15, 29, 38]. Such systematic weaknesses pose a significant challenge from a safety perspective, as illustrated by several real-world examples [3, 9] in which DNNs were shown to perform worse on certain subsets of data. Such effects have been studied extensively in the context of fairness (see, e.g., [43]), where bias related to skin color or gender is often addressed. For investigating the safety of a DNN, however, this is not sufficient; instead, all dimensions that could potentially influence performance have to be taken into account.

This can be seen in the broader context of trustworthiness assessments for ML, specifically regarding reliability. Multiple high-level requirements and upcoming standards point out, at varying levels of detail, the issues of data completeness or coverage. For instance, [21] discusses data completeness in the context of fairness (as presented above) and also states that the robustness of an application is bound to a specific application domain. Other approaches, such as [27], consider a rigorous definition of the application’s input space, and the alignment of the used data with it, an important aspect of trustworthiness. Such approaches will likely rely on semantic, human-understandable definitions of data dimensions to define the domain and, potentially, also to demonstrate coverage.

An informal example illustrating the concept of data specification and its relation to potential systematic weaknesses is a classifier that distinguishes between cats and dogs. While it would be common practice to investigate the performance of such a classifier by, e.g., a confusion matrix between the two classes, one could (and, for critical algorithms, should) extend both the task description and the investigation to include sub-classes. For instance, one could evaluate the classifier’s performance on the various dog breeds it was built for (definition of the input space) and ascertain whether each of these breeds is recognized with sufficient performance. To achieve the latter, the data must, in most cases, come with sufficiently detailed attribute annotations. Obtaining such meta-information for unstructured data, e.g., images as used for object detection, is a challenging objective in its own right. Recent works (see, for instance, [10, 13]) use dedicated neural networks or parts of the investigated networks to obtain information on the data, while other approaches, such as ours [15] or, e.g., [14], focus on a controlled data generation process, for example, using synthetic data, which yields a detailed data description as a “by-product”. The latter, more labeling-oriented approaches have the advantage that the obtained information is aligned with the semantic dimensions of the data. For the former approaches, the outcomes are often raw data subsets whose descriptive attributes have to be uncovered in an additional step.

The analysis of identified (potential) weaknesses is often more complex, as multiple data dimensions can influence the outcome simultaneously. For instance, in the above example, is the dog breed a performance-limiting factor, or can the weakness be attributed to another factor, either causally (e.g., the size of the breed) or only through correlation (e.g., if many images of one dog breed were taken with a different camera model)? Answering such questions requires a detailed (meta-)description of the data, which is challenging to obtain outside of synthetic data, and requires consideration of the impact of multiple dimensions and their interactions. The latter point often leads to a “combinatorial explosion” as soon as second- and higher-order interactions are taken into account. At the same time, correct identification of data subsets with weak performance is important, as it is otherwise hard to mitigate such systematic failure modes. For instance, a common mitigation approach is to generate or obtain more data samples and add them to the training data.

As data gathering or generation is costly, it is important to better understand which data is needed to improve coverage and training. Toward this goal, we propose a computationally cheap method that helps to determine whether identified performance differences between two semantically distinguished subsets \({\mathcal {X}}\) and \({\mathcal {Y}}\) can be attributed to the semantically distinguishing property between the sets or to other described but not considered factors. For this, we adapt the concept of counterfactual explanations [40]: we pair each element of \({\mathcal {X}}\) only with the element of \({\mathcal {Y}}\) that is most similar w.r.t. all other known attributes. If our method determines that the distinguishing semantic property between the sets can be considered the true cause of the performance difference, generating more data for the weak set should be a useful measure to improve the DNN performance. If not, the method allows for identifying semantic subsets of \({\mathcal {X}}\) or \({\mathcal {Y}}\), respectively, that are likely to contain the true cause of the performance gap.

2 Related work

In this section, we discuss literature related to finding systematic weaknesses of machine learning models, grouped by the type of input data. We first present approaches working with structured, e.g., tabular, data and then approaches for unstructured data such as images. Drawbacks of the existing approaches are presented subsequently. Lastly, we introduce works on counterfactuals.

For structured data, SliceFinder [6] identifies weak slices (subsets) of data by ordering slices based on certain criteria like performance, effect, and slice size. These weak slices correspond to the systematic weakness of the models. Similarly, SliceLine [36] provides an enumeration of all slice combinations using a scoring function and different pruning methods. In addition, subgroup search [2, 19] is an extensively researched field in data mining for identifying interesting subgroups or subsets of data based on certain quality criteria. However, at higher dimensions, subgroup search methods are computationally very intensive. Complexity grows even further when going from structured to unstructured data, such as images. In these cases, spaces are intrinsically (very) high dimensional. Accordingly, there has been no widespread use of such methods for computer vision.

Due to this complexity and the difficulty of obtaining metadata for unstructured data like images, some approaches use intrinsic information from the DNNs. Eyuboglu et al. [13] developed DOMINO for finding systematic weaknesses using cross-modal representations generated by a pre-trained CLIP [34] model. However, using CLIP, itself a black-box DNN, in the embedding generation process adds further complexity to validating the DNN-under-test. Spotlight [10] uses representations from the final layers of DNNs to identify contiguous regions of high loss. These contiguous regions (slices) with the highest loss are then considered weak slices that could indicate potential systematic weaknesses of the DNN. Both approaches are restricted to classification tasks. Furthermore, due to the nature of these approaches, the resulting weaknesses need to be interpreted, either by other ML models or by humans, to derive actionable insight into their nature, i.e., to identify the common cause of all weakly performing elements found. This interpretation step hinders a systematic evaluation and might also be prone to errors.

With a particular focus on autonomous driving, several works [15, 29, 38] have used computer simulators to generate metadata to identify systematic weaknesses in object detection and semantic segmentation models. In our earlier work [15], we modified the Carla simulator [12] to generate pedestrian-level metadata. With the generated data, we trained a DeepLabv3+ [5] model and identified several (potential) systematic weaknesses w.r.t. digital asset type and skin color. Similarly, Lyssenko et al. [29] evaluated DNN performance along a semantic feature, the distance of the pedestrian to the ego vehicle, using data generated from their modified Carla simulator and showed a linear decrease in performance with increasing distance. Syed Sha, Grau, and Hagn [38] proposed a validation engine, ‘VALERIE’, and evaluated the performance of two different DNNs w.r.t. metadata attributes like pixel area, occlusion rate, and distance of pedestrians. All these works circumvent the complexity by investigating features in isolation. In this work, however, we take all (available) features into account.

With regard to counterfactuals, as reviewed by Verma, Dickerson, and Hines [40], a large body of work in explainable AI has proposed counterfactual methods that explain DNN behavior by investigating “what if” scenarios. Several methods [8, 16, 23, 31, 41] formulate the search for counterfactuals as an optimization problem using gradients of the model-under-test, similar to finding adversarial attacks. However, unlike adversarial attacks, counterfactual methods can impose several additional constraints [40], such as validity [41], actionability [25, 39], closeness to the data manifold [11, 22, 30], and/or sparsity of feature changes [17, 24]. Counterfactuals also differ from feature attribution methods like LIME [35] or Shapley values [28], as counterfactuals identify new inputs that lead to a change in predictions rather than attributing predictions to a set of features.

While these methods have mostly been restricted to tabular data and simple image datasets like MNIST [26], our method performs counterfactual evaluation on a semantic segmentation dataset for autonomous driving using metadata descriptions of the pedestrians in the images. In addition, the goal of most counterfactual methods, as they concern fairness, is to (actively) change a decision or output, while our focus is on investigating performance differences. Furthermore, gradient-based approaches require additional inference steps to investigate their (newly created) counterfactual samples. Our method instead uses a statistical formulation of counterfactuals [33], since, for unstructured data, creating new “what if” scenarios is (computationally) too expensive, even when using simulators, to afford a meaningful analysis over many data points.

3 Method

In this section, we provide a general description of our counterfactual algorithm and how it may be used to investigate performance differences within a given dataset \({\mathcal {D}}\). As our approach is general, we relegate instantiating \({\mathcal {D}}\) (and its properties) to the next section. Here, it is sufficient that all elements of \({\mathcal {D}}\) can be seen as individual inputs or points of interest (later: those will be separate pedestrians, and the task will be their recognition), for each of which we can obtain a respective performance value (later: intersection-over-union (IoU)) and a semantic description based on multiple dimensions \({\mathcal {S}}= \{s_1,\ldots ,s_n\}\) (later: for instance, distance from the vehicle or asset membership).

As motivated, we are interested in identifying DNN weaknesses in terms of data subsets that perform weakly due to properties inherent to the data, e.g., occlusion or under-represented input types. Furthermore, we want to validate whether the decreased performance can be attributed to the identified property.

For this, let us consider slices \({\mathcal {X}},{\mathcal {Y}}\subseteq {\mathcal {D}}\) of the data between which the performance differs. Such slices can be obtained, e.g., by selecting all elements that share one (or multiple) fixed semantic properties (or ranges thereof), e.g., \({\mathcal {X}}= \{ x \, | \, x\in {\mathcal {D}}\wedge s_\text {asset}(x) = \text {asset}_1 \}\). In this notation, \(P(x)\) and \(P({\mathcal {X}})\) denote the performance value of the element \(x\in {\mathcal {X}}\) and the set of performance values over all of \({\mathcal {X}}\), respectively.Footnote 1 This allows us to write the “conventional” performance difference between the two slices as

\[ \Delta _\text {con}({\mathcal {X}},{\mathcal {Y}}) = \mu [P({\mathcal {X}})] - \mu [P({\mathcal {Y}})], \tag{1} \]

where \(\mu\) denotes the mean value of the respective set. We may also formulate a related quantity based on the set of local performance differences

\[ {\mathcal {A}}_\text {rnd}({\mathcal {X}},{\mathcal {Y}}) = \{ P(x_i) - P(y_i) \, | \, x_i\in {\mathcal {X}},\, y_i\in {\mathcal {Y}},\, i = 1,\ldots ,\min (|{\mathcal {X}}|,|{\mathcal {Y}}|) \}, \tag{2} \]

where i provides a fixed but arbitrary index of the sets \({\mathcal {X}},{\mathcal {Y}}\), respectively. If we were to average over all possible such indices, we would find that

\[ \Delta _\text {rnd}({\mathcal {X}},{\mathcal {Y}}) = \Delta _\text {con}({\mathcal {X}},{\mathcal {Y}}) \tag{3} \]

holds in expectation with \(\Delta _\text {rnd}({\mathcal {X}},{\mathcal {Y}}) = \mu [{\mathcal {A}}_\text {rnd}({\mathcal {X}},{\mathcal {Y}})]\).Footnote 2 Looking at eq. (2), we observe that these differences disregard the semantic context of the pairings \(x_i,y_i\) and could compare datapoints with entirely different properties (e.g., highly occluded pedestrians with clearly visible ones). We therefore build a dedicated paired dataset \({\mathcal {C}}_\text {cf}({\mathcal {X}}, {\mathcal {Y}})\), where for each element of the reference dataset \({\mathcal {X}}\) we find the most similar (by semantic description) datapoint in the other dataset \({\mathcal {Y}}\), as shown in equation (4),

\[ {\mathcal {C}}_\text {cf}({\mathcal {X}},{\mathcal {Y}}) = \{ (x, y_\text {cf}(x)) \, | \, x\in {\mathcal {X}}\}, \tag{4} \]

where the counterfactual datapoints in \({\mathcal {Y}}\) are selected by

\[ y_\text {cf}(x) = {{\,\mathrm{arg\,min}\,}}_{y\in {\mathcal {Y}}\setminus \{x\}} {\text {dist}}_d(x,y), \tag{5} \]

which minimizes the distance \({\text {dist}}_d\) using a subset \(d\subseteq {\mathcal {S}}\) of the semantic features \({\mathcal {S}}\).Footnote 3 Refer to the next section for the concrete metric we use. Based on this paired set, we can calculate the set of counterfactual differences

\[ {\mathcal {A}}_\text {cf}^\tau ({\mathcal {X}},{\mathcal {Y}}) = \{ P(x) - P(y) \, | \, (x,y)\in {\mathcal {C}}_\text {cf}({\mathcal {X}},{\mathcal {Y}}) \wedge {\text {dist}}_d(x,y) < \tau \}, \tag{6} \]

taking into account only those pairings that are closer than a threshold \(\tau\) to ensure sufficiently close proximity of points. The corresponding average performance difference is given by \(\Delta _\text {cf}({\mathcal {X}},{\mathcal {Y}}) = \mu [{\mathcal {A}}_\text {cf}^\tau ({\mathcal {X}},{\mathcal {Y}})]\). Importantly, the counterfactual difference \(\Delta _\text {cf}\) does not have to coincide with \(\Delta _\text {con}\). If, for instance, \(| \Delta _\text {cf}| \ll |\Delta _\text {con}|\), the performance difference between \({\mathcal {X}}\) and \({\mathcal {Y}}\) can likely not be attributed to the semantics used to separate both sets from \({\mathcal {D}}\); instead, it is a property of some other latent discrepancy between the two sets. In these cases, one might want to investigate the non-matched elements, i.e., those elements of \({\mathcal {X}},{\mathcal {Y}}\) that are not part of \({\mathcal {C}}_\text {cf}\), as they might carry another distinguishing attribute. Note that the matching procedure of eq. (5) is, in most cases, not commutative between the sets. However, when \({\mathcal {X}}\) and \({\mathcal {Y}}\) do not intersect, our fixed order of operations ensures \(\Delta _\text {cf}({\mathcal {X}},{\mathcal {Y}})=- \Delta _\text {cf}({\mathcal {Y}},{\mathcal {X}})\). Conversely, if \({\mathcal {X}}={\mathcal {Y}}\), our definition avoids collapse, as points cannot be matched onto themselves. A more procedural definition of the counterfactual difference \(\Delta _\text {cf}\) is given in Algorithm 1.
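A minimal Python sketch of eqs. (1) to (6) follows. It is not Algorithm 1 itself but an illustration under simplifying assumptions: every element carries a hypothetical unique identifier `uid`, a tuple of already-encoded semantic features restricted to \(d\), and its performance value \(P(x)\); nearest neighbors are found by brute force with the Euclidean distance; and ties in the \(\arg\min\) are resolved in favor of the first element in the given order (cf. Footnote 3). All names (`Element`, `delta_con`, `delta_rnd`, `counterfactual_partner`, `delta_cf`) are illustrative and not taken from the authors' implementation.

```python
from __future__ import annotations

import random
from dataclasses import dataclass
from math import dist, inf, nan
from statistics import mean


@dataclass(frozen=True)
class Element:
    uid: int                     # hypothetical unique identifier of an element of D
    features: tuple[float, ...]  # encoded semantic features restricted to d
    perf: float                  # performance value P(x), e.g., the IoU


def delta_con(X, Y):
    """Eq. (1): difference of the mean performances of both slices."""
    return mean(x.perf for x in X) - mean(y.perf for y in Y)


def delta_rnd(X, Y, seed=0):
    """Eq. (2): mean of element-wise differences under a random pairing."""
    rng = random.Random(seed)
    xs, ys = list(X), list(Y)
    rng.shuffle(xs)
    rng.shuffle(ys)
    # zip truncates to min(|X|, |Y|) pairs
    return mean(x.perf - y.perf for x, y in zip(xs, ys))


def counterfactual_partner(x, Y):
    """Eq. (5): most similar element of Y, never x itself; the first one wins ties."""
    best, best_d = None, inf
    for y in Y:
        if y.uid == x.uid:
            continue  # points cannot be matched onto themselves
        d = dist(x.features, y.features)
        if d < best_d:  # strict '<' keeps the first element among equal distances
            best, best_d = y, d
    return best, best_d


def delta_cf(X, Y, tau=0.2):
    """Eqs. (4) and (6): mean difference over counterfactual pairs closer than tau."""
    diffs = []
    for x in X:
        y, d = counterfactual_partner(x, Y)
        if y is not None and d < tau:
            diffs.append(x.perf - y.perf)
    return mean(diffs) if diffs else nan


X = [Element(0, (0.10, 0.20), 0.5), Element(1, (0.90, 0.90), 0.4)]
Y = [Element(2, (0.12, 0.20), 0.7), Element(3, (0.50, 0.50), 0.9)]
print(delta_con(X, Y))  # conventional difference, approx. -0.35
print(delta_cf(X, Y))   # only the pair (0, 2) is closer than tau, approx. -0.2
```

In this toy example, the second element of \({\mathcal {X}}\) has no partner within \(\tau\), so only one pair contributes to \(\Delta _\text {cf}\); calling `delta_cf(X, X)` matches each point to its nearest *other* point, which mirrors the non-collapse property for \({\mathcal {X}}={\mathcal {Y}}\).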

4 Experimental Setup

In this section, we describe the used dataset, the DNN-under-test and the concrete implementation of the counterfactual calculations and their metrics.

Dataset

For our experiments, we use the dataset generated in our previous work, Gannamaneni, Houben, and Akila [15], which contains extracted meta-informationFootnote 4 for each pedestrian visible within an image. It was generated using Carla Simulator v0.9.11 [12] and contains 23 classes following a mapping similar to Cityscapes [7]. It consists of images with traffic scenes, the corresponding semantic segmentation ground truth, and pedestrian meta-information. From this, and using additional computer vision post-processing, we obtain the set \({\mathcal {S}}\) of our semantic features encompassing {dist, visibility, num_pixels, size, asset_id, \(x_\text {min}, y_\text {min}, x_\text {max}, y_\text {max}\)}. Here, dist refers to the Euclidean distance of the pedestrian from the ego vehicle, visibility to the percentage of the pedestrian that is unoccluded, and num_pixels to the (absolute) number of pixels belonging to the pedestrian, while asset_id is an identifier of the 3D model used by the simulator. The coordinates x, y belong to the bounding box of the pedestrian, and size provides its area. With this setup, we generated \(7\,394\) images (all from the “Town02” map in Carla) and trained the semantic segmentation model DeepLabv3+ [5] on them, following the same training setup and data pre-processing as our prior work [15]. To investigate the performance of this model, we added the individually achieved IoU (Intersection-over-Union) to our per-pedestrian metadata. In the remainder, all analyses are performed on the resulting table of performance and metadata descriptions, which contains a total of \(24\,424\) entries.Footnote 5
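As a small illustration of the filtering described in the notes (true positives only, a distance threshold of 100, and bounding boxes of at most \(10^5\) pixels), the following Python sketch reduces a list of per-pedestrian records. The record keys `iou`, `dist`, and `size`, the function name, and the inclusive treatment of both thresholds are assumptions made for this example.

```python
def filter_pedestrians(records, max_dist=100.0, max_bbox_area=1e5):
    """Keep per-pedestrian records as described in the notes (keys are hypothetical)."""
    return [
        r for r in records
        if r["iou"] > 0                  # true positives only
        and r["dist"] <= max_dist        # drop pedestrians beyond the distance threshold
        and r["size"] <= max_bbox_area   # drop rare, very large bounding boxes
    ]


records = [
    {"iou": 0.81, "dist": 12.0, "size": 2_400},
    {"iou": 0.00, "dist": 30.0, "size": 900},     # missed pedestrian
    {"iou": 0.10, "dist": 140.0, "size": 40},     # too far away
    {"iou": 0.95, "dist": 3.0, "size": 150_000},  # outlier bounding box
]
print(len(filter_pedestrians(records)))  # 1
```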

Implementation of counterfactual similarity

To build counterfactual datasets as given by eq. (4), we need to specify a distance over the semantic dimensions. For this, we use the Euclidean metric, with one-hot encoding for the categorical asset_id and all numerical dimensions rescaled to the unit range [0, 1]. If not stated otherwise, the cut-off parameter \(\tau\) (see eq. (6)) is chosen as 0.2. In cases where \({\mathcal {X}}\) and \({\mathcal {Y}}\) do not intersect, counterfactual pairings can be calculated (more) efficiently using a ball-tree algorithm to find the nearest points. More concretely, the problem can be seen as k-NN classification with \(k=1\), where we are interested only in the performance of the nearest point in \({\mathcal {Y}}\) w.r.t. a sample from \({\mathcal {X}}\).
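The following Python sketch shows one way to build such feature vectors: min-max scaling of numerical dimensions to [0, 1] (computed over the records passed in, which we assume to be the full dataset), a one-hot block for one categorical dimension, and the Euclidean distance between the resulting tuples. The helper names and record keys are hypothetical. Whether asset_id enters the vector depends on \(d\); when it is the semantic-feature-under-test, it is simply left out. For large, disjoint slices, the brute-force nearest-neighbor search could be replaced by a ball tree (e.g., scikit-learn's `sklearn.neighbors.BallTree`); the sketch itself uses only the standard library.

```python
from math import dist


def min_max_scale(values):
    """Rescale one numerical dimension to the unit range [0, 1]."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [(v - lo) / span if span else 0.0 for v in values]


def encode(records, numeric_keys, categorical_key=None):
    """Scaled numerical features followed by a one-hot block for one categorical key."""
    columns = [min_max_scale([r[k] for r in records]) for k in numeric_keys]
    categories = sorted({r[categorical_key] for r in records}) if categorical_key else []
    vectors = []
    for i, r in enumerate(records):
        row = [col[i] for col in columns]
        row += [1.0 if r[categorical_key] == c else 0.0 for c in categories]
        vectors.append(tuple(row))
    return vectors


records = [
    {"dist": 10.0, "visibility": 1.00, "asset_id": 9},
    {"dist": 30.0, "visibility": 0.50, "asset_id": 23},
    {"dist": 20.0, "visibility": 0.75, "asset_id": 9},
]
vectors = encode(records, ["dist", "visibility"], "asset_id")
print(vectors[2])                    # (0.5, 0.5, 1.0, 0.0)
print(dist(vectors[0], vectors[2]))  # Euclidean distance, approx. 0.707
```

Because every numerical dimension is squeezed into [0, 1] and each one-hot entry is either 0 or 1, no single feature dominates the distance merely because of its physical unit.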

5 Results

We conducted three different experiments. First, we evaluate the expressive power of semantic features in the similarity search. These results provide insight into the noise level of the performance values and to which degree they depend on the known semantic attributes. In the second subsection, the results of the counterfactual analysis for a semantic-dimension-under-test are provided and discussed. Finally, using results from the counterfactual analysis, we show that interesting subsets of the slices can be discovered, which can be used by the developer to further narrow down which data dimensions to investigate.

5.1 Evaluating expressive power of semantic features

Our counterfactual analysis assumes that the semantic features are expressive enough to provide, on average, some indication of the performance of the DNN-under-test, i.e., that more similar points are more likely to have similar performance. We perform the following check to validate this assumption: setting \({\mathcal {X}}={\mathcal {Y}}={\mathcal {D}}\), we evaluate the statistics of \({\mathcal {A}}_\text {cf}^\infty ({\mathcal {D}},{\mathcal {D}})\), see eq. (6), while including an increasing number of semantic dimensions in \(d\); the special case \(d=\{\}\) corresponds to a purely random pairing of the elements. As a baseline, if the performance values were i.i.d. and uniformly distributed over the full range [0, 1], \({\mathcal {A}}_\text {cf}\) would follow a triangular distribution with element-wise differences ranging from \(-1\) to \(+1\) and a standard deviation of \(\sigma _\text {tri}=1/\sqrt{6}\approx 0.41\). Any decrease of \(\sigma [{\mathcal {A}}_\text {cf}^\infty ]\) below this baseline indicates a deviation from pure randomness. The right-hand side of Fig. 1 shows how \(\sigma\) develops for increasing numbers of used semantic properties. The random pairing (\(|d|=0\)) is still close to the baseline with \(\sigma [{\mathcal {A}}_\text {rnd}]\approx 0.35\). Taking a single feature into account (\(|d| = 1\)) already leads to a decrease of up to \(13\%\) (depending on the feature selected), while including multiple features leads to a stronger but saturating decrease.Footnote 6 The left-hand side of Fig. 1 illustrates this by showing histograms of \({\mathcal {A}}_\text {cf}^\infty\) for selected \(d\).
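The baseline value \(\sigma _\text {tri}\) follows from the fact that the variance of a difference of two independent variables is the sum of their variances: for \(U_1, U_2\) uniform on [0, 1], each with variance 1/12, this gives \(\sigma _\text {tri} = \sqrt{2/12} = 1/\sqrt{6}\). The Python sketch below checks this analytically and with a small Monte Carlo simulation; the sample size and seed are arbitrary choices.

```python
import random
from math import fsum, sqrt


def std(values):
    """Population standard deviation using compensated summation."""
    m = fsum(values) / len(values)
    return sqrt(fsum((v - m) ** 2 for v in values) / len(values))


# Var(U1 - U2) = Var(U1) + Var(U2) = 1/12 + 1/12 for independent U(0, 1) variables
sigma_tri = sqrt(2 / 12)

rng = random.Random(0)
diffs = [rng.random() - rng.random() for _ in range(100_000)]  # triangular on [-1, 1]
print(round(sigma_tri, 3))  # 0.408
print(std(diffs))           # close to 0.408
```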

We can interpret the decrease of the standard deviation further by comparison with a toy experiment. For this, we consider two uniform distributions, each with standard deviation \(\sigma _\text {sing}\), that are shifted against one another by an offset \(\Delta \mu\). We can regard the membership of an element in either of these distributions as a semantic property of this toy example. If we are unaware of it and investigate elements that are equally likely to be drawn from either distribution, we will naturally observe a larger spread \(\sigma _\text {both}\). If we identify this spread with the standard deviation observed for \(|d|=0\) and likewise use the \(|d|=5\) case as the value for \(\sigma _\text {sing}\), we can roughly estimate the scale of potential shifts \(\Delta \mu\). For this, we use

which holds only for the toy model. It nevertheless suggests a value of \(\Delta \mu \approx 0.2\) as an approximate scale.
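To make the toy model concrete, the following Python sketch simulates an equal-weight mixture of two uniform distributions, each with standard deviation \(\sigma _\text {sing}\), shifted by \(\Delta \mu\), and compares the observed spread with the standard law-of-total-variance result \(\sigma _\text {both}^2 = \sigma _\text {sing}^2 + (\Delta \mu /2)^2\) for such a mixture. It only illustrates how a hidden membership attribute inflates the spread; the inversion `estimate_delta_mu` follows from this identity and is not claimed to reproduce the exact relation or the numerical estimate used above. Function names and parameter values are arbitrary.

```python
import random
from math import fsum, sqrt


def mixture_sigma(sigma_sing, delta_mu):
    """Std of an equal-weight mixture of two distributions with common std
    sigma_sing whose means differ by delta_mu (law of total variance)."""
    return sqrt(sigma_sing**2 + (delta_mu / 2) ** 2)


def estimate_delta_mu(sigma_both, sigma_sing):
    """Invert mixture_sigma to recover the shift between the two components."""
    return 2 * sqrt(sigma_both**2 - sigma_sing**2)


def simulate_sigma_both(sigma_sing, delta_mu, n=100_000, seed=0):
    """Draw from the toy model while ignoring which component produced each sample."""
    rng = random.Random(seed)
    half_width = sigma_sing * sqrt(3)  # U(-a, a) has standard deviation a / sqrt(3)
    samples = [
        (delta_mu if rng.random() < 0.5 else 0.0) + rng.uniform(-half_width, half_width)
        for _ in range(n)
    ]
    m = fsum(samples) / n
    return sqrt(fsum((s - m) ** 2 for s in samples) / n)


print(mixture_sigma(0.3, 0.2))        # approx. 0.316
print(simulate_sigma_both(0.3, 0.2))  # close to the analytic value
```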

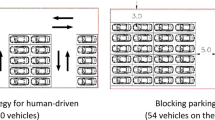

Left: Histograms of the performance difference between paired samples for three matching techniques: nearest-neighbor matching using a single feature (red), nearest-neighbor matching using five features (green), and random matching (blue) (best seen in color). Right: Reduction in the standard deviation of the performance-difference distributions as more semantic features are used in the NN search

5.2 Counterfactual analysis

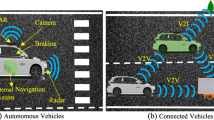

Having established that using all five semantic features is beneficial in the similarity search, we focus on the left-out asset_id (the semantic-feature-under-test) to determine whether systematic weaknesses are indeed present for slices along this semantic feature. To identify interesting \(({\mathcal {X}},{\mathcal {Y}})\) combinations, we use the average performance of the assets over the entire training data, as shown on the left in Fig. 2. We consider the highest-performing assets (26, 24, 4, 8) and the lowest-performing assets (9, 23) as candidates for counterfactual analysis. The results for different combinations of these assets are shown in Table 1. For each combination, we provide the conventional difference in performance, \(\Delta _\text {con}\) (see eq. (1)), the counterfactual difference in performance, \(\Delta _\text {cf}\) (see eq. (6)), and the random pairing difference, \(\Delta _\text {rnd}\) (see eq. (2)). We provide the latter as a sanity check and find that, as expected from eq. (3), it approximately follows the conventional difference.Footnote 7 Additionally, the maximal observed performance differences roughly agree with the scale of \(\Delta \mu\) from the previous section. However, when comparing counterfactual and conventional performance differences, we find strong discrepancies in some cases. For the weakly performing asset 9, the counterfactual difference to all strongly performing assets is negligible. This suggests that the decreased performance of this asset stems from pedestrians that are challenging due to properties other than their membership in asset 9, i.e., this asset does not (in itself) constitute a systematic weakness. Hence, just generating more (training) data for asset 9 would be an ineffective way to increase its performance.
Yet, the circumstances leading to the decreased performance in this slice, even if unrelated to the asset type, should be investigated further; see the results of the next experiment. In contrast to asset 9, for asset 23 (the other weak candidate), the counterfactual differences even emphasize the performance discrepancy to all well-performing assets, making it a likely candidate for an actual systematic weakness. Therefore, generating more data for asset 23 could be useful. This is also supported by the number of samples of the different assets in the training data and the average performance per asset, as shown on the right in Fig. 2: assets 4, 9, and 24 have a similar number of samples, whereas asset 23 has only half as many samples as asset 26 (the asset with the highest performance).

Left: Comparison of the average performance of the different digital assets. The numbers on the y-axis represent the unique asset IDs. Right: Comparison of the average performance of the different assets and the count of samples in the training data. The numbers next to a point represent the asset ID

5.3 Discovering residual subsets

As discussed above, asset 9 is underperforming, but asset membership does not seem to be the true cause of the issue. Hence, just generating more data for this asset is unlikely to resolve the problem efficiently, which is also supported by the fact that a relatively large amount of data is already available for asset 9 (see the right-hand side of Fig. 2). Asset 8, for example, has much less data but significantly higher performance than asset 9. In the following, we aim to identify, purely based on the semantic description, the data causing this effect.

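When building counterfactual pairs between the samples of \({\mathcal {X}}\) and \({\mathcal {Y}}\), only a subset of the larger set (here, w.l.o.g., \({\mathcal {Y}}\)) might be used.

In such a case, this provides a way to slice \({\mathcal {Y}}\) into two subsets, i.e., a paired subset

\[ {\mathcal {Y}}_\text {pair}({\mathcal {X}}) = \{ y\in {\mathcal {Y}}\, | \, \exists x\in {\mathcal {X}}: (x,y)\in {\mathcal {C}}_\text {cf}({\mathcal {X}},{\mathcal {Y}}) \}, \]

and a residual set of samples that are never used for pairing,

\[ {\mathcal {Y}}_\text {res}({\mathcal {X}}) = {\mathcal {Y}}\setminus {\mathcal {Y}}_\text {pair}({\mathcal {X}}). \]

Intuitively, we expect the average performance of the paired subset to be closer to that of the reference set, \(\mu [P({\mathcal {X}})]\), while the average performance of the residual set shifts in the opposite direction.Footnote 8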
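The split can be sketched in Python as follows. Every element of the reference slice selects its nearest neighbor in \({\mathcal {Y}}\) (brute-force Euclidean search over hypothetical, already-encoded features, without a threshold \(\tau\)); the selected elements form the paired subset and all others the residual subset. The sketch also checks the weighted-mean identity given in the notes. The `Item` type and the function names are illustrative assumptions.

```python
from math import dist, fsum, inf
from typing import NamedTuple


class Item(NamedTuple):
    uid: int         # hypothetical unique identifier
    features: tuple  # encoded semantic features restricted to d
    perf: float      # performance value, e.g., the IoU


def split_paired_residual(X, Y):
    """Partition Y into elements chosen as counterfactual partners of X and the rest."""
    paired_uids = set()
    for x in X:
        best, best_d = None, inf
        for y in Y:
            if y.uid == x.uid:
                continue  # never match a point onto itself
            d = dist(x.features, y.features)
            if d < best_d:  # first element wins ties
                best, best_d = y, d
        if best is not None:
            paired_uids.add(best.uid)
    paired = [y for y in Y if y.uid in paired_uids]
    residual = [y for y in Y if y.uid not in paired_uids]
    return paired, residual


def mean_perf(items):
    return fsum(i.perf for i in items) / len(items)


X = [Item(0, (0.0,), 0.30)]
Y = [Item(1, (0.1,), 0.35), Item(2, (0.9,), 0.90), Item(3, (0.8,), 0.80)]
paired, residual = split_paired_residual(X, Y)
print([y.uid for y in paired], [y.uid for y in residual])  # [1] [2, 3]

# The mean over Y is the size-weighted mean of both disjoint parts
weighted = (mean_perf(paired) * len(paired) + mean_perf(residual) * len(residual)) / len(Y)
print(abs(weighted - mean_perf(Y)) < 1e-12)  # True
```

In this toy case, the paired subset (mean 0.35) lies close to the reference slice (0.30), while the residual subset (mean 0.85) moves away from it, which is the qualitative behavior expected above.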

We demonstrate this approach by contrasting the weakly performing asset 9 with the four highest-performing assets. In the three cases where those asset sets contain more samples, we use the samples from asset 9 to split those sets into paired and residual sets. As seen in Table 2, the paired sets have a performance comparable to that of asset 9. Conversely, the residual sets perform better than the slices as a whole. Using the smaller but equally well-performing set of asset 8, we can also split the slice of asset 9 into two, of which the paired one shows high performance. Importantly, although these subdivisions have a strong impact on the observed performance, they are determined purely based on the semantic properties of the elements. Having ruled out asset_id as a likely cause, the residual sets provide a reduced dataset for further, iterative exploration of the underlying semantic cause of the observed weakness.

6 Conclusion

In this work, we have motivated a more detailed investigation of performance differences to identify systematic weaknesses in DNNs. This analysis is based on slicing, where semantic features of the data are used to form meaningful subsets. Within our approach, these features stem from the data generation process but, in principle, can also be obtained through other methods. It is, however, impossible to annotate all dimensions that potentially influence performance. This limits many approaches that aim to find weak slices, as non-annotated or undiscovered characteristics can contain unresolved weaknesses. Moreover, even with a limited amount of information, it is computationally challenging to identify semantic weaknesses correctly, as accounting for the interactions between features leads to a “combinatorial explosion.” At the same time, it is not straightforward to attribute performance loss to specific semantic features when studying them in isolation. This is caused, for instance, by inhomogeneities and sparseness of the data in high dimensions. For this reason, we propose a method inspired by counterfactual explanations, in which we identify neighboring points between two slices of data based on their semantic similarity. As these points are neighbors in the \((n-1)\)-dimensional feature space, i.e., all features except the semantic-feature-under-test that defines the respective slices, the influence of these additional features is reduced. This allows us to study whether the semantic-feature-under-test constitutes an actual weakness of the model while avoiding the prohibitive computational cost of considering the interactions of all features. In our experiment from the autonomous driving domain, using the pedestrian asset type as the semantic-dimension-under-test, we could thus show that for only one of the two weakest-performing assets is asset membership the likely cause of the degraded performance.
Such insight is valuable for further improving the DNN, as generating additional (training) data to mitigate weaknesses becomes costly for complex tasks such as object detection or semantic segmentation. In a second experiment, as an extension of the first, we investigate in further detail the asset for which asset membership was not the cause of the performance degradation. Here, we demonstrate that counterfactual matching can be used to sub-partition existing slices based on their semantic features such that the weakly performing subset is carved out. This allows a more refined analysis and, since the sub-partition forms a smaller dataset, can make it easier to find the actually relevant semantic dimensions among the remaining \(n-1\) features.

Data availability

The Carla dataset analyzed as part of the study is generated using the Carla Simulator (http://carla.org//) similar to Gannamaneni et al. [15].

Notes

These statements hold for all subsets of \({\mathcal {D}}\), e.g., also for elements of \({\mathcal {Y}}\).

This is the case as the average over means of random subsets converges to the mean of the full set. In the special case of \(|{\mathcal {X}}| = |{\mathcal {Y}}|\) we find \(\Delta _\text {con}({\mathcal {X}},{\mathcal {Y}}) = \Delta _\text {rnd}({\mathcal {X}},{\mathcal {Y}})\) directly.
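The convergence argument in this note can be illustrated numerically. The sketch below is our own illustration rather than part of the original analysis: it repeatedly draws random subsets of a fixed size (without replacement) from a set of per-element performance values and averages their means. The function name, its parameters, and the example values are hypothetical.

```python
import random
from statistics import mean

def average_of_random_subset_means(values, subset_size, n_draws, seed=0):
    """Average the means of n_draws random subsets of size subset_size."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    subset_means = [mean(rng.sample(values, subset_size)) for _ in range(n_draws)]
    return mean(subset_means)

# Hypothetical per-element performance values, e.g. per-pedestrian IoUs.
values = [i / 100 for i in range(100)]
full_mean = mean(values)
approx_mean = average_of_random_subset_means(values, subset_size=10, n_draws=20_000)
```

With many draws, `approx_mean` lies close to `full_mean`; with few draws or very small subsets, the average becomes noticeably noisier.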

In cases where the \({{\,\mathrm{arg\,min}\,}}\) is not unique, we select the first element given a fixed but arbitrary order of all elements in \({\mathcal {D}}\). Ideally, \(d\) should exclude those elements of \({\mathcal {S}}\) that were used to construct \({\mathcal {X}},{\mathcal {Y}}\subseteq {\mathcal {D}}\).

The full table contains even more pedestrians. However, we limit ourselves to true positives (\(IoU > 0\)), exclude entities beyond a Euclidean distance threshold of 100 (as their detection is largely a chance event and not indicative of actual DNN performance), and remove bounding boxes larger than \(10^5\) pixels (as they constitute rare outliers).
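The three selection criteria can be expressed as a simple filter. This is a sketch only: the record layout and the field names `iou`, `distance`, and `bbox_area` are hypothetical, the distance is assumed to be precomputed, and only the threshold values are taken from this note.

```python
def filter_pedestrians(rows, max_distance=100.0, max_bbox_area=1e5):
    """Apply the selection criteria of this note to per-pedestrian records.

    rows: iterable of dicts with hypothetical keys "iou", "distance"
        (precomputed Euclidean distance) and "bbox_area" (in pixels).
    """
    return [
        r for r in rows
        if r["iou"] > 0                       # keep true positives only
        and r["distance"] <= max_distance     # drop entities beyond the threshold
        and r["bbox_area"] <= max_bbox_area   # drop rare, very large boxes
    ]

# Toy usage: only the first record passes all three criteria.
rows = [
    {"iou": 0.4, "distance": 20.0, "bbox_area": 500},
    {"iou": 0.0, "distance": 20.0, "bbox_area": 500},
    {"iou": 0.4, "distance": 150.0, "bbox_area": 500},
    {"iou": 0.4, "distance": 20.0, "bbox_area": 2e5},
]
kept = filter_pedestrians(rows)
```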

Here, for \(|d|=5\) we use all features of \({\mathcal {S}}\) except for the categorical asset_id and limit the bounding box coordinates to \(x_\text {min}\).

One could expect deviations in this quantity if either the sample size becomes too small or the distribution of the data is strongly inhomogeneous.

As \({\mathcal {Y}}_\text {res}({\mathcal {X}}) \cap {\mathcal {Y}}_\text {pair}({\mathcal {X}}) = \emptyset\) and \({\mathcal {Y}}_\text {res}({\mathcal {X}}) \cup {\mathcal {Y}}_\text {pair}({\mathcal {X}}) = {\mathcal {Y}}\), we have \(\mu [P({\mathcal {Y}})] = \left( \mu [P({\mathcal {Y}}_\text {res}({\mathcal {X}}))]\, |{\mathcal {Y}}_\text {res}({\mathcal {X}})|+ \mu [P({\mathcal {Y}}_\text {pair}({\mathcal {X}}))]\, |{\mathcal {Y}}_\text {pair}({\mathcal {X}})|\right) /|{\mathcal {Y}}|\).
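A quick numeric check of this weighted-mean identity, using made-up performance values for the two disjoint parts (the helper name `pooled_mean` is hypothetical):

```python
from statistics import mean

def pooled_mean(perf_res, perf_pair):
    """Mean over Y recovered from its two disjoint, non-empty parts."""
    n_res, n_pair = len(perf_res), len(perf_pair)
    return (mean(perf_res) * n_res + mean(perf_pair) * n_pair) / (n_res + n_pair)

# Made-up values: the weighted combination equals the mean over all of Y.
perf_res, perf_pair = [0.1, 0.2], [0.5, 0.7, 0.9]
assert abs(pooled_mean(perf_res, perf_pair) - mean(perf_res + perf_pair)) < 1e-12
```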

References

[1] Akhtar, N., Mian, A.: Threat of adversarial attacks on deep learning in computer vision: A survey. IEEE Access 6, 14410–14430 (2018)

[2] Atzmueller, M.: Subgroup discovery. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 5(1), 35–49 (2015)

[3] Buolamwini, J., Gebru, T.: Gender shades: Intersectional accuracy disparities in commercial gender classification. In: Conference on Fairness, Accountability and Transparency, 77–91. PMLR (2018)

[4] Chakraborty, A., Alam, M., Dey, V., Chattopadhyay, A., Mukhopadhyay, D.: Adversarial attacks and defences: A survey. arXiv preprint arXiv:1810.00069 (2018)

[5] Chen, L.-C., Papandreou, G., Kokkinos, I., Murphy, K., Yuille, A.L.: DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 40(4), 834–848 (2017)

[6] Chung, Y., Kraska, T., Polyzotis, N., Tae, K.H., Whang, S.E.: Slice finder: Automated data slicing for model validation. In: 2019 IEEE 35th International Conference on Data Engineering (ICDE), 1550–1553. IEEE (2019)

[7] Cordts, M., Omran, M., Ramos, S., Rehfeld, T., Enzweiler, M., Benenson, R., Franke, U., Roth, S., Schiele, B.: The Cityscapes Dataset for Semantic Urban Scene Understanding. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 3213–3223 (2016)

[8] Dandl, S., Molnar, C., Binder, M., Bischl, B.: Multi-objective counterfactual explanations. In: International Conference on Parallel Problem Solving from Nature, 448–469. Springer (2020)

[9] De Vries, T., Misra, I., Wang, C., Van der Maaten, L.: Does object recognition work for everyone? In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, 52–59 (2019)

[10] d’Eon, G., d’Eon, J., Wright, J.R., Leyton-Brown, K.: The spotlight: A general method for discovering systematic errors in deep learning models. In: 2022 ACM Conference on Fairness, Accountability, and Transparency, 1962–1981 (2022)

[11] Dhurandhar, A., Chen, P.-Y., Luss, R., Tu, C.-C., Ting, P., Shanmugam, K., Das, P.: Explanations based on the missing: Towards contrastive explanations with pertinent negatives. Advances in Neural Information Processing Systems 31 (2018)

[12] Dosovitskiy, A., Ros, G., Codevilla, F., Lopez, A., Koltun, V.: CARLA: An Open Urban Driving Simulator. In: Proceedings of the Conference on Robot Learning, 1–16. PMLR (2017)

[13] Eyuboglu, S., Varma, M., Saab, K., Delbrouck, J.-B., Lee-Messer, C., Dunnmon, J., Zou, J., Ré, C.: Domino: Discovering systematic errors with cross-modal embeddings. arXiv preprint arXiv:2203.14960 (2022)

[14] Fingscheidt, T., Gottschalk, H., Houben, S.: Deep Neural Networks and Data for Automated Driving: Robustness, Uncertainty Quantification, and Insights Towards Safety (2022)

[15] Gannamaneni, S., Houben, S., Akila, M.: Semantic Concept Testing in Autonomous Driving by Extraction of Object-Level Annotations From CARLA. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV) Workshops, 1006–1014 (2021)

[16] Goyal, Y., Wu, Z., Ernst, J., Batra, D., Parikh, D., Lee, S.: Counterfactual visual explanations. In: International Conference on Machine Learning, 2376–2384. PMLR (2019)

[17] Guidotti, R., Monreale, A., Ruggieri, S., Pedreschi, D., Turini, F., Giannotti, F.: Local rule-based explanations of black box decision systems. arXiv preprint arXiv:1805.10820 (2018)

[18] Hendrycks, D., Mu, N., Cubuk, E.D., Zoph, B., Gilmer, J., Lakshminarayanan, B.: AugMix: A simple data processing method to improve robustness and uncertainty. arXiv preprint arXiv:1912.02781 (2019)

[19] Herrera, F., Carmona, C.J., González, P., Del Jesus, M.J.: An overview on subgroup discovery: foundations and applications. Knowl. Inform. Syst. 29(3), 495–525 (2011)

[20] Hesamian, M.H., Jia, W., He, X., Kennedy, P.: Deep learning techniques for medical image segmentation: achievements and challenges. J. Digit. Imaging 32(4), 582–596 (2019)

[21] High-Level Expert Group on AI (AI HLEG): Ethics Guidelines for Trustworthy AI. Technical report, European Commission (2019)

[22] Joshi, S., Koyejo, O., Vijitbenjaronk, W., Kim, B., Ghosh, J.: Towards realistic individual recourse and actionable explanations in black-box decision making systems. arXiv preprint arXiv:1907.09615 (2019)

[23] Kanamori, K., Takagi, T., Kobayashi, K., Arimura, H.: DACE: Distribution-Aware Counterfactual Explanation by Mixed-Integer Linear Optimization. In: IJCAI, 2855–2862 (2020)

[24] Karimi, A.-H., Barthe, G., Balle, B., Valera, I.: Model-agnostic counterfactual explanations for consequential decisions. In: International Conference on Artificial Intelligence and Statistics, 895–905. PMLR (2020)

[25] Karimi, A.-H., Schölkopf, B., Valera, I.: Algorithmic recourse: from counterfactual explanations to interventions. In: Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, 353–362 (2021)

[26] LeCun, Y., Cortes, C., Burges, C.: MNIST handwritten digit database (2010)

[27] Loh, W., Hauschke, A., Puntschuh, M., Hallensleben, S.: VDE SPEC 90012 V1.0 - VCIO based description of systems for AI trustworthiness characterisation. Technical report, Verband der Elektrotechnik Elektronik Informationstechnik e.V. (VDE) (2022)

[28] Lundberg, S.M., Lee, S.-I.: A unified approach to interpreting model predictions. Advances in Neural Information Processing Systems 30 (2017)

[29] Lyssenko, M., Gladisch, C., Heinzemann, C., Woehrle, M., Triebel, R.: From evaluation to verification: Towards task-oriented relevance metrics for pedestrian detection in safety-critical domains. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 38–45 (2021)

[30] Mahajan, D., Tan, C., Sharma, A.: Preserving causal constraints in counterfactual explanations for machine learning classifiers. arXiv preprint arXiv:1912.03277 (2019)

[31] Mothilal, R.K., Sharma, A., Tan, C.: Explaining machine learning classifiers through diverse counterfactual explanations. In: Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, 607–617 (2020)

[32] Oza, P., Sindagi, V.A., VS, V., Patel, V.M.: Unsupervised domain adaptation of object detectors: A survey. arXiv preprint arXiv:2105.13502 (2021)

[33] Pearl, J.: The seven tools of causal inference, with reflections on machine learning. Communications of the ACM 62(3), 54–60 (2019)

[34] Radford, A., Kim, J.W., Hallacy, C., Ramesh, A., Goh, G., Agarwal, S., Sastry, G., Askell, A., Mishkin, P., Clark, J., et al.: Learning transferable visual models from natural language supervision. In: International Conference on Machine Learning, 8748–8763. PMLR (2021)

[35] Ribeiro, M.T., Singh, S., Guestrin, C.: "Why should I trust you?" Explaining the predictions of any classifier. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 1135–1144 (2016)

[36] Sagadeeva, S., Boehm, M.: SliceLine: Fast, linear-algebra-based slice finding for ML model debugging. In: Proceedings of the 2021 International Conference on Management of Data, 2290–2299 (2021)

[37] Siam, M., Gamal, M., Abdel-Razek, M., Yogamani, S., Jagersand, M., Zhang, H.: A comparative study of real-time semantic segmentation for autonomous driving. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, 587–597 (2018)

[38] Syed Sha, Q., Grau, O., Hagn, K.: DNN analysis through synthetic data variation. In: Computer Science in Cars Symposium, 1–10 (2020)

[39] Ustun, B., Spangher, A., Liu, Y.: Actionable recourse in linear classification. In: Proceedings of the Conference on Fairness, Accountability, and Transparency, 10–19 (2019)

[40] Verma, S., Dickerson, J., Hines, K.: Counterfactual explanations for machine learning: A review. arXiv preprint arXiv:2010.10596 (2020)

[41] Wachter, S., Mittelstadt, B., Russell, C.: Counterfactual explanations without opening the black box: Automated decisions and the GDPR. Harv. JL & Tech. 31, 841 (2017)

[42] Wang, M., Deng, W.: Deep visual domain adaptation: A survey. Neurocomputing 312, 135–153 (2018)

[43] Wang, Z., Qinami, K., Karakozis, I.C., Genova, K., Nair, P., Hata, K., Russakovsky, O.: Towards fairness in visual recognition: Effective strategies for bias mitigation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 8919–8928 (2020)

Acknowledgements

This work has been funded by the German Federal Ministry for Economic Affairs and Climate Action as part of the safe.trAIn project.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gannamaneni, S.S., Mock, M. & Akila, M. Assessing systematic weaknesses of DNNs using counterfactuals. AI Ethics 4, 27–35 (2024). https://doi.org/10.1007/s43681-023-00407-0

DOI: https://doi.org/10.1007/s43681-023-00407-0