Abstract

This paper presents an initial exploration of the concept of AI system recall, primarily understood as a last resort when AI systems violate ethical norms, societal expectations, or legal obligations. The discussion is spurred by recent incidents involving notable AI systems, demonstrating that AI recalls can be a very real necessity. This study examines the concept of product recall as traditionally understood in industry and explores its potential application to AI systems. Our analysis centers on two prominent categories of recall drivers in the AI domain: ethical-social and legal considerations. In terms of ethical-social drivers, we apply the innovative notion of a “moral Operational Design Domain”, suggesting that AI systems should be recalled when they violate ethical principles and societal expectations. In addition, we explore the recall of AI systems from a legal perspective, where the recently proposed AI Act provides regulatory measures for recalling AI systems that pose risks to health, safety, and fundamental rights. The paper also underscores the need for further research, especially on defining precise ethical and societal triggers for AI recalls, creating an efficient recall management framework for organizations, and reassessing the fit of traditional product recall models for AI systems within the AI Act's regulatory context. By probing these complex intersections between AI, ethics, and regulation, this work aims to contribute to the development of robust and responsible AI systems while maintaining readiness for failure scenarios.

1 Introduction

Over the past few years, the ethical discourse surrounding artificial intelligence (AI) has predominantly centered on the principles and values that should guide the design, development, and use of AI systems [1]. This approach seeks to mitigate the risks inherent in AI technologies, while encouraging their responsible use across various sectors. By placing ethical principles at the forefront of AI design, development, and use, stakeholders aim to ensure that these powerful tools not only provide innovative solutions but also adhere to and uphold the values and expectations of individuals and societies. This focus on ethics serves as a proactive measure to prevent unintended negative consequences, fostering user and public trust, and promoting the adoption of AI systems.

However, despite the best intentions and rigorous efforts to implement AI principles and align AI systems with ethical values, societal expectations, and legal requirements, failures can still occur. In certain instances, these failures might be severe enough to warrant the recall of the AI systems in question. Three recent cases suggest that such situations are becoming increasingly likely and widespread. Yet the reasons for conducting such recalls remain largely unexplored. These cases present an opportunity to leverage insights from the field of AI ethics to navigate these challenges.

On November 15, 2022, Meta launched Galactica, an advanced language model designed to aid scientists [2]. Galactica was trained on 48 million examples of scientific content and promoted as a tool to assist researchers and students with various tasks. However, instead of providing the anticipated support, Galactica generated biased and inaccurate information. The model’s inability to discern fact from fiction resulted in the production of misleading and erroneous content. Users discovered that Galactica created false papers and spurious wiki articles on bizarre topics, such as bears in space. Although these examples are easily identifiable as fictional, it becomes more challenging when users are less familiar with the subject matter. Numerous scientists expressed concern about the model’s potential hazards, with some suggesting that its output should be treated with skepticism, akin to unreliable secondary sources. Consequently, due to intense criticism, the model was withdrawn after a mere 3 days [3].

An additional case study involves Midjourney, a frontrunner in AI-powered text-to-image generation. Initially, Midjourney offered a free trial service, enabling users to generate images from text descriptions. However, unexpected misuse of the technology by users soon began to surface, leading to a series of troubling events that necessitated a reassessment of the company’s policies. The situation escalated rapidly when a sequence of AI-generated deepfake images captured public attention [4]. These strikingly realistic images, enabled by Midjourney’s sophisticated v5 update, were not innocuous imaginative creations: they depicted public figures in peculiar, frequently deceptive contexts. While these depictions amused some, they also highlighted the potential for misuse of Midjourney’s AI capabilities. The AI art service became a platform for fabricating and spreading deepfakes involving public figures and for misrepresenting historical events, stirring public unease. The growth of this trend, together with a surge in demand and a rise in trial misuse, led Midjourney to intervene. The company suspended free trials, intending to reintroduce them once it had refined its system to mitigate misuse of its technology. Subsequently, Midjourney banned certain words in its image prompt system. For example, when asked to generate an image of a US politician being arrested in New York, Midjourney rejected the request, citing the word “arrested” as one of the banned terms. The company further warned that attempts to bypass these limitations could result in revoked access to the service [5].

The temporary suspension of OpenAI’s ChatGPT in Italy exemplifies a different scenario. Unlike the previous instances, in which Meta and Midjourney initiated recalls voluntarily, the interruption of ChatGPT was triggered by a public authority, specifically the Garante per la protezione dei dati personali (Italian Data Protection Authority). The decision stemmed from concerns over data protection and the absence of an age verification mechanism on the platform, which could potentially expose minors to inappropriate content [6]. The decision to block ChatGPT access in Italy came after a data breach involving user data was reported. Specifically, the Garante emphasized the lack of information provided to users regarding the data processed by OpenAI and the large-scale processing of personal data to train generative systems like ChatGPT. In response, OpenAI blocked access to ChatGPT in Italy and expressed its willingness to cooperate with the Garante to comply with the temporary order. The Garante required OpenAI to implement safeguards, such as providing a privacy policy, allowing users to exercise their data protection rights, and disclosing the company’s legal basis for processing personal data. After OpenAI complied with the Garante’s requests, ChatGPT became available again in Italy [7].

These three cases highlight a broad spectrum of forms of AI system recall, each motivated by distinct circumstances and leading to different outcomes. Despite these differences, however, the cases emphasize the paramount role of ethical, societal, and regulatory concerns in compelling organizations to initiate a recall. They also underscore the pressing need for more rigorous discourse on this understudied topic within the academic sphere, particularly in the fields of AI ethics, governance, and regulation.

This paper aims to fill this scholarly gap as follows. The next section examines the issue at hand, collating crucial insights from the existing literature on product and AI system recalls. The third section addresses the policy ramifications of our research, focusing on AI system recall within the scope of the AI Act. The fourth section explores the circumstances in which a recall should be carried out due to ethical and societal concerns. In the conclusion, we summarize the key findings of the paper and suggest possible paths for future research.

2 Overview of “product recall”

Product recalls are an essential mechanism for protecting consumers. A product recall represents a methodical, premeditated course of action instigated by a manufacturer or a regulatory authority with the primary aim of withdrawing or amending a product circulating in the marketplace. This action is generally precipitated by the detection of defects, non-compliance with established standards, the identification of potential risks that could compromise consumer safety or public health, and other causes. Despite regular quality control and verification practices implemented by companies, defective or unsafe products continue to be a prevalent issue in the market. This problem is not exclusive to any specific industrial sector or jurisdiction; cases have been recorded across various sectors, including the food industry [8], automotive [9], toys [10], and pharmaceutical products [11], among others. Similarly, recalls are a common occurrence in countries across different continents and levels of economic or industrial development [12].

The impetus for initiating a recall can either be voluntary, spurred by the manufacturer’s internal discovery of potential issues, or mandatory, enforced by a regulatory body as a measure of consumer protection. Voluntary and mandatory recalls are distinguished by several factors including the timing, transparency, control over the process, and the sources of information made available to the public. Voluntary recalls, often executed in the early stages of identifying a potential issue, provide companies with the ability to control the scope and process of the recall. However, they are often accompanied by limited or potentially biased information, resulting from the firm’s self-disclosure. On the other hand, mandatory recalls, typically instituted by a government body, provide more detailed and potentially unbiased information about the product’s safety issues, lending credibility to the process despite the negative implications for the company’s reputation. The nature of the recall can impact public perception, investor confidence, and ultimately, the financial outcomes for the firm [13].

Product recalls can have a significant impact on firms, regulators, and consumers [14]. The impact of a recall can vary depending on a number of factors, including the size of the recall, the severity of the defect, and the way in which the recall is managed. Furthermore, a product recall can lead to two outcomes: it may entail a temporary withdrawal of the product to rectify the underlying issues that instigated the recall, or it may lead to a permanent withdrawal of the product from the market. In the following discussion regarding recalls, we consider both potential outcomes.

For firms, product recalls can lead to financial losses, reputational damage, and loss of customers. Financial losses are a frequent consequence of product recalls, as firms must shoulder the cost of the recall process, from consumer notification to product collection and disposal, and even compensation for affected customers. In certain instances, the firm may also be subject to fines or penalties. Moreover, a firm’s reputation can suffer significantly following a product recall, eroding consumer trust and reducing future sales and profits. In the aftermath of a recall, consumers, particularly those directly affected by a faulty product, may opt for alternative brands, diminishing the firm’s market share [14].

Product recalls also have a significant impact on regulators. Increased regulatory scrutiny of the recalling firm is one of the most common consequences, potentially involving thorough inspections, record reviews, and employee interviews. Enforcement actions may also be taken against the recalling firm, including fines, injunctions, or other punitive measures. Finally, regulators may change regulations in response to product recalls. This may involve updating existing regulations, creating new ones, or changing the way in which regulations are enforced [14].

From a consumer perspective, product recalls can be associated with injuries, property damage, financial losses, and loss of trust in the recalling firm. Injuries are a common harm linked to recalled products: use of a recalled product can result in medical expenses, lost wages, and emotional distress for consumers. Property damage is another typical outcome, leading to repair or replacement costs, as well as inconvenience and disruption. Financial losses can also arise, either through repair or replacement costs or through the inability to use the product. Finally, loss of trust is a significant consequence, with consumers potentially avoiding future purchases from the recalling firm [14].

The recall process encompasses several stages, usually including problem identification, investigation of the defect, the decision to recall, notification of pertinent authorities and consumers, implementation of the recall strategy, and a post-recall evaluation to assess effectiveness and learning opportunities [15]. Each of these steps is integral to the overall recall strategy. Given the significant impacts and potential consequences for various stakeholders, it is important that firms have processes in place to effectively manage product recalls [16]. Robust product recall processes are crucial not only to protect the firm’s reputation and minimize potential legal implications but, most importantly, to ensure the safety and protection of consumers from potentially harmful products.
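The stages listed above form an ordered sequence, which the following minimal Python sketch models as a simple state tracker. The class `RecallCase` and the stage names are hypothetical illustrations derived from the list in the text, not part of any standard or of the cited guidance; a real recall management system would also record evidence, decisions, and notifications at each step.

```python
from enum import Enum
from typing import List


class RecallStage(Enum):
    """Typical recall stages, in the order listed in the text."""
    PROBLEM_IDENTIFICATION = 1
    DEFECT_INVESTIGATION = 2
    RECALL_DECISION = 3
    NOTIFICATION = 4
    IMPLEMENTATION = 5
    POST_RECALL_EVALUATION = 6


class RecallCase:
    """Hypothetical tracker that lets a recall move forward one stage at a time."""

    def __init__(self, product: str) -> None:
        self.product = product
        self.stage = RecallStage.PROBLEM_IDENTIFICATION
        self.history: List[RecallStage] = [self.stage]

    def advance(self) -> RecallStage:
        """Move to the next stage; skipping stages is not allowed."""
        if self.stage is RecallStage.POST_RECALL_EVALUATION:
            raise ValueError("recall process already completed")
        self.stage = RecallStage(self.stage.value + 1)
        self.history.append(self.stage)
        return self.stage


case = RecallCase("example-model")
while case.stage is not RecallStage.POST_RECALL_EVALUATION:
    case.advance()
print([s.name for s in case.history])
```

Enforcing strict ordering reflects the idea that each step builds on the previous one, for example that notification follows, rather than precedes, the decision to recall.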

2.1 Recall of AI systems

Product recall is traditionally associated with tangible goods that one can see, touch, and physically interact with. Examples abound on both the OECD Global portal on product recalls [17] and the EU Safety Gate’s alert system [18], which list recalled products in categories such as toys, accessories, cosmetics, construction products, furniture, etc. Hence, applying this concept to intangible products like AI systems and general software is not a straightforward task. However, with careful adaptations, we can extend this idea to AI systems. This section will first elaborate on the unique aspects of the recall of AI systems in order to conceptually clarify this term. This foundation will then be used to develop a normative approach to the issue in subsequent sections.

Contrary to product recalls in other industries, the recall of an AI system does not necessitate the physical collection, replacement, or repair of the systems in question. This is due to the unique way these systems are distributed and delivered to users. AI systems are primarily distributed through cloud-based services, known as AI as a Service (AIaaS) [19], and via Application Programming Interfaces (APIs) [20]. These digital distribution methods allow for remote updates, modifications, and fixes, thus obviating the need for physical retrieval or substitution. Even in circumstances where failures are significant enough to necessitate a recall or withdrawal of the AI system, this can be efficiently accomplished by restricting access to the respective AIaaS or APIs or by disabling the AI system altogether, as exemplified by the case studies presented in the introduction. While this method of recall distinctly diverges from conventional product recall procedures, it serves the same purpose: to prevent users from using the faulty AI system and thereby prevent the manifestation of any potentially deleterious effects.
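As a minimal sketch of recall by access restriction, assuming a model served behind an API gateway that the provider controls, the following Python code refuses requests to a recalled model while leaving other models untouched. `ModelGateway`, its methods, the model names, and the choice of HTTP status 503 are hypothetical illustrations, not the mechanism used by any of the providers discussed.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass
class ModelGateway:
    """Hypothetical gateway in front of hosted models (AIaaS / API access).

    Recalling a model means refusing requests at the gateway: nothing is
    physically collected from users.
    """
    recalled: Dict[str, str] = field(default_factory=dict)  # model id -> reason

    def recall(self, model_id: str, reason: str) -> None:
        self.recalled[model_id] = reason

    def reinstate(self, model_id: str) -> None:
        # Ends a temporary recall once the provider has corrected the system.
        self.recalled.pop(model_id, None)

    def handle(self, model_id: str, prompt: str) -> Tuple[int, str]:
        if model_id in self.recalled:
            # 503 Service Unavailable: the model exists but is not being served.
            return 503, f"model unavailable: {self.recalled[model_id]}"
        # Placeholder for actual model inference.
        return 200, f"[{model_id}] response to: {prompt}"


gateway = ModelGateway()
gateway.recall("science-lm", "withdrawn pending review")
print(gateway.handle("science-lm", "Summarize this paper"))
print(gateway.handle("image-gen", "A cat"))
```

Because `reinstate` simply lifts the block, the same mechanism covers both a temporary recall (fix, then re-release) and a permanent one (never reinstated).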

Even when alternative solutions are deployed for the distribution and use of AI systems, such as edge AI [21], the need for physical collection to implement corrections is eliminated. Updates and patches can be distributed over the internet, mirroring the distribution methods of traditional software updates. This lack of physical collection does not suggest that intangible products like software and AI systems are immune to recalls. On the contrary, software-related issues are the primary cause of product recalls in the U.S. medical devices sector according to Sedgwick’s 2022 Product Recall Index [22]. Furthermore, an exploration of the OECD Global portal on product recalls [17] reveals some recalls precipitated by the failure of software embedded in a variety of devices, including cars, electric bikes, watches, and televisions. In the absence of internet connectivity, these devices require physical recalls for essential software updates or patches, thus blurring the line between recalls requiring physical collection of a product and the more nebulous modalities applicable to software and AI systems.

As AI components become increasingly integrated into these devices, one can expect them to become an additional reason for recall. This trend is already visible in the automotive sector, where the general growth in product recalls in recent years is attributed to the expanding integration of AI-based Advanced Driver Assistance Systems (ADAS) and Autonomous Vehicles (AV) [23]. An annual growth of 13.6% in Europe and 10.2% in the U.S. is projected for recalls due to issues associated with software, sensors, or electronic control units, which are vital components of AI-powered ADAS/AV-enabled vehicles.
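To give a sense of scale, and assuming, purely for illustration, that these annual rates hold constant and compound over a five-year period, the number of such recalls would grow by the following factors:

```latex
(1 + 0.136)^{5} \approx 1.89 \quad \text{(Europe)}, \qquad (1 + 0.102)^{5} \approx 1.63 \quad \text{(U.S.)}
```

That is, under this constant-growth assumption, roughly 89% and 63% more such recalls, respectively, after five years.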

Finally, AI systems also differ from traditional products due to the unique types of risks they present, which can result in different grounds for potential recalls. For tangible products, risks typically originate from physical, mechanical, and chemical characteristics, often leading to recalls driven primarily by health and safety concerns. In contrast, AI systems can introduce not only these conventional risks but also additional, unique ones. As illustrated in the introduction, certain AI systems were recalled because they raised ethical issues around scientific integrity (as in the case of Galactica), concerns regarding the dissemination of fake news (Midjourney), and infringements of fundamental rights like privacy (ChatGPT). The rise of new recall triggers makes the recall of AI systems a more complex and intricate topic, warranting an ethical approach toward the issue. While the subject of product recalls has traditionally been confined to industry practices and research in economics and management, the discussion surrounding AI system recalls needs to be broader and more nuanced.

Even with these differences in recall methods, triggers, and risks associated with AI systems, we can still craft a fitting definition. According to the proposed definition, recalling an AI system refers to “any action aimed at preventing temporarily or permanently the use of the faulty AI system and thereby avoiding the manifestation of any potentially harmful effects”. This functional definition helps us to employ the term “recall” in the context of AI systems, while maintaining awareness of the contrasts with recalls of traditional tangible products.

3 The recall of AI systems in the context of the AI Act

The proposed definition closely aligns with that set forth in the European proposal for a regulation on artificial intelligence (AI Act) [24]. The Act defines recall of an AI system as “any measure aimed at achieving the return to the provider of an AI system made available to users”. Despite subtle differences, the AI Act, like our proposed definition, adopts a functional perspective, concentrating on the recall’s purpose rather than its methods or triggers. In addition, the AI Act presents a definition for “withdrawal of an AI system”, understood as “any measure aimed at preventing the distribution, display and offer of an AI system”. Both of these definitions are inspired by the General Product Safety Regulation [25], where recall is defined as “any measure aimed at achieving the return of a product that has already been made available to the consumer”, and withdrawal as “any measure aimed at preventing a product in the supply chain from being made available on the market”. Our proposed definition does not distinguish between these two cases, but instead encompasses both scenarios.

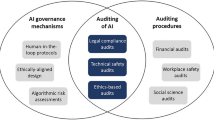

The AI Act, likely to be enacted in 2024, establishes a comprehensive framework for the marketing and use of AI systems across Europe. The principal objective of this proposed regulation is to safeguard the health, safety, and fundamental rights of individuals. It adopts a risk-based approach, classifying AI systems into four distinct risk categories: unacceptable risk, high risk, limited risk, and minimal risk.

AI systems that present an unacceptable risk, such as those used for social scoring, are explicitly prohibited by the Regulation. High-risk AI systems, which include, among others, those used in education and vocational training, employment and worker management, access to self-employment, and law enforcement, are subject to several requirements. These include requirements related to risk management, data quality and governance, technical documentation, record keeping, transparency and the provision of information to users, human oversight, accuracy, robustness, and cybersecurity. Conversely, limited-risk systems are subject only to transparency requirements, while minimal-risk systems have no imposed obligations.
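The tiered structure can be summarized as a lookup from risk category to obligations. The Python sketch below is a deliberately simplified reading of the categories and requirements described above; the names `RiskTier`, `OBLIGATIONS`, and `may_be_placed_on_market` are hypothetical, and the list of requirements is a summary rather than the legal text.

```python
from enum import Enum
from typing import Dict, Tuple


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Simplified summary of the obligations described in the text, not the legal text.
OBLIGATIONS: Dict[RiskTier, Tuple[str, ...]] = {
    RiskTier.UNACCEPTABLE: ("prohibited",),
    RiskTier.HIGH: (
        "risk management",
        "data quality and governance",
        "technical documentation",
        "record keeping",
        "transparency and information to users",
        "human oversight",
        "accuracy, robustness and cybersecurity",
    ),
    RiskTier.LIMITED: ("transparency",),
    RiskTier.MINIMAL: (),
}


def may_be_placed_on_market(tier: RiskTier) -> bool:
    """Only unacceptable-risk systems are banned outright; the rest carry obligations."""
    return tier is not RiskTier.UNACCEPTABLE


for tier in RiskTier:
    print(tier.value, may_be_placed_on_market(tier), len(OBLIGATIONS[tier]))
```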

Adopting a common regulatory approach in European safety legislation, the AI Act is operationalized through a combination of conformity assessment, accreditation, and market surveillance [26]. While the technical details of these legal mechanisms fall outside the scope of this paper, emphasis must be placed on the pivotal role of market surveillance authorities (MSAs). Within the framework of the AI Act, these authorities are responsible for overseeing and monitoring the market, investigating compliance with obligations and requirements for all AI systems in circulation. Thus, they possess the authority to recall AI systems under circumstances outlined in the Regulation.

With regard to recalls, the AI Act builds on the general European regulatory framework for product recalls, which principally includes the General Product Safety Regulation [25] and Regulation (EU) 2019/1020 on market surveillance and product conformity [27]. Within this regulatory framework, recalls are strategies implemented to retrieve hazardous products already in the possession of consumers or end-users. As previously mentioned, product recalls are slightly different from withdrawals. Recalls, in the legal context, refer to actions taken to remove products already available to consumers. This scenario is exemplified by the cases of Galactica, Midjourney, and ChatGPT: these systems were accessible to users, and steps were then taken to retract their availability. Conversely, withdrawals refer to the proactive removal of a product from the supply chain before it reaches the final consumer. This might be the case for an AI system embedded in a product that is not yet on the market. It is essential to note that the terms “recall” and “withdrawal” can have varying definitions in jurisdictions outside of Europe. Furthermore, practices surrounding recalls and withdrawals may also differ between industries.

Finally, to ensure product safety, this regulatory framework provides for other corrective actions, such as labeling a product that presents potential risks under certain conditions with suitable warnings, imposing conditions on the marketing of a product to ensure its safety, prohibiting the marketing of a product entirely, or conducting technical repairs or modifications on the product. Recalls, withdrawals, and other corrective measures are to be conducted according to the regulatory framework indicated above and the product recall guide provided by the European Commission [28].

Under the EU AI Act, there are several situations in which a recall might be initiated. According to Article 21, providers of high-risk AI systems are obligated to take corrective action, up to and including recall, if they have reason to believe that their AI system, once placed on the market or put into service, does not conform to the Act’s requirements. They must take immediate corrective actions to bring the system into conformity. If that is not feasible, they must withdraw it from the market or recall it. Distributors of AI systems have similar obligations under Article 27(4).

If providers or distributors of an AI system do not take appropriate corrective action within a prescribed period, the MSAs step in. The MSAs have the power to take provisional measures to prohibit or restrict the AI system’s availability on the national market or to initiate a recall. In addition, MSAs have the power to recall AI systems in cases of non-compliance. As per Article 65, if an MSA finds during an evaluation that an AI system does not comply with the Regulation, it must promptly require the relevant operator to take corrective actions. These may involve bringing the AI system into compliance, withdrawing it from the market, or initiating a recall within a reasonable period. Furthermore, under Article 67, even if the AI system is found to comply with the Regulation but still presents a risk to health, safety, or fundamental rights, or to other aspects of public interest protection, the MSAs can demand that the operator take appropriate measures.
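The pathways described in the preceding two paragraphs can be condensed into a small decision function. This Python sketch is a simplified, hypothetical reading of Articles 21, 65, and 67 of the proposal as summarized here; the boolean inputs (`compliant`, `presents_risk`, `fixable`, `operator_acts_in_time`) are illustrative simplifications, and the function is not a substitute for legal analysis.

```python
from enum import Enum


class Outcome(Enum):
    NO_ACTION = "no action required"
    BRING_INTO_CONFORMITY = "corrective action: bring into conformity"
    WITHDRAW_OR_RECALL = "withdraw from the market or recall"
    MSA_MEASURES = "MSA measures: restrict, prohibit or recall"
    MSA_APPROPRIATE_MEASURES = "MSA demands appropriate measures (Art. 67)"


def recall_pathway(compliant: bool, presents_risk: bool,
                   fixable: bool, operator_acts_in_time: bool) -> Outcome:
    """Simplified, hypothetical reading of Articles 21, 65 and 67; not legal advice."""
    if compliant:
        # Art. 67: a compliant system may still face measures if it presents a risk.
        return Outcome.MSA_APPROPRIATE_MEASURES if presents_risk else Outcome.NO_ACTION
    if not operator_acts_in_time:
        # The MSA steps in when the operator fails to act within the prescribed period.
        return Outcome.MSA_MEASURES
    # Art. 21 / Art. 65: conformity first; withdrawal or recall if that is not feasible.
    return Outcome.BRING_INTO_CONFORMITY if fixable else Outcome.WITHDRAW_OR_RECALL


print(recall_pathway(compliant=True, presents_risk=True, fixable=True,
                     operator_acts_in_time=True).value)
print(recall_pathway(compliant=False, presents_risk=True, fixable=False,
                     operator_acts_in_time=True).value)
```

The first branch captures the point made in the next paragraph: compliance alone does not exclude a mandated measure.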

In the EU AI Act, the recall provision serves as a safeguarding mechanism to ensure the protection of health, safety, and fundamental rights in instances of non-compliance or persistent risk associated with AI systems. This aspect is significant because MSAs can mandate the recall of AI systems even when these systems meet existing requirements. In other words, the Act recognizes that compliance with the law does not by itself reduce the associated risk to an acceptable level. This underscores the need for AI system providers to continuously monitor the potential impacts and risks of their AI systems and to be ready to take corrective measures, including recall or withdrawal, when necessary.

While the rationale for the recall of an AI system is straightforward, uncertainties continue to surround its practical implementation. The product recall regulatory framework underlying the AI Act primarily pertains to tangible, physical products, which presents challenges when applied to the distinct, intangible nature of many AI systems, as discussed in Sect. 2.1. For example, when AI systems are distributed via cloud services, providers could in principle restrict access within Europe, but users could readily circumvent such restrictions by using a Virtual Private Network (VPN). These complexities underline the need for additional considerations and potential regulatory measures to effectively enforce recall actions within the distinct distribution paradigm of AI systems and other intangible products and services.
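The VPN problem follows from how geographic restriction is typically implemented: the service infers the user's location from the apparent source IP address. The minimal Python sketch below assumes a hypothetical IP-to-country table (real services would typically use a GeoIP database) and uses only the IPv4 documentation ranges reserved by RFC 5737, so no real addresses are implied.

```python
import ipaddress
from typing import Optional

# Hypothetical IP-to-country table built from RFC 5737 documentation ranges.
GEO_TABLE = {
    ipaddress.ip_network("203.0.113.0/24"): "IT",
    ipaddress.ip_network("198.51.100.0/24"): "US",
}
BLOCKED_COUNTRIES = {"IT"}  # e.g. a national order restricting the service


def country_of(ip: str) -> Optional[str]:
    """Country inferred from the *apparent* source address, or None if unknown."""
    addr = ipaddress.ip_address(ip)
    for network, country in GEO_TABLE.items():
        if addr in network:
            return country
    return None


def is_allowed(client_ip: str) -> bool:
    # The gateway sees only the connecting address, not where the user really is.
    # Addresses with no known country are not blocked.
    return country_of(client_ip) not in BLOCKED_COUNTRIES


print(is_allowed("203.0.113.7"))    # direct connection from Italy
print(is_allowed("198.51.100.20"))  # same user via a VPN exit node in the US
```

A user in Italy connecting directly from `203.0.113.7` is refused, while the same user routed through a VPN exit node at `198.51.100.20` is admitted, because the service never observes the user's real location.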

4 Ethical and societal concerns warranting AI systems recall

The AI Act empowers MSAs to mandate the recall of AI systems. As noted in Sect. 2, however, recalls are not always driven by public authority directives (mandatory recall). Companies may also choose to initiate these recalls independently (voluntary recall), typically due to concerns regarding product safety and consumer protection [29].

The voluntary recalls of Galactica and Midjourney introduce a novel element in contrast to this traditional scenario: safety concerns did not act as their catalyst. Although the official reasons for these recalls have remained elusive, it is plausible that public opinion concerns, intensified by social media, were significant contributors. In the Galactica case, concerns were raised about its reliability as a scientific research support tool and, more generally, about the potential negative impact of generative AI on the rules governing scientific endeavors. For Midjourney, concerns centered on the propagation of hyper-realistic but misleading images of sensitive current events, contributing to the spread of fake news and misinformation via generative AI.

These concerns about scientific integrity and fake news dissemination represent “ethical and social concerns”. This expression mirrors a growing unease about how disruptive technologies like AI are impacting ethical values and social relations. This is evidenced by the growing academic and public conversation around AI and ethics, and emerging regulations like the AI Act. Ethical and societal concerns, therefore, do not denote two separate categories but encapsulate a spectrum of AI implications on matters of importance, demonstrated by research on AI in relation to transparency, explainability, responsibility, human autonomy, meaningful human control and more, as well as the impact of AI on work, education, the judicial system, democratic institutions and more. Accordingly, the recall of AI systems due to ethical and societal concerns refers to the process of recalling AI systems that exhibit behavior or produce outcomes that are deemed unethical, harmful, or inconsistent with social norms and expectations.

Thus, it is justifiable to conclude that ethical and societal concerns sparked the voluntary recalls of Galactica and Midjourney. However, to extract valuable insights from these cases, we must discern when and why ethical and societal concerns may justify an AI system recall. The remainder of this section focuses on this question. Given the nature of this analysis, we will employ a normative approach, exploring the circumstances under which an AI system should be recalled due to ethical and societal concerns.

For any normative analysis, it is crucial to adopt a specific viewpoint. Different stakeholders may hold diverse viewpoints, resulting in varied conditions that could necessitate a product recall. In Sect. 2, we identified three primary stakeholders involved in recall scenarios: firms, regulators, and consumers. For the purposes of this section, we approach the subject from the consumers’ viewpoint, expanding this category to encompass individuals who, although not technically consumers or users, may nevertheless be adversely affected by a harmful AI system, thus making them legitimate stakeholders. For instance, consider an individual denied access to social benefits due to decisions made or suggested by an AI system. Should this denial be unfair, the individual may demand the withdrawal or recall of the faulty AI system, irrespective of their status as a consumer or user. By analogy to the concept of “data subjects” [30], this category of stakeholders can be termed “AI subjects”, a group that also encompasses consumers and users and, more generally, the public.

The choice to adopt the perspective of the AI subjects is substantiated by the fact that the highlighted instances of voluntary recall largely occurred due to ethical and societal concerns. Although Meta might have been motivated by the apprehension of reputational damage, it is undeniable that this decision was catalyzed by ethical and societal considerations, such as threats to scientific integrity, articulated by the public through media and social platforms. In a similar vein, while Midjourney might have acted to pre-empt potential repercussions from public authorities, their decision was once again spurred by public outcry over worries related to the propagation of misinformation and fake news. Consequently, the firms’ perspective appears less pertinent since their decision to recall AI systems is ultimately driven by the pressure exerted by stakeholders broadly identified as “AI subjects”.

4.1 A moral ODD for AI systems recall

As pointed out in the introduction, one of the core debates in AI ethics revolves around AI principles that encapsulate ethical values and social expectations. However, the practical implementation of these principles often faces challenges due to the inherent complexity of operationalizing vague concepts such as ethical principles [31]. Despite this challenge, these principles can serve as critical guidelines in the context of AI system recall: any transgression of these principles should trigger the recall of the AI system in question.

For instance, designing AI systems that embed the value of fairness presents a significant implementation challenge. Nevertheless, assessing the fairness of an AI system while it is in operation appears considerably more attainable. Similarly, while it is challenging to embed the value of human autonomy within an AI system, it is more straightforward to ascertain whether an AI system infringes upon this principle in practice. In such cases, recalling the AI system is advisable to curtail the further propagation of its negative impacts and to give providers an opportunity to rectify the system before re-releasing it.

When used in this way, AI principles describe the perimeter of a moral operational design domain (moral ODD) [32]. This concept builds upon the notion of an ODD (Operational Design Domain), which the autonomous driving industry uses to define the range of conditions in which an autonomous vehicle is intended to operate. For instance, an autonomous vehicle may have a designated ODD that specifies particular weather conditions, e.g., rain intensity of up to 8 mm per hour, beyond which the system is deemed unsafe to operate. Similarly, the moral ODD refers to the ethical and social conditions that set limits on system operation, beyond which the system should not operate. Ethical principles can be employed to establish the boundaries of the moral ODD, thus determining the circumstances under which an AI system should be recalled. Employed in this way, ethical principles become practically useful despite the challenges associated with their implementation.
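To make the analogy concrete, the following Python sketch treats both a technical ODD and a moral ODD as sets of named boundaries over observed conditions. A system is flagged when any boundary is crossed. The 8 mm/h rain limit comes from the example above. The class `Boundary`, the functions `breached` and `recall_warranted`, and audit metrics such as `fabricated_citations` are hypothetical illustrations. They are not part of any existing framework or of the moral ODD proposal in [32].

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Dict, List

# Observed operating conditions, e.g. sensor readings or audit findings (hypothetical).
Conditions = Dict[str, float]


@dataclass(frozen=True)
class Boundary:
    """One limit of a design domain: a name plus a predicate that must hold."""
    name: str
    holds: Callable[[Conditions], bool]


def breached(domain: List[Boundary], observed: Conditions) -> List[str]:
    """Return the names of the boundaries that the observed conditions cross."""
    return [b.name for b in domain if not b.holds(observed)]


# Technical ODD from the text: rain intensity of up to 8 mm per hour.
technical_odd = [
    Boundary("rain intensity <= 8 mm/h", lambda c: c["rain_mm_per_h"] <= 8.0),
]

# Hypothetical moral ODD for a scientific-writing assistant, loosely based on
# the norms discussed for Galactica; the metrics are illustrative only.
moral_odd = [
    Boundary("cited references exist", lambda c: c["fabricated_citations"] == 0),
    Boundary("claims are truth-oriented", lambda c: c["known_false_claims"] == 0),
]


def recall_warranted(observed: Conditions) -> bool:
    """Flag the system for recall review if any moral-ODD boundary is crossed."""
    return bool(breached(moral_odd, observed))


if __name__ == "__main__":
    # Heavy rain lies outside the technical ODD.
    print(breached(technical_odd, {"rain_mm_per_h": 12.0}))
    # An audit that finds fabricated citations lies outside the moral ODD.
    audit = {"fabricated_citations": 3, "known_false_claims": 1}
    print(breached(moral_odd, audit))
    print(recall_warranted(audit))
```

The sketch deliberately gives both domains the same structure to mirror the analogy. The hard part, which no code settles, is choosing the norms and deciding how to measure them.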

To put the proposed approach into practice, we use the Galactica case as an example to outline a moral ODD for AI systems in scientific research. Such a moral ODD should identify, among all the things that such an AI system can do, those it should not do. If the system ventures into prohibited areas, beyond the boundaries of the moral ODD, it may warrant a recall.

Scientific knowledge and practices are grounded in a set of norms and values, termed the “ethos of science” by the sociologist of science Robert K. Merton [33]. This ethos comprises institutional and cognitive norms. Institutional norms guide scientists’ professional behavior, while cognitive norms establish theoretical and methodological standards for acquiring scientific knowledge.

In his work, Merton identified four institutional norms. First, the norm of universalism states that scientific claims should be evaluated according to impersonal criteria, regardless of the scientist’s personal attributes. This ensures that scientific knowledge is universally applicable and free from personal or social biases. Second, the norm of communism posits that scientific knowledge is a collective product that should be freely shared, so that scientific findings belong to everyone and are widely disseminated for the benefit of society. Third, the norm of disinterestedness requires scientists to act without personal interest or bias, prioritizing the advancement of knowledge over personal gain; this safeguards the integrity and objectivity of scientific research. Finally, the norm of organized skepticism encourages scientists to question and test all scientific claims, fostering the culture of critical thinking and rigorous testing essential for scientific progress.

Cognitive norms include theoretical assumptions, such as the truth value of scientific claims and explanations, and methodological rules such as testability, empirical support, simplicity, and reproducibility. These norms, widely discussed in the philosophy of science, ensure that the knowledge scientists acquire is indeed scientific. In essence, what makes scientific knowledge “scientific” is its adherence to the cognitive norms of the relevant field. For example, the scientific community does not recognize the theory of intelligent design (ID) as a scientific theory, because it fails to meet the cognitive norms of biology. The claim that a designer is responsible for the intricate complexity of life cannot be empirically tested. Consequently, this hypothesis lacks scientific value. As aptly noted, “because it does not generate testable hypotheses and cannot be subjected to empirical inquiry, ID is not science” [34].

These institutional and cognitive norms can be used to define a moral ODD for AI systems used in scientific research. Just as human scientists are expected to abide by these rules, so should AI systems. When scientists breach these norms, they face consequences from the scientific community, ranging from loss of credibility and disciplinary sanctions to complete expulsion from the community. Likewise, if AI systems violate these norms, it is reasonable to expect them to face comparable penalties, such as being recalled, withdrawn, or discontinued.

The Galactica case exemplifies this scenario. Galactica was recalled because it violated some of the institutional and cognitive norms of scientific practice and knowledge. As we argue in this paper, this breach constitutes an overstepping of the moral ODD boundaries and therefore justifies the recall of the AI system.

A significant issue identified with Galactica was the creation of false yet highly believable scientific references. Galactica could generate citations attributed to a relevant author in a specific field, with titles consistent with that author’s research interests, even though the cited papers did not exist. Which norms does Galactica violate in this case? A fake citation cannot be evaluated against pre-established impersonal criteria because it does not accurately represent the source of the information; it therefore violates the norm of universalism. Moreover, a fake citation prevents others from fully scrutinizing and verifying the information. It thus violates the norm of organized skepticism, which encourages scientists to question and test all scientific claims. In addition to these institutional norms, Galactica also breached cognitive norms. Most importantly, by generating false claims, it violated the principle that scientific claims should be truth-oriented. Compounding this, the false claims were made to appear plausible by mimicking the characteristics of scientific writing. By violating several of the norms that form the ethos of science, Galactica exceeded the boundaries of the moral ODD. We conclude that this violation is sufficient reason to warrant the recall of such an AI system.

This approach can be generalized to other contexts. For instance, medical practice is governed by norms that differ from those in science. By identifying these norms, we can establish a moral ODD for AI applications in healthcare. Once the moral ODD is defined, we can assess whether AI applications in healthcare operate within the moral ODD or cross its boundaries. If they cross these boundaries, it would be a sufficient reason to recall the AI system and cease its use until it complies with the moral ODD. This approach can be similarly applied to other fields where AI is used, such as education, law, public administration and more.

While this approach is conceptually simple, it does present some challenges. Identifying the norms that should define the boundaries of the moral ODD can be difficult for two main reasons. First, the morally relevant principles vary with context. For instance, in certain cultural settings, a breach of individual privacy constitutes an unacceptable infraction that necessitates the withdrawal of an AI system [35]. In other contexts, by contrast, an encroachment on group privacy [36], possibly without any violation of individual privacy, could warrant the recall of an AI system.

Second, the interpretation of a given principle may vary across societal and cultural environments. For example, the definition of privacy varies markedly with context, which makes it difficult to define universally [37]. This difficulty emerged when AI ethics principles were applied to geospatial technologies. There, interpreting universal principles in local contexts was identified as a key obstacle to implementing AI ethics [38].

Therefore, it is challenging both to define the principles that should frame the boundaries of the moral ODD and to understand the context-specific significance of a given principle. As a consequence, the boundaries of the moral ODD will inevitably be somewhat blurred and must be able to adapt dynamically to diverse contexts. This means that certain AI systems may be recalled in some circumstances and locations but not in others.

5 Conclusion

The recall of an AI system functions as a last resort to ensure that these systems do not breach ethical, societal, and legal boundaries. Despite concerted efforts to align AI systems with ethical norms, societal expectations, and legal mandates, some risk is inherent and harm may still occur. In such circumstances, it is imperative to recall these AI systems.

The decision to recall an AI system poses significant challenges to an organization because of its potential financial, reputational, and legal implications. Unsurprisingly, recall is often considered a last resort and is frequently prompted by a directive from market surveillance authorities (MSAs). However, recent incidents such as those involving Galactica, Midjourney, and ChatGPT illustrate that this remote possibility can indeed materialize. The rapid advancement of AI technology, coupled with a competitive “AI race” that can precipitate the premature release of AI-based products and services, amplifies this likelihood.

This paper serves as an initial contribution to understanding the complex issue of AI system recall. First, it offers a conceptual clarification of what constitutes a product recall and how this concept could be tailored and applied to AI systems and other intangible products. Second, it outlines the circumstances that may necessitate an AI system’s recall, which can broadly be divided into two categories.

On one hand, legal regulations could mandate a recall. The AI Act provides a set of measures that govern the recall of AI systems, with risks and harms to health, safety, and fundamental rights forming the rationale for AI system recall under the proposed European regulation. On the other hand, a normative analysis based on the concept of “moral ODD” suggests that an AI system should be recalled on ethical and societal grounds when it oversteps the boundaries of its moral ODD. We have applied this approach to the case of Galactica, demonstrating that its recall is justified as it breaches several norms of the ethos of science.

While it is always desirable to release robust, fair, and trustworthy AI systems, failures can and do occur. Acknowledging this reality represents a more pragmatic, humble approach to the mission of AI ethics. Having contingency plans in place to address these scenarios allows us to maintain our commitment to responsible AI even amidst these failures.

However, several issues remain unresolved and merit further investigation. Firstly, it is necessary to establish more precise trigger conditions that, from an ethical and societal standpoint, necessitate the recall of an AI system. This involves the selection of certain values and principles that, while not universal, can be instrumental under specific circumstances. Such values would constitute the boundaries of a contextual moral ODD.

Moreover, it is critical to formulate a clear framework for how organizations should manage a recall. Such a framework should cover aspects ranging from the risk assessment that leads to the recall decision to communication strategies with users and other stakeholders.

Finally, within the regulatory context of the AI Act, the appropriateness of traditional product recall frameworks for innovative products such as AI systems should be evaluated. This paper is a stepping stone in this direction, furthering the conversation on how best to manage the increasingly complex intersection of AI, ethics, and policy.

References

Floridi, L., Cowls, J.: A unified framework of five principles for AI in society. In: Machine Learning and the City, pp. 535–545. Wiley (2022). https://doi.org/10.1002/9781119815075.ch45

Taylor, R., et al.: Galactica: a large language model for science. arXiv preprint https://doi.org/10.48550/arXiv.2211.09085 (2022)

Why Meta’s latest large language model survived only three days online. MIT Technology Review. https://www.technologyreview.com/2022/11/18/1063487/meta-large-language-model-ai-only-survived-three-days-gpt-3-science/. Accessed 10 May 2023

Editor, S.: Midjourney halts free trials after fake AI images go viral. PCWorld. https://www.pcworld.com/article/1677533/midjourney-halts-free-trials-after-ai-photos-go-viral.html. Accessed 15 May 2023

AI generator Midjourney pauses service over deepfake “abuse”. https://www.zawya.com/en/world/americas/ai-generator-midjourney-pauses-service-over-deepfake-abuse-gnib2k24. Accessed 15 May 2023

Provvedimento del 30 marzo 2023 [9870832]. https://www.garanteprivacy.it:443/home/docweb/-/docweb-display/docweb/9870832. Accessed 10 May 2023

ChatGPT: OpenAI riapre la piattaforma in Italia garantendo più trasparenza e più diritti a utenti e non utenti europei. https://www.garanteprivacy.it:443/home/docweb/-/docweb-display/docweb/9881490. Accessed 10 May 2023

Potter, A., Murray, J., Lawson, B., Graham, S.: Trends in product recalls within the agri-food industry: empirical evidence from the USA, UK and the Republic of Ireland. Trends Food Sci. Technol. 28(2), 77–86 (2012). https://doi.org/10.1016/j.tifs.2012.06.017

Nassar, S., Kandil, T., Erkara, M., Ghadge, A.: Automotive recall risk: impact of buyer-supplier relationship on supply chain social sustainability. Int. J. Product. Perform. Manag. 69(3), 467–487 (2019). https://doi.org/10.1108/IJPPM-01-2019-0026

The effect of a toy industry product recall announcement on shareholder wealth. Int. J. Prod. Res. 54(18). https://www.tandfonline.com/doi/abs/10.1080/00207543.2015.1106608. Accessed 15 May 2023

Miglani, A., Saini, C., Musyuni, P., Aggarwal, G.: A review and analysis of product recall for pharmaceutical drug product. J. Gener. Med. 18(2), 72–81 (2022). https://doi.org/10.1177/17411343211033887

Cogollo-Flórez, J.M., Restrepo-Hincapié, M.: Una propuesta de clasificación taxonómica del problema de recogida de productos defectuosos. Rev. UIS ing. (2021). https://doi.org/10.18273/revuin.v20n3-2021007

Chang, S.-C., Chang, H.-Y.: Corporate motivations of product recall strategy: exploring the role of corporate social responsibility in stakeholder engagement. Corp. Soc. Responsib. Environ. Manag. 22(6), 393–407 (2015). https://doi.org/10.1002/csr.1354

Li, H., Bapuji, H., Talluri, S., Singh, P.J.: A cross-disciplinary review of product recall research: a stakeholder-stage framework. Transp. Res. Part E: Logist. Transp. Rev. 163, 102732 (2022). https://doi.org/10.1016/j.tre.2022.102732

Mukherjee, A., Carvalho, M., Zaccour, G.: Managing quality and pricing during a product recall: an analysis of pre-crisis, crisis and post-crisis regimes. Eur. J. Oper. Res. 307(1), 406–420 (2023). https://doi.org/10.1016/j.ejor.2022.08.012

Berman, B.: Managing the Product Recall Process. SSRN (2021). https://papers.ssrn.com/abstract=3832116. Accessed 15 May 2023

Global Recalls portal (OECD). https://globalrecalls.oecd.org/#/. Accessed 16 May 2023

Safety Gate: the EU rapid alert system for dangerous non-food products. https://ec.europa.eu/safety-gate-alerts/screen/search?resetSearch=true. Accessed 11 Jul 2023

Lins, S., Pandl, K.D., Teigeler, H., Thiebes, S., Bayer, C., Sunyaev, A.: Artificial intelligence as a service. Bus. Inf. Syst. Eng. 63(4), 441–456 (2021). https://doi.org/10.1007/s12599-021-00708-w

Liu, B.: Artificial Intelligence and Machine Learning Capabilities and Application Programming Interfaces at Amazon, Google, and Microsoft. Thesis, Massachusetts Institute of Technology (2022). https://dspace.mit.edu/handle/1721.1/146689. Accessed 16 May 2023

Singh, R., Gill, S.S.: Edge AI: a survey. Internet Things Cyber Phys. Syst. 3, 71–92 (2023). https://doi.org/10.1016/j.iotcps.2023.02.004

Sedgwick’s 2022 Product Recall Index. https://marketing.sedgwick.com/acton/media/4952/dwf-law-q3-2022. Accessed 16 May 2023

Murphy, F., Pütz, F., Mullins, M., Rohlfs, T., Wrana, D., Biermann, M.: The impact of autonomous vehicle technologies on product recall risk. Int. J. Prod. Res. 57(20), 6264–6277 (2019). https://doi.org/10.1080/00207543.2019.1566651

European Commission: Proposal for a regulation of the European Parliament and of the Council laying down harmonised rules on artificial intelligence (Artificial Intelligence Act) and amending certain union legislative acts (2021). https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=celex%3A52021PC0206

Regulation (EU) 2023/988 of the European Parliament and of the Council of 10 May 2023 on general product safety, amending Regulation (EU) No 1025/2012 of the European Parliament and of the Council and Directive (EU) 2020/1828 of the European Parliament and the Council, and repealing Directive 2001/95/EC of the European Parliament and of the Council and Council Directive 87/357/EEC (Text with EEA relevance), vol. 135 (2023). http://data.europa.eu/eli/reg/2023/988/oj/eng. Accessed 11 Jul 2023

Tartaro, A.: Regulating by standards: current progress and main challenges in the standardisation of Artificial Intelligence in support of the AI Act. Eur. J. Priv. Law Technol. 1 (2023). https://universitypress.unisob.na.it/ojs/index.php/ejplt/article/view/1792

Regulation (EU) 2019/1020 of the European Parliament and of the Council of 20 June 2019 on market surveillance and compliance of products and amending Directive 2004/42/EC and Regulations (EC) No 765/2008 and (EU) No 305/2011 (Text with EEA relevance), vol. 169 (2019). http://data.europa.eu/eli/reg/2019/1020/oj/eng. Accessed 11 Jul 2023

European Commission: Recall process from A to Z: Guidance for economic operators and market surveillance authorities (2021). https://ec.europa.eu/safety/consumers/consumers_safety_gate/effectiveRecalls/documents/EU_guide_on_the_Recall_process_from_A_to_Z_en.pdf

Bernstein, A.: Voluntary recalls. U. Chi. Legal F. 2013, 359 (2013)

Blume, P.: The data subject. Eur. Data Prot. Law Rev. 1, 258 (2015)

Tidjon, L.N., Khomh, F.: The Different Faces of AI Ethics Across the World: A Principle-Implementation Gap Analysis. arXiv:2206.03225 [cs] (2022). http://arxiv.org/abs/2206.03225. Accessed 14 Jun 2022

Cavalcante Siebert, L., et al.: Meaningful human control: actionable properties for AI system development. AI Ethics 3(1), 241–255 (2023). https://doi.org/10.1007/s43681-022-00167-3

Merton, R.K.: Science and technology in a democratic order. J. Legal Political Sociol. 1, 115–126 (1942)

An intelligently designed response. Nat. Methods. 4(12), Art. no. 12 (2007). https://doi.org/10.1038/nmeth1207-983

Floridi, L.: Open data, data protection, and group privacy. Philos. Technol. 27(1), 1–3 (2014). https://doi.org/10.1007/s13347-014-0157-8

Majeed, A., Khan, S., Hwang, S.O.: Group privacy: an underrated but worth studying research problem in the era of artificial intelligence and big data. Electronics 11(9), Art. no. 9 (2022). https://doi.org/10.3390/electronics11091449

Ess, C.: Ethical pluralism and global information ethics. Ethics Inf. Technol. 8(4), 215–226 (2006). https://doi.org/10.1007/s10676-006-9113-3

Micheli, M., et al.: AI ethics and data governance in the geospatial domain of Digital Earth. Big Data Soc. 9(2), 20539517221138770 (2022). https://doi.org/10.1177/20539517221138767

Funding

Open access funding provided by Università degli Studi di Sassari within the CRUI-CARE Agreement.

Ethics declarations

Conflict of interest

The author declares that there is no conflict of interest regarding the publication of this paper.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tartaro, A. When things go wrong: the recall of AI systems as a last resort for ethical and lawful AI. AI Ethics (2023). https://doi.org/10.1007/s43681-023-00327-z

DOI: https://doi.org/10.1007/s43681-023-00327-z