Abstract

In recent years, artificial intelligence (AI) systems have been widely used in different contexts and professions. However, as these systems have developed and grown more complex, they have turned into black boxes that are difficult to interpret and explain. Spurred by wide media coverage of negative incidents involving AI, many scholars and practitioners have therefore called for AI systems to be transparent and explainable. In this study, we examine transparency in AI-augmented settings, such as workplaces, and perform a novel analysis of the different jobs and tasks that can be augmented by AI. Using more than 1000 job descriptions and 20,000 tasks from the O*NET database, we analyze the level of transparency required for these tasks to be augmented by AI. Our findings indicate that transparency requirements differ depending on the augmentation score and perceived risk category of each task. Furthermore, they suggest that it is important to be pragmatic about transparency, and they support the growing view that full transparency is impractical.

1 Introduction

Modern artificial intelligence (AI) systems routinely outperform humans in a variety of cognitive tasks.Footnote 1 AI is commonly defined as a system's ability to think like a human by gathering information from its environment, processing it, and then adaptively applying what it has learned to achieve specific objectives [25]. In such tasks, AI systems can outperform humans in accuracy, speed, cost, and availability, and every year AI reaches or surpasses human parity in increasingly complex tasks. As the amount of available data, the available computing power, and the capacity of machine learningFootnote 2 models increase, the abilities of AI will continue to improve.

Since AI offers lower cost, higher availability, higher consistency, and often higher accuracy, it augments humans and has the potential to replace them in some tasks or jobs [3, 8]. Every year, more decisions are made using AI, including decisions that affect human lives and well-being. Even though AI systems create economic value, their decision-making processes are often opaque and uninterpretable by humans. As AI systems become more complex, they turn into black boxes, and it becomes challenging to explain to users the reasoning and logic behind the systems' recommendations and predictions [34]. This in turn limits users' ability to make informed decisions based on the systems' outputs, which may be arbitrary or biased. Over the past decade, scholars and practitioners have become increasingly interested in transparent and explainable AI, in which machine outputs that lead to decisions can be traced back, explained, and communicated to the stakeholders affected by the systems, such as end-users, developers, society, and regulators [1, 38]. The general public has also added pressure for transparent and explainable AI, especially after extreme examples of catastrophic decision-making failures by AI systems emerged, such as Amazon Alexa recommending that a child undertake a lethal challenge,Footnote 3 a Tesla vehicle crashing due to an autopilot failure,Footnote 4 and a Microsoft chatbot making sexist and racist remarks.Footnote 5

While some decisions are entirely automated with AI, such as deciding which emails are considered spam on an email client or what additional products to recommend for a user on an e-commerce website, AI systems are often employed as decision support systems rather than as decision-makers. For example, AI systems are now being used to screen resumes to shortlist candidates for interviews while the ultimate decision is still in the hands of a human using the system. Thus, examining transparency in AI systems from the perspective of AI augmentation is helpful. In this perspective, humans use AI to enhance their cognitive and analytical decision-making capacities; however, humans retain control over and responsibility for the decisions. In a survey conducted by Gartner in 2021,Footnote 6 over two-thirds of employees in the US indicated that they would like AI to assess their decisions and accomplish tasks. Examples of these tasks include reducing mistakes, solving problems, discovering information, and simplifying processes. While the employees expressed their desire for AI to assist them with their jobs, they also wanted to retain control over the decisions made.

Regardless of whether control over the final decision lies with a machine or an employee, it remains important to unlock the black box and provide transparency about decisions made, or assisted, by AI. There is always a risk that wrong decisions will be made that cannot be explained. This raises potential liability and ethical concerns about deploying AI tools in high-risk decision-making contexts, such as healthcare. Despite increased efforts to achieve transparent and explainable AI, current studies have yet to examine whether transparency is required in all contexts or whether AI transparency requirements differ across jobs and tasks. This paper addresses the following question: Are jobs and tasks in different workplaces subject to different AI transparency requirements?

To systematically study AI transparency in different roles, we examined AI transparency at the level of “job tasks” that AI systems can augment. To do this, we developed a model to determine the jobs and tasks that are highly susceptible to AI augmentation. Then we analyzed the required level of transparency for the adoption of AI systems. We found that the required level of transparency, in most cases, is not different from the transparency required when adopting other traditional technologies. Moreover, we observed that the required level of transparency differed based on the perceived risk of the performed task. Hence, we argue in this paper that AI transparency should be pragmatic and that each job and task might require different levels of transparency.

This paper is organized as follows. In Sect. 2, we present an overview of the burgeoning literature on AI transparency and note the need for a perspective that considers transparency through the lens of AI augmentation. Moreover, the tradeoffs between making AI systems either fully or less transparent are examined, and an overview of the relationship between typical AI errors and transparency is provided. In Sect. 3, we detail the methodology used to determine the jobs and tasks that are susceptible to AI augmentation. In Sect. 4, we describe how the data were analyzed and we present the results. In Sect. 5, we discuss the implications of our analysis. We conclude in Sect. 6 with recommendations for future work.

2 Background and literature review

The notion of transparency is multifaceted, and its theoretical conceptualization has been expanding, especially in the last ten years. This idea has been discussed in many disciplines, including computer science, social sciences, law, public policy, and medicine [26]. In fields related to AI, machine learning, and data science, transparency has become an important issue, as AI systems are becoming more complex, and their characteristics, processes, and outcomes have become more difficult to unpack and understand [14]. Scholars refer to this as the issue of the black box, in which algorithmic models become opaque either by intention or due to the increasing complexity of the models, causing the process that occurs before an input becomes an output to be opaque and difficult to understand [7, 17, 24]. The main objective of efforts to promote transparency in the field of AI has become to resolve the issue of the black box and to enable an understanding of how and why an AI system derives a decision or an output [19]. By resolving this issue, organizations and individuals using AI systems can be held accountable for their decisions, and users affected by AI-assisted decisions can contest the outcomes created by these systems [1, 28]. In this section, we review the different meanings of transparency, articulate its benefits and limitations, and provide an overview of the notion of AI errors and their impact on transparency.

2.1 Definition of transparency

A survey of the literature indicated that ambiguity exists regarding what transparency means. It is frequently used interchangeably with other terms, such as explainability, interpretability, visibility, accessibility, and openness [18]. Moreover, scholarly works have assigned different forms and typologies to AI transparency [2]. For example, Walmsley [35] distinguished between functional and outward transparency. Functional transparency is associated with the inner elements of the AI system, and outward transparency is related to external elements that are not part of the system, such as developers and users. Similarly, Preece et al. [29] tied transparency to explainability and distinguished between the transparency-based explanation, which is concerned with understanding the inner workings of the model, and the post hoc explanation, which is concerned with explaining a machine’s decision without unpacking the model’s inner workings. Similar to the work by Preece et al. [29], Zhang et al. [39] also tied transparency to explainability and distinguished between the local explanation, which refers to the explanation of the logic behind a single outcome, and the global explanation, which refers to the explanation of how the entire algorithmic model works. Felzmann et al. [19] classified transparency as prospective and retrospective. Prospective transparency deals with unveiling information about the working of the system before the user starts interacting with it, whereas retrospective transparency refers to the ability to backtrace a machine’s decision or outcome and provide post hoc explanations of how and why a decision or an outcome was derived.

In this paper, we adopt the definition of transparency provided by the High-Level Expert Group on Artificial Intelligence (AI HLEG) and view transparency as achieving three elements: traceability, explainability, and communication [1]. Traceability refers to the enabling of the retrospective examination of a system by keeping a log of the system’s development and implementation, including information about the data and processes implemented by the system to produce an output (e.g., a decision). Explainability refers to the ability to explain the technical process and the rationale for the AI system’s output. Communication refers to the communication of information about the AI system to the user, including information about the system’s accuracy and limitations so that the user is aware of what they are interacting with [1].

To achieve transparency, system owners might disclose information about training data and analysis, release source code, and provide explanations of outputs [5]. Scholars have argued that achieving transparency requires viewing AI systems as sociotechnical artifacts. This means that they cannot be separated from the context in which they are developed and deployed, nor isolated from cultures, values, and norms [16]. Moreover, AI systems are governed by different stakeholders, each requiring a different level of transparency to satisfy their needs. For example, Weller [38] identified developers, users, society, experts/regulators, and deployers as distinct stakeholders with different transparency requirements. A developer might require transparency to verify whether the system is working as intended, eliminate errors, and improve the system. Users, by contrast, require transparency to ensure that the AI system's output is not flawed or biased and to increase their trust in future outputs [19]. Because stakeholders' needs differ, releasing the source code, for example, might meet the transparency requirements of developers but not those of users, who might not understand what the code does.

2.2 The benefits and limitations of transparency

Enhancing the transparency of AI systems could lead to several benefits. The first, and one of the most commonly assumed, is an increase in users' trust [12]. Schmidt et al. [32] indicated that the general perception in the literature is that transparency increases trust in AI systems. They also noted that system owners can enhance such trust by providing users with simple and easy-to-understand explanations of the system's output [27, 40]. In the context of AI systems, trust is more important than in traditional engineering systems because AI systems are based on induction: they make generalizations by learning from specific instances rather than by applying general concepts or laws to specific applications. The second benefit of transparency is fairness, that is, ensuring that AI systems that directly affect people do not discriminate [36]. The third benefit is enabling accountability by reducing information asymmetry, which allows organizations and individuals to be held accountable for their decisions [18, 20, 28].

Despite the purported benefits of transparency, several studies have indicated that these benefits might be limited and that, in some cases, transparency might have negative consequences [2, 13, 38]. De Laat [13] listed four areas where transparency might yield limited benefits or negative consequences. The first is the tension between transparency and privacy. Releasing datasets publicly might violate the privacy of the individuals included in them, and existing research suggests that individuals can be reidentified in many publicly available anonymized datasets (see [31]). The second area is the possible manipulation of an AI system. If information about the inner workings of a system, such as its source code, is released, the system can be manipulated. A manipulator could use it either to prevent the system from working as intended or to produce an outcome in their own favor. For example, knowing that an autonomous vehicle will stop whenever a moving object appears less than 1.5 m from the car, a person could use this information to keep the car permanently idle [16]. The third area is the protection of the property rights of firms that own AI systems. Requiring firms to publish the source code of their AI systems might infringe upon their property rights, weaken their competitive position, and disincentivize them from innovating [12, 33]. The fourth area concerns "inherent opacity," in which the information disclosed about an AI system is not necessarily interpretable and understandable, so the objective of transparency is not achieved [13].

Ananny and Crawford [2] identified additional issues and limitations related to transparency. First, transparency can be intentionally used to mislead or conceal. When adhering to regulations, firms might purposefully disclose huge amounts of information and data. This makes the data costly and time-consuming to understand and process, which limits the usefulness of transparency. Second, studies of the correlation between transparency and trust have yielded mixed outcomes. For example, in their study of recommender systems in the field of cultural heritage, Cramer et al. [9] found no positive correlation between transparency and trust. In the field of public policy, De Fine Licht [11] and Grimmelikhuijsen [22] also found no strong evidence that increasing transparency increases trust. Evidence is likewise inconclusive on whether users consider predictions and recommendations from machines or from humans to be more trustworthy. Dietvorst, Simmons, and Massey [15] found that people are averse to algorithmic predictions and recommendations and prefer recommendations from humans, even when they can observe that the algorithms outperform humans. They also found that people lose confidence in algorithms more quickly than in humans after seeing them make mistakes. Contrary to these findings, Logg, Minson, and Moore [27] found that people actually value predictions and recommendations from algorithms more than those from humans, even when they do not understand how the algorithms produce them [35]. Given these issues and limitations, many scholars argue that achieving full transparency is undesirable, if not impossible [12, 13, 24, 30].

In summary, there is no agreed-upon definition of transparency, as it takes on different meanings in different disciplines. The literature also indicates that transparency is beneficial, although absolute transparency could have negative consequences. Despite several attempts to formalize the concept and definition of transparency in AI, to our knowledge, no work has examined transparency through the lens of AI augmentation in the workplace.

2.3 AI errors and transparency

The nature of AI errors has implications for the type of transparency required of AI systems. Current AI algorithms, or, more precisely, machine learning algorithms, are based on statistical generalization; in other words, they learn from data samples to make out-of-sample decisions. Thus, AI algorithms are inductive. This contrasts with deductive systems, which are based on the application of general laws. For example, the automatic take-off and landing system of an aircraft applies laws of physics that hold universally for all practical purposes.

Consider an AI system that screens resumes to shortlist candidates for a job. Suppose an HR department decides to test such a system. They prepare a job description, manually curate a list of 100 resumes, and select 10 that match the position. When fed the job description and the 100 resumes, the AI system returns 15 resumes as candidates for further screening. What level of transparency should accompany the resume screening system? In this particular case, the HR department can calculate both the precision and the recall of the system because it has curated a ground truth set; in practice, this will not always be possible. It is important to note that most AI systems are based on some form of scoring and usually have a score threshold that users can tune to their needs. A high threshold usually results in high precision and low recall, and a low threshold leads to low precision and high recall. In practice, if the advertised job is a routine, low-skilled position, high precision is sufficient to select candidates for further evaluation. However, if the position is highly skilled, high recall is important to avoid overlooking a suitable candidate whose skill set matches the job but is not presented in a standard resume template.
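The resume-screening example can be made concrete with a small sketch. It makes two assumptions that are not in the text. First, 8 of the 15 returned resumes are taken to be among the 10 true matches; this is a hypothetical overlap. Second, the scores used to illustrate the threshold effect are synthetic.

```python
def precision_recall(returned: set[int], relevant: set[int]) -> tuple[float, float]:
    """Precision: share of returned resumes that are true matches.
    Recall: share of true matches that were returned."""
    true_positives = len(returned & relevant)
    precision = true_positives / len(returned) if returned else 0.0
    recall = true_positives / len(relevant) if relevant else 0.0
    return precision, recall


def sweep(scores: dict[int, float], relevant: set[int], thresholds: list[float]):
    """Return (threshold, precision, recall) for each candidate threshold."""
    results = []
    for t in thresholds:
        returned = {rid for rid, s in scores.items() if s >= t}
        results.append((t, *precision_recall(returned, relevant)))
    return results


# Hypothetical ground truth: resumes 0..99, HR marked resumes 0..9 as matches.
relevant = set(range(10))

# Hypothetical system output: 15 resumes, 8 of which are true matches.
returned = set(range(8)) | set(range(50, 57))
p, r = precision_recall(returned, relevant)
print(f"precision={p:.3f} recall={r:.3f}")  # precision=0.533 recall=0.800

# Synthetic scores: matches tend to score higher than non-matches.
match_scores = [0.95, 0.9, 0.85, 0.8, 0.7, 0.6, 0.55, 0.45, 0.35, 0.3]
scores = {rid: s for rid, s in enumerate(match_scores)}
scores.update({rid: ((rid - 10) % 10) / 15 for rid in range(10, 100)})

for t, p_t, r_t in sweep(scores, relevant, [0.3, 0.5, 0.8]):
    print(f"threshold={t:.1f} precision={p_t:.2f} recall={r_t:.2f}")
```

With these synthetic scores, raising the threshold from 0.3 to 0.8 moves precision from about 0.18 to 1.0 while recall falls from 1.0 to 0.4. This is the trade-off described above.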

Another important aspect of using AI systems is understanding the bias–variance trade-off of the underlying algorithms. In practice, this is a specialized skill that someone in the organization should possess. Linear or shallow models generally have high precision and low recall, while the opposite is true for non-linear and deep models [23]. From a transparency perspective, providing coarse information on the complexity of the model should be sufficient to appreciate the behavior of the AI system over time. With more experience, an organization may ask a vendor to customize shallow or deep models for different groups of jobs. For example, it could use shallow models for low-skill jobs and deep models for high-skill jobs, with the caveat that the vendor must have sufficient data to calibrate the models across the job spectrum.
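The link between model complexity and behavior can be illustrated generically. The sketch below uses polynomial regression on synthetic data; it is not a model of resume screening and does not test the precision/recall claim above. The polynomial degree stands in for "model complexity": a low degree underfits (high bias), and a high degree can fit the noise in a small training set (high variance).

```python
import numpy as np

# Synthetic data: a smooth signal plus noise (purely illustrative).
rng = np.random.default_rng(0)


def make_data(n: int) -> tuple[np.ndarray, np.ndarray]:
    x = rng.uniform(0.0, 1.0, n)
    y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.3, n)
    return x, y


def mse(coeffs: np.ndarray, x: np.ndarray, y: np.ndarray) -> float:
    """Mean squared error of a fitted polynomial on (x, y)."""
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))


x_train, y_train = make_data(20)
x_test, y_test = make_data(500)

# Fit models of increasing complexity and compare training vs. held-out error.
errors = {}
for degree in (1, 3, 9):
    coeffs = np.polyfit(x_train, y_train, degree)
    errors[degree] = (mse(coeffs, x_train, y_train), mse(coeffs, x_test, y_test))
    print(f"degree={degree} train_mse={errors[degree][0]:.3f} "
          f"test_mse={errors[degree][1]:.3f}")
```

Training error can only fall as the degree grows, because the models are nested least-squares fits. The gap between training and held-out error is what coarse "complexity" information helps a user anticipate.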

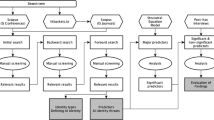

3 Methodology

We developed a machine learning model for computing AI augmentation scores (AIAScores) for all jobs and job tasks that appear in the Occupational Information Network (O*NET)Footnote 7 database. Our model extends Michael Webb's method of creating AI exposure scores, which is based on counting the verb–noun pairs common to O*NET and a database of AI patents [37]. Rather than counting verb–noun pairs, we used word embeddings for both job descriptions and patents and designed a supervised learning model (see Sect. 3.1) to create an AIAScore. The O*NET database is maintained by the US Department of Labor and has been used worldwide in studies on the impact of AI on the future of employment [21, 37]. We used Google's patent database to extract more than 1.5 million AI-related patents. After obtaining augmentation scores for the different jobs in the O*NET database, we carried out a further analysis to understand the transparency requirements of the tasks of the different jobs. We took the embedding vectors of all job tasks and applied a k-medoids algorithm to obtain 100 clusters (i.e., each job task, independent of the job description to which it belonged, was assigned to 1 of 100 clusters). In k-medoids clustering, the representative member of each cluster is an actual task, not a hypothetical "average job task."Footnote 8
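The verb–noun counting idea behind Webb's exposure score can be sketched as follows. This is a simplified reading for illustration, not Webb's exact scoring formula, and it rests on three assumptions. First, the verb–noun pairs are given by hand; a real pipeline would extract them with a dependency parser. Second, a pair's weight is the share of AI patents that contain it. Third, a task's score is the mean weight of its pairs, and a job's score is the unweighted mean over its tasks.

```python
from collections import Counter


def pair_shares(patent_pairs: list[list[tuple[str, str]]]) -> dict[tuple[str, str], float]:
    """Share of AI patents that contain each verb–noun pair (counted once per patent)."""
    counts: Counter = Counter()
    for pairs in patent_pairs:
        counts.update(set(pairs))
    return {pair: c / len(patent_pairs) for pair, c in counts.items()}


def task_exposure(task_pairs: list[tuple[str, str]], shares: dict) -> float:
    """Mean patent share of a task's verb–noun pairs; unseen pairs contribute 0."""
    if not task_pairs:
        return 0.0
    return sum(shares.get(p, 0.0) for p in task_pairs) / len(task_pairs)


def job_exposure(tasks: list[list[tuple[str, str]]], shares: dict) -> float:
    """Aggregate a job's task scores (here: an unweighted mean)."""
    return sum(task_exposure(t, shares) for t in tasks) / len(tasks)


# Hypothetical pairs extracted from four AI patents.
ai_patents = [
    [("analyze", "accounting"), ("predict", "risk")],
    [("analyze", "accounting"), ("classify", "image")],
    [("recognize", "speech")],
    [("predict", "risk")],
]
shares = pair_shares(ai_patents)

accountant = [[("analyze", "accounting")], [("maintain", "records")]]
print(task_exposure(accountant[0], shares))  # 0.5
print(task_exposure(accountant[1], shares))  # 0.0
print(job_exposure(accountant, shares))      # 0.25
```

The toy output mirrors the accountant example in the next subsection: analyze–accounting appears in AI patents, whereas maintain–records does not.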

3.1 AIAScore model

As mentioned in Sect. 3, we extend Michael Webb's method [37] for computing the "exposure score" of jobs to AI technology. For each task in a job, Webb computes a propensity score based on how frequently the verb–noun pairs in the job task co-occur in AI patents. The higher the propensity score of a job task, the greater its exposure score, and the score of a job is the aggregate of the propensity scores of all tasks in its job description. For example, a typical task for an accountant is analyzing accounting records; the verb is analyze, and the noun is accounting. The percentage of AI patents that contain verb–noun pairs similar to analyze–accounting measures the task's exposure to AI technology. Another common verb–noun pair for an accountant is maintain–records. This pair might be associated with software (database) technology but not with AI technology. Our extension of Webb's work uses modern language models and supervised learning to compute an AIAScore. Our model was developed as follows:

1. We first used FastText embeddings [6] to create a vector embedding of each patent. We denote the vector embedding of patent P as $w_P$; each vector has 300 dimensions.

2. We used supervised learning to build a neural-network-based softmax classifier that takes a patent embedding as input and outputs the patent's Cooperative Patent Classification (CPC) code. Every patent is assigned one or more CPC codes by the patent office, and a known subset of CPC codes corresponds to AI patents. For example, suppose there are three CPC codes, C1, C2, and C3, and C1 is an AI CPC code. If the classifier assigns scores of 0.6, 0.3, and 0.1 to a patent vector $w_P$, then the AIAScore of the patent is 0.6.

3. To compute the AIAScore of a job task t, we first compute its FastText embedding $w_t$ and pass it through the trained classifier. Let $F_\theta$ be the trained classifier, $C$ the set of CPC codes, and $C_A \subseteq C$ the subset of CPC codes related to AI. Then

$$\mathrm{AIAScore}(w_t) = \sum_{i \in C_A} F_{\theta,i}(w_t)$$

4. Let $T_J$ represent the set of tasks in a job J. Then, the AIAScore of job J is the mean of its task scores:

$$\mathrm{AIAScore}(J) = \frac{1}{|T_J|} \sum_{t \in T_J} \mathrm{AIAScore}(w_t)$$
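Steps 2 to 4 reduce to a few lines of arithmetic once the classifier's output probabilities are available. In this minimal sketch, fixed probability vectors stand in for the trained FastText-based classifier $F_\theta$, and the logits for the second task are made up. The job score is the unweighted mean of the formula above; the text does not say whether O*NET importance ratings are used as weights.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax: turns classifier logits into probabilities."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def task_aiascore(probs: list[float], ai_codes: set[int]) -> float:
    """AIAScore(w_t): sum of the classifier probabilities F_theta,i over i in C_A."""
    return sum(probs[i] for i in ai_codes)


def job_aiascore(task_probs: list[list[float]], ai_codes: set[int]) -> float:
    """AIAScore(J): mean of the task scores over the |T_J| tasks of job J."""
    return sum(task_aiascore(p, ai_codes) for p in task_probs) / len(task_probs)


# Step 2's example: CPC codes [C1, C2, C3], and only C1 (index 0) is in C_A.
AI_CODES = {0}
print(task_aiascore([0.6, 0.3, 0.1], AI_CODES))  # 0.6

# Hypothetical job with two tasks; the second task's probabilities come from
# made-up logits rather than a trained classifier.
tasks = [[0.6, 0.3, 0.1], softmax([0.0, 1.0, 0.5])]
print(round(job_aiascore(tasks, AI_CODES), 3))
```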

3.2 Data

To train our AIAScore model, we used two datasets. The O*NET database has an extensive dataset covering most aspects of jobs and occupations in the US market. The information of interest to us in this database included more than 20,000 tasks from over 1000 job descriptions, together with the importance rating of each task within a job. The second dataset is the Google patent database, with over three million full-text patents and their associated CPC codes.Footnote 9 Our AIAScore model is available online.Footnote 10

4 Results

Using the O*NET database, we extracted a list of 100 tasks that belong to different jobs and represent the whole population of tasks in the database; see Appendix A for the full list of tasks and the distribution of AI augmentation scores. This was achieved by clustering the tasks into 100 groups and selecting the representative (medoid) task of each group. The distribution intervals of the AI augmentation scores for the tasks are shown in Table 1.
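The text does not specify which k-medoids variant or distance measure was used. The sketch below assumes the simple alternating (Voronoi-iteration) variant with cosine distance, applied to toy 2-D vectors that stand in for the 300-dimensional task embeddings. The property it illustrates is that each cluster representative is an index into the input, that is, an actual task.

```python
import math
import random


def cosine_distance(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def k_medoids(points: list[list[float]], k: int, max_iter: int = 100, seed: int = 0):
    """Alternating (Voronoi-iteration) k-medoids.

    Returns (medoids, labels): medoids are indices into `points`, so every
    cluster representative is an actual input item.
    """
    n = len(points)
    dist = [[cosine_distance(points[i], points[j]) for j in range(n)] for i in range(n)]
    medoids = random.Random(seed).sample(range(n), k)

    def assign(meds: list[int]) -> list[int]:
        # Each point joins the cluster of its nearest medoid.
        return [min(range(k), key=lambda c: dist[i][meds[c]]) for i in range(n)]

    for _ in range(max_iter):
        labels = assign(medoids)
        new_medoids = []
        for c in range(k):
            members = [i for i in range(n) if labels[i] == c]
            if not members:  # keep the old medoid if a cluster empties
                new_medoids.append(medoids[c])
                continue
            # The new medoid minimizes the total distance to its cluster members.
            new_medoids.append(min(members, key=lambda m: sum(dist[m][j] for j in members)))
        if new_medoids == medoids:
            break
        medoids = new_medoids
    return medoids, assign(medoids)


# Toy 2-D "embeddings" standing in for task vectors.
points = [[1.0, 0.1], [0.9, 0.2], [1.0, 0.0], [0.1, 1.0], [0.2, 0.9], [0.0, 1.0]]
medoids, labels = k_medoids(points, k=2)
print(sorted(medoids), labels)
```

Unlike k-means, the k-medoids algorithm never averages vectors. This is why each of the 100 representatives can be read as a real task statement.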

To analyze the different types of tasks, we extracted the verb describing each task and calculated the average AI augmentation score associated with each verb. This analysis is presented in Appendix B. Most tasks with low AI augmentation scores appear to be physical or mechanical in nature (e.g., position, set, grind, clean, install, remove). As the AI augmentation score increases, the verbs become strongly associated with cognitive tasks (e.g., design, act, determine, analyze, review, advise, evaluate, instruct, supervise). These findings are consistent with the existing literature indicating that AI is likely to affect cognitive jobs and tasks more significantly than mechanical ones [21].
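The per-verb averaging is a simple group-by. In this minimal sketch, the verb is assumed to be the first word of each task statement, a simplification that a part-of-speech tagger would make more robust. The task statements and scores are hypothetical, not values from the O*NET analysis.

```python
from collections import defaultdict


def mean_score_by_verb(tasks: list[tuple[str, float]]) -> dict[str, float]:
    """Average AI augmentation score per leading verb.

    Assumes the first word of each task statement is its verb.
    """
    totals: dict[str, float] = defaultdict(float)
    counts: dict[str, int] = defaultdict(int)
    for text, score in tasks:
        verb = text.split()[0].lower()
        totals[verb] += score
        counts[verb] += 1
    return {verb: totals[verb] / counts[verb] for verb in totals}


# Hypothetical task statements and scores.
tasks = [
    ("Clean work areas and equipment.", 0.05),
    ("Clean machine parts using solvents.", 0.07),
    ("Analyze financial data to forecast budgets.", 0.42),
    ("Analyze test results for errors.", 0.38),
]
for verb, score in sorted(mean_score_by_verb(tasks).items(), key=lambda kv: kv[1]):
    print(f"{verb}: {score:.2f}")
```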

Following the extraction of the 100 tasks, we selected two tasks from each of the eight distribution intervals for further analysis. The main objective was to determine the risk category of each task if it were performed by an AI system and, ultimately, the degree of transparency it requires. Table 2 presents the 16 tasks and their assigned risk categories. To determine the risk categories, we applied the categorization of risk for AI systems developed by the European CommissionFootnote 11, as follows:

Minimal risk: AI systems that pose minimal or no risk to the safety and rights of people. Such systems typically do not cause physical or psychological harm and have no or limited active interaction with humans, that is, they tend to work in the background. Examples of such AI systems are video games and spam filters.

Limited risk: AI systems that are generally believed to be safe but may, on rare occasions, cause harm to people. These systems typically have direct and active interaction with humans, for example, chatbots.

High risk: AI systems that have a high likelihood of causing damage to people if misused. Such systems can be found in multiple fields, such as education, law enforcement, transport, and healthcare, with examples including surgical robots and facial recognition systems.

Unacceptable risk: Systems that are evidently causing harm or damage to humans and should not be implemented and/or deployed. Examples of this kind of AI are social scoring systems and real-time remote biometric identification.

To ensure that tasks were assigned the appropriate risk category, each task was reviewed to determine whether it involved direct human interaction and/or could lead to physical or psychological harm. First, we assessed whether the task involved direct communication with a person (e.g., chatting) or had direct implications for a human subject (e.g., whether someone should get a bank loan). Then, we analyzed whether the task could cause physical (e.g., injury or fatality) or psychological (e.g., depression or anxiety) harm to another human being.
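The review described above was carried out manually. Purely to make its two questions explicit, the following hypothetical rule encodes them. The mapping from answers to categories is our assumption, not the rule used to build Table 2: physical harm maps to high risk, direct interaction or psychological harm maps to limited risk, and anything else is minimal risk. The unacceptable-risk category is left to a separate policy check.

```python
from dataclasses import dataclass


@dataclass
class TaskAssessment:
    direct_interaction: bool  # communicates with, or directly decides about, a person
    physical_harm: bool       # could plausibly cause injury or fatality
    psychological_harm: bool  # could plausibly cause, e.g., anxiety or depression


def risk_category(a: TaskAssessment) -> str:
    """Hypothetical screening rule; unacceptable risk is checked separately."""
    if a.physical_harm:
        return "high"
    if a.direct_interaction or a.psychological_harm:
        return "limited"
    return "minimal"


# Illustrative inputs loosely modeled on the kinds of tasks discussed in the text.
print(risk_category(TaskAssessment(False, False, False)))  # minimal: background task
print(risk_category(TaskAssessment(True, False, True)))    # limited: e.g., supervising students
print(risk_category(TaskAssessment(False, True, False)))   # high: harm without interaction
```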

Several observations emerged from the analysis in Table 2. First, tasks with low AI augmentation scores (i.e., tasks 1 to 6) involve no direct interaction with humans and are not associated with physical or psychological harm. As the augmentation score increases, tasks involve higher levels of human interaction and greater potential for physical and/or psychological harm. For example, tasks 7 and 10 both involve direct human interaction and could cause harm. Second, most of the tasks in which AI could lead to physical or psychological harm are associated with healthcare or medical professions. Third, in these professions, harm is possible even without direct human interaction, as in task 14. Fourth, none of the extracted tasks is associated with unacceptable risk.

5 Discussion

The main objective of this paper was to examine the level of AI transparency required by different tasks performed by employees in different occupations in an AI-augmented setting. Our first finding was that tasks with low augmentation scores (i.e., less susceptible to AI augmentation) did not involve direct human interaction and were not associated with physical or psychological harm; therefore, they were categorized as minimal-risk tasks. This suggests that tasks requiring physical or mechanical labor are less likely to be fully augmented by AI and will continue to involve a human leading the associated activities. Since a human leads these activities and the tasks pose no risk of physical or psychological harm, we expect such tasks to have low or no transparency requirements. This expectation stems from the fact that responsibility for such tasks clearly lies with the person performing them. The transparency requirement would therefore not differ from situations in which no AI system is used at all. On the other hand, the findings indicated that, as the augmentation score increased beyond 30%, the tasks involved more human interaction and posed a higher risk of physical and psychological harm; hence, most of the tasks with an augmentation score of 30% or higher were categorized as minimal or limited risk tasks. In these tasks, the role of humans diminishes while the role of the AI system becomes more prominent. As a result, these types of tasks are associated with higher transparency requirements. However, what does higher transparency mean here?
Higher transparency involves three things. The first is communicating the process required to complete the activities associated with the task. The second is providing a confidence interval or accuracy rate for the AI system(s) used in those activities. The third is enabling contestability if the task results in any physical or psychological harm. Let's consider task no. 8 from Table 2, in which a teacher supervises students, as an example. Assume that, to monitor and evaluate the students' work, the teacher uses an AI system to auto-grade assignments. Supervising students is considered a limited-risk task because it involves direct human interaction and might cause psychological harm. In this case, transparency does not mean explaining how the grading system works or publishing its source code. Rather, it means communicating how the teacher evaluates the students' work, how and when the AI system is involved in the evaluation, and what the AI system's accuracy rate is. Moreover, students should be allowed to contest any evaluation in which an AI system was involved. This requires a human (i.e., the teacher) to verify the AI system's output and correct any errors. In this example, even though the role of the AI system might be more prominent than that of the human, it is always the human (i.e., the teacher) who bears responsibility for the outcome of the task.
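One common way to report an accuracy rate together with a confidence interval is the Wilson score interval for a binomial proportion. The minimal sketch below assumes that the teacher regrades a sample of auto-graded assignments and counts agreements. The counts are hypothetical, and the choice of the Wilson interval is ours, not the paper's.

```python
import math
from statistics import NormalDist


def wilson_interval(successes: int, n: int, confidence: float = 0.95) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion such as an accuracy rate."""
    if n <= 0:
        raise ValueError("n must be positive")
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    p = successes / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half, center + half


# Hypothetical audit: the teacher regrades 200 auto-graded assignments and
# agrees with the AI system's grade on 180 of them.
low, high = wilson_interval(180, 200)
print(f"accuracy = 0.90, 95% CI = [{low:.3f}, {high:.3f}]")
```

The Wilson interval stays within [0, 1] even when accuracy is close to 1 or samples are small. This makes it a reasonable default for communicating an accuracy rate to non-specialists.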

The findings indicated that tasks categorized as high risk were typically found in the medical professions. They usually involved direct human interaction and had a high likelihood of causing physical and/or psychological harm. Let's consider task no. 9 from Table 2, which involves a medical practitioner (i.e., a pharmacy assistant), as an example. Assume that the pharmacy assistant uses an AI system that can read handwritten prescriptions, convert them to digital format, and prescribe the medicine to the patient, or an alternative if the prescribed medicine is not available. In the event of an error, such as misreading a doctor's prescription or prescribing the wrong medicine, the consequences might be severe, leading to physical and/or psychological harm to the patient. In this example, the transparency requirement is clearly higher than in the previous example. First, traceability is essential: there must be a record of what was input into the system and what it output. Moreover, the data and the source code of the system must be verified and made available to regulators. Second, the output of the system should be explainable; for example, the system must justify why an alternative medicine was prescribed. Third, the prescribing process should be communicated to the patient, who should be informed that an AI system was involved in completing the prescription task.
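The traceability requirement can be met with something as simple as an append-only log of inputs and outputs. This is a minimal sketch under several assumptions. The field names, the model version string, and the example values are all hypothetical. The raw input (e.g., the scanned prescription) is assumed to be stored separately under access control, with only its SHA-256 digest recorded so that an auditor can check it was not altered. The `reason` field is one way to carry the justification the explainability requirement calls for.

```python
import hashlib
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def log_prediction(log_path: Path, model_version: str, input_bytes: bytes,
                   output: dict) -> dict:
    """Append one JSON record per prediction to an append-only log file."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        # A digest lets an auditor verify a separately stored input.
        "input_sha256": hashlib.sha256(input_bytes).hexdigest(),
        "output": output,
    }
    with log_path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record


# Hypothetical usage: names and values below are illustrative only.
log_file = Path(tempfile.mkdtemp()) / "prescription_audit.jsonl"
scan = b"<bytes of a scanned prescription>"
log_prediction(log_file, "rx-reader-0.1", scan,
               {"medicine": "drug A", "substituted_with": "drug B",
                "reason": "drug A out of stock"})
for line in log_file.read_text(encoding="utf-8").splitlines():
    print(json.loads(line)["output"])
```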

The above examples demonstrate that the transparency requirement differs with the risk associated with the task. For tasks with low augmentation scores, transparency requirements are minimal or nonexistent because the tasks are performed mostly by a human, who clearly holds responsibility. Conversely, transparency requirements increase as tasks become more augmented by AI systems and the role of the human is reduced. Nevertheless, humans remain responsible for verifying the outcomes generated by AI systems. We believe that adapting the performance of AI systems to the contexts in which they are deployed will reduce the burden of responsibility on humans and ease the effort of verifying the outcomes of such systems. To achieve such performance adaptation, we suggest paying more attention to the different types of AI errors, namely false positives and false negatives (reflected in the trade-off between precision and recall), and to AI model design choices such as the bias-variance trade-off. In contexts in which an inaccurate outcome of an AI system (e.g., failing to recognize a stock item) is unlikely to lead to physical or psychological harm, the classification threshold of the AI system can be set high, yielding higher precision but lower recall. With higher precision but lower recall, the outcomes generated by the AI system are expected to be precise, but some relevant outcomes are likely to be omitted. In settings where omitting relevant outcomes poses no physical or psychological risk to other humans, this reduces the burden of responsibility on the human. In contrast, in contexts in which an inaccurate outcome (e.g., failing to identify a medicine prescribed for a patient) might lead to physical or psychological harm, the classification threshold of the AI system can be lowered, yielding higher recall but lower precision.

With higher recall but lower precision, the AI system is expected to identify more of the relevant outcomes, at the expense of some noise (i.e., more false positives). In the example of the AI system that helps dispense medicine to patients, a lower threshold increases the likelihood that the pharmacy assistant will not miss any medicine prescribed by the doctor, although they will still need to verify with the patient that every item identified by the AI system was indeed prescribed. The burden of responsibility on the pharmacy assistant is thus reduced, as they can be more confident that no prescribed medicine was missed. At the same time, verification becomes easier, because the pharmacy assistant checks readily available information (i.e., the list of medicines identified for a patient) rather than searching for omitted information (e.g., a missed prescription). As AI-augmented systems become more widespread, employees will become more familiar with notions such as precision and recall. However, appreciating more advanced concepts such as the bias-variance trade-off will require expertise that only a few people in any organization are likely to possess. Nevertheless, mapping transparency requirements to AI augmentation scores, and those in turn to model complexity, would advance the debate on this topic in a quantifiable manner.
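The threshold trade-off described above can be made concrete with a few lines of Python. The scores and labels below are invented: they stand for a hypothetical system that scores ten candidate medicine names read from a handwritten prescription, where a label of 1 means the medicine really was prescribed. Every item scoring at or above the threshold is flagged.

```python
def precision_recall_at(scores, labels, threshold):
    """Precision and recall when every item with score >= threshold is flagged as positive."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)  # correctly flagged
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)  # noise
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)   # omissions
    precision = tp / (tp + fp) if tp + fp else 0.0  # defined as 0.0 here when nothing is flagged
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Invented scores for ten candidate medicine names; label 1 = actually prescribed.
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.55, 0.40, 0.35, 0.20, 0.10]
labels = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]

for threshold in (0.85, 0.50, 0.30):
    precision, recall = precision_recall_at(scores, labels, threshold)
    print(f"threshold={threshold:.2f}  precision={precision:.3f}  recall={recall:.2f}")
```

On this toy data, a threshold of 0.85 gives a precision of 1.0 but a recall of only 0.4 (three prescribed medicines are missed), whereas a threshold of 0.3 recovers all five prescribed medicines (recall 1.0) at a precision of 0.625, leaving three false positives for the pharmacy assistant to rule out. scikit-learn computes the same quantities over all thresholds with `sklearn.metrics.precision_recall_curve`.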

Although our work focuses on AI transparency in the workplace, our approach and findings could be applied in other settings. First, our machine learning model for computing AIAScores could inform other research on the impact of AI on jobs. For example, it could be used to identify jobs that are highly susceptible to AI augmentation and to examine the implications for gender and diversity in future jobs. Similarly, it could be used to identify the jobs most affected by AI, enabling other researchers to investigate the skills that people in those jobs should develop. Second, our findings on AI transparency in the workplace could apply to other settings in which AI systems are deployed. Whether or not a human verifies the output of an AI system, classification thresholds and risk remain important factors to consider in achieving transparency. For instance, when businesses deploy online AI systems such as chatbots (e.g., a bot that provides automated customer support) or recommendation systems (e.g., a system that recommends content to users), the perceived risk of the content generated by the AI system should inform how the system's classification threshold is set.
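One way to let perceived risk drive the classification threshold is a small policy table that states, for each risk category, which error type matters most and what minimum value the corresponding metric must reach on validation data. In the Python sketch below, the policy targets, the function names and the validation data are hypothetical assumptions; only the category names follow the risk categories of the European Commission's proposal (unacceptable, high, limited, minimal). For high-risk contexts the sketch keeps the highest threshold that still meets a recall target, and for lower-risk contexts the lowest threshold that still meets a precision target.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ThresholdPolicy:
    metric: str    # "precision" or "recall": the metric the context cares most about
    target: float  # minimum acceptable value of that metric on validation data


# Hypothetical policy table: metrics and targets are illustrative assumptions.
# "unacceptable" is deliberately absent, since such systems should not be deployed.
POLICIES = {
    "minimal": ThresholdPolicy("precision", 0.90),
    "limited": ThresholdPolicy("precision", 0.75),
    "high": ThresholdPolicy("recall", 0.95),
}


def precision_recall(scores, labels, threshold):
    """Precision and recall when items with score >= threshold are flagged."""
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def choose_threshold(scores, labels, risk):
    """Pick a classification threshold from validation data according to the risk policy."""
    if risk not in POLICIES:
        raise ValueError(f"no deployment policy for risk category {risk!r}")
    policy = POLICIES[risk]
    candidates = sorted(set(scores))  # every distinct score is a candidate threshold
    if policy.metric == "recall":
        # Recall never increases as the threshold rises, so the highest threshold
        # meeting the target admits the fewest false positives.
        ok = [t for t in candidates if precision_recall(scores, labels, t)[1] >= policy.target]
        return max(ok) if ok else None
    # Precision target: the lowest qualifying threshold keeps the most true positives.
    ok = [t for t in candidates if precision_recall(scores, labels, t)[0] >= policy.target]
    return min(ok) if ok else None


# Hypothetical validation data: model scores and true labels (1 = relevant item).
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.55, 0.40, 0.35, 0.20, 0.10]
labels = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]

for risk in ("minimal", "limited", "high"):
    print(risk, choose_threshold(scores, labels, risk))
```

On this toy data the chosen threshold falls from 0.90 (minimal risk) to 0.60 (limited risk) to 0.40 (high risk), mirroring the argument above that the threshold should be lowered as the potential for harm increases.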

6 Conclusion

In this study, we analyzed job data from the O*NET database to examine the level of transparency required for tasks in AI-augmented settings. Our analysis shows that different tasks require different levels of transparency, depending on the AI augmentation score and perceived risk category. Our findings also indicate that tasks with low AI augmentation scores are likely to be physical or mechanical and require no algorithmic transparency, as such tasks are mostly performed by humans. We also found that as the perceived risk and AI augmentation score increase, the tasks become more cognitive and the required level of transparency increases. However, our analysis indicates that the transparency requirements for AI-augmented tasks are not much different from those of other traditional technologies.

This study offers several opportunities for future work. First, our data sample did not include instances of tasks that can be categorized under the unacceptable risk category. Although the European Commission advises against the deployment of AI systems falling under such a category, future studies should investigate the implications of these systems as well as the level of transparency required to mitigate their harms. Second, our study results indicate that having a good understanding of AI errors can help adapt the performance of AI systems and reduce the burden of responsibility for humans. Hence, future studies should focus on examining the impact of adaptive performance (e.g., tweaking the classification threshold of AI systems) on trust and the adoption of AI systems.

Notes

1. On ImageNet, an image database built for visual object recognition, the human top-5 classification error rate is 5.1%, while the current state-of-the-art neural network has an error rate of less than 1%. See https://paperswithcode.com/sota/image-classification-on-imagenet (Accessed 27 October 2022).

2. Machine learning is a type of artificial intelligence in which the machine can learn and find patterns in data without direct human intervention [10].

3. https://www.theverge.com/2021/12/28/22856832/amazon-alexa-challenge-child-dangerous-electricity-algorithm (Accessed 20 February 2022).

4. https://www.nytimes.com/2021/08/17/business/tesla-autopilot-accident.html (Accessed 20 February 2022).

5. https://www.theguardian.com/technology/2016/mar/26/microsoft-deeply-sorry-for-offensive-tweets-by-ai-chatbot (Accessed 20 February 2022).

6. https://venturebeat.com/automation/report-70-of-u-s-consumers-want-to-use-ai-for-their-jobs/ (Accessed 15 October 2022).

7. https://www.onetcenter.org/overview.html (Accessed 20 October 2022).

8. https://link.springer.com/referenceworkentry/10.1007/978-0-387-30164-8_426 (Accessed 27 February 2022).

9. https://patents.google.com/ (Accessed 15 October 2022).

10. https://ai-job.qcri.org/ (Accessed 27 October 2022).

11. https://digital-strategy.ec.europa.eu/en/library/proposal-regulation-laying-down-harmonised-rules-artificial-intelligence (Accessed 27 February 2022).

References

AI HLEG: Assessment list for trustworthy artificial intelligence (ALTAI). Brussels: European Commission. https://futurium.ec.europa.eu/en/european-ai-alliance/document/ai-hleg-assessment-list-trustworthy-artificial-intelligence-altai (2020). Accessed 21 July 2022

Ananny, M., Crawford, K.: Seeing without knowing: limitations of the transparency ideal and its application to algorithmic accountability. New Media Soc. 20(3), 973–989 (2018). https://doi.org/10.1177/1461444816676645

Autor, D.H.: Why are there still so many jobs? The history and future of workplace automation. J. Econ. Perspect. 29, 3–30 (2015)

Bengio, Y., LeCun, Y., Hinton, G.: Deep learning for AI. Commun. ACM 64(7), 58–65 (2021). https://doi.org/10.1145/3448250

Bhatt, U., Xiang, A., Sharma, S., Weller, A., Taly, A., Jia, Y., Eckersley, P.: Explainable machine learning in deployment [Paper presentation]. FAT* 2020—Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, 648–657 (2020). https://doi.org/10.1145/3351095.3375624

Bojanowski, P., Grave, E., Joulin, A., Mikolov, T.: Enriching word vectors with subword information. Trans. Assoc. Comput. Linguist. 5, 135–146 (2017)

Bollen, L.H., Meuwissen, M., Bollen, L.: Transparency versus explainability in AI (2021). https://doi.org/10.13140/RG.2.2.27466.90561

Brynjolfsson, E., McAfee, A.: The second machine age. WW Norton (2016)

Cramer, H., Evers, V., Ramlal, S., Van Someren, M., Rutledge, L., Stash, N., Wielinga, B.: The effects of transparency on trust in and acceptance of a content-based art recommender. User Model. User-Adapt. Interact. 18 (2008). https://doi.org/10.1007/s11257-008-9051-3

Davenport, T.H., Brynjolfsson, E., McAfee, A., Wilson, H.J.: Artificial intelligence: the insights you need from Harvard Business Review. Harvard Business Press (2019)

De Fine Licht, J.: Policy area as a potential moderator of transparency effects: an experiment. Public Adm. Rev. 74(3), 361–371 (2014). https://doi.org/10.1111/PUAR.12194

De Fine Licht, K., De Fine Licht, J.: Artificial intelligence, transparency, and public decision-making. AI & Soc. 35(4), 917–926 (2020). https://doi.org/10.1007/s00146-020-00960-w

De Laat, P.B.: Algorithmic decision-making based on machine learning from Big Data: can transparency restore accountability? Philos. Technol. 31(4), 525–541 (2018). https://doi.org/10.1007/s13347-017-0293-z

Dexe, J., Franke, U., Rad, A.: Transparency and insurance professionals: a study of Swedish insurance practice attitudes and future development. Geneva Pap. Risk Insur.: Issues Pract. 46(4), 547–572 (2021). https://doi.org/10.1057/S41288-021-00207-9

Dietvorst, B.J., Simmons, J.P., Massey, C.: Algorithm aversion: People erroneously avoid algorithms after seeing them err. J. Exp. Psychol. Gen. 144(1), 114–126 (2015). https://doi.org/10.1037/xge0000033

Dignum, F., Dignum, V.: How to center AI on humans. In: NeHuAI@ECAI (2020)

Ebers, M.: Regulating explainable AI in the European Union. An overview of the current legal framework(s). SSRN Electron. J. (2021). https://doi.org/10.2139/SSRN.3901732

Felzmann, H., Fosch-Villaronga, E., Lutz, C., Tamò-Larrieux, A.: Towards transparency by design for artificial intelligence. Sci. Eng. Ethics 26(6), 3333–3361 (2020). https://doi.org/10.1007/s11948-020-00276-4

Felzmann, H., Fosch-Villaronga, E., Lutz, C., Tamò-Larrieux, A.: Transparency you can trust: transparency requirements for artificial intelligence between legal norms and contextual concerns. Big Data Soc. 6(1), 1–14 (2019). https://doi.org/10.1177/2053951719860542

Forssbaeck, J., Oxelheim, L.: The multi-faceted concept of transparency. https://www.ifn.se (2014)

Frey, C.B., Osborne, M.A.: The future of employment: How susceptible are jobs to computerisation? Technol. Forecast. Soc. Change 114, 254–280 (2017). https://doi.org/10.1016/j.techfore.2016.08.019

Grimmelikhuijsen, S.: Linking transparency, knowledge and citizen trust in government: an experiment. Int. Rev. Adm. Sci. 78(1), 50–73 (2012). https://doi.org/10.1177/0020852311429667

Hastie, T., Tibshirani, R., Friedman, J.: The Elements of Statistical Learning: Data Mining, Inference, and Prediction, 2nd edn. Springer, New York (2009)

Hayes, P.: An ethical intuitionist account of transparency of algorithms and its gradations. Bus. Res. 13(3), 849–874 (2020). https://doi.org/10.1007/S40685-020-00138-6

Kaplan, A., Haenlein, M.: Siri, Siri, in my hand: who's the fairest in the land? On the interpretations, illustrations, and implications of artificial intelligence. Bus. Horiz. 62(1), 15–25 (2019)

Larsson, S., Heintz, F.: Transparency in artificial intelligence. Internet Policy Rev. 9(2), 1–16 (2020). https://doi.org/10.14763/2020.2.1469

Logg, J.M., Minson, J.A., Moore, D.A.: Algorithm appreciation: People prefer algorithmic to human judgment. Org. Behav. Hum. Decis. Process. 151, 90–103 (2019). https://doi.org/10.1016/j.obhdp.2018.12.005

Matthews, J.: Patterns and anti-patterns, principles and pitfalls: accountability and transparency in AI. AI Mag. 41(1), 82–89 (2020). https://doi.org/10.1609/aimag.v41i1.5204

Preece, A., Harborne, D., Braines, D., Tomsett, R., Chakraborty, S.: Stakeholders in Explainable AI. http://arxiv.org/abs/1810.00184 (2018). Accessed 21 July 2022

Rai, A.K.: Machine learning at the patent office: lessons for patents and administrative law. SSRN Electron. J. (2019). https://doi.org/10.2139/ssrn.3393942

Rocher, L., Hendrickx, J.M., De Montjoye, Y.-A.: Estimating the success of re-identifications in incomplete datasets using generative models. Nat. Commun. 10, 3069 (2019). https://doi.org/10.1038/s41467-019-10933-3

Schmidt, P., Biessmann, F., Teubner, T.: Transparency and trust in artificial intelligence systems. J. Decis. Syst. 29(4), 1–19 (2020). https://doi.org/10.1080/12460125.2020.1819094

Umbrello, S., Yampolskiy, R.V.: Designing AI for explainability and verifiability: a value sensitive design approach to avoid artificial stupidity in autonomous vehicles. Int. J. Soc. Robot. 3, 1–10 (2021). https://doi.org/10.1007/S12369-021-00790-W

Vorm, E.S.: Assessing demand for transparency in intelligent systems using machine learning. In: Proceedings of the 2018 Innovations in Intelligent Systems and Applications. IEEE, 1–7 (2018)

Walmsley, J.: Artificial intelligence and the value of transparency. AI & Soc. 36(2), 585–595 (2021). https://doi.org/10.1007/s00146-020-01066-z

Warner, R., Sloan, R.H.: Making artificial intelligence transparent: fairness and the problem of proxy variables. SSRN Electron. J. (2021). https://doi.org/10.2139/SSRN.3764131

Webb, M.: The impact of artificial intelligence on the labor market. SSRN Electron. J. (2019). https://doi.org/10.2139/SSRN.3482150

Weller, A.: Transparency: motivations and challenges. In: Explainable AI: Interpreting, Explaining and Visualizing Deep Learning, 23–40 (2019)

Zhang, Y., Liao, Q.V., Bellamy, R.K.E.: Effect of confidence and explanation on accuracy and trust calibration in AI-assisted decision making [Paper presentation]. FAT* 2020—Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency, 295–305 (2020). https://doi.org/10.1145/3351095.3372852

Zhao, R., Benbasat, I., Cavusoglu, H.: Do users always want to know more? Investigating the relationship between system transparency and users’ trust in advice-giving systems. https://aisel.aisnet.org/ecis2019_rip/42 (2019). Accessed 21 July 2022

Funding

Open Access funding provided by the Qatar National Library.

Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Al-Sulaiti, G., Sadeghi, M.A., Chauhan, L. et al. A pragmatic perspective on AI transparency at workplace. AI Ethics 4, 189–200 (2024). https://doi.org/10.1007/s43681-023-00257-w

DOI: https://doi.org/10.1007/s43681-023-00257-w