Abstract

Choices and preferences of individuals are nowadays increasingly influenced by countless inputs and recommendations provided by artificial intelligence-based systems. The accuracy of recommender systems (RS) has achieved remarkable results in several domains, from infotainment to marketing and lifestyle. However, in sensitive use-cases, such as nutrition, there is a need for more complex dynamics and responsibilities beyond conventional RS frameworks. On one hand, virtual coaching systems (VCS) are intended to support and educate the users about food, integrating additional dimensions w.r.t. the conventional RS (i.e., leveraging persuasion techniques, argumentation, informative systems, and recommendation paradigms) and show promising results. On the other hand, as of today, VCS raise unexplored ethical and legal concerns. This paper discusses the need for a clear understanding of the ethical/legal-technological entanglements, formalizing 21 ethical and ten legal challenges and the related mitigation strategies. Moreover, it elaborates on nutrition sustainability as a further nutrition virtual coaches dimension for a better society.

1 Introduction

Individual choices of people in our society are constantly influenced by online media, recommendations, and suggestions powered by artificial intelligence, impacting all sorts of domains on a daily basis. Consequently, industry and academia are intensifying their effort to improve the number and quality of possible alternatives to be suggested to the user [1]. By doing so, service consumption and user satisfaction could be maximized, but at what cost? Conflicting interests can be identified, e.g., recommending food according to the user’s taste, but in conflict with dietary recommendations, healthy behavior goals, or sustainability principles.

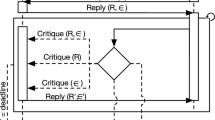

Recommender systems (RS) [2] have reached remarkable accuracy and efficacy in several domains, including lifestyle, infotainment, and e-commerce [3, 4]. Nevertheless, more sensitive areas (e.g., nutrition) demand more complex dynamics beyond conventional RS’ capabilities. Indeed, today’s nutrition support and education domain demands Virtual Coaching Systems (VCS), which integrate additional dimensions w.r.t. conventional RS and, therefore, are more suitable for such sensitive scenarios. In particular, VCS leverage persuasion techniques, argumentation, informative systems, and RS (see Fig. 1). Early approaches show promising results, and their efficacy is approaching that of human coaches. However, the cutting-edge techniques and technologies used to push the boundaries of modern VCS’ efficacy and efficiency raise important ethical and legal concerns. In particular, a clear understanding of the ethical/legal-technological entanglements is still missing. Prior studies principally focus on Food RS, proposing evaluation frameworks [5, 6]. However, to the best of our knowledge, they do not scale to address the more complex nutrition virtual coaches (NVC).

The contribution of this paper focuses on the adaptation and extension of existing frameworks to evaluate ethical, legal, and sustainability concerns w.r.t. NVC. In particular, it

- identifies and analyzes ethical and legal challenges characterizing all the dimensions (overlapping areas included) of NVC;
- amends earlier studies (i.e., [5, 6]) applying an analysis from a more comprehensive perspective and discussing mitigation strategies;
- elaborates on legal boundaries, concerns, and possible solutions w.r.t. NVC;
- tackles nutrition sustainability as an ethical dimension.

The rest of the paper is organized as follows. Section 2 provides the needed background on the NVC’s components and on ethics in AI, pivoting around the concept of nudges. Section 3 describes the methodology adopted to elicit the ethical and legal challenges. Section 4 presents and elaborates on the ethical challenges and possible mitigation strategies. Section 5 moves from the ethical concerns toward the analysis of concrete legal boundaries and possible gray areas that call for clarification. Section 6 raises questions about the sustainability of nutrition patterns and articulates the possible contribution of NVC to creating sustainable habits or lifestyles.

2 Virtual coaching components and the ethics of nudges

Ethics has focused on the study of human behavior since the time of the ancient Greek philosophers, and it was later referred to as morals by the Romans [7]. As human societies evolve, these moral and ethical principles may evolve or be questioned and nuanced. Likewise, the continuous evolution of AI research and its application to recommender systems produces new requirements, understandings, practices, architectures, models, and norms (e.g., see VCS, and AI predictive systems in general). Therefore, given the influence of AI-based systems on individual decision-making, considerations about ethical and moral principles must evolve accordingly and in a timely manner, especially in sensitive scenarios. By evolve, we refer to re-assessments and possible adjustments/extensions of ethical concepts, aspects, and assets.

As a matter of fact, ethical concerns have been entangled with AI since its beginnings. The numerous domains of application (e.g., nutrition, behavioral change, and ehealth [5, 6, 8]) and the increasing computation capabilities and communication means (e.g., via natural language processing, empathy communication [9]) exacerbate the implications characterizing such concerns and require new careful considerations. Hagendorff [10] analyzed 22 major AI ethics guidelines from academic institutions and industry leaders and identified accountability, privacy, and fairness as the ethical aspects present in 80% of the reviewed guidelines.

As a general response to the accountability-related concerns, the AI community has undertaken the challenge of reducing machine learning and deep learning predictors’ opacity [11, 12]. Such a challenge entails the capability of explaining their behaviors. Moreover, the need for multi-modal explanations to reduce human biases in interpretation has been highlighted [13].

Concerning privacy, several works and regulations have outlined good practices for treating sensitive data within an AI system. The General Data Protection Regulation (GDPR) is a legal framework in force in the European Union (EU) that regulates the treatment and circulation of personal data. The GDPR introduces a set of privacy requirements and practices, together with legal enforcement, for those systems that deal with personal data [14, 15]. Moreover, recent research has started to develop decentralized and personalized approaches allowing for user-centric, distributed, and automated management of privacy in mobile apps and social network applications [16].

Concerning fairness, reducing or eliminating the biases affecting data collection, processing, and analysis within an AI system is particularly challenging. Indeed, such biases can affect user experience, data, and algorithms [17]. Since ML and DL are highly data-dependent, the magnitude of the bias effect can be extremely significant. Several toolkits have been developed to assess and mitigate biases in AI. For instance, the AI Fairness 360 (AIF360) Python toolkit allows detecting, understanding, and mitigating algorithmic bias within critical industrial decision-support systems [18, 19]. Moreover, IBM, Microsoft, FAO, and the Italian Ministry of Innovation have signed an agreement to promote six principles (transparency, inclusion, accountability, impartiality, reliability, and security and privacy) within ethical approaches to AI that must rely on a sense of shared responsibility among international organizations, governments, institutions, and the private sector [20].
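To make the notion of bias assessment more concrete, the following minimal sketch computes two widely used group-fairness indicators, the statistical parity difference and the disparate impact ratio, on a toy set of recommendation outcomes. It is written in plain Python and does not reproduce the AIF360 API; the data, the group labels, and all function names are hypothetical and serve illustration only.

```python
from collections import defaultdict


def favorable_rates(records, group_key, outcome_key):
    """Return, per group, the share of records with a favorable outcome (== 1)."""
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for record in records:
        group = record[group_key]
        totals[group] += 1
        if record[outcome_key] == 1:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def statistical_parity_difference(rates, unprivileged, privileged):
    """Difference of favorable rates; 0 means parity between the two groups."""
    return rates[unprivileged] - rates[privileged]


def disparate_impact(rates, unprivileged, privileged):
    """Ratio of favorable rates; 1 means parity (undefined if the privileged rate is 0)."""
    return rates[unprivileged] / rates[privileged]


# Hypothetical toy data: was a product of producer group A or B recommended?
records = [
    {"group": "A", "recommended": 1},
    {"group": "A", "recommended": 1},
    {"group": "A", "recommended": 1},
    {"group": "A", "recommended": 0},
    {"group": "B", "recommended": 1},
    {"group": "B", "recommended": 0},
    {"group": "B", "recommended": 0},
    {"group": "B", "recommended": 0},
]

rates = favorable_rates(records, "group", "recommended")
spd = statistical_parity_difference(rates, unprivileged="B", privileged="A")
di = disparate_impact(rates, unprivileged="B", privileged="A")
print(rates, spd, di)
```

In this toy example, group B is recommended far less often than group A, so both indicators deviate from their parity values. Which deviation should count as unfair is a normative choice that the sketch does not settle.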

As mentioned in the previous section, NVC go beyond RS’ capabilities (see Fig. 1) and, hence, responsibilities. In particular, they could involve the users in debates to inform, educate, and persuade them, as well as learn from them via argumentation-based negotiations. In other words, the users and the NVC are expected to interact dynamically over the recommendation, enriching both parties’ knowledge and adherence. To do so, NVC have to leverage domain-specific (e.g., food) recommendation, informative and assistive, persuasive, and argumentation-based systems and techniques. Below follows a brief description of such cornerstones.

2.1 Informative and assistive systems

These systems are often conceived including principles of multi-agent systems, i.e., intelligent, autonomous, collaborative/competitive, virtual entities with bounded rationality and knowledge [21, 22]. Such agents are a virtual embodiment of the user, collecting their data and providing personalized interactions [23, 24]. Moreover, virtual agents can interact among themselves, asking for and providing services/data to each other.

The agents’ intelligence, autonomy (i.e., proactivity), knowledge, and overall behavior raise several ethical concerns when dealing with users’ sensitive data. Sanz [25] analyzed several models for constructing moral or ethical-aware agents (e.g., the Artificial Moral Agent, AMA, which is supported by the cognitivist moral theory). In particular, AMA includes the implementation of intelligence, autonomy (self-governance), self-reflection, and at least one practical identity (e.g., personality) [26]. However, AMA implies limitations, such as a lack of self-awareness. For example, on one hand, an artificial identity can only conceive precoded values. On the other hand, an artificial identity can rewrite and reconstitute itself, becoming too volatile [25]. After all, virtual entities (even AMA) perform symbolic and sub-symbolic data processing, which lacks the means to deal precisely with contextual information and cannot develop social awareness as a human would. Thus, ensuring moral behaviors purely from a “thinking machine” perspective is still a hot topic under discussion.

2.2 Recommender systems (RS)

Such tools are decision-support systems intended to deliver suitable suggestions of products or services to a user based on their profile and collected data [27, 28]. RS are increasingly used in domains including e-commerce [29,30,31,32,33], food and nutrition [3, 34,35,36], and e-health [37,38,39]. The nature and impact of recommendations can vary significantly and can have a long-term influence on user choices.

Recommendations can be non-personalized (not requiring any prior knowledge about any specific user [40]) or personalized (requiring a remarkable amount of knowledge about the targeted user). Non-personalized RS employ techniques leveraging generic information, including items’ popularity, novelty, price, and distance, to sort the possible items of interest. Basket modeling and analysis are among the most prominent techniques in retail companies to identify complementary items (items that are usually bought together) [41, 42]. Personalized RS leverage users’ preferences, behavior, demographics, location, language, and other characteristic (personal) details to achieve a deep understanding of them [43, 44]. Such data are usually obtained by tracking the users [45, 46]. A popular mechanism to determine users’ preferences is the rating. Such user feedback can qualify an item explicitly or implicitly (if the preference is deduced from the user’s behavior) [47, 48]. For instance, when a user buys an item or puts it on the wish list, this behavior can be interpreted as a favorable inclination toward that item.

As summarized in [49], the most adopted techniques include collaborative filtering (CF), which leverages users’ similarities and ratings [50]; content-based filtering (CBF), which recommends similar items based on similar profiles’ previously liked items [51]; knowledge-based recommendation (KB), which is based on the user preferences and constraints [52]; and hybrid recommendation (HR), which combines the techniques mentioned above [53]. In particular,

- CF exploits data collected from many users to identify tastes and similarities between items and users [28, 54, 55]. Based on such information, the expected ratings of unseen items are calculated [56, 57]. CF algorithms can be classified as memory-based (using neighborhood) [58], model-based (matrix factorization, tensor completion) [59, 60], hybrid CF (combining model-based and memory-based) [61], and deep learning-based [62, 63] approaches.
- CBF leverages items’ characteristic features to provide new recommendations, and it is suitable when the user is directly interested in them [64, 65].
- KB encodes the knowledge about a given domain, and it is suitable when the variability and personalization options are broad and require both domain and item-specific knowledge [28, 66].
- HR includes combinations of two or more approaches to produce more robust recommender systems. For example, a CF approach depends on ratings and therefore disfavors unrated items. This limitation can be compensated for by combining CF with a KB approach (if items contain attributes) [61, 67].
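As an illustration of the memory-based (neighborhood) flavor of CF described above, the following minimal sketch predicts a missing rating as a similarity-weighted average of the ratings given by other users. The users, dishes, ratings, and function names are hypothetical, and cosine similarity over co-rated items is only one of several possible similarity measures.

```python
from math import sqrt

# Hypothetical user -> {item: rating} data on a 1-5 scale.
ratings = {
    "ana": {"lentil soup": 5, "pizza": 2, "salad": 4},
    "ben": {"lentil soup": 4, "pizza": 1, "salad": 5, "ramen": 4},
    "carl": {"lentil soup": 1, "pizza": 5, "ramen": 2},
}


def cosine_similarity(a, b):
    """Cosine similarity computed over the items rated by both users."""
    common = set(a) & set(b)
    if not common:
        return 0.0
    dot = sum(a[i] * b[i] for i in common)
    norm_a = sqrt(sum(a[i] ** 2 for i in common))
    norm_b = sqrt(sum(b[i] ** 2 for i in common))
    return dot / (norm_a * norm_b)


def predict_rating(ratings, user, item):
    """Similarity-weighted average of the neighbors' ratings for `item`.

    Returns None when no other user has rated the item, which is the
    rating dependency that disfavors unrated items.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for other, other_ratings in ratings.items():
        if other == user or item not in other_ratings:
            continue
        similarity = cosine_similarity(ratings[user], other_ratings)
        weighted_sum += similarity * other_ratings[item]
        weight_total += abs(similarity)
    return weighted_sum / weight_total if weight_total else None


prediction = predict_rating(ratings, "ana", "ramen")
print(prediction)
```

Since "ana" rates dishes more like "ben" than like "carl", the prediction for "ramen" lands closer to ben's rating than to carl's. The `None` result for items nobody has rated shows why a hybrid with a KB component can be useful.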

2.3 Persuasion techniques and processes

These approaches are intended as “activities that involve one party trying to induce another party to believe something or to do something” [68]. Persuasion techniques have been predominantly used in healthcare, where behavioral change is remarkably beneficial for the individual in all the nuances of the domain. Indeed, healthcare systems have evolved toward patient-centered care over the past decades to improve medical indicators and quality of life in general. As a result, people have progressively become more autonomous in adopting healthy behaviors, mainly through active health education, ensuring appropriate follow-up of care, and monitoring by health professionals. The use-cases adopting these techniques include psychological support [69], elderly care [70], chronic diseases [71], wellness [72], healthy diet [49], smoking cessation [73], telerehabilitation [74], and weight activities [75]. Intelligent systems and Web-based applications are typically at the core of most of the proposed solutions in the literature. Indeed, the concomitant market growth of mobile applications, devices, sensors, and connected watches has fostered the development of online health and wellness applications [76].

The most implemented/associated persuasion theories are the Persuasive System Design (PSD) [77,78,79], Fogg’s behavioral models [79, 80], Social cognitive theory (SCT) [81, 82], and Self-determination theory (SDT) [83]. Persuasive technology should be produced as closely as possible to the needs and context of the users and, when possible, involve key people in a co-creation initiative. Indeed, persuading users to improve their physical activity would be different from persuading them to take medications or stop bad habits.

Unfortunately, this research area and market seem to be still in their early stages. Most scientific contributions present computational persuasion techniques at a conceptual level, with only a few prototypes operating on a large scale, and little concrete evidence of the large-scale applicability of these technologies. The most plausible explanations are that medical applications have more stringent requirements (for both procedures and devices) and that compliance with ethical principles is burdensome to prove and enforce [84]. This entails that there is still a long way to go before effective persuasion technologies provide a real benefit by changing user behavior and improving user health.

2.4 Argumentation techniques

Such methodologies are reasoning and logic-based approaches aiming to draw conclusions from “conflictual” information [85]. The reasoning can occur in dynamic and uncertain environments (e.g., possibly inconsistent information and time/resource restrictions). Among the most relevant theoretical frameworks, it is worth mentioning non-monotonic logic, which has been developed to deal with such circumstances [86]. In particular, it allows managing inconsistency and conflicting arguments, invalidating previous theorems and conclusions as new evidence requires it [86, 87]. Based on non-monotonic logic theory, several reasoning frameworks were developed [88, 89]. One of them is defeasible logic, a reasoning framework that enables updates and retraction of inferences [90, 91]. Defeasible logic is widely used in argumentative systems due to its flexibility and ability to reach conclusions from conflicting information [92].

In addition to defeasible logic, probabilistic reasoning [93], causal reasoning [94], and fuzzy logic [95] are commonly used to infer conclusions from uncertain information and incomplete knowledge. For example, probabilistic reasoning is frequently used to find causal relationships between random variables [96], causal reasoning is useful for explaining complex behaviors and generating arguments, and fuzzy logic is used in situations characterized by various degrees of truth. Another example is Dung’s Abstract Argumentation framework (AA) [97]. Here, the arguments are connected via attack relationships, and the conflicts are resolved by finding an acceptable argument, which is strongly supported by arguments [97,98,99,100]. Further studies have extended such a framework by introducing preferences to weight the arguments [101,102,103,104,105], arguments ranking [106,107,108], and generalizing the existing approaches into the Assumption-Based Argumentation framework (ABA) [109].

The approaches mentioned above are widely employed by studies dealing with complex and dynamic environments. In such studies, distributed virtual entities (namely, agents) leverage them to solve conflicting situations with other virtual agents or with human users. Multi-agent Systems (MAS) are composed of several intelligent and autonomous entities interacting in a shared environment and mimicking social interactions [110]. Each agent has its own knowledge, beliefs, and a set of behaviors to actuate its intentions. This can generate conflicts and divergences, which are solved via argumentation techniques. However, such techniques might not be enough to reach consensus and cooperation. Hence, argumentation has been “included” within a negotiation process, where conflicting agents exchange proposals, arguments, and knowledge. This process is known as argument-based negotiation (ABN). It is characterized by a reasoning mechanism, negotiation protocol, and strategy [111,112,113,114,115].
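To illustrate how conflicts among arguments connected by attack relationships can be resolved, the sketch below computes the grounded extension of an abstract argumentation framework, i.e., the smallest set of arguments that defends all of its members. Grounded semantics is only one of the semantics defined for such frameworks and is chosen here for simplicity. The argument names and the nutrition scenario are hypothetical.

```python
def grounded_extension(arguments, attacks):
    """Compute the grounded extension of an abstract argumentation framework.

    `arguments` is a set of argument names and `attacks` a set of
    (attacker, target) pairs. Starting from the empty set, the loop
    repeatedly collects every argument whose attackers are all attacked
    by the current set, until nothing changes.
    """
    attackers_of = {a: {x for (x, y) in attacks if y == a} for a in arguments}
    extension = set()
    while True:
        defended = {
            a
            for a in arguments
            if all(
                any((defender, attacker) in attacks for defender in extension)
                for attacker in attackers_of[a]
            )
        }
        if defended == extension:
            return extension
        extension = defended


# Hypothetical scenario: the coach argues for a dish, a health concern
# attacks that recommendation, and a medical update attacks the concern.
arguments = {"recommend_dish", "dish_too_salty", "salt_limit_lifted"}
attacks = {
    ("dish_too_salty", "recommend_dish"),
    ("salt_limit_lifted", "dish_too_salty"),
}
accepted = grounded_extension(arguments, attacks)
print(sorted(accepted))
```

Here the recommendation is reinstated because its only attacker is itself defeated by an unattacked argument. With two mutually attacking arguments and nothing else, the grounded extension is empty, which reflects the cautious nature of this semantics.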

2.5 Virtual coaches as nudges

As outlined in Fig. 1, besides the pure domain-specific features, the areas composing a virtual coach present clear overlaps. For example, food recommender systems, informative systems, and persuasive technologies’ features can blend into health educational nudges. According to Sunstein and Thaler [116], a nudge is an intentional modification of “any aspect of the choice architecture that alters people’s behavior in a predictable way without forbidding any options or significantly changing their economic incentives”.

Classic examples of health nudges are placing vegetables at eye level to increase consumption in a canteen or, for example, highlighting important information about a risky product in red to discourage its rapid use. Such interference with the nudge’s target effectively modifies the environment of the user’s choice without forcing the individual to do anything and without modifying their options. Therefore, why consider NVC as health nudges? First, they promote goals, such as health and the environment, not only because of their intrinsic value but because they are the users’ goals. Indeed, if the user chooses to use an application that is transparently devoted to improving their health and ecological behavior, we can legitimately assume that they regard health and ecological behavior as personal goals. Second, NVC seek to influence the user’s “choice architecture” in a predictable way in favor of these goals without ever constraining them.

However, it is worth noticing at the outset that NVC belong to a specific subset of nudges, namely, educational or informational nudges. Although NVC do indeed seek to promote the consumption of healthy and sustainable products, they do not intend to rely primarily on unconscious influence (often referred to in studies on e-coaching as the Automatic Decision System) but seek to convince through information and argumentation made possible by a virtual assistant (often referred to as the Deliberative System).

3 Methodology for ethical and legal challenges elicitation

The context and focus of the paper revolve around nutritional virtual coaches. Since this is a heavily multi-disciplinary subject, we have decomposed the NVC and provided the most important underlying notions of its components. In turn, we tackled the elicitation of ethical challenges and mitigations by employing an ethical framework inspired by the recommendations of the European High-Level Expert Group on AI (AI HLEG, 2019) and the Ethix laboratory (see Footnote 1). In particular, it requires systematically addressing four questions:

- what do we want to produce with our innovation?
- who are the actors involved, and what are their respective interests?
- what are the ethical risk areas (i.e., the interests of the different actors) that could be threatened?
- for which of these risks are we responsible or not responsible?

Elaborating on the initial findings generated by such questions, we have further investigated the growing literature on the ethics of recommender systems and, more specifically, Food Recommender Systems. This is notably due to the ongoing discussion about the societal, legal, and ethical consequences of recommender systems, including polarization, filter bubbles, and echo chambers. In this context, the works of Milano et al. [5], Karpati et al. [6], and Kampik et al. [117] were instrumental in defining the ethical challenges (ECs) related to recommender systems. In particular, we extracted and extended the ECs identified in these studies, scaling them to the full NVC picture w.r.t. food, nutrition, opacity, and user data.

Given the scarcity of information on the matter, we had to adopt a different approach to address the challenges concerning informative and assistive systems. We leveraged the AI HLEG questions to assess the literature focusing on the applications of care for older adults, children, and other sensitive populations, where ethical challenges are most critical [118, 119]. Moreover, to identify ECs (notably those related to informative and assistive systems, which also relate to persuasive technologies), we relied on interviews with users (i.e., professional nutritionists and individuals interested in NVC) reporting the determinants of the trustworthiness of the system in question. Furthermore, conforming to the latest EU guidelines about trustworthy and explainable AI, we focused on ECs related to transparency and explainability.

Finally, elaborating on argumentative systems proved to be particularly challenging. Although automated argumentation is a well-established research field, notably in the domain of multi-agent argumentation, very few works attempt to identify and discuss ethical concerns or challenges associated with argumentation-based systems. To cope with this problem, we resorted to guidelines, recommendations, and ethical challenges identified in the domain of human argumentation and scaled them to human–machine–machine–human scenarios (e.g., argumental integrity, fairness, etc.) [120]. Once all the challenges had been elicited, populating the overall vision of the ECs in NVC, a bottom-up approach was employed to propose the envisioned mitigation strategies.

Concerning the elicitation and formalization of the legal challenges, the conducted analysis is based on in-depth knowledge and precise examination of the technical–functional features of the NVC. These elements constituted the indispensable basis for structuring legal reflections on the implications and effects of using these systems for ordinary users. Starting from a detailed study of the technological state of the art, law experts have tried to identify profiles of possible or overt criticalities, applying their knowledge in terms of ethical principles and legal categories. In particular:

- ethical and legal concepts were carefully (re)constructed w.r.t. specific human–NVC interaction contexts;
- possible concerns were identified, either by analogy or contrast with scenarios already addressed in literature, doctrine, and/or case law;
- possible challenges were highlighted, starting with problems that ethical theories and legal instruments seem not yet able to address, or to address efficiently, with regard to the classes of systems examined here;
- mitigation strategies were proposed, trying to anticipate the needs and spheres of fragility to which the individuals involved in the interaction may be exposed and addressing them with the approach and resources proper to both disciplines.

Although the analysis of ethical and legal implications has been organized in two distinct sections (to respect the specificities, theoretical, and applicative potentialities of each of the two disciplines), the complementarities have not been overlooked. Indeed, Fig. 2 displays a schematization of the envisioned ethical-legal liaisons among the challenges.

4 Ethical challenges and mitigation strategies in NVC

The systemic evolution from RS to NVC entails a distinct set of challenges that requires urgent attention. Thus, to pave the way for ethics-aware Personalized Food E-Coaching Systems, we have analyzed and extended previous studies on food RS, such as Milano et al. [5], Kampik et al. [117], the very recent food recommender system handbook [118], and [3], whose focus is on the health aspects of food recommender systems. As a result, two sets of ethical and legal challenges (EC–LC) are organized below per subsystem (NVC components, see Fig. 1). The previous section elaborated on the methodology adopted to elicit such sets of challenges.

4.1 Personalized food recommender system

- EC1.1 To circumvent inappropriate recommendations: suggestions that could endanger the users’ health and cause moral damage to their fundamental beliefs and values must be avoided. A possible first mitigation strategy could be to cross-check the recommendation with (semi)official sources, for example, in the case of NVC, using the nutriscore as a reference [121].
- EC1.2 To ensure privacy: the generation of personalized food recommendations entails access to personal and sensitive user information. Collaborative filtering, one of the most widely used approaches in recommender systems [2], has been shown to be vulnerable to data leakage in the inference phase [122, 123]. Recommender systems involve an inherent trade-off between the accuracy of recommendations and the extent to which users are willing to release information about their preferences. In the literature, this trade-off has been tackled by relying on a layered notion of privacy for corresponding user groups [124]. We envision further investigation in this direction.
- EC1.3 To safeguard autonomy and personal identity: RS could affect the user’s autonomy and personal identity by (i) intentionally limiting their freedom of choice with biased recommendations and a reduced set of options and (ii) manipulating the user’s community to create a filter bubble and hide/ignore their personal identity. This would lead to echo chambers, filter bubbles, and cyber-balkanization [117]. It is necessary to strike a balance between exploitation (i.e., providing the user with recommendations derived from their personal preferences) and exploration (i.e., providing the user with unforeseen content) [125]. Moreover, techniques inspired by social choice architectures, where a recommendation has to comply with predefined opinion/product distributions [117], can be further extended.
- EC1.4 To reduce the RS opacity: modern RS engines leverage conventional ML/DL predictors (currently black boxes) and provide no transparency on the recommendation production process. Such opacity induces mistrust and hinders accountability. A reliable solution would be embedding explainable predictors into conventional RS. Such a path has been recently undertaken in the coaching and recommender system communities [126].
- EC1.5 To overcome the absence of fairness: skewed data sets, biased stakeholders, and inappropriate recommendations are prone to generate unfair recommendations (see the concerns raised about the fairness of the Yuka app [127] toward Italian producers). The opacity of the system makes it harder to detect such biases and unfair outcomes. Thus, to overcome such a challenge, a key measure would be to adopt techniques for debiasing [17].
- EC1.6 To deflect social pressure: since the early days of recommender systems [128], polarization and cyber-balkanization have been identified as among the most dangerous side-effects of using these systems [129, 130]. This problem has been accentuated in the recent decade by the widespread use of social networks as a source of news and information, which led to the formation of filter bubbles [131] and echo chambers [132] and increased already existing polarization (i.e., market, societal, and political). Proposed solutions include a better understanding of user experiences [130] and devising new algorithms aiming at reinforcing the center of the political spectrum, as well as aiming for technology-facilitated societal consensus [133].
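Two of the mitigation strategies above lend themselves to a compact sketch: cross-checking candidate recommendations against a reference label (EC1.1) and balancing exploitation with exploration (EC1.3). The example below assumes that each candidate already carries a Nutri-Score letter grade obtained from an external source. The dishes, the preference scores, the threshold "C", and the epsilon-greedy selection rule are illustrative assumptions rather than the methods prescribed in [121] or [125].

```python
import random

GRADE_ORDER = "ABCDE"  # Nutri-Score letter grades, most to least favorable


def cross_check(candidates, worst_allowed="C"):
    """Keep only the candidates whose grade is no worse than `worst_allowed`."""
    limit = GRADE_ORDER.index(worst_allowed)
    return [c for c in candidates if GRADE_ORDER.index(c["nutri_score"]) <= limit]


def pick(candidates, epsilon, rng):
    """Epsilon-greedy choice among the candidates.

    With probability `epsilon` a random candidate is proposed (exploration);
    otherwise the one with the highest predicted preference (exploitation).
    """
    if not candidates:
        return None
    if rng.random() < epsilon:
        return rng.choice(candidates)
    return max(candidates, key=lambda c: c["predicted_preference"])


# Hypothetical candidates produced by an upstream recommender.
candidates = [
    {"name": "fried snack", "nutri_score": "E", "predicted_preference": 0.95},
    {"name": "vegetable curry", "nutri_score": "B", "predicted_preference": 0.80},
    {"name": "bean salad", "nutri_score": "A", "predicted_preference": 0.55},
    {"name": "cheese pasta", "nutri_score": "C", "predicted_preference": 0.70},
]

safe = cross_check(candidates, worst_allowed="C")
rng = random.Random(0)  # seeded only to make the sketch reproducible
greedy_choice = pick(safe, epsilon=0.0, rng=rng)
exploring_choice = pick(safe, epsilon=1.0, rng=rng)
print([c["name"] for c in safe], greedy_choice["name"], exploring_choice["name"])
```

The filter runs before the selection, so exploration never reintroduces an item that failed the cross-check. A real coach would likely replace the fixed threshold with user-specific and clinically validated constraints.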

4.2 Argumentative systems

Although automated and multi-agent argumentation are well-established [134] and used in socially implicated domains (e.g., eDemocracy [135]), only a few works address the entailed ethical challenges. Thus, we rely on the guidelines and recommendations defined for human argumentation. In particular, Schreier et al. [136] introduced the concept of argumental integrity, derived from the strong relationship between argumentation and fairness. To achieve an integral argumentation in NVC, the challenges are:

-

EC2.1

To attain formal validity: arguments must satisfy rational criteria that guarantee the transition from premise to conclusion.

To do so, before injecting an argument into the reasoning process, we have to prove its premises with a rule of inference [137] page 6. If the rule of inference uses conclusions derived from another argument, this process must be done first on this upper argument.

-

EC2.2

To leverage sole sincerity/truth: the argumenting participants must be sincere (i.e., only express opinions and argue in favor of “facts” honestly and transparently considered correct.

Authors in [138] propose the FIPA ACL protocol. This protocol proposes full transparency of the rules. Then, all the participants would be able to assess the arguments of other participants.

-

EC2.3

To ensure content justice: the arguments selected by a party must be both morally and legally just toward other participants. To ensure that, it is possible to define a list of forbidden premises. This list should be based on some moral or legal rules. An argument conclusion cannot be derived with premises from this list.

-

EC2.4

To enact fair and just procedures: the argument generation and exchange procedure must allow equal capabilities/opportunities to all the participants to contribute toward a solution according to their individual (relevant and justifiable) beliefs.

To do so, it is recommended to use a dialogue game protocol enabling individual rationality and respecting fairness (i.e., the rules treat the participants equally). Individual rationality means that agents cannot advance arguments that are counterproductive to them. One example of protocol fitting with this prerequisite (besides FIPA ACL) is the inquiry dialogue [139]. It allows both the individual purpose and equal treatment of the participants by the rules.

-

EC2.5

To ensure compliance-verification convergence: the evaluation of an argument can extend to assessing the source, selection mechanism, etc.

To avoid diverging investigations, we envision (i) employing the concept of source/speaker’s reputation (well-established practice in the MAS domain [140]) and (ii) belief checking, verifying the “honesty” of an agent’s mistake and enabling its “correction” via other agents or human user/experts feedback (assuming their unbiased honesty).

-

EC2.6

To simplify or aggregate arguments: in some cases/applications, the time available for an agent to reply (and hence for argument generation, exchange, and assessment) can be remarkably limited. Operating in a limited time may entail simplifying arguments and increasing the risks of unethical outputs.

Fan and Toni [141] propose a method to select an extension of minimal size to provide the most concise explanation. It is possible to use this method to compute the minimal set of arguments allowing the defense/attack of the decision argument. Such a method allows focusing on the most important arguments and thus saves time.

-

EC2.7

To produce multi-modal arguments: depending on the context and receiver knowledge, the same argument might be required to be communicated using multiple channels (e.g., audio, visual, or textual). The argument production process might differ according to the selected channel, and this may cause inconsistencies, divergences, and non-conformities. The proposed FIPA ACL protocol is at best semi-decidable.
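The idea behind EC2.6 of keeping only the smallest set of arguments that still defends a decision can be illustrated with a minimal sketch. It assumes a Dung-style abstract argumentation framework (arguments plus an attack relation) and uses a brute-force search over subsets; it is not the algorithm of [141], and the function names and the example arguments are hypothetical.

```python
from itertools import combinations


def is_conflict_free(candidate, attacks):
    # No member of the set attacks another member (or itself).
    return not any((a, b) in attacks for a in candidate for b in candidate)


def defends(candidate, arg, attacks, arguments):
    # Every attacker of `arg` is itself attacked by some member of the set.
    attackers = [x for x in arguments if (x, arg) in attacks]
    return all(any((d, x) in attacks for d in candidate) for x in attackers)


def is_admissible(candidate, attacks, arguments):
    return is_conflict_free(candidate, attacks) and all(
        defends(candidate, a, attacks, arguments) for a in candidate
    )


def minimal_admissible_set(arguments, attacks, decision):
    """Smallest admissible set containing `decision`, or None if none exists.

    Brute force (exponential in the number of arguments): illustration only.
    """
    others = sorted(a for a in arguments if a != decision)
    for size in range(len(others) + 1):
        for extra in combinations(others, size):
            candidate = {decision, *extra}
            if is_admissible(candidate, attacks, arguments):
                return candidate
    return None


# Hypothetical nutrition example.
arguments = {"eat_fish", "allergy", "allergy_test_negative", "price"}
attacks = {("allergy", "eat_fish"), ("allergy_test_negative", "allergy")}
explanation = minimal_admissible_set(arguments, attacks, "eat_fish")
```

Here the unrelated argument `price` is left out of the explanation, since the decision is already defended by the negative allergy test alone.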

4.3 Informative and assistive systems

Intelligent assistive technology (IAT) is the umbrella term defining assistive solutions boosted by recent breakthroughs in AI, social robotics, ambient intelligence, and wearables. Such solutions primarily target care for the elderly and individuals with special needs [23, 119]. However, the advancement of IAT has raised several ethical challenges. Notably, the applications intended to be used by sensitive individuals leverage data collection remarkably and operate in human proximity. The ethical challenges involving IAT can be formalized as follows:

-

EC3.1

To facilitate technology access and IAT rightful behaviors: IAT are approaching individuals with special needs and cognitive impairments (e.g., dementia [142, 143]) to drive their decision-making process. Therefore:

-

Enabling dynamic consent management and empowering the user over it is a challenge already tackled in clinical trials [144]. However, assistive systems are still behind w.r.t. such aspects. A first necessary step would be to enable the user to revoke their consent and verify their correct understanding of the system functionality, scope, and use of their data. Moreover, the terms of service are rarely really read/understood by the users. It is indeed questionable whether reading-accepting these terms would truly qualify as informed consent [145]. This problem is exacerbated in the case of adults with dementia or Alzheimer’s disease. User awareness should be the key to accessing IAT and should, somehow, be measured/assessed.

-

IAT never substitutes medical personnel; it is supposed to work alongside them. Although critical decisions are not left in the hands of IAT, several sensitive tasks can be automated and, especially when they rely on AI-based learning mechanisms, can operate unsupervised and evolve in unexpected/undesired directions. Hence, after some time, the system or its functionalities might no longer be the same for which the consent was originally given [119]. A needed intervention is to develop mechanisms ensuring that the user’s data are only used as “originally” intended or that the user is informed about possible system shifts. Moreover, it should be ensured that additional sensitive data possibly provided by the user but not required by the IAT would not be processed or trigger the attention of specialists and system administrators.

-

EC3.2

To ensure the system identity: untruthful information is not the sole source of deception. Indeed, sensitive or cognitively impaired users can be deceived by the unclear nature of virtual assistants (agents) and not being able to “treat” them as non-humans. This risk is exacerbated in the case of robotics (both humanoid and zoomorphic), since their shapes are inherently deceptive (e.g., the user might tend to treat the robot as a pet [119, 146]). In particular, in the case of individuals with cognitive difficulties or disabilities, such effects cannot be completely removed [9]. Therefore, to mitigate the harmful consequences of such dynamics, it is advisable to ensure that these AI systems are always used as tools and not as alternatives to human care and assistance. In other words, for instance, to make sure that AI systems are used under the supervision or in the presence of trained personnel and that just auxiliary roles are delegated to them. Furthermore, crucial aspects of the psycho-physical health of frail people involved in the interaction should not rely on them only.

-

EC3.3

To ensure medical data confidentiality: IAT might be required to store, process, and selectively share even medical data [120]. Therefore, besides the well-known privacy concern, the confidentiality challenge assumes a broader spectrum [147]. Although many pieces of information acquired by IAT are not considered strictly medical according to the regulations in effect (e.g., swiping behavior on mobile devices, wearables, and surveillance systems data), they can be used to infer health status and behaviors. To overcome the ambiguities due to gray areas in the management of user data for assistive and clinical purposes, this possibility should be disclosed to the user when the data are required and collected. Moreover, it should be ensured that the actual use of such sensitive data is traced, that the user’s health data are not used for profiling for ends other than strictly care, and that they are not shared with third parties.

-

EC3.4

To make the solutions affordable: some IAT come with a cost that not every user can afford (e.g., the cost of social robots is relatively high and prohibitive). Thus, affordability is a key ethical concern, since it can unfairly determine who can access and benefit from a given service [147]. Ensuring the parallel development of web/mobile applications mimicking or somehow replicating the behavior of robot-based systems could mitigate such a phenomenon. Although the benefits from interacting with an anthropomorphic robot might be lacking, it can still be a service enabler. For example, a user can start their journey in increasing nutrition knowledge by profiting from virtual assistants, which are remarkably more affordable (if not free) than human nutrition counseling. Nevertheless, it is worth highlighting that virtual assistants can help achieve given goals but not entirely replace medical professionals. For example, if a user wants to perform better in their daily life and eat more sustainably but cannot afford nutrition counseling, they could already take advantage of several mobile applications. In this case, the app is a good option to increase their nutrition knowledge and could contribute to the early promotion of new healthy habits in their daily life.

-

EC3.5

To ensure safety boundaries: IAT are intended to assist and not replace medical doctors or care providers. To this end, the investigation of mechanisms that certify or monitor boundaries, safety, and pertinence of IAT services and behaviors must be a priority.
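The dynamic consent requirements discussed under EC3.1 (revocable consent, use of data only for the originally intended purposes, and renewed consent when the system changes) can be sketched as a simple gate in front of any data processing. This is a minimal illustration under stated assumptions: the `ConsentRecord` fields, the `may_process` helper, and the use of a version string to detect system shifts are all hypothetical and not taken from the cited works.

```python
from dataclasses import dataclass


@dataclass
class ConsentRecord:
    user_id: str
    purposes: set          # purposes the user explicitly agreed to
    system_version: str    # system version the consent was given for
    revoked: bool = False

    def revoke(self):
        # The user can withdraw consent at any time.
        self.revoked = True


def may_process(consent, purpose, current_version):
    """Return (allowed, reason) for a requested use of the user's data."""
    if consent.revoked:
        return False, "consent revoked"
    if current_version != consent.system_version:
        # The system is no longer the one the user consented to.
        return False, "system changed: renew consent"
    if purpose not in consent.purposes:
        return False, "purpose not covered by consent"
    return True, "ok"


# Hypothetical usage.
consent = ConsentRecord("user-1", {"meal_recommendation"}, "1.0")
allowed_now = may_process(consent, "meal_recommendation", "1.0")
profiling = may_process(consent, "marketing_profiling", "1.0")
after_update = may_process(consent, "meal_recommendation", "2.0")
```

Such a gate does not address whether the user actually understood what they consented to; it only enforces the recorded scope.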

4.4 Persuasive technologies and processes

The fact that persuasive technologies explicitly intend to influence individuals demands careful consideration when planning for their adoption. In particular, which interests are served (the designers’ or the users’) and the validity, honesty, and production of the actual contents must go under the magnifying lens.

-

EC4.1

To provide transparency: similar to other NVC’s sub-systems, several user studies have confirmed that users do not easily identify the persuasion in action, while they correctly do it when persuasion is not present [9, 148]. Awareness is the key. Indeed, it puts the user in a completely different mindset when interacting with the system. Unfortunately, to date, many systems adopting such approaches do not disclose to the user the underlying technique, goals, and interests. To overcome such a lack of transparency, mechanisms clearly notifying/warning the user about their exposure to persuasion strategies must be put in place from the very first interactions with the given system.

-

EC4.2

To state the goals clearly: several interviews have reported users demanding trust in the persuader and the intention of a given system [148, 149]. Nevertheless, too often, the persuasion goals are vague or unclear. A user approaching a persuasive system should be notified in a timely manner about goals formulated in a thorough, clear, and understandable manner. As a first approach, current research suggests including an “information leaflet” advising the user about the method and the goals of the persuasive system [150].

-

EC4.3

To prevent unintended behavior change: the designer of the persuasion strategy should take responsibility for unintended, unforeseen, and unpredicted outcomes. However, new persuasive approaches might include autonomous AI-based strategies, actions, and contents. Therefore, a first step would be to investigate explainable and debuggable mechanisms [12], followed by the investigation of new ways to identify the liability of intelligent autonomous systems.
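The disclosure requirements in EC4.1 and EC4.2 can be read as a precondition on the interaction: no persuasive message is delivered before the user has been shown, and has acknowledged, the method and goals of the system. The sketch below illustrates this under stated assumptions; the `Leaflet` and `PersuasiveSession` names and their fields are hypothetical and only loosely inspired by the “information leaflet” idea of [150].

```python
from dataclasses import dataclass


@dataclass
class Leaflet:
    technique: str         # persuasion technique in use
    goals: list            # goals the persuasion pursues
    interests_served: str  # whose interests the system serves


class PersuasiveSession:
    def __init__(self, leaflet):
        self.leaflet = leaflet
        self.acknowledged = False

    def show_leaflet(self):
        # Plain-language disclosure shown at the first interaction.
        return (
            f"This system uses {self.leaflet.technique} to pursue: "
            f"{', '.join(self.leaflet.goals)} "
            f"(interests served: {self.leaflet.interests_served})."
        )

    def acknowledge(self):
        self.acknowledged = True

    def send(self, message):
        # Refuse to persuade before the disclosure has been acknowledged.
        if not self.acknowledged:
            raise PermissionError("persuasion must be disclosed first")
        return message


# Hypothetical usage.
session = PersuasiveSession(
    Leaflet("goal-setting reminders", ["eat more vegetables"], "the user")
)
notice = session.show_leaflet()
```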

Table 1 summarizes the challenges discussed above.

4.5 Cross-cutting ethical challenges in NVC

Concerning personalized food recommender systems (EC1), as mentioned by the European High-Level Expert Group on AI (AI HLEG), information ethics is essentially based on four principles: explicability, respect for autonomy, non-harmfulness, and fairness [151]. However, it is essential to highlight that respect for “user autonomy” is a prerequisite challenge and a cross-cutting principle. This is because free user consent relies on an effective explicability strategy (how can one consent to what one only half understands?). Indeed, there is an empirical link between justice and respect for autonomy: the less the people affected by a decision or technology (i.e., RS) consent freely and understand what is at stake, the less their aspirations and needs are generally taken into account. Such a concept clearly applies to food recommendation systems. Indeed, the desire to make recommendation mechanisms less opaque (EC1.4), to ensure the compatibility of recommendations with the user’s values (EC1.3), or to protect the user from peer pressure (EC1.6) only makes sense as a means to ensure user control. Similarly, in Western democracies, the notion of privacy (EC1.2) that emerged at the end of the 19th century was not a loose end but a condition for personal autonomy [151]. Therefore, coping with the aforementioned challenges means promoting user autonomy, understood as informed consent. As mentioned above and summarized by the third column of Table 1, there are already a number of risk mitigation strategies explored in the existing literature for each of these challenges. Although detailing them goes beyond the scope of this article, Sect. 5.6 suggests some improvements of informed consent from the legal and ethical perspectives.

Concerning argumentative systems (EC2), argumental integrity grounds the identified challenges. In particular, to have integrity, an argument should be rational and transition reasonably from the premise to the conclusion (i.e., EC2.1). At the same time, it should be sincere: participants need to express opinions and argue in favor of honest and transparent “facts” (EC2.2). Moreover, the arguments that satisfy EC2.1 and EC2.2 must undergo EC2.3, i.e., guarantee the integrity and be morally and legally just toward the participants. Therefore, a set of new mechanisms and protocols should be developed to ensure these three properties (i.e., rationality, EC2.1; truth, EC2.2; and content justice, EC2.3). Such protocol(s) would verify if an argument is reaching its conclusions reasonably, ensure full transparency of the rules and allow participants to assess the arguments of their peers, as well as forbid the use of unethical or illegal premises. In addition, the same protocol(s) should enforce the fair treatment of participants and provide them with equal opportunities and capabilities (EC2.4), features that should be verifiable (EC2.5). Finally, to facilitate their understandability and improve their presentability to the users, arguments could be simplified and communicated to the user using multi-modal channels, while ensuring that no bias or error-in-translation is introduced (EC2.6 and EC2.7).
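Two of the checks such a protocol would perform, namely verifying supporting arguments before the argument that relies on them (EC2.1) and rejecting premises that appear on a list of forbidden ones (EC2.3), can be sketched as a recursive validation. This is a deliberately simplified illustration: it only checks that premises are grounded in accepted facts and does not model the rule of inference itself; the `Argument` type, the `check_argument` helper, and the example premises are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Argument:
    conclusion: str
    premises: tuple  # each premise is a fact (str) or a supporting Argument


def check_argument(arg, facts, forbidden):
    """Return (ok, reason). Supporting arguments are checked first."""
    for premise in arg.premises:
        if isinstance(premise, Argument):
            # The supporting ("upper") argument must hold before this one.
            ok, reason = check_argument(premise, facts, forbidden)
            if not ok:
                return False, reason
        elif premise in forbidden:
            return False, f"forbidden premise: {premise}"
        elif premise not in facts:
            return False, f"unsupported premise: {premise}"
    return True, "ok"


# Hypothetical example.
facts = {"user is lactose intolerant", "yogurt contains lactose"}
forbidden = {"user belongs to ethnic group X"}

avoid_lactose = Argument(
    "user should avoid lactose", ("user is lactose intolerant",)
)
skip_yogurt = Argument(
    "do not recommend yogurt", (avoid_lactose, "yogurt contains lactose")
)
biased = Argument("recommend cheap food", ("user belongs to ethnic group X",))

valid_result = check_argument(skip_yogurt, facts, forbidden)
biased_result = check_argument(biased, facts, forbidden)
```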

Concerning intelligent assistive technology (EC3), the ethical challenges typically relate to their access and rightful behavior (EC3.1), ensuring the system identity (EC3.2), the protection of the user data (EC3.3), their affordability (EC3.4), and the fact that they do not replace human personnel (EC3.5). In this context, transparency and explainability play a crucial role in helping tackle the other challenges. In particular, explainability allows for inspection and verification of rightful behaviors (EC3.1), conveys a clear definition of the system identity (EC3.2), ensures a transparent processing and storage of user data (EC3.3), and helps to define the boundaries of the system clearly (EC3.5). Finally, the affordability of the IAT systems (EC3.4) should be dealt with separately, since it is mainly a technological-, market-, and national health-related challenge.

Concerning persuasive technologies (EC4), they explicitly intend to influence individuals. For this reason, transparency is a challenge of chief importance. More specifically, transparency enables users to identify when they are subject to a persuasion strategy and helps them understand its consequences (EC4.1). Moreover, transparency forces the system to state its objectives clearly, thereby avoiding ambiguities, and guarantees that users are notified about their goals and informed about their progress and divergence (EC4.2). Finally, with explainable, debuggable, and transparent mechanism(s), unintended outcomes could be identified and rectified (EC4.3), at least to a partial extent.

Abstracting the analysis across the four characterizing domains, some questions and cross-considerations arise. For example, “is it enough to respect autonomy understood as informed consent?” Not quite. The reader should note that the overall challenge of autonomy is not limited to the specific challenges linked to informed consent. For example, EC1.3 is not mainly related to informed consent, nor are challenges EC4.1-2-3. The point of keeping the user informed about the running persuasive strategy and its purpose/goal(s), and of preventing unintended/inadmissible behavioral changes, is not only to meet the minimum standards of autonomy as informed consent but also to empower the user over the habits they want to change. Hence, we can consider that EC1.3 and EC4.1-2-3 require a global and more ambitious approach, i.e., a genuine empowerment strategy that helps the user to clarify rules from their standpoint and supports their behavioral adherence. In turn, “what could a more ambitious and comprehensive empowerment strategy look like?” We could invite the user not only to pre-define options but also to explicitly state (e.g., via a checklist) what they expect from an FRS or NVC. For example, it might be useful to compel the user to answer a few questions, such as “do you expect to lose weight?”, “do you expect to eat more locally?”, etc. Above all, it is important to understand the priority of their goals. Certainly, the user’s reaction can already give an idea of their priorities. EC1.3 mentions predefining the possible options. However, previous experience (confirmed by scientific research in behavioral studies [152]) shows that “to wish for something” and “to act on what is wished” can be remarkably different. Therefore, it is not enough to recommend to users what they usually like and take it as a good recommendation. Instead, users must be prompted to question and break down their objectives and, only then, be engaged with an empowerment strategy. If they are persistently dissatisfied with the recommendations, we must engage them in motivation assessment or objectives revision. By doing so, users can see whether their behavior is changing (or has changed) in a wanted or unwanted direction (EC4.3) and renew or clarify their acceptance of a given persuasion strategy (EC4.1) and the related goals.
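The empowerment strategy outlined above (an explicit checklist of expectations, a priority among goals, and a revision step when the user is persistently dissatisfied) can be sketched as follows. This is a minimal illustration: the `UserGoals` class, the ranking scheme, and the three-recommendation window used to define “persistent” dissatisfaction are assumptions made for the example, not values proposed in the text.

```python
from dataclasses import dataclass, field


@dataclass
class UserGoals:
    # Goal -> rank stated by the user (1 = most important).
    priorities: dict
    # Feedback per recommendation: True = satisfied, False = dissatisfied.
    feedback: list = field(default_factory=list)

    def top_goal(self):
        return min(self.priorities, key=self.priorities.get)

    def record_feedback(self, satisfied):
        self.feedback.append(satisfied)

    def needs_revision(self, window=3):
        # Hypothetical rule: the last `window` recommendations all failed.
        recent = self.feedback[-window:]
        return len(recent) == window and not any(recent)


# Hypothetical usage: answers to the checklist questions, ranked by the user.
goals = UserGoals({"lose weight": 2, "eat more locally": 1})
for satisfied in (True, False, False, False):
    goals.record_feedback(satisfied)
```

When `needs_revision()` becomes true, the system would prompt the user to reassess their motivation or revise their objectives rather than keep recommending along the same lines.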

Overall, the challenges presented above are complementary and, to a certain extent, share underlying points. For instance, transparency and explainability are present in EC1, EC2, EC3, and EC4. Moreover, while recommender systems can be seen as the bedrock of the NVC, informative and assistive technologies (IAT) lay out the application background and the case study, while persuasive and argumentation technologies define the methods used (or not to be used) to persuade the user to undergo the desired change. Furthermore, user data management and data protection are also transversal challenges shared by EC1, EC2, EC3, and EC4. In particular, in EC1, recommender systems require access to user data to propose the best recommendations. To produce the best arguments (EC2), the system should also access data related to the moral, ethical, and legal principles of the user and other participants. To deliver personalized care, user data is essential for the IAT (EC3), and finally, persuasion (EC4) cannot be effective without being familiar with the user preferences and concerns.

5 From ethical to legal concerns in nutrition virtual coaching

To date, the debate on new technologies is primarily formulated in terms of ethical guidelines rather than legal dispositions [153]. The main reason for this choice has been the need to foster innovation, avoiding as much as possible any form of constraint typically embodied, in the common imagination, by the law. By contrast, ethics is considered a flexible and soft regulatory tool. However, this sharp division between the two fields of analysis is due to a fundamental misconception and a misinterpretation of the profound nature of both disciplines. The result is that, by conceiving ethics as an alternative to regulation, we are encouraging an ethics-washing that makes “ethics, rights, and technology suffer” [154].

Overall, the abstraction of general principles does not allow for a unique and universal agreement on given situations [155]. Specific investigations, necessary for the maintenance of the society, are both time- and culture-sensitive in terms of scope, content, and countermeasures—which are not explicit in the general principles. For example, coordination cannot be defined nor regulated just leveraging principles, and the application of general principles in very particular cases might require “prudence” to defend, enforce, and ensure human rights and general norms [156].

Therefore, the specifications of ethical values and general principles can lead to different perceptions and standpoints even within the same cultures/contexts (e.g., divided and inconsistent European proposals [157]). Indeed, many of the initiatives that have arisen around the concept of AI systems and their concrete uses share common values. However, all of these (including fairness, transparency, explainability, responsibility, etc.) can be interpreted differently depending on the ideology, culture, and country we take into account [158].

Moreover, we should notice that due to the heterogeneous nature of the actors of the ethical debate around AI (i.e., academic research [159], corporations and organizations [160], civil society [161]), it is not possible to detect homogeneity in the methodological approach and authority in ensuring concrete application of ethics. Indeed, adherence to ethics codes is voluntary, and there are no mechanisms able to enforce compliance among the consociates [162].

Therefore, the fact that many ethical and legal principles can relate should not lead to the conclusion that they mirror the same type of rules or that they are interchangeable. Both instruments are necessary for the debate, yet neither is sufficient without the other.

The law is very often misunderstood in its meaning, functioning, and methodology. The most common understanding of legal norms is the one that connects them to prohibitions, rigid dispositions, and limitations [163]. In other words, they very commonly carry a negative connotation. On the contrary, the law is a structural body of positive principles and rules through which society is organized and which can be considered effective when it proves to be adaptable and to respond efficiently to the specific reality (or component of reality) that it is deemed to regulate [164].

Positive norms are intended to be mandatory and binding for any individual (or agent). For example, being fair is more than just an option. Nevertheless, sanctions occur only if the second type of norms is violated. Moreover, positive norms constitute progress toward clearer and more uniform standards. Positive norms can be uniform at different levels, for they are the result of an agreement among States (as is the case of International Law) or of an agreement among political forces at the end of a legislative process (as is the case of domestic law) [165]. Finally, any norm is the sum of the evaluation and balancing of political, social, and economic needs, which are not always concurrently considered when applying a mere ethical approach.

Then, against the idea that AI would highlight gaps in the law, such that it would be an inappropriate regulatory instrument, in 2019, the High-Level Expert Group stated that “no legal vacuum currently exists, as Europe already has regulation in place that applies to AI” [166]. This is because no legal vacuum exists in the legal system, generally speaking. Indeed, it does not consist of legal norms only, but also of legal interpretation, legal doctrine, and legal decisions of the courts of justice, at different levels [163].

This does not mean that the law is error-free, that it is always just, or that the norms we have today are the most appropriate for dealing with the challenges that new technologies pose. There are rules which may need to be revised in light of the uniqueness that, to some extent, AI displays. As mentioned above, one example is the General Data Protection Regulation (GDPR), which cannot guarantee to be always effective due to the huge amount of data stored and used by modern applications and the variety of scenarios in which this occurs [167]. Moreover, even the concepts of explicability and explanation should receive particular attention and a precise theorization at the legal level [168], so as to resolve the ambiguities that a concrete principle of explainable AI still poses to jurists. Nevertheless, these considerations do not lead to the idea that a regulation of new technologies based on the law should be avoided. On the contrary, they demonstrate the need to focus our attention on what is the most appropriate and functional way to regulate the matter and not on the advisability of doing so [169].

Despite the differences in methodology and scope highlighted here, ethical and legal analyses go hand in hand and mutually benefit from each other. For this reason, as we shall see, some of the challenges they could face may appear, at first, to correspond. In particular, we have identified the following legal challenges (LC) on the subsystems which compose a personalized food e-coaching system (i.e., NVC):

5.1 Personalized food recommender system

-

LC1.1

To avoid inappropriate/harmful recommendations: it is often possible to identify a recommendation as inappropriate or harmful only through an ex-post evaluation. However, the law has not only a punitive dimension but, above all, a dimension of prevention of damages/risks. Moreover, even applying a purely “restorative” approach, there are difficulties in allocating responsibility. Indeed, sometimes, the user may have contributed to the harmful consequence that occurred, which could not be promptly predicted or mitigated by the developers/service provider.

-

LC1.2

To sidestep manipulation and coercion: recommender systems could induce choices that users would not have made otherwise. They might also induce changes in their perceptions of themselves as individuals or of some aspects of reality (desires, preferences, needs). These dynamics could be considered manipulative (aiming at distorting the relevance and nature of the options available at the time of choice) or coercive (where the aim is to restrict the number of options considered available) [170].

-

LC1.3

To avoid steering the market unfairly: recommendations could have large-scale effects, affecting consumer choices so as to direct economic and market balances. An example could be that an NVC recommends foods of a specific brand, as the producer has economic interests in this regard. This could be a clear case of a manipulative dynamic that falls into the remit of unfair competition practices, for it conditions the choices of individuals to favor the producers’ financial interests.

-

LC1.4

To limit over-trust or mistrust: people’s biases and lack of technical knowledge can lead to a distorted representation of what the system concretely is and of which expectations can reasonably be placed on it. These issues are often addressed with XAI techniques. However, we should underline that, most of the time, explainability is not very much related to accountability [148].

5.2 Argumentative systems

-

LC2.1

To limit the side-effects of a data-based argument: in the case of automatic argumentation, the skepticism of legal experts may arise from the nature of the data on which they rely. Considering that biases are in the data, not only/directly in the AI itself, and that, at the moment, it is not easy to remove them, the final result could be discriminatory, offensive, fallacious, or misleading.

5.3 Informative and assistive systems

-

LC3.1

To discourage unsupervised use: NVC are specially designed to be used with subjects commonly defined as fragile due to age and physical/mental health conditions. These categories are subject to specific safeguards by the legal system, in particular in the case of choices that may impact health, autonomy, and the economic sphere. The possibility of solely unsupervised use of these systems/devices may violate such rules and expose the parties involved to possible material and psychological damages.

-

LC3.2

To handle deception: even if we cannot consider deception in human–robot/human–computer interaction a prerogative of fragile individuals, the impact of such deceptive dynamics can be more critical for them. In particular, deception in the context of assistive systems, especially if social and emotional robots are involved, can induce isolation, dehumanization, infantilization, and human dignity infringement [171].

-

LC3.3

To curb social discrimination: at a global level, the population is aging, and there are not enough trained personnel to cope with it. The diffusion of assistive and care systems may appear as an effective and efficient solution to solve the problem, slowly becoming the prevailing one. However, this would also undermine the very concept of “equality of starting points” [172], which underpins the right to health in Western democracies. This would create a form of discrimination, whereby only those who can afford large sums of money would be able to obtain appropriate assistance.

5.4 Persuasive technologies

-

LC4.1

To deal with conceptual ambiguity: the line between the concept of persuasion and the one of manipulation is still somewhat blurred. This nourishes a crucial uncertainty for legal argumentation, which makes the clear identification of the object of analysis its foundational basis. As a result, some harmful dynamics might still be considered lawful. An example can be the case in which a person is led to a behavioral change induced by the machine without the user having decided or realized it during the interaction.

-

LC4.2

To overcome the mere transparency requirement: trying to make a persuasive system transparent might not always be the most appropriate solution and certainly not the most effective one. First, it is possible that implementing systems that increase transparency makes the device less efficient and introduces additional errors (i.e., inaccurate interpretation of AI predictors), leading to failures [173]. Second, human cognition mechanisms are influenced by multiple subjective, biological, and contextual factors, making it, as of today, difficult to prove what is truly transparent for the end-user.

Table 2 summarizes the challenges presented above.

5.5 Informed consent: a transverse challenge

We have so far analyzed the challenges that AI systems could pose to the legal sciences, divided by classes of applications. Behind these, however, there is one common to all: informed consent.

Informed consent is considered one of the main pillars of contractual law, consumer protection law, and lawful economic transactions. Such a perspective is built around the figure of the so-called homo oeconomicus. This is the prototype of a perfectly rational, always wise human being, capable of identifying, to the best of their knowledge and conscience, the most advantageous decision to be taken in all circumstances [174]. Thus, the prerequisite necessary to allow individuals to embody the “rational consumer” would be to provide appropriate information that, once fully understood, will lead them to naturally make the choice that best pursues their own interests [175]. However, social sciences and legal practice have demonstrated that the idea of a fully aware decision-making process is essentially an illusion [176]. This is due to many concurrent factors.

First, we should consider the structural complexity. End users are usually ordinary individuals with a limited understanding (if any) of either the device’s technical characteristics or legal terms. Despite GDPR provisions, the information they should be made aware of often contains terms that are overly specialized or, on the contrary, too vague [177]. On the one hand, this is justified by the need to be accurate; on the other hand, by the need to match the expertise/knowledge of most users. Moreover, regarding privacy documents themselves, it has been shown that only people with a Ph.D. education level would be able to analyze them accurately and really understand them, as a matter of structure and length [178]. In addition, very often, data collection and storage practices are characterized by a degree of discretion that does not allow one to know what will actually happen to the data entered in the system. This, combined with the language issues, increases the difficulty of weighing the future risks of the current choice to share given information [179].

This leads to the second main challenge posed by the principle of informed consent. The fact that the information provided is, in the end, GDPR compliant cannot per se guarantee a balance of power between the economic actors involved. Indeed, the paradigm of consent in private law should protect the authenticity of individuals’ will, relying on the perfect correspondence between what the users have preconceived in their minds and what they have concretely consented to. However, as many neuroscientists and behavioral psychologists show us, this is very often not the case because of cognitive limitations that are intrinsic to human beings.

For a piece of information to be considered effective and meaningful, many subjective elements should be considered. A non-exhaustive list may include motivation, personal biases, knowledge, level of education, and cultural education. Even the way in which the information is provided may influence the willingness to receive it [145]. Furthermore, we should consider that when consent is required at the very beginning of the interaction, the user has the primary desire to start or carry on the activity. That can cause a lack of accuracy in understanding the real implications and the content itself [180]. This phenomenon is called “present bias”. It clearly highlights that, even if people rationally consider personal data protection relevant, this is not enough to overcome instinctive reactions triggered in a subconscious way by their own mind [181].

Consequently, we should admit the profound difficulty in considering the principle of informed consent as truly effective in addressing the critical issues posed by AI systems as a whole.

5.6 Mitigation strategies and functional requirements

The discussion conducted in this section aims to highlight the challenges posed by new technologies and approaches (in particular those revolving around NVC) from both an ethical and a legal point of view. What emerges is a constantly evolving field of research in which the demands of technical experts are inevitably intertwined with those of the human sciences. Therefore, the most appropriate solution is to create a balance that allows their coexistence in the ultimate interest of the individuals involved. That is why it is not possible, as of today, to provide clear-cut solutions but rather strategies for risk mitigation. These will be developed and implemented in future works, always starting from multidisciplinary and integrated research.

Clear statement about what the system is not and what it cannot do/replace. For instance, it could be useful to explicitly clarify that virtual assistants or chatbots can provide recommendations based on scientific and nutritional research, but that these cannot replace a consultation with a doctor or a nutritionist. This is even more true in the presence of specific health conditions or subjective body responses to the suggestions provided by the system. Such information should be stressed at the very beginning of the interaction, before the actual use of the system/device (i.e., NVC). However, changing nutritional behavior is not just an action, it is a process. Therefore, people’s bodies and minds are involved at different levels and in different ways throughout the use. Consequently, such content should be repeated when any change is made to the initial settings or as soon as the pre-determined goals/sub-goals are reached.

Explicit mention of categories of individuals for whom the use of the system is not indicated. To this end, it is necessary to identify, with the support of experts, the specific diseases for which unsupervised access to dietary advice could be harmful. In doing so, it will be necessary to take into account not only physical profiles but also the psychological dimension. Mental pathology, eating disorders, and body dysmorphia will have to be taken seriously into account. Regarding the latter categories, it should be considered that those who are directly affected are also the ones who find it most difficult to be consciously aware of them or admit them, even to themselves. Therefore, it would be appropriate to propose examples alongside each of the clinical categories indicated. This will serve a twofold purpose. On the one hand, it will ensure a softer approach, mitigating the emotional impact of being labeled with a pathology. On the other hand, it will raise awareness of the issue and raise doubts in those who might recognize themselves in the dynamics/symptoms mentioned without having realized that they might be dysfunctional.

Clear, user-centered goals. The goal should be set by the user only and could be subjected to unidirectional modification at any level of the interaction. Any mechanism that can force people, directly or subliminally, to follow the instructions/recommendations should be strictly avoided. To this end, the persuasive techniques implemented should be evaluated ex ante by a multidisciplinary team, which may include psychologists and neuroscientists, so as to foresee possible deceptive or coercive dynamics. Likewise, the right to refuse suggestions and stop the interaction should be guaranteed any time the user feels overwhelmed or bothered. Even in this case, the above-mentioned team should evaluate which communicative techniques should be applied/developed so as to cope with normal reluctance to change, without resulting in manipulation of will.

Timing and design of consent. The theme of informed consent is certainly one of the most sensitive, given the structural inconsistencies of this principle and the fallacy of its assumptions. Therefore, what we suggest is to structure the request for consent with characteristics that take into account the real nature (not fully aware and rational) of the average user. First, consent must be requested any time the user modifies what was previously established. This may make the interaction less fluid. However, the constant reminder to agree with new conditions forces individuals to pay attention and objectively realize what is happening. This would help mitigate the problem of giving consent out of inertia or without really dwelling on the implications of subsequent choices. In addition, the provision of consent should not be a mechanical exercise reduced to a single click. It would be useful to make this an interactive moment, at the end of which the device presents the user with quizzes through which to demonstrate a real and not fictitious understanding. If the user fails the test, new and more targeted explanations/information will be provided. The expected functionality will be unlocked only after passing the quiz.

Access to professional opinions. If there are concerns on the part of the user that the device does not understand or cannot resolve, or if the user develops reactions that were not expected or that the device fails to handle, access to a professional opinion should be guaranteed. This can result either in the direct intervention of a technician who takes control of the system and solves the issue (e.g., in case of a malfunction) or in an invitation to contact a personal doctor or a specialist who is part of the team that developed/supported the NVC. In both cases, the system should prevent any subsequent action until one of the two solutions mentioned above has been taken.
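The consent flow described under “Timing and design of consent” can be illustrated with a minimal sketch. It assumes a hypothetical `ConsentGate` that re-requests consent whenever the settings change and unlocks the functionality only once every quiz answer is correct. All class, field, and method names are illustrative assumptions, not part of any existing NVC implementation.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class QuizQuestion:
    prompt: str
    correct_answer: str
    explanation: str  # targeted explanation shown after a wrong answer


@dataclass
class ConsentGate:
    """Hypothetical gate: a feature stays locked until the quiz is passed."""
    questions: List[QuizQuestion]
    consented_settings: Optional[Dict[str, str]] = None  # settings last agreed to

    def needs_consent(self, current_settings: Dict[str, str]) -> bool:
        # Consent is requested again whenever the settings differ from
        # those in force when consent was last given.
        return self.consented_settings != current_settings

    def submit_quiz(self, current_settings: Dict[str, str],
                    answers: List[str]) -> List[str]:
        """Return explanations for wrong answers; an empty list means a pass."""
        if len(answers) != len(self.questions):
            raise ValueError("one answer per question is required")
        feedback = [
            question.explanation
            for question, answer in zip(self.questions, answers)
            if answer.strip().lower() != question.correct_answer.lower()
        ]
        if not feedback:
            # Record consent only after a fully correct quiz.
            self.consented_settings = dict(current_settings)
        return feedback

    def is_unlocked(self, current_settings: Dict[str, str]) -> bool:
        return not self.needs_consent(current_settings)
```

In this sketch a failed quiz returns the targeted explanations and leaves the feature locked, while any later change to the settings invalidates the recorded consent. How the quiz questions are authored and validated is left open and would require the multidisciplinary evaluation discussed above.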

Overall, ethics and law are disciplines with different (although somewhat related) methodologies, scopes, and purposes. The instances they both express and advocate are often interconnected, like in the case of NVC, where the two disciplines appear similar or even overlap. Nonetheless, they must be analyzed differently, respecting the specificities and potentials that both ethics and law, each in its sphere of competence, can express. Figure 2 shows the possible relationships identified w.r.t. the elicited challenges.

6 Practical implications of EC and LC on nutrition and food sustainability

NVCs are primarily intended to provide appropriate and tailored nutritional recommendations to users to optimize dietary health outcomes. However, in the last decade, growing concern about the environmental and social impacts of food production and consumption has raised awareness of the need to shift our dietary patterns toward more sustainable ones [182]. In this context, recommendations for healthy diets are paired with environmentally aware diet recommendations. Therefore, opening NVC to the sustainability perspective demands the assessment of an additional dimension of ethical challenge: the process of informing, educating, and learning about sustainable eating patterns. To address this challenge, an NVC should adopt a precise and transparent definition of sustainable diets, which pairs dietary intake references with environmental consumption thresholds while also considering cultural and social aspects. So far, the most widely accepted definition of sustainable diets is the one provided by FAO (2012) [183], which defines them as “diets with low environmental impacts which contribute to food and nutrition security and to healthy life for present and future generations. Sustainable diets are protective and respectful of biodiversity and ecosystems, culturally acceptable, accessible, economically fair and affordable; nutritionally adequate, safe, and healthy, while optimizing natural and human resources” [184]. This definition is broad and poses significant barriers to its adoption in NVC. Indeed, the definition does not provide detailed information on the nutritional, environmental, social, and economic criteria that should be considered to formulate the recommendations, the data used, and their reliability as well as their processing and modeling.
In the last decade, many studies have focused on the environmental boundaries of diets, with special attention given to dietary greenhouse gas emissions (GHGe) and water footprint (WF) (e.g., [185,186,187,188]). The EAT-Lancet Commission provided a comprehensive overview of the health and environmental outcomes of diets with the aim of reaching scientific consensus on health and environmental targets for a sustainable food system. The study identified a safe operating space for food production, which allows feeding “healthy diets to about 10 billion people within biophysical limits of the Earth system”, defining the so-called “healthy planetary diet” [182].

In the previous sections, the ethical and legal challenges that can arise in the development and adoption of NVC have been identified and discussed. In the following, these challenges are linked to practical implications (PIs) for NVC in the domain of nutrition and sustainability of diets.

6.1 Personalized food recommender system

-

PI1.1

Inappropriate/harmful recommendations (linked to EC1.1 and LC1.1): these may include (i) recommending the consumption of a specific food/food group that is excluded by the user’s religion or for ethical reasons (e.g., a stance on animal welfare) [189]; (ii) recommending food that conflicts with specific conditions, such as food intolerances or allergies, of which the user is unaware; (iii) recommending food that can interact with or reduce the effect of specific medicines (active compounds), as in the case of food containing vitamin K, which interacts with vitamin K antagonist anticoagulants [190].

-

PI1.2

Disclosure of private information on the health status (linked to EC1.2): the privacy of the disclosed weight, date of birth, specific health conditions (e.g., food intolerances, food allergies), and other sensitive information has to be ensured. Furthermore, the user has to be aware of who has access to the data and under what conditions (e.g., research use) [191].

-

PI1.3

Disclosure of nutritional and environmental criteria adopted to model the recommendations (linked to EC1.4 and LC1.4): a personalized food recommender produces tailored recommendations according to modeling assumptions, which might not take into account social and ethical beliefs that the user is not required to disclose or for which input data are missing. This knowledge gap can impact the quality of the recommendations produced. Furthermore, in the case of environmental recommendations, it is necessary to ensure transparent and understandable information on the modeling process, with special reference to the indicators used, their normalization, the functional units, data sources, and impact categories considered. In the case of nutritional recommendations, the following information has to be disclosed: dietary guidelines adopted for reference intake per age, gender, weight, and physical activity level; coefficients used to convert physical activity into kcal expenditure; basal metabolism kcal expenditure per age, gender, and weight [192].

-

PI1.4

Data set uniformity and reliability (linked to EC1.5): the data set used for modeling the environmental impact of diets has to be scientifically recognized and reliable. Transparent information has to be provided on the type of data (primary, secondary, or tertiary); the type of harmonization of data in relation to the system boundaries, when more than one database is used; and the type of functional unit used [192].

-

PI1.5

Guaranteeing science-based and neutral information on healthy and sustainable diets (linked to EC1.6 and LC1.3): healthy and sustainable diets have been shown to have positive outcomes both on consumers’ health and on the environment [182]. Since multiple dietary patterns are possible in the framework of the described planetary diet, neutral information on food substitutes and dietary supplements should be provided. Special attention has to be given to the reduction of meat consumption (when high), which has a high environmental impact [153, 158]. This information can be complemented with neutral and science-based information on meat substitutes [193] and on complementary food or food supplements to guarantee proper nutritional intake of vitamin B12, iron, and zinc [194]. PI1.4 and PI1.5 also apply to Argumentative Systems as practical implications of EC2.1, EC2.5, and LC2.1.
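The screening concern raised in PI1.1 can be illustrated with a minimal sketch that filters a candidate food item against what the user has declared. The tag vocabulary, the `FoodItem` and `UserProfile` structures, and the `INTERACTIONS` table are hypothetical. Only the vitamin K example is taken from the text, and a real interaction table would need to be curated by medical experts. Conditions the user is unaware of (case ii) cannot be caught by such a check.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List, Set


@dataclass(frozen=True)
class FoodItem:
    name: str
    tags: FrozenSet[str]  # hypothetical labels, e.g. "pork", "gluten"


@dataclass
class UserProfile:
    excluded_tags: Set[str] = field(default_factory=set)  # religious/ethical exclusions
    allergen_tags: Set[str] = field(default_factory=set)  # declared allergies/intolerances
    medications: Set[str] = field(default_factory=set)    # declared medication classes


# Illustrative table: medication class -> food tags to flag (assumption).
INTERACTIONS: Dict[str, Set[str]] = {
    "vitamin_k_antagonist": {"high_vitamin_k"},
}


def screen(item: FoodItem, user: UserProfile) -> List[str]:
    """Return the reasons not to recommend the item (empty if none found)."""
    reasons: List[str] = []
    for tag in sorted(item.tags & user.excluded_tags):
        reasons.append(f"excluded by user preference: {tag}")
    for tag in sorted(item.tags & user.allergen_tags):
        reasons.append(f"declared allergy or intolerance: {tag}")
    for medication in sorted(user.medications):
        flagged = item.tags & INTERACTIONS.get(medication, set())
        for tag in sorted(flagged):
            reasons.append(f"may interact with medication: {medication} ({tag})")
    return reasons
```

Returning the reasons rather than a bare yes/no keeps the decision explainable to the user, in line with the disclosure requirement of PI1.3. Whether a flagged item is suppressed or shown with a warning is a design choice left open here.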

6.2 Argumentative system

-

PI2.1

The trade-off between understandability and accuracy in environmental recommendations (linked to EC2.6): aggregated or simplified information on the environmental impact of food or diets can lead to misunderstanding and confusion, as shown in the case of EcoLabels [195]. Indeed, the environmental assessment of food and diets is the result of articulated modeling systems that adopt multiple indicators and take into account different scenarios (e.g., miles traveled by a food product). An extreme simplification can alter the information and lead to misunderstanding.

-

PI2.2