Abstract

Consider how much data is created and used based on our online behaviours and choices. Converging foundational technologies now enable analytics of the vast data required for machine learning (ML). As a result, businesses now use algorithmic technologies to inform their processes, pricing and decisions. This article examines the implications of algorithmic decision-making in consumer credit markets from economic and normative perspectives. It fills a gap in the literature by developing a multi-disciplinary approach to framing the economic and normative issues raised by algorithmic decision-making in the private sector, and it identifies optimal and suboptimal outcomes in the relationships between companies and consumers. The economic analysis demonstrates that more data provides more information, which may result in better contracting outcomes. However, it also identifies potential risks of inaccuracy, bias and discrimination, and ‘gaming’ of algorithmic systems for personal benefit. The article then argues that these economic costs have normative implications. Connecting economic outcomes to a normative analysis contextualises the challenges in designing and regulating ML fairly. In particular, the article identifies the normative implications of the process, as much as the outcome, concerning trust, privacy and autonomy, and potential bias and discrimination in ML systems. Credit scoring, as a case study, elucidates the issues relating to private companies. Because legal norms tend to mirror economic theory, this article frames the critical economic and normative issues required for further regulatory work.

1 Introduction

Commercial use of artificial intelligence (AI) is accelerating and transforming nearly every economic, social and political domain. AI is used to assess financial risk, diagnose medical conditions, evaluate job applicants, pilot autonomous vehicles and score potential recidivism rates. These task-based processes are just a few well-known and widely discussed applications of machine intelligence [1]. What they have in common is the aim of identifying patterns in data to classify people, and of using statistical correlations between those classifications to make predictions. Companies have been attempting to classify and label items, processes and people for a long time. However, the modern capacities of converging foundational technologies enable analytics of the vast data required for AI.

Banks rapidly adopted these new capacities to transform their front and back-end services.Footnote 1 The most prevalent use of AI in the financial sector is algorithmic credit scoring.Footnote 2 The reason for its prevalence is twofold. First, the traditional statistical processes of credit granting and scoring are well suited to the correlation and classification techniques of machine learning (ML). Second, inadequate processes to analyse creditworthiness may misjudge consumers’ repayment capacity, increase exposure to credit risk, misstate portfolio quality and materially affect earnings and capital. Ensuring responsible lending is essential to protect consumers from over-indebtedness and potential bankruptcy [4]. While access to credit is necessary for many consumers to afford a car, house or daily expenses, credit has historically been inaccessible to many consumers [5]. Discriminatory and exclusionary practices bar or price many people out of the consumer credit market. Such practices have been reinforced over time as banks rely on historical data and behaviours to assess future applications [6,7,8].

Academic commentary on algorithmic decision-making in financial services has primarily warned that historical data will result in biased algorithmic tools [9,10,11,12]. Biases, among other risks, are essential considerations. However, recent literature has paid little attention to the optimal outcomes that can arise if these risks are mitigated. Algorithmic credit scoring can significantly improve banks’ assessment of consumers and credit risk, especially for previously marginalised consumers. It is, therefore, helpful to examine together the commercial considerations and the potential normative risks that are often discussed in isolation from each other [13]. Providing a rigorous analysis of the economic and normative considerations that influence and incentivise behaviour in consumer credit contracting is both urgent and important.

The article makes three principal contributions. First, it examines the economic implications of using ML to address traditional challenges in consumer credit contracts. In particular, it highlights the reasons for enthusiasm about well-designed ML, given the corporate momentum behind such technology. That is, an interest-led analysis reveals opportunities to shift interests, information asymmetries and incentives towards better outcomes for both banks and their consumers. Second, it introduces critical aspects of machine learning that dispel some misconceptions about algorithmic credit scoring. Third, it evaluates risks that, if mitigated, could improve economic and normative outcomes in the traditional consumer credit market.

The article is structured as follows. Section 2 begins by exploring the evolution of algorithmic tools in financial services, particularly in consumer credit markets. Section 3 provides a brief introduction to ML. Section 4 provides an overview of the economic and normative implications of using algorithmic decision-making. Here, the article explores four interrelated issues: accuracy and efficiency, trust, privacy and autonomy, and the risk of bias and discrimination. Finally, Sect. 5 concludes.

2 The evolution of algorithmic decision-making in financial services

Banks have two basic operations: they accept deposits and grant loans [14]. Historically, lending was generally restricted to homebuyers, and credit institutions depended on borrowing short (paying interest for deposits that could be withdrawn at will) and lending long (granting 30-year mortgages). The economist Paul Krugman describes this arrangement as ‘an exchange of a privilege (deposit insurance) for a responsibility (low-risk investing)’ [15]. Loans are one form of consumer credit contract. They are bank assets, because banks profit from the interest consumers repay over long-term contracts. Therefore, before granting a loan, banks analyse financial data and other relevant information to decide whether to lend and on what repayment terms (such as interest rates) [14]. Accurately selecting which consumers to lend to is important to a bank’s profitability, as banks seek to maximise the usually slim profit margins of retail banking and mitigate risk [14, 16, 17]. It is similarly important for consumers that they are offered access to credit, but only to the extent they can repay. Default on a credit contract like a home loan will have lifelong consequences for consumers [18,19,20]. Therefore, the decision to enter a consumer credit contract is important to both parties.

2.1 Challenges in traditional consumer contracting decisions

Consumer credit contracting concerns the relationship between banks and their consumers. Their interests, incentives and informational positions affect their respective market behaviours and illuminate some of the challenges in the provision of credit. Banks, like most private organisations, are interested in profit maximisation. That profit or shareholder value maximisation is the primary interest of private companies is an assumption that underpins the dominant legal theory of the company [21, cf. 22]. Whether or not one agrees with the standard Anglo-American company law assumption that shareholder value maximisation is the most efficient means of achieving overall social wealth, this article assumes, based on current corporate practice, that it is the primary interest of private companies [21, 23,24,25,26].

Credit institutions profit by ‘taking in deposits and lending the funds out at a higher rate of interest’ [27]. At its simplest, a bank’s profit is the margin it earns on loans and deposits, net of its management costs [28]. A competitive bank will adjust the volume of loans it grants and deposits it accepts so that its margins equal its marginal management costs. Economists have shown that banks with more market power will quote lower deposit rates and higher loan rates [28]. Despite the significant value of gross lending and the cost of credit, financial institutions have persistently weak profitability in consumer lending [29, 30]. This is due to intense competition and the cyclical nature of lending [31]. Researchers have shown that the number of loans positively affects profit but also creates a higher credit risk, which may, in turn, erode profits [32, 33]. Empirical evidence suggests that, on balance, lending positively affects banks’ profitability [32, 34,35,36]. Therefore, banks are increasingly focused on the profit derived from interest rates charged to borrowers.
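The margin condition for a competitive bank can be sketched numerically. This is a minimal illustration, assuming a textbook Monti-Klein-style set-up rather than the exact model in the cited source: the bank takes the loan rate, deposit rate and a market (interbank) rate as given, and faces a hypothetical quadratic management cost \(C(D, L) = aL^2/2 + bD^2/2\). All parameter values are invented for illustration.

```python
def bank_profit(loans, deposits, r_loan, r_deposit, r_market, a, b):
    """Margins on loans and deposits, net of a hypothetical quadratic
    management cost C(D, L) = a * L**2 / 2 + b * D**2 / 2."""
    margins = (r_loan - r_market) * loans + (r_market - r_deposit) * deposits
    management_cost = a * loans ** 2 / 2 + b * deposits ** 2 / 2
    return margins - management_cost


def competitive_volumes(r_loan, r_deposit, r_market, a, b):
    """Price-taking bank: choose volumes so that each margin equals its
    marginal management cost, i.e. r_loan - r_market = a * L and
    r_market - r_deposit = b * D."""
    loans = (r_loan - r_market) / a
    deposits = (r_market - r_deposit) / b
    return loans, deposits


# Hypothetical rates and cost parameters
params = dict(r_loan=0.06, r_deposit=0.01, r_market=0.03, a=0.0001, b=0.00005)
L_opt, D_opt = competitive_volumes(**params)
best = bank_profit(L_opt, D_opt, **params)
print(f"loans={L_opt:.1f}, deposits={D_opt:.1f}, profit={best:.4f}")
```

Lending more than `L_opt` would cost more at the margin than it earns, and lending less would forgo margin that exceeds its cost, which is the balancing condition described in the text.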

Banks’ incentives to maximise profit are limited by their risk exposure. As well as credit risk, they must mitigate liquidity, operational, compliance, strategic, currency and reputational risks [16]. Reputational damage may reduce revenue through loss of consumer base and higher financing or contracting costs [37, 38]. Therefore, banks have a relatively low risk appetite and prefer not to grant loans to higher-risk consumers rather than pursue the potential income from their repayments.

Consumer interests and incentives are much harder to classify. Individuals display wildly contradictory decision-making behaviour—they can be both risk-averse and risk-seeking. For example, the same consumer may purchase an insurance policy (risk-averse behaviour) and lottery tickets (risk-seeking behaviour) [39]. The interests of individuals that drive such consumer behaviour are a widely explored concept within economics. Traditional approaches consider consumers to be rational and undertake a cost–benefit analysis to determine which options are suitable for them [25, 40, 41]. The ‘rational choice theory’ prescribes that a rational person makes decisions based on consistent and relevant reasons [42].

However, the rise of economic psychology has demonstrated how heuristics and biases pull consumers’ actions away from rational utility maximisation [43, 44]. Behavioural economic theories reveal that the inconsistency of consumer behaviour is often influenced by emotions such as love, fear and anxiety [45,46,47]. Relevant to this article, consumers are generally risk-averse in their financial decisions and will choose certainty over uncertain potential benefits [14]. Consumers allocate their spending and saving, including borrowing from banks, so as to maximise their utility under budgetary constraints [48]. Therefore, consumers will not borrow unless the benefit of borrowing outweighs its cost to them (i.e., the interest repayments) [49]. While active and well-informed consumers may spend some effort to find the most competitive price for financial services, others may not [50], partly because cognitive biases influence consumer behaviour [51]. Consumers will generally seek to maximise the utility of their financial decisions, improve their financial outcomes and maximise the happiness and satisfaction they derive from home loans [41, 52].

2.2 Information asymmetry between contracting parties

To achieve their goals, banks need to be in strong informational positions to produce credible information about borrowers and use that information to filter consumers’ applications. Banks have a dominant position over consumers in determining contractual terms. Banks generally hold the borrower’s bank account, so they oversee their finances [14]. Due to this information position, banks are strongly adjusting actors and can negotiate the terms of their contracts with consumers to meet their profit margins and monitoring requirements.Footnote 3 However, without robust information and monitoring, banks are less accurate in determining profitable consumers (or adjusting the conditions of their credit contracts) [54].

Although banks hold some information, consumers themselves are in the best position to predict their future behaviour [16]. Information about their personal circumstances, behaviours and intentions is vital to a bank’s lending decisions [16]. So, to the extent consumers use borrowed money, they have an incentive to disguise their ability and willingness to repay the debt and to conceal the full details of the financial project at the lender’s expense [54].

Such conflict is exacerbated because consumers lack information that would help them assess the risks associated with their credit contracts [47, 55, 56]. Consumers often fail to undertake a cost–benefit analysis accurately [55]. Therefore, even if they intend to be risk-averse in their decision and maximise the utility of a loan, they may lack the information to make such an assessment.

Such a problem is called adverse selection [14], an economic problem caused by asymmetric information between contracting parties. While the problem was first identified in relation to the management of the firm, Williamson identified lack of information disclosure as a transaction cost in contracting [57]. Transaction costs are ‘the economic equivalent of friction’ [58]; lack of information creates barriers to optimal outcomes in consumer credit contracts. A second economic problem, arising ex post, is moral hazard: borrowers may take on higher risks to obtain higher returns because it is not their money at risk. This moral hazard problem arises when banks cannot continually monitor consumers’ activities, and it poses a default risk [14].
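The adverse selection mechanism can be illustrated with a stylised example in the spirit of Akerlof's "market for lemons" (a swapped-in textbook illustration, not a model drawn from the cited sources). A bank that cannot distinguish safe from risky borrowers prices every loan at their average risk; the safe borrower finds the pooled rate too expensive and leaves, which worsens the pool further. All figures are hypothetical, and zero recovery on default is assumed.

```python
def break_even_rate(p_default, cost_of_funds):
    """Loan rate at which expected repayment just covers the bank's funding
    cost, assuming nothing is recovered on default:
    (1 - p_default) * (1 + rate) = 1 + cost_of_funds."""
    return (1 + cost_of_funds) / (1 - p_default) - 1


def pooled_market(borrowers, cost_of_funds, max_rounds=10):
    """The bank cannot tell borrowers apart, so it prices every loan at the
    pool's average default risk. Borrowers whose maximum acceptable rate is
    below that price leave, and the bank re-prices for those who remain."""
    pool = list(borrowers)
    rate = None
    for _ in range(max_rounds):
        if not pool:
            break
        avg_p = sum(b["p_default"] for b in pool) / len(pool)
        rate = break_even_rate(avg_p, cost_of_funds)
        stayers = [b for b in pool if b["max_rate"] >= rate]
        if len(stayers) == len(pool):
            break  # nobody else leaves: the market has settled
        pool = stayers
    return rate, pool


# Hypothetical borrowers: default probability and the highest rate each accepts
borrowers = [
    {"name": "safe", "p_default": 0.02, "max_rate": 0.10},
    {"name": "risky", "p_default": 0.20, "max_rate": 0.40},
]
rate, remaining = pooled_market(borrowers, cost_of_funds=0.05)
print(f"pooled rate: {rate:.3f}", [b["name"] for b in remaining])

# With full information, the safe borrower would be offered a rate it accepts
print(f"safe rate if observed: {break_even_rate(0.02, 0.05):.3f}")
```

The safe borrower would borrow if the bank could observe its risk, but exits under pooled pricing; better information (the promise of ML discussed in Sect. 4) is what would separate the two types.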

In consumer credit markets, there are also problems arising from endogenous and exogenous uncertainty. Endogenous uncertainty is within a bank’s or consumers’ control, and exogenous uncertainty is outside their control. For example, uncertainty and systematic risks often result from an external market shock, like an ‘act of god’ or policy change that shifts credit supply [59]. Problems arising from exogenous uncertainty will not always be the same as those originating from adverse selection or moral hazard and are difficult to predict but important to factor into financial decision-making [60].

The provision of debt to consumers, even in best-case scenarios, is an unequal endeavour with a knowledge, wealth and power gap between borrowers and lenders, which may be and often is exploited [61]. ML decision-making introduces a risk that consumers may seek to ‘game the algorithm’, and banks must monitor for this new self-interested behaviour [62, 63]. More than before, consumers can withhold information from financial institutions, and banks can obtain more data about consumers that they do not disclose (including data from outside traditional contractual limits, such as new alternative data sources).

3 Examining the technical aspects of algorithmic decision-making

Banks must undertake a ‘creditworthiness’ assessment of consumers before granting or changing the details of a credit contract.Footnote 4 Traditionally, bank officers would personally review a loan application, drawing on disclosed financial information, such as salary and savings, and anecdotal experience with similar borrowers [64]. Granting loans and calculating credit contract terms, such as the price of credit, were solely human-led judgments [65]. Understanding the effect of new data and analytics requires briefly reviewing the underlying challenges in this decision-making. As new technology emerged, more sophisticated statistical models were formalised to estimate how likely consumers are to default and to assign consumers a corresponding credit score [64]. Different banks and credit reference agencies use different methods, such as linear regression, logistic regression and decision trees, to classify groups of people and calculate credit scores [63]. These methods are primarily machine learning (ML) approaches.

To use ML approaches, banks needed large amounts of data to analyse, beyond a consumer’s present financial position. Consider how much data is newly created and newly usable for the financial sector: from basic data and personal information to richer data from online transactions, web browsing and location settings, which together paint a more holistic picture of individual lifestyles and spending habits. The information available from online behaviours and choices has drastically changed the scope of credit scoring processes. The increasing use of data in decision-making demonstrates an intentional shift away from the human judge. While still actuarial or statistical, the method now relies on conclusions from empirically established relations between data and conditions [66].

Proprietary and commercial barriers limit what is known about the details of each bank’s use of ML [3, 63, 67]. Every so often, a high-street bank announces a partnership with an AI start-up to improve credit scoring. Barclays and Simudyne partnered to analyse the risk of lending to loan applicants [68]; Standard Chartered partnered with Truera to develop new models to determine the creditworthiness of borrowers with minimal credit histories [69]; Prestige Financial Services is one of many banks working with ZestAI to improve credit decision models [70]; and unnamed banks have partnered with Datrics [71]. The Open Banking initiative has led to a surge in fintech companies focusing on algorithmic credit scoring with alternative data [72]. A well-known example is the Goldman Sachs and Apple Card algorithmic credit card model (see discussion in Sect. 4.2). Before investigating the implications of ML for banks and consumers, it is helpful to consider what ML tools currently involve in practice.

The development of AI has a rich history. Its computational beginnings were first rigorously pursued soon after World War II [1]. The renowned mathematician Alan Turing posed the question, ‘can machines think?’ and designed the Turing Test in 1950 [73]. In 1955, John McCarthy coined the term ‘artificial intelligence’ in the proposal for the first academic conference on the topic, held at Dartmouth College in 1956 [74]. Practitioners came to the field from disciplines such as ‘logic, mathematics, engineering, philosophy, psychology, linguistics and, of course, computer science’ [75].

The focus of this article is machine learning (ML). Put simply, ML is learning from data [76]. It is generally limited to automating tasks to solve a pre-determined problem [75, 77]. In this case, ML learns from data to detect and extrapolate patterns in order to predict future consumer behaviour. Understanding the impact of ML requires understanding how such systems are designed and the assumptions invoked at different points in the process. It can be challenging to bridge the gap between legal and technical concepts. Even where disciplines share the same vocabulary, there are important and often hard-to-identify differences in meaning: lawyers and ML scientists will use the same terms to mean different things. Introductory ML concepts applied to lending decisions are outlined below to support the analysis that follows.

There are several stages of ML development. For this article, problem formulation and model development are the most relevant. The other stages, though important, are outside the scope of this analysis and should be the focus of future work on the value-led design and deployment of ML [78].

3.1 Introduction to machine learning

Starting with a simple rule-based algorithm for explanatory purposes, consider a hypothetical rule under which a bank always approves a consumer for a primary credit card if they earn £20,000 or more a year. If a bank has the dataset of consumers shown in Table 1, it could apply a simple rule-based algorithm to decide the outcome.Footnote 5 Here, \(\widehat{y}\) is the predicted output, \(\mathrm{d}\left(\widehat{y}\right)\) is the decision based on \(\widehat{y}\), and \({X}^{E}\) is the earnings of a consumer (in pounds):

\[\widehat{y}=\begin{cases}1 & \text{if } {X}^{E}\ge 20{,}000\\ 0 & \text{otherwise}\end{cases}\]

This rule-based algorithm reads: for the predicted value of Y (shown as \(\widehat{y}\)), a consumer whose earnings are equal to or over £20,000 is labelled 1 (will not default); otherwise, they are labelled 0 (will default).
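The rule can be written as a few lines of code. This is a minimal sketch, assuming earnings are given in pounds per year; the function names and applicant figures are hypothetical. Nothing here is learned: the threshold is fixed by hand, which is what distinguishes this rule from the ML models discussed below.

```python
EARNINGS_THRESHOLD = 20_000  # £ per year, the hypothetical rule from the text


def predict(earnings: float) -> int:
    """Rule-based prediction y_hat: 1 ('will not default') if earnings are
    at least £20,000, otherwise 0 ('will default')."""
    return 1 if earnings >= EARNINGS_THRESHOLD else 0


def decide(y_hat: int) -> str:
    """Decision d(y_hat): approve the credit card when y_hat is 1."""
    return "approve" if y_hat == 1 else "decline"


# Hypothetical applicants (not the consumers in Table 1)
applicants = {"applicant_1": 35_000, "applicant_2": 18_500, "applicant_3": 20_000}
for name, earnings in applicants.items():
    y_hat = predict(earnings)
    print(name, y_hat, decide(y_hat))
```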

Rule-based algorithms are fixed: such an algorithm neither accounts for probability nor ‘learns’ its own rules. Banks may therefore use such rules to process more routine applications, combining several rules that correlate with known information about a consumer. However, more advanced ML is used in credit scoring for larger loans, such as home loans.

ML learns and defines its own set of rules from data [1]. One type, supervised ML, creates a predictive model for more nuanced outputs, for example where a bank wants to use inferences from consumer data to help decide whether to grant a consumer a home loan [79]. A supervised ML prediction model starts with a training dataset that contains known inputs \(\mathcal{X}\) and outputs \(\mathrm{Y}\), and learns an approximation of the function \(f\) [76]. The function \(f\) is the true underlying data-generating function, which the ML algorithm tries to approximate as closely as possible [76]. It is called supervised ML because the correct values of Y are provided by a supervisor [79]. Typically, humans make judgements about the relevant data points or labels collected in previous processes. For instance, bank officers will have labelled whether a person has or has not defaulted on a previous loan.

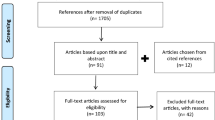

The quality of the approximation can only be measured with data outside the training dataset. Usually, this is simulated by dividing the available data into three parts: training, validation and test sets. First, the data used to build the model is split into training and validation sets (Fig. 1).
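A three-way split of this kind takes only a few lines. This is a minimal sketch, assuming the records are already labelled; the 60/20/20 proportions and the fixed random seed are illustrative assumptions, not a rule, and the integer records stand in for consumer data.

```python
import random


def three_way_split(records, train_frac=0.6, val_frac=0.2, seed=0):
    """Shuffle and split records into training, validation and test sets.

    The 60/20/20 proportions are an illustrative assumption, not a rule.
    """
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # reproducible shuffle
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    validation = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # everything left over is held back for testing
    return train, validation, test


records = list(range(100))  # stand-ins for labelled consumer records
train, validation, test = three_way_split(records)
print(len(train), len(validation), len(test))
```

Shuffling before splitting matters: if records were ordered (for example by application date), an unshuffled split would train and test the model on systematically different consumers.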

Supervised ML learns from the patterns in datasets. Table 2 demonstrates what a relevant training dataset might look like, although in practice ML usually works best with a large dataset covering an entire demographic and many variables (sometimes called ‘rich data’). In credit scoring, Y is generally binary, taking the value 1 or 0. ML creates its rules for prediction during this training process.
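A minimal sketch of the training step, assuming logistic regression (one of the methods mentioned above) fitted by plain batch gradient descent. The data are hypothetical and only mimic the shape of Table 2: two features (earnings and savings, in units of £10,000) and a binary label, with Y = 1 for "did not default". The learned weights are the "rules" the model creates for itself; a production model would use far richer data and an established library.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(weights, bias, x):
    """Estimated P(Y = 1 | x), i.e. the probability of no default."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train_logistic_regression(X, y, lr=0.1, epochs=2000):
    """Fit weights by batch gradient descent on the average log-loss."""
    n, k = len(X), len(X[0])
    weights, bias = [0.0] * k, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * k, 0.0
        for xi, yi in zip(X, y):
            error = predict_proba(weights, bias, xi) - yi  # d(log-loss)/dz
            for j in range(k):
                grad_w[j] += error * xi[j]
            grad_b += error
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


# Hypothetical training data: [earnings, savings] in units of £10,000;
# label 1 = did not default, 0 = defaulted
X_train = [[1.5, 0.2], [2.2, 0.5], [3.0, 1.0], [4.1, 2.0],
           [1.0, 0.0], [0.8, 0.1], [2.5, 0.1], [1.2, 0.3]]
y_train = [0, 1, 1, 1, 0, 0, 1, 0]

w, b = train_logistic_regression(X_train, y_train)
for applicant in ([5.0, 2.5], [0.5, 0.0]):
    print(applicant, round(predict_proba(w, b, applicant), 3))
```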

Once a model has learned rules from the training data, it is run over the validation dataset, whose known values of Y are withheld from the model. The model tries to predict Y; in practice, it usually predicts the expected value of Y given the input values of X, for an individual x drawn at random from the dataset. The goal is for the ‘trained’ prediction function \(\widehat{f}\) to be a useful approximation of the ‘true’ function \(f\) that underlies the predictive relationship between inputs and outputs [76]. The candidate model whose predictions are most accurate on the validation set is then chosen as the best one, a process called cross-validation [79] (Fig. 2).
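The selection step can be sketched with a deliberately simple family of candidate models: earnings-threshold rules like the one in Sect. 3.1, each scored on held-out validation records. This is a hypothetical illustration with invented records. Strictly, choosing among candidates on a single held-out set is often called hold-out validation, while k-fold cross-validation repeats the exercise over several splits and averages the scores.

```python
def accuracy(threshold, data):
    """Share of (earnings, label) pairs where the rule
    'earnings >= threshold -> 1' matches the known label."""
    hits = sum((1 if earnings >= threshold else 0) == label for earnings, label in data)
    return hits / len(data)


def select_by_validation(candidate_thresholds, validation):
    """Pick the candidate with the highest validation accuracy
    (ties go to the first candidate listed)."""
    return max(candidate_thresholds, key=lambda t: accuracy(t, validation))


# Hypothetical held-out validation records: (annual earnings in £, 1 = did not default)
validation = [(12_000, 0), (18_000, 0), (19_000, 1), (21_000, 1), (26_000, 1),
              (24_000, 1), (40_000, 1), (15_000, 0), (27_000, 0)]
candidates = [15_000, 20_000, 25_000, 30_000]

best = select_by_validation(candidates, validation)
print(best, round(accuracy(best, validation), 3))
```

No candidate is perfect on these records, which reflects the point made below that prediction error cannot be eliminated.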

The third dataset, the test set, contains examples used in neither training nor validation. The known values of Y in the test set are hidden from the model so that we can check whether it predicts them accurately. The aim is to make the predictions as close as possible to the known values, so that the model can be considered ‘accurate’ when used on new datasets [76]. In practice, an accurate model is one with high accuracy on the test dataset, i.e., one that generalises well to new data. Naturally, when a model is deployed in the real world, there is no known Y value, so errors may arise as differences between the true and predicted outcomes. Before a model is deployed on new, real data, it is therefore evaluated on test data to estimate its generalisation error, which model development aims to minimise [76].

Error matters in assessing the model’s performance; in some cases, an error may be a false positive or a false negative prediction [80, 81]. A consumer may be predicted a high default risk when they are actually a low default risk (a false positive), or predicted a low default risk when they are actually a high default risk (a false negative). Error rates across groups may reveal that consumers with certain attributes are more likely to receive a false positive or false negative prediction. When prediction errors carry through into decisions, differences in false positive and false negative rates have real-world consequences, and equalising these rates across groups raises legal questions of its own. False positives may, in some cases, be more costly than false negatives, and vice versa. Further, error disparities may be more costly for one group, for example a marginalised group, than for the majority. Different parameter choices can improve accuracy, but because error can never be eliminated, prediction accuracy can never reach 100% [82, 83].
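Group error rates of the kind described above can be computed directly from a model's predictions once outcomes are known. A minimal sketch, following the text's convention that a "positive" is a prediction of high default risk; the group labels and records are hypothetical.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """False positive and false negative rates per group.

    Each record is (group, predicted_high_risk, actually_defaulted). A false
    positive is a high-risk prediction for someone who did not default; a
    false negative is a low-risk prediction for someone who did.
    """
    counts = defaultdict(lambda: {"fp": 0, "fn": 0, "neg": 0, "pos": 0})
    for group, predicted_high_risk, defaulted in records:
        c = counts[group]
        if defaulted:
            c["pos"] += 1
            if not predicted_high_risk:
                c["fn"] += 1
        else:
            c["neg"] += 1
            if predicted_high_risk:
                c["fp"] += 1
    return {
        g: {
            "false_positive_rate": c["fp"] / c["neg"] if c["neg"] else float("nan"),
            "false_negative_rate": c["fn"] / c["pos"] if c["pos"] else float("nan"),
        }
        for g, c in counts.items()
    }


records = [
    # (group, predicted_high_risk, actually_defaulted), all hypothetical
    ("group_a", True, True), ("group_a", False, False), ("group_a", False, False),
    ("group_a", True, False), ("group_a", False, True),
    ("group_b", True, False), ("group_b", True, False), ("group_b", False, False),
    ("group_b", True, True),
]
rates = error_rates_by_group(records)
for group, r in rates.items():
    print(group, round(r["false_positive_rate"], 2), round(r["false_negative_rate"], 2))
```

Here group_b's non-defaulters are wrongly flagged as high risk twice as often as group_a's, while group_a bears more false negatives; which disparity matters more is the normative question the text raises.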

For each individual i, this ML model predicts either \({y}_{i}=1\) (no default) or \({y}_{i}=0\) (default), together with the probability of that outcome. A bank can therefore add a threshold classification rule to its model so that consumers fall into a high default risk category only if their probability of default exceeds a certain level [79]. Such a classification rule can be defined as:

For example, if we consider information about Andrew, he would be classified as:

Therefore, Andrew is not classified as a high default risk despite the binary default prediction. Claire would be classified as:

Table 3 includes example probabilities for expected default.

The threshold for classification depends on the risk profile of the decision-maker. A risk-averse bank may set a lower threshold, such as a 70% or lower probability of default, so that more consumers who pose some risk are classified as high default risk. In sum, the ML model predicts a binary category, and consumers are classified according to the probability attached to that prediction.
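A minimal sketch of the two layers described above, assuming the model outputs a probability of default for each consumer. The hypothetical consumer_x shows the pattern described for Andrew: a binary prediction of default, yet not high risk under the higher threshold. Lowering the threshold, as a risk-averse bank might, moves consumer_y into the high-risk category. The 0.5 cut-off for the binary prediction, the 0.8 threshold and all probabilities are hypothetical (they are not the values in Table 3).

```python
def binary_prediction(prob_default: float) -> int:
    """Binary output y_i: 0 ('will default') if default is more likely than
    not, else 1. The 0.5 cut-off is an illustrative assumption."""
    return 0 if prob_default > 0.5 else 1


def classify(prob_default: float, threshold: float) -> str:
    """Threshold classification rule: high default risk only if the
    predicted probability of default exceeds the bank's threshold."""
    return "high default risk" if prob_default > threshold else "not high default risk"


# Hypothetical predicted probabilities of default
consumers = {"consumer_x": 0.55, "consumer_y": 0.75, "consumer_z": 0.92}
for threshold in (0.8, 0.7):  # 0.8 is hypothetical; 0.7 mirrors the risk-averse example
    for name, p in consumers.items():
        print(threshold, name, binary_prediction(p), classify(p, threshold))
```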

3.2 Decision rule

Importantly, supervised ML does not itself make a real-world decision. Making a decision based on the prediction is a separate task, in which predictions from the model may inform the optimal action to take. Statistical decision theory can weigh the costs and benefits of different decisions based on an ML prediction under uncertainty [79]. Decision theory first requires that all possible actions are enumerated in a set, denoted \(\mathcal{A}\). For simplicity, assume that there are only two possible actions a bank would take in respect of a consumer: granting a loan (\({\alpha }_{i}=1\)) or denying a loan (\({\alpha }_{i}=0\)), so that \(\mathcal{A}=\{0,1\}\). As a first step, banks may add a threshold decision rule similar to the one above, denying a loan (\({\alpha }_{i}=0\)) to all consumers classified as high default risk. Formalised as a decision rule, the decision for consumer i is \(d\left({\widehat{y}}_{i}\right)={\alpha }_{i}\), with \({\alpha }_{i}\in \mathcal{A}\) (Table 4).

This introductory representation demonstrates the key delineation between the prediction model and the decision analysis in an automated decision-making situation using ML.
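The separation between prediction and decision can be made explicit in code: the model supplies a probability, and a separately chosen rule d maps it to an action in \(\mathcal{A}\). A minimal sketch; the encoding of granting as 1 and denying as 0 follows the text, while the stand-in model output and both thresholds are hypothetical.

```python
from typing import Callable

GRANT, DENY = 1, 0  # the action set A = {1: grant a loan, 0: deny a loan}


def model_prob_default(consumer: dict) -> float:
    """Stand-in for a trained model's prediction (hypothetical)."""
    return consumer["prob_default"]


def make_decision_rule(threshold: float) -> Callable[[float], int]:
    """Build a decision rule d that denies the loan when the predicted
    probability of default exceeds the threshold, and grants it otherwise."""
    def d(prob_default: float) -> int:
        return DENY if prob_default > threshold else GRANT
    return d


consumer = {"name": "hypothetical_consumer", "prob_default": 0.72}
p = model_prob_default(consumer)   # prediction step (the ML model)
lenient = make_decision_rule(0.8)  # decision step (chosen by the bank)
strict = make_decision_rule(0.7)
print(lenient(p), strict(p))       # same prediction, different actions
```

Because the threshold lives in the decision rule rather than the model, changing it alters outcomes without retraining anything, which is why the choice of threshold is a human value judgement.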

3.3 Further decision-making development

Statistical decision theory then requires consideration of the probability distribution of the possible real-world effects of each decision option [84, 85]. The first and most obvious effect is whether the individual will default. Second, the bank will be interested in the effect of its decisions on its net profit. The bank also has a significant opportunity to consider other morally relevant outcomes: for example, whether it increased the number of ‘thin-file’ consumers who could access finance, whether more accurate predictions allowed personalised pricing or cross-subsidisation of consumers, or whether its decisions invaded consumers’ privacy or autonomy. This probability distribution helps define a utility function, and the decision-maker then chooses the action with the highest expected utility. The utility function sums all potential benefits and costs of the real-world effects of selecting an action based on the supervised ML prediction. Such analysis requires deliberation on economic and normative considerations that should be the subject of future work. The policy implications therefore call for a shift in focus towards regulating how humans design and use AI, including the value judgements they make and the thresholds they set for decisions.
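A minimal sketch of choosing the action with the highest expected utility, assuming a two-outcome world (repay or default), a fixed cost of funds and a fixed recovery rate. All figures are hypothetical, and the `other_value` term is an illustrative placeholder for the normative outcomes listed above, not a proposal for how to value them.

```python
GRANT, DENY = 1, 0  # the action set A = {grant, deny}


def expected_utility(action, p_default, principal, rate, cost_of_funds,
                     recovery_rate, other_value=0.0):
    """Expected payoff of an action for the bank.

    Denying yields 0. Granting yields principal * (rate - cost_of_funds) if
    repaid and principal * (recovery_rate - 1 - cost_of_funds) on default.
    other_value is a hypothetical stand-in for non-financial considerations
    (e.g. a weight on extending credit to thin-file consumers).
    """
    if action == DENY:
        return 0.0
    gain_if_repaid = principal * (rate - cost_of_funds)
    loss_if_default = principal * (recovery_rate - 1 - cost_of_funds)
    return (1 - p_default) * gain_if_repaid + p_default * loss_if_default + other_value


def best_action(p_default, **terms):
    """Choose the action in A with the highest expected utility."""
    return max((GRANT, DENY), key=lambda a: expected_utility(a, p_default, **terms))


# Hypothetical loan terms
terms = dict(principal=10_000, rate=0.08, cost_of_funds=0.03, recovery_rate=0.4)
for p in (0.05, 0.10):
    print(p, best_action(p, **terms))
print(best_action(0.10, **terms, other_value=200.0))
```

With these figures, the bank grants at a 5% probability of default but denies at 10%; adding a modest value for wider access to credit flips the second decision, which illustrates why the composition of the utility function is itself a normative choice.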

4 Implications of ML

4.1 Accuracy and efficiency

As introduced above, the consumer credit market is beset by inefficiency, power and knowledge asymmetries and behavioural weaknesses of consumers [61]. The use of data processing and ML in consumer credit markets may both exacerbate and alleviate such challenges. While consumer credit contracting is heavily regulated, the article uses an economic method to reveal how banks and consumers would behave if unregulated.Footnote 6

4.1.1 Accuracy and information asymmetries

Given the intrinsic uncertainty of consumer credit lending, banks will attempt to overcome the information problem that gives rise to adverse selection by collating and analysing all available financial data and qualitative characteristics to determine a borrower’s financial viability [16]. However, rational consumers are not incentivised to be fully honest in their disclosures [54]. This conflict of interest significantly affects bank profitability [54] and consumers’ cost–benefit analysis of a credit contract [49]. Where consumers are inaccurately evaluated by a bank, or do not fully grasp their own financial capacity, they are more prone to default [14]. Optimal outcomes therefore arise when a bank lends amounts, at interest rates, that generate profit while keeping repayments viable for consumers, so that they can achieve their financial goals and maximise their happiness and satisfaction [55, 86].

ML may increase the accuracy of the creditworthiness assessment of consumers. Collecting more data and identifying more correlations between new types of information and consumers may allow better classification of high and low-risk borrowers. ML can provide outputs that relate specific consumer characteristics (e.g., their income and savings) to the probability of default [87]. Banks will increasingly draw on alternative data sources, including non-traditional financial data, to overcome their adverse selection problem [88]. With increasingly large datasets rich in personal characteristics, ML can create complex rules that predict a consumer’s probability of default with increasing accuracy, which can in turn inform risk-based interest rates and improved credit scores that draw on new data. Credit institutions could use ML to learn otherwise hidden correlations between personal information and default risk and segment consumers based on their risk profile. The better a bank can predict the probability of default (i.e., \(\widehat{y}\)), the higher its revenue can be.

Minimising the effects of adverse selection through ML requires an improved understanding of statistical indicia of consumer default risk and a shift in incentives so that consumers want to disclose more information to credit institutions. Alternative personal data may encourage banks to consider essential variables for financial viability beyond traditional methods. Many consumers have traditionally been excluded from credit markets because they neither held assets nor met outdated creditworthiness indicators. For example, traditional banks have not considered timely rent payments an indicator of creditworthiness, or government benefits a steady source of income [88, 89]. These policies have notably excluded minority groups, for example, people of colour and recent immigrants [90]. Such individuals may now demonstrate financial viability through other personal disclosures, such as rental history [91], public records, social media posts and online transaction history [92]. ML allows previously excluded consumers, often called ‘thin-file’ consumers, to access consumer credit and improve their credit standing [93]. If well designed, this use of ML is win–win: consumers have more opportunities to be accurately assessed by banks, and improved access to finance diversifies banks’ consumer base.

4.1.2 Efficient pricing

More accurate creditworthiness assessments may, in turn, benefit consumers, who are more likely to receive an accurately predicted, viable interest rate specific to their financial circumstances and goals. The barriers to entry into the consumer credit market give banks oligopolistic discretion in pricing credit repayments and interest rates according to consumers’ risk characteristics [16]. However, one way to alter incentives is to offer more accurate or personalised pricing to consumers who disclose more information. ML may allow for more efficient pricing structures, including a competitive advantage for banks with more accurate models. Personalised pricing, or price discrimination, based on the consumer’s perceived value of the loan and their ability to repay may create a more efficient way for lenders to recover their costs [50]. A distributional consequence of price discrimination is that consumers charged higher interest rates might subsidise consumers who pay lower interest rates: one group of consumers may be offered lower interest rates based on certain assumptions about their financial ability, which the bank recaptures by charging higher interest rates to another group. ML-informed price discrimination may also improve efficiency by ensuring that consumers can access credit where a uniform interest rate would ordinarily price them out of the loan market. This increases the bank’s consumer base for financial services products, creating higher overall utility.Footnote 7 Banks may therefore earn profits above the competitive level, even if only marginally, and with improved accuracy may extract a higher surplus [94].
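The access argument can be made concrete with a small worked example. The sketch below assumes deliberately simplified lender economics (a borrower who defaults loses the lender the whole principal, and funding and operating costs are ignored), a hypothetical flat margin, and hypothetical applicants, each described by a predicted probability of default and the highest annual rate they would accept. A single rate pooled over all applicants prices some of them out, while risk-based rates set at each applicant's break-even rate plus the same margin keep all of them in the market.

```python
# Hypothetical applicants: (predicted probability of default, highest annual rate they would accept).
APPLICANTS = [(0.01, 0.04), (0.02, 0.05), (0.05, 0.09), (0.08, 0.12), (0.03, 0.045)]
MARGIN = 0.01  # hypothetical margin the lender adds above break-even

def break_even_rate(pd: float) -> float:
    """Rate r at which expected profit per £1 lent, (1 - pd) * r - pd, equals zero.

    Simplifying assumption: the whole principal is lost on default and funding costs are ignored.
    """
    return pd / (1 - pd)

def accepted(applicants, rate_for):
    """Applicants whose maximum acceptable rate is at least the rate they are offered."""
    return [(pd, max_rate) for pd, max_rate in applicants if max_rate >= rate_for(pd)]

# Uniform pricing: one rate for everyone, based on the pool's average predicted default risk.
pooled_pd = sum(pd for pd, _ in APPLICANTS) / len(APPLICANTS)
uniform_rate = break_even_rate(pooled_pd) + MARGIN
served_uniform = accepted(APPLICANTS, lambda pd: uniform_rate)

# Risk-based pricing: each applicant is offered their own break-even rate plus the same margin.
served_risk_based = accepted(APPLICANTS, lambda pd: break_even_rate(pd) + MARGIN)

print(len(served_uniform), "of", len(APPLICANTS), "served at a uniform rate of", round(uniform_rate, 4))
print(len(served_risk_based), "of", len(APPLICANTS), "served with risk-based rates")
```

In this toy example it is the two lowest-risk applicants who are priced out by the pooled rate, which also illustrates the adverse selection problem noted above.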

Let us consider this best-case scenario further. In combination with consumer data (particularly alternative, online and behavioural data), ML may enable lenders to offer individually tailored financial services products with optimal interest rates or loan conditions [94]. Advances in ML thus bring into view first-degree price discrimination,Footnote 8 where each consumer is offered a unique price, which has otherwise been theoretical or at least impractical [94, 96]. First-degree price discrimination requires complete information on consumers in order to propose a personalised price [94]. Such pricing may be possible through more precise estimates of consumers’ willingness to pay, or through risk-based pricing for different consumers. Research using ML with a high-dimensional vector of consumer features has found that profits increased by over 10% relative to uniform pricing; while consumer surplus declined by less than 1%, nearly 70% of customers were charged less than the uniform price [97, 98]. The classic creditworthiness characteristics, demographic variables and alternative data captured from consumers’ online activity could be used [96, 97, 99]. However, inaccuracy and underdeveloped rules for personalised pricing may equally produce suboptimal outcomes. If consumers are incorrectly categorised, they may be priced out of the market or not offered competitive pricing, driving them to alternative financial institutions [88, 100]. While certain businesses have long attempted such forms of price discrimination [101], banks have yet to deploy such practices widely.
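To see why first-degree price discrimination is attractive to lenders in theory, the following sketch compares its theoretical limit, complete information in which each applicant is charged exactly the highest rate they would accept, with the lender's best single uniform rate. It assumes simplified lender economics (total loss of principal on default, no funding costs) and hypothetical applicants; it is not a model of the empirical studies cited above, which describe a far less extreme, partial form of personalisation.

```python
# Hypothetical applicants: (predicted probability of default, highest annual rate they would accept).
APPLICANTS = [(0.01, 0.04), (0.02, 0.05), (0.05, 0.09), (0.08, 0.12), (0.03, 0.045)]

def expected_profit(pd: float, rate: float) -> float:
    """Expected profit per £1 lent, assuming total loss of principal on default and no funding costs."""
    return (1 - pd) * rate - pd

def uniform_outcome(applicants, rate):
    """One rate for all; every applicant willing to pay it is served (no individual pricing)."""
    served = [(pd, m) for pd, m in applicants if m >= rate]
    profit = sum(expected_profit(pd, rate) for pd, _ in served)
    surplus = sum(m - rate for _, m in served)  # consumers' gain from paying less than their maximum
    return len(served), profit, surplus

def first_degree_outcome(applicants):
    """Complete information: each applicant is charged exactly their maximum acceptable rate
    and is served whenever that rate at least breaks even for the lender."""
    served = [(pd, m) for pd, m in applicants if expected_profit(pd, m) >= 0]
    profit = sum(expected_profit(pd, m) for pd, m in served)
    return len(served), profit, 0.0  # consumer surplus is fully extracted

# The lender's most profitable uniform rate, searched over the applicants' own maximum rates.
best_rate = max((m for _, m in APPLICANTS), key=lambda r: uniform_outcome(APPLICANTS, r)[1])
print("uniform:", best_rate, uniform_outcome(APPLICANTS, best_rate))
print("first-degree:", first_degree_outcome(APPLICANTS))
```

Under these assumptions, perfect first-degree price discrimination serves more applicants and raises the lender's expected profit, but leaves consumers with no surplus at all.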

When considering accuracy, it is important to remember that any supervised ML model will always have some generalisation error. Designers of ML therefore need to consider the consequences of different types of inaccuracy. For example, false positives will result in individuals being offered loans on the inaccurate prediction that they are a low default risk when they are in fact a high default risk. The potential effects are twofold: (1) the bank’s costs increase with the rise in credit risk, and if default eventuates, it is unlikely to make any profit on the loan; (2) the consumer’s costs increase with potential default, although they may benefit from being offered a loan they would otherwise not have been granted. False negatives will result in individuals being refused loans on the inaccurate prediction that they are a high default risk when they are in fact a low default risk. Again, the potential effects are twofold: (1) the bank incurs an opportunity cost in rejecting a potentially profitable consumer but faces no increase in credit risk; (2) the consumer loses the home loan but is not placed in an unaffordable loan position. Inaccurate labelling of consumers is not only a critical legal and financial risk for businesses to mitigate but also a reputational risk.Footnote 9
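The asymmetry between the two error types can be expressed as a simple cost calculation. This sketch follows the article's convention that a 'positive' is an applicant approved as a low default risk, and approves a loan when the predicted probability of default falls below a cut-off. The applicants, their outcomes and the cost assigned to each error are hypothetical; in practice such costs depend on loss given default, margins and the non-financial harms described above.

```python
# Hypothetical applicants: (predicted probability of default, did they actually default?)
CASES = [(0.02, False), (0.04, True), (0.10, False), (0.25, True), (0.07, False), (0.30, False)]
LOSS_PER_FALSE_POSITIVE = 100.0  # hypothetical loss on an approved loan that defaults
LOSS_PER_FALSE_NEGATIVE = 10.0   # hypothetical profit forgone on a refused borrower who would have repaid

def confusion(cases, cutoff):
    """Count outcomes, treating approval (predicted PD below the cut-off) as the 'positive' prediction."""
    tp = fp = tn = fn = 0
    for pd, defaulted in cases:
        approved = pd < cutoff
        if approved and not defaulted:
            tp += 1
        elif approved and defaulted:
            fp += 1  # offered a loan, but defaults
        elif defaulted:
            tn += 1  # refused, and would have defaulted
        else:
            fn += 1  # refused, but would have repaid
    return tp, fp, tn, fn

def lender_error_cost(cases, cutoff):
    """Cost to the lender of the errors made at a given cut-off."""
    _, fp, _, fn = confusion(cases, cutoff)
    return fp * LOSS_PER_FALSE_POSITIVE + fn * LOSS_PER_FALSE_NEGATIVE

for cutoff in (0.05, 0.20, 0.50):
    print(cutoff, confusion(CASES, cutoff), lender_error_cost(CASES, cutoff))
```

Moving the cut-off trades one error type for the other: a strict cut-off refuses more creditworthy applicants, while a lenient one approves more applicants who later default.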

4.1.3 Predicting future behaviour

As noted above, moral hazard is one of the major transaction costs that create suboptimal outcomes [103]. Moral hazard arises during a contract because credit institutions cannot observe all the future actions of their borrowers. The ability to predict future decisions more accurately depends on access to, and informed consent for the use of, consumers’ financial and personal data.

In some cases, credit institutions will collect more data from their consumers. Consumers who agree to increased disclosure may in turn receive more favourable credit contract terms, offset by the credit institutions’ reduced exposure to moral hazard [104]. Over time, using ML predictions in partnership with disclosures from borrowers can reduce moral hazard overall. Such relationships build trust and reputation, requiring less monitoring effort from credit institutions [105]. Trust is important in many aspects of this relationship.

4.2 Trust

Good reputation and trust in corporations are necessary for generating profit and retaining consumers [106]. However, in recent years, there has been evidence of an erosion of trust in the financial sector of advanced economies [107]. When an institution’s reputation is called into question, especially when consumers no longer trust that their interests are protected, corporations lose considerable profits and market standing [106]. Introducing algorithmic decision-making to the financial sector can further erode consumer trust and institutions’ reputations.

Consider the notable example of the Apple Card (underwritten by Goldman Sachs Bank USA), which was widely criticised, especially on social media, for alleged discrimination against female credit card applicants [108, 109]. Some women were offered lower credit limits or denied a card, while their husbands did not face the same challenges. The claims ‘sparked vigorous public conversation about the effects of sex-based bias on lending, the hazards of using algorithms and ML to set credit terms, as well as reliance on credit scores to evaluate the creditworthiness of applicants’ [110]. The New York State Department of Financial Services investigated the algorithms involved, concluded that there were valid reasons for these instances of disparity and found no discriminatory practices [110]. The Department acknowledged that, in the context of algorithmic lending, there are risks, including ‘inaccuracy in assessing creditworthiness, discriminatory outcomes and limited transparency’ [110]. In particular, limited transparency is a severe issue for trust and accountability in consumer credit.

An essential part of this discussion is the ability to explain the decisions made with ML. The supposed lack of explainability of ML is something of a misnomer. Some ML models are inherently explainable (e.g., linear models and decision trees). Other models may be too complex to comprehend (e.g., random forests and neural networks) [111]. However, even for large neural networks, we know how they make predictions and usually understand what information is used. As shown in Sect. 3, there are transparent parameters and thresholds that precisely guide the design of ML. The problem is rather that predictions are made in a way that is difficult for humans to grasp because of the millions of parameters involved [112]. This reality is often confused with the well-known statement that ML decisions do not inherently generate reasons or explanations [113,114,115]. As a result, ML is often referred to as a ‘black box’ that obscures the rationale or reasons for a decision [116]. Depending on one’s moral assumptions, this may be seen as morally problematic because the decision-making cannot be scrutinised publicly. The concept of ‘explainability’ has arisen as a solution [115]. There is growing awareness of the importance of explainable AI in underpinning accountability, but there is still a long way to go before full transparency is achieved. Such processes are relevant in making the complexity of ML systems more accessible. ML may also be unexplainable because non-traditional data sources and modelling methods may generate novel predictive inferences: ML often generates insights from big data that a human decision-maker would not otherwise have reached [61].
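For an inherently explainable model such as a logistic regression, each feature's contribution to a prediction can be read off directly, which is one way of generating the 'reasons' that more complex models do not produce on their own. The sketch below assumes a hypothetical fitted scorecard; the feature names, intercept and coefficients are invented for illustration.

```python
import math

# Hypothetical fitted logistic-regression scorecard: log-odds of default = intercept + sum(coef * value).
INTERCEPT = -1.0
COEFFICIENTS = {
    "missed_payments_12m": 0.8,  # each missed payment in the last year raises the log-odds of default
    "debt_to_income": 2.0,       # ratio of debt repayments to income
    "years_at_address": -0.1,    # longer residence slightly lowers the log-odds
}

def explain(applicant):
    """Return the predicted probability of default and each feature's contribution to the log-odds,
    ordered from the feature that raised the risk most to the one that lowered it most."""
    contributions = {name: coef * applicant[name] for name, coef in COEFFICIENTS.items()}
    log_odds = INTERCEPT + sum(contributions.values())
    probability_of_default = 1.0 / (1.0 + math.exp(-log_odds))
    ranked = sorted(contributions.items(), key=lambda item: item[1], reverse=True)
    return probability_of_default, ranked

pd_hat, reasons = explain({"missed_payments_12m": 2, "debt_to_income": 0.5, "years_at_address": 3})
print(f"predicted PD = {pd_hat:.2f}")
for name, contribution in reasons:
    print(f"  {name}: {contribution:+.2f}")
```

A neural network with millions of parameters offers no equally direct decomposition of this kind, which is why separate explanation methods are needed for such models.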

Creating trust in corporations and their ML processes will affect the opportunities and risks discussed in this article. The investigation into the Goldman Sachs Apple Card algorithm is a prime example of a regulator evaluating the underlying model and data for bias in algorithmic decision-making through statistical analysis [110]. When creditworthiness assessments were still a human-led judgement, such analysis was not possible. Therefore, with a greater understanding of interdisciplinary methods, there is a substantial opportunity for transparent and accountable use of ML in financial processes.

4.3 Privacy and autonomy

That companies should maximise profit for the benefit of their shareholders, to the detriment of their consumers’ privacy and autonomy, seems incongruous with good governance [117]. This contradiction seems particularly relevant to credit institutions with millions of citizens in their consumer base. Using non-traditional data sources raises severe privacy and autonomy concerns, as do the potential impacts of classifying different consumer behaviours [118]. Banks may also purchase data from third-party data brokers, who collate information from online surveillance, to introduce new types of data. Big data for ML therefore contains more personal information than was used in previous decision-making, which can alleviate or exacerbate information asymmetry [119].

Actors who collect data on every aspect of online interactions may know far more than the individuals concerned, who often lack knowledge of what is known about them [119]. In addition, consumer data is in many cases obtained without fully informed consent [120, 121]. More broadly, individuals may feel that ML technology is intrusive and untrustworthy. The ubiquity of ML in shaping decision-making has significant consequences for human behaviour. ML can be used to personalise choice environments, nudge decisions, deceive and even manipulate behaviour [122, 123]. Deferring decision-making to ML creates a seismic shift in how users make choices [124], and therefore changes individuals’ capacity for self-determination [125, 126]. Increasing reliance on algorithmic systems raises questions of whether users’ choices are authentic and whether this tendency will impoverish our capacity for self-determination [124, 126].

The denial of autonomy may lead to suboptimal outcomes by exacerbating informational effects on consumers’ ability to make rational financial decisions. Emerging research suggests that a lack of autonomy imposes a psychological cost on consumers that exacerbates existing transaction costs [127]. While nudging-like actions may, in some cases, provide more information to consumers [128], there is a risk that, given the inherent conflict between credit institutions and consumers, the denial of autonomy will not be in all parties’ best interests [129]. Achieving their expected utility in home loans will only become more complex for consumers as choice architectures exacerbate existing information asymmetries.

In data-driven lending, consumers face not only the traditional asymmetries of credit contracts but also asymmetries arising from the volume and manner of personal data processing. Invasions of privacy are then themselves a cost that limits consumers’ ability to make well-informed decisions [130]. In addition, once companies have obtained such large datasets, rich in personal information, they become targets for cyberattacks. Recent years have seen an increase in the frequency and magnitude of cybersecurity breaches [131]. For instance, in 2017, a breach at the credit reporting agency Equifax exposed the names, social security numbers, addresses and other personal information of approximately 147 million people. Equifax settled allegations by the FTC that the company had failed to take reasonable steps to secure its network [132]. The loss of consumers’ privacy and confidentiality is an immense cost. Businesses do not pay an equal price, but they similarly face the loss of reputation and of the trust of their consumers and broader society, in addition to the direct and operational costs of a cyber incident [133]. The protection of consumers’ private information is an essential consideration in the use of ML.

4.4 Risk of bias and discrimination

The goal of credit institutions using ML is, ‘in fact, to discriminate’ [134]. Naturally, the aim is to discriminate lawfully on the basis of relevant and legitimate characteristics when determining consumer creditworthiness [134]. Biases towards specific personal characteristics, such as race, gender, marital status or sexual orientation, have historically affected loan and credit decision-making processes. Such processes have historically strayed into unlawful discrimination, and the introduction of ML has amplified concerns about minority groups’ access to finance [9, 11, 87, 135,136,137]. The variables from consumers’ digital footprints often serve as proxies for income, character and reputation, which are highly valuable for default prediction [134].

Algorithmic bias is not a term of art; it is a general term that can refer to various biases [138, 139]. Bias may arise from unsuitable training data or from a poorly designed ML model that makes predictions unrepresentative of reality. ML may also replicate existing societal disparities that are captured in the data but are not intrinsic to the ML system. Although such a system may be technically accurate, a protected group may receive different outcomes because the ML prediction system mimics the structural bias in the data. In particular, the use of ML in credit scoring and access to financial services has raised concerns about the use of ‘digital reputation’ as a significant determinant of a person’s life chances [18,19,20]. Pasquale notes that ‘unlike the engineer, whose studies do nothing to the bridges she examines, a credit scoring system increases the chance of a consumer defaulting once it labels him a risk and prices a loan accordingly’ [18].

A wealth of literature has identified the likelihood that ML models will replicate human biases present in training datasets [134, 140, 141]. Individuals with protected attributes may be charged higher interest rates or rejected more often than other consumers [142]. Sometimes this is an unintended effect of ML identifying subtle historical patterns in datasets. ML does not independently acquire discriminatory associations; it learns and amplifies those evident in training datasets before applying them to future decisions. ML will do precisely what it is designed to do, which is not always what we thought we designed it to do. Some institutions may act in their own interest and opportunistically use such insights to target vulnerable consumers with unfavourable contracts [143]. In any event, intended or not, the use of ML that unfairly or unlawfully correlates default risk with protected characteristics should be prevented [135, 144, 145]. When ML learns from data, it absorbs the social bias in the data provided and projects the learned patterns onto its predictions [81, 146].
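How a model can reproduce a historical disparity without ever seeing the protected attribute can be shown with a deliberately tiny example. The sketch assumes hypothetical historical decisions in which group B was approved less often and in which group membership correlates with postcode. A 'model' that simply learns the historical approval rate for each postcode, a stand-in for a real learning algorithm, then passes the disparity on to its scores.

```python
from collections import defaultdict

# Hypothetical historical decisions: (protected group, postcode, approved?).
# Group membership correlates with postcode, and past approvals were lower for group B.
HISTORY = [
    ("A", "P1", True), ("A", "P1", True), ("A", "P1", True), ("A", "P2", False),
    ("B", "P2", False), ("B", "P2", False), ("B", "P2", True), ("B", "P1", True),
]

def fit_postcode_model(history):
    """'Learn' the approval rate per postcode; the protected group is never used as a feature."""
    counts = defaultdict(lambda: [0, 0])  # postcode -> [approvals, total]
    for _, postcode, approved in history:
        counts[postcode][0] += int(approved)
        counts[postcode][1] += 1
    return {pc: approvals / total for pc, (approvals, total) in counts.items()}

def mean_score_by_group(history, model):
    """Average model score for each protected group (used only for auditing, not for scoring)."""
    scores = defaultdict(list)
    for group, postcode, _ in history:
        scores[group].append(model[postcode])
    return {g: sum(s) / len(s) for g, s in scores.items()}

model = fit_postcode_model(HISTORY)
scores = mean_score_by_group(HISTORY, model)
print(model, scores)
```

Although the group label is excluded from the model's inputs, postcode acts as a proxy, so group B receives markedly lower average scores.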

Recently, many scholars have attempted to define what fairness means in overcoming algorithmic bias [147,148,149,150,151]. The field has been led by the ML research community, which has proposed statistical metrics that attempt to measure disparity in a legally meaningful way. There are a growing number of suggestions for creating fairer outcomes using these technical metrics. Fairness metrics can identify differences in predictions between groups of individuals by comparing predictions for a randomly selected input \(X\) across groups defined by a protected attribute, \(g\left({X}^{P}\right)\). Using fairness metrics may help identify where bias arises and how to mitigate it on average (that is, over multiple decisions). The question here is whether the ML that generates predictions or decisions tends either to reinforce injustice against members of a protected category or to introduce new forms of inequality against such individuals.

There are four general categories of technical fairness metrics. The first two, statistical parity and conditional statistical parity, evaluate whether the proportion of favourable predictions is the same across groups defined by protected characteristics (in the conditional case, after accounting for conditions under which it would be legitimate for predictions to differ).Footnote 10 The goal of conditional statistical parity is to measure group parity while allowing some difference in distribution where there is a legitimate alternative explanation. For example, some commentators have identified that, in deciding on a home loan, legitimate variables may include the consumer’s earnings and employment [117]. However, even among seemingly neutral variables, it is difficult to identify legitimate features that do not at least partly correlate with a protected attribute (such variables can act as a proxy for a protected attribute) [153].
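The difference between the first two categories can be shown on hypothetical decisions. In the sketch below, approval rates differ between groups A and B, so statistical parity is not met; within each employment status, however, they are equal, so conditional statistical parity holds when employment is treated as the legitimate factor. Both the data and the choice of employment as the conditioning variable are assumptions for illustration, and, as noted above, a seemingly legitimate variable may itself act as a proxy.

```python
from collections import defaultdict

# Hypothetical decisions: (group, employed?, approved?)
DECISIONS = [
    ("A", True, True), ("A", True, True), ("A", False, False), ("A", True, True),
    ("B", True, True), ("B", False, False), ("B", False, False), ("B", True, True),
]

def approval_rates(rows, key):
    """Share of approvals within each stratum defined by key(row)."""
    tally = defaultdict(lambda: [0, 0])  # stratum -> [approvals, total]
    for row in rows:
        k = key(row)
        tally[k][0] += int(row[2])
        tally[k][1] += 1
    return {k: approvals / total for k, (approvals, total) in tally.items()}

# Statistical parity compares P(approved | group).
sp = approval_rates(DECISIONS, key=lambda r: r[0])
# Conditional statistical parity compares P(approved | group, employed).
csp = approval_rates(DECISIONS, key=lambda r: (r[0], r[1]))

print("statistical parity:", sp)
print("conditional statistical parity:", csp)
```

Here the whole gap between the groups is explained by employment status; whether that explanation is itself acceptable is the normative question the metric cannot answer.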

The third type of metric evaluates error parity: whether predictions are equally accurate, that is, whether error rates are equal, for individuals irrespective of group membership based on protected attributes [150, 152, 154]. As noted earlier in this article, prediction and decision errors, measured as false positives and false negatives, reveal important information about the design of an ML model. Depending on the context, a false positive may be more economically costly or morally significant than a false negative, or vice versa.
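Error parity can be checked by computing error rates separately for each group. This sketch follows the article's convention that a false positive is a loan approved for someone who defaults and a false negative is a refusal of someone who would have repaid; the decisions are hypothetical.

```python
from collections import defaultdict

# Hypothetical outcomes: (group, approved?, defaulted?)
OUTCOMES = (
    [("A", True, False)] * 3 + [("A", False, False)] + [("A", True, True)] + [("A", False, True)]
    + [("B", True, False)] + [("B", False, False)] * 3 + [("B", True, True)] + [("B", False, True)]
)

def error_rates(outcomes):
    """Per group: false-positive rate (approved among those who defaulted) and
    false-negative rate (refused among those who repaid)."""
    counts = defaultdict(lambda: {"fp": 0, "defaulted": 0, "fn": 0, "repaid": 0})
    for group, approved, defaulted in outcomes:
        c = counts[group]
        if defaulted:
            c["defaulted"] += 1
            c["fp"] += int(approved)
        else:
            c["repaid"] += 1
            c["fn"] += int(not approved)
    return {g: {"fpr": c["fp"] / c["defaulted"], "fnr": c["fn"] / c["repaid"]} for g, c in counts.items()}

for group, rates in sorted(error_rates(OUTCOMES).items()):
    print(group, rates)
```

In this example the false-positive rates match, but creditworthy applicants in group B are wrongly refused three times as often as those in group A, so error parity fails on false negatives.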

Further, a disparity in error rates may be felt more acutely by one group, such as a marginalised group, than by the majority. Finally, Dwork proposed the individual fairness metric, which holds that ‘any two individuals who are similar with respect to a particular task should be classified [on the target variable] similarly’ [153]. Critically, instead of comparing outcomes at the group level, where simple statistical parity could be intuitively unfair at the individual level, individual fairness approaches measure disparities in treatment between individuals with similar features.
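Dwork's individual fairness condition is usually formalised as a Lipschitz constraint: the difference between two individuals' outcomes should be no larger than a constant times the distance between them under a task-specific similarity metric. The sketch below simplifies outcomes to scalar credit scores and assumes a hypothetical metric that compares only income and existing debt; choosing that metric is itself the hard normative question. The scoring function, which also penalises a postcode flag, is likewise hypothetical.

```python
import itertools

# Hypothetical applicants (income and debt in £10k; postcode_flag marks a particular area).
APPLICANTS = [
    {"id": "x1", "income": 3.0, "debt": 1.0, "postcode_flag": 0},
    {"id": "x2", "income": 3.0, "debt": 1.0, "postcode_flag": 1},
    {"id": "x3", "income": 5.0, "debt": 0.5, "postcode_flag": 0},
]

def task_distance(a, b):
    """Hypothetical task-relevant metric: only income and debt matter for similarity."""
    return abs(a["income"] - b["income"]) + abs(a["debt"] - b["debt"])

def score(applicant):
    """Hypothetical credit score that also penalises the postcode flag."""
    return 0.1 * applicant["income"] - 0.2 * applicant["debt"] - 0.3 * applicant["postcode_flag"]

def individual_fairness_violations(applicants, score_fn, distance_fn, lipschitz=1.0):
    """Pairs for which |score(a) - score(b)| > lipschitz * distance(a, b)."""
    return [
        (a["id"], b["id"])
        for a, b in itertools.combinations(applicants, 2)
        if abs(score_fn(a) - score_fn(b)) > lipschitz * distance_fn(a, b)
    ]

print(individual_fairness_violations(APPLICANTS, score, task_distance))
```

Applicants x1 and x2 are identical on the task-relevant features but receive different scores, so the pair is flagged even though no group-level statistic has been computed.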

Notably, ‘if there is structural bias in the decision pipeline, no [group fairness] mechanism can guarantee fairness’ [155]. Where there is a structural and systemic issue in a dataset, such as historical or label bias, attempts to preclude systematic bias further down the ML pipeline may be almost futile. It may be argued that implementing fairness metrics does not prevent decisions from mirroring existing social inequalities that we may judge to be unjust, such as discrimination against women consumers. However, fairness metrics may allow the measurement of disparate outcomes on the grounds of protected characteristics, taking account of their correlation with legitimate factors. Morally, the systemic injustice that gives rise to such disparities ought to be removed, but the law may constrain modifications made on the basis of statistical metrics. Applying the law to these questions of unfairness in algorithms and compounding inequality is particularly challenging [140, 156]. For example, one approach to equalising outcomes across groups could be to vary the cut-offs for loan applications to ensure that majority and minority groups have equal opportunities to secure a loan [157]. However, such affirmative measures raise normative and legal issues [157]. Each group fairness metric requires different assumptions.
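The cut-off approach mentioned above can be sketched as follows, assuming hypothetical credit scores (higher is better) for a majority and a minority group and a hypothetical target approval rate. A single cut-off produces unequal approval rates, while a separate cut-off per group equalises them. The sketch illustrates the mechanism only.

```python
# Hypothetical credit scores (higher means more creditworthy).
MAJORITY = [0.9, 0.8, 0.7, 0.6, 0.5]
MINORITY = [0.7, 0.6, 0.5, 0.4, 0.3]

def approval_rate(scores, cutoff):
    """Share of applicants whose score meets the cut-off."""
    return sum(s >= cutoff for s in scores) / len(scores)

def cutoff_for_rate(scores, target_rate):
    """Highest observed score that, used as the cut-off, approves at least target_rate of the group."""
    for cutoff in sorted(set(scores), reverse=True):
        if approval_rate(scores, cutoff) >= target_rate:
            return cutoff
    return min(scores)

single = 0.6
print("single cut-off:", approval_rate(MAJORITY, single), approval_rate(MINORITY, single))

target = 0.6
maj_cut, min_cut = cutoff_for_rate(MAJORITY, target), cutoff_for_rate(MINORITY, target)
print("group cut-offs:", maj_cut, min_cut, approval_rate(MAJORITY, maj_cut), approval_rate(MINORITY, min_cut))
```

Equal approval rates are reached only by applying a lower threshold to the minority group, which is precisely the kind of affirmative measure that raises the normative and legal questions noted in the text.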

Most ML techniques still focus on the traditional objectives of maximising accuracy and performance with respect to the known value of \(Y\). Merely adding constraints to this optimisation in the form of technical metrics creates tensions between fairness and accuracy. This is often referred to as the ‘cost of fair classification’ or the ‘fairness accuracy trade-off’ [150, 158, 159]. Such a trade-off arises, for example, when information on an underprivileged group is biased (noisier) because of historical inequalities, making it difficult to separate the positive and negative labels of that group. When an algorithm is instructed to prioritise fairness, this objective is specified first, and accuracy is then optimised as a subsidiary objective. So, based on the discussion here, if a woman of colour is less likely to be offered a loan by a perfectly accurate model, prioritising group fairness metrics will sacrifice some accuracy.
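The trade-off can appear even with a perfectly accurate model when repayment rates differ between groups, a simpler cause than the noisy labels described above. In the hypothetical data below, a single cut-off classifies every applicant correctly but approves the two groups at different rates; imposing equal approval rates through group-specific cut-offs (chosen by hand here) necessarily misclassifies some applicants. The data, groups and cut-offs are assumptions for illustration.

```python
# Hypothetical (score, actually repaid?) pairs; repayment rates differ between the groups.
MAJORITY = [(0.9, True), (0.8, True), (0.7, True), (0.6, False), (0.5, False)]
MINORITY = [(0.7, True), (0.6, False), (0.5, False), (0.4, False), (0.3, False)]

def decide(rows, cutoff):
    """Pairs of (approved?, actually repaid?) for a given cut-off."""
    return [(score >= cutoff, repaid) for score, repaid in rows]

def accuracy(pairs):
    """Share of decisions that match the actual outcome."""
    return sum(approved == repaid for approved, repaid in pairs) / len(pairs)

def approval_rate(pairs):
    return sum(approved for approved, _ in pairs) / len(pairs)

# Accuracy-maximising: one cut-off for everyone.
maj_u, min_u = decide(MAJORITY, 0.7), decide(MINORITY, 0.7)
# Statistical parity imposed: hand-picked cut-offs so that both groups are approved at a rate of 0.4.
maj_p, min_p = decide(MAJORITY, 0.8), decide(MINORITY, 0.6)

for label, maj, mino in (("unconstrained", maj_u, min_u), ("parity", maj_p, min_p)):
    print(label, approval_rate(maj), approval_rate(mino), accuracy(maj + mino))
```

Equalising approval rates here costs 20 percentage points of accuracy: one creditworthy majority applicant is refused and one minority applicant who does not repay is approved.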

Algorithmic bias as a general phenomenon presents a material legal, financial and reputational risk for banks, although particular instances will be fact-sensitive [144]. Without delving into the legal intricacies of discriminatory algorithms, this article observes that in the context of private ordering, the corporate ecosystem shapes the incentives of banks and their role in purpose-driven activities [160]. To overcome the incidental or intentional risk of biased algorithms, greater awareness and better technical design could help introduce more objectivity and greater accuracy in decision-making.

4.5 Summary of costs and benefits of ML in financial services

The outcomes of using ML systems in financial services are complex, and it would be wrong to conclude that their implementation will automatically produce positive outcomes. However, many potential benefits could be explored with awareness of, and strategies to mitigate, the suboptimal outcomes ML may cause. As discussed in this article, the potential optimal outcomes of ML include: reducing transaction costs, primarily opportunism created by information asymmetry, including adverse selection and moral hazard effects; reducing monitoring and operational costs, allowing increased profit margins to be redistributed to consumers; and improving the categorisation of consumers, allowing more efficient and tailored pricing of financial products that maintains the firm’s profit margins while improving consumers’ financial viability and satisfaction.

However, the potential suboptimal outcomes include: the risk of inaccurate predictions that misjudge consumers’ individual characteristics, leading to them being priced out of the market or losing competitive pricing; poor design of new ML systems that lack important normative considerations, which may lead to inaccuracies and inefficiencies, exacerbate existing self-interested behaviour that leads to ‘gaming the algorithm’ and increase monitoring costs for new behaviours; and biased ML systems that conflate features of certain individuals with inaccurate risk factors, resulting in unfair or discriminatory outcomes by entrenching historical bias or conflating protected attributes with financial risk factors. These findings highlight both the opportunities and the risks, and show that these issues often turn on good design and on connecting normative principles to technical understanding. Good design can build trust, promote optimal economic outcomes and protect the rights of consumers while improving the profit of banks. Bad design leads to the inverse.

The rapidly developing academic literature and policy reviews that examine or propose regulatory reform are often ‘preoccupied with the moral obligation to prevent harm’ [161, 162]. As this article has outlined, the prevention of harm is essential to mitigating the potential economic and moral costs of using ML. However, there is also an opportunity to focus on promoting these principles and aligning reform with optimal outcomes for both consumers and companies. Future work on regulatory issues will benefit from the analysis in this article, which sets out the underlying incentives and interests that shape behaviour in this area.

5 Conclusions

This article has challenged the persistent assumption that the use of algorithmic credit scoring and alternative data will only result in discriminatory outcomes or harm to consumers. Potential benefits from well-designed tools should not be so readily dismissed. The ethical desiderata, such as trust, privacy, autonomy and fairness, initially studied in isolation, benefit from intersectional research alongside corporate perspectives. At least in respect of banking regulation, legal norms tend to mirror economic theory [17]. Therefore, this economic analysis frames the critical issues for further regulatory work. Banks, like most companies, will continue to function with the prioritisation of profit [160]. They are not, and should not be, charities [160]. However, the future of corporations may shift with the knowledge, as described by Larry Fink, that ‘in fact, profits and purpose are inextricably linked’ [163]. At the same time, as many reconsider the purpose and values of corporations, there is a similar impetus for the ethical design of AI.

The serendipitous timing of these challenges should be harnessed. Some of the critical challenges that credit institutions must overcome regarding their purpose and profits overlap with the moral challenges posed by ML. At present, academics argue that ‘we lack a moral framework of justice in respect of evaluating the inputs, outcomes and practices of AI’ [164, 165]. Normative questions about the moral framework that guides AI cannot be divorced from questions of how we evaluate the moral framework that guides corporations. This is because, despite the misnomer that treats AI as something ephemeral or autonomous, AI consists of tangible decision rules and utility functions informed by the assumptions and goals set by its architect.

The observations in this article culminate in two essential contributions to the vast and growing literature on algorithmic decision-making. First, examining the outcomes of using ML through a combined economic and normative method is novel and allows for more rigorous consideration of real-world costs and benefits. Second, despite the risk of harm identified by many experts in the field, there is a clear opportunity to design ML in ways that improve and optimise economic and normative outcomes for banks and individuals in the consumer credit market. This article proposes renewed enthusiasm for the positive economic and social opportunities of more accurate and ethically designed ML that aims to maximise optimal outcomes from algorithmic decision-making.

Data availability

Data sharing is not applicable to this article as no new data was created or analysed.

Notes

The article concerns retail banks and consumers following the definition of ‘consumer’ as ‘an individual acting for purposes that are wholly or mainly outside that individual’s trade, business, craft or profession’, in Consumer Rights Act 2015 (UK) s 2.

Warren and Westbrook use the term ‘strongly adjusting creditors’ to describe creditors with sufficient informational position and resources that would be able to negotiate for or adjust fully to new bankruptcy regimes by being able to negotiate the best possible terms for themselves [53].

FCA Handbook, Consumer Credit Sourcebook (CONC) 2022.

All input variables are denoted \(\mathcal{X}\). \(X\) represents a randomly selected input variable from a dataset. An individual, or observed, variable is \(x\). \(\mathcal{Y}\) refers to the generic output variable. In training datasets, \(Y\) is known, and \(\widehat{Y}\) is the predicted outcome.

Assuming the presence of enforceable contracts and the rule of law (without express consideration of specific legal obligations). See modalities of regulation (law, markets, norms, and architecture) [89].

There are legal questions related to price discrimination; however, this article first considers its economic effects.

Second-degree price discrimination is altered pricing for high quantities (e.g., wholesale discounts). Third-degree price discrimination is altered pricing for different consumer groups (e.g., student discounts) [95].

References

Russell, S., Norvig, P.: Artificial Intelligence: A Modern Approach. Pearson, Hoboken (2021)

Institute of International Finance: Machine Learning in Credit Risk. https://www.iif.com/Portals/0/Files/content/Research/iif_mlcr_2nd_8_15_19.pdf (2019). Accessed 25 Oct 2022

Cambridge Centre for Alternative Finance, World Economic Forum: Transforming Paradigms: A Global AI in Financial Services Survey. (2020)

European Banking Authority: Guidelines on Loan Origination and Monitoring. (2020)

Financial Conduct Authority: Retail Lending. (2019)

Black, H., Schweitzer, R.L., Mandell, L.: Discrimination in mortgage lending. Am. Econ. Rev. 68, 186–191 (1978)

Rice, L., Swesnik, D.: Discriminatory effects of credit scoring on communities of color. Suffolk Univ. Law Rev. 46, 935–966 (2013)

Rice, L.: Long Before Redlining: Racial Disparities in Homeownership Need Intentional Policies. https://shelterforce.org/2019/02/15/long-before-redlining-racial-disparities-in-homeownership-need-intentional-policies/. (2019). Accessed 23 May 2021

Barocas, S., Selbst, A.: Big data’s disparate impact. Calif. Law Rev. 104, 671–732 (2016). https://doi.org/10.2139/ssrn.2477899

Bruckner, M.: The promise and perils of algorithmic lenders’ use of big data. Chic. Kent. Law Rev. 93, 3–60 (2018)

Hurley, M., Adebayo, J.: Credit scoring in the era of big data. Yale J. Law Technol. 18, 148–216 (2017)

Citron, D., Pasquale, F.: The scored society: due process for automated predictions. Wash. Law Rev. 89, 1–33 (2014)

Ahmed, S., Alshater, M.M., Ammari, A.E., Hammami, H.: Artificial intelligence and machine learning in finance: a bibliometric review. Res. Int. Bus. Financ. 61, 101646 (2022). https://doi.org/10.1016/j.ribaf.2022.101646

Mishkin, F.: The Economics of Money, Banking, and Financial Markets. Pearson, London (2019)

Krugman, P.: The Age of Diminished Expectations: US Economic Policy in the 1990s. MIT Press, Cambridge (1998)

Matthews, K., Thompson, J.: The Economics of Banking. Wiley, Hoboken (2008)

Dragomir, L.: European Prudential Banking Regulation and Supervision: The Legal Dimension. Routledge, New York (2010)

Pasquale, F.: The Black Box Society. Harvard University Press, Cambridge (2019)

Benjamin, R.: Race After Technology. Polity Press, Cambridge (2019)

Noble, S.U.: Algorithms of Oppression. New York University Press, New York (2018)

Kraakman, R., Armour, J., Davies, P., Enriques, L., Hansmann, H., Hertig, G., Hopt, K., Kanda, H., Pargendler, M., Ringe, W.-G., Rock, E.: The Anatomy of Corporate Law: A Comparative and Functional Approach. Oxford University Press, Oxford (2017)

Deakin, S.: The corporation as commons: rethinking property rights, governance and sustainability in the business enterprise. Queens Law J. 37, 339–381 (2012)

Friedman, M.: Capitalism and Freedom. University of Chicago Press, Chicago (1962)

Daily, C., Dalton, D., Cannella, A.: Corporate governance: decades of dialogue and data. Acad. Manage. Rev. 28, 371–382 (2003). https://doi.org/10.2307/30040727

Jensen, M., Meckling, W.: Theory of the firm: managerial behavior, agency costs and ownership structure. J. Financ. Econ. 3, 305–360 (1976). https://doi.org/10.1016/0304-405X(76)90026-X

Raelin, J., Bondy, K.: Putting the good back in good corporate governance: the presence and problems of double-layered agency theory. Corporate Governance Int. Rev. 21, 420–435 (2013). https://doi.org/10.1111/corg.12038

Krugman, P.: The Rage of the Bankers. https://www.nytimes.com/2015/09/21/opinion/paul-krugman-the-rage-of-the-bankers.html (2015). Accessed 21 June 2021

Freixas, X., Rochet, J.-C.: Microeconomics of Banking. MIT Press, Cambridge, MA (2008)

European Central Bank: Financial Stability Review. (2020)

Committee on the Global Financial System: Structural Changes in Banking After the Crisis. https://www.bis.org/publ/cgfs60.htm (2018). Accessed 25 Oct 2022

Saghi-Zedek, N., Tarazi, A.: Excess control rights, financial crisis and bank profitability and risk. J. Bank. Financ. 55, 361–379 (2015). https://doi.org/10.1016/j.jbankfin.2014.10.011

Bikker, J., Vervliet, T.: Bank profitability and risk-taking under low interest rates. Int. J. Financ. Econ. 23, 3–18 (2018). https://doi.org/10.1002/ijfe.1595

Bikker, J., Hu, H.: Cyclical Patterns in Profits, Provisioning and Lending of Banks. (2003)

Dietrich, A., Wanzenried, G.: Determinants of bank profitability before and during the crisis: evidence from Switzerland. J. Int. Financ. Mark. Inst. Money 21, 307–327 (2011). https://doi.org/10.1016/j.intfin.2010.11.002

Kok, C., Móré, C., Pancaro, C.: Bank Profitability Challenges in Euro Area Banks: The Role of Cyclical and Structural Factors. (2015)

Trujillo-Ponce, A.: What determines the profitability of banks? Evidence from Spain. Account. Financ. 53, 561–586 (2013). https://doi.org/10.1111/j.1467-629x.2011.00466.x

Walter, I.: Reputational risk. In: Finance Ethics. pp. 103–123. Wiley, Hoboken (2011)

Fombrun, C.: Reputation: Realizing Value from the Corporate Image. Harvard Business School Press, Boston (1996)

Bhattacharyya, N., Garrett, T.: Why people choose negative expected return assets: an empirical examination of a utility theoretic explanation. Federal Reserve Bank of St. Louis (2006). https://doi.org/10.20955/wp.2006.014

Simon, H.A.: A behavioral model of rational choice. Q. J. Econ. 69, 99–118 (1955). https://doi.org/10.2307/1884852

Posner, R.: Rational choice, behavioral economics, and the law. Stanford Law Rev. 50, 1551–1575 (1998). https://doi.org/10.2307/1229305

Sugden, R.: Rational choice: a survey of contributions from economics and philosophy. Econ. J. 101, 751–785 (1991). https://doi.org/10.2307/2233854

Kahneman, D., Wakker, P.P., Sarin, R.: Back to Bentham? Explorations of experienced utility. Q. J. Econ. 112, 375–405 (1997). https://doi.org/10.1162/003355397555235

Read, D.: Utility theory from Jeremy Bentham to Daniel Kahneman. (2004)

Sum, R.M., Nordin, N.: Decision making biases in insurance purchasing. J. Adv. Res. Social Behav. Sci. 10, 165–179 (2018)

Kunreuther, H., Pauly, M.: Insurance decision-making and market behavior. Found. Trends Microecon. 1, 63–127 (2005). https://doi.org/10.1561/0700000002

Kahneman, D.: Maps of bounded rationality: psychology for behavioral economics. Am. Econ. Rev. 93, 1449–1475 (2003). https://doi.org/10.1257/000282803322655392

Roach, B., Goodwin, N., Nelson, J.: Consumption and the consumer society. In: Microeconomics in Context. Routledge (2019). https://doi.org/10.4324/9780429438752-10

Bar-Gill, O.: Consumer transactions. In: Zamir, E., Teichman, D. (eds.) The Oxford Handbook of Behavioral Economics and the Law, pp. 464–490. Oxford University Press, Oxford (2014)

Lukacs, P., Neubecker, L., Rowan, P.: Price discrimination and cross-subsidy in financial services. (2017)

McKechnie, S.: Consumer buying behaviour in financial services: an overview. Int. J. Bank Mark. 10, 4–13 (1992). https://doi.org/10.1108/02652329210016803

Stigler, G.J.: The Theory of Price. Macmillan, New York (1987)

Warren, E., Westbrook, J.L.: Contracting out of bankruptcy: an empirical intervention. Harv. Law Rev. 118, 1197–1254 (2005)

Bebczuk, R.: Asymmetric Information in Financial Markets: Introduction and Applications. Cambridge University Press, Cambridge (2003)

Jolls, C.: Bounded Rationality, Behavioral Economics, and the Law. Oxford University Press, Oxford (2017)

Tversky, A., Kahneman, D.: Availability: a heuristic for judging frequency and probability. Cogn. Psychol. 5, 207–232 (1973). https://doi.org/10.1016/0010-0285(73)90033-9

Williamson, O.: The Economic Institutions of Capitalism: Firms, Markets, Relational Contracting. Collier Macmillan Publishers, London (1987)

Williamson, O.: Transaction-cost economics: the governance of contractual relations. J. Law Econ. 22, 195–223 (1979). https://doi.org/10.1086/466942

Zinman, J.: Consumer credit: too much or too little (or just right)? J. Legal Stud. 43, S209–S237 (2014). https://doi.org/10.1086/676133

Alchian, A.A.: Uncertainty, evolution, and economic theory. J. Polit. Econ. 58, 211–221 (1950). https://doi.org/10.1086/256940

Aggarwal, N.: The new morality of debt. Finance Dev. 58, 28–31 (2021)

Competition & Markets Authority: Algorithms: how they can reduce competition and harm consumers. (2021)

Kear, M.: Playing the credit score game: algorithms, ‘positive’ data and the personification of financial objects. Econ. Soc. 46, 346–368 (2017). https://doi.org/10.1080/03085147.2017.1412642

Hand, D.J., Henley, W.E.: Statistical classification methods in consumer credit scoring: a review. J. R. Stat. Soc. Ser. A Stat. Soc. 160, 523–541 (1997). https://doi.org/10.1111/j.1467-985x.1997.00078.x

Thomas, L.: A survey of credit and behavioural scoring: forecasting financial risk of lending to consumers. Int. J. Forecast. 16, 149–172 (2000). https://doi.org/10.1016/s0169-2070(00)00034-0

Dawes, R., Faust, D., Meehl, P.: Clinical versus actuarial judgment. Science 243, 1668–1674 (1989). https://doi.org/10.1126/science.2648573

Bank of England, Financial Conduct Authority: Machine Learning in UK Financial Services. (2019)

Ross, B.: Barclays Innovating in use of AI in Banking. AI Trends. https://www.aitrends.com/financial-services/barclays-innovating-in-use-of-ai-in-banking/ (2020). Accessed 23 Feb 2021

Standard Chartered: Partnering with Truera to tackle unjust bias in AI assisted decision making. https://www.sc.com/en/media/press-release/partnering-with-truera-to-tackle-unjust-bias-in-ai-assisted-decision-making/ (2020). Accessed 5 May 2021

ZestAI: Prestige Financial Services: Saying Yes to Better Buyers. https://www.zest.ai/resources/prestige-financial-services-saying-yes-to-better-buyers (2020). Accessed 5 May 2021

Datrics AI: Improving Credit Scoring for the Challenger Bank in the UK. Case Studies. https://datrics.ai/improving-credit-scoring-for-the-challenger-bank-in-the-uk (2022). Accessed 5 May 2021

Prove: AI and Open Banking’s Strides to Transform Lending. https://www.prove.com/blog/ai-open-banking-strides-to-transform-lending (2021). Accessed 10 August 2022

Turing, A.: Computing machinery and intelligence. Mind LIX, 433–460 (1950). https://doi.org/10.1093/mind/lix.236.433

McCarthy, J., Minsky, M., Rochester, N., Shannon, C.: A proposal for the Dartmouth summer research project on artificial intelligence (1955). Reprinted in: AI Mag. 27(4) (2006). https://doi.org/10.1609/aimag.v27i4.1904

Frankish, K., Ramsey, W.: Introduction. In: The Cambridge Handbook of Artificial Intelligence. pp. 1–12. Cambridge University Press, Cambridge (2014)

Hastie, T., Tibshirani, R., Friedman, J.: The Elements of Statistical Learning: Data Mining, Inference, and Prediction. Springer, New York (2009)

Franklin, S.: History, motivations, and core themes. In: Frankish, K., Ramsey, W. (eds.) The Cambridge Handbook of Artificial Intelligence, pp. 15–33. Cambridge University Press, Cambridge (2014)

Suresh, H., Guttag, J.V.: A framework for understanding sources of harm throughout the machine learning life cycle. In: Proceedings of the Equity and Access in Algorithms, Mechanisms, and Optimization. pp. 1–9. Association for Computing Machinery, New York (2021). https://doi.org/10.1145/3465416.3483305

Alpaydin, E.: Introduction to Machine Learning. MIT Press, Cambridge (2020)

Hardt, M., Price, E., Srebro, N.: Equality of opportunity in supervised learning. In: Proceedings of the 30th Conference on Neural Information Processing Systems. NIPS, Barcelona, Spain (2016). http://arxiv.org/abs/1610.02413

Corbett-Davies, S., Goel, S.: The measure and mismeasure of fairness: a critical review of fair machine learning. arXiv. (2018). https://doi.org/10.48550/arxiv.1808.00023

Gupta, P.: Balancing Bias and Variance to Control Errors in Machine Learning. Towards Data Science. https://towardsdatascience.com/balancing-bias-and-variance-to-control-errors-in-machine-learning-16ced95724db (2017). Accessed 23 June 2021

Bishop, C.: Pattern Recognition and Machine Learning. Springer, New York (2006)

Gelman, A., Carlin, J., Stern, H., Dunson, D., Vehtari, A., Rubin, D.: Bayesian Data Analysis. CRC Press, Boca Raton (2021)

Parmigiani, G., Inoue, L.: Decision Theory: Principles and Approaches. Wiley, Hoboken (2009)

Starmer, C.: Developments in non-expected utility theory: the hunt for a descriptive theory of choice under risk. J. Econ. Lit. 38, 332–382 (2000). https://doi.org/10.1257/jel.38.2.332

Gillis, T., Spiess, J.: Big data and discrimination. Univ. Chic. Law Rev. 86, 459–487 (2019)

Aggarwal, N.: The norms of algorithmic credit scoring. Camb. Law J. 80, 42–73 (2021). https://doi.org/10.2139/ssrn.3569083

Jagtiani, J., Lemieux, C.: Do Fintech lenders penetrate areas that are underserved by traditional banks? (2018). https://doi.org/10.21799/frbp.wp.2018.13

Board of Governors of the Federal Reserve System: Report to the Congress on Credit Scoring and Its Effects on the Availability and Affordability of Credit. (2007)

Experian Boost: Boost Your Score Instantly. www.experian.co.uk/consumer/experian-boost.html (2021). Accessed 24 June 2021

Truby, J., Brown, R., Dahdal, A.: Banking on AI: mandating a proactive approach to AI regulation in the financial sector. Law Financial Markets Rev. 14, 110–120 (2020). https://doi.org/10.1080/17521440.2020.1760454

Brainard, L.: What are we learning about artificial intelligence in financial services? https://www.bis.org/review/r181114g.htm (2018). Accessed 25 Oct 2022

Gautier, A., Ittoo, A., Van Cleynenbreugel, P.: AI algorithms, price discrimination and collusion: a technological, economic and legal perspective. Eur. J. Law Econ. 50, 405–435 (2020). https://doi.org/10.1007/s10657-020-09662-6

Kallus, N., Zhou, A.: Fairness, welfare, and equity in personalized pricing. In: Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency. pp. 296–314. Association for Computing Machinery, New York (2021). https://doi.org/10.1145/3442188.3445895

Chen, X., Owen, Z., Pixton, C., Simchi-Levi, D.: A statistical learning approach to personalization in revenue management. Manage. Sci. 68, 1923–1937 (2022). https://doi.org/10.1287/mnsc.2020.3772

Dubé, J.-P., Misra, S.: Personalized pricing and consumer welfare. J. Polit. Econ. (2022). https://doi.org/10.1086/720793

Abrardi, L., Cambini, C., Rondi, L.: The economics of artificial intelligence: a survey. (2019). https://doi.org/10.2139/ssrn.3425922

Ban, G., Keskin, N.B.: Personalized dynamic pricing with machine learning: high dimensional features and heterogeneous elasticity. Manage. Sci. 67, 5549–5568 (2021). https://doi.org/10.2139/ssrn.2972985

Cortis, D., Debattista, J., Debono, J., Farrell, M.: InsurTech. In: Lynn, T., Mooney, J.G., Rosati, P., Cummins, M. (eds.) Disrupting Finance: FinTech and Strategy in the 21st Century, pp. 71–84. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-02330-0_5

Azzolina, S., Razza, M., Sartiano, K., Weitschek, E.: Price discrimination in the online airline market: an empirical study. J. Theor. Appl. Electron. Commer. Res. 16, 2282–2303 (2021). https://doi.org/10.3390/jtaer16060126

Townley, C., Morrison, E., Yeung, K.: Big data and personalized price discrimination in EU competition law. Yearb. Eur. Law. 36, 683–748 (2017). https://doi.org/10.1093/yel/yex015

Zweifel, P., Eisen, R.: Insurance Economics. Springer, Heidelberg (2012)

Tirole, J.: Digital economies: the challenges for society. In: Economics for the Common Good. Princeton University Press, Princeton (2018). https://doi.org/10.1515/9781400889143-017

Diamond, D.W.: Monitoring and reputation: the choice between bank loans and directly placed debt. J. Polit. Econ. 99, 689–721 (1991). https://doi.org/10.1086/261775

Vanston, N.: Trust and Reputation in Financial Services. (2012)

Reddy, Y.: Society, Economic Policies, and the Financial Sector. https://www.bis.org/events/agm2012/sp120624.pdf (2012). Accessed 25 Oct 2022

Heinemeier Hansson, D.: https://twitter.com/dhh/status/1192540900393705474 (2019). Accessed 18 August 2022

Wozniak, S.: https://twitter.com/stevewoz/status/1193330241478901760 (2019). Accessed 18 August 2022

New York State Department of Financial Services: Report on Apple Card Investigation. (2021)

Verma, S., Lahiri, A., Dickerson, J.P., Lee, S.-I.: Pitfalls of explainable ML: an industry perspective. arXiv (2021). https://doi.org/10.48550/arxiv.2106.07758

Doshi-Velez, F., Kim, B.: Towards a rigorous science of interpretable machine learning. arXiv. (2017). https://doi.org/10.48550/arxiv.1702.08608

Miller, T.: Explanation in artificial intelligence: insights from the social sciences. Artif. Intell. 267, 1–38 (2019). https://doi.org/10.1016/j.artint.2018.07.007

Rudin, C.: Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat. Mach. Intell. 1, 206–215 (2019). https://doi.org/10.1038/s42256-019-0048-x

Weller, A.: Transparency: motivations and challenges. In: Samek, W., Montavon, G., Vedaldi, A., Hansen, L.K., Müller, K.-R. (eds.) Explainable AI: Interpreting, Explaining and Visualizing Deep Learning, pp. 23–40. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-28954-6_2

Linardatos, P., Papastefanopoulos, V., Kotsiantis, S.: Explainable AI: a review of machine learning interpretability methods. Entropy 23, 18 (2020). https://doi.org/10.3390/e23010018

Véliz, C.: Privacy is Power. Bantam Press, London (2020)

The Behavioural Insights Team: The Perception of Fairness of Algorithms and Proxy Information in Financial Services. (2019)