Abstract

Commentators interested in the societal implications of automated decision-making often overlook how decisions are made in the technology’s absence. For example, the benefits of ML and big data are often summarized as efficiency, objectivity, and consistency; the risks, meanwhile, include replicating historical discrimination and oversimplifying nuanced situations. While this perspective tracks when technology replaces capricious human judgements, it is ill-suited to contexts where standardized assessments already exist. In spaces like employment selection, the relevant question is how an ML model compares to a manually built test. In this paper, we explain that since the Civil Rights Act, industrial and organizational (I/O) psychologists have struggled to produce assessments without disparate impact. By examining the utility of ML for conducting exploratory analyses, coupled with the back-testing capability offered by advances in data science, we explain modern technology’s utility for hiring. We then empirically investigate a commercial hiring platform that applies several oft-cited benefits of ML to build custom job models for corporate employers. We focus on the disparate impact observed when models are deployed to evaluate real-world job candidates. Across a sample of 60 jobs built for 26 employers and used to evaluate approximately 400,000 candidates, minority-weighted impact ratios of 0.93 (Black–White), 0.97 (Hispanic–White), and 0.98 (Female–Male) are observed. We find similar results for candidates selecting disability-related accommodations within the platform versus unaccommodated users. We conclude by describing limitations, anticipating criticisms, and outlining further research.

1 Introduction

It is widely acknowledged that progress on workforce diversity in the U.S. has been insufficient [1–3]. Various factors contribute to this stagnancy, but the procedures employers use to screen job candidates are clearly relevant to the problem. Since the passage of Title VII of the Civil Rights Act of 1964, employers have been prohibited from engaging in two forms of discrimination: disparate treatment (e.g., intentional exclusion of a person because of their identity) and disparate impact (e.g., unintentional disadvantage of a protected class via a facially neutral procedure) [4]. While the former has become less common over time, the latter remains very widespread [5], effectively barring diverse candidates from job opportunities.

Why have hiring procedures that disadvantage minority group members persisted long after the civil rights era sought to eliminate them? In short, until fairly recently, there were very few equitable alternatives. Contrary to popular perception, the disparate impact provision of Title VII of the Civil Rights Act was intended as a form of pro-innovation regulation [6, 7]. In the 1960s, virtually all hiring procedures were designed with white middle-class men in mind, and policymakers and testing experts recognized that new instruments needed to be created to facilitate equal access to opportunities [8].Footnote 1 Unfortunately, this goal was never fully realized, even as scientific research on human aptitudes expanded in adjacent fields [9]. Employers instead settled into compliance strategies that provided legal justification for use of biased selection tools [10].Footnote 2

The emergence of so-called fairness-aware machine learning has brought renewed attention to the quest for less-biased hiring procedures for the first time in decades.Footnote 3 Various authors have written about the potential for AI to overcome barriers to progress in standardized evaluations [11, 12], but these perspectives are limited in two important ways. First, contemporary investigations of AI are seldom framed in terms that resonate with the practical concerns of employers operating in the present day [13]. As technologists have rushed to comment on a future in which companies have already adopted novel hiring solutions en masse, little attention has been paid to the pros and cons of machine learning vis-à-vis incumbent selection methods. Exacerbating this disconnect is the tendency of machine learning researchers to frame empirical investigations of decision-making procedures as unprecedented when, in reality, employers have been conducting such analyses for over half a century. Second, data from employment procedures, whether algorithmic or paper-and-pencil, are seldom available to the public. Some AI experts have attempted to overcome this challenge with synthetic datasets [14, 15], but such tactics further dilute the research’s immediate relevance for employers.

In this paper, we deviate from work that has positioned AI for hiring in a futuristic vacuum, instead evaluating the technology for its potential to overcome familiar challenges in employment selection.Footnote 4 Since the passage of Title VII of the Civil Rights Act, the industrial–organizational (I/O) psychology literature has struggled with the development of hiring procedures that simultaneously demonstrate validity and avoid significant racial impact [16]. The field’s general consensus that such assessments are not achievable has dramatically influenced how employers approach compliance with anti-discrimination regulations [17]. Namely, if an organization is considering a novel hiring procedure designed with less disparate impact, the assumption is that it lacks the validity evidence necessary to respond to litigation. Because machine learning models can be trained with both aspects of Title VII compliance in mind, we argue that a critical advantage offered by the technology is an unprecedented capacity to disrupt this paradigm, known as the “diversity–validity dilemma.”Footnote 5

Our investigation further stands out from previous investigations of AI for hiring by using data sourced directly from a commercial talent platform to empirically test our theory. The platform is an example of a system that uses fairness-aware machine learning, with the purpose being to provide large corporate employers with models to evaluate the alignment of candidates’ “soft skills” to the needs of a particular role. Beyond simply explaining how these models are trained and tested prior to deployment, we examine the magnitude of disparate impact ratios observed when they are used to screen real-world job applicants. By benchmarking these results against the typical impact observed with traditional hiring assessments, such as cognitive ability tests, we provide a practical interpretation of how machine learning may influence employment selection.

The paper is organized in three parts, with the first unpacking the status quo of the hiring technology industry, the second focusing on the opportunity for progress with AI, and the third presenting empirical results from a modern hiring platform utilized in high-volume screening contexts.Footnote 6 In part one, we describe how the limitations of science and technology in the post-civil rights era led employers to assume that they could only achieve compliance with Title VII through the use of highly traditional assessments and rigid validation methods. Over time, despite the law’s clear mandate for employers to consider both the fairness and validity of selection procedures, a consensus emerged that only the latter could be practically addressed. In part two, we explain how machine learning allows for a shift away from employment selection’s hyper-focus on traditional psychometric validity, which has perpetuated the so-called “diversity–validity dilemma.” For example, since algorithms can be used to efficiently build hundreds of versions of an assessment, the technology facilitates identifying the one that simultaneously meets standards for group-level statistical parity (e.g., disparate impact) and cross-validated accuracy (e.g., concurrent criterion validity). In part three, we conduct an empirical investigation of the utility of ML for mitigating disparate impact using data directly sourced from a commercial AI platform. We conclude by responding to anticipated concerns and providing directions for further research.

2 How Title VII of the Civil Rights Act tried (and failed) to disrupt ineffective employment testing

Lack of progress on disparate impact is often attributed to the failure of regulations to meaningfully influence employers’ behavior. We offer a more-nuanced reality: while employers have always been highly motivated to avoid discrimination liability, their options for doing so were historically constrained by the state of assessment science. In this section, we provide some important historical background on the use of standardized selection tests and the rationale for their inclusion in the Civil Rights Act. We explain how the enactment of Title VII effectively offered two pathways for employers to comply with the law: develop new hiring procedures without disparate impact or justify the bias in legacy assessments with evidence of job-relevance. As validation of traditional tests took off and research on fairer alternatives stagnated, the marketplace of employment selection technology was frozen in the biased science of the mid-twentieth century. To demonstrate the persistence of this problem in the present day, we summarize the available empirical literature on the disparate impact of various common hiring assessments. This overview of the status quo frames our explanation of ML’s advantages in the next section.

2.1 Some background on why employment selection needed regulation

Most people are unaware of the fact that standardized assessments are developed and sold as commercial products, but the industry dates back to the early twentieth century [18]. During World Wars I and II, the U.S. military experimented with the use of psychometric tests to classify recruits into appropriate roles [19, 20]. By the 1940s, American businesses had begun to develop formal personnel management functions, and supervisors “were no longer fully responsible for hiring workers, and they were not expected to have technical knowledge of all the jobs of their subordinates” [21]. Employment assessments became highly popular during this time, with some 3000 new products flooding the market between WWII and the civil rights movement; by the 1960s, some 80% of employers were using standardized selection tools [22].

While the quantity of products available in the testing industry grew rapidly in the mid-twentieth century, improvements in quality were not comparable. In 1963, one sociologist wrote that “virtually nothing” was known about the administration of most standardized assessments, let alone their efficacy in predicting important outcomes [23]. As employers adopted the new technology with “unchecked enthusiasm,” test publishers faced minimal incentives to engage with proper scientific methodology [24]. In the years leading up to the Civil Rights Act, professional psychologists began articulating concerns about the integrity of common hiring practices, emphasizing that profiteering consultants were selling organizations inappropriate products [25]. Additionally, commentators expressed a general suspicion that many selection tools on the market were facilitating covert discrimination against Black candidates under the auspices of objectivity [26]. When hiring procedures ultimately became a legislative issue under Title VII of the Civil Rights Act of 1964, lawmakers were concerned both with the immorality of discrimination and with the economic inefficiency of needlessly biased tests [27].

The historical context of the early assessment industry is important, because it disputes a common narrative regarding anti-discrimination regulations. Critics of disparate impact doctrine have long claimed that this statute impedes an organization’s ability to make optimal hiring decisions [28, 29], but when Title VII was enacted, it was hardly the case that employers were forced to do away with carefully designed procedures. Instead, the concern for lawmakers was that most hiring tools were extremely inefficient and responsible for significant “economic waste” in the labor market, as evidenced by the high unemployment rate among Black jobseekers [30]. By repeatedly emphasizing that Title VII would in no way restrict use of “bona fide qualification tests,” Congress was clear that the goal of disparate impact doctrine was to restrict only those hiring practices that were failing businesses and society [27]. Therefore, as legal scholar Steven Greenberger summarizes, disparate impact was intended “as a form of governmental regulation intended to enhance the nation’s labor productivity by fostering the creation and implementation of personnel practices which will insure that business accurately evaluates its applicants and employees” [6].

In practical terms, once the Civil Rights Act acknowledged disparate impact as a form of workplace discrimination, the task for employers became considering whether their hiring procedures presented liability risks, in accordance with the three-pronged analysis outlined by the disparate impact provision of Title VII.Footnote 7 First, an employer should evaluate whether a hiring procedure disproportionately excludes members of a protected class. Second, they should ensure that the procedure is designed to measure job-relevant criteria. Third, the employer should consider whether the procedure could be replaced by an equally valid alternative with less disparate impact [4]. To reiterate, while the threat of discrimination litigation certainly created the impetus to ask these questions, the intent of regulators has never been to rely on lawsuits to effect change [31]. On the contrary, as summarized in Albemarle Paper Co. v. Moody, Title VII’s preference for voluntary compliance instructs organizations “to self-examine and to self-evaluate their employment practices and to endeavor to eliminate, so far as possible, the last vestiges” of discrimination [32].

2.2 Title VII: a strong focus on voluntary compliance and self-reflection from employers

Once the Civil Rights Act was enacted and employers were required to take a closer look at their hiring procedures, it became clear that “almost all contemporary employment testing” was characterized by the “ubiquitous design defect of failing to account for the nationwide impacts of segregation” [33]. Assessments were so likely to disproportionately filter out minority candidates that one commentator described finding evidence of disparate impact as “no more difficult than picking up stones from a gravel road” [24]. Adding to this insult was the fact that “employers, for the most part, had never tried to articulate their job performance goals in a systematic fashion, to develop selection devices carefully targeted to serve those goals, or to measure the success of such devices by validity studies” [34]. Since virtually all hiring procedures filtered out diverse candidates, the new regulatory regime offered two options for achieving voluntary compliance: identify selection tools with less disparate impact or establish evidence of job-relevance.

2.2.1 Compliance option 1: mitigating disparate impact

The years immediately following the passage of the Civil Rights Act saw testing professionals launched into optimistic investigations of “test fairness,” spurred by the belief that scientific research could promote social equity. Prominent I/O psychologist Philip Ash described the mindset in a 1965 statement before the American Psychological Association: “Psychologists face, in the matter of civil rights, not a threat to their instruments but a challenge to their talents: to serve as resource people and advisers, to organize and administer programs, to make effective use of our tools, and to do research to clarify the problem” [35]. Efforts to develop “culture free” and “culture fair” tests were undertaken [36], and psychometricians “hastened to provide definitions of bias in terms of objective criteria, to develop rigorous and precise methods for studying bias, and to conduct empirical investigations of test bias” [37].

While assessment experts in the post-civil rights era demonstrated an openness to innovation, they were also limited by the state of science and the availability of data. Theoretically, strategies to reduce disparate impact could either focus on refining existing assessments or on measuring novel constructs [38]. Regarding the former option, researchers could hardly rely on historical datasets, because employers did not generally track candidates’ protected class information until it was required by law [39]. Additionally, even after demographic data became readily available, employment contexts were often extremely homogenous, making group-level comparisons unviable. Proposals that may have seemed promising for reducing disparate impact, like using non-verbal intelligence assays and ensuring work samples were properly scoped to focus on relevant tasks, were difficult to explore in depth [40, 41]. Regarding the latter option, new means of identifying and measuring psychological constructs were in their infancy in the 1960s and 1970s. The development of psychological instruments that did not rely on self-report would only occur after dramatic advancements in emerging fields, like cognitive psychology, cognitive neuroscience, and behavioral economics. Not until the dawn of the computer age would scientific progress in these disciplines translate to behavioral assessment technology [9].

The assessment industry’s initial enthusiasm to find real-world solutions to combat employment discrimination did not last long enough to bring about meaningful change. By the mid-1970s, measurement experts were no longer exploring questions like “how can we better assess worker capabilities?” and were instead engaged in an esoteric debate about the statistical definitions of bias, led by prominent psychometricians like Cleary, Thorndike, and Darlington [42–44]. The shift was understandably frustrating for organizations awaiting improved assessments, as one commentator working for Educational Testing Service in the late 1970s noted: “I find disturbing…the behavior of many…social scientists who…retreat from all the controversies over testing and evaluation by retiring into cozy little coteries where they write beautiful essays to one another that are so heavily laced with mathematical equations that it is a rare person…who can understand what they are talking about” [45]. As machine learning researchers Hutchinson and Mitchell summarize: “The fascination with determining fairness ultimately died out as the work became less tied to the practical needs of society, politics and the law, and more tied to unambiguously identifying fairness” [46].

The erudite debates of measurement experts may have distracted from the goal of improving hiring tests, but academic scholarship did not singlehandedly derail progress on fair testing. Beginning in the 1970s, fairness-centered innovation was also deprioritized by a shift in demand from employers. The civil rights era had been motivated by a broad acknowledgement of the realities of systemic discrimination, but this consensus dissipated quickly. An alternative perspective emerged that appealed to the majority class, claiming that “racial subordination was largely past and…social inequalities, if any, reflected the cultural failings of minorities themselves” [47]. According to this narrative, efforts to remediate racial disparities were equivalent to “reverse discrimination” against deserving White people [48]. This narrative was bolstered by the re-emergence of hereditarian theories of intelligence by the likes of Arthur Jensen [49]. Unsurprisingly, as the popularity of this position increased, employers lost interest in voluntarily exploring tests with less disparate impact. In the words of legal scholar Ian López, “The window for fundamental change opened just slightly before blowing shut again in the face of a quickly gathering backlash” [47].

2.2.2 Compliance option 2: establishing validity evidence

The search for new hiring procedures may have faltered in the post-civil rights era, but efforts to validate existing assessments took off in full force. As one former president of the Society for Industrial and Organizational Psychology (SIOP) summarizes, “Before [the Civil Rights Act of 1964], I/O psychologists were interested in test validity, but their interest was a scientific one, not a legal one. The CRA began a tidal wave of work on test validation…Once we realized how important it was to be able to validate tests, the race was on to discover factors that led to lower than desired validities, and to validate tests more efficiently” [50]. Particularly after the landmark case Albemarle Paper Co. v. Moody, in which the Supreme Court interrogated the technical soundness of the defendant’s business-necessity evidence, employers realized that “defenses based on apparent commonsense and rationality” were insufficient to shield against disparate impact liability [34].

Lawmakers may have hoped that increased validation research would improve the quality of selection tests, but in reality, the most pressing concern for employers was to avoid litigation. As sociologist Robin Stryker and co-authors observe, because the cumulative logic of legal precedent is “backward looking,” this created a tension with the “forward looking” logic of scientific progress [17]. Psychologists working to arm employers with a legally sound validity defense unsurprisingly felt more secure in relying on large datasets and well-established psychometric constructs than in betting on the frontiers of research. Influential psychometrician Robert Guion lamented his field’s stagnancy after serving as president of SIOP, noting that many researchers “[threw] out good hypotheses about predictors because of inadequate sample sizes” and continued to rely on problematic supervisor ratings as criteria simply because they were ubiquitous [51].

Over the years, psychometric pedagogy has dovetailed with legal incentives to make the dominance of established hiring assessments inevitable. Assessment experts Cole and Zieky explain: “Traditional validity studies focus on existing measures. They do not seek out alternative measures that may measure the same construct in different ways, nor do they seek out other constructs of likely utility. Furthermore, changes that do not add to validity are not sought after, even if such changes might allow some individuals to display strengths that might otherwise remain hidden” [52]. In light of these constraints, it is perhaps unsurprising that deference to g-based theories of intelligence continues to dominate employment research, with many contemporary I/O psychologists believing “most critical questions regarding intelligence that are pertinent to personnel selection have been answered” [53]. Legal scholar Michael Selmi summarizes the implications: “Most written examinations today continue to have substantial disparate impact; what has changed is that the tests are better constructed, in the sense that they are harder to challenge in court because they have been properly validated, but not better in the sense of being better predictors of performance” [7].

2.3 Today: understanding the status quo for hiring assessments

Today, several decades after Title VII was enacted, the two pieces of guidance employers receive regarding selection procedures remain closely aligned with the conclusions of post-civil-rights-era psychologists: (1) the task of drastically reducing disparate impact and maintaining validity is largely futile and (2) the best evidence of job-relevance is existing psychometric literature. Acceptance of these tenets is so widespread in the testing industry that they are frequently combined and stylized as “the diversity–validity dilemma” [16], or the idea that selection tools with less impact necessarily “limit the capability of the workforce” [54]. Sales collateral from companies that develop standardized assessments further reinforces this position. For example, one 2017 marketing document from the cognitive test publisher Wonderlic reviews “the multitude of commonly used hiring tools…which typically exhibit disparate impact, along with how employers can justify their use” [55].

While industry rhetoric may suggest that disparate impact is an inevitable feature of effective hiring tests, it is important to note that the magnitude of the problem is rarely discussed in specific terms.Footnote 8 The assessment industry’s reticence on disparate impact metrics was likely only further cemented by the passage of the Civil Rights Act of 1991, which placed the initial burden of proof for disparate impact litigation on plaintiffs. Stated plainly: without publicly available evidence of a procedure’s biased outcomes, job candidates cannot even begin the process of filing a charge with regulators [56].

But the fact that most hiring procedures inhibit workforce diversity is not mere conjecture, since an extensive body of research exists on the topic. As organizational consultant Nancy Tippins states, “Regardless of where an employer stands on the topic of adverse impact, it must be measured” [54]. Over the course of a century, test publishers and employment experts have developed fairly standardized means of summarizing group disparities in assessment performance. I/O psychologists particularly reference the standardized mean difference d (or Cohen’s d) as an index of demographic differences in average construct scores. As Sackett and Shen explain, “This is the majority mean minus the minority mean divided by the pooled within-group standard deviation.Footnote 9 This index expresses the group difference in standard deviation units, with zero indicating no difference, a positive value indicating a higher mean for the majority group, and a negative value indicating a higher mean for the minority group” [57].Footnote 10 In a 2008 review, Ployhart and Holtz provide an overview of d values for psychometric constructs common in employment [16], reproduced in Table 1.Footnote 11
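To make the definition quoted from Sackett and Shen concrete, the following is a minimal sketch of the calculation. The function name and the scores are hypothetical and invented purely for illustration, and we assume the conventional pooled standard deviation that weights each group's sample variance by its degrees of freedom; a given study may pool differently.

```python
from math import sqrt
from statistics import mean, variance


def standardized_mean_difference(majority_scores, minority_scores):
    """Majority mean minus minority mean, divided by the pooled within-group SD."""
    n_maj, n_min = len(majority_scores), len(minority_scores)
    # Assumption: pooled variance weights each sample variance by (n - 1).
    pooled_var = (
        (n_maj - 1) * variance(majority_scores)
        + (n_min - 1) * variance(minority_scores)
    ) / (n_maj + n_min - 2)
    return (mean(majority_scores) - mean(minority_scores)) / sqrt(pooled_var)


# Hypothetical assessment scores, not drawn from any real dataset.
majority = [52, 55, 58, 61, 64]
minority = [49, 52, 55, 58, 61]

d = standardized_mean_difference(majority, minority)
print(f"d = {d:.2f}")  # positive: the majority group has the higher mean
```

Swapping the two arguments flips the sign, which matches the quoted convention that a negative value indicates a higher mean for the minority group.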

To estimate the implications of a particular hiring test, the values summarized in Table 1 must be combined with information about the relevant selection context. In other words, how is the employer actually using the test to select candidates? The ultimate metric of interest is known as the impact ratio (IR), which is calculated as the selection rate for a given minority group divided by the selection rate of the majority group. Since 1978, EEOC guidance known as the four–fifths rule has suggested that an impact ratio below 0.8 may indicate disparate impact. In cases where average tests scores for the minority group and the majority group are very different (e.g., the d value is large), it is more likely that a test’s IR will be relatively small. Group-level differences in scores on a hiring procedure are therefore a “precursor” of disparate impact [58], but it is worth noting that an exact impact ratio (IR) depends on how aggressively an employer uses a procedure to filter candidates. Generally, “the effects of group differences are greater as an organization becomes more selective (e.g., has a higher cutoff)” [59].
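The impact ratio and the four-fifths screen described above can be sketched in a few lines. The function names and applicant counts below are hypothetical, and the 0.8 threshold is treated only as the rule-of-thumb flag that the guidance describes, not as a legal determination.

```python
def selection_rate(selected, applicants):
    """Share of a group's applicants who pass the procedure."""
    return selected / applicants


def impact_ratio(min_selected, min_applicants, maj_selected, maj_applicants):
    """Minority selection rate divided by majority selection rate."""
    return selection_rate(min_selected, min_applicants) / selection_rate(
        maj_selected, maj_applicants
    )


def below_four_fifths(ir, threshold=0.8):
    """True when the impact ratio falls under the four-fifths rule of thumb."""
    return ir < threshold


# Hypothetical counts: 30 of 100 minority and 50 of 100 majority candidates pass.
ir = impact_ratio(30, 100, 50, 100)
print(f"impact ratio = {ir:.2f}, flagged = {below_four_fifths(ir)}")
```

With these invented counts the selection rates are 0.30 and 0.50, so the ratio of 0.60 falls below the threshold and the procedure would be flagged for closer review.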

Consider the magnitude of disparate impact resulting when a cut-off score on a cognitive ability test is used to filter job candidates. While it is widely known that Black and Hispanic individuals tend to score lower on such assessments than White individuals, the practical implications of such gaps are seldom discussed. Using a methodology derived from I/O psychologists Sackett and Ellingson, Fig. 1 plots the impact ratio of cognitive ability tests as a function of the majority selection rate, or the proportion of White candidates who receive acceptable scores on the assessment [59]. Notably, neither the Black–White nor the Hispanic–White impact ratio rises above 0.8 unless the employer is already selecting over 90% of the White candidates in the pool. In other words, because the d value for cognitive tests is so large, it is virtually impossible for use of these procedures to not result in systematic exclusion of minorities.

Figure 2 alternatively focuses on the effects of tests with varying d values. The bar chart depicts the impact ratios observed when an assessment is used to screen in 50% of the White population. According to Sackett and Ellingson’s methodology, the Black–White d value of 0.99 corresponds to only 16% of Black candidates being screened in, or an impact ratio of 0.32. For work sample tests, the d value of 0.52 means only 30% of Black candidates are screened in, or an impact ratio of 0.60. Strikingly, even when implemented in a relatively uncompetitive selection context, the vast majority of conventional hiring tests do not align with the EEOC’s four–fifths rule.
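The selection rates and impact ratios quoted above can be approximated with a simple model. As a sketch, we assume that both groups' scores are normally distributed with equal variance and that the majority mean sits d standard deviations above the minority mean; this is our reading of the kind of calculation involved, not a reproduction of Sackett and Ellingson's exact procedure, and the function names are hypothetical.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # majority scores modelled as N(0, 1)


def minority_selection_rate(d, majority_rate):
    """Minority pass rate when the cut-off passes `majority_rate` of the majority."""
    # Cut-off score that the top `majority_rate` share of the majority exceeds.
    cutoff = STD_NORMAL.inv_cdf(1.0 - majority_rate)
    # Assumption: minority scores follow N(-d, 1), so the cut-off shifts by d.
    return 1.0 - STD_NORMAL.cdf(cutoff + d)


def modeled_impact_ratio(d, majority_rate):
    return minority_selection_rate(d, majority_rate) / majority_rate


# The two d values discussed in the text, at a 50% majority selection rate.
for d in (0.99, 0.52):
    rate = minority_selection_rate(d, 0.50)
    ratio = modeled_impact_ratio(d, 0.50)
    print(f"d = {d:.2f}: minority rate = {rate:.2f}, impact ratio = {ratio:.2f}")
# d = 0.99: minority rate = 0.16, impact ratio = 0.32
# d = 0.52: minority rate = 0.30, impact ratio = 0.60

# Lowest whole-percent majority selection rate at which d = 0.99 reaches 0.8.
first_rate = next(
    r / 100 for r in range(1, 100) if modeled_impact_ratio(0.99, r / 100) >= 0.8
)
print(f"four-fifths threshold first met at a majority rate of {first_rate:.2f}")
```

Under these assumptions the model recovers the figures cited for d values of 0.99 and 0.52, and the sweep shows that for d = 0.99 the ratio reaches 0.8 only once the majority selection rate exceeds 90%.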

While the above discussion demonstrates that the extent of racial impact in traditional assessments has held constant for decades, it is worth noting that I/O psychologists’ attitudes toward this have changed over time. In the 1960s, assessments that consistently produced lower scores for Black Americans than their White peers were interrogated for possible “design defects” that were disadvantaging minority applicants [33]. But as testing experts tried and failed to build hiring procedures with less racial impact, questions began to emerge about whether avoidance of outcome disparities was actually necessary. By the 1970s, an increasing number of researchers were arguing that test bias should be framed solely in terms of under- or overprediction of performance for a given subgroup [42]. According to these commentators, analyses of fairness should instead look for evidence of predictive bias, also known as differential validity.Footnote 12

In the trajectory of the hiring assessment industry, investigations of predictive bias have played an important role in sustaining I/O psychology’s tolerance of disparate impact.Footnote 13 While this formulation of fairness as “lack of predictive bias” might seem reasonable at first glance, it requires a major assumption that is rarely true in selection contexts: the target variable cannot be biased against one of the subgroups. Guion called attention to this issue as early as 1965: “Most personnel research relies on ratings; the ratings of a potentially prejudiced supervisor can hardly be used in research on discrimination” [60]. In cases where a biased performance metric is used—such as if prejudiced white supervisors systematically assign lower ratings to black workers—“examinations of differential validity…may mask the presence of criterion bias and falsely indicate that no bias exists in either the test or criterion” [61]. Put differently, where I/O psychologists have used differential validity analyses to support the “fairness” of a test, historical employment data are treated as “ground truth,” effectively characterizing the status quo as meritocratic.

3 Why a paradigm shift is needed in employment research and how machine learning can help

If the history of employment selection reveals anything, it is that traditional hiring procedures have been sustained by very premature conclusions about the nature of work, human potential, and job performance. In an effort to provide employers with actionable guidance on how to navigate anti-discrimination regulations, psychologists in the post-civil rights era were motivated to frame their research findings to date as universal truths. While it may have been important for testing experts to express resolute confidence in their validity studies in a world where all assessments had racial impact, the mindset has outlived its practical utility. Today, despite ongoing advancements in the evaluation of human aptitudes, some I/O psychologists remain steadfast in characterizing the field’s knowledge of employment selection with a “mission accomplished mentality” [62]. In this section, we explain this mentality as a product of psychology’s epistemological orientation, which has positioned the validity of established tests as truths that exist independently of available technology. In doing so, we set the stage to discuss machine learning as a tool for disrupting the dominance of inflexible theory-driven models for employment selection.

3.1 When science is an impediment to innovation: the epistemology of testing

How did testing experts, who were supposedly encouraged to develop new assessments under Title VII, come to such definitive interpretations of existing research? In a word: epistemology, or how a field distinguishes justified belief from opinion. For I/O psychologists,Footnote 14 validity studies represent “accurate, precise, value-free and context-independent knowledge about the relationship between predictors and criteria” [63]. The perspective reflects the field’s foundations in positivism, which assumes that social science truths exist and can be discovered using the same research methods as the natural sciences [64].Footnote 15 Positivism has been a useful framework for the social sciences that enforced falsifiable experimentation and the formation and reformulation of theories based on observable phenomena [65]. One consequence of this position, however, is that oft-cited older findings are frequently interpreted in the literature as robustly established truths [66]. In recent years, as foundational tenets of employment research have been revisited by scientists equipped with modern statistical methods and contemporary data sources, a replication crisis in the field has been revealed [67].

The fallout of the replication crisis is perhaps best demonstrated by the dominant perspective among employment researchers that cognitive ability tests predict job performance in virtually any role. Support for this position first emerged in the 1970s when researchers began experimenting with meta-analysis and “artifact” corrections to aggregate validity studies. Prior to the adoption of these methods, experiments on the efficacy of IQ tests as hiring tools had produced notoriously inconsistent results. For many employment researchers, this situation was untenable: “It was well recognized that until and unless some form of generalization of validity results was possible, personnel psychology would lack legitimacy…[and] would also fall short of the goal of all scientific endeavor—the discovery of general laws” [68]. With the advent of meta-analysis, I/O psychologists argued that it was possible “to demonstrate generalizable results…that [had been] obscured, distorted, or unclear” due to noise in the primary studies [69]. In the 1980s, when John Hunter and Frank Schmidt applied these methods to studies of employment procedures dating back to the early twentieth century, they concluded that cognitive ability (as measured by commercial IQ tests) is universally the most valid predictor of job performance [70]. Meta-analysis still proves to be a useful tool for aggregating trends over time; however, as with many statistical abstractions, the interpretation of these results is not without subjectivity.

The most noteworthy aspect of this conviction is not which types of abilities have been favored in the scholarship but how these conclusions are articulated. In order for a test to have “generalizable” validity, researchers must maintain that variable results in different situations “can be attributed to sampling error variance, direct or incidental range restriction, and other statistical artifacts” [71]. In other words, there must be something inherent about the relationship between the construct measured by a test and job performance.Footnote 16 As Landers and Behrend summarize, “I/O psychology [is] unusual among social sciences in [its] insistence that theory should always precede facts” [72]. The problem, however, is that when psychologists are committed to proving the validity of a particular theory, there is a risk of “clarifying a finding that was never really there in the first place” [73].

The science of human performance evaluation is of two minds: one which seeks validation of new measures with previously validated experiments, and another which grapples with a history of overt prejudice. As founded by Francis Galton and other social scientists, psychometrics held that the existing social order represented a “natural” hierarchy on the basis of innate ability. Helms summarizes the implications of this thinking: “For Galton, race, intelligence, and environment (i.e., socioeconomic status) were tautological. White men of eminence were inherently more intelligent than everyone else, as demonstrated by their accomplishments, which occurred because they were more intelligent than others. Galton equated intelligence with the quality of these men’s sensory or psychological attributes” [74]. Thus, traditional psychometric research began from the position that the human aptitudes worth measuring were those exhibited by “superior” individuals in the majority group.Footnote 17 I/O psychologists (and researchers in many other social and behavioral sciences) have begun to reckon with their troubling past, leading to reconsideration of fundamental assumptions of measurement and human aptitudes.

Far from providing an “objective” and “value-free” picture of the correlates of candidate success then, the reality of the traditional literature on employment selection is more accurately described as repeated investigations into a limited set of theories about human aptitudes. In general, these investigations are framed for the purpose of explaining the observed social order, meaning questions that may potentially detract from this goal are dismissed. Helms, for example, observes that “measurement experts have been quite resistant to…seek explanations for racial-group differences in test performance in the groups’ mean scores” [75]. One article from Jeffrey Cucina and co-authors demonstrates the myopic commitment to established evidence: “Given the vast empirical support for existing theories of intelligence…we do not believe that future attempts to create new models of intelligence will be fruitful. Instead, new intelligence literature [should] focus on bolstering the existing body of research and addressing common misconceptions among laypersons” [76]. At the same time, as Rabelo and Cortina observe, “Social groups that are underrepresented and/or marginalized are often excluded from organizational research, so existing theories and frameworks may not even apply to them” [77]. Thus, the epistemology of assessment science has been to validate previous assessments, with their inherent biases toward existing social hierarchies.

3.2 A persistent trope: three factors perpetuating the fairness–validity tradeoff

As previously mentioned in this paper, I/O psychology’s epistemological orientation is significant, because it affects the types of studies that are conducted in employment contexts, which in turn affects how organizations consider hiring procedures. The goal of mitigating disparate impact is readily dismissed as not possible when researchers assume that the most efficacious assessments have already been discovered. I/O psychologists express this sentiment to employers using the framing of the “diversity–validity dilemma,” or the notion that selection methods with less disparate impact necessarily sacrifice the procedure’s predictivity [16]. While many commentators have demonstrated an eagerness to espouse the “dilemma” as an uncontestable fact [78, 79], others have countered that the theory is largely a product of how personnel selection has been studied over the last century. In particular, the pretense of a tradeoff has been supported by inflated validity benchmarks for traditional tests, reliance on narrow psychological theories, and use of rudimentary statistical models.

3.2.1 Issue 1: validity coefficients distorted by publication bias

The first issue sustaining the “diversity–validity dilemma” in the discourse on employment selection is the fact that commonly cited validity coefficients for traditional tests are severely inflated. By referencing effect sizes that are virtually unheard of in the psychology literature (e.g., IQ scores account for 30%Footnote 18 of job performance [70]), I/O psychologists have constrained demand for assessments with less adverse impact. For example, organizational consultant Nancy Tippins emphasizes that employers who attempt to reduce racial disparities in selection rates “will also suffer the costs associated with a lower-ability group of employees” [54]. However, in recent years, psychologists across all subdisciplines have come to acknowledge the high proportion of false-positive findings in the literature due to the “methodological flexibility” granted researchers in terms of data collection, analysis, and reporting [73]. In I/O psychology specifically, Kepes and McDaniel contend that the literature has disseminated “an uncomfortably high rate of false-positive results…and other misestimated effect sizes” over the years [67].Footnote 19

Individual validity studies in I/O psychology are likely to overestimate effect sizes, but meta-analytical reviews have generally been used to support the existence of the diversity–validity tradeoff [16]. According to Kepes and co-authors, “publication bias poses multiple threats to the accuracy of meta-analytically-derived effect sizes and related statistics,” but “research in the organizational sciences tends to pay little attention to this issue” [80]. As Siegel and Eder explain, “the detrimental effect of publication bias is exacerbated in meta-analyses” which “serve as statistical and also conceptual ‘false anchors’ by biasing subsequent power-calculations and serving as authoritative sources due to their higher citation rate” [81]. The distortion is even further compounded by unique validity generalization procedures used by I/O psychologists, which rely on statistical corrections based on loose assumptions about range restriction and criterion reliability [82]. As one example of the severity of this inflation, a 2021 article from Sackett and co-authors replicates the meta-analysis that has been used to benchmark the validity of cognitive ability tests for the last 40 years. Upon reconsidering the corrections proposed by Hunter and Schmidt in the 1980s, the authors find that the reported effect size of 0.51 is overestimated by as much as 70% [83].

3.2.2 Issue 2: inflexible thinking on human ability

A second issue that has contributed to the perpetuation of the diversity–validity tradeoff in personnel selection is the field’s resistance to engage with interdisciplinary research on human ability and its measurement. As Goldstein and co-authors explain, I/O psychology has embraced the psychometric perspective on intelligence to the virtual exclusion of other models [53]. At a high level, psychometric theories of intelligence are informed by “studying individual differences in test performance on cognitive tests.” Alternative models include cognitive theories (which “study the process involved in intelligent performance”), cognitive–contextual theories (which “emphasize processes that demonstrate intelligence in a particular context, such as a cultural environment”), and biological theories (which “emphasize the relationship between intelligence, and the brain and its functions”) [84]. Importantly, the most common perspective on intelligence in the testing community was largely established in the early twentieth century, and has a fairly rigid perspective of intelligence as referenced to a specific social standard. In contrast, newer research from fields like neuropsychology and cognitive science treats intelligence as modular, flexible, situationally dependent, and multifaceted [62]. Although most testing professionals would agree that intelligence is best measured through multiple angles of assessment, the reality is that their definition of intelligence is implicitly linked to a general intelligence that one either has or does not. This contributes to particular cultures and people being more likely to be deemed “intelligent.”

Newer theories of human ability can mitigate racial impact in measurement. For example, intelligence tests developed for vocational purposes within the public sector have historically emphasized the role of crystallized intelligence (i.e., acculturated learning) as a driver of overall performance [85]. In contrast, task-based assessments may focus on information-processing abilities, like fluid intelligence (i.e., reasoning ability), working memory, and attention control, which are less likely to vary with factors like educational attainment [86]. Traditional tests also tend to measure a fairly narrow set of aptitudes and therefore imply “deficit thinking” about individuals who do not demonstrate those aptitudes [87, 88]. Conversely, broader models of human capability, like Howard Gardner’s theory of multiple intelligences, emphasize the fact that individuals have different intellectual strengths and weaknesses [89]. Overall, as West-Faulcon summarizes, “differences in racial group average scores are smaller on tests based on more complete theories of intelligence (multi-dimensional conceptions of intelligence) than on [traditional] tests” [90].Footnote 20

While multi-dimensional models of human abilities can theoretically facilitate the development of less-biased hiring procedures, it is worth noting that certain methods have practically constrained this possibility. Historically, whenever testing researchers have investigated the validity of “specific abilities” (in contrast to a “general ability” factor, or g), they have done so using “incremental validity analysis.” Kell and Lang explain: “Scores for an external criterion (e.g., job performance) are regressed first on scores on g, with scores for specific abilities entered in the second step of a hierarchical regression. If the specific ability scores account for little to no incremental variance in the criterion beyond [g], the specific aptitude theory is treated as being disconfirmed” [91]. In other words, researchers often base analyses of specific abilities on the assumption that a person’s general intelligence causes any variance in other aptitude tests, making it virtually impossible to isolate the predictive utility of unique constructs.Footnote 21 Further exacerbating this problem is the fact that testing research has historically been constrained to small sample sizes, limiting the number of independent variables that can be included in an experiment at one time [92]. As a result, it has seldom been feasible to consider how different narrow aptitudes contribute to job performance.
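
The arithmetic behind this critique can be made concrete with a minimal sketch. It assumes only the textbook formula for the squared multiple correlation of two standardized predictors; the function names and the correlation values are hypothetical and are not drawn from any study cited here. When g and a specific ability are correlated, whichever predictor is entered first in the hierarchical regression is credited with the shared variance.

```python
def r_squared_two_predictors(r_y1, r_y2, r_12):
    """R^2 from regressing a criterion on two predictors, given pairwise correlations."""
    return (r_y1 ** 2 + r_y2 ** 2 - 2 * r_y1 * r_y2 * r_12) / (1 - r_12 ** 2)


def incremental_r_squared(r_y_first, r_y_second, r_12):
    """Variance credited to the second predictor when it is entered after the first."""
    return r_squared_two_predictors(r_y_first, r_y_second, r_12) - r_y_first ** 2


# Hypothetical correlations, chosen only for illustration.
R_CRITERION_G = 0.30         # job performance with g
R_CRITERION_SPECIFIC = 0.30  # job performance with a specific ability
R_G_SPECIFIC = 0.60          # g with the specific ability

# On its own, the specific ability explains 0.30 ** 2 = 9% of criterion variance.
specific_alone = R_CRITERION_SPECIFIC ** 2

# Entered after g, it is credited only with what g has not already claimed.
specific_after_g = incremental_r_squared(
    R_CRITERION_G, R_CRITERION_SPECIFIC, R_G_SPECIFIC
)

# Reversing the order of entry shrinks g's contribution by the same amount.
g_after_specific = incremental_r_squared(
    R_CRITERION_SPECIFIC, R_CRITERION_G, R_G_SPECIFIC
)

print(f"specific ability alone:   {specific_alone:.4f}")
print(f"specific ability after g: {specific_after_g:.4f}")
print(f"g after specific ability: {g_after_specific:.4f}")
```

In this toy case the two predictors are equally valid, yet the one entered second appears to add little. The small increment follows from the order of entry rather than from any property of the construct.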

3.2.3 Issue 3: models that do not support multi-objective optimization

A final characteristic of historical methods that has sustained the diversity–validity dilemma is the tendency to build assessments with the sole goal of maximizing validity coefficients. In technical terms, employment researchers generally approach hiring as a single-objective optimization problem (focusing on validity) rather than a multi-objective optimization problem (focusing on validity and fairness simultaneously). As De Corte and co-authors explain, I/O psychologists are often asked by employers if the available predictors could be combined in a manner “that comes close to the optimal solution in terms of level of criterion performance achieved but does so with less adverse impact.” However, according to the authors, “practitioners do not know how to respond to such a request other than by trial and error with various predictor weights” [93]. Stated differently, rather than framing avoidance of disparate impact as an explicit goal of a hiring procedure at the outset, assessment developers have largely viewed group-level disparities “as an afterthought or an unfortunate consequence of the organization’s attempt to attain a single goal of maximizing job performance” [94]. In considering this significant methodological oversight, Hattrup and Roberts conclude that “when it comes to [adverse impact] versus validity, it is less a dilemma and more a question that has not been answered or perhaps a question that has not even been asked” [95].Footnote 22

3.2.4 Summary of issues

According to the disparate impact theory of discrimination, the types of hiring procedures that employers relied upon in the mid-twentieth century served as an impediment to equality of opportunity. Policymakers in the 1960s had believed that employers who sought guidance from I/O psychologists would inevitably implement better and fairer tests, but this perspective unfortunately overestimated how much would first need to change about the study of employment selection. As one recent commentary from Ones and several other I/O psychologists summarizes: “There is an absence of innovation and new ideas in the field… ‘modern’ measures…have been used in employee selection for over 90 years” [96]. Unfortunately, the issues that initially led to the development of tests with significant disparate impact (limited psychological theories, unsophisticated analytical tools, and skewed expectations about effect sizes) have remained intact as impediments to progress. Psychologist Jennifer Wiley poses a question that aptly summarizes the problem: “Experts generally solve problems in their field more effectively than novices because their well-structured, easily activated knowledge allows for efficient search of a solution space. But what happens when a problem requires a broad search for a solution?” In such cases, domain knowledge can “confine experts to an area of the search space in which the solution does not reside” [97].

3.3 A path forward: three benefits of machine learning for overcoming scientific convention

While the risk that hiring assessments can perpetuate discrimination has been clear for decades, public attention to the issue has hardly been consistent since the passage of the Civil Rights Act. However, “the explosion in the use of software in important sociotechnical systems has renewed focus on the study of the way technical constructs reflect policies, norms, and human values” [98]. With the advent of big data and machine learning, many commentators have reengaged with societal consequences of employment procedures, though through a framing that largely ignores how candidates have been evaluated for jobs since the early twentieth century. Much of the disconnect seems to stem from the misconception that employers have generally relied exclusively on human decision-making to screen, meaning that algorithms might be introduced as a substitute for the capricious and time-consuming judgements of flawed recruiters. Under this assumption, the benefits of machine learning to the employment process are therefore described as “efficiently winnowing down the increasingly large volume of applications that employers now regularly receive” [99] and “providing more objective outcomes than humans” [100]. Meanwhile, the risks of such technology are described as “amplifying biases of the past” [98], “facilitating and obfuscating employment discrimination” [101], and “inflicting unintentional harms on individual human rights” [102].

In sum, the current discourse on innovations in employment selection is flawed for two reasons: (1) it ignores the fact that large corporate employers seldom rely solely on human decision-making and often use traditional hiring tests,Footnote 23 and (2) it fails to consider the benefits of recent technological advancements in light of the domain-specific challenges of employment selection that have sustained the “diversity–validity dilemma.” Regarding the former point, some recent progress has been made. For example, one report from Upturn observes that “many employers are using traditional selection procedures at scale—including troubling personality tests—even as they adopt new hiring technologies” [103]. In light of broad societal engagement with the persistence of systemic racism in the U.S. since the death of George Floyd in 2020, the American Psychological Association has also acknowledged that “psychologists created and promoted the widespread application of…instruments that have been used to disadvantage many communities of color” [104]. Regarding the latter point, however, progress is lacking. We respond to this gap in the literature by explaining the utility of machine learning and big data in overcoming the three above-mentioned barriers to innovation in employment selection.

3.3.1 Advantage 1: providing realistic estimates of assessment validity

The first reason machine learning is useful for overcoming the diversity–validity dilemma is the provision of more realistic estimates of the predictive validity of a selection procedure in a particular context, using larger sources of data and modern validation techniques, such as out-of-sample validation. This advantage is crucial for ensuring that inflated validity estimates (cited from decontextualized meta-analyses) are not used to justify the use of a hiring procedure with significant racial impact.Footnote 24 In addition to cross-validation, inflated effect sizes are also now tempered with the availability of larger sample sizes, which have historically been rare in standardized testing research. Generally, “the reason effect sizes in many domains have shrunk is that they were never truly big to begin with, and it’s only now that researchers are routinely collecting enormous datasets that we are finally in a position to appreciate that fact” [73]. Additionally, researchers have argued that inflated effect sizes for traditional assessment predictors are likely exacerbated by the use of ordinary least-squares (OLS) regression models in psychology. Speer and co-authors explain that, while the method “is particularly susceptible to capitalizations on chance…most modern prediction methods have advantages over OLS that help guard against overfitting” [105].

3.3.2 Advantage 2: identifying novel, context-specific predictors of job performance

A second benefit of machine learning in the employment selection context is making it easier for assessment researchers to consider a much broader scope of relevant constructs. In recent years, as I/O psychologists have increasingly come to terms with the limitations of traditional aptitude testing in facilitating progress, various commentators have argued that the field needs to revisit its earlier experimentation with inductive research strategies [106].Footnote 25 In terms of the advantages over purely theory-driven methods, inductive methods can help researchers “identify nonobvious, subtle relationships between items and the criterion that other scoring techniques might miss” [107] and “combine many facets of personality for the sake of understanding the comprehensive profile of a person” [108]. As in other contexts, inductive research applied to employment selection involves “bottom-up, data-driven, and/or exploratory” analyses [109]. Because machine learning and big data effectively facilitate the automation of such methods, the technology allows for consideration of more-nuanced information sources, including those derived from modern psychological instruments.

It is worth emphasizing that, historically, inductive research in the employment context has been used to interpret inventories whose items did not have inherently “correct” answers, such as biographical application forms, personality inventories, and situational judgement tests [110, 111]. This aspect of inductive research is particularly relevant in considering the interpretation of the type of data that is often collected from modern aptitude assessments. While the traditional psychometric approach examines the “top-down” relationship of intelligence to complex cognitive tasks, the “bottom-up” nature of cognitive psychology focuses on identifying lower-order information processes, like memory, attention, and perception [112]. Psychologists have long theorized that detection of between-subject variation in these processes could inform research on specific aptitudes, or “cognitive styles” [113]. Such research began in the 1940s and 1950s, with psychologists like George Klein observing that individuals tended to vary in their perceptions of changes to visual stimuli; while some people are “sharpeners” who notice contrasts, others are “levelers” who focus on similarities [114]. However, unlike a unidirectional ability, the utility of being a leveler or a sharpener is context-dependent; “the adaptiveness…depends on the nature of the situation and the cognitive requirements of the task at hand” [115]. Given a particular job then, inductive research strategies are useful for identifying the aspects of cognitive style associated with job performance. For example, an AI classification model might use an incumbent sample’s cognitive styles as a training data set, distinguished from a general applicant pool as a reference set [9].Footnote 26, Footnote 27

3.3.3 Advantage 3: optimizing models based on specified fairness and validity goals

A final way in which recent advances in data science can help testing experts overcome the diversity–validity dilemma is through the use of more sophisticated modeling techniques that allow for iterations on a model to be tested for their utility in achieving multiple objectives. Because the range of solutions that can be proposed by machine learning techniques is inherently limited to the data provided, the options “are plausible or credible, but are nonetheless not certain” [116]. The implied uncertainty is an important feature of any research based on available empirical evidence, because in contrast to theory-driven models, the “correct” answer is somewhat open to interpretation. With data-driven models, then “the goal is not omniscient certainty but contextual certainty,” such that “one can properly say, ‘on the basis of the available evidence, i.e., within the context of the factors so far discovered, the following is the proper conclusion to draw’” [117]. Models can account for any number of observed factors in deciding on the “proper” conclusion, including “side effects” or “potential consequences” of organizational interventions, such as disparate impact yielded by a hiring procedure [118, 119].

Data science techniques that specifically facilitate evaluation of multiple goals in the employment context include the use of (1) constrained optimization during the model-building process and (2) pre-testing that model for disparate impact prior to deployment on candidates. Regarding the former, Roth and Kearns summarize: “Machine learning already has a ‘goal,’ which is to maximize predictive accuracy…Instead of asking for a model that only minimizes error…we ask for the model that minimizes error subject to the constraint that it not violate [a] particular notion of fairness ‘too much’” [120]. Fairness-constrained training processes, also sometimes known as “fairness-aware” or “discrimination-aware,” have been applied to various contexts where minorities have experienced systemic discrimination, with the goal being to find the version of a model that disrupts the paradigm of a fairness–validity tradeoff [121, 122]. Regarding the latter, with the availability of Big Data, once a model has been trained, assessment developers can conduct controlled tests to compare the scores of protected classes and look for evidence of disparate impact. If disparities are identified, pre-testing signals developers to interrogate underlying assumptions and make adjustments before the model is ever used to score real candidates [123].
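
The logic of fairness-constrained model selection can be illustrated with a deliberately small sketch. Everything in it is an assumption made for exposition: the eight toy applicants, the two predictors, the grid of candidate weights, and the 0.8 minimum impact ratio are hypothetical, and an exhaustive grid search stands in for whatever optimizer a production system might use. Each candidate weighting is scored on accuracy and on the ratio of the lowest to the highest group selection rate, and the most accurate candidate that satisfies the fairness constraint is retained.

```python
# Hypothetical toy applicants: (predictor_1, predictor_2, successful, group).
APPLICANTS = [
    (0.9, 0.8, 1, "A"), (0.8, 0.3, 1, "A"), (0.7, 0.7, 1, "A"), (0.6, 0.2, 0, "A"),
    (0.4, 0.9, 1, "B"), (0.3, 0.4, 0, "B"), (0.2, 0.6, 0, "B"), (0.1, 0.1, 0, "B"),
]


def evaluate(weight, applicants, n_select):
    """Score applicants as weight*p1 + (1-weight)*p2, select the top n_select,
    and report accuracy plus the lowest-to-highest group selection-rate ratio."""
    scores = [weight * p1 + (1 - weight) * p2 for p1, p2, _, _ in applicants]
    ranked = sorted(range(len(applicants)), key=lambda i: scores[i], reverse=True)
    selected = set(ranked[:n_select])
    correct = sum((i in selected) == bool(a[2]) for i, a in enumerate(applicants))
    rates = {}
    for group in {a[3] for a in applicants}:
        members = [i for i, a in enumerate(applicants) if a[3] == group]
        rates[group] = sum(i in selected for i in members) / len(members)
    highest = max(rates.values())
    ratio = min(rates.values()) / highest if highest > 0 else 1.0
    return {
        "weight": weight,
        "accuracy": correct / len(applicants),
        "impact_ratio": ratio,
    }


def fit_with_fairness_constraint(applicants, weights, n_select, min_impact_ratio=0.8):
    """Return the most accurate candidate whose impact ratio meets the constraint,
    or None when no candidate on the grid is feasible."""
    candidates = [evaluate(w, applicants, n_select) for w in weights]
    feasible = [c for c in candidates if c["impact_ratio"] >= min_impact_ratio]
    if not feasible:
        return None
    return max(feasible, key=lambda c: c["accuracy"])


WEIGHT_GRID = [0.0, 0.25, 0.5, 0.75, 1.0]
constrained = fit_with_fairness_constraint(APPLICANTS, WEIGHT_GRID, n_select=4)
unconstrained = fit_with_fairness_constraint(
    APPLICANTS, WEIGHT_GRID, n_select=4, min_impact_ratio=0.0
)
print("constrained:  ", constrained)
print("unconstrained:", unconstrained)
```

Because the fairness requirement is written into the search itself, every model the procedure returns has already been pre-tested against it. Whether the constraint costs any accuracy depends entirely on the data and the candidate set, and a toy sample of eight applicants says nothing about how large that cost is in practice.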

3.3.4 Summary of advantages

In sum, when the benefits of machine learning are aligned to the specific problems that have plagued employment science for decades, there is a strong case to be optimistic about the potential for progress. The ability of machine learning and Big Data to facilitate data-driven model development, and to iterate and refine those models with respect to fairness and validity goals, could theoretically drive innovation in an unprecedented manner. As stated in Trindel et al.:

“There is an air of prescience in Title VII’s simultaneous inclusion of business objectives and fairness considerations. For many decades, employers and the courts have struggled to navigate these dual considerations, because it was not entirely clear how they could be evaluated in tandem. Today’s technology actually makes voluntary compliance with the 1964 statute significantly easier; with advancements in data science, employers are empowered with the ability to simultaneously consider the efficacy and fairness of many variations of a hiring procedure, effectively adhering to all three prongs of adverse impact theory at once.” [123]

While the technical possibility may exist for such progress, the outstanding question is whether or not the theory bears out in reality. As Tippins and co-authors state, “[An] advantage often expressed by vendors of technology enhanced assessments is a lack of or reduction in adverse impact and/or increases in criterion-related validity, as compared with traditional testing. However, the empirical evidence behind such claims is often unavailable, making relevant comparisons impossible” [124]. Legal scholar Pauline Kim similarly notes that “implementing the best available technical tools can never guarantee that algorithms are unbiased” so “examining the actual impact of algorithms on protected classes” is critical [125]. For these authors and others, the basic concern is whether so-called fairness-aware algorithms actually have the desired effect of mitigating disparate impact.

4 A real-world evaluation of machine learning’s theoretical advantages

Thus far in this paper, we have pointed out that neither employers nor researchers have been sufficiently incentivized to implement better, fairer hiring procedures, as the architects of the Civil Rights Act had hoped. While these barriers to innovation may not be featured in popular discourse, the fact that hiring technology is flawed is also hardly a secret. Other researchers have therefore investigated AI through the lenses described above: use of machine learning out-of-sample testing as a local validation procedure [126], data-driven identification of job-relevant predictor–criterion relationships [127, 128], and simultaneous optimization of selection models for fairness and validity [129, 130]. However, the literature currently lacks a demonstration of how machine learning-based selection models perform when used to screen candidates. In this section, we source data directly from pymetrics, an algorithmic screening platform currently used by dozens of Fortune 1000 companies. Our central question is: What are the comparative advantages of candidate selection by machine learning? Namely, can it actually select candidates without disparate impact?

Previous work has described pymetrics’ use of behavioral assays from the cognitive science literature to evaluate job candidates’ “soft skills” [9]. Similar to the logic of “profile matching” in the I/O psychology literature, the platform contrasts soft-skill data from top-performing incumbents to that of a general reference set, ultimately building hiring models that rely on narrower cognitive, social, and personality measures than those measured by traditional assessments. pymetrics is also an example of a platform that builds models to simultaneously mitigate disparate impact and maximize classification accuracy on out-of-sample testing. This platform addresses the potential for a fairness/validity tradeoff through the constrained optimization approach described in Sect. 3.3.3 above. This method maximizes model performance, within the constraint that model fairness exceeds a minimum threshold (in this context, the EEOC 4/5ths success threshold between all groups). Notably, the platform only deploys models marked as fair and performant, which is itself a refutation of the fairness/validity dilemma. While the platform is not unique in claiming to use so-called fairness-aware machine learning methods, the system’s automated procedures for avoidance of disparate impact were the subject of a third-party audit in 2020 [131].

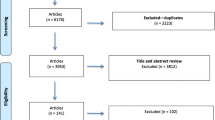

Over an 18-month period in 2019 and 2020, 26 North American employers commissioned the platform to build job models for an average of 2 roles per organization. Prior to the model-building process, a sample of at least 50 successful incumbents in each role completed the 25-min behavioral assessment to provide training data, or a “success profile” for the role (Footnote 28). In total, 60 models were built, each of which served as a custom standardized assessment. These models were built for employers in diverse industries (40% finance, 15% consumer goods, 12% consulting, 8% real estate, 8% HR, 7% logistics, and 10% other) to predict success in a variety of role types (23% office and administrative support, 28% business and financial operations, 15% computer and mathematical, 13% management, 13% sales and related, and 7% other). The concurrent criterion-related validity of each model was estimated prior to deployment, using k-fold cross-validation (Table 2). Cross-validation involved testing how successfully a model trained on 80% of the incumbent sample could identify the remaining 20%, repeated several times using different segments of the data.
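The 80%/20% cross-validation procedure can be sketched as follows. This is a generic illustration, not the platform's code: the choice of five folds (which yields the 80/20 split), the fixed shuffling seed, and the user-supplied `fit` and `score` callables are all assumptions.

```python
import random


def k_fold_splits(n_samples, k=5, seed=0):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation.

    With k=5, each held-out fold is roughly 20% of the sample and the
    remaining 80% is used for training.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k disjoint, near-equal folds
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


def cross_validate(samples, fit, score, k=5, seed=0):
    """Average the held-out score over all k folds.

    `fit(train_samples)` returns a model and `score(model, test_samples)`
    returns a number; both are hypothetical callables supplied by the caller.
    """
    scores = []
    for train_idx, test_idx in k_fold_splits(len(samples), k, seed):
        model = fit([samples[i] for i in train_idx])
        scores.append(score(model, [samples[i] for i in test_idx]))
    return sum(scores) / len(scores)
```

Because every sample is held out exactly once, the averaged score uses the whole incumbent sample for evaluation without ever scoring a model on data it was trained on.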

Candidates who were screened by the platform took the same behavioral assessment as incumbents, and their results were translated into a fit score based on alignment to the incumbent profile. Candidates who received a percentile-ranked fit score above the 50th percentile were considered “recommended” by the model, and those who fell below the threshold were “not recommended.” Upon deployment, these models were each used to screen applicant pools that included an average of 7000 job candidates. The platform, therefore, provided a total of approximately 400,000 predictions about future job success.
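As a rough illustration of the recommendation rule, the sketch below converts a raw fit score into a percentile rank and applies the 50th-percentile cutoff. The text does not specify the population against which percentiles are computed, so the `reference_scores` argument and the "share of reference scores strictly below" definition of percentile rank are assumptions.

```python
from bisect import bisect_left


def percentile_rank(score, reference_scores):
    """Percentage of reference scores that fall strictly below `score`."""
    ordered = sorted(reference_scores)
    below = bisect_left(ordered, score)  # count of values strictly less than score
    return 100.0 * below / len(ordered)


def is_recommended(score, reference_scores, cutoff=50.0):
    """True when the candidate's percentile-ranked fit score exceeds the cutoff."""
    return percentile_rank(score, reference_scores) > cutoff
```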

We considered four group-level fairness measures: (1) the impact ratio comparing Black and White candidates, (2) the impact ratio comparing Hispanic and White candidates, (3) the impact ratio comparing Female and Male candidates, and (4) the impact ratio comparing candidates who request disability-related accommodations in the hiring process and those who do not. We focused on these classes, because critics of AI hiring procedures have frequently cited bias against racial minorities, women, and individuals with disabilities as primary concerns [132–134]. Additionally, these demographic groups have all notably faced disproportionate levels of unemployment for decades [135–138]. Other classes certainly face discrimination in the labor market, but we were limited to studying those that are commonly reported in the hiring process and that consistently make up at least 2% of applicant pools, per the legal definition of disparate impact.

To determine the disparate impact of each model, we used demographic data that were voluntarily provided by candidates in an exit survey. The average response rate for the exit survey was 85% (SD = 13%). For the disability-related impact analysis, before beginning the assessment, candidates were offered accommodations for three disabilities: colorblindness, dyslexia, and ADHD. This allows standard testing accommodations to be applied automatically, as specified by best practices in the educational psychology literature (Footnote 29). For each model, an average of 6% of candidates (SD = 2%) elected to take an accommodated version of the assessment. According to EEOC regulations, adverse impact testing is inappropriate for groups that are less than 2% of the candidate pool. Using this rule, our analyses include 52 models for the Black–White comparison (n = 392,448); 56 models for the Hispanic–White comparison (n = 404,952); 60 models for the Female–Male comparison (n = 412,219); 17 models for colorblindness accommodations (n = 59,604); 18 models for dyslexia accommodations (n = 189,557); and 44 models for ADHD accommodations (n = 347,959).
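The per-model computation implied here can be sketched as follows: an impact ratio is the minority group's selection rate divided by the comparison group's selection rate, and a model is excluded from a given comparison when the minority group makes up less than 2% of the candidate pool. The function name, the tuple layout, and the use of the full applicant pool as the denominator for the 2% check are assumptions for illustration.

```python
def impact_ratio(minority, majority, pool_size, min_share=0.02):
    """Impact ratio for one model and one group comparison.

    `minority` and `majority` are (recommended_count, total_count) tuples.
    Returns None when the minority group is below `min_share` of the pool,
    meaning the model is left out of that comparison.
    """
    min_recommended, min_total = minority
    maj_recommended, maj_total = majority
    if min_total / pool_size < min_share:
        return None  # group too small for adverse impact testing
    if maj_total == 0 or maj_recommended == 0:
        raise ValueError("comparison group selection rate is undefined or zero")
    minority_rate = min_recommended / min_total
    majority_rate = maj_recommended / maj_total
    return minority_rate / majority_rate
```

For example, a model that recommends 45 of 100 minority candidates and 250 of 500 comparison-group candidates has selection rates of 0.45 and 0.50, giving an impact ratio of 0.90, which is above the 4/5ths threshold.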

Across the models with sufficient minority sizes (i.e., at least 2%), we found that the average minority-weighted IR for Black versus White candidates was 0.93 (SD = 0.10). For Hispanic versus White candidates, it was 0.97 (SD = 0.04). For Female versus Male candidates, it was 0.98 (SD = 0.05). For accommodations: colorblindness was 1.06 (SD = 0.14), dyslexia was 1.01 (SD = 0.10), and ADHD was 1.00 (SD = 0.10). In addition to these top-level results, we report impact ratios for subgroups of models by O*NET Job Family (Tables 3 and 4). Overall, the analysis demonstrates that when a model is built to mitigate disparate impact, it does.
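One plausible reading of "minority-weighted" is that each model's impact ratio is weighted by the number of minority-group candidates it evaluated, so that models screening more minority candidates count for more in the average. The text does not spell out the weighting, so the sketch below is an assumption rather than the authors' stated formula.

```python
def minority_weighted_ir(models):
    """Weighted mean of per-model impact ratios.

    `models` is an iterable of (impact_ratio, minority_count) pairs; the
    minority count is assumed to be the weight.
    """
    models = list(models)
    total_weight = sum(count for _, count in models)
    if total_weight == 0:
        raise ValueError("no minority candidates across the supplied models")
    return sum(ir * count for ir, count in models) / total_weight
```

Under this assumption, two models with impact ratios of 0.90 (100 minority candidates) and 1.00 (300 minority candidates) would average to (0.90 × 100 + 1.00 × 300) / 400 = 0.975, rather than the unweighted 0.95.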

5 Discussion

Automated methods of building employment selection procedures have certainly proliferated in recent years, but much of the discourse about the advantages and risks has felt oddly detached from the nature of existing hiring methods. While it is certainly true that machine learning can make evaluating job candidates efficient and consistent, as many commentators have suggested, employers have been relying on standardized selection procedures precisely for this purpose for nearly a century. Additionally, while it is true that machine learning can introduce harms in the form of systematizing bias and obscuring discrimination, these effects are already pervasive due to widespread use of traditional assessments in many industries.

A more productive evaluation of the implications of machine learning for employment selection begins by identifying the problems that have sustained the suboptimal nature of hiring procedures for the last 50 years. Regulation is often conceived of as the primary impediment to innovation, but the implicit goal of Title VII of the Civil Rights Act was for employers to use science and evidence to produce better, fairer methods of screening job candidates, regardless of their demographic identity. However, meaningful progress toward this goal was never made, as demonstrated by the persistent trope in the I/O psychology literature that an “inevitable” tradeoff exists between fairness and validity in personnel selection. While regulation explicitly rejected the notion of such a tradeoff, the epistemology of testing experts effectively barred innovation in the field. Employers were therefore limited by the state of assessment technology in terms of their options for complying with anti-discrimination law.

In recent years, as the broader field of psychology has grappled with its replication crisis and society has reengaged with the systemic nature of discrimination, the flawed assumptions of employment researchers have come to light. The tendency of psychologists to view foundational research as established fact has been replaced with a recognition that theories of human hierarchy developed in the early twentieth century come with significant cultural baggage. Various commentators have recognized that the so-called fairness–validity dilemma in I/O psychology has largely been a product of how the field has framed its investigations. Specifically, by prioritizing established theories of human ability, developing assessments solely to maximize predictive validity, and overstating the benefits of legacy tools, employment researchers made it exceedingly difficult for hiring procedures to emerge outside the confines of the fairness–validity tradeoff.

Unfortunately, employment law is a context that has come to be defined by risk aversion and deference to precedent. Even though the shortcomings of traditional employment tests have been apparent since the latter half of the twentieth century, little has been done to deviate from the methods that employers first grew accustomed to shortly after the Civil Rights Act was passed. The practical constraints of the employment environment (small samples, litigation concerns, and subjective metrics, to name only a few) have dovetailed with the deductive epistemology of I/O psychologists to disincentivize exploratory research. From this perspective, it seems very unlikely that machine learning could further exacerbate the extent of disparate impact in the modern hiring process, since disparate impact is already highly entrenched under the status quo.

On the contrary, if the fundamental problems of employment research are related to reliance on simplistic theories and cumbersome manual research, an extensive literature suggests that machine learning can certainly help address these issues. Importantly, the point of the technology is not to replace subject matter experts or to argue for undiscerning use of irrelevant candidate information. Rather, the goal is to align the benefits of the technology to the specific challenges that have plagued employers and their advisors for decades. Where a large subset of psychometricians have spent the last century collecting evidence to identify universal selection methods, machine learning and big data can help unpack more subtle and context-specific models. Where testing experts have sought to maximize the predictive validity coefficients yielded in scholarly articles, machine learning can optimize an assessment to align with multiple practical organizational objectives. Where employers have struggled with the robustness of manual validity studies and the technical challenges of proactively analyzing disparate impact, machine learning can facilitate efficient and iterative back-testing on large sample sizes.

The theoretical potential of machine learning to improve the hiring process has been discussed by various commentators. In some cases, authors have also conducted empirical investigations into the narrow benefits of the technology, often using synthetic data or very old samples. However, such piecemeal research is only so effective, particularly given the extent of public scrutiny regarding automation in high-stakes decision-making contexts. To provide a more grounded perspective on the real-world implications of machine learning, it is important to source data from a commercial AI platform that actually implements several of the prominently discussed advantages of the technology. pymetrics is useful for this exercise, because the platform utilizes so-called fairness-aware training to build custom soft skill assessments for employers, relying on assays sourced from the cognitive and behavioral science literature to measure various job-relevant aptitudes. Overall, results indicate that the theorized benefits of machine learning for hiring bear out in practice: models that are simultaneously optimized for fairness and validity in the training process also result in much fairer outcomes when used to screen real candidates.

In considering the potential offered by machine learning to address the issue of disparate impact, it is worth anticipating a few likely reactions. At a high level, criticisms fall into two categories: concerns that technology will shield employers from disparate impact liability and complaints about the supposed “quality” of algorithmic decisions. Scholars interested in the former category emphasize the potential for AI systems to conceal disparities in outcomes from candidates [139], avoid scrutiny by claiming intellectual property rights [101], and provide statistical support for spurious predictors [124]. While such risks may be technically possible with machine learning technology, to focus on them suggests that progress toward the eradication of discrimination rests on disparate impact litigation. The reality is that, even absent automated screening tools, it is not especially difficult for employers to provide the necessary evidence to support the validity of a hiring practice that disadvantages a protected class. To put it differently: given that many employers perfected strategies for using biased hiring procedures lawfully decades ago, it seems unlikely that any additional shield offered by algorithms will meaningfully change their calculus.

The second category of criticisms about AI for hiring is made up of claims that fairness-aware algorithms are ineffective at identifying successful candidates. According to these researchers, hiring models that proactively mitigate group-level disparities necessarily trade accuracy for fairness [140], ignore characteristics that genuinely predict job performance [141], fail to account for inherent differences between groups [142], and hinder optimal prediction [143]. This literature further emphasizes that efforts to reduce disparate impact can be highly unfair to qualified members of the majority group and warns that underqualified minority candidates will be harmed when they fail on the job. Such critiques are ostensibly directed at algorithmic hiring technology, but they are more precisely attacks on selection procedures that do not assume historical trends reflect objectivity. Consider Hardt and co-authors’ argument that group-level statistical parity is “seriously flawed” as a fairness metric, because it “permits that we accept the qualified applicants in one demographic, but random individuals in another” [143]. Barocas and co-authors similarly describe algorithmic fairness constraints as “crude”, because they “don’t incorporate a notion of deservingness” for members of disadvantaged groups [141]. In both instances, the authors imply that “qualified” and “deservingness” are unambiguous concepts. Much like the deductive epistemology of I/O psychology, Birhane observes that such assumptions are common in computational research because of the field’s roots in the Western “rationalist” worldview [144].