Abstract

Artificial intelligence (AI) governance is required to reap the benefits and manage the risks brought by AI systems. This means that ethical principles, such as fairness, need to be translated into practicable AI governance processes. A concise AI governance definition would allow researchers and practitioners to identify the constituent parts of the complex problem of translating AI ethics into practice. However, there have been few efforts to define AI governance thus far. To bridge this gap, this paper defines AI governance at the organizational level. Moreover, we delineate how AI governance enters into a governance landscape with numerous governance areas, such as corporate governance, information technology (IT) governance, and data governance. Therefore, we position AI governance as part of an organization’s governance structure in relation to these existing governance areas. Our definition and positioning of organizational AI governance pave the way for crafting AI governance frameworks and offer a stepping stone on the pathway toward governed AI.

1 Introduction

Algorithmic systems that use artificial intelligence (AI) promise both significant benefits [1] and equally momentous risks related to biases, discrimination, opacity, and dissipation of human accountability [2,3,4]. To reap the benefits and manage the risks, there is widespread consensus that AI systems need to be governed to operate in line with human and societal values [5, 6]. However, current AI governance work faces the challenge of translating abstract ethical principles, such as fairness, into practicable AI governance processes [7, 8]. In a global overview of AI governance, Butcher and Beridze [9] conclude that “AI governance is an unorganized area.” While this statement refers to the number of stakeholders seeking to influence global AI governance, we suggest a different sense in which AI governance scholarship and practice are currently unorganized. Specifically, there is a lack of understanding of the position of AI governance within the organizational governance structure. Established scholarship on corporate, IT, and data governance understandably cannot include the more recent AI governance [10, 11]. However, emerging organizational AI governance literature has also devoted little attention to other governance areas such as IT governance [12, 13].

AI governance efforts do not take place in a vacuum. On the contrary, AI governance is entering an increasingly complex organizational governance landscape, where corporate governance [10], information technology (IT) governance [11], and data governance [14] already require management attention [15]. Thus, the current unorganized state of AI governance literature is unfortunate because organizations deploying AI in their operations play a key role in implementing AI governance in practice [13, 16, 17].

We bring increased conceptual clarity to the AI governance literature through two contributions. First, we draw on previous scholarly work on AI ethics and governance [9, 12, 18,19,20,21,22] and propose a synthesizing definition of AI governance at the organizational level. Second, we position AI governance as part of an organization’s governance structure, together with corporate, IT, and data governance. In doing so, we advance the body of knowledge on implementing AI ethics (e.g., [7, 8, 13, 23]) through AI governance (e.g., [12, 18]). Our contributions clarify the significance of AI governance as part of organizational governance that helps align the use of AI technologies with organizational strategies and legal and ethical requirements coming from the operating environment.

2 Defining AI governance

There is a growing body of research acknowledging the importance of governed AI. Georgieva and her colleagues [8] call this the “third wave of scholarship on ethical AI,” which focuses on turning AI principles into actionable practice and governance. The third wave aims at promoting practical accountability mechanisms [24]. In order to structure this complex domain, researchers have presented layered AI governance structures, which include, for example, ethical and legal layers and levels ranging from AI developers to regulation and oversight [18, 23]. At the societal level, AI regulation and policy [25], and particularly human rights law [19], have also been raised as critical considerations.

Despite this scholarly attention, there have been few explicit attempts to define AI governance. In their global overview, Butcher and Beridze [9] characterize AI governance as “a variety of tools, solutions, and levers that influence AI development and applications.” In its broad scope, this definition comes close to Floridi’s [20] concept of digital governance, defined as “the practice of establishing and implementing policies, procedures and standards for the proper development, use and management of the infosphere.” In a similar vein, Gahnberg [22] operationalizes governance of AI as “intersubjectively recognized rules that define, constrain, and shape expectations about the fundamental properties of an artificial agent.” The focus on rules is helpful, but Gahnberg’s definition focuses on drafting societal rules, such as standards and legislation, rather than organizational AI governance. Overall, these macro-level conceptions remain silent on how organizations should govern their AI systems.

Schneider et al. [12] define AI governance for businesses as “the structure of rules, practices, and processes used to ensure that the organisation’s AI technology sustains and extends the organisation’s strategies and objectives.” They conceptualize the scope of AI governance for businesses as including the machine learning (ML) model, the data used by the model, the AI system that contains the ML model, and other components and functionalities (depending on the use and context of the system). Although AI governance for businesses is a promising starting point, the concept largely omits ethical and regulatory questions present in previous AI governance literature. In doing so, the concept stands in contrast to the AI ethics literature and downplays established AI-specific ethical and regulatory issues stemming from the organization’s environment.

In contrast, Winfield and Jirotka [21] highlight ethical governance, which goes beyond good governance by instilling ethical behaviors in designers and organizations. They define ethical governance as “a set of processes, procedures, cultures and values designed to ensure the highest standards of behavior” [21]. The list of governance elements is instructive, but the objective, ensuring “the highest standards of behavior,” remains underdefined for clarifying organizational AI governance.

Cihon et al. [26], investigating corporate governance of AI, come close to our focus area and provide actor-specific means of improving AI governance. However, they do not explicitly define AI governance. Moreover, their study focuses on large corporations at the forefront of AI development, such as Alphabet and Amazon, and how they can better govern AI to serve the public interest [26]. In our effort to define organizational AI governance, we also aim to include smaller organizations that use AI systems but do not exercise such leverage over global AI technology development.

In addition, none of the previously mentioned AI governance conceptualizations explicate the role of technologies used to manage and govern AI systems. These include, for example, tools for data governance [27], explainable AI (XAI) [28], and bias detection [29]. Bringing together the ethical, organizational, and technological aspects, and considering the definitions of related governance fields, we propose the following definition of AI governance at the organization level:

AI governance is a system of rules, practices, processes, and technological tools that are employed to ensure an organization’s use of AI technologies aligns with the organization’s strategies, objectives, and values; fulfills legal requirements; and meets principles of ethical AI followed by the organization.

Our definition of AI governance is essentially normative in that the intentions are to be action-oriented and to guide organizations in implementing effective AI governance [cf. 30]. In particular, the definition draws on that of AI governance for business [12] while incorporating the regulatory constraints [19, 31] and ethical AI principles [6, 32]. Intra-organizational strategic alignment is a necessary condition for AI governance. However, it is not a sufficient condition because environmental and technical layers also need to be included.

In what follows, we explain the key elements of the definition. First, AI governance is a system whose constituent elements should be interlinked to form a functional entity (cf. [31]). The systemic perspective highlights how AI governance unifies heterogeneous tools to articulate and attain a central objective, which is the purpose of the system [33, 34]. The AI governance system can also include structural arrangements such as ethical review boards [35]. When AI governance is understood as a system, synergies between different tools, such as bias testing methods and participatory design, can be identified.

Second, the key elements of an AI governance system are rules, practices, processes, and technological tools. Essentially, these are all methods of regulating behavior to keep it within acceptable boundaries and enable desirable behavior. We have included technological tools in the definition to highlight the involvement of both human and technological components in AI governance. Third, these elements are in place to govern an organization’s use of AI technologies. Here, the term “use” is broadly understood to mean all engagement with AI technologies in the organization’s operations throughout the system’s life cycle, ranging from use case definition and design to maintenance and disposal. In other words, AI governance needs to address the entire AI system life cycle [13].

Fourth, the use of AI technologies is governed to ensure multiple alignments, both in internal operations and with external requirements. The use of AI should align with organizational strategies, objectives, and values. In addition, the use of AI technologies should comply with relevant legal requirements. Finally, AI use should align with ethical AI principles followed by the organization. These alignments may set differing requirements for AI technology; consequently, any possible trade-offs should be carefully considered [36].
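To make the structure of the definition more tangible, it can be sketched as a small data model. The following Python sketch is an illustration only and is not part of the proposed definition: the class names, the example elements, and the idea of checking for "uncovered" alignment targets are assumptions introduced here. It represents the four kinds of elements (rules, practices, processes, and technological tools) and the three alignment targets named in the definition, and reports which targets no element yet supports.

```python
from dataclasses import dataclass, field
from enum import Enum


class ElementKind(Enum):
    """The four kinds of elements named in the definition."""
    RULE = "rule"
    PRACTICE = "practice"
    PROCESS = "process"
    TOOL = "technological tool"


class Alignment(Enum):
    """The three alignment targets named in the definition."""
    STRATEGY = "organizational strategies, objectives, and values"
    LEGAL = "legal requirements"
    ETHICS = "ethical AI principles followed by the organization"


@dataclass(frozen=True)
class GovernanceElement:
    name: str
    kind: ElementKind
    supports: frozenset  # Alignment targets this element helps ensure


@dataclass
class AIGovernanceSystem:
    elements: list = field(default_factory=list)

    def uncovered_alignments(self):
        """Return the alignment targets that no element currently supports."""
        covered = set()
        for element in self.elements:
            covered |= element.supports
        return set(Alignment) - covered


# Hypothetical elements; the names are illustrative assumptions.
system = AIGovernanceSystem(elements=[
    GovernanceElement("AI use case approval", ElementKind.PROCESS,
                      frozenset({Alignment.STRATEGY})),
    GovernanceElement("Bias testing toolkit", ElementKind.TOOL,
                      frozenset({Alignment.ETHICS})),
])

gaps = system.uncovered_alignments()
print(sorted(a.name for a in gaps))  # prints ['LEGAL']
```

In this toy system, no element addresses legal requirements, so the check reports a gap. A real governance system would of course need richer criteria than mere coverage, including the trade-offs between alignments noted above.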

3 AI governance as part of an organization’s governance structure

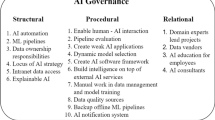

Having defined organizational AI governance, we position AI governance (understood as organizational practices) within an organization's governance structure. In particular, we highlight the relationship of AI governance with three relevant areas of governance: corporate, IT, and data governance (see Fig. 1). To the best of our knowledge, AI governance has not been explicitly connected with corporate, IT, and data governance beyond adapting definitions from these established fields to cover AI [12]. Placing AI governance in the organizational governance structure is important because effective AI governance should be parsimonious, meaning it should build on other areas of governance and avoid duplicated processes. We provide an overview of the connections between AI governance and the other governance areas and acknowledge that each particular connection provides future research topics beyond the scope of this paper.

Figure 1 places AI governance as a subset of corporate governance and IT governance and in partial overlap with data governance. The rationale for this position is that corporate governance provides the overarching governance structure within an organization, and AI systems, as IT systems with particular capabilities [37], are governed via mechanisms that fall under IT governance. Finally, AI governance and data governance partially overlap because data are inputs and outputs of AI systems. However, the models and algorithms of AI systems may not fall under data governance as it is commonly conceived. We note that this positioning considers AI governance as governance of AI systems as IT systems. The recent argument that governance may be increasingly conducted by AI [38] should, however, be explored in future research. The relationships between AI governance and the other governance areas are explored in more detail below.
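The positioning described above can also be read as a set of relations between governance areas. The short Python sketch below encodes only the relations stated in the text: AI governance is a subset of corporate governance and of IT governance, and it partially overlaps with data governance. The mechanism names are hypothetical placeholders chosen for illustration, and the sketch takes no position on how IT, data, and corporate governance relate to one another.

```python
def positioning_holds(ai, corporate, it, data):
    """Check the relations described for Figure 1 on sets of mechanisms."""
    subset_of_corporate = ai <= corporate
    subset_of_it = ai <= it
    # Partial overlap: some shared mechanisms, but neither set contains the other.
    partial_overlap = bool(ai & data) and bool(ai - data) and bool(data - ai)
    return subset_of_corporate and subset_of_it and partial_overlap


# Hypothetical governance mechanisms, used only to illustrate the relations.
corporate = {"code of conduct", "board oversight", "IT decision rights",
             "model risk review", "training data quality policy",
             "algorithm change approval", "customer data retention policy"}
it = {"IT decision rights", "model risk review",
      "training data quality policy", "algorithm change approval"}
data = {"training data quality policy", "customer data retention policy"}
ai = {"model risk review", "training data quality policy",
      "algorithm change approval"}

print(positioning_holds(ai, corporate, it, data))  # prints True
```

Here "training data quality policy" sits in the overlap of AI and data governance, whereas "model risk review" and "algorithm change approval" concern models and algorithms and therefore fall outside data governance in this example.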

3.1 Corporate governance

The governance of AI does not take place in isolation; rather, it occurs as a part of the overall governance system of an organization. Corporate governance sets the premises for how an organization operates in relation to its internal and external stakeholders [10, 39], regulating its systemic exchanges with its environment [40]. Corporate governance allows a firm to articulate rules and processes for governing the relationships between its management and shareholders, managing the potential tensions between its shareholders and other stakeholders, and controlling its environmental and social impact. In addition to legal compliance [41], corporate governance can entail elements delineating desirable conduct beyond the law's requirements, such as codes of conduct. Accordingly, corporate governance sets the principles through which organizations interpret and enact the desirable behavior of their agents. The behavior regulation function of corporate governance has garnered increasing attention during the last few decades as calls for corporate environmental and social responsibility have proliferated. This function also provides a crucial bridge to AI governance.

Although corporate governance activities and their expected impact focus primarily on the organization, the framing of corporate governance underscores the importance of accountability to external stakeholders, and more generally, corporate social responsibility [42]. Scalability and increased autonomy compared with traditional IT systems are central benefits of AI. This makes accountability and responsibility particularly important for AI [5]. For example, deploying AI in the financial sector may have far-reaching effects on individuals (and groups represented by them) subjected to decisions made by AI. Cihon et al. [26] provide valuable groundwork in the case of large corporations aiming to advance the public interest using AI. Thus, alignment with organizational objectives and managing impacts that transcend organizational boundaries are both important aspects of AI governance [12, 43]. However, here the landscape is fractured. For example, public sector and governmental organizations operate subject to more restrictive regulatory and behavioral frameworks than private companies. Consequently, their stakeholders and decision-making logics may differ from those of companies. Moreover, even private actors may face different regulatory and stakeholder constraints depending on their industry or field. At the same time, however, similar general organizational and governance issues are important across the public, private, and third sectors and different industries.

3.2 IT governance

Organizations are entities that process information, and much of this processing takes place in (or is mediated by) IT [44,45,46]. Hence, the governance of IT systems is both a means to execute corporate governance and a key element of an organization’s governance structure. Technically, AI is executed via algorithms written in the form of software code; these algorithms can be stand-alone applications or integrated into organizations’ information systems (IS) to provide additional capabilities or increased performance. As a result, AI governance at the organizational level can be viewed as a subset of IT governance.

Weill and Ross [11] define IT governance as “specifying the decision rights and accountability framework to encourage desirable behavior in the use of IT.” They further identify five interrelated IT decision domains: IT principles, IT architecture, IT infrastructure, business application needs, and IT investment [11]. Gregory et al. [47] build on previous IS literature related to IT governance (e.g., [48,49,50]), and define IT governance as “the decision rights and accountability framework deployed through a mix of structural, processual, and relational mechanisms and used to ensure the alignment of IT-related activities with the organization’s strategy and objectives.” These authors further identified three key dimensions of IT governance in relation to its definition ([47], cf. [49]). The first dimension is the focus of IT governance (what to govern), with the intention of determining what IT-related activities and artifacts must be aligned with organizational strategies and objectives. The second is the scope of IT governance (whom to govern), clarifying which actors and stakeholders are held accountable for ensuring IT’s (positive) contribution to the organization. The third dimension is patterns of IT governance (how to govern), which refers to the mechanisms in place to ensure desirable IT-related activities and outcomes.

Similar mechanisms and dimensions to the above can be identified in AI governance. The IT governance decision domains [11] and what/whom/how questions [47] also apply to AI governance. These conceptual frameworks on IT governance offer valuable guides to operationalizing AI governance beyond the initial definition developed in this article. However, because of their learning capabilities and dynamic evolution, AI systems should initially be viewed as special cases of IT systems that require distinct governance tools compared with general IT governance. As AI governance matures, it may eventually be subsumed under IT governance. This has not yet materialized, and further speculative discussion is beyond the scope of this article. It also remains for future research to address in which specific domains (e.g., principles, architecture, investment) AI systems are likely to bring novel governance questions compared to other IT systems (cf. [51]).
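One possible way to operationalize these frameworks is a simple decision record that combines the five decision domains [11] with the what/whom/how questions [47]. The Python sketch below is a minimal illustration under stated assumptions: mapping the "what" question onto the decision domains, the field names, and the example entries are choices made here for illustration and do not come from the cited frameworks.

```python
from dataclasses import dataclass

# The five IT decision domains identified by Weill and Ross [11].
DECISION_DOMAINS = (
    "IT principles",
    "IT architecture",
    "IT infrastructure",
    "business application needs",
    "IT investment",
)


@dataclass(frozen=True)
class GovernanceDecision:
    domain: str       # what to govern (assumed here to map onto a decision domain)
    accountable: str  # whom to govern: the accountable party, empty if unassigned
    mechanism: str    # how to govern: the mechanism in place


def unassigned_domains(decisions):
    """Return decision domains that have no accountable party recorded."""
    assigned = {d.domain for d in decisions if d.accountable}
    return [domain for domain in DECISION_DOMAINS if domain not in assigned]


# Hypothetical entries for an organization's AI systems.
decisions = [
    GovernanceDecision("IT principles", "AI ethics board",
                       "periodic review of AI principles"),
    GovernanceDecision("IT architecture", "chief architect",
                       "design review of AI components"),
    GovernanceDecision("IT investment", "", "annual budgeting"),
]

print(unassigned_domains(decisions))
# prints ['IT infrastructure', 'business application needs', 'IT investment']
```

A record of this kind makes visible where accountability for AI-related decisions has not yet been assigned, which is one place where AI-specific governance questions could surface.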

3.3 Data governance

Because AI systems depend on data to operate and learn, certain aspects of data governance are also central to AI governance. Based on their review of the data governance literature, Abraham et al. [14] conceptualize data governance as comprising six elements. In their conception, first, data governance is a cross-functional effort, enabling collaboration across functional boundaries and data subject areas. Second, data governance is a framework that sets a structure and formalization for the management of data. Third, data governance considers data a strategic enterprise asset and views data as a representation of facts in different formats. Fourth, data governance specifies decision rights and accountabilities for an organization’s decision-making about its data. This involves determining what decisions need to be made about data, how these decisions are made, and who in the organization has the right to make them. Fifth, data governance involves the development of data policies, standards, and procedures. These artifacts should be consistent with an organization’s strategy and should promote desirable behavior in the use of data. Sixth, data governance facilitates the monitoring of compliance, which entails the implementation of controls to ensure that predefined data policies and standards are followed.
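The fourth, fifth, and sixth elements (decision rights, data policies, and compliance monitoring) lend themselves to a brief illustration. The following Python sketch shows, under stated assumptions, how a control might check dataset records against predefined policies. The record fields, the retention threshold, and the violation messages are hypothetical and are not drawn from Abraham et al. [14].

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DatasetRecord:
    name: str
    owner: str                    # accountable party; empty if unassigned
    contains_personal_data: bool
    retention_days: int


# Hypothetical policy value, chosen only for illustration.
MAX_RETENTION_DAYS_PERSONAL = 365


def policy_violations(record):
    """Return a list of policy violations for one dataset record."""
    violations = []
    if not record.owner:
        violations.append("no accountable owner assigned")
    if (record.contains_personal_data
            and record.retention_days > MAX_RETENTION_DAYS_PERSONAL):
        violations.append("personal data retained longer than policy allows")
    return violations


# Hypothetical dataset records.
training_set = DatasetRecord("loan_applications_2021", "", True, 730)
public_set = DatasetRecord("public_benchmark", "data steward", False, 3650)

print(policy_violations(training_set))
# prints ['no accountable owner assigned', 'personal data retained longer than policy allows']
print(policy_violations(public_set))  # prints []
```

Such a control covers only the data on which an AI system depends. It says nothing about the models and algorithms themselves.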

The elements of data governance are relevant for AI governance, particularly regarding technical layers concerned with particular algorithmic systems. Doneda and Almeida [52] argue that governing datasets is one of the most fundamental ways to govern algorithms. However, AI governance goes beyond governing data because data represent only one element in algorithmic systems. Accordingly, while data governance is necessary for effective AI governance, it is not sufficient on its own.

Table 1 provides definitions for corporate, IT, data, and AI governance for businesses and their significance for AI governance. In particular, the table highlights mechanisms of alignment and consideration of an organization’s internal strategies and objectives, as well as its accountability toward stakeholders. The significance of these concepts for AI governance underscores their mutual relationships: Corporate governance places AI governance in a broader frame, IT governance outlines governing mechanisms, and data governance specifies frameworks for managing the data on which AI systems depend.

4 Conclusion and future research directions

To contribute to tackling the unorganized state of AI governance research and practice, we presented a positioning of AI governance as part of an organization’s governance structure and a definition of organizational AI governance. A concise definition is only the first step. It must be complemented with descriptions of the processes, mechanisms, and structures that need to be implemented—in short, an AI governance framework is required. Thus, our definition and positioning of organizational AI governance represent a stepping stone on the pathway toward governed AI.

We can identify at least four necessary steps in developing AI governance towards a mature field. First, the current study indicates that AI governance literature is fragmented. Therefore, there is a need for syntheses of the academic and grey literature. Second, we need a more contextual understanding of how organizations translate AI ethics principles to practice. In-depth interviews and ethnographic studies can provide such understanding. Third, organizations need practical AI governance tools and frameworks, which opens avenues for design science research [53]. Fourth, AI auditing is needed to ensure that appropriate AI governance mechanisms are in place and to communicate AI governance to stakeholders. Scholars can study AI auditing literature, practices, and tools similarly to studies on AI governance and continuously build bridges to AI governance literature. These research streams can be advanced in parallel. However, they should not become separate silos but rather contribute to a growing general understanding of AI governance within academia and practice.

References

Cowls, J., Tsamados, A., Taddeo, M., Floridi, L.: A definition, benchmark and database of AI for social good initiatives. Nat. Mach. Intell. 3, 111–115 (2021). https://doi.org/10.1038/s42256-021-00296-0

Pasquale, F.: The Black Box Society: The Secret Algorithms That Control Money and Information. Harvard University Press, Cambridge (2015)

Bechmann, A., Bowker, G.C.: Unsupervised by any other name: hidden layers of knowledge production in artificial intelligence on social media. Big Data Soc. 6, 2053951718819569 (2019). https://doi.org/10.1177/2053951718819569

Veale, M., Binns, R.: Fairer machine learning in the real world: mitigating discrimination without collecting sensitive data. Big Data Soc. 4, 2053951717743530 (2017). https://doi.org/10.1177/2053951717743530

Dignum, V.: Responsibility and artificial intelligence. In: Dubber, M.D., Pasquale, F., Das, S. (eds.) The Oxford Handbook of Ethics of AI, pp. 213–231. Oxford University Press, Oxford (2020). https://doi.org/10.1093/oxfordhb/9780190067397.013.12

Fjeld, J., Achten, N., Hilligoss, H., Nagy, A., Srikumar, M.: Principled artificial intelligence: mapping consensus in ethical and rights-based approaches to principles for AI. SSRN J. (2020). https://doi.org/10.2139/ssrn.3518482

Morley, J., Floridi, L., Kinsey, L., Elhalal, A.: From what to how: an initial review of publicly available AI ethics tools, methods and research to translate principles into practices. Sci. Eng. Ethics 26, 2141–2168 (2020). https://doi.org/10.1007/s11948-019-00165-5

Georgieva, I., Lazo, C., Timan, T., van Veenstra, A.F.: From AI ethics principles to data science practice: a reflection and a gap analysis based on recent frameworks and practical experience. AI Ethics (2022). https://doi.org/10.1007/s43681-021-00127-3

Butcher, J., Beridze, I.: What is the state of artificial intelligence governance globally? RUSI J. 164, 88–96 (2019). https://doi.org/10.1080/03071847.2019.1694260

Solomon, J.: Corporate Governance and Accountability. Wiley, Hoboken (2020)

Weill, P., Ross, J.W.: IT Governance: How Top Performers Manage IT Decision Rights for Superior Results. Harvard Business School Press, Boston (2004)

Schneider, J., Abraham, R., Meske, C.: AI governance for businesses. arXiv:2011.10672 [cs] (2020)

Eitel-Porter, R.: Beyond the promise: implementing ethical AI. AI Ethics 1, 73–80 (2021). https://doi.org/10.1007/s43681-020-00011-6

Abraham, R., Schneider, J., vom Brocke, J.: Data governance: a conceptual framework, structured review, and research agenda. Int. J. Inf. Manag. 49, 424–438 (2019). https://doi.org/10.1016/j.ijinfomgt.2019.07.008

Ocasio, W.: Towards an attention-based view of the firm. Strateg. Manag. J. 18, 187–206 (1997)

Benjamins, R.: A choices framework for the responsible use of AI. AI Ethics 1, 49–53 (2021). https://doi.org/10.1007/s43681-020-00012-5

Ibáñez, J.C., Olmeda, M.V.: Operationalising AI ethics: how are companies bridging the gap between practice and principles? An exploratory study. AI Soc. (2021). https://doi.org/10.1007/s00146-021-01267-0

Gasser, U., Almeida, V.A.F.: A layered model for AI governance. IEEE Internet Comput. 21, 58–62 (2017). https://doi.org/10.1109/MIC.2017.4180835

Yeung, K., Howes, A., Pogrebna, G.: AI governance by human rights-centered design, deliberation, and oversight: an end to ethics washing. In: Dubber, M.D., Pasquale, F., Das, S. (eds.) The Oxford Handbook of Ethics of AI, pp. 75–106. Oxford University Press, Oxford (2020). https://doi.org/10.1093/oxfordhb/9780190067397.013.5

Floridi, L.: Soft ethics, the governance of the digital and the general data protection regulation. Phil. Trans. R. Soc. A 376, 20180081 (2018). https://doi.org/10.1098/rsta.2018.0081

Winfield, A.F.T., Jirotka, M.: Ethical governance is essential to building trust in robotics and artificial intelligence systems. Phil. Trans. R. Soc. A 376, 20180085 (2018). https://doi.org/10.1098/rsta.2018.0085

Gahnberg, C.: What rules? Framing the governance of artificial agency. Policy Soc. 40, 194–210 (2021). https://doi.org/10.1080/14494035.2021.1929729

Shneiderman, B.: Bridging the gap between ethics and practice: guidelines for reliable, safe, and trustworthy human-centered AI systems. ACM Trans. Interact. Intell. Syst. 10, 26 (2020). https://doi.org/10.1145/3419764

Hickok, M.: Lessons learned from AI ethics principles for future actions. AI Ethics 1, 41–47 (2021). https://doi.org/10.1007/s43681-020-00008-1

Stix, C.: Actionable principles for artificial intelligence policy: three pathways. Sci. Eng. Ethics 27, 1–17 (2021). https://doi.org/10.1007/s11948-020-00277-3

Cihon, P., Schuett, J., Baum, S.D.: Corporate governance of artificial intelligence in the public interest. Information 12, 275 (2021). https://doi.org/10.3390/info12070275

Antignac, T., Sands, D., Schneider, G.: Data minimisation: a language-based approach. In: De Capitani di Vimercati, S., Martinelli, F. (eds.) ICT Systems Security and Privacy Protection, pp. 442–456. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-58469-0_30

Barredo Arrieta, A., Díaz-Rodríguez, N., Del Ser, J., Bennetot, A., Tabik, S., Barbado, A., Garcia, S., Gil-Lopez, S., Molina, D., Benjamins, R., Chatila, R., Herrera, F.: Explainable artificial intelligence (XAI): concepts, taxonomies, opportunities and challenges toward responsible AI. Inf. Fusion 58, 82–115 (2020). https://doi.org/10.1016/j.inffus.2019.12.012

Bellamy, R.K.E., Dey, K., Hind, M., Hoffman, S.C., Houde, S., Kannan, K., Lohia, P., Martino, J., Mehta, S., Mojsilović, A., Nagar, S., Ramamurthy, K.N., Richards, J., Saha, D., Sattigeri, P., Singh, M., Varshney, K.R., Zhang, Y.: AI fairness 360: an extensible toolkit for detecting and mitigating algorithmic bias. IBM J. Res. Dev. 4(1–4), 15 (2019). https://doi.org/10.1147/JRD.2019.2942287

van der Helm, R.: Defining the future: concepts and definitions as linguistic fundamentals of foresight. In: Giaoutzi, M., Sapio, B. (eds.) Recent Developments in Foresight Methodologies, pp. 13–25. Springer, Boston (2013). https://doi.org/10.1007/978-1-4614-5215-7_2

Kaminski, M.E.: Binary governance: lessons from the GDPR’s approach to algorithmic accountability. South. Calif. Law Rev. 92, 1529–1616 (2019)

Jobin, A., Ienca, M., Vayena, E.: The global landscape of AI ethics guidelines. Nat. Mach. Intell. 1, 389–399 (2019). https://doi.org/10.1038/s42256-019-0088-2

Luhmann, N.: Introduction to Systems Theory. Polity, Cambridge (2012)

Mingers, J., White, L.: A review of the recent contribution of systems thinking to operational research and management science. Eur. J. Oper. Res. 207, 1147–1161 (2010). https://doi.org/10.1016/j.ejor.2009.12.019

Floridi, L., Cowls, J., Beltrametti, M., Chatila, R., Chazerand, P., Dignum, V., Luetge, C., Madelin, R., Pagallo, U., Rossi, F., Schafer, B., Valcke, P., Vayena, E.: AI4People—an ethical framework for a good AI society: opportunities, risks, principles, and recommendations. Minds Mach. 28, 689–707 (2018). https://doi.org/10.1007/s11023-018-9482-5

Aizenberg, E., van den Hoven, J.: Designing for human rights in AI. Big Data Soc. 7, 2053951720949566 (2020). https://doi.org/10.1177/2053951720949566

Kaplan, A., Haenlein, M.: Siri, Siri, in my hand: who’s the fairest in the land? On the interpretations, illustrations, and implications of artificial intelligence. Bus. Horiz. 62, 15–25 (2019). https://doi.org/10.1016/j.bushor.2018.08.004

Hickman, E., Petrin, M.: Trustworthy AI and corporate governance: the EU’s ethics guidelines for trustworthy artificial intelligence from a company law perspective. Eur. Bus. Org. Law Rev. 22, 593–625 (2021). https://doi.org/10.1007/s40804-021-00224-0

Sahut, J.-M., Dana, L.-P., Teulon, F.: Corporate governance and financing of young technological firms: a review and introduction. Technol. Forecast. Soc. Chang. 163, 120425 (2021). https://doi.org/10.1016/j.techfore.2020.120425

Schneider, A., Wickert, C., Marti, E.: Reducing complexity by creating complexity: a systems theory perspective on how organizations respond to their environments. J. Manag. Stud. 54, 182–208 (2017). https://doi.org/10.1111/joms.12206

Griffith, S.J.: Corporate governance in an era of compliance. Wm. Mary L. Rev. 57, 2075–2140 (2015)

Harjoto, M.A., Jo, H.: Corporate governance and CSR nexus. J. Bus. Ethics 100, 45–67 (2011). https://doi.org/10.1007/s10551-011-0772-6

Metcalf, J., Moss, E., Watkins, E.A., Singh, R., Elish, M.C.: Algorithmic impact assessments and accountability: the co-construction of impacts. In: Proceedings of the 2021 ACM conference on fairness, accountability, and transparency. pp. 735–746. Association for Computing Machinery, New York, NY, USA (2021). https://doi.org/10.1145/3442188.3445935

Galbraith, J.R.: Organization design: an information processing view. Interfaces 4, 28–36 (1974)

Anand, V., Manz, C.C., Glick, W.H.: An organizational memory approach to information management. Acad. Manag. Rev. 23, 796–809 (1998). https://doi.org/10.2307/259063

Kmetz, J.L.: Information Processing Theory of Organization: Managing Technology Accession in Complex Systems. Routledge, Abingdon (2020)

Gregory, R.W., Kaganer, E., Henfridsson, O., Ruch, T.J.: IT consumerization and the transformation of IT governance. MIS Q. 42, 1225–1253 (2018)

Sambamurthy, V., Zmud, R.W.: Arrangements for information technology governance: a theory of multiple contingencies. MIS Q. 23, 261–290 (1999). https://doi.org/10.2307/249754

Tiwana, A., Konsynski, B., Venkatraman, N.: Special issue: information technology and organizational governance: the IT governance cube. J. Manag. Inf. Syst. 30, 7–12 (2013). https://doi.org/10.2753/MIS0742-1222300301

Wu, S., Straub, D., Liang, T.: How information technology governance mechanisms and strategic alignment influence organizational performance: insights from a matched survey of business and IT managers. MIS Q. 39, 497–518 (2015). https://doi.org/10.25300/MISQ/2015/39.2.10

Dor, L.M.B., Coglianese, C.: Procurement as AI governance. IEEE Trans. Technol. Soc. 2, 192–199 (2021). https://doi.org/10.1109/TTS.2021.3111764

Doneda, D., Almeida, V.A.F.: What is algorithm governance? IEEE Internet Comput. 20, 60–63 (2016). https://doi.org/10.1109/MIC.2016.79

Hevner, A.R., March, S.T., Park, J., Ram, S.: Design science in information systems research. MIS Q. 28, 75–105 (2004). https://doi.org/10.2307/25148625

Funding

Open Access funding provided by University of Turku (UTU) including Turku University Central Hospital. This research has been conducted within the Artificial Intelligence Governance and Auditing (AIGA) project, funded by Business Finland’s AI Business Program.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mäntymäki, M., Minkkinen, M., Birkstedt, T. et al. Defining organizational AI governance. AI Ethics 2, 603–609 (2022). https://doi.org/10.1007/s43681-022-00143-x

DOI: https://doi.org/10.1007/s43681-022-00143-x