Abstract

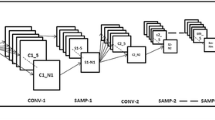

Convolutional neural networks (CNNs) are well known for their efficiency in detecting and classifying objects once adequately trained. Although they handle shift invariance to a limited extent, many existing CNN architectures do not guarantee appreciable rotation and scale invariance, which makes them sensitive to rotations and scale variations of the input image or feature maps. Many attempts have been made in the past to achieve rotation and scale invariance in CNNs. In this paper, an efficient approach is proposed for incorporating rotation and scale invariance into CNN-based classification, based on the eigenvectors and eigenvalues of the image covariance matrix. Without requiring any training data augmentation or change to the CNN architecture, the proposed method, ‘Scale and Orientation Corrected Networks (SOCN)’, achieves better rotation- and scale-invariant performance. SOCN introduces a scale and orientation correction step that is applied to images before baseline CNN training and testing. Being a generalized approach, SOCN can be combined with any baseline CNN to improve its rotation and scale invariance. We demonstrate the proposed approach’s scale- and orientation-invariant classification ability on several real cases, ranging from scale- and orientation-invariant character recognition to orientation-invariant image classification, using different suitable baseline architectures. Though simple, SOCN outperforms current state-of-the-art scale- and orientation-invariant classifiers while requiring comparatively little training and testing time.
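The core idea above, normalizing an image's scale and orientation from the eigenvectors and eigenvalues of its covariance matrix before the image reaches a baseline CNN, can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' SOCN implementation. It assumes the "image covariance matrix" is the intensity-weighted covariance of pixel coordinates, aligns the eigenvector of the largest eigenvalue with the horizontal axis, and rescales so that the standard deviation along that axis equals a chosen `target_std`. It also resolves the sign ambiguity of the eigenvector with a third-moment rule. The function names, `out_size=28`, `target_std=5.0`, and the sign rule are all hypothetical choices made for this sketch.

```python
import numpy as np


def principal_axes(img):
    """Intensity-weighted centroid plus eigen-decomposition of the 2x2 pixel-coordinate covariance."""
    img = np.asarray(img, dtype=float)
    total = img.sum()
    if total <= 0:
        raise ValueError("image must have positive total intensity")
    rows, cols = np.indices(img.shape)
    w = img / total                       # intensities treated as a probability mass over pixels
    cx, cy = np.sum(w * cols), np.sum(w * rows)
    dx, dy = cols - cx, rows - cy
    cov = np.array([[np.sum(w * dx * dx), np.sum(w * dx * dy)],
                    [np.sum(w * dx * dy), np.sum(w * dy * dy)]])
    evals, evecs = np.linalg.eigh(cov)    # ascending eigenvalues, eigenvectors in columns
    return np.array([cx, cy]), evals, evecs


def bilinear_sample(img, xs, ys):
    """Sample img at fractional (x=col, y=row) positions; pixels outside the image count as 0."""
    h, w = img.shape
    x0, y0 = np.floor(xs).astype(int), np.floor(ys).astype(int)
    fx, fy = xs - x0, ys - y0

    def at(yy, xx):
        out = np.zeros(xx.shape)
        ok = (xx >= 0) & (xx < w) & (yy >= 0) & (yy < h)
        out[ok] = img[yy[ok], xx[ok]]
        return out

    return (at(y0, x0) * (1 - fx) * (1 - fy) + at(y0, x0 + 1) * fx * (1 - fy)
            + at(y0 + 1, x0) * (1 - fx) * fy + at(y0 + 1, x0 + 1) * fx * fy)


def correct_scale_orientation(img, out_size=28, target_std=5.0):
    """Rotate the major eigen-axis onto the horizontal and rescale so its std equals target_std."""
    img = np.asarray(img, dtype=float)
    center, evals, evecs = principal_axes(img)
    major = evecs[:, 1]                   # eigenvector of the largest eigenvalue
    # Eigenvectors are only defined up to sign (a 180-degree ambiguity). Assumption:
    # choose the sign that makes the third central moment along the major axis non-negative.
    rows, cols = np.indices(img.shape)
    proj = (cols - center[0]) * major[0] + (rows - center[1]) * major[1]
    if np.sum(img * proj ** 3) < 0:
        major = -major
    minor = np.array([-major[1], major[0]])   # perpendicular axis, so the map is a proper rotation
    step = np.sqrt(evals[1]) / target_std     # source pixels per output pixel
    q_rows, q_cols = np.indices((out_size, out_size)).astype(float)
    mid = (out_size - 1) / 2.0
    u, v = (q_cols - mid) * step, (q_rows - mid) * step
    # Inverse mapping: output pixel (u, v) in the eigen-frame -> position in the source image.
    src_x = center[0] + u * major[0] + v * minor[0]
    src_y = center[1] + u * major[1] + v * minor[1]
    return bilinear_sample(img, src_x, src_y)
```

Because the correction is a pure preprocessing step, in this sketch it would be applied to every image, for training and testing alike, before the image is passed to whatever baseline CNN is used. The CNN itself is left unchanged. The correction only undoes rotation and scale, so a mirrored image is not mapped onto its unmirrored counterpart.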

About this article

Cite this article

Chathoth, S.V., Mishra, A.K., Mishra, D. et al. An eigenvector approach for obtaining scale and orientation invariant classification in convolutional neural networks. Adv. in Comp. Int. 2, 8 (2022). https://doi.org/10.1007/s43674-021-00023-7

DOI: https://doi.org/10.1007/s43674-021-00023-7