Abstract

In this paper, we present new regularized Shannon sampling formulas which use localized sampling with special window functions, namely Gaussian, \(\mathrm B\)-spline, and \(\sinh \)-type window functions. In contrast to the classical Shannon sampling series, the regularized Shannon sampling formulas possess an exponential decay and are numerically robust in the presence of noise. Several numerical experiments illustrate the theoretical results.

1 Introduction

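The classical Whittaker–Kotelnikov–Shannon sampling theorem plays a fundamental role in signal processing, since it describes the close relation between a bandlimited function and its equidistant samples. More precisely, this sampling theorem states that any function \(f \in L^2({\mathbb {R}})\) with bandwidth \(\le \frac{N}{2}\), i.e., the support of the Fourier transform

$$\hat{f}(v) :=\int _{{\mathbb {R}}} f(t)\,{\mathrm e}^{- 2 \pi {\mathrm i} v t}\,{\mathrm d}t, \quad v \in {\mathbb {R}},$$

is contained in \(\big [- \frac{N}{2},\frac{N}{2}\big ]\), can be recovered from its samples \(f\big (\frac{\ell }{L}\big )\), \(\ell \in {\mathbb {Z}}\), with \(L \ge N\), and it holds

$$f(t) = \sum _{\ell \in {\mathbb {Z}}} f\big (\tfrac{\ell }{L}\big )\,\mathrm {sinc}\big (L \pi \,\big (t - \tfrac{\ell }{L}\big )\big ), \quad t \in {\mathbb {R}}, \tag{1.1}$$

with the \(\mathrm {sinc}\) function

$$\mathrm {sinc}\, x :=\begin{cases} \dfrac{\sin x}{x} & x \in {\mathbb {R}} \setminus \{0\},\\ 1 & x = 0. \end{cases}$$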

Unfortunately, the practical use of this sampling theorem is limited, since it requires infinitely many samples, which is impossible in practice. Further, the \(\mathrm {sinc}\) function decays so slowly that the Shannon sampling series

has rather poor convergence. Moreover, in the presence of noise or quantization in the samples \(f\big (\frac{\ell }{L}\big )\), \(\ell \in {\mathbb {Z}}\), the convergence of the Shannon sampling series may even break down completely (see [4]).

To overcome these drawbacks, one can use the following three techniques (see [6, 8, 13, 18]):

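1. The function \({\mathrm {sinc}}(L \pi \, \cdot )\) is regularized by a truncated window function

$$\varphi _m(x) :=\varphi (x)\,{\mathbf{1}}_{[-m/L,\,m/L]}(x), \quad x \in {\mathbb {R}},$$

where the window function \(\varphi : {\mathbb {R}} \rightarrow [0,\,1]\) belongs to the set \(\Phi _{m,L}\) (as defined in Sect. 3) and \({\mathbf{1}}_{[-m/L,\,m/L]}\) denotes the indicator function of the interval \(\big [-\frac{m}{L},\,\frac{m}{L}\big ]\) with some \(m \in {\mathbb {N}} \setminus \{1\}\). Then we recover a function \(f \in L^2({\mathbb {R}})\) with bandwidth \(\le \frac{N}{2}\) by the regularized Shannon sampling formula

$$(R_{\varphi ,m} f)(t) :=\sum _{\ell \in {\mathbb {Z}}} f\big (\tfrac{\ell }{L}\big )\,\mathrm {sinc}\big (L \pi \,\big (t - \tfrac{\ell }{L}\big )\big )\,\varphi _m\big (t - \tfrac{\ell }{L}\big ), \quad t \in {\mathbb {R}},$$

where \(L \ge N\). Obviously, this is an interpolating approximation of f, since it holds

$$(R_{\varphi ,m} f)\big (\tfrac{k}{L}\big ) = f\big (\tfrac{k}{L}\big ), \quad k \in {\mathbb {Z}}.$$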

2. The use of the truncated window function \(\varphi _m\) with compact support \(\big [- \frac{m}{L},\,\frac{m}{L}\big ]\) leads to localized sampling of f, i.e., the computation of \((R_{\varphi ,m} f)(t)\) for \(t \in {\mathbb {R}} \setminus \frac{1}{L}\,{\mathbb {Z}}\) requires only 2m samples \(f\big (\frac{k}{L}\big )\), where \(k\in {\mathbb {Z}}\) fulfills the condition \(|k - L t| \le m\). If f has bandwidth \(\le N/2\) and \(L \ge N\), then the reconstruction of f on the interval \([0,\,1]\) requires only \(2m + L + 1\) samples \(f\big (\frac{\ell }{L}\big )\) with \(\ell = -m,\,1-m,\,\ldots ,\,m+L\).

3. In many applications, one usually employs oversampling, i.e., a function \(f \in L^2({\mathbb {R}})\) of bandwidth \(\le N/2\) is sampled on a finer grid \(\frac{1}{L}\, {\mathbb {Z}}\) with \(L > N\).
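The sample counting in item 2 can be checked with a few lines of Python. The following is a hypothetical helper sketch, not code from the paper. `local_indices` lists all k with \(|k - Lt| \le m\), and `indices_for_unit_interval` collects these sets over a fine grid of \(t \in [0,\,1]\). L is taken as a power of two so that the products \(L t\) on that grid are exact in floating-point arithmetic.

```python
import math


def local_indices(t, m, L):
    """All indices k with |k - L t| <= m, i.e. the samples f(k/L) entering (R_{phi,m} f)(t)."""
    return list(range(math.ceil(L * t - m), math.floor(L * t + m) + 1))


def indices_for_unit_interval(m, L, refine=4):
    """Union of local_indices(t) over the grid t = j/(refine*L), j = 0, ..., refine*L, in [0, 1]."""
    needed = set()
    for j in range(refine * L + 1):
        needed.update(local_indices(j / (refine * L), m, L))
    return sorted(needed)


m, L = 3, 16
print(len(local_indices(0.123, m, L)))   # -> 6, i.e. 2m for a point off the grid
idx = indices_for_unit_interval(m, L)
print(idx[0], idx[-1], len(idx))         # -> -3 19 23, i.e. -m, m + L and 2m + L + 1
```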

This concept of regularized Shannon sampling formulas with localized sampling and oversampling has already been studied by various authors, e.g., in [13, 16] and the references therein for the Gaussian window function. The theoretical error bounds for the Gaussian window function were improved in [6]. However, that work neglected oversampling and studied only the special case \(L=N=1\). The case of erroneous sampling for the Gaussian window function was examined in [15]. Generalizations of the Gaussian regularized Shannon sampling formula were introduced in [17] for holomorphic functions f using contour integration, and in [19] for the approximation of derivatives of f. A survey of different approaches for window functions can be found in [14]. Furthermore, in [18] the problem was approached in Fourier space. Oversampling is then equivalent to extending the Fourier transform of the \(\mathrm {sinc}\) function to the larger interval \([- L/2, L/2]\). Here the aim is to find a regularization function whose Fourier transform is smooth. However, the resulting function does not have an explicit representation and therefore cannot be used directly in the spatial domain. Moreover, the aforementioned approaches did not focus mainly on the complexity and efficiency of the resulting methods. In contrast, we now propose a new set \(\Phi _{m,L}\) of window functions \(\varphi \) for which smaller truncation parameters m suffice to achieve high accuracy. This yields short sums that can be evaluated very fast.

In this paper we present new regularized Shannon sampling formulas with localized sampling and derive new estimates of the uniform approximation error

$$\sup _{t \in {\mathbb {R}}} \big |f(t) - (R_{\varphi ,m} f)(t)\big |,$$

where we apply several window functions \(\varphi \). It is shown in the subsequent sections that the uniform approximation error decays exponentially with respect to m if \(\varphi \in \Phi _{m,L}\) is the Gaussian, \(\mathrm B\)-spline, or \(\sinh \)-type window function. In contrast, if \(\varphi \in \Phi _{m,L}\) is chosen as the rectangular window function, then the uniform approximation error of the regularized Shannon sampling formula decays only with the poor order \(m^{-1/2}\). Moreover, we show that the regularized Shannon sampling formulas are numerically robust for noisy samples. That is, if \(\varphi \in \Phi _{m,L}\) is the Gaussian, \(\mathrm B\)-spline, or \(\sinh \)-type window function, then the uniform perturbation error (3.29) grows only as \(m^{1/2}\).

In our approach, we need the Fourier transform of the product of \({\mathrm {sinc}}(L \pi \, \cdot )\) and the window function \(\varphi \). Since the \({\mathrm {sinc}}\) function belongs to \(L^2({\mathbb {R}})\), but not to \(L^1({\mathbb {R}})\), we present the convolution property of the Fourier transform for \(L^2({\mathbb {R}})\) functions in the preliminary Sect. 2 (see Lemma 2.2). In Sect. 3, we consider regularized Shannon sampling formulas for an arbitrary window function \(\varphi \in \Phi _{m,L}\). Here the main results are Theorem 3.2, which gives a unified approach to error estimates for regularized Shannon sampling formulas, and Theorem 3.4 on their numerical robustness. Afterwards, we specialize these results to several choices of window functions. In Sect. 4, we consider the Gaussian window function (as in [6, 13]), and Theorem 4.1 shows that the uniform approximation error decays exponentially with respect to m. In Sect. 5, we use the \(\mathrm B\)-spline window function and prove in Theorem 5.3 that the uniform approximation error decays exponentially with respect to m. In Sect. 6, we discuss the \(\sinh \)-type window function and prove in Theorem 6.1 that the uniform approximation error decays exponentially with respect to m. Several numerical experiments illustrate the theoretical results. Finally, in the concluding Sect. 7, we compare the proposed window functions and show the superiority of the new \(\sinh \)-type window function.

2 Convolution property of the Fourier transform

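Let \(C_0({\mathbb {R}})\) denote the Banach space of continuous functions \(f :{\mathbb {R}}\rightarrow {\mathbb {C}}\) vanishing as \(|x|\rightarrow \infty \) with norm

$$\Vert f\Vert _{C_0({\mathbb {R}})} :=\max _{x \in {\mathbb {R}}} |f(x)|.$$

As is well known (see [10, p. 66]), the Fourier transform defined by

$$\hat{f}(v) :=\int _{{\mathbb {R}}} f(x)\,{\mathrm e}^{- 2 \pi {\mathrm i} v x}\,{\mathrm d}x, \quad v \in {\mathbb {R}}, \tag{2.1}$$

is a continuous mapping from \(L^1({\mathbb {R}})\) into \(C_0({\mathbb {R}})\) with

$$\Vert \hat{f}\Vert _{C_0({\mathbb {R}})} \le \Vert f\Vert _{L^1({\mathbb {R}})}.$$

Here we are interested in the Hilbert space \(L^2({\mathbb {R}})\) with inner product and norm

$$\langle f,\, g\rangle _{L^2({\mathbb {R}})} :=\int _{{\mathbb {R}}} f(x)\,\overline{g(x)}\,{\mathrm d}x, \qquad \Vert f\Vert _{L^2({\mathbb {R}})} :=\langle f,\, f\rangle _{L^2({\mathbb {R}})}^{1/2}.$$

By Plancherel's theorem, the Fourier transform is also an invertible, continuous mapping from \(L^2({\mathbb {R}})\) onto itself with \(\Vert f\Vert _{L^2({\mathbb {R}})} = \Vert {\hat{f}}\Vert _{L^2({\mathbb {R}})}\).

For \(f,\,g \in L^1({\mathbb {R}})\) the convolution property of the Fourier transform reads as

$$(f * g)\hat{} = \hat{f}\,\hat{g}, \tag{2.2}$$

where the convolution is defined by

$$(f * g)(x) :=\int _{{\mathbb {R}}} f(y)\,g(x - y)\,{\mathrm d}y, \quad x \in {\mathbb {R}}.$$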

However, for arbitrary \(f,\,g \in L^2({\mathbb {R}})\) the convolution property of the Fourier transform does not hold in the form (2.2). By Young's inequality we have \(f * g \in C_0({\mathbb {R}})\), and by Hölder's inequality \({{\hat{f}}}\,{{\hat{g}}} \in L^1({\mathbb {R}})\), but the Fourier transform does not map \(C_0({\mathbb {R}})\) into \(L^1({\mathbb {R}})\). Instead, the convolution property of the Fourier transform in \(L^2({\mathbb {R}})\) takes the following form.

Lemma 2.1

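For all \(f,\,g \in L^2({\mathbb {R}})\) it holds

$$f * g = \big (\hat{f}\,\hat{g}\big )^{\vee }, \tag{2.3}$$

where \({\check{h}}\) denotes the inverse Fourier transform of \(h \in L^1({\mathbb {R}})\) defined as

$$\check{h}(x) :=\int _{{\mathbb {R}}} h(v)\,{\mathrm e}^{2 \pi {\mathrm i} v x}\,{\mathrm d}v, \quad x \in {\mathbb {R}}. \tag{2.4}$$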

Proof

For arbitrary \(f,\,g \in L^2({\mathbb {R}})\) it holds \({{\hat{f}}},\,{{\hat{g}}} \in L^2({\mathbb {R}})\), see [10, p. 80]. Since the Schwartz space \({{\mathcal {S}}}({\mathbb {R}})\), cf. [10, p. 167], is dense in \(L^2({\mathbb {R}})\), see [10, p. 170], there exist sequences \((f_n)_{n=1}^{\infty }\) and \((g_n)_{n=1}^{\infty }\) in \({\mathcal S}({\mathbb {R}})\) such that

Since the Fourier transform is a continuous mapping on \(L^2(\mathbb R)\), it follows that

If we now write

we see by the triangle inequality and Young’s inequality that

and hence by (2.5) that

On the other hand, if we write

we see by the triangle inequality and Hölder’s inequality that

and hence by (2.6) that

By the convolution property of the Fourier transform in \({\mathcal S}({\mathbb {R}})\), we have for \(f_n\), \(g_n \in {{\mathcal {S}}}({\mathbb {R}})\) that

Note that \(f_n * g_n \in {{\mathcal {S}}}({\mathbb {R}})\) and \({{\hat{f}}}_n\, {{\hat{g}}}_n \in {{\mathcal {S}}}({\mathbb {R}})\) (see [10, p. 175]). Since the Fourier transform on \({{\mathcal {S}}}({\mathbb {R}})\) is invertible (see [10, p. 175]), it holds

Moreover, since the inverse Fourier transform is a continuous mapping from \(L^1({\mathbb {R}})\) into \(C_0({\mathbb {R}})\), it holds by [10, pp. 66–67] that

From (2.8) it follows that

Thus, by (2.9) we conclude that

For \(n\rightarrow \infty \) the right-hand side of the above estimate converges to zero by (2.7) and (2.10). This implies (2.3). \(\square \)

The following equivalent formulation of the convolution property in \(L^2({\mathbb {R}})\) is obtained if we replace \(f \in L^2(\mathbb R)\) by \({\hat{f}} \in L^2({\mathbb {R}})\) and \(g \in L^2({\mathbb {R}})\) by \({\hat{g}} \in L^2({\mathbb {R}})\) in (2.3).

Lemma 2.2

For all f, \(g \in L^2({\mathbb {R}})\) it holds

$$(f\,g)\hat{} = \hat{f} * \hat{g}.$$

Proof

For any f, \(g \in L^2({\mathbb {R}})\) it holds \(\hat{{\hat{f}}} = f(-\,\cdot )\) as well as \(\hat{{\hat{g}}} = g(-\, \cdot )\). Note that by Hölder's inequality we have \(f\,g \in L^1({\mathbb {R}})\). Then Lemma 2.1 implies that

This completes the proof. \(\square \)

Note that Lemma 2.2 improves a corresponding result in [3, p. 209]. There it was remarked that for f, \(g \in L^2({\mathbb {R}})\) it holds \((f \,g)\hat{} = {{\hat{f}}} * {{\hat{g}}} \in L^{\infty }({\mathbb {R}})\), but by Lemma 2.2 the function \({{\hat{f}}} * {{\hat{g}}}\) indeed belongs to \(C_0({\mathbb {R}}) \subset L^{\infty }({\mathbb {R}})\).
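A standard example illustrates this remark. The function \(\mathrm {sinc}(\pi \,\cdot )\) belongs to \(L^2({\mathbb {R}})\) but not to \(L^1({\mathbb {R}})\), and its Fourier transform in the \(L^2\) sense is \({\mathbf{1}}_{[-1/2,\,1/2]}\). Taking \(f = g = \mathrm {sinc}(\pi \,\cdot )\) in Lemma 2.2 gives

$$\begin{aligned} \big (\mathrm {sinc}^2(\pi \,\cdot )\big )\hat{}\,(v) &= \big ({\mathbf{1}}_{[-1/2,\,1/2]} * {\mathbf{1}}_{[-1/2,\,1/2]}\big )(v) = \int _{{\mathbb {R}}} {\mathbf{1}}_{[-1/2,\,1/2]}(w)\,{\mathbf{1}}_{[-1/2,\,1/2]}(v - w)\,{\mathrm d}w \\ &= \max \{1 - |v|,\,0\}, \quad v \in {\mathbb {R}}. \end{aligned}$$

The result is the continuous triangle function supported on \([-1,\,1]\). It lies in \(C_0({\mathbb {R}})\), as Lemma 2.2 guarantees, and not merely in \(L^{\infty }({\mathbb {R}})\).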

3 Regularized Shannon sampling formulas with localized sampling

Let

$${\mathcal B}_{\delta }({\mathbb {R}}) :=\big \{f \in L^2({\mathbb {R}}) :\, \mathrm {supp}\,{\hat{f}} \subseteq [-\delta ,\,\delta ]\big \}$$

be the Paley–Wiener space. The functions of \({\mathcal B}_{\delta }({\mathbb {R}})\) are called bandlimited to \([- \delta ,\,\delta ]\), where \(\delta > 0\) is the so-called bandwidth. By definition, the Paley–Wiener space \({\mathcal B}_{\delta }({\mathbb {R}})\) consists of equivalence classes of functions that coincide almost everywhere. Each of these equivalence classes contains a smooth function, since by inverse Fourier transform it holds for each \(r \in {{\mathbb {N}}}_0\) that

$$f^{(r)}(x) = \int _{-\delta }^{\delta } (2 \pi {\mathrm i}\,v)^r\,{\hat{f}}(v)\,{\mathrm e}^{2 \pi {\mathrm i} v x}\,{\mathrm d}v, \quad x \in {\mathbb {R}},$$

i.e., \(f^{(r)} \in C_0({\mathbb {R}})\), because \((2 \pi {\mathrm i}\,\cdot )^r\,{{\hat{f}}}\in L^1([-\delta ,\, \delta ])\). In the following we always select the smooth representative of an equivalence class in \({{\mathcal {B}}}_{\delta }({\mathbb {R}})\).

In this paper we consider bandlimited functions \(f \in {\mathcal B}_{\delta }({\mathbb {R}})\) with \(\delta \in (0,\,N/2)\), where \(N\in {\mathbb {N}}\) is fixed. For \(L:=N(1+\lambda )\) with \(\lambda \ge 0\), and any \(m \in {\mathbb {N}} \setminus \{1\}\) with \(2m \ll L\), we introduce the set \(\Phi _{m,L}\) of all window functions \(\varphi : {\mathbb {R}} \rightarrow [0,\,1]\) with the following properties:

-

The window function \(\varphi \in L^2({\mathbb {R}})\) is even, positive on \((-m/L,\,m/L)\) and continuous on \({\mathbb R}\setminus \{- m/L,\, m/L\}\).

-

The restricted window function \(\varphi |_{[0,\,\infty )}\) is monotonically non-increasing with \(\varphi (0) = 1.\)

-

The Fourier transform

$$\begin{aligned} {{\hat{\varphi }}}(v) :=\int _{{\mathbb {R}}} \varphi (x)\,{\mathrm e}^{- 2 \pi {\mathrm i}v x}\,{\mathrm d}x = 2 \int _0^{\infty } \varphi (x)\,\cos ( 2 \pi \,v x)\,{\mathrm d}x \end{aligned}$$is explicitly known.

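Examples of such window functions are the rectangular window function

$$\varphi _{\mathrm {rect}}(x) :={{\mathbf {1}}}_{[-m/L,\,m/L]}(x), \quad x \in {\mathbb {R}}, \tag{3.1}$$

where \({{\mathbf {1}}}_{[-m/L,\,m/L]}\) is the indicator function of the interval \([-m/L,\,m/L]\), the Gaussian window function

$$\varphi _{\mathrm {Gauss}}(x) :={\mathrm e}^{-x^2/(2\sigma ^2)}, \quad x \in {\mathbb {R}}, \tag{3.2}$$

with some \(\sigma >0\), the modified \(\mathrm B\)-spline window function

$$\varphi _{\mathrm B}(x) :=\frac{1}{M_{2s}(0)}\,M_{2s}\Big (\frac{L s x}{m}\Big ), \quad x \in {\mathbb {R}}, \tag{3.3}$$

where \(M_{2s}\) is the centered cardinal \(\mathrm B\)-spline of even order 2s, and the \(\sinh \)-type window function

$$\varphi _{\sinh }(x) :=\begin{cases} \dfrac{1}{\sinh \beta }\,\sinh \Big (\beta \sqrt{1 - \dfrac{L^2 x^2}{m^2}}\Big ) & x \in \big [-\frac{m}{L},\,\frac{m}{L}\big ],\\ 0 & x \in {\mathbb {R}} \setminus \big [-\frac{m}{L},\,\frac{m}{L}\big ], \end{cases} \tag{3.4}$$

with some \(\beta > 0\). All these window functions are well studied in the context of the nonequispaced fast Fourier transform (NFFT), see, e.g., [11] and the references therein.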
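The four window functions are easy to code for experiments. The following Python sketch is illustrative only, and all function names are hypothetical. The \(\mathrm B\)-spline and \(\sinh \)-type windows use the scalings \(\varphi _{\mathrm B}(x) = M_{2s}(Lsx/m)/M_{2s}(0)\) and \(\varphi _{\sinh }(x) = \sinh (\beta \sqrt{1 - L^2x^2/m^2})/\sinh \beta \) on \([-m/L,\,m/L]\), which is our reading of (3.3) and (3.4). \(M_{2s}\) is evaluated via the classical truncated-power representation \(M_n(x) = \frac{1}{(n-1)!}\sum _{k=0}^{n} (-1)^k \binom{n}{k} (x + \frac{n}{2} - k)_+^{n-1}\). That formula is adequate for small orders but suffers from cancellation for large n.

```python
import math


def phi_rect(x, m, L):
    """Rectangular window (3.1): indicator function of [-m/L, m/L]."""
    return 1.0 if abs(x) <= m / L else 0.0


def phi_gauss(x, sigma):
    """Gaussian window (3.2); not compactly supported."""
    return math.exp(-x * x / (2.0 * sigma * sigma))


def bspline(n, x):
    """Centered cardinal B-spline M_n (support [-n/2, n/2]) via truncated powers."""
    if abs(x) >= n / 2:
        return 0.0
    total = 0.0
    for k in range(n + 1):
        y = x + n / 2 - k
        if y > 0:
            total += (-1) ** k * math.comb(n, k) * y ** (n - 1)
    return total / math.factorial(n - 1)


def phi_bspline(x, m, L, s):
    """Modified B-spline window, assumed scaled to support [-m/L, m/L] with value 1 at 0."""
    return bspline(2 * s, L * s * x / m) / bspline(2 * s, 0.0)


def phi_sinh(x, m, L, beta):
    """sinh-type window, assumed form sinh(beta*sqrt(1-(Lx/m)^2))/sinh(beta) on [-m/L, m/L]."""
    u = L * x / m
    if abs(u) >= 1.0:
        return 0.0
    return math.sinh(beta * math.sqrt(1.0 - u * u)) / math.sinh(beta)


m, L = 5, 32
for x in (0.0, 0.5 * m / L, m / L, 1.5 * m / L):
    print(x, phi_rect(x, m, L), phi_gauss(x, 0.05), phi_bspline(x, m, L, 3), phi_sinh(x, m, L, 4.0))
```

All four functions equal 1 at the origin. Only the Gaussian is still positive beyond \(m/L\), which is why it is the one case where the truncation error in Theorem 3.2 does not vanish.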

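Now let \(\varphi \in \Phi _{m,L}\) be a given window function. We define the truncated window function

$$\varphi _m(x) :=\varphi (x)\,{\mathbf{1}}_{[-m/L,\,m/L]}(x), \quad x \in {\mathbb {R}}, \tag{3.5}$$

and study the regularized Shannon sampling formula with localized sampling

$$(R_{\varphi ,m} f)(t) :=\sum _{\ell \in {\mathbb {Z}}} f\big (\tfrac{\ell }{L}\big )\,\mathrm {sinc}\big (L \pi \,\big (t - \tfrac{\ell }{L}\big )\big )\,\varphi _m\big (t - \tfrac{\ell }{L}\big ), \quad t \in {\mathbb {R}}, \tag{3.6}$$

to rapidly reconstruct the values f(t) for \(t \in {\mathbb {R}}\) from given sampling data \(f\big (\frac{\ell }{L}\big )\), \(\ell \in \mathbb Z\), with high accuracy.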
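Formula (3.6) translates directly into code. The following Python sketch is illustrative only, and the names `regularized_shannon`, `f` and `gauss` are ours. It sums only over the indices \(\ell \) with \(|\ell - Lt| \le m\), which are the only ones for which \(\varphi _m(t - \ell /L)\) can be nonzero. It uses the Gaussian window (3.2) with \(\sigma = \sqrt{m/(\pi L (L - 2\delta ))}\) from Theorem 4.1 below and the test function \(f(t) = \sqrt{2\delta }\,\mathrm {sinc}(2\delta \pi t)\) of Example 4.2. The parameters \(N = 16\), \(\tau = 1/3\), \(\lambda = 1\), \(m = 10\) are an arbitrary small choice, not those of the paper's experiments.

```python
import math


def sinc(x):
    """sinc x = sin(x)/x with sinc 0 = 1."""
    return 1.0 if x == 0.0 else math.sin(x) / x


def regularized_shannon(samples, t, L, m, window):
    """Evaluate (R_{phi,m} f)(t) from samples(l) = f(l/L).

    Only indices l with |l - L t| <= m are summed, i.e. those where the
    truncated window phi_m(t - l/L) can be nonzero.
    """
    total = 0.0
    for l in range(math.ceil(L * t - m), math.floor(L * t + m) + 1):
        total += samples(l) * sinc(math.pi * (L * t - l)) * window(t - l / L)
    return total


N, tau, lam, m = 16, 1 / 3, 1, 10
L = N * (1 + lam)                                         # L = N (1 + lambda) = 32
delta = tau * N                                           # bandwidth delta = tau N
sigma = math.sqrt(m / (math.pi * L * (L - 2 * delta)))    # sigma as in Theorem 4.1


def f(t):
    """Test function sqrt(2 delta) sinc(2 delta pi t) with unit L^2 norm."""
    return math.sqrt(2 * delta) * sinc(2 * delta * math.pi * t)


def gauss(x):
    """Gaussian window exp(-x^2 / (2 sigma^2))."""
    return math.exp(-x * x / (2 * sigma * sigma))


ts = [-1 + s / 1000 for s in range(2001)]
err = max(abs(f(t) - regularized_shannon(lambda l: f(l / L), t, L, m, gauss)) for t in ts)
print(f"m = {m}: max error on the grid in [-1, 1] is {err:.2e}")
```

At most \(2m + 1\) terms are summed per evaluation point, so the cost per point does not depend on how many samples are available.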

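It is known that \(\{\mathrm {sinc}\big (L \pi \,\big (\cdot -\frac{\ell }{L}\big )\big ) : \, \ell \in {\mathbb {Z}}\}\) forms an orthogonal system in \(L^2({\mathbb {R}})\), since by the shifting property the Fourier transform of \(\mathrm {sinc}\big (L \pi \,\big (\cdot -\frac{\ell }{L}\big )\big )\) is equal to

$$\frac{1}{L}\,{\mathrm e}^{-2 \pi {\mathrm i}\,\ell v/L}\,{\mathbf{1}}_{[-L/2,\,L/2]}(v), \quad v \in {\mathbb {R}},$$

and by the Parseval identity it holds for all \(\ell \), \(k \in \mathbb Z\) that

$$\Big \langle \mathrm {sinc}\big (L \pi \,\big (\cdot - \tfrac{\ell }{L}\big )\big ),\, \mathrm {sinc}\big (L \pi \,\big (\cdot - \tfrac{k}{L}\big )\big )\Big \rangle _{L^2({\mathbb {R}})} = \frac{1}{L^2} \int _{-L/2}^{L/2} {\mathrm e}^{2 \pi {\mathrm i}\,(k - \ell ) v/L}\,{\mathrm d}v = \frac{1}{L}\,\delta _{\ell ,k} \tag{3.7}$$

with the Kronecker symbol \(\delta _{\ell ,k}\). Moreover, it follows directly from the Whittaker–Kotelnikov–Shannon sampling theorem (see (1.1)) that the system \(\{\mathrm {sinc}\big (L \pi \,\big (\cdot -\frac{\ell }{L}\big )\big ) : \, \ell \in {\mathbb {Z}}\}\) forms an orthogonal basis of \({{\mathcal {B}}}_{L/2}({\mathbb {R}})\). From (1.1) and (3.7) it follows that for any \(f \in {{\mathcal {B}}}_{\delta }({\mathbb {R}}) \subset {\mathcal B}_{L/2}({\mathbb {R}})\) with \(\delta \in (0,\, N/2]\) and \(L\ge N\) it holds

$$\Vert f\Vert _{L^2({\mathbb {R}})}^2 = \frac{1}{L} \sum _{\ell \in {\mathbb {Z}}} \big |f\big (\tfrac{\ell }{L}\big )\big |^2. \tag{3.8}$$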
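The step from (1.1) and (3.7) to (3.8) is the Parseval identity for an orthogonal expansion, assuming (3.8) is the Parseval-type identity used in the Cauchy–Schwarz arguments below. Write \(s_\ell :=\mathrm {sinc}\big (L \pi \,\big (\cdot - \tfrac{\ell }{L}\big )\big )\), a shorthand introduced only here. The series (1.1) expands \(f \in {\mathcal B}_{L/2}({\mathbb {R}})\) in the orthogonal basis \(\{s_\ell \}\) and therefore converges in \(L^2({\mathbb {R}})\). Consequently (3.7) gives

$$\begin{aligned} \Vert f\Vert _{L^2({\mathbb {R}})}^2 = \sum _{\ell \in {\mathbb {Z}}} \sum _{k \in {\mathbb {Z}}} f\big (\tfrac{\ell }{L}\big )\,\overline{f\big (\tfrac{k}{L}\big )}\,\langle s_\ell ,\, s_k\rangle _{L^2({\mathbb {R}})} = \frac{1}{L} \sum _{\ell \in {\mathbb {Z}}} \big |f\big (\tfrac{\ell }{L}\big )\big |^2 . \end{aligned}$$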

Firstly, we consider the regularized Shannon sampling formula (3.6) with the simple rectangular window function \(\varphi = \varphi _{\mathrm {rect}}\), see (3.1), i.e., for some \(m \in {\mathbb {N}} \setminus \{1\}\) we form the rectangular regularized Shannon sampling formula with localized sampling

Obviously, the rectangular regularized Shannon sampling formula (3.9) interpolates f on the grid \(\frac{1}{L}\,{\mathbb {Z}}\), i.e., for all \(m\in {\mathbb {N}}\setminus \{1\}\), the interpolation property

is fulfilled since \(\mathrm {sinc}(\pi k)=0\) for \(k\in {\mathbb {Z}}\setminus \{0\}\). Due to the definition of the indicator function \({\mathbf{1}}_{[-m/L,\,m/L]}\), for \(t \in \big (0,\,\frac{1}{L}\big )\) the rectangular regularized Shannon sampling formula reads as

with the index set \({\mathcal {J}}_m :=\{-m+1,\,-m+2,\ldots ,\,m\}\). Indeed, on any interval \(\big (\frac{k}{L},\,\frac{k+1}{L}\big )\) with \(k\in {\mathbb {Z}}\) the rectangular regularized Shannon sampling formula reads as

However, since the \(\mathrm {sinc}\) function decays slowly at infinity, (3.9) is not a good approximation to f on \(\mathbb R\). As a consequence of a result in [8], it can be seen that the convergence rate of the sequence \(\big (f - R_{{\mathrm {rect}},m} f \big )_{m=1}^{\infty }\) is only \({\mathcal {O}}(m^{-1/2})\) for sufficiently large m.

Lemma 3.1

Let \(f \in {\mathcal {B}}_{N/2}({\mathbb {R}})\) with fixed \(N \in {\mathbb {N}}\), \(L:=N(1+\lambda )\) with \(\lambda \ge 0\) and \(m \in {\mathbb {N}} \setminus \{1\}\) be given. Then it holds

Proof

Since \(R_{{\mathrm {rect}},m} f\) possesses similar representations (3.11) on each interval \(\big (\frac{k}{L},\,\frac{k+1}{L}\big )\), \(k\in {\mathbb {Z}}\), we consider \(f(t) - (R_{{\mathrm {rect}},m} f)(t)\) only for \(t \in \big [0,\,\frac{1}{L}\big ]\) and show that

The Whittaker–Kotelnikov–Shannon sampling theorem (see (1.1)) implies that

Then by the Cauchy–Schwarz inequality and (3.8) it follows that

with the auxiliary function

By the integral test for convergence of series we estimate the function

for \(t \in \big (0,\, \frac{1}{L}\big )\). Then (3.13), (3.14) combined with (3.10) imply (3.12).

By the same technique, the above estimate of the approximation error can be shown on each interval \(\big (\frac{k}{L},\,\frac{k+1}{L}\big )\), \(k\in {\mathbb {Z}}\). This completes the proof. \(\square \)
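The contrast between the slow decay for the rectangular window and the exponential decay for a smooth window can be observed numerically. The following sketch is our own illustration, not the authors' experiment. It reuses the test function of Example 4.2 with the small, assumed parameters \(N = 16\), \(\tau = 1/3\), \(\lambda = 1\), and evaluates the maximum error on 2001 equidistant points in \([-1,\,1]\). It compares \(\varphi _{\mathrm {rect}}\) with the Gaussian window (3.2) using \(\sigma = \sqrt{m/(\pi L (L - 2\delta ))}\) from Theorem 4.1.

```python
import math


def sinc(x):
    return 1.0 if x == 0.0 else math.sin(x) / x


def max_error(window, m, L, delta, num=2001):
    """Max |f(t) - (R_{phi,m} f)(t)| over num equidistant t in [-1, 1],
    for the test function f(t) = sqrt(2 delta) sinc(2 delta pi t)."""
    def f(t):
        return math.sqrt(2 * delta) * sinc(2 * delta * math.pi * t)

    worst = 0.0
    for s in range(num):
        t = -1 + 2 * s / (num - 1)
        approx = sum(
            f(l / L) * sinc(math.pi * (L * t - l)) * window(t - l / L)
            for l in range(math.ceil(L * t - m), math.floor(L * t + m) + 1)
        )
        worst = max(worst, abs(f(t) - approx))
    return worst


N, tau, lam = 16, 1 / 3, 1
L, delta = N * (1 + lam), tau * N
errors = {}
for m in range(2, 11):
    sigma = math.sqrt(m / (math.pi * L * (L - 2 * delta)))
    rect = max_error(lambda x: 1.0, m, L, delta)   # phi_rect equals 1 on all summed indices
    gauss = max_error(lambda x: math.exp(-x * x / (2 * sigma * sigma)), m, L, delta)
    errors[m] = (rect, gauss)
    print(f"m = {m:2d}:  rect {rect:.2e}   Gauss {gauss:.2e}")
```

In runs of this kind, one would expect the rectangular column to decrease only slowly, while the Gaussian column decreases roughly geometrically in m. The exact numbers depend on the grid and the parameters.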

In view of the slow convergence of the sequence \(\big (R_{{\mathrm {rect}},m} f(t)\big )_{m=1}^{\infty }\) it has been proposed to modify the rectangular regularized Shannon sampling sum (3.9) by multiplying the \(\mathrm {sinc}\) function with a more convenient window function \(\varphi \in \Phi _{m,L}\) (see [6, 13]). For any \(m \in {\mathbb {N}} \setminus \{1\}\) the regularized Shannon sampling formula with localized sampling is given by

with the truncated window function (3.5). Note that it holds the interpolation property

Especially for \(t \in \big (0,\,\frac{1}{L}\big )\), we obtain the regularized Shannon sampling formula

where

is the regularized \(\mathrm {sinc}\) function. For the reconstruction of f on any interval \(\big (\frac{k}{L},\,\frac{k+1}{L}\big )\) with \(k \in {\mathbb {Z}}\), we use

i.e., we reconstruct f by \(R_{\varphi ,m}f\) separately for each open interval \(\big (\frac{k}{L},\,\frac{k+1}{L}\big )\), \(k\in \mathbb Z\). Now we estimate the uniform approximation error

of the regularized Shannon sampling formula.

Theorem 3.2

Let \(f\in {{\mathcal {B}}}_{\delta }({\mathbb {R}})\) with \(\delta = \tau N\), \(\tau \in (0,\,1/2)\), \(N \in {\mathbb {N}}\), \(L= N(1+\lambda )\) with \(\lambda \ge 0\) and \(m \in {\mathbb N}\setminus \{1\}\). Further let \(\varphi \in \Phi _{m,L}\) with the truncated window function (3.5) be given.

Then the regularized Shannon sampling formula (3.15) with localized sampling satisfies

where the corresponding error constants are defined by

Proof

By (3.18) we split the approximation error

on each interval \(\big [\frac{k}{L},\,\frac{k+1}{L}\big ]\) with \(k \in {\mathbb {Z}}\) into the regularization error

where \(\psi \) denotes the regularized \(\mathrm {sinc}\) function (3.17), and the truncation error

Initially, we only consider the error on the interval \(\big [0,\,\frac{1}{L}\big ]\). We start with the regularization error (3.23). By Lemma 2.2, the Fourier transform of \(\psi \) reads as

Hence, using the shifting property of the Fourier transform, the Fourier transform of \(\psi \big (\cdot \, - \frac{\ell }{L}\big )\) reads as

Therefore, the Fourier transform of the regularization error \(e_1\) has the form

By the assumption \(f \in {{\mathcal {B}}}_{\delta }({\mathbb {R}})\) with \(\delta \in (0,\,N/2)\) and \(L\ge N\), it holds \( \mathrm {supp}\,{\hat{f}} \subseteq [- \delta ,\, \delta ] \subset [- L/2,\,L/2 ] \) and hence the restricted function \({\hat{f}}|_{[-L/2,\,L/2]}\) belongs to \(L^2([-L/2,\,L/2])\). Thus, this function possesses the L-periodic Fourier expansion

with the Fourier coefficients

In other words, \({{\hat{f}}}\) can be represented as

Introducing the auxiliary function

we see by inserting (3.26) into (3.25) that \({{\hat{e}}}_1(v) = {{\hat{f}}}(v)\, \eta (v)\) and thereby \(|{{\hat{e}}}_1(v)| \le |{{\hat{f}}}(v)|\,|\eta (v)|\). Thus, by inverse Fourier transform (2.4) we get

Using the Cauchy–Schwarz inequality and the Parseval identity, we see that

In summary, using the error constant (3.21), this yields \( \Vert e_1 \Vert _{C_0({\mathbb {R}})} \le E_1(m,\delta ,L)\,\Vert f\Vert _{L^2(\mathbb R)} . \)

Now we estimate the truncation error. By (3.24) it holds for \(t \in \big (0,\,\frac{1}{L}\big )\) that

Using (3.17) and the non-negativity of \(\varphi \), we obtain

For \(t \in \big (0,\, \frac{1}{L}\big )\) and \(\ell \in {{\mathbb {Z}}} \setminus {{\mathcal {J}}_m}\) we obtain

and hence

Then the Cauchy–Schwarz inequality implies that

By (3.8) it holds

Since \(\varphi |_{[0,\,\infty )}\) is monotonically non-increasing by the assumption \(\varphi \in \Phi _{m,L}\), we can estimate the series for \(t \in \big (0,\, \frac{1}{L}\big )\) by

Using the integral test for convergence of series, we obtain that

By (3.16) it holds \(e_{2,0}(0) = e_{2,0}\big (\frac{1}{L}\big ) = 0\). Hence, we obtain by (3.22) that

By the same technique, this error estimate can be shown for each interval \(\big [\frac{k}{L},\,\frac{k+1}{L}\big ]\) with \(k \in \mathbb Z\). This completes the proof. \(\square \)

Remark 3.3

Theorem 3.2 can be simplified if the window function \(\varphi \in \Phi _{m,L}\) is continuous on \({\mathbb {R}}\) and vanishes on \({\mathbb {R}} \setminus \big [- \frac{m}{L},\, \frac{m}{L}\big ]\). Then the truncation errors \(e_{2,k}(t)\) vanish for all \(t \in \big (0,\,\frac{1}{L}\big )\) and \(k \in {\mathbb {Z}}\), so that \(E_2(m,\delta ,L) = 0\). Thereby, we obtain the simple error estimate

We remark that this is the case for the \(\mathrm B\)-spline (3.3) as well as the \(\sinh \)-type window function (3.4), but not for the Gaussian window function (3.2) since \(\varphi _{\mathrm {Gauss}}\) does not vanish on \({\mathbb {R}} \setminus \big [- \frac{m}{L},\, \frac{m}{L}\big ]\). Also the rectangular window function (3.1) does not fit into this setting since \(\varphi _{\mathrm {rect}}\) is not continuous on \({\mathbb {R}}\).

If the samples \(f\big (\frac{\ell }{L}\big )\), \(\ell \in {\mathbb {Z}}\), of a bandlimited function \(f\in {{\mathcal {B}}}_{\delta }({\mathbb {R}})\) are not known exactly, the corresponding Shannon sampling series may differ appreciably from f (see [4]). This is the situation where only erroneous samples \({{\tilde{f}}}_{\ell } :=f\big (\frac{\ell }{L}\big ) + \varepsilon _{\ell }\), \(\ell \in {\mathbb {Z}}\), with \(|\varepsilon _{\ell }| \le \varepsilon \) for some \(\varepsilon >0\) are known. Here we denote the regularized Shannon sampling formula with erroneous samples \({{\tilde{f}}}_{\ell }\) by

In contrast to the classical Shannon sampling series, the regularized Shannon sampling formula is numerically robust, i.e., the uniform perturbation error

is small, as shown in the following.

Theorem 3.4

Let \(f\in {{\mathcal {B}}}_{\delta }(\mathbb R)\) with \(\delta = \tau N\), \(\tau \in (0,\,1/2)\), \(N \in {\mathbb {N}}\), \(L= N(1+\lambda )\) with \(\lambda \ge 0\) and \(m \in {\mathbb N}\setminus \{1\}\) be given. Further let \(\varphi \in \Phi _{m,L}\) with the truncated window function (3.5) as well as \({{\tilde{f}}}_{\ell } = f(\ell /L) + \varepsilon _{\ell }\), where \(|\varepsilon _{\ell }| \le \varepsilon \) for all \(\ell \in {\mathbb {Z}}\), with \(\varepsilon >0\) be given.

Then the regularized Shannon sampling sum (3.15) with localized sampling satisfies

Proof

On each open interval \(\big (\frac{k}{L},\,\frac{k+1}{L}\big )\) with \(k \in {\mathbb {Z}}\) we denote the error by (3.18) as

Initially, we consider the interval \(\big [0,\,\frac{1}{L}\big ]\). Using (3.17), the non-negativity of \(\varphi \) and \(|\varepsilon _{\ell }| \le \varepsilon \), we obtain

Since \(\varphi |_{[0,\,\infty )}\) is monotonically non-increasing by the assumption \(\varphi \in \Phi _{m,L}\), we can estimate the sum for \(t \in \big (0,\, \frac{1}{L}\big )\) by

Using the integral test for convergence of series, we obtain that

By the definition of the Fourier transform (2.1) it holds for \(\varphi \in \Phi _{m,L}\) that

and therefore

Additionally, by the interpolation property (3.16) it holds \(|{\tilde{e}}_0(0)| = |\varepsilon _0| \le \varepsilon \) as well as \(\left| {\tilde{e}}_0\big (\frac{1}{L}\big )\right| = |\varepsilon _1| \le \varepsilon \). By \(\varphi \in \Phi _{m,L}\) we have \(\varphi (0)=1\) and therefore we obtain that

By the same technique, this error estimate can be shown for each interval \(\big [\frac{k}{L},\,\frac{k+1}{L}\big ]\) with \(k \in \mathbb Z\). Then the triangle inequality yields (3.31), which completes the proof. \(\square \)

It now only remains to estimate the error constants \(E_j(m,\delta ,L)\), \(j=1,2,\) for a specific window function. We do this for selected window functions in the following sections.

4 Gaussian regularized Shannon sampling formula

Firstly, we consider the Gaussian window function (3.2) with some \(\sigma >0\) and show that in this case the uniform approximation error (3.19) for the regularized Shannon sampling formula (3.15) decays exponentially with respect to m.

Theorem 4.1

Let \(f\in {{\mathcal {B}}}_{\delta }(\mathbb R)\) with \(\delta = \tau N\), \(\tau \in (0,\,1/2)\), \(N \in {\mathbb {N}}\), \(L= N(1+\lambda )\) with \(\lambda \ge 0\), and \(m \in {\mathbb N}\setminus \{1\}\) be given.

Then the regularized Shannon sampling formula (3.15) with the Gaussian window function (3.2) and \(\sigma = \sqrt{\frac{m}{\pi L\,(L - 2\delta )}}\) satisfies the error estimate

Proof

(cf. [6, 13]) By Theorem 3.2 we have to compute the error constants \(E_j(m,\delta ,L)\), \(j=1,2,\) for the Gaussian window function (3.2). First we study the regularization error constant (3.21). The function (3.2) possesses the Fourier transform

Thus, we obtain by substitution \(w=\sqrt{2} \pi \sigma u\) that the auxiliary function (3.27) is given by

For \(v \in [- \delta ,\, \delta ]\), the function \(\eta \) can be evaluated as

By [1, p. 298, Formula 7.1.13], the following inequality holds for \(x \ge 0\)

which can be simplified to

Therefore, the auxiliary function \(\eta (v)\) can be estimated by

Since the function \(\frac{1}{x}\,{\mathrm e}^{- \sigma ^2 x^2/2}\) decreases for \(x>0\), and \(L/2 - v,\, L/2 + v \in [L/2 - \delta ,\, L/2 + \delta ]\) for \(v \in [- \delta ,\, \delta ]\) with \(0< \delta <L/2\), we conclude that

Hence, by (3.21) and (3.27) we obtain

Now we examine the truncation error constant (3.22). Here it holds

From (4.3) it follows

Thus, by (3.22) we obtain

For the special parameter \(\sigma = \sqrt{\frac{m}{\pi L\,(L - 2\delta )}}\), both error terms (4.4) and (4.5) have the same exponential decay such that

For \(\delta \in (0,N/2)\) and \(m\in {\mathbb {N}}\setminus \{1\}\) it additionally holds

This completes the proof. \(\square \)

We remark that Theorem 4.1 improves the corresponding results in [6, 13], since the decay rate is improved from \((m-1)\) to m.
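The choice of \(\sigma \) can be double-checked numerically. Take \(\varphi _{\mathrm {Gauss}}(x) = {\mathrm e}^{-x^2/(2\sigma ^2)}\), whose Fourier transform is \(\sqrt{2\pi }\,\sigma \,{\mathrm e}^{-2\pi ^2\sigma ^2 v^2}\). Then the truncation term is governed by \(\varphi _{\mathrm {Gauss}}(m/L) = {\mathrm e}^{-m^2/(2\sigma ^2L^2)}\), and the regularization term by the Gaussian factor \({\mathrm e}^{-2\pi ^2\sigma ^2 (L/2 - \delta )^2}\). The following Python sketch is our own check, not part of the proof. It confirms that with \(\sigma ^2 = m/(\pi L (L - 2\delta ))\) both factors coincide with \({\mathrm e}^{-\pi m (1 - 2\delta /L)/2}\), for the parameters \(N = 128\), \(\tau = 1/3\), \(\lambda = 1\) of Example 4.2.

```python
import math

N, tau, lam = 128, 1 / 3, 1
L, delta = N * (1 + lam), tau * N
rows = []
for m in range(2, 11):
    sigma2 = m / (math.pi * L * (L - 2 * delta))                      # sigma^2 as in Theorem 4.1
    trunc = math.exp(-(m**2) / (2 * sigma2 * L**2))                   # phi_Gauss(m / L)
    reg = math.exp(-2 * math.pi**2 * sigma2 * (L / 2 - delta) ** 2)   # Gaussian factor at v = L/2 - delta
    common = math.exp(-math.pi * m * (1 - 2 * delta / L) / 2)         # predicted common decay
    rows.append((m, trunc, reg, common))
    print(f"m = {m:2d}: {trunc:.3e}  {reg:.3e}  {common:.3e}")
```

The algebra behind the check is \(\frac{m^2}{2\sigma ^2L^2} = 2\pi ^2\sigma ^2\big (\frac{L}{2} - \delta \big )^2 = \frac{\pi m (L - 2\delta )}{2L}\) for this \(\sigma \).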

Example 4.2

We aim to visualize the error bound from Theorem 4.1. For a given function \(f\in {{\mathcal {B}}}_{\delta }({\mathbb {R}})\) with \(\delta =\tau N \in (0,\,N/2)\) and \(L= N(1+\lambda )\), where \(0<\tau <\frac{1}{2}\) and \(\lambda \ge 0\), we consider the approximation error

For \(\varphi = \varphi _{\mathrm {Gauss}}\), (4.1) yields \(e_{m,\tau ,\lambda }(f) \le E_{m,\tau ,\lambda }\,\Vert f\Vert _{L^2({\mathbb {R}})}\), where

with \(\sigma = \sqrt{\frac{m}{\pi L\,(L - 2\delta )}}\). We approximate the error (4.6) by evaluating a given function f and its approximation \(R_{\varphi ,m} f\) at \(S=10^5\) equidistant points \(t_s\in [-1,\,1]\), \(s=1,\dots ,S\). By the definition of the regularized Shannon sampling formula in (3.15), it can be seen that for \(t \in [-1, 1]\) we have

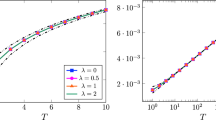

Here we study the function \(f(t) = \sqrt{2 \delta } \,\mathrm {sinc}(2 \delta \pi t)\), \(t \in {\mathbb {R}}\), for which \(\Vert f\Vert _{L^2({\mathbb {R}})}=1\). We fix \(N=128\) and consider the behavior for different values \(m \in {\mathbb {N}} \setminus \{1\}\), so that we are still free to choose the parameters \(\tau \) and \(\lambda \). In a first experiment we fix \(\lambda =1\) and choose different values \(\tau <\frac{1}{2}\), namely \(\tau \in \{\nicefrac {1}{20},\,\nicefrac {1}{10},\,\nicefrac {1}{4},\,\nicefrac {1}{3},\,\nicefrac {9}{20}\}\). The corresponding results are depicted in Fig. 1a. We observe that the smaller \(\tau \) is chosen, the smaller the error. As a second experiment we fix \(\tau =\frac{1}{3}\) and choose different \(\lambda \in \{0,0.5,1,2\}\). The associated results are displayed in Fig. 1b. It can clearly be seen that the larger the oversampling parameter \(\lambda \) is chosen, the smaller the error. We remark that for larger choices of N, the line plots in Fig. 1 would only be shifted slightly upwards, so that for all N we obtain almost the same error results.

Fig. 1 Maximum approximation error (4.6) and error constant (4.7) using \(\varphi _{\mathrm {Gauss}}\) in (3.2) and \(\sigma = \sqrt{\frac{m}{\pi L\,(L - 2\delta )}}\) for the function \(f(x) = \sqrt{2\delta } \,\mathrm {sinc}(2 \delta \pi x)\) with \(N=128\), \(m\in \{2, 3, \ldots , 10\}\) and \(\delta = \tau N\), where (a) \(\lambda = 1\) and \(\tau \in \{\nicefrac {1}{20},\,\nicefrac {1}{10},\,\nicefrac {1}{4},\,\nicefrac {1}{3},\,\nicefrac {9}{20}\}\), (b) \(\tau = \frac{1}{3}\) and \(\lambda \in \{0,0.5,1,2\}\)
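The qualitative trend in Fig. 1b can be reproduced on a much smaller scale. The sketch below is a hypothetical reduced version of the experiment. It uses \(N = 16\) instead of 128, 2001 grid points instead of \(S = 10^5\), \(m = 6\), \(\tau = 1/3\), and only the integer oversampling factors \(\lambda \in \{0, 1, 2\}\), so that \(L = N(1+\lambda )\) is an integer. With \(\sigma \) as in Theorem 4.1, the exponential factor behaves like \({\mathrm e}^{-\pi m (1 - 2\delta /L)/2}\), which decreases as L grows.

```python
import math


def sinc(x):
    return 1.0 if x == 0.0 else math.sin(x) / x


def gauss_error(N, tau, lam, m, num=2001):
    """Max error of the Gaussian regularized formula for f = sqrt(2 delta) sinc(2 delta pi t) on [-1, 1]."""
    L, delta = N * (1 + lam), tau * N
    sigma = math.sqrt(m / (math.pi * L * (L - 2 * delta)))   # sigma as in Theorem 4.1

    def f(t):
        return math.sqrt(2 * delta) * sinc(2 * delta * math.pi * t)

    worst = 0.0
    for s in range(num):
        t = -1 + 2 * s / (num - 1)
        approx = sum(
            f(l / L) * sinc(math.pi * (L * t - l)) * math.exp(-((t - l / L) ** 2) / (2 * sigma**2))
            for l in range(math.ceil(L * t - m), math.floor(L * t + m) + 1)
        )
        worst = max(worst, abs(f(t) - approx))
    return worst


results = {lam: gauss_error(16, 1 / 3, lam, 6) for lam in (0, 1, 2)}
for lam, err in results.items():
    print(f"lambda = {lam}: max error {err:.2e}")
```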

Now we show that for the regularized Shannon sampling formula with the Gaussian window function (3.2), the uniform perturbation error (3.29) grows only as \(m^{1/2}\). We remark that a similar result can also be found in [15].

Theorem 4.3

Let \(f\in {\mathcal B}_{\delta }({\mathbb {R}})\) with \(\delta = \tau N\), \(\tau \in (0,\,1/2)\), \(N \in {\mathbb {N}}\), \(L= N(1+\lambda )\) with \(\lambda \ge 0\), and \(m \in {{\mathbb {N}}}\setminus \{1\}\) be given. Further let \(R_{\mathrm {Gauss},m}{{\tilde{f}}}\) be as in (3.28) with the noisy samples \({{\tilde{f}}}_{\ell } = f\big (\frac{\ell }{L}\big ) + \varepsilon _{\ell }\), where \(|\varepsilon _{\ell }| \le \varepsilon \) for all \(\ell \in {\mathbb {Z}}\) and \(\varepsilon >0\).

Then the regularized Shannon sampling formula (3.15) with the Gaussian window function (3.2) and \(\sigma = \sqrt{\frac{m}{\pi L\,(L - 2\delta )}}\) satisfies

Proof

By Theorem 3.4 we only have to compute \(\hat{\varphi }_{\mathrm {Gauss}}(0)\) for the Gaussian window function (3.2). By (4.2) we recognize that

such that (3.30) yields the assertion. \(\square \)
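For the Gaussian window \(\varphi _{\mathrm {Gauss}}(x) = {\mathrm e}^{-x^2/(2\sigma ^2)}\), the value needed from Theorem 3.4 is \({\hat{\varphi }}_{\mathrm {Gauss}}(0) = \int _{{\mathbb {R}}} {\mathrm e}^{-x^2/(2\sigma ^2)}\,{\mathrm d}x = \sqrt{2\pi }\,\sigma \). With \(\sigma \) as in Theorem 4.3, this gives \(L\,{\hat{\varphi }}_{\mathrm {Gauss}}(0) = \sqrt{2mL/(L - 2\delta )} = \sqrt{2m(1+\lambda )/(1+\lambda -2\tau )}\). This makes the \(m^{1/2}\) growth visible, assuming the perturbation bound depends on the window through the product \(L\,{\hat{\varphi }}(0)\), as the integral-test step in the proof of Theorem 3.4 suggests. A quick numerical confirmation in Python (our own illustration, with \(N = 128\), \(\tau = 1/3\), \(\lambda = 1\)):

```python
import math

N, tau, lam = 128, 1 / 3, 1
L, delta = N * (1 + lam), tau * N
rows = []
for m in (2, 4, 8, 16, 32):
    sigma = math.sqrt(m / (math.pi * L * (L - 2 * delta)))
    l_phi_hat0 = L * math.sqrt(2 * math.pi) * sigma                 # L * hat(phi)_Gauss(0)
    closed = math.sqrt(2 * m * (1 + lam) / (1 + lam - 2 * tau))    # closed form stated above
    rows.append((m, l_phi_hat0, closed))
    print(f"m = {m:2d}: {l_phi_hat0:.4f}  {closed:.4f}  ratio to sqrt(m): {l_phi_hat0 / math.sqrt(m):.4f}")
```

For these parameters the ratio to \(\sqrt{m}\) is the constant \(\sqrt{2(1+\lambda )/(1+\lambda -2\tau )} = \sqrt{3}\).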

Example 4.4

Now we visualize the error bound from Theorem 4.3. Similar to Example 4.2, we consider the perturbation error

For \(\varphi = \varphi _{\mathrm {Gauss}}\), (4.8) yields \(\tilde{e}_{m,\tau ,\lambda }(f) \le {\tilde{E}}_{m,\tau ,\lambda }\), where

We conduct the same experiments as in Example 4.2, with a maximum perturbation of \(\varepsilon =10^{-3}\) and uniformly distributed random numbers \(\varepsilon _{\ell }\) in \((-\varepsilon ,\varepsilon )\). Because of the randomness, we perform each experiment one hundred times and take the maximum error over all runs. The associated results are displayed in Fig. 2.

Fig. 2 Maximum perturbation error (4.9) over 100 runs and error constant (4.10) using \(\varphi _{\mathrm {Gauss}}\) in (3.2) and \(\sigma = \sqrt{\frac{m}{\pi L\,(L - 2\delta )}}\) for the function \(f(x) = \sqrt{2\delta } \,\mathrm {sinc}(2 \delta \pi x)\) with \(\varepsilon =10^{-3}\), \(N=128\), \(m\in \{2, 3, \ldots , 10\}\) and \(\delta = \tau N\), where (a) \(\lambda = 1\) and \(\tau \in \{\nicefrac {1}{20},\,\nicefrac {1}{10},\,\nicefrac {1}{4},\,\nicefrac {1}{3},\,\nicefrac {9}{20}\}\), (b) \(\tau = \frac{1}{3}\) and \(\lambda \in \{0,0.5,1,2\}\)
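A reduced version of this experiment can be sketched in Python. The following choices are ours: \(N = 16\), \(\tau = 1/3\), \(\lambda = 1\), \(m = 8\), \(\varepsilon = 10^{-3}\), a grid of 2001 points, and a single run using `random.Random.uniform` with a fixed, arbitrary seed. The sketch checks the observed perturbation against an elementary bound. Because \(|\mathrm {sinc}| \le 1\) and \(\varphi \ge 0\), the perturbation at t is at most \(\varepsilon \sum _\ell \varphi _m(t - \ell /L)\). The monotonicity and integral-test argument in the proof of Theorem 3.4 bounds this sum by \(2 + L\,{\hat{\varphi }}(0)\), with \({\hat{\varphi }}_{\mathrm {Gauss}}(0) = \sqrt{2\pi }\,\sigma \).

```python
import math
import random


def sinc(x):
    return 1.0 if x == 0.0 else math.sin(x) / x


N, tau, lam, m, eps = 16, 1 / 3, 1, 8, 1e-3
L, delta = N * (1 + lam), tau * N
sigma = math.sqrt(m / (math.pi * L * (L - 2 * delta)))   # sigma as in Theorem 4.3


def phi(x):
    """Gaussian window exp(-x^2 / (2 sigma^2))."""
    return math.exp(-x * x / (2 * sigma * sigma))


rng = random.Random(12345)   # fixed, arbitrary seed
noise = {}                   # eps_l, drawn once per index l when first needed


def eps_l(l):
    if l not in noise:
        noise[l] = rng.uniform(-eps, eps)
    return noise[l]


worst = 0.0
for s in range(2001):
    t = -1 + 2 * s / 2000
    # By linearity, (R f~)(t) - (R f)(t) = sum_l eps_l sinc(pi (L t - l)) phi_m(t - l/L).
    diff = sum(
        eps_l(l) * sinc(math.pi * (L * t - l)) * phi(t - l / L)
        for l in range(math.ceil(L * t - m), math.floor(L * t + m) + 1)
    )
    worst = max(worst, abs(diff))

bound = eps * (2 + L * math.sqrt(2 * math.pi) * sigma)   # eps (2 + L hat(phi)(0))
print(f"observed perturbation {worst:.2e}, elementary bound {bound:.2e}")
```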

5 B-spline regularized Shannon sampling formula

Now we consider the modified \(\mathrm B\)-spline window function (3.3) with \(s,\,m \in {\mathbb {N}} \setminus \{1\}\) and \(L= N\,(1 + \lambda )\), \(\lambda \ge 0\), where \(M_{2s}\) denotes the centered cardinal \(\mathrm B\)-spline of even order 2s. Note that (3.3) is supported on \(\big [-\frac{m}{L},\, \frac{m}{L}\big ]\).

Lemma 5.1

For the value \(M_{2s}(0)\), \(s\in {\mathbb {N}}\), the following formula holds

The sequence \(\big (\sqrt{2s}\,M_{2s}(0)\big )_{s=1}^{\infty }\) has the limit

Proof

By inverse Fourier transform of \({{\hat{\varphi }}}_{\mathrm B}\) it holds

Hence, for \(x = 0\) it follows that

The above integral can be determined in explicit form (see [7, 9, p. 20, 5.12] or [2, (4.1.12)]) as

such that (5.1) is shown. In particular, \(M_2(0) = 1\), \(M_4(0) = \frac{2}{3}\), \(M_6(0) = \frac{11}{20}\), \(M_8(0) = \frac{151}{315}\), \(M_{10}(0) = \frac{15619}{36288}\), and \(M_{12}(0) = \frac{655177}{1663200}\). A table with the decimal values of \(M_{2s}(0)\) for \(s =15,\,\ldots ,\,50\) can be found in [7]. For example, \(M_{100}(0) \approx 0.137990\).

By [20, (3.6)], there exists the pointwise limit

such that for \(x = 0\) we obtain (5.2). \(\square \)
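The closed-form values above are easy to reproduce in exact rational arithmetic. As an assumption, we use the standard truncated-power representation of the centered cardinal B-spline, \(M_n(x) = \frac{1}{(n-1)!}\sum _{k=0}^{n}(-1)^k\binom{n}{k}\big (x + \frac{n}{2} - k\big )_+^{n-1}\), at \(x = 0\) and \(n = 2s\), instead of the integral evaluated in the proof; the function name is ours. The sketch also prints the scaled values \(\sqrt{2s}\,M_{2s}(0)\) for \(s = 2,\,\ldots ,\,50\) discussed in Remark 5.2. As a hypothetical reference for the limit (5.2) we use \(\sqrt{6/\pi } \approx 1.382\), which is what the central limit theorem suggests when \(M_{2s}\) is approximated by a Gaussian of variance \(s/6\).

```python
from fractions import Fraction
from math import comb, factorial, pi, sqrt


def bspline_center_value(s: int) -> Fraction:
    """Exact value M_{2s}(0) of the centered cardinal B-spline of order 2s.

    Truncated-power representation at x = 0 with n = 2s:
        M_n(0) = 1/(n-1)! * sum_{k=0}^{s-1} (-1)^k C(n, k) (s - k)^(n-1),
    since (n/2 - k)_+ = s - k vanishes for k >= s.
    """
    n = 2 * s
    total = sum((-1) ** k * comb(n, k) * (s - k) ** (n - 1) for k in range(s))
    return Fraction(total, factorial(n - 1))


# exact values M_2(0), ..., M_12(0)
exact = [bspline_center_value(s) for s in range(1, 7)]
for s, value in enumerate(exact, start=1):
    print(f"M_{2 * s}(0) = {value}")
print(f"M_100(0) ~ {float(bspline_center_value(50)):.6f}")

# scaled sequence sqrt(2s) * M_{2s}(0), s = 2, ..., 50
scaled = [sqrt(2 * s) * float(bspline_center_value(s)) for s in range(2, 51)]
reference_limit = sqrt(6 / pi)  # Gaussian (CLT) heuristic for the limit
print("increasing for s = 2..50:", all(a < b for a, b in zip(scaled, scaled[1:])))
print(f"last value {scaled[-1]:.6f} vs. sqrt(6/pi) = {reference_limit:.6f}")
```

Python integers are unbounded and `Fraction` reduces automatically, so the alternating sum suffers no cancellation error even for \(s = 50\).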

Remark 5.2

Numerical computations show that the sequence \(\big (\sqrt{2s}\,M_{2s}(0)\big )_{s=2}^{50}\) increases monotonically, see Fig. 3. For large s we can use the asymptotic expansion

(see [7]) such that the whole sequence \(\big (\sqrt{2s}\,M_{2s}(0)\big )_{s=2}^{\infty }\) increases monotonically. Hence, for \(s \in {{\mathbb {N}}}\setminus \{1\}\) it holds

Now we show that for the regularized Shannon sampling formula (3.15) with \(\mathrm B\)-spline window function (3.3) the uniform approximation error (3.19) decays exponentially with respect to m.

Theorem 5.3

Let \(f\in {{\mathcal {B}}}_{\delta }(\mathbb R)\) with \(\delta = \tau N\), \(\tau \in (0,\,1/2)\), \(N\in {\mathbb {N}}\), \(L = N\,(1 + \lambda )\), \(\lambda \ge 0\), and \(m \in {\mathbb {N}} \setminus \{1\}\) be given. Assume that

Then the regularized Shannon sampling formula (3.15) with the \(\mathrm B\)-spline window function (3.3) and \(s = \left\lceil \frac{m+1}{2} \right\rceil \) satisfies the error estimate

Proof

By Theorem 3.2 we only have to estimate the regularization error constant (3.21), since it holds \(\varphi _{\mathrm B}(x) = \varphi _{\mathrm B}(x)\,{\mathbf{1}}_{[-m/L,\,m/L]}(x)\) for all \(x \in {\mathbb {R}}\) and therefore the truncation error constant (3.22) vanishes for the \(\mathrm B\)-spline window function (3.3) by Remark 3.3.

such that the auxiliary function (3.27) is given by

By inverse Fourier transform (2.4) we have

Then, for \(v \in [- \delta , \, \delta ]\), the function \(\eta \) can be determined by (5.7) in the following form

Applying the simple estimates

the function \(\eta \) can be estimated for \(v \in [- \delta , \delta ]\) by

By \(v \in [- \delta ,\,\delta ]\) with \(0< \delta < N/2 \le L/2\), it holds \(L/2 - v\), \(L/2 + v \in [L/2 - \delta ,\,L/2 + \delta ]\). Since the function \(x^{1-2s}\) decreases for \(x > 0\), we conclude that

Hence, by (3.21), (3.27) and (5.3) we obtain

To guarantee convergence of this result, we have to satisfy

By means of logarithmic laws we recognize that \(c^{\,2s-1} = \mathrm e^{\ln (c^{2s-1})} = \mathrm e^{(2s-1)\,\ln c}\). Thus, the condition \(c < 1\) yields \(\ln c<0\) and therefore an exponential decay of (5.8) with respect to \((2s-1)\). On the one hand, we need \(\ln c\) to be as small as possible, which is equivalent to choosing s as small as possible. On the other hand, we aim at a decay rate of at least m, i.e., we need to fulfill \(2s-1\ge m\). These two conditions determine the optimal parameter \(s\in {\mathbb {N}}\) as \(s = \left\lceil \frac{m+1}{2} \right\rceil \). Then (5.9) holds, if (5.4) is fulfilled, and

yields the assertion. We remark that it holds \(\pi m\,(1 + \lambda - 2 \tau ) > 2s(1 + \lambda )\) since \(c<1\). \(\square \)

Example 5.4

Analogous to Example 4.2, we now visualize the error bound from Theorem 5.3, i.e., for \(\varphi = \varphi _{\mathrm {B}}\) we show that for the approximation error (4.6) it holds by (5.5) that \(e_{m,\tau ,\lambda }(f) \le E_{m,\tau ,\lambda }\,\Vert f\Vert _{L^2({\mathbb {R}})}\), where

with \(s = \left\lceil \frac{m+1}{2} \right\rceil \). Additionally, we now have to observe the condition (5.4). For the first experiment in Example 4.2 with \(\lambda =1\) this leads to \(\tau <1-\frac{2}{\pi }\approx 0.3634\), while in the second experiment we fixed \(\tau = \frac{1}{3}\) and therefore have to satisfy \(\lambda >\frac{2\pi }{3\pi -6}-1\approx 0.8346\). Thus, the requirements of Theorem 5.3 are fulfilled only in these settings, and therefore only the corresponding error bounds are plotted in Fig. 4, while the approximation error (4.6) is computed for all parameter constellations given in Example 4.2. We observe almost the same behavior as in Fig. 1, which means that the \(\mathrm {B}\)-spline window function yields hardly any improvement over the well-studied Gaussian window function.

Maximum approximation error (4.6) and error constant (5.10) using \(\varphi _{\mathrm {B}}\) in (3.3) and \(s = \left\lceil \frac{m+1}{2} \right\rceil \) for the function \(f(x) = \sqrt{2\delta } \,\mathrm {sinc}(2 \delta \pi x)\) with \(N=128\), \(m\in \{2, 3, \ldots , 10\}\), as well as \(\tau \in \{\nicefrac {1}{20},\,\nicefrac {1}{10},\,\nicefrac {1}{4},\,\nicefrac {1}{3},\,\nicefrac {9}{20}\}\), \(\delta = \tau N\), and \(\lambda \in \{0,0.5,1,2\}\), respectively
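The choice of s and the parameter restrictions quoted in Example 5.4 can be reproduced with a few lines of Python. We assume here that condition (5.4) amounts to \(c < 1\) in the form \(2s\,(1+\lambda ) < \pi m\,(1 + \lambda - 2\tau )\), i.e. the inequality noted at the end of the proof of Theorem 5.3; the helper names are hypothetical. For \(m \in \{2,\,\ldots ,\,10\}\) the binding case is \(m = s = 2\), which yields exactly the two thresholds \(1 - \frac{2}{\pi }\) and \(\frac{2\pi }{3\pi -6} - 1\).

```python
from math import pi


def optimal_s(m: int) -> int:
    """Smallest integer s with 2s - 1 >= m, i.e. s = ceil((m + 1) / 2)."""
    return (m + 2) // 2


def condition_holds(m: int, tau: float, lam: float) -> bool:
    """Assumed form of (5.4): 2 s (1 + lam) < pi m (1 + lam - 2 tau)."""
    s = optimal_s(m)
    return 2 * s * (1 + lam) < pi * m * (1 + lam - 2 * tau)


def holds_for_all_m(tau: float, lam: float, ms=range(2, 11)) -> bool:
    """Check the condition for every truncation parameter m used in Example 4.2."""
    return all(condition_holds(m, tau, lam) for m in ms)


for m in range(2, 11):
    s = optimal_s(m)
    print(f"m = {m:2d}: s = {s}, decay exponent 2s - 1 = {2 * s - 1}")

tau_max = 1 - 2 / pi                   # lam = 1: admissible tau < tau_max
lam_min = 2 * pi / (3 * pi - 6) - 1    # tau = 1/3: admissible lam > lam_min
print(f"lam = 1:   tau < {tau_max:.4f}")
print(f"tau = 1/3: lam > {lam_min:.4f}")
```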

Now we show that for the regularized Shannon sampling formula with the \(\mathrm B\)-spline window function (3.3) the uniform perturbation error (3.30) only grows as \(\mathcal O(\sqrt{m})\).

Theorem 5.5

Let \(f\in {{\mathcal {B}}}_{\delta }(\mathbb R)\) with \(\delta = \tau N\), \(\tau \in (0,\,1/2)\), \(N \in {\mathbb {N}}\), \(L= N(1+\lambda )\) with \(\lambda \ge 0\) and \(m \in {\mathbb N}\setminus \{1\}\) be given. Let \(s \in {\mathbb {N}}\) be defined by \(s = \left\lceil \frac{m+1}{2} \right\rceil \). Further let \(R_{\mathrm {B},m}{{\tilde{f}}}\) be as in (3.28) with the noisy samples \({{\tilde{f}}}_{\ell } = f\big (\frac{\ell }{L}\big ) + \varepsilon _{\ell }\), where \(|\varepsilon _{\ell }| \le \varepsilon \) for all \(\ell \in {\mathbb {Z}}\) and \(\varepsilon >0\).

Then the regularized Shannon sampling formula (3.15) with the \(\mathrm B\)-spline window function (3.3) and \(s = \left\lceil \frac{m+1}{2} \right\rceil \) satisfies

Proof

By Theorem 3.4 we only have to compute \(\hat{\varphi }_{\mathrm B}(0)\) for the \(\mathrm B\)-spline window function (3.3). By (5.6) and (5.3) we recognize that

Due to \(s = \left\lceil \frac{m+1}{2} \right\rceil \) it holds \(\sqrt{s} \ge \frac{\sqrt{m}}{\sqrt{2}}\) such that (3.30) yields the assertion (5.11). \(\square \)
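One way to make the \(\sqrt{m}\) growth explicit is the following chain, assuming that (3.3) reads \(\varphi _{\mathrm B}(x) = M_{2s}(Lsx/m)/M_{2s}(0)\) (consistent with the support \([-m/L,\,m/L]\)), using \(\int _{\mathbb R} M_{2s}(u)\,\mathrm du = 1\), and using the lower bound \(\sqrt{2s}\,M_{2s}(0) \ge 2\,M_4(0) = \frac{4}{3}\) for \(s \ge 2\), which follows from the monotonicity in Remark 5.2:

```latex
L\,\hat{\varphi}_{\mathrm B}(0)
 = \frac{L}{M_{2s}(0)} \int_{\mathbb R} M_{2s}\Big(\frac{Lsx}{m}\Big)\,\mathrm dx
 = \frac{m}{s\,M_{2s}(0)}
 = \frac{\sqrt{2}\, m}{\sqrt{s}\,\big(\sqrt{2s}\,M_{2s}(0)\big)}
 \le \frac{3\,m}{2\sqrt{2}\,\sqrt{s}}
 \le \frac{3}{2}\,\sqrt{m} ,
```

where the last step uses \(\sqrt{s} \ge \sqrt{m}/\sqrt{2}\).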

Similar to Example 4.4, one can visualize the error bound from Theorem 5.5, which leads to results analogous to Fig. 2.

6 sinh-type regularized Shannon sampling formula

We consider the \(\sinh \)-type window function (3.4) with the parameter \(\beta :=\frac{s\pi \,(1 + 2 \lambda )}{1 + \lambda }\) for \(s>0\), \(m \in {\mathbb {N}} \setminus \{1\}\), and \(L= N\,(1 + \lambda )\), \(\lambda \ge 0\), and show that in this case the uniform approximation error (3.19) for the regularized Shannon sampling formula (3.15) decays exponentially with respect to m.

Theorem 6.1

Let \(f\in {{\mathcal {B}}}_{\delta }({\mathbb {R}})\) with \(\delta = \tau N\), \(\tau \in (0,\,1/2)\), \(N\in {\mathbb {N}}\), \(L = N\,(1 + \lambda )\), \(\lambda \ge 0\), and \(m \in {\mathbb {N}} \setminus \{1\}\) be given.

Then the regularized Shannon sampling formula (3.15) with the \(\sinh \)-type window function (3.4) and \(\beta = \frac{\pi m\,(1 + \lambda + 2 \tau )}{1 + \lambda }\) satisfies the error estimate

where \(w_0 = \frac{1 + \lambda - 2\tau }{1 + \lambda + 2\tau } \in (0,\,1)\). Further, the regularized Shannon sampling formula (3.15) with the \(\sinh \)-type window function (3.4) and \(\beta = \frac{\pi m\,(1 + \lambda - 2 \tau )}{1 + \lambda }\) fulfills

Proof

By Theorem 3.2 we only have to estimate the regularization error constant (3.21), since it holds \(\varphi _{\sinh }(x) = \varphi _{\sinh }(x)\,{\mathbf{1}}_{[-m/L,\,m/L]}(x)\) for all \(x \in {\mathbb {R}}\) and therefore the truncation error constant (3.22) vanishes for the \(\sinh \)-type window function (3.4) by Remark 3.3.

By [9, p. 38, 7.58] or [12] it holds

where \(w :=2\pi m v/L\) denotes a scaled frequency. Thus, the auxiliary function (3.27) is given by

Substituting \(u = \frac{sL\,(1+ 2\lambda )}{m(2 + 2\lambda )}\,w\), we obtain for \(v \in [- \delta , \, \delta ]\) that

with

Since the integrand of (6.2) behaves differently for \(w \in [-1, 1]\) and \(w \in {\mathbb {R}} \setminus (-1, 1)\) we have to distinguish between the cases \(w_1(v)\le 1\) and \(w_1(v)\ge 1\) for all \(v \in [-\delta ,\, \delta ]\). By definition, \(w_1(v)\) is linear and monotonically increasing. Thus, we have \(\min \{w_1(v):\,v\in [-\delta ,\,\delta ]\} = w_1(-\delta )\) and \(\max \{w_1(v):\,v\in [-\delta ,\,\delta ]\} = w_1(\delta )\). This fact can now be used to choose an optimal parameter \(s = s(m,\tau ,\lambda ) > 0\) such that either \(w_1(\delta )\le 1\) or \(w_1(-\delta )\ge 1\) is fulfilled.
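The case distinction can be made concrete with a hypothetical stand-in for (6.3). We assume \(w_1(v) = \frac{\pi m\,(L + 2v)}{L\beta }\), which is affine and increasing in v; we infer this form only because it reproduces both parameter choices of \(\beta \) in Theorem 6.1 and the value of \(w_0\) stated there, so the sketch is a consistency check under that assumption rather than a statement of (6.3). The parameter values are illustrative.

```python
from math import pi


def w1(v: float, beta: float, m: int, L: float) -> float:
    """Hypothetical stand-in for (6.3): w_1(v) = pi m (L + 2 v) / (L beta)."""
    return pi * m * (L + 2 * v) / (L * beta)


N, m, tau, lam = 128, 6, 1 / 3, 1.0   # illustrative parameters
L, delta = N * (1 + lam), tau * N

# w_1 is increasing in v, so its extreme values on [-delta, delta] sit at the ends.
# Case 1: enforce w_1(delta) = 1;  Case 2: enforce w_1(-delta) = 1.
beta_case1 = pi * m * (L + 2 * delta) / L
beta_case2 = pi * m * (L - 2 * delta) / L
w0 = w1(-delta, beta_case1, m, L)     # minimum of w_1 in Case 1

print(f"Case 1: beta = {beta_case1:.6f}, w_0 = {w0:.6f}")
print(f"Case 2: beta = {beta_case2:.6f}")
print(f"Theorem 6.1: {pi * m * (1 + lam + 2 * tau) / (1 + lam):.6f}, "
      f"{pi * m * (1 + lam - 2 * tau) / (1 + lam):.6f}, "
      f"{(1 + lam - 2 * tau) / (1 + lam + 2 * tau):.6f}")
```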

Case 1 (\(\varvec{w_1(\delta )\le 1}\)): Note that by [5, 6.681–3] and [1, 10.2.13] as well as \(J_1({\mathrm i}\,z) = {\mathrm i}\,I_1(z)\) for \(z \in {\mathbb {C}}\) it holds

Then from (6.2) and (6.4) it follows that

with

By \(2\,\big (\sinh \frac{\beta }{2}\big )^2 < \sinh \beta \) we have

Since the integrand of (6.5) is positive, it is easy to find an upper bound of \(\eta _1(v)\) for all \(v \in [-\delta ,\,\delta ]\), because by (6.4) it holds

Further, for arbitrary \(v \in [- \delta , \, \delta ]\) we obtain

since the integrand is positive and \(w_0 :=w_1(-\delta ) = \min \{w_1(v):\,v\in [-\delta ,\,\delta ]\}\). Substituting \(w = \sin t\) in (6.7), for all \(v \in [-\delta ,\,\delta ]\) we can estimate

with \(\mathrm {arcsin}\,w_0 \in \big (0,\, \frac{\pi }{2}\big )\). The above integral can now be approximated by the rectangular rule (see Fig. 5) such that

Further it holds \(4\, (\sinh \frac{\beta }{2})^2 = {\mathrm e}^{\beta } - 2 + {\mathrm e}^{-\beta } > {\mathrm e}^{\beta } - 2\). Since by [11, Lemma 7] we have \(\sqrt{2 \pi x}\,{\mathrm e}^{-x}\,I_1(x) < 1\), it holds

and therefore we obtain

Additionally using (3.21) and (3.27) as well as \(\mathrm {arcsin}\,w_0 \in \big (0,\, \frac{\pi }{2}\big )\) this yields

What remains is the choice of the optimal parameter \(s > 0\), where we have to fulfill \(w_1(\delta ) \le 1\). To obtain the smallest possible error bound we are looking for an \(s>0\) that minimizes the error term \(\max _{v\in [-\delta ,\,\delta ]} |\eta (v)|\). By (6.5) and (6.6) we maximize the second integral in (6.5). Since the integrand of (6.5) is positive, the integration limit \(w_1(v)\) should be as large as possible for all \(v \in [-\delta ,\, \delta ]\) and therefore \(w_1(\delta ) = 1\). Rearranging this by (6.3) in terms of s we see immediately that

and hence

such that \(\beta \) depends linearly on m by definition.

Case 2 (\(\varvec{w_1(-\delta )\ge 1}\)): From (6.2) it follows that

with

By (6.4) we obtain

Further it holds

Substituting \(w=\cosh t\) in above integrals, we have

In order to estimate these integrals properly, we now have a closer look at the integrand. As is well known, the Bessel function \(J_1\) oscillates on \([0,\,\infty )\) and has the non-negative simple zeros \(j_{1,n}\), \(n \in {{\mathbb {N}}}_0\), with \(j_{1,0} = 0\). The zeros \(j_{1,n}\), \(n = 1,\,\ldots ,\,40\), are tabulated in [21, p. 748]. On each interval \(\big [\mathrm {arsinh}\,\frac{j_{1,2n}}{\beta },\,\mathrm {arsinh}\,\frac{j_{1,2n+2}}{\beta }\big ]\), \(n \in {{\mathbb {N}}}_0\), the integrand \(J_1(\beta \,\sinh t)\) is first non-negative and then non-positive, see Fig. 6. Due to these properties and the fact that the amplitude of \(J_1\) decreases as \(x\rightarrow \infty \), the integrals over each interval \(\big [\mathrm {arsinh}\,\frac{j_{1,2n}}{\beta },\,\mathrm {arsinh}\,\frac{j_{1,2n+2}}{\beta }\big ]\), \(n \in {{\mathbb {N}}}_0\), are positive. Note that by [5, 6.645–1] it holds

where \(K_{\alpha }\) denotes the modified Bessel function of the second kind and \(I_{1/2}\), \(K_{1/2}\) denote modified Bessel functions of half order (see [1, 10.2.13, 10.2.14, and 10.2.17]). In addition, numerical experiments have shown that for all \(T\ge 0\) it holds

Therefore, we obtain

and hence by (6.9) it holds

Thus, by (3.21) and (3.27) we conclude that

What remains is the choice of the optimal parameter \(s > 0\), where we have to fulfill \(w_1(-\delta ) = c\) with \(c\ge 1\). Rearranging this by (6.3) in terms of s we see that

To obtain the smallest possible error bound we are looking for a constant \(c\ge 1\) that minimizes the error term \(\max _{v\in [-\delta ,\,\delta ]} |\eta (v)|\). By (6.10) we minimize the upper bound \(3\,{\mathrm e}^{-\beta (c)}\). Since \(3\,{\mathrm e}^{-\beta (c)}\) is monotonically increasing for \(c\ge 1\), the minimum value is attained for \(c=1\). Hence, the suggested parameters are

such that \(\beta \) depends linearly on m by definition. This completes the proof. \(\square \)

Now we compare the actual decay rates of the error constants (6.8) with \(\beta = \frac{\pi m\,(1 + \lambda +2 \tau )}{1 + \lambda }\) and (6.11) with \(\beta = \frac{\pi m\,(1 + \lambda - 2 \tau )}{1 + \lambda }\). It can be seen that the decay rate of (6.8) reads as

with \(w_0 = \frac{1 + \lambda - 2\tau }{1 + \lambda + 2\tau }\). On the other hand, the decay rate of (6.11) is given by \(\frac{\pi m\,(1 + \lambda - 2 \tau )}{1 + \lambda }\). Since \(1 + \lambda > 2\tau \) for all \(\lambda \ge 0\) and \(\tau \in \big (0,\,\frac{1}{2}\big )\), a simple calculation shows that

Hence, the error constant (6.11) decays faster than the one in (6.8). Therefore, we will use the \(\sinh \)-type window function (3.4) with \(\beta = \frac{\pi m\,(1 + \lambda - 2 \tau )}{1 + \lambda }\) in the remainder of this paper.

Example 6.2

Analogous to Example 4.2, we visualize the error bound of Theorem 6.1, i. e., for \(\varphi = \varphi _{\mathrm {sinh}}\) we show that for the approximation error (4.6) it holds by (6.11) that \(e_{m,\tau ,\lambda }(f) \le E_{m,\tau ,\lambda }\,\Vert f\Vert _{L^2(\mathbb R)}\), where

with \(\beta = \frac{\pi m\,(1+\lambda - 2 \tau )}{1+\lambda }\). The associated results are displayed in Fig. 7, where a substantial improvement can be seen compared to Figs. 1 and 4. We also remark that for larger choices of N, the line plots in Fig. 7 would only be shifted slightly upwards, such that for all N we obtain almost the same results. That is to say, among the window functions considered here, the \(\sinh \)-type window function is by far the best choice as a regularization function for regularized Shannon sampling sums.

Maximum approximation error (4.6) and error constant (6.12) using \(\varphi _{\sinh }\) and \(\beta = \frac{\pi m\,(1 + \lambda - 2 \tau )}{1 + \lambda }\) in (3.4) for the function \(f(x) = \sqrt{2\delta } \,\mathrm {sinc}(2 \delta \pi x)\) with \(N=128\), \(m\in \{2, 3, \ldots , 10\}\), as well as \(\tau \in \{\nicefrac {1}{20},\,\nicefrac {1}{10},\,\nicefrac {1}{4},\,\nicefrac {1}{3},\,\nicefrac {9}{20}\}\), \(\delta = \tau N\), and \(\lambda \in \{0,0.5,1,2\}\), respectively
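A comparison in the spirit of Figs. 1 and 7 can be sketched as follows; we do not claim these numbers match the figures. As assumptions, we take \(\varphi _{\mathrm {Gauss}}(x) = \mathrm e^{-x^2/(2\sigma ^2)}\) with \(\sigma = \sqrt{\frac{m}{\pi L\,(L - 2\delta )}}\), and the \(\sinh \)-type window (3.4) in the form \(\varphi _{\sinh }(x) = \frac{\sinh \big (\beta \sqrt{1 - (Lx/m)^2}\big )}{\sinh \beta }\) for \(|x| \le m/L\) (zero otherwise) with \(\beta = \frac{\pi m\,(1+\lambda - 2\tau )}{1+\lambda }\). The maximum in (4.6) is replaced by a maximum over a hypothetical grid of 2001 points in \([-1,\,1]\), and we fix \(N = 128\), \(m = 10\), \(\tau = 1/3\), \(\lambda = 1\).

```python
import numpy as np

N, m, tau, lam = 128, 10, 1 / 3, 1.0
L = N * (1 + lam)          # sampling rate L = N (1 + lambda)
delta = tau * N            # bandwidth delta = tau N


def f(t):
    """Test function f(x) = sqrt(2 delta) sinc(2 delta pi x); np.sinc is normalized."""
    return np.sqrt(2 * delta) * np.sinc(2 * delta * t)


def gauss_window(t):
    """Assumed Gaussian window exp(-t^2 / (2 sigma^2)) with sigma from Theorem 4.3."""
    sigma = np.sqrt(m / (np.pi * L * (L - 2 * delta)))
    return np.exp(-t**2 / (2 * sigma**2))


def sinh_window(t):
    """Assumed sinh-type window sinh(beta sqrt(1 - (L t / m)^2)) / sinh(beta)."""
    beta = np.pi * m * (1 + lam - 2 * tau) / (1 + lam)
    r = np.clip(1 - (L * t / m) ** 2, 0.0, None)  # 0 outside [-m/L, m/L]
    return np.sinh(beta * np.sqrt(r)) / np.sinh(beta)


def max_approx_error(window, num_points=2001):
    """Grid maximum of |f(x) - R_{phi,m} f(x)| over x in [-1, 1]."""
    x = np.linspace(-1.0, 1.0, num_points)
    n = int(np.ceil(L)) + m
    ell = np.arange(-n, n + 1)                 # every sample the window can reach
    diff = x[:, None] - ell[None, :] / L       # x - ell / L
    psi = np.where(np.abs(diff) <= m / L, window(diff), 0.0)
    approx = (np.sinc(L * diff) * psi) @ f(ell / L)
    return np.max(np.abs(f(x) - approx))


err_gauss = max_approx_error(gauss_window)
err_sinh = max_approx_error(sinh_window)
print(f"Gaussian window:  {err_gauss:.2e}")
print(f"sinh-type window: {err_sinh:.2e}")
```

Only samples \(f(\ell /L)\) with \(|\ell | \le \lceil L\rceil + m\) enter, since the truncated window vanishes outside \([-m/L,\,m/L]\); each evaluation thus involves at most \(2m+1\) nonzero terms, even though the sketch forms a dense matrix for simplicity.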

Now we show that for the regularized Shannon sampling formula with the \(\sinh \)-type window function (3.4) the uniform perturbation error (3.30) only grows as \(\mathcal O(\sqrt{m})\).

Theorem 6.3

Let \(f\in {\mathcal B}_{\delta }({\mathbb {R}})\) with \(\delta = \tau N\), \(\tau \in (0,\,1/2)\), \(N \in {\mathbb {N}}\), \(L= N(1+\lambda )\) with \(\lambda \ge 0\) and \(m \in {{\mathbb {N}}}\setminus \{1\}\) be given. Further let \(R_{\sinh ,m}{{\tilde{f}}}\) be as in (3.28) with the noisy samples \({{\tilde{f}}}_{\ell } = f\big (\frac{\ell }{L}\big ) + \varepsilon _{\ell }\), where \(|\varepsilon _{\ell }| \le \varepsilon \) for all \(\ell \in {\mathbb {Z}}\) and \(\varepsilon >0\).

Then the regularized Shannon sampling formula (3.15) with the \(\sinh \)-type window function (3.4) and \(\beta = \frac{\pi m\,(1 + \lambda - 2 \tau )}{1 + \lambda }\) satisfies

Proof

By Theorem 3.4 we only have to compute \(\hat{\varphi }_{\sinh }(0)\). By (6.1) and \(\sqrt{2 \pi \beta }\,{\mathrm e}^{-\beta }\,I_1(\beta ) < 1\) (see [11, Lemma 7]) we recognize that

If we now use \(\beta = \frac{\pi m\,(1+\lambda - 2 \tau )}{1+\lambda }\), then (3.30) yields the assertion (6.13). \(\square \)
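The inequality \(\sqrt{2 \pi x}\,{\mathrm e}^{-x}\,I_1(x) < 1\) from [11, Lemma 7], used in this proof, is easy to observe numerically; this is an illustration, not a proof. To avoid depending on a special-function library, the sketch evaluates \({\mathrm e}^{-x} I_1(x)\) from the integral representation \(I_1(x) = \frac{1}{\pi }\int _0^{\pi }{\mathrm e}^{x\cos t}\cos t\,\mathrm dt\) with the composite trapezoidal rule; the function name and the sample points are ours.

```python
import numpy as np


def scaled_bessel_i1(x, num=200_001):
    """e^{-x} I_1(x) from I_1(x) = (1/pi) int_0^pi e^{x cos t} cos t dt.

    Writing e^{x (cos t - 1)} avoids overflow for large x. The integrand is
    even about t = 0 and t = pi, so the trapezoidal rule is highly accurate.
    """
    t = np.linspace(0.0, np.pi, num)
    y = np.exp(x * (np.cos(t) - 1.0)) * np.cos(t)
    h = t[1] - t[0]
    return h * (y.sum() - 0.5 * (y[0] + y[-1])) / np.pi


xs = [0.5, 1.0, 5.0, 20.0, 100.0, 1000.0]
values = [np.sqrt(2 * np.pi * x) * scaled_bessel_i1(x) for x in xs]
for x, val in zip(xs, values):
    print(f"x = {x:7.1f}: sqrt(2 pi x) e^(-x) I_1(x) = {val:.6f}")
```

The values approach 1 from below as x grows, in line with the asymptotic behavior \(I_1(x) \sim {\mathrm e}^{x}/\sqrt{2\pi x}\).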

Similar to Example 4.4, one can visualize the error bound from Theorem 6.3, which leads to results analogous to Fig. 2.

Maximum approximation error (4.6) and error constant (3.20) using \(\varphi \in \{\varphi _{\mathrm {Gauss}},\varphi _{\mathrm {B}}, \varphi _{\sinh }\}\) for the function \(f(x) = \delta \,\mathrm {sinc}^2(\delta \pi x)\) with \(N=256\), \(\tau = 0.45\), \(\delta = \tau N\), as well as \(m\in \{2, 3, \ldots , 10\},\) and \(\lambda \in \{0.5,1,2\}\)

7 Conclusion

To overcome the drawbacks of classical Shannon sampling series, namely poor convergence and non-robustness in the presence of noise, in this paper we considered regularized Shannon sampling formulas with localized sampling. To this end, we considered bandlimited functions \(f\in \mathcal {B}_{\delta }({\mathbb {R}})\) and introduced a set \(\Phi _{m,L}\) of window functions. Besides the original result, where \(\varphi \in \Phi _{m,L}\) is chosen as the rectangular window function, and the well-studied approach of using the Gaussian window function, we proposed new window functions with compact support \([-m/L,\,m/L]\), namely the \(\mathrm B\)-spline and \(\sinh \)-type window functions, which are well studied in the context of the nonequispaced fast Fourier transform (NFFT).

In Sect. 3, we considered an arbitrary window function \(\varphi \in \Phi _{m,L}\) and presented a unified approach to error estimates of the uniform approximation error in Theorem 3.2, as well as a unified approach to the numerical robustness in Theorem 3.4.

Then, in the next sections, we concretized the results for special choices of the window functions. More precisely, it was shown that the uniform approximation error decays exponentially with respect to the truncation parameter m, if \(\varphi \in \Phi _{m,L}\) is the Gaussian, \(\mathrm B\)-spline, or \(\sinh \)-type window function. Moreover, we have shown that the regularized Shannon sampling formulas are numerically robust for noisy samples, i. e., if \(\varphi \in \Phi _{m,L}\) is the Gaussian, \(\mathrm B\)-spline, or \(\sinh \)-type window function, then the uniform perturbation error only grows as \(\mathcal O(\sqrt{m})\). While the Gaussian window function from Sect. 4 has already been studied in numerous papers such as [6, 13,14,15,16], we remarked that Theorem 4.1 improves a corresponding result in [6], since we improved the exponential decay rate from \((m-1)\) to m.


Throughout this paper, several numerical experiments illustrated the corresponding theoretical results. Finally, a comparison of the proposed window functions, as in Fig. 8, shows the superiority of the newly proposed \(\sinh \)-type window function, since even small choices of the truncation parameter \(m\le 10\) are sufficient for achieving high precision. Due to the usage of localized sampling, the evaluation of \(R_{\varphi ,m}f\) on an interval [0, 1/L] requires only 2m samples and therefore has a computational cost of \(\mathcal O(m)\) flops. Thus, a reduction of the truncation parameter m is desirable to obtain an efficient method.


References

Abramowitz, M., Stegun, I.A. (eds.): Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables. Dover, New York (1972)

Chui, C.K.: An Introduction to Wavelets. Academic Press, Boston (1992)

Constantin, A.: Fourier Analysis, Part I: Theory. Cambridge University Press, Cambridge (2016)

Daubechies, I., DeVore, R.: Approximating a bandlimited function using very coarsely quantized data: a family of stable sigma-delta modulators of arbitrary order. Ann. Math. 158(2), 679–710 (2003)

Gradshteyn, I.S., Ryzhik, I.M.: Table of Integrals, Series, and Products. Academic Press, New York (1980)

Lin, R., Zhang, H.: Convergence analysis of the Gaussian regularized Shannon sampling formula. Numer. Funct. Anal. Optim. 38(2), 224–247 (2017)

Medhurst, R.G., Roberts, J.H.: Evaluation of the integral \(I_n(b) = \frac{2}{\pi }\,\int _0^{\infty } (\sin \, x/x)^n\,\cos (b x)\,{\rm d }x\). Math. Comp. 19, 113–117 (1965)

Micchelli, C.A., Xu, Y., Zhang, H.: Optimal learning of bandlimited functions from localized sampling. J. Complexity 25(2), 85–114 (2009)

Oberhettinger, F.: Tables of Fourier Transforms and Fourier Transforms of Distributions. Springer, Berlin (1990)

Plonka, G., Potts, D., Steidl, G., Tasche, M.: Numerical Fourier Analysis. Springer, Cham (2018)

Potts, D., Tasche, M.: Uniform error estimates for nonequispaced fast Fourier transforms. Sampl. Theory Signal Process. Data Anal. 20, 25 (2021)

Potts, D., Tasche, M.: Continuous window functions for NFFT. Adv. Comput. Math. 25, 25 (2021)

Qian, L.: On the regularized Whittaker–Kotelnikov–Shannon sampling formula. Proc. Am. Math. Soc. 131(4), 1169–1176 (2003)

Qian, L.: The regularized Whittaker–Kotelnikov–Shannon sampling theorem and its application to the numerical solutions of partial differential equations. PhD thesis, National Univ. Singapore (2004)

Qian, L., Creamer, D.B.: Localized sampling in the presence of noise. Appl. Math. Letter 19, 351–355 (2006)

Qian, L., Creamer, D.B.: A modification of the sampling series with a Gaussian multiplier. Sampl. Theory Signal Image Process. 5(1), 1–20 (2006)

Schmeisser, G., Stenger, F.: Sinc approximation with a Gaussian multiplier. Sampl. Theory Signal Image Process. 6(2), 199–221 (2007)

Strohmer, T., Tanner, J.: Fast reconstruction methods for bandlimited functions from periodic nonuniform sampling. SIAM J. Numer. Anal. 44(3), 1071–1094 (2006)

Tanaka, K., Sugihara, M., Murota, K.: Complex analytic approach to the sinc-Gauss sampling formula. Jpn. J. Ind. Appl. Math. 25, 209–231 (2008)

Unser, M., Aldroubi, A., Eden, M.: On the asymptotic convergence of B-spline wavelets to Gabor functions. IEEE Trans. Inform. Theory 38(2), 864–872 (1992)

Watson, G.N.: A Treatise on the Theory of Bessel Functions, 2nd edn. Cambridge University Press, Cambridge (1944)

Acknowledgements

Melanie Kircheis gratefully acknowledges the support from the BMBF grant 01\(\mid \)S20053A (project SA\(\ell \)E). Daniel Potts acknowledges the funding by Deutsche Forschungsgemeinschaft (German Research Foundation)—Project-ID 416228727—SFB 1410. Moreover, the authors thank the referees and the editor for their very useful suggestions for improvements.

Funding

Open Access funding enabled and organized by Projekt DEAL.


Additional information

Communicated by Hanna Veselovska.

This article is part of the topical collection “Recent advances in computational harmonic analysis” edited by Dae Gwan Lee, Ron Levie, Johannes Maly and Hanna Veselovska.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kircheis, M., Potts, D. & Tasche, M. On regularized Shannon sampling formulas with localized sampling. Sampl. Theory Signal Process. Data Anal. 20, 20 (2022). https://doi.org/10.1007/s43670-022-00039-1


DOI: https://doi.org/10.1007/s43670-022-00039-1

Keywords

- Regularized Shannon sampling formulas

- Whittaker–Kotelnikov–Shannon sampling theorem

- Bandlimited function

- Window functions

- Error estimates

- Numerical robustness