Abstract

The gap in the literature addressed by the current study was the extent to which the skills taught in college degrees matched the job criteria employers needed. A survey was developed through a literature review and a focus group, the instrument was refined through a pilot project, and its reliability was measured using Cronbach estimates. In the model, hard skills captured theories or methods taught in courses including organizational behavior, human resource management, statistics, financial math, economics, and technology, in group or individual projects. Soft skills captured interdisciplinary competencies taught throughout all courses, such as teamwork, emotional intelligence, problem solving, and ethical decision making. Social desirability control was applied. Data were collected by surveying American undergraduate business students who were employed after the pandemic (N = 900). Descriptive statistics, correlation, and a structural equation model were used to test the hypotheses. A statistically significant multivariate model was developed with path effect sizes ranging from 46 to 96%. All exogenous soft skill indicators and most hard skill indicators had strong relationships to the endogenous dependent variables of learning effectiveness, job–skill match, and degree return on investment. Technology and quantitative skills, along with the dependent variable job–skill match, had the lowest means and medians but the highest standard deviations.

Introduction

The current study addressed a gap in the literature: the lack of empirical measurement of whether the skills taught in academic business degrees matched what employers needed in jobs. While there was clear evidence from a US government standpoint that college degrees have helped students find relevant work, there were no specific studies after the COVID-19 pandemic of how well the skills taught in business degrees matched what employers required in the market. According to the National Center for Education Statistics (NCES, a division of the US Department of Education), the unemployment rate of Americans aged 25–34 years who attained a bachelor’s degree or higher was 3% in 2020, compared with 9% for those with only a high school education (NCES 2022). Those rates increased by 1 and 3 percentage points, respectively, from 2019 (NCES 2022). The NCES analysis was credible because it is an objective government institution, although the metrics are partially based on student surveys and secondary data. The US Bureau of Labor Statistics (USBLS) reported that the 2020 unemployment rate was 5.5% for people aged 25 and over with a bachelor’s degree, compared with 9% for those with only high school (USBLS 2022). The USBLS rates were credible because the data are collected from employers and state governments. The superficial interpretation is that most college students found relevant employment after graduating with a degree, compared with those who had only completed high school.

However, from the US employer perspective, college degrees do not provide all the important skills needed in a job (Accenture 2018; Conrad and Newberry 2012; Messum et al. 2016; USDOL 2022). Based on a survey of employers (N = 150), the National Association of Colleges and Employers (NACE 2020) asserted that, in addition to high grades, employers wanted at least 19 top skills from college graduates. In descending order of importance, NACE (2020) identified these job skills: problem solving, teamwork, ethics, quantitative/analytical, communications, leadership, initiative, detail-oriented, technical, flexibility/adaptability, interpersonal emotional intelligence, computer technology, organizing, strategic planning, friendly, risk taker, tactful, and creativity. College students lack many of these required job skills. According to US-based staffing conglomerate Adecco (2022), 92% of executives surveyed thought American workers lacked needed skills, 45% felt insufficiently skilled workers negatively impacted firm growth, 34% believed product development suffered, and 30% suspected company profits were reduced. One problem was that employer-needed job skills did not necessarily match the learning objectives of college degrees (Adrian 2017; Alhaider 2022; Baruch and Sullivan 2022; Grant-Smith and McDonald 2018; Jung 2022; Kreth et al. 2019; Kursh and Gold 2016; Lau and Ravenek 2019; Maheshwari and Lenka 2022; Vermeire et al. 2022; Wilgosh et al. 2022; Wise et al. 2022). Another issue was the lack of job skill research from the graduated college student perspective (Messum et al. 2016).

Another recent problem from the student perspective was that the coronavirus pandemic forced nearly every college cohort online regardless of learning style preferences, which resulted in substantial dissatisfaction (Al Asefer and Abidin 2021; Li and Yu 2022; Paposa and Paposa 2022). The pandemic has changed what employers are looking for and what college graduates expect in a job (Adecco 2022). Even prior to the pandemic, student dissatisfaction with their degree varied widely, according to a rigorous meta-analysis of global higher education performance (Weerasinghe et al. 2017). Furthermore, a CBS News report summarizing a 2018 PayScale survey of 248,000 respondents claimed 67% of employed American students regretted their degree (Min 2019).

Consequently, there appears to be a problem: college degree content may not align with the job skills employers want, leaving students dissatisfied with their investment (Janssen et al. 2021). Dissatisfied college graduates may be forced to take jobs requiring lower skillsets or remain unemployed, while enrolled students may drop out to pursue vocational certifications or other alternatives. The resulting research question (RQ) was: how do recently graduated business college students in the US view the effectiveness of their degree in terms of learning relevant job skills? This topic was not well researched in the literature, particularly from the perspective of recently graduated and employed business students in the USA. Several rigorous empirical studies were unintentionally outdated by the pandemic paradigm shift (e.g., Adrian 2017). Authors in other countries or in non-business fields identified a few discrepancies between degree content and graduated student sentiments, although those studies were not directly comparable to the RQ (e.g., Han et al. 2022; Jeswani 2016; Li and Yu 2022; McAlexander et al. 2022; Messum et al. 2016; Paposa and Paposa 2022). Accordingly, the goal of the current study was to collect primary data from recently graduated business students in the USA using a validated survey instrument and to apply a post-positivistic quantitative research design to answer the RQ. The results of the current study will be of interest to many stakeholders, including academic institutions (e.g., business and management colleges), human resources management personnel in organizations, and educational assessment institutions or associations. The key stakeholders, the students, will also be interested in these results, as will their professors.

Literature review

Employer perspective of important job skills

The Jeswani (2016) paper was the most rigorous empirical study related to the RQ and methods. He used a positivistic research design, surveying 305 employers on the importance of college student employability skills to develop a causal relationship model. He used exploratory factor analysis (EFA), confirmatory factor analysis (CFA), and then structural equation modeling (SEM) with regression techniques in AMOS to analyze the survey data. The final model, with three exogenous factors and one endogenous variable, had a moderate 20% effect size, revealing that management skills were the most important, followed by technical skills and then communication skills, as perceived by the engineering companies. Note that the benchmarks for interpreting effect sizes are: 0.02 is small but significant, 0.15 is moderate, and 0.35 or higher is considered strong (Hair et al. 2010; Keppel and Wickens 2004). Jeswani (2016) further explained that employers were most satisfied with the communication skills of students (employer M = 3.41, student M = 3.58, no skill gap), followed by technical skills (employer M = 5.58, student M = 4.07, some gap) and lastly management skills (employer M = 5.63, student M = 4.27, a larger gap). There was an inverse relationship between these three factors and the dependent variable, as employers were least satisfied with student abilities for the job skills they perceived as most important (i.e., management), yet more satisfied with student abilities for the least important skills (i.e., communication). However, the 1–7 response scale, with 4 as neutral, must be considered when interpreting those means. Accordingly, because the employer mean of 3.41 fell below the neutral midpoint, employers did not perceive communication skills as important. Likewise, student ability for technical and management skills was close to neutral.
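The effect size benchmarks above can be applied mechanically. The sketch below is a minimal Python illustration, assuming the statistic being interpreted is Cohen's f², the measure to which the 0.02/0.15/0.35 cut-offs are conventionally attached; the helper names and the "negligible" label for values below 0.02 are illustrative choices, not taken from Jeswani (2016). Whether the reported 20% is read directly as f² = 0.20 or as an R² of 0.20 (which converts to f² = 0.25), it falls in the moderate band.

```python
def cohens_f2(r_squared: float) -> float:
    """Convert a model R-squared into Cohen's f-squared: f2 = R2 / (1 - R2)."""
    if not 0.0 <= r_squared < 1.0:
        raise ValueError("R-squared must be in [0, 1)")
    return r_squared / (1.0 - r_squared)


def classify_f2(f2: float) -> str:
    """Label f-squared with the 0.02 (small), 0.15 (moderate), 0.35 (strong) cut-offs."""
    if f2 >= 0.35:
        return "strong"
    if f2 >= 0.15:
        return "moderate"
    if f2 >= 0.02:
        return "small"
    return "negligible"  # below the smallest benchmark; label chosen for illustration


print(classify_f2(0.20))             # 20% read directly as f-squared
print(classify_f2(cohens_f2(0.20)))  # 20% read as R-squared, converted first
```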

The first exogenous (independent) factor identified by Jeswani (2016), communication skills, included writing, reading, nonverbal, and computer knowledge. The last item in that factor, computer knowledge, seemed more related to technical than to communication skills. The technical skills factor included technical tools, technical knowledge, interpretation/data analysis, systematic operations approach, acquiring technical competency, theoretical competency, solving technical problems, and designing competency. Some of these technical skills, particularly the last three (theoretical, solving, and designing), were slightly more difficult to associate with technical employment skills, but perhaps they were relevant in the engineering discipline. Management skills included problem identification, confidence, individual/teamwork, self-directed learning, lifelong learning, corporate social responsibility, entrepreneurship, honesty, integrity, understanding directions, discipline/motivation, reliability, and flexibility. Many of these management skills did not relate to common managerial functions because they were interpersonal or personality-related attributes, such as discipline, motivation, reliability, flexibility, self-direction, confidence, and individual work. Also, having 12 items in one factor overweights it compared with others, such as communication with only 4 items. Additionally, having more than six items can inflate Cronbach reliability coefficients (Strang 2021). Furthermore, problem identification in the management skills factor overlapped with solving technical problems in the technical factor. One innovative item, corporate social responsibility, was a relevant management skill not mentioned in many other studies.
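The point that longer factors inflate reliability can be illustrated with the standardized form of Cronbach's alpha, which depends only on the number of items k and the average inter-item correlation r̄: α = k·r̄ / (1 + (k − 1)·r̄). The Python sketch below assumes equally intercorrelated items and uses an illustrative r̄ of 0.3, a hypothetical value rather than one reported by Jeswani (2016), to show how alpha rises with item count alone.

```python
def standardized_alpha(k: int, mean_r: float) -> float:
    """Standardized Cronbach's alpha for k items with average inter-item correlation mean_r."""
    if k < 2:
        raise ValueError("alpha needs at least two items")
    return k * mean_r / (1 + (k - 1) * mean_r)


# Hold the average inter-item correlation fixed (hypothetical value) and
# vary only the number of items in the factor.
MEAN_R = 0.3
for k in (4, 6, 12):
    print(k, round(standardized_alpha(k, MEAN_R), 2))
```

With r̄ held at 0.3, the 4-, 6-, and 12-item factors yield alphas of roughly 0.63, 0.72, and 0.84, so a 12-item factor can appear more reliable than a 4-item factor without its items being any more strongly related.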

The Jeswani (2016) study was a strong empirical analysis very similar to the current study, but with significant research design constraints. The high-level limitations of his study were that the population was employers of engineering students in the Indian state of Chhattisgarh, and the convenience sample consisted of 305 employers visiting a specific engineering college in India (out of 500 employers surveyed). Thus, the population, sample, and discipline differed when comparing the Jeswani (2016) results to the current study of graduated US-based business students. Another major difference was that Jeswani (2016) surveyed employers on their perceptions of student job–skill match, while the current study randomly surveyed students on their job–skill match after they graduated and were employed. Statistically, the CFA and SEM work by Jeswani (2016) was rigorous and well done, including the use of an a priori instrument with a pilot (N = 20) to increase face validity, which is notable considering SEM is difficult to implement.

However, there were some issues to mention. First, while the rationale for conducting the survey was in line with the current study, Jeswani (2016) grounded the problem in too much unrelated literature, citing job skill meta-data from countries beyond the India population, including Australia, Malaysia, the UK, the USA, and globally. Nevertheless, in his defense, he cited several relevant India-based job–skill gap statistics to substantiate why the study was necessary. The sample frame was one college’s engineering department, and data were collected from a convenience sample of visiting employers. Secondly, Jeswani (2016) performed EFA despite using an a priori instrument, whereas a CFA with validity verification was all that was required. He did perform the CFA very well, with Cronbach alphas ranging from 0.775 to 0.901 (these were satisfactory; ≥ 0.8 is generally preferred in CFA, although, as he pointed out, ≥ 0.7 can be accepted for exploratory studies). The third major issue was the design: he used SEM where he could have used a rigorous pairwise t test to compare employers’ perceptions of the importance of required job skills with how well recently graduated engineering students met those skills. He could then have used a more powerful multivariate model, MANOVA, by regressing the three factors of management, communication, and technical skills from the student ability perspective onto the three dependent variables representing employer satisfaction. Instead, he produced means without standard deviations (SD), followed by an extensive EFA, CFA, and SEM path model.

Fourthly, Jeswani (2016) reported more estimates than needed. For example, both tolerance and VIF were reported, but only one is needed since VIF is the reciprocal of tolerance, and VIF is generally preferred (i.e., management factor tolerance = 7.11, VIF = 1.06, but 1/7.11 = 1.06, see p. 18). Moreover, considerably more space was devoted to discussing iterations of early models and unnecessary statistics (e.g., 15 pages explaining unstandardized coefficients), with too little analysis of the standardized coefficients from the final model. Fifthly, he analyzed the means before the SEM, after the 26 insignificant items were removed from the final model. Instead, the means would have been more useful to interpret after the final SEM results. To his credit, Jeswani (2016) produced excellent comparative line charts of the means, illustrating the gap between perceived student skills and required job skills, both as perceived by employers visiting the university’s engineering department.
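The reciprocal relationship between tolerance and VIF can be made explicit. For predictor j, tolerance is 1 − R²ⱼ, where R²ⱼ comes from regressing j on the other predictors, and VIF is 1/tolerance; because R²ⱼ lies between 0 and 1, tolerance also lies between 0 and 1 and VIF is at least 1. The minimal Python sketch below encodes these two definitions; the function names and the example R²ⱼ are illustrative.

```python
def tolerance(r_squared_j: float) -> float:
    """Tolerance of predictor j: 1 - R^2 from regressing j on the other predictors."""
    if not 0.0 <= r_squared_j <= 1.0:
        raise ValueError("R-squared must lie in [0, 1]")
    return 1.0 - r_squared_j


def vif(r_squared_j: float) -> float:
    """Variance inflation factor: the reciprocal of tolerance."""
    tol = tolerance(r_squared_j)
    if tol == 0.0:
        raise ValueError("perfect collinearity: tolerance is zero, VIF undefined")
    return 1.0 / tol


# Hypothetical auxiliary-regression R-squared for one predictor.
r2 = 0.06
print(round(tolerance(r2), 2), round(vif(r2), 2))
```

For instance, an R²ⱼ of 0.06 gives a tolerance of 0.94 and a VIF of about 1.06, so reporting either value fully determines the other.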

Sixthly, Jeswani (2016) used a 1–7 ordinal scale instrument with 4 as neutral, which was not directly comparable to the majority of surveys in related studies, where a 1–5 scale was the de facto standard. In his design, the differences between 1, 2, and 3 and between 5, 6, and 7 were unclear (no verbal cues were shown in the instrument in the appendix). A seventh issue was that many survey items tested multiple, very different skills, for example, “students have the ability to function effectively as an individual and in a group/teamwork” and “students have the ability to maintain self-discipline and they are self-motivated” (Jeswani 2016). This problem was evidenced by Jeswani’s (2016) admission that he reduced 50 items to 24, dropping 26 items through iterations of EFA and CFA (e.g., based on factor loadings and reliabilities), a process that took 15 pages to explain. Furthermore, the employer satisfaction (ES) endogenous SEM variable was represented by three very different items: satisfaction with student management skills, satisfaction with technical skills, and satisfaction with communication skills. These ought to have been three separate dependent variables since they were distinctly different.
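One way to place means from different response scales side by side is a linear rescaling onto a common range. The Python sketch below is illustrative only: it assumes the ordinal categories are equally spaced, which is itself a debatable assumption for Likert-type items, and the function name is hypothetical. Under that assumption, the Jeswani (2016) employer mean of 3.41 for communication on the 1–7 scale corresponds to about 2.61 on a 1–5 scale, the 1–7 neutral point of 4 maps to the 1–5 neutral point of 3, and a mean of 3.5 on a 0–4 scale corresponds to 4.5.

```python
def rescale(value: float, old_min: float, old_max: float,
            new_min: float = 1.0, new_max: float = 5.0) -> float:
    """Linearly map a score from [old_min, old_max] onto [new_min, new_max].

    Assumes equally spaced response categories, which is a simplification for
    ordinal Likert-type items.
    """
    if old_max <= old_min:
        raise ValueError("old_max must exceed old_min")
    fraction = (value - old_min) / (old_max - old_min)
    return new_min + fraction * (new_max - new_min)


print(round(rescale(3.41, 1, 7), 2))  # employer communication mean on the 1-7 scale
print(rescale(4, 1, 7))               # 1-7 neutral point
print(rescale(3.5, 0, 4))             # a 0-4 scale value
```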

Finally, the most serious issue was that one of the three exogenous factors, management skills, was insignificant (p = 0.065) according to the SEM regression estimates for the final model (Jeswani 2016). It was stated as a positive 14% and later as a positive 13%, but according to his fitted model it was −0.13 and insignificant (p. 32). This means the predictive path from management skills to employer satisfaction was not significant, which contradicted his abstract, hypothesis, and conclusions. These issues unfortunately made the study fatally flawed. Nonetheless, it was an excellent empirical effort: there were useful descriptive statistics for job skill items, he articulated many relevant SEM benchmarks, and he mentioned good ideas for future researchers to consider.

The best source matching the RQ was published by NACE (2020), which provided the strongest relevant empirical evidence of which skills employers in the USA wanted from college students. Their analysis was credible since they asked employers to rate the importance of listed job skills, with open-ended alternatives to capture new requirements, and they have been surveying employers with a similar instrument since 1992 (NACE 2020). Their most recent analysis was based on data collected from August 1 through September 30, 2019, drawn from a population of 3134 US employers across most industries, with a final sample size of 150 (77% of employers were NACE member companies).

The NACE (2020) methodology was clearly articulated, and the results more closely approximated a normal distribution than those of their competitors. Their survey items replicated the a priori instrument keywords with the question of ‘job skills most wanted’, using a 1–5 interval response scale where 1 = very unimportant, 2 = unimportant, 3 = neutral/neither, 4 = important, and 5 = very important. They asked about the importance of a strong grade point average (GPA) as a separate item. Overall, they found the most important job skills were a high GPA (to show learning ability), along with problem solving and teamwork competence. In descending order of importance, excluding GPA, NACE (2020) identified these job skills: problem solving, teamwork, ethics, quantitative/analytical, communications, leadership, initiative, detail-oriented, technical, flexibility/adaptability, interpersonal emotional intelligence, computer technology, organizing, strategic planning, friendly, risk taker, tactful, and creativity. The specific job skills and the percentages of employers responding with agree/important (4) or strongly agree/very important (5) are listed on the left of Table 1. NACE observed that, in contrast to the previous year, certain job skills increased in importance, namely, work ethic and quantitative/analytical skills. They noted several skills dropped in rank from 2019, including initiative, written communications, and foreign languages, with foreign languages falling to the last position of all items in the survey.
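The employer percentages described above amount to a top-two-box summary: the share of respondents choosing one of the two highest points (4 or 5) on the 1–5 scale. A minimal Python sketch of that calculation follows; the function name is hypothetical and the ratings are invented for illustration, not NACE data.

```python
from collections import Counter


def top_two_box(responses: list[int], scale_max: int = 5) -> float:
    """Percentage of responses in the two highest categories of a 1..scale_max scale."""
    if not responses:
        raise ValueError("no responses supplied")
    counts = Counter(responses)
    top = counts[scale_max] + counts[scale_max - 1]
    return 100.0 * top / len(responses)


# Hypothetical ratings for one skill on the 1-5 importance scale.
ratings = [5, 4, 3, 4, 2, 5, 5, 1]
print(top_two_box(ratings))
```

Here five of the eight hypothetical ratings are a 4 or a 5, so the skill would be reported at 62.5%.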

There was a second empirical study related to the RQ, revealing which skills employers wanted from college graduates. The American Association of Colleges and Universities (AACU 2021) is a direct competitor of NACE in the USA, with similar published research since 2007. AACU partnered with Hanover Research to survey employers. However, the AACU 2020 analysis was less credible than NACE because it lacked sample versus population parameters, gave no sample sizes, used atypical subscales (1–3), and sometimes reported frequency percentages that did not sum to 100%. The only information available about the sample size was from a newspaper asserting it was close to 500 executives (Flaherty 2021). Additionally, the AACU findings depicted a statistical ceiling effect, with most values at or above 90%. The represented industries were technology (27%), financial (12%), manufacturing (9%), services (9%), healthcare (9%), construction (9%), and others (not reported). Most organizations were private (72%). Notwithstanding these limitations, the AACU findings generally corroborated the NACE job skill importance ratings, as illustrated in Table 1. In Table 1, the survey results from NACE and AACU are reported as the cumulative percentage of employers responding with the highest values of agree/important or strongly agree/very important on the ordinal scales, noting that the scales differed between NACE and AACU. Several of the AACU items tended to overlap, namely, idea integration across contexts and applying skills to the real world, as well as analyzing/interpreting data and finding/using data for decision making.
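A simple consistency check for published frequency breakdowns is to sum the reported category percentages and inspect the remainder. The Python sketch below uses a hypothetical helper that rounds to two decimals to absorb floating-point error, applied to the AACU industry breakdown above: the six listed industries sum to 75%, leaving 25 percentage points for the unreported "others" category.

```python
def unreported_share(shares: dict[str, float]) -> float:
    """Percentage points not accounted for by the listed category shares.

    Rounding to two decimals absorbs floating-point noise; a non-zero result
    means the listed categories do not sum to 100%.
    """
    return round(100.0 - sum(shares.values()), 2)


# Industry shares listed for the AACU sample (percent).
aacu_industries = {
    "technology": 27, "financial": 12, "manufacturing": 9,
    "services": 9, "healthcare": 9, "construction": 9,
}
print(unreported_share(aacu_industries))
```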

The most important job skills from the employer perspective, based on the NACE (2020) and AACU (2021) survey data, were relatively analogous, as can be seen in Table 1. The values in Table 1 are in descending order of importance for each association. NACE and AACU used slightly different items, which were interpreted by the author and verified by two academically experienced colleagues. The top 19 skills were reported from NACE and all 15 from AACU. The top employer-demanded skills from both associations were teamwork, problem solving, ethics (professionalism), communication (written, oral/verbal, presenting), quantitative analytic reasoning, computer technology/digital/technical literacy, critical thinking/strategic planning, and interpersonal emotional intelligence (EI)/multicultural work ability. These skills scored at or above 45% in the NACE data and at or above 90% in the AACU data. The differences were several unique, yet believable, job skills cited only by NACE, namely, leadership (72.5%) and several personality attributes, including flexibility (62.7%), organization (47.1%), friendliness (29.4%), risk taking (24.5%), and tactfulness (24.5%). These were believable because the author found them in job advertisements as part of the validity checking process in the current study. AACU found a unique job skill not mentioned in other relevant empirical studies: civic engagement (83%). To validate this, an exploratory search for civic engagement was conducted in job advertisements for bachelor’s degree positions posted on ZipRecruiter and LinkedIn during March 2022. Although nothing was found for that exact term, similar terms, volunteerism and community engagement, were mentioned, so the author concurs that the AACU findings are accurate in that civic engagement/volunteerism may be a relevant college-level job skill.

The most interesting findings by AACU (2021) were that employers reported widely varying impacts of the pandemic on job skills, and that skill importance differed significantly between older and younger respondents. The problem is that they did not clearly articulate the influence of respondent age or the post-pandemic paradigm on job skills. Of greater concern, AACU Vice-President Ashley Finley stated that “higher education has a public trust problem”, meaning that students and other stakeholders were dissatisfied with their degrees, and a Gallup survey put the lack of satisfaction with college degrees at 62% (Flaherty 2021). In 2020, AACU (2021) reported that 33% of employers did not have confidence in higher education. As discussed earlier, 67% of employed American students regretted their degree according to a 2018 PayScale survey of 248,000 respondents, which was also covered by CBS News (Min 2019). Paradoxically, Flaherty (2021) claimed only 17% of employers thought college degrees were not worth the student’s investment of time and money.

US-based multinational human resource staffing firm Adecco Group (Adecco 2022) recently surveyed executives to find out how well college students were qualified for placements, based on their education, in terms of hard and soft skills. Their findings were credible insofar as Adecco is a large recruiting firm with 70,000 employees spread over 60 countries. Adecco found that 92% of executives surveyed thought American workers lacked the needed job skills, 45% felt insufficiently skilled workers from college negatively impacted firm growth, 34% believed the firm’s product development suffered, and 30% suspected company profits were reduced. The hard and soft skills were similar to the AACU findings. However, Adecco did not provide sufficient descriptive statistics about the population or sample, nor did it disclose the sample size or sampling technique.

The important job skills claimed by NACE and AACU were also corroborated by the US Department of Labor (USDOL 2022), based on a review of several large, comprehensive national labor analysis reports, although much of its research dated back to 2007. USDOL stated the key employment skills were hard skills and soft skills. Hard skills included technical competencies based on academic knowledge, such as from discipline-specific courses and basic general college degree education (English reading, writing, speaking, arithmetic, science, technology, quantitative/computational, humanities, psychology, and sociology). Soft skills included abilities such as teamwork, relationship building/management, work organization, interpersonal EI, time management, and thinking skills (critical thinking, creative thinking, reasoning, problem solving, decision making).

USDOL (2022) explained how both hard and soft skills should be taught in colleges. Soft skills can be developed by students in the classroom, through on-the-job coaching, internships, youth-serving organizations, service-learning, and volunteering. These hard and soft skills were clearly captured in the recent NACE and AACU job skill analyses, albeit with slightly different terminology. However, students were not surveyed in any of the above studies, and all research designs relied on basic descriptive statistics except the rigorous SEM by Jeswani (2016). There were other large employer job skill surveys in the literature, including several by universities, but they offered no significantly new information beyond what was discussed above, so they were not reviewed in detail for the current study. In summary, the job skills in Table 1 are asserted to represent current employer needs in the USA.

Student perspective of important job skills

The Messum et al. (2016) paper was well done and a close match to the RQ since they surveyed the importance of most job skills in Table 1 from the student perspective. They applied a post-positivistic research design, using a survey to collect job skill importance perceptions from 42 health service managers in New South Wales, Australia, who had graduated within the last 3 years (2010–2012), followed by pairwise t tests. They categorized the 44 skills into four groups: job/industry knowledge, critical thinking, self-management, and interpersonal/communication. Usefully, they analyzed the 44 skills individually rather than as four factors. They found the most important job skills needed were (in descending order): verbal communication, integrity/ethical conduct, time management, teamwork, priority setting, ability to work independently, organizing, written communication, flexibility/open-mindedness, and networking. The highest self-rated skills were (in descending order): integrity/ethical conduct, ability to work independently, flexibility/open-mindedness, degree qualifications, interpersonal, written communication, time management, lifelong learning, priority setting, and administration skills. Interestingly, the graduated health services students rated their skills lower than the required importance, illustrating a skills gap that somewhat corroborates the employer-perspective skill-gap findings of Jeswani (2016). In contrast, Jeswani (2016) found engineering companies valued communication skills much lower. A unique contribution was determining how students found their jobs. The largest share of students (29%) found work through their professors, 22% through Internet advertisements (e.g., seek.com), 17% through follow-up part-time contracts, 9.8% through family/friends, 9.8% through university career services, 7.3% through print media (e.g., newspapers), and 5.1% through other means. Messum et al. also stated that students found work experience/internships beneficial in developing essential job-related soft skills.

The Messum et al. (2016) study was similar to Jeswani’s (2016) study because the importance of a priori job skills was rated first, followed by how well respondents met those same skills, except that Messum et al. examined the skill gap from the student’s perspective rather than the employer’s. They grounded their rationale for initiating the study in relevant population-specific literature, namely Australian government statistics. The Messum et al. study was credible because they applied the appropriate statistical technique, pairwise t tests, to compare the needed versus the actual job skills, as perceived by the students. Statistically, this was a robust repeated measures design, with each student serving as their own control by rating both perspectives on the same scale. Of course, bias can still confound self-reported factors. They analyzed the 44 skills individually, using pairwise t tests of job importance ratings versus actual skill level ratings rather than four composite factors, which made their results precise. Most students (86.3%) reported the university degree prepared them well for their job, although only 87% were working. They found the biggest self-reported skill gap between expected and actual levels was in interpersonal/communication skills, followed by critical thinking, job skills, and self-management skills.
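The repeated measures logic can be sketched in a few lines: because each respondent rates both the importance of a skill and their own level on the same scale, the test operates on the per-respondent differences. The minimal Python sketch below uses only the standard library and returns the t statistic and degrees of freedom; the function name is illustrative and the ratings are hypothetical 0–4 values, not Messum et al. (2016) data. In practice, `scipy.stats.ttest_rel` computes the same statistic together with a p-value.

```python
import math
import statistics


def paired_t(needed: list[float], actual: list[float]) -> tuple[float, int]:
    """Paired (repeated-measures) t statistic for needed-minus-actual ratings.

    Returns (t, degrees_of_freedom), with df = number of pairs - 1.
    """
    if len(needed) != len(actual) or len(needed) < 2:
        raise ValueError("need two equal-length lists with at least two pairs")
    diffs = [n - a for n, a in zip(needed, actual)]
    sd_d = statistics.stdev(diffs)  # sample SD of the differences (n - 1 denominator)
    if sd_d == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = statistics.mean(diffs) / (sd_d / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Hypothetical 0-4 ratings from six respondents for one skill.
importance = [4, 4, 3, 4, 3, 4]
self_rating = [2, 3, 2, 3, 1, 2]
print(paired_t(importance, self_rating))
```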

Messum et al. (2016) found the most important skills needed in the interpersonal/communication group were verbal communication, teamwork, writing, and networking. Leadership and negotiation skills were rated as least important. Self-ratings of ability were highest for interpersonal, written, and verbal skills, but lowest for negotiating, leadership, and networking skills. The biggest skill gaps in the interpersonal/communication group were networking and teamwork. The most important needed skills in the self-management group were integrity/ethical conduct, time management, ability to work independently, organizing, and flexibility/open-mindedness (with very high means of ≥ 3.5, noting that 2 was the neutral midpoint of their unusual 0–4 interval response scale). All self-ratings were significantly lower than needed except for tertiary degree qualifications and career planning, where no skill gap existed. The lack of a skill gap in these two items would not likely affect a job situation, so these are not relevant for the current study. The biggest skill gaps in the self-management group included being calm under pressure, time management, and organizing. In the critical thinking group, the most important required skills were priority setting, planning, and strategic thinking (means ≥ 3.5). Self-ratings were lower than required for all skills except research, creativity, and innovation. The biggest skill gaps in the critical thinking group were planning and priority setting. In the job/knowledge group, the most important needed skills were computer/software and project management (means ≥ 3.5). Again, self-ratings were lower than required for all items except administration. The highest self-ratings were for administration, computer, and software skills, while the lowest self-ratings were for knowledge of the local population, operational management, budget/financial, and change management. The biggest skill gaps in the job/knowledge group were change management, project management, and performance management.

There were a few minor issues with the Messum et al. (2016) study. First, they acknowledged it was a small convenience sample (N = 42), which included graduated students plus current students enrolled in the health services management master’s degree program at a specific Australian university. Their honesty was appreciated. This means the population was constrained to one university, the sample was not random (respondents were purposively identified as graduated health services management students in NSW and self-selected to participate), and it mixed graduated and non-graduated students at the master’s level. They stated 88% were employed full-time while the remaining participants were continuing their studies, which meant 12% may not have been ideal respondents for the survey. The second minor issue was the lack of effect sizes. They ought to have reported Cohen’s d from the pairwise t tests, and preferably listed all descriptive statistics with t test estimates for each of the 44 skills in one table instead of numerous graphs. Possibly, a journal page limit constrained this. Also, they used an atypical 5-point response scale of 0–4, which made the means harder to compare with other studies. Notwithstanding the above sample limitations, the Messum et al. (2016) study was effective in highlighting large gaps between expected and actual skills, as perceived by students. The biggest skill gaps were teamwork, networking, planning, change management, project management, time management, priority setting, organizing, performance management, and being calm under pressure. Most of the 44 skills are roughly equivalent to the 15–19 employer skills in Table 1 identified by NACE and AACU. All 44 health services manager job skills make sense for the business discipline, which suggests their findings generalize to business students.
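For paired designs, one common effect size is Cohen's d_z: the mean of the differences divided by their standard deviation, which also equals t/√n. The Python sketch below is a minimal illustration under that definition (other paired-design variants standardize by the SDs of the two conditions instead and give different values); the helper names are hypothetical, and the second helper shows how d_z could be recovered from a published t statistic and sample size when only those are reported. The ratings are invented 0–4 values.

```python
import math
import statistics


def cohens_dz(needed: list[float], actual: list[float]) -> float:
    """Cohen's d_z: mean of the paired differences divided by their sample SD."""
    diffs = [n - a for n, a in zip(needed, actual)]
    return statistics.mean(diffs) / statistics.stdev(diffs)


def dz_from_t(t: float, n_pairs: int) -> float:
    """Recover d_z from a reported paired t statistic: d_z = t / sqrt(n)."""
    return t / math.sqrt(n_pairs)


# Hypothetical 0-4 ratings (importance vs. self-rated level) for six respondents.
importance = [4, 4, 3, 4, 3, 4]
self_rating = [2, 3, 2, 3, 1, 2]
print(round(cohens_dz(importance, self_rating), 2))
```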

Adrian (2017) used a post-positivistic research design to survey 62 business students and 7 hiring managers about the importance of job skills. He conducted a pilot with local business employers and reviewed a 2013 AACU report along with the Messum et al. (2016) study to create the survey. His design differed from that of Messum et al. (2016) because it compared two unequal, independent groups rather than using a paired, repeated-measures contrast. He developed 31 items and grouped them into three categories: soft skills (11 items), hard skills (8 items), and management skills (12 items). As with Messum et al., he tested the skills individually rather than merging them into composite factors, which allowed a more granular analysis. Interestingly, Adrian (2017) found students over-valued the importance of hard skills and management knowledge compared with employer ratings, with the opposite effect for people/social soft skills, which employers rated higher than students did.

Adrian (2017) used a 1–5 survey response scale where 1 was not important and 5 was very important. Based on his ANOVA tests, only one item in the soft skills group differed significantly, with employers rating people/social skills as more important (M = 5, SD = 0) than students did (M = 4.63, SD = 0.6). In the hard skills group, five skills differed significantly, while the remaining three did not: writing (employer M = 3.75, SD = 0.66 vs. student M = 4.1, SD = 0.81), Excel/Word (M = 4, SD = 0.71 vs. M = 4.24, SD = 0.75), and math skills (M = 3.43, SD = 0.73 vs. M = 3.95, SD = 0.92). Employers rated oral communications significantly lower (M = 4.13, SD = 0.78) than students (M = 4.71, SD = 0.45). Employers rated data analysis significantly lower (M = 3.25, SD = 0.66) than students (M = 4.06, SD = 0.79). Employers rated coding/computer programming significantly lower (M = 1.88, SD = 1.27) than students (M = 3.03, SD = 0.93). Surprisingly, employers rated analyzing financial data significantly higher (M = 3.13, SD = 0.99) than students (M = 2.84, SD = 1.04). Finally, employers rated foreign languages significantly lower (M = 1.63, SD = 0.99) than students (M = 2.84, SD = 1.04). In the management knowledge group, three skills differed significantly in the ANOVA. Employers rated human resource management significantly lower (M = 3, SD = 0.87) than students (M = 3.87, SD = 0.83). Employers rated group decision making significantly lower (M = 3.63, SD = 0.7) than students (M = 4.32, SD = 0.75). Employers also rated total quality management significantly lower (M = 3.63, SD = 0.7) than students (M = 4.32, SD = 0.75). The remaining skills had no rating differences, namely management history (employer M = 3, SD = 1 vs. student M = 3.33, SD = 0.91), strategy (M = 4, SD = 0.71 vs. M = 4.05, SD = 0.82), planning (M = 4, SD = 0.71 vs. M = 4.3, SD = 0.79), scanning/analyzing environment (M = 4, SD = 0.87 vs. M = 4.11, SD = 0.76), goal setting (M = 4.63, SD = 0.7 vs. M = 4.44, SD = 0.71), plan execution (M = 4.75, SD = 0.43 vs. M = 4.59, SD = 0.61), conflict management (M = 3.75, SD = 0.66 vs. M = 4.05, SD = 0.81), motivation (M = 4.63, SD = 0.48 vs. M = 4.51, SD = 0.69), and control processes (M = 3.75, SD = 0.66 vs. M = 4.1, SD = 0.83).

There were several problems with the Adrian (2017) study. First, although he stated that business students and employers were surveyed, he did not mention where the sample was drawn from; presumably it was in Louisiana, USA, where the author was based. Also, the 62 students were still in college, so they may not have been the best candidates to judge the importance of job skills. Moreover, the group sizes were drastically different (62 vs. 7), which inflates the standard error for the small employer group, since subsample size is in the denominator. Second, the rationale underlying the study was grounded in foreign populations, namely Australia and India, and the sources were mostly older literature, except for the Messum et al. (2016) and Jeswani (2016) citations. Third, the methods were incorrect. He did not declare a confidence level, but at one point in the analysis he mentioned 0.10, which would be the significance level for testing hypotheses (corresponding to a 90% confidence level). Nor did he declare any hypotheses, although his design was clear enough to deduce them: that there would be no difference between student and employer importance ratings of the job skills. He used ANOVA to compare the two groups when regular Student's t tests ought to have been applied. He also did not make the order of the ANOVA comparisons clear, which is another reason he ought to have used t tests. Fortunately, he reported all means and SDs, so his comparisons can be re-checked and his findings accepted with reasonable confidence.
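The Student's t test recommended above can be computed directly from the summary statistics Adrian (2017) reported. The minimal sketch below uses only the standard library to implement the pooled-variance Student's t and, as an added assumption given the 62 vs. 7 split and the unequal SDs, Welch's unequal-variance t with Welch–Satterthwaite degrees of freedom. The function names are hypothetical; p-values require a t distribution (SciPy's `scipy.stats.ttest_ind_from_stats` accepts the same summary inputs).

```python
import math


def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Student's two-sample t with pooled variance, from summary statistics."""
    df = n1 + n2 - 2
    sp2 = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df  # pooled variance
    se = math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


def welch_t(m1, sd1, n1, m2, sd2, n2):
    """Welch's t (unequal variances) with Welch-Satterthwaite df."""
    v1, v2 = sd1**2 / n1, sd2**2 / n2
    se = math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))
    return (m1 - m2) / se, df


# Oral communications as reported in the text: employers (n = 7) vs. students (n = 62).
t_s, df_s = pooled_t(4.13, 0.78, 7, 4.71, 0.45, 62)
t_w, df_w = welch_t(4.13, 0.78, 7, 4.71, 0.45, 62)
print(f"Student t = {t_s:.2f} (df = {df_s}); Welch t = {t_w:.2f} (df = {df_w:.1f})")
```

For exactly two groups, a one-way ANOVA F statistic equals the square of the pooled Student's t, so the t test mainly adds the direction of the difference and, through Welch's variant, a correction for unequal variances and group sizes.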

Finally, the paper by Li and Yu (2022) addressed the pandemic-related impact on college student job skills and explained a valuable literature search methodology. Li and Yu used the critical analysis method with practitioner reflection to review 105 papers focused on teaching and learning effectiveness throughout the COVID-19 pandemic. They argued online education was mostly focused on making money rather than on improving student learning. They recommended that online education not become a permanent mechanism for delivering job readiness and academic success to students. Their reasons included the lack of connection between online learning, employment skills, and job readiness.

Synthesis and conceptual model of important job skills

The work of NACE (2020) in particular, and to a somewhat lesser degree the findings of AACU (2021), are argued to represent the most important job skills from the employer's perspective, as listed in Table 1. The job skills in Table 1 were similar to those identified in empirical research from the student's perspective. In particular, most of the skills in the Adrian (2017) paper were identical or a close match to Table 1, and his sample was drawn from the USA. Coincidentally, Adrian (2017) found employers rated foreign language proficiency as the least needed skill, which was the same as NACE and AACU. On the other hand, Adrian (2017) claimed students and employers had low importance ratings for cultural diversity and community engagement, which was contrary to what AACU found, as shown in Table 1. The key finding by Adrian (2017) was that students underestimated the importance of social skills, but both students and employers indicated soft skills were important. Adrian (2017) also argued that the shift to online education/learning increased the student–employer skill gap. This shift was driven by the profit motives of universities seeking to reduce expenses while increasing revenues, which in turn led students to demand high grades despite lower competency in exchange for paying high tuition, particularly in private colleges. In other words, Adrian (2017) argued the employer–student skill gap was exacerbated by profit-driven online universities, a gap the current study asserts was further widened by the pandemic, which forced almost every college to deliver learning online.

Adrian (2017), along with NACE, AACU, Messum et al. (2016), and Jeswani (2016), articulated the student–employer skills gap through evidence-driven empirical analysis. All of those studies grouped skills into categories or factors, representing soft skills versus hard skills or other classifications. From the college degree perspective, Adrian (2017) and Messum et al. (2016) presented similar logical groupings of soft skills and hard skills, which corroborated the skills US employers wanted according to NACE (2020) and AACU (2021). Based on the above literature, the employer skills in Table 1 could be grouped into soft skills and hard skills. Hard skills refer to disciplinary knowledge, including quantitative as well as qualitative theories. For example, algebra, statistics, logistics, supply chain management, financial management, economics, and others are based on quantitative theories. Qualitative theories include organizational behavior, human resources management, leadership, and others. Hard skills also include reading, writing, speaking, presenting, critical analysis, problem solving, and others. Soft skills refer to cross-disciplinary concepts for interacting with others and managing oneself, such as teamwork, interpersonal emotional intelligence (EI), applying multicultural diversity, ethical decision making, corporate social responsibility (CSR), civic project engagement, volunteerism, team project management, self-management, and work ethic. The survey items from Adrian (2017) and Messum et al. (2016) could be adapted for a new instrument to answer the RQ (this will be discussed in the methods section). A subject matter expert team could merge redundant items and reword the Table 1 skills into a succinct list.

If the employer skills demanded of business college students in Table 1 can be grouped into soft skills and hard skills, then to answer the RQ (How do recently graduated business college students in the USA view the effectiveness of their degree in terms of learning relevant job skills?), degree effectiveness becomes the dependent variable, from the student's perspective. Only NACE asserted that GPA was a job skill desired by employers. It could be argued that GPA is encompassed in each skill as learning effectiveness, or as part of the dependent variable. Alternatively, GPA could operate as a moderator or mediator of degree effectiveness perceptions. Accordingly, Fig. 1 presents a conceptual model of the RQ based on the concepts from the literature review and the evidence-based data from Table 1, assuming the 15–19 items are grouped into soft skills and hard skills. The hard skills and soft skills are briefly proposed in Fig. 1. Since the unit of analysis is the employed student's perspective, and a large random sample is desired, the dependent variable (or variables) will be perceptual. The dependent variable could be a single variable or a multivariate set composed of self-reported satisfaction and performance. Degree effectiveness and learning effectiveness may result in perceived career success if the student felt effectively prepared to match the required job skills. Thus, the research method must be capable of handling one to three dependent variables and be flexible, considering this is an exploratory type of study. Three preliminary hypotheses were developed to test the conceptual model and RQ:

- H1: hard skills learning effectiveness leads to student perceptions of degree effectiveness;
- H2: soft skills learning effectiveness leads to student perceptions of degree effectiveness;
- H3: learning effectiveness and degree effectiveness lead to perceived career success.

Methods

In the current study, the author adopted a post-positivistic research design ideology to answer the RQ. A post-positivistic ideology refers to the researcher’s intention to focus on factual evidence to prove deductive theories through testing hypotheses: quantitative data types are preferred to facilitate analysis (Strang 2021).

The literature was reviewed using the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) technique, following the suggestions of Page et al. (2021). In PRISMA, the search scope is systematically narrowed as papers are screened for relevance to the RQ by reading their abstracts for keywords, methods, and research design (e.g., empirical); once a workable list is reached, in-depth reviews take place (Page et al. 2021). The search keywords included Student Survey + Job Skills and Business Student + Employment Skills. Initially, 102,107 papers were returned, but through methodical selection the relevant papers were reduced to 478, of which 24 mostly empirical studies were reviewed in depth.
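The screening funnel reported above can be tallied with a minimal sketch that records the count at each stage and the percentage retained from the previous stage. The counts are those stated in the text; the stage labels and the function name are illustrative assumptions, and the intermediate PRISMA stages (such as duplicate removal and full-text eligibility) were not reported separately, so they are collapsed here.

```python
def prisma_funnel(stages):
    """Return (label, count, percent retained from previous stage) per stage."""
    rows = []
    previous = None
    for label, count in stages:
        if previous is not None and count > previous:
            raise ValueError(f"stage {label!r} exceeds the previous stage")
        percent = None if previous is None else 100.0 * count / previous
        rows.append((label, count, percent))
        previous = count
    return rows


# Counts reported in the text; the stage labels are illustrative.
stages = [
    ("records returned by keyword search", 102_107),
    ("records retained after screening", 478),
    ("studies reviewed in depth", 24),
]
for label, count, percent in prisma_funnel(stages):
    note = "" if percent is None else f" ({percent:.2f}% of previous stage)"
    print(f"{label}: {count}{note}")
```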

The hypotheses were tested using parametric statistical techniques. First, the survey was developed based on Table 1 by a subject matter expert group consisting of two professors (including the author) and three graduate business students, meeting in an online conference. In addition to the Table 1 skills, two social desirability items were inserted, one in each category, to test for honesty and construct validity. The author created ten user test cases by taking the survey in supervisor mode to generate the expected ranges of responses. These test results were evaluated using the planned statistical techniques as far as possible despite the small test sample size, to ensure the survey would generate relevant data to answer the RQ. Cronbach reliabilities were not estimated from the survey because the items were not cognitive elements of a factor. The hard skill and soft skill groupings were not factors in a psychological sense; they were categories. Thus, reliabilities would be meaningless for those types of items.

Based on the RQ and hypotheses, the unit of analysis was how student perceptions of hard skills and soft skills created learning effectiveness, which leads to degree effectiveness and career satisfaction. This was a within-group association relationship (no comparisons), with potentially multiple dependent variables. Thus, multivariate techniques would be needed, such as MANOVA, SEM, or machine learning (ML). MANOVA is a comparative test of group differences across multiple dependent variables, while ML can be applied either with or without a dependent variable. SEM is an association test, with or without a dependent variable. Factor development tests would not be relevant, since there was a good-quality a priori list of employer-needed job skills, as discussed in the literature review (summarized in Table 1). Correlation and SEM would therefore be appropriate for the current study. Correlation would confirm the expected relationships within the soft skills and hard skills category groups, as well as with the dependent variable(s). Preliminary tests would be needed to ensure the data met the assumptions for correlation and SEM, or otherwise to select a nonparametric test. Once correlation was established, the iterative process of developing an SEM model could take place. The confidence level was set to 95%, but in several SEM techniques a specific significance level is applied according to best practices (e.g., Hair et al. 2010; Keppel and Wickens 2004).

Ethics and participants

The authors had no conflicts of interest. The first author was the principal investigator (PI). The PI designed the study, wrote the initial paper, conducted the analysis, interpreted the results, and served as corresponding author. The PI was certified as a research professional and for conducting research involving human subjects. The PI obtained ethical clearance to conduct the study from the internal research review board. The PI was employer funded, and there was no specific external funding for the project. Participants provided informed consent by reading the disclosure in the online survey and agreeing with a check box, as well as by continuing with the process. Participants were informed they could withdraw from the survey at any time and have their data deleted. No personally identifiable information was captured, and IP addresses were not saved.

The intended population was graduated business discipline students in the USA. New York State (NYS) is one of the most populous states and is generally considered one of the largest (but not the only) business centers in the country. This was the rationale for purposively selecting NYS for the sample. The other author was a long-time adjunct business professor at several large universities in NYS, having taught over 5000 business students and retained contact information for many of them. Over 2500 students were purposively selected based on having graduated with a business-related Bachelor of Science degree in NYS and being employed full-time on or after March 14, 2022. Participants were invited through social media and email messages to complete the streamlined Internet-based survey; the final sample size was 900 after data cleaning and deletion of records with missing items.

Materials and procedures

A focus group of subject matter experts, consisting of two professors and three recently graduated business students, was formed to develop and pilot the survey instrument. The purpose of the group composition was to provide the student, employer, and educational institution views of the required job skills in the business discipline after the pandemic. Each member was asked to read Table 1 and the literature review, conduct any additional research they needed, and make personal notes before meeting online. In the online meeting, the focus group members reduced the job skills in Table 1, changed the wording, and created a succinct list. The dependent variable was created to reflect GPA, as done by NACE, but the item was worded to reflect how much the student actually learned over all courses from their perspective. After a long discussion, the students made logical arguments that they viewed leadership and followership as a component of teamwork within group projects, since students always took turns in different roles across courses, either as a requirement (in early subjects) or by applying organizational behavior theories (in later subjects). Students argued leadership was no different from any other soft skill, but logically it was part of teamwork as they learned it. They also argued that community engagement, in the form of volunteerism or anything beyond the class, was not part of the curriculum. They agreed it was valuable, but it was not a course; it was something to add to a resume if experienced, not a job skill. The student arguments were accepted. As suggested by the focus group, two questions were added: one to assess the perceived match to the actual workplace job skills (job–skill match) and one to indicate whether the tuition spent was worth it (degree return on investment, ROI).
The final list of survey items was: teamwork, emotional intelligence, ethical decision making, problem solving, communications, quantitative, qualitative, and technology skills, plus the three dependent variables learning effectiveness, job–skill match, and degree ROI. These are briefly described in the survey located in the appendix. The list was kept succinct so the survey could be streamlined, thereby encouraging a good response from students using smartphones. The survey was then piloted with existing students in a capstone business course, who were asked to comment on ease of use, face validity (whether they understood it), and whether more questions should be asked. Only minor changes were suggested; pilot students agreed a short survey was better than a complex one.

The authors created the response scale based on the literature review. The same question prompt was used for each skill: I effectively learned this job skill from courses or individual/group projects during my degree. A 1–5 interval response scale was applied, where 1 = strongly disagree, 2 = disagree, 3 = neutral/do not know, 4 = agree, 5 = strongly agree. This scale had been used consistently for end of course opinion surveys throughout most NYS student degree programs, so students would be familiar with it.

As mentioned earlier, social desirability items (SDI) were added. One researcher described two categories of methods to improve accuracy when using surveys to collect data. The first category was the common practice of using unobtrusive statistical techniques, including checking response time delays between items, flagging consecutive identical answers across many items, identifying a high standard deviation (variability) between items for a respondent, and calculating the Mahalanobis distance on responses between respondents to identify outliers. The second category was obtrusive screening by inserting bogus items, instructed items, or honesty checks to which the respondent should answer strongly disagree (1) or strongly agree (5), such as ‘this is the year 1000’ (should answer strongly disagree), ‘select 5 as the answer for this question’ (should answer 5), or ‘I carefully reflected on each question before answering’ (should answer strongly agree). The second category was applied in the current study because respondents could inadvertently trigger the flags of the first category, for example through delays between items caused by interruptions, or through legitimately identical learning effectiveness answers across many skills. At the beginning of the survey, participants were told to read the questions carefully because some items were placed there to check their honesty and integrity. The following two SDI questions were added to check honesty as well as understanding:

- Rest: catching up on my sleep during class or laboratory work [added to soft skills].
- Play: applying statistical software to cheat on tests or examinations [added to hard skills].
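For comparison, the first, unobtrusive category described above can be illustrated with a minimal sketch of two of its checks: the longest run of consecutive identical answers and the within-respondent standard deviation. Both thresholds, the function names, and the example answer vectors are hypothetical placeholders rather than values from the study, which applied the obtrusive category instead; response-time and Mahalanobis-distance checks are omitted for brevity.

```python
import statistics


def longest_identical_run(responses):
    """Length of the longest run of consecutive identical answers."""
    if not responses:
        return 0
    best = current = 1
    for prev, cur in zip(responses, responses[1:]):
        current = current + 1 if cur == prev else 1
        best = max(best, current)
    return best


def within_person_sd(responses):
    """Variability of one respondent's answers across items (population SD)."""
    return statistics.pstdev(responses)


def flag_respondent(responses, max_run=8, max_sd=1.8):
    """Return reasons a respondent might be flagged (thresholds are hypothetical)."""
    reasons = []
    if longest_identical_run(responses) >= max_run:
        reasons.append("long run of identical answers")
    if within_person_sd(responses) >= max_sd:
        reasons.append("unusually high within-person variability")
    return reasons


# Hypothetical 1-5 answers to 11 items for two respondents.
print(flag_respondent([5] * 11))
print(flag_respondent([1, 5, 1, 5, 1, 5, 1, 5, 1, 5, 1]))
```

The first example also shows why the study avoided these checks: a respondent who genuinely learned every skill well would be flagged for answering 5 throughout.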

The data were checked to ensure all responses were valid, and the SDI was checked to detect and eliminate dishonest or insincere responses. MPLUS was used to check the data assumptions, as well as to perform correlation and SEM.

When SEM is applied, a key concern is whether the exogenous independent factors truly cause the endogenous dependent variables. This is difficult to validate, since SEM employs regression equations for the relationships. In an empirical test using SEM, as in the current study, the underlying problem of endogeneity can be traced to the error term (the variance not accounted for), which could result from misspecification such as a missing instrumental variable. Instrumental variables in SEM could refer to moderating or mediating factors between independent factors and dependent variables. Mediation refers to a hidden or latent mediator variable that influences how an independent factor impacts the dependent variable. For example, under endogeneity, part of the variation from an independent factor is explained by the mediator on the path to the dependent variable, leading to a biased estimate of that path in the SEM.

Ultimately, endogeneity can be checked by adding more control variables to the model, especially if they are objectively measured, such as demographics or test scores (Kim et al. 2015). Alternatively, endogeneity in SEM can be measured using formulas within statistical software, which is the approach taken here, as this does not add more variables to the context and therefore results in a more parsimonious model. As noted by Knock (2014), adding explanatory instrumental variables does not really solve the problem. An example of mediation in the current study would be if prior aptitude influenced how well soft skills were learned by the business students and therefore how well they matched what the employer needed for a specific job. Mediation could range from weak to strong and be complicated by multiple independent factors as well as multiple dependent variables. By comparison, endogeneity in SEM caused by moderation refers to conditional changes in the dependent variable, meaning that certain values of the moderator or instrumental variable change how the independent factor impacts the dependent variable. According to Knock (2014), mediation in SEM can be checked through variance inflation factor (VIF) estimates of the independent factors, which should be lower than 3.3 with a significant p value to indicate each one truly captured the variance of the endogenous variable, subject to the effect size. Knock (2014) recommended developing test models with interaction factors, created by multiplying the factors together, to test moderation. Both of these approaches, mediation checking through VIF estimates and moderation checking through factor interaction, were applied in the current study.
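The two checks attributed to Knock (2014) can be sketched with the standard library. The example below computes each factor's VIF as the corresponding diagonal element of the inverse correlation matrix (equivalent to 1 / (1 − R²) from regressing that factor on the others) and builds a moderation term as the product of mean-centered factors. The function names, the mean-centering choice, and the eight-respondent scores are assumptions for illustration; the study itself used MPLUS, and this sketch omits the p-value and effect-size parts of the criterion.

```python
import math
import statistics


def pearson(x, y):
    """Pearson correlation between two equal-length numeric sequences."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def invert(matrix):
    """Invert a small square matrix with Gauss-Jordan elimination."""
    n = len(matrix)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular matrix: perfect collinearity")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def vifs(columns):
    """VIF_j = j-th diagonal of the inverse correlation matrix = 1 / (1 - R_j^2)."""
    k = len(columns)
    corr = [[pearson(columns[i], columns[j]) for j in range(k)] for i in range(k)]
    inv = invert(corr)
    return [inv[i][i] for i in range(k)]


def centered_product(x, z):
    """Interaction term: product of the mean-centered factors."""
    mx, mz = statistics.fmean(x), statistics.fmean(z)
    return [(a - mx) * (b - mz) for a, b in zip(x, z)]


# Hypothetical factor scores for eight respondents (not study data).
soft = [3, 4, 5, 4, 2, 5, 3, 4]
hard = [2, 4, 4, 5, 3, 4, 2, 3]
interaction = centered_product(soft, hard)

for name, vif in zip(["soft", "hard", "soft x hard"], vifs([soft, hard, interaction])):
    status = "below 3.3" if vif < 3.3 else "at or above 3.3"
    print(f"{name}: VIF = {vif:.2f} ({status})")
```

Mean-centering before multiplying is a common way to reduce the collinearity that a raw product term has with its own components, which would otherwise inflate the VIFs being checked.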

Results and discussion

Preliminary analysis

After the two social desirability items were tested for incorrect responses (a record was flagged unless both SDI responses were 1), only one record was deleted. Both SDI fields were then removed from the dataset so they would not be processed. The valid response rate was 35%. All students had graduated from a business-related degree, all claimed to be employed full-time (self-reported), and most (98%) reported working in NYS. Slightly over half were male (51%); the mean age was 27.1 (SD = 4.1), ranging from 24 to 37.
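The cleaning rule above can be sketched as a small filter, assuming it means a record is kept only when both SDI items were answered 1 (strongly disagree), the honest answer to both items listed earlier. The field names, the record layout, and the completeness check (mirroring the deletion of records with missing items) are hypothetical; the study does not publish its cleaning code and ran its analysis in MPLUS.

```python
# Response codes from the 1-5 scale used in the survey.
SCALE = {1: "strongly disagree", 2: "disagree", 3: "neutral/do not know",
         4: "agree", 5: "strongly agree"}
SDI_FIELDS = ("sdi_rest", "sdi_play")  # hypothetical column names


def is_complete(record, item_fields):
    """True when every skill item holds a code from the 1-5 scale."""
    return all(record.get(field) in SCALE for field in item_fields)


def passes_sdi(record):
    """True only when both honesty items were answered 1 (strongly disagree)."""
    return all(record.get(field) == 1 for field in SDI_FIELDS)


def clean(records, item_fields):
    """Drop incomplete or SDI-failing records, then remove the SDI fields."""
    kept = []
    for record in records:
        if is_complete(record, item_fields) and passes_sdi(record):
            kept.append({k: v for k, v in record.items() if k not in SDI_FIELDS})
    return kept


# Hypothetical records; only the first survives cleaning.
records = [
    {"teamwork": 5, "technology": 3, "sdi_rest": 1, "sdi_play": 1},
    {"teamwork": 4, "technology": 4, "sdi_rest": 2, "sdi_play": 1},  # fails SDI
    {"teamwork": 4, "technology": None, "sdi_rest": 1, "sdi_play": 1},  # missing item
]
cleaned = clean(records, ["teamwork", "technology"])
print(len(cleaned), cleaned)
```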

Table 2 summarizes the Pearson correlations and descriptive statistics for the job skills students learned during their degree program, as perceived 0.5 to 3 years after graduating while working full-time. The first salient observation is that all but two of the correlations were statistically significant, most at p < 0.001, which indicates strong inter-relationships, as anticipated. A high positive or negative correlation (|r| ≥ 0.4) between items in a factor or group is an assumption for developing an SEM structure, and some experts recommend significant correlations ≥ 0.5 for strong models.
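The benchmark screening described above can be sketched as follows: compute Pearson's r for every pair of variables, keep the pairs whose absolute value meets the 0.4 benchmark, and report the t statistic used to test r against zero, t = r·√((n − 2)/(1 − r²)) with n − 2 degrees of freedom. The variable names and ratings are hypothetical, and p-values would additionally require a t distribution function (for example, from SciPy).

```python
import itertools
import math
import statistics


def pearson(x, y):
    """Pearson correlation between two equal-length numeric sequences."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_for_r(r, n):
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom."""
    return r * math.sqrt((n - 2) / (1 - r * r))


def pairs_meeting_benchmark(data, benchmark=0.4):
    """Return (name_a, name_b, r) for every pair with |r| >= benchmark."""
    result = []
    for a, b in itertools.combinations(data, 2):
        r = pearson(data[a], data[b])
        if abs(r) >= benchmark:
            result.append((a, b, r))
    return result


# Hypothetical 1-5 ratings for five respondents (not study data).
data = {
    "teamwork": [1, 2, 3, 4, 5],
    "communications": [2, 1, 4, 3, 5],
    "technology": [3, 1, 1, 3, 2],
}
for a, b, r in pairs_meeting_benchmark(data):
    print(f"{a} vs {b}: r = {r:.3f}, t = {t_for_r(r, len(data[a])):.2f}")
```

Because t grows with √(n − 2), even modest correlations become highly significant at N = 900, which is one reason a magnitude benchmark such as 0.4 is useful alongside p values.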

The soft skills were all significantly correlated with one another (top left of the table). In this scenario, where the soft skills refer to tacit, applied, cross-disciplinary subjects learned during a 4-year business degree, these would be expected to be associated with one another. For example, communications and teamwork were covered in all basic courses early on, and subsequent courses involved group work in which students decided team roles based on theories, leadership was rotated, significant problem solving was needed with ethical as well as CSR considerations, and all communications skills were required to build and then present the end result of their projects. Likewise, the hard skills were significantly correlated with one another (middle left of the table). This was also anticipated because the degree program was arranged to teach the basic general skills first and successively build on those by integrating theories into more advanced models or methods. For example, basic algebra and statistics, along with technology software/tools, were taught early on, and later those theories and methods were advanced in quantitative math for business, lean six sigma, group case study assignments, and individual or team research projects.

All but two of the correlations between the skills and dependent variables were significant and positive, but many fell below the 0.4 benchmark for developing a strong causal model. In the current research design, positive correlations were anticipated, since the independent factors and dependent variables were collected at the same point in time from the same respondent and were based on a self-reported effectiveness scale. Naturally, one would anticipate that students would want to report higher rather than lower values, although the SDI control was administered to filter out insincere respondents. Degree return on investment (ROI) had strong positive correlations with problem solving (0.469, p < 0.001), communications (0.567, p < 0.001), and technology (0.462, p < 0.001). The other significant correlations with degree ROI that were close to the benchmark were teamwork (0.372, p < 0.01), ethical decisions (0.294, p < 0.01), quantitative (0.369, p < 0.01), and qualitative (0.369, p < 0.01).

Learning effectiveness was an overall self-perceived measurement of student learning across all course subjects and topics. Learning effectiveness had significant positive correlations with all skills; the highest were with problem solving (0.442, p < 0.001), communications (0.525, p < 0.001), and technology (0.454, p < 0.001). The other significant correlations with learning effectiveness, just below the benchmark, were teamwork (0.313, p < 0.01), EI (0.262, p < 0.05), ethical decisions (0.287, p < 0.01), quantitative (0.365, p < 0.01), and qualitative (0.385, p < 0.01). Job–skill match had a strong, significant positive correlation with communications (0.427, p < 0.001), and weaker yet significant positive correlations with teamwork (0.229, p < 0.05), problem solving (0.380, p < 0.01), quantitative (0.336, p < 0.01), qualitative (0.326, p < 0.01), and technology (0.36, p < 0.01). As noted earlier, EI and ethical decisions were not significantly correlated with job–skill match.

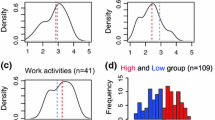

The descriptive statistics, based on the means, show how effective the job skill learning was as perceived by the students. The medians were also calculated because they show where the bulk of the response values fell on the scale. If the median is higher than the mean (say, M = 4 and median = 5), this typically indicates a negatively skewed distribution, in which most student responses were at or above the mean (here, 5), with fewer, lower responses below it. That is a desirable result for the learning effectiveness scale in the current study design. In the table, the highest skill means were for teamwork (M = 5, SD = 0.5, median = 4.7), communications (M = 5, SD = 0.6, median = 4.8), and qualitative (M = 5, SD = 0.3, median = 4.9). Next, EI had a reasonably high mean but a lower median (M = 4, SD = 0.6, median = 3.3), ethical decisions was balanced (M = 4, SD = 0.9, median = 4), and problem solving also had a reasonably high mean but considerable deviation and a lower median (M = 4.3, SD = 1.2, median = 3.3). Technology had a moderate mean but a lower median (M = 3.9, SD = 1, median = 3.3), while quantitative had a disappointingly low mean and median (M = 2.9, SD = 1.3, median = 2.2). The low descriptive estimates for technology and quantitative skills could be due to students having to switch from traditional classroom-based instruction and group work to online-only instruction during the 2 years of the pandemic. Quantitative skills would have been affected by the switch to online-only interaction, since the laboratories where state-of-the-art software was located would not have been accessible, forcing students either to purchase expensive commercial software or to use the slower modality of Citrix remote-access software to reach the lab servers.
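The mean-versus-median reading above can be sketched in a few lines. The example also reports Pearson's second skewness coefficient, 3(mean − median)/SD, as one common way to put the gap on a standardized scale; the function name and the five responses are hypothetical. The mean-versus-median rule is a heuristic that can mislead on discrete, bounded scales such as 1–5, so it is best read alongside a frequency table.

```python
import statistics


def skew_summary(responses):
    """Mean, median, Pearson's second skewness coefficient, and a direction label."""
    mean = statistics.fmean(responses)
    median = statistics.median(responses)
    sd = statistics.stdev(responses)
    # Pearson's second skewness coefficient: 3 * (mean - median) / SD.
    coefficient = 3 * (mean - median) / sd if sd else 0.0
    if median > mean:
        direction = "negative skew (bulk of responses above the mean)"
    elif median < mean:
        direction = "positive skew (bulk of responses below the mean)"
    else:
        direction = "no skew indicated by mean versus median"
    return mean, median, round(coefficient, 2), direction


# Hypothetical 1-5 learning effectiveness responses.
print(skew_summary([5, 5, 5, 4, 3]))
```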

The strong, significant positive correlations among the dependent variables were exactly as hoped for in the current research design. These high correlations indicated strong links between the perceived job skills learned in college and what business students felt was required in their present job. The significant positive correlation of 0.895 (p < 0.001) between overall learning effectiveness and degree ROI was highly desired in the current study, as this implied a link between learning job skills and degree value. The degree ROI mean was 5.0 (SD = 0.7, median = 4.9), which corroborates that most students agreed or strongly agreed the tuition spent on the degree was worth what they learned.

Likewise, the significant positive correlation of 0.789 (p < 0.001) between job–skill match and degree ROI suggests students saw a link between their perceived learning competence and what they paid for it over the 4-year investment. Similarly, the high learning effectiveness mean of 4.8 (SD = 0.5, median = 5) confirmed most business students thought they effectively learned the skills during the degree. Finally, the significant positive correlation of 0.848 (p < 0.001) between learning effectiveness and job–skill match revealed that business students who thought they learned the skills effectively in college also felt those skills were a good fit for, and prepared them well for, their current employment. The job–skill match descriptive estimates were less favorable, with a mean and median of 3.6 (SD = 1.4) and considerable variation; since 3 was the midpoint of the 1–5 response scale, this implies only slightly more than half of business students felt the skills taught in college matched the required job skills in their current employment. This is useful to know, as it clarifies that students recognized the course subjects taught during the degree need to be improved to better match job skills in their current employment, at least according to almost half of the students.
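A mean of 3.6 on a 1–5 scale does not by itself fix how many respondents agreed, so the proportion of agree or strongly agree answers could be computed directly from the raw job–skill match responses. The minimal sketch below, using hypothetical data and a hypothetical function name, shows two samples with the same mean of 3.6 but agreement shares of 80% and 40%.

```python
import statistics


def share_at_or_above(responses, threshold=4):
    """Proportion of 1-5 responses at or above `threshold` (4 = agree)."""
    if not responses:
        raise ValueError("no responses")
    return sum(r >= threshold for r in responses) / len(responses)


# Two hypothetical samples with the same mean (3.6) but different agreement shares.
sample_a = [4, 4, 4, 4, 2]
sample_b = [5, 5, 3, 3, 2]
for label, sample in (("A", sample_a), ("B", sample_b)):
    print(label, statistics.fmean(sample), share_at_or_above(sample))
```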

SEM development and hypothesis test results

Overall, these results were statistically strong but not sufficient to answer the hypotheses. SEM can go beyond the bivariate correlations discussed above by combining all skills with all three dependent variables, giving a more inclusive assessment of how business students perceived their skill learning effectiveness and how those skills matched their current employment. Bivariate correlations evaluate only two variables at a time, while SEM evaluates all factors and variables simultaneously, showing the importance and direction of all the relationships.

Iterations of SEM were executed to build and refine the student job skill model shown in Fig. 2. Because SEM is complex, the design parameters are described ahead of the results to help other researchers. The factor and variable abbreviations are explained at the bottom of the model. Solid lines represent strong significant relationships, dotted lines refer to indirect associations, and lightly shaded lines refer to weak associations. The estimates are multivariate standardized correlation coefficients, which is why the three factors SfS, HrS, and CrS were fixed at 1 in a recursive manner to anchor the standardization; all beta coefficient estimates are therefore relative to those. Rather than explain the coefficients, more attention will be given later to the effect sizes. Two latent variables were created, for hard skills (HrS) and soft skills (SfS), as measured by their respective exogenous indicators. Exogenous refers to an external input factor. A path was set between each exogenous factor and its respective skill indicators to operationalize the conceptual model of Fig. 1, Table 1, and the survey (appendix). After several refinement steps, one endogenous latent variable, career success (CrS), was created to partition the combined indicators of the eight skills through the two exogenous factors, SfS and HrS. Endogenous refers to an internal, affected variable, such as the outcome of a process. The paths were set from the skills toward their respective exogenous factors. Next, paths were defined from each of the dependent variables, JSM, LrP, and DRO, toward the endogenous latent variable CrS. Finally, the exogenous factors SfS and HrS were linked to the endogenous CrS variable. This SEM was not a predictive model but rather an explanatory model, used to confirm that the data were a relatively good fit to the hypothetical model.
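The path structure described above can be encoded as a small directed graph and checked for being recursive, that is, free of feedback loops. This is a sketch under stated assumptions: the eight skill abbreviations (Tech, Qual, Quant, Comm, Prob, Team, EI, Eth) are hypothetical labels rather than those used in Fig. 2, and the arrow directions follow the prose description only. The check uses Kahn's topological-sort algorithm.

```python
from collections import defaultdict, deque

# Hypothetical indicator labels; the study's own abbreviations are in Fig. 2
HARD = ["Tech", "Qual", "Quant", "Comm"]
SOFT = ["Prob", "Team", "EI", "Eth"]
DEPENDENT = ["JSM", "LrP", "DRO"]


def build_paths():
    """Directed paths as described in the text: skills -> factor, DVs -> CrS, factors -> CrS."""
    paths = [(s, "HrS") for s in HARD]
    paths += [(s, "SfS") for s in SOFT]
    paths += [(d, "CrS") for d in DEPENDENT]
    paths += [("HrS", "CrS"), ("SfS", "CrS")]
    return paths


def is_recursive(paths):
    """True if the path diagram has no feedback loops (Kahn's algorithm)."""
    successors = defaultdict(list)
    indegree = defaultdict(int)
    nodes = set()
    for src, dst in paths:
        successors[src].append(dst)
        indegree[dst] += 1
        nodes.update((src, dst))
    queue = deque(n for n in nodes if indegree[n] == 0)
    visited = 0
    while queue:
        node = queue.popleft()
        visited += 1
        for nxt in successors[node]:
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                queue.append(nxt)
    # Every node is reached only if no cycle blocks the ordering
    return visited == len(nodes)


paths = build_paths()
print(len(paths), is_recursive(paths))          # 13 True
print(is_recursive(paths + [("CrS", "HrS")]))   # False: adds a feedback loop
```

Adding any path back from CrS to one of its inputs would make the model non-recursive, which the second call demonstrates.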

The SEM results were good. There were numerous model fit coefficients, so only the pertinent ones are discussed below; the complete SEM parameter details are listed in the appendix. The first SEM estimates show how well the model fitted the observed data compared with a hypothetically perfect structure, so a non-significant chi-square p value (p > 0.05) is desired. The good-fit estimates were: χ2 = 37.56, DF = 27, AIC = 3124.224, BIC = 3240.098, p = 0.085, with a 0.25 baseline model minimum function test statistic. The Akaike information criterion (AIC) and Bayesian information criterion (BIC) reflect the number of parameters in the model and the sample size using a log-likelihood calculation; there is no absolute benchmark for them, since they are meant for comparing competing models, and other fit indexes are better for gauging relative quality. The comparative fit index (CFI) was 0.984, the Tucker–Lewis index (TLI) was 0.968, and the relative non-centrality index (RNI) was 0.984; all were acceptable because they were ≥ 0.9, indicating a good fit according to Hair et al. (2010). The parsimony normed fit index (PNFI) was 0.465, slightly lower than the desired 0.5 (Hair et al. 2010; Keppel and Wickens 2004). The PNFI is similar to the CFI but adjusted for the number of parameters. Considering there were 27 used and 50 free, this is a noncritical estimate, since it suggests the model could be slightly over-specified, with more interrelationships implemented than practically needed. To check this, endogeneity was examined using variance inflation factors (VIFs), and interaction terms for all factors (exogenous factors in the path pointing to the endogenous dependent variables) were examined in the SEM, following the recommendations of Kim et al. (2015) and Knock (2014). All VIFs were under 3.3 and most were close to 3.0, and there were no significant interaction terms other than those discussed below as added to the model.
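The chi-square p value can be reproduced from χ2 = 37.56 and DF = 27 with a short, dependency-free sketch that evaluates the upper tail through the series for the regularized lower incomplete gamma function. This is an illustration only; in practice a library routine such as `scipy.stats.chi2.sf` would be the usual choice.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail p value of a chi-square statistic, computed as 1 - P(df/2, x/2),
    where P is the regularized lower incomplete gamma function (series form).
    Adequate for moderate statistics such as the one in this study."""
    a, z = df / 2.0, x / 2.0
    if z <= 0:
        return 1.0
    term = total = 1.0 / a  # n = 0 term of the series
    n = 1
    while term > 1e-15 * total and n < 10_000:
        term *= z / (a + n)
        total += term
        n += 1
    lower = total * math.exp(a * math.log(z) - z - math.lgamma(a))
    return 1.0 - lower


p = chi2_sf(37.56, 27)
print(f"p = {p:.3f}")  # close to the reported p = 0.085
```

Because p exceeds 0.05, the chi-square test does not reject the hypothesized model, which is the desired outcome for this fit statistic.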

However, the above estimates were insufficient on their own to confirm the quality of the SEM because of covariance among the predictors (as indexed by the variance inflation factors) and the complexity of the relationships. The most rigorous SEM measures of quality are the error approximations. The root mean square error of approximation (RMSEA) was 0.072, which was acceptable because it was ≤ 0.08; values ≤ 0.05 signify a close-fitting, parsimonious model with very little unexplained error (Hair et al. 2010; Keppel and Wickens 2004). As further evidence that the RMSEA was valid, the software calculated a p value testing whether the RMSEA was significantly greater than the close-fit value of 0.05; this p value was 0.244, which was not significant, so close fit was not rejected and the RMSEA estimate was accepted. The standardized root mean square residual (SRMR) was 0.045, which was also acceptable because it was ≤ 0.08 (Hair et al. 2010; Keppel and Wickens 2004). SRMR summarizes the standardized differences between the observed covariances and those implied by the fitted model, that is, what is left unexplained by the hypothesized model.

Several generic quality measurements can also be mentioned for comparison with other studies. The goodness of fit index (GFI) is a well-known SEM fit benchmark, analogous to R² in multiple regression, which ought to be ≥ 0.9, with strong models ≥ 0.95 (Hair et al. 2010; Keppel and Wickens 2004). In the current study, GFI was 0.996 (acceptable as a strong model). Likewise, the adjusted goodness of fit index (AGFI), which is the GFI adjusted for the number of parameters, similar to adjusted R², was also high at 0.989, and it was acceptable since it was ≥ 0.9 (Hair et al. 2010; Keppel and Wickens 2004).
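The benchmark comparisons in this section can be collected into a single check. The sketch below assumes the cutoffs cited from Hair et al. (2010): ≥ 0.90 for CFI, TLI, RNI, GFI, and AGFI, ≥ 0.50 for PNFI, and upper limits of 0.08 for RMSEA and SRMR. The dictionary layout and the function name `check_fit` are illustrative, not part of any SEM package.

```python
# Fit indexes as reported for the fitted model
REPORTED = {"CFI": 0.984, "TLI": 0.968, "RNI": 0.984, "PNFI": 0.465,
            "GFI": 0.996, "AGFI": 0.989, "RMSEA": 0.072, "SRMR": 0.045}

# (direction, cutoff): ">=" means higher is better, "<=" means lower is better
CUTOFFS = {"CFI": (">=", 0.90), "TLI": (">=", 0.90), "RNI": (">=", 0.90),
           "PNFI": (">=", 0.50), "GFI": (">=", 0.90), "AGFI": (">=", 0.90),
           "RMSEA": ("<=", 0.08), "SRMR": ("<=", 0.08)}


def check_fit(reported, cutoffs):
    """Return {index: True/False} depending on whether each index meets its cutoff."""
    results = {}
    for name, value in reported.items():
        direction, cutoff = cutoffs[name]
        results[name] = value >= cutoff if direction == ">=" else value <= cutoff
    return results


results = check_fit(REPORTED, CUTOFFS)
failed = [name for name, ok in results.items() if not ok]
print(failed)  # ['PNFI']
```

Only the PNFI misses its benchmark, matching the text's observation that the model may be slightly over-specified while otherwise fitting well.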

Finally, the specific R² effect size was calculated for each SEM variable. Higher effect sizes are better because they indicate more variance was accounted for in the SEM path model. The benchmark for a strong effect size was 0.35 (Hair et al. 2010; Keppel and Wickens 2004). The dependent variables are discussed first, in order of importance, since they represent the RQ and hypotheses. Career success, the overall endogenous dependent variable, had the highest effect size of 96.8%, and it was strongly associated with degree ROI and learning effectiveness in the Fig. 2 fitted model but only indirectly linked to job–skill match. The dependent variable learning performance had an effect size of 95.6%, degree ROI 83.3%, and job–skill match 75.2%. Based on the above model fit indexes and effect sizes, the third hypothesis (H3: learning effectiveness and degree effectiveness leads to perceived career success) can be accepted.

Next, the two groups of latent exogenous indicators are discussed, in order of importance. In the hard skills group, technology was the most important, with an effect size of 75.3%, followed by qualitative at 62.5%, quantitative at 62%, and communications at 59.8%. Again, based on the aforementioned model fit indexes and effect sizes, the next hypothesis (H1: hard skills learning effectiveness leads to degree effectiveness student perceptions) can be accepted. In the soft skills group, problem solving was the most important, with an effect size of 78.7%, followed by teamwork at 69.4%, emotional intelligence at 52.6%, and ethical decisions at 46.3%. Once again, based on the aforementioned model fit indexes and effect sizes, the final hypothesis (H2: soft skills learning effectiveness leads to degree effectiveness student perceptions) can be accepted.
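The orderings and the 0.35 benchmark can be checked mechanically from the reported effect sizes. This minimal sketch uses only the percentages stated in this section; the grouping labels and the function name `rank_by_effect_size` are hypothetical.

```python
# Effect sizes (R², in percent) as reported for the fitted model
EFFECT_SIZES = {
    "dependent variables": {"CrS": 96.8, "LrP": 95.6, "DRO": 83.3, "JSM": 75.2},
    "hard skills": {"technology": 75.3, "qualitative": 62.5,
                    "quantitative": 62.0, "communications": 59.8},
    "soft skills": {"problem solving": 78.7, "teamwork": 69.4,
                    "emotional intelligence": 52.6, "ethical decisions": 46.3},
}
STRONG_BENCHMARK = 35.0  # the 0.35 benchmark expressed as a percentage


def rank_by_effect_size(group):
    """Return indicator names ordered from largest to smallest effect size."""
    return [name for name, _ in sorted(group.items(), key=lambda kv: kv[1], reverse=True)]


rankings = {g: rank_by_effect_size(v) for g, v in EFFECT_SIZES.items()}
all_values = [v for group in EFFECT_SIZES.values() for v in group.values()]
for g, order in rankings.items():
    print(g, "->", ", ".join(order))
print("range:", min(all_values), "to", max(all_values))               # range: 46.3 to 96.8
print("all strong:", all(v >= STRONG_BENCHMARK for v in all_values))  # all strong: True
```

Every effect size clears the 0.35 benchmark, and the smallest (ethical decisions, 46.3%) and largest (career success, 96.8%) values define the range reported for the fitted model.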

Conclusion and recommendations

Looking back at the rationale for initiating this study, the RQ focused on how recently graduated business college students in the USA view the effectiveness of their degree in terms of learning relevant job skills. The answer was relatively well, but with a few theoretical concerns. A reasonably large sample was drawn from a New York State (NYS) population of graduated business students, all of whom were employed when surveyed in 2022 after the pandemic. From a research design perspective, a complex, robust SEM was created that fitted the student data well (GFI = 0.996, AGFI = 0.989, CFI = 0.984, TLI = 0.968, RNI = 0.984, PNFI = 0.465, RMSEA = 0.072, SRMR = 0.045). Most of these indexes met their benchmarks, with only the PNFI falling slightly below 0.5. The effect sizes of the latent factors, indicators, and dependent variables in the fitted model ranged from 46.3 to 96.8%, which are very strong effect sizes. All three hypotheses were accepted. In summary, the model indicated that business students thought they effectively learned the hard skills and soft skills from the degree, that those skills generally matched their current job, and that in turn the tuition paid provided a good investment return, leading to satisfaction with their career. By comparison, these effect sizes were higher than the 20% reported in a similar SEM study of engineering students by Jeswani (2016).

Insights can be gleaned by integrating the descriptive statistics with the SEM effect sizes and comparing them to similar studies. Learning performance had an effect size of 95.6% in the fitted model and a mean of 4.8 on a 1–5 interval scale in the data (SD = 0.5, median = 5), which confirmed most business students thought they effectively learned the job-related skills during their business degree program. The degree ROI effect size was 83.3% in the SEM, while the mean was 5.0 in the data (SD = 0.7, median = 4.9), which implied most students agreed or strongly agreed the tuition they spent on the degree was worth the job-related skills they had learned. It is important to note that the individual level of analysis was applied from the student perspective, after the pandemic, and when students were actively employed, as recommended by Janssen et al. (2021). These results generally agreed with relevant studies, namely Adrian (2017), Messum et al. (2016), and Jeswani (2016). However, the Jeswani (2016) study was problematic, since one of its three SEM factors was non-significant, which meant that an entire subgroup of skills had no impact in his SEM. Adrian (2017), as well as Messum et al. (2016), reported relatively high student values near the top of their survey response scales, although Messum et al. (2016) used a non-standard 0–4 interval scale.

A few issues surfaced when looking more deeply into the SEM results. Interestingly, all soft skills had large effect sizes in the SEM and relatively high means of 4.0 to 5.0 in the survey response data. These soft skills included teamwork, emotional intelligence, ethical decision making, and problem solving. The results indicated business students in the sample learned soft skills effectively, felt those skills taught during the courses were relevant to their current employment, and as a result were happy with the degree return on investment. Two of the hard skills had high survey response values and effect sizes in the SEM, namely qualitative (non-numeric theories like organizational behavior) and communications (i.e., reading, writing, speaking, presenting). These results suggested students thought they learned those hard skills well, that the skills were related to what was needed in their current employment, and that this led to satisfaction with the tuition spent on their degree, the ROI. The soft skills and hard skills reported by Adrian (2017), as well as those in the Messum et al. (2016) paper, were also relatively high, similar to the current study, keeping in mind the different scale used by Messum et al. (2016).

However, the main problem for business students was with the technology and quantitative hard skills. Technology was the most important hard skill indicator in the SEM, with an effect size of 75.3%, but it had the second lowest mean and median in the data (M = 3.9, SD = 1, median = 3.3). Quantitative skills had the lowest mean and median in the data (M = 2.9, SD = 1.3, median = 2.2) but, paradoxically, a high effect size of 62% in the SEM. The low means and medians, high deviations, and large SEM effect sizes for technology and quantitative suggest differences between students: some learned more than others, and some felt the skills matched their current job, but those learning less may perceive a larger skill gap with their current employment. The low mean of 2.9 and median of 2.2 for quantitative are a red flag: because the median fell below the scale midpoint of 3, more than half of the business students felt they did not learn this category of job-related skills effectively. Beyond the learning stage, the lower survey responses for the dependent variable job–skill match (M = 3.6, SD = 1.4, median = 3.6) may reflect a carry-through phenomenon: the lower learning effectiveness for those two hard skills may have negatively influenced how well business students felt they were prepared for their current employment.