Abstract

The past decade has witnessed a notable transformation in the Architecture, Engineering and Construction (AEC) industry, with efforts made in both academia and industry to improve efficiency, safety and sustainability in civil projects. Such advances have greatly contributed to a higher level of automation in the lifecycle management of civil assets within a digitalised environment. To integrate the achievements delivered so far and further advance their progress, this study proposes a novel theory, Engineering Brain, by adopting the Metaverse concept in the field of civil engineering. Specifically, the evolution of the Metaverse and its key supporting technologies are first reviewed; then, the Engineering Brain theory is presented, including its theoretical background, key components and their inter-connections. Outlooks on this theory’s implementation within the AEC sector are offered as a description of the Metaverse of future engineering. The relationship between the proposed Engineering Brain theory and the Metaverse is illustrated through a comparison, and how Engineering Brain may function as the Metaverse for future engineering is further explored. By providing an innovative insight into the future engineering sector, this study can potentially guide the industry towards a new era based on the Metaverse environment.

1 Introduction

As Industry 4.0 has been increasingly adopted in the construction sector in recent years, a transformation is taking place throughout the lifecycle of civil assets. Advances in both academia and industry are improving efficiency, safety, sustainability and automation for successful project delivery. Meanwhile, massive data collected and exchanged through Internet of Things (IoT) technology are increasingly utilized to drive machine intelligence and support managerial decision-making. Digitalization constitutes another important trend in the construction industry, where building information modelling (BIM) and digital twins (DT) are intensively adopted along with other information technologies to support efficient management of civil assets. This transformation of the construction industry is being advanced not only by projects led by industrial partners [e.g., Building 4.0 CRC as a part of the Australian Government’s Cooperative Research Centre program (2020)], but also by extensive research efforts (Bock, 2015; Elghaish et al., 2020; Hautala et al., 2017).

Recently, the concept of the Metaverse was put under the spotlight by Facebook’s CEO, Mark Zuckerberg, at a developer conference (Zuckerberg, 2021). Earlier in 2021, Microsoft CEO Satya Nadella introduced the idea of an enterprise Metaverse, intended to support simulated environments and mixed reality, at the 2021 Microsoft Inspire partner event (Microsoft, 2021). The heated discussion of this idea in the industry was also joined by chipmaker Nvidia, video game platforms like Epic and Roblox, and even consumer brands like Gucci. On top of the advances in industry, pioneering scholars from a wide range of domains have proposed similar concepts that share some features of the Metaverse. In the AEC sector in particular, Prof. Wang highlighted the importance of mixed reality in the future engineering industry more than a decade ago (Wang, 2007a, 2007b). Studies on digital twins and BIM, as the digitalized versions of real-life civil assets, have been intensively conducted as well (Alizadehsalehi et al., 2020; Boje et al., 2020; Wang et al., 2015). To date, however, advances in the AEC industry, whether in robotics or DT, have failed to achieve a level of unity as high as that of the Metaverse. Research findings and technologies in the AEC sector are scattered and need to be integrated into an interoperable “universe”, so as to realize a highly automated, efficient, safe and sustainable construction environment. For this reason, this study attempts to adopt the idea of the Metaverse in the construction industry. First, it introduces the evolution and key technologies of the Metaverse, and then proposes a novel theory, Engineering Brain. Current advances in the construction sector are systematically incorporated into the Engineering Brain, with a detailed illustration. Outlooks on the future of the construction industry are presented, based on which a comparative analysis between the Metaverse and the Engineering Brain is provided. Finally, potential adaptations of the Metaverse in the AEC sector in the form of Engineering Brain are explored.

2 Metaverse and its Key Technologies

In the early phases of the evolution of the Metaverse, many similar concepts under various names were put forward, dating back to the 1980s, in a range of novels and some massively multiplayer online role-playing games (MMORPGs). The term “Metaverse” was first coined by Neal Stephenson in the science fiction novel Snow Crash in 1992 (Stephenson, 1992). A few well-known Metaverse examples in the field of entertainment include Fortnite by Epic Games and Second Life by Linden Lab, where players can create an avatar for themselves and explore the world in a virtual environment. In a sense, the Metaverse resembles a parallel world, where human activities currently occurring in the physical world would also take place in a digital environment. To date, the concept of the Metaverse is still evolving, with no authoritative definition given so far. Yet, certain key elements of the Metaverse can be identified, including videoconferencing, games, email, live streaming, social media, e-commerce, virtual reality, etc. To realise such activities, the assets within a Metaverse ecosystem (e.g., avatars and items of value) should be compatible, interoperable and transferable among a variety of providers and competing products (Lanxon et al., 2021). In addition, the developing Metaverse is increasingly concerned with user-centred elements, including avatar identity, content creation, virtual economy, social acceptability, presence, security and privacy, and trust and accountability (Lee et al., 2021).

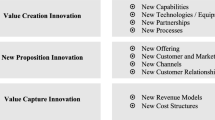

Nonetheless, not all the components of a Metaverse readily exist yet. Moving from the proprietary ecosystems used by different competing businesses and creators to a universal and integrated ecosystem is key to the ultimate construction of the Metaverse. Figure 1 lists seven layers of the Metaverse (Radoff, 2021), with industrial partners in the market mapped to each layer. To facilitate the transition from the current Internet to the Metaverse, a wide range of key technologies are required, and they are mapped to the seven layers of the Metaverse as shown in Fig. 1. Specifically, the seventh layer, i.e., infrastructure, represents the underlying technologies that support the Metaverse, including future mobile networks and Wi-Fi, and hardware components such as graphics processing units (GPUs). Technologies such as virtual reality (VR), augmented reality (AR) and extended reality (XR) fall into the spatial computing layer, while relevant wearables, like VR headsets, belong to the sixth layer, i.e., human interface. The decentralization layer is related mainly to Non-Fungible Tokens (NFTs) and blockchains. Other technologies, such as Artificial Intelligence (AI) and edge and cloud computing, are involved in multiple layers and are important to the Metaverse. All the above key technologies are described in detail below.

2.1 Mixed reality (MR)

Various “reality” concepts, including Artificial Reality, Virtual Reality, Mixed Reality, Augmented Reality, Augmented Virtuality, Mediated Reality, Diminished Reality, Amplified Reality, etc., have emerged and been extensively developed over the past decades. Although their differences are subtle, the clarification provided by Wang (2007a) is beneficial. Mixed reality (MR), together with virtual reality (VR) and augmented reality (AR), resides in the field of extended reality (XR), an umbrella term covering multiple immersive technologies. By virtue of these technologies, different levels of virtuality can be delivered, ranging from partial sensations to immersive experiences. To be specific, with AR technology, the real-world environment can be overlaid with sensory modalities, whether constructive or destructive, and one’s perception of the surroundings can be altered accordingly. With VR technology, on the other hand, the user’s environment is replaced with a virtual, simulated one. As for MR, it allows for the coexistence and interaction of physical and virtual objects; its applications reside neither in the virtual world nor in the physical world, but anywhere in between on the reality-virtuality continuum. There are two sub-modes of MR, i.e., Augmented Reality (AR) and Augmented Virtuality (AV): the former augments the real environment with virtual information, while the latter embeds real content in a virtual world. Apart from the distinct AR and AV modes, many other modes within the context of the Reality-Virtuality (RV) continuum are feasible. For example, under Mutual Augmentation (MA) suggested by Prof. Wang (2007b), “real and virtual entities mutually augment each other, so as to form different augmented cells/spaces”. As a result, a mega-space where the real and virtual spaces are interwoven can be formed for seamless collaboration among different stakeholders. To realize the mega-space, a recursive augmentation process among virtual spaces and real spaces has been proposed.

Extensive implementations of XR have been developed in the fields of entertainment, education (Zweifach et al., 2019), healthcare (Andrews et al., 2019; Silva et al., 2018), tourism (Kwok et al., 2021), industrial manufacturing (Fast-Berglund et al., 2018), interior design, and architecture. The deployment of XR in the AEC industry has been increasingly studied as well (Alizadehsalehi et al., 2020; Khan et al., 2021; Wang, 2007a, 2007b), regarding not only the life-cycle phases of civil assets, but also project management and professional training. Notably, Prof. Wang (2007a) proposed a Mixed Design Space (MDS) to create a collaborative design environment for both architects and interior designers, based on the mixed-reality boundary theory proposed by Benford et al. (1996). Three dimensions constitute the mixed-reality boundaries: transportation (similar to the concept of immersion in Virtual Reality), artificiality, and spatiality. Figure 2 presents the underlying theories and relevant data communication in the proposed MDS. Table 1 lists the properties of MR boundaries and how they are implemented in the proposed MDS. A prototype of the MDS has been offered for validation.

MR boundary theory in a mutual context (Wang, 2007a)

2.2 Artificial intelligence

Artificial intelligence (AI) refers to approaches for training machines to perform tasks that typically require human intelligence. Through a proper learning process in a given environment, “intelligent agents” are able to take actions that maximize their chances of achieving specific goals (Legg et al., 2007; Poole et al., 1998). Ever since AI was founded as an academic discipline in 1956, approaches in AI research have evolved considerably, from optimization search (e.g., genetic algorithms and swarm algorithms), logic, and probabilistic algorithms (e.g., Bayesian networks, Hidden Markov models and decision theory), to machine learning and neural networks. Thanks to the availability of high-performance computers and large amounts of data, deep learning now dominates this field, given its accuracy and efficiency, and the research effort devoted to deep learning has increased drastically. AI is widely and maturely used in search engines, targeted advertising, recommendation systems, intelligent personal assistants (e.g., Siri), autonomous vehicles, game playing, and other systems. Along with AI’s widespread application, issues concerning its ethical responsibilities have been raised as well.

2.3 Computer vision

Essentially an interdisciplinary subject, computer vision aims to emulate the human visual system and derive understanding from imagery. Tasks in this field include (3D) scene reconstruction, object detection, object tracking, 3D pose estimation, image restoration, etc. A typical computer vision system in recent research uses a combination of image processing techniques to extract features, and then applies machine learning algorithms to obtain knowledge from those features. Since the advent of deep learning, the accuracy and efficiency of computer vision systems have been enhanced on several benchmark datasets and various tasks (e.g., classification, object detection and segmentation) (Pramanik et al., 2021; Ren et al., 2015; Wang, Yeh, et al., 2021). The applications of computer vision range from traffic management (Buch et al., 2011), agriculture and the food industry (Brosnan et al., 2004; Tian et al., 2020), and life-cycle management of civil assets (Feng et al., 2018; Xu et al., 2020) to disease diagnosis (Bhargava et al., 2021; Song et al., 2016).

2.4 Edge and cloud computing

Cloud computing delivers services via the cloud, including software, analytics, data storage and networking resources. Nowadays, cloud computing is deployed in many daily activities, and cloud services can be purchased as “Infrastructure as a service” (IaaS), “Platform as a service” (PaaS) or “Software as a service” (SaaS). They allow for secure data storage, enable business continuity and improve collaboration. However, with the growth of IoT, the operational cost of cloud computing is becoming an issue. To address this problem, edge computing has been proposed, which essentially brings computing resources to the “edge” of networks, so that they are closer to users or devices. In this way, latency can be reduced, and operational efficiency can be improved. An increasing number of real-time applications, such as video analytics, smart home environments and smart cities, benefit from edge computing through reduced response times (Shi et al., 2016).

2.5 Future mobile networks

The fifth generation of cellular network technology, i.e., 5G, is vital to the development of the Metaverse. The 3rd Generation Partnership Project (3GPP), a global organization that has defined and maintained the specifications for 2G GSM, 3G UMTS and 4G LTE, published Release 15 (3GPP, 2018), the first full set of 5G standards, in 2018. According to the release, 5G New Radio (NR) cellular communications are delivered using two channel coding methods, i.e., low-density parity-check (LDPC) codes (Gallager, 1962) and polar codes (Arikan, 2009). Compared to its predecessors, 5G technology allows for increased data traffic in both mobile devices and networks, brings wider bandwidths in the sub-6 GHz and mmWave bands, and can thus provide better operational performance, e.g., ultra-low latency, higher reliability and higher peak data rates. Based on the three usage scenarios identified by 3GPP, i.e., enhanced mobile broadband (eMBB), massive machine-type communications (mMTC), and ultra-reliable and low latency communications (URLLC), 5G technology can now provide new services to a wider range of users in fields such as the automotive industry, Industry 4.0, education, health, broadcasting, etc. In addition, to facilitate communication among the massive number of connected devices within IoT, 5G technology supports high connection density with acceptable energy consumption. Based on these advancements and potentials, the expanding 5G technology market is predicted to register a compound annual growth rate of 70.83% over the next several years.

2.6 Non-Fungible Token (NFT) and blockchain

Blockchain and cryptocurrency play an important role in the Metaverse, because data in a blockchain boast a unique characteristic, i.e., proof of existence: once recorded, the data cannot be overwritten, which allows them to be traced with certainty. To further improve the traceability of exchange activities among operators in a supply chain, goods are tokenized. NFT, initiated on the Ethereum blockchain and now becoming a distinct new asset class among cryptocurrencies (Dowling, 2021), has been applied in the creative industry (Chevet, 2018), the booming gaming industry (e.g., crypto games like CryptoCats, Gods Unchained and TradeStars) and many other sectors. Such tokens can be used to claim the ownership of goods at a certain point in time, support transfers in an open market, and guarantee their authenticity. Use cases facilitated by NFT and blockchain include gaming, virtual events and virtual asset trading (Wang, Li, et al., 2021), all of which contribute significantly to the Metaverse.
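
The traceability property described above can be illustrated with a toy hash chain. This is only a minimal sketch under stated assumptions: the block layout, the `token_id` and `owner` fields, and the function names are hypothetical, and a real blockchain additionally relies on distributed consensus, digital signatures and other mechanisms that are not modelled here.

```python
import hashlib
import json


def block_hash(index, payload, prev_hash):
    """Return the SHA-256 digest of a block's contents (hypothetical layout)."""
    record = json.dumps(
        {"index": index, "payload": payload, "prev_hash": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(record.encode("utf-8")).hexdigest()


def append_block(chain, payload):
    """Append a block whose hash commits to the previous block's hash."""
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    chain.append({
        "index": index,
        "payload": payload,
        "prev_hash": prev_hash,
        "hash": block_hash(index, payload, prev_hash),
    })
    return chain


def is_valid(chain):
    """Recompute every hash; editing any earlier block breaks the check."""
    prev_hash = "0" * 64
    for block in chain:
        if block["prev_hash"] != prev_hash:
            return False
        expected = block_hash(block["index"], block["payload"], block["prev_hash"])
        if block["hash"] != expected:
            return False
        prev_hash = block["hash"]
    return True


# Two hypothetical ownership records for the same tokenized good.
chain = []
append_block(chain, {"token_id": 1, "owner": "supplier_a"})
append_block(chain, {"token_id": 1, "owner": "contractor_b"})
print(is_valid(chain))  # True

# Tampering with an earlier record is detected on re-validation.
chain[0]["payload"]["owner"] = "someone_else"
print(is_valid(chain))  # False
```

Because each block's hash covers the previous block's hash, a change to any earlier ownership record invalidates the chain from that point on, which is the sense in which recorded data can be traced with certainty.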

3 Engineering Brain

3.1 Theoretical background

Theoretically, Engineering Brain draws on neuroscience, neural engineering, bionics, and cyber-physical systems from the computer science field.

3.1.1 Neuroscience

Neuroscience is the scientific study of nervous systems. It is a multidisciplinary science that combines physiology, anatomy, molecular biology, cytology, and mathematical modelling to understand the fundamental and emergent properties of neurons and neural circuits. Specifically, neuroscience investigates how digital signals pass through human brains, which brain areas are responsible for which body functions, and how different brain areas interact with each other in carrying out complex thinking and tasks (Squire et al., 2012). In neuroscience, the divergent-convergent thinking mechanism is an important discovery, which is closely related to the mechanism of Engineering Brain. There are many areas in the human brain, including the occipital lobe, parietal lobe, and frontal lobe; each area affects one or more essential functions. For instance, the occipital lobe affects one’s visual sense; and when one is making decisions or doing creative work, different brain areas interact in an active and efficient manner (Goldschmidt, 2016). These interactions involve two dominant types of thinking: divergent thinking and convergent thinking. The former is responsible for collecting information or knowledge from different perspectives, and such information is restricted to the domain knowledge that a person has. The latter, on the other hand, makes final decisions based on the results of the former (Chermahini et al., 2012). Neuroscience forms the basis for various interdisciplinary sectors, including neural engineering, which share similar working mechanisms and lay the foundation of Engineering Brain.

3.1.2 Neural engineering

Neural engineering (also called human brain engineering) draws on the fields of computational neuroscience, experimental neuroscience, neurology, electrical engineering, and signal processing of living neural tissue, and encompasses elements of robotics, cybernetics, computer engineering, neural tissue engineering, materials science, and nanotechnology. Neural engineering aims to develop techniques and devices for capturing, monitoring, interpreting and even controlling brain signals, so as to produce purposeful responses. It can bring many benefits to the medical, health care, and gaming sectors. Its typical achievements include, but are not limited to: (1) neural imaging, in which techniques such as functional magnetic resonance imaging (fMRI) and magnetic resonance imaging (MRI) are employed to scan brain structures and the activities of neural networks; (2) brain–computer interfaces, which seek to allow direct communication with the human nervous system, so as to monitor and stimulate neural circuits and to diagnose and treat neural dysfunctions; and (3) neural prostheses, which are devices that supplement or replace missing functions of the nervous system.

3.1.3 Bionics

The concept of Engineering Brain also draws on the knowledge of bionics. Also known as biologically inspired engineering, bionics is the application of biological methods and systems found in nature to the study and design of engineering systems and modern technologies. The philosophy behind bionics is that the transfer of technology between lifeforms and manufactured objects is desirable, because evolutionary pressure typically forces living organisms (fauna and flora) to become optimized (Abuthakeer et al., 2017). Bionics has inspired many modern techniques, which have been deployed in the construction and engineering sector. Such techniques include, but are not limited to: (1) robotics, in which the ways that animals move are applied to the design of robots, e.g., the robotic dogs from Boston Dynamics that can carry out inspection tasks dangerous to human engineers; (2) RFID tags, whose design mimics the way that the blue morpho butterfly’s wings reflect light, and which can efficiently read data on materials, equipment and labour; and (3) information technologies, e.g., optimization algorithms inspired by the social behaviours of animals, such as ant colony optimization and particle swarm optimization (Zang et al., 2010).

Engineering Brain can be regarded as an extension and specialisation of neuroscience, neural engineering and bionics in engineering projects. As discussed below, it relies on timely collection, interpretation and analysis of data by using advanced AI methods (similar to sensing and understanding the environment and making judgements in a human brain), sharing data among different analytics functions and project parties (similar to transferring signals among brain areas), and controlling or instructing physical entities in projects (e.g., machines and people) by sending decision-related information on time (similar to controlling body parts). Brain areas and the body co-exist in a human being; applying Engineering Brain in practical projects, however, has to deal with separate inorganic entities as well as the interactions between such entities and organic human engineers. Hence, implementation of Engineering Brain further entails the concept of cyber-physical systems from the computer science field.

3.1.4 Cyber-Physical System (CPS)

CPS is essentially a synonym of the popular concept “digital twin” (DT), which focuses on generating a cyber world (or virtual world) of the physical one (Boje et al., 2020). As shown in Fig. 3, CPS involves three worlds: the physical, cyber, and mixed worlds. To develop a CPS, all types of data describing the physical world should be collected by using various methods, such as IoT systems (to collect sensor readings), cameras (to collect images), and audio recorders (to collect voices and languages) (Ghosh et al., 2020). All the multi-modal data are stored, processed and analysed in the cyber world, where simulation and optimization are performed and informed decisions are made, in compliance with the philosophy of the data pyramid introduced before. Then, actions can be carried out in the physical world by following the optimal decisions. To boost work efficiency, certain technologies, such as AR/VR, should be employed to project the needed information onto the physical world (thus forming the mixed world), so as to guide those performing the tasks (Li et al., 2018). In this way, tasks or projects in the physical world can proceed with minimized risks while gaining maximized benefits or profits.

3.2 Definition and components of Engineering Brain

The Engineering Brain is defined as an efficient and intelligent cyber-physical system for realizing optimized decision-making for construction projects based on heterogeneous, multi-modal and life-cycle data by utilizing state-of-the-art cross-domain technologies. The working mechanism of Engineering Brain is modelled on the interactions among human brain areas.

As illustrated in Fig. 4, the Engineering Brain includes four key components: (1) the frontal lobe, (2) the occipital lobe, (3) the parietal lobe, and (4) the temporal lobe. The aim of Engineering Brain is to build up a cloud reflex arc to handle all engineering issues in a timely and effective manner, so that a project can be completed without delays and accidents, while delivering higher quality and reducing costs. The working mechanism of Engineering Brain is similar to that of a real person: the four lobes handle different types of data (i.e., doing the divergent thinking), while the superior part of the frontal lobe makes accurate predictions and decisions (i.e., doing the convergent thinking). Specifically, data on all aspects of a project are collected and processed continuously (i.e., converting data to information); different methods are used to analyse information of different types; the resultant information/knowledge is exchanged and sent to the superior frontal gyrus for prediction and decision-making; and the final results (often expressed as instructions) are sent to project entities (e.g., machines or crews) to carry out certain tasks in the physical world.

3.2.1 Parietal lobe

The parietal lobe is responsible for people’s senses of smell and touch. Therefore, to some extent, it corresponds to an IoT system that collects real-time readings from various types of sensors, such as information on the status of machines (e.g., from mounted sensors), workers (e.g., from wearable sensors), and the environment (e.g., from fixed sensors) (Gamil et al., 2020). The data collected from different sensors commonly differ in many aspects, e.g., in their formats and volumes, which can interfere with subsequent analyses. Hence, data fusion methods, including conventional filtering methods (e.g., the Kalman filter) and cutting-edge encoding methods (e.g., the BERT transformer), should be adopted to integrate data, depending on data types. All sensor data are valuable for real-time monitoring and long-term knowledge mining. The former refers to detecting abnormalities and defects by identifying unusual readings, often with various fault diagnosis techniques (Riaz et al., 2017); the latter refers to discovering common patterns or knowledge for continuous improvement, e.g., identifying users’ energy usage profiles for optimizing facility management (Alcalá et al., 2017). However, sensor readings are structured data, while 80% of data in construction projects are unstructured, e.g., in the forms of images and texts. Therefore, it is critical to collect unstructured data as well.
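
As an illustration of the conventional filtering approach mentioned above, the following is a minimal sketch of a scalar Kalman filter that fuses readings of one slowly varying quantity from sensors with different noise levels. The function name, the constant-state model, the variance values and the temperature stream are all assumptions made for illustration; they are not taken from the cited studies.

```python
def kalman_fuse(readings, process_var=1e-3, initial_estimate=0.0, initial_var=1.0):
    """Fuse noisy scalar readings of one slowly varying quantity.

    readings: iterable of (value, measurement_variance) pairs in time order,
    so sensors with different noise levels can be mixed in one stream.
    Returns the list of filtered estimates, one per reading.
    """
    estimate, var = initial_estimate, initial_var
    estimates = []
    for value, measurement_var in readings:
        # Predict: the state is assumed constant, so only uncertainty grows.
        var += process_var
        # Update: weight the new reading by its relative reliability.
        gain = var / (var + measurement_var)
        estimate += gain * (value - estimate)
        var *= 1.0 - gain
        estimates.append(estimate)
    return estimates


# Hypothetical temperature stream: a precise fixed sensor (variance 0.1)
# interleaved with a noisier wearable sensor (variance 1.0).
readings = [(20.2, 0.1), (21.5, 1.0), (19.9, 0.1), (18.7, 1.0), (20.1, 0.1)]
print(kalman_fuse(readings, initial_estimate=20.0))
```

Readings with a smaller measurement variance receive a larger gain and therefore pull the estimate more strongly, which is how the filter reconciles sensors of differing quality. Fusing several quantities at once would require the matrix form of the filter, which is not shown here.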

3.2.2 Occipital lobe

The occipital lobe handles visual signals in the human brain, so that people can see things. Therefore, in Engineering Brain, its function corresponds to collecting on-site images by using RGB and depth cameras, and then applying analysis methods from the computer vision (CV) field to process these images. Such methods include both traditional CV techniques, such as displacement detection based on reference points and digital image correlation (mainly for structure monitoring) (Wu et al., 2020), and advanced convolutional neural networks (CNNs), which deliver various functions, such as recognizing the as-is progress of building structures, detecting and evaluating defects (e.g., cracks), and identifying workers, machines or unsafe behaviours (Ding et al., 2018; Han et al., 2013). In addition, people also take in text data through the visual sense (i.e., reading). Therefore, in Engineering Brain, this “lobe” is also responsible for collecting text data by “reading” project documents stored in file systems, using basic natural language pre-processing methods, such as sentence splitting, tokenization (i.e., dividing a sentence into words or phrases), and lemmatization (i.e., converting words to their base forms as listed in dictionaries) (Denny et al., 2018). Text data can be employed for knowledge discovery (e.g., deriving the causes of an accident or delay) and compliance checking (e.g., detecting non-compliance between a design or its working plan and published standards) (Ayhan et al., 2019; İlal et al., 2017).
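
The three text pre-processing steps named above can be sketched with the Python standard library alone. This is a simplified illustration, not the pipeline used in the cited studies: the regular expressions are naive, and `LEMMAS` is a tiny hypothetical lookup table standing in for the full lexicon that a practical lemmatizer would need.

```python
import re

# Hypothetical, tiny lemma lookup; a real system would use a full lexicon.
LEMMAS = {
    "cracks": "crack",
    "were": "be",
    "was": "be",
    "workers": "worker",
    "inspected": "inspect",
}


def split_sentences(text):
    """Split text after sentence-ending punctuation followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def tokenize(sentence):
    """Lower-case a sentence and extract word tokens (hyphens and apostrophes kept)."""
    return re.findall(r"[a-z0-9]+(?:[-'][a-z0-9]+)*", sentence.lower())


def lemmatize(tokens):
    """Map each token to its base form when the lookup table knows it."""
    return [LEMMAS.get(token, token) for token in tokens]


def preprocess(text):
    """Run sentence splitting, tokenization and lemmatization in sequence."""
    return [lemmatize(tokenize(sentence)) for sentence in split_sentences(text)]


report = "Cracks were found. Workers inspected the beam."
print(preprocess(report))
# [['crack', 'be', 'found'], ['worker', 'inspect', 'the', 'beam']]
```

Tokens missing from the lookup table (such as "found") pass through unchanged, which shows why dictionary coverage matters for this step.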

3.2.3 Temporal lobe

The temporal lobe deals with memory and is where the hippocampus lies. As such, this lobe corresponds to the function of information storage. Databases for both structured and unstructured data should be developed. Structured data can be easily stored in tables with rows and columns. In this case, typical relational databases (e.g., Oracle and DB2) can meet the demands in practice. To improve the efficiency of data storage, however, a distributed database architecture can be employed, where separate databases are constructed for different data formats. Another option is NoSQL databases (e.g., MongoDB and Apache Cassandra), which provide better scalability when handling big data and can store massive sensor readings and images more effectively. Besides, some unstructured data (e.g., entities and their relations, which are often extracted from text documents) take the form of triples (e.g., subject-relation-object). Therefore, graph databases (e.g., Neo4j) and ontology tools (e.g., Protégé) can be adopted to store such data (Jeong et al., 2019; Wu et al., 2021c).
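
To make the triple representation concrete, the sketch below keeps subject-relation-object triples in memory and answers two simple queries. It is only a toy stand-in for a graph database; the class name, method names and example entities are hypothetical and do not reflect the API of Neo4j or any other product.

```python
from collections import defaultdict


class TripleStore:
    """Minimal in-memory store for (subject, relation, object) triples."""

    def __init__(self):
        # Maps each subject to the set of (relation, object) pairs it has.
        self._by_subject = defaultdict(set)

    def add(self, subject, relation, obj):
        """Record one triple; duplicates are ignored."""
        self._by_subject[subject].add((relation, obj))

    def objects(self, subject, relation):
        """Return all objects linked to `subject` by `relation`, sorted."""
        pairs = self._by_subject.get(subject, ())
        return sorted(o for r, o in pairs if r == relation)

    def subjects(self, relation, obj):
        """Return all subjects linked to `obj` by `relation`, sorted."""
        return sorted(
            s for s, pairs in self._by_subject.items() if (relation, obj) in pairs
        )


# Hypothetical facts extracted from an accident report.
store = TripleStore()
store.add("scaffold_collapse", "caused_by", "missing_bracing")
store.add("scaffold_collapse", "caused_by", "overloading")
store.add("crane_tip_over", "caused_by", "overloading")

print(store.objects("scaffold_collapse", "caused_by"))
# ['missing_bracing', 'overloading']
print(store.subjects("caused_by", "overloading"))
# ['crane_tip_over', 'scaffold_collapse']
```

Queries such as "which accidents share a cause" follow relations rather than table rows, which is the access pattern that motivates a graph-oriented store for this kind of data.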

3.2.4 Frontal lobe

The frontal lobe involves three gyri, each responsible for a different function. The superior frontal gyrus involves convergent thinking (i.e., prediction and decision-making). It should be noted that many objectives in a construction project (e.g., improving quality and compressing schedules) are contradictory, so one decision may cause cascading effects and influence them all. Thus, it is critical to use multi-objective optimization techniques (e.g., genetic algorithms, particle swarm optimization, and reinforcement learning) to strike a balance among these objectives. As for prediction, machine learning and deep learning models are good options, as they have demonstrated their effectiveness in many applications, e.g., predicting material demands to place orders, or predicting performance to select bidders (Kim et al., 2019). Predictions and decisions are made by considering the information and knowledge available from all four lobes. Although many methods and algorithms have been proposed in the field of computer science, the key is to incorporate the domain knowledge of the industry, so that the methods and algorithms suit the demands of construction projects.
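
The need to balance contradictory objectives can be illustrated with the notion of Pareto dominance, on which many multi-objective optimization techniques rely. The sketch below does not implement a genetic algorithm, particle swarm optimization or reinforcement learning; it only filters a small, hypothetical set of candidate plans, each scored by cost and duration (both to be minimized), down to the non-dominated ones.

```python
def dominates(a, b):
    """Return True if `a` is no worse than `b` on every objective and
    strictly better on at least one (all objectives are minimized)."""
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def pareto_front(candidates):
    """Return the sorted names of candidates not dominated by any other.

    candidates: dict mapping a name to a tuple of objective values.
    """
    front = []
    for name, objectives in candidates.items():
        dominated = any(
            dominates(other, objectives)
            for other_name, other in candidates.items()
            if other_name != name
        )
        if not dominated:
            front.append(name)
    return sorted(front)


# Hypothetical plans scored as (cost, duration in days).
plans = {
    "plan_a": (100, 30),
    "plan_b": (120, 25),
    "plan_c": (130, 32),  # worse than plan_a on both objectives
    "plan_d": (90, 40),
}
print(pareto_front(plans))  # ['plan_a', 'plan_b', 'plan_d']
```

The remaining plans represent genuine trade-offs between cost and schedule; choosing among them is where domain knowledge and project priorities come in.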

The middle frontal gyrus deals with speaking. Hence, it corresponds to the information/knowledge exchanging function in Engineering Brain, as an enabler of prediction, decision-making, and project execution. However, the industry lacks efficient exchange methods, a situation recognized as the main barrier to IT implementation. Thus, standardized schemas, e.g., the Industry Foundation Classes (IFC), can be developed and implemented among stakeholders in one project or even across the industry, so that information can be described in the same format and can be fed into different tools (Bradley et al., 2016; Zhu et al., 2019). In addition, semantic-web technologies, such as ontologies and logic reasoning, can be adopted to further standardize information/knowledge description, e.g., for disambiguation (Wu et al., 2021c). This gyrus is also responsible for: (1) sending prediction/decision-related information and instructions to guide or instruct entities in physical projects, and (2) exchanging information/knowledge with external parties, e.g., governments and manufacturers. In all cases, information platforms, e.g., BIM and CIM, can serve as the front end for users to search for information, while modern human–machine interaction techniques, such as mobile computing, AR, and VR, can be used to boost communication efficiency (Li et al., 2018).

Finally, the inferior frontal gyrus refers to the task execution module, namely, the body that takes orders from the brain. In construction projects, this “gyrus” refers to entities in a physical project that: (1) form permanent parts of a physical building structure, e.g., materials and products; or (2) are required for carrying out the tasks of design, engineering, construction and maintenance. The latter involves crews of different backgrounds and skills, building design programs, construction machines, and engineering methods. Project teams should manage all the entities according to the predictions and decisions made by the superior gyrus and sent by the middle gyrus, such as selecting the optimal design, re-allocating resources, and removing identified hazards (Wu et al., 2021b; Yu et al., 2015).

3.3 Interaction and development of Engineering Brain’s components

As mentioned above, divergent and convergent thinking require interactions among brain areas. In Engineering Brain, this corresponds to information flows among the four lobes. The parietal and occipital lobes collect, pre-process and analyse sensor data as well as images and texts. All the data, information and knowledge formed in information analysis (i.e., divergent thinking) are sent to and stored in the temporal lobe, the centre for exchanging information and knowledge. This also enables exchanges between the parietal and occipital lobes, as analyses in one lobe may require inputs from the other. For instance, evaluating the risk associated with a worker may require behaviour analysis (using CV) and location tracking (using wearable GPS). The superior frontal gyrus takes in integrated information/knowledge from the temporal lobe to make predictions and decisions, and the results are sent back to the temporal lobe, which then sends instructions to the middle frontal gyrus, which in turn informs entities in the physical world. The outcomes of these predictions and decisions (e.g., project progress after re-allocating resources) are collected by the Engineering Brain for the next round of analysis, thereby forming a closed loop and enabling continuous improvement. Figure 5 demonstrates the above interaction in the Engineering Brain.
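The closed loop described above can be pictured with a short Python sketch. It is only an illustration under stated assumptions: the names `TemporalLobe`, `perceive`, `decide` and `run_cycle`, the 5 m safe distance, and the worker-risk rule are hypothetical and are not prescribed by the Engineering Brain theory.

```python
from dataclasses import dataclass, field


@dataclass
class TemporalLobe:
    """Hypothetical shared store through which the modules exchange information."""
    records: list = field(default_factory=list)

    def store(self, source: str, payload: dict) -> None:
        self.records.append({"source": source, **payload})

    def latest(self, source: str) -> dict:
        # Return the most recent record written by the given module.
        for record in reversed(self.records):
            if record["source"] == source:
                return record
        return {}


def perceive(store: TemporalLobe, unsafe_posture: bool, distance_to_hazard_m: float) -> None:
    # Occipital lobe (CV) and parietal lobe (sensors) write their analyses.
    store.store("occipital", {"unsafe_posture": unsafe_posture})
    store.store("parietal", {"distance_to_hazard_m": distance_to_hazard_m})


def decide(store: TemporalLobe, safe_distance_m: float = 5.0) -> str:
    # Superior frontal gyrus: combine both analyses (assumed toy rule).
    vision = store.latest("occipital")
    location = store.latest("parietal")
    at_risk = vision["unsafe_posture"] and location["distance_to_hazard_m"] < safe_distance_m
    decision = "stop_work" if at_risk else "continue"
    # The result is sent back to the temporal lobe.
    store.store("superior_frontal", {"decision": decision})
    return decision


def run_cycle(store: TemporalLobe, unsafe_posture: bool, distance_to_hazard_m: float) -> str:
    perceive(store, unsafe_posture, distance_to_hazard_m)
    decide(store)
    # Middle frontal gyrus: relay the stored decision as an instruction
    # to the entities in the physical project (inferior frontal gyrus).
    return store.latest("superior_frontal")["decision"]


store = TemporalLobe()
print(run_cycle(store, unsafe_posture=True, distance_to_hazard_m=2.0))
print(run_cycle(store, unsafe_posture=True, distance_to_hazard_m=10.0))
```

Because every module reads from and writes to the same store, the records of one cycle remain available as inputs to the next, which is the sense in which the loop is closed.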

Despite its great value, implementing Engineering Brain in practice requires a development process that entails four main stages. High-quality data are the pre-requisite for any data-driven analysis. Thus, in the first stage, it is the parietal and occipital lobes that develop fast. Main concerns in this respect include: (1) deploying and optimizing IoT systems as well as methods for image and text data collection, which can cover as many aspects of a project as possible, while minimizing monitoring costs (e.g., the number and energy-consumption of sensors) (Zhou et al., 2019); and (2) developing novel methods for multi-modal data cleaning and fusion. In the second stage, two actions can be simultaneously performed. First, in the parietal and occipital lobes, cutting-edge sensor data mining, CV and NLP techniques should be implemented to derive useful knowledge from information of different types. Second, in the temporal lobe, methods to standardize, store and exchange information and knowledge should be developed. In the third stage, the focus of development moves to the superior and middle frontal gyruses, where advanced optimization techniques and deep learning models can be applied for predictions and informed decision-making. Finally, in the fourth stage, the middle and inferior frontal gyruses are developed. The former sends instructions to on-site teams, and the latter receives such information and physically executes the tasks to complete the project. It should be noted that a highly developed inferior frontal gyrus is the basis for robotic construction, in which unmanned machines and robots (e.g., unmanned excavators, cranes and aerial vehicles) are widely used to assist (not to replace) human labour (Wu et al., 2016). Figure 6 presents the evolution process of the Engineering Brain theory.

To facilitate understanding, a simple demonstration of Engineering Brain is shown in Fig. 7 for the maintenance of a bridge. To detect the structural health condition, the sensing system collects multi-source data continuously, including from RGB cameras belonging to the occipital lobe of Engineering Brain as well as various sensors (e.g., accelerometers and strain gauges) belonging to the parietal lobe. The sensors collect essential structural responses, which are then cleaned and fed into mathematical and mechanical models (e.g., finite-element analysis) to assess the overall bridge condition. The RGB cameras collect images of passing vehicles, from which vehicles posing significant risk (e.g., heavy trucks) are identified and their effect on the structure is evaluated. Then, deep learning models and optimization techniques belonging to the superior frontal gyrus fuse all initial analysis results to evaluate the structural condition (e.g., as an index), with proactive maintenance plans recommended by using case-based reasoning.
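As a rough illustration of the fusion step in this bridge example, the sketch below combines a sensor-based score and a camera-based score into one condition index. A plain weighted sum stands in for the deep learning and optimization models mentioned above; the function names, the strain limit, the vehicle class labels and the 0.7 weight are all assumptions made for illustration, not values from the study.

```python
def strain_score(strains_microstrain, limit_microstrain):
    """Parietal lobe: map the peak measured strain to a 0-1 score (1 = at or above the limit)."""
    peak = max(abs(s) for s in strains_microstrain)
    return min(peak / limit_microstrain, 1.0)


def traffic_score(vehicle_classes, heavy_classes=frozenset({"heavy_truck"})):
    """Occipital lobe: fraction of detected vehicles that belong to a heavy class."""
    if not vehicle_classes:
        return 0.0
    heavy = sum(1 for c in vehicle_classes if c in heavy_classes)
    return heavy / len(vehicle_classes)


def condition_index(structural, traffic, w_structural=0.7):
    """Superior frontal gyrus: weighted fusion of both scores (higher means worse)."""
    return w_structural * structural + (1.0 - w_structural) * traffic


# Illustrative readings only.
structural = strain_score([100.0, -250.0, 200.0], limit_microstrain=500.0)
traffic = traffic_score(["car", "heavy_truck", "car", "heavy_truck"])
print(round(condition_index(structural, traffic), 3))
```

In a real deployment the index would be calibrated against inspection records, and the resulting value would be the query used to retrieve similar past cases for case-based reasoning.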

4 Outlooks of the Metaverse in future engineering

This section presents a few outlooks concerning the implementation of the Metaverse in future engineering as the revolution of the AEC industry goes on. Three aspects will be discussed: intelligent combination of smart technologies; knowledge-graph-based intelligent recognition, reasoning and decision-making; and multi-machine/human–machine collaboration.

4.1 Intelligent combination of smart technologies

One of the future outlooks of Engineering Brain is demand-oriented intelligent combination of smart technologies. Nowadays, over a hundred kinds of smart technologies have been developed, and the number of technologies is continuing to increase. Currently, the appropriate selection and combination of these technologies are largely based on projects’ characteristics as well as human skills and experience, thus being extremely time-consuming and inefficient. Therefore, the development of project demand-oriented intelligent selection and combination of smart technologies is urgent.

This development direction is inspired by the re-definition of artificial materials. It is believed that materials are mostly man-made. For example, glass, plastic and concrete are synthesised in fixed proportions from several elements in the periodic table. However, future artificial materials are expected to be freely customised and produced by 3D printing according to specific demands, with the essential elements intelligently integrated and possibly with no inherent forms or names. This concept provides great inspiration for future research in the engineering domain and for Engineering Brain as well.

Analogously, a schematic diagram of “periodic table of smart technologies” is proposed to illustrate the idea of demand-oriented intelligent combination of smart technologies, as shown in Fig. 8. Specifically, such smart technologies as AR, VR, GIS, BIM, AI, 5G, RFID and 3D printing can be organised and filled into this technology periodic table in accordance with certain rules. The table is dynamic and open, so that smart technologies can be added or removed according to different demands. The optimal configuration and dynamic patterns will be figured out through repeated experiments and tests based on various engineering scenarios. Finally, according to engineering demands, the Engineering Brain will intelligently select and integrate the required smart technologies from this table to solve actual engineering problems. For instance, if there is a project to construct a bridge or smart mobility that needs incorporation of several technologies, then a request could be submitted to the “technology periodic table”. The table will consequently provide feedback regarding the selection and combination of smart technologies, so as to intelligently form specific sub-engineering-brains according to the given engineering scenarios. It should be noted that the schematic diagram of Fig. 8 is only an imagined picture, serving as an inspiring map for future research.
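One way to picture such a request to the “technology periodic table” is as a coverage problem: each technology is tagged with the capabilities it offers, and a combination is chosen that covers the project's demands. The sketch below uses a greedy set-cover heuristic; this is an assumption made for illustration, not the selection mechanism proposed in this study, and the capability tags assigned to each technology are hypothetical.

```python
def select_technologies(demands, catalogue):
    """Greedy set cover: repeatedly pick the technology that meets the most unmet demands.

    Returns the selected technology names and any demands that nothing in the
    catalogue can meet.
    """
    remaining = set(demands)
    selected = []
    while remaining:
        # Sorting the names makes tie-breaking deterministic (alphabetical).
        best = max(sorted(catalogue), key=lambda name: len(catalogue[name] & remaining))
        gained = catalogue[best] & remaining
        if not gained:
            break  # no technology in the table covers what is left
        selected.append(best)
        remaining -= gained
    return selected, remaining


# Hypothetical capability tags, for illustration only.
catalogue = {
    "AR": {"on_site_visualisation"},
    "BIM": {"3d_model", "quantity_takeoff"},
    "GIS": {"site_mapping"},
    "RFID": {"material_tracking"},
}

selected, unmet = select_technologies(
    {"3d_model", "site_mapping", "material_tracking"}, catalogue
)
print(selected, unmet)
```

Because the table is meant to be dynamic, adding or removing a technology only changes the `catalogue` mapping; the selection routine itself stays the same.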

4.2 Intelligent recognition, reasoning and decision-making based on knowledge graphs

Current AI approaches (e.g., big data analytics and deep learning models) are generally superficial, because they are limited to statistically identifying patterns from enormous data that are assumed to be independent and identically distributed (i.i.d.). This limitation often affects AI approaches’ performance when they are implemented in the engineering sector, because: (1) they are very data-demanding, but collecting such big data is often impractical in real projects; and (2) raw data largely determine the model performance; in other words, it is difficult to transfer pre-trained models to different engineering problems, which are subject to data following different distributions (Wu, Wang, et al., 2021). Thus, in the future intelligent Engineering Brain, AI models shall capture and understand the underlying cause-effect mechanisms among project entities and events, so that they feature strong reasoning capacities and can adapt to different problem-solving and decision-making demands with a small amount of data (Schölkopf et al., 2021). For instance, when a model is trained to predict safety risks by using regulations and codes in one country, it can automatically adapt itself to projects in another country that has similar but not identical safety regulations.

Nevertheless, this involves two issues. First, knowledge graphs that include both abstract and specific parts should be developed. The abstract parts (also called ontologies) would model the common and abstract concepts (i.e., classes, such as the class “Lifting Equipment”) and the relations among the classes, while the specific parts would model the physical entities in specific projects (e.g., a crane is a “Lifting Equipment”), the mapping between entities and classes, and the relations among the entities. Knowledge graphs should be developed automatically, which requires a set of AI techniques, e.g., text understanding, ontology building and merging, and deep learning on graphs (Zhang et al., 2018). Second, based on project knowledge graphs, semantic reasoning rules and logic-driven deep learning models can be combined to search for project information, infer implicit knowledge hidden in the graphs, and recommend solutions and decisions by mimicking the diverging-converging thinking mechanisms of human engineers. Specifically, heuristic rules are first used to infer information by interpreting nodes and edges in the graphs, while deep learning models can predict the missing elements in rule bodies (Zhang et al., 2020). At present, the reasoning rules still have to be constructed manually; however, with the development of cutting-edge causality learning models, sophisticated cause-effect and reasoning mechanisms for rules can be established and encoded in the next generation of deep learning models (Schölkopf et al., 2021). In addition, Metaverse techniques can visualize the above reasoning process, so that human engineers can easily interact with any reasoning step and information source, while adding their own domain knowledge as feedback, so as to continuously boost the models’ reasoning capacity.
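The split between the abstract and specific parts of a knowledge graph, and the use of hand-written rules to infer implicit knowledge, can be shown with a toy example. The sketch below is an assumption-laden illustration: the triples, the relation names `subClassOf` and `instanceOf`, the class “Resource” and the entity `crane_01` are hypothetical, and only two manually written rules are applied. No learned model is involved.

```python
def infer(triples):
    """Forward-chain two hand-written rules until no new triple appears.

    Rule 1: (x instanceOf A) and (A subClassOf B)  =>  (x instanceOf B)
    Rule 2: (A subClassOf B) and (B subClassOf C)  =>  (A subClassOf C)
    """
    facts = set(triples)
    changed = True
    while changed:
        changed = False
        new = set()
        for (a, p, b) in facts:
            for (c, q, d) in facts:
                if b != c or q != "subClassOf":
                    continue
                if p in ("instanceOf", "subClassOf"):
                    new.add((a, p, d))
        if not new <= facts:
            facts |= new
            changed = True
    return facts


# Abstract part (ontology): classes and the relations among them.
ontology = {
    ("LiftingEquipment", "subClassOf", "Equipment"),
    ("Equipment", "subClassOf", "Resource"),
}
# Specific part: an entity of one project and its mapping to a class.
project = {("crane_01", "instanceOf", "LiftingEquipment")}

facts = infer(ontology | project)
print(("crane_01", "instanceOf", "Resource") in facts)
```

The inferred triples are the kind of implicit knowledge referred to above: nothing in the input states that `crane_01` is a resource, yet the rules derive it from the class hierarchy.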

4.3 Multi-machine and Human–machine collaboration

Intelligent collaboration based on technology advances can be expected in the near future, especially in the forms of multi-device collaboration and human–machine collaboration.

4.3.1 Multi-machine collaboration

Collaboration between multiple devices, machines and technologies is trending as IoT systems develop and a range of related technologies become increasingly mature. Existing attempts include AR/VR collaboration in virtual environments (Marks & White, 2020), connected mobile phones (Airtest), and connected vehicles (Lu et al., 2014), among others. One of the most promising implementations in the AEC sector is to facilitate an automated and efficient construction process, where on-site equipment is mutually connected so that machines collaborate with each other without causing collisions or posing dangers to workers. Additional machines, like surveillance cameras, will also be connected to intelligently monitor nearby construction resources, such as heavy equipment at work or construction materials arriving for on-site storage. Other use scenarios include the operation and maintenance (O&M) phases, when multiple machines for non-destructive testing are connected and automated for efficient inspections.

4.3.2 Human–Machine collaboration

Instead of taking machines as tools, humans collaborate with artificial intelligence and other machines in a human–machine collaboration model to achieve shared goals. In the race with machines (Kelly, 2017), such collaboration allows each side to fill the gaps in the other’s intelligence and physical capabilities, although an empirical study suggests that human managers prefer such partnerships when machines contribute roughly 30% of the input (Haesevoets et al., 2021). In industrial applications, collaborative robots especially designed for direct interactions with humans in a shared workplace are commonly deployed. In the AEC sector, human–machine collaboration would be particularly beneficial to creative design, e.g., architectural design (excluding the repetitive work of engineering drawings), and to managerial decision-making both on construction sites and during the O&M phase of civil assets. In these procedures, architects, engineers and asset managers will be able to work in collaboration with machines, which are trained on massive historical data and manuals. Project management on construction sites can also take advantage of such human–machine collaboration. For instance, on-site managers can be informed of all types of information in real time, including progress, risks and issues. With further aid from intelligent agents, more efficient and automated management can be expected.

5 Engineering Brain: Metaverse for future engineering

In this section, a comparative analysis is made between the Metaverse and the Engineering Brain by referring to their pillar technologies, compositions of ecosystems, core objectives, and essential elements.

5.1 Pillar technologies, ecosystems and objectives of Metaverse and Engineering Brain

A comparison between the Metaverse and the Engineering Brain is presented in Fig. 9 in terms of pillar technologies, compositions of ecosystems, and objectives. Development of both Metaverse and Engineering Brain relies heavily on similar modern technologies, including Artificial Intelligence, Future Mobile Networks, Edge/Cloud, Computer Vision, Blockchain, Robotics/IoT, User Interactivity, Mixed Reality, etc. (Lee et al., 2021). However, the compositions and objectives of these two platforms differ.

Metaverse intends to provide users with seamless, infinite and ultimate virtual experience by establishing a perpetual perceived virtual world, which would blend the digital and physical worlds, boosted by the fusion of the Internet technologies and Extended Reality (Lee et al., 2021). Users of the Metaverse own their virtual substitutes, known as Avatars, which stand in for their physical selves to experience their virtual life (Davis et al., 2009; Lee et al., 2021). According to Lee et al. (2021), a Metaverse ecosystem consists of six core pillars: Avatar, Content Creation, Virtual Economy, Social Acceptability, Security & Privacy, and Trust & Accountability. In Metaverse, Avatars reflect users’ identities, while the rest of the core pillars would support, secure and restrict users’ virtual activities and behaviours. Therefore, the objective of Metaverse is to shape and optimise users’ interactions and alternative experiences in a virtual world, so as to break through the restrictions of the physical world.

Engineering Brain aims to intelligently manage engineering projects and deliver real-time, accurate and comprehensive digital control and unmanned construction by establishing a human brain-like intelligent engineering decision system. Engineering Brain can equip engineering projects with an AI “brain” to enable real-time information collection, intelligent analysis and decision-making, effective information sharing, and automatically guided project construction. The key ecological compositions of Engineering Brain include engineering project entities and four major human brain-like modules (i.e., Frontal Lobe Module, Occipital Lobe Module, Parietal Lobe Module, and Temporal Lobe Module). Specifically, the project entities refer to project-essential resources, such as manpower, equipment, facilities and materials, while the human brain-like modules reflect the state-of-the-art cross-domain technologies, with a working mechanism in analogy to the interactions among human brain areas. These modules collect, transmit, analyse and store different types of project data and then work out predictions and decisions, so as to enable project entities to interact within the cyber world of Engineering Brain systems, and finally control the entities to carry out their tasks in the physical world. Therefore, the Engineering Brain operates around engineering projects to provide intelligent engineering solutions throughout projects’ lifecycles virtually and shape the physical world accordingly.

Despite the differences between Metaverse and Engineering Brain in terms of ecosystems and objectives, both of them can alter or shape the interactions or activities in the physical world via constructions in the virtual world.

5.2 Essential elements of Metaverse and Engineering Brain

Figure 10 showcases the essential elements of both Metaverse and Engineering Brain, as well as their corresponding relationships. According to the currently popular concepts in gaming and social fields, in terms of the ways of access and interaction, Metaverse’s major elements can be abstracted as Avatars, Portals and “Parallel universe”. Specifically, Avatars are defined as user-created digital representations controlled by the users who participate in Metaverse and interact with others in virtual identities (Bailenson et al., 2005). In addition to human beings, animals (e.g., pets) may also gradually become users of Metaverse by interacting inside it (Davis et al., 2009). In Metaverse, all virtual creations revolve around users’ experience to make Avatars represent physical users, so that they feel and act as if in the real world or even beyond the real world. Portals refer to the hardware devices or interfaces for users to enter the virtual world of Metaverse and realise their virtual senses for interactions, such as VR, AR, sensors and brain-chips. Therefore, Portals are indispensable bridges to link physical users and the virtual world in Metaverse. The “Parallel universe” in this context implies the virtual world of Metaverse constructed by using advanced web and Internet technologies (Lee et al., 2021), purposed for users to interact as Avatars. It is also the hottest domain of Metaverse currently, attracting massive attention, imagination and capital for development. Clearly, this parsing logic of Metaverse is based on the intertwining of virtuality and reality, not only including the broadly mentioned and fancied “virtual world”, but also involving the physical objects and interfaces of Metaverse.

Analogously, Engineering Brain is also deemed to be constituted by three major essential elements: Entities, Sensory modalities, and Digital twins. Entities here refer to the major resources of an engineering project, such as manpower, equipment, facilities and materials, which can be digitalised or symbolised into the Engineering Brain system and can interact under instructions, so that tasks can then be carried out in the physical world. Entities are the core physical support, including the participants throughout the entire life cycle of engineering projects. Sensory modalities are the ways of obtaining sensory data, such as visual, tactile and auditory information, via devices or interfaces. They refer to the perception modules of Engineering Brain, including the Occipital Lobe Module and Parietal Lobe Module, and play a role of equipping the entities, so that the entities can enter the cyber world of Engineering Brain and be driven to interact. Such technologies as mixed reality, computer vision or various types of sensors (Wang, 2008) can be employed to support the sensory modalities. Digital twins (Hou et al., 2021; Lee et al., 2021; Mohammadi & Taylor, 2017) imply the cyber world of Engineering Brain. In this context, digital twins not only include all the properties of the duplicated physical counterparts, but also cover the modules responsible for data transmission, data analysis and decision making, all involving the Frontal Lobe Module and Temporal Lobe Module. Digital twins enable entities to deliver all the operations required by engineering projects virtually and intelligently (e.g., visualisation, intelligent design and unmanned construction) (Ma et al., 2021; Wang, 2007a, 2007b), so as to guide them in shaping the physical world accordingly.

5.3 Engineering Brain: the Metaverse for future engineering

Based on the analyses above, the corresponding relationships between essential elements of Metaverse and Engineering Brain can be established. The elements of Entities, Sensory modalities and Digital twins in Engineering Brain can be understood in analogy to the elements of Avatars, Portals and “Parallel universe” in Metaverse, respectively, as illustrated in Fig. 10. Both Engineering Brain and Metaverse equip physical entities or users with interfaces, so that they can connect with the virtual world and eventually influence their behaviour and performance in the physical world by shaping their participation and interaction in a virtual environment. The virtual worlds of Engineering Brain and Metaverse are not only simple duplications or mappings of the realities, but also provide features to remedy and surpass the defects of the physical world. For instance, they will be offered with independent economic systems based on blockchain, highly intelligent mechanisms and operations based on computing, efficient and seamless spanning between virtuality and reality, high degree of autonomy, etc. Therefore, if the Metaverse is designed and developed for humans (and possibly their pets) in gaming or social industries, then Engineering Brain can be regarded as the Metaverse for the future engineering.

6 Conclusion

Based on the hot topic of Metaverse and current advances in the digitalization and automation of the construction industry, this study proposes a theoretical system, Engineering Brain, for operating construction projects. The similarities and differences between the Engineering Brain system and the Metaverse are discussed, and the transformation from Engineering Brain to Metaverse is explored, with a focus on the roles of Avatars in the Engineering Brain system. In addition, outlooks into the future construction industry fuelled by the Metaverse and relevant technologies are given, which can potentially facilitate the further development of related fields.

References

Abuthakeer, S. S., Kumar, S. R., & Arvind, R. M. (2017). Bionics as an Inspiration for Machine Tool Structure-A Review. Manufacturing Technology Today, 16(5), 10–15.

Airtest. Airtest Project Docs: Multi-machine collaboration script. Accessed Nov 21, 2021. https://airtest.doc.io.netease.com/en/IDEdocs/run_script/2_multi_cooperation/

Alcalá, J., Ureña, J., Hernández, Á., & Gualda, D. (2017). Event-based energy disaggregation algorithm for activity monitoring from a single-point sensor. IEEE Transactions on Instrumentation and Measurement, 66(10), 2615–2626. https://doi.org/10.1109/TIM.2017.2700987

Alizadehsalehi, S., Hadavi, A., & Huang, J. C. (2020). From BIM to extended reality in AEC industry. Automation in Construction, 116, 103254.

Andrews, C., Southworth, M. K., Silva, J. N., & Silva, J. R. (2019). Extended reality in medical practice. Current Treatment Options in Cardiovascular Medicine, 21(4), 1–12.

Arikan, E. (2009). Channel polarization: A method for constructing capacity-achieving codes for symmetric binary-input memoryless channels. IEEE Transactions on Information Theory, 55(7), 3051–3073.

Ayhan, B. U., & Tokdemir, O. B. (2019). Safety assessment in megaprojects using artificial intelligence. Safety Science, 118, 273–287. https://doi.org/10.1016/j.ssci.2019.05.027

Bailenson, J., Swinth, K., Hoyt, C., Persky, S., Dimov, A., & Blascovich, J. (2005). The independent and interactive effects of embodied-agent appearance and behavior on self-report, cognitive, and behavioral markers of copresence in immersive virtual environments. Presence, 14(4), 379–393.

Benford, S., Brown, C., Reynard, G., & Greenhalgh, C. (1996). Shared spaces: transportation, artificiality, and spatiality. In Proceedings of the 1996 ACM Conference on Computer Supported Cooperative Work, 77–86. https://doi.org/10.1145/240080.240196

Bhargava, A., & Bansal, A. (2021). Novel coronavirus (COVID-19) diagnosis using computer vision and artificial intelligence techniques: a review. Multimedia Tools and Applications, 1–16.

Bock, T. (2015). The future of construction automation: Technological disruption and the upcoming ubiquity of robotics. Automation in Construction, 59, 113–121.

Boje, C., Guerriero, A., Kubicki, S., & Rezgui, Y. (2020). Towards a semantic Construction Digital Twin: Directions for future research. Automation in Construction, 114, 103179.

Bradley, A., Li, H., Lark, R., & Dunn, S. (2016). BIM for infrastructure: An overall review and constructor perspective. Automation in Construction, 71, 139–152. https://doi.org/10.1016/j.autcon.2016.08.019

Brosnan, T., & Sun, D.-W. (2004). Improving quality inspection of food products by computer vision––a review. Journal of Food Engineering, 61(1), 3–16.

Buch, N., Velastin, S. A., & Orwell, J. (2011). A review of computer vision techniques for the analysis of urban traffic. IEEE Transactions on Intelligent Transportation Systems, 12(3), 920–939.

Australian Government’s Cooperative Research Centre program. 2020. Building 4.0 CRC. Accessed Nov 15, 2021. https://building4pointzero.org/

Chermahini, S. A., & Hommel, B. (2012). Creative mood swings: divergent and convergent thinking affect mood in opposite ways. Psychological Research Psychologische Forschung, 76(5), 634–640. https://doi.org/10.1007/s00426-011-0358-z

Chevet, S. (2018). Blockchain technology and non-fungible tokens: Reshaping value chains in creative industries. Available at SSRN 3212662.

CryptoCats. 2021. Accessed Nov 14, 2021. https://cryptocats.thetwentysix.io/

Davis, A., Murphy, J., Owens, D., Khazanchi, D., & Zigurs, I. (2009). Avatars, people, and virtual worlds: Foundations for research in metaverses. Journal of the Association for Information Systems, 10(2), 90.

Denny, M. J., & Spirling, A. (2018). Text preprocessing for unsupervised learning: Why it matters, when it misleads, and what to do about it. Political Analysis, 26(2), 168–189. https://doi.org/10.1017/pan.2017.44

Ding, L., Fang, W., Luo, H., Love, P. E., Zhong, B., & Ouyang, X. (2018). A deep hybrid learning model to detect unsafe behavior: Integrating convolution neural networks and long short-term memory. Automation in Construction, 86, 118–124. https://doi.org/10.1016/j.autcon.2017.11.002

Dowling, M. (2021). Is non-fungible token pricing driven by cryptocurrencies? Finance Research Letters. https://doi.org/10.1016/j.frl.2021.102097

Elghaish, F., Matarneh, S., Talebi, S., Kagioglou, M., Hosseini, M. R., & Abrishami, S. (2020). Toward digitalization in the construction industry with immersive and drones technologies: a critical literature review. Smart and Sustainable Built Environment, 10, 345.

Eliasmith, C., & Anderson, C. H. (2003). Neural engineering: Computation, representation, and dynamics in neurobiological systems. New York: MIT press.

Fast-Berglund, Å., Gong, L., & Li, D. (2018). Testing and validating Extended Reality (xR) technologies in manufacturing. Procedia Manufacturing, 25, 31–38.

Feng, D., & Feng, M. Q. (2018). Computer vision for SHM of civil infrastructure: From dynamic response measurement to damage detection – A review. Engineering Structures, 156, 105–117. https://doi.org/10.1016/j.engstruct.2017.11.018

Gallager, R. (1962). Low-density parity-check codes. IRE Transactions on Information Theory, 8(1), 21–28.

Epic Games. Fortnite. Accessed Nov 10, 2021. https://www.epicgames.com/fortnite/en-US/home

Gamil, Y., Abdullah, M. A., Abd Rahman, I., & Asad, M. M. (2020). Internet of things in construction industry revolution 4.0: Recent trends and challenges in the Malaysian context. Journal of Engineering, Design and Technology, 18(5), 1091–1102. https://doi.org/10.1108/JEDT-06-2019-0164

Ghosh, A., Edwards, D. J., & Hosseini, M. R. (2020). Patterns and trends in Internet of Things (IoT) research: future applications in the construction industry. Engineering, Construction and Architectural Management, 28(2), 457–481. https://doi.org/10.1108/ECAM-04-2020-0271

Goldschmidt, G. (2016). Linkographic evidence for concurrent divergent and convergent thinking in creative design. Creativity Research Journal, 28(2), 115–122. https://doi.org/10.1080/10400419.2016.1162497

Haesevoets, T., De Cremer, D., Dierckx, K., & Van Hiel, A. (2021). Human-machine collaboration in managerial decision making. Computers in Human Behavior, 119, 106730. https://doi.org/10.1016/j.chb.2021.106730

Han, S., Lee, S., & Peña-Mora, F. (2013). Vision-based detection of unsafe actions of a construction worker: Case study of ladder climbing. Journal of Computing in Civil Engineering, 27(6), 635–644. https://doi.org/10.1061/(ASCE)CP.1943-5487.0000279

Hautala, K., Järvenpää, M. E., & Pulkkinen, P. (2017). Digitalization transforms the construction sector throughout asset’s life-cycle from design to operation and maintenance. Stahlbau, 86(4), 340–345.

Hou, L., Wu, S., Zhang, G., Tan, Y., & Wang, X. (2021). Literature review of digital twins applications in construction workforce safety. Applied Sciences, 11, 339. https://doi.org/10.3390/app11010339

İlal, S. M., & Günaydın, H. M. (2017). Computer representation of building codes for automated compliance checking. Automation in Construction, 82, 43–58. https://doi.org/10.1016/j.autcon.2017.06.018

Jeong, S., Hou, R., Lynch, J. P., Sohn, H., & Law, K. H. (2019). A scalable cloud-based cyberinfrastructure platform for bridge monitoring. Structure and Infrastructure Engineering, 15(1), 82–102. https://doi.org/10.1080/15732479.2018.1500617

Kelly, K. (2017). The inevitable: Understanding the 12 technological forces that will shape our future. Penguin.

Khan, A., Sepasgozar, S., Liu, T., & Yu, R. (2021). Integration of BIM and immersive technologies for AEC: A scientometric-SWOT analysis and critical content review. Buildings, 11(3), 126.

Kim, J.-M., & Jung, H. (2019). Predicting bid prices by using machine learning methods. Applied Economics, 51(19), 2011–2018. https://doi.org/10.1080/00036846.2018.1537477

Kwok, A. O., & Koh, S. G. (2021). COVID-19 and extended reality (XR). Current Issues in Tourism, 24(14), 1935–1940.

Linden Lab. Second Life. Accessed Nov 10, 2021. https://www.secondlife.com/

Lanxon, N., & Bloomberg. (2021). Welcome to the Metaverse: What it is, who’s behind it, and why it matters. Fortune. Accessed Nov 3, 2021. https://fortune.com/2021/09/29/welcome-metaverse-what-it-is-who-behind-why-matters-matrix-zuckerberg/

Lee, P., Braud, T., Zhou, P., Wang, L., Xu, D., Lin, Z., Kumar, A., Bermejo, C., & Hui, P. (2021). All One Needs to Know about Metaverse: A Complete Survey on Technological Singularity, Virtual Ecosystem, and Research Agenda. https://doi.org/10.13140/RG.2.2.11200.05124/7

Legg, S., & Hutter, M. (2007). A collection of definitions of intelligence. Frontiers in Artificial Intelligence and Applications, 157, 17.

Li, X., Yi, W., Chi, H.-L., Wang, X., & Chan, A. P. (2018). A critical review of virtual and augmented reality (VR/AR) applications in construction safety. Automation in Construction, 86, 150–162. https://doi.org/10.1016/j.autcon.2017.11.003

Lu, N., Cheng, N., Zhang, N., Shen, X., & Mark, J. W. (2014). Connected vehicles: Solutions and challenges. IEEE Internet of Things Journal, 1(4), 289–299.

Ma, W., Wang, X., Wang, J., Xiang, X., & Sun, J. (2021). Generative design in building information modelling (BIM): approaches and requirements. Sensors, 21(16), 5439.

Marks, S., & White, D. (2020). Multi-device collaboration in virtual environments. https://doi.org/10.1145/3385378.3385381

Microsoft. (2021). Microsoft Inspire 2021. https://myinspire.microsoft.com/home

Mohammadi, N., & Taylor, J. E. (2017). Smart city digital twins. 2017 IEEE Symposium Series on Computational Intelligence (SSCI), 1–5. https://doi.org/10.1109/SSCI.2017.8285439

Poole, D., Mackworth, A., & Goebel, R. (1998). Computational Intelligence.

Pramanik, A., Pal, S. K., Maiti, J., & Mitra, P. (2021). Granulated RCNN and multi-class deep sort for multi-object detection and tracking. IEEE Transactions on Emerging Topics in Computational Intelligence.

Radoff, J. (2021). The Metaverse Value-Chain. Medium. Accessed Nov 21, 2021. https://medium.com/building-the-metaverse/the-metaverse-value-chain-afcf9e09e3a7

Ren, S., He, K., Girshick, R., & Sun, J. (2015). Faster R-CNN: Towards real-time object detection with region proposal networks. In Advances in Neural Information Processing Systems (pp. 91–99).

Riaz, Z., Parn, E. A., Edwards, D. J., Arslan, M., Shen, C., & Pena-Mora, F. (2017). BIM and sensor-based data management system for construction safety monitoring. Journal of Engineering, Design and Technology, 15(6), 738–753. https://doi.org/10.1108/JEDT-03-2017-0017

Schölkopf, B., Locatello, F., Bauer, S., Ke, N. R., Kalchbrenner, N., Goyal, A., & Bengio, Y. (2021). Toward causal representation learning. Proceedings of the IEEE, 109(5), 612–634.

Shi, W., Cao, J., Zhang, Q., Li, Y., & Xu, L. (2016). Edge computing: Vision and challenges. IEEE Internet of Things Journal, 3(5), 637–646.

Silva, J. N., Southworth, M., Raptis, C., & Silva, J. (2018). Emerging applications of virtual reality in cardiovascular medicine. JACC: Basic to Translational Science, 3(3), 420–430.

Song, H., Nguyen, A.-D., Gong, M., & Lee, S. (2016). A review of computer vision methods for purpose on computer-aided diagnosis. Journal of International Society for Simulation Surgery, 3(1), 1–8.

Squire, L., Berg, D., Bloom, F. E., Du Lac, S., Ghosh, A., & Spitzer, N. C. (2012). Fundamental neuroscience. New York: Academic Press.

Stephenson, N. (1992). Snow Crash. New York: Bantam Books.

Tian, H., Wang, T., Liu, Y., Qiao, X., & Li, Y. (2020). Computer vision technology in agricultural automation—A review. Information Processing in Agriculture, 7(1), 1–19.

3GPP. (2018). TR 21.915, Release description, Release 15.

Wang, X. (2007a). Implementation and Experimentation of a Mixed Reality Collaborative Design Space. In International Conference on Computer Supported Cooperative Work in Design. 111–122.

Wang, X. (2007b). Mutually augmented virtual environments for architectural design and collaboration. Computer-Aided Architectural Design Futures (CAADFutures), 2007, 17–29.

Wang, X. (2008). Implementation and Experimentation of a Mixed Reality Collaborative Design Space. In W. Shen, J. Yong, Y. Yang, J. P. A. Barthès, & J. Luo (Eds.), Computer Supported Cooperative Work in Design IV. CSCWD 2007. Lecture Notes in Computer Science (Vol. 5236). Berlin, Heidelberg: Springer. https://doi.org/10.1007/978-3-540-92719-8_11

Wang, C.-Y., Yeh, I.-H., & Liao, H.-Y. M. (2021a). You Only Learn One Representation: Unified Network for Multiple Tasks. arXiv preprint arXiv:2105.04206.

Wang, Q., Li, R., Wang, Q., & Chen, S. (2021b). Non-fungible token (NFT): Overview, evaluation, opportunities and challenges. arXiv preprint arXiv:2105.07447.

Wang, X., & Chong, H.-Y. (2015). Setting new trends of integrated Building Information Modelling (BIM) for construction industry. Construction Innovation, 15(1), 2–6. https://doi.org/10.1108/CI-10-2014-0049

Wu, C., Wang, X., Wu, P., Wang, J., Jiang, R., Chen, M., & Swapan, M. (2021a). Hybrid deep learning model for automating constraint modelling in advanced working packaging. Automation in Construction, 127, 103733.

Wu, C., Wu, P., Wang, J., Jiang, R., Chen, M., & Wang, X. (2020). Critical review of data-driven decision-making in bridge operation and maintenance. Structure and Infrastructure Engineering. https://doi.org/10.1080/15732479.2020.1833946

Wu, C., Wu, P., Wang, J., Jiang, R., Chen, M., & Wang, X. (2021b). Developing a hybrid approach to extract constraints related information for constraint management. Automation in Construction, 124, 103563. https://doi.org/10.1016/j.autcon.2021.103563

Wu, C., Wu, P., Wang, J., Jiang, R., Chen, M., & Wang, X. (2021c). Ontological knowledge base for concrete bridge rehabilitation project management. Automation in Construction, 121, 103428. https://doi.org/10.1016/j.autcon.2020.103428

Wu, P., Wang, J., & Wang, X. (2016). A critical review of the use of 3-D printing in the construction industry. Automation in Construction, 68, 21–31. https://doi.org/10.1016/j.autcon.2016.04.005

Xu, S., Wang, J., Shou, W., Ngo, T., Sadick, A. M., & Wang, X. (2020). Computer vision techniques in construction: A critical review. Archives of Computational Methods in Engineering. https://doi.org/10.1007/s11831-020-09504-3

Yu, W., Li, B., Jia, H., Zhang, M., & Wang, D. (2015). Application of multi-objective genetic algorithm to optimize energy efficiency and thermal comfort in building design. Energy and Buildings, 88, 135–143. https://doi.org/10.1016/j.enbuild.2014.11.063

Zang, H., Zhang, S., & Hapeshi, K. (2010). A review of nature-inspired algorithms. Journal of Bionic Engineering, 7(4), S232–S237.

Zhang, Y., Chen, X., Yang, Y., Ramamurthy, A., Li, B., Qi, Y., & Song, L. (2020). Efficient probabilistic logic reasoning with graph neural networks. arXiv preprint arXiv:2001.11850.

Zhang, Z., Cui, P., & Zhu, W. (2018). Deep learning on graphs: A survey. IEEE Transactions on Knowledge and Data Engineering, 14(8), 1–24. https://doi.org/10.1109/TKDE.2020.2981333

Zhou, G.-D., Xie, M.-X., Yi, T.-H., & Li, H.-N. (2019). Optimal wireless sensor network configuration for structural monitoring using automatic-learning firefly algorithm. Advances in Structural Engineering, 22(4), 907–918. https://doi.org/10.1177/1369433218797074

Zhu, J., Wang, X., Wang, P., Wu, Z., & Kim, M. J. (2019). Integration of BIM and GIS: Geometry from IFC to shapefile using open-source technology. Automation in Construction, 102, 105–119. https://doi.org/10.1016/j.autcon.2019.02.014

Zuckerberg, M. (2021). Connect 2021. https://www.facebook.com/facebookrealitylabs/videos/561535698440683/

Zweifach, S. M., & Triola, M. M. (2019). Extended reality in medical education: Driving adoption through provider-centered design. Digital Biomarkers, 3(1), 14–21.

Funding

Australian Research Council, LP180100222.

Ethics declarations

Competing interests

The authors have no competing interests to declare that are relevant to the content of this article. Xiangyu Wang is an editorial board member for AI in Civil Engineering and was not involved in the editorial review of, or the decision to publish, this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wang, X., Wang, J., Wu, C. et al. Engineering Brain: Metaverse for future engineering. AI Civ. Eng. 1, 2 (2022). https://doi.org/10.1007/s43503-022-00001-z

DOI: https://doi.org/10.1007/s43503-022-00001-z