Abstract

Comprehensive school tobacco policies have the potential to reduce smoking in vocational education, where smoking is widespread. Assessing the implementation process is important for understanding whether and how complex interventions work, yet many studies do not measure receipt among the target group. We conducted a quantitative process evaluation of a newly developed smoking intervention in Danish vocational education to (1) operationalize measures of delivery and receipt, (2) investigate the extent to which the intervention was delivered and received, and (3) analyze whether delivery and receipt differed across school settings. We used questionnaire data collected 4–5 months after baseline among students (N = 644), teachers (N = 54), and principals (N = 11) from 8 intervention schools to operationalize implementation at the school level (delivery: content, quality, and fidelity) and at the student level (receipt: participation, responsiveness, exposure, and individual-level implementation). We calculated means and compared levels across school settings using stratified analyses and mixed models. On average, the total intervention was delivered with 76% fidelity to its intended design and received with a mean individual-level implementation of 36% across all students. Relatively low mean participation and responsiveness indicated challenges in reaching the students with the intervention components, and delivery and receipt varied between school settings. This study highlights the challenge of reaching the intended target group in complex health behavior interventions, even when relatively high fidelity is achieved. Further studies using the operationalized measures can give insight into the ‘black box’ of the intervention and strengthen future programs targeting smoking in vocational education.

Smoking remains a major public health problem with profound socioeconomic inequalities (Hiscock et al., 2012). Among European young people, daily smoking is more prevalent among vocational school students than among academic students (de Looze et al., 2013). This is also the case in Denmark, where 29% of students in vocational education and training (vocational school) (Ringgaard et al., 2020) and 9% of academic high school students smoked daily in 2019 (Pisinger et al., 2019). Compared to academic high school students, more vocational school students come from lower socioeconomic backgrounds (Statistics-Denmark, 2019). The high smoking prevalence among vocational school students is concerning because it might contribute to socioeconomic inequalities in health later in life. Frohlich and Potvin (2008) argue that addressing social inequalities in health requires a focus on vulnerable populations, such as young people of low socioeconomic position, who are more likely to initiate smoking, less likely to succeed in smoking cessation, and more exposed to tobacco’s harms (Hiscock et al., 2012). Thus, smoking interventions, i.e., interventions to prevent initiation, increase cessation, and prevent escalation of smoking, are highly needed in vocational schools (de Looze et al., 2013).

School tobacco policies have been widely used in efforts to prevent cigarette smoking in different high school settings (Galanti et al., 2014). Evidence of their effectiveness is inconclusive (Galanti et al., 2014), though recent studies, e.g., a study across six European cities, demonstrated associations between comprehensive school tobacco policies and reduced smoking (Källmén et al., 2020; Mélard et al., 2020). A systematic review suggests that comprehensive smoking bans, clear rules, strict enforcement, and availability of education and prevention might be associated with less smoking in adolescent school settings (Galanti et al., 2014). Several studies point towards enforcement as a key element (Linnansaari et al., 2019; Schreuders et al., 2017).

The prohibition of smoking for all students during school hours (smoke-free school hours) might be preferable to other school tobacco policies, such as the prohibition of smoking on school premises, since the latter might maintain or reinforce the visibility of smoking (Schreuders et al., 2017). We tested a newly developed smoking intervention (the “Focus” intervention) of unknown efficacy in a cluster-randomized controlled trial at Danish vocational schools and preparatory basic education in 2018 and 2019. Preparatory basic education is a program for young people under 25 years of age that is comparable to vocational school in terms of smoking patterns and socioeconomic characteristics. In this paper, vocational education is used as a common term for vocational school and preparatory basic education. The aim of the intervention was to reduce daily cigarette smoking among students through the introduction of a comprehensive school tobacco policy (smoke-free school hours), a course for school staff, an edutainment session, an educational curriculum, a “quit-and-win” competition, and access to smoking cessation support (Jakobsen et al., 2021; Kjeld et al., 2023).

School-based smoking interventions have often targeted primary and secondary schools (Thomas et al., 2013), e.g., a Danish intervention similar to “Focus,” which was effective in preventing smoking through a combination of a school tobacco policy, an educational curriculum, and parental involvement (Andersen et al., 2015). Fewer studies have been conducted in vocational schools. An international systematic review of programs targeting the use of tobacco, alcohol, and other drugs among youth in alternative high schools, e.g., vocational education, which included studies from the US, UK, Europe, and Asia, indicated that programs based on motivation enhancement, life skills, and decision making had been most successful among this target group (Sussman et al., 2014). A Danish intervention in vocational schools, “Shaping the Social,” showed that improving the social environment increased school connectedness and reduced progression to daily smoking among occasional smokers, though the intervention had no effect on smoking among daily smokers (Andersen et al., 2016). A French intervention included peer education components (Cousson-Gélie et al., 2018), which have been effective in preventing smoking among school children (Campbell et al., 2008). A recent Danish intervention, “Smoke-Free Vocational Schools,” demonstrated associations between activities prior to the introduction of a smoke-free school hours tobacco policy and implementation fidelity after 5 and 14 months (Hjort et al., 2022). The activities included a participatory workshop to establish new facilities for student school-break activities that replace social smoking, a workshop to prepare staff for implementing the school tobacco policy, and fixed procedures for policy implementation (Hjort et al., 2022). However, to our knowledge, no smoking interventions including school tobacco policies in vocational education have been evaluated in a randomized study design.
The “Focus” intervention presented in this paper combined a comprehensive school tobacco policy with educational and preventive components, which might increase effectiveness (Galanti et al., 2014); it was evaluated in a cluster-RCT and included the preparatory basic education program.

In 2021, a Danish national policy required all upper-secondary education institutions with students under 18 years of age, including vocational education, to introduce a smoke-free school hours tobacco policy. A survey from 2022 showed that 81% of daily or occasional smokers in vocational education still smoked during school hours (Petersen et al., 2022), indicating that the policy has not been properly implemented. The effect evaluation of the “Focus” intervention, which was conducted prior to the national law, showed no statistically significant difference in smoking status between students from intervention and control schools 4–5 months after baseline, though per-protocol analyses suggested an effect on daily and regular smoking among students in schools that implemented the full intervention compared to the control group (daily smoking: OR 0.44, 95% CI 0.19, 1.02) (Kjeld et al., 2023). However, because implementation was only dichotomized (i.e., full vs. partial intervention) and this dichotomization was not underpinned by a conceptual framework, it remains unclear how the implementation process and the implementation of the single intervention components (or their interrelations) can explain the outcomes. A systematic process evaluation including a thorough assessment of the implementation can help clarify whether improved implementation of the intervention components, including smoke-free school hours, can reduce smoking in vocational education.

Assessment of the implementation process of complex interventions is essential to understand whether, and how, they are effective (Carroll et al., 2007; Durlak & DuPre, 2008). It can help avoid type III error, which occurs when an inadequately implemented program is evaluated (Basch et al., 1985; Dobson & Cook, 1980). Such an error might lead to wrong conclusions about whether null results are due to wrong assumptions in the program theory (theory failure) or to poor implementation of the program (implementation failure) (Linnan & Steckler, 2002). A range of theories, models, and frameworks have been published within the area of process evaluation and implementation science (Carroll et al., 2007; Damschroder et al., 2009; Durlak & DuPre, 2008; Glasgow et al., 1999; Linnan & Steckler, 2002; Martinez et al., 2014; Proctor et al., 2011; Skivington et al., 2021). Yet the measurement of implementation in quantitative analyses often covers only one or a few aspects, such as fidelity and dose (Durlak & DuPre, 2008). Indeed, a recent systematic review of school health policy implementation measurement tools showed that fidelity, i.e., the extent to which programs are delivered as intended, was the most commonly assessed implementation outcome (McLoughlin et al., 2021). The conceptual framework for implementation fidelity by Carroll et al. (2007) illustrates how fidelity is interrelated with other factors, such as intervention complexity, facilitation strategies, quality of delivery, and participant responsiveness, that can moderate the level of fidelity achieved, and thus emphasizes the complexity of the implementation process. Like Carroll et al. (2007), other prominent implementation frameworks include aspects of participants’ receipt, i.e., how far participants are exposed to and how they respond to interventions, such as reach, dose received, and participant responsiveness (Carroll et al., 2007; Glasgow et al., 2019; Linnan & Steckler, 2002).
However, these aspects are measured less frequently (Ferm et al., 2018). We therefore applied a model developed by Ferm et al. (2018), which is conceptually based on the framework for implementation fidelity by Carroll et al. (2007) and includes quantifiable measurements of delivery (organizational level) and receipt (individual level), for a comprehensive evaluation of the implementation process. The model is based on the program theory and includes multiple aspects of implementation, including dose delivered, content, quality, participation, and responsiveness (Carroll et al., 2007; Ferm et al., 2018).

We included a variety of vocational programs in the intervention with different professional orientations, organizational capacities, student compositions, etc. (Hjerteforeningen, 2017; Ringgaard et al., 2020) to increase the representativeness of the setting. Based on previous research, and because implementation is known to be greatly influenced by the context in which it occurs (Craig et al., 2018; Durlak & DuPre, 2008; Gaber et al., 2022; Minary et al., 2018; Nilsen & Bernhardsson, 2019; Waller et al., 2017), we expected implementation to vary between the included school settings. The objectives of this study are as follows:

(a) To operationalize quantitative measurements of implementation at the school level (delivery) and student level (receipt) for the total intervention and each intervention component

(b) To assess the extent to which the intervention was delivered and received as intended

(c) To examine whether delivery and receipt differed across school settings

Methods

The “Focus” Intervention

Setting

Danish vocational school is an upper-secondary education that qualifies students for a skilled profession within four main subject areas: (1) Care, health, and pedagogy (e.g., social and health care assistant); (2) Administration, commerce, and business service (e.g., office assistant); (3) Food, agriculture, and hospitality (e.g., chef); and (4) Technology, construction, and transport (e.g., electrician). It consists of a two-part school-based basic program and a main program that alternates between school and apprenticeships in approved workplaces (Statistics-Denmark, 2019). The “Focus” intervention is intended for older adolescents (aged 15–19 years) and young adults (aged 20–24 years) attending the first part of the basic program (which is mandatory for students who completed lower secondary school less than two years ago; Statistics-Denmark, 2019), the second part of the basic program, or preparatory basic education. Preparatory basic education is for young people under 25 years of age who have completed lower secondary school and need to improve professionally, personally, or socially to proceed to the labor market or to upper-secondary education, often vocational school. The program is mostly school-based but can also involve internships, and it includes a large amount of individual guidance.

Intervention Components and Implementation Strategies

The intervention was developed in 2017–2018 based on the stages of the Behavior Change Wheel model (Michie et al., 2011) and informed by Intervention Mapping, including a thorough qualitative needs assessment study and expert and stakeholder involvement. It was underpinned by current evidence, the “Capability Opportunity Motivation—Behavior” (COM-B) model of behavior change, self-determination theory (Ryan & Deci, 2000), and social–ecological theories. The preliminary program was feasibility tested among the target group and adjusted accordingly. Because the program was newly developed, its efficacy was unknown prior to the intervention period, and the aim of the cluster-RCT was to test the effectiveness of the intervention in reducing smoking among students in vocational education.

The intervention consists of six intervention components: (1) The introduction and enforcement of a comprehensive school tobacco policy, “smoke-free school hours,” i.e., students, staff, and visitors were not allowed to smoke during school hours. (2) A two-day external course for 2–4 staff members in short motivational counseling about smoking among young people, organized by the Danish Cancer Society. The staff course was also intended to facilitate supportive, non-judgmental enforcement of the school tobacco policy. (3) An edutainment session, i.e., a one-time event for students at the beginning of the school year, performed by an external professional actor, that combined educational and entertaining elements to promote knowledge about nicotine dependency, the consequences of smoking, individual risk of illness, and common misperceptions about smoking. The session also served to make students aware of the new school tobacco policy and to introduce the smoking cessation support offer. (4) An educational curriculum of eight sessions delivered by teachers in class, designed to challenge students’ beliefs, attitudes, and possible misperceptions about smoking, to stimulate reflection about their own smoking behavior and its social influence, and to provide opportunities to form social relations and activities as alternatives to smoking. (5) A class-based “quit-and-win” competition aiming to increase students’ motivation to abstain from smoking and their support for each other in doing so. The competition entailed measuring carbon monoxide levels in students’ breath twice during the intervention period, carried out by student assistants from the project group. The class with the largest average reduction in carbon monoxide level (or maintenance of a low level) won a prize. (6) Access to smoking cessation support for all students and staff, provided by the National Quitline through individual telephone counseling. 
Detailed descriptions of each intervention component are published elsewhere (Jakobsen et al., 2021; Kjeld et al., 2023).

To support the implementation of the components, a printed booklet with implementation guidelines was distributed to each school prior to the intervention period, outlining the intervention activities and recommending how to implement them. The educational material was also provided to the schools as a printed booklet, to be handed out to the teachers, with instructions and recommendations on how to use it in class. Moreover, the project group visited each school before the intervention period to present the intervention components and discuss potential barriers and solutions. Posters communicating the project identity, messages about the tobacco policy, and instructions for signing up for the National Quitline smoking cessation support offer were distributed to the schools.

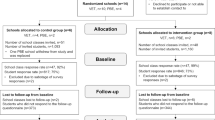

Study Design and Participants

The intervention was evaluated in a cluster-randomized controlled trial running over two periods, from August 2018 to January 2019 and from August 2019 to January 2020. The overall aim was to prevent and reduce daily smoking (Jakobsen et al., 2021). A total of 14 schools were included, with 8 schools in the intervention group and 6 schools in the control group. Randomization was stratified by school type: of the intervention schools, 3 covered the social and health care assistant education within the care, health, and pedagogy main subject area (social and health care schools), 3 covered educations within the main subject areas of technology, construction, and transport and of administration, commerce, and business service (technical and commercial schools), and 2 covered the preparatory basic education (preparatory schools). After randomization, one of the two preparatory schools announced that it would not be able to implement the full intervention due to organizational changes and a new management. To adhere to the intention-to-treat principle, the school remained in the study. Further details on recruitment and randomization can be found in the study protocol and effect evaluation papers (Jakobsen et al., 2021; Kjeld et al., 2023). The present study is based on data from the intervention schools.

Data Collection

Students from the 8 intervention schools were invited to answer web-based questionnaires during school hours in the first week of school in August (baseline) and 4–5 months later in December or January (follow-up). Both questionnaires included items on primary and secondary outcomes. The follow-up questionnaire also included items on implementation, i.e., students’ participation in and responsiveness to the intervention components; these items were informed by the framework for implementation fidelity (Carroll et al., 2007) and developed by the project group, inspired by surveys of similar populations (Bast et al., 2016; Ringgaard et al., 2020; Hjort et al., 2021a). Questionnaires for teachers and principals were distributed at the beginning of the school year and three months later. We invited those members of the management at each school who had been involved with the intervention components to answer the principal questionnaire. We asked the principal or contact person at each school to invite all teachers who had been involved in the educational curriculum and at least two other teachers to answer the teacher questionnaire; thus, it was up to each school to decide how many teachers to invite. Online Resource 1 presents the numbers of invited and responding students, teachers, and principals at each of the 8 intervention schools. The three-month questionnaire covered the schools’ delivery of the intervention components, including the content delivered and different aspects of the quality of delivery. More details on the data collection are published elsewhere (Jakobsen et al., 2021). The preparatory school that had difficulties implementing the intervention received reduced versions of the questionnaire.

Study Population of the Present Study

A total of 644 students who answered the implementation questionnaire 4–5 months after baseline (response rate 56%) were included in the study, together with 54 teachers (response rate 35%) and 11 principals (response rate 34%) who answered the implementation questionnaires (Online Resource 1).

Implementation Measurement: Conceptual Framework and Model for Quantification

This study draws on the conceptual framework by Carroll et al. (2007) and on Ferm et al.'s (2018) model to quantify implementation.

Conceptual Framework of Implementation Fidelity

The framework for implementation fidelity by Carroll et al. (2007) differs from other comprehensive frameworks in clarifying the function of each concept and the relationships between them. It includes the concept of adherence, equivalent to implementation fidelity, defined as the extent to which those responsible for delivering the intervention adhere to how it was intended to be delivered in terms of the subcategories content, coverage, frequency, and duration. Content is described as the “active ingredients” of the intervention, e.g., the treatment, skills, or knowledge to be delivered to the participants. Four concepts can potentially moderate the level of implementation fidelity achieved: intervention complexity, facilitation strategies, quality of delivery, and participant responsiveness (Carroll et al., 2007). Quality of delivery refers to the manner in which the intervention is delivered in order to achieve what was intended (Carroll et al., 2007). Participant responsiveness refers to participants’ attitudes towards, or engagement in, an intervention and is rooted in Rogers’ diffusion of innovation theory (Carroll et al., 2007; Rogers, 2003); that is, the acceptability of an intervention can moderate implementation fidelity through, e.g., deliverers’ non-compliance with or avoidance of certain parts of an intervention due to lack of interest or resistance among the participants (Carroll et al., 2007). What Carroll et al. (2007) describe as concepts are henceforth termed “dimensions.”

Model to Quantify Implementation Fidelity

We applied Ferm et al.’s model to quantify implementation (Ferm et al., 2018), which is based on the implementation fidelity framework described above (Carroll et al., 2007). It quantifies delivery (dose delivered, content, quality, fidelity, and organizational-level implementation) and receipt (participation, responsiveness, exposure, and individual-level implementation) (Ferm et al., 2018) and encompasses three steps: (1) Development of a program logic that specifies the theoretical assumptions of how the intervention is expected to work, and of an intervention protocol, i.e., an operationalized program logic addressed to the intervention deliverers. This step includes a specification of how important fidelity is compared with adaptation, i.e., whether the protocol should be strictly followed or whether there is room for local adaptations to the target group (Ferm et al., 2018). (2) A priori specification of measurable success criteria for the complete and acceptable delivery of each intervention component. The success criteria should incorporate the specifications of the importance of fidelity versus adaptation from the previous step so as to take into account the deliverers’ adherence to those local adaptations that were allowed or encouraged in the intervention protocol. (3) A priori specification of measurable success criteria for the receipt of the intervention components among the target group (Ferm et al., 2018).

Implementation Measurement: Operationalization for the “Focus” Intervention

Program Logic and Intervention Protocol

We developed a program theory (Online Resource 2), distributed implementation guidelines to the intervention schools, and developed an implementation questionnaire prior to the intervention period. After the intervention period and data collection, we adopted the approach of Ferm et al. (2018) and reviewed the program theory and guideline documents in light of the model’s first step. For example, we clarified the extent to which adaptation had been allowed and how this had been communicated to the intervention deliverers. The implementation guidelines reflected that “Focus” was designed with room for local adaptation (Carroll et al., 2007; Durlak & DuPre, 2008).

Quantification of Delivery and Receipt

We defined measurable success criteria for delivery and receipt (Table 1, Online Resource 3) based on the program theory, the procedures in step 1, and scientific discussions within the project group. We used data from the teacher and principal questionnaires to quantify delivery. For each school, we assigned a percentage to the implementation dimensions content and quality of delivery according to the success criteria obtained: 100% for optimal delivery, 50% for acceptable delivery, and 0% if neither was obtained. Ferm et al. (2018) suggest calculating dose delivered as the number of delivered sessions out of the number of intended sessions, but this was not applicable to the structural delivery of the school tobacco policy. Thus, we left out dose delivered in the present study. Principal and teacher data covered different aspects of content and quality: we expected members of the management to possess knowledge about general topics at school, such as the enforcement and communication of the tobacco policy, whereas teachers were aware of specific issues that took place in the classroom. Project logbooks were the source of information in cases where the project group or other external actors were responsible for the delivery, e.g., the edutainment session. The level of fidelity of each component was calculated as the mean of content and quality (Ferm et al., 2018) (Tables 1 and 2):

$$\text{Fidelity} = \frac{\text{Content} + \text{Quality}}{2}$$

In the original model, organizational-level implementation is calculated as the mean of dose delivered and fidelity (Ferm et al., 2018); because dose delivered was not included here, organizational (school)-level implementation was equal to fidelity. Fidelity of the total intervention was calculated as the mean fidelity of all six components.
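As an illustration of the delivery calculations, the Python sketch below (our own, not the project's SAS code) maps hypothetical criterion labels to the 0/50/100 coding. It takes quality as the mean of the available aspects, computes fidelity as the mean of content and quality (falling back to whichever is available, per the missing-data principle described under Principles for Coding), and averages component fidelities for the total intervention. The labels, function names, and the choice to skip a component with missing fidelity are assumptions.

```python
from statistics import mean

# Hypothetical labels for the a priori success criteria; the 0/50/100 coding
# follows the scheme described in the text.
SCORE = {"none": 0.0, "acceptable": 50.0, "optimal": 100.0}


def component_fidelity(content, quality_aspects):
    """School-level fidelity of one component: mean of content and quality.

    `content` is a criterion label or None (missing). `quality_aspects` is a
    list of labels, where None marks a missing aspect; quality is the mean of
    the available aspects. If one dimension is missing, fidelity equals the
    other; if both are missing, None is returned.
    """
    dims = []
    if content is not None:
        dims.append(SCORE[content])
    quality = [SCORE[q] for q in quality_aspects if q is not None]
    if quality:
        dims.append(mean(quality))
    return mean(dims) if dims else None


def total_fidelity(component_fidelities):
    """Fidelity of the total intervention: mean over component fidelities.

    Skipping components whose fidelity is None is an assumption of this
    sketch; the text does not state how that case was handled.
    """
    available = [f for f in component_fidelities.values() if f is not None]
    return mean(available)


# Usage: a hypothetical school with 'acceptable' tobacco-policy content and
# three of four quality aspects reported (one missing).
policy = component_fidelity("acceptable", ["optimal", "optimal", "acceptable", None])
print(round(policy, 1))  # 66.7
```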

We used student questionnaire data to quantify receipt. We assessed students’ participation in and responsiveness to the intervention components according to the pre-specified success criteria: no (0%), acceptable (50%), or optimal (100%) receipt. The staff course was not assessed at the individual level. Students’ exposure and individual-level implementation were then calculated from the school-specific fidelity percentage and the student-specific participation and responsiveness percentages. Each student’s exposure to each intervention component was calculated as the school-specific fidelity multiplied by the student-specific participation (Ferm et al., 2018) (Tables 1 and 2):

$$\text{Exposure} = \text{Fidelity} \times \text{Participation}$$

However, because we lacked appropriate data (such as absence data) for measuring participation in the structurally delivered tobacco policy, participation was not measured for this component.

Individual-level implementation was calculated as exposure multiplied by responsiveness (Ferm et al., 2018) (Tables 1 and 2):

$$\text{Individual-level implementation} = \text{Exposure} \times \text{Responsiveness}$$

Individual-level implementation of the total intervention was calculated as the mean individual-level implementation of all components. Table 2 shows the implementation dimensions of the original model and the present study. An example of assigned percentages and calculations is provided in Online Resource 4.
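The receipt calculations can be sketched the same way. In this hypothetical Python example, all measures are percentages, so each product is divided by 100. Exposure to the tobacco policy, for which participation was not measured, is assumed to equal the school's fidelity (our assumption, not stated in the text), and the staff course is left out because it was not assessed at the individual level. Component names and values are illustrative only.

```python
from statistics import mean


def exposure(school_fidelity, participation):
    """Exposure (0-100): school-specific fidelity x student participation.

    Participation was not measured for the tobacco policy; this sketch
    assumes exposure then equals fidelity (pass participation=None).
    """
    if participation is None:
        return school_fidelity
    return school_fidelity * participation / 100.0


def individual_implementation(exposure_pct, responsiveness):
    """Individual-level implementation (0-100): exposure x responsiveness."""
    return exposure_pct * responsiveness / 100.0


def total_individual_implementation(components):
    """Mean individual-level implementation over the assessed components.

    `components` maps a hypothetical component name to a tuple
    (school fidelity, participation or None, responsiveness), in percent.
    """
    return mean(
        individual_implementation(exposure(fid, part), resp)
        for fid, part, resp in components.values()
    )


# Hypothetical student; the staff course is excluded (not assessed here).
student = {
    "tobacco_policy": (75.0, None, 50.0),  # exposure 75 -> implementation 37.5
    "curriculum": (50.0, 100.0, 100.0),    # exposure 50 -> implementation 50
    "edutainment": (100.0, 100.0, 50.0),   # exposure 100 -> implementation 50
}
print(round(total_individual_implementation(student), 2))  # 45.83
```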

Statistical Analyses

We used SAS 9.4 to calculate the implementation measurements and to conduct the statistical analyses. We calculated all operationalized dimensions as a percentage (0–100) for each school or student. School settings were divided into three categories: (1) social and health care schools, (2) technical and commercial schools, and (3) preparatory schools. To assess the extent to which the intervention was implemented at the school level, we calculated the mean percentage of each delivery dimension (content, quality, fidelity) across all schools. To assess the extent to which the intervention was implemented at the individual level, we calculated the mean percentage of each receipt dimension (participation, responsiveness, exposure, individual-level implementation) across all students. To investigate whether delivery and receipt differed between school settings, we stratified mean fidelity by school setting and used PROC MIXED, which accounts for the clustered data structure, to compare mean individual-level implementation across school settings with 95% confidence intervals and p-values. Because we assumed the smoke-free school hours policy to be an important component, we performed sensitivity analyses to examine whether assigning different weights to the tobacco policy when calculating implementation of the total intervention (weighted means) would alter the results.
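The sensitivity analysis with weighted means can be illustrated with a small Python function in which the tobacco policy receives a chosen weight and every other component a weight of 1. The weights 1, 2, and 3 and the component scores are hypothetical; the text does not report which weighting factors were used.

```python
def weighted_total(component_scores, policy_weight, policy_key="tobacco_policy"):
    """Weighted mean of component scores (0-100) in which the tobacco policy
    gets `policy_weight` and every other component gets weight 1."""
    weights = {
        name: (policy_weight if name == policy_key else 1.0)
        for name in component_scores
    }
    total_weight = sum(weights.values())
    return sum(weights[n] * s for n, s in component_scores.items()) / total_weight


# Hypothetical school with low policy fidelity but full delivery elsewhere.
scores = {"tobacco_policy": 25.0, "curriculum": 100.0, "edutainment": 100.0}
for w in (1.0, 2.0, 3.0):  # illustrative weights only
    print(w, weighted_total(scores, w))
# 1.0 75.0
# 2.0 62.5
# 3.0 55.0
```

Up-weighting a poorly implemented policy pulls the total down, which is the kind of shift such a sensitivity analysis is meant to reveal.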

Principles for Coding, Calculations, and Missing Data

To calculate the implementation measurements, we used the most frequent teacher or principal response if there were differing responses to the same question within the same school. If responses were equally distributed, the most moderate response was selected. If a response was missing in the principal data, the corresponding teacher response, if any, was used. When a dimension consisted of several aspects, such as quality of delivery of the tobacco policy (the mean of 5 aspects), and a school or student had a missing value in one or more of the aspects, the mean of the available aspects was calculated. We applied the same principle when a dimension was calculated from two other dimensions, such as fidelity; that is, if a school had a missing value in, e.g., content, fidelity was equal to quality.
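The rules for aggregating differing staff responses can be sketched as follows. This Python version assumes an ordinal answer scale and interprets “most moderate” as the tied option closest to the scale midpoint; the scale, function names, and example answers are hypothetical.

```python
from collections import Counter


def school_response(responses, scale):
    """Aggregate differing staff answers to one item within one school.

    `responses` holds answers from `scale` (an ordered list of options) or
    None for missing. The most frequent answer wins; ties go to the tied
    option closest to the middle of the scale (our reading of "most
    moderate"). Remaining ties between equidistant options fall back to the
    first one seen.
    """
    answers = [r for r in responses if r is not None]
    if not answers:
        return None
    counts = Counter(answers)
    top = max(counts.values())
    tied = [a for a, c in counts.items() if c == top]
    middle = (len(scale) - 1) / 2
    return min(tied, key=lambda a: abs(scale.index(a) - middle))


def principal_or_teacher(principal_answers, teacher_answers, scale):
    """Use the principal data; fall back to teachers when it is missing."""
    answer = school_response(principal_answers, scale)
    return answer if answer is not None else school_response(teacher_answers, scale)


SCALE = ["never", "rarely", "sometimes", "often", "always"]  # hypothetical item
print(school_response(["often", "often", "rarely"], SCALE))  # often
print(school_response(["always", "rarely"], SCALE))          # rarely
print(principal_or_teacher([None], ["sometimes"], SCALE))    # sometimes
```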

Results

Characteristics of the Study Population

About half of all students were identified as female; this varied from 85% at social and health care schools to 23% at technical and commercial schools (Table 3). About half (54%) were in the youngest age group of 15–17 years, but this varied across school settings. Daily smoking at baseline was highest among preparatory students (41%) and lowest among technical and commercial students (21%). Of the 54 teachers, 21 taught the first part, the second part, or both parts of the basic program (of whom 5 also taught other types of classes), 12 taught other classes such as commercial high school or preparatory basic education, and 5 had other job functions such as administration of internships. Of the 11 principals, 2 were the principal or vice principal of the school, 8 were heads of education, and 2 had other job titles, e.g., head of development. Overall, 74% of teachers and 67% of principals had been employed at their school for more than 3 years.

To Which Extent was the Intervention Delivered as Intended?

The mean fidelity of the total intervention was 76%, i.e., on average the intervention was delivered to this extent as intended according to the program theory, and mean fidelity of the separate components ranged from 56 to 100% (Table 4). For the tobacco policy component, content was delivered with a mean of 63% across all schools. The quality aspects of enforcement, having a written policy, and providing information about the policy ranged from 83 to 90%, while having a dialog scored 67%. In terms of content, the educational curriculum was delivered with a mean of 56%, while the components delivered by external partners (staff course, competition, edutainment session) were delivered with a mean of 100%. The mean quality scores for the staff course, smoking cessation support, and edutainment session ranged from 53 to 81%.

To Which Extent was the Intervention Received as Intended?

A mean individual-level implementation of 36% of the total intervention was achieved across all students, and individual-level implementation of the separate intervention components ranged from 23 to 48% (Table 4). For the tobacco policy component, a mean responsiveness of 54% resulted in a mean individual-level implementation of 37%, indicating that some students had negative attitudes towards the policy. Almost a third of all students (30%) disagreed or totally disagreed that it is fair for the school to make rules about whether students are allowed to smoke during school hours (not shown in tables). Mean participation in the remaining components ranged from 56 to 69% and was lowest for the smoking cessation support offer. The educational curriculum was received with a mean responsiveness of 73%, while responsiveness ranged from 48 to 49% for the competition and edutainment session.

Did Delivery Differ Across School Settings?

The mean fidelity of the total intervention was highest in social and health care schools (89%), followed by technical and commercial schools (75%), and lowest in preparatory schools (57%) (Table 4). The same pattern was seen for the tobacco policy, for which fidelity was especially low at preparatory schools (25%) because one school decided not to implement the full intervention. That school scored 0% in all dimensions of delivery, since it made no changes to its school tobacco policy in the given school year. The three school settings did not clearly differ in fidelity to the other intervention components, except for the educational curriculum, where mean fidelity was much lower at preparatory schools (25%) than in the two other school settings (67%).

Did Receipt Differ Across School Settings?

Individual-level implementation of the total intervention was highest in social and health care schools (52%), followed by technical and commercial schools (29%), and lowest in preparatory schools (21%). The same pattern held for all intervention components without exception (Table 4). The mean differences in individual-level implementation of the total intervention and of all separate intervention components were statistically significant when social and health care schools were compared with technical and commercial schools and with preparatory schools, respectively (Table 5). Between the two latter school settings, however, individual-level implementation was only slightly higher at technical and commercial schools, and the differences were statistically significant only for the total intervention and for the educational curriculum and class competition components.

Sensitivity analyses in which the tobacco policy was weighted more heavily than the other intervention components showed that the means of individual-level implementation did not change, and the means of fidelity were reduced by 0.7–1.1 percentage points for every 0.5 increase in the weighting factor of the policy (Online Resource 5).
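To make the weighting explicit, the following Python sketch computes a total-intervention score as a weighted mean of component scores in which only the tobacco policy is up-weighted. It is an illustration under stated assumptions, not the study's analysis code: the component names and scores are hypothetical (they are not the values in Table 4 or Online Resource 5), and `weighted_total` is a hypothetical helper.

```python
from typing import Mapping


def weighted_total(
    scores: Mapping[str, float],
    policy_weight: float = 1.0,
    policy_key: str = "tobacco_policy",
) -> float:
    """Weighted mean of component scores (in %); only the policy is re-weighted."""
    if policy_key not in scores:
        raise KeyError(f"missing component: {policy_key}")
    weights = {name: (policy_weight if name == policy_key else 1.0) for name in scores}
    total_weight = sum(weights.values())
    return sum(weights[name] * score for name, score in scores.items()) / total_weight


if __name__ == "__main__":
    # Hypothetical fidelity scores (percent) for six components.
    fidelity_scores = {
        "tobacco_policy": 60.0,
        "curriculum": 60.0,
        "staff_course": 90.0,
        "competition": 90.0,
        "edutainment": 90.0,
        "cessation_support": 90.0,
    }
    # Weighting factors increasing in steps of 0.5.
    for w in (1.0, 1.5, 2.0, 2.5):
        print(w, round(weighted_total(fidelity_scores, policy_weight=w), 2))
```

Algebraically, the weighted total equals the mean of the other components plus a term proportional to the difference between the policy score and that mean, so it shifts only when the policy score differs from the other components. A policy score close to the others' mean leaves the total almost unchanged, which is one possible reading of why individual-level implementation was stable while fidelity fell slightly.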

Discussion

This study presents an operationalization of quantitative measurements of implementation of a school-based multi-component intervention to reduce smoking in Danish vocational education. We found that the schools delivered the total intervention with a mean fidelity of 76% relative to the intended delivery, and that students received it at the individual level with a mean of 36%. Delivery and receipt varied between school settings, with higher levels at social and health care schools. Receipt (individual-level implementation) differed mainly between social and health care schools on the one hand and technical and commercial schools and preparatory schools on the other, and not clearly between the latter two school settings.

The findings indicate that the schools delivered the components with relatively high fidelity, but that the components were less successfully received by the students. Many students had negative attitudes towards the policy, reflected in low responsiveness, and both responsiveness to the competition and edutainment components and participation in the smoking cessation support offer (measured as awareness of the offer) were rather low, indicating that these components did not reach or appeal to the students as expected. For the educational curriculum, by contrast, the results indicate that the schools were challenged in delivering the material, but that the material appealed to the students.

Comparison to Other Studies

Levels of Delivery and Receipt

The overall mean fidelity score of 76% and the range across intervention components are in line with other studies evaluating delivery of school-based health promotion interventions (Bast et al., 2019; Durlak & DuPre, 2008; Koorts et al., 2022), although large variations in the use of conceptual frameworks and operationalizations make it difficult to compare levels across studies (Martinez et al., 2014). A similar tobacco policy intervention in Danish elementary schools was delivered with 74% implementation fidelity, operationalized as a combined measure of adherence and quality (Bast et al., 2019). To our knowledge, no established benchmarks for successful implementation are available in the literature. Some authors define high implementation as a level greater than 67%, based on dividing 100% into tertiles (Koorts et al., 2022), though Durlak and DuPre (2008) point out that what counts as low or high implementation depends on the study. A level of around 60%, they argue, is common, and levels above 80% are rare (Durlak & DuPre, 2008). Given that 50% was defined as acceptable and 100% as optimal in the present study, 76% might be interpreted as lying between acceptable and optimal. By the same standard, the overall mean individual-level implementation of 36% could be interpreted as below the acceptable level. In social and health care schools, mean individual-level implementation was above 50% for the total intervention and for 3 out of 5 intervention components, so receipt might be interpreted as acceptable in this school setting.

Students’ Responses to School Tobacco Policies

The negative attitudes towards the tobacco policy resonate with findings from other studies. Resistance from students who smoke persistently and students from disadvantaged backgrounds was identified as a barrier to implementing school tobacco policies across seven European cities (Hoffmann et al., 2020). Further, Schreuders et al. (2017) examined students’ cognitive and behavioral responses to school tobacco policies and how these relate to policy implementation and enforcement. They found that school tobacco policies can trigger mechanisms leading to reduced smoking, but also countervailing mechanisms that can disrupt this process. For example, students may break the smoking rules when they have no sympathy for the school’s decision to prohibit smoking because they have internalized the belief that smoking asserts their personal autonomy (Schreuders et al., 2017). This points to potential reverse effects of school tobacco policies on smoking when implementation is insufficient or inconsistent. Taken together, these findings suggest a highly complex relationship between school tobacco policies and adolescents’ smoking behavior that is closely interlinked with implementation processes.

Differences Across School Settings

To our knowledge, no other studies have investigated differences in implementation of a smoking intervention across different upper-secondary school settings. The higher levels of implementation in social and health care vocational schools might be explained by these schools’ inherent focus on health, which aligns with the intervention’s aim of preventing unhealthy behavior and may have increased the schools’ motivation to implement it (Scaccia et al., 2015). Motivation and compatibility between the innovation and the existing values and norms of the school (Scaccia et al., 2015; Rogers, 2003) are central components of organizational readiness, which is known to influence the implementation process (Domitrovich et al., 2008; Durlak & DuPre, 2008; Dusenbury et al., 2003; Nilsen & Bernhardsson, 2019; Scaccia et al., 2015). The higher levels may also reflect that managers at social and health care vocational schools believe that health promotion is a school responsibility, a belief that a recent study identified as a facilitator for developing organizational readiness to implement smoke-free school hours in Danish vocational education (Hjort et al., 2021b).

The low implementation rates at preparatory schools might be related to contextual influences. First, the preparatory schools were involved in a merger process at the national level in the same year as they participated in the intervention, and the heavy workload and organizational changes complicated their delivery of the intervention components. Second, these schools enroll young people classified as NEET (not in education, employment, or training), who often struggle with social and psychological issues (Gariépy et al., 2022) and therefore might be harder to reach with school-based programs (Mercken et al., 2012; Bast et al., 2018). Thus, although the “Focus” intervention targeted a vulnerable population rather than taking a population approach, in order to avoid widening, and ideally to reduce, the health disparities associated with smoking (Frohlich & Potvin, 2008), the most vulnerable subgroup within the targeted setting appears to have been the hardest to reach. This finding is concerning in terms of social inequalities in health. According to Frohlich and Potvin (2008), vulnerable populations share social characteristics that put them “at risk of risks,” i.e., a higher mean total risk exposure than the rest of the population. To address smoking behavior in preparatory basic education in the future, more knowledge about this setting is therefore needed, and a more holistic, cross-disciplinary approach might be necessary, involving different stakeholders and services and focusing on social and psychological issues such as mental well-being and drug abuse (Gariépy et al., 2022).

Operationalization of Implementation Measurements

Other studies have quantified implementation in process evaluations (Hasson, 2010; Koorts et al., 2022; McLoughlin et al., 2021; Salahuddin et al., 2018), including for smoking interventions and school tobacco policies (Hjort et al., 2022; McLoughlin et al., 2021; Rozema et al., 2018), though many have addressed smoking cessation (Begum et al., 2021) or younger age groups (Bast et al., 2016, 2019; Dobbie et al., 2019). A recent study examined the implementation of a smoke-free school hours policy within the same setting and target group (Hjort et al., 2022), inspired by the approach for measuring implementation fidelity described by Bast et al. (2016, 2019). This approach entails pre-specifying cut-points for when implementation is or is not achieved, based on adherence, dose, quality of delivery, and participant responsiveness. Bast et al. (2019) assessed each of three main components of a smoking intervention in elementary schools as implemented or not implemented for each pupil, and pupils were then grouped according to how many components they had been exposed to. In contrast, individual-level implementation in the present study and in Ferm et al.’s model was operationalized as a percentage for each student or participant, i.e., as a degree rather than as either/or. This broader scale might be more suitable for capturing the nuances of organizations’ delivery of interventions, particularly complex public health interventions that are often adaptive, i.e., flexible in delivery to improve fit with the local context and thereby enhance effectiveness (Durlak & DuPre, 2008; Pérez et al., 2016). Applying both acceptable and optimal levels of implementation is therefore beneficial to encompass schools in the “gray zone” whose efforts might otherwise be categorized as “not implemented.” Furthermore, Ferm et al.’s approach enables more detailed analysis of potential dose-response relationships between implementation and outcomes.
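The difference between the either/or and the degree operationalizations can be illustrated with a small Python sketch. It assumes per-student component scores in percent and uses a hypothetical cut-point of 50%, echoing the acceptable level defined in this study. The function names are illustrative only and do not reproduce the exact procedures of Bast et al. or Ferm et al.

```python
from typing import Sequence


def components_implemented(degrees: Sequence[float], cut_point: float = 50.0) -> int:
    """Either/or approach: count components at or above a pre-specified cut-point."""
    return sum(1 for d in degrees if d >= cut_point)


def implementation_degree(degrees: Sequence[float]) -> float:
    """Degree approach: mean percentage across components."""
    if not degrees:
        raise ValueError("at least one component score is required")
    return sum(degrees) / len(degrees)


if __name__ == "__main__":
    # Two hypothetical students, three components each.
    near_miss = [45.0, 45.0, 45.0]
    not_reached = [0.0, 0.0, 0.0]
    for label, scores in (("near miss", near_miss), ("not reached", not_reached)):
        print(label, components_implemented(scores), implementation_degree(scores))
```

Both hypothetical students count as having zero components implemented under the either/or approach, whereas the degree approach separates them (45.0 versus 0.0). This is the “gray zone” discussed above, and the continuous score is also what makes a dose-response analysis possible.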

Methodological Considerations

Major strengths of the present study include the use of a comprehensive conceptual framework (Carroll et al., 2007) and model (Ferm et al., 2018) that consider the relationship between the implementation dimensions and take adaptations to the intervention into account (Carroll et al., 2007; Durlak & DuPre, 2008; Pérez et al., 2016), and the use of multiple data sources (students, teachers, principals) to capture multiple aspects of the implementation of a complex intervention. Moreover, collecting student data at school increased response rates and enabled us to reach groups that are normally at risk of non-response (Smit et al., 2009). The present study also has some limitations. First, the model by Ferm et al. (2018) was applied after the questionnaire development and data collection, so we did not have appropriate measurements of all implementation dimensions for all components. Comparisons of implementation scores between components should therefore be interpreted with caution. For example, comparing the tobacco policy and the smoking cessation support component is difficult because we had detailed information on both content and quality for the former but only on quality for the latter. Thus, the fidelity level of the cessation component is subject to more uncertainty than that of the tobacco policy. Second, low response rates made it difficult to reach the teachers involved with the educational curriculum, which might mean that the included teachers had limited knowledge about the delivery of the curriculum component. Third, the relatively high levels of delivery might be subject to some degree of information bias.
The measurements rely on self-reports from school staff and may therefore be affected by social desirability bias, but provider self-reports are often the most feasible solution in large interventions, and staff have unique perspectives on the implementation process (Goldberg Lillehoj et al., 2004). Thus, we believe that provider self-reports were the best available source of information. Fourth, many schools declined to participate during recruitment because they were reluctant to implement the tobacco policy. The management teams at the included schools are therefore likely to be relatively resourceful, and delivery rates of the tobacco policy might have been lower if the intervention had been conducted in a random sample of vocational schools. This selection bias could also apply at the student level, because schools with more resourceful management might have better social climates, which might influence, e.g., students’ participation in and responsiveness to the intervention components. Also, the high level of non-response from teachers and principals might have resulted in selection bias, because staff at schools where implementation was weak might have been less likely to respond to the questionnaire. We analyzed differences in students’ mean participation and responsiveness between schools according to whether principals did or did not respond to the implementation questionnaire (Online Resource 6). We found no significant differences for the tobacco policy and edutainment session, but significantly lower mean participation in the curriculum, class competition, and smoking cessation support at the school with no principal responses. This indicates that schools that implemented the components to a greater extent are better represented in the data of the present study.
Another methodological consideration is that the six intervention components were treated as equally important, although the program theory hypothesized that the tobacco policy was central to the intervention. We did not weight the components when calculating implementation (individual-level implementation and fidelity) of the total intervention, as no weighting factor was apparent from the literature, which would have made any choice of weights arbitrary. However, sensitivity analyses showed little to no change in the means when different weighting factors were applied to the tobacco policy component, so we considered the unweighted approach acceptable. Finally, distinguishing between the intervention itself and the strategies used to implement it is known to be challenging, and there is a gray area in between (Eldh et al., 2017). We were not familiar with the literature on implementation strategies (Powell et al., 2015) when the intervention was designed in 2017 and only retrospectively became aware that some parts of the intervention could be classified as implementation strategies. More explicit attention to implementation strategies might have strengthened the implementation process. Selecting appropriate strategies can be a complex task, and tailoring the choice of strategies to local needs in different settings, as suggested and guided by Powell et al. (2017), might be a way forward to make strategies more contextually sensitive and enhance implementation outcomes.

Conclusions

This study has various implications for research and practice within the field of school-based smoking programs and complex interventions in general. The results show a discrepancy between the delivery and receipt of the intervention: the components were generally delivered with relatively high fidelity, although some schools were more challenged, but receipt at the student level was lower and differed greatly between the school settings. This finding highlights the inadequacy of assessing organizations’ delivery of interventions according to protocol only, and the importance of assessing uptake of the intervention among the intended target group (i.e., the students) expected to change their health behavior. Identifying suboptimal receipt is essential, but it is also important to investigate why the target group is not reached. Further quantitative analyses using the operationalized measurements could provide more knowledge about how vocational students can be better reached in future smoking programs, e.g., analyses to identify school-level and student-level determinants of lower or higher implementation degree. As the “Focus” intervention was newly developed with unknown efficacy, and we found no overall effect on students’ smoking status (Kjeld et al., 2023), the results of the present study can be used to interpret this null finding and shed light on the role of the implementation process in the program’s effectiveness. This paper points towards a combination of theory failure and implementation failure: most components were delivered with high fidelity but were less successfully received, while others were hard to deliver but well received, indicating a complexity in the implementation process that can only partly be captured with quantitative methods.
A comprehensive qualitative process evaluation (not yet published) was conducted during the intervention period. It provides in-depth knowledge about participant perspectives and the role of context, which can further unfold the complex interactions between intervention and context and give insight into the ‘black box’ of how the intervention works (Skivington et al., 2021). Although this paper detected shortcomings in delivery and receipt, we also found varying degrees of receipt among the students and school types. A next step is therefore to investigate whether a higher degree of individual-level implementation leads to changes in the hypothesized outcomes, i.e., to empirically test the assumptions of the program theory. A strengthened knowledge base can provide decision makers with specific recommendations to improve future programs targeting smoking behavior in high-risk school settings such as vocational education. As for the external validity of the present study and the “Focus” intervention in general, the results might be transferable to other countries experiencing high smoking prevalence in vocational education or similar adolescent settings. Upper secondary school settings in other European countries, such as those in the “Smoking Inequalities Learning from Natural Experiments—Renew” (SILNE-R) project studies (Netherlands, Belgium, Germany, Finland, Portugal, Italy, and Ireland), seem comparable to the Danish vocational setting in terms of the organizational and political context for implementing comprehensive school tobacco policies (Hoffmann et al., 2020; Schreuders et al., 2019; Mélard et al., 2020; Hjort et al., 2021b). Further, the study demonstrates how the model by Ferm et al. (2018) can be used to operationalize and assess delivery and receipt of a complex school-based multi-component intervention. We found this model to be a feasible tool in process evaluation to pinpoint implementation successes and shortcomings.
As a smoke-free school hours tobacco policy has been mandated by law in Danish vocational education since 2021, future research should address potential implementation strategies (Powell et al., 2015) to promote the uptake of the policy into routine practice.

Data Availability

The data that support the findings of this study are available from the University of Southern Denmark (SDU), but restrictions apply to the availability of these data, which were used under license for the current study, and so are not publicly available. Data are, however, available from the authors upon reasonable request and with permission of the University of Southern Denmark (SDU).

References

Andersen, A., Krølner, R., Bast, L., Thygesen, L., & Due, P. (2015). Effects of the X:IT smoking intervention: A school-based cluster randomized trial. International Journal of Epidemiology. https://doi.org/10.1093/ije/dyv145

Andersen, S., Rod, M. H., Ersbøll, A. K., Stock, C., Johansen, C., Holmberg, T., Zinckernagel, L., Ingholt, L., Sørensen, B. B., & Tolstrup, J. S. (2016). Effects of a settings-based intervention to promote student wellbeing and reduce smoking in vocational schools: A non-randomized controlled study. Social Science & Medicine, 161, 195–203. https://doi.org/10.1016/j.socscimed.2016.06.012

Basch, C., Sliepcevich, E., Gold, R., Duncan, D., & Kolbe, L. (1985). Avoiding type III errors in health education program evaluations: A case study. Health Education Quarterly, 12, 315–331. https://doi.org/10.1177/109019818501200311

Bast, L. S., Andersen, A., Ersbøll, A. K., & Due, P. (2019). Implementation fidelity and adolescent smoking: The X:IT study—A school randomized smoking prevention trial. Evaluation and Program Planning, 72, 24–32.

Bast, L. S., Due, P., & Andersen, A. (2018). Social differences in implementation of a school based smoking preventive intervention. Tobacco Induced Diseases. https://doi.org/10.18332/tid/83842

Bast, L. S., Due, P., Bendtsen, P., Ringgard, L., Wohllebe, L., Damsgaard, M. T., Grønbæk, M., Ersbøll, A. K., & Andersen, A. (2016). High impact of implementation on school-based smoking prevention: The X:IT study-a cluster-randomized smoking prevention trial. Implementation Science, 11(1), 125. https://doi.org/10.1186/s13012-016-0490-7

Begum, S., Yada, A., & Lorencatto, F. (2021). How has intervention fidelity been assessed in smoking cessation interventions? A systematic review. Journal of Smoking Cessation, 2021, 6641208. https://doi.org/10.1155/2021/6641208

Campbell, R., Starkey, F., Holliday, J., Audrey, S., Bloor, M., Parry-Langdon, N., Hughes, R., & Moore, L. (2008). An informal school-based peer-led intervention for smoking prevention in adolescence (ASSIST): A cluster randomised trial. Lancet, 371, 1595–1602. https://doi.org/10.1016/S0140-6736(08)60692-3

Carroll, C., Patterson, M., Wood, S., Booth, A., Rick, J., & Balain, S. (2007). A conceptual framework for implementation fidelity. Implementation Science, 2, 40. https://doi.org/10.1186/1748-5908-2-40

Cousson-Gélie, F., Lareyre, O., Margueritte, M., Paillart, J., Huteau, M.-E., Djoufelkit, K., Pereira, B., & Stoebner, A. (2018). Preventing tobacco in vocational high schools: Study protocol for a randomized controlled trial of P2P, a peer to peer and theory planned behavior-based program. BMC Public Health, 18(1), 494. https://doi.org/10.1186/s12889-018-5226-y

Craig, P., Ruggiero, E., Frohlich, K. L., Mykhalovskiy, E., & White, M. (2018). Taking account of context in population health intervention research: Guidance for producers, users and funders of research. National Institute for Health and Care Research (NIHR). https://doi.org/10.17863/CAM.26129

Damschroder, L. J., Aron, D. C., Keith, R. E., Kirsh, S. R., Alexander, J. A., & Lowery, J. C. (2009). Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science, 4, 50. https://doi.org/10.1186/1748-5908-4-50

de Looze, M., ter Bogt, T., Hublet, A., Kuntsche, E., Richter, M., Zsiros, E., Godeau, E., & Vollebergh, W. (2013). Trends in educational differences in adolescent daily smoking across Europe, 2002–10. The European Journal of Public Health, 23(5), 846–852.

Dobbie, F., Purves, R., McKell, J., Dougall, N., Campbell, R., White, J., Amos, A., Moore, L., & Bauld, L. (2019). Implementation of a peer-led school based smoking prevention programme: a mixed methods process evaluation. BMC Public Health, 19(1), 742. https://doi.org/10.1186/s12889-019-7112-7

Dobson, D., & Cook, T. J. (1980). Avoiding type III error in program evaluation: Results from a field experiment. Evaluation and Program Planning, 3(4), 269–276. https://doi.org/10.1016/0149-7189(80)90042-7

Domitrovich, C. E., Bradshaw, C. P., Poduska, J. M., Hoagwood, K., Buckley, J. A., Olin, S., Romanelli, L. H., Leaf, P. J., Greenberg, M. T., & Ialongo, N. S. (2008). Maximizing the implementation quality of evidence-based preventive interventions in schools: A conceptual framework. Advances in School Mental Health Promotion, 1(3), 6–28. https://doi.org/10.1080/1754730x.2008.9715730

Durlak, J. A., & DuPre, E. P. (2008). Implementation matters: A review of research on the influence of implementation on program outcomes and the factors affecting implementation. American Journal of Community Psychology, 41(3–4), 327–350. https://doi.org/10.1007/s10464-008-9165-0

Dusenbury, L., Brannigan, R., Falco, M., & Hansen, W. B. (2003). A review of research on fidelity of implementation: Implications for drug abuse prevention in school settings. Health Education Research, 18(2), 237–256. https://doi.org/10.1093/her/18.2.237

Eldh, A. C., Almost, J., DeCorby-Watson, K., Gifford, W., Harvey, G., Hasson, H., Kenny, D., Moodie, S., Wallin, L., & Yost, J. (2017). Clinical interventions, implementation interventions, and the potential greyness in between—A discussion paper. BMC Health Services Research. https://doi.org/10.1186/s12913-016-1958-5

Ferm, L., Rasmussen, C. D. N., & Jørgensen, M. B. (2018). Operationalizing a model to quantify implementation of a multi-component intervention in a stepped-wedge trial. Implementation Science, 13(1), 26. https://doi.org/10.1186/s13012-018-0720-2

Frohlich, K. L., & Potvin, L. (2008). Transcending the known in public health practice: The inequality paradox: The population approach and vulnerable populations. American Journal of Public Health, 98(2), 216–221. https://doi.org/10.2105/ajph.2007.114777

Gaber, J., Datta, J., Clark, R., Lamarche, L., Parascandalo, F., Di Pelino, S., Forsyth, P., Oliver, D., Mangin, D., & Price, D. (2022). Understanding how context and culture in six communities can shape implementation of a complex intervention: A comparative case study. BMC Health Services Research, 22(1), 221. https://doi.org/10.1186/s12913-022-07615-0

Galanti, M. R., Coppo, A., Jonsson, E., Bremberg, S., & Faggiano, F. (2014). Anti-tobacco policy in schools: Upcoming preventive strategy or prevention myth? A review of 31 studies. Tobacco Control, 23(4), 295–301.

Gariépy, G., Danna, S. M., Hawke, L., Henderson, J., & Iyer, S. N. (2022). The mental health of young people who are not in education, employment, or training: A systematic review and meta-analysis. Social Psychiatry and Psychiatric Epidemiology, 57(6), 1107–1121. https://doi.org/10.1007/s00127-021-02212-8

Glasgow, R. E., Harden, S. M., Gaglio, B., Rabin, B., Smith, M. L., Porter, G. C., Ory, M. G., & Estabrooks, P. A. (2019). RE-AIM planning and evaluation framework: Adapting to new science and practice with a 20-year review. Frontiers in Public Health, 7, 64. https://doi.org/10.3389/fpubh.2019.00064

Glasgow, R. E., Vogt, T. M., & Boles, S. M. (1999). Evaluating the public health impact of health promotion interventions: The RE-AIM framework. American Journal of Public Health, 89(9), 1322–1327. https://doi.org/10.2105/ajph.89.9.1322

Goldberg Lillehoj, C. J., Griffin, K. W., & Spoth, R. (2004). Program provider and observer ratings of school-based preventive intervention implementation: Agreement and relation to youth outcomes. Health Education & Behavior, 31(2), 242–257. https://doi.org/10.1177/1090198103260514

Hasson, H. (2010). Systematic evaluation of implementation fidelity of complex interventions in health and social care. Implementation Science, 5(1), 67. https://doi.org/10.1186/1748-5908-5-67

Hiscock, R., Bauld, L., Amos, A., Fidler, J. A., & Munafò, M. (2012). Socioeconomic status and smoking: A review. Annals of the New York Academy of Sciences, 1248(1), 107–123.

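Hjerteforeningen [Danish Heart Foundation]. (2017). Danske erhvervsskolers sundhedsfremmende indsatser og implementeringskapacitet [Danish vocational schools’ health-promoting initiatives and implementation capacity]. Copenhagen, Denmark.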

Hjort, A. V., Christiansen, T. B., Stage, M., Rasmussen, K. H., Pisinger, C., Tjørnhøj-Thomsen, T., & Klinker, C. D. (2021a). Programme theory and realist evaluation of the ‘Smoke-Free Vocational Schools’ research and intervention project: A study protocol. BMJ Open, 11(2), e042728. https://doi.org/10.1136/bmjopen-2020-042728

Hjort, A. V., Kuipers, M. A. G., Stage, M., Pisinger, C., & Klinker, C. D. (2022). Intervention activities associated with the implementation of a comprehensive school tobacco policy at Danish vocational schools: A repeated cross-sectional study. International Journal of Environmental Research and Public Health, 19(19), 12489.

Hjort, A. V., Schreuders, M., Rasmussen, K. H., & Klinker, C. D. (2021b). Are Danish vocational schools ready to implement “smoke-free school hours”? A qualitative study informed by the theory of organizational readiness for change. Implementation Science Communications, 2(1), 40. https://doi.org/10.1186/s43058-021-00140-x

Hoffmann, L., Mlinarić, M., Mélard, N., Leão, T., Grard, A., Lindfors, P., Kunst, A. E., SILNE-R Consortium, & Richter, M. (2020). ‘[…] the situation in the schools still remains the Achilles heel.’ Barriers to the implementation of school tobacco policies—A qualitative study from local stakeholder’s perspective in seven European cities. Health Education Research, 35(1), 32–43. https://doi.org/10.1093/her/cyz037

Jakobsen, G., Danielsen, D., Jensen, M., Vinther, J., Pisinger, C., Holmberg, T., Krølner, R., & Andersen, S. (2021). Reducing smoking in youth by a smoke-free school environment: A stratified cluster randomized controlled trial of Focus, a multicomponent program for alternative high schools. Tobacco Prevention and Cessation. https://doi.org/10.18332/tpc/133934

Källmén, H., Wennberg, P., Sohlberg, T., & Larsson, M. (2020). Effects of a school tobacco policy on student smoking and snus use. Health Behavior and Policy Review, 7(4), 358–365.

Kjeld, S. G., Thygesen, L. C., Danielsen, D., Jakobsen, G. S., Jensen, M. P., Holmberg, T., Bast, L. S., Lund, L., Pisinger, C., & Andersen, S. (2023). Effectiveness of the multi-component intervention ‘Focus’ on reducing smoking among students in the vocational education setting: A cluster randomized controlled trial. BMC Public Health, 23(1), 419. https://doi.org/10.1186/s12889-023-15331-5

Koorts, H., Timperio, A., Abbott, G., Arundell, L., Ridgers, N. D., Cerin, E., Brown, H., Daly, R. M., Dunstan, D. W., Hume, C., Chinapaw, M. J. M., Moodie, M., Hesketh, K. D., & Salmon, J. (2022). Is level of implementation linked with intervention outcomes? Process evaluation of the TransformUs intervention to increase children’s physical activity and reduce sedentary behaviour. International Journal of Behavioral Nutrition and Physical Activity, 19(1), 122. https://doi.org/10.1186/s12966-022-01354-5

Linnan, L., & Steckler, A. (2002). An overview. In L. Linnan & A. Steckler (Eds.), Process evaluation for public health interventions and research. Wiley.

Linnansaari, A., Schreuders, M., Kunst, A. E., Rimpelä, A., & Lindfors, P. (2019). Understanding school staff members’ enforcement of school tobacco policies to achieve tobacco-free school: A realist review. Systematic Reviews, 8(1), 177. https://doi.org/10.1186/s13643-019-1086-5

Martinez, R. G., Lewis, C. C., & Weiner, B. J. (2014). Instrumentation issues in implementation science. Implementation Science, 9(1), 118. https://doi.org/10.1186/s13012-014-0118-8

McLoughlin, G. M., Allen, P., Walsh-Bailey, C., & Brownson, R. C. (2021). A systematic review of school health policy measurement tools: Implementation determinants and outcomes. Implementation Science Communications, 2(1), 67. https://doi.org/10.1186/s43058-021-00169-y

Mélard, N., Grard, A., Robert, P. O., Kuipers, M. A. G., Schreuders, M., Rimpelä, A. H., Leão, T., Hoffmann, L., Richter, M., Kunst, A. E., & Lorant, V. (2020). School tobacco policies and adolescent smoking in six European cities in 2013 and 2016: A school-level longitudinal study. Preventive Medicine, 138, 106142. https://doi.org/10.1016/j.ypmed.2020.106142

Mercken, L., Moore, L., Crone, M. R., De Vries, H., De Bourdeaudhuij, I., Lien, N., Fagiano, F., Vitoria, P. D., & Van Lenthe, F. J. (2012). The effectiveness of school-based smoking prevention interventions among low- and high-SES European teenagers. Health Education Research, 27(3), 459–469. https://doi.org/10.1093/her/cys017

Michie, S., Hyder, N., Walia, A., & West, R. (2011). Development of a taxonomy of behaviour change techniques used in individual behavioural support for smoking cessation. Addictive Behaviors, 36(4), 315–319. https://doi.org/10.1016/j.addbeh.2010.11.016

Minary, L., Alla, F., Cambon, L., Kivits, J., & Potvin, L. (2018). Addressing complexity in population health intervention research: The context/intervention interface. Journal of Epidemiology and Community Health, 72(4), 319–323. https://doi.org/10.1136/jech-2017-209921

Nilsen, P., & Bernhardsson, S. (2019). Context matters in implementation science: A scoping review of determinant frameworks that describe contextual determinants for implementation outcomes. BMC Health Services Research, 19(1), 1–21.

Pérez, D., Van der Stuyft, P., Zabala, M. C., Castro, M., & Lefèvre, P. (2016). A modified theoretical framework to assess implementation fidelity of adaptive public health interventions. Implementation Science, 11(1), 91. https://doi.org/10.1186/s13012-016-0457-8

Petersen, M. T., Lund, L., & Bast, L. S. (2022). RØG—En undersøgelse af tobak, adfærd og regler 2022 [SMOKE: A survey of tobacco, behaviour and rules 2022]. Copenhagen, Denmark.

Pisinger, V., Thorsted, A., Jezek, A., Jørgensen, A., Christensen, A. I., & Thygesen, L. C. (2019). Sundhed og trivsel på gymnasiale uddannelser 2019 [Health and well-being in upper secondary education 2019]. Copenhagen, Denmark.

Powell, B. J., Beidas, R. S., Lewis, C. C., Aarons, G. A., McMillen, J. C., Proctor, E. K., & Mandell, D. S. (2017). Methods to improve the selection and tailoring of implementation strategies. The Journal of Behavioral Health Services & Research, 44(2), 177–194. https://doi.org/10.1007/s11414-015-9475-6

Powell, B. J., Waltz, T. J., Chinman, M. J., Damschroder, L. J., Smith, J. L., Matthieu, M. M., Proctor, E. K., & Kirchner, J. E. (2015). A refined compilation of implementation strategies: Results from the Expert Recommendations for Implementing Change (ERIC) project. Implementation Science, 10(1), 21. https://doi.org/10.1186/s13012-015-0209-1

Proctor, E., Silmere, H., Raghavan, R., Hovmand, P., Aarons, G., Bunger, A., Griffey, R., & Hensley, M. (2011). Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health and Mental Health Services Research, 38(2), 65–76. https://doi.org/10.1007/s10488-010-0319-7

Ringgaard, L. W., Heinze, C., Andersen, N. B. S., Hansen, G. I. L., Hjort, A. V., & Klinker, C. D. (2020). Sundhed og trivsel på erhvervsuddannelser 2019 [Health and well-being in vocational education 2019]. Steno Diabetes Center Copenhagen, Hjerteforeningen, Kræftens Bekæmpelse. Copenhagen, Denmark.

Rogers, E. M. (2003). Diffusion of Innovations (5th ed.). Simon & Schuster.

Rozema, A. D., Hiemstra, M., Mathijssen, J. J. P., Jansen, M. W. J., & van Oers, H. (2018). Impact of an outdoor smoking ban at secondary schools on cigarettes, e-cigarettes and water pipe use among adolescents: An 18-month follow-up. International Journal of Environmental Research and Public Health. https://doi.org/10.3390/ijerph15020205

Ryan, R. M., & Deci, E. L. (2000). Self-determination theory and the facilitation of intrinsic motivation, social development, and well-being. American Psychologist, 55(1), 68.

Salahuddin, M., Barlow, S. E., Pont, S. J., Butte, N. F., & Hoelscher, D. M. (2018). Development and use of an index for measuring implementation of a weight management program in children in primary care clinics in Texas. BMC Family Practice, 19(1), 191. https://doi.org/10.1186/s12875-018-0882-7

Scaccia, J. P., Cook, B. S., Lamont, A., Wandersman, A., Castellow, J., Katz, J., & Beidas, R. S. (2015). A practical implementation science heuristic for organizational readiness: R = MC². Journal of Community Psychology, 43(4), 484–501. https://doi.org/10.1002/jcop.21698

Schreuders, M., Nuyts, P. A. W., van den Putte, B., & Kunst, A. E. (2017). Understanding the impact of school tobacco policies on adolescent smoking behaviour: A realist review. Social Science and Medicine, 183, 19–27. https://doi.org/10.1016/j.socscimed.2017.04.031

Schreuders, M., van den Putte, B., & Kunst, A. E. (2019). Why secondary schools do not implement far-reaching smoke-free policies: Exploring deep core, policy core, and secondary beliefs of school staff in the Netherlands. International Journal of Behavioral Medicine, 26(6), 608–618. https://doi.org/10.1007/s12529-019-09818-y

Skivington, K., Matthews, L., Simpson, S. A., Craig, P., Baird, J., Blazeby, J. M., . . . Moore, L. (2021). A new framework for developing and evaluating complex interventions: Update of Medical Research Council guidance. BMJ, 374, n2061. https://doi.org/10.1136/bmj.n2061

Smit, F., Zwart, W., Spruit, I., Monshouwer, K., & van Ameijden, E. (2009). Monitoring substance use in adolescents: School survey or household survey? Drugs: Education, Prevention and Policy, 9, 267–274. https://doi.org/10.1080/09687630210129529

Statistics-Denmark. (2019). Erhvervsuddannelser i Danmark 2019 [Vocational education in Denmark 2019]. Retrieved April 11, 2023, from https://www.dst.dk/da/Statistik/nyheder-analyser-publ/Publikationer/VisPub?cid=32526

Sussman, S., Arriaza, B., & Grigsby, T. J. (2014). Alcohol, tobacco, and other drug misuse prevention and cessation programming for alternative high school youth: A review. Journal of School Health, 84(11), 748–758.

Thomas, R. E., McLellan, J., & Perera, R. (2013). School-based programmes for preventing smoking. Cochrane Database of Systematic Reviews, 2013(4), CD001293. https://doi.org/10.1002/14651858.CD001293.pub3

Waller, G., Finch, T., Giles, E. L., & Newbury-Birch, D. (2017). Exploring the factors affecting the implementation of tobacco and substance use interventions within a secondary school setting: A systematic review. Implementation Science, 12(1), 130. https://doi.org/10.1186/s13012-017-0659-8

Acknowledgements

The Focus project group would like to thank all participating schools, principals, teachers, school coordinators, and students.

Funding

Open access funding provided by Royal Danish Library. The Focus study was funded by the Danish Cancer Society (Proposal number R163-A10653), and Marie Pil Jensen’s PhD scholarship is co-financed by TrygFonden Denmark and the University of Southern Denmark (SDU).

Author information

Contributions

MPJ participated in the collection of data, data cleaning, statistical analyses, and interpretation of data, and drafted the manuscript. RFK supervised the research group and contributed to the design of the study, statistical analyses, and interpretation of data. MBJ contributed methodological expertise and interpretation of data. LSB contributed to the statistical analyses and interpretation of data. SA is the project leader and principal investigator of the Focus intervention; she coordinated the study, contributed to its design and the collection of data, and contributed to the statistical analyses and interpretation of data. All authors read, revised, and approved the final manuscript.

Ethics declarations

Competing Interests

The authors declare that they have no competing interests.

Ethical Approval

The study adheres to the Danish rules of ethics and the legislation of the Danish Data Protection Agency (Ref: 17/12006). According to the National Committee on Health Research Ethics (Ref: 20182000-83), neither ethical approval nor informed consent was required. Before data collection, students were informed that their information would be used solely for research purposes and treated confidentially, that their participation was voluntary, and that they could withdraw from the study at any time.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jensen, M.P., Krølner, R.F., Jørgensen, M.B. et al. Assessment of Delivery and Receipt of a Complex School-Based Smoking Intervention: A Systematic Quantitative Process Evaluation. Glob Implement Res Appl 3, 129–146 (2023). https://doi.org/10.1007/s43477-023-00084-5

DOI: https://doi.org/10.1007/s43477-023-00084-5