Abstract

Zero-determinant strategies are memory-one strategies in repeated games which unilaterally enforce linear relations between expected payoffs of players. Recently, the concept of zero-determinant strategies was extended to the class of memory-n strategies with \(n\ge 1\), which enables more complicated control of payoffs by one player. However, what can be achieved with memory-n zero-determinant strategies is still unclear. Here, we show that memory-n zero-determinant strategies in repeated games can be used to control conditional expectations of payoffs. Equivalently, they can be used to control expected payoffs in biased ensembles, in which histories of action profiles with larger values of a bias function are weighted more heavily. Controlling conditional expectations of payoffs is useful for strengthening zero-determinant strategies, because players can choose conditions in such a way that only action profiles unfavorable to one player are contained in the conditions. We provide several examples of memory-n zero-determinant strategies in the repeated prisoner’s dilemma game. We also explain that a deformed version of zero-determinant strategies is easily extended to the memory-n case.


1 Introduction

Repeated games have succeeded in explaining cooperative behavior in the prisoner’s dilemma situation, where defection is more favorable than cooperation [1, 2]. Recently, finite-memory strategies (strategies with finite recall) in repeated games have attracted much attention in game theory, because the rationality of real agents is bounded [3]. In computer science, agents with bounded rationality have been modeled by finite automata, and equilibria of such agents have been investigated [4,5,6,7]. In evolutionary biology, the main focus has been the evolutionary stability of finite-memory strategies [8,9,10]. The class of memory-one strategies contains several representative strategies in the repeated prisoner’s dilemma game, such as the Grim Trigger strategy [11], the Tit-for-Tat strategy [12, 13], and the Win-Stay Lose-Shift strategy [9]. Moreover, longer-memory strategies have recently been investigated, since longer memory enables more complicated behavior of agents [14,15,16,17,18].

In 2012, two physicists, William Press and Freeman Dyson, discovered a novel class of memory-one strategies, called zero-determinant (ZD) strategies, in the infinitely repeated prisoner’s dilemma game [19]. Counterintuitively, ZD strategies unilaterally control expected payoffs of players by enforcing linear relations between expected payoffs. Since the discovery of ZD strategies, many extensions have been made, including extensions to multi-player multi-action stage games [20,21,22,23,24], to games with imperfect monitoring [25,26,27], to games with a discounting factor [23, 28,29,30], and to asynchronous games [31, 32], as well as an extension to linear relations between moments of payoffs [33] and an extension to long-memory strategies [34]. In addition, the evolutionary stability of ZD strategies in the repeated prisoner’s dilemma game, such as extortionate and generous ZD strategies, has been investigated extensively [35,36,37,38,39,40]. Human experiments have also compared the performance of extortionate and generous ZD strategies [41, 42]. Furthermore, mathematical properties of the situation where several players adopt ZD strategies have been investigated [21, 27].

In this paper, we provide an interpretation of the ability of memory-n ZD strategies [34]. Although memory-n ZD strategies were originally introduced as strategies which unilaterally enforce linear relations between correlation functions of payoffs, we here elucidate that the fundamental ability of memory-n ZD strategies is that they unilaterally enforce linear relations between conditional expectations of payoffs. Equivalently, memory-n ZD strategies unilaterally enforce linear relations between expected payoffs in biased ensembles [43,44,45,46,47,48,49]. The results in Ref. [34] can be derived from this interpretation. We also provide examples of memory-n ZD strategies in the repeated prisoner’s dilemma game. Since expected payoffs conditional on previous action profiles are used in the linear relations, players can choose conditions in such a way that only action profiles unfavorable to one player are contained in the conditions, which may result in strengthening the original memory-one ZD strategies. Furthermore, we show that the extension of deformed ZD strategies [33] to the memory-n case is straightforward.

This paper is organized as follows. In Sect. 2, we introduce a model of repeated games. In Sect. 3, we review ZD strategies. In Sect. 4, we show that there exist strategies which unilaterally enforce zero probability for specific histories of action profiles. In Sect. 5, we introduce the concept of biased memory-n ZD strategies, and show that they unilaterally enforce either linear relations between expected payoffs in biased ensembles or zero probability for a set of action profiles. In this section, we also show that the factorable memory-n ZD strategies in Ref. [34] can be derived from biased memory-n ZD strategies. In Sect. 6, we provide examples of biased memory-n ZD strategies in the repeated prisoner’s dilemma game. In Sect. 7, we introduce a memory-n version of deformed ZD strategies and provide several examples. Section 8 is devoted to concluding remarks.

2 Model

We consider a repeated game with N players. The set of players is described as \(\mathcal {N}:=\left\{ 1, \cdots , N \right\}\). The action of player \(a\in \mathcal {N}\) in a one-shot game is written as \(\sigma _a \in A_a := \left\{ 1, \cdots , M_a \right\}\), where \(M_a<\infty\) is the number of actions available to player a. We define \(\mathcal {A}:=\prod _{a=1}^N A_a\). We collectively write \(\varvec{\sigma }:=\left( \sigma _1, \cdots , \sigma _N \right) \in \mathcal {A}\) and call \(\varvec{\sigma }\) an action profile. The payoff of player a when the action profile is \(\varvec{\sigma }\) is described as \(s_a\left( \varvec{\sigma } \right)\). We also write \(\Delta _M\) for the probability simplex of distributions over M elements. We consider the situation in which the game is repeated infinitely many times. We write the action of player a in the t-th round \((t\ge 1)\) as \(\sigma _a(t)\). The (behavior) strategy of player a is described as \(\mathcal {T}_a := \left\{ T^{(t)}_a \right\} _{t=1}^\infty\), where \(T^{(t)}_a: \mathcal {A}^{t-1} \rightarrow \Delta _{M_a}\) is the conditional probability distribution of player a’s action in the t-th round given the previous \(t-1\) action profiles. We write the expectation of a quantity B with respect to the strategies of all players as \(\mathbb {E}[B]\). We denote the discounting factor by \(\delta\), which satisfies \(0\le \delta \le 1\). The payoff of player a in the repeated game is defined by

\[ \mathcal {S}_a := (1-\delta ) \sum _{t=1}^{\infty } \delta ^{t-1} \mathbb {E}\left[ s_a\left( \varvec{\sigma }(t) \right) \right] \tag{1} \]

for \(0\le \delta < 1\), and

\[ \mathcal {S}_a := \lim _{T\rightarrow \infty } \frac{1}{T} \sum _{t=1}^{T} \mathbb {E}\left[ s_a\left( \varvec{\sigma }(t) \right) \right] \tag{2} \]

for \(\delta =1\). In this paper, we consider only the case \(\delta =1\). Below we write \(\sum _{\varvec{\sigma }\in \mathcal {A}}\) and \(\sum _{\sigma _a \in A_a} (\forall a)\) as \(\sum _{\varvec{\sigma }}\) and \(\sum _{\sigma _a}\), respectively.

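The payoff is rewritten as

\[ \mathcal {S}_a = \lim _{T\rightarrow \infty } \frac{1}{T} \sum _{t=1}^{T} \sum _{\varvec{\sigma }(t)} \cdots \sum _{\varvec{\sigma }(1)} s_a\left( \varvec{\sigma }(t) \right) \mathbb {P}_t\left( \varvec{\sigma }(t), \cdots , \varvec{\sigma }(1) \right) , \tag{3} \]

where we have defined the joint probability distribution of action profiles \(\left\{ \varvec{\sigma }(t^\prime ) \right\} _{t^\prime =1}^{t}\)

\[ \mathbb {P}_t\left( \varvec{\sigma }(t), \cdots , \varvec{\sigma }(1) \right) := \prod _{t^\prime =1}^{t} \prod _{b=1}^{N} T^{(t^\prime )}_b\left( \sigma _b(t^\prime ) | \varvec{\sigma }(t^\prime -1), \cdots , \varvec{\sigma }(1) \right) \tag{4} \]

(the conditioning history is empty for \(t^\prime =1\)). It should be noted that \(\mathbb {P}_t\) satisfies the recursion relation

\[ \mathbb {P}_{t+1}\left( \varvec{\sigma }(t+1), \cdots , \varvec{\sigma }(1) \right) = \left[ \prod _{b=1}^{N} T^{(t+1)}_b\left( \sigma _b(t+1) | \varvec{\sigma }(t), \cdots , \varvec{\sigma }(1) \right) \right] \mathbb {P}_t\left( \varvec{\sigma }(t), \cdots , \varvec{\sigma }(1) \right) . \tag{5} \]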
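For memory-one players, the recursion (5) closes on the distribution of the latest action profile, which makes the \(\delta =1\) payoff easy to evaluate over a finite horizon. The Python sketch below does this for the prisoner’s dilemma of Sect. 6. It is an illustration under stated assumptions, not the authors’ code: the payoff values \((R,S,T,P)=(3,0,5,1)\), the first-round cooperation probabilities, and all function names are hypothetical choices made here, and a finite number of rounds only approximates the limit \(T\rightarrow \infty\).

```python
# Sketch: time-averaged expected payoffs (delta = 1) for two memory-one players.
R, S, T, P = 3.0, 0.0, 5.0, 1.0               # assumed values with T > R > P > S
PROFILES = [(1, 1), (1, 2), (2, 1), (2, 2)]    # (action of player 1, action of player 2)
PAYOFF = {(1, 1): (R, R), (1, 2): (S, T), (2, 1): (T, S), (2, 2): (P, P)}


def round_distribution(q1, q2):
    """Distribution of one action profile when the players cooperate with probs q1, q2."""
    return {(x, y): (q1 if x == 1 else 1.0 - q1) * (q2 if y == 1 else 1.0 - q2)
            for x, y in PROFILES}


def average_payoffs(first1, first2, coop1, coop2, rounds):
    """Return the averages (1/rounds) sum_t E[s_a(sigma(t))] for a = 1, 2.

    first1, first2: cooperation probabilities in round 1 (empty history).
    coop1, coop2: dicts from the previous profile sigma(t-1) to a cooperation probability.
    With memory-one players the recursion (5) closes on the marginal of sigma(t),
    so only that four-entry distribution has to be propagated.
    """
    dist = round_distribution(first1, first2)   # distribution of sigma(1)
    totals = [0.0, 0.0]
    for _ in range(rounds):
        for prof, prob in dist.items():
            totals[0] += prob * PAYOFF[prof][0]
            totals[1] += prob * PAYOFF[prof][1]
        new = dict.fromkeys(PROFILES, 0.0)
        for prof, prob in dist.items():
            for nxt, w in round_distribution(coop1[prof], coop2[prof]).items():
                new[nxt] += prob * w
        dist = new
    return totals[0] / rounds, totals[1] / rounds


ALLC = dict.fromkeys(PROFILES, 1.0)
ALLD = dict.fromkeys(PROFILES, 0.0)
TFT = {(1, 1): 1.0, (1, 2): 0.0, (2, 1): 1.0, (2, 2): 0.0}   # copy player 2's last action

print(average_payoffs(1.0, 0.0, TFT, ALLD, 1000))   # (0.999, 1.004)
```

In the printed example, TFT loses only in the first round against unconditional defection, so its average payoff approaches \(P=1\) as the horizon grows, which illustrates why the \(\delta =1\) payoff ignores any finite number of initial rounds.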

We first introduce (time-independent) memory-n strategies \((n\ge 0)\).

Definition 1

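A strategy of player a is a (time-independent) memory-n strategy \((n\ge 0)\) when it is written in the form

\[ T^{(t)}_a\left( \sigma _a | \varvec{\sigma }(t-1), \cdots , \varvec{\sigma }(1) \right) = T_a\left( \sigma _a | \varvec{\sigma }(t-1), \cdots , \varvec{\sigma }(t-n) \right) \tag{6} \]

for all \(t> n\) with some common conditional probability \(T_a\).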

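Throughout this paper, we consider the situation in which some player \(a\in \mathcal {N}\) uses a memory-n strategy. We remark that the strategies of the players \(-a:=\mathcal {N} \kern 0.14em \backslash \{ a \}\) are arbitrary. We define \(\varvec{\sigma }_{-a}:=\varvec{\sigma }\backslash \sigma _a\). For \(t\ge n\), we also define the marginal probability distribution of the last n action profiles obtained from \(\mathbb {P}_t\) by

\[ P_t\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) := \sum _{\varvec{\sigma }(t-n)} \cdots \sum _{\varvec{\sigma }(1)} \mathbb {P}_t\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)}, \varvec{\sigma }(t-n), \cdots , \varvec{\sigma }(1) \right) , \tag{7} \]

where \(\varvec{\sigma }^{(-m)}\) occupies the slot of \(\varvec{\sigma }(t-m+1)\).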

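By taking the summation of both sides of Eq. (5) with respect to \(\varvec{\sigma }_{-a}(t+1)\), \(\varvec{\sigma }(t)\), \(\cdots\), and \(\varvec{\sigma }(1)\) for \(t\ge n\), the left-hand side becomes

\[ \begin{aligned} \sum _{\varvec{\sigma }_{-a}(t+1)} \sum _{\varvec{\sigma }(t)} \cdots \sum _{\varvec{\sigma }(1)} \mathbb {P}_{t+1}\left( \varvec{\sigma }(t+1), \cdots , \varvec{\sigma }(1) \right) &= \sum _{\varvec{\sigma }^\prime (t+1)} \cdots \sum _{\varvec{\sigma }^\prime (t-n+2)} \delta _{\sigma _a(t+1), \sigma ^\prime _a(t+1)} P_{t+1}\left( \varvec{\sigma }^\prime (t+1), \cdots , \varvec{\sigma }^\prime (t-n+2) \right) \\ &= \sum _{\varvec{\sigma }^{(-1)}} \cdots \sum _{\varvec{\sigma }^{(-n)}} \delta _{\sigma _a(t+1), \sigma ^{(-1)}_a} P_{t+1}\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) , \end{aligned} \tag{8} \]

where \(\delta _{\sigma , \sigma ^\prime }\) represents the Kronecker delta, which takes 1 for \(\sigma =\sigma ^\prime\) and 0 otherwise. (The last line is obtained by renaming variables.) Because the conditional probabilities of the other players sum to one and player a uses the memory-n strategy (6), the right-hand side becomes

\[ \sum _{\varvec{\sigma }^{(-1)}} \cdots \sum _{\varvec{\sigma }^{(-n)}} T_a\left( \sigma _a(t+1) | \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) P_t\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) . \tag{9} \]

By renaming \(\sigma _a(t+1)\rightarrow \sigma _a\), we obtain

\[ \sum _{\varvec{\sigma }^{(-1)}} \cdots \sum _{\varvec{\sigma }^{(-n)}} \delta _{\sigma _a, \sigma ^{(-1)}_a} P_{t+1}\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) = \sum _{\varvec{\sigma }^{(-1)}} \cdots \sum _{\varvec{\sigma }^{(-n)}} T_a\left( \sigma _a | \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) P_t\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) \tag{10} \]

for \(t\ge n\). Then, by calculating \(\lim _{T\rightarrow \infty } \frac{1}{T} \sum _{t=n}^{T+n-1}\) of both sides, we finally obtain

\[ \sum _{\varvec{\sigma }^{(-1)}} \cdots \sum _{\varvec{\sigma }^{(-n)}} \left[ T_a\left( \sigma _a | \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) - \delta _{\sigma _a, \sigma ^{(-1)}_a} \right] P^{*}\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) = 0, \tag{11} \]

where we have introduced the limit distribution

\[ P^{*}\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) := \lim _{T\rightarrow \infty } \frac{1}{T} \sum _{t=n}^{T+n-1} P_t\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) . \tag{12} \]

Here we have used that the time averages of \(P_{t+1}\) and \(P_t\) differ only by \(\left( P_{T+n}-P_n\right) /T\), which vanishes as \(T\rightarrow \infty\).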

Therefore, we obtain the generalized version of Akin’s lemma [34, 50]:

Lemma 1

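For the quantity

\[ \hat{T}_a\left( \sigma _a | \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) := T_a\left( \sigma _a | \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) - \delta _{\sigma _a, \sigma ^{(-1)}_a}, \tag{13} \]

the relation

\[ \sum _{\varvec{\sigma }^{(-1)}} \cdots \sum _{\varvec{\sigma }^{(-n)}} \hat{T}_a\left( \sigma _a | \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) P^{*}\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) = 0 \tag{14} \]

holds for arbitrary \(\sigma _a\).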

In other words, player a unilaterally enforces linear relations between values of the limit probability distribution \(P^{*}\) regardless of the strategies of the other players. Memory-one examples of such linear relations in the repeated prisoner’s dilemma game are provided in the Appendix. The quantity (13) is called a Press-Dyson tensor (or a strategy tensor) [34].
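Lemma 1 is easy to check numerically. The Python sketch below is a minimal illustration under several assumptions of our own: a two-player prisoner’s dilemma, a player 1 with an arbitrary (randomly drawn) memory-n strategy, and a memory-one opponent, so that the pair evolves as a Markov chain on histories \((\varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)})\). It approximates \(P^*\) by iterating \(P_t\) until it stops changing, which presumes that the chain converges (all cooperation probabilities are kept strictly between 0 and 1 for that reason); Lemma 1 itself requires no such assumption. The function names are hypothetical.

```python
import random
from itertools import product

PROFILES = [(1, 1), (1, 2), (2, 1), (2, 2)]   # (action of player 1, action of player 2)


def limit_distribution(coop1, coop2, n, tol=1e-13, max_iter=100_000):
    """Approximate P* on histories h = (sigma^(-1), ..., sigma^(-n)) by iterating P_t.

    coop1(h), coop2(h): probability that player 1 / player 2 cooperates after history h.
    Assumes the chain converges, so that lim P_t coincides with the time average P*.
    """
    states = list(product(PROFILES, repeat=n))
    dist = {h: 1.0 / len(states) for h in states}
    for _ in range(max_iter):
        new = dict.fromkeys(states, 0.0)
        for h, mass in dist.items():
            q1, q2 = coop1(h), coop2(h)
            for x, y in PROFILES:
                w = (q1 if x == 1 else 1.0 - q1) * (q2 if y == 1 else 1.0 - q2)
                new[((x, y),) + h[:-1]] += mass * w   # newest profile enters the history
        if max(abs(new[h] - dist[h]) for h in states) < tol:
            return new
        dist = new
    raise RuntimeError("P_t did not converge; the chain may be periodic")


def press_dyson(coop1, h, action):
    """hat T_1(action | h) = T_1(action | h) - delta(action, sigma_1^(-1)), cf. Eq. (13)."""
    t = coop1(h) if action == 1 else 1.0 - coop1(h)
    return t - (1.0 if h[0][0] == action else 0.0)


def akin_residual(coop1, coop2, n, action):
    """Left-hand side of Eq. (14) for player 1; Lemma 1 says it vanishes."""
    pstar = limit_distribution(coop1, coop2, n)
    return sum(mass * press_dyson(coop1, h, action) for h, mass in pstar.items())


def random_strategies(seed, n):
    """Hypothetical test pair: arbitrary memory-n player 1, memory-one player 2."""
    rng = random.Random(seed)
    table1 = {h: rng.uniform(0.1, 0.9) for h in product(PROFILES, repeat=n)}
    table2 = {prof: rng.uniform(0.1, 0.9) for prof in PROFILES}
    return (lambda h: table1[h]), (lambda h: table2[h[0]])


coop1, coop2 = random_strategies(seed=1, n=2)
print([akin_residual(coop1, coop2, 2, action) for action in (1, 2)])   # both numerically ~0
```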

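It should be noted that a Press-Dyson tensor \(\hat{T}_a\) is solely controlled by player a. Because \(T_a\) is a conditional probability distribution, a Press-Dyson tensor satisfies several relations. First, it satisfies

\[ \sum _{\sigma _a} \hat{T}_a\left( \sigma _a | \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) = 0 \tag{15} \]

for arbitrary \(\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right)\) due to the normalization condition of \(T_a\). This implies that at most \((M_a-1)\) of the linear relations (14) enforced by player a are independent. Second, it satisfies

\[ \hat{T}_a\left( \sigma _a | \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) \le 0 \quad \left( \sigma _a = \sigma ^{(-1)}_a \right) , \qquad \hat{T}_a\left( \sigma _a | \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) \ge 0 \quad \left( \sigma _a \ne \sigma ^{(-1)}_a \right) \tag{16} \]

for all \(\sigma _a\), \(\varvec{\sigma }^{(-1)}\), \(\cdots\), \(\varvec{\sigma }^{(-n)}\). Third, it satisfies

\[ \left| \hat{T}_a\left( \sigma _a | \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) \right| \le 1 \tag{17} \]

for all \(\sigma _a\), \(\varvec{\sigma }^{(-1)}\), \(\cdots\), \(\varvec{\sigma }^{(-n)}\). The last two come from the fact that \(T_a\) takes values in [0, 1].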
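Taken together, (15), (16), and (17) are exactly the conditions under which \(T_a=\hat{T}_a+\delta _{\sigma _a,\sigma ^{(-1)}_a}\) is a valid conditional probability, so they can serve as a feasibility test for a proposed Press-Dyson tensor. The Python sketch below applies this test to memory-one tensors of a two-action player in the prisoner’s dilemma. The payoff values \((R,S,T,P)=(3,0,5,1)\), the tolerance, and the function names are illustrative assumptions; the test vectors are TFT, an equalizer-type ZD vector derived here for these payoff values, and rescaled or sign-flipped versions of TFT that violate (17) or (16).

```python
R, S, T, P = 3.0, 0.0, 5.0, 1.0               # assumed prisoner's dilemma values
PROFILES = [(1, 1), (1, 2), (2, 1), (2, 2)]    # (action of player 1, action of player 2)
PAYOFF = {(1, 1): (R, R), (1, 2): (S, T), (2, 1): (T, S), (2, 2): (P, P)}
HISTORIES = [(prof,) for prof in PROFILES]     # memory-one histories (sigma^(-1),)


def is_valid_press_dyson(hat_t, histories, tol=1e-12):
    """True iff hat_t(action, h) satisfies (15), (16) and (17) for a two-action player 1,
    i.e. iff T_1(action | h) = hat_t(action, h) + delta(action, sigma_1^(-1)) is a
    probability distribution over the two actions."""
    for h in histories:
        values = {a: hat_t(a, h) for a in (1, 2)}
        if abs(sum(values.values())) > tol:          # normalization, Eq. (15)
            return False
        for a, v in values.items():
            if a == h[0][0] and v > tol:             # own previous action: hat T <= 0
                return False
            if a != h[0][0] and v < -tol:            # other action: hat T >= 0
                return False
            if abs(v) > 1.0 + tol:                   # |hat T| <= 1
                return False
    return True


def zd_vector(c1, alphas):
    """Memory-one ZD form: hat T_1(a | sigma) = c_a * (alpha_0 + alpha_1 s_1 + alpha_2 s_2).

    With two actions, normalization (15) forces c_2 = -c_1.
    """
    def hat_t(a, h):
        s1, s2 = PAYOFF[h[0]]
        value = alphas[0] + alphas[1] * s1 + alphas[2] * s2
        return (c1 if a == 1 else -c1) * value
    return hat_t


tft = zd_vector(1.0 / (T - S), (0.0, 1.0, -1.0))
equalizer = zd_vector(-1.0 / 3.0, (-2.0, 0.0, 1.0))
too_steep = zd_vector(2.0 / (T - S), (0.0, 1.0, -1.0))   # needs probabilities outside [0, 1]
print(is_valid_press_dyson(tft, HISTORIES), is_valid_press_dyson(too_steep, HISTORIES))  # True False
```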

Below we write the expectation with respect to the limit distribution \(P^{*} \left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right)\) as \(\left\langle \cdots \right\rangle ^{*}\), and set \(s_0\left( \varvec{\sigma } \right) :=1\) \((\forall \varvec{\sigma })\) for simplicity. We remark that the payoff of every player \(a^\prime \in \mathcal {N}\) is described as

\[ \lim _{T\rightarrow \infty } \frac{1}{T} \sum _{t=1}^{T} \mathbb {E}\left[ s_{a^\prime }\left( \varvec{\sigma }(t) \right) \right] = \left\langle s_{a^\prime }\left( \varvec{\sigma }^{(-1)} \right) \right\rangle ^{*} . \tag{18} \]

That is, the payoffs in the repeated game are calculated as expected payoffs in the limit distribution. In the proof of Lemma 1, we have assumed that \(P^*\) exists. When \(P^*\) does not exist, the payoffs in the repeated game may not be well defined. Therefore, we consider only the case in which \(P^*\) exists.

3 Previous Studies

Press and Dyson introduced the concept of zero-determinant strategies in repeated games [19]:

Definition 2

A memory-one strategy of player a is a zero-determinant (ZD) strategy when its Press-Dyson vectors \(\hat{T}_a\) can be written in the form

\[ \hat{T}_a\left( \sigma _a | \varvec{\sigma }^{(-1)} \right) = c_{\sigma _a} \sum _{b=0}^{N} \alpha _b s_b\left( \varvec{\sigma }^{(-1)} \right) \tag{19} \]

with some nontrivial coefficients \(\left\{ c_{\sigma _a} \right\}\) and \(\left\{ \alpha _{b} \right\}\) (that is, not \(c_{1}=\cdots =c_{M_a}=\text {const.}\) and not \(\alpha _0=\alpha _1=\cdots =\alpha _N=0\)).

(A Press-Dyson tensor with \(n=1\) is called a Press-Dyson vector.) Because Press-Dyson vectors satisfy Akin’s lemma (Lemma 1), the following proposition holds:

Proposition 1

([19, 23]) A ZD strategy (19) unilaterally enforces a linear relation between expected payoffs:

\[ \sum _{b=0}^{N} \alpha _b \left\langle s_b \right\rangle ^{*} = 0. \tag{20} \]

That is, the expected payoffs can be unilaterally controlled by one ZD player.
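Proposition 1 can be illustrated numerically in the prisoner’s dilemma. The Python sketch below assumes \((R,S,T,P)=(3,0,5,1)\) and builds two memory-one ZD strategies of player 1 from Eq. (19). The choice \(c_1=-1/3\), \(\alpha _0=-2\), \(\alpha _1=0\), \(\alpha _2=1\) (derived here for these payoffs; \(c_2=-c_1\) by normalization) gives cooperation probabilities 2/3, 0, 2/3, 1/3 after (1, 1), (1, 2), (2, 1), (2, 2) and should enforce \(\left\langle s_2\right\rangle ^{*}=2\); the choice \(c_1=1/(T-S)\), \(\alpha _1=1\), \(\alpha _2=-1\) is TFT and should enforce \(\left\langle s_1\right\rangle ^{*}=\left\langle s_2\right\rangle ^{*}\). The opponents are randomly drawn memory-one strategies, the function names are hypothetical, and \(P^*\) is approximated by iterating \(P_t\), which assumes the resulting chain converges.

```python
import random
from itertools import product

R, S, T, P = 3.0, 0.0, 5.0, 1.0               # assumed values with T > R > P > S
PROFILES = [(1, 1), (1, 2), (2, 1), (2, 2)]    # (action of player 1, action of player 2)
PAYOFF = {(1, 1): (R, R), (1, 2): (S, T), (2, 1): (T, S), (2, 2): (P, P)}


def limit_distribution(coop1, coop2, n, tol=1e-13, max_iter=100_000):
    """Approximate P* on histories (sigma^(-1), ..., sigma^(-n)) by iterating P_t."""
    states = list(product(PROFILES, repeat=n))
    dist = {h: 1.0 / len(states) for h in states}
    for _ in range(max_iter):
        new = dict.fromkeys(states, 0.0)
        for h, mass in dist.items():
            q1, q2 = coop1(h), coop2(h)
            for x, y in PROFILES:
                w = (q1 if x == 1 else 1.0 - q1) * (q2 if y == 1 else 1.0 - q2)
                new[((x, y),) + h[:-1]] += mass * w
        if max(abs(new[h] - dist[h]) for h in states) < tol:
            return new
        dist = new
    raise RuntimeError("P_t did not converge; the chain may be periodic")


def strategy_from_zd(c1, alphas):
    """Player 1's memory-one strategy whose Press-Dyson vector has the form (19), c_2 = -c_1."""
    def coop1(h):
        s1, s2 = PAYOFF[h[0]]
        hat = c1 * (alphas[0] + alphas[1] * s1 + alphas[2] * s2)   # hat T_1(1 | sigma^(-1))
        return (1.0 if h[0][0] == 1 else 0.0) + hat
    return coop1


def random_opponent(seed):
    """Hypothetical memory-one opponent with cooperation probabilities in (0.1, 0.9)."""
    rng = random.Random(seed)
    table = {prof: rng.uniform(0.1, 0.9) for prof in PROFILES}
    return lambda h: table[h[0]]


def expected_payoffs(pstar):
    """(<s_1>*, <s_2>*), with the stage payoff evaluated at sigma^(-1)."""
    return tuple(sum(mass * PAYOFF[h[0]][i] for h, mass in pstar.items()) for i in (0, 1))


equalizer = strategy_from_zd(-1.0 / 3.0, (-2.0, 0.0, 1.0))   # alpha . s = s_2 - 2
tft = strategy_from_zd(1.0 / (T - S), (0.0, 1.0, -1.0))       # alpha . s = s_1 - s_2

for seed in range(3):
    opponent = random_opponent(seed)
    eq_s1, eq_s2 = expected_payoffs(limit_distribution(equalizer, opponent, 1))
    tft_s1, tft_s2 = expected_payoffs(limit_distribution(tft, opponent, 1))
    print(f"equalizer <s_2>*: {eq_s2:.9f}   TFT <s_1>* - <s_2>*: {tft_s1 - tft_s2:.9f}")
```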

Recently, a deformed version of ZD strategies was also introduced [33]:

Definition 3

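A memory-one strategy of player a is a deformed ZD strategy when its Press-Dyson vectors \(\hat{T}_a\) can be written in the form

\[ \hat{T}_a\left( \sigma _a | \varvec{\sigma }^{(-1)} \right) = c_{\sigma _a} \sum _{k_1=0}^{\infty } \cdots \sum _{k_N=0}^{\infty } \alpha _{k_1, \cdots , \kern 0.14em k_N} \prod _{j=1}^{N} s_j\left( \varvec{\sigma }^{(-1)} \right) ^{k_j} \tag{21} \]

with some nontrivial coefficients \(\left\{ c_{\sigma _a} \right\}\) and \(\left\{ \alpha _{k_1, \cdots , \kern 0.14em k_N} \right\}\).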

Due to the same reason as Proposition 1, the following proposition holds:

Proposition 2

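([33]) A deformed ZD strategy (21) unilaterally enforces a linear relation between moments of payoffs:

\[ \sum _{k_1=0}^{\infty } \cdots \sum _{k_N=0}^{\infty } \alpha _{k_1, \cdots , \kern 0.14em k_N} \left\langle \prod _{j=1}^{N} s_j^{k_j} \right\rangle ^{*} = 0. \tag{22} \]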

That is, the moments of payoffs can also be unilaterally controlled by one ZD player.

Furthermore, Ueda extended the concept of ZD strategies to memory-n strategies [34]:

Definition 4

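A memory-n strategy of player a is a memory-n ZD strategy when its Press-Dyson tensors \(\hat{T}_a\) can be written in the form

\[ \hat{T}_a\left( \sigma _a | \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) = c_{\sigma _a} \sum _{b^{(-1)}=0}^{N} \cdots \sum _{b^{(-n)}=0}^{N} \alpha _{b^{(-1)},\cdots , \kern 0.14em b^{(-n)}} \prod _{m=1}^{n} s_{b^{(-m)}}\left( \varvec{\sigma }^{(-m)} \right) \tag{23} \]

with some nontrivial coefficients \(\left\{ c_{\sigma _a} \right\}\) and \(\left\{ \alpha _{b^{(-1)},\cdots , \kern 0.14em b^{(-n)}} \right\}\).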

Because of Lemma 1, the following proposition also holds:

Proposition 3

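([34]) A memory-n ZD strategy (23) unilaterally enforces a linear relation between correlation functions of payoffs:

\[ \sum _{b^{(-1)}=0}^{N} \cdots \sum _{b^{(-n)}=0}^{N} \alpha _{b^{(-1)},\cdots , \kern 0.14em b^{(-n)}} \left\langle \prod _{m=1}^{n} s_{b^{(-m)}}\left( \varvec{\sigma }^{(-m)} \right) \right\rangle ^{*} = 0. \tag{24} \]

The purpose of this paper is to reinterpret memory-n ZD strategies in terms of more elementary strategies.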

4 Probability-Controlling Strategies

We first prove that there exist memory-n strategies which avoid an arbitrary history of n action profiles in the limit distribution. We define \(\delta _{\varvec{\sigma }, \hat{\varvec{\sigma }}}:=\prod _{a=1}^N \delta _{\sigma _a, \hat{\sigma }_a}\).

Proposition 4

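Given some action profiles \(\left\{ \hat{\varvec{\sigma }}^{(-m)}\right\} _{m=1}^n\), memory-n strategies of player a of the form

\[ T_a\left( \sigma _a | \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) = \delta _{\sigma _a, \sigma ^{(-1)}_a} + \left[ \prod _{m=1}^{n} \delta _{\varvec{\sigma }^{(-m)}, \hat{\varvec{\sigma }}^{(-m)}} \right] \hat{T}_a^{(1)}\left( \sigma _a | \varvec{\sigma }^{(-1)} \right) , \tag{25} \]

where \(\hat{T}_a^{(1)}\) is a Press-Dyson vector of a memory-one strategy satisfying \(\hat{T}_a^{(1)} \left( \sigma _a^* | \hat{\varvec{\sigma }}^{(-1)} \right) \ne 0\) for some \(\sigma _a^*\), unilaterally enforce zero probability for the history \(\hat{\varvec{\sigma }}^{(-1)}, \cdots , \hat{\varvec{\sigma }}^{(-n)}\):

\[ P^{*}\left( \hat{\varvec{\sigma }}^{(-1)}, \cdots , \hat{\varvec{\sigma }}^{(-n)} \right) = 0. \tag{26} \]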

Proof

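We consider Eq. (25) with \(\sigma _a=\sigma _a^*\), written in terms of the Press-Dyson tensor (13):

\[ \hat{T}_a\left( \sigma ^*_a | \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) = \left[ \prod _{m=1}^{n} \delta _{\varvec{\sigma }^{(-m)}, \hat{\varvec{\sigma }}^{(-m)}} \right] \hat{T}_a^{(1)}\left( \sigma ^*_a | \varvec{\sigma }^{(-1)} \right) . \tag{27} \]

By calculating expectations of both sides with respect to the limit distribution \(P^{*} \left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right)\) corresponding to the strategy (25), and by using Lemma 1, we obtain

\[ 0 = P^{*}\left( \hat{\varvec{\sigma }}^{(-1)}, \cdots , \hat{\varvec{\sigma }}^{(-n)} \right) \hat{T}_a^{(1)}\left( \sigma ^*_a | \hat{\varvec{\sigma }}^{(-1)} \right) . \tag{28} \]

By the assumption \(\hat{T}_a^{(1)} \left( \sigma _a^* | \hat{\varvec{\sigma }}^{(-1)} \right) \ne 0\), we obtain Eq. (26). \(\Box\)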

Strategies of the form (25) can be used to avoid some unfavorable situation \(\left( \hat{\varvec{\sigma }}^{(-1)}, \cdots , \hat{\varvec{\sigma }}^{(-n)} \right)\). We call strategies of the form (25) probability-controlling strategies. For example, the Grim Trigger strategy of player 1 in the repeated prisoner’s dilemma game can be regarded as a memory-one probability-controlling strategy avoiding the action profile (Cooperation, Defection), as shown in the Appendix. This fact provides another explanation of why the Grim Trigger strategy is unbeatable [51]. We return to Grim Trigger in Sect. 6.
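As a concrete instance of Proposition 4 with n = 2, the Python sketch below takes TFT as \(\hat{T}_1^{(1)}\) and the hypothetical target history \((\hat{\varvec{\sigma }}^{(-1)},\hat{\varvec{\sigma }}^{(-2)})=((2,1),(2,2))\), for which \(\hat{T}_1^{(1)}(1|(2,1))=1\ne 0\). Outside the target, the strategy (25) simply repeats player 1’s previous action. The sketch compares the limit probability of the target under this strategy and under plain TFT against random memory-one opponents. The payoff values \((R,S,T,P)=(3,0,5,1)\), seeds, and names are our own assumptions, and \(P^*\) is approximated by iterating \(P_t\).

```python
import random
from itertools import product

R, S, T, P = 3.0, 0.0, 5.0, 1.0               # assumed values with T > R > P > S
PROFILES = [(1, 1), (1, 2), (2, 1), (2, 2)]    # (action of player 1, action of player 2)
PAYOFF = {(1, 1): (R, R), (1, 2): (S, T), (2, 1): (T, S), (2, 2): (P, P)}
TARGET = ((2, 1), (2, 2))   # hypothetical (sigma^(-1), sigma^(-2)) to be avoided


def limit_distribution(coop1, coop2, n, tol=1e-13, max_iter=100_000):
    """Approximate P* on histories (sigma^(-1), ..., sigma^(-n)) by iterating P_t."""
    states = list(product(PROFILES, repeat=n))
    dist = {h: 1.0 / len(states) for h in states}
    for _ in range(max_iter):
        new = dict.fromkeys(states, 0.0)
        for h, mass in dist.items():
            q1, q2 = coop1(h), coop2(h)
            for x, y in PROFILES:
                w = (q1 if x == 1 else 1.0 - q1) * (q2 if y == 1 else 1.0 - q2)
                new[((x, y),) + h[:-1]] += mass * w
        if max(abs(new[h] - dist[h]) for h in states) < tol:
            return new
        dist = new
    raise RuntimeError("P_t did not converge; the chain may be periodic")


def tft_hat(prof):
    """hat T_1^(1)(1 | sigma^(-1)) of Tit-for-Tat: (s_1 - s_2) / (T - S)."""
    s1, s2 = PAYOFF[prof]
    return (s1 - s2) / (T - S)


def controlling(h):
    """Eq. (25) with n = 2: Repeat everywhere, plus hat T^(1) at the target history only."""
    repeat = 1.0 if h[0][0] == 1 else 0.0
    return repeat + (tft_hat(h[0]) if h == TARGET else 0.0)


def plain_tft(h):
    return 1.0 if h[0][1] == 1 else 0.0       # copy the opponent's last action


def random_opponent(seed):
    """Hypothetical memory-one opponent with cooperation probabilities in (0.2, 0.8)."""
    rng = random.Random(seed)
    table = {prof: rng.uniform(0.2, 0.8) for prof in PROFILES}
    return lambda h: table[h[0]]


opponent = random_opponent(3)
print(limit_distribution(controlling, opponent, 2)[TARGET])   # numerically zero
print(limit_distribution(plain_tft, opponent, 2)[TARGET])     # strictly positive
```

With this target, player 1 switches to cooperation at the target history and then keeps cooperating, so the target cannot recur; this lock-in is one way in which the zero probability (26) is realized.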

5 Biased Memory-n ZD Strategies

The limit distribution \(P^{*}\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right)\) gives the joint probability of n action profiles \(\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right)\). When we consider some real function \(K \left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right)\) and introduce the quantity

\[ P_K\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) := \frac{e^{K\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) } P^{*}\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) }{\left\langle e^{K} \right\rangle ^{*}}, \tag{29} \]

this quantity can also be regarded as a probability distribution of n action profiles \(\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right)\). In this ensemble of histories, a history with larger K receives more weight. We call such an ensemble \(P_K\) a biased ensemble with the bias function K. Biased ensembles have recently attracted much attention in the statistical mechanics of trajectories [43,44,45,46,47,48,49].
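The reweighting that defines \(P_K\) takes only a few lines. The Python sketch below is a minimal version, assuming that histories are dictionary keys and that K may return \(-\infty\) (represented as `-math.inf`). Shifting K by its largest finite value does not change \(P_K\) but avoids overflow in the exponential; the function names and the toy data are hypothetical. Choosing \(K=0\) on a set of histories and \(K=-\infty\) elsewhere turns the biased expectation into a conditional expectation, which is the case used for conditional expectations in the corollaries.

```python
import math


def biased_ensemble(pstar, K):
    """P_K(h) = e^{K(h)} P*(h) / <e^K>*; K(h) may be -math.inf.

    K is shifted by its largest finite value (K_max in Theorem 1), which leaves P_K
    unchanged but keeps math.exp from overflowing. Raises ValueError when <e^K>* = 0,
    i.e. when P* vanishes on the support of e^K.
    """
    finite = [K(h) for h in pstar if K(h) != -math.inf]
    if not finite:
        raise ValueError("e^K vanishes everywhere")
    k_max = max(finite)
    weights = {h: math.exp(K(h) - k_max) * mass for h, mass in pstar.items()}
    total = sum(weights.values())
    if total == 0.0:
        raise ValueError("<e^K>* = 0: P* vanishes on the support of e^K")
    return {h: w / total for h, w in weights.items()}


def biased_expectation(f, pstar, K):
    """Expectation of f(h) in the biased ensemble P_K."""
    return sum(f(h) * w for h, w in biased_ensemble(pstar, K).items())


# Toy example with hypothetical history labels "a".."d" and a uniform P*.
pstar = {"a": 0.25, "b": 0.25, "c": 0.25, "d": 0.25}
weight = {"a": 1.0, "b": 2.0, "c": 3.0, "d": 4.0}
print(biased_ensemble(pstar, lambda h: math.log(weight[h])))   # approximately 0.1, 0.2, 0.3, 0.4


def condition(h):
    """K = 0 on {a, b} and -inf elsewhere: conditioning on the event {a, b}."""
    return 0.0 if h in ("a", "b") else -math.inf


print(biased_expectation(lambda h: weight[h], pstar, condition))   # 1.5
```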

We now prove our main theorem.

Theorem 1

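Let \(\hat{T}_a^{(1)}\) be Press-Dyson vectors of a memory-one ZD strategy of player a:

\[ \hat{T}_a^{(1)}\left( \sigma _a | \varvec{\sigma }^{(-1)} \right) = c_{\sigma _a} \sum _{b=0}^{N} \alpha _b s_b\left( \varvec{\sigma }^{(-1)} \right) \tag{30} \]

with some coefficients \(\left\{ c_{\sigma _a} \right\}\) and \(\left\{ \alpha _b \right\}\). Let \(K: \mathcal {A}^n \rightarrow \mathbb {R}\cup \{ -\infty \}\) be a function satisfying \(K \left( \cdot \right) < \infty\), and define

\[ K_{\max } := \max _{\varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)}} K\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) . \tag{31} \]

Then, a memory-n strategy

\[ \hat{T}_a\left( \sigma _a | \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) := e^{K\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) - K_{\max }} \hat{T}_a^{(1)}\left( \sigma _a | \varvec{\sigma }^{(-1)} \right) \tag{32} \]

unilaterally enforces either a linear relation between expected payoffs in a biased ensemble (biased by the function K)

\[ \sum _{b=0}^{N} \alpha _b \sum _{\varvec{\sigma }^{(-1)}} \cdots \sum _{\varvec{\sigma }^{(-n)}} s_b\left( \varvec{\sigma }^{(-1)} \right) P_K\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) = 0 \tag{33} \]

or the relation

\[ P^{*}\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) = 0 \quad \text {for all } \left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) \in {{\,\mathrm{supp}\,}}e^{K}, \tag{34} \]

where \({{\,\mathrm{supp}\,}}f\) represents the support of function f.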

Proof

First, we check that tensors (32) indeed satisfy the conditions of strategies, that is, Eqs. (15), (16), and (17). Due to the equality

for Press-Dyson vectors of memory-one ZD strategies, we obtain

for arbitrary \(\left\{ \varvec{\sigma }^{(-m)} \right\} _{m=1}^n\), which implies Eq. (15). In addition, because the Press-Dyson vectors \(\hat{T}_a^{(1)}\) of a memory-one ZD strategy satisfy

for all \(\sigma _a\) and \(\varvec{\sigma }^{(-1)}\), and the sign of \(\hat{T}_a \left( \sigma _a | \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right)\) is the same as that of \(\hat{T}_a^{(1)} \left( \sigma _a | \varvec{\sigma }^{(-1)} \right)\), we obtain Eq. (16) for all \(\sigma _a\) and \(\left\{ \varvec{\sigma }^{(-m)} \right\} _{m=1}^n\). Furthermore, since the Press-Dyson vectors \(\hat{T}_a^{(1)}\) of a memory-one ZD strategy satisfy

and Eq. (32) then satisfies

we obtain Eq. (17) for all \(\sigma _a\), \(\varvec{\sigma }^{(-1)}\), \(\cdots\), \(\varvec{\sigma }^{(-n)}\).

Next, from Eqs. (32) and (30), we obtain

By calculating expectations of both sides with respect to the corresponding limit distribution \(P^{*} \left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right)\) and using Lemma 1, we obtain

Furthermore, if

by dividing both sides of Eq. (41) by \(\left\langle e^{K \left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) - K_{\max }} \right\rangle ^{*}\), we obtain Eq. (33). Otherwise, the equality

holds. This equality is rewritten as

Because \(e^K\) is non-negative, this equality implies Eq. (34). \(\square\)

Theorem 1 can be regarded as an extension of Proposition 4. It should be noted that the limit probability distribution \(P^{*} \left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right)\) depends on the strategies. We call the strategies in this theorem biased memory-n ZD strategies. Controlling biased expectations of payoffs is useful for strengthening memory-one ZD strategies, because players can choose bias functions in such a way that action profiles unfavorable to one player receive more weight. Biased ensembles are used to amplify rare events in the same way as evolution in population genetics. When we consider a situation in which each group of N players is selected with fitness \(e^K\), expected payoffs in such a situation are calculated by our biased expectations. Such a situation may be relevant in the context of multilevel selection [52], for instance if K is given by the total payoff of all players in one group. Furthermore, Theorem 1 has the following three corollaries.
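Theorem 1 can be checked numerically in the prisoner’s dilemma. The Python sketch below assumes \((R,S,T,P)=(3,0,5,1)\) and uses as \(\hat{T}_1^{(1)}\) the memory-one ZD vector \(\hat{T}_1^{(1)}(1|\varvec{\sigma }^{(-1)})=-\left[ s_2(\varvec{\sigma }^{(-1)})-2\right] /3\), which we derived for these payoffs (used alone, it enforces \(\left\langle s_2\right\rangle ^{*}=2\)). It then applies the construction (32) with n = 2 and a randomly drawn bias function K with finite values, so Eq. (33) predicts that the biased mean of \(s_2(\varvec{\sigma }^{(-1)})\) equals 2 even though the unbiased mean generally does not. The bias function, seeds, opponent, and names are hypothetical, and \(P^*\) is approximated by iterating \(P_t\).

```python
import math
import random
from itertools import product

R, S, T, P = 3.0, 0.0, 5.0, 1.0               # assumed values with T > R > P > S
PROFILES = [(1, 1), (1, 2), (2, 1), (2, 2)]    # (action of player 1, action of player 2)
PAYOFF = {(1, 1): (R, R), (1, 2): (S, T), (2, 1): (T, S), (2, 2): (P, P)}


def limit_distribution(coop1, coop2, n, tol=1e-13, max_iter=100_000):
    """Approximate P* on histories (sigma^(-1), ..., sigma^(-n)) by iterating P_t."""
    states = list(product(PROFILES, repeat=n))
    dist = {h: 1.0 / len(states) for h in states}
    for _ in range(max_iter):
        new = dict.fromkeys(states, 0.0)
        for h, mass in dist.items():
            q1, q2 = coop1(h), coop2(h)
            for x, y in PROFILES:
                w = (q1 if x == 1 else 1.0 - q1) * (q2 if y == 1 else 1.0 - q2)
                new[((x, y),) + h[:-1]] += mass * w
        if max(abs(new[h] - dist[h]) for h in states) < tol:
            return new
        dist = new
    raise RuntimeError("P_t did not converge; the chain may be periodic")


def equalizer_hat(prof):
    """Memory-one ZD vector hat T_1^(1)(1 | sigma^(-1)) = -(s_2 - 2) / 3."""
    return -(PAYOFF[prof][1] - 2.0) / 3.0


def biased_zd(K, n):
    """Construction (32): hat T_1(1 | h) = exp(K(h) - K_max) * hat T_1^(1)(1 | sigma^(-1))."""
    k_max = max(K(h) for h in product(PROFILES, repeat=n))
    def coop1(h):
        repeat = 1.0 if h[0][0] == 1 else 0.0
        return repeat + math.exp(K(h) - k_max) * equalizer_hat(h[0])
    return coop1


def random_bias(seed, n):
    """Hypothetical finite bias K(h) = log w(h) with weights w(h) in (0.1, 1)."""
    rng = random.Random(seed)
    log_w = {h: math.log(rng.uniform(0.1, 1.0)) for h in product(PROFILES, repeat=n)}
    return lambda h: log_w[h]


def random_opponent(seed):
    rng = random.Random(seed)
    table = {prof: rng.uniform(0.1, 0.9) for prof in PROFILES}
    return lambda h: table[h[0]]


def biased_mean_s2(pstar, K):
    """Mean of s_2(sigma^(-1)) in the biased ensemble P_K."""
    num = sum(math.exp(K(h)) * mass * PAYOFF[h[0]][1] for h, mass in pstar.items())
    den = sum(math.exp(K(h)) * mass for h, mass in pstar.items())
    return num / den


K = random_bias(11, 2)
pstar = limit_distribution(biased_zd(K, 2), random_opponent(11), 2)
print(biased_mean_s2(pstar, K))                                   # 2 up to rounding, as (33) predicts
print(sum(mass * PAYOFF[h[0]][1] for h, mass in pstar.items()))   # unbiased <s_2>*, generally not 2
```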

Corollary 1

Let \(\hat{T}_a^{(1)}\) be Press-Dyson vectors of a memory-one ZD strategy satisfying Eq. (30). Let \(\left\{ \hat{\varvec{\sigma }}^{(-m)} \right\} _{m=1}^n\) be some action profiles. If \(\sum _{b=0}^N \alpha _b s_b \left( \hat{\varvec{\sigma }}^{(-1)} \right) \ne 0\), then a memory-n strategy

unilaterally enforces the equation

Proof

By choosing the function K such that

in Eq. (32), we obtain

(We remark that K can be \(-\infty\).) It should be noted that \(K_{\max }=0\). By using the assumption \(\sum _{b=0}^N \alpha _b s_b \left( \hat{\varvec{\sigma }}^{(-1)} \right) \ne 0\), we obtain the result (46). \(\square\)

Corollary 1 can also be derived directly from Proposition 4.

Corollary 2

Let \(\hat{T}_a^{(1)}\) be Press-Dyson vectors of a memory-one ZD strategy satisfying Eq. (30). Let \(\left\{ \hat{\varvec{\sigma }}^{(-m)} \right\} _{m=2}^n\) be some action profiles. Then a memory-n strategy

unilaterally enforces either a linear relation between conditional expectations of payoffs when the history of the previous \(n-1\) action profiles is \(\hat{\varvec{\sigma }}^{(-2)}\), \(\cdots\), \(\hat{\varvec{\sigma }}^{(-n)}\)

or the relation

Proof

By choosing the function K such that

in Eq. (32), we obtain the relation (50) or the relation (51). It should be noted that Eq. (51) can be rewritten as

which is a relation on the marginal distribution. \(\square\)
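The dichotomy in Corollary 2 can also be observed numerically. The Python sketch below takes n = 2, the hypothetical condition \(\hat{\varvec{\sigma }}^{(-2)}=(1,1)\), and the memory-one ZD vector \(\hat{T}_1^{(1)}(1|\varvec{\sigma }^{(-1)})=-\left[ s_2(\varvec{\sigma }^{(-1)})-2\right] /3\) for the assumed payoffs \((R,S,T,P)=(3,0,5,1)\), so the first alternative (50) would read \(\mathbb {E}\left[ s_2(\varvec{\sigma }^{(-1)}) | \varvec{\sigma }^{(-2)}=(1,1)\right] =2\). The sketch reports whichever alternative holds. For this particular choice we expect the second alternative (51): outside the condition the strategy is Repeat, and once player 1 has defected in two consecutive rounds, \(\varvec{\sigma }^{(-2)}\) can no longer equal (1, 1), so play locks into defection. This is an instance of the tendency toward Repeat discussed in the next paragraph. Seeds, opponents, and names are assumptions, and \(P^*\) is approximated by iterating \(P_t\).

```python
import random
from itertools import product

R, S, T, P = 3.0, 0.0, 5.0, 1.0               # assumed values with T > R > P > S
PROFILES = [(1, 1), (1, 2), (2, 1), (2, 2)]    # (action of player 1, action of player 2)
PAYOFF = {(1, 1): (R, R), (1, 2): (S, T), (2, 1): (T, S), (2, 2): (P, P)}
CONDITION = (1, 1)                             # hypothetical hat sigma^(-2)


def limit_distribution(coop1, coop2, n, tol=1e-13, max_iter=100_000):
    """Approximate P* on histories (sigma^(-1), ..., sigma^(-n)) by iterating P_t."""
    states = list(product(PROFILES, repeat=n))
    dist = {h: 1.0 / len(states) for h in states}
    for _ in range(max_iter):
        new = dict.fromkeys(states, 0.0)
        for h, mass in dist.items():
            q1, q2 = coop1(h), coop2(h)
            for x, y in PROFILES:
                w = (q1 if x == 1 else 1.0 - q1) * (q2 if y == 1 else 1.0 - q2)
                new[((x, y),) + h[:-1]] += mass * w
        if max(abs(new[h] - dist[h]) for h in states) < tol:
            return new
        dist = new
    raise RuntimeError("P_t did not converge; the chain may be periodic")


def equalizer_hat(prof):
    """Memory-one ZD vector hat T_1^(1)(1 | sigma^(-1)) = -(s_2 - 2) / 3."""
    return -(PAYOFF[prof][1] - 2.0) / 3.0


def conditioned_zd(h):
    """Strategy (49) with n = 2: the ZD vector acts only if sigma^(-2) == CONDITION, else Repeat."""
    repeat = 1.0 if h[0][0] == 1 else 0.0
    return repeat + (equalizer_hat(h[0]) if h[1] == CONDITION else 0.0)


def random_opponent(seed):
    rng = random.Random(seed)
    table = {prof: rng.uniform(0.2, 0.8) for prof in PROFILES}
    return lambda h: table[h[0]]


def corollary2_branch(pstar, eps=1e-9):
    """("conditional", E[s_2(sigma^(-1)) | sigma^(-2) = CONDITION]) if the condition has
    positive limit probability, otherwise ("marginal", P*(sigma^(-2) = CONDITION))."""
    mass = sum(m for h, m in pstar.items() if h[1] == CONDITION)
    if mass > eps:
        mean = sum(m * PAYOFF[h[0]][1] for h, m in pstar.items() if h[1] == CONDITION) / mass
        return "conditional", mean   # first alternative (50): should equal 2
    return "marginal", mass          # second alternative (51): should vanish


for seed in range(3):
    print(corollary2_branch(limit_distribution(conditioned_zd, random_opponent(seed), 2)))
```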

We remark that memory-n strategies (49) approach the strategy “Repeat” [50]

\[ T_a\left( \sigma _a | \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right) = \delta _{\sigma _a, \sigma ^{(-1)}_a} \tag{54} \]

(which repeats the previous action of the player) as n increases, because \(\prod _{m=2}^n \delta _{\varvec{\sigma }^{(-m)}, \hat{\varvec{\sigma }}^{(-m)}}=0\) for most \(\left\{ \varvec{\sigma }^{(-m)} \right\} _{m=2}^n\). This property is used to control only the conditional expectations when the history of action profiles is \(\left( \hat{\varvec{\sigma }}^{(-2)}, \cdots , \hat{\varvec{\sigma }}^{(-n)} \right)\).

Corollary 3

Memory-n strategies of the form (32) contain factorable memory-n ZD strategies (23):

Proof

In Theorem 1, when the quantity \(K\left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right)\) is written in the form \(\sum _{m=2}^n K_m \left( \varvec{\sigma }^{(-m)} \right)\), and the quantity \(G_m \left( \varvec{\sigma } \right) := e^{ K_m \left( \varvec{\sigma } \right) } \ge 0\) \((m\ge 2)\) is written by payoffs in the form

with some coefficients \(\left\{ \alpha _{b_m}^{(m)} \right\}\), then the strategies (32) are reduced to memory-n ZD strategies (55). \(\square\)

6 Examples: the Prisoner’s Dilemma Game

6.1 Setup

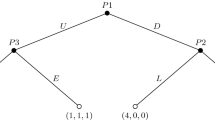

We consider the repeated prisoner’s dilemma game as an example. The prisoner’s dilemma game is a two-player two-action game, where each player chooses cooperation (written as 1) or defection (written as 2) in each round [19]. The payoffs of the two players are

\[ \left( s_1(1,1), s_1(1,2), s_1(2,1), s_1(2,2) \right) = (R, S, T, P), \qquad \left( s_2(1,1), s_2(1,2), s_2(2,1), s_2(2,2) \right) = (R, T, S, P) \tag{57} \]

with \(T>R>P>S\). Although the Nash equilibrium of the one-shot game is (2, 2), it is known that cooperative Nash equilibria exist when this game is repeated infinitely many times and the discounting factor is large enough (the folk theorem).
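The statement about the one-shot game can be confirmed by enumerating unilateral deviations. The Python sketch below assumes \((R,S,T,P)=(3,0,5,1)\) and checks pure-strategy profiles only; since defection strictly dominates cooperation whenever \(T>R\) and \(P>S\), any values with \(T>R>P>S\) give the same answer. The function name is hypothetical.

```python
R, S, T, P = 3.0, 0.0, 5.0, 1.0   # assumed values satisfying T > R > P > S
ACTIONS = (1, 2)                  # 1 = cooperation, 2 = defection
PAYOFF = {(1, 1): (R, R), (1, 2): (S, T), (2, 1): (T, S), (2, 2): (P, P)}


def pure_nash_equilibria():
    """Action profiles from which no player gains by a unilateral deviation."""
    equilibria = []
    for x in ACTIONS:
        for y in ACTIONS:
            best1 = all(PAYOFF[(x, y)][0] >= PAYOFF[(d, y)][0] for d in ACTIONS)
            best2 = all(PAYOFF[(x, y)][1] >= PAYOFF[(x, d)][1] for d in ACTIONS)
            if best1 and best2:
                equilibria.append((x, y))
    return equilibria


print(pure_nash_equilibria())   # [(2, 2)]
```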

6.2 Biased Memory-n ZD Strategies

In the repeated prisoner’s dilemma game, the following strategies are contained in biased memory-n ZD strategies of player 1:

where \(G_1\) is a Press-Dyson vector of a memory-one ZD strategy and \(G_m\) \((m\ge 2)\) are non-negative quantities. For example, when we assume that \(2R>T+S\) and \(2P<T+S\), they are

with \(B:=\max \left\{ 2R-(T+S), (T+S)-2P \right\}\), and

for each \(m\ge 2\). We remark that the set of \(G_m\) \((m\ge 1)\) also contains quantities which cannot be represented by payoffs. A linear relation enforced by the ZD strategy is

that is, a linear relation between correlation functions of payoffs. These strategies contain all memory-two ZD strategies reported in Ref. [34].

6.3 Extension of Tit-for-Tat

For example, the memory-n strategy

is a memory-n ZD strategy, which unilaterally enforces

When \(n=1\), this strategy reduces to the Tit-for-Tat (TFT) strategy, which unilaterally enforces \(\left\langle s_{1} \right\rangle ^{*} = \left\langle s_{2} \right\rangle ^{*}\) [19]. Because Eq. (63) becomes zero when \(\varvec{\sigma }^{(-m)}=(2,1)\) for some \(m\ge 2\), Eq. (64) can be regarded as a fairness condition between the two players in the situation where (2, 1) was not played in the previous \(n-1\) rounds. Since the action profile (2, 1) is favorable to player 1, Eq. (64) can be regarded as a fairness condition that applies when player 1 is in an unfavorable position. Therefore, this strategy may be stronger than TFT; whether this is actually the case is left for future study.
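The Python sketch below assumes the following memory-two form for Eq. (63), chosen to match the description above: \(\hat{T}_1(1|\varvec{\sigma }^{(-1)},\varvec{\sigma }^{(-2)})=\frac{1}{T-S}\left[ s_1(\varvec{\sigma }^{(-1)})-s_2(\varvec{\sigma }^{(-1)})\right] \left( 1-\delta _{\varvec{\sigma }^{(-2)},(2,1)}\right)\), that is, TFT unless the profile two rounds ago was (2, 1), in which case player 1 repeats its previous action. Under this assumption, and with \((R,S,T,P)=(3,0,5,1)\), the conditional means of \(s_1(\varvec{\sigma }^{(-1)})\) and \(s_2(\varvec{\sigma }^{(-1)})\) given \(\varvec{\sigma }^{(-2)}\ne (2,1)\) should coincide against any opponent. Opponents are random memory-one strategies, names are hypothetical, and \(P^*\) is approximated by iterating \(P_t\).

```python
import random
from itertools import product

R, S, T, P = 3.0, 0.0, 5.0, 1.0               # assumed values with T > R > P > S
PROFILES = [(1, 1), (1, 2), (2, 1), (2, 2)]    # (action of player 1, action of player 2)
PAYOFF = {(1, 1): (R, R), (1, 2): (S, T), (2, 1): (T, S), (2, 2): (P, P)}


def limit_distribution(coop1, coop2, n, tol=1e-13, max_iter=100_000):
    """Approximate P* on histories (sigma^(-1), ..., sigma^(-n)) by iterating P_t."""
    states = list(product(PROFILES, repeat=n))
    dist = {h: 1.0 / len(states) for h in states}
    for _ in range(max_iter):
        new = dict.fromkeys(states, 0.0)
        for h, mass in dist.items():
            q1, q2 = coop1(h), coop2(h)
            for x, y in PROFILES:
                w = (q1 if x == 1 else 1.0 - q1) * (q2 if y == 1 else 1.0 - q2)
                new[((x, y),) + h[:-1]] += mass * w
        if max(abs(new[h] - dist[h]) for h in states) < tol:
            return new
        dist = new
    raise RuntimeError("P_t did not converge; the chain may be periodic")


def extended_tft(h):
    """Assumed memory-two form of (63): TFT, except Repeat when sigma^(-2) == (2, 1)."""
    s1, s2 = PAYOFF[h[0]]
    g = 0.0 if h[1] == (2, 1) else 1.0         # G(sigma^(-2)) = 1 - delta(sigma^(-2), (2, 1))
    repeat = 1.0 if h[0][0] == 1 else 0.0
    return repeat + g * (s1 - s2) / (T - S)


def random_opponent(seed):
    rng = random.Random(seed)
    table = {prof: rng.uniform(0.1, 0.9) for prof in PROFILES}
    return lambda h: table[h[0]]


def conditional_means(pstar):
    """(E[s_1(sigma^(-1))], E[s_2(sigma^(-1))]) given sigma^(-2) != (2, 1)."""
    kept = {h: m for h, m in pstar.items() if h[1] != (2, 1)}
    mass = sum(kept.values())
    return tuple(sum(m * PAYOFF[h[0]][i] for h, m in kept.items()) / mass for i in (0, 1))


pstar = limit_distribution(extended_tft, random_opponent(2), 2)
print(conditional_means(pstar))   # the two entries agree up to rounding
```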

Similarly, a slightly different memory-n strategy

is also a memory-n ZD strategy, which unilaterally enforces

This strategy also reduces to TFT when \(n=1\). Because the expectation in Eq. (66) is conditional on the previous \(n-1\) action profiles being (1, 1), (2, 1), or (2, 2), which excludes the profile (1, 2) unfavorable to player 1, this strategy may be weaker than TFT. We can also construct a mixed version of (63) and (65) by choosing different \(G_m\) for each \(m\ge 2\).

6.4 Grim Trigger as Biased TFT

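Finally, we interpret the Grim Trigger strategy again, now in terms of a biased memory-one ZD strategy. We remark that the Grim Trigger strategy itself is not a ZD strategy. In Eq. (45), when we set \(a=1\), \(n=1\), \(\hat{\varvec{\sigma }}^{(-1)}=(1,2)\), and choose TFT as the strategy \(\hat{T}_1^{(1)}\) of player 1

\[ \hat{T}_1^{(1)}\left( 1 | \varvec{\sigma }^{(-1)} \right) = -\hat{T}_1^{(1)}\left( 2 | \varvec{\sigma }^{(-1)} \right) = \frac{1}{T-S} \left[ s_1\left( \varvec{\sigma }^{(-1)} \right) - s_2\left( \varvec{\sigma }^{(-1)} \right) \right] , \tag{67} \]

we obtain

\[ T_1\left( 1 | \varvec{\sigma }^{(-1)} \right) = \delta _{\sigma ^{(-1)}_1, 1} + \delta _{\varvec{\sigma }^{(-1)}, (1,2)} \hat{T}_1^{(1)}\left( 1 | \varvec{\sigma }^{(-1)} \right) = \delta _{\sigma ^{(-1)}_1, 1} - \delta _{\varvec{\sigma }^{(-1)}, (1,2)}, \tag{68} \]

or, equivalently, \(T_1 \left( 1 | \varvec{\sigma }^{(-1)} \right) = \delta _{\varvec{\sigma }^{(-1)}, (1,1)}\), which is the Grim Trigger strategy. It should be noted that the action profile (1, 2) is unfavorable for player 1. Corollary 1 claims that the strategy unilaterally enforces

\[ P^{*}(1,2) = 0, \tag{69} \]

which is consistent with the Appendix. It is known that, although a pair of TFT strategies is not a subgame perfect equilibrium, a pair of Grim Trigger strategies is. Therefore, this result can be interpreted as showing that TFT is strengthened by the bias function \(e^{K\left( \varvec{\sigma }^{(-1)} \right) }=\delta _{\varvec{\sigma }^{(-1)},(1,2)}\).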
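The construction of Grim Trigger from TFT can be reproduced in a few lines. The Python sketch below, assuming \((R,S,T,P)=(3,0,5,1)\) and hypothetical function names, applies Corollary 1 with n = 1 to the TFT vector \(\hat{T}_1^{(1)}(1|\varvec{\sigma }^{(-1)})=\left[ s_1(\varvec{\sigma }^{(-1)})-s_2(\varvec{\sigma }^{(-1)})\right] /(T-S)\), once with \(\hat{\varvec{\sigma }}^{(-1)}=(1,2)\) and once with the alternative \(\hat{\varvec{\sigma }}^{(-1)}=(2,1)\) discussed in the concluding remarks. It then checks that the corresponding limit probabilities vanish against random memory-one opponents, with \(P^*\) approximated by iterating \(P_t\).

```python
import random
from itertools import product

R, S, T, P = 3.0, 0.0, 5.0, 1.0               # assumed values with T > R > P > S
PROFILES = [(1, 1), (1, 2), (2, 1), (2, 2)]    # (action of player 1, action of player 2)
PAYOFF = {(1, 1): (R, R), (1, 2): (S, T), (2, 1): (T, S), (2, 2): (P, P)}


def limit_distribution(coop1, coop2, n, tol=1e-13, max_iter=100_000):
    """Approximate P* on histories (sigma^(-1), ..., sigma^(-n)) by iterating P_t."""
    states = list(product(PROFILES, repeat=n))
    dist = {h: 1.0 / len(states) for h in states}
    for _ in range(max_iter):
        new = dict.fromkeys(states, 0.0)
        for h, mass in dist.items():
            q1, q2 = coop1(h), coop2(h)
            for x, y in PROFILES:
                w = (q1 if x == 1 else 1.0 - q1) * (q2 if y == 1 else 1.0 - q2)
                new[((x, y),) + h[:-1]] += mass * w
        if max(abs(new[h] - dist[h]) for h in states) < tol:
            return new
        dist = new
    raise RuntimeError("P_t did not converge; the chain may be periodic")


def tft_hat(prof):
    """hat T_1^(1)(1 | sigma^(-1)) of TFT: (s_1 - s_2) / (T - S)."""
    s1, s2 = PAYOFF[prof]
    return (s1 - s2) / (T - S)


def biased_tft(target):
    """Corollary 1, n = 1: T_1(1 | s) = delta(s_1, 1) + delta(s, target) * hat T^(1)(1 | s)."""
    def coop1(h):
        prof = h[0]
        repeat = 1.0 if prof[0] == 1 else 0.0
        return repeat + (tft_hat(prof) if prof == target else 0.0)
    return coop1


def random_opponent(seed):
    rng = random.Random(seed)
    table = {prof: rng.uniform(0.1, 0.9) for prof in PROFILES}
    return lambda h: table[h[0]]


grim = biased_tft((1, 2))
variant = biased_tft((2, 1))
print({prof: grim((prof,)) for prof in PROFILES})   # {(1, 1): 1.0, (1, 2): 0.0, (2, 1): 0.0, (2, 2): 0.0}
```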

7 Memory-n Deformed ZD Strategies

We can consider a memory-n version of deformed ZD strategies (Definition 3).

Definition 5

A memory-n strategy of player a is a deformed memory-n ZD strategy when its Press-Dyson tensors \(\hat{T}_a\) can be written in the form

where \(G_1\) is the Press-Dyson vector of a deformed memory-one ZD strategy and \(G_m\) \((m\ge 2)\) are non-negative quantities.

For example, in the prisoner’s dilemma game, because of the equality

for all \(\varvec{\sigma }\), the following strategy can be regarded as an extension of the TFT strategy:

A linear relation enforced by the extended TFT is

This linear relation can be interpreted as a fairness condition between two players.

In particular, because the identity

holds for arbitrary h, the strategy (63) can be rewritten as

with arbitrary \(\{ h_m \}\). Therefore, we obtain the following proposition:

Proposition 5

The strategy (63) simultaneously enforces linear relations

with arbitrary \(\{ h_m \}\).

Similarly, the strategy (65) can be rewritten as

with arbitrary \(\{ h_m \}\), and the following proposition holds:

Proposition 6

The strategy (65) simultaneously enforces linear relations

with arbitrary \(\{ h_m \}\).

It should be noted that the strategies (63) and (65) themselves do not depend on the parameters \(\{ h_m \}\). Therefore, the limit probability distribution \(P^{*} \left( \varvec{\sigma }^{(-1)}, \cdots , \varvec{\sigma }^{(-n)} \right)\) does not depend on \(\{ h_m \}\) either. By differentiating Eq. (78) or Eq. (80) with respect to \(\{ h_m \}\) arbitrarily many times, we can obtain infinitely many payoff relations.

8 Concluding Remarks

In this paper, we introduced the concept of biased memory-n ZD strategies (32), which unilaterally enforce either linear relations between expected payoffs in biased ensembles or zero probability for a set of action profiles. Biased memory-n ZD strategies can be used to construct the original memory-n ZD strategies in Ref. [34], which unilaterally enforce linear relations between correlation functions of payoffs. From another point of view, biased memory-n ZD strategies can be regarded as an extension of the probability-controlling strategies introduced in Sect. 4. Furthermore, biased memory-n ZD strategies can also be used to construct ZD strategies which unilaterally enforce linear relations between conditional expectations of payoffs. Because the expectation of payoffs conditional on previous action profiles is used, players can choose conditions in such a way that only action profiles unfavorable to one player are contained in the conditions. We provided several examples of biased memory-n ZD strategies in the repeated prisoner’s dilemma game. Moreover, we explained that the extension of deformed ZD strategies [33] to the memory-n case is straightforward.

The significance of this study is that we have provided a method to interpret every time-independent finite-memory strategy in terms of the linear relations about \(P^*\) that it enforces. As we can see in Theorem 1, when a memory-n strategy is described as a biased memory-n ZD strategy, it enforces either a linear relation between expected payoffs in biased ensembles or zero probability for a set of action profiles. Even if a memory-n strategy is not described as a biased memory-n ZD strategy, it still enforces linear relations about \(P^*\) (Lemma 1). Such results may be useful for interpreting strategies. For example, as we saw in the repeated prisoner’s dilemma game, Grim Trigger can be regarded as a memory-one strategy enforcing \(P^{*}(1,2)=0\), which directly shows that Grim Trigger is unbeatable. Furthermore, in any two-player symmetric potential game, the Imitate-If-Better strategy [51], which imitates the opponent’s previous action if and only if it was beaten in the previous round, has a property similar to that of Grim Trigger in the prisoner’s dilemma game [53]. That is, it unilaterally enforces a linear relation between conditional expectations

(\(\mathbb {I}\) is an indicator function), which directly means that \(\left\langle s_{a} \right\rangle ^{*} \ge \left\langle s_{-a} \right\rangle ^{*}\). (Grim Trigger is a special case of the Imitate-If-Better strategy for the prisoner’s dilemma game.) In this way, controlling conditional expectations can be useful when we investigate properties of finite-memory strategies.
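For the prisoner’s dilemma, the reduction of Imitate-If-Better to Grim Trigger can be checked directly. The Python sketch below assumes \((R,S,T,P)=(3,0,5,1)\) and reads “beaten” as “the opponent’s stage payoff was strictly larger”. Under that reading, it builds the strategy from its verbal description, compares it with the Grim Trigger table, and checks \(\left\langle s_1\right\rangle ^{*}\ge \left\langle s_2\right\rangle ^{*}\) against random memory-one opponents. Names and seeds are hypothetical, and \(P^*\) is approximated by iterating \(P_t\).

```python
import random
from itertools import product

R, S, T, P = 3.0, 0.0, 5.0, 1.0               # assumed values with T > R > P > S
PROFILES = [(1, 1), (1, 2), (2, 1), (2, 2)]    # (action of player 1, action of player 2)
PAYOFF = {(1, 1): (R, R), (1, 2): (S, T), (2, 1): (T, S), (2, 2): (P, P)}
GRIM = {(1, 1): 1.0, (1, 2): 0.0, (2, 1): 0.0, (2, 2): 0.0}   # cooperate only after (1, 1)


def limit_distribution(coop1, coop2, n, tol=1e-13, max_iter=100_000):
    """Approximate P* on histories (sigma^(-1), ..., sigma^(-n)) by iterating P_t."""
    states = list(product(PROFILES, repeat=n))
    dist = {h: 1.0 / len(states) for h in states}
    for _ in range(max_iter):
        new = dict.fromkeys(states, 0.0)
        for h, mass in dist.items():
            q1, q2 = coop1(h), coop2(h)
            for x, y in PROFILES:
                w = (q1 if x == 1 else 1.0 - q1) * (q2 if y == 1 else 1.0 - q2)
                new[((x, y),) + h[:-1]] += mass * w
        if max(abs(new[h] - dist[h]) for h in states) < tol:
            return new
        dist = new
    raise RuntimeError("P_t did not converge; the chain may be periodic")


def imitate_if_better(h):
    """Player 1 copies the opponent's last action iff the opponent earned strictly more."""
    own, other = h[0]
    own_payoff, other_payoff = PAYOFF[h[0]]
    next_action = other if other_payoff > own_payoff else own
    return 1.0 if next_action == 1 else 0.0


def random_opponent(seed):
    rng = random.Random(seed)
    table = {prof: rng.uniform(0.1, 0.9) for prof in PROFILES}
    return lambda h: table[h[0]]


def expected_payoffs(pstar):
    return tuple(sum(mass * PAYOFF[h[0]][i] for h, mass in pstar.items()) for i in (0, 1))


print({prof: imitate_if_better((prof,)) for prof in PROFILES} == GRIM)   # True
for seed in range(3):
    s1, s2 = expected_payoffs(limit_distribution(imitate_if_better, random_opponent(seed), 1))
    print(round(s1, 6), round(s2, 6))
```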

Before ending this paper, we make two remarks. The first remark is related to the existence of biased memory-n ZD strategies. It has been known that the existence of memory-one ZD strategies is highly dependent on the stage game [27]. For example, no memory-one ZD strategies exist in the repeated rock-paper-scissors game. However, because we construct biased memory-n ZD strategies by using memory-one ZD strategies, the existence condition of biased memory-n ZD strategies is the same as that of memory-one ZD strategies used for construction. Clarifying the existence condition of memory-one ZD strategies is an important subject of future work.

The second remark is on the relation between biased memory-n ZD strategies and equilibrium strategies. Although we explained that Grim Trigger can be obtained by biasing TFT, other strategies can also be obtained. For example, in Subsect. 6.4, if we use \(\hat{\varvec{\sigma }}^{(-1)}=(2,1)\) instead of \(\hat{\varvec{\sigma }}^{(-1)}=(1,2)\), Eq. (68) becomes

\[ T_1\left( 1 | \varvec{\sigma }^{(-1)} \right) = \delta _{\sigma ^{(-1)}_1, 1} + \delta _{\varvec{\sigma }^{(-1)}, (2,1)}, \]

which unilaterally enforces \(P^{*} \left( 2,1 \right) = 0\). This strategy forms neither a subgame perfect equilibrium nor a Nash equilibrium. Therefore, the concept of biased memory-n ZD strategies itself is generally not related to equilibrium strategies. Rather, an important implication is that every time-independent finite-memory strategy can be interpreted through the linear relations about \(P^*\) that it enforces, as noted above. Furthermore, in the repeated prisoner’s dilemma game, memory-one Nash equilibria were characterized by using the formalism that comes with memory-one ZD strategies [50]. In particular, a pair of equalizer strategies, which are one example of memory-one ZD strategies and unilaterally set the payoff of the opponent, forms a Nash equilibrium, because each player cannot improve his/her payoff as long as the opponent uses an equalizer strategy. In future work, we would like to investigate whether our formalism can be used to characterize memory-n Nash equilibria in the repeated prisoner’s dilemma game.

Data Availability

This work presents theoretical results only, and there is no associated data source.

References

Fudenberg D, Tirole J (1991) Game theory. MIT Press, Massachusetts

Osborne MJ, Rubinstein A (1994) A course in game theory. MIT Press, Massachusetts

Rubinstein A (1998) Modeling bounded rationality. MIT Press, Massachusetts

Kalai E, Stanford W (1988) Finite rationality and interpersonal complexity in repeated games. Econometrica 56(2):397–410

Neyman A (1985) Bounded complexity justifies cooperation in the finitely repeated prisoners’ dilemma. Econ Lett 19(3):227–229

Neyman A, Okada D (1999) Strategic entropy and complexity in repeated games. Games Econom Behav 29(1–2):191–223

Rubinstein A (1986) Finite automata play the repeated prisoner’s dilemma. J Econ Theory 39(1):83–96

Imhof LA, Fudenberg D, Nowak MA (2005) Evolutionary cycles of cooperation and defection. Proc Natl Acad Sci 102(31):10797–10800

Nowak M, Sigmund K (1993) A strategy of win-stay, lose-shift that outperforms tit-for-tat in the prisoner’s dilemma game. Nature 364(6432):56–58

Nowak MA, Sigmund K (1992) Tit for tat in heterogeneous populations. Nature 355(6357):250–253

Friedman JW (1971) A non-cooperative equilibrium for supergames. Rev Econ Stud 38(1):1–12

Axelrod R, Hamilton WD (1981) The evolution of cooperation. Science 211(4489):1390–1396

Rapoport A, Chammah AM, Orwant CJ (1965) Prisoner’s dilemma: a study in conflict and cooperation, vol 165. University of Michigan Press

Hilbe C, Martinez-Vaquero LA, Chatterjee K, Nowak MA (2017) Memory-n strategies of direct reciprocity. Proc Natl Acad Sci 114(18):4715–4720

Li J, Kendall G (2013) The effect of memory size on the evolutionary stability of strategies in iterated prisoner’s dilemma. IEEE Trans Evol Comput 18(6):819–826

Murase Y, Baek SK (2018) Seven rules to avoid the tragedy of the commons. J Theor Biol 449:94–102

Murase Y, Baek SK (2020) Five rules for friendly rivalry in direct reciprocity. Sci Rep 10:16904

Yi SD, Baek SK, Choi JK (2017) Combination with anti-tit-for-tat remedies problems of tit-for-tat. J Theor Biol 412:1–7

Press WH, Dyson FJ (2012) Iterated prisoner’s dilemma contains strategies that dominate any evolutionary opponent. Proc Natl Acad Sci 109(26):10409–10413

Guo JL (2014) Zero-determinant strategies in iterated multi-strategy games. arXiv preprint arXiv:1409.1786

He X, Dai H, Ning P, Dutta R (2016) Zero-determinant strategies for multi-player multi-action iterated games. IEEE Signal Process Lett 23(3):311–315

Hilbe C, Wu B, Traulsen A, Nowak MA (2014) Cooperation and control in multiplayer social dilemmas. Proc Natl Acad Sci 111(46):16425–16430

McAvoy A, Hauert C (2016) Autocratic strategies for iterated games with arbitrary action spaces. Proc Natl Acad Sci 113(13):3573–3578

Pan L, Hao D, Rong Z, Zhou T (2015) Zero-determinant strategies in iterated public goods game. Sci Rep 5:13096

Hao D, Rong Z, Zhou T (2015) Extortion under uncertainty: zero-determinant strategies in noisy games. Phys Rev E 91:052803

Mamiya A, Ichinose G (2019) Strategies that enforce linear payoff relationships under observation errors in repeated prisoner’s dilemma game. J Theor Biol 477:63–76

Ueda M, Tanaka T (2020) Linear algebraic structure of zero-determinant strategies in repeated games. PLoS ONE 15(4):e0230973

Hilbe C, Traulsen A, Sigmund K (2015) Partners or rivals? Strategies for the iterated prisoner’s dilemma. Games Econom Behav 92:41–52

Ichinose G, Masuda N (2018) Zero-determinant strategies in finitely repeated games. J Theor Biol 438:61–77

Mamiya A, Ichinose G (2020) Zero-determinant strategies under observation errors in repeated games. Phys Rev E 102:032115

McAvoy A, Hauert C (2017) Autocratic strategies for alternating games. Theor Popul Biol 113:13–22

Young RD (2017) Press-dyson analysis of asynchronous, sequential prisoner’s dilemma. arXiv preprint arXiv:1712.05048

Ueda M (2021) Tit-for-tat strategy as a deformed zero-determinant strategy in repeated games. J Phys Soc Jpn 90(2):025002

Ueda M (2021) Memory-two zero-determinant strategies in repeated games. R Soc Open Sci 8(5):202186

Adami C, Hintze A (2013) Evolutionary instability of zero-determinant strategies demonstrates that winning is not everything. Nat Commun 4(1):1–8

Hilbe C, Nowak MA, Sigmund K (2013) Evolution of extortion in iterated prisoner’s dilemma games. Proc Natl Acad Sci 110(17):6913–6918

Hilbe C, Nowak MA, Traulsen A (2013) Adaptive dynamics of extortion and compliance. PLoS ONE 8(11):1–9

Stewart AJ, Plotkin JB (2012) Extortion and cooperation in the prisoner’s dilemma. Proc Natl Acad Sci 109(26):10134–10135

Stewart AJ, Plotkin JB (2013) From extortion to generosity, evolution in the iterated prisoner’s dilemma. Proc Natl Acad Sci 110(38):15348–15353

Szolnoki A, Perc M (2014) Evolution of extortion in structured populations. Phys Rev E 89(2):022804

Hilbe C, Röhl T, Milinski M (2014) Extortion subdues human players but is finally punished in the prisoner’s dilemma. Nat Commun 5:3976

Wang Z, Zhou Y, Lien JW, Zheng J, Xu B (2016) Extortion can outperform generosity in the iterated prisoner’s dilemma. Nat Commun 7:11125

Beck C, Schögl F (1993) Thermodynamics of chaotic systems: an introduction. Cambridge University Press

Garrahan JP, Jack RL, Lecomte V, Pitard E, van Duijvendijk K, van Wijland F (2007) Dynamical first-order phase transition in kinetically constrained models of glasses. Phys Rev Lett 98(19):195702

Giardina C, Kurchan J, Peliti L (2006) Direct evaluation of large-deviation functions. Phys Rev Lett 96(12):120603

Jack RL, Sollich P (2010) Large deviations and ensembles of trajectories in stochastic models. Prog Theor Phys Suppl 184:304–317

Lecomte V, Appert-Rolland C, van Wijland F (2005) Chaotic properties of systems with Markov dynamics. Phys Rev Lett 95(1):010601

Nyawo PT, Touchette H (2017) A minimal model of dynamical phase transition. EPL (Europhysics Letters) 116(5):50009

Ueda M, Sasa SI (2015) Replica symmetry breaking in trajectories of a driven brownian particle. Phys Rev Lett 115(8):080605

Akin E (2016) The iterated prisoner’s dilemma: good strategies and their dynamics. Ergodic Theory, Advances in Dynamical Systems. pp 77–107

Duersch P, Oechssler J, Schipper BC (2012) Unbeatable imitation. Games Econom Behav 76(1):88–96

Traulsen A, Nowak MA (2006) Evolution of cooperation by multilevel selection. Proc Natl Acad Sci 103(29):10952–10955

Ueda M (2022) Unbeatable tit-for-tat as a zero-determinant strategy. J Phys Soc Jpn 91(5):054804

Usui Y, Ueda M (2021) Symmetric equilibrium of multi-agent reinforcement learning in repeated prisoner’s dilemma. Appl Math Comput 409:126370

Acknowledgements

We thank Genki Ichinose for valuable discussions.

Funding

This study was supported by JSPS KAKENHI Grant Number JP20K19884 and Inamori Research Grants.

Ethics declarations

Conflict of Interest

The author declares no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

1.1 Akin’s Lemma for Deterministic Memory-one Strategies

In this appendix, we summarize the results of Akin’s lemma for deterministic memory-one strategies in the repeated prisoner’s dilemma game. We use the same notation as in Sect. 6. We write a memory-one strategy of player 1 as

There are sixteen deterministic memory-one strategies [54]. The results of Akin’s lemma for these strategies are summarized in Table 1. We can see that each strategy indeed enforces a linear relation among the values of the limit probability distribution \(P^{*}\).
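The sixteen relations can also be generated mechanically. In Akin’s lemma, if \(T_1(1|\sigma)\) denotes the probability that player 1 plays action 1 after action profile \(\sigma\), then \(\sum_{\sigma} \left[ T_1(1|\sigma) - \delta_{\sigma_1,1} \right] P^{*}(\sigma) = 0\) for any strategy of player 2. The Python sketch below enumerates the deterministic strategies, prints the corresponding relation, and checks it against a time-averaged (Cesàro) distribution obtained against a random memory-one opponent; since the time average of \(\delta_{\sigma_1,1}\) telescopes, the residual is bounded by 1/steps even when the Markov chain is not ergodic. The labelling 1 = C and 2 = D, the state order, the symbol \(T_1(1|\sigma)\), and the function names are assumptions for illustration and may differ from the notation of Sect. 6 and Table 1.

```python
import itertools

import numpy as np

# Assumed state order (sigma_1, sigma_2), with action 1 = C and action 2 = D.
STATES = [(1, 1), (1, 2), (2, 1), (2, 2)]
PLAYER1_PLAYED_1 = np.array([1.0, 1.0, 0.0, 0.0])  # delta_{sigma_1, 1}


def akin_coefficients(p):
    """Coefficients c of the enforced relation sum_k c[k] P*(STATES[k]) = 0.

    p[k] is player 1's probability of playing action 1 after STATES[k].
    """
    return np.asarray(p, dtype=float) - PLAYER1_PLAYED_1


def format_relation(c):
    """Render the relation as text, e.g. '-1 P*(1, 2) +1 P*(2, 1) = 0'."""
    terms = [f"{int(ck):+d} P*{s}" for ck, s in zip(c, STATES) if ck != 0]
    return (" ".join(terms) if terms else "0") + " = 0"


def time_averaged_distribution(p, q, steps=5000, start=0):
    """Cesaro mean of the state distribution over `steps` rounds.

    q is player 2's memory-one strategy written from player 2's viewpoint,
    so its (1,2) and (2,1) entries are swapped into player 1's viewpoint.
    """
    p = np.asarray(p, dtype=float)
    q1 = np.array([q[0], q[2], q[1], q[3]])
    M = np.column_stack([p * q1, p * (1 - q1), (1 - p) * q1, (1 - p) * (1 - q1)])
    dist = np.zeros(4)
    dist[start] = 1.0
    total = np.zeros(4)
    for _ in range(steps):
        total += dist
        dist = dist @ M
    return total / steps


rng = np.random.default_rng(1)
q = rng.uniform(size=4)  # an arbitrary mixed memory-one strategy of player 2
for p in itertools.product([0, 1], repeat=4):
    c = akin_coefficients(p)
    residual = c @ time_averaged_distribution(p, q)
    print(p, format_relation(c), f"residual={residual:.1e}")
```

For instance, Tit-for-Tat, \(p=(1,0,1,0)\), yields \(P^{*}(1,2)=P^{*}(2,1)\), and Grim Trigger, \(p=(1,0,0,0)\), yields \(P^{*}(1,2)=0\).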

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ueda, M. Controlling Conditional Expectations by Zero-Determinant Strategies. Oper. Res. Forum 3, 48 (2022). https://doi.org/10.1007/s43069-022-00159-3