Abstract

In interdependent networks, nodes are connected to each other according to their failure dependency relations. Because of these dependencies, the failure of a single node in one of the networks of a system of interdependent networks can cause the failure of the entire system. Diagnosing the initial source of the failure in a collapsed system of interdependent networks is therefore an important problem. We study an online failure diagnosis problem defined on a collapsed system of interdependent networks in which the source of the failure is at an unknown node v. Each node of the system has a positive inspection cost, and the source of the failure is diagnosed when v is inspected. The objective is to provide an online algorithm that takes the dependency relations between nodes into account and diagnoses v with minimum total inspection cost. We address this problem from a worst-case competitive analysis perspective for the first time. In this approach, solutions obtained under incomplete information are compared, by means of the competitive ratio (CR), with the best solution obtainable under complete information. We give a lower bound on the CR of deterministic online algorithms and prove its tightness by providing an optimal deterministic online algorithm. Furthermore, we provide a lower bound on the expected CR of randomized online algorithms and prove its tightness by presenting an optimal randomized online algorithm. Finally, we prove that randomized algorithms obtain a better CR than deterministic algorithms in the expected sense for this online problem.

1 Introduction

Muro et al. [16] provided an extensive study of the recovery of interdependent networks. In that study, complex networks are defined as collections of nodes and links, in which the components of the system are represented as nodes and the links represent their interactions. Traditionally, network analysis focused on single isolated networks and neglected their interactions with, and dependencies on, other networks. Today, however, more research is devoted to the analysis of interdependent networks. The interdependencies and interactions among real-life networks such as transportation systems, power grids, communication infrastructure and gas transmission networks are beyond question and form the mainstream of research in network analysis. From a modelling perspective, interdependent networks have novel characteristics that were absent from classical network analysis models and that now need to be addressed. For example, a collection of interdependent networks is considerably more vulnerable than each of the individual networks within it [18]. In particular, minor local failures in one of the networks can trigger cascading failures across all of the networks, with potentially disastrous consequences. In other words, a small failure at a single node of one network within a collection of interdependent networks can cause the complete collapse of all the networks. A real-life example of such an event is the 2003 electrical blackout in Italy, in which the shutdown of a power station caused the failure of a number of Internet communication nodes, which in turn caused further shutdowns in the power distribution network [9].

As a result of such events and observations, recent studies of failures in networks aim to identify scenarios that might lead to system failures and focus on cascading failures in collections of networks with interdependency relations [9]. A major question in a post-failure situation is how to diagnose the source of the system collapse while taking the dependency relations between nodes into account. In this study, we consider a system of interdependent networks in which nodes are linked to each other according to their failure dependency relations. We analyze a post-failure problem in which a subset of the nodes of the system has collapsed and the cause of the breakdown is at an unknown node among the collapsed nodes. A positive inspection cost is assigned to each node of the system, and the source of the system breakdown is diagnosed when the inspection cost of its corresponding node is incurred. The goal of this study is to provide an online algorithm that diagnoses the source of the system failure with minimum total incurred inspection cost.

In our study, a competitive analysis of this problem is provided for the first time. Within a competitive analysis framework, the performance of an online algorithm is assessed by comparing its results with the offline optimal solution. Here, an online algorithm is an algorithm that solves an online problem under incomplete information, and the offline optimal solution is the optimal solution obtained when all information is known in advance, i.e., the optimal solution of the offline problem. The analysis concerns worst-case performance, so no probabilistic knowledge of future events is required in advance. Sleator and Tarjan [21] were the first to propose competitive analysis as a framework for analyzing online problems, whereas Karlin et al. [13] were the first to use the name "competitive analysis" for this kind of analysis.

Online algorithms are divided into two categories: deterministic and randomized. In a deterministic algorithm, the actions of the decision maker do not depend on probabilistic outcomes, whereas in a randomized algorithm the actions of the decision maker are taken according to some probability distribution [2]. Sleator and Tarjan [21] also introduced the competitive ratio (CR) as a notion for assessing the performance of online algorithms, and this notion has since been adopted by many researchers in the field. The CR of a deterministic online algorithm on an online problem is the maximum, over all instances of the problem, of the ratio between the objective function value obtained by the online algorithm and the optimal objective function value of the offline version of the problem. For a randomized algorithm, the expected CR is used instead: it is the maximum, over all problem instances, of the ratio between the expected objective function value of the online algorithm and the optimal objective function value of the offline version.
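As an illustration of the two definitions above, the following Python sketch computes the ratio-based measure over a finite list of instances. The helper `competitive_ratio` is hypothetical and not from the paper: each instance is summarized as a pair of the online cost and the offline optimal cost, and for a randomized algorithm the online cost is assumed to be its expected cost. Because the true CR is a maximum over all instances, evaluating it on a finite sample only yields a lower estimate.

```python
from typing import Iterable, Tuple


def competitive_ratio(results: Iterable[Tuple[float, float]]) -> float:
    """Worst ratio ALG(I) / OPT(I) over the given instances.

    Each pair is (online cost, offline optimal cost) for one instance.
    For a randomized algorithm, pass its expected cost as the online cost
    to obtain the (sampled) expected CR.
    """
    ratios = [alg / opt for alg, opt in results]
    if not ratios:
        raise ValueError("at least one instance is required")
    return max(ratios)


# Three instances: the worst one has ratio 3 / 1.
print(competitive_ratio([(3.0, 1.0), (4.0, 2.0), (5.0, 5.0)]))  # 3.0
```

Taking the maximum rather than an average is what makes the measure a worst-case guarantee.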

1.1 Problem Definition

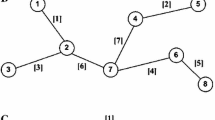

We study a system of m interdependent networks \(N_1, N_2, ..., N_m\) with node sets \(V_1, V_2, ..., V_m\), where nodes in \(V_l\) and \(V_k\) (\(l, k = 1,2,...,m\)) are connected to each other according to their failure dependencies, i.e., the failure of a node causes the failure of all nodes linked to it. We let \(V = V_1 \cup V_2 \cup ... \cup V_m\) be the global node set of the system. We consider a post-failure problem in which the nodes of a connected subset \(V' \subset V\) have failed and caused the system breakdown. The source of the breakdown is at an unknown node \(v \in V'\). A positive inspection cost \(c_i\) is associated with each node \(v_i \in V\). The goal is to propose an online algorithm that inspects v, and thereby diagnoses the source of the system collapse, with minimum total inspection cost. We refer to this problem as the online failure diagnosis problem in interdependent networks (OFDIN). In the offline version of the OFDIN, the failing node containing the source of the system collapse, i.e., v, is known. Hence, the cost (objective function value) of the offline optimum equals the inspection cost of v. Table 1 lists the notation used in our problem.
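A minimal Python sketch of an OFDIN instance follows, under our own representational assumptions (the class `OFDINInstance` and its field and method names are hypothetical): nodes carry positive inspection costs, failure dependencies are undirected edges, and the hidden source is stored only so that the offline optimum and the cost of a given inspection order can be evaluated. Because an inspection only reveals whether the inspected node is the source, a deterministic policy can be represented here by the order in which it inspects the failing nodes.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, Hashable, List, Set

Node = Hashable


@dataclass
class OFDINInstance:
    costs: Dict[Node, float]        # inspection cost c_i > 0 of every node in V
    edges: Set[FrozenSet[Node]]     # failure dependencies between nodes
    failing: Set[Node]              # failing node set V'
    source: Node                    # unknown source v (hidden from online algorithms)

    def __post_init__(self) -> None:
        if self.source not in self.failing:
            raise ValueError("the source must be a failing node")
        if any(c <= 0 for c in self.costs.values()):
            raise ValueError("inspection costs must be positive")

    def offline_opt(self) -> float:
        # With v known, the offline optimum inspects only v.
        return self.costs[self.source]

    def cost_of_order(self, order: List[Node]) -> float:
        # Total inspection cost incurred until the source is inspected.
        total = 0.0
        for node in order:
            total += self.costs[node]
            if node == self.source:
                return total
        raise ValueError("the order never inspects the source")
```

For example, with costs 1, 2 and 4 on nodes `a`, `b`, `c` and source `b`, the offline optimum is 2 while the order `a, b, c` costs 3.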

2 Literature Review

In our study, we consider a collapsed system of interdependent networks and aim to find an unknown node in the system that is the source of the system failure. Therefore, our literature review focuses on two categories of studies. First, we present an overview of articles that consider system failures in interdependent networks. Next, we discuss studies that investigate search problems defined on networks. Finally, we state our contributions in Section 2.3.

2.1 Related Studies on Interdependent Networks

Rinaldi et al. [17] were the first to introduce interdependencies among critical infrastructures, and they showed that cascading failures between interdependent networks can lead to catastrophic events. A theoretical framework for addressing interdependency among networks was proposed in [9]. By considering potential cascading failures, this framework provides a better understanding of robustness in interdependent networks. Moreover, that study derives exact analytical solutions to identify the set of critical nodes whose failure can cascade through the system of interdependent networks to the point where the system is fragmented into two separate networks. Chen et al. [10] analyzed cascading failures in real-life interdependent networks, focusing in particular on the interdependencies between communication networks and power grids. They proposed a model that identifies the nodes essential for robustness by critically analyzing the nodes of each network.

Zhang and Modiano [24] studied the interdependency of supply and demand networks. They addressed cascading failures by investigating the minimum number of supply nodes whose removal disconnects the demand network from the supply network. They developed algorithms to assess the connectivity of supply nodes on various network topologies and under different interdependency scenarios. Ajam et al. [1] investigated the dynamic interdependency between the status of a road network and critical demand locations in a post-disaster setting. In their study, some road segments are blocked by a disaster, and reopening them can restore accessibility to demand nodes. Muro et al. [16] introduced a recovery strategy for nodes in a collapsed network. They developed a framework for recovering from cascading failures in a collection of interdependent networks, supported by numerical analysis and an efficient recovery strategy. Shekhtman et al. [18] provided a review of frameworks that address the recovery and vulnerability of interdependent networks.

2.2 Related Search Problems on Networks

Search problems defined on single networks are closely related to our study. A large body of research addresses search problems in which a static hider is positioned at a point or node of a given network, and a moving agent/searcher aims to locate the hider in the shortest time by traversing the edges and inspecting the nodes of the network. The hiders in these search problems correspond to the failures in interdependent networks, and the searchers correspond to the agents responsible for identifying the failures within the interdependent networks. The searcher starts from a node of the network that is either chosen by the searcher or known and fixed in advance. The literature on this type of search problem is divided into two main streams. In the first stream, the hider may be positioned at any point of the network, either at a node or along an edge, and is located as soon as the searcher reaches that point. This stream corresponds to continuous search problems; see [4, 5, 11, 12].

The second stream assumes that the hider can only be positioned at one of the nodes of the network. Each node has an inspection cost, which may differ from node to node, and the hider is found only when the agent/searcher inspects the node where it is located. Hence, the agent/searcher bears both inspection and travel costs. This second stream corresponds to discrete search problems. Another assumption of discrete search problems is that the searcher does not need to inspect every node along its path: it can pass through nodes without inspecting them and, if the hider has not yet been found, return later to a node it only passed through in order to inspect it. Two decision rules are used in the literature on discrete search problems: 1) Bayes, in which the searcher minimizes the expected cost with respect to a prior distribution, and 2) minimax, in which the problem is modeled as a zero-sum game between the hider and the agent/searcher. The Bayes approach also takes into account the probability of overlooking the hider when the searcher inspects the node where the hider is positioned.

Loessner and Wegener [15] studied a variant of the second stream of search problems from the Bayes perspective, in which the starting node is chosen by the searcher. They obtained necessary and sufficient conditions for the existence of optimal strategies. Wegener [22] investigated the complexity of the same problem and proved that it is NP-hard. Kikuta [14] studied another variant of the second stream on finite cyclic networks from the minimax point of view, in which the starting node of the searcher is given. The problem was modeled as a two-person zero-sum game and solved for a special case, and properties of optimal strategies were derived for both the agent/searcher and the hider. Baston and Kikuta [6] investigated a version of the second stream from the minimax perspective in which the starting node is chosen by the searcher. They modeled this problem as a two-person zero-sum game and provided an upper bound on the value of the game. In addition, they proved a lower bound on the value of the game when the edge costs are uniform, and provided results for the special cases of star and line networks. In another article, Baston and Kikuta [7] analyzed a version of discrete search problems from the minimax perspective on directed, but not necessarily strongly connected, networks, where the starting node is again chosen by the searcher.

Recently, Shiri et al. [20] studied a discrete search-and-rescue problem on a network in which multiple searching agents aim to find multiple hiders positioned at nodes of the network. The hiders in this problem represent victims of a natural or man-made disaster. They studied the problem from a competitive analysis point of view and presented lower bounds on the CR of deterministic online algorithms. They also proposed heuristic online algorithms for the problem and tested them on randomly generated instances. Alpern and Gal [3] provide more information on search theory and related problems, including zero-sum search games between the searcher and the hider under different scenarios.

2.3 Our Contributions

In this study, we investigate an online failure diagnosis problem (OFDIN) on a system of interdependent networks from a competitive analysis perspective for the first time in the literature. Our contributions are listed below:

-

We provide worst-case optimal policies for the OFDIN.

-

We derive a lower bound on the CR of deterministic online algorithms and prove its tightness by proposing an optimal deterministic algorithm whose CR matches this lower bound.

-

We provide a lower bound on the expected CR of randomized online algorithms and show its tightness by developing an optimal randomized algorithm whose expected CR matches this lower bound.

-

We show that randomized algorithms obtain better CR values than deterministic algorithms for the OFDIN in the expected sense.

The remainder of this paper is organized as follows. In Section 3, we derive a lower bound on the CR of deterministic online algorithms and prove its tightness by introducing an optimal deterministic online algorithm in Section 4. In Section 5, we prove a tight lower bound on the expected CR of randomized online algorithms. We propose an optimal randomized online algorithm in Section 6. Concluding remarks are presented in Section 7.

3 Tight Lower Bound on the Competitive Ratio of Deterministic Algorithms

In the competitive analysis of an online problem, to prove that \(LB^{D}\) is a lower bound on the CR of deterministic algorithms, it is sufficient to show that for an arbitrary deterministic algorithm \(ALG^{D}\) there exists at least one instance \(I^{D}\) on which \(ALG^{D}\) cannot obtain a CR better than \(LB^{D}\). Hereafter, we let \(V = \{v_1,v_2,...,v_n\} = V_1 \cup V_2 \cup ... \cup V_m\). Recall that \(V^{'} \subset V\) is the subset of failing nodes in the problem definition.

Lemma 1

Let n denote the number of nodes in V. No deterministic online algorithm can achieve a CR smaller than n for the OFDIN.

Proof

We consider an instance of the problem in which all pairs of nodes in V are connected, i.e., every pair of nodes in V has a failure dependency, so the failure of a single node causes all the remaining nodes in the system to fail and \(V' = V\). We set the inspection cost of all n nodes in V equal to one. Since the nodes in V are completely connected and an inspection only reveals whether the inspected node is the source of the failure, an arbitrary deterministic online algorithm \(ALG^D\) applied to this instance corresponds to a fixed order in which the n nodes are inspected. Let v denote the node that contains the source of the system failure. For \(ALG^D\), we consider the instance in which v is the last node in this order. Hence, the objective function value of \(ALG^D\) is n. Since the inspection cost of every node is one, the optimal cost of the offline problem is one, and the CR of \(ALG^D\) is therefore at least n.
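The adversary argument in this proof can be checked mechanically. The Python sketch below, with the hypothetical helper name `adversarial_ratio`, takes the inspection order of any deterministic algorithm on the unit-cost, fully connected instance, hides the source at the last node of that order, and returns the resulting ratio. Enumerating all orders for a small n confirms that every deterministic order is forced to ratio n.

```python
from itertools import permutations
from typing import Hashable, Sequence


def adversarial_ratio(order: Sequence[Hashable]) -> float:
    """Ratio forced on a deterministic inspection order with unit costs."""
    source = order[-1]          # adversary places the source at the last inspected node
    online_cost = 0
    for node in order:
        online_cost += 1        # every inspection costs one
        if node == source:
            break
    offline_opt = 1             # the offline optimum inspects only the source
    return online_cost / offline_opt


n = 5
print({adversarial_ratio(p) for p in permutations(range(n))})  # {5.0}
```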

4 An Optimal Deterministic Online Algorithm

Next, we propose a deterministic online algorithm that achieves a CR of n for the OFDIN. We call this algorithm low-cost-first (LCF).

The LCF algorithm.

-

Input. Take m interdependent networks \(N_1, N_2, ..., N_m\) with node sets \(V_1, V_2, ..., V_m\), the edges (i.e., failure dependencies) between nodes in \(V_l\) and \(V_k\) for \(l,k = 1,2,...,m\), the inspection cost \(c_i\) of each node \(v_i\) in \(V = V_1 \cup V_2 \cup ... \cup V_m\), and the set of failing nodes \(V^{'} \subset V\) as input.

-

Step 1. Calculate the degree of each node in \(V'\). Go to Step 2.

-

Step 2. Inspect the node v in \(V'\) with the minimum inspection cost. If more than one node has the minimum inspection cost, let v be the one with the highest degree among them. If the source of the failure is diagnosed, terminate. Otherwise, go to Step 3.

-

Step 3. Set \(V= V \setminus \{v\}\) and \(V^{'} = V^{'} \setminus \{v\}\). Update the edges between nodes in V. Go to Step 2.
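The three steps of LCF can be sketched in Python as follows. This is a sketch under stated assumptions, not the authors' implementation: the function name `lcf` and the oracle `is_source` (which stands for inspecting a node and learning whether it is the source) are hypothetical, node degrees are recomputed from the current edge set after the update in Step 3, and any ties that remain after the degree rule are broken by the order of nodes in `costs`.

```python
from typing import Callable, Dict, Hashable, Iterable, List, Set, Tuple

Node = Hashable


def lcf(costs: Dict[Node, float],
        edges: Iterable[Tuple[Node, Node]],
        failing: Set[Node],
        is_source: Callable[[Node], bool]) -> Tuple[List[Node], float]:
    """Low-cost-first: returns the inspected nodes and the total cost."""
    # Step 1: adjacency lists, from which node degrees are read.
    adj: Dict[Node, Set[Node]] = {v: set() for v in costs}
    for a, b in edges:
        adj[a].add(b)
        adj[b].add(a)
    remaining = [v for v in costs if v in failing]  # V' in a fixed order
    inspected: List[Node] = []
    total = 0.0
    while remaining:
        # Step 2: cheapest node first; among ties, the highest current degree.
        v = min(remaining, key=lambda u: (costs[u], -len(adj[u])))
        inspected.append(v)
        total += costs[v]
        if is_source(v):
            return inspected, total
        # Step 3: delete v from V and V' and drop its incident edges.
        remaining.remove(v)
        for u in adj.pop(v):
            adj[u].discard(v)
    raise ValueError("the source is not among the failing nodes")


order, cost = lcf({"a": 2, "b": 1, "c": 1, "d": 3},
                  [("a", "b"), ("a", "c"), ("c", "d")],
                  {"a", "b", "c", "d"},
                  lambda v: v == "a")
print(order, cost)  # ['c', 'b', 'a'] 4.0
```

In the example, `b` and `c` share the minimum cost, so the degree rule selects `c` (degree 2) before `b` (degree 1).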

Below, we prove that the LCF algorithm achieves a CR of n for the OFDIN.

Theorem 1

The deterministic algorithm LCF is optimal and achieves the CR value of n for the OFDIN.

Proof

Suppose that the nodes \(v_1, v_2,..., v_{n^{'}} \in V'\) are labelled from 1 to \(n'\) in non-decreasing order of their inspection costs, i.e., in the order in which LCF inspects them according to Step 2, and that the source of the system failure is at the \(j^{th}\) node (\(j\in \{1,2,..., n^{'}\}\)), so that the optimal objective function value of the offline problem is \(c_j\). The corresponding objective function value of the LCF algorithm is \(c_1+c_2+...+c_j\). Hence, the ratio of LCF on this instance is \(\dfrac{\sum _{i=1}^{j} c_i}{c_j}\). Since \(c_1 \le c_2 \le ... \le c_j\), this ratio satisfies \(\dfrac{\sum _{i=1}^{j} c_i}{c_j} \le \dfrac{j\,c_j}{c_j} = j\). The theorem follows since \(j \le n^{'} \le n\) and, by Lemma 1, no deterministic online algorithm achieves a CR smaller than n.
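To see the bound of Theorem 1 numerically, the sketch below (hypothetical helper `lcf_worst_case_ratio`, using exact fractions and integer costs) evaluates the ratio \(\sum_{i=1}^{j} c_i / c_j\) for every possible source position j in the LCF order and returns the largest one. Equal costs attain the bound, while spread-out costs give a smaller worst-case ratio.

```python
from fractions import Fraction
from typing import Sequence


def lcf_worst_case_ratio(costs: Sequence[int]) -> Fraction:
    """max over j of (c_1 + ... + c_j) / c_j for costs sorted as LCF inspects them."""
    ordered = sorted(costs)
    prefix = 0
    worst = Fraction(0)
    for c in ordered:
        prefix += c                      # cost LCF pays if the source is this node
        worst = max(worst, Fraction(prefix, c))
    return worst


print(lcf_worst_case_ratio([1, 1, 1, 1]))  # 4
print(lcf_worst_case_ratio([1, 2, 4, 8]))  # 15/8
```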

In the following sections, we show that randomized algorithms can obtain a better expected CR than the CR of the best deterministic online algorithms for the OFDIN.

5 Tight Lower Bound on the Expected Competitive Ratio of Randomized Algorithms

Deriving a lower bound on the CR usually requires a set of specific instances on which no online algorithm can find solutions that are good compared to the optimal solution of the offline problem. However, deriving such lower bounds on the expected objective function value of randomized algorithms is usually considerably more difficult. Yao's Principle is a standard tool for proving lower bounds on the expected CR of randomized algorithms. The principle, proved in [23], states that the worst-case expected objective function value of a randomized algorithm is no better than the expected objective function value of the best deterministic algorithm against any fixed probability distribution over the problem instances. Yao's Principle therefore allows us to move the randomization from the online algorithm to the input of the problem. We apply Yao's Principle in the next lemma to provide a tight lower bound on the expected CR of randomized algorithms for the OFDIN.
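For reference, the form of Yao's Principle used in the next proof can be written as follows, where \(\mathcal{D}\) is any probability distribution over instances, \(ALG^{R}\) is a randomized algorithm, and the minimum ranges over deterministic algorithms \(ALG^{D}\). This is our own paraphrase of the standard statement, specialized to the case in which every instance in the support of \(\mathcal{D}\) has the same offline optimum \(OPT\):

\[
\max_{I}\;\frac{\mathbb{E}\big[ALG^{R}(I)\big]}{OPT(I)}
\;\ge\;
\frac{1}{OPT}\,\mathbb{E}_{I\sim\mathcal{D}}\,\mathbb{E}\big[ALG^{R}(I)\big]
\;\ge\;
\frac{1}{OPT}\,\min_{ALG^{D}}\;\mathbb{E}_{I\sim\mathcal{D}}\big[ALG^{D}(I)\big].
\]

The first inequality holds because a maximum is at least an average over the support of \(\mathcal{D}\); the second holds because \(ALG^{R}\) is a probability distribution over deterministic algorithms. With unit inspection costs, \(OPT = 1\), so the right-hand side is simply the expected cost of the best deterministic algorithm.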

Lemma 2

No randomized online algorithm can achieve an expected CR smaller than \(\dfrac{n+1}{2}\) for the OFDIN, where n denotes the number of nodes in V.

Proof

As in the proof of Lemma 1, we consider an instance of the problem in which all nodes in V are linked, i.e., \(V = V'\) and the failure of a single node causes the failure of all other nodes in V. We set the inspection cost of all n nodes in V to one, so the optimal objective function value of the offline problem is one. We choose \(i^{*} \in \{1,2,...,n\}\) uniformly at random and assume that the source of the system failure is at node \(i^{*}\). An arbitrary deterministic online algorithm \(ALG^D\) applied to this instance inspects node \(i^{*}\), and thus finds the source of the failure, at the ith iteration with probability \(\dfrac{1}{n}\) for each \(i=1,2,...,n\). If \(ALG^D\) terminates at the ith iteration, it incurs a cost of i. Therefore, the expected objective function value of \(ALG^D\) is \(\dfrac{1}{n} \sum _{i=1}^{n} i = \dfrac{n+1}{2}\). We have thus shown that, under the uniform distribution over the input, the expected cost of any deterministic algorithm on this instance of the OFDIN is \(\dfrac{n+1}{2}\). Since the optimal objective function value of the offline problem is one, the lemma follows by Yao's Principle [23].
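The expectation computed in this proof can be verified by brute force. The sketch below (hypothetical helper name, exact arithmetic via `fractions`) fixes the inspection order of a deterministic algorithm on the unit-cost instance, averages its cost over a uniformly random source, and confirms that every one of the n! orders gives \(\dfrac{n+1}{2}\).

```python
from fractions import Fraction
from itertools import permutations
from typing import Hashable, Sequence


def expected_cost_uniform_source(order: Sequence[Hashable]) -> Fraction:
    """Expected unit-cost inspection cost when the source is uniform over the order."""
    n = len(order)
    expected = Fraction(0)
    for position, _node in enumerate(order, start=1):
        # If the source is the node at this position, the cost is `position`.
        expected += Fraction(position, n)
    return expected


n = 4
values = {expected_cost_uniform_source(p) for p in permutations(range(n))}
print(values)  # {Fraction(5, 2)}
```

The result does not depend on the order at all, which is exactly why no deterministic algorithm can do better against this distribution.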

In the next section, we propose a randomized online algorithm whose expected CR meets the obtained lower bound of \(\dfrac{n+1}{2}\).

6 An Optimal Randomized Online Algorithm

Before describing our optimal randomized algorithm, we need the following definition. This property is defined in a different form for the online k-Canadian traveler problem in [8]; below, we give the definition in a generalized form.

Definition 1

The elements \(\theta _1,\theta _2,...,\theta _n\) which are associated with costs \(c_1,c_2,...,c_n\) have the similar costs structure if for all \(i = 1,2,...,n\) it follows that

To devise our randomized algorithm, we apply the probability distribution that is presented in the following lemma. The proof of this lemma can be found in [9].

Lemma 3

Assume that the elements \(\theta _1,\theta _2,...,\theta _n\) with costs \(c_1 \le c_2 \le ... \le c_{n}\) satisfy the costs structure given in Definition 1. Then the probability distribution \(\Omega _{n}= \lambda ^{*}p^{'}\) belongs to the polyhedron \(Q_n\), where \(\Omega _{n}\) and \(p^{'}\) denote n-vectors, \(\lambda ^{*} = 1 \big/ \sum _{i=1}^{n} p^{'}_{i} \in [0,1]\),

and

Next, we present our optimal randomized algorithm. Since the algorithm uses the similar costs property, we call it ANALOG.

6.1 The Optimal Randomized Online Algorithm (ANALOG)

-

Input. Take m interdependent networks \(N_1, N_2, ..., N_m\) with node sets \(V_1, V_2, ..., V_m\), the edges (i.e., failure dependencies) between nodes in \(V_l\) and \(V_k\) for \(l,k = 1,2,...,m\), the inspection cost \(c_i\) of each node \(v_i\) in \(V = V_1 \cup V_2 \cup ... \cup V_m\), and the set of failing nodes \(V^{'} \subset V\) as input.

-

Step 1. Let \(n'\) give the number of nodes in \(V'\). Label the nodes in \(V'\) from 1 to \(n'\) in a way that \(c_1 \le c_2 \le ... \le c_{n'}\). Define S as the selection list. Define \(S^{'}\) as the search list. Add all \(n'\) nodes in \(V'\) to \(S^{'}\). Go to Step 2.

-

Step 2. For any set of t nodes with inspection costs \(c_1 \le c_2 \le ... \le c_t\) satisfying the similar costs property, let \(\Omega _{t}=(p_1,p_2,...,p_t) \in Q_{t}\) be the probability distribution given in Lemma 3. Go to Step 3.

-

Step 3. Set \(S=\emptyset\). Add nodes from \(S^{'}\) to S in non-decreasing order of their inspection costs until adding the next node would violate the similar costs property. Go to Step 4.

-

Step 4. Let t denote the number of nodes in S. Choose one of the t nodes in S according to \(\Omega _t\) and inspect it. If the source of the system failure is diagnosed, terminate. Otherwise, remove the inspected node from \(S^{'}\), \(V'\) and V, and go to Step 3.
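The control flow of ANALOG can be sketched in Python as below. Definition 1 and the distribution of Lemma 3 are not reproduced in full here, so this sketch does not implement them: the similar-costs test and the distribution \(\Omega_t\) are passed in as caller-supplied functions (`similar_costs` and `omega` are hypothetical parameter names, as are `analog` and the oracle `is_source`), and we assume that a single node always forms a valid selection list, as in the base case of Theorem 2. The sketch therefore covers only the list handling and sampling of Steps 1, 3 and 4; plugging in a different predicate or distribution gives a different algorithm, not ANALOG.

```python
import random
from typing import Callable, Dict, Hashable, List, Optional, Sequence, Tuple

Node = Hashable


def analog(costs: Dict[Node, float],
           failing: Sequence[Node],
           is_source: Callable[[Node], bool],
           similar_costs: Callable[[List[float]], bool],
           omega: Callable[[List[float]], List[float]],
           rng: Optional[random.Random] = None) -> Tuple[List[Node], float]:
    """Skeleton of ANALOG; `similar_costs` and `omega` stand in for Definition 1 and Lemma 3."""
    if rng is None:
        rng = random.Random()
    # Step 1: search list S' in non-decreasing order of inspection cost.
    search = sorted(failing, key=lambda v: costs[v])
    inspected: List[Node] = []
    total = 0.0
    while search:
        # Step 3: rebuild the selection list S from the cheapest remaining nodes,
        # stopping before the first node that would break the similar costs property.
        selection = [search[0]]
        for v in search[1:]:
            if not similar_costs([costs[u] for u in selection + [v]]):
                break
            selection.append(v)
        # Step 4: draw one node of S according to Omega_t and inspect it.
        weights = omega([costs[u] for u in selection])
        v = rng.choices(selection, weights=weights, k=1)[0]
        inspected.append(v)
        total += costs[v]
        if is_source(v):
            return inspected, total
        search.remove(v)
    raise ValueError("the source is not among the failing nodes")
```

For example, with the placeholder predicate `lambda cs: max(cs) <= 2 * min(cs)` (a toy rule, not Definition 1) and uniform weights, only nodes whose cost is at most twice the cheapest remaining cost can be drawn at each step.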

To prove that the ANALOG algorithm is optimal, we utilize the following lemma which is proven in [19].

Lemma 4

Suppose that the elements \(\theta _1,\theta _2,...,\theta _n\) with costs \(c_1 \le c_2 \le ... \le c_n\) have the similar costs property. It holds that \(\sum _{i=1}^{n}p_{i}c_{i} \le \sum _{i=1}^{n} \dfrac{1}{n}c_i\), where \(\Omega _n=(p_1,p_2,...,p_n) \in Q_n\) is the probability distribution which is defined in Lemma 3.

Theorem 2

The randomized algorithm ANALOG is optimal and obtains an expected CR of \(\dfrac{n+1}{2}\) for the OFDIN, where n denotes the number of nodes in V.

Proof

Note that \(n^{'}\) (\(n^{'} \le n\)) denotes the number of failing nodes in \(V'\). We prove the theorem by induction on n.

-

Base case. When \(n = n^{'}=1\), the only failing node in the system is inspected with probability \(p_1 = 1\). Hence, the costs of ANALOG and the offline optimum equal \(c_1\). Thus, the CR of ANALOG is one.

-

Inductive step. For \(n \ge n^{'} \ge 2\), suppose that the nodes in \(V'\) are labelled from 1 to \(n'\) such that \(c_1\le c_2 \le ... \le c_{n'}\), and let node \(i^{*}\) (\(i^{*} \in \{1,2,...,n^{'} \}\)) be the node containing the source of the system failure. Hence, the cost of the offline problem is \(c_{i^{*}}\). When Step 3 of the algorithm is executed for the first time, nodes are moved from the search list (\(S^{'}\)) to the selection list (S) in non-decreasing order of inspection cost until adding the next node would violate the similar costs property. Let us denote the nodes in the selection list at this point by 1, 2, ..., t (\(t \le n^{'} \le n\)), with inspection costs \(c_1,c_2,...,c_{t}\). The algorithm then moves to Step 4, and two cases arise, which we consider below.

-

Case 1. The selection list does not include node \(i^{*}\). In this case, one of the nodes 1, 2, ..., t is inspected according to the probability distribution \(\Omega _{t}=(p_1,p_2,...,p_{t})\) (Lemma 3). Hence, ANALOG incurs an expected cost of \(\sum _{i=1}^{t} p_ic_i\) by the end of the first execution of Step 4, and \(\sum _{i=1}^{t} p_ic_i \le \sum _{j=1}^{t} \dfrac{1}{t} c_j\) by Lemma 4. Since the inspected node is not \(i^{*}\), the source is not diagnosed and the inspected node is removed from V. Let \(C^{n-1}\) denote the expected cost of ANALOG from the completion of the first execution of Step 4 (i.e., when \(\vert V \vert = n-1\)) until the algorithm terminates. By the induction hypothesis, \(C^{n-1}\) is at most \((\dfrac{n}{2})c_{i^{*}}\) (e.g., for \(n=2\), \(C^1 = c_{i^{*}}\) by the base case). Thus, the expected cost of ANALOG is at most \(\sum _{j=1}^{t} \dfrac{1}{t} c_j + (\dfrac{n}{2})c_{i^{*}}\). Since node \(i^{*}\) is not in the selection list, it does not satisfy the similar costs property together with the t nodes already in the selection list. Hence, \(\sum _{j=1}^{t} \dfrac{1}{t} c_j < \dfrac{c_{i^{*}}}{2}\) by Definition 1. Consequently, the expected cost of ANALOG is less than \(\dfrac{c_{i^{*}}}{2} + \dfrac{n}{2}c_{i^{*}} = \dfrac{n+1}{2}c_{i^{*}}\), and the expected CR of ANALOG is at most \(\dfrac{n+1}{2}\).

-

Case 2. The selection list includes node \(i^{*}\). In this case, one of the nodes 1, 2, ..., t is inspected according to the probability distribution \(\Omega _{t}=(p_1,p_2,...,p_{t})\) (Lemma 3). If the source of the system failure is not diagnosed, ANALOG removes the inspected node from the search list; otherwise, the source of the breakdown is found and the algorithm terminates. Suppose that the source of the failure is not diagnosed and a node is removed from V, i.e., \(\vert V \vert = n-1\). Let \(C^{n-1}\) denote the expected objective function value of ANALOG from the completion of the first execution of Step 4 until the algorithm terminates. Since \(t \le n^{'} \le n\), an upper bound on the expected CR can be given as

By the induction hypothesis, \(C^{n-1}\) is at most \((\dfrac{n}{2})c_{i^{*}}\). As a result, the expected CR has an upper bound given by

We show that the expected CR is at most \(\dfrac{n+1}{2}\) for all \(i^{*} = 1,2,..., n\), i.e.,

for all \(i = 1,2,..., n\), or equivalently

for all \(i = 1,2,..., n\). Since the probability distribution \({\Omega _{n}} = (p_1,p_2,...,p_{n})\) belongs to the polyhedron \(Q_{n}\), the proof holds by the description of \(Q_{n}\) in Lemma 3.

Corollary 1

The optimal randomized algorithm ANALOG obtains a better CR when compared to the best deterministic algorithm LCF in the expected sense.

Proof

The corollary follows since \(\dfrac{n+1}{2} \le n\) for every positive integer n, with strict inequality whenever \(n \ge 2\).

7 Concluding Remarks

We studied a post-failure problem defined on a system of interdependent networks in which nodes are linked to each other according to their failure dependency relations. In this problem, a subset of the nodes of the system has collapsed and the source of the system failure is at an unknown node among the failing nodes. A positive inspection cost is assigned to each node in the system, and the source of the system breakdown is diagnosed when the inspection cost of its corresponding node is incurred. The goal of this study was to devise an online algorithm that diagnoses the source of the system breakdown with minimum total incurred inspection cost.

-

We proposed deterministic and randomized online algorithms that are optimal with respect to worst-case scenarios.

-

We proposed a lower bound on the competitive ratio of deterministic online algorithms.

-

We proved the tightness of our lower bound by developing an optimal deterministic algorithm whose competitive ratio matches the suggested tight lower bound.

-

We also derived a lower bound on the expected competitive ratio for online algorithms with an online nature.

-

We proved the tightness of this lower bound by providing an optimal randomized algorithm with a matching expected competitive ratio.

-

Our results confirm that online algorithms with a randomized nature can obtain better competitive ratio in comparison to deterministic algorithms in the expected sense for the OFDIN.

A summary of the results is given in Table 2.

One advantage of competitive analysis is that it provides solutions for the OFDIN that are optimal and robust against worst-case scenarios. Since probability distributions are impractical to obtain in several real-life applications of the OFDIN, another advantage is that no probability distribution is required to model the uncertainty in the system; instead, solutions are constructed dynamically as new information is revealed. As stated above, however, competitive analysis focuses on the worst-case performance of solutions. Hence, a disadvantage of this approach is that the resulting solutions may perform poorly on average over all instances of the problem.
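For reference, the worst-case measures discussed in this paragraph can be written in the standard form for a minimization problem. The notation \(\mathcal{I}\) (set of instances, here all possible locations of the failure source), \(\mathrm{ALG}(I)\) (total inspection cost of the online algorithm on instance \(I\)) and \(\mathrm{OPT}(I)\) (cost of the best solution under complete information) is ours, and the expected version is stated, as is usual, against an adversary that fixes \(I\) without seeing the algorithm's random choices.

```latex
\[
\mathrm{CR}(\mathrm{ALG}) \;=\; \sup_{I \in \mathcal{I}} \frac{\mathrm{ALG}(I)}{\mathrm{OPT}(I)},
\qquad
\mathrm{CR}_{\mathbb{E}}(\mathrm{ALG}) \;=\; \sup_{I \in \mathcal{I}} \frac{\mathbb{E}\bigl[\mathrm{ALG}(I)\bigr]}{\mathrm{OPT}(I)} .
\]
```

Because both measures take a supremum over all instances, an algorithm can be optimal under them while its average ratio over instances is comparatively weak, which is exactly the trade-off described above.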

As a future research direction, a variant of the OFDIN with a switch cost between the interdependent networks can be investigated. Another interesting direction is to study the OFDIN with multiple inspecting agents. In that setting, the level of communication among the agents can also be investigated: for instance, the agents may communicate fully and immediately, informing each other about visited nodes, or their communication may be partial or delayed. Finally, applying the proposed algorithms to real systems of interdependent networks and real-world case studies is another direction for future research on this online problem.

Change history

15 July 2021

A Correction to this paper has been published: https://doi.org/10.1007/s43069-021-00083-y

References

Ajam M, Akbari V, Salman FS (2019) Minimizing latency in post-disaster road clearance operations. Eur J Oper Res 277(3):1098–1112

Albers S (2003) Online algorithms: A survey. Math Program 97:3–26

Alpern S, Gal S (2003) The Theory of Search Games and Rendezvous. Internat Ser Oper Res Management Sci, Boston

Alpern S, Baston V, Gal S (2009) Searching symmetric networks with utilitarian-postman paths. Networks 53:392–402

Angelopoulos S, Arsenio D, Durr C (2017) Infinite linear programming and online searching with turn cost. Theor Comput Sci 670:11–22

Baston V, Kikuta K (2013) Search games on networks with travelling and search costs and with arbitrary searcher starting points. Networks 62:72–79

Baston V, Kikuta K (2014) Search games on a network with travelling and search costs. Int J Game Theory 44:347–365

Bender M, Westphal S (2015) An optimal randomized online algorithm for the k-Canadian traveller problem on node-disjoint paths. J Comb Optim 30:87–96

Buldyrev SV, Parshani R, Paul G, Stanley HE, Havlin S (2010) Catastrophic cascade of failures in interdependent networks. Nature 464:1025–1028

Chen Z, Wu J, Xia Y, Zhang X (2018) Robustness of interdependent power grids and communication networks: A complex network perspective. IEEE Trans Circuits Syst Express Briefs 65:115–119

Demaine ED, Fekete SP, Gal S (2006) Online searching with turn cost. Theor Comput Sci 361:342–355

Jaillet P, Stafford M (2001) Online searching. Oper Res 49:501–515

Karlin AR, Manasse MS, Rudolph L, Sleator DD (1988) Competitive snoopy caching. Algorithmica 3:79–119

Kikuta K (2004) A search game on a cyclic graph. Nav Res Logist 51:977–993

Loessner U, Wegener I (1982) Discrete sequential search with positive switch cost. Math Oper Res 7:426–440

Muro MD, Rocca CEL, Stanley HE, Havlin S, Braunstein L (2016) Recovery of interdependent networks. Sci Rep 6:22834

Rinaldi S, Peerenboom J, Kelly T (2001) Identifying, understanding, and analyzing critical infrastructure interdependencies. IEEE Control Syst Mag 21:11–25

Shekhtman LM, Danziger MM, Havlin S (2016) Recent advances on failure and recovery in networks of networks. Chaos Solitons Fractals 90:28–36

Shiri D, Salman FS (2019) Competitive analysis of randomized online strategies for the multi-agent k-Canadian traveler problem. J Comb Optim 37:848–865

Shiri D, Akbari V, Salman FS (2020) Online routing and scheduling of search-and-rescue teams. OR Spectrum 42(3):755–784

Sleator D, Tarjan R (1985) Amortized efficiency of list update and paging rules. Commun ACM 28:202–208

Wegener I (1985) Optimal search with positive switch cost is NP-hard. Inf Process Lett 21:49–52

Yao AC (1977) Probabilistic computations: Towards a unified measure of complexity. Proceedings of the 18th Annual IEEE Symposium on the Foundations of Computer Science, p 222–227

Zhang J, Modiano E (2018) Connectivity in interdependent networks. IEEE/ACM Trans Networking 26:2090–2103

Funding

The authors have not received any financial support for the authorship, publication, and research of this article.

Ethics declarations

Conflicts of Interest

The authors declare that they have no conflict of interest.

Additional information

This article is part of Topical Collection on Optimization, Control, and Machine Learning for Interdependent Networks

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Shiri, D., Akbari, V. Online Failure Diagnosis in Interdependent Networks. SN Oper. Res. Forum 2, 10 (2021). https://doi.org/10.1007/s43069-021-00055-2

DOI: https://doi.org/10.1007/s43069-021-00055-2