Abstract

We provide necessary and sufficient conditions for a pair S, T of Hilbert space operators in order that they satisfy \(S^*=T\) and \(T^*=S\). As a main result we establish an improvement of von Neumann’s classical theorem on the positive self-adjointness of \(S^*S\) for two variables. We also give some new characterizations of self-adjointness and skew-adjointness of operators, not requiring their symmetry or skew-symmetry, respectively.


1 Introduction

The adjoint of an unbounded linear operator was first introduced by John von Neumann in [5] as a fundamental ingredient of a rigorous mathematical framework for quantum mechanics. By definition, the adjoint of a densely defined linear transformation S acting between two Hilbert spaces is the operator T with the largest possible domain such that

$$\begin{aligned} (Sx\,|\,y)=(x\,|\,Ty) \end{aligned}$$(1)

holds for every x from the domain of S and every y from the domain of T. The adjoint operator, denoted by \(S^*\), is therefore “maximal” in the sense that it extends every operator T that has property (1). On the other hand, every restriction T of \(S^*\) fulfills that adjoint relation. Thus, in order to decide whether an operator T is identical with the adjoint of S, it seems reasonable to restrict ourselves to investigating those operators T that have property (1). This issue was explored in detail in [16] by means of an operator matrix built from S and T.

In the present paper we continue to examine the conditions under which an operator T is equal to the adjoint \(S^*\) of S. However, as opposed to the situation treated in the cited paper, we do not assume that S and T are adjoint to each other in the sense of (1). Observe that condition (1) is equivalent to the identity

$$\begin{aligned} S^*\cap T=T. \end{aligned}$$

So, still under condition (1), T is equal to the adjoint of S if and only if \(S^*\cap T=S^*\). In the present paper we guarantee the equality \(S^*=T\) by imposing new conditions, weaker than (1), in terms of kernel and range spaces. Roughly speaking, we only require that the intersection of the graphs of \(S^*\) and T be, in a sense, “large enough”. We also establish a criterion in terms of the norm of the resolvent of the operator matrix \(M_{S,T}\) introduced in Section 4.

As an application we gain some characterizations of self-adjoint, skew-adjoint and unitary operators, thereby generalizing some analogous results by Nieminen [7] (cf. also [9]).

2 Preliminaries

Throughout this paper, \({\mathcal {H}}\) and \({\mathcal {K}}\) will denote real or complex Hilbert spaces. By an operator S between \({\mathcal {H}}\) and \({\mathcal {K}}\) we mean a linear map \(S:{\mathcal {H}}\rightarrow {\mathcal {K}}\) whose domain \({\mathrm{dom\,}}S\) is a linear subspace of \({\mathcal {H}}\). We stress that, unless otherwise indicated, linear operators are not assumed to be densely defined. Consequently, the adjoint of such an operator can in general only be interpreted as a “multivalued operator”, that is, a linear relation. Therefore we collect here some basic notions and facts on linear relations.

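A linear relation between two Hilbert spaces \({\mathcal {H}}\) and \({\mathcal {K}}\) is nothing but a linear subspace S of the Cartesian product \({\mathcal {H}}\times {\mathcal {K}}\); accordingly, a closed linear relation is just a closed subspace of \({\mathcal {H}}\times {\mathcal {K}}\). To a linear relation S we associate the following subspaces

$$\begin{aligned} {\mathrm{dom\,}}S&{:}{=}\{h\in {\mathcal {H}}\,:\,(h,k)\in S \text{ for some } k\in {\mathcal {K}}\},\\ {\mathrm{ran\,}}S&{:}{=}\{k\in {\mathcal {K}}\,:\,(h,k)\in S \text{ for some } h\in {\mathcal {H}}\},\\ \ker \,S&{:}{=}\{h\in {\mathcal {H}}\,:\,(h,0)\in S\},\\ {{\,\mathrm{mul}\,}}S&{:}{=}\{k\in {\mathcal {K}}\,:\,(0,k)\in S\}, \end{aligned}$$

which are referred to as the domain, range, kernel and multivalued part of S, respectively. Every linear operator, when identified with its graph, is a linear relation with trivial multivalued part. Conversely, a linear relation whose multivalued part consists only of the vector 0 is (the graph of) an operator.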

A notable advantage of linear relations, compared to operators, is that the adjoint can be defined without any further assumption on the domain. Namely, the adjoint of a linear relation S is again a linear relation \(S^*\) between \({\mathcal {K}}\) and \({\mathcal {H}}\), given by

$$\begin{aligned} S^*{:}{=}(VS)^{\perp }\subseteq {\mathcal {K}}\times {\mathcal {H}}. \end{aligned}$$

Here, \(V:{\mathcal {H}}\times {\mathcal {K}}\rightarrow {\mathcal {K}}\times {\mathcal {H}}\) stands for the ‘flip’ operator \(V(h,k){:}{=}(k,-h)\). It is seen immediately that \(S^*\) is automatically a closed linear relation and satisfies the useful identity

On the other hand, a closed linear relation S entails the following orthogonal decomposition of the product Hilbert space \({\mathcal {K}}\times {\mathcal {H}}\):

$$\begin{aligned} {\mathcal {K}}\times {\mathcal {H}}=S^*\oplus VS. \end{aligned}$$

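Note that an equivalent definition of \(S^*\) can be given in terms of the inner product as follows:

$$\begin{aligned} S^*=\{(k',h')\in {\mathcal {K}}\times {\mathcal {H}}\,:\,(k\,|\,k')=(h\,|\,h') \text{ for all } (h,k)\in S\}. \end{aligned}$$

In other words, \((k',h')\in S^*\) holds if and only if

$$\begin{aligned} (k\,|\,k')=(h\,|\,h'),\qquad (h,k)\in S. \end{aligned}$$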

In particular, if S is a densely defined operator, then the relation \(S^*\) coincides with the usual adjoint operator of S. Recall also the dual identities

$$\begin{aligned} \ker \,S^*=({\mathrm{ran\,}}S)^{\perp },\qquad {{\,\mathrm{mul}\,}}S^*=({\mathrm{dom\,}}S)^{\perp }, \end{aligned}$$

where the second equality tells us that the adjoint of a densely defined linear relation is always a (single valued) operator. For further information on linear relations we refer the reader to [1, 2, 4, 10].
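The following sketch makes these notions concrete in the simplest possible setting: real finite-dimensional spaces \({\mathcal {H}}={\mathbb {Q}}^n\), \({\mathcal {K}}={\mathbb {Q}}^m\) with exact rational arithmetic (a simplifying assumption of ours; the paper works in arbitrary Hilbert spaces). A linear relation is stored as a list of spanning pairs (h, k), and its adjoint is computed from the inner-product description above, i.e. as the orthogonal complement of VS. The helper names `nullspace`, `dim` and `adjoint` are hypothetical. The dual identities are then checked through the elementary formulas dim mul R = dim R - dim dom R and dim ker R = dim R - dim ran R, valid for every finite-dimensional linear relation R.

```python
from fractions import Fraction


def nullspace(rows, ncols):
    """Basis of {x in Q^ncols : r . x = 0 for every r in rows} (exact Gauss-Jordan)."""
    m = [[Fraction(v) for v in r] for r in rows]
    pivots, r = [], 0
    for c in range(ncols):
        if r == len(m):
            break
        p = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if p is None:
            continue                      # no pivot in this column: free variable
        m[r], m[p] = m[p], m[r]
        piv = m[r][c]
        m[r] = [v / piv for v in m[r]]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                f = m[i][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        pivots.append(c)
        r += 1
    basis = []
    for fc in (c for c in range(ncols) if c not in pivots):
        x = [Fraction(0)] * ncols
        x[fc] = Fraction(1)
        for i, pc in enumerate(pivots):
            x[pc] = -m[i][fc]
        basis.append(x)
    return basis


def dim(vectors, size):
    """Dimension of the span of `vectors` inside Q^size."""
    return size - len(nullspace(vectors, size))


def adjoint(S, n, m):
    """Basis of S* for a relation S in Q^n x Q^m given by spanning pairs (h, k).

    (k', h') lies in S* iff (k | k') - (h | h') = 0 for every (h, k) in S,
    i.e. S* is the orthogonal complement of V S, where V(h, k) = (k, -h).
    """
    rows = [list(k) + [-v for v in h] for h, k in S]
    return [(x[:m], x[m:]) for x in nullspace(rows, m + n)]


# S maps e1 to (1, 1); it is NOT densely defined, since dom S = span{e1}.
n = m = 2
S = [((1, 0), (1, 1))]
S_star = adjoint(S, n, m)

# For a relation R: dim mul R = dim R - dim dom R and dim ker R = dim R - dim ran R.
mul_S_star = len(S_star) - dim([k for k, _ in S_star], m)
ker_S_star = len(S_star) - dim([h for _, h in S_star], n)

print(mul_S_star, n - dim([h for h, _ in S], n))   # mul S* vs dim (dom S)^perp
print(ker_S_star, m - dim([k for _, k in S], m))   # ker S* vs dim (ran S)^perp
```

Both lines print `1 1`: since S is not densely defined, its adjoint has a one-dimensional multivalued part, as predicted by \({{\,\mathrm{mul}\,}}S^*=({\mathrm{dom\,}}S)^{\perp }\).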

3 Operators which are adjoint of each other

Arens [1] characterized the equality \(S=T\) of two linear relations in terms of their kernel and range (see Corollary 2). Below we provide a similar characterization of \(S\subset T\). Observe that the intersection \(S\cap T\) of the linear relations S and T is again a linear relation, but this is not true for their union \(S\cup T\) as it is not a linear subspace in general. The linear span of \(S\cup T\) will be denoted by \(S\vee T\), which in turn is a linear relation.

Proposition 1

Let S and T be linear relations between two vector spaces. Then the following three statements are equivalent:

- (i) \(S\subset T\),
- (ii) \(\ker \, S\subset \ker \, T\) and \({\mathrm{ran\,}}S\subset {\mathrm{ran\,}}(S\cap T)\),
- (iii) \({\mathrm{ran\,}}S\subset {\mathrm{ran\,}}T\) and \(\ker \, (S\vee T)\subset \ker \, T\).

Proof

It is clear that (i) implies both (ii) and (iii). Suppose now (ii) and let \((h,k)\in S\). Then there exists u with \((u,k)\in S\cap T\). Consequently, \((h-u,0)\in S\), i.e., \(h-u\in \ker \, S\subset \ker \, T\). Hence

$$\begin{aligned} (h,k)=(h-u,0)+(u,k)\in T, \end{aligned}$$

which yields \(S\subset T\), so (ii) implies (i). Finally, assume (iii) and take \((h,k)\in S\). Then \((u,k)\in T\) for some u and hence \((h-u,0)=(h,k)-(u,k)\in S\vee T\), i.e., \(h-u\in \ker \, (S\vee T)\subset \ker \, T\). Consequently,

$$\begin{aligned} (h,k)=(h-u,0)+(u,k)\in T, \end{aligned}$$

which yields \(S\subset T\). \(\square\)
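Neither half of condition (ii) can be dropped. The following two elementary examples in \({\mathcal {H}}={\mathcal {K}}={\mathbb {C}}\), chosen by us purely for illustration, show this; in both cases \(S\not \subset T\):

$$\begin{aligned} &S{:}{=}{\mathbb {C}}\times \{0\},\ T{:}{=}\{(0,0)\}: &&{\mathrm{ran\,}}S=\{0\}={\mathrm{ran\,}}(S\cap T),\quad \text{but}\quad \ker \,S={\mathbb {C}}\not \subset \{0\}=\ker \,T;\\ &S{:}{=}\{(z,z):z\in {\mathbb {C}}\},\ T{:}{=}\{(z,2z):z\in {\mathbb {C}}\}: &&\ker \,S=\{0\}=\ker \,T,\quad \text{but}\quad {\mathrm{ran\,}}S={\mathbb {C}}\not \subset \{0\}={\mathrm{ran\,}}(S\cap T). \end{aligned}$$

In the first example \(S\vee T=S\) and \({\mathrm{ran\,}}S\subset {\mathrm{ran\,}}T\), so it also shows that the kernel condition in (iii) is essential.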

Corollary 1

Let S and T be two linear relations between two vector spaces. The following three statements are equivalent:

- (i) \(S=T\),
- (ii) \(\ker \, S=\ker T\) and \({\mathrm{ran\,}}S+{\mathrm{ran\,}}T\subseteq {\mathrm{ran\,}}(S\cap T)\),
- (iii) \({\mathrm{ran\,}}S={\mathrm{ran\,}}T\) and \(\ker \, (S\vee T)\subseteq \ker \, (S\cap T)\).

Corollary 2

Let S and T be linear relations between two vector spaces such that \(S\subset T\). Then the following assertions are equivalent:

- (i) \(S=T\),
- (ii) \(\ker \, S=\ker T\) and \({\mathrm{ran\,}}S={\mathrm{ran\,}}T\).

In [17, Theorem 2.9] M. H. Stone established a simple yet effective sufficient condition for an operator to be self-adjoint: a densely defined symmetric operator S is necessarily self-adjoint provided it is surjective. In that case, it is invertible with bounded and self-adjoint inverse due to the Hellinger–Toeplitz theorem. Here, density of the domain can be dropped from the hypotheses: a surjective symmetric operator is automatically densely defined (see also [16, Corollary 6.7] and [15, Lemma 2.1]).

Below we establish a generalization of Stone’s result for a pair of operators.

Proposition 2

Let \({\mathcal {H}},{\mathcal {K}}\) be real or complex Hilbert spaces and let \(S:{\mathcal {H}}\rightarrow {\mathcal {K}}\) and \(T:{\mathcal {K}}\rightarrow {\mathcal {H}}\) be (not necessarily densely defined or closed) linear operators such that

$$\begin{aligned} {\mathrm{ran\,}}(S\cap T^*)={\mathcal {K}}\qquad \text{and}\qquad {\mathrm{ran\,}}(T\cap S^*)={\mathcal {H}}. \end{aligned}$$

Then S and T are both densely defined operators such that \(S^*=T\) and \(T^*=S\).

Proof

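For brevity, introduce the following notations:

$$\begin{aligned} S_0{:}{=}S\cap T^*,\qquad T_0{:}{=}T\cap S^*. \end{aligned}$$

Observe that \(S_0\) and \(T_0\) are adjoint to each other in the sense that

$$\begin{aligned} (S_0h\,|\,k)=(h\,|\,T_0k),\qquad h\in {\mathrm{dom\,}}S_0,\ k\in {\mathrm{dom\,}}T_0. \end{aligned}$$

We claim that \(S_0\) and \(T_0\) are densely defined: let \(z\in ({\mathrm{dom\,}}S_0)^{\perp }\); then, by surjectivity, \(z=T_0v\) for some \(v\in {\mathrm{dom\,}}T_0\). Hence

$$\begin{aligned} 0=(z\,|\,h)=(T_0v\,|\,h)=(v\,|\,S_0h),\qquad h\in {\mathrm{dom\,}}S_0, \end{aligned}$$

which, as \({\mathrm{ran\,}}S_0={\mathcal {K}}\), implies \(v=0\) and also \(z=0\). The same argument shows that \(T_0\) is densely defined too. We see now that S and \(T^*\) are densely defined operators such that

$$\begin{aligned} \ker \,S=\{0\}=\ker \,T^* \end{aligned}$$

and \({\mathrm{ran\,}}(S\cap T^*)={\mathcal {K}}\). Indeed, \(\ker \,T^*=({\mathrm{ran\,}}T)^{\perp }\subseteq ({\mathrm{ran\,}}T_0)^{\perp }=\{0\}\), while the inclusion \(T_0\subset S^*\) gives \(\ker \,S\subseteq ({\mathrm{ran\,}}T_0)^{\perp }=\{0\}\). Corollary 1 applied to S and \(T^*\) implies that \(S=T^*\). The same argument yields the equality \(S^*=T\). \(\square\)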

Corollary 3

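Let \(S:{\mathcal {H}}\rightarrow {\mathcal {K}}\) and \(T:{\mathcal {K}}\rightarrow {\mathcal {H}}\) be (not necessarily densely defined) surjective operators such that

$$\begin{aligned} (Sx\,|\,y)=(x\,|\,Ty),\qquad x\in {\mathrm{dom\,}}S,\ y\in {\mathrm{dom\,}}T. \end{aligned}$$

Then S and T are both densely defined operators such that \(S^*=T\) and \(T^*=S\).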

From Proposition 2 we obtain a sufficient condition for self-adjointness without the assumptions of being symmetric or densely defined:

Corollary 4

Let \({\mathcal {H}}\) be a Hilbert space and let \(S:{\mathcal {H}}\rightarrow {\mathcal {H}}\) be a linear operator such that \({\mathrm{ran\,}}(S\cap S^*)={\mathcal {H}}\). Then S is densely defined and self-adjoint.

Proof

Apply Proposition 2 with \(T{:}{=}S\). \(\square\)

Clearly, if S is a symmetric operator, then \(S\cap S^*=S\). Hence we retrieve [17, Theorem 2.9] by M. H. Stone as an immediate consequence (cf. also [16, Corollary 6.7]):

Corollary 5

Every surjective symmetric operator is densely defined and self-adjoint.

In the next result we give a necessary and sufficient condition for an operator S to be identical with the adjoint of a given operator T.

Theorem 1

Let \({\mathcal {H}},{\mathcal {K}}\) be real or complex Hilbert spaces and let \(S:{\mathcal {H}}\rightarrow {\mathcal {K}}\) and \(T:{\mathcal {K}}\rightarrow {\mathcal {H}}\) be (not necessarily densely defined or closed) linear operators. The following two statements are equivalent:

- (i) T is densely defined and \(S=T^*\),
- (ii) the following two conditions hold:
  - (a) \(({\mathrm{ran\,}}T)^{\perp }=\ker \, S\),
  - (b) \({\mathrm{ran\,}}S+{\mathrm{ran\,}}T^*\subset {\mathrm{ran\,}}(S\cap T^*)\).

Proof

It is obvious that (i) implies (ii). Assume now (ii) and, for the sake of brevity, introduce the operator

$$\begin{aligned} S_0{:}{=}S\cap T^*. \end{aligned}$$

We start by establishing that T is densely defined. Let \(g\in ({\mathrm{dom\,}}T)^{\perp }\); then \((0,g)\in T^*\), i.e., \(g\in {\mathrm{ran\,}}T^*\). By (ii) (b),

$$\begin{aligned} g=S_0h=Sh \end{aligned}$$

for some \(h\in {\mathrm{dom\,}}S_0\). Then it follows that \((h,Sh)\in T^*\) and therefore

$$\begin{aligned} (Tk\,|\,h)=(k\,|\,Sh)=(k\,|\,g)=0,\qquad k\in {\mathrm{dom\,}}T, \end{aligned}$$

whence we infer that \(h\in ({\mathrm{ran\,}}T)^{\perp }\). Again by (ii) (a) we have \(h\in \ker \, S\) and thus \(g=Sh=0\). This proves that T is densely defined and, as a consequence, \(T^*\) is an operator. Next we prove that

$$\begin{aligned} T^*\subset S. \end{aligned}$$(3)

To see this consider \(g\in {\mathrm{dom\,}}T^*\). By (ii) (b),

$$\begin{aligned} T^*g=S_0h=T^*h \end{aligned}$$

for some \(h\in {\mathrm{dom\,}}S_0\). Then it follows that \(T^*(g-h)=0\), i.e.,

$$\begin{aligned} g-h\in \ker \,T^*=({\mathrm{ran\,}}T)^{\perp }=\ker \,S. \end{aligned}$$

Consequently, \(g=(g-h)+h\in {\mathrm{dom\,}}S\) and \(Sg=Sh=T^*g\), which proves (3). It only remains to show that the converse inclusion

$$\begin{aligned} S\subset T^* \end{aligned}$$(4)

holds as well. For let \(g\in {\mathrm{dom\,}}S\) and, by (ii) (b), choose \(h\in {\mathrm{dom\,}}S_0\) such that

$$\begin{aligned} Sg=S_0h=Sh. \end{aligned}$$

Then \(g-h\in \ker \, S=({\mathrm{ran\,}}T)^\perp =\ker \, T^*\), whence we get \(g=(g-h)+h\in {\mathrm{dom\,}}T^*\) and \(T^*g=T^*h=Sg\), which proves (4). \(\square\)

A celebrated theorem by von Neumann [6] states that \(S^*S\) and \(SS^*\) are positive and self-adjoint operators provided that S is a densely defined and closed operator between \({\mathcal {H}}\) and \({\mathcal {K}}\). In that case, \(I+S^*S\) and \(I+SS^*\) are both surjective. In [12] it has been proved that the converse is also true: if \(I+S^*S\) and \(I+SS^*\) are both surjective operators, then S is necessarily closed (cf. also [3]). Below, as the main result of the paper, we establish an improvement of von Neumann’s theorem:

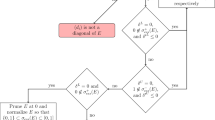

Theorem 2

Let \({\mathcal {H}},{\mathcal {K}}\) be real or complex Hilbert spaces, let \(S:{\mathcal {H}}\rightarrow {\mathcal {K}}\) and \(T:{\mathcal {K}}\rightarrow {\mathcal {H}}\) be linear operators, and introduce the operators \(S_0{:}{=}S\cap T^*\) and \(T_0{:}{=}T\cap S^*\). The following statements are equivalent:

- (i) S, T are both densely defined and they are adjoint of each other: \(S^*=T\) and \(T^*=S\),
- (ii) \({\mathrm{ran\,}}(I+T_0S_0)={\mathcal {H}}\) and \({\mathrm{ran\,}}(I+S_0T_0)={\mathcal {K}}\).

Proof

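It is clear that (i) implies (ii). To prove the converse implication observe first that

$$\begin{aligned} (S_0h\,|\,k)=(h\,|\,T_0k),\qquad h\in {\mathrm{dom\,}}S_0,\ k\in {\mathrm{dom\,}}T_0. \end{aligned}$$

We start by showing that \(S_0\) is densely defined. Take a vector \(g\in ({\mathrm{dom\,}}S_0)^{\perp }\); then, by (ii), there is \(u\in {\mathrm{dom\,}}(T_0S_0)\) such that \(g=u+T_0S_0u\). Consequently,

$$\begin{aligned} 0=(g\,|\,u)=\Vert u\Vert ^2+(T_0S_0u\,|\,u)=\Vert u\Vert ^2+\Vert S_0u\Vert ^2, \end{aligned}$$

whence \(u=0\), and therefore also \(g=0\). It is proved analogously that \(T_0\) is densely defined too, and therefore the adjoint relations \(S_0^{*}\) and \(T_0^{*}\) are operators such that \(S_0\subset T_0^*\) and \(T_0\subset S_0^*\).

We are going to prove now that \(S_0\) and \(T_0\) are adjoint of each other, i.e.,

$$\begin{aligned} T_0^*=S_0\qquad \text{and}\qquad S_0^*=T_0. \end{aligned}$$(5)

Consider a vector \(g\in {\mathrm{dom\,}}T_0^*\) and, by (ii), take \(u\in {\mathrm{dom\,}}(T_0S_0)\) and \(v\in {\mathrm{dom\,}}(S_0T_0)\) such that

$$\begin{aligned} g=u+T_0S_0u\qquad \text{and}\qquad T_0^*g=v+S_0T_0v. \end{aligned}$$

Since u is in \({\mathrm{dom\,}}T_0^*\) (recall that \(S_0\subset T_0^*\)), we infer that \(T_0S_0u=g-u\in {\mathrm{dom\,}}T_0^*\) and hence

$$\begin{aligned} T_0^*g=T_0^*u+T_0^*T_0S_0u=S_0u+T_0^*T_0S_0u. \end{aligned}$$

Since \(S_0T_0v=T_0^*T_0v\), it follows then that

$$\begin{aligned} (v-S_0u)+T_0^*T_0(v-S_0u)=0, \end{aligned}$$

and taking the inner product with \(v-S_0u\) gives \(\Vert v-S_0u\Vert ^2+\Vert T_0(v-S_0u)\Vert ^2=0\), which yields \(v=S_0u\). As a consequence we obtain that

$$\begin{aligned} g=u+T_0S_0u=u+T_0v, \end{aligned}$$

and therefore that \(g\in {\mathrm{dom\,}}S_0\), because both u and \(T_0v\) belong to \({\mathrm{dom\,}}S_0\). This proves the first equality of (5). The second one is proved in a similar way.

Now we can complete the proof easily: since \(S_0\subset T^*\) and \(T_0\subset T\), it follows that

$$\begin{aligned} S_0\subset T^*\subset T_0^*=S_0, \end{aligned}$$

whence \(T^*=T_0^*=S_0\), and therefore \(T^*\subset S\). On the other hand, \(T_0\subset S^*\) implies

$$\begin{aligned} S\subset S^{**}\subset T_0^*=T^*, \end{aligned}$$

whence we conclude that \(S=T^*\). It can be proved in a similar way that \(T=S^*\). \(\square\)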

As an immediate consequence we conclude the following result:

Corollary 6

Let \({\mathcal {H}}\) and \({\mathcal {K}}\) be real or complex Hilbert spaces and let \(S:{\mathcal {H}}\rightarrow {\mathcal {K}}\) be a densely defined operator. The following statements are equivalent:

- (i) S is closed,
- (ii) \(S^*S\) and \(SS^*\) are self-adjoint operators,
- (iii) \({\mathrm{ran\,}}(I+S^*S)={\mathcal {H}}\) and \({\mathrm{ran\,}}(I+SS^*)={\mathcal {K}}\).

Proof

Apply Theorem 2 with \(T{:}{=}S^*\). \(\square\)
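In finite dimensions every operator defined on the whole space is closed, so Corollary 6 predicts that \(I+S^*S\) and \(I+SS^*\) are onto. The sketch below illustrates this for one real matrix with exact rationals (the example matrix and the helper names `solve`, `matmul`, etc. are ours, not the paper's): it solves \((I+S^*S)x=f\) and \((I+SS^*)y=g\) and checks the identity \((x\,|\,(I+S^*S)x)=\Vert x\Vert ^2+\Vert Sx\Vert ^2\), the same coercivity computation that drives the density argument in the proof of Theorem 2.

```python
from fractions import Fraction


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def identity(n):
    return [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]


def add(A, B):
    return [[a + b for a, b in zip(r, s)] for r, s in zip(A, B)]


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def solve(A, f):
    """Solve A x = f exactly by Gauss-Jordan elimination (A square and invertible)."""
    n = len(A)
    aug = [[Fraction(v) for v in row] + [Fraction(fi)] for row, fi in zip(A, f)]
    for c in range(n):
        p = next(i for i in range(c, n) if aug[i][c] != 0)  # StopIteration if A is singular
        aug[c], aug[p] = aug[p], aug[c]
        piv = aug[c][c]
        aug[c] = [v / piv for v in aug[c]]
        for i in range(n):
            if i != c and aug[i][c] != 0:
                fac = aug[i][c]
                aug[i] = [a - fac * b for a, b in zip(aug[i], aug[c])]
    return [row[n] for row in aug]


# Example operator S : Q^3 -> Q^2 (illustrative data only).
S = [[Fraction(1), Fraction(2), Fraction(0)],
     [Fraction(0), Fraction(1), Fraction(-1)]]
St = transpose(S)                      # real adjoint S^T : Q^2 -> Q^3
A = add(identity(3), matmul(St, S))    # I + S^T S on Q^3
B = add(identity(2), matmul(S, St))    # I + S S^T on Q^2

f, g = [Fraction(1), Fraction(0), Fraction(3)], [Fraction(2), Fraction(-1)]
x, y = solve(A, f), solve(B, g)
Ax = [dot(row, x) for row in A]
By = [dot(row, y) for row in B]
Sx = [dot(row, x) for row in S]
print(Ax == f, By == g)                          # both right-hand sides are attained
print(dot(x, Ax) == dot(x, x) + dot(Sx, Sx))     # (x | (I + S^T S) x) = |x|^2 + |Sx|^2
```

Of course, the finite-dimensional case is only a sanity check: the content of Corollary 6 lies in the unbounded setting, where surjectivity of \(I+S^*S\) and \(I+SS^*\) is no longer automatic.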

In the ensuing theorem we provide a range-kernel characterization of those operators T that are identical with the adjoint \(S^*\) of a densely defined symmetric operator S. We stress that neither closedness of T nor density of its domain is assumed; on the contrary, these properties are derived from the other conditions.

Theorem 3

Let \({\mathcal {H}}\) be a real or complex Hilbert space, let \(T:{\mathcal {H}}\rightarrow {\mathcal {H}}\) be a (not necessarily densely defined or closed) linear operator, and let \(T_0{:}{=}T\cap T^*\). The following two statements are equivalent:

- (i) there exists a densely defined symmetric operator S such that \(S^*=T\),
- (ii) the following two conditions hold:
  - (a) \(\ker \, T=({\mathrm{ran\,}}T^*)^{\perp }\),
  - (b) \({\mathrm{ran\,}}T_0={\mathrm{ran\,}}T^{**}={\mathrm{ran\,}}T^*\).

In particular, if any of the equivalent conditions (i), (ii) is satisfied, then T is a densely defined and closed operator such that \(T^*\subset T\).

Proof

It is straightforward that (i) implies (ii), so we only prove the converse. We start by proving that T is densely defined. Take \(g\in ({\mathrm{dom\,}}T)^{\perp }\); then \(g\in {{\,\mathrm{mul}\,}}T^*\subseteq {\mathrm{ran\,}}T^*\). By (ii) (b), there exists \(h\in {\mathrm{dom\,}}T_0\) such that \(g=T_0h=Th\). Consequently, \((h,g)\in T^*\) and for every \(f\in {\mathrm{dom\,}}T\),

$$\begin{aligned} (Tf\,|\,h)=(f\,|\,g)=0, \end{aligned}$$

which yields \(h\in ({\mathrm{ran\,}}T)^{\perp }\). Observe that (ii) (a) and (b) together imply that

$$\begin{aligned} ({\mathrm{ran\,}}T)^{\perp }\subseteq ({\mathrm{ran\,}}T_0)^{\perp }=({\mathrm{ran\,}}T^*)^{\perp }=\ker \,T, \end{aligned}$$(6)

whence we infer that \(h\in \ker \, T\) and therefore that \(g=Th=0\). This means that T is densely defined, so \(T^*\) is a (single valued) operator. Our next claim is that

$$\begin{aligned} T^*\subset T. \end{aligned}$$(7)

To this end, let \(g\in {\mathrm{dom\,}}T^*\); then \(T^*g=T_0h\) for some \(h\in {\mathrm{dom\,}}T_0\). From the inclusion \(T_0\subset T^*\) we conclude that \(g-h\in \ker \, T^*=({\mathrm{ran\,}}T)^\perp \subseteq \ker \,T\) by (6), thus \(g=(g-h)+h\in {\mathrm{dom\,}}T\) and

$$\begin{aligned} Tg=T(g-h)+Th=T_0h=T^*g, \end{aligned}$$

which proves (7). Next we show that \(T^*\) is densely defined too, i.e., T is closable. To this end consider a vector \(g\in ({\mathrm{dom\,}}T^*)^\perp ={{\,\mathrm{mul}\,}}T^{**}\). Since \({{\,\mathrm{mul}\,}}T^{**}\subseteq {\mathrm{ran\,}}T^{**}\), by (ii) (b) we can find a vector \(h\in {\mathrm{dom\,}}T_0\) such that \(g=T_0h\). For every \(k\in {\mathrm{dom\,}}T^*\),

$$\begin{aligned} (T^*k\,|\,h)=(k\,|\,Th)=(k\,|\,g)=0, \end{aligned}$$

thus \(h\in ({\mathrm{ran\,}}T^*)^{\perp }\). By (ii) (a) we infer that \(h\in \ker \, T\), hence \(g=Th=0\) and thus \(({\mathrm{dom\,}}T^*)^\perp =\{0\}\), as claimed. Finally we show that T is closed. Take \(g\in {\mathrm{dom\,}}T^{**}\); then, according to assumption (ii) (b), \(T^{**}g=Th\) for some \(h\in {\mathrm{dom\,}}T_0\subseteq {\mathrm{dom\,}}T\). Hence \(g-h\in \ker \, T^{**}=({\mathrm{ran\,}}T^*)^{\perp }\), thus \(g-h\in \ker \, T\) because of (ii) (a). Consequently, \(g=(g-h)+h\in {\mathrm{dom\,}}T\), which proves the identity \(T=T^{**}\). Summing up, \(S{:}{=}T^*\) is a densely defined operator such that \(S\subset T=S^*\). In other words, T is identical with the adjoint \(S^*\) of the symmetric operator S. \(\square\)

4 Characterizations involving resolvent norm estimations

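Let \({\mathcal {H}}\) and \({\mathcal {K}}\) be real or complex Hilbert spaces. For two given linear operators \(S:{\mathcal {H}}\rightarrow {\mathcal {K}}\) and \(T:{\mathcal {K}}\rightarrow {\mathcal {H}}\), let us consider the operator matrix

$$\begin{aligned} M_{S,T}{:}{=}\begin{pmatrix} 0 & -T\\ S & 0 \end{pmatrix} \end{aligned}$$

acting on the product Hilbert space \({\mathcal {H}}\times {\mathcal {K}}\). More precisely, \(M_{S,T}\) is an operator acting on its domain \({\mathrm{dom\,}}M_{S,T}{:}{=}{\mathrm{dom\,}}S\times {\mathrm{dom\,}}T\) by

$$\begin{aligned} M_{S,T}(h,k){:}{=}(-Tk,Sh),\qquad h\in {\mathrm{dom\,}}S,\ k\in {\mathrm{dom\,}}T. \end{aligned}$$

Assume that a real or complex number \(\lambda \in {\mathbb {K}}\) belongs to the resolvent set \(\rho (M_{S,T})\), which means that

$$\begin{aligned} M_{S,T}-\lambda I:{\mathrm{dom\,}}M_{S,T}\rightarrow {\mathcal {H}}\times {\mathcal {K}} \end{aligned}$$

has an everywhere defined bounded inverse. In that case, for brevity’s sake, we introduce the notation

$$\begin{aligned} R_{S,T}(\lambda ){:}{=}(M_{S,T}-\lambda I)^{-1} \end{aligned}$$

for the corresponding resolvent operator.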
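For matrices the operator matrix can be written down explicitly. The sketch below assumes the block form \(M_{S,T}(h,k)=(-Tk,Sh)\) on \({\mathbb {Q}}^n\times {\mathbb {Q}}^m\) (the placement of the minus sign is our assumption) and uses a hypothetical helper `operator_matrix`. It checks that \(M_{S,T}\) is skew-symmetric when T is the real adjoint \(S^{\mathrm T}\) of S, but not for another choice of T. Skew-symmetry is what matters here: if \((Mx\,|\,x)=0\), then \(\Vert (M-t)x\Vert ^2=\Vert Mx\Vert ^2+t^2\Vert x\Vert ^2\ge t^2\Vert x\Vert ^2\), which is the resolvent estimate of the type studied below.

```python
from fractions import Fraction


def operator_matrix(S, T):
    """Matrix of M_{S,T}(h, k) = (-T k, S h) on Q^n x Q^m (assumed sign convention).

    S is an m x n matrix (Q^n -> Q^m) and T is an n x m matrix (Q^m -> Q^n).
    """
    m, n = len(S), len(S[0])
    top = [[Fraction(0)] * n + [-Fraction(v) for v in T[i]] for i in range(n)]
    bottom = [[Fraction(v) for v in S[i]] + [Fraction(0)] * m for i in range(m)]
    return top + bottom


def transpose(A):
    return [list(col) for col in zip(*A)]


def is_skew(M):
    """True iff M^T = -M."""
    return transpose(M) == [[-v for v in row] for row in M]


S = [[1, 2, 0],
     [0, 1, -1]]                                  # S : Q^3 -> Q^2 (example data)
M = operator_matrix(S, transpose(S))              # T := S^T, the adjoint of S
M_bad = operator_matrix(S, [[2 * v for v in row] for row in transpose(S)])  # T := 2 S^T
print(is_skew(M), is_skew(M_bad))
```

This prints `True False`: only the adjoint pair produces a skew-symmetric operator matrix.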

In the present section, we establish some criteria, in terms of norms of the resolvent operator \(R_{S,T}(\lambda )\), under which the operators S and T are adjoint of each other. Our approach is motivated by the classical paper of T. Nieminen [7] (cf. also [9]). We emphasize that our framework is more general than that of [7] in several ways: we do not assume that the operators under consideration are densely defined or closed, and the underlying space may be real or complex.

Theorem 4

Let \(S:{\mathcal {H}}\rightarrow {\mathcal {K}}\) and \(T:{\mathcal {K}}\rightarrow {\mathcal {H}}\) be linear operators between the real or complex Hilbert spaces \({\mathcal {H}}\) and \({\mathcal {K}}\). The following assertions are equivalent:

- (i) S and T are densely defined such that \(S^*=T\) and \(T^*=S\),
- (ii) every non-zero real number t belongs to the resolvent set of \(M_{S,T}\) and

$$\begin{aligned} \Vert R_{S,T}(t) \Vert \le \frac{1}{{|}t{|}},\qquad \forall t\in {\mathbb {R}}, t\ne 0. \end{aligned}$$(8)

Proof

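Let us start by proving that (i) implies (ii). Assume therefore that S is densely defined and closed and that \(T=S^*\). Consider a non-zero real number t and a pair of vectors \(h\in {\mathrm{dom\,}}S\) and \(k\in {\mathrm{dom\,}}S^*\); then we have

$$\begin{aligned} \Vert (M_{S,T}+t)(h,k)\Vert ^2&=\Vert th-S^*k\Vert ^2+\Vert Sh+tk\Vert ^2\\ &=t^2\big (\Vert h\Vert ^2+\Vert k\Vert ^2\big )+\Vert Sh\Vert ^2+\Vert S^*k\Vert ^2+2t\big [{\mathrm{Re}\,}(Sh\,|\,k)-{\mathrm{Re}\,}(h\,|\,S^*k)\big ]\\ &=t^2\Vert (h,k)\Vert ^2+\Vert Sh\Vert ^2+\Vert S^*k\Vert ^2\ge t^2\Vert (h,k)\Vert ^2, \end{aligned}$$

which implies that \(M_{S,T}+t\) is bounded from below and that the norm of its inverse \(R_{S,T}(-t)\) satisfies (8). However, it is not yet clear that \(R_{S,T}(-t)\) is everywhere defined. Since we have

$$\begin{aligned} (M_{S,T}+t)(h,k)=(th,Sh)+(-S^*k,tk),\qquad h\in {\mathrm{dom\,}}S,\ k\in {\mathrm{dom\,}}S^*, \end{aligned}$$

it follows that

$$\begin{aligned} {\mathrm{ran\,}}(M_{S,T}+t)=t^{-1}S\oplus W\big (t^{-1}S\big )^*, \end{aligned}$$(9)

where operators are identified with their graphs and W is the ‘flip’ operator \(W(k,h){:}{=}(-h,k)\). Since S is densely defined and closed according to our hypotheses, the subspace on the right-hand side of (9) is equal to \({\mathcal {H}}\times {\mathcal {K}}\). This proves statement (ii).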

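For the converse direction, observe that (8) implies

$$\begin{aligned} \Vert (M_{S,T}-t)x\Vert \ge {|}t{|}\,\Vert x\Vert ,\qquad x\in {\mathrm{dom\,}}M_{S,T},\ t\in {\mathbb {R}},\ t\ne 0. \end{aligned}$$

Expanding the square of the left-hand side, we conclude that

$$\begin{aligned} \Vert M_{S,T}x\Vert ^2-2t\,{\mathrm{Re}\,}(M_{S,T}x\,|\,x)\ge 0 \end{aligned}$$

for every \(t\in {\mathbb {R}}\). Consequently, \({\mathrm{Re}\,}(M_{S,T}x\,|\,x)=0\); writing \(x=(h,k)\), this means that

$$\begin{aligned} {\mathrm{Re}\,}(Sh\,|\,k)={\mathrm{Re}\,}(h\,|\,Tk),\qquad h\in {\mathrm{dom\,}}S,\ k\in {\mathrm{dom\,}}T. \end{aligned}$$

In the real Hilbert space case it is now straightforward that S and T are adjoint to each other in the sense of (1). In the complex case, replace h by ih to get

$$\begin{aligned} {\mathrm{Im}\,}(Sh\,|\,k)={\mathrm{Im}\,}(h\,|\,Tk),\qquad h\in {\mathrm{dom\,}}S,\ k\in {\mathrm{dom\,}}T. \end{aligned}$$

So, in both the real and complex cases, we obtain that \(S\subset T^*\) and \(T\subset S^*\). With the notation of Theorem 2 this means that \(S_0=S\) and \(T_0=T\). Since we have

$$\begin{aligned} M_{S,T}^2(h,k)=(-TSh,-STk),\qquad h\in {\mathrm{dom\,}}TS,\ k\in {\mathrm{dom\,}}ST, \end{aligned}$$

we conclude that

$$\begin{aligned} (I-M_{S,T})(I+M_{S,T})=I-M_{S,T}^2=\begin{pmatrix} I+TS & 0\\ 0 & I+ST \end{pmatrix} \end{aligned}$$

is a surjective operator onto \({\mathcal {H}}\times {\mathcal {K}}\) (both factors on the left are bijections onto \({\mathcal {H}}\times {\mathcal {K}}\), because \(\pm 1\in \rho (M_{S,T})\)), which entails \({\mathrm{ran\,}}(I+TS)={\mathcal {H}}\) and \({\mathrm{ran\,}}(I+ST)= {\mathcal {K}}\). An immediate application of Theorem 2 completes the proof. \(\square\)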
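The key estimate behind the implication (i) \(\Rightarrow\) (ii) of Theorem 4 is the identity \(\Vert (M_{S,T}+t)(h,k)\Vert ^2=t^2\Vert (h,k)\Vert ^2+\Vert Sh\Vert ^2+\Vert S^*k\Vert ^2\) for \(T=S^*\). The sketch below verifies it exactly on a grid of rational vectors for one real matrix, assuming the block form \(M_{S,T}(h,k)=(-Tk,Sh)\) (our assumption about the sign convention); the helper names `matvec`, `norm2` and `shifted` are hypothetical.

```python
from fractions import Fraction
from itertools import product


def matvec(A, x):
    return [sum(Fraction(a) * b for a, b in zip(row, x)) for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


def norm2(x):
    """Squared Euclidean norm, computed exactly."""
    return sum(v * v for v in x)


def shifted(S, t, h, k):
    """(M_{S,T} + t)(h, k) = (t h - T k, S h + t k) with T := S^T (assumed block form)."""
    Tk, Sh = matvec(transpose(S), k), matvec(S, h)
    return [t * a - b for a, b in zip(h, Tk)] + [a + t * b for a, b in zip(Sh, k)]


S = [[1, 2, 0],
     [0, 1, -1]]                          # S : Q^3 -> Q^2 (example data)
grid = [Fraction(v) for v in (-1, 0, 1)]
identity_holds, bound_holds = True, True
for t in (Fraction(-3), Fraction(1, 2), Fraction(5)):
    for h in product(grid, repeat=3):
        for k in product(grid, repeat=2):
            lhs = norm2(shifted(S, t, h, k))
            x2 = norm2(h) + norm2(k)
            rhs = t * t * x2 + norm2(matvec(S, h)) + norm2(matvec(transpose(S), k))
            identity_holds = identity_holds and lhs == rhs
            bound_holds = bound_holds and lhs >= t * t * x2
print(identity_holds, bound_holds)
```

Both flags come out `True`; the mixed terms \(\pm 2t\,(Sh\,|\,k)\) cancel exactly because \(T=S^{\mathrm T}\).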

As an immediate consequence of Theorem 4 we can establish the following characterizations of self-adjoint, skew-adjoint and unitary operators.

Corollary 7

Let \({\mathcal {H}}\) be a real or complex Hilbert space. For a linear operator \(S:{\mathcal {H}}\rightarrow {\mathcal {H}}\) the following assertions are equivalent:

- (i) S is densely defined and self-adjoint,
- (ii) every non-zero real number t is in the resolvent set of \(M_{S,S}\) and

$$\begin{aligned} \Vert R_{S,S}(t) \Vert \le \frac{1}{{|}t{|}},\qquad \forall t\in {\mathbb {R}}, t\ne 0. \end{aligned}$$(10)

Proof

Apply Theorem 4 with \(T{:}{=}S\) to conclude the desired equivalence. \(\square\)

Corollary 8

Let \({\mathcal {H}}\) be a real or complex Hilbert space. For a linear operator \(S:{\mathcal {H}}\rightarrow {\mathcal {H}}\) the following assertions are equivalent:

- (i) S is densely defined and skew-adjoint,
- (ii) every non-zero real number t is in the resolvent set of \(M_{S,-S}\) and

$$\begin{aligned} \Vert R_{S,-S}(t) \Vert \le \frac{1}{{|}t{|}},\qquad \forall t\in {\mathbb {R}}, t\ne 0. \end{aligned}$$(11)

Proof

Apply Theorem 4 with \(T{:}{=}-S\). \(\square\)

Corollary 9

Let \({\mathcal {H}}\) and \({\mathcal {K}}\) be real or complex Hilbert spaces. For a linear operator \(U:{\mathcal {H}}\rightarrow {\mathcal {K}}\) the following assertions are equivalent:

- (i) U is a unitary operator,
- (ii) \(\ker U=\{0\}\), every non-zero real number t is in the resolvent set of \(M_{U,U^{-1}}\) and

$$\begin{aligned} \Vert R_{U,U^{-1}}(t) \Vert \le \frac{1}{{|}t{|}},\qquad \forall t\in {\mathbb {R}}, t\ne 0. \end{aligned}$$(12)

Proof

An application of Theorem 4 with \(S{:}{=}U\) and \(T{:}{=}U^{-1}\) shows that U is densely defined and closed and that \(U^*=U^{-1}\). Hence \({\mathrm{ran\,}}U^*={\mathrm{ran\,}}U^{-1}\subseteq {\mathrm{dom\,}}U\). Since \({\mathrm{ran\,}}U^*+{\mathrm{dom\,}}U={\mathcal {H}}\) for every densely defined closed operator U (indeed, by von Neumann’s theorem every \(f\in {\mathcal {H}}\) can be written as \(f=g+U^*Ug\) with \(g\in {\mathrm{dom\,}}U^*U\)), we infer that \({\mathrm{dom\,}}U={\mathcal {H}}\) and therefore U is a unitary operator. \(\square\)
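A minimal finite-dimensional instance of Corollary 9, using a rational rotation matrix of our choosing: the sketch checks that \(U^{-1}=U^{\mathrm T}\), i.e. the pair \((U,U^{-1})\) is an adjoint pair, and that the corresponding operator matrix is skew-symmetric, which is what yields the estimate (12). As in the earlier sketches, the block form \(M_{S,T}(h,k)=(-Tk,Sh)\) is our assumption.

```python
from fractions import Fraction as F


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def transpose(A):
    return [list(col) for col in zip(*A)]


U = [[F(3, 5), F(-4, 5)],
     [F(4, 5), F(3, 5)]]          # rotation with cos = 3/5, sin = 4/5 (example data)
U_inv = transpose(U)              # candidate inverse; verified below
I2 = [[F(1), F(0)], [F(0), F(1)]]
print(matmul(U_inv, U) == I2 and matmul(U, U_inv) == I2)   # U^{-1} = U^T = U^*

# Assumed block form M_{S,T}(h, k) = (-T k, S h), here with S = U and T = U^{-1}.
top = [[F(0)] * 2 + [-v for v in U_inv[i]] for i in range(2)]
bottom = [list(U[i]) + [F(0)] * 2 for i in range(2)]
M = top + bottom
print(transpose(M) == [[-v for v in row] for row in M])   # M is skew-symmetric
```

Both checks print `True`.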

References

[1] Arens, R.: Operational calculus of linear relations. Pac. J. Math. 11, 9–23 (1961)

[2] Behrndt, J., Hassi, S., de Snoo, H.S.V.: Boundary Value Problems, Weyl Functions, and Differential Operators. Monographs in Mathematics, vol. 108. Birkhäuser, Cham (2020)

[3] Gesztesy, F., Schmüdgen, K.: On a theorem of Z. Sebestyén and Zs. Tarcsay. Acta Sci. Math. (Szeged) 85, 291–293 (2019)

[4] Hassi, S., de Snoo, H.S.V., Szafraniec, F.H.: Componentwise and canonical decompositions of linear relations. Diss. Math. 465, 59 (2009)

[5] von Neumann, J.: Allgemeine Eigenwerttheorie hermitescher Funktionaloperatoren. Math. Ann. 102, 49–131 (1930)

[6] von Neumann, J.: Über adjungierte Funktionaloperatoren. Ann. Math. 33, 294–310 (1932)

[7] Nieminen, T.: A condition for the self-adjointness of a linear operator. Ann. Acad. Sci. Fenn. Ser. A I No. 316

[8] Popovici, D., Sebestyén, Z.: On operators which are adjoint to each other. Acta Sci. Math. (Szeged) 80, 175–194 (2014)

[9] Roman, M., Sandovici, A.: A note on a paper by Nieminen. Results Math. 74(2), Art. 73 (2019)

[10] Schmüdgen, K.: Unbounded Self-adjoint Operators on Hilbert Space, vol. 265. Springer Science and Business Media, New York (2012)

[11] Sebestyén, Z., Tarcsay, Z.: Characterizations of selfadjoint operators. Stud. Sci. Math. Hung. 50, 423–435 (2013)

[12] Sebestyén, Z., Tarcsay, Z.: A reversed von Neumann theorem. Acta Sci. Math. (Szeged) 80, 659–664 (2014)

[13] Sebestyén, Z., Tarcsay, Z.: Characterizations of essentially selfadjoint and skew-adjoint operators. Stud. Sci. Math. Hung. 52, 371–385 (2015)

[14] Sebestyén, Z., Tarcsay, Z.: Adjoint of sums and products of operators in Hilbert spaces. Acta Sci. Math. (Szeged) 82, 175–191 (2016)

[15] Sebestyén, Z., Tarcsay, Z.: On the square root of a positive selfadjoint operator. Period. Math. Hung. 75, 268–272 (2017)

[16] Sebestyén, Z., Tarcsay, Z.: On the adjoint of Hilbert space operators. Linear Multilinear Algebra 67, 625–645 (2019)

[17] Stone, M.H.: Linear Transformations in Hilbert Spaces and their Applications to Analysis. Amer. Math. Soc. Colloq. Publ., vol. 15. American Mathematical Society, Providence (1932)

Acknowledgements

Open access funding provided by Eötvös Loránd University (ELTE). The corresponding author Zs. Tarcsay was supported by DAAD-TEMPUS Cooperation Project “Harmonic Analysis and Extremal Problems” (grant no. 308015). Project no. ED 18-1-2019-0030 (Application-specific highly reliable IT solutions) has been implemented with the support provided from the National Research, Development and Innovation Fund of Hungary, financed under the Thematic Excellence Programme funding scheme.


Additional information

Communicated by Daniel Pellegrino.

Dedicated to Professor Franciszek Hugon Szafraniec on the occasion of his 80th birthday.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tarcsay, Z., Sebestyén, Z. Range-kernel characterizations of operators which are adjoint of each other. Adv. Oper. Theory 5, 1026–1038 (2020). https://doi.org/10.1007/s43036-020-00068-4
