Abstract

Optimal control of diffusion processes is intimately connected to the problem of solving certain Hamilton–Jacobi–Bellman equations. Building on recent machine learning inspired approaches towards high-dimensional PDEs, we investigate the potential of iterative diffusion optimisation techniques, in particular considering applications in importance sampling and rare event simulation, and focusing on problems without diffusion control, with linearly controlled drift and running costs that depend quadratically on the control. More generally, our methods apply to nonlinear parabolic PDEs with a certain shift invariance. The choice of an appropriate loss function being a central element in the algorithmic design, we develop a principled framework based on divergences between path measures, encompassing various existing methods. Motivated by connections to forward-backward SDEs, we propose and study the novel log-variance divergence, showing favourable properties of corresponding Monte Carlo estimators. The promise of the developed approach is exemplified by a range of high-dimensional and metastable numerical examples.

Similar content being viewed by others

1 Introduction

Hamilton–Jacobi–Bellman partial differential equations (HJB-PDEs) are of central importance in applied mathematics. Rooted in reformulations of classical mechanics [49] in the nineteenth century, they nowadays form the backbone of (stochastic) optimal control theory [89, 123], having a profound impact on neighbouring fields such as optimal transportation [120, 121], mean field games [20], backward stochastic differential equations (BSDEs) [19] and large deviations [42]. Applications in science and engineering abound; examples include stochastic filtering and data assimilation [87, 104], the simulation of rare events in molecular dynamics [55, 59, 128], and nonconvex optimisation [24]. Many of these applications involve HJB-PDEs in high-dimensional or even infinite-dimensional state spaces, posing a formidable challenge for their numerical treatment and in particular rendering grid-based schemes infeasible.

In recent years, approaches to approximating the solutions of high-dimensional elliptic and parabolic PDEs have been developed combining well-known Feynman–Kac formulae with machine learning methodologies, seeking scalability and robustness in high-dimensional and complex scenarios [36, 54]. Crucially, the use of artificial neural networks offers the promise of accurate and efficient function approximation which in conjunction with Monte Carlo methods might beat the curse of dimensionality, as investigated in [6, 25, 53, 67].

In this paper, we focus on HJB-PDEs that can be linked to controlled diffusions (see Sect. 2),

where b and \(\sigma \) are coefficients derived from the model at hand, and u is to be thought of as an adaptable steering force to be chosen so as to minimise a given objective functional. In terms of the problems and applications alluded to in the first paragraph, we are particularly interested in situations where applying a suitable control u improves certain properties of (1); often these are related to sampling efficiency, exploration of state space, or fit to empirical data. We have been particularly motivated by the prospect of directing recent advances in the methodology for solving high-dimensional HJB-PDEs towards the challenges of rare event simulation [17].

Our attention in this paper is constrained to a class of algorithms that may be termed iterative diffusion optimisation (IDO) techniques, related in spirit to reinforcement learning [100]. Speaking in broad terms, those are characterised by the following outline of steps meant to be executed iteratively until convergence or until a satisfactory control u is found:

-

1.

Simulate N realisations \(\{(X_s^{u,(i)})_{0 \le s \le T}, \,\, i=1,\ldots ,N\}\) of the solution to (1).

-

2.

Compute a performance measure and a corresponding gradient associated to the control u, based on

\({\{(X_s^{u,(i)})_{0 \le s \le T}, \,\, i=1,\ldots ,N\}}\).

-

3.

Modify u according to the gradient obtained in the previous step. Repeat starting from 1.

Many algorithmic approaches from the literature can be placed in the IDO framework, in particular some that connect forward-backward SDEs and machine learning [36, 54] as well as some that are rooted in molecular dynamics and optimal control [59, 73, 128]. Those instances of IDO mainly differ in terms of the performance measure employed in step 2, or, in other words, in terms of an underlying loss function \({\mathcal {L}}(u)\) constructed on the set of control vector fields. Typically, \({\mathcal {L}}(u)\) is given in terms of expectations involving the solution to (1). Consequently, step 1 can be thought of as providing an empirical estimate of this quantity (and its gradient) based on a sample of size N.

For a principled design and understanding of IDO-like algorithms, it is central to analyse the properties of loss functions and corresponding Monte Carlo estimators, and identify guidelines that promise good performance. Permissible loss functions include those that admit a global minimum representing the solution to the problem at hand. Moreover, suitable loss functions yield themselves to efficient optimisation procedures (step 3) such as stochastic gradient descent. In this respect, important desiderata are the absence of local minima as well as the availability of low-variance gradient estimators.

In this article, we show that a variety of loss functions can be constructed and analysed in terms of divergences between probability measures on the path space associated to solutions of (1), providing a unifying framework for IDO and extending on previous works in that direction [59, 73, 128]. As this perspective entails the approximation of a target probability measure as a core element, our approach exposes connections to the theory of variational inference [15, 124]. Classical divergences include the relative entropy (or \(\mathrm {KL}\)-divergence) and its counterpart, the cross-entropy. Motivated by connections to forward-backward SDEs and importance sampling, we propose the novel family of log-variance divergences,

parametrised by a probability measure \(\widetilde{{\mathbb {P}}}\). Loss functions based on these divergences can be viewed as modifications of those proposed in [36, 54] for solving forward-backward SDEs, essentially replacing second moments by variances, see Sect. 3.2. Moreover, it turns out that the log-variance divergences are closely related to the \({{\,\mathrm{{\text {KL}}}\,}}\)-divergence (see Proposition 4.6), allowing us to draw (perhaps surprising) connections to methods that directly attempt to optimise the dynamics with respect to a control objective.

As the loss functions considered in this article are defined in terms of expected values, practical implementations require appropriate Monte Carlo estimators whose variance directly impacts algorithmic performance. We study the associated relative errors, in particular in high-dimensional settings and for \({\mathbb {P}}_1 \approx {\mathbb {P}}_2\), i.e. close to the optimal control. The proposed log-variance divergence and its corresponding standard Monte Carlo estimator turn out to be robust in both settings, in a precise sense that will be developed in later sections. After the completion of this manuscript, the potential of the log-variance divergences for inferences in computational Bayesian statistics has been explored in [105], along with a more careful analysis of their relations to control variates (see also Remark 4.7 below).

1.1 Our contributions and overview

The primary contributions of this article can be summarised as follows:

-

1.

Building on earlier work connecting optimal control functionals and the \(\mathrm {KL}\)-divergence [59, 73, 128], we develop the perspective of constructing loss functions via divergences on path space, offering a systematic approach to algorithmic design and analysis.

-

2.

We show that modifications of recently proposed approaches based on forward-backward SDEs [36, 54] can be placed within this framework. Indeed, the log-variance divergences (2) encapsulate a family of forward-backward SDE systems (see Sect. 3.2). The aforementioned adjustments needed to establish the path space perspective often lead to faster convergence and more accurate approximation of the optimal control, as we show by means of numerical experiments.

-

3.

We show that certain instances of algorithms based on the control objective (or \(\mathrm {KL}\)-divergence) and forward-backward SDEs (or the log-variance divergences) are equivalent when the sample size N in step 1 is large.

-

4.

We investigate the properties of sample based gradient estimators associated to the losses and divergences under consideration. In particular, we define two notions of stability: robustness of a divergence under tensorisation (related to stability in high-dimensional settings) and robustness at the optimal control solution (related to stability of the final approximation). From the losses and divergences considered in this article, we show that only the log-variance divergences satisfy both desiderata and illustrate our findings by means of extensive numerical experiments.

The paper is structured as follows. In Sect. 2 we provide a literature overview, stating connections between different perspectives on the control problem under consideration and summarising corresponding numerical treatments. As a unifying viewpoint, in Sect. 3 we define viable loss functions through divergences on path space and discuss their connections to the algorithmic approaches encountered in Sect. 2. In particular, we elucidate the relationships of the log-variance divergences with forward-backward SDEs. In the two upcoming sections we analyse properties of the suggested losses, where in Sect. 4 we obtain equivalence relations that hold in an infinite batch size limit and in Sect. 5 we investigate the variances associated to the losses’ estimator versions. In the latter case, we consider stability close to the optimal control solution as well as in high dimensionsal settings. In Sect. 6 we provide numerical examples that illustrate our findings. Finally, we conclude the paper with Sect. 7, giving an outlook to future research. Most of the proofs are deferred to the appendix.

2 Optimal control problems, change of path measures and Hamilton–Jacobi–Bellman PDEs: connections and equivalences

In this section we will introduce three different perspectives on essentially the same problem. Throughout, we will assume a fixed filtered probability space \((\Omega , {\mathcal {F}},({\mathcal {F}}_t)_{t \ge 0}, \Theta )\) satisfying the ‘usual conditions’ [77, Section 21.4] and consider stochastic differential equations (SDEs) of the form

on the time interval \(s \in [t,T]\), \(0 \le t< T < \infty \). Here, \(b: {\mathbb {R}}^d \times [t, T] \rightarrow {{\,\mathrm{{\mathbb {R}}}\,}}^d\) denotes the drift coefficient, \(\sigma : {{\,\mathrm{{\mathbb {R}}}\,}}^d \times [t,T]\rightarrow {{\,\mathrm{{\mathbb {R}}}\,}}^{d\times d}\) denotes the diffusion coefficient, \((W_s)_{t \le s \le T}\) denotes standard d-dimensional Brownian motion, and \(x_{\mathrm {init}} \in {\mathbb {R}}^d\) is the (deterministic) initial condition. We will work under the following conditions specifying the regularity of b and \(\sigma \).

Assumption 1

(Coefficients of the SDE (3)) The coefficients b and \(\sigma \) are continuously differentiable, \(\sigma \) has bounded first-order spatial derivatives, and \((\sigma \sigma ^\top )(x,s)\) is positive definite for all \((x,s) \in {\mathbb {R}}^d \times [t,T]\). Furthermore, there exist constants \(C, c_1, c_2>0\) such that

for all \((x,s) \in {\mathbb {R}}^d \times [t,T]\) and \(\xi \in {\mathbb {R}}^d\).

Let us furthermore introduce a modified version of (3),

where we think of \(u: {\mathbb {R}}^d \times [t,T] \rightarrow {\mathbb {R}}^d\) as a control term steering the dynamics. We will throughout assume that \(u \in {\mathcal {U}}\), the set of admissible controls. For definiteness, we will set

but note that the smoothness and boundedness assumptions can be relaxed in various scenarios. Under Assumption 1 and with \({\mathcal {U}}\) as defined in (6), the SDEs (3) and (5) admit unique strong solutions according to [91, Theorem 5.2.1].

2.1 Optimal control

Consider the cost functional

where \(f \in C^1( {\mathbb {R}}^d \times [t,T]; [0 ,\infty ))\) specifies a part of the running and \(g \in C^1( {\mathbb {R}}^d; {\mathbb {R}})\) the terminal costs, and \((X^u_s)_{t \le s \le T}\) denotes the unique strong solution to the controlled SDE (5) with initial condition \(X_t^u = x_{\mathrm {init}}\). Throughout we assume that f and g are such that the expectation in (7) is finite, for all \((x_{\mathrm {init}},t) \in {\mathbb {R}}^d \times [0,T]\). Our objective is to find a control \(u \in {\mathcal {U}}\) that minimises (7):

Problem 2.1

(Optimal control) For \((x_{\mathrm {init}},t) \in {\mathbb {R}}^d \times [0,T]\), find \(u^* \in {\mathcal {U}}\) such that

Defining the value function [45, Section I.4], or ‘optimal cost-to-go’,

it is well-known that under suitable conditions, V satisfies a Hamilton–Jacobi–Bellman PDE involving the infinitesimal generator [96, Section 2.3] associated to the uncontrolled SDE (3),

The optimal control solving (8) can then be recovered from \(u^* = -\sigma ^\top \nabla V\) (see Theorem 2.2 for details). Let us state this reformulation of Problem 2.1 as follows:

Problem 2.2

(Hamilton–Jacobi–Bellman PDE) Find a solution V to the PDE

where f and g are as in (7).

Throughout, we will focus on solutions to (11) that admit bounded and continuous derivatives of up to first order in time and second order in space (see, however, Remark 2.4). This set will be denoted by \(C_b^{2,1}({\mathbb {R}}^d \times [0,T];{\mathbb {R}})\). Solutions to elliptic and parabolic PDEs admit probabilistic representations by means of the celebrated Feynman–Kac formulae [99, Sections 1.3.3 and 6.3]. To wit, consider the following coupled system of forward-backward SDEs (in the following FBSDEs for short):

Problem 2.3

(Forward-backward SDEs) For \((x_{\mathrm {init}},t) \in {\mathbb {R}}^d \times [0,T]\), find progressively measurable stochastic processes \(Y : \Omega \times [t,T] \rightarrow {\mathbb {R}}\) and \(Z : \Omega \times [t,T] \rightarrow {\mathbb {R}}^d\) such that

almost surely.

Under suitable conditions, Itô’s formula implies that Y is connected to the value function V as defined in (9) via \(Y_s = V(X_s,s)\). Similarly, Z is connected to the optimal control \(u^*\) through \(Z_s = -u^*(X_s,s) = \sigma ^\top \nabla V(X_s,s)\). See [94, 95] and Theorem 2.2 for details.

2.2 Conditioning and rare events

One major motivation for our work is the problem of sampling rare transition events in diffusion models. In this section we will explain how this challenge can be formalised in terms of weighted measures on path space, leading to a close connection to the optimal control problems encountered in the previous section.

We will fix the initial time to be \(t=0\), i.e. consider the SDEs (3) and (5) on the interval [0, T]. For fixed initial condition \(x_{\mathrm {init}} \in {\mathbb {R}}^d\), let us introduce the path space

equipped with the supremum norm and the corresponding Borel-\(\sigma \)-algebra, and denote the set of probability measures on \({\mathcal {C}}\) by \({\mathcal {P}}({\mathcal {C}})\). The SDEs (3) and (5) induce probability measures on \({\mathcal {C}}\) defined to be the laws associated to the corresponding strong solutions; those measures will be denoted by \({\mathbb {P}}\) and \({\mathbb {P}}^u\), respectivelyFootnote 1. Furthermore, we define the work functional \({\mathcal {W}}:{\mathcal {C}} \rightarrow {\mathbb {R}}\) via

where \(f:{\mathbb {R}}^d \times [0,T] \rightarrow {\mathbb {R}}\) and \(g:{\mathbb {R}}^d \rightarrow {\mathbb {R}}\) are as in Problem 2.1. Finally, \({\mathcal {W}}\) induces a reweighted path measure \({\mathbb {Q}}\) on \({\mathcal {C}}\) via

assuming f and g are such that \({\mathcal {Z}}\) is finite (we shall tacitly make this assumption from now on). We may ask whether \({\mathbb {Q}}\) can be obtained as the path measure related to a controlled SDE of the form (5):

Problem 2.4

(Conditioning) Find \(u^* \in {\mathcal {U}}\) such that the path measure \({\mathbb {P}}^{u^*}\) associated to (5) coincides with \({\mathbb {Q}}\).

Referring to the above as a conditioning problem is justified by the fact that (15) may be viewed as an instance of Bayes’ formula relating conditional probabilities [104]. This connection can be formalised using Doob’s h-transform [33, 34] and applied to diffusion bridges and quasistationary distributions, for instance (see [26] and references therein).

Example 2.1

(Rare event simulation) Let us consider SDEs of the form (3), where the drift is a gradient, i.e. \(b = - \nabla \Psi \), and the potential \(\Psi \) is of multimodal type. As an example we shall discuss the one-dimensional case \(d=1\) and assume that \(\Psi \in C^\infty ({\mathbb {R}})\) is given by

with \(\kappa > 0\). Furthermore, let us fix the initial conditions \(x_{\mathrm {init}} = -1\) and \(t=0\), and assume a constant diffusion coefficient of size unity, \(\sigma = 1\). Observe that \(\Psi \) exhibits two local minima at \(x = \pm 1\), separated by a barrier at \(x=0\), the height of which is modulated by the parameter \(\kappa \) (see Fig. 8 in Section 6.4 for an illustration). When \(\kappa \) is sufficiently large, the dynamics induced by (3) exhibits metastable behaviour: transitions between the two basins happen very rarely as the transition time depends exponentially on the height of the barrier [11, 80]. Applications such as molecular dynamics are often concerned with statistics and derived quantities from these rare events as those are typically directly linked to biological functioning [37, 109, 110]. At the same time, computational approaches face a difficult sampling problem as transitions are hard to obtain by direct simulation from (3). Choosing \(f = 0\) and g such that \(e^{-g}\) is concentrated around \(x=1\) (consider, for instance, \(g(x) = \nu (x-1)^2\) with \(\nu > 0\) sufficiently large), we see that \({\mathbb {Q}}\) as defined in (15) predominantly charges paths initialised in \(x=-1\) at \(t=0\) and enter a neighbourhood of \(x=1\) at final time T. Problem 2.4 can then be understood as the task of finding a control u that allows efficient simulation of transition paths. Similar issues arise in the context of stochastic filtering, where the objective is sample paths that are compatible with available data [104].

2.3 Sampling problems

The free energy [58] associated to the dynamics (3) and the work functional (14) is given by

where the normalising constant \({\mathcal {Z}}\) has been defined in (15). The problem of computing \({\mathcal {Z}}\) is ubiquitous in nonequilibrium thermodynamics and statistics [15, 113], and, quite often, the variance associated to the random variable \(\exp (-{\mathcal {W}}(X))\) is so large as to render direct estimation of the expectation \({\mathbb {E}} \left[ \exp (-{\mathcal {W}}(X)) \right] \) computationally infeasibleFootnote 2. A natural approach is then to use the identity

where we recall that X and \(X^u\) refer to the strong solutions to (3) and (5), respectively, and \(\frac{\mathrm {d}{\mathbb {P}}}{\mathrm {d}{\mathbb {P}}^u}\) denotes the Radon–Nikodym derivative, explicitly given by Girsanov’s theoremFootnote 3 [118, Theorem 2.1.1],

see the proof of Theorem 2.2. As explained in [58], techniques leveraging (18) may be thought of as instances of importance sampling on path space. Given that (18) holds for all \(u \in {\mathcal {U}}\), it is clearly desirable to choose the control such as to guarantee favourable statistical properties:

Problem 2.5

(Variance minimisation) Find \(u^* \in {\mathcal {U}}\) such that

Under suitable conditions, it turns out that there exists \(u^* \in {\mathcal {U}}\) such the variance expression (20) is in fact zero (see Theorem 2.2, (1d)), providing a perfect sampling scheme.

The problem formulations detailed so far are intimately connected as summarised by the following theorem:

Theorem 2.2

(Connections and equivalences) The following holds:

-

1.

Let \(V \in C_b^{2,1}({\mathbb {R}}^d \times [0,T];{\mathbb {R}})\) be a solution to Problem 2.2, i.e. solve the HJB-PDE (11). Set

$$\begin{aligned} u^* =-\sigma ^\top \nabla V. \end{aligned}$$(21)Then

-

(a)

the control \(u^*\) provides a solution to Problem 2.1, i.e. \(u^*\) minimises the objective (7),

-

(b)

the pair

$$\begin{aligned} Y_s = V(X_s, s), \qquad Z_s = \sigma ^\top \nabla V(X_s, s) \end{aligned}$$(22)solves the FBSDE (12), i.e. Problem 2.3,

-

(c)

the measure \({\mathbb {P}}^{u^*}\) associated to the controlled SDE (5) coincides with \({\mathbb {Q}}\), i.e. \(u^*\) solves Problem 2.4,

-

(d)

the control \(u^*\) provides the minimum-variance estimator in (20), i.e. \(u^*\) solves Problem 2.5. Moreover, the variance is in fact zero, i.e. the random variable

$$\begin{aligned} \exp (-{\mathcal {W}}(X^{u^*})) \frac{\mathrm {d}{\mathbb {P}}}{\mathrm {d}{\mathbb {P}}^{u^*}} \end{aligned}$$(23)is almost surely constant.

Furthermore, we have that

$$\begin{aligned} J(u^*; x_{\mathrm {init}},0) = V(x_{\mathrm {init}},0) = Y_0 = - \log {\mathcal {Z}}. \end{aligned}$$(24) -

(a)

-

2.

Conversely, let \(u^* \in {\mathcal {U}}\) solve Problem 2.4, i.e. assume that \({\mathbb {P}}^{u^*}\) coincides with \({\mathbb {Q}}\). Then the statement (1d) holds. Furthermore, setting

$$\begin{aligned} Y_0 = -\log {\mathcal {Z}}, \qquad Z_s = -u^*(X_s,s), \end{aligned}$$(25)solves the backward SDE (12b) from Problem 2.3, i.e. (25) together with the first equation in (12b) determines a process \((Y_s)_{0 \le s \le T}\) that satisfies the final condition \(Y_T = g(X_T)\), almost surely.

Remark 2.3

We extend the connections between the optimal control formulation (Problem 2.1) and FBSDEs (Problem 2.3) in Proposition 4.3, see also Remark 4.4.

Remark 2.4

(Regularity, uniqueness, and further connections) Going beyond classical solvability of the HJB-PDE (11) and introducing the notion of viscosity solutions [45, 94], the strong regularity and boundedness assumptions on V in the first statement could be much relaxed and the connections exposed in Theorem 2.2 could be extended [99, 123]. As a case in point, we note that in the current setting, neither a solution to Problem 2.1 nor to Problem 2.3 necessarily provides a classical solution to the PDE (11), as optimal controls are known to be non-differentiable, in general.

However, assuming classical well-posedness of the HJB-PDE (11), Theorem 2.2 implies that the solution can be found by addressing one of the Problems 2.1, 2.3, 2.4 or 2.5 and using the formulas (21) and (22), as long as those problems admit unique solutions, in an appropriate sense. For the latter issue, we refer the reader to [79] and [115, Chapter 11] in the context of forward-backward SDEs and to [14] in the context of measures on path space. We note that, in particular, the forward SDE (12a) can be thought of as providing a random grid for the solution of the HJB-PDE (11), obtained through the backward SDE (12b).

Remark 2.5

(Random initial conditions) The equivalence between Problems 2.2 and 2.3 shows that \(u^*\) does not depend on \(x_{\mathrm {init}}\). Consequently, the initial condition in (12a) can be random rather than deterministic. In Sect. 6.3 we demonstrate potential benefits of this extension for FBSDE-based algorithms.

Remark 2.6

(Variational formulas and duality) The identities (24) connect key quantities pertaining to the problem formulations 2.1, 2.2, 2.3 and 2.4. The fact that \(J(u^*; x_{\mathrm {init}},0) = - \log {\mathcal {Z}}\) can moreover be understood in terms of the Donsker-Varadhan formula [16], furnishing an explicit expression for the value function,

Remark 2.7

(Generalisations) The problem formulations 2.1, 2.2 and 2.3 admit generalisations that keep parts of the connections expressed in Theorem 2.2 intact. From the PDE-perspective (Problem 2.2), it is possible to consider more general nonlinearities,

with h being a function satisfying appropriate regularity and boundedness assumptions. As in Theorem 2.2 (1b), the nonlinear parabolic PDE (2.7) is related to a generalisation of the forward-backward system (12),

where the connection is still given by (22), see [99, Section 6.3]. From the perspective of optimal control (Problem 2.1), it is possible to extend the discussion to SDEs of the form

replacing (5), and to running costs \({\widetilde{f}}(X^u_s, u_s, s)\) instead of \(f(X^u_s, s) + \frac{1}{2}|u(X^u_s, s)|^2\) in (7), assuming that \(u_s \in {\widetilde{U}} \subset {\mathbb {R}}^m \), for some \(m \in {\mathbb {N}}\). This setting gives rise to more general HJB-PDEs,

where \(\nabla ^2 V\) denotes the Hessian of V, and the Hamiltonian H is given by

see [45, 99]. In certain scenarios [125, Section 4.5.2], it is then possible to relate (30) to (2.7), noting however that typically h will be given in terms of a minimisation problem as in (31). The relationship to Problems 2.4 and 2.5 as well as the identity (21) rest on the particular structureFootnote 4 inherent in (5) and (7), enabling the use of Girsanov’s theorem (see the Proof of Theorem 2.2 below). The methods developed in this paper based on the log-variance loss (46) can straightforwardly be extended to equations of the form (2.7) in the case when h depends on V only through \(\nabla V\), owing to the invariance of the PDE under shifts of the form \(V \mapsto V + \mathrm {const.}\), see Remark 3.12. In order to address optimal control problems involving additional minimisation tasks posed by Hamiltonians such as (31) it might be feasible to include appropriate penalty terms in the loss functional. We leave this direction for future work.

Proof of Theorem 2.2

The statement (1a) is a classical result in stochastic optimal control theory, often referred to as a verification theorem, and can for instance be found in [45, Theorem IV.4.4] or [99, Theorem 3.5.2]. The implication (1b) is a direct consequence of Itô’s formula, cf. [99, Proposition 6.3.2] or [19, Proposition 2.14]. Before proceeding to (1c), we note that the first equality in (24) now follows from (9) (for background, see [45, Section IV.2]), while the second equality is a direct consequence of (1b). Using (12) and (1b), the third equality follows from

relying on the facts that \(Y_0\) is deterministic (again using (1b)), and that the term inside the second expectation is a martingale (as \(u^*\) is assumed to be bounded). Turning to (1c), let us define an equivalent measure \({\widetilde{\Theta }}\) on \((\Omega ,{\mathcal {F}})\) via

Since \(u^*\) is assumed to be bounded, Novikov’s condition is satisfied, and hence Girsanov’s theorem asserts that the process \(({\widetilde{W}}_t)_{0 \le t \le T}\) defined by

is a Brownian motion with respect to \({\widetilde{\Theta }}\). Consequently, we have that

using (12) and (24) in the last step. We note that similar arguments can be found in [75, 20, Section 3.3.1].

For the proof of (1d) we refer to [58, Theorem 2]. The proof of the second statement is very similar to the argument presented for (1c), resting primarily on (33) and (35), and is therefore omitted. \(\square \)

2.4 Algorithms and previous work

The numerical treatment of optimal control problems has been an active area of research for many decades and multiple perspectives on solving Problem 2.1 have been developed. The monographs [13] and [82] provide good overviews to policy iteration and Q-learning, strategies that have been further investigated in the machine learning literature and that are generally subsumed under the term reinforcement learning [100]. We also recommend [72] as an introduction to the specific setting considered in this paper. To cope with the key issue of high dimensionality, the authors of [92] suggest solving a certain type of control problem in the framework of hierarchical tensor products. Another strategy of dealing with the curse of dimensionality is to first apply a model reduction technique and only then solve for the reduced model. Here, recent results on balanced truncation for controlled linear S(P)DEs have for instance been suggested in [10], and approaches for systems with a slow-fast scale separation via the homogenisation method can be found in [127].

Solutions to Problem 2.2, i.e. to HJB-PDEs of the type (11), can be approximated through finite difference or finite volume methods [1, 90, 98]. However, these approaches are usually not applicable in high-dimensional settings. In contrast, the recently introduced Multilevel Picard method [66] based on a combination of the Feynman–Kac and Bismut-Elworthy-Li formulas has been proven to beat the curse of dimensionality in a variety of settings, see [7, 65, 68,69,70].

The FBSDE formulation (Problem 2.3) has opened the door for Monte Carlo based methods that have been developed since the early 90s. We mention in particular least-squares Monte Carlo, where \((Z_s)_{0 \le s \le T}\) is approximated iteratively backwards in time by solving a regression problem in each time step, along the lines of the dynamic programming principle [99, Chapter 3]. A good introduction can be found in [46]; for extensive analysis on numerical errors we refer the reader to [47, 126]. Recently, this approach has also been connected with deep learning, replacing Galerkin approximations by neural networks [64], as well as with the tensor train format, exploiting inherent low rank structures [106].

Another method leveraging the FBSDE perspective has been put forward in [36, 54] and further developed in [4, 5]. Here, the main idea is to enforce the terminal condition \(Y_T = g(X_T)\) in (12b) by iteratively minimising the loss function

using a stochastic gradient descent IDO scheme. The notation \(Y_T(y_0,u)\) indicates that the process in (12b) is to be simulated with given initial condition \(y_0\) and control u (these representing a priori guesses or current approximations, typically relying on neural networks), hence viewing (12b) as a forward process. Consequently, the approach thus described can be classified as a shooting method for boundary value problems. We note that this idea allows treating rather general parabolic and elliptic PDEs [52, 67], as well as – with some modifications – optimal stopping problems [8, 9], going beyong the setting considered in this paper. Using neural network approximations in conjunction with FBSDE-based Monte-Carlo techniques holds the promise of alleviating the curse of dimensionality; understanding this phenomenon and proving rigorous mathematical statements has been been the focus of intense current research [12, 52, 53, 67, 71]. Let us also mention that similar algorithms have been suggested in [101, 102], in particular proposing to modify the loss function (36) in order to encode the backward dynamics (12b), and extensive investigation of optimal network design and choice of tuneable parameters has been carried out [23]. Furthermore, we refer to [21, 22] for convergence results in the broader context of mean field control. In [56, Section III.B] it has been proposed to modifiy the forward dynamics (12a) (and, to componsate, also the backward dynamics (12b)) by an additional control term. This idea is central for the main results of this paper, see Sect. 3.2. Similar ideas for other types of PDEs have been proposed as well, see for instance [39, 102].

Conditioned diffusions (Problem 2.4) have been considered in a large deviation context [35] as well as in a variational setting [56, 58] motivated by free energy computations, building on earlier work in [16, 30], see also [3, 26, 29, 43]. The simulation of diffusion bridges has been studied in [86] and conditioning via Doob’s h-transform has been employed in a sequential Monte Carlo context [61]. The formulation in Problem 2.4 identifies the target measure \({\mathbb {Q}}\), motivating approaches that seek to minimise certain divergences on path space. This perspective will be developed in detail in Sect. 3.1, building bridges to Problems 2.1, 2.2, 2.3 and 2.5. Prior work following this direction includes [14, 50, 59, 73, 103], in particular relying on a connection between the \({{\,\mathrm{{\text {KL}}}\,}}\)-divergence (or relative entropy) on path space and the cost functional (7), see also Proposition 3.5. A similar line of reasoning leads to the cross-entropy method [58, 74, 108, 128], see Proposition 3.7 and equation (62) in Sect. 3.3.

Problem 2.5 motivates minimising the variance of importance sampling estimators. We refer the reader to [88, Section 5.2] for a recent attempt based on neural networks, to [2] for a theoretical analysis of convergence rates, to [57] for potential non-robustness issues, and to [18] for a general overview regarding adaptive importance sampling techniques. The relationship between optimal control and importance sampling (see Theorem 2.2) has been exploited by various authors to construct efficient samplers [74, 114], in particular also with a view towards the sampling based estimation of hitting times, in which case optimal controls are governed by elliptic rather than parabolic PDEs [55, 56, 59, 60]. Similar sampling problems have been addressed in the context of sequential Monte Carlo [31, 61] and generative models [116, 117]. The latter works examine the potential of the controlled SDE (5) as a sampling device targeting a suitable distribution of the final state \(X^u_T\).

3 Approximating probability measures on path space

In this section we demonstrate that many of the algorithmic approaches encountered in the previous section can be recovered as minimisation procedures of certain divergences between probability measures on path space. Similar perspectives (mostly discussing the relative entropy and cross-entropy in Definition 3.1 below) can be found in the literature, see [59, 73, 128]. Recall from Sect. 2.2 that we denote by \({\mathcal {C}}\) the space of \({\mathbb {R}}^d\)-valued paths on the time interval [0, T] with fixed initial point \(x_{\mathrm {init}} \in {\mathbb {R}}^d\). As before, the probability measures on \({\mathcal {C}}\) induced by (3) and (5) will be denoted by \({\mathbb {P}}\) and \({\mathbb {P}}^u\), respectively. From now on, let us assume that there exists a unique optimal control with convenient regularity properties:

Assumption 2

The HJB-PDE (11) admits a unique solution \(V \in C_b^{2,1}({\mathbb {R}}^d \times [0,T])\). We set

For Assumption 2 to be satisfied, it is sufficient to impose the regularity and boundedness conditions \(b,\sigma ,f \in C_b^{2,1}({\mathbb {R}}^d)\) and \(g \in C_b^{3}({\mathbb {R}}^d)\), seeFootnote 5 [45, Theorem 4.2]. The strong boundedness assumption on V could be weakened and for instance be replaced by the condition \(\sigma ^\top \nabla V \in {\mathcal {U}}\). For existence and uniqueness results involving unbounded controls we refer to [44], and for specific examples to Sect. 6.2 and 6.3. In the sense made precise in Theorem 2.2, the control \(u^*\) defined above provides solutions to the Problems 2.1-2.5 considered in Sect. 2. Moreover, there exists a corresponding optimal path measure \({\mathbb {Q}}\) (in the following also called the target measure) defined in (15) and satisfying \({\mathbb {Q}} = {\mathbb {P}}^{u^*}\). We further note that Assumption 2 together with the results from [115, Chapter 11] imply that the solution to the FBSDE (12) is unique.

3.1 Divergences and loss functions

The SDE (5) establishes a measurable map \({\mathcal {U}} \ni u \mapsto {\mathbb {P}}^u \in {\mathcal {P}}({\mathcal {C}})\) that can be made explicit in terms of Radon–Nikodym derivatives using Girsanov’s theorem (see Lemma A.1 in Appendix A.1). Consequently, we can elevate divergences between path measures to loss functions on vector fields. To wit, let \(D: {\mathcal {P}}({\mathcal {C}})\times {\mathcal {P}}({\mathcal {C}}) \rightarrow {\mathbb {R}}_{\ge 0}\ \cup \{+\infty \}\) be a divergenceFootnote 6, where, as before, \({\mathcal {P}}({\mathcal {C}})\) denotes the set of probability measures on \({\mathcal {C}}\). Then, setting

we immediately see that \({\mathcal {L}}_D \ge 0\), with Theorem 2.2 implying that \({\mathcal {L}}_D(u) = 0\) if and only if \(u = u^*\). Consequently, an approximation of the optimal control vector field \(u^*\) can in principle be found by minimising the loss \({\mathcal {L}}_D\). In the remainder of the paper, we will suggest possible losses and study some of their properties.

Starting with the \({{\,\mathrm{{\text {KL}}}\,}}\)-divergence, we introduce the relative entropy loss and the cross-entropy loss, corresponding to the divergences

Definition 3.1

(Relative entropy and cross-entropy losses) The relative entropy loss is given by

and the cross-entropy loss by

where the target measure \({\mathbb {Q}}\) has been defined in (15).

Remark 3.2

(Notation) Note that, by definition, the expectations in (40) and (41) are understood as integrals on \({\mathcal {C}}\), i.e.

In contrast, the expectation operator \({\mathbb {E}}\) (without subscript, as used in (7) and (18), for instance) throughout denotes integrals on the underlying abstract probability space \((\Omega , {\mathcal {F}},({\mathcal {F}}_t)_{t \ge 0}, \Theta )\).

For \(\widetilde{{\mathbb {P}}} \in {\mathcal {P}}({\mathcal {C}})\), it is straightforward to verify that

and

define divergences on the set of probability measures equivalent to \(\widetilde{{\mathbb {P}}}\). Henceforth, these quantities shall be called variance divergence and log-variance divergence, respectively.

Remark 3.3

Setting \(\widetilde{{\mathbb {P}}} = {\mathbb {P}}_1\), the quantity \(D^{\mathrm {Var}}_{{\mathbb {P}}_1}({\mathbb {P}}_1 \vert {\mathbb {P}}_2)\) coincides with the Pearson \(\chi ^2\)-divergence [32, 84] measuring the importance sampling relative error [2, 57], hence relating to Problem 2.5. The divergence \(D^{\mathrm {Var(log)}}_{\widetilde{{\mathbb {P}}}}\) seems to be new; it is motivated by its connections to the forward-backward SDE formulation of optimal control (see Problem 2.3), as will be explained in Sect. 3.2. Let us already mention that inserting the \(\log \) in (43) to obtain (44) has the potential benefit of making sample based estimation more robust in high dimensions (see Sect. 5.2). Furthermore, we point the reader to Proposition 4.3 revealing close connections between \(D^{\mathrm {Var(log)}}_{\widetilde{{\mathbb {P}}}}\) and the relative entropy.

Using (43) and (44) with \(\widetilde{{\mathbb {P}}} = {\mathbb {P}}^v\), we obtain two additional families of losses, indexed by \(v \in {\mathcal {U}}\):

Definition 3.4

(Variance and log-variance losses) For \(v \in {\mathcal {U}}\), the variance loss is given by

and the log-variance loss by

whenever \({\mathbb {E}}_{\widetilde{{\mathbb {P}}}}\left[ \left| \frac{\mathrm {d}{\mathbb {Q}}}{\mathrm {d}{\mathbb {P}}^u}\right| \right] < \infty \) or \({\mathbb {E}}_{\widetilde{{\mathbb {P}}}}\left[ \left| \log \frac{\mathrm {d}{\mathbb {Q}}}{\mathrm {d}{\mathbb {P}}^u}\right| \right] < \infty \), respectivelyFootnote 7. The notation \({{{\,\mathrm{{\text {Var}}}\,}}}_{{\mathbb {P}}^v}\) is to be interpreted in line with Remark 3.2.

By direct computations invoking Girsanov’s theorem, the losses defined above admit explicit representations in terms of solutions to SDEs of the form (3) and (5). Crucially, the propositions that follow replace the expectations on \({\mathcal {C}}\) used in the definitions (40), (41), (43) and (44) by expectations on \(\Omega \) that are more amenable to direct probabilistic interpretation and Monte Carlo simulation (see also Remark 3.2). Recall that the target measure \({\mathbb {Q}}\) is assumed to be of the type (15), where \({\mathcal {W}}\) has been defined in (14). We start with the relative entropy loss:

Proposition 3.5

(Relative entropy loss) For \(u \in {\mathcal {U}}\), let \((X_s^u)_{0 \le s \le T}\) denote the unique strong solution to (5). Then

Proof

See [59, 73]. For the reader’s convenience, we provide a self-contained proof in Appendix A.1. \(\square \)

Remark 3.6

Up to the constant \(\log {\mathcal {Z}}\), the loss \({\mathcal {L}}_{{{\,\mathrm{\mathrm {RE}}\,}}}\) coincides with the cost functional (7) associated to the optimal control formulation in Problem 2.1. The approach of minimising the \({{\,\mathrm{{\text {KL}}}\,}}\)-divergence between \({\mathbb {P}}^u\) and \({\mathbb {Q}}\) as defined in (40) is thus directly linked to the perspective outlined in Sect. 2.1. We refer to [59, 73] for further details.

The cross-entropy loss admits a family of representations, indexed by \(v \in {\mathcal {U}}\):

Proposition 3.7

(Cross-entropy loss) For \(v \in {\mathcal {U}}\), let \((X_s^v)_{0 \le s \le T}\) denote the unique strong solution to (5), with u replaced by v. Then there exists a constant \(C \in {\mathbb {R}}\) (not depending on u in the next line) such that

for all \(u \in {\mathcal {U}}\).

Proof

See [128] or Appendix A.1 for a self-contained proof. \(\square \)

Remark 3.8

The appearance of the exponential term in (48b) can be traced back to the reweightingFootnote 8

recalling that \({\mathbb {P}}^v\) denotes the path measure associated to (5) controlled by v. While the choice of v evidently does not affect the loss function, judicious tuning may have a significant impact on the numerical performance by means of altering the statistical error for the associated estimators (see Sect. 3.3). We note that the expression (47) for the relative entropy loss can similarly be augmented by an additional control \(v \in {\mathcal {U}}\). However, Proposition 5.7 in Sect. 5.2 discourages this approach and our numerical experiments using a reweighting for the relative entropy loss have not been promising. In general, we feel that exponential terms of the form appearing in (48b) often have a detrimental effect on the variance of estimators, which should also be compared to an analysis in [106]. Therefore, an important feature of both the relative entropy loss and the log-variance loss (see Proposition 3.10) seems to be that expectations can be taken with respect to controlled processes \((X_s^v)_{0 \le s \le T}\) without incurring exponential factors as in (48b).

Remark 3.9

Setting \(v = 0\) leads to the simplification

where \((X_s)_{0 \le s \le T}\) solves the uncontrolled SDE (3). The quadratic dependence of \({\mathcal {L}}_{{{\,\mathrm{\mathrm {CE}}\,}}}\) on u has been exploited in [128] to construct efficient Galerkin-type approximations of \(u^*\).

Finally, we derive corresponding representations for the variance and log-variance losses:

Proposition 3.10

(Variance-type losses) For \(v \in {\mathcal {U}}\), let \((X_s^v)_{0 \le s \le T}\) denote the unique strong solution to (5), with u replaced by v. Furthermore, define

Then

and

for all \(u \in {\mathcal {U}}\).

Proof

See Appendix A.1. \(\square \)

Setting \(v=u\) in (52) recovers the importance sampling objective in (18), i.e. the variance divergence \(D^{{{\,\mathrm{{\text {Var}}}\,}}}_{{\mathbb {P}}^u}\) encodes the formulation from Problem 2.5. See also [57, 88].

Remark 3.11

While different choices of v merely lead to distinct representations for the cross-entropy loss \({\mathcal {L}}_{{{\,\mathrm{\mathrm {CE}}\,}}}\) according to Proposition 3.7 and Remark 3.8, the variance losses \({\mathcal {L}}_{\mathrm {Var}_v}\) and \({\mathcal {L}}^{\log }_{{{\,\mathrm{{\text {Var}}}\,}}_v}\) do indeed depend on v. However, the property \({\mathcal {L}}_{\mathrm {Var}_v}(u) = 0 \iff u = u^*\) (and similarly for \({\mathcal {L}}^{\log }_{{{\,\mathrm{{\text {Var}}}\,}}_v}\)) holds for all \(v \in {\mathcal {U}}\), by construction.

3.2 FBSDEs and the log-variance loss

As it turns out, the log-variance loss \({\mathcal {L}}^{\log }_{{{\,\mathrm{{\text {Var}}}\,}}_v}\) as computed in (53) is intimately connected to the FBSDE formulation in Problem 2.3 (and we already used the notation \({\widetilde{Y}}_T^{u,v}\) in hindsight). Indeed, setting \(v = 0\) in Proposition 3.10 and writing

for some (at this point, arbitrary) constant \(y_0 \in {\mathbb {R}}\), we recover the forward SDE (12a) from (3) and the backward SDE (12b) from (51) in conjunction with the optimality condition \({\mathcal {L}}^{\log }_{{{\,\mathrm{{\text {Var}}}\,}}_v}(u) = 0\), using also the identification \(u^*(X_s,s) =: -Z_s\) suggested by (22). For arbitrary \(v \in {\mathcal {U}}\), we similarly obtain the generalised FBSDE system

again setting

In this sense, the divergence \(D^{{{\,\mathrm{{\text {Var}}}\,}}(\log )}_{{\mathbb {P}}^v}({\mathbb {P}}^u|{\mathbb {Q}})\) encodes the dynamics (55). Let us again insist on the fact that by construction the solution \((Y_s,Z_s)_{0 \le s \le T}\) to (55) does not depend on \(v \in {\mathcal {U}}\) (the contribution \(\sigma (X^v_s,s) v(X^v_s,s) \, \mathrm ds\) in (55a) being compensated for by the term \(v(X^v_s,s) \cdot Z_s \, \mathrm {d}s\) in (55b)), whereas clearly \((X_s^v)_{0 \le s \le T}\) does. When \(u^*(X_s,s)=-Z_s\) is approximated in an iterative manner (see Sect. 6.1), the choice \(v = u\) is natural as it amounts to applying the currently obtained estimate for the optimal control to the forward process (55a). In this context, the system (55) was put forward in [56, Section III.B]. The bearings of appropriate choices for v will be further discussed in Sect. 5.

It is instructive to compare the expression (54) for the log-variance loss to the ‘moment loss’

suggested in [36, 54] in the context of solving more general nonlinear parabolic PDEsFootnote 9. More generally, we can define

as a counterpart to the expression (53). Note that unlike the losses considered so far, the moment losses depend on the additional parameter \(y_0 \in {\mathbb {R}}\), which has implications in numerical implementations. Also, these losses do not admit a straightforward interpretation in terms of divergences between path measures. As we show in Proposition 4.6, algorithms based on \({\mathcal {L}}_{\mathrm {moment}_v}\) are in fact equivalent to their counterparts based on \({\mathcal {L}}^{\log }_{{{\,\mathrm{{\text {Var}}}\,}}_v}\) in the limit of infinite batch size when \(y_0\) is chosen optimally or when the forward process is controlled in a certain way. We already anticipate that optimising an additional parameter \(y_0\) can slow down convergence towards the solution \(u^*\) considerably (see Sect. 6).

Remark 3.12

Reversing the argument, the log-variance loss can be obtained from (57) by replacing the second moment by the variance and using the translation invariance (54) to remove the dependence on \(y_0\). The fact that this procedure leads to a viable loss function (i.e. satisfying \({\mathcal {L}}(u)=0 \iff u=u^*\)) can be traced back to the fact that the Hamilton–Jacobi PDE (11a) is itself translation invariant (i.e. it remains unchanged under the transformation \(V \mapsto V + \mathrm {const}\)). Following this argument, the log-variance loss can be applied for solving more general PDEs of the form (2.7) in the case when h depends on V only through \(\nabla V\). Furthermore, our interpretation in terms of divergences between probability measures on path space remains valid, at least in the case when \(\sigma \) is constant (in the following we let \(\sigma = I_{d \times d}\) for simplicity)Footnote 10. Indeed, denoting as before the path measure associated to (28a) by \({\mathbb {P}}\), defining the target \({\mathbb {Q}}\) via \(\tfrac{\mathrm {d}{\mathbb {Q}}}{\mathrm {d}{\mathbb {P}}} \propto e^{-g}\), and introducing the neural network approximation \({\widetilde{u}} \approx -\sigma ^\top \nabla V\), the backward SDE (28b) induces a \({\widetilde{u}}\)-dependent path measure \({\mathbb {P}}^{{\widetilde{u}}}\),

assuming that the right-hand side is \({\mathbb {P}}\)-integrable. Using \(Z \approx -{\widetilde{u}}\) in (28b) and denoting the corresponding process by \(Y^{{\widetilde{u}}}\), we then obtain

as an implementable loss function, with straightforward modifications to (2.7) when \({\mathbb {P}}\) is replaced by \({\mathbb {P}}^v\), see (55). Note, however, that in general the vector field \({\widetilde{u}}\) does not lend itself to a straightforward interpretation in terms of a control problem. The PDEs treated in [36, 54] do not possess the shift-invariance property (that is, h depends on V), and thus the vanishing of (60) does not characterise the solution to the PDE (27a) uniquely (not even up to additive constants). Uniqueness may be restored by including appropriate terms in (60) enforcing the terminal condition (27b). Theoretical and numerical properties of such extensions may be fruitful directions for future work.

3.3 Algorithmic outline and empirical estimators

In order to motivate the theoretical analysis in the following sections, let us give a brief overview of algorithmic implementations based on the loss functions developed so far. We refer to Sect. 6.1 for a more detailed account. Recall that by the construction outlined in Sect. 3.1, the solution \(u^*\) as defined in (37) is characterised as the global minimum of \({\mathcal {L}}\), where \({\mathcal {L}}\) represents a generic loss function. Assuming a parametrisation \({\mathbb {R}}^p \ni \theta \mapsto u_{\theta }\) (derived from, for instance, a Galerkin truncation or a neural network), we apply gradient-descent type methods to the function \(\theta \mapsto {\mathcal {L}}(u_\theta )\), relying on the explicit expressions obtained in Propositions 3.5, 3.7 and 3.10. It is an important aspect that those expressions involve expectations that need to be estimated on the basis of ensemble averages. To approximate the loss \({\mathcal {L}}_{{{\,\mathrm{\mathrm {RE}}\,}}}\), for instance, we use the estimator

where \((X^{u,(i)}_s)_{0 \le s \le T}\), \(i=1, \ldots , N\) denote independent realisations of the solution to (5), and \(N \in {\mathbb {N}}\) refers to the batch size. The estimators \(\widehat{{\mathcal {L}}}_{{{\,\mathrm{\mathrm {CE}}\,}}}^{(N)}(u)\), \(\widehat{{\mathcal {L}}}_{{{\,\mathrm{{\text {Var}}}\,}}}^{(N)}(u)\), \(\widehat{{\mathcal {L}}}_{{{\,\mathrm{{\text {Var}}}\,}}}^{\log ,(N)}(u)\) and \(\widehat{{\mathcal {L}}}^{ (N)}_{\mathrm {moment}_v}(u,y_0)\) are constructed analogously, i.e. the estimator for the cross-entropy loss is given by

the estimator for the variance loss is given by

the estimator for the log-variance loss by

and the estimator for the moment loss by

In the previous displays, the overline denotes an empirical mean, for example

and \((W_t^{(i)})_{t \ge 0}\), \(i=1,\ldots , N\) denote independent Brownian motions associated to \((X_t^{u,(i)})_{t \ge 0}\). By the law of large numbers, the convergence \(\widehat{{\mathcal {L}}}^{(N)} (u) \rightarrow {\mathcal {L}}(u)\) holds almost surely up to additive and multiplicative constantsFootnote 11, but as we show in Sect. 6, the fluctuations for finite N play a crucial role for the overall performance of the method. The variance associated to empirical estimators will hence be analysed in Sect. 5.

Remark 3.13

The estimators introduced in this section are standard, and more elaborate constructions, for instance involving control variates [107, Section 4.4.2], can be considered to reduce the variance. We leave this direction for future work. It is noteworthy, however, that the log-variance estimator (64) appears to act as a control variate in natural way, see Propositions 4.3 and 4.6 and Remark 4.7.

Remark 3.14

Note that the estimator \(\widehat{{\mathcal {L}}}^{(N)}_{{{\,\mathrm{\mathrm {CE}}\,}},v}\) depends on \(v \in {\mathcal {U}}\), in contrast to its target \({\mathcal {L}}_{{{\,\mathrm{\mathrm {CE}}\,}}}\); in other words, the limit \(\lim _{N \rightarrow \infty } \widehat{{\mathcal {L}}}^{(N)}_{{{\,\mathrm{\mathrm {CE}}\,}},v}(u)\) does not depend on v. This contrasts the pairs \((\widehat{{\mathcal {L}}}^{(N)}_{\mathrm {Var}_v},{\mathcal {L}}_{\mathrm {Var}_v}) \) and \((\widehat{{\mathcal {L}}}^{\log ,(N)}_{\mathrm {Var}_v},{\mathcal {L}}^{\log }_{\mathrm {Var}_v})\), see also Remark 3.8.

We provide a sketch of the algorithmic procedure in Algorithm 1. Clearly, choosing different loss functions (and corresponding estimators) at every gradient step as indicated leads to viable algorithms. In particular, we have in mind the option of adjusting the forward control \(v \in {\mathcal {U}}\) using the current approximation \(u_\theta \). More precisely, denoting by \(u_\theta ^{(j)}\) the approximation at the \(j^{\text {th}}\) step, it is reasonable to set \(v= u^{(j)}_\theta \) in the iteration yielding \(u^{(j+1)}_\theta \). In the remainder of this paper, we will focus on this strategy for updating v, leaving differing schemes for future work.

4 Equivalence properties in the limit of infinite batch size

In this section we will analyse some of the properties of the losses defined in Sect. 3.1, not taking into account the approximation by ensemble averages described in Sect. 3.3. In other words, the results in this section are expected to be valid when the batch size N used to compute the estimators \(\widehat{{\mathcal {L}}}^{(N)}\) is sufficiently large. The derivatives relevant for the gradient-descent type methodology described in Sect. 3.3 can be computed as follows,

where \(\frac{\delta }{\delta u} {\mathcal {L}}(u;\phi )\) denotes the Gâteaux derivative in direction \(\phi \). We recall its definition [112, Section 5.2]:

Definition 4.1

(Gâteaux derivative) Let \(u \in {\mathcal {U}}\) and \(\phi \in C_b^1({\mathbb {R}}^d \times [0,T]; {\mathbb {R}}^d)\). A loss function \({\mathcal {L}}:{\mathcal {U}} \rightarrow {\mathbb {R}}\) is called Gâteaux-differentiable at u, if, for all \(\phi \in C_b^1({\mathbb {R}}^d \times [0,T]; {\mathbb {R}}^d)\), the real-valued function \(\varepsilon \mapsto {\mathcal {L}}(u + \epsilon \phi )\) is differentiable at \(\varepsilon = 0\). In this case we define the Gâteaux derivative in direction \(\phi \) to be

Remark 4.2

The functions \(\phi _i\) defined in (67) depend on the chosen parametrisation for u. In the case when a Galerkin truncation is used, \( u_\theta = \sum _{i} \theta _i \alpha _i,\) these coincide with the chosen ansatz functions (i.e. \(\phi _i = \alpha _i\)). Concerning neural networks, the family \((\phi _i)_i\) reflects the choice of the architecture, the function \(\phi _i\) encoding the response to a a change in the \(i^{\text {th}}\) weight. For convenience, we will throughout work under the assumption (implicit in Definition 4.1) that the functions \(\phi _i\) are bounded, noting however that this could be relaxed with additional technical effort. Furthermore, note that Definition 4.1 extends straightforwardly to the estimator versions \(\widehat{{\mathcal {L}}}^{(N)}\).

The following result shows that algorithms based on \(\frac{1}{2}{\mathcal {L}}_{{{\,\mathrm{{\text {Var}}}\,}}_v}^{\log }\) and \({\mathcal {L}}_{{{\,\mathrm{\mathrm {RE}}\,}}}\) behave equivalently in the limit of infinite batch size, provided that the update rule \(v=u\) for the log-variance loss is applied (see the discussion towards the end of Sect. 3.3), and that ‘all other things being equal’, for instance in terms of network architecture and choice of optimiser. Furthermore, we provide an analytical expression for the gradient for future reference.

Proposition 4.3

(Equivalence of log-variance loss and relative entropy loss) Let \(u,v \in {\mathcal {U}}\) and \(\phi \in C_b^1({\mathbb {R}}^d \times [0,T] ; {\mathbb {R}}^d)\). Then \({\mathcal {L}}_{{{\,\mathrm{{\text {Var}}}\,}}_v}^{\log }\) and \({\mathcal {L}}_{\mathrm {RE}}\) are Gâteaux-differentiable at u in direction \(\phi \). Furthermore,

Remark 4.4

Proposition 4.3 extends the connection between the cost functional (7) and the FBSDE formulation (12) exposed in Theorem 2.2. Indeed, the Problems 2.1 and 2.3 do not only agree on identifying the solution \(u^*\); it is also the case that the gradients of the corresponding loss functions agree for \(u \ne u^*\).

Moreover, it is instructive to compare the expressions (47) and (53) (or their sample based variants (61) and (64)). Namely, computing the derivatives associated to the relative entropy loss entails differentiating both the SDE-solution \(X^u\) as well as f and g, determining the running and terminal costs. Perhaps surprisingly, the latter is not necessary for obtaining the derivatives of the log-variance loss, opening the door for gradient-free implementations.

Proof of Proposition 4.3

We present a heuristic argument based on the perspective introduced in Sect. 3.1 and refer to Appendix A.2 for a rigorous proof.

For fixed \({\mathbb {P}}\in {\mathcal {P}}({\mathcal {C}})\), let us consider perturbations \({\mathbb {P}}+ \varepsilon {\mathbb {U}}\), where \({\mathbb {U}}\) is a signed measure with \({\mathbb {U}}({\mathcal {C}}) = 0\). Assuming sufficient regularity, we then expect

where the first term on the right-hand side vanishes because of \({\mathbb {U}}({\mathcal {C}}) = 0\). Likewise,

For \({\widetilde{{\mathbb {P}}}} = {\mathbb {P}}\), the second term in (71b) vanishes (again, because of \({\mathbb {U}}({\mathcal {C}}) = 0\)), and hence (71b) agrees with (70) up to a factor of 2. \(\square \)

Remark 4.5

(Local minima) It is interesting to note that (71) can be expressed as

In particular, the derivative is zero for all \({\mathbb {U}}\) with \({\mathbb {U}}({\mathcal {C}}) = 0\) if and only if \({\mathbb {P}}= {\mathbb {Q}}\). In other words, we expect the loss landscape associated to losses based on the log-variance divergence to be free of local minima where the optimisation procedure could get stuck. A more refined analysis concerning the relative entropy loss can be found in [83].

In the following proposition, we gather results concerning the moment loss \({\mathcal {L}}_{\mathrm {moment}_v}\) defined in (57). The first statement is analogous to Proposition 4.3 and shows that \({\mathcal {L}}_{\mathrm {moment}_v}\) and \({\mathcal {L}}^{\log }_{{{\,\mathrm{{\text {Var}}}\,}}_v}\) are equivalent in the infinite batch size limit, provided that the update strategy \(v=u\) is employed. The second statement deals with the alternative \(v \ne u\). In this case, \(y_0 = -\log {\mathcal {Z}}\) (i.e. finding the optimal \(y_0\) according to Theorem 2.2) is necessary for \({\mathcal {L}}_{\mathrm {moment}_v}\) to identify the correct \(u^*\). Consequently, approximation of the optimal control will be inaccurate unless the parameter \(y_0\) is determined without error.

Proposition 4.6

(Properties of the moment loss) Let \(u,v \in {\mathcal {U}}\) and \(y_0 \in {\mathbb {R}}\). Then the following holds:

-

1.

The losses \({\mathcal {L}}_{\mathrm {moment},v}(\cdot , y_0)\) and \({\mathcal {L}}_{{{\,\mathrm{{\text {Var}}}\,}}_v}^{\log }\) are Gâteaux-differentiable at u, and

$$\begin{aligned} \left( \frac{\delta }{\delta u}{\mathcal {L}}_{\mathrm {moment}_v}(u, y_0;\phi ) \right) \Big |_{v=u} = \left( \frac{\delta }{\delta u} {\mathcal {L}}^{\log }_{{{\,\mathrm{{\text {Var}}}\,}}_v}(u;\phi ) \right) \Big |_{v=u} \end{aligned}$$(73)holds for all \(\phi \in C_b^1({\mathbb {R}}^d \times [0,T]; {\mathbb {R}}^d)\). In particular, (73) is zero at \(u = u^*\), independently of \(y_0\).

-

2.

If \(v \ne u\), then

$$\begin{aligned} \frac{\delta }{\delta u}{\mathcal {L}}_{\mathrm {moment}_v}(u, y_0;\phi )= 0 \end{aligned}$$(74)holds for all \(\phi \in C_b^1({\mathbb {R}}^d \times [0,T]; {\mathbb {R}}^d)\) if and only if \(u = u^*\) and \(y_0 = -\log {\mathcal {Z}}\).

Proof

The proof can be found in Appendix A.2. \(\square \)

Remark 4.7

(Control variates) Inspecting the proofs of Propositions 4.3 and 4.6, we see that the identities (69) and (73) rest on the vanishing of terms of the form \( \beta \, {{{\,\mathrm{{\mathbb {E}}}\,}}} \left[ \int _0^T \phi (X_s^u,s) \cdot \mathrm {d}W_s \right] , \) where \(\beta = -y_0\) for the moment loss and \(\beta = - {{\,\mathrm{{\mathbb {E}}}\,}}\left[ g(X_T^u) - {\widetilde{Y}}^{u,u}_T\right] \) for the log-variance loss. The corresponding Monte Carlo estimators (see Sect. 3.3) hence include terms that are zero in expectation and act as control variates [107, Section 4.4.2]. Using the explicit expression for the derivative in (69), the optimal value for \(\beta \) in terms of variance reduction is given by

which splits into a \(\phi \)-independent (i.e. shared across network weights) and a \(\phi \)-dependent (i.e. weight-specific) term. The \(\phi \)-independent term is reproduced in expectation by the log-variance estimator. Numerical evidence suggests that the \(\phi \)-dependent term is often small and fluctuates around zero, but implementations that include this contribution (based on Monte Carlo estimates) hold the promise of further variance reductions. We note however that determining a control variate for every weight carries a significant computational overhead and that Monte Carlo errors need to be taken into account. Finally, if \(y_0\) in the moment loss differs greatly from \(- {{\,\mathrm{{\mathbb {E}}}\,}}\left[ g(X_T^u) - {\widetilde{Y}}_T^{u,u} \right] \), we expect the corresponding variance to be large, hindering algorithmic performance. In our follow-up paper [105], we have provided a more detailed analysis of the connections between the log-variance divergences and variance reduction techniques in the context of computational Bayesian inference.

5 Finite sample properties and the variance of estimators

In this section we investigate properties of the sample versions of the losses as outlined in Sect. 3.3 and, in particular, study their variances and relative errors. We will highlight two different types of robustness, both of which prove significant for convergence speed and stability concerning practical implementations of Algorithm 1, see the numerical experiments in Sect. 6.

5.1 Robustness at the solution \(u^*\)

By construction, the optimal control solution \(u^*\) represents the global minimum of all considered losses. Consequently, the associated directional derivatives vanish at \(u^*\), i.e.

for all \(\phi \in C_b^1({\mathbb {R}}^d \times [0,T]; {\mathbb {R}}^d)\). A natural question is whether similar statements can be made with respect to the corresponding Monte Carlo estimators. We make the following definition.

Definition 5.1

(Robustness at the solution \(u^*\)) We say that an estimator \(\widehat{{\mathcal {L}}}^{(N)}\) is robust at the solution \(u^*\) if

for all \(\phi \in C_b^1({\mathbb {R}}^d \times [0,T]; {\mathbb {R}}^d)\) and \(N \in {\mathbb {N}}\).

Remark 5.2

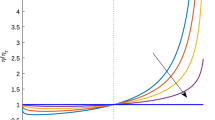

Robustness at the solution \(u^*\) implies that fluctuations in the gradient due to Monte Carlo errors are suppressed close to \(u^*\), facilitating accurate approximation. Conversely, if robustness at \(u^*\) does not hold, then the relative error (i.e. the Monte Carlo error relative to the size of the gradients (67)) grows without bounds near \(u^*\), potentially incurring instabilities of the gradient-descent type scheme. We refer to Fig. 12 and the corresponding discussion for an illustration of this phenomenon.

Proposition 5.3

(Robustness and non-robustness at \(u^*\)) The following holds:

-

1.

The variance estimator \(\widehat{{\mathcal {L}}}^{(N)}_{{{\,\mathrm{{\text {Var}}}\,}}_v}\) and the log-variance estimator \(\widehat{{\mathcal {L}}}^{\log (N)}_{{{\,\mathrm{{\text {Var}}}\,}}_v}\) are robust at \(u^*\), for all \(v \in {\mathcal {U}}\).

-

2.

For all \(v \in {\mathcal {U}}\), the moment estimator \(\widehat{{\mathcal {L}}}^{(N)}_{{\text {moment}}_v}(\cdot ,y_0)\) is robust at \(u^*\), i.e.

$$\begin{aligned} {{\,\mathrm{{\text {Var}}}\,}}\left( \frac{\delta }{\delta u}\Big |_{u=u^*}\widehat{{\mathcal {L}}}_{\mathrm {moment}_v}^{(N)}(u, y_0; \phi ) \right) = 0,\qquad \text {for all} \,\ \phi \in C_b^1({\mathbb {R}}^d \times [0,T]; {\mathbb {R}}^d), \end{aligned}$$(78)if and only if \(y_0 = - \log {\mathcal {Z}}\).

-

3.

The relative entropy estimator \(\widehat{{\mathcal {L}}}_{{{\,\mathrm{\mathrm {RE}}\,}}}^{(N)}\) is not robust at \(u^*\). More precisely, for \(\phi \in C_b^1({\mathbb {R}}^d \times [0,T]; {\mathbb {R}}^d)\),

$$\begin{aligned} {{\,\mathrm{{\text {Var}}}\,}}\left( \frac{\delta }{\delta u}\Big |_{u=u^*}\widehat{{\mathcal {L}}}_{{{\,\mathrm{\mathrm {RE}}\,}}}^{(N)}(u; \phi ) \right) = \frac{1}{N} {\mathbb {E}} \left[ \int _0^T \vert (\nabla u^*)^\top (X_s^{u^*},s) A_s\vert ^2 \,\mathrm ds \right] , \end{aligned}$$(79)where \((A_s)_{0 \le s \le T}\) denotes the unique strong solution to the SDE

$$\begin{aligned} \mathrm {d}A_s {=} (\sigma \phi )(X_s^{u^*},s) \, \mathrm {d}s {+} \left[ (\nabla b {+} \nabla (\sigma u^{*}))(X_s^{u^*},s)\right] ^\top A_s \, \mathrm {d}s {+} A_s \cdot \nabla \sigma (X_s^{u^*},s)\, \mathrm {d}W_s, A_0 {=} 0. \end{aligned}$$(80) -

4.

For all \(v \in {\mathcal {U}}\), the cross-entropy estimator \(\widehat{{\mathcal {L}}}^{(N)}_{{{\,\mathrm{\mathrm {CE}}\,}}, v}\) is not robust at \(u^*\).

Remark 5.4

The fact that robustness of the moment estimator at \(u^*\) requires \(y_0 = -\log {\mathcal {Z}}\) might lead to instabilities in practice as this relation is rarely satisfied exactly. Note that the variance of the relative entropy estimator at \(u^*\) depends on \(\nabla u^*\). We thus expect instabilities in metastable settings, where often this quantity is fairly large. For numerical confirmation, see Fig. 12 and the related discussion.

Proof

For illustration, we show the robustness of the log-variance estimator \(\widehat{{\mathcal {L}}}^{\log (N)}_{{{\,\mathrm{{\text {Var}}}\,}}_v}\). The remaining proofs are deferred to Appendix A.3. By a straightforward calculation (essentially equivalent to (119) in Appendix A.1), we see that

where

The claim now follows from observing that

is almost surely constant (i.e. does not depend on i), according to the second equation in (55b). \(\square \)

5.2 Stability in high dimensions—robustness under tensorisation

In this section we study the robustness of the proposed algorithms in high-dimensional settings. As a motivation, consider the case when the drift and diffusion coefficients in the uncontrolled SDE (3) split into separate contributions along different dimensions,

for \(x=(x_1,\ldots ,x_d) \in {\mathbb {R}}^d\), and analogously for the running and terminal costs f and g as well as for the control vector field u. It is then straightforward to show that the path measure \({\mathbb {P}}^u\) associated to the controlled SDE (5) and the target measure \({\mathbb {Q}}\) defined in (15) factorise,

From the perspective of statistical physics, (85) corresponds to the scenario where non-interacting systems are considered simultaneously. To study the case when d grows large, we leverage the perspective put forward in Sect. 3.1, recalling that \(D({\mathbb {P}}\vert {\mathbb {Q}})\) denotes a generic divergence. In what follows, we will denote corresponding estimators based on a sample of size N by \({\widehat{D}}^{(N)}({\mathbb {P}}\vert {\mathbb {Q}})\), and study the quantity

measuring the relative statistical error when estimating \(D({\mathbb {P}}\vert {\mathbb {Q}})\) from samples, noting that \(r^{(N)}({\mathbb {P}} \vert {\mathbb {Q}}) = {\mathcal {O}}(N^{-1/2})\). As \(r^{(N)}\) is clearly linked to algorithmic performance and stability, we are interested in divergences, corresponding loss functions and estimators whose relative error remains controlled when the number of independent factors in (85) increases:

Definition 5.5

(Robustness under tensorisation) We say that a divergence \(D: {\mathcal {P}}({\mathcal {C}}) \times {\mathcal {P}}({\mathcal {C}}) \rightarrow {\mathbb {R}} \cup \{+ \infty \}\) and a corresponding estimator \({\widehat{D}}^{(N)}\) are robust under tensorisation if, for all \({\mathbb {P}},{\mathbb {Q}} \in {\mathcal {P}}({\mathcal {C}})\) such that \(D({\mathbb {P}} \vert {\mathbb {Q}}) < \infty \) and \(N \in {\mathbb {N}}\), there exists \(C > 0\) such that

for all \(M \in {\mathbb {N}}\). Here, \({\mathbb {P}}_i\) and \({\mathbb {Q}}_i\) represent identical copies of \({\mathbb {P}}\) and \({\mathbb {Q}}\), respectively, so that \(\bigotimes _{i=1}^M {\mathbb {P}}_i\) and \(\bigotimes _{i=1}^M {\mathbb {Q}}_i\) are measures on the product space \(\bigotimes _{i=1}^M C([0,T],{\mathbb {R}}^d) \simeq C([0,T],{\mathbb {R}}^{Md})\).

Clearly, if \({\mathbb {P}}\) and \({\mathbb {Q}}\) are measures on \(C([0,T],{\mathbb {R}})\), then M coincides with the dimension of the combined problem.

Remark 5.6

The variance and log-variance divergences defined in (43) and (44) depend on an auxiliary measure \(\widetilde{{\mathbb {P}}}\). Definition 5.5 extends straightforwardly by considering the product measures \(\bigotimes _{i=1}^d\widetilde{{\mathbb {P}}}_i\). In a similar vein, the relative entropy and cross-entropy divergences admit estimators that depend on a further probability measure \({\widetilde{{\mathbb {P}}}}\),

where \(X^j \sim {\widetilde{{\mathbb {P}}}}\), motivated by the identities \(D^{{{\,\mathrm{\mathrm {RE}}\,}}}({\mathbb {P}}\vert {\mathbb {Q}}) = {\mathbb {E}}_{{\widetilde{{\mathbb {P}}}}} \left[ \log \left( \frac{\mathrm {d}{\mathbb {P}}}{\mathrm {d}{\mathbb {Q}}} \right) \frac{\mathrm {d}{\mathbb {P}}}{\mathrm {d}{\widetilde{{\mathbb {P}}}}}\right] \) and \(D^{{{\,\mathrm{\mathrm {CE}}\,}}}({\mathbb {P}}\vert {\mathbb {Q}}) = {\mathbb {E}}_{{\widetilde{{\mathbb {P}}}}} \left[ \log \left( \frac{\mathrm {d}{\mathbb {Q}}}{\mathrm {d}{\mathbb {P}}} \right) \frac{\mathrm {d}{\mathbb {Q}}}{\mathrm {d}{\widetilde{{\mathbb {P}}}}}\right] \). We refer to Remark 3.8 for a similar discussion.

Proposition 5.7

We have the following robustness and non-robustness properties:

-

1.

The log-variance divergence \(D^{\mathrm {Var(log)}}_{\widetilde{{\mathbb {P}}}}\), approximated using the standard Monte Carlo estimator, is robust under tensorisation, for all \(\widetilde{{\mathbb {P}}} \in {\mathcal {P}}({\mathcal {C}})\).

-

2.

The relative entropy divergence \(D^{{{\,\mathrm{\mathrm {RE}}\,}}}\), estimated using \({\widehat{D}}^{{{\,\mathrm{\mathrm {RE}}\,}},(N)}_{{\widetilde{{\mathbb {P}}}}}\), is robust under tensorisation if and only if \({\widetilde{{\mathbb {P}}}} = {\mathbb {P}}\).

-

3.

The variance divergence \(D^{\mathrm {Var}}_{\widetilde{{\mathbb {P}}}}\) is not robust under tensorisation when approximated using the standard Monte Carlo estimator. More precisely, if \(\frac{\mathrm {d}{\mathbb {Q}}}{\mathrm {d}{\mathbb {P}}}\) is not \(\widetilde{{\mathbb {P}}}\)-almost surely constant, then, for fixed \(N \in {\mathbb {N}}\), there exist constants \(a > 0\) and \(C>1\) such that

$$\begin{aligned} r^{(N)} \left( \bigotimes _{i=1}^M {\mathbb {P}}_i \Big \vert \bigotimes _{i=1}^M {\mathbb {Q}}_i \right) \ge a \,C^M, \end{aligned}$$(89)for all \(M\ge 1\).

-

4.

The cross-entropy divergence \(D^{{{\,\mathrm{\mathrm {RE}}\,}}}\), estimated using \({\widehat{D}}^{{{\,\mathrm{\mathrm {RE}}\,}},(N)}_{{\widetilde{{\mathbb {P}}}}}\), is not robust under tensorisation. More precisely, for fixed \(N \in {\mathbb {N}}\) there exists a constant \(a>0\) such that

$$\begin{aligned} r^{(N)} \left( \bigotimes _{i=1}^M {\mathbb {P}}_i \Big \vert \bigotimes _{i=1}^M {\mathbb {Q}}_i \right) \ge a \left( \sqrt{ \chi ^2 ({\mathbb {Q}}\vert {\widetilde{{\mathbb {P}}}}) + 1} \right) ^M, \end{aligned}$$(90)for all \(M \ge 1\). Here

$$\begin{aligned} \chi ^2({\mathbb {Q}}\vert {\widetilde{{\mathbb {P}}}}) = {{{\,\mathrm{{\mathbb {E}}}\,}}}_{{\widetilde{{\mathbb {P}}}}} \left[ \left( \frac{\mathrm {d} {\mathbb {Q}}}{\mathrm {d} {\widetilde{{\mathbb {P}}}}}\right) ^2 - 1 \right] \end{aligned}$$(91)denotes the \(\chi ^2\)-divergence between \({\mathbb {Q}}\) and \({\widetilde{{\mathbb {P}}}}\).

Proof

See Appendix A.3. \(\square \)

Remark 5.8

Proposition 5.7 suggests that the variance and cross-entropy losses perform poorly in high-dimensional settings as the relative errors (89) and (90) scale exponentially in M. Numerical support can be found in Sect. 6. We note that in practical scenarios we have that \({\widetilde{{\mathbb {P}}}} \ne {\mathbb {Q}}\) as it is not feasible to sample from the target, and hence \(\sqrt{ \chi ^2 ({\mathbb {Q}}\vert {\widetilde{{\mathbb {P}}}}) + 1} > 1\).

6 Numerical experiments

In this section we illustrate our theoretical results on the basis of numerical experiments. In Sect. 6.1 we discuss computational details of our implementations, complementing the discussion in Sect. 3.3. The Sects. 6.2 and 6.3 focus on the case when the uncontrolled SDE (3) describes an Ornstein–Uhlenbeck process and the dimension is comparatively large. In Sect. 6.4 we consider metastable settings (of both low and moderate dimensionality), representative of those typically encountered in rare event simulations (see Example 2.1). We rely on PyTorch as a tool for automatic differentiation and refer to the code at https://github.com/lorenzrichter/path-space-PDE-solver.

6.1 Computational aspects

The numerical treatment of the Problems 2.1-2.5 using the IDO-methodology is based on the explicit loss function representations in Sect. 3.1, together with a gradient descent scheme relying on automatic differentiationFootnote 12. Following the discussion in Sect. 3.3, a particular instance of an IDO-algorithm is determined by the choice of a loss function, and, in the case of the cross-entropy, moment and variance-type losses, by a strategy to update the control vector field v in the forward dynamics (see Propositions 3.7 and 3.10). As mentioned towards the end of Sect. 3.3, we focus on setting \(v=u\) at each gradient step, i.e. to use the current approximation as a forward control. Importantly, we do not differentiate the loss with respect to v; in practice this can be achieved by removing the corresponding variables from the autodifferentiation computational graph (for instance using the detach command in the PyTorch package). Including differentiation with respect to v as well as more elaborate choices of the forward control might be rewarding directions for future research.

Practical implementations require approximations at three different stages: first, the time discretisation of the SDEs (3) or (5); second, the Monte Carlo approximation of the losses (as outlined in Sect. 3.3), or, to be precise, the approximation of their respective gradients; and third, the function approximation of either the optimal control vector field \(u^*\) or the value function V. Moreover, implementations vary according to the choice of an appropriate gradient descent method.

Concerning the first point, we discretise the SDE (5) using the Euler-Maruyama scheme [78] along a time grid \(0 = t_0< \dots < t_K = T\), namely iterating

where \(\Delta t > 0\) denotes the step size, and \(\xi _n \sim {\mathcal {N}}(0, I_{d \times d})\) are independent standard Gaussian random variables. Recall that the initial value can be random rather than deterministic (see Remark 2.5). We demonstrate the potential benefit of sampling \({\widehat{X}}_0\) from a given density in Sect. 6.3.

We next discuss the approximation of \(u^*\). First, note that a viable and straightforward alternative is to instead approximate V and compute \(u^* = - \sigma ^\top \nabla V\) whenever needed (for instance by automatic differentiation), see [101]. However, this approach has performed slightly worse in our experiments, and, furthermore, V can be recovered from \(u^* \) by integration along an appropriately chosen curve. To approximate \(u^*\), a classic option is a to use a Galerkin truncation, i.e. a linear combination of ansatz functions

for \(n \in \{0, \dots , K-1\}\) with parameters \(\theta _m^n \in {{\,\mathrm{{\mathbb {R}}}\,}}\). Choosing an appropriate set \(\{ \alpha _m \}_{m=1}^M\) is crucial for algorithmic performance – a task that in high-dimensional settings requires detailed a priori knowledge about the problem at hand. Instead, we focus on approximations of \(u^*\) realised by neural networks.

Definition 6.1

(Neural networks) We define a standard feed-forward neural network \(\Phi _\varrho :{{\,\mathrm{{\mathbb {R}}}\,}}^k \rightarrow {{\,\mathrm{{\mathbb {R}}}\,}}^m\) by

with matrices \(A_l \in {{\,\mathrm{{\mathbb {R}}}\,}}^{n_{l} \times n_{l-1}}\), vectors \(b_l \in {{\,\mathrm{{\mathbb {R}}}\,}}^{n_l}, 1 \le l \le L\), and a nonlinear activation function \(\varrho : {{\,\mathrm{{\mathbb {R}}}\,}}\rightarrow {{\,\mathrm{{\mathbb {R}}}\,}}\) that is to be applied componentwise. We further define the DenseNet [38, 63] containing additional skip connections,

where \(x_{L}\) is defined recursively by

with \(A_l \in {{\,\mathrm{{\mathbb {R}}}\,}}^{n_l \times \sum _{i=0}^{l-1} n_i}, b_l \in {{\,\mathrm{{\mathbb {R}}}\,}}^l\) for \(1 \le l \le L-1\) and \(x_1 = x\), \(n_0 = d\). In both cases the collection of matrices \(A_l\) and vectors \(b_l\) comprises the learnable parameters \(\theta \).

Neural networks are known to be universal function approximators [28, 62], with recent results indicating favourable properties in high-dimensional settings [40, 41, 52, 97, 111]. The control u can be represented by either \(u(x,t) = \Phi _\varrho (y)\) with \(y=(x,t)^\top \), i.e. using one neural network for both the space and time dependence, or by \(u(x,t_n) = \Phi ^n_\varrho (x)\), using one neural network per time step. The former alternative led to better performance in our experiments, and the reported results rely on this choice. For the gradient descent step we either choose SGD with constant learning rate [51, Algorithm 8.1] or Adam [51, Algorithm 8.7], [76], a variant that relies on adaptive step sizes and momenta. Further numerical investigations on network architectures and optimisation heuristics can be found in [23].