Abstract

Texture analysis plays an important role in healthcare, agriculture, and industry, domains in which multi-channel sensors are gaining attention. This contribution presents an interpretable and efficient framework for texture classification and segmentation that exploits colour or channel information and requires little data to produce accurate results. This makes the framework well suited for medical applications and resource-limited hardware. Our approach builds upon a distance-based generalized matrix learning vector quantization (GMLVQ) algorithm. We extend it with a parametrized angle-based dissimilarity and introduce a special matrix format for multi-channel images. The classification accuracy of various model designs was evaluated on the VisTex and ALOT data, and the segmentation application was demonstrated on an agricultural data set. The parametrized angle dissimilarity leads to better model generalization and greater robustness against varying lighting conditions than its Euclidean counterpart. The proposed matrix format for multi-channel images enhances classification accuracy while reducing the number of parameters. For segmentation, our method shows promising results even with a small, class-imbalanced training data set. The proposed methodology achieves higher accuracy than prior benchmarks and a small-scale CNN while maintaining a significantly lower parameter count. Notably, it is interpretable and accurate in scenarios where only limited and unbalanced training data are available.


Introduction

Texture is an inherent property of any object and can hence be used to describe and distinguish objects. As artificial intelligence (AI) develops, texture analysis is increasingly associated with computer vision, which aims to process and understand digital images. By a digital image, we mean a composition of picture elements (pixels) that have spatial and channel dimensions, as shown in Fig. 1. In this context, texture refers to spatial variations in the channels [1], which AI can detect and measure. Consequently, AI methods excel in tasks like classification and segmentation because they can precisely analyze the texture within specific image regions [2]. Texture analysis is used in fields ranging from industrial quality control [3, 4] and agriculture [5,6,7] to medical imaging [8,9,10], as exemplified in Fig. 2.

A range of methodologies is employed for texture analysis, including well-established techniques such as Gabor filtering [11], co-occurrence matrices [12], local binary patterns (LBP) [13], and Markov random fields (MRF) [14]. While state-of-the-art techniques like convolutional neural networks (CNN) exhibit remarkable accuracy [15,16,17], they typically demand large amounts of training data, lack interpretability, and require substantial computational resources. These issues become particularly relevant in real-time monitoring with unmanned aerial vehicles (UAV) such as drones, which are widely used in agriculture for their flexibility [18]. This has led to an increased demand for lightweight software solutions. In medical applications, the interpretability of results becomes a critical concern. Although traditional techniques do not necessarily share these issues with CNNs, they were originally designed for single-channel intensity images. For multichannel input such as RGB, a common practice is to convert it into an intensity image using a fixed transformation function. However, task-specific transformations, as adopted by the colour image analysis learning vector quantization (CIA-LVQ) framework [19], have shown potential for enhancing accuracy. CIA-LVQ is a prototype-based classifier that learns RGB-to-intensity transformations under which the dissimilarities between image patches are computed. Additionally, it uses a Gabor filter bank as a feature extractor. In [20], CIA-LVQ was extended with an adaptive filter bank, which improved classification results even further. Both works considered images in the complex Fourier domain. A recent variation of this framework operates in the spatial domain and learns only the coefficients of the Gabor filters, reducing the number of adaptive parameters to 5 per filter [21].

All existing CIA-LVQ papers use the parametrized quadratic form (QF) in Euclidean space to measure the dissimilarity between filtered patches. Other LVQ variants employ a parametrized angle-based dissimilarity [22] and probabilistic cost functions for soft class assignments [23, 24]. Note that these variants can readily incorporate the extensions proposed here.

This work is an extension of our conference paper [25], and our aim is to develop an efficient, interpretable multichannel texture analysis framework tailored to scenarios with limited training data. As a foundation, we use CIA-LVQ, but we adjust its original adaptive dissimilarity measure and extend it with a parametrized angle-based (PA) dissimilarity. Moreover, we demonstrate a special case of transformation matrices designed specifically for multichannel imagery, which enhances model generalization and explainability while reducing complexity. Unlike previous works [19, 20], which consider images in the complex Fourier domain, we operate in the spatial domain for a more intuitive interpretation of the results. We omit the filtering operation in order to focus on the influence of the dissimilarity measure and matrix format on the results, which distinguishes our approach from [21]. In addition to the VisTex [26] data set used in previous works, we evaluate our models on a subset of ALOT [27] that offers varying illumination conditions. Notably, our model outperforms the most recently published CIA-LVQ results and a CNN while having 226 times and 106 times fewer trainable parameters, respectively. Finally, we showcase the applicability of our method to the semantic segmentation of agricultural photos, highlighting the robustness and versatility of the proposed framework.

This paper is structured as follows. First, we explain the methodology in detail in Sect. “Methods”. Specifically, the theoretical basis of LVQ can be found in Sect. “LVQ for Multichannel Image Analysis”, while the experimental setups for classification and segmentation are described in Sect. “Experiments”. The results are discussed and compared to other benchmarks in Sect. “Results and Discussion”. Finally, in Sect. “Conclusion and Future Work”, we give a general evaluation of our framework and discuss plans for future work.

Methods

In this section, we give a detailed overview of our methodology, which is based on the idea of prototype learning. This paradigm defines models built around a set of representative data examples (prototypes), and predictions for new, unseen data are made according to their similarity to these prototypes. Learning vector quantization (LVQ) [28] is a prototype-based classifier that stands out for its interpretability, intuitive accessibility, and flexibility [29]. Since its invention, various extensions have been proposed, such as generalized LVQ (GLVQ) [30] and generalized matrix LVQ (GMLVQ) [31], which we explain in the following sections.

LVQ for Multichannel Image Analysis

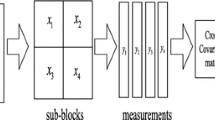

Our pipeline is similar to that of CIA-LVQ and can be split into input preprocessing and prototype-based learning. The first stage is the extraction of random non-overlapping patches from the original images. Without loss of generality, we consider all h channels, and hence a patch of size \(p \times p\) has dimensionality \(n=p \times p \times h\). For colour images, h is typically 3 or 4 (e.g. RGB, CMYK), but the extension to hyperspectral data is possible. The value of p should be selected such that the important parts of a given texture are covered. Finally, the patches are vectorized by row-wise flattening and channel-wise concatenation to form feature vectors \(\textbf{x}_i\in \mathbb {R}^n\), as shown in Fig. 3. In contrast to [19, 20], we consider real-valued input in the spatial domain and no Gabor filter bank. Instead, we analyze the use of the previously introduced angle LVQ (ALVQ) [22] for colour texture classification and propose a block-diagonal parametrization for multichannel intensities.
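The preprocessing step can be sketched in NumPy as follows. The function name `extract_patches` is hypothetical, and enforcing non-overlap by sampling disjoint grid cells is our own assumption (the text does not say how non-overlap is ensured); this choice caps the number of patches per image at \(\lfloor H/p \rfloor \cdot \lfloor W/p \rfloor\).

```python
import numpy as np


def extract_patches(image, p, num_patches, rng):
    """Draw random non-overlapping p x p patches from an (H, W, h) image.

    Each patch is vectorized channel-major: the rows of channel 0 are flattened
    first, then channel 1, and so on, giving vectors of length n = p * p * h.
    """
    H, W, h = image.shape
    # Assumption: non-overlap is enforced by sampling disjoint grid cells.
    cells = [(r, c) for r in range(0, H - p + 1, p) for c in range(0, W - p + 1, p)]
    if num_patches > len(cells):
        raise ValueError("requested more patches than non-overlapping cells")
    chosen = rng.choice(len(cells), size=num_patches, replace=False)
    X = np.empty((num_patches, p * p * h), dtype=float)
    for k, idx in enumerate(chosen):
        r, c = cells[idx]
        patch = image[r:r + p, c:c + p, :]            # shape (p, p, h)
        # (h, p, p), then row-wise flattening per channel, channels concatenated.
        X[k] = patch.transpose(2, 0, 1).reshape(-1)
    return X
```

For a 128×128 RGB image and p = 15, each row of the returned matrix has n = 675 entries.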

Additionally, we demonstrate a segmentation application of the framework. Trained classifiers can be used for patch-based segmentation, with the texture pattern as the criterion for image partitioning. Semantic segmentation, where each pixel is classified based on its neighbourhood, is straightforward and requires a sliding window of the same dimensions \(p \times p\) as the training patches.
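A minimal sketch of such sliding-window segmentation, assuming an odd window size p, reflect padding at the image borders (our assumption; border handling is not specified), and a hypothetical `classify` callable that maps vectorized patches to labels. It relies on `numpy.lib.stride_tricks.sliding_window_view` (NumPy 1.20 or later).

```python
import numpy as np


def segment(image, p, classify):
    """Label each pixel of an (H, W, h) image by classifying its p x p window.

    `classify` is any callable mapping an (N, p*p*h) array of vectorized patches
    to N labels, e.g. a trained nearest-prototype classifier.
    """
    if p % 2 == 0:
        raise ValueError("an odd window size p keeps the window centred on the pixel")
    H, W, h = image.shape
    r = p // 2
    # Assumption: reflect-pad so that every pixel has a full window.
    padded = np.pad(image, ((r, r), (r, r), (0, 0)), mode="reflect")
    # Shape (H, W, h, p, p): one window per pixel, channel axis kept in place.
    windows = np.lib.stride_tricks.sliding_window_view(padded, (p, p), axis=(0, 1))
    # Channel-major flattening, matching the vectorization of training patches.
    X = windows.reshape(H * W, h * p * p)
    return np.asarray(classify(X)).reshape(H, W)
```

Because every pixel gets its own window, the cost grows with \(H \cdot W \cdot n\); for large images the windows can be classified in row batches instead.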

LVQ Principles

LVQ is a supervised algorithm with a winner-takes-all scheme, in which a data point is classified according to the label of its closest prototype. Throughout the following, we assume a labelled training data set \(\{(\textbf{x}_i,y_i) \mid \textbf{x}_i\in \mathbb {R}^n,\ y_i\in \{1,\ldots ,C\}\}_{i=1}^N\) and a set of prototype vectors \(\textbf{w}_j \in \mathbb {R}^n\) with labels \(c(\textbf{w}_j)\in \{1,\ldots ,C\}\). In contrast to the original heuristic prototype update, GLVQ introduced a training scheme based on the minimization of the cost function

\[ E=\sum_{i=1}^{N}\Phi\left(\mu_i\right), \qquad \mu_i=\frac{d_i^J-d_i^K}{d_i^J+d_i^K}, \tag{1} \]

with the distance \(d_i^J=d(\textbf{x}_i,\textbf{w}^J)\) to the closest prototype \(\textbf{w}^J\) with the same class label, \(y_i=c(\textbf{w}^J)\), and the distance \(d_i^K=d(\textbf{x}_i,\textbf{w}^K)\) to the closest prototype with a non-matching label, \(y_i\ne c(\textbf{w}^K)\). \(\Phi\) is a monotonic function; we use the identity function in this contribution. The definition of d plays a central role in LVQ-based classifiers, as it determines the closest prototypes. Figure 4 illustrates the essence of the algorithm and highlights the importance of d. In this paper, the quadratic form (QF) and parametric angle-based (PA) dissimilarities are considered (see Notes).

Fig. 4. LVQ learning principle: for a data point \(\textbf{x}_i\), the distance to all prototypes \(\textbf{w}_j\) is computed. In the training phase, \(\textbf{w}_1\) and \(\textbf{w}_2\) are updated as the closest prototypes from the same and a different class, respectively, with respect to the training data point \(\textbf{x}_i\). In the inference phase, \(\textbf{x}_i\) is an unseen observation that is classified according to its closest prototype, i.e. \(\textbf{w}_1\).
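The GLVQ cost with identity \(\Phi\) and the winner-takes-all rule can be sketched from a precomputed dissimilarity matrix. The function names `glvq_cost` and `wta_predict` are hypothetical; any of the dissimilarities discussed below can be used to fill `D`.

```python
import numpy as np


def glvq_cost(D, y, proto_labels):
    """GLVQ cost with Phi = identity.

    D[i, j] is the dissimilarity between sample i and prototype j.
    Returns the per-sample values mu_i = (dJ - dK) / (dJ + dK) and their sum.
    """
    same = y[:, None] == proto_labels[None, :]        # matching-label mask
    dJ = np.where(same, D, np.inf).min(axis=1)        # closest correct prototype
    dK = np.where(~same, D, np.inf).min(axis=1)       # closest wrong prototype
    mu = (dJ - dK) / (dJ + dK)                        # in [-1, 1]; negative = correct
    return mu, mu.sum()


def wta_predict(D, proto_labels):
    """Winner-takes-all: each sample gets the label of its closest prototype."""
    return proto_labels[np.argmin(D, axis=1)]
```

Since \(\mu_i < 0\) exactly when the closest prototype carries the correct label, minimizing the sum pushes samples towards correct classification with a margin.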

Generalized Matrix LVQ

GMLVQ is an extension of GLVQ that makes the distance measure adaptive by employing a positive semi-definite \(n \times n\) matrix \(\Lambda\), which accounts for pairwise correlations between the features. Positive semi-definiteness is ensured by the decomposition \(\Lambda = \Omega ^T\Omega\) with \(\Omega \in \mathbb {R}^{m\times n}\) and \(m \le n\). The corresponding quadratic form (QF) is defined as

\[ d^{\Omega}(\textbf{x},\textbf{w})=(\textbf{x}-\textbf{w})^T\Omega^T\Omega(\textbf{x}-\textbf{w}), \tag{2} \]

where \(\Omega\) is learned and updated along with the prototypes; hence, the distance becomes adaptive. Special cases of (2) include the squared Euclidean distance, if the resulting \(\Lambda\) is fixed to the identity matrix, and generalized relevance LVQ [32], where \(\Lambda\) is a diagonal matrix. Rectangular matrices \(\Omega\) with \(m<n\) imply dimensionality reduction by means of a linear transformation.
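As a small sketch (hypothetical function name `qf_distance`), the quadratic form can be computed as the squared norm of the projected difference, which makes its positive semi-definiteness explicit and covers the special cases above through the choice of \(\Omega\).

```python
import numpy as np


def qf_distance(x, w, omega):
    """Adaptive quadratic form d(x, w) = (x - w)^T Omega^T Omega (x - w).

    Computed as ||Omega (x - w)||^2, so it is non-negative by construction.
    omega may be square (n x n) or rectangular (m x n, m < n).
    """
    diff = omega @ (x - w)      # project the difference into R^m
    return float(diff @ diff)
```

With `omega = np.eye(n)` this reduces to the squared Euclidean distance, a diagonal `omega` gives relevance weights \(\Omega_{kk}^2\), and an \(m \times n\) matrix with \(m < n\) measures distances in a reduced space.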

The CIA-LVQ framework [19,20,21] adopts full rectangular matrices \(\Omega\) with \(m=\frac{n}{3}\) to obtain a lower-dimensional intensity (“quasi-greyscale”) representation of the original 3-channel RGB image patch. While unsupervised techniques like PCA can generate such a representation, they come with assumptions, e.g. variance as the criterion of importance, linearity, and orthogonality, that do not always hold [33]. Moreover, unlike principal components, which lack interpretability, \(\Lambda\) can be understood as the correlation matrix of spatio-colour features. Naturally, this concept can be generalized to data with h intensity channels. In this work, we use rectangular \(\Omega\)’s and introduce a new option developed specifically for multichannel intensity images (both variants are shown in Fig. 5). We term it a block-diagonal matrix \(\widehat{\Omega }\).

Hence, every row \(\widehat{\Omega }_{i}\) contains only contributions from the same pixel in each of the h channels, which reduces the number of free matrix parameters from \(m\times n\) to hm and can lower the risk of overfitting. Instead of a global transformation, local matrices \(\Omega ^j\) or \(\widehat{\Omega }^j\) attached to each prototype or class can be trained, which changes the piecewise linear decision boundaries into nonlinear ones composed of quadratic pieces [31]. The resulting number of trainable parameters for class-wise matrices is then \(C(nm + ln)\) for full \(\Omega ^j\) or \(C(n + ln)\) for block-diagonal \(\widehat{\Omega }^j\), with C being the number of classes and l the number of prototypes per class.

Fig. 5. Data vector \(\textbf{x}_i\) is projected with a full \(\Omega ^T\) (a) or block-diagonal \(\widehat{\Omega }^T\) (b) transformation matrix, resulting in a lower-dimensional representation \(\mathbf {\xi }_i\). Shading indicates contributions to the shaded element of \(\mathbf {\xi }_i\), and empty cells indicate zero weights.
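The block-diagonal structure and the parameter counts can be sketched as follows, assuming the channel-major vectorization of Fig. 3 (so column \(k m + i\) holds pixel i of channel k). The helper names `block_diagonal_omega` and `classwise_param_count` are hypothetical, and the values in the usage note are illustrative rather than the configuration of any experiment.

```python
import numpy as np


def block_diagonal_omega(weights):
    """Assemble Omega_hat (m x n, n = h * m) from an (h, m) array of free weights.

    Assumes channel-major vectorization, so column k * m + i holds pixel i of
    channel k. Row i therefore mixes only pixel i across the h channels.
    """
    h, m = weights.shape
    # One m x m diagonal matrix per channel, stacked side by side.
    return np.hstack([np.diag(weights[k]) for k in range(h)])


def classwise_param_count(C, l, n, m, full=True):
    """Trainable parameters with one matrix per class and l prototypes per class."""
    matrix_params = n * m if full else n    # block-diagonal: h * m = n weights
    return C * (matrix_params + l * n)
```

For example, with C = 4, l = 1, n = 675 and m = 225, the full variant has 610,200 parameters, while the block-diagonal variant has 5,400. The projection \(\widehat{\Omega }\textbf{x}\) then yields one quasi-greyscale value per pixel, a learned weighted sum of its h channel values.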

Parametrized Angle Dissimilarity

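A recent extension of GMLVQ named angle LVQ (ALVQ) [22] introduced a parametrized angle (PA) distance, which demonstrated very robust behaviour for heterogeneous data and imbalanced classes. The dissimilarity is defined as

\[ d^{\beta}(\textbf{x},\textbf{w})=g\left(b^{\Omega},\beta\right)=\frac{\exp\left(-\beta\,(b^{\Omega}-1)\right)-1}{\exp(2\beta)-1}, \qquad b^{\Omega}=\frac{\textbf{x}^T\Omega^T\Omega\,\textbf{w}}{\lVert\Omega\textbf{x}\rVert\,\lVert\Omega\textbf{w}\rVert}. \]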

The exponential function g transforms the parametrized cosine similarity \(b^{\Omega }=\cos \theta \in [-1,1]\) into a dissimilarity \(\in [0,1]\). The hyperparameter \(\beta\) influences the slope, as shown in Fig. 6b, thereby weighting the contribution of samples within the receptive field according to their distance to the prototype. For \(\beta \rightarrow 0\), the weighting is near-linear, while increasing \(\beta\) non-linearly decreases the contribution of samples further away from the prototype. The angle-based distance classifies on the hypersphere instead of in Euclidean space and hence does not consider the magnitude of vectors (see Fig. 6a): scaling \(\textbf{x}\) by a positive factor leaves \(b^{\Omega}\) unchanged. This can be beneficial in scenarios where, e.g., lighting conditions change.

Fig. 6. Euclidean and angle-based distances, marked as dashed lines and arcs, respectively (a). Note that in the illustrated scenario, the Euclidean distances from \(\textbf{x}\) to \(\textbf{w}_1\) and \(\textbf{w}_2\) are equal, whereas the angle-based distances are not. For the parametric angle distance, \(\beta\) influences the result nonlinearly (b).
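A sketch of the PA dissimilarity, assuming the ALVQ form of g stated in the lead-in (\(g(b,\beta) = (\exp(-\beta(b-1)) - 1)/(\exp(2\beta) - 1)\), our reading of [22]). The function name `pa_dissimilarity` is hypothetical; `np.expm1` is used for numerical accuracy at small \(\beta\), and \(\beta = 0\) is handled through its linear limit \((1-b)/2\).

```python
import numpy as np


def pa_dissimilarity(x, w, omega, beta):
    """Parametrized angle dissimilarity (assumed ALVQ-style form).

    g(b, beta) = (exp(-beta * (b - 1)) - 1) / (exp(2 * beta) - 1),
    where b is the cosine of the angle between Omega x and Omega w.
    Maps b = 1 (same direction) to 0 and b = -1 (opposite) to 1.
    """
    ox, ow = omega @ x, omega @ w
    b = ox @ ow / (np.linalg.norm(ox) * np.linalg.norm(ow))   # cos(theta)
    if beta == 0:
        return (1.0 - b) / 2.0          # limit beta -> 0: linear weighting
    return np.expm1(-beta * (b - 1.0)) / np.expm1(2.0 * beta)
```

Multiplying `x` by any positive factor leaves the result unchanged, which is the property that makes the measure attractive under global brightness changes.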

Optimization and Fine-Tuning

Optimization of the prototypes and transformation matrices occurs through the minimization of the non-convex cost function in Eq. 1 by gradient methods, such as stochastic gradient descent or conjugate gradient. The corresponding partial derivatives for local matrix LVQ using the QF read:
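As a sketch, for a single training sample with \(\Phi\) the identity, these derivatives follow from Eq. (1) and Eq. (2) by the chain rule; this is our own derivation and may differ in notation from [31]. Here \(\Omega\) denotes the local matrix \(\Omega^j\) attached to the respective prototype \(\textbf{w}\):

\[
\frac{\partial \mu_i}{\partial d_i^J}=\frac{2\,d_i^K}{(d_i^J+d_i^K)^2},\qquad
\frac{\partial \mu_i}{\partial d_i^K}=-\frac{2\,d_i^J}{(d_i^J+d_i^K)^2},
\]
\[
\frac{\partial d^{\Omega}(\textbf{x}_i,\textbf{w})}{\partial \textbf{w}}=-2\,\Omega^T\Omega\,(\textbf{x}_i-\textbf{w}),\qquad
\frac{\partial d^{\Omega}(\textbf{x}_i,\textbf{w})}{\partial \Omega_{rc}}=2\,(x_{i,c}-w_c)\,\left[\Omega(\textbf{x}_i-\textbf{w})\right]_r ,
\]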

with r and c specifying the row and column, respectively. For the PA distance:
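A corresponding chain-rule sketch, again our own derivation rather than a quotation from [22], assuming \(g(b,\beta)=\frac{\exp(-\beta(b-1))-1}{\exp(2\beta)-1}\) and writing \(A=\lVert\Omega\textbf{x}\rVert\) and \(B=\lVert\Omega\textbf{w}\rVert\):

\[
\frac{\partial d^{\beta}}{\partial \textbf{w}}=\frac{\partial g}{\partial b^{\Omega}}\,\frac{\partial b^{\Omega}}{\partial \textbf{w}},\qquad
\frac{\partial g}{\partial b^{\Omega}}=\frac{-\beta\exp\left(-\beta(b^{\Omega}-1)\right)}{\exp(2\beta)-1},\qquad
\frac{\partial b^{\Omega}}{\partial \textbf{w}}=\frac{\Omega^T\Omega\,\textbf{x}}{AB}-b^{\Omega}\,\frac{\Omega^T\Omega\,\textbf{w}}{B^{2}},
\]
\[
\frac{\partial b^{\Omega}}{\partial \Omega}=\frac{\Omega\left(\textbf{w}\textbf{x}^T+\textbf{x}\textbf{w}^T\right)}{AB}-b^{\Omega}\left(\frac{\Omega\textbf{x}\textbf{x}^T}{A^{2}}+\frac{\Omega\textbf{w}\textbf{w}^T}{B^{2}}\right),
\]

where the (r, c) entry of the last expression is the derivative with respect to \(\Omega_{rc}\), and \(\partial d^{\beta}/\partial\Omega\) follows by multiplying it with \(\partial g/\partial b^{\Omega}\). The negative sign of \(\partial g/\partial b^{\Omega}\) reflects that a larger cosine similarity yields a smaller dissimilarity.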

To prevent degeneration of the algorithm, [31] advises normalizing \(\Omega ^j\) to enforce a unit trace \(\text {tr}(\Lambda ^j)\equiv \sum _{i=1}^n\Lambda ^j_{ii}=1\). The trace also equals the sum of the eigenvalues. In terms of \(\Omega ^j\) updates, this normalization is realized by dividing all elements of \(\Omega ^j\) by \(\sqrt{\text {tr}(\Lambda ^j)}\). Assuming normalized matrices, [34] additionally proposed a regularization term of strength \(\alpha\) to avoid oversimplification of the model:

\[ \tilde{E}=E-\frac{\alpha}{2}\sum_{j}\ln\det\left(\Omega^j\Omega^{jT}\right). \]

Thus, the cost function is penalized more for a smaller determinant \(\det (\Omega ^j\Omega ^{jT})\), and the update rules for the matrices are adjusted accordingly. As a result, \(\Lambda ^j\) tends to have more balanced eigenvalues. We argue that this approach is suitable even without unit trace normalization, but in that case, the cost function is rewarded less, rather than penalized more, for a smaller determinant.
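Both steps can be sketched in a few lines. The helper names are hypothetical, and the log-determinant penalty \(-\frac{\alpha}{2}\ln\det(\Omega^j\Omega^{jT})\) is our assumed form of the regularizer in [34]; `np.linalg.slogdet` avoids overflow and underflow in the determinant.

```python
import numpy as np


def normalize_trace(omega):
    """Rescale Omega so that Lambda = Omega^T Omega has unit trace.

    tr(Lambda) equals the squared Frobenius norm of Omega, so dividing by its
    square root gives tr(Lambda) = 1.
    """
    return omega / np.sqrt(np.trace(omega.T @ omega))


def logdet_penalty(omega, alpha):
    """Assumed regularizer -alpha/2 * ln det(Omega Omega^T), added to the cost.

    Requires an m x n Omega with full row rank (m <= n); a singular
    Omega Omega^T is treated as infinitely penalized.
    """
    sign, logdet = np.linalg.slogdet(omega @ omega.T)
    if sign <= 0:
        return np.inf
    return -0.5 * alpha * logdet
```

Under unit trace, the determinant is maximal when all eigenvalues are equal, so the penalty is smallest for balanced eigenvalues; for instance, `diag(sqrt(0.5), sqrt(0.5))` is penalized less than `diag(0.6, 0.8)`.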

Class imbalance can be dealt with by incorporating a cost matrix \(\Gamma =\{\gamma _{cp}\}_{c,p=1,\dots ,C}\) with \(\sum _{c,p}\gamma _{cp}=1\), as suggested in [35, 36]. The rows of this matrix represent the actual classes c, and the columns denote the predicted classes p. When all \(\gamma _{cp}\) are equal, the weight contribution of each class is inversely proportional to its size, and the cost function becomes:

where \(y_i=c\) is the class label of a training data point \(\textbf{x}_i\), \(n_c\) is the size of that class, \(\hat{y}_i\) is the predicted label, and \(\mu _i\) is the cost function value of data point i as defined in Eq. 1.
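A sketch of the imbalance-corrected cost, assuming the form \(E=\sum_i \gamma_{y_i\hat{y}_i}\,\mu_i / n_{y_i}\) (our reading of the definitions above, not necessarily the exact equation of [35, 36]). The function name `weighted_cost` is hypothetical; labels are assumed to be integers \(0,\dots,C-1\).

```python
import numpy as np


def weighted_cost(mu, y, y_pred, gamma):
    """Class-imbalance-corrected GLVQ cost (assumed form).

    E = sum_i gamma[y_i, y_pred_i] * mu_i / n_{y_i},
    where n_c is the number of training samples of class c and gamma is a
    C x C cost matrix (rows: actual class, columns: predicted class).
    """
    counts = np.bincount(y, minlength=gamma.shape[0])    # class sizes n_c
    return float(np.sum(gamma[y, y_pred] * mu / counts[y]))
```

With a uniform `gamma`, each class contributes its mean \(\mu\) scaled by the same constant, so a minority class weighs as much as a majority class in the total cost.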

Experiments

In this section, we present the experimental setups, data sets, and model parameterizations used for the classification and segmentation experiments. The reference Python implementation of GMLVQ can be found in [37].

Classification

The focus of these two experiments is to evaluate the GMLVQ classifier on colour texture images. We study the influence of regularization, normalization, the format of the class-wise transformation matrix (full \(\Omega ^j\) or block-diagonal \(\widehat{\Omega }^j\)) and the dissimilarity measure (QF or PA). Moreover, we compare our results to the accuracy of a CNN and a recent CIA-LVQ benchmark [21].

For the experiments, we use the same portion of the VisTex [26] data set as previous CIA-LVQ iterations so that our results are directly comparable. It contains 29 \(128\times 128\) colour images from 4 classes, as shown in Fig. 7 (top). Furthermore, a limited subset of 18 images from the ALOT [27] data is used to test performance under varying lighting conditions, where a classifier is tested on conditions never seen during training. We selected 2 classes with very similar textures and colour palettes that are difficult for the human eye to distinguish (bottom row of Fig. 7). Prior to the preprocessing explained in Sect. “LVQ for Multichannel Image Analysis”, the ALOT images were cropped to a square and resized to \(128\times 128\). Per image, 150 (VisTex) and 200 (ALOT) training patches of size \(15\times 15\) were extracted.

For both data sets, the images used for training and testing do not overlap, and the hyperparameter \(\beta\) is selected based on the training results during model validation with full matrices. For VisTex and ALOT, the highest training accuracy is achieved with \(\beta =4\) and \(\beta =0.1\), respectively. For model validation, we train 5 models on random sets of patches extracted from the training images, each with a random initialization of the model parameters. The cost function is optimized using the limited-memory Broyden–Fletcher–Goldfarb–Shanno algorithm with box constraints (L-BFGS-B) [38]. Local class-wise \(\Omega ^j\) \((j=c(\textbf{w}^L))\) matrices are employed. In the VisTex experiment, we also investigate different cases in terms of normalization, regularization, and block-diagonal \(\widehat{\Omega }^j\).
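A toy sketch of GMLVQ training with L-BFGS-B through `scipy.optimize.minimize`. It simplifies the setup described above: one prototype per class, a single global square \(\Omega\) instead of class-wise matrices, no normalization or regularization, and finite-difference gradients (SciPy's default when `jac` is omitted) instead of the analytic derivatives. The names `train_gmlvq` and `predict_gmlvq` are hypothetical.

```python
import numpy as np
from scipy.optimize import minimize


def train_gmlvq(X, y, rng):
    """Toy GMLVQ: one prototype per class, one global square Omega,
    GLVQ cost with identity Phi, optimized with L-BFGS-B."""
    classes = np.unique(y)
    C, n = len(classes), X.shape[1]
    idx = np.searchsorted(classes, y)                  # class index of each sample
    rows = np.arange(len(X))
    # Prototypes start near the class means; Omega starts random.
    W0 = np.stack([X[y == c].mean(axis=0) for c in classes])
    W0 = W0 + 0.01 * rng.standard_normal((C, n))
    O0 = rng.standard_normal((n, n))

    def unpack(theta):
        return theta[:C * n].reshape(C, n), theta[C * n:].reshape(n, n)

    def cost(theta):
        W, O = unpack(theta)
        P = (X[:, None, :] - W[None, :, :]) @ O.T     # (N, C, n) projected differences
        D = np.einsum("ncm,ncm->nc", P, P)             # QF distances to all prototypes
        dJ = D[rows, idx]                              # correct-class prototype
        D_wrong = D.copy()
        D_wrong[rows, idx] = np.inf
        dK = D_wrong.min(axis=1)                       # closest wrong-class prototype
        return np.sum((dJ - dK) / (dJ + dK))

    theta0 = np.concatenate([W0.ravel(), O0.ravel()])
    res = minimize(cost, theta0, method="L-BFGS-B")   # jac omitted: numerical gradient
    W, O = unpack(res.x)
    return W, O, classes, cost(theta0), res.fun


def predict_gmlvq(X, W, O, classes):
    """Winner-takes-all prediction under the learned quadratic form."""
    P = (X[:, None, :] - W[None, :, :]) @ O.T
    return classes[np.argmin(np.einsum("ncm,ncm->nc", P, P), axis=1)]
```

Finite differences are only practical for a handful of parameters; for patch data with hundreds of dimensions, supplying the analytic gradient through the `jac` argument is the sensible choice.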

Both the random initialization and the training patches are seeded identically across scenarios, so that the scenarios are directly comparable and differ only in the dissimilarity measure used. For VisTex, we experimented with 5 different designs and trained 5 models for each. Hence, a total of 5 (designs) \(\times\) 2 (distance metrics) \(\times\) 5 (models) = 50 models were used. For ALOT, we show the most promising design, resulting in a total of 1 (design) \(\times\) 2 (distance metrics) \(\times\) 3 (models) = 6 models. Finally, we compare our method to a small-scale CNN with 3 hidden layers. To resemble our patch-based model more closely, the CNN used \(15\times 15\) kernels.

Segmentation

This experiment showcases an application to colour agricultural images captured by a drone in a rose greenhouse. This data set is part of the SMART-AGENTS project. The goal is to identify mildew or mold on the plants. Since these diseased regions have a colour and shape similar to those of insect eggs, as shown in the top right of Fig. 8, we consider a 3-class problem with ‘mold’, ‘eggs’ and ‘healthy’ classes. Due to the absence of an expert ground truth segmentation, we prepare the labelled data set manually. First, non-overlapping full-resolution images are selected for the training and test sets. We use a patch size of \(7\times 7\) pixels, which is typically sufficient to cover a single mold region or an egg. Most of the data samples from the ‘mold’ and ‘eggs’ classes come from manual cropping of \(7\times 7\) patches; however, if a larger region is encountered, subpatches are randomly extracted from it. For the ‘healthy’ class, 14 healthy \(50\times 50\) areas are selected, and \(7\times 7\) subpatches are then drawn from them. This leads to a highly imbalanced training data set consisting of 1005 patches (see Fig. 8). Interestingly, mold occurrences are observed more frequently on green leaves, resulting in a scarcity of unhealthy brown leaf representatives in the data set. There could be a biological reason behind this observation that is unrelated to seasonality, since the environment in a greenhouse is controlled. For segmentation, \(301\times 301\) subimages are prepared from full-resolution images not occurring in the training set; they capture different photo properties like blurriness, lighting, egg presence, etc.

In this experiment, we focus on simple models to demonstrate their application to segmentation. Hence, we train 5 GMLVQ models with 1 prototype per class, local full \(\Omega ^j \in \mathbb {R}^{49\times 147}\) matrices and the QF distance. The models differ in the seed used for random initialisation and for drawing random patches from the ‘healthy’ class. During GMLVQ training, the imbalance correction is applied. After validating the performance of a classifier, we train the final model on the combined training and test data, consisting of 1439 patches. This model is then used for segmentation through per-patch classification with a sliding window. No ground truth masks from an expert are available; hence, our segmentation results are not evaluated with any metric. Instead, they serve as a preliminary detection that can then be refined by an expert.

Results and Discussion

In this section, the results of our classification and segmentation experiments are presented and discussed.

Classification

The accuracy and standard deviation for all classification experiments are summarized in Table 1. In our analysis of the VisTex results, we designate the QF model in the first row as the ‘baseline’ due to its most basic design. We observe that when normalization is used during training with the QF distance, models exhibit increased overfitting, with a difference of approximately 20% between train and test accuracy. However, this comes with a reduction in standard deviation compared to the baseline by more than sixfold and twofold for train and test data, respectively. Regularization proved helpful, as it improves the baseline test accuracy by \(2.31\%\) while lowering the standard deviation. The use of block-diagonal matrices, indicated by ‘Full’ being false, results in the highest test accuracy and the least overfitting, surpassing the baseline by 7.65%. Moreover, as depicted in the confusion matrix in the top right panel of Fig. 9, the class-wise accuracies for the Brick and Fabric classes are improved.

In contrast to QF-trained models, neither regularization nor normalization significantly affects the test or training accuracy of models with PA dissimilarity. Using PA leads to more evenly distributed class-wise accuracies, as shown in the confusion matrices in Fig. 9. The highest test results are consistently achieved with block-diagonal \(\widehat{\Omega }\)’s. We observe that models with PA dissimilarity are more accurate in all considered cases, although in the cases with regularization and with block-diagonal \(\widehat{\Omega }\)’s, both dissimilarities achieve comparable results. Our experiments suggest that the block-diagonal transformation matrix is advantageous regardless of the dissimilarity, as it reduces the number of parameters and provides better generalization and interpretation. Compared to previous CIA-LVQ studies on the same VisTex subset, our best model surpasses a variant with adaptive Gabor filters [21] by 2.81%, despite having only 13,500 trainable parameters compared to an estimated 3,051,000 in [21]. Additionally, the considered CNN with 1,441,348 parameters exhibits inferior generalization compared to our models on both data sets, with a test accuracy of 69.23% and 61.11% for VisTex and ALOT, respectively. However, we acknowledge that a rigorous optimization of the CNN is beyond the scope of this contribution. The accuracy of the discussed classifiers with respect to their number of parameters is visualized in Fig. 10.

Fig. 10. Average accuracy of our best model (triangle), the benchmark [21] (circle) and the CNN (square) with respect to the number of free parameters, for VisTex and ALOT.
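The parameter ratios quoted in the Introduction follow directly from these counts:

\[
\frac{3{,}051{,}000}{13{,}500}=226, \qquad \frac{1{,}441{,}348}{13{,}500}\approx 106.8 .
\]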

The ALOT experiments highlight the inability of QF-trained models to distinguish classes under varying lighting conditions and camera positions. We attribute the success of models with PA distance primarily to their classification on a hypersphere, with the non-linear transformation g playing a secondary role. As shown in the right panel of Fig. 11, small values of \(\beta\) (approximately linear g) achieve the highest training accuracy and test results far superior to the chance-level predictions of QF-trained models. For VisTex, the performance improvement depends on the non-linearity of the transformation, as small \(\beta\) gives results quite similar to those of the models with QF distance (see left panel of Fig. 11).

While the interpretation of the results is not the focus of this contribution, Fig. 12 visualizes the class-wise spatial correlations between and within colour channels, in the form of \(\Lambda ^j\), as a way of looking into the patterns learned from the VisTex data. For example, many strong positive correlations can be observed within the Red (top-left block) and Green (central block) channels for the “Brick” class, together with negative correlations between those channels. For the “Bark” class, however, most positive correlations are found within the Green and Blue channels, while the correlations between them are mostly negative.
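Reading \(\Lambda^j\) this way amounts to splitting it into an \(h \times h\) grid of \(p^2 \times p^2\) blocks, assuming the channel-major feature ordering of Fig. 3. The helper `channel_blocks` is a hypothetical sketch; for RGB, block `[0][0]` is the Red-Red block (top left), `[1][1]` Green-Green (centre) and `[0][1]` Red-Green.

```python
import numpy as np


def channel_blocks(omega, h):
    """Split Lambda = Omega^T Omega (n x n) into an h x h grid of blocks.

    Assumes channel-major feature ordering, so block [a][b] holds the spatial
    correlations between channel a and channel b (each block is p*p x p*p).
    """
    lam = omega.T @ omega
    s = lam.shape[0] // h                 # pixels per channel, i.e. p * p
    return [[lam[a * s:(a + 1) * s, b * s:(b + 1) * s] for b in range(h)]
            for a in range(h)]
```

Because \(\Lambda\) is symmetric, block `[b][a]` is the transpose of block `[a][b]`, so only the diagonal blocks and one triangle of off-diagonal blocks need to be inspected.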

Segmentation

First, we compare the influence of class-imbalance correction (CIC). Despite 3.27% higher overall accuracy without CIC, models trained with CIC demonstrate a reduced confusion between moldy and healthy regions, which is crucial for the intended application. Specifically, the accuracy of the ‘mold’ classification is 9% higher (see Fig. 13).

Next, we visually evaluate the segmentation quality. Figure 14 shows the results obtained on images that contain no eggs. The leftmost column shows an example of a rather blurry image with moldy regions in the background. In-focus mold patches and a small background region on the brown leaf are correctly detected, but very blurry or darkened mold stains pose a challenge, possibly due to non-representative training data. In the middle column, which shows an image with specular reflections, the majority of mold regions are detected, but there are many false positives due to highlights being classified as mold. The rightmost column, featuring an image of healthy leaves, exhibits some false positives due to reflections on healthy leaf edges being classified as mold.

Figure 15 shows examples containing all 3 classes. Segmentation results in the first two columns indicate the model’s tendency to classify light spots on brown leaves as eggs more frequently than on green leaves. We assume that this might be caused by the lack of training data representing unhealthy patches on brown leaves. Additionally, the model occasionally misclassifies the outlines of eggs as mold, which is especially apparent in the bottom part of the middle column. Nevertheless, despite small, unbalanced and non-representative training data, even a basic model with one prototype per class is able to differentiate relatively similar classes such as ‘mold’ and ‘eggs’.

Conclusion and Future Work

In this paper, we presented a framework for efficient and interpretable texture image analysis, focusing on the challenges of limited training data. It is based on an extension of the GMLVQ algorithm which aims to learn spatio-colour patterns in multi-channel input and yields transformation matrices to project image patches to a lower-dimensional discriminative intensity space. We introduce a parametric angle (PA) dissimilarity as an alternative to the quadratic form (QF) distance and propose a new block-diagonal format of the transformation matrices, that learns a per-channel contribution for each pixel.

Experiments on the VisTex data set demonstrate that models trained with PA distance outperform their QF-trained counterparts in terms of accuracy in various scenarios involving regularisation and normalisation. While reducing complexity, the block-diagonal matrices achieve the best performance in both PA- and QF-based models. Additionally, we experimented with the ALOT images, where the lighting conditions and the angle of view differ between training and test data. Our results show that a small-scale CNN and models trained with QF distance fail to generalize to unseen lighting conditions, while PA-based models achieve 79.11% in that scenario. We also showcase a real-world application, in which our framework performs semantic segmentation of agricultural photos. This experiment demonstrates the effectiveness of the class imbalance correction approach used and highlights GMLVQ’s ability to learn from limited training data.

Future work includes a focus on segmentation tasks, with plans to integrate GMLVQ with the \(\alpha\)-tree algorithm [39]. An \(\alpha\)-tree provides an efficient way to project a multichannel image into a tree structure, taking into account both spectral and morphological constraints. A combined architecture will yield a fast and interactive segmentation and tiny object detection tool applicable across diverse domains such as life sciences, astronomy, and agriculture. Furthermore, our efforts will extend to enhancing model explainability through prototype visualization and class activation maps, contributing to the broader utility of our framework in medicine and healthcare.

Data Availability

The VisTex dataset is available at https://vismod.media.mit.edu/vismod/imagery/VisionTexture/vistex.html. The ALOT dataset is available at https://aloi.science.uva.nl/public_alot/. The Roses dataset is available from Kerstin Bunte. Restrictions apply to the availability of these data, which were used under license for this study.

Notes

Our dissimilarity measures are not required to satisfy the triangle inequality and hence are not necessarily proper metrics. We still refer to these pseudo-metrics as “distances” and “dissimilarities” throughout this paper for improved readability.

References

Srinivasan GN, Shobha G. Statistical texture analysis. Int J Comput Inf Eng. 2008;2(12):4268–73.

Nailon W. Texture Anal. Methods for Med. Image Characterisation. 2010. p. 75–100. https://doi.org/10.5772/8912.

Kumar A, Pang G. Fabric defect segmentation using multichannel blob detectors. Opt Eng. 2000;39:3176–90. https://doi.org/10.1117/1.1327837.

Carreón YJP, Díaz-Hernández O, Escalera Santos GJ, Cipriano-Urbano I, Solorio-Ordaz FJ, González-Gutiérrez J, Zenit R. Texture analysis of dried droplets for the quality control of medicines. Sensors. 2021. https://doi.org/10.3390/s21124048.

Kupidura P. The comparison of different methods of texture analysis for their efficacy for land use classification in satellite imagery. Remote Sens. 2019. https://doi.org/10.3390/rs11101233.

Gavhale KR, Gawande U, et al. An overview of the research on plant leaves disease detection using image processing techniques. IOSR J Comput Eng (IOSR-JCE). 2014;16(1):10–6. https://doi.org/10.9790/0661-16151016.

Kwak G-H, Park N-W. Impact of texture information on crop classification with machine learning and UAV images. Appl Sci. 2019;9:643. https://doi.org/10.3390/app9040643.

Castellano G, Bonilha L, Li LM, Cendes F. Texture analysis of medical images. Clin Radiol. 2004;59(12):1061–9. https://doi.org/10.1016/j.crad.2004.07.008.

Kather J, Weis C-A, Bianconi F, Melchers S, Schad L, Gaiser T, Marx A, Zöllner F. Multi-class texture analysis in colorectal cancer histology. Sci Rep. 2016;6:27988. https://doi.org/10.1038/srep27988.

Gautam A, Raman B. Towards accurate classification of skin cancer from dermatology images. IET Image Proc. 2021;15(9):1971–86. https://doi.org/10.1049/ipr2.12166.

Fogel IY, Sagi D. Gabor filters as texture discriminator. Biol Cybern. 1989;61:103–13. https://doi.org/10.1007/BF00204594.

Haralick RM, Shanmugam K, Dinstein IH. Textural features for image classification. IEEE Trans Syst Man Cybern. 1973;SMC-3(6):610–21. https://doi.org/10.1109/TSMC.1973.4309314.

Ojala T, Pietikäinen M, Harwood D. Performance evaluation of texture measures with classification based on Kullback discrimination of distributions. In: Proceedings of 12th international conference on pattern recognition, vol 1. 1994. p. 582–5.

Cross GR, Jain AK. Markov random field texture models. IEEE Trans Pattern Anal Mach Intell. 1983;PAMI-5(1):25–39. https://doi.org/10.1109/TPAMI.1983.4767341.

Kumar S, Gupta A. Comparative review of machine learning and deep learning techniques for texture classification. In: Proc. of the Int. Conf. on Artif. Intell. Techniques for Elect. Eng. Syst. (AITEES 2022). 2022. p. 95–112. https://doi.org/10.2991/978-94-6463-074-9_10.

Dixit U, Mishra A, Shukla A, Tiwari R. Texture classification using convolutional neural network optimized with whale optimization algorithm. SN Appl Sci. 2019. https://doi.org/10.1007/s42452-019-0678-y.

Roy SK, Dubey SR, Chanda B, Chaudhuri BB, Ghosh DK. TexFusionNet: an ensemble of deep CNN feature for texture classification. In: Chaudhuri BB, Nakagawa M, Khanna P, Kumar S, editors. Proceedings of 3rd international conference on computer vision and image processing. Singapore: Springer; 2020. p. 271–83.

Deng L, Mao Z, Li X, Hu Z, Duan F, Yan Y. UAV-based multispectral remote sensing for precision agriculture: a comparison between different cameras. ISPRS J Photogramm Remote Sens. 2018;146:124–36. https://doi.org/10.1016/j.isprsjprs.2018.09.008.

Bunte K, Giotis I, Petkov N, Biehl M. Adaptive matrices for color texture classification. In: Real P, Diaz-Pernil D, Molina-Abril H, Berciano A, Kropatsch W, editors. Computer analysis of images and patterns. Berlin: Springer; 2011. p. 489–97.

Giotis I, Bunte K, Petkov N, Biehl M. Adaptive matrices and filters for color texture classification (vol 47, pg 79, 2013). J Math Imaging Vis. 2014;48:202–202. https://doi.org/10.1007/s10851-013-0472-1.

Luimstra G, Bunte K. Adaptive Gabor filters for interpretable color texture classification. In: Eur. Symp. on Artif. Neural Netw. (ESANN). 2022. p. 61–6.

Ghosh S, Tiño P, Bunte K. Visualization and knowledge discovery from interpretable models. In: Int. Joint Conf. on Neural Netw. (IJCNN), Glasgow. IEEE; 2020. p. 1–8. https://doi.org/10.1109/IJCNN48605.2020.9206702.

Villmann A, Kaden M, Saralajew S, Villmann T. Probabilistic learning vector quantization with cross-entropy for probabilistic class assignments in classification learning. In: Rutkowski L, Scherer R, Korytkowski M, Pedrycz W, Tadeusiewicz R, Zurada JM, editors. Artificial intelligence and soft computing. Cham: Springer; 2018. p. 724–35.

Bonilla EV, Robles-Kelly A. Discriminative probabilistic prototype learning. CoRR. 2012. arXiv:1206.4686.

Shumska M, Bunte K. Towards robust colour texture classification with limited training data. In: Computer analysis of images and patterns. Cham: Springer; 2023. p. 153–63. https://doi.org/10.1007/978-3-031-44237-7_15.

MIT Vision and Modeling Group. Database VisTex of Color Textures from MIT. https://vismod.media.mit.edu/vismod/imagery/VisionTexture/vistex.html.

Burghouts GJ, Geusebroek JM. Material-specific adaptation of color invariant features. Pattern Recogn Lett. 2009;30:306–13. https://doi.org/10.1016/j.patrec.2008.10.005.

Kohonen T. Learning vector quantization. Berlin: Springer; 1995. p. 175–89. https://doi.org/10.1007/978-3-642-97610-0_6.

Biehl M, Hammer B, Villmann T. Prototype-based models in machine learning. WIREs Cogn Sci. 2016;7(2):92–111. https://doi.org/10.1002/wcs.1378.

Sato A, Yamada K. Generalized learning vector quantization. In: Neural information processing systems. 1995.

Schneider P, Biehl M, Hammer B. Adaptive relevance matrices in learning vector quantization. Neural Comput. 2009;21(12):3532–61. https://doi.org/10.1162/neco.2009.11-08-908.

Hammer B, Villmann T. Generalized relevance learning vector quantization. Neural Netw. 2002;15:1059–68. https://doi.org/10.1016/S0893-6080(02)00079-5.

Shlens J. A tutorial on principal component analysis. 2014.

Schneider P, Bunte K, Stiekema H, Hammer B, Villmann T, Biehl M. Regularization in matrix relevance learning. IEEE Trans Neural Netw. 2010;21(5):831–40. https://doi.org/10.1109/TNN.2010.2042729.

Pazzani M, Merz C, Murphy P, Ali K, Hume T, Brunk C. Reducing misclassification costs. In: Cohen WW, Hirsh H, editors. Machine learning proceedings 1994. San Francisco: Morgan Kaufmann; 1994. p. 217–25. https://doi.org/10.1016/B978-1-55860-335-6.50034-9.

Ghosh S, Baranowski ES, Biehl M, Arlt W, Tino P, Bunte K. Interpretable models capable of handling systematic missingness in imbalanced classes and heterogeneous datasets. 2022.

van Veen R, Biehl M, de Vries G-J. sklvq: scikit learning vector quantization. J Mach Learn Res. 2021;22(231):1–6.

Zhu C, Byrd RH, Lu P, Nocedal J. Algorithm 778: L-BFGS-B. ACM Trans Math Softw. 1997;23(4):550–60. https://doi.org/10.1145/279232.279236.

Ouzounis GK, Soille P. The alpha-tree algorithm. JRC Scientific and Policy Report. 2012. https://doi.org/10.2788/48773.

Funding

This publication is part of the project SMART-AGENTS (project number 18024) of the research programme Smart Industry, which is (partly) financed by the Dutch Research Council (NWO).

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by Mariya Shumska and Kerstin Bunte. The first draft of the manuscript was written by Mariya Shumska, and Kerstin Bunte and Michael Wilkinson commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Research involving human and/or animals

Not applicable.

Informed consent

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Shumska, M., Wilkinson, M.H.F. & Bunte, K. Towards Robust Colour Texture Analysis with Limited Training Data. SN COMPUT. SCI. 5, 730 (2024). https://doi.org/10.1007/s42979-024-03067-x

DOI: https://doi.org/10.1007/s42979-024-03067-x