Abstract

Learning disabilities present formidable obstacles for children in their educational journeys, often overlooked or misunderstood. This research aims to address two critical issues: the differentiation between learning and intellectual disabilities, and the resistance of teachers to integrating technology into education. In response, a comprehensive approach was adopted. Firstly, a mobile application was developed to screen for potential learning disabilities, offering teachers insights into individual student challenges and personalized lecture adaptations. Secondly, a quasi-experimental pretest–posttest study was conducted, comparing student performance following traditional and technologically supported lectures. Results from the study revealed significant score improvements among students identified as potentially having learning disabilities when exposed to technologically supported lectures. Conversely, students not identified as potential cases remained within the normal range of performance. These findings underscore the potential of technology to enhance educational outcomes for children with learning disabilities while highlighting the importance of recognizing and addressing these challenges in educational settings. By bridging the gap between identification and intervention, this research contributes to a more inclusive and effective educational environment.

Introduction

The concept of disabilities has eluded a single, definitive definition throughout human history. Evidence of disabilities traces back to prehistoric times [1], suggesting their enduring presence within society. However, societal attitudes towards individuals with disabilities have varied significantly. While ancient civilizations like those in Ancient Greece often integrated individuals with disabilities into daily life [2], other societies exhibited less inclusive behaviors. Individuals with intellectual disabilities, for instance, were historically stigmatized, sometimes being associated with demonic possession [3], while those with physical disabilities were often objectified as mere spectacles for public amusement [4]. Among these various forms of disabilities, learning disabilities silently persisted throughout history.

Termed "invisible handicaps" by Dr. Kurt Goldstein in the 1920s [5], learning disabilities represent a distinct category of cognitive challenges. Unlike intellectual disabilities, which typically correlate with low Intelligence Quotient (IQ) scores, learning disabilities often manifest as discrepancies between academic performance and intellectual potential [6]. The "Discrepancy model" formerly functioned as a diagnostic tool, identifying students with learning disabilities based on such disparities [7]. Although the field has since moved on to models that account for discrepancies arising from other circumstances, such as family problems, it remains crucial to recognize that learning disabilities do not impede intellect but rather denote cognitive differences from the norm.

Today, the challenge does not lie in defining learning disabilities but in fostering widespread understanding and acceptance. While some nations, particularly in the West, mandate early screening and intervention for children with learning disabilities, many regions lag behind. In countries such as the Republic of North Macedonia, where the study was conducted, inclusive education initiatives strive to emulate Western models without a full comprehension of their intricacies [8]. This lack of understanding is evident even in official documents, where terms such as "students with disabilities" encompass a broad spectrum of challenges, ranging from physical and intellectual disabilities to sensory impairments and learning disabilities. However, such general categorizations overlook the diverse needs and interventions required for each disability subtype, exacerbating the challenges faced by educators.

Distinguishing between screening and diagnosis is paramount in addressing the needs of children with learning disabilities. While screening offers a swift identification of potential issues, diagnosis necessitates extensive assessment and monitoring. Our proposed mobile application seeks to empower educators in environments where stigmas surrounding psychiatric evaluations hinder the proper assessment of learning disabilities. Although the scope of our experiment was limited, initial results indicate promising accuracy, underscoring the app's potential efficacy as a support tool for teachers in addressing the needs of children with learning disabilities.

The remainder of the paper is organized as follows. The next section reviews the state of the art and related work on learning disability screening, in both physical and digital contexts, offering insights into international and local approaches. We then detail the methodology, including the development of the mobile screening app and the execution of the quasi-experimental pretest–posttest study. The results of the study and their implications are then discussed, shedding light on the efficacy of technological interventions in addressing learning disabilities. Finally, the conclusion highlights the significance of this research and underscores the need for further exploration in the field of educational technology and inclusive education.

State of the Art

Understanding the landscape of learning disability screening requires an examination of both physical and digital contexts, considering international and local perspectives.

International Context

Various countries employ diverse approaches to learning disability screening. In the United States, the Response to Intervention (RTI) system has largely replaced the Discrepancy Model, offering a more immediate and effective response to student needs [7]. Germany emphasizes early intervention, supported by strong parental engagement, while Italy's experience, exemplified by Giacomo Cutrera's journey, highlights the evolving understanding and support for individuals with learning disabilities [8,9,10,11].

Local Context

In North Macedonia, educational laws lack explicit mention of learning disabilities, often conflating them with other disabilities [11]. The absence of official screening exacerbates the challenge, reflected in teachers' limited awareness [11]. A survey revealed significant gaps in understanding among educators, underscoring the urgency of addressing these issues [11].

Digital Context

The digital era presents opportunities for innovative screening approaches. Mobile applications, particularly focused on dyslexia, have shown promise globally [12]. Apps like "The Cure" in Sri Lanka and "DysPuzzle" in Spain have demonstrated success, albeit primarily targeting dyslexia [12]. However, other learning disabilities remain underexplored in digital screening efforts, creating a lack of available solutions [12].

Unique Contribution

The mobile application developed for this research fills a critical void in North Macedonia's educational ecosystem by addressing multiple learning disabilities in a single platform. Unlike existing apps, it aims to cater to the specific needs of the local context, reflecting the urgency of the situation where inclusive education remains an aspiration rather than a reality [11]. By leveraging mobile technology, this research seeks to advance the screening process and pave the way for inclusive education in Macedonia, despite initial challenges and stigma associated with psychiatry [11].

Developing and Testing a Mobile Application for Learning Disability Screening

In an effort to enhance educational support for students, particularly those with diverse learning needs, a mobile application was developed as part of this research. The application aims to facilitate the screening of learning disabilities, offering a user-friendly interface for both teachers and students.

Planning and Requirements Gathering

The development process commenced with an in-depth planning phase, crucial for laying a strong foundation for the application. The primary objective was to create a mobile application that would facilitate the screening of learning disabilities, ensuring it was user-friendly for both teachers and students. This phase involved extensive consultations with educators, parents, and experts in learning disabilities to understand the specific needs and challenges faced in educational settings. The insights obtained from these interactions were invaluable in shaping the application's scope and guiding the development process. Key features and functionalities were identified, focusing on the needs of the target users.

The app comprised two components: a student interface featuring four distinct screening tests and a teacher dashboard allowing access to student data for those enrolled in the teacher's classes. The tests focused on screening for Dyslexia, Dyscalculia, Attention Deficit Hyperactivity Disorder (ADHD), and Auditory Processing Disorder (APD). These tests collectively provided a comprehensive assessment of the student's learning abilities, evaluating critical aspects such as attention (ADHD), word processing (Dyslexia), auditory processing (APD), and numerical processing (Dyscalculia). It is essential to recognize that learning disabilities extend beyond traditional scholastic subjects, impacting various aspects of neurological development. Additional conditions such as Non-verbal Learning Disabilities, Dyspraxia, and Dysgraphia were also acknowledged, though not directly screened.

This version of the app is built around research based on completely anonymous student data (the method is described in the following sections). The app still includes student profiles; at present these do not reveal to the teacher which student was screened for what, but they can still help teachers understand the difficulties students in the school might have. This function might take different forms in the future after careful consideration. Because we use anonymous data for research purposes and have taken all measures to ensure the data is no longer identifiable, the app currently falls outside the scope of the GDPR under Recital 26. We still take privacy very seriously, so for any future expansion where students might use non-anonymous data, we are committed to being fully GDPR compliant.

1. Functional Requirements: Functional requirements describe the specific features and functionalities the app should provide. These requirements were categorized based on the two main user types: teachers and students. Below are the functional requirements presented in table form (see Tables 1 and 2):

2. Non-Functional Requirements: Non-functional requirements (see Table 3) address the app's overall performance, security, and usability. These requirements are essential for ensuring a high-quality user experience and maintaining data integrity.

With these functional and non-functional requirements documented, we established a solid foundation for the app's development. This structured approach ensured clarity in the development process and aligned the final product with the project's goals. Following these preparatory steps, the actual development began, focusing on implementing these requirements effectively.

Technology Selection

With a clear understanding of the requirements, the next step was selecting the appropriate technology stack. React Native was chosen as the primary development framework due to its robust capabilities for building cross-platform mobile applications. This choice ensured that the application could run seamlessly on both iOS and Android devices, maximizing its reach and accessibility.

React Navigation was integrated to manage the navigation between various screens, providing a smooth and intuitive user experience. For state management, Redux was employed, enabling efficient handling of application state across different components. Firebase was selected for backend services, including user authentication and real-time database functionalities, ensuring a secure and scalable infrastructure.

Design and Prototyping

The design phase was pivotal in translating the gathered requirements into a visual and interactive format. Initial wireframes were created to outline the basic layout and structure of the application. These wireframes were then developed into high-fidelity prototypes, which were iteratively refined based on feedback from stakeholders and potential users.

Key design principles focused on simplicity and intuitiveness. The user interface (UI) was crafted to be clean and straightforward, ensuring ease of use for both teachers and students. Special attention was given to the user experience (UX), aiming to create an engaging and seamless interaction with the application. Accessibility considerations were also prioritized to ensure the application was usable by individuals with diverse abilities.

Figure 1 depicts the Prototype Landing Page, while Fig. 2 illustrates the Prototype Test Page. Please note that the questions in the Prototype Test Page do not come from the final version. Also, the timer and score are not visible to the user in the final version.

Development and Implementation

The development phase followed an iterative and agile approach, allowing for flexibility and adaptability throughout the process. The initial setup involved configuring the development environment with the necessary tools and dependencies, laying the groundwork for subsequent development activities.

Development Steps

1. Core Features Implementation: The development team focused on building the core features of the application. This included user authentication, where Firebase's authentication service was integrated to manage user login and registration securely. Figure 3 depicts the Landing Page of the mobile application.

2. Teacher and Student Dashboards: Separate dashboards for teachers and students were developed. The teacher dashboard (see Fig. 4a) provided insights into student performance and access to various administrative functionalities. The student dashboard (see Fig. 4b) served as a centralized platform for accessing different tests and assessments.

3. Class and Student Management*: The students screen (see Fig. 5) allowed teachers to view and manage lists of students in specific classes. This component facilitated efficient classroom management and streamlined administrative tasks.

4. Student Profile Screen*: This screen (see Fig. 6) provides detailed information about individual students' academic journeys and potential learning challenges, offering valuable insights for personalized support.

*Note: As mentioned, these pages are explained as they would be used in a future fully integrated version. Currently, as no personal data is collected, they serve to give a general idea of which difficulties students might have without linking them to the student’s name.

5. Test Page: The test page (see Fig. 7) was developed as the core component where students could engage in interactive test-taking experiences. This page is essential for assessing various skills, such as reading, math, audio comprehension, and attention span.

   - Interactive Experience: The test page offers a user-friendly interface that encourages active engagement. Students can answer questions interactively, and the page supports various types of media, including text, images, and audio.

   - Comprehensive Assessments: The tests cover different skill categories, providing a thorough assessment of students' learning abilities. Questions are dynamically retrieved from data sources based on the selected test category.

   - Real-Time Feedback: As students progress through the tests, their scores and time are tracked in real time. This feature helps in providing immediate feedback, which is crucial for both students and teachers to understand areas that need improvement.

   - Flexibility and Adaptability: The test page is designed to handle various types of questions and answer formats. This flexibility is vital for creating a wide range of assessments tailored to different learning disabilities.

   - Continuous Improvement: Given the importance of the test page, it was subject to continuous enhancements throughout the development process. Even after the initial minimal viable product version, significant efforts were made to refine and polish this component to ensure it meets the high standards required for effective educational support.

The workflow of the application is displayed in Fig. 8.
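The test-page behaviour described above (questions retrieved by test category, with score and time tracked as the student progresses) can be illustrated with a minimal sketch. This is not the app's React Native code: it is a language-neutral illustration in Python, and the names `QUESTION_BANK`, `load_questions`, and `ScreeningSession`, as well as the sample questions, are hypothetical.

```python
import time

# Hypothetical question bank keyed by test category. The paper does not
# describe the app's real data sources, so these entries are placeholders.
QUESTION_BANK = {
    "dyslexia": [
        {"prompt": "Which word is spelled correctly?",
         "options": ["frend", "friend"], "answer": "friend"},
    ],
    "dyscalculia": [
        {"prompt": "7 + 5 = ?", "options": ["11", "12"], "answer": "12"},
    ],
}


def load_questions(category):
    """Return a copy of the questions for one category (empty if unknown)."""
    return list(QUESTION_BANK.get(category, []))


class ScreeningSession:
    """Tracks the score and the hidden response time for one screening test."""

    def __init__(self, category, clock=time.monotonic):
        self.questions = load_questions(category)
        self.clock = clock      # injectable so the timer can be tested
        self.score = 0
        self.elapsed = 0.0      # seconds; not displayed to the student
        self._shown_at = None

    def show(self, index):
        """Start the hidden timer and return the question to display."""
        self._shown_at = self.clock()
        return self.questions[index]

    def answer(self, index, choice):
        """Stop the timer for this question and update the running score."""
        if self._shown_at is None:
            raise RuntimeError("show() must be called before answer()")
        self.elapsed += self.clock() - self._shown_at
        self._shown_at = None
        if choice == self.questions[index]["answer"]:
            self.score += 1
```

Passing the clock in as a parameter keeps the timing logic deterministic under test, which suits the unit-testing practice the development process relied on.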

Throughout the development phase, continuous testing and debugging were conducted. This included unit tests to verify the functionality of individual components, integration tests to ensure different parts of the application worked together seamlessly, and user acceptance tests (UAT) to validate the application against user requirements.

Localization and Adaptation

Given that the primary users of the application were Macedonians, localization was an essential step in the development process. The application was translated into Macedonian, ensuring that all textual content was accurately adapted to the local language. This involved more than just direct translation; careful consideration was given to cultural and linguistic nuances to ensure the application was both effective and culturally appropriate.

Testing and Evaluation

Practical testing of the application was conducted in a school setting, following a formal experimental design. Evaluation measures focused on mathematical accuracy rather than comparison against confirmed cases, considering the limitations of available data. Prior to the experiment, rigorous testing and debugging were performed to ensure the app's functionality and reliability.

Experimental Design

The experiment was conducted at the outset of the 2023/2024 academic year at Mustafa Kemal Ataturk Elementary School in Centar Zhupa, North Macedonia. Spanning three school hours, the experiment was accommodated by the school board due to the absence of scheduled lectures at that time.

The experiment was designed as follows: after randomly selecting students, each student took four assessment tests using our mobile app. Phones were provided if necessary. Internally, after the test, we knew which students were screened as potential cases and which were not. We continued the experiment with all students and recorded all test data in the database.

The pretest–posttest element began with a traditional lecture, where the teacher used only the board and their voice. After this lecture, the students were given tests related to the lecture content. All students participated in the second lecture, which included the technological intervention. This setup did not have a traditional control group; instead, we compared the results of students identified as potential cases against those not identified as potential cases. The second lecture employed learning disability-friendly techniques with technology, such as a smart board, audio support, online collaboration tools, and gamification. The posttest followed the second lecture.

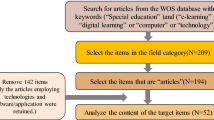

The experimental procedure is illustrated in the flow chart shown in Fig. 9.

The final comparison was done between the app screening results and the pretest–posttest results. If improvements were more prominent in students screened as potentially having learning disabilities, it would demonstrate the crucial effect of technology on their education. Below is a thorough explanation of each step in the experiment and the application used for the screening.

First Hour—Screening

Ten domestic students, spanning various grades, volunteered to participate in the experiment. Utilizing simple randomization, participants were chosen by drawing from a jar containing paper cutouts. Each student was represented by an alias found on their respective paper cutouts, ensuring anonymity. The aliases found on the cutouts already had profiles pre-registered on the mobile application and we had no way to know which specific student got which alias. No personal data was collected, and participation was strictly voluntary.
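The jar draw was carried out by hand, but the same simple randomization can be sketched digitally. The snippet below is an illustrative analogue only; the function name `draw_aliases` is hypothetical, and the alias list is taken from the student aliases reported in the results.

```python
import random

# Aliases as they appear in the reported results.
ALIASES = ["Alpha", "Beta", "Gamma", "Delta", "Epsilon",
           "Zeta", "Eta", "Theta", "Iota", "Kappa"]


def draw_aliases(n_students, aliases=ALIASES, rng=None):
    """Simulate each student drawing one paper cutout from the jar.

    Returns the aliases in draw order. No student identity is stored,
    mirroring the anonymity of the physical procedure.
    """
    if n_students > len(aliases):
        raise ValueError("not enough aliases in the jar")
    rng = rng or random.Random()
    jar = list(aliases)
    rng.shuffle(jar)                 # mix the cutouts
    return [jar.pop() for _ in range(n_students)]
```

Because only the alias is ever recorded, nothing in the returned list links a score back to a specific child.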

Students were introduced to the mobile application and briefed on the types of questions they would encounter. Assistance was readily available for any technical difficulties with app installation, login, or Internet connectivity.

The screening tests were adaptations of real-world assessments typically conducted through paper-based or observational methods. Below, we provide a brief overview of each test administered:

1. Dyslexia: Dyslexia is characterized by difficulties in decoding text, spelling, and fluent word recognition. The screening measures included:

   - Hidden Timer: A concealed timer monitored response times to prevent repeated readings and ensure fair assessment, present in all tests.

   - Decoding and Word Recognition: Tasks involved identifying words and structures, including rhymes.

   - Spelling Tests: Students identified correctly and incorrectly spelled words.

   - Reading Comprehension: Students read passages and answered questions to assess understanding and recall.

   - Varied Presentation: Questions and answers were presented in different fonts or handwriting to assess decoding skills.

2. Dyscalculia: Dyscalculia entails challenges in comprehending mathematical concepts. The screening encompassed:

   - Basic Arithmetic: Problems covering addition, subtraction, multiplication, division, fractions, decimals, etc.

   - Fractions: Precise calculations to gauge conceptual understanding and visualization.

   - Matching: Associating numerical values with quantities or images.

   - Math Decoding: Questions on time, money, charts, and graphs to assess conceptual comprehension.

3. Auditory Processing Disorder (APD): APD affects the interpretation of auditory information, particularly in noisy or echo-filled environments. The screening involved:

   - Auditory Recognition: Identification of spoken words or phrases to assess recognition abilities.

   - Noise: Exposure to real-world audio settings with background noise or echoes.

   - Replay Option: A replay feature allowed students to listen again, although too many replays would be reflected in the hidden timer.

4. Attention Deficit Hyperactivity Disorder (ADHD): ADHD, often overshadowed by Dyslexia in popularity but less understood, presents a complex challenge. Frequently mistaken for an intellectual disability, ADHD is, in fact, a neurological learning disorder impacting focus, hyperactivity, fixation, and impulse control. Although some symptoms overlap with those of autism spectrum disorders [13], ADHD does not signify a diminished IQ but rather a need for tailored teaching methods that engage students with diverse stimuli beyond conventional instruction. The screening included:

   - Attention Assessment: This component involved instructing students to memorize information and then shifting their focus to another task before returning to the initial one. By observing students' ability to maintain attention across tasks, this assessment provided insights into their focus and attention regulation skills.

   - Self-Diagnosis: Integrated seamlessly into the screening process, self-diagnostic questions were interspersed with other tasks. These questions probed students' impulsivity while offering valuable insights into their behavior. Examples of such questions included inquiries about frustration levels during common scenarios, such as waiting in line.

Upon completion, results were retrieved from the Firebase server established beforehand, facilitating data analysis.

Second Hour & Third Hour—Pretest & Posttest

At this juncture, the experiment transitions into a quasi-experimental design. Unlike traditional experimental setups, where participants are randomly assigned to groups, our approach groups participants based on specific characteristics or conditions [14]. This methodology addresses the practical constraints of real-world educational settings, allowing us to focus on aiding a targeted group of individuals. Nonetheless, all students underwent the same testing, and the groupings come into consideration only when comparing results between students identified as potential cases and those not identified as such. Our evaluation extends beyond assessing the mobile application's functionality; we seek to discern the extent to which each student benefited, considering their initial performance levels. This holistic analysis underscores the app's efficacy and highlights the pressing need for technology in supporting students with learning disabilities.

The pretest–posttest methodology comprises two distinct phases, as the name implies: pretest and posttest. This sequential approach captures participants' performance both before and after the intervention, shedding light on the intervention's impact. In our case, each phase was preceded by a lecture, with the second lecture incorporating the intervention under examination.

In consultation with the teachers and the school board, it was agreed that our presence would be minimized during all three hours of the experiment. The rationale behind this decision stemmed from the potential pressure an external observer might inadvertently exert on the students, leading to either over-performance or underperformance due to anxiety. Consequently, the lectures proceeded as they would on any regular school day.

During the pretest phase, following the initial lecture, students completed the assessment. Subsequently, the second lecture introduced the intervention, comprising technological support encompassing visual and auditory aids, as prearranged. The posttest phase mirrored the structure of the pretest, enabling us to gauge the intervention's impact on student performance.

Upon completion of the posttest, all assessment papers were returned for analysis, marking the culmination of the experimental procedure.

Results

The results of the experiment are presented comprehensively in Table 4, detailing the mobile application test results, pretest scores, and posttest scores for each student across various learning disabilities. Additionally, the calculation of Cohen’s d values [15] is employed to assess the practical significance of the research, offering insights into the extent of improvement post-intervention.

To clarify, students not identified by the app as potential cases of learning disabilities serve as our de facto control group. These were the students who scored high on the app; in effect, the app itself divided the students into groups. Since students took multiple tests, one student can be in the control group for one learning disability but not for another. Control group results, included in the overall analysis, allow us to compare the effectiveness of the intervention across both groups. For instance, while students like Eta (Dyslexia, ADHD, APD) and Alpha (ADHD), both potential cases, showed notable improvement, others such as Beta and Theta (not potential cases) did not exhibit significant changes. This comparison underscores the efficacy of the intervention for those identified as potential cases of learning disabilities.
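The per-disability grouping described above can be sketched as follows. The cutoff separating "high" app scores from potential cases is not reported, so the value `CUTOFF = 25` on the 0 to 50 app scale is purely a placeholder assumption, as are the function name `split_groups` and the example scores used to exercise it.

```python
TESTS = ("dyslexia", "dyscalculia", "apd", "adhd")

# Hypothetical cutoff on the 0-50 app scale; the paper does not state
# the value actually used to flag a potential case.
CUTOFF = 25


def split_groups(app_scores, cutoff=CUTOFF):
    """Split students into 'potential' and 'control' groups per test.

    app_scores maps an alias to a dict of test name -> app score.
    A student may land in the control group for one test and in the
    potential-case group for another.
    """
    groups = {test: {"potential": [], "control": []} for test in TESTS}
    for alias, scores in app_scores.items():
        for test in TESTS:
            key = "control" if scores[test] >= cutoff else "potential"
            groups[test][key].append(alias)
    return groups
```

For example, a student with low app scores on every test except dyscalculia would appear under `"potential"` for three tests and under `"control"` for dyscalculia.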

Table 4 provides a comprehensive overview of the students' performance across different learning disabilities, including Dyslexia, Dyscalculia, APD, and ADHD. Each student is represented by an alias, and their respective scores on the mobile application test, pretest, and posttest are recorded. Notably, the scores are scaled differently for each test, with the app test results ranging from 0 to 50, and the pretest and posttest results ranging from 0 to 100. This allows for a comparative analysis of students' performance before and after the intervention.

As per standard practice in pretest–posttest approaches, Cohen’s d is utilized as a metric to quantify the effect size of the intervention. By calculating Cohen’s d [15] for each student based on their pretest and posttest scores, the magnitude of improvement post-intervention can be mathematically expressed. Cohen’s d offers a nuanced understanding of the impact of the intervention, taking into account the variability of individual student performance through the standard deviations of their scores.

To ensure the accuracy and reliability of the findings, the standard deviation is calculated for both the pretest and posttest scores. This statistical measure accounts for the dispersion of scores around the mean, thus mitigating potential biases that may arise from variations in test difficulty. The process begins by computing the mean scores for both tests:
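In standard notation (the symbols here are our own labels), writing $x_{i}$ for the score of student $i$ on a given test and $n$ for the number of participants (ten in this study), the two means are:

```latex
\bar{x}_{\text{pre}} = \frac{1}{n}\sum_{i=1}^{n} x_{i,\text{pre}},
\qquad
\bar{x}_{\text{post}} = \frac{1}{n}\sum_{i=1}^{n} x_{i,\text{post}}
```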

Following the determination of means, the squared difference of each data point from its respective mean is calculated. These calculations are tabulated in Tables 5 and 6 for the pretest and posttest scores, respectively.

In Table 5, each data point's squared difference from the pretest mean is computed. This step is crucial for assessing the variability of scores and is instrumental in determining the pretest variance.

Similarly, Table 6 outlines the squared differences of each data point from the posttest mean. These values are essential for calculating the posttest variance and standard deviation.

With the squared differences computed, the next step involves calculating the variance for both the pretest and posttest scores. The variance provides insight into the spread of data points around the mean, offering a quantitative measure of variability.

Once the variances are determined, the standard deviation is calculated using the square root of the respective variances. This step finalizes the computation necessary for Cohen’s d calculation, providing a standardized measure of the spread of scores around the mean for both the pretest and posttest.
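For reference, these two steps can be written out as below, with $x_i$ the score of student $i$, $\bar{x}$ the mean of the test in question, and $n$ the number of students. The text does not state whether the sample form (dividing by $n-1$) or the population form (dividing by $n$) was used; the sample form shown here is an assumption.

```latex
s^{2} = \frac{1}{n-1}\sum_{i=1}^{n}\left(x_{i} - \bar{x}\right)^{2},
\qquad
s = \sqrt{s^{2}}
```

The same expressions are applied once to the pretest scores and once to the posttest scores, giving $s_{\text{pre}}$ and $s_{\text{post}}$.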

To ensure accurate comparisons, a modified formula for Cohen’s d is employed, considering the variability in standard deviations between the pretest and posttest scores:
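One common formulation consistent with this description pools the two standard deviations and divides each student's gain by the pooled value. It is offered as an assumption about the form used, not as a confirmed reproduction; $x_{i,\text{pre}}$ and $x_{i,\text{post}}$ denote student $i$'s scores, and $s_{\text{pre}}$ and $s_{\text{post}}$ the standard deviations of the two tests.

```latex
s_{\text{pooled}} = \sqrt{\frac{s_{\text{pre}}^{2} + s_{\text{post}}^{2}}{2}},
\qquad
d_{i} = \frac{x_{i,\text{post}} - x_{i,\text{pre}}}{s_{\text{pooled}}}
```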

With this adjusted formula, Cohen’s d values are computed for each student, as shown in Table 7. These values offer insights into the magnitude of improvement post-intervention and facilitate a standardized interpretation of the research findings.

Table 7 presents the calculated Cohen’s d values for each student, along with their respective pretest and posttest means and standard deviations. The Cohen’s d values serve as a standardized measure of the effect size of the intervention, aiding in the interpretation of the practical significance of the research outcomes.
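The chain of computations described above (means, squared differences, variances, standard deviations, and a per-student effect size) can be sketched in a few lines. This is a minimal sketch under two assumptions that the text does not confirm: sample variances (dividing by n - 1) and a pooled standard deviation that averages the pretest and posttest variances. The function name `per_student_cohens_d` is hypothetical.

```python
import math
import statistics


def per_student_cohens_d(pretest, posttest):
    """Return one effect size per student using a pooled standard deviation.

    Assumption: d_i = (post_i - pre_i) / s_pooled, where s_pooled is the
    square root of the average of the two sample variances.
    """
    if len(pretest) != len(posttest) or len(pretest) < 2:
        raise ValueError("need two equally long score lists with n >= 2")
    var_pre = statistics.variance(pretest)    # sample variance (n - 1)
    var_post = statistics.variance(posttest)
    s_pooled = math.sqrt((var_pre + var_post) / 2)
    if s_pooled == 0:
        raise ValueError("scores have no spread; d is undefined")
    return [(post - pre) / s_pooled for pre, post in zip(pretest, posttest)]
```

`statistics.variance` already performs the mean and squared-difference steps internally, so Tables 5 and 6 correspond to its intermediate values rather than to separate code.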

Cohen’s d has a conventional interpretation, in which a value of 0.2 signifies a small effect, 0.5 a medium effect, and 0.8 a large effect. In our data, students Alpha, Epsilon, and Iota demonstrated a small effect, although their value of 0.48 is close to the 0.5 threshold for a medium effect. Student Gamma exhibited a medium effect, while Zeta and Eta showed a large effect, surpassing the 0.8 threshold. Delta and Beta showed no meaningful increase, and Theta and Kappa displayed a decrease that did not reach the -0.2 threshold and can therefore be considered negligible.
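The interpretation thresholds above translate directly into a small helper. The function name `interpret_cohens_d` and the label strings are illustrative choices; the cutoffs 0.2, 0.5, and 0.8 are the ones stated in the text.

```python
def interpret_cohens_d(d):
    """Map an effect size to (magnitude, direction) using 0.2 / 0.5 / 0.8."""
    size = abs(d)
    if size < 0.2:
        magnitude = "negligible"
    elif size < 0.5:
        magnitude = "small"
    elif size < 0.8:
        magnitude = "medium"
    else:
        magnitude = "large"

    if d > 0:
        direction = "increase"
    elif d < 0:
        direction = "decrease"
    else:
        direction = "no change"
    return magnitude, direction
```

With these cutoffs, a value of 0.48 is labelled small even though it sits just below the medium threshold, which is why such borderline cases are discussed separately.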

Figure 10 compares the app test results with the Cohen’s d values, summarizing all learning disabilities assessed in the study. The vertical bars correspond to the Cohen’s d value for each student, the coloring and patterns match the app results, and overlaid lines mark the effect-size thresholds. The visualization shows that the app results align with the Cohen’s d interpretations. Students Eta and Zeta, who exhibited a large improvement (Cohen’s d > 0.8), are identified as having a high chance of benefiting from the app. This predictive capability is particularly crucial in regions like the Republic of North Macedonia, where official testing is stigmatized. However, it raises questions about the specific challenges faced by these students and by others with medium or close-to-medium improvement according to their Cohen’s d values.

Given that students often have multiple learning disabilities simultaneously, further analysis is warranted to understand the complexities involved. Separate visualizations for each screened learning disability can provide insight into which disabilities the app positively impacts per student, avoiding the common mistake of grouping all disabilities together. These visualizations use the same Cohen’s d values but pair them with the app results for each individual learning disability, showing which students were most likely flagged and which test results most likely led to that outcome (see Fig. 11a–d).

These visualizations support the hypothesis that exhibiting signs of multiple learning disabilities simultaneously, as students Zeta and Eta do, can significantly affect the extent of improvement. Moreover, students like Gamma, who shows moderate improvement, as well as Alpha, Epsilon, and Iota, whose effects are the closest to the medium threshold, would be overshadowed in the original visualization. For them, both the Cohen’s d interpretation and the app results indicate that a single learning disability is highly possible.

This comprehensive analysis underscores the importance of considering individual student profiles and their specific learning disabilities when evaluating the effectiveness of educational technology interventions.

Discussion

The findings of this study underscore the significant potential of educational technology interventions in enhancing learning outcomes for children with learning disabilities. The mobile application developed for this research demonstrated promising accuracy in screening for Dyslexia, Dyscalculia, Attention Deficit Hyperactivity Disorder (ADHD), and Auditory Processing Disorder (APD), suggesting that such tools can be valuable assets in educational settings.

The experimental results revealed notable improvements in student performance post-intervention, particularly for those identified by the app as having potential learning disabilities. This underscores the efficacy of targeted educational technology in supporting students who may otherwise struggle in traditional learning environments.

Recent studies in educational technology and special education have similarly highlighted the benefits of integrating digital tools for screening and intervention. For example, González and Espada [16] demonstrated the effectiveness of mobile applications in identifying dyslexia in early childhood, aligning with our findings on the app's accuracy in dyslexia screening. Furthermore, a study by Sharma et al. [17] explored the use of digital platforms for ADHD management, showing positive outcomes in attention and behavior regulation, which parallels our results on ADHD screening and intervention.

While previous research has predominantly focused on single-disability screening apps, our study's unique contribution lies in its multi-disability screening capability. This comprehensive approach addresses a gap in the existing literature, which often segregates interventions by specific disabilities. Our findings indicate that a multi-functional app can streamline the screening process, making it more efficient and less stigmatizing for students.

The primary novelty of this study is the development and implementation of a mobile application capable of screening for multiple learning disabilities within a single platform. This multi-faceted approach is not only efficient but also adaptable to diverse educational settings, particularly in regions where specialized resources are scarce. By focusing on a broader spectrum of learning disabilities, our app represents a significant step forward in inclusive education.

The practical applications of this research are vast. Educational institutions can leverage this technology to facilitate early identification and intervention, thereby improving educational outcomes for students with learning disabilities. Future research should focus on longitudinal studies to assess the long-term efficacy of the app and its impact on student performance over time. Additionally, expanding the app's capabilities to include screening for other learning disabilities, such as Dysgraphia and Dyspraxia, could further enhance its utility and inclusivity.

In summary, this study contributes to the growing body of literature advocating for the integration of technology in education, particularly for students with learning disabilities. The promising results highlight the need for continued innovation and research in this field to ensure that all students receive the support they need to succeed academically.

Conclusion

The findings of this research underscore the transformative potential of technological interventions in improving educational outcomes for children with learning disabilities. Through the development and implementation of a novel app designed for screening and data collection, this study has showcased the profound impact that such interventions can have on student performance.

Through an effect-size analysis based on Cohen’s d, we have illustrated the need for tools like the app developed in this study. The results underscore the critical role of technology in education, particularly in addressing the unique needs of students with learning disabilities. Despite potential resistance to the integration of technology in educational settings, our findings highlight its value in enhancing the learning experiences of students with diverse learning profiles.

In summary, this research serves as a compelling testament to the power of technological innovation in facilitating inclusive education practices. Moving forward, it is imperative to continue advocating for the development and integration of technology-driven solutions that cater to the diverse needs of learners, ensuring that every child has the opportunity to thrive academically. Future studies should focus on longitudinal assessments and the inclusion of additional learning disabilities to further validate and expand the app's capabilities, ultimately paving the way for a more inclusive and effective educational landscape.

Data Availability

Not Applicable.

References

1. Brown RC. Florida’s first people: 12,000 years of human history. Sarasota: Pineapple Press; 2013.

2. Samama E. The Greek vocabulary of disabilities. In: Disability in antiquity. London: Routledge; 2016. p. 137–54.

3. Covey HC. Western Christianity’s two historical treatments of people with disabilities or mental illness. Soc Sci J. 2005;42(1):107–14.

4. Carter K. Freaks no more: rehistoricizing disabled circus artists. Perform Matters. 2018;4(1–2):141–6.

5. Carlson S. A two hundred year history of learning disabilities. 2005. Online Submission.

6. Intellectual Disability Definition & Meaning. (n.d.). Merriam-Webster. Retrieved from https://www.merriam-webster.com/dictionary/intellectual%20disability (Accessed 25 July 2023).

7. Restori AF, Katz GS, Lee HB. A critique of the IQ/achievement discrepancy model for identifying specific learning disabilities. Europe’s J Psychol. 2009;5(4):128–45.

8. Wilcke A, Müller B, Schaadt G, Kirsten H, Boltze J. High acceptance of an early dyslexia screening test involving genetic analyses in Germany. Eur J Hum Genet. 2016;24(2):178–82. https://doi.org/10.1038/ejhg.2015.118.

9. Cutrera G. Demone Bianco: Una Storia di Dislessia. 2008. Ebook retrieved from http://www.impegnocivile.it/progetti/BreakingBarriers/Documenti/demone_bianco_Giacomo%20Cutrera.pdf (Accessed 15 August 2016).

10. Change.Org. (n.d.). Subito una legge per tutelare gli studenti universitari dislessici. Retrieved from https://www.change.org/p/subito-una-legge-per-tutelare-gli-studenti-universitari-dislessici-lostudio%C3%A8undiritto?recruiter=1250366543&utm_source=share_petition&utm_medium=whatsapp&utm_campaign=petition_dashboard&recruited_by_id=76f6cbe0-804f-11ec-b65e-f1bda7ac6bc5 (Accessed 26 July 2023).

11. ВКЛУЧЕНОСТ НА ДЕЦАТА И МЛАДИТЕ... - UNICEF. Retrieved from https://www.unicef.org/northmacedonia/media/4276/file/MK_CWDinSecondaryEducation_Report_MK.pdf (Accessed 26 July 2023).

12. Politi-Georgousi S, Drigas A. Mobile applications, an emerging powerful tool for dyslexia screening and intervention: a systematic literature review. Int J Interactive Mobile Technol. 2020;14:4–17. https://doi.org/10.3991/ijim.v14i18.15315.

13. Hours C, Recasens C, Baleyte JM. ASD and ADHD comorbidity: what are we talking about? Front Psych. 2022;13:154.

14. Dawson TE. A primer on experimental and quasi-experimental design. 1997.

15. Becker LA. Effect size (ES). Universitat de València; 2000.

16. González FJ, Espada JP. Mobile applications for dyslexia detection and intervention. J Educ Technol Soc. 2020;23(4):24–35.

17. Sharma R, et al. Mobile learning technologies for education: benefits and pending issues. Appl Sci. 2021;11(9):4111. https://doi.org/10.3390/app11094111.

Funding

Open access funding provided by University of Pretoria. The paper is in Open Access form under a Creative Commons Attribution 4.0 International License.

Author information

Contributions

Conceptualization, investigation, data curation, and original draft preparation: Benjamin Adjiovski, Dijana Capeska Bogatinoska, and Mersiha Ismajloska. Review, editing, and discussions: Benjamin Adjiovski, Dijana Capeska Bogatinoska, Mersiha Ismajloska, and Reza Malekian.

Ethics declarations

Conflict of Interest

The authors declare no competing interests.

Research Involving Humans and/or Animals

Not Applicable.

Informed Consent

Not Applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Adjiovski, B., Bogatinoska, D.C., Ismajloska, M. et al. Enhancing Educational Technology in Lectures for School Students with Learning Disabilities: A Comprehensive Analysis. SN COMPUT. SCI. 5, 716 (2024). https://doi.org/10.1007/s42979-024-03049-z

DOI: https://doi.org/10.1007/s42979-024-03049-z