Abstract

One of the main questions paleographers aim to answer while studying historical manuscripts is when they were produced. Automatized methods provide tools that can aid in a more accurate and objective date estimation. Many of these methods are based on the hypothesis that handwriting styles change over time. However, the sparse availability of digitized historical manuscripts poses a challenge in obtaining robust systems. The presented research extends previous research that explored the effects of data augmentation by elastic morphing on the dating of historical manuscripts. Linear support vector machines were trained with k-fold cross-validation on textural and grapheme-based features extracted from the Medieval Paleographical Scale, early Aramaic manuscripts, the Dead Sea Scrolls, and volumes of the French Royal Chancery collection. Results indicate that training models with augmented data can improve the performance of historical manuscript dating by 1–3% in cumulative scores, but can also diminish it. Data augmentation using elastic morphing can thus both improve and decrease date prediction of historical manuscripts and should be carefully considered. Moreover, further enhancements are possible by considering models tuned to the features and to the documents’ scripts.


Introduction

Historical manuscripts, i.e., handwritten accounts, letters, and similar documents, contain essential information required to understand historical events. Paleographers study such documents, seeking to understand their social and cultural contexts. They specifically seek to identify the script(s), author(s), location, and production date of historical manuscripts, often through the study of handwriting styles, writing materials, and contents. To do so, specific domain knowledge is needed. Additionally, these methods are time-consuming and lead to subjective estimations. Moreover, the repeated physical handling of the documents contributes to their further degradation.

Digitizing historical manuscripts, e.g., by scanning, contributes to their preservation and has allowed for the application of machine learning to help paleographers answer the aforementioned questions in a more objective manner. Historical manuscript dating, in particular, can benefit from these automatized methods, as the alternative may be to resort to physical dating methods, which can be destructive and have limited reliability.

Automatized methods are commonly based on the hypothesis that handwriting styles change over a period [1], allowing for the documents’ date estimation. These methods thus aim to estimate dates by identifying characteristics in handwriting specific to time periods.

Due to the limited availability of historical manuscripts, research has mainly focused on statistical feature-extraction techniques. These statistical methods extract the handwriting style by capturing attributes such as curvature or slant or representing the general character shapes in the documents [2]. However, for reliable results, manuscripts need a sufficient amount of handwriting to extract the handwriting styles.

Both traditional and automatized methods must deal with data sparsity and the degradation of ancient materials; new data can only be obtained by digitizing or discovering more manuscripts. Data augmentation can be a potential solution to this issue. Data augmentation is commonly used in machine learning to generate additional realistic training data from existing data to obtain more robust models. However, this must be done carefully to prevent the loss of information on the handwriting styles. Such loss occurs with standard techniques such as rotating or mirroring the images, which alter attributes like slant and letter orientation that characterize a handwriting style. Data augmentation on the character level, on the other hand, could generate realistic samples that simulate an author’s own variability in their handwriting.

In previous research [3], we investigated the effects of character-level data augmentation on the style-based dating of historical manuscripts. The current research extends this by introducing another medieval data set, which contains images from the French Royal Chancery (FRC) registers, and applying the previously presented techniques to it. Specifically, manuscript images taken from the Medieval Paleographical Scale (MPS) collections, the FRC (HIMANIS) collection, the Bodleian Libraries of the University of Oxford, the Khalili collections, and the Dead Sea Scrolls were augmented with an elastic rubber-sheet algorithm [5]. The first collection, MPS, has medieval charters produced between 1300 and 1550 CE in four cities: Arnhem, Leiden, Leuven, and Groningen. Additionally, 840 images from 28 volumes of the FRC collection, dating between 1302 and 1330 CE, were used. A number of early Aramaic, Aramaic, and Hebrew manuscripts were taken from the last three collections. Several statistical feature-extraction methods on the textural and character level were used to train linear support vector machines (SVM) on only non-augmented images and on both non-augmented and augmented images.

Related Works

Scripts have their own characteristics, and some features represent these better than others. Consequently, feature selection is one of the challenges in style-based dating. Digital collections of historical manuscripts are available in various languages and scripts, such as the Medieval Paleographical Scale [6] and the Svenskt Diplomatariums huvudkartotek (SDHK) data sets, which are in Dutch and Swedish, respectively. Additionally, the early Aramaic and Dead Sea Scrolls collections [7] contain ancient texts in Hebrew, Aramaic, Greek, and Arabic, dating from the fifth century BCE (Before the Common Era) until the Crusader Period (12th–13th centuries CE).

Statistical feature-extraction techniques are commonly divided into textural-based and grapheme-based features that capture information on the handwriting across an entire image on the textural and character level, respectively. One example of a statistical feature is the ‘Hinge’ feature, which captures a handwriting sample’s slant and curvature. It describes the joint probability distribution of the two hinged edge fragments and forms the basis of the family of ‘Hinge’ features [2]. The feature has been extended to, e.g., co-occurrence features QuadHinge and CoHinge, which emphasize the handwriting’s curvature and shape information, respectively [8].

Next to Hinge features, other textural features have been used for historical manuscript dating, such as curvature-free and chain code features [9, 10]. Additionally, features can be combined. The authors of Ref. [11], for instance, investigated combinations of Gabor filters [12], local binary patterns [13], and gray-level co-occurrence matrices [14], showing improved performance compared to the individual features on the MPS data set.

Grapheme-based features represent character shapes. Graphemes are extracted from a set of documents and used to train a clustering method. The cluster representations form a codebook, from which a probability distribution of grapheme usage is computed for each document to represent its handwriting style.

One grapheme-based feature is Connected Component Contours (CO3) [15], which describes the shape of a fully connected contour fragment. This was extended to Fraglets [2], which splits the connected contours at their minima to deal with cursive handwriting. Other extensions are k stroke and k contour fragments, which partition CO3 into k stroke and k contour fragments, respectively [16]. In He et al. [17], Junclets was proposed, which represents the junctions in writing contours.

The MPS data set is often used in research on historical manuscript dating, and He et al. in particular proposed several methods for it. In He et al. [1], dates were predicted through a combination of local and global Support Vector Regression on extracted Fraglets and Hinge features. Later, the authors extended their work and proposed, among others, the grapheme-based features k contour fragments and Junclets. Moreover, the temporal pattern codebook was proposed in He et al. [18] to maintain the temporal information lost with the Self-Organizing Map (SOM), which was commonly used for training codebooks. Finally, He et al. compared various statistical feature-extraction methods for historical manuscript dating in He et al. [19].

One reason the MPS data set is often used is that it is relatively clean. However, it is not representative of other collections of historical manuscripts, which are usually more degraded due to aging, as is the case with the Dead Sea Scrolls. An initial framework for the style-based dating of these manuscripts using both statistical and grapheme-based features was proposed in Dhali et al. [20]. The Dead Sea Scrolls posed a challenge because they are fragmented and contain eroded ink traces. Moreover, few labeled manuscripts are available.

Next to statistical hand-crafted features, deep learning approaches have been proposed. These have applied transfer learning, i.e., fine-tuning pre-trained neural networks with new data on a task different from the one they were initially trained for. Transfer learning is promising for historical manuscript dating, as it requires less data than training deep networks from scratch. In Wahlberg et al. [21], the Google ImageNet-network was fine-tuned on the SDHK collection. However, this was done using 11,000 images, which is large for a data set of historical manuscripts. Additionally, a group of pre-trained neural networks was fine-tuned on the 3267 images from the MPS data set in Hamid et al. [22]. Here, the best-performing model outperformed the statistical methods.

While deep learning approaches show promising results, statistical methods are still relevant. To train a neural network, the manuscripts’ images need to be partitioned into patches, possibly leading to loss of information. To solve this problem, Hamid et al. [22] ensured that each patch contained “3–4 lines of text with 1.5–2 words per line” to extract the handwriting style. While this was a solution for the MPS data set, it may not be for smaller and more degraded collections, such as the Dead Sea Scrolls. In contrast, statistical feature extraction does not require image resizing and considers the handwriting style over the entire image.

More recently, studies have fused statistical features with those extracted using neural networks. Hierarchical fusion was proposed by Adam et al. [23]. Specifically, they combined Gabor filters, Histogram of Oriented Gradients, and Hinge with features extracted from a pre-trained ResNet through joint sparse representation. This method outperformed the individual statistical and ResNet features on a collection of Arabic manuscripts.

Methods

This section will present the dating model along with data description, image processing, and feature extraction techniques.

Data

The initial research used two data sets: MPS and EAA [3]. The current article extends this work with a third, the FRC data set. These three data sets are briefly presented in the following subsections.

Medieval Paleographical Scale (MPS) Collection

The current research uses the MPS data set [1, 6, 16, 24]. Non-text content, such as seals, supporting backgrounds, and color calibrators, has been removed, so the data set provides relatively clean images. Yet, it also includes more degraded images and images in which parts of a seal or ribbon remain present. The data set is publicly available via Zenodo.

The MPS data set contains 3267 images of charters collected from four cities representing the four corners of the medieval Dutch language area. Charters were commonly used to document legal or financial transactions or actions. Additionally, the manuscripts’ production dates have been recorded. The charters were usually written on parchment and sometimes on paper. Figure 1 shows an example image.

A document image from the Medieval Paleographical Scale (MPS) collection. Image taken from Koopmans et al. [3]

The medieval charters date from 1300 CE to 1550 CE. Because handwriting evolves slowly and gradually, documents from 11 quarter-century key years with a margin of ± 5 years were included in the data set. Hence, the data set consists of images of charters from the medieval Dutch language area in the periods 1300 ± 5, 1325 ± 5, 1350 ± 5, up to 1550 ± 5. Table 1 contains the number of charters in each key year.
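The ± 5-year inclusion rule can be expressed as a small lookup. The helper below is a hypothetical illustration (the names `KEY_YEARS`, `MARGIN`, and `key_year` are ours, not part of the MPS distribution); it maps a charter’s production year to its key year, or reports that the charter falls outside every window.

```python
from typing import Optional

# Key years of the MPS data set: 1300, 1325, ..., 1550 (11 classes).
KEY_YEARS = list(range(1300, 1551, 25))
MARGIN = 5  # charters within +/- 5 years of a key year are included


def key_year(year: int) -> Optional[int]:
    """Return the key year whose +/- MARGIN window contains `year`,
    or None if the charter would not be included in the data set."""
    for k in KEY_YEARS:
        if abs(year - k) <= MARGIN:
            return k
    return None
```

For example, a charter from 1304 is assigned to 1300, while one from 1312 lies between two windows and is not included.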

Early Aramaic and Additional (EAA) Manuscripts

In addition to the MPS data set, 30 images of early Aramaic, Aramaic, and Hebrew manuscripts were used. For ease of reference, this second data set is called EAA in the rest of the article, even though it contains Aramaic and Hebrew manuscripts in addition to early Aramaic ones. A list of the EAA images used in this study can be found in the appendix (see Table 7). These images are publicly available through the Bodleian Libraries, University of Oxford, the Khalili collections, and the Leon Levy Dead Sea Scrolls Digital Library. The selected EAA manuscripts are internally dated, i.e., their dates were directly inferred from dates or events recorded in the manuscripts. Their dates span from 456 BCE to 133 CE. In addition, the data set contains several degraded manuscripts with missing ink traces or only two or three lines of text. An example image is shown in Fig. 2.

An early Aramaic (EA) manuscript from the Bodleian Libraries, the University of Oxford (Pell. Aram. I). Image taken from Koopmans et al. [3]

French Royal Chancery (FRC) Registers

As a third data set, extending our previous work in Koopmans et al. [3], the FRC data set was used [25]. This was a subset of 840 images of the French Royal Chancery collection, also known as the Registres du Trésor des Chartes. A total of 28 volumes from this collection were used, specifically their first 30 pages. This avoided the drawings, crossed-out texts, marginal notes, etc., that become increasingly frequent in later pages of manuscripts. The list of volumes used in the current research can be found in the appendix (see Table 8).

Most volumes contained the years they were written in (the ground truth) on the first page. However, these pages also contained many artifacts and little handwriting. Hence, these pages were removed after the ground truths were determined. Additionally, empty pages were removed. This led to the removal of 48 images, resulting in a data set of 792 images. An example image from the FRC collection is shown in Fig. 3.

Preprocessing

Label Refinement

EAA The set of images from the EAA collections did not contain sufficient samples for each year. Therefore, the samples were manually classified into historical periods identified by historians. The time periods and the corresponding number of samples are shown in Table 2.

The Persian period contained two groups of samples separated by more than 30 years. Under the assumption that handwriting styles changed during this time, these samples were split into two periods: the Early and Late Persian Periods. These were based on the samples’ production years and not on defined historical periods. Images from the upper bound of the year range in Table 2 were included in the classes. The manuscripts from the Roman Period were excluded as they contained insufficient samples. The images were relabeled according to the median of their corresponding year ranges.

FRC The set of images from the FRC collection, in contrast to the EAA collections, contained sufficient samples for each year. However, many of the volumes overlapped in the time ranges they were written or were too close to each other for distinguishable changes. For this reason, the images were manually classified into 4 categories, such that they contained sufficient variability to capture the handwriting style changes over a period. The images were relabeled according to the median of their corresponding year ranges. The resulting categories and their distributions are shown in Table 3.

Data Augmentation

To augment the data such that new samples simulate a realistic variability of an author’s handwriting, the Imagemorph program [26] was used. The program applies random elastic rubber-sheet transforms to the data through local non-uniform distortions, meaning that transformations occur on the components of characters. Consequently, the Imagemorph algorithm can generate a large number of unique samples. For the augmented data to be realistic, a smoothing radius of 8 pixels and a displacement factor of 1 pixel were used. As the MPS images required much memory, three augmented images were generated per image. Since the EAA data set was small, 15 images were generated per image. The FRC images were each augmented 10 times for the same reason.
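The elastic rubber-sheet idea can be sketched as follows. This is not a port of the Imagemorph program but a minimal approximation, assuming that a uniform random displacement field is smoothed with a Gaussian whose sigma equals the smoothing radius and is then rescaled so that its mean length equals the displacement factor. The function name `elastic_morph` and these normalization choices are assumptions.

```python
from typing import Optional

import numpy as np
from scipy import ndimage


def elastic_morph(img: np.ndarray, radius: float = 8.0, displacement: float = 1.0,
                  rng: Optional[np.random.Generator] = None) -> np.ndarray:
    """Random, smooth, local rubber-sheet distortion of a grayscale image.

    Assumption: uniform noise smoothed by a Gaussian (sigma = radius), rescaled
    so that the mean displacement length is `displacement` pixels.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = img.shape
    # Independent smooth random fields for row (dy) and column (dx) shifts.
    dy = ndimage.gaussian_filter(rng.uniform(-1.0, 1.0, (h, w)), sigma=radius)
    dx = ndimage.gaussian_filter(rng.uniform(-1.0, 1.0, (h, w)), sigma=radius)
    # Rescale so that the average displacement length equals `displacement`.
    mean_len = np.sqrt(dx ** 2 + dy ** 2).mean()
    if mean_len > 0:
        dy *= displacement / mean_len
        dx *= displacement / mean_len
    rows, cols = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    # Backward mapping: sample the source at displaced positions (bilinear).
    coords = np.array([rows + dy, cols + dx])
    out = ndimage.map_coordinates(img.astype(np.float64), coords, order=1, mode="nearest")
    return np.clip(np.rint(out), 0, 255).astype(img.dtype)
```

Calling it repeatedly with the same generator yields a different variant each time, e.g., 3 per MPS image or 15 per EAA image; because the field is smooth, neighboring pixels move together, so strokes bend rather than tear.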

Binarization

To extract only the handwriting, the ink traces in the images were extracted through binarization. This resulted in images with a white background representing the writing surface, and a black foreground representing the ink of the handwriting.

MPS Otsu thresholding [28] was used for binarizing the MPS images, as the MPS data set is relatively clean and the method has been successfully used in previous research with this data set [1, 16, 19]. Otsu thresholding is a global, intensity-based technique that selects the threshold maximizing the between-class variance of the two resulting classes (ink and background), i.e., their separability. Figure 4 shows Fig. 1 after binarization.
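For reference, the Otsu criterion can be computed directly from the gray-level histogram. The sketch below assumes 8-bit grayscale input with dark ink; the function names are hypothetical, and library routines such as OpenCV’s `cv2.threshold` with `cv2.THRESH_OTSU` or scikit-image’s `skimage.filters.threshold_otsu` implement the same criterion.

```python
import numpy as np


def otsu_threshold(gray: np.ndarray) -> int:
    """Otsu threshold t for an 8-bit image: pixels <= t form the dark class."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(np.float64)
    p = hist / hist.sum()
    omega = np.cumsum(p)                    # weight of the dark class for each t
    mu = np.cumsum(p * np.arange(256))      # cumulative first moment
    mu_total = mu[-1]
    denom = omega * (1.0 - omega)
    # Between-class variance; undefined when one class is empty, so use 0 there.
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = np.where(denom > 0, (mu_total * omega - mu) ** 2 / denom, 0.0)
    return int(np.argmax(sigma_b))


def binarize_otsu(gray: np.ndarray) -> np.ndarray:
    """Black ink (0) on a white background (255)."""
    t = otsu_threshold(gray)
    return np.where(gray <= t, 0, 255).astype(np.uint8)
```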

EAA The EAA images were more difficult to binarize using threshold-based techniques. So, for the EAA images, we used BiNet: a deep learning-based method designed specifically to binarize historical manuscripts [27]. Figure 5 shows Fig. 2 after binarization.

FRC The FRC images were partly binarized using BiNet and partly using adaptive thresholding. In the latter, the threshold of each pixel is a Gaussian-weighted sum of its neighborhood minus a constant C. The neighborhood size was set to \(45\times 45\) pixels, and a constant of \(C=23\) was used after experimentation. However, since the resulting binarized documents contained many unwanted artifacts, further noise-reduction operations were applied. First, a median filter of size \(5\times 5\) was applied. Next, dilation with a kernel of size \(7\times 7\) was applied to emphasize the locations of the handwriting. After the dilation, a bitwise AND operator was applied to the dilated and the initial binarized image, which removed the majority of the artifacts in the original binarized images. However, the ink also contained many gaps within the letters. Hence, a closing operation and another median filter were applied, with kernel sizes \(2\times 2\) and \(3\times 3\), respectively. For simplicity, we refer to this entire binarization process as adaptive thresholding.
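The cleaning steps above can be sketched with NumPy and SciPy. This is an approximation under stated assumptions: the Gaussian-weighted neighborhood mean uses the sigma that OpenCV derives for a 45-pixel kernel, pixels at or below that mean minus C are treated as ink, and ink is kept as the foreground (value 1) during the morphological steps. OpenCV’s `cv2.adaptiveThreshold` with `ADAPTIVE_THRESH_GAUSSIAN_C` offers the same thresholding rule; the function name `adaptive_binarize` is hypothetical.

```python
import numpy as np
from scipy import ndimage


def adaptive_binarize(gray: np.ndarray, block: int = 45, c: float = 23.0) -> np.ndarray:
    """Binarize a grayscale page (dark ink); returns black ink (0) on white (255)."""
    img = gray.astype(np.float64)
    # Gaussian-weighted mean over a block x block window (sigma as OpenCV would pick).
    sigma = 0.3 * ((block - 1) * 0.5 - 1) + 0.8
    radius = (block - 1) // 2
    local_mean = ndimage.gaussian_filter(img, sigma=sigma, truncate=radius / sigma,
                                         mode="nearest")
    # Ink (foreground = 1) where the pixel is darker than the local mean minus C.
    ink = (img <= local_mean - c).astype(np.uint8)

    # 1) 5x5 median filter removes isolated speckles.
    smoothed = ndimage.median_filter(ink, size=5)
    # 2) 7x7 dilation marks the neighborhood of the surviving handwriting.
    mask = ndimage.binary_dilation(smoothed.astype(bool), structure=np.ones((7, 7), bool))
    # 3) AND with the initial binarization keeps full-detail ink only near strokes.
    cleaned = np.logical_and(mask, ink.astype(bool))
    # 4) 2x2 closing fills small gaps in letters; a 3x3 median smooths the result.
    closed = ndimage.binary_closing(cleaned, structure=np.ones((2, 2), bool))
    final = ndimage.median_filter(closed.astype(np.uint8), size=3)
    # Back to the white-background / black-ink convention.
    return np.where(final > 0, 0, 255).astype(np.uint8)
```

The AND step is what separates this from plain filtering: the median filter and dilation only decide where ink may remain, while the initial binarization supplies the stroke detail.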

A total of 398 images were binarized using adaptive thresholding and 394 using BiNet. For 3 images (2 of category 1311 and 1 of category 1319), the binarization quality was too low to extract features, leaving 789 images of the FRC data set for feature extraction. When using adaptive thresholding, the images were cropped before binarization, as this enhanced the binarization quality. For the BiNet images, cropping was done after binarization to reduce the whitespace. From each image, 5% was cut from the top and left sides, and 10% and 20% from the bottom and right sides, respectively. Moreover, images were further cropped manually when much noise was present near the edges. Examples of images binarized with BiNet and adaptive thresholding are shown in Figs. 6 and 7, respectively.
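The fixed-margin crop can be written as below, reading the percentages as 10% from the bottom and 20% from the right. The function name and defaults are illustrative; the additional manual cropping is not modeled.

```python
import numpy as np


def crop_margins(img: np.ndarray, top: float = 0.05, left: float = 0.05,
                 bottom: float = 0.10, right: float = 0.20) -> np.ndarray:
    """Cut fixed fractions of the page height/width from each side."""
    h, w = img.shape[:2]
    r0, r1 = round(top * h), h - round(bottom * h)
    c0, c1 = round(left * w), w - round(right * w)
    return img[r0:r1, c0:c1]
```

A 100 x 200 page, for instance, keeps rows 5 to 89 and columns 10 to 159.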

Example image of the FRC collection binarized using BiNet model [27]

Binarized image of Fig. 3 using adaptive thresholding

Feature Extraction

The handwriting styles of manuscripts were described by five textural features and one grapheme-based feature. Since the MPS, FRC, and the EAA data sets are written in different scripts and languages, features were chosen that are robust to this.

Textural Features

Textural-based feature-extraction methods capture statistical information on the handwriting in a binarized image by considering its texture. Textural-based features describe handwriting attributes such as slant, curvature, and the author’s pen grip as a probability distribution.

He et al. proposed the joint feature distribution (JFD) principle in He and Schomaker [19], describing how new, more robust features can be created. Two groups of such features were identified: the spatial joint feature distribution (JFD-S) and the attribute joint feature distribution (JFD-A). The JFD-S principle derives new features by combining the same feature at adjacent locations, consequently capturing a larger area. The JFD-A principle derives new features from different features at the same location, consequently capturing multiple properties.

Hinge [2] is obtained by taking orientations \(\alpha\) and \(\beta\) with \(\alpha < \beta\) of two contour fragments attached at one pixel and computing their joint probability distribution. The Hinge feature captures the curvature and orientation in the handwriting. 23 angle bins were used for \(\alpha\) and \(\beta\).
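To make the Hinge construction concrete, the sketch below computes a Hinge-style histogram for a single closed contour given as an ordered array of (row, col) pixels. The fragment length of 5 pixels, the angle convention, and the upper-triangular flattening are assumptions; contour extraction (e.g., with OpenCV’s `cv2.findContours`) is left out, and a full implementation would pool the counts over all contours of a page before normalizing. The name `hinge_histogram` is hypothetical.

```python
import numpy as np


def hinge_histogram(contour: np.ndarray, frag_len: int = 5, n_bins: int = 23) -> np.ndarray:
    """Hinge-style feature for one closed, ordered contour of (row, col) points.

    At every contour pixel, two legs of `frag_len` pixels run forward and backward
    along the contour; their orientations (alpha <= beta, in [0, 2*pi)) are
    quantized into `n_bins` bins and counted in a joint histogram.
    """
    n = len(contour)
    hist = np.zeros((n_bins, n_bins))
    two_pi = 2 * np.pi
    for i in range(n):
        p, q1, q2 = contour[i], contour[(i + frag_len) % n], contour[(i - frag_len) % n]
        # Image rows grow downward, so negate the row difference for standard angles.
        a1 = np.arctan2(-(q1[0] - p[0]), q1[1] - p[1]) % two_pi
        a2 = np.arctan2(-(q2[0] - p[0]), q2[1] - p[1]) % two_pi
        alpha, beta = min(a1, a2), max(a1, a2)
        b_a = min(int(alpha / two_pi * n_bins), n_bins - 1)
        b_b = min(int(beta / two_pi * n_bins), n_bins - 1)
        hist[b_a, b_b] += 1
    # Since alpha <= beta, only the upper triangle (with diagonal) can be non-zero.
    feat = hist[np.triu_indices(n_bins)]
    return feat / feat.sum()
```

With 23 bins, the flattened feature has 23 · 24 / 2 = 276 entries.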

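CoHinge [8] follows the JFD-S principle, combining two Hinge kernels at two different points \(x_i, x_j\) separated by a Manhattan distance l, and is described by:

\[ \textrm{CoHinge}(x_i, x_j) = [H(x_i), H(x_j)] = [\alpha_{x_i}, \beta_{x_i}, \alpha_{x_j}, \beta_{x_j}], \quad \Vert x_i - x_j \Vert_1 = l \]

where \(H(x) = [\alpha_x, \beta_x]\) is the Hinge kernel at point x.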

This shows that the CoHinge kernel over contour fragments can be quantized into a 4D histogram. The number of bins for each orientation \(\alpha\) and \(\beta\) was set to 10.

QuadHinge [8] follows the JFD-A principle, combining the Hinge kernel with the fragment curvature measurement \(C(F_{\textrm{c}})\). Although Hinge also captures curvature information, it focuses on orientation because the contour fragments (the hinge edges) are short. The fragment curvature measurement is defined as:

\[ C(F_{\textrm{c}}) = \frac{\sqrt{(x_1 - x_2)^2 + (y_1 - y_2)^2}}{s} \]

where \(F_{\textrm{c}}\) is a contour fragment with length s on an ink trace with endpoints \((x_1, y_1), (x_2, y_2)\). In addition, the QuadHinge feature is scale-invariant because the kernel is agglomerated over multiple scales. The QuadHinge kernel can then be described through the Hinge kernel and the fragment curvature measurement on contour fragments \(F_1, F_2\):

\[ Q(F_1, F_2) = [H(F_1, F_2), C(F_1), C(F_2)] = [\alpha, \beta, C(F_1), C(F_2)] \]

The number of bins of the orientations was set to 12, and that for the curvature to 6, resulting in a dimensionality of 5184.

DeltaHinge [29] is a rotation-invariant feature generalizing the Hinge feature by computing the first derivative of the Hinge kernel over a sequence of pixels along a contour. Consequently, it captures the curvature information of the handwriting contours. The Delta-n-Hinge kernel is defined as:

where n is the order of the derivative of the Hinge kernel. When used for writer identification, performance decreased for \(n > 1\), implying that the feature’s ability to capture writing styles decreased. Hence, the current research used \(n = 1\).

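Triple Chain Code (TCC) [10] captures the curvature and orientation of the handwriting by combining chain codes at three different locations along a contour fragment. The chain code represents the direction of the next pixel, indicated by a number between 1 and 8. TCC is defined as:

\[ \text{TCC}(x_i, x_j, x_k) = [\text{CC}(x_i), \text{CC}(x_j), \text{CC}(x_k)], \quad \Vert x_i - x_j \Vert_1 = \Vert x_j - x_k \Vert_1 = l \]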

where \({\text {CC}}(x_i)\) is the chain code at location \(x_i\), and Manhattan distance \(l = 7\).
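A comparable sketch for TCC follows. It assumes the contour is closed and 8-connected, uses one arbitrary but fixed numbering of the eight directions from 1 to 8, and approximates the distance l by l steps along the contour rather than an exact Manhattan distance; all names are hypothetical.

```python
import numpy as np

# 8-connected moves (d_row, d_col) -> chain code 1..8, counter-clockwise from east.
# Assumption: any fixed one-to-one numbering gives an equivalent feature.
DIRECTIONS = {(0, 1): 1, (-1, 1): 2, (-1, 0): 3, (-1, -1): 4,
              (0, -1): 5, (1, -1): 6, (1, 0): 7, (1, 1): 8}


def chain_codes(contour: np.ndarray) -> np.ndarray:
    """Chain code (direction to the next pixel) for each pixel of a closed contour."""
    steps = np.roll(contour, -1, axis=0) - contour
    return np.array([DIRECTIONS[(int(dr), int(dc))] for dr, dc in steps])


def tcc_histogram(contour: np.ndarray, l: int = 7) -> np.ndarray:
    """Joint histogram of chain codes at x_i, x_{i+l}, x_{i+2l}, flattened to 512 bins."""
    cc = chain_codes(contour)
    n = len(cc)
    hist = np.zeros((8, 8, 8))
    for i in range(n):
        hist[cc[i] - 1, cc[(i + l) % n] - 1, cc[(i + 2 * l) % n] - 1] += 1
    return (hist / hist.sum()).ravel()
```

With eight codes per position, the flattened histogram has 8^3 = 512 bins.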

Grapheme-Based Features

Grapheme-based features are allograph-level features that partially or fully overlap with allographs in handwriting, described by a statistical distribution. The handwriting style is represented by the probability distribution of the grapheme usage across a document, computed with a common codebook.

Junclets [17] represents the crossing points, i.e., junctions, in handwriting. Junctions are categorized into ‘L,’ ‘T,’ and ‘X’ junctions with 2, 3, and 4 branches, respectively. In different time periods, the angles between the branches, the number of branches, and the lengths of the branches can differ, which is captured by the junction representations. Compared to other grapheme-based features, this feature does not need segmentation or line detection methods. A junction is represented as the normalized stroke-length distribution of a reference point in the ink over a set of \(N = 120\) directions. The stroke lengths are computed with the Euclidean distance from a reference point in a direction \(n \in N\) until the edge of the ink. The feature is scale-invariant and captures the ink-width and stroke length.
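The stroke-length distribution at the heart of a Junclets descriptor can be sketched as below. Junction detection and codebook mapping are omitted; the reference point is assumed to lie on the ink, each ray is marched in half-pixel steps until it leaves the ink, and `stroke_length_distribution` is a hypothetical name.

```python
from typing import Tuple

import numpy as np


def stroke_length_distribution(ink: np.ndarray, ref: Tuple[int, int],
                               n_dirs: int = 120, step: float = 0.5) -> np.ndarray:
    """Normalized stroke lengths from `ref` (row, col) in `n_dirs` directions.

    `ink` is a boolean mask (True = ink). Each ray advances until the next sample
    leaves the ink or the image; the distance travelled is its stroke length.
    """
    r0, c0 = ref
    h, w = ink.shape
    lengths = np.zeros(n_dirs)
    for k in range(n_dirs):
        theta = 2 * np.pi * k / n_dirs
        dr, dc = -np.sin(theta), np.cos(theta)  # rows grow downward
        dist = 0.0
        while True:
            r = int(round(r0 + (dist + step) * dr))
            c = int(round(c0 + (dist + step) * dc))
            if not (0 <= r < h and 0 <= c < w) or not ink[r, c]:
                break
            dist += step
        lengths[k] = dist
    total = lengths.sum()
    return lengths / total if total > 0 else lengths
```

Normalizing by the total length is what makes such a descriptor approximately scale-invariant: enlarging a junction scales every length by the same factor and leaves the distribution essentially unchanged.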

Codebook

Previous research commonly used the Self-Organizing Map (SOM) [30] unsupervised clustering method to train the codebook [19]. Using SOM, however, means losing temporal information of the input patterns. The partially supervised Self-Organizing Time Map (SOTM) [31] maintains this information. In He et al. [18], SOTM showed an improved performance for a grapheme-based feature compared to SOM. Hence, the codebook was trained with SOTM.

SOTM trains sub-codebooks \(D_t\) for each time period using the standard SOM [30], with handwriting patterns \(\Omega (t)\) from key year y(t). The key years for the MPS (in CE), EAA (in BCE), and FRC (in CE) data sets were defined as \(y(t) = \{1300, 1325, 1350,\ldots , 1550\}\), \(y(t) = \{470, 365, 198 \}\), and \(y(t) = \{1305, 1311, 1319, 1326 \}\), respectively. The final codebook D is composed of the sub-codebooks \(D_t\): \(D = \{D_1, D_2,\ldots , D_n\}\), with n key years. To maintain the temporal information, the sub-codebooks are trained in ascending order. The initial sub-codebook \(D_1\) is randomly initialized as no prior information exists in the data set. The succeeding sub-codebooks are initialized with \(D_{t - 1}\) and then trained. Algorithm 1 shows the pseudo-code obtained from He et al. [18].

The Euclidean distance measure was used to train the sub-codebooks, as it significantly decreased training times compared to the commonly used \(\chi ^2\)-distance. Each sub-codebook was trained for 500 epochs, ensuring sufficient training occurred. The learning rate \(\alpha ^*\) decayed from \(\alpha = 0.99\) following Eq. (6). The sub-codebooks were trained on a computer cluster.Footnote 7
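A compact sketch of the SOTM procedure is given below. It assumes a square sub-codebook grid, an online SOM update with a Gaussian neighborhood, and a linear decay of the learning rate from 0.99; the decay of Eq. (6) is not reproduced here, so this schedule is an assumption. Sub-codebooks are trained in ascending key-year order, each initialized with the previous one, as described above; `train_som` and `train_sotm` are hypothetical names.

```python
from typing import List, Optional

import numpy as np


def train_som(data: np.ndarray, grid: int, epochs: int, init: Optional[np.ndarray],
              rng: np.random.Generator, alpha0: float = 0.99) -> np.ndarray:
    """Online SOM with Euclidean distance on a grid x grid map; returns (grid*grid, dim)."""
    n_nodes = grid * grid
    if init is None:
        # First sub-codebook: random initialization within the data range.
        nodes = rng.uniform(data.min(axis=0), data.max(axis=0), size=(n_nodes, data.shape[1]))
    else:
        # Later sub-codebooks start from the previous key year's sub-codebook.
        nodes = init.copy()
    # Grid coordinates of every node, used by the neighborhood function.
    pos = np.array([(i // grid, i % grid) for i in range(n_nodes)], dtype=np.float64)
    total, t = epochs * len(data), 0
    for _ in range(epochs):
        for x in data[rng.permutation(len(data))]:
            frac = t / total
            alpha = alpha0 * (1.0 - frac)                    # assumed linear decay
            sigma = max(grid / 2.0 * (1.0 - frac), 0.5)      # shrinking neighborhood
            bmu = np.argmin(((nodes - x) ** 2).sum(axis=1))  # best-matching unit
            h = np.exp(-((pos - pos[bmu]) ** 2).sum(axis=1) / (2 * sigma ** 2))
            nodes += alpha * h[:, None] * (x - nodes)
            t += 1
    return nodes


def train_sotm(patterns_per_key_year: List[np.ndarray], grid: int, epochs: int,
               seed: int = 0) -> np.ndarray:
    """Train one sub-codebook per key year in ascending order, each initialized
    with its predecessor, and concatenate them into D = {D_1, ..., D_n}."""
    rng = np.random.default_rng(seed)
    subs: List[np.ndarray] = []
    prev: Optional[np.ndarray] = None
    for patterns in patterns_per_key_year:  # assumed sorted by key year
        prev = train_som(patterns, grid, epochs, prev, rng)
        subs.append(prev)
    return np.vstack(subs)
```

For the MPS setting this would be called with 11 arrays of grapheme descriptors, a grid of 25 (625 nodes per sub-codebook), and 500 epochs, which is far slower in pure Python than a small toy run.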

A historical manuscript’s feature vector was obtained by mapping its extracted graphemes to their most similar elements in the trained codebook, computed via the Euclidean distance, and forming a histogram. Finally, the normalized histogram formed the feature vector.
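The mapping from graphemes to a document feature vector can be sketched as follows. `codebook_histogram` is a hypothetical name, and the grapheme descriptors are assumed to be fixed-length vectors comparable with the codebook nodes.

```python
import numpy as np


def codebook_histogram(graphemes: np.ndarray, codebook: np.ndarray) -> np.ndarray:
    """Assign each grapheme descriptor to its nearest codebook node (Euclidean)
    and return the normalized usage histogram as the document's feature vector."""
    if len(graphemes) == 0:
        raise ValueError("no graphemes extracted; the document cannot be represented")
    # Pairwise squared Euclidean distances, shape (n_graphemes, n_nodes).
    d2 = ((graphemes[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=2)
    nearest = d2.argmin(axis=1)
    hist = np.bincount(nearest, minlength=len(codebook)).astype(np.float64)
    return hist / hist.sum()
```

The explicit check for an empty input reflects that a document without extracted graphemes has no Junclets representation at all.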

Post-processing

The feature vectors of all features consisted of small decimal numbers, varying between \(10^{-2}\) and \(10^{-6}\). To emphasize the differences between the feature vectors of each feature type, the feature vectors were normalized between 0 and 1 based on the range of that feature’s feature vectors. A feature vector f is scaled according to the following equations:

Here, max and min are the maximum and minimum values across the whole set of feature vectors of a certain feature, and \(\max (f)\) and \(\min (f)\) are the maximum and minimum values of the feature vector f [32].
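One reading of this step, assuming a single global minimum and maximum per feature type taken over all feature vectors of that type, is sketched below; the exact equations are not reproduced here, and `minmax_per_feature_type` is a hypothetical name.

```python
import numpy as np


def minmax_per_feature_type(vectors: np.ndarray) -> np.ndarray:
    """Scale all feature vectors of ONE feature type (rows = documents) to [0, 1]
    using the global minimum and maximum over the whole set."""
    lo, hi = vectors.min(), vectors.max()
    if hi == lo:
        return np.zeros_like(vectors, dtype=np.float64)
    return (vectors - lo) / (hi - lo)
```

If the minimum and maximum are computed on the training documents only and then reused for the test documents, no information from the test set enters the scaling.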

Dating

Model

Historical manuscript dating can be regarded as a classification or a regression problem. As the MPS data set was divided into 11 non-overlapping classes (key years), and the EAA and FRC data sets were likewise partitioned into classes, dating was treated as a classification problem. Following previous research on the MPS data set [19], linear support vector machines (SVM) with a one-versus-all strategy were used for date prediction.

Measures

The mean absolute error (MAE) and the cumulative score (CS) are two commonly used metrics to evaluate model performance for historical manuscript dating. The MAE is defined as follows:

\[ \textrm{MAE} = \frac{1}{N} \sum_{i=1}^{N} \lvert y_i - \bar{y_i} \rvert \]

Here, \(y_i\) is a query document’s ground truth, and \(\bar{y_i}\) is its estimated year. N is the number of test documents. The CS is defined in Geng et al. [33] as

\[ \textrm{CS}(\alpha) = \frac{N_{e \le \alpha}}{N} \times 100\% \]

The CS describes the percentage of test images that are predicted with an absolute error e no higher than a number of years \(\alpha\). At \(\alpha = 0\) years, the CS is equal to the accuracy.

For all data sets, CS with \(\alpha = 0\) years was used. Since paleographers generally consider an absolute error of 25 years acceptable, and the MPS set has key years spread apart by 25 years, CS with \(\alpha = 25\) years was also used for the MPS data set.
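Both metrics are straightforward to compute. The sketch below assumes predictions and ground truths are given as years (e.g., the key years, or the class medians used for EAA and FRC); the function names are hypothetical.

```python
import numpy as np


def mae(y_true, y_pred) -> float:
    """Mean absolute error in years."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.abs(y_true - y_pred).mean())


def cumulative_score(y_true, y_pred, alpha: float) -> float:
    """Percentage of documents whose absolute error is at most `alpha` years."""
    err = np.abs(np.asarray(y_true, float) - np.asarray(y_pred, float))
    return float(100.0 * (err <= alpha).mean())
```

For ground truths 1300, 1325, 1350, 1550 and predictions 1300, 1350, 1350, 1500, the errors are 0, 25, 0, and 50 years, giving an MAE of 18.75 years, a CS of 50% at \(\alpha = 0\), and a CS of 75% at \(\alpha = 25\).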

Experiments

The MPS and FRC images were randomly split into a test and a training set, containing 10% and 90% of the data, respectively. The EAA images were split into a test and a training set of 5 and 23 images, respectively: two images each from classes 470 and 365 BCE and one image from class 198 BCE were included in the test set. To select them, the images were sorted by label, and the first images of each class were used for testing.

The models were tuned with stratified k-fold cross-validation for all data sets, as they were imbalanced. For the MPS and FRC data sets, \(k = 10\). Since the training set of the EAA data set contained only four images from 198 BCE, \(k = 4\) for this set. To avoid tuning the models to the specific validation sets of a single k-fold partition, hyper-parameters were selected using the mean cross-validation results across six random seeds, ranging from 0 to 250 in steps of 50. The set of values considered for the hyper-parameters was \(2^n, n = -7, -6, -5,\ldots , 10\). During this process, the augmented versions of images in the validation and test sets were excluded from training.
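The tuning loop described above can be sketched with scikit-learn. The sketch assumes that the SVM regularization constant C is the tuned hyper-parameter, that mean validation accuracy is the selection criterion, and that each augmented sample records the index of the original image it was generated from (`aug_parent`). Folds are drawn over original images only, so augmented copies of a validation image never reach the training side. All names are hypothetical; `LinearSVC` uses a one-vs-rest scheme for multi-class problems by default.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold
from sklearn.svm import LinearSVC

C_GRID = [2.0 ** n for n in range(-7, 11)]  # 2^-7, ..., 2^10
SEEDS = range(0, 251, 50)                   # 0, 50, ..., 250: six seeds


def safe_folds(y_orig, aug_parent, k, seed):
    """Stratified k-fold over original images only. An augmented sample joins the
    training side only if its parent (aug_parent[j]) is a training image."""
    aug_parent = np.asarray(aug_parent)
    skf = StratifiedKFold(n_splits=k, shuffle=True, random_state=seed)
    for tr, va in skf.split(np.zeros(len(y_orig)), y_orig):
        aug_tr = np.flatnonzero(np.isin(aug_parent, tr))
        yield tr, aug_tr, va


def tune_c(X_orig, y_orig, X_aug, aug_parent, k, use_aug=True):
    """Return the C with the best validation accuracy averaged over folds and seeds."""
    y_orig = np.asarray(y_orig)
    y_aug = y_orig[np.asarray(aug_parent)]  # copies inherit their parent's label
    scores = {c: [] for c in C_GRID}
    for seed in SEEDS:
        for tr, aug_tr, va in safe_folds(y_orig, aug_parent, k, seed):
            X_tr, y_tr = X_orig[tr], y_orig[tr]
            if use_aug:  # augmented condition: originals plus their morphed copies
                X_tr = np.vstack([X_tr, X_aug[aug_tr]])
                y_tr = np.concatenate([y_tr, y_aug[aug_tr]])
            for c in C_GRID:
                clf = LinearSVC(C=c, max_iter=10000).fit(X_tr, y_tr)
                # Validation uses original (non-augmented) images only.
                scores[c].append(clf.score(X_orig[va], y_orig[va]))
    return max(C_GRID, key=lambda c: np.mean(scores[c]))
```

Setting `use_aug=False` gives the non-augmented condition with the same folds, so both conditions are compared on identical validation images.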

Models were trained in two conditions. In the non-augmented condition, only non-augmented images were used, and in the augmented condition, both augmented and non-augmented images were used for training.

Codebook Different sub-codebook sizes can result in different model performances. Hence, various sub-codebook sizes were tested to obtain the optimal size for the Junclets feature. A codebook’s size is its total number of nodes, i.e., \(n_{\textrm{columns}} \cdot n_{\textrm{rows}}\). The full codebook D is the concatenation of the sub-codebooks \(D_t\), and thus, its size will be \({\text {size}}_{D_t} \cdot n_{\textrm{classes}}\). We considered the set of sub-codebook sizes \(s = \{25, 100, 225, 400, 625, 900\}\) with \(n_{\textrm{columns}} = n_{\textrm{rows}}\). These conditions were the same for all data sets. Since different codebook sizes result in different features, the sub-codebook sizes were determined based on the validation results of models trained on only non-augmented images.

The code used for the experiments and the SOTM is publicly available.

Results

Five textural and one grapheme-based feature were used to explore the effects of data augmentation on the style-based dating of historical manuscripts. Linear SVMs were trained in ‘non-augmented’ and ‘augmented’ conditions and tuned using tenfold (MPS and FRC) and fourfold (EAA) cross-validation. The models were tested on a hold-out set containing only non-augmented data. The test set of the MPS and FRC data sets contained 10% of the data, and that of the EAA dataset contained 17.8% (5 images) of the data.

The models were evaluated with the MAE and CS with \(\alpha = 0\) years (i.e., accuracy). In addition, the MPS data set was evaluated with CS with \(\alpha = 25\) years.

Sub-codebook Size

An optimal sub-codebook size needed to be selected before investigating Junclets. Results of k-fold cross-validation for sub-codebook sizes 25, 100, 225, 400, 625, and 900 were evaluated on non-augmented data. For the FRC data set, no graphemes could be extracted from a total of 395 images, of which 96, 138, 126, and 35 belonged to the 1305, 1311, 1319, and 1326 categories, respectively. Hence, the models for Junclets could only be trained on 394 images.

MPS

Figures 8 and 9 show the MAE and CS for the MPS data set over sub-codebook size, respectively. The MAE reaches its minimum at a sub-codebook size of 625, and the CS with \(\alpha = 25\) and \(\alpha = 0\) years both peak at this size. Therefore, Junclets features were obtained with sub-codebooks of size 625 on the MPS data.

Fig. 8 MAE over sub-codebook size on non-augmented MPS data from tenfold cross-validation. Also presented in Koopmans et al. [3].

Fig. 9 CS with \(\alpha = 25\) and \(\alpha = 0\) years over sub-codebook size on non-augmented MPS data from tenfold cross-validation. Also presented in Koopmans et al. [3].

Fig. 10 MAE over sub-codebook size on non-augmented EAA data from fourfold cross-validation. Also presented in Koopmans et al. [3].

EAA

Figure 10 displays the MAE over sub-codebook size for the EAA validation results. The MAE decreases until a sub-codebook size of 225, after which it fluctuates. The CS with \(\alpha = 0\) years shows the same pattern (Fig. 11). In addition, the standard deviations of the MAE and CS (\(\alpha = 0\)) appear smallest at this size. Hence, a sub-codebook size of 225 was chosen for the EAA data.

Fig. 11 CS with \(\alpha = 0\) years over sub-codebook size on non-augmented EAA data from fourfold cross-validation. Also presented in Koopmans et al. [3].

FRC

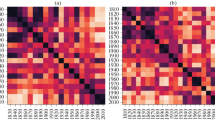

Figures 12 and 13 display the MAE and CS with \(\alpha =0\) years for the FRC data set over sub-codebook size, respectively. The MAE reaches its minimum at a sub-codebook size of 100, and the CS reaches its maximum at the same size. Consequently, Junclets features were obtained using a sub-codebook size of 100 for the FRC data set.

Augmentation

MPS

Figure 14 shows the MAE per feature in the non-augmented and augmented conditions. For all features except TCC, the MAE is lower in the augmented condition than in the non-augmented condition; for TCC, it is higher.

Fig. 14 MAE on MPS (unseen) test data across non-augmented and augmented conditions. Also presented in Koopmans et al. [3].

Figure 15 shows the CS with \(\alpha = 25\) years in the non-augmented and augmented conditions. DeltaHinge, QuadHinge, and CoHinge show an increase in the augmented condition compared to the non-augmented condition, whereas TCC and Hinge show a decrease. Junclets did not change in performance across conditions.

Fig. 15 CS with \(\alpha = 25\) years on MPS (unseen) test data across non-augmented and augmented conditions. Also presented in Koopmans et al. [3].

As displayed in Fig. 16, all features except DeltaHinge showed an increase in CS with \(\alpha = 0\) years in the augmented condition compared to the non-augmented condition. The DeltaHinge feature showed no change in performance across conditions on the test data.

Fig. 16 CS with \(\alpha = 0\) years on MPS (unseen) test data across non-augmented and augmented conditions. Also presented in Koopmans et al. [3].

These results indicate an overall increase in performance for all features except TCC. However, the changes in performance are small. This is reflected in the validation results shown in Table 4, where the differences between the non-augmented and augmented conditions appear negligible: the mean of each measure in the augmented condition falls within the range of the mean ± one standard deviation in the non-augmented condition.
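The overlap criterion used for the validation results can be written as a one-line check. The sketch below encodes two readings of it, both assumptions about the exact rule: whether the augmented mean lies within one standard deviation of the non-augmented mean, and whether the two mean ± standard deviation ranges intersect. It is a descriptive heuristic, not a statistical test, so it need not agree with the ANOVA results in the “Significance” section. The function names and example values are hypothetical.

```python
def within_one_std(mean_aug: float, mean_base: float, std_base: float) -> bool:
    """True if the augmented mean lies inside mean_base ± std_base."""
    if std_base < 0:
        raise ValueError("standard deviation must be non-negative")
    return mean_base - std_base <= mean_aug <= mean_base + std_base


def ranges_overlap(mean_a: float, std_a: float, mean_b: float, std_b: float) -> bool:
    """True if the intervals mean_a ± std_a and mean_b ± std_b intersect."""
    if std_a < 0 or std_b < 0:
        raise ValueError("standard deviations must be non-negative")
    return abs(mean_a - mean_b) <= std_a + std_b


if __name__ == "__main__":
    # Hypothetical validation MAEs (mean, std) for two conditions.
    print(within_one_std(10.2, 10.0, 0.5))       # True
    print(ranges_overlap(10.0, 0.2, 11.0, 0.3))  # False
```

The second reading is the more lenient one: it treats a change as negligible whenever the spread of either condition reaches the other, which is why increasing standard deviations (as later observed for the EAA data) make differences harder to distinguish.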

EAA Collections

Figures 17 and 18 show the MAE and CS with \(\alpha = 0\) years across all features for the EAA data set. Junclets performed better in the augmented condition than in the non-augmented condition, as indicated by a lower MAE and a higher accuracy. QuadHinge also improved, as indicated by a lower MAE in the augmented condition. TCC, DeltaHinge, and Hinge performed worse, as indicated by a higher MAE and a lower accuracy. CoHinge showed no change across conditions on the test set.

Fig. 17 MAE on EAA (unseen) test data across non-augmented and augmented conditions. Also presented in Koopmans et al. [3].

Fig. 18 CS with \(\alpha = 0\) years on EAA (unseen) test data across non-augmented and augmented conditions. Also presented in Koopmans et al. [3].

The validation results (Table 5) do not reflect these test results: Junclets and TCC showed a decrease in performance in the augmented condition compared to the non-augmented condition, with an increase in mean MAE and a decrease in mean accuracy, whereas DeltaHinge, QuadHinge, CoHinge, and Hinge showed the opposite. Additionally, the standard deviations increased substantially in the augmented condition compared to the non-augmented condition.

FRC

Figures 19 and 20 show the MAE and CS with \(\alpha = 0\) years across all features for the FRC data set. In contrast to the MPS and EAA data sets, these results show a decrease in model performance for augmented images across all features except Junclets. The Junclets feature showed an increase in performance, as indicated by a decrease in test MAE and an increase in test CS with \(\alpha = 0\) years.

The validation results (Table 6) are largely in line with the test results, except for the CoHinge feature, which showed an overall increase in performance in validation. However, this increase, along with that of Junclets, appears negligible, since the ranges given by the measures’ means and corresponding standard deviations overlap across conditions.

Significance

Statistical tests (ANOVA [34]) were performed to assess whether the differences between the augmented and non-augmented conditions were statistically significant for each feature.
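For readers who want to reproduce this kind of comparison, the following is a minimal sketch of a classical one-way ANOVA F statistic, with, for example, the per-fold validation scores of the two conditions as the groups. Note that [34] describes an ANOVA test for functional data, which this sketch does not reproduce, and the grouping by fold is an assumption about how the test could be applied. The sketch returns only F and its degrees of freedom; SciPy’s `scipy.stats.f_oneway` computes the same F statistic together with a p-value. The function name and example scores are hypothetical.

```python
from statistics import fmean
from typing import Sequence, Tuple


def one_way_anova_f(*groups: Sequence[float]) -> Tuple[float, int, int]:
    """Classical one-way ANOVA: return (F, df_between, df_within)."""
    if len(groups) < 2 or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty groups")
    means = [fmean(g) for g in groups]
    n_total = sum(len(g) for g in groups)
    grand_mean = fmean([x for g in groups for x in g])
    # Between-group and within-group sums of squares.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    if df_within == 0 or ss_within == 0:
        raise ValueError("within-group variance is zero or undefined")
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


if __name__ == "__main__":
    # Hypothetical per-fold scores for two conditions, for illustration only.
    non_augmented = [1.0, 2.0, 3.0]
    augmented = [4.0, 5.0, 6.0]
    print(one_way_anova_f(non_augmented, augmented))  # (13.5, 1, 4)
```

With two groups, F equals the square of the pooled two-sample t statistic, so this test and a t-test lead to the same conclusion in the two-condition setting.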

MPS For the MPS data, the results from Junclets and DeltaHinge features were statistically significant for both MAE and CS, with p values much smaller than 0.005.

EAA The results on the EAA data did not show any significance for any of the feature extraction techniques.

FRC The results on the FRC data set showed a statistically significant difference (\(p < 0.0005\)) between the augmented and non-augmented conditions for the accuracy of the Hinge feature and the MAE of the CoHinge feature. As noted above, the Hinge feature showed a decrease in model performance, while CoHinge showed an increase. The remaining features did not show statistically significant differences.

Discussion

The current research explored how the style-based dating of historical manuscripts is affected by character-level data augmentation, using images from the MPS, FRC, and EAA collections. Images were binarized and augmented through elastic morphing [26]. Linear SVMs were trained on five textural features and one grapheme-based feature. Experiments were conducted to determine optimal sub-codebook sizes for the grapheme-based Junclets feature, as it is obtained by mapping extracted junctions to codebooks trained with the SOTM [31]. The SVMs were then trained on non-augmented images only and on both non-augmented and augmented images, and evaluated using the MAE and CS with \(\alpha\)-values of 0 and 25 years.

Key Findings

MPS

On the MPS test data, linear SVMs trained in the augmented condition showed an overall increase in performance compared to the non-augmented condition for all features except TCC, which showed a decrease. The changes in validation results were statistically significant for Junclets and DeltaHinge. For the remaining features, the changes in validation results were not significant, with the means and standard-deviation ranges overlapping across conditions.

The MPS images require a large amount of computer memory, and consequently, computing the features and training the models is time-consuming. In particular, obtaining the Junclets features required several days. Hence, we generated only three augmented images per MPS image. Had more images been generated, the results might have given a clearer or different picture of the effects of data augmentation on the style-based dating of historical manuscripts.

Another possible explanation for the small changes in performance noted above is that the MPS images were augmented before binarization. Images were augmented using the Imagemorph program, which applies a Gaussian filter over local transformations. Applying it before binarization increases the influence of the image’s background and, consequently, leads to less severe distortions. The distortions in the MPS images were noticeable, yet they might have been too light to produce samples with natural within-writer variability. Whether this significantly affected the results is uncertain and should be investigated in future work.
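To make the ordering question concrete, the following is a minimal, self-contained sketch of elastic morphing in the spirit of the description above: a random per-pixel displacement field is smoothed with a Gaussian filter, scaled by an amplitude, and used to resample the image with bilinear interpolation. It is not the Imagemorph program itself; the parameter names (`amplitude`, `sigma`), the uniform noise, the edge clamping, and the fixed 0.5 global threshold are assumptions for illustration. Images are lists of rows of intensities in [0, 1], with 0 as ink.

```python
import math
import random
from typing import List

Image = List[List[float]]  # rows of intensities in [0, 1]; 0 = ink, 1 = background


def gaussian_kernel(sigma: float) -> List[float]:
    """Normalized 1-D Gaussian kernel truncated at about 3 sigma."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def smooth(field: Image, kernel: List[float]) -> Image:
    """Separable Gaussian blur with edge-replicated borders."""
    h, w, r = len(field), len(field[0]), len(kernel) // 2
    tmp = [[sum(kv * field[y][min(max(x + k - r, 0), w - 1)] for k, kv in enumerate(kernel))
            for x in range(w)] for y in range(h)]
    return [[sum(kv * tmp[min(max(y + k - r, 0), h - 1)][x] for k, kv in enumerate(kernel))
             for x in range(w)] for y in range(h)]


def bilinear(img: Image, y: float, x: float) -> float:
    """Sample img at a real-valued position, clamped to the image area."""
    h, w = len(img), len(img[0])
    y, x = min(max(y, 0.0), h - 1.0), min(max(x, 0.0), w - 1.0)
    y0, x0 = int(y), int(x)
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    fy, fx = y - y0, x - x0
    top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
    bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def elastic_morph(img: Image, amplitude: float, sigma: float, seed: int = 0) -> Image:
    """Resample img along a Gaussian-smoothed random displacement field."""
    rng = random.Random(seed)
    h, w = len(img), len(img[0])
    kernel = gaussian_kernel(sigma)
    dx = smooth([[rng.uniform(-1, 1) for _ in range(w)] for _ in range(h)], kernel)
    dy = smooth([[rng.uniform(-1, 1) for _ in range(w)] for _ in range(h)], kernel)
    return [[bilinear(img, y + amplitude * dy[y][x], x + amplitude * dx[y][x])
             for x in range(w)] for y in range(h)]


def binarize(img: Image, threshold: float = 0.5) -> Image:
    """Global threshold keeping the polarity: 0.0 = ink, 1.0 = background."""
    return [[0.0 if v < threshold else 1.0 for v in row] for row in img]
```

The two orders discussed in the text correspond to `binarize(elastic_morph(img, a, s))` (augment, then binarize, as for MPS) and `elastic_morph(binarize(img), a, s)` (binarize, then augment, as for EAA and FRC). In the second order, bilinear interpolation introduces intermediate grey values along stroke borders, so the result may need to be thresholded again.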

EAA Collections

Test results of models trained on EAA images showed increased performance in the augmented condition compared to the non-augmented condition for Junclets and QuadHinge. Models for TCC, DeltaHinge, and Hinge showed a decrease, and CoHinge showed no change in performance. This is not reflected in the validation results (Table 5). Instead, these results show a decreased performance for Junclets and TCC in the augmented condition compared to the non-augmented condition, and an increase in performance for all other features.

A possible explanation for these results is the increase in standard deviations across all features for models trained in the augmented condition compared to those trained in the non-augmented condition. This suggests that the models were less robust to new data in the augmented condition, which may have led to diverging test results. Moreover, due to the small size of the data set, overfitting likely occurred, as suggested by the differences between test and validation results within the conditions (e.g., QuadHinge).

The models trained on EAA data could have been less robust in the augmented condition because the extracted features may follow nonlinear patterns, whereas the SVMs used linear kernels. Linear SVMs worked well on the MPS images, which are written in the Roman script; the EAA data, however, are written in Hebrew. Moreover, handwriting in different geographical locations might change at different rates. Data augmentation could have emphasized nonlinear patterns in the Hebrew data, making the linear SVMs too rigid.

FRC

All textural features showed a decrease in performance on the test data, while the grapheme-based Junclets feature showed an increase. These findings agree with the validation results, except for CoHinge. CoHinge showed a statistically significant difference in MAE between the non-augmented and augmented conditions, indicating that the increase in performance observed in its validation results was significant. In contrast, the Hinge feature showed a decrease in performance and a statistically significant difference in accuracy between the conditions.

Although the MPS and FRC data sets are both written in the Roman script, their results point in opposite directions: the results on the MPS data set showed an overall increase in model performance when images were augmented, whereas those on the FRC images showed an overall decrease. One of the main differences between these data sets lies in the pre-processing. As with the EAA images, FRC images were augmented after binarization. Additionally, the FRC images were binarized using two different methods, of which the adaptive thresholding method often resulted in thinned or broken characters. The ‘n’ and ‘m’ characters, in particular, were often disrupted.

Another possible cause for the decrease in the performance of models trained on augmented FRC data is that the augmentation method might introduce too much variety within time periods. While the elastic morphing algorithm is designed to mimic within-writer variability, the relatively low quality of some of the binarized images might have caused too much distortion on the textural level.

Future Research

Characteristics differ between scripts, possibly leading to differing distributions of extracted features. Similarly, different attributes of handwriting are captured by individual features. Consequently, features might follow differing temporal trends (nonlinear or linear). The current research only used linear models and did not consider the differences between both features and scripts. This could have led to a decrease in model performance for augmented data. Therefore, nonlinear models should be studied to optimize performance on individual features and scripts.

Data sets often do not contain equal numbers of manuscripts or writing samples for each time period. The current study did not address data balancing, for which data augmentation is also a common approach. Rather than generating the same number of samples for each image, choosing the number of samples to generate with a focus on data balancing could lead to fewer misclassifications of minority classes and thereby improve overall model performance.
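As an illustration of what augmentation with a focus on data balancing could look like, the sketch below tops up every class to a target size (by default, the size of the largest class) and spreads the required augmented copies as evenly as possible over that class’s original images. This policy, the function name, and the class sizes in the example are assumptions for illustration; the policy was not tested in this study.

```python
from typing import Dict, List, Optional


def balancing_plan(class_sizes: Dict[str, int], target: Optional[int] = None) -> Dict[str, List[int]]:
    """Number of augmented copies to generate for each original image, per class.

    Every class is topped up to `target` samples (default: the largest class),
    spreading the required copies as evenly as possible over its originals.
    """
    if not class_sizes or any(n <= 0 for n in class_sizes.values()):
        raise ValueError("every class needs at least one original image")
    if target is None:
        target = max(class_sizes.values())
    plan = {}
    for label, n in class_sizes.items():
        needed = max(target - n, 0)
        base, extra = divmod(needed, n)
        # The first `extra` originals receive one additional copy.
        plan[label] = [base + 1 if i < extra else base for i in range(n)]
    return plan


if __name__ == "__main__":
    # Hypothetical class sizes, for illustration only.
    print(balancing_plan({"A": 2, "B": 5, "C": 3}))
    # {'A': [2, 1], 'B': [0, 0, 0, 0, 0], 'C': [1, 1, 0]}
```

Spreading copies evenly keeps any single original from dominating its class, which matters for the single-writer concern raised next; a larger `target` also augments the majority class.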

Some data sets intended for historical manuscript dating contain classes in which most samples were produced by a single writer. This can pose an issue, as models trained on such data risk learning to distinguish writer-specific traits in handwriting rather than traits representative of a particular period or year. Hence, it is important to simulate variability between writers and within time periods when augmenting data. This might lead to more robust models than simulating realistic within-writer variability alone.

In the “Related works” section, we presented previous research showing that deep learning approaches outperformed statistical features on the MPS data set. Moreover, a fusion of statistical features and deep learning approaches has been shown to improve performance [23]. It would be interesting to investigate whether data augmentation might positively affect historical manuscript dating using these methods on smaller and more heavily degraded documents, such as the EAA collections. Additionally, applying such approaches to individual characters, similar to grapheme-based statistical features, might bypass the issues of limited data and loss of information due to image resizing.

Data availability

The data sets used in this article are publicly available from their original websites and/or the Zenodo repository. All relevant links are given in footnotes 1, 3, 4, 5, and 6; for the repository, the DOI is provided.

Notes

French Royal Chancery, Paris, Archives nationales de France, JJ35-JJ211, shared with us in the HIMANIS project (https://eadh.org/projects/himanis) [4].

References

He S, Samara P, Burgers J, Schomaker L. Towards style-based dating of historical documents. In: 14th International conference on frontiers in handwriting recognition. IEEE; 2014. https://doi.org/10.1109/ICFHR.2014.52.

Bulacu ML, Schomaker LRB. Text-independent writer identification and verification using textural and allographic features. IEEE Trans Pattern Anal Mach Intell. 2007;29(4):701–17. https://doi.org/10.1109/TPAMI.2007.1009.

Koopmans L, Dhali M, Schomaker L. The effects of character-level data augmentation on style-based dating of historical manuscripts. In: Proceedings of the 12th international conference on pattern recognition applications and methods—ICPRAM, vol 1. 2023, pp. 124–35. https://doi.org/10.5220/0011699500003411 (SciTePress).

Stutzmann D, Moufflet J-F, Hamel S. La recherche en plein texte dans les sources manuscrites médiévales : enjeux et perspectives du projet HIMANIS pour l’édition électronique. Médiévales. 2017;73(73):67–96. https://doi.org/10.4000/medievales.8198.

Bulacu M, Brink A, Van Der Zant T, Schomaker L. Recognition of handwritten numerical fields in a large single-writer historical collection. In: 2009 10th International conference on document analysis and recognition. IEEE; 2009, pp. 808–812.

He S, Schomaker L, Samara P, Burgers J. MPS data set with images of medieval charters for handwriting-style based dating of manuscripts. https://doi.org/10.5281/zenodo.1194357.

Shor P, Manfredi M, Bearman GH, Marengo E, Boydston K, Christens-Barry WA. The leon levy dead sea scrolls digital library: the digitization project of the dead sea scrolls. J East Mediterr Archaeol Herit Stud. 2014;2(2):71–89. https://doi.org/10.5325/jeasmedarcherstu.2.2.0071.

He S, Schomaker L. Co-occurrence features for writer identification. In: Proceedings of international conference on frontiers in handwriting recognition, ICFHR. Institute of Electrical and Electronics Engineers Inc; 2017, pp. 78–83. https://doi.org/10.1109/ICFHR.2016.0027.

He S, Schomaker L. Writer identification using curvature-free features. Pattern Recognit. 2017;63:451–64. https://doi.org/10.1016/j.patcog.2016.09.044.

Siddiqi I, Vincent N. Text independent writer recognition using redundant writing patterns with contour-based orientation and curvature features. Pattern Recognit. 2010;43(11):3853–65. https://doi.org/10.1016/j.patcog.2010.05.019.

Hamid A, Bibi M, Siddiqi I, Moetesum M. Historical manuscript dating using textural measures. In: 2018 International conference on frontiers of information technology (FIT). 2018, pp. 235–240. https://doi.org/10.1109/FIT.2018.00048.

Fogel I, Sagi D. Gabor filters as texture discriminator. Biol Cybern. 1989;61(2):103–13. https://doi.org/10.1007/BF00204594.

Heikkilä M, Pietikäinen M, Schmid C. Description of interest regions with local binary patterns. Pattern Recognit. 2009;42(3):425–36. https://doi.org/10.1016/j.patcog.2008.08.014.

Haralick RM, Shanmugam K, Dinstein I. Textural features for image classification. IEEE Trans Syst Man Cybernet SMC. 1973;3(6):610–21. https://doi.org/10.1109/TSMC.1973.4309314.

Schomaker L, Bulacu M. Automatic writer identification using connected-component contours and edge-based features of uppercase western script. IEEE Trans Pattern Anal Mach Intell. 2004;26(6):787–98.

He S, Samara P, Burgers J, Schomaker L. Image-based historical manuscript dating using contour and stroke fragments. Pattern Recognit. 2016;58:159–71. https://doi.org/10.1016/j.patcog.2016.03.032.

He S, Wiering M, Schomaker L. Junction detection in handwritten documents and its application to writer identification. Pattern Recognit. 2015;48(12):4036–48. https://doi.org/10.1016/j.patcog.2015.05.022.

He S, Samara P, Burgers J, Schomaker L. Historical manuscript dating based on temporal pattern codebook. Comput Vis Image Underst. 2016;152:167–75. https://doi.org/10.1016/j.cviu.2016.08.008.

He S, Schomaker L. Beyond OCR: multi-faceted understanding of handwritten document characteristics. Pattern Recognit. 2017;63:321–33. https://doi.org/10.1016/j.patcog.2016.09.017.

Dhali MA, Jansen CN, de Wit JW, Schomaker L. Feature-extraction methods for historical manuscript dating based on writing style development. Pattern Recognit Lett. 2020;131:413–20. https://doi.org/10.1016/j.patrec.2020.01.027.

Wahlberg F, Wilkinson T, Brun A. Historical manuscript production date estimation using deep convolutional neural networks. In: 2016 15th International conference on frontiers in handwriting recognition (ICFHR). 2016, pp. 205–210. https://doi.org/10.1109/ICFHR.2016.0048.

Hamid A, Bibi M, Moetesum M, Siddiqi I. Deep learning based approach for historical manuscript dating. In: 2019 International conference on document analysis and recognition (ICDAR). 2019, pp. 967–972. https://doi.org/10.1109/ICDAR.2019.00159.

Adam K, Al-ma’adeed S, Akbari Y. Hierarchical fusion using subsets of multi-features for historical Arabic manuscript dating. J Imaging. 2022;8(3):60. https://doi.org/10.3390/jimaging8030060.

He S, Samara P, Burgers J, Schomaker L. A multiple-label guided clustering algorithm for historical document dating and localization. IEEE Trans Image Process. 2016;25(11):5252–65. https://doi.org/10.1109/TIP.2016.2602078.

Schomaker L. Monk-search and annotation tools for handwritten manuscripts. 2023. http://monk.hpc.rug.nl/. Accessed 08 July 2023.

Bulacu M, Brink A, Van Der Zant T, Schomaker L. Recognition of handwritten numerical fields in a large single-writer historical collection. In: 2009 10th International conference on document analysis and recognition (ICDAR). 2009, pp. 808–812. https://doi.org/10.1109/ICDAR.2009.8.

Dhali M, Wit J, Schomaker L. Binet: degraded-manuscript binarization in diverse document textures and layouts using deep encoder–decoder networks. arXiv; 2019.

Otsu N. A threshold selection method from gray-level histograms. IEEE Trans Syst Man Cybern. 1979;9(1):62–6. https://doi.org/10.1109/TSMC.1979.4310076.

He S, Schomaker L. Delta-n hinge: rotation-invariant features for writer identification. In: 22nd International conference on pattern recognition (ICPR). IEEE (The Institute of Electrical and Electronics Engineers); 2014, pp. 2023–2028. https://doi.org/10.1109/ICPR.2014.353.

Kohonen T. The self-organizing map. Proc IEEE. 1990;78(9):1464–80. https://doi.org/10.1109/5.58325.

Sarlin P. Self-organizing time map: an abstraction of temporal multivariate patterns. Neurocomputing. 2013;99:496–508. https://doi.org/10.1016/j.neucom.2012.07.011.

Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Blondel M, Prettenhofer P, Weiss R, Dubourg V, Vanderplas J, Passos A, Cournapeau D, Brucher M, Perrot M, Duchesnay E. Scikit-learn: machine learning in Python. J Mach Learn Res. 2011;12:2825–30.

Geng X, Zhou Z-H, Smith-Miles K. Automatic age estimation based on facial aging patterns. IEEE Trans Pattern Anal Mach Intell. 2007;29(12):2234–40. https://doi.org/10.1109/TPAMI.2007.70733.

Cuevas A, Febrero M, Fraiman R. An ANOVA test for functional data. Comput Stat Data Anal. 2004;47(1):111–22.

Acknowledgements

The study for this article draws on several research outcomes from the European Research Council (EU Horizon 2020) project: The Hands that Wrote the Bible: Digital Palaeography and Scribal Culture of the Dead Sea Scrolls (HandsandBible 640497), principal investigator: Mladen Popović. Furthermore, for the high-resolution, multi-spectral images of the Dead Sea Scrolls, we are grateful to the Israel Antiquities Authority (IAA), courtesy of the Leon Levy Dead Sea Scrolls Digital Library; photographer: Shai Halevi. Additionally, we express our gratitude to the Bodleian Libraries, the University of Oxford, the Khalili collections, and the Staatliche Museen zu Berlin (photographer: Sandra Steib) for the early Aramaic images. We also thank Petros Samara for collecting the Medieval Paleographical Scale (MPS) dataset for the Dutch NWO project. For the inventories of the French Royal Chancery (FRC) registers, we thank the HIMANIS project (HIstorical MANuscript Indexing for user-controlled Search); photography credits: Paris, Archives Nationales. Finally, we thank the Center for Information Technology of the University of Groningen for their support and for providing access to the Peregrine high-performance computing cluster.


Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article is part of the topical collection “Recent Trends on Pattern Recognition Applications and Methods”. Guest edited by Ana Fred, Maria De Marsico and Gabriella Sanniti di Baja.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Koopmans, L., Dhali, M.A. & Schomaker, L. Performance Analysis of Handwritten Text Augmentation on Style-Based Dating of Historical Documents. SN COMPUT. SCI. 5, 397 (2024). https://doi.org/10.1007/s42979-024-02688-6


DOI: https://doi.org/10.1007/s42979-024-02688-6