Abstract

The analysis of medical data offers national health systems worldwide a significant opportunity to reduce costs while improving healthcare. Digital health technologies are used to monitor health issues, track health goals and record medical data. In this context, the early detection of users at risk of lower compliance, and of usage patterns of a health monitoring application that suggest a risk of abandonment, is an invaluable opportunity to implement tailored intervention strategies aimed at re-engaging users and preventing abandonment. This study aims to identify patterns of early dropout among users of a mobile intervention application, drawing on a database of users who had used a digital monitoring application to improve their quality of life for at least 6 months. In the experimental stage, many different approaches to early dropout prediction were implemented with different feature sets. Specifically, the study proposes a methodology that combines the Neighborhood Cleaning Rule with a classification algorithm based on the Stacked Generalization learning method to predict early abandonment of the health monitoring application. The results showed that the proposed algorithm predicted early dropout from the application with an accuracy of 97.6%, making it reliable enough to be used as an early warning system.


Introduction

Recent advances in telecommunication technologies, characterized by their robustness and reliability, have contributed significantly to progress in medicine. In a field as information-intensive and knowledge-demanding as medicine, e-Health solutions are clearly crucial [1]. The World Health Organization (WHO) has defined e-Health as “the efficient and secure use of information and communication technologies to support health and health-related fields, including healthcare, health monitoring and education, knowledge and research”. In a broader sense, the term describes not only a technical development but also a way of thinking: improving healthcare worldwide through information and communication technology.

Continuous developments in science and technology have made raw data available at an explosive rate. This has created a huge opportunity for knowledge discovery, with machine learning playing an essential role in applications ranging from citizens’ daily lives to the monitoring of their health. Hence the need to extract knowledge from raw data to support decision-making. New technologies could make a significant contribution to transforming the healthcare industry, but they face several significant challenges: regulatory barriers, difficulties in digitizing patient data and financial constraints all stand in the way of an immediate and effective transformation of the healthcare sector.

The aging of the global population should also be considered, since improved living conditions, together with better education and easier access to health services, are increasing life expectancy. Digital technologies could play a central role in this necessary change. Patients, for their part, are investing in prevention through the increased use of digital health applications, which they use to monitor health issues, track health and fitness goals and record medical data.

In this way, patients gain more control over the management of their health, and for patients with chronic diseases in particular, continuous monitoring of critical data can be lifesaving [2]. Digitizing healthcare interventions has the potential to relieve the burden that population aging and chronic diseases place on health systems. Remote patient monitoring, also known as telemonitoring, provides health services through technologies that transmit information electronically between patients and doctors, allowing patients to be monitored outside hospital facilities. However, such solutions require high levels of compliance to be effective, and, as with any application, users often abandon them for a variety of reasons. In this context, the early detection of users at risk of lower compliance, and of usage patterns that suggest a risk of abandonment, is an invaluable opportunity to implement tailored intervention strategies aimed at re-engaging users and preventing abandonment.

For this reason, the aim of this research was to identify early abandonment patterns among users of a mobile intervention application called Active and Healthy Aging (AHA). User acceptance has traditionally been assessed with static methodologies, so medium- and long-term acceptance and commitment have not been systematically analyzed. To build an alert system for the application, therefore, the profiles of users at increased risk of early dropout must first be identified.

The problem is defined as follows: given a window of 12 consecutive scheduled data acquisition moments, predict the user’s compliance during the next three scheduled moments. Data acquisition follows a well-defined protocol of regularly scheduled times at which participants must feed data to the study; the valid frequency of data acquisition was set at twice per week for each periodic activity. Each scheduled data acquisition may include the actual measurement of one or more recurring activities (brain games, physical activity, finger tapping, mindfulness), regardless of the participant’s success or failure. Some contextual information, such as the date, is also provided. Consecutive data acquisitions are therefore planned according to the remote monitoring protocol for time instances i = 1, …, n over the period in which the user uses the application.

This study addresses the early dropout problem by applying multiple classification algorithms to a dataset of more than 300 users of the MAHA (Moving Active & Healthy Aging) network in Madrid who had used a digital application to improve their quality of life for at least 6 months. It also proposes a new methodology that uses the Neighborhood Cleaning Rule (NCL) to tackle class imbalance [3], and presents a classification algorithm based on the stacked generalization learning method, designed to achieve the best possible predictive accuracy for early dropout from the health monitoring application.

As shown in Fig. 1, the proposed system consists of two major stages. The first stage collects and transforms the incoming data and creates new features. The second stage takes the preprocessed data as input and predicts low or high user adherence. “Related Work” reviews prior research, and the components of the system are explained in detail in “Methodology”. “Adherence Classification” describes the proposed classifier, and “Results” and “Conclusions” present the results and conclusions, respectively.

Related Work

Patient monitoring outside the hospital has attracted many researchers and healthcare practitioners hoping to reduce medical costs. Since the early years of research, home-based monitoring has been shown to be promising for decreasing emergency room visits and hospitalizations compared with traditional healthcare [4].

Over time, technological progress has also led many researchers to use smartphones for monitoring elderly patients. An early system is described in [5] that uses a cell-phone application with GPS, an accelerometer and a light sensor for remote monitoring.

Homecare, the application of telecare in the home environment, has evolved greatly for both financial and medical reasons. For home-based care specifically, an integrated smartwatch-based home care system was studied in 2015 that incorporates communication and health monitoring features, such as biosignal monitoring and reminder notifications for treatments following physician-defined schedules [6]. The study highlights good practices for designing user interfaces for mobile devices.

A similar smartphone-based approach was presented in [7], which describes Wanda-CVD, a remote health monitoring (RHM) system that provides wireless coaching through feedback and prompts as social support, in order to reduce the risk of cardiovascular disease (CVD). Using features such as activity, blood pressure and questionnaire responses transmitted through the smartphone, the system predicted successful and unsuccessful participants with an F-measure of 91.9%.

The study presented in [8] used an eHealth monitoring platform to gain insight into why patients with chronic lifestyle diseases drop out, and to evaluate whether attrition can be predicted and thus prevented. The results showed that dropout from eHealth lifestyle interventions can be predicted with various data mining methods, with Random Forest being the best-performing predictive model.

In [9], the usability of “Heart Around”, an integrated homecare platform providing communication, health monitoring and emergency response services, was evaluated. The System Usability Scale (SUS) was applied to obtain an initial high-level assessment of the system’s usability. The study stressed the importance of incorporating communication and general social networking features in homecare systems, as well as the significance of the user’s connection to their personal doctor and caregivers. It also showed that adoption of a homecare system, and regular interaction with it, can be encouraged by incorporating features that are either enjoyable or useful on a daily basis.

Finally, in [10] an innovative self-management mobile app was designed to reduce the risk of developing type 2 diabetes and its complications, such as cardiovascular disease. Movement data transmitted over Bluetooth from wearable insole devices are used to track crucial factors such as medication adherence and physical activity. Support vector machine (SVM) and decision tree models were built to distinguish sitting from standing, achieving 86% accuracy.

Methodology

Data Preprocessing Stage

A raw dataset was generated for developing predictive models. The data were extracted from the non-relational database of a mobile intervention application. The database contains a set of tables with various application metrics and demographic data, which vary between users. Before cleaning the data for a machine learning problem, it is vital to understand both the data and the goal to be achieved; without this understanding, there is no basis for decisions when refining and preparing the data. In the preprocessing stage, the various datasets used in this work (EQ5D3L, SPQ, Sociodemo, UCLA, UTAUT, brain games, fingertapping, physical activity, digital phenotyping, mindfulness) were each processed to address the specific problems they presented.

Since most machine learning algorithms accept only numeric inputs, the first preprocessing step was to encode categorical variables as numbers. The ordinal categorical values found in the various data tables were encoded as integers using ordinal encoding [11], in which each category is assigned an integer, provided that the number of existing categories is known. For nominal categorical features, such as the type of electronic device used (e.g., tablet, mobile), one-hot encoding was used. This method represents each category as a binary variable, with a value of 1 indicating the presence of the category and 0 its absence.
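A minimal sketch of the two encodings with pandas. The column names (`education`, `device`) and the education order are hypothetical; the study's actual categorical fields are not listed in the text.

```python
import pandas as pd

# Hypothetical records; column names and category values are illustrative only.
df = pd.DataFrame({
    "education": ["primary", "secondary", "university", "secondary"],
    "device": ["mobile", "tablet", "mobile", "tablet"],
})

# Ordinal encoding: the categories have a known order, so each gets its integer rank.
education_order = ["primary", "secondary", "university"]
rank = {level: i for i, level in enumerate(education_order)}
df["education"] = df["education"].map(rank)

# One-hot encoding: one 0/1 column per device type, since devices have no natural order.
df = pd.get_dummies(df, columns=["device"], dtype=int)
print(df)
```

Ordinal codes preserve the ranking between levels, whereas one-hot columns avoid implying an order that nominal categories do not have.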

An important preprocessing step concerned the group to which each user belonged. Each participant was assigned to a use case according to their physical and cognitive condition at registration. The UC6 and UC3 categories include elderly people without physical or cognitive problems, UC5 includes users with physical disabilities, and UC7 includes people at risk of isolation. Because UC6 and UC3 describe the same population, they were represented by the same integer during preprocessing, without any loss of information.
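A small sketch of the use-case encoding. The integer values are hypothetical; the text states only that UC3 and UC6 share one code.

```python
# Hypothetical integer codes: only the UC3/UC6 merge comes from the study.
USE_CASE_CODES = {"UC3": 0, "UC6": 0, "UC5": 1, "UC7": 2}


def encode_use_case(label: str) -> int:
    """Map a use-case label to its integer code; UC3 and UC6 share one value."""
    return USE_CASE_CODES[label]


codes = [encode_use_case(u) for u in ["UC6", "UC3", "UC5", "UC7"]]
```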

Preprocessing continued by renaming attributes across tables to ensure consistency. For example, in the table containing data from the application’s fingertapping game, the unique user ID column was renamed so that it had the same name in all tables. Likewise, to build the dataset and make its features easier to understand, the three questions of the self-perception questionnaire were renamed SPQ1, SPQ3 and SPQ5, respectively. Based on this understanding of the available data, only the responses to the EQ5D3L, SPQ and UCLA questionnaires given at enrollment were used, not those given during or at the end of the study, because most users lacked the later responses.

Inspection of the data also revealed problems with data types, specifically in fields containing dates. In addition, problematic activity records were found, caused either by the duration of the user interaction or by two or three recordings of the same user overlapping in time. To fix this, a record time checker was created that rejects records lasting less than five seconds, followed by a filtering rule for overlapping records that keeps the one with the longest recording time.
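The two cleaning rules can be sketched with pandas as follows. The sketch assumes each record has hypothetical `user_id`, `start` and `end` fields, and treats a chain of overlapping records as one group from which only the longest record survives; the study's actual schema and tie-handling are not described.

```python
import pandas as pd

MIN_DURATION = pd.Timedelta(seconds=5)


def clean_records(records: pd.DataFrame) -> pd.DataFrame:
    """Drop very short records, then keep only the longest of any overlapping records per user."""
    df = records.copy()
    # Date fields may arrive as strings; convert them to proper timestamps.
    df["start"] = pd.to_datetime(df["start"])
    df["end"] = pd.to_datetime(df["end"])
    df["duration"] = df["end"] - df["start"]
    # Record time checker: reject interactions shorter than five seconds.
    df = df[df["duration"] >= MIN_DURATION]

    keep = []
    for _, user_df in df.sort_values(["user_id", "start"]).groupby("user_id"):
        cluster, cluster_end = [], None  # current run of overlapping records
        for idx, row in user_df.iterrows():
            if cluster and row["start"] < cluster_end:
                cluster.append(idx)
                cluster_end = max(cluster_end, row["end"])
            else:
                if cluster:
                    keep.append(df.loc[cluster, "duration"].idxmax())
                cluster, cluster_end = [idx], row["end"]
        if cluster:
            keep.append(df.loc[cluster, "duration"].idxmax())
    return df.loc[keep].sort_values(["user_id", "start"]).reset_index(drop=True)


records = pd.DataFrame({
    "user_id": [1, 1, 1, 2],
    "start": ["2021-03-01 10:00:00", "2021-03-01 10:00:30",
              "2021-03-01 11:00:00", "2021-03-02 09:00:00"],
    "end": ["2021-03-01 10:02:00", "2021-03-01 10:01:00",
            "2021-03-01 11:00:03", "2021-03-02 09:05:00"],
})
cleaned = clean_records(records)
```

In the toy data, the 3-second record is rejected and the 30-second record is dropped in favor of the 2-minute record it overlaps.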

Feature Engineering Stage

While studying the UCLA questionnaire dataset, which corresponds to the UCLA Loneliness Scale, it was observed that all questions express the feeling of loneliness in different ways. The scale is a 20-item measure of how often a person feels disconnected from others. However, complex psychological constructs such as loneliness can be difficult to define and measure. Therefore, a new feature, level of loneliness, was created manually by summing the responses to all 20 questions and assigning each user to one of five classes (0, 1, 2, 3, 4), which made the data easier to interpret and analyze. In accordance with the study regulations, users who did not respond to the questionnaire were assigned to class 0 and excluded from the prediction process. Class 1 indicates low levels of loneliness, class 2 moderate, class 3 high and class 4 extreme levels. This approach was taken to remain consistent with the study’s guidelines and maintain the integrity of the analysis.
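A sketch of the level-of-loneliness feature. It assumes each of the 20 items is scored 1 to 4 (totals 20 to 80) with any reverse-worded items already recoded; the class cut-offs are hypothetical placeholders, since the text does not give them.

```python
from typing import Optional, Sequence

# Hypothetical inclusive upper bounds of the summed score for classes 1-3;
# totals above the last bound fall into class 4. Not taken from the study.
CUTOFFS = (34, 49, 64)


def loneliness_level(responses: Optional[Sequence[int]]) -> int:
    """Sum 20 UCLA item responses and map the total to a class 0-4."""
    if not responses:
        return 0  # no questionnaire answers: class 0, excluded from prediction
    if len(responses) != 20:
        raise ValueError("expected 20 item responses")
    total = sum(responses)
    for level, upper in enumerate(CUTOFFS, start=1):
        if total <= upper:
            return level
    return 4
```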

As the experiments showed, dimensionality reduction helped the models avoid overfitting and improved their predictive performance. To complete the first stage of data preprocessing, features that carry no information for predicting users’ early dropout were discarded, and the application users were then filtered according to the study protocols.

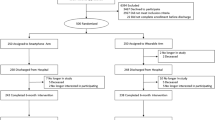

User Validation Stage and Sliding Window Algorithm

The database contained more than 300 users of the MAHA (Moving Active & Healthy Aging) network in Madrid who had used the AHA digital application to improve their quality of life for at least 6 months. However, many of these users turned out not to meet the criteria for valid participation in the study. A series of data quality and validation checks was therefore performed to confirm each user’s validity.

Validation almost always involves discarding some data before it enters a knowledge mining system. The first round of checks used the socio-demographic information provided by the users. Users whose application period was shorter than 7.5 weeks were rejected first, since each prediction instance spans 15 data acquisition moments (7.5 weeks at two moments per week). Users were then filtered by age according to the study protocols: to participate validly, each participant had to be over 65 years old.

The second round of checks used the user interaction logs produced by the preprocessing described above. Its purpose was to reject inactive users, who had never used the application. These checks significantly reduced the total number of valid users under the study regulations.
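The three validation checks can be sketched as one predicate. The `User` record and its fields are hypothetical summaries of the database contents, and "over 65" is read here as strictly greater than 65.

```python
from dataclasses import dataclass

MIN_WEEKS = 7.5  # one window of 15 acquisition moments at two per week
MIN_AGE = 65     # "over 65" read as strictly greater than 65


@dataclass
class User:  # hypothetical per-user summary record
    user_id: str
    age: int
    weeks_in_app: float
    n_interactions: int


def is_valid(user: User) -> bool:
    """Apply the checks: enough application time, age, and at least one interaction."""
    return (user.weeks_in_app >= MIN_WEEKS
            and user.age > MIN_AGE
            and user.n_interactions > 0)


users = [User("a", 70, 8.0, 12), User("b", 64, 10.0, 5),
         User("c", 72, 6.0, 20), User("d", 80, 9.0, 0)]
valid_ids = [u.user_id for u in users if is_valid(u)]
```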

The data preprocessing phase continued by gathering the data for each valid user. Data acquisition follows a well-defined protocol of regularly scheduled moments at which participants must feed data to the study, with the valid frequency set at twice per week of application use for any activity. Each scheduled data acquisition may include the actual measurement of one or more recurring activities, regardless of the participant’s success or failure.

To create consecutive data acquisitions according to the remote monitoring protocol for time instances i = 1, …, n over the user’s period of application use, the rolling window time series method was used. This method can be described as a window that slides over time series data and extracts all the data within it [12]. Such a sliding window is the basis for turning any time series dataset into a supervised learning problem. This approach was used to create a custom user-profiling algorithm. By applying the algorithm week by week for each user, it is possible to describe each user’s behavior in the application and build a dataset containing the activity and information of all users.

The data gathering process starts by collecting all available data for each user from the database; the unique user ID is then deleted, as it is irrelevant to the prediction process. Next, the algorithm checks whether the user’s answers to the EQ5D3L, SPQ and UCLA questionnaires from the registration phase are available. If the responses are missing, a default value of 0 is assigned to represent the absence of information, in accordance with the study’s established protocols. These missing responses are not factored into the prediction process.

Once the “static” information has been collected, the custom algorithm based on the rolling window time series method is applied. First, the recorded interval over which the user interacted with and was present in the application is retrieved. This period is then broken down into “time windows” of 15 data acquisition moments each.

It is worth detailing how the custom algorithm builds these time frames, since the time interval is converted from weeks to hours. Under the protocol, participants provide data twice a week for each activity, so one week (168 h) is split into data acquisition moments of 84 h each. Following the same strategy, time windows of 15 data acquisition moments (15 × 84 h = 1260 h, i.e., 7.5 weeks) were created, with each new window starting one week after the previous one; the process ends when no observations are available for a further 7.5-week period.
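The time-frame arithmetic can be sketched as below, assuming hours are counted from the user's first recorded activity and simplifying the stopping rule to "stop once a full 7.5-week window no longer fits". The function name is hypothetical.

```python
HOURS_PER_WEEK = 168
SLOT_HOURS = 84                 # two acquisition moments per week
MOMENTS_PER_WINDOW = 15         # 12 observed moments + 3 moments to predict
WINDOW_HOURS = SLOT_HOURS * MOMENTS_PER_WINDOW  # 1260 h = 7.5 weeks


def build_windows(span_hours, step_hours=HOURS_PER_WEEK):
    """Return one list of (start_h, end_h) acquisition slots per window that fits in the span."""
    windows = []
    start = 0
    # Slide one week at a time until a full 7.5-week window no longer fits.
    while start + WINDOW_HOURS <= span_hours:
        windows.append([(start + k * SLOT_HOURS, start + (k + 1) * SLOT_HOURS)
                        for k in range(MOMENTS_PER_WINDOW)])
        start += step_hours
    return windows


windows = build_windows(10 * HOURS_PER_WEEK)  # a user active for 10 weeks
```

For a user active for 10 weeks (1680 h), windows start at hours 0, 168 and 336; a fourth window starting at 504 h would end at 1764 h and therefore does not fit.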

As the time frames are created, the user’s measurements of the periodic activities are collected regardless of success or failure, so that the user’s activity can be counted for each data acquisition moment. Specifically, the method searches for the user’s records in each time period and checks whether the valid frequency protocol is followed. Then, to generate predictions and evaluate the machine learning models, the last three data acquisition moments of each window are used to create the prediction class, which describes the user’s compliance during that period.

A scheduled acquisition is considered effectively completed by a participant if at least one of the scheduled variables has been measured. Compliance during the next 3 scheduled data acquisition moments is considered low if the number of valid measurements is 0 or 1 and high if it is 2 or more. The result is a set of attributes representing the 12 data acquisition points (T–0, …, T–11) defined by the problem, together with the prediction class. The resulting raw dataset is described in Table 1.
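A sketch of how one 15-moment window becomes a supervised example. It assumes each moment is summarized by a hypothetical count of recorded activity measurements, and treats 2 or more completed acquisitions among the last three as high compliance, the reading under which the two classes cover every possible count from 0 to 3.

```python
N_INPUT, N_TARGET = 12, 3  # 12 observed moments, 3 moments to predict


def make_example(slot_counts):
    """Turn one 15-moment window into features T-11..T-0 and a low/high compliance label."""
    if len(slot_counts) != N_INPUT + N_TARGET:
        raise ValueError("a window must contain exactly 15 acquisition moments")
    history, future = slot_counts[:N_INPUT], slot_counts[N_INPUT:]
    # T-11 is the oldest observed moment, T-0 the most recent one.
    features = {f"T-{N_INPUT - 1 - k}": history[k] for k in range(N_INPUT)}
    # An acquisition counts as completed if at least one measurement was taken.
    completed = sum(1 for count in future if count >= 1)
    label = "high" if completed >= 2 else "low"
    return features, label


features, label = make_example(list(range(12)) + [0, 1, 3])
```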

Although knowledge mining techniques have been very successful in many real-world applications, decision support systems often face imbalanced datasets. The fundamental issue is that class imbalance can significantly degrade the performance of most standard machine learning algorithms: these algorithms assume balanced class distributions or equal misclassification costs, so when applied to complex imbalanced datasets they fail to represent the characteristics of the data properly.

NCL Filtering and Feature Selection Stage

In this study, the data were resampled with the Neighborhood Cleaning Rule to balance them. The Neighborhood Cleaning Rule is an undersampling technique that combines the Condensed Nearest Neighbor (CNN) rule, which removes redundant examples, with the Edited Nearest Neighbors (ENN) rule, which removes noisy or ambiguous examples. First, NCL removes negative (majority-class) examples that are misclassified by their three nearest neighbors. It then finds the three nearest neighbors of each positive (minority-class) example that is misclassified by them and removes those neighbors that belong to the majority class. It essentially follows the one-sided selection principle but pays closer attention to the quality of the data it removes. The reason is that noisy examples are likely to be misclassified themselves, and many of them would be used in training, leading to the misclassification of several subsequent test examples [13]. NCL therefore focuses less on improving the balance of the class distribution and more on the quality of the examples retained in the majority class. This approach to imbalanced datasets was proposed by Jorma Laurikkala in 2001 [3], who emphasized that the quality of classification results does not necessarily depend on class size.
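A minimal from-scratch NumPy sketch of two-class NCL with k = 3, following Laurikkala's formulation in which the majority-class neighbors of a minority example are removed only when that example is misclassified by them. It computes all pairwise distances, so it suits small datasets only; the imbalanced-learn library provides a production implementation as `NeighbourhoodCleaningRule`. The function name and toy data are hypothetical, and Laurikkala's extra rule about class sizes in the multi-class case is omitted.

```python
import numpy as np


def neighbourhood_cleaning_rule(X, y, minority_label, k=3):
    """Two-class NCL sketch: return (X, y) with noisy majority-class examples removed."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    # Pairwise Euclidean distances; the diagonal is inf so a point is not its own neighbour.
    dist = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)
    np.fill_diagonal(dist, np.inf)
    neighbours = np.argsort(dist, axis=1)[:, :k]

    to_remove = set()
    for i, nn in enumerate(neighbours):
        # Majority vote among the k nearest neighbours (odd k avoids ties for two classes).
        labels, counts = np.unique(y[nn], return_counts=True)
        if labels[np.argmax(counts)] == y[i]:
            continue  # correctly classified: nothing to clean
        if y[i] != minority_label:
            to_remove.add(i)  # step 1 (ENN): misclassified majority example is noise
        else:
            # Step 2: misclassified minority example -> drop its majority-class neighbours.
            to_remove.update(int(j) for j in nn if y[j] != minority_label)
    keep = np.array([i not in to_remove for i in range(len(y))], dtype=bool)
    return X[keep], y[keep]


X = [[0, 0], [0, 1], [1, 0], [1, 1], [0.5, 0.5],   # majority cluster (label 0)
     [5, 5], [5, 6], [6, 5], [5.5, 5.5],           # minority cluster (label 1)
     [5.2, 5.2]]                                   # majority-labelled point inside the minority cluster
y = [0, 0, 0, 0, 0, 1, 1, 1, 1, 0]
X_clean, y_clean = neighbourhood_cleaning_rule(X, y, minority_label=1)
```

Only the stray majority point at (5.2, 5.2) is removed; every other point agrees with its three nearest neighbors.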

Therefore, besides the class distribution, other data characteristics that may interfere with classification, such as noise, should be taken into consideration [14]. After NCL filtering, features were selected using the chi-squared statistical test: the fourteen features with the most significant p values among all features were retained, as presented in Table 2.
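A sketch of chi-squared selection of 14 features with scikit-learn on synthetic data. The chi-squared test requires non-negative features. For a binary target every feature's test has the same degrees of freedom, so ranking by the statistic (as `SelectKBest` does) and ranking by p value give the same order; the p values are also exposed as `selector.pvalues_`.

```python
import numpy as np
from sklearn.feature_selection import SelectKBest, chi2

rng = np.random.default_rng(0)
X = rng.integers(0, 5, size=(200, 30))  # synthetic non-negative features
y = rng.integers(0, 2, size=200)        # synthetic binary adherence labels
X[:, 3] = y * 4                         # make one feature strongly class-dependent

selector = SelectKBest(score_func=chi2, k=14)
X_sel = selector.fit_transform(X, y)
selected = selector.get_support(indices=True)  # column indices of the kept features
```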

During the experimental stage, various classification techniques were studied: Random Forest (RF), Multilayer Perceptron (MLP), Support Vector Machines (SVM), Logistic Regression, Naïve Bayes (GNB), K-Nearest Neighbors (KNN), AdaBoost, XGBoost (XGB), LightGBM and the Stacked Generalized Algorithm created in this work. The hyperparameters of the different models were tuned by grid search on a subset of the data to reduce computation time.

Each algorithm requires a specific hyperparameter grid, tailored to the problem at hand. The selected hyperparameters, detailed in Table 3, improved the ability of the investigated models to detect low future levels of user compliance with the application.
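A sketch of grid search on a stratified data subset with scikit-learn, shown for Random Forest on synthetic data. The grid values and the 30% subset size are hypothetical; the study's selected values are those in Table 3.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, StratifiedKFold, train_test_split

# Synthetic, imbalanced stand-in for the preprocessed dataset.
X, y = make_classification(n_samples=600, n_features=14, weights=[0.7, 0.3], random_state=0)
# Tune on a stratified subset to save time (the 30% fraction is an assumption).
X_sub, _, y_sub, _ = train_test_split(X, y, train_size=0.3, stratify=y, random_state=0)

param_grid = {  # hypothetical grid
    "n_estimators": [50, 100],
    "max_depth": [None, 5],
}
search = GridSearchCV(
    RandomForestClassifier(random_state=0),
    param_grid,
    cv=StratifiedKFold(n_splits=5, shuffle=True, random_state=0),
    scoring="accuracy",
)
search.fit(X_sub, y_sub)
print(search.best_params_)
```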

Adherence Classification

In this work, a stacked generalization algorithm was built and tested, since stacking is among the most effective approaches to classification problems. The models forming the final structure of the proposed stacked algorithm were selected by testing all possible combinations of the machine learning algorithms that had been studied individually.

Specifically, the selected stacked algorithm uses Random Forest, Naïve Bayes and LightGBM as level-0 models, with the hyperparameters presented in Table 3, trained on the training dataset. Their predictions are then passed to a Logistic Regression meta-model (level-1 model), which learns how best to combine the base models’ predictions. The resulting algorithm thus learns how much to rely on each base model and serves as the final estimator for the problem. The idea behind this design emerged during the experimental stage: combining classifiers with different inductive biases should explore the feature space in different ways, yielding classifiers whose errors are not correlated.

Accordingly, the Random Forest and LightGBM algorithms, which gave the best individual results, were combined with Naïve Bayes as level-0 models. Combining their outputs in a single meta-classifier allowed the classifiers to compensate for each other’s errors, resulting in a more efficient model (Fig. 2).

Results

Instead of a single train-test split, tenfold stratified cross-validation was used to evaluate the different classification algorithms as reliably as possible. In stratified cross-validation, the data are randomly divided into the user-specified number of folds while preserving the imbalanced class distribution, so that the ratio of positive to negative examples in the original data is maintained in every fold. The formulas used for the evaluation measures of the classification techniques are as follows:

where:

TP: predicted and ground-truth adherence are both high.

TN: predicted and ground-truth adherence are both low.

FP: predicted adherence is high and ground-truth adherence is low.

FN: predicted adherence is low and ground-truth adherence is high.
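For reference, the standard definitions of common metrics in terms of these four counts are given below, taking high adherence as the positive class as in the definitions above. The text names only accuracy explicitly, so including precision, recall and F1-score is an assumption about what Tables 4 and 5 report.

```latex
\begin{aligned}
\text{Accuracy}  &= \frac{TP + TN}{TP + TN + FP + FN} \\
\text{Precision} &= \frac{TP}{TP + FP} \\
\text{Recall}    &= \frac{TP}{TP + FN} \\
\text{F1}        &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
\end{aligned}
```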

Regarding the proposed classifier, selecting 14 features with the chi-squared test and cleaning the data with the NCL rule led to the best performance (Table 4).

Table 5 presents, for each classification metric, the average scores of the classification algorithms under the methodology combining chi-squared feature selection with NCL data cleaning.
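A sketch of how per-metric averages over tenfold stratified cross-validation can be computed with scikit-learn, using synthetic data and Gaussian Naïve Bayes as an example model. The metric list is an assumption; the sketch also checks that each test fold keeps roughly the overall class ratio.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import StratifiedKFold, cross_validate
from sklearn.naive_bayes import GaussianNB

X, y = make_classification(n_samples=400, n_features=14, weights=[0.7, 0.3], random_state=0)
cv = StratifiedKFold(n_splits=10, shuffle=True, random_state=0)

metrics = ["accuracy", "precision", "recall", "f1"]
results = cross_validate(GaussianNB(), X, y, cv=cv, scoring=metrics)
# Average each metric over the ten folds, as in a per-metric summary table.
averages = {m: results[f"test_{m}"].mean() for m in metrics}

# Stratification: each test fold's positive rate stays close to the overall rate.
fold_rates = [y[test].mean() for _, test in cv.split(X, y)]
```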

McNemar’s test [15], applied to the predictions of the different models, showed that the superiority of the stacking model over the competing algorithms was statistically significant. Dataset size is also a major concern in the medical field, where classification is already challenging and becomes even more so with small training datasets, which can lead to unreliable models. To investigate how training set size affects the overall performance of the supervised classification models, the models were trained on different subsets of the available records and their performance was compared.
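The text does not state which variant of McNemar's test was used. The sketch below assumes the common chi-squared form with continuity correction, implemented with the standard library only: it compares two classifiers on the same samples using only the cases where exactly one of them is correct. The function name and toy predictions are hypothetical.

```python
import math


def mcnemar(y_true, pred_a, pred_b):
    """McNemar's chi-squared test with continuity correction; returns (statistic, p value)."""
    # b: A correct and B wrong; c: A wrong and B correct.
    b = sum(1 for t, a, p in zip(y_true, pred_a, pred_b) if a == t and p != t)
    c = sum(1 for t, a, p in zip(y_true, pred_a, pred_b) if a != t and p == t)
    if b + c == 0:
        return 0.0, 1.0  # the classifiers never disagree in correctness
    stat = (abs(b - c) - 1) ** 2 / (b + c)
    # Survival function of chi-squared with 1 degree of freedom: P(X > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


y_true = [1] * 35
pred_a = [1] * 20 + [0] * 5 + [1] * 10
pred_b = [0] * 20 + [1] * 5 + [1] * 10
stat, p_value = mcnemar(y_true, pred_a, pred_b)
```

Here b = 20 and c = 5, giving a statistic of (15 − 1)² / 25 = 7.84, which corresponds to p ≈ 0.005.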

For this analysis, the dataset was first split into training and test sets, and seven subsets of the training set were then created and used to train the algorithms. Increasing the amount of training data increases the signal-to-noise ratio. However, if the training and test data come from different distributions, adding more data will not reduce this source of noise.

In that case, more data can even worsen overfitting if the complexity of the model is increased at the same time. Otherwise, performance on the test set should improve or remain the same, and in no case should it deteriorate significantly. The results showed that the overall performance of the classifiers depends on how well a dataset represents the original distribution rather than on its size, in agreement with [16]. Important findings were the small variations in the performance of the algorithms and the confirmation that the algorithms had not been overtrained.
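A sketch of the training-size study on synthetic data: a fixed test set, seven stratified subsets of the training set of increasing size, and one model retrained on each. The subset fractions and the choice of Random Forest are assumptions; scikit-learn's `learning_curve` offers a cross-validated alternative.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=1000, n_features=14, weights=[0.7, 0.3], random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0)

fractions = [0.1, 0.2, 0.35, 0.5, 0.65, 0.8, 1.0]  # seven subsets (sizes are an assumption)
scores = []
for frac in fractions:
    if frac < 1.0:
        # Stratified subset keeps the training class ratio at every size.
        X_sub, _, y_sub, _ = train_test_split(
            X_train, y_train, train_size=frac, stratify=y_train, random_state=0)
    else:
        X_sub, y_sub = X_train, y_train
    model = RandomForestClassifier(n_estimators=100, random_state=0).fit(X_sub, y_sub)
    scores.append((len(y_sub), model.score(X_test, y_test)))  # (training size, test accuracy)
```

A test accuracy that stays flat or rises as the subsets grow is consistent with the absence of overtraining described above.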

Conclusions

The application of machine learning models to data from medical monitoring applications is of great interest to researchers today, as many health issues remain to be investigated. In this paper, various classification techniques were studied: Random Forest, Multilayer Perceptron, SVM, Logistic Regression, Naïve Bayes, KNN, AdaBoost, XGBoost, LightGBM and the Stacked Generalized Algorithm created in this work. The experiments were performed on a dataset created for this problem through feature engineering on data from a non-relational database. After data validation, the best classification technique was sought for predicting users’ possible early dropout from the application.

In the experimental scenarios, evaluated with tenfold stratified cross-validation, all classification techniques achieved over 90% accuracy. Using the chi-squared test to guide feature selection reduced dimensionality, which lowered both the risk of overfitting and the training time while significantly increasing accuracy. Applying the Neighborhood Cleaning Rule then led to the best performance for all classifiers, underlining that the quality of classification results does not necessarily depend on the size of the prediction class. Therefore, besides the class distribution, other data characteristics that may impede optimal classification, such as noise, should be considered. In the future, the proposed approach could become part of artificial intelligence (AI) technology for the remote monitoring of elderly people’s health, with the aim of maximizing user compliance. The results show that the proposed algorithm predicted users’ early dropout from the application with an accuracy of 97.6%.

Availability of Data and Materials

Not applicable.

Code Availability

Not applicable.

References

Olsson S, Lymberis A, Whitehouse D. European commission activities in ehealth. Int J Circumpolar Health. 2005;63:310–6. https://doi.org/10.3402/ijch.v63i4.17747.

Kordatzakis A, Perakis K, Haritou M, Maglogiannis I, Koutsouris D. A novel telematics platform for remote monitoring of patients. J Inf Technol Healthcare. 2007;5:248–54.

Laurikkala J. Improving identification of difficult small classes by balancing class distribution. Proc 8th Conf AI Med Eur Artif Intell Med. Berlin Heidelberg: Springer; 2001. p. 63–6.

Maglogiannis I, Doukas C. Intelligent health monitoring based on pervasive technologies and cloud computing. Int J Artif Intell Tools. 2014. https://doi.org/10.1142/S021821301460001X.

Pigadas V, Doukas C, Plagianakos V, Maglogiannis I. Enabling constant monitoring of chronic patient using android smart phones. ACM Int Conf Proc Series. 2011. https://doi.org/10.1145/2141622.2141697.

Panagopoulos C, Kalatha E, Tsanakas P, Maglogiannis I. Evaluation of a mobile home care platform. Berlin: Springer; 2015. p. 328–43.

Alshurafa N, Sideris C, Pourhomayoun M, Kalantarian H, Sarrafzadeh M, Eastwood JA. Remote health monitoring outcome success prediction using baseline and first month intervention data. IEEE J Biomed Health Inf. 2016. https://doi.org/10.1109/JBHI.2016.2518673.

Pedersen D, Mansourvar M, Sortsø C, Schmidt T. Predicting dropouts from an ehealth platform for lifestyle interventions: analysis of methods and predictors (preprint). J Med Internet Res. 2019. https://doi.org/10.2196/13617.

Panagopoulos C, Menychtas A, Tsanakas P, Maglogiannis I. Increasing usability of homecare applications for older adults: a case study. Designs. 2019;3:23. https://doi.org/10.3390/designs3020023.

Alazzam M, Mansour H, Alassery F, Almulihi A. Machine learning implementation of a diabetic patient monitoring system using interactive e-app. Comput Intell Neurosci. 2021;2021:1–7. https://doi.org/10.1155/2021/5759184.

Potdar K, Pardawala T, Pai C. A comparative study of categorical variable encoding techniques for neural network classifiers. Int J Comput Appl. 2017;175:7–9. https://doi.org/10.5120/ijca2017915495.

Amor L, Lahyani I, Jmaiel M. Recursive and rolling windows for medical time series forecasting: a comparative study. In: 2016 IEEE Intl conference on computational science and engineering (CSE) and IEEE Intl conference on embedded and ubiquitous computing (EUC) and 15th Intl symposium on distributed computing and applications for business engineering (DCABES). Paris, France: IEEE; 2016. p. 106–13. https://doi.org/10.1109/CSE-EUC-DCABES.2016.169.

He H, Ma Y. Imbalanced learning: foundations, algorithms, and applications. New York: Wiley; 2013. https://doi.org/10.1002/9781118646106.

Agustianto K, Destarianto P. Imbalance data handling using neighborhood cleaning rule (NCL) sampling method for precision student modeling. In: 2019 International conference on computer science, Information Technology, and Electrical Engineering (ICOMITEE). 2019. p. 86–89.

Dietterich T. Approximate statistical tests for comparing supervised classification learning algorithms. Neural Comput. 1998;10:1895–923.

Althnian A, AlSaeed D, Al-Baity H, Samha A, Dris A, Alzakari N, Abou Elwafa A, Kurdi H. Impact of dataset size on classification performance: an empirical evaluation in the medical domain. Appl Sci. 2021;11:796. https://doi.org/10.3390/app11020796.

Funding

Open access funding provided by HEAL-Link Greece. This research has been co-financed by the European Union and Greek national funds through the Operational Program Competitiveness, Entrepreneurship and Innovation, under the call RESEARCH–CREATE–INNOVATE (project: MediLudus, Personalized home care based on game and gamify elements; project code: T 2EK∆K-03049).

Author information

Contributions

Both authors, Eleftherios Vouzis and Ilias Maglogiannis, contributed equally to the research and to the writing of this manuscript.

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Ethical Approval

Not applicable.

Consent to Participate

Not applicable.

Consent for Publication

Not applicable.

Additional information

This article is part of the topical collection “Machine Learning Modeling Techniques and Applications” guest edited by Lazaros Iliadis, Elias Pimenidis and Chrisina Jayne.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Vouzis, E., Maglogiannis, I. Prediction of Early Dropouts in Patient Remote Monitoring Programs. SN COMPUT. SCI. 4, 467 (2023). https://doi.org/10.1007/s42979-023-01843-9

DOI: https://doi.org/10.1007/s42979-023-01843-9