Abstract

We investigate the capability of neural network-based model order reduction, i.e., the autoencoder (AE), for fluid flows. As an example model, an AE consisting of convolutional neural networks and multi-layer perceptrons is considered in this study. The AE model is assessed with four canonical fluid flows, namely: (1) two-dimensional cylinder wake, (2) its transient process, (3) NOAA sea surface temperature, and (4) a cross-sectional field of turbulent channel flow, in terms of the number of latent modes, the choice of nonlinear activation functions, and the number of weights contained in the AE model. We find that the AE models are sensitive to the choice of the aforementioned parameters depending on the target flows. Finally, we discuss further applications and perspectives of machine-learning-based order reduction for numerical and experimental studies in the fluid dynamics community.

Introduction

Thus far, modal analysis has played a significant role in understanding and investigating complex fluid flow phenomena. In particular, combined with linear-theory-based data-driven and operator-driven tools, e.g., proper orthogonal decomposition (POD) [1], dynamic mode decomposition [2], and resolvent analysis [3], it has enabled us to examine fluid flows in an interpretable manner [4, 5]. The capabilities of these tools have been demonstrated in both numerical and experimental studies [6,7,8,9,10,11,12]. In addition to these linear-theory-based methods, neural networks (NNs) have recently been attracting increasing attention as a promising tool for extracting the nonlinear dynamics of fluid flow phenomena [13,14,15,16]. In the present paper, we discuss the capability of an NN-based nonlinear model order reduction tool, i.e., the autoencoder, through several assessments on canonical fluid flow examples.

In recent years, machine learning methods have demonstrated great potential in fluid dynamics, e.g., in turbulence modeling [17,18,19,20,21,22,23,24,25] and spatio-temporal data estimation [26,27,28,29,30,31,32,33,34,35,36,37]. Reduced order modeling (ROM) is no exception; its combination with machine learning is referred to as machine-learning-based ROM (ML-ROM). San and Maulik [38] presented an extreme-learning-machine-based ROM. They applied the method to quasi-stationary geophysical turbulence and showed its robustness over a POD-based ROM. Maulik et al. [39] applied a probabilistic neural network to obtain the temporal evolution of POD coefficients from the parameters of the initial condition, considering the shallow water equations and the NOAA sea surface temperature. They showed that the proposed method is able to predict the temporal dynamics while providing a confidence interval for its estimation. For more complex turbulence, Srinivasan et al. [40] demonstrated the capability of a long short-term memory (LSTM) network for a nine-equation model of shear turbulent flow. As these examples show, efforts to combine machine learning with traditional ROM are ongoing.

Furthermore, autoencoders (AEs) have also played a notable role as an effective model order reduction tool in fluid dynamics. To the best of our knowledge, the first application of an AE to fluid dynamics was performed by Milano and Koumoutsakos [41]. They compared the capabilities of POD and a multi-layer perceptron (MLP)-based AE using the Burgers equation and a turbulent channel flow. They confirmed that the linear MLP is equivalent to POD [42] and demonstrated that the nonlinear MLP provides improved reconstruction and prediction capabilities for the velocity field at a small additional computational cost. More recently, convolutional neural network (CNN)-based AEs have become popular thanks to filter sharing in CNNs, which makes them suitable for handling high-dimensional fluid data sets [43]. Omata and Shirayama [44] used a CNN-AE and POD to reduce the dimension of the wake behind a NACA0012 airfoil. They investigated how the temporal behavior in the low-dimensional latent space differs depending on the order reduction tool and demonstrated that the extracted latent variables properly represent the temporal changes in the spatial structure of the unsteady flow field. They also demonstrated the capability of this method to compare flow fields obtained under different conditions, e.g., different Reynolds numbers and angles of attack. Hasegawa et al. [45, 46] proposed a CNN-LSTM-based ROM to predict the temporal evolution of unsteady flows around a bluff body by following only the time series of the low-dimensional latent variables. They considered flows around bluff bodies whose shapes are defined by a combination of trigonometric functions with random amplitudes and demonstrated that the trained ROM was able to reasonably predict flows around bodies of unseen shapes, which implies the generality of the low-dimensional features extracted in the latent space. 
The method has also been extended to the examination of Reynolds number dependence [47] and to turbulent flows [48], whose results also revealed some major challenges for CNN-AEs, e.g., handling flow topologies significantly different from those used for training and handling very high-dimensional data. From the perspective of understanding the latent modes obtained by a CNN-AE, Murata et al. [49] proposed a customized CNN-AE, referred to as a mode-decomposing CNN-AE, which can visualize the contribution of each machine-learning-based mode. They clearly demonstrated that, similar to the linear MLP mentioned above, the linear CNN-AE is equivalent to POD, that the nonlinear CNN-AE improves the reconstruction thanks to the nonlinear operations, and that a single CNN-AE mode represents multiple POD modes. A wide range of further AE studies for fluid flow analyses can be found in [50,51,52,53,54]. This growing interest in AEs raises the following open questions:

1. How does AE-based order reduction depend on its many parameters, e.g., the number of latent modes, the activation function, and whether the flow is laminar or turbulent?

2. What are the limitations of AE-based model order reduction for fluid flows?

3. Can we expect further improvements of AE-based model order reduction with well-designed methods?

These questions commonly arise in feature extraction using AEs, not only for fluid mechanics problems but also for any high-dimensional nonlinear dynamical system. To the best of the authors’ knowledge, however, the choice of parameters often relies on trial and error, and a systematic study answering these questions still seems to be lacking.

Our aim in the present paper is to present example assessments of AE-based order reduction in fluid dynamics, so as to tackle the aforementioned questions. In particular, we consider the influence of (1) the number of latent modes, (2) the choice of activation functions, and (3) the number of weights in an AE, using four data sets that span a wide range from laminar to turbulent flows. Since fluid flows can be regarded as a representative example of complex nonlinear dynamical systems, the findings of the present paper regarding neural-network-based model order reduction can be applied to a wide range of problems in science and engineering. Moreover, because model order reduction techniques are in demand in various scientific fields including robotics [55], aerospace engineering [56, 57], and astronomy [58], we expect that the present paper can promote the unification of computer science and nonlinear dynamical problems from the application side. Our presentation is organized as follows. We introduce the present AE models and the underlying theory in “Methods and Theories of Autoencoder”. The fluid flow data sets are described in “Setups for Covered Examples of Fluid Flows”. We then present in “Results” the assessments of various parameters in the AE application to fluid flows. Finally, concluding remarks with an outlook on AEs and fluid flows are given in “Concluding Remarks”.

Methods and Theories of Autoencoder

Machine Learning Schemes for Construction of Autoencoder

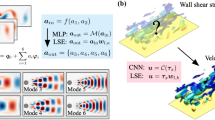

In the present study, we use an autoencoder (AE) based on a combination of a convolutional neural network (CNN) and a multi-layer perceptron (MLP), as illustrated in Fig. 1. Our strategy for order reduction of fluid flows is that the CNN is first used to reduce the dimension into a reasonably small dimensional space, and the MLP is then employed to further map it into the latent (bottleneck) space, as explained in more detail later. In this section, let us introduce the internal procedures in each machine learning model with mathematical expressions and conceptual figures.

We first utilize a convolutional neural network (CNN) [59] to reduce the original dimension of fluid flows. CNNs have become essential tools in image recognition thanks to their efficient filtering operation, and they have recently been adopted in the fluid dynamics community as well [60,61,62,63,64,65]. A CNN mainly consists of convolutional layers and pooling layers. As shown in Fig. 2a and b, the convolutional layer extracts spatial features of the input data by a filter operation,

\[ c_{ijm}^{(l)}=\phi\left(\sum^{K-1}_{k=0}\sum^{H-1}_{p=0}\sum^{H-1}_{q=0}h_{pqkm}\,c_{i+p-C,\,j+q-C,\,k}^{(l-1)}+b_m\right), \tag{1} \]

where \(C=\mathrm{floor}(H/2)\), \(c_{ijm}^{(l-1)}\) and \(c_{ijm}^{(l)}\) are the input and output data at layer l, and \(b_m\) is a bias. A filter is expressed as \(h_{pqkm}\) with the size of \(\left( H\times H\times K\right)\). In the present paper, the filter size H for the baseline model is set to 3, although it is varied in the study of the dependence on the number of weights in “Number of Weights”. The output of the filtering operation is passed through an activation function \(\phi\). In the pooling layer illustrated in Fig. 2c, representative values, e.g., the maximum or average value, are extracted from local regions so that the image is downsampled. Pooling is widely known to make the model robust to variations in the input data by reducing its spatial sensitivity [66]. Depending on the task, an upsampling layer can also be utilized; it copies the values of a low-dimensional image into a higher-dimensional space, as shown in Fig. 2d. In the training process, the filters h of the CNN are optimized by minimizing a loss function E with back propagation [67] such that \({\varvec{w}}=\mathrm{argmin}_{\varvec{w}}[{E}({\varvec{q}}_\mathrm{output},{\mathcal F}({\varvec{q}}_\mathrm{input};{\varvec{w}}))]\).
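To make Eq. 1 and the pooling and upsampling operations concrete, here is a minimal NumPy sketch of a single convolutional layer, 2 × 2 max pooling, and copy-based upsampling. It assumes zero padding at the domain borders (so the layer preserves the spatial size), a single sample without a batch dimension, and explicit loops chosen for readability rather than speed; the function names are hypothetical and this is not the authors' implementation.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def conv2d(c_in, h, b, phi=relu):
    """Convolutional layer following Eq. (1), with zero padding at the borders.

    c_in: input of shape (Ny, Nx, K); h: filters of shape (H, H, K, M);
    b: biases of shape (M,); phi: activation function (ReLU by default).
    Returns an array of shape (Ny, Nx, M).
    """
    H = h.shape[0]
    C = H // 2                                        # C = floor(H / 2)
    ny, nx, _ = c_in.shape
    padded = np.pad(c_in, ((C, C), (C, C), (0, 0)))   # zeros outside the domain
    out = np.empty((ny, nx, h.shape[3]))
    for i in range(ny):
        for j in range(nx):
            window = padded[i:i + H, j:j + H, :]      # entries c_{i+p-C, j+q-C, k}
            # sum over p, q, k of h_{pqkm} * window, for every output channel m
            out[i, j, :] = np.tensordot(window, h, axes=([0, 1, 2], [0, 1, 2])) + b
    return phi(out)


def max_pool(c, s=2):
    """Keep the maximum of each non-overlapping s x s block (downsampling)."""
    ny, nx, k = c.shape
    c = c[:ny - ny % s, :nx - nx % s]
    return c.reshape(ny // s, s, nx // s, s, k).max(axis=(1, 3))


def upsample(c, s=2):
    """Copy every value into an s x s block (upsampling by repetition)."""
    return np.repeat(np.repeat(c, s, axis=0), s, axis=1)


rng = np.random.default_rng(0)
field = rng.standard_normal((8, 8, 1))        # a one-channel 8 x 8 "flow field"
filters = rng.standard_normal((3, 3, 1, 4))   # H = 3, K = 1, M = 4 filters
feat = conv2d(field, filters, np.zeros(4))    # shape (8, 8, 4)
down = max_pool(feat)                         # shape (4, 4, 4)
up = upsample(down)                           # shape (8, 8, 4)
print(feat.shape, down.shape, up.shape)
```

A deep-learning framework would replace the loops with an optimized convolution, but the index arithmetic is the same as in Eq. 1.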

We then apply an MLP [68] for additional order reduction. MLPs have been applied to a wide range of problems, e.g., classification, regression, and speech recognition [69], as well as to various problems in fluid dynamics such as turbulence modeling [19, 20, 23], reduced order modeling [70], and field estimation [71]. The MLP can be regarded as an aggregate of perceptrons, its minimal units, as illustrated in Fig. 3. The input data from the \((l-1)\mathrm{th}\) layer are multiplied by weights \(\varvec{W}\), combined with biases \(\varvec{b}\) into the linear superposition \(\varvec{Wq}+\varvec{b}\), and then passed through a nonlinear activation function \(\phi\),

\[ q_i^{(l)}=\phi\left(\sum_j W_{ij}^{(l)}q_j^{(l-1)}+b_i^{(l)}\right). \tag{2} \]

As illustrated in Fig. 3, the perceptrons of the MLP are connected with each other in a fully-connected structure. As with the CNN, the weights \(W_{ij}\) of all connections are optimized by minimizing the loss function.
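Eq. 2 and the fully-connected structure can be sketched in a few lines of NumPy. The layer widths below are hypothetical placeholders (not the values in Table 1), the same ReLU activation is used in every layer, and random weights stand in for trained ones; the sketch only shows the forward pass and how quickly the weight count grows with the widths.

```python
import numpy as np


def dense(q_prev, W, b, phi):
    """One fully-connected layer, Eq. (2): q_i = phi(sum_j W_ij q_j + b_i)."""
    return phi(W @ q_prev + b)


def mlp(q, layers, phi=lambda z: np.maximum(z, 0.0)):
    """Pass q through a stack of dense layers given as (W, b) pairs."""
    for W, b in layers:
        q = dense(q, W, b, phi)
    return q


rng = np.random.default_rng(1)
sizes = [256, 64, 16, 2]  # hypothetical widths: flattened CNN output -> n_r = 2
layers = [(rng.standard_normal((n_out, n_in)) / np.sqrt(n_in), np.zeros(n_out))
          for n_in, n_out in zip(sizes[:-1], sizes[1:])]
r = mlp(rng.standard_normal(sizes[0]), layers)
n_weights = sum(W.size + b.size for W, b in layers)  # every connection plus biases
print(r.shape, n_weights)  # (2,) 17522
```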

By combining the two methods introduced above, we construct the AE illustrated in Fig. 1. The AE has a lower-dimensional space, called the latent space, at its bottleneck, so that representative features of high-dimensional data \(\varvec{q}\) can be extracted by setting the target data as both the input and the output. In other words, if the output closely matches the input, the dimension reduction onto the latent vector \(\varvec{r}\) has been successfully achieved. Conversely, if the original data \(\varvec{q}\) are over-compressed, the decoded field \(\widehat{\varvec{q}}\) cannot be reconstructed well. These relations can be summarized as

\[ \varvec{r}=\mathcal{F}_e(\varvec{q}),\qquad \widehat{\varvec{q}}=\mathcal{F}_d(\varvec{r})=\mathcal{F}_d(\mathcal{F}_e(\varvec{q}))=\mathcal{F}(\varvec{q}), \tag{3} \]

where \(\mathcal{F}_e\) and \(\mathcal{F}_d\) are the encoder and decoder of the autoencoder, as shown in Fig. 1. Mathematically, in the training process for obtaining the autoencoder model \(\mathcal F\), the weights \(\varvec{w}\) are optimized to minimize an error function E such that \({\varvec{w}}=\mathrm{argmin}_{\varvec{w}}[{E}({\varvec{q}},{\mathcal F}({\varvec{q}};{\varvec{w}}))]\).

In the present study, we use the \(L_2\) norm error as the cost function E, and the Adam optimizer [67] is applied to obtain the weights. Other parameters used for the construction of the present AE are summarized in Table 2. Note that theoretical optimization methods [72,73,74] could be used for hyperparameter selection to further improve the AE capability, although they are not used in the present study. To avoid overfitting, we use early stopping [75]. Regarding the internal steps of the present AE, we first apply the CNN to reduce the spatial dimension of flow fields by a factor of \(\mathcal{O}(10^2)\). The vector compressed by the CNN is then fed to the MLP to obtain the latent modes, as summarized in Table 1, which shows an example of the AE structure for the cylinder wake problem with the number of latent variables \(n_r=2\). For cases where reducing the number of latent variables down to \(\mathcal{O}(10^2)\) is considered unreasonable, e.g., \(n_r=3072\) for the turbulent channel flow, the order reduction is performed by the CNN alone (without the MLP).
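Early stopping [75] monitors a validation loss and halts training once it stops improving. The patience value and the function interface below are assumptions for illustration, since the exact criterion used in the present study is not detailed here; `train_step` and `val_loss` are hypothetical callbacks standing in for one epoch of Adam updates on the \(L_2\) loss and for its evaluation on validation data.

```python
def train_with_early_stopping(train_step, val_loss, max_epochs=1000, patience=20):
    """Stop once the validation loss has not improved for `patience` epochs.

    train_step(): runs one epoch of optimization (hypothetical callback).
    val_loss(): returns the current validation loss (hypothetical callback).
    Returns (best_epoch, best_loss).
    """
    best_loss, best_epoch, wait = float("inf"), -1, 0
    for epoch in range(max_epochs):
        train_step()
        loss = val_loss()
        if loss < best_loss:
            best_loss, best_epoch, wait = loss, epoch, 0  # improvement: reset counter
        else:
            wait += 1                                     # no improvement this epoch
            if wait >= patience:
                break
    return best_epoch, best_loss


# A fake validation curve that starts to rise after epoch 2 (overfitting)
curve = iter([1.0, 0.5, 0.4, 0.45, 0.5, 0.6, 0.7])
result = train_with_early_stopping(lambda: None, lambda: next(curve), patience=3)
print(result)  # (2, 0.4)
```

A complete implementation would also store the weights at `best_epoch` and restore them after stopping.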

The Role of Activation Functions in Autoencoder

One of the key features in the mathematical procedure of an AE is the use of activation functions. This is exactly why neural networks can account for nonlinearity in their estimations. Here, let us show mathematically the strength of nonlinear activation functions for model order reduction by comparison with proper orthogonal decomposition (POD), which is known to be equivalent to an AE with the linear activation function \(\phi(z)=z\). Details can be found in previous studies [42, 76, 77].

We first consider POD-based order reduction of a high-dimensional data matrix \({\varvec{q}}\in {\mathbb R}^{n_{t}\times n_{g}}\) with a reduced basis \({\varvec{\Gamma }}\in {\mathbb R}^{n_{r}\times n_{g}}\), where \(n_t\), \(n_r\) and \(n_g\) denote the numbers of collected snapshots, latent modes, and spatial grid points, respectively. The optimization procedure of POD can be formulated as an \(L_2\) minimization,

\[ \varvec{\Gamma}=\mathrm{argmin}_{\varvec{\Gamma}}\left\|(\varvec{q}-\overline{\varvec{q}})-(\varvec{q}-\overline{\varvec{q}})\varvec{\Gamma}^{\mathrm T}\varvec{\Gamma}\right\|_2, \tag{4} \]

where \(\overline{\varvec{q}}\) is the mean of the data matrix over the snapshots. Next, we consider an AE with the linear activation function \(\phi(z)=z\), as shown in Fig. 4a. Analogous to Eq. 4, the optimization procedure can be written as

\[ \varvec{w}=\mathrm{argmin}_{\varvec{w}}\left\|\varvec{q}-\widehat{\varvec{q}}\right\|_2, \tag{5} \]

with the reconstructed matrix \(\widehat{\varvec{q}}\) and the weights \({\varvec{w}}=[{\varvec{W}},{\varvec{b}},\widehat{\varvec{W}},\widehat{\varvec{b}}]\) of the AE, which contain the weights of the encoder \(\varvec{W}\) and decoder \(\widehat{\varvec{W}}\) and the biases of the encoder \(\varvec{b}\) and decoder \(\widehat{\varvec{b}}\). Substituting \(\widehat{\varvec{q}}=(\varvec{q}\varvec{W}+\varvec{b})\widehat{\varvec{W}}+\widehat{\varvec{b}}\) into Eq. 5, the argmin formulation can be transformed as

\[ \varvec{w}=\mathrm{argmin}_{\varvec{w}}\left\|\varvec{q}-\left[(\varvec{q}\varvec{W}+\varvec{b})\widehat{\varvec{W}}+\widehat{\varvec{b}}\right]\right\|_2. \tag{6} \]

Notably, Eqs. 4 and 6 become equivalent under the following replacement of variables,

\[ \varvec{W}=\varvec{\Gamma}^{\mathrm T},\qquad \varvec{b}=-\overline{\varvec{q}}\,\varvec{\Gamma}^{\mathrm T},\qquad \widehat{\varvec{W}}=\varvec{\Gamma},\qquad \widehat{\varvec{b}}=\overline{\varvec{q}}. \tag{7} \]

These transformations show that the AE with the linear activation is essentially the same order reduction tool as POD. In contrast, for an AE with nonlinear activation functions, as illustrated in Fig. 4b, the nonlinear activation functions of the encoder \(\phi _e\) and decoder \(\phi _d\) act on the weighted sums in Eq. 6, such that

\[ \varvec{w}=\mathrm{argmin}_{\varvec{w}}\left\|\varvec{q}-\phi_d\left(\phi_e(\varvec{q}\varvec{W}+\varvec{b})\widehat{\varvec{W}}+\widehat{\varvec{b}}\right)\right\|_2. \tag{8} \]

Note that the weights \(\varvec{w}\), including the biases, are optimized in the training procedure of the autoencoder.
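The equivalence expressed by Eqs. 4 to 7 can be checked numerically. The NumPy sketch below builds a synthetic, nearly rank-3 snapshot matrix (an assumption standing in for flow data), computes the POD basis \(\varvec{\Gamma}\) from the singular value decomposition of the fluctuations, and then evaluates a linear AE whose weights are set by the replacement of Eq. 7 instead of being trained.

```python
import numpy as np

rng = np.random.default_rng(0)
n_t, n_g, n_r = 50, 30, 3

# Synthetic snapshot matrix q (n_t x n_g): rank-3 structure + weak noise + offset
q = (rng.standard_normal((n_t, n_r)) @ rng.standard_normal((n_r, n_g))
     + 0.01 * rng.standard_normal((n_t, n_g)) + 2.0)
q_bar = q.mean(axis=0, keepdims=True)          # mean over snapshots, shape (1, n_g)

# POD basis: leading right singular vectors of the fluctuation matrix
_, _, Vt = np.linalg.svd(q - q_bar, full_matrices=False)
Gamma = Vt[:n_r]                               # reduced basis, shape (n_r, n_g)
q_pod = q_bar + (q - q_bar) @ Gamma.T @ Gamma  # POD reconstruction (cf. Eq. 4)

# Linear AE (phi(z) = z) with the weights of Eq. (7) instead of trained ones
W, b = Gamma.T, -q_bar @ Gamma.T               # encoder weights and bias
W_hat, b_hat = Gamma, q_bar                    # decoder weights and bias
r = q @ W + b                                  # latent variables, shape (n_t, n_r)
q_ae = r @ W_hat + b_hat                       # reconstruction (cf. Eq. 6)

print(np.allclose(q_pod, q_ae))                      # True: identical reconstructions
print(np.linalg.norm(q - q_ae) / np.linalg.norm(q))  # small: only the noise is lost
```

With nonlinear activations as in Eq. 8, no such closed-form replacement exists, which is why the weights then have to be obtained by training.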

As discussed above, the choice of nonlinear activation function is crucial for outperforming the traditional linear method. In this study, we consider various activation functions, as summarized in Fig. 5. These cover a wide variety of commonly used nonlinear functions, although they do not exhaust those used in the machine learning field. We refer the readers to Nwankpa et al. [78] for the details of each nonlinear activation function. For the baseline model of each example, we use the rectified linear unit (ReLU) [79], which is widely known as a good candidate in terms of weight updates. Note in passing that we use the same activation function in all layers of each model; namely, mixed configurations, e.g., ReLU for the first two layers and tanh for the remaining layers, are not considered. In the present paper, we perform three-fold cross validation [80], although only representative flow fields with ensemble error values are reported. For readers’ information, the training cost with the representative number of latent variables for each case, performed on an NVIDIA Tesla V100 graphics processing unit (GPU), is summarized in Table 3.
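For reference, the activation functions compared in this study can be written compactly in NumPy. The Leaky ReLU slope (0.01) and the ELU parameter (1.0) are common default values and are assumptions here, since the values used in the present models are not stated in this section.

```python
import numpy as np

LEAKY_SLOPE = 0.01   # assumed negative-side slope of Leaky ReLU
ELU_ALPHA = 1.0      # assumed ELU saturation parameter


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


ACTIVATIONS = {
    "linear": lambda z: z,
    "sigmoid": sigmoid,
    "tanh": np.tanh,
    "relu": lambda z: np.maximum(z, 0.0),
    "leaky_relu": lambda z: np.where(z > 0, z, LEAKY_SLOPE * z),
    # expm1 is evaluated on min(z, 0) to avoid overflow for large positive z
    "elu": lambda z: np.where(z > 0, z, ELU_ALPHA * np.expm1(np.minimum(z, 0.0))),
    "swish": lambda z: z * sigmoid(z),
    "softsign": lambda z: z / (1.0 + np.abs(z)),
}

z = np.array([-2.0, 0.0, 2.0])
for name, phi in ACTIVATIONS.items():
    print(f"{name:>10}: {np.round(phi(z), 3)}")
```

Keeping the functions in one dictionary makes it easy to build otherwise identical models that differ only in the activation, which is the comparison made in this study.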

Setups for Covered Examples of Fluid Flows

In the present study, we cover a wide range of fluid flow behavior by considering four examples, including laminar and turbulent flows, as shown in Fig. 6a. The broad spread of complexity among these flows can also be seen in the cumulative singular value spectra presented in Fig. 6b.
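A cumulative singular value spectrum like that of Fig. 6b can be computed from a snapshot matrix in a few lines. The sketch below assumes that the temporal mean is removed first and that the spectrum is the normalized cumulative sum of the singular values (some works accumulate their squares, i.e., the energy, instead); the two toy data sets are hypothetical stand-ins for a laminar and a broadband field.

```python
import numpy as np


def cumulative_sv_spectrum(q):
    """Normalized cumulative sum of the singular values of q (n_t x n_g) minus its mean."""
    sigma = np.linalg.svd(q - q.mean(axis=0), compute_uv=False)
    return np.cumsum(sigma) / np.sum(sigma)


rng = np.random.default_rng(0)
t = np.linspace(0.0, 20.0, 400)[:, None]    # 400 snapshots
x = np.linspace(0.0, 1.0, 64)[None, :]      # 64 grid points
laminar = np.sin(t - 6.0 * x)               # travelling wave: exactly two modes
broadband = rng.standard_normal((400, 64))  # energy spread over all modes

print(cumulative_sv_spectrum(laminar)[:3])    # close to 1 already at the second mode
print(cumulative_sv_spectrum(broadband)[:3])  # grows slowly with the mode number
```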

Two-Dimensional Cylinder Wake at \(Re_D=100\)

The first example is a two-dimensional cylinder wake at \({Re}_D=100\). The data set is prepared using a two-dimensional direct numerical simulation (DNS) [81]. The governing equations are the continuity and incompressible Navier–Stokes equations,

\[ \nabla\cdot\varvec{u}=0,\qquad \frac{\partial\varvec{u}}{\partial t}+\nabla\cdot(\varvec{u}\varvec{u})=-\nabla p+\frac{1}{Re_D}\nabla^2\varvec{u}, \]

where \(\varvec{u}=[u,v]\) and p are the velocity vector and pressure, respectively. All quantities are non-dimensionalized by the fluid density, the free-stream velocity, and the cylinder diameter. The size of the computational domain is (\(L_x, L_y\)) = (25.6, 20.0), and the cylinder center is located at \((x, y)=(9,0)\). A Cartesian grid with the grid spacing of \(\Delta x=\Delta y = 0.025\) is used, and the number of grid points for the present DNS is \((N_x, N_y)=(1024, 800)\). For the machine learning, only the flow field around the cylinder, \(8.2 \le x \le 17.8\) and \(-2.4 \le y \le 2.4\) with \((N_x^*, N_y^*)=(384, 192)\), is extracted as the data set. We use the vorticity field \(\omega\) as the data attribute. The sampling interval of the flow data is 0.25, corresponding to approximately 23 snapshots per shedding period at a Strouhal number of 0.172. For training the present AE model, we use 1000 snapshots.
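The grid sizes and the sampling rate quoted above follow from simple arithmetic, which the short check below reproduces. It assumes that the number of points equals the extent divided by the grid spacing and that the shedding period in these units is \(1/St\).

```python
dx = 0.025                              # grid spacing (dx = dy)
Lx, Ly = 25.6, 20.0                     # computational domain
x0, x1, y0, y1 = 8.2, 17.8, -2.4, 2.4   # window extracted for machine learning
St, dt = 0.172, 0.25                    # Strouhal number and sampling interval

Nx, Ny = round(Lx / dx), round(Ly / dx)
Nx_star, Ny_star = round((x1 - x0) / dx), round((y1 - y0) / dx)
snapshots_per_period = 1.0 / (St * dt)  # period T = 1 / St in units of D / U

print(Nx, Ny)                           # 1024 800
print(Nx_star, Ny_star)                 # 384 192
print(round(snapshots_per_period, 2))   # 23.26
```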

Transient Wake at \(Re_D=100\)

We then consider a transient wake behind the cylinder, which is not periodic in time. Since almost the same computational procedure is applied as in the periodic shedding case, we only briefly describe the differences. The training data are obtained from the DNS similarly to the first example. Here, the domain is extended threefold in the x direction such that \((N^*_x,N^*_y)=(1152,192)\) with \((L^*_x,L^*_y)=(28.8,4.8)\). As in the first example, we use the vorticity field \(\omega\) as the data attribute. For training the present AE model, we use 4000 snapshots, sampled at a finer interval than in the periodic shedding case to correctly capture the transient nature.

NOAA Sea Surface Temperature

The third example is the NOAA sea surface temperature data set [82] obtained from satellite and ship observations. The aim of using this data set is to examine the applicability of AE to more practical situations, such as cases without known governing equations. Note that this data set is dominated by seasonal periodicity. The spatial resolution is \(360\times 180\), based on a one-degree grid. We use 20 years of data, from 1981 to 2001, corresponding to 1040 snapshots (52 per year).

\(y-z\) Sectional Field of Turbulent Channel Flow at \(Re_{\tau }=180\)

To investigate the capability of AE for chaotic turbulence, we finally consider the streamwise velocity field in a cross-sectional (\(y-z\)) plane of a turbulent channel flow at \({Re}_{\tau }=180\) under a constant pressure gradient. The data set is prepared with the DNS code developed by Fukagata et al. [83]. The governing equations are the continuity and incompressible Navier–Stokes equations, i.e.,

\[ \nabla\cdot\varvec{u}=0,\qquad \frac{\partial\varvec{u}}{\partial t}+\nabla\cdot(\varvec{u}\varvec{u})=-\nabla p+\frac{1}{Re_\tau}\nabla^2\varvec{u}, \]

where \(\displaystyle {{\varvec{u}} = [u~v~w]^{\mathrm T}}\) represents the velocity, with u, v and w in the streamwise (x), wall-normal (y) and spanwise (z) directions. Also, t is the time, p is the pressure, and \({{Re}_\tau = u_\tau \delta /\nu }\) is the friction Reynolds number. The quantities are non-dimensionalized by the channel half-width \(\delta\) and the friction velocity \(u_\tau\). The size of the computational domain and the number of grid points are \((L_{x}, L_{y}, L_{z}) = (4\pi \delta , 2\delta , 2\pi \delta )\) and \((N_{x}, N_{y}, N_{z}) = (256, 96, 256)\), respectively. The grids in the x and z directions are uniform, and a non-uniform grid is applied in the y direction. The no-slip boundary condition is applied at the walls, and the periodic boundary condition is used in the x and z directions. We use the streamwise velocity fluctuation \(u^\prime\) in a \(y-z\) section as the data attribute for the present AE.
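Because lengths are scaled by \(\delta\) and \(Re_\tau = u_\tau\delta/\nu\), a length in wall units equals the nondimensional length times \(Re_\tau\). The check below applies this to the uniform x and z grids and counts the points in one \(y-z\) section; the compression ratio for \(n_r=1024\) latent variables is included only to illustrate the arithmetic.

```python
import math

Re_tau = 180                          # friction Reynolds number
Lx, Lz = 4 * math.pi, 2 * math.pi     # streamwise / spanwise lengths (units of delta)
Nx, Ny, Nz = 256, 96, 256             # grid points in x, y, z

dx_plus = Lx / Nx * Re_tau            # uniform streamwise spacing in wall units
dz_plus = Lz / Nz * Re_tau            # uniform spanwise spacing in wall units
n_section = Ny * Nz                   # values in one y-z snapshot of u'
n_r = 1024                            # number of latent variables (from the text)

print(f"dx+ = {dx_plus:.2f}, dz+ = {dz_plus:.2f}")  # dx+ = 8.84, dz+ = 4.42
print(n_section, n_section / n_r)                   # 24576 24.0
```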

Results

In this section, we examine the influence of the number of latent modes (“Number of Latent Modes”), the choice of activation function for the hidden layers (“Choice of Activation Function”), and the number of weights contained in an autoencoder (“Number of Weights”) using the data sets introduced above.

Number of Latent Modes

Let us first investigate how the reconstruction accuracy of the autoencoder (AE) depends on the number of latent variables \(n_r\). The expected trend for all data sets is that the reconstruction error increases as \(n_r\) decreases, since the AE needs to discard information while extracting the dominant features of high-dimensional flow fields into a latent space of limited dimension.

Fig. 7 The relationship between the number of latent variables \(n_r\) and the \(L_2\) error norm \(\epsilon = ||{\varvec{q}}_\mathrm{Ref}-{\varvec{q}}_\mathrm{ML}||_2/||{\varvec{q}}_\mathrm{Ref}||_2\). a Two-dimensional cylinder wake. b Transient flow. c NOAA sea surface temperature. d \(y-z\) sectional streamwise velocity fluctuation of turbulent channel flow. Three-fold cross validation is performed, although its results are not shown

The relationship between the number of latent variables \(n_r\) and the \(L_2\) error norm \(\epsilon = ||{\varvec{q}}_\mathrm{Ref}-{\varvec{q}}_\mathrm{ML}||_2/||{\varvec{q}}_\mathrm{Ref}||_2\), where \({\varvec{q}}_\mathrm{Ref}\) and \({\varvec{q}}_\mathrm{ML}\) indicate the reference field and the field reconstructed by an AE, is summarized in Fig. 7. For the periodic shedding case, the structure of the whole field can be captured with only two modes, as presented in Fig. 7a. However, the \(L_2\) error norm at \(n_r=2\) is approximately 0.4, which is relatively large given that the periodic shedding behind a cylinder at \(Re_D=100\) can be represented by only one scalar value [84]. This error is also larger than the \(L_2\) error norms reported in previous studies [49, 85], which handled the cylinder wake at \(Re_D=100\) using AEs with \(n_r=2\). This is likely due to the choice of activation function; in fact, we find a significant difference depending on this choice, as explained in “Choice of Activation Function”. It is also striking that the \(L_2\) error unexpectedly increases at \(n_r=10^1\)–\(10^2\). This indicates that the number of weights \(n_w\) contained in the AE also has a non-negligible effect on the mapping ability, since the number of weights varies with \(n_r\) in our AE setting, as can be seen in Table 1, e.g., \(n_w(n_r=128)>n_w(n_r=256)\). This point is investigated in “Number of Weights”. We then apply the AE to the transient flow, as shown in Fig. 7b. Since the flow in this transient case is also laminar, the overall trend of the wake can be represented with a few modes, e.g., 2 and 16 modes. The behavior of the AE for data without a modeled governing equation can be seen in Fig. 7c. As shown, the entire trend of the field can be extracted with only two modes, since the data considered here are driven by the seasonal periodicity, as mentioned above. 
The rise in the error at \(n_r=128\) is likely due to the influence of the number of weights, which is also observed in the cylinder example. The AE performance for the turbulent case, shown in Fig. 7d, differs strikingly from that for the previous three examples: the number of modes required to represent finer scales is visibly larger than in the other cases, since turbulence contains a much wider range of scales. The difficulty of compressing turbulence is also reflected in the \(L_2\) error norms.
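The error norm \(\epsilon\) used in Fig. 7 can be evaluated as below. Whether the norm is taken over all snapshots at once or per snapshot and then averaged is not specified here, so both variants are offered as an assumption; they coincide when every snapshot has the same relative error, as in the toy example.

```python
import numpy as np


def l2_error(q_ref, q_ml, per_snapshot=False):
    """Relative L2 error ||q_ref - q_ml||_2 / ||q_ref||_2.

    q_ref, q_ml: arrays of shape (n_t, ...) with snapshots along axis 0.
    per_snapshot=False: a single norm over the whole array;
    per_snapshot=True: the error of each snapshot, averaged over snapshots.
    """
    if per_snapshot:
        axes = tuple(range(1, q_ref.ndim))
        num = np.sqrt(np.sum((q_ref - q_ml) ** 2, axis=axes))
        den = np.sqrt(np.sum(q_ref ** 2, axis=axes))
        return float(np.mean(num / den))
    return float(np.linalg.norm(q_ref - q_ml) / np.linalg.norm(q_ref))


rng = np.random.default_rng(0)
q_ref = rng.standard_normal((10, 32, 16))  # 10 snapshots on a 32 x 16 grid
q_ml = 0.8 * q_ref                         # a reconstruction 20 % too weak everywhere
print(l2_error(q_ref, q_ml), l2_error(q_ref, q_ml, per_snapshot=True))
```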

To further examine how the reconstruction ability of AEs depends on the number of latent modes in terms of the scales in turbulence, we check the premultiplied spanwise turbulent kinetic energy spectrum \(k_z^+E^+_{uu}(k_z^+)\) in Fig. 8. As shown there, the error level \(||k^+_zE^+_{uu}(k^+_z)_\mathrm{Ref}-k^+_zE^+_{uu}(k^+_z)_\mathrm{ML}||_2/||k^+_zE^+_{uu}(k^+_z)_\mathrm{Ref}||_2\) decreases with increasing \(n_r\), especially in the low-wavenumber range. This implies that the AE model preferentially extracts the information in the low-wavenumber region. Noteworthy here is that portions with a low error level suddenly appear in the high-wavenumber region, i.e., the white lines at \(n_r=288\) and 1024 in the \(L_2\) error map of Fig. 8. These correspond to the crossing points of the \(E^+_{uu}(k^+_z)\) curves of DNS and AE, as can be seen in the lower left of Fig. 8. The under- or overestimation of the turbulent kinetic energy spectrum is caused by a combination of several factors, e.g., the underestimation of \(u^\prime\) due to the \(L_2\) fitting and the relationship between the squared velocity and the energy, \(\overline{u^{\prime 2}} = \int E^+_{uu}(k^+_z)\, dk^+_z\), although it is difficult to separate these contributions. Note that a similar observation is also reported by Scherl et al. [86], who applied robust principal component analysis to the channel flow.
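A minimal NumPy sketch of a one-sided spanwise spectrum of the kind shown in Fig. 8 follows. It assumes periodicity in z, a uniform spanwise grid, and averaging over the remaining (here wall-normal) index; the normalization is chosen so that the discrete analogue of \(\overline{u^{\prime 2}} = \int E_{uu}\,dk_z\) holds exactly. Conversion to wall units and averaging over time are omitted, and the random input is only a stand-in for \(u^\prime(y,z)\).

```python
import numpy as np


def spanwise_spectrum(u_prime, Lz):
    """One-sided spanwise spectrum E_uu(k_z) of u'(..., z), averaged over leading axes.

    Normalized so that sum(E) * dk_z equals mean(u_prime**2) (discrete Parseval).
    """
    nz = u_prime.shape[-1]
    dk = 2.0 * np.pi / Lz                     # spanwise wavenumber spacing
    c = np.fft.rfft(u_prime, axis=-1) / nz    # Fourier coefficients for k_z >= 0
    E = np.abs(c) ** 2
    E[..., 1:] *= 2.0                         # add the negative wavenumbers
    if nz % 2 == 0:
        E[..., -1] /= 2.0                     # the Nyquist mode has no twin
    E = E.reshape(-1, E.shape[-1]).mean(axis=0) / dk
    k_z = dk * np.arange(E.size)
    return k_z, E


rng = np.random.default_rng(0)
Lz, nz = 2.0 * np.pi, 256
u_prime = rng.standard_normal((96, nz))       # stand-in for u'(y, z)
k_z, E = spanwise_spectrum(u_prime, Lz)
premultiplied = k_z * E                       # k_z E_uu(k_z)
print(np.isclose(np.sum(E) * (k_z[1] - k_z[0]), np.mean(u_prime ** 2)))  # True
```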

Choice of Activation Function

Next, we examine the influence of the choice of activation functions on AE-based order reduction. As presented in Fig. 5, we cover a wide range of functions, namely linear mapping, sigmoid, hyperbolic tangent (tanh), rectified linear unit (ReLU, baseline), Leaky ReLU, ELU, Swish, and Softsign. Since the activation function is essential for accounting for nonlinearities in AE-based order reduction, as discussed in “The Role of Activation Functions in Autoencoder”, this choice is crucial for the construction of an AE. For each fluid flow example, we consider the same AE construction and parameters as in the first assessment (except for the activation function), choosing the models that achieved an \(L_2\) error norm of approximately 0.2. The example of sea surface temperature is an exception, since the highest \(L_2\) error over the covered numbers of latent modes is already lower than 0.2. Hence, the numbers of latent modes for each example here are \(n_r=16\) (cylinder wake), 16 (transient), 2 (sea surface temperature), and 1024 (turbulent channel flow), respectively.

Fig. 9 Dependence of the mapping ability on activation functions. a Two-dimensional cylinder wake. b Transient flow. c NOAA sea surface temperature. d \(y-z\) sectional streamwise velocity fluctuation of turbulent channel flow. Three-fold cross validation is performed, although its results are not shown. Values above the contours indicate the \(L_2\) error norm

The dependence of the AE reconstruction on activation functions is summarized in Fig. 9. Compared to the linear mapping \(\phi (z)=z\), the use of nonlinear activation functions improves the accuracy in almost all cases. However, as shown in Fig. 9a, the field reconstructed with the sigmoid function \(\phi (z)=(1+e^{-z})^{-1}\) is worse than the others, including the linear mapping, and shows a mode-0-like field. This is likely due to the widely known fact that the sigmoid function often suffers from vanishing gradients, which prevent the weights of neural networks from being updated properly [78]. The same trend can also be found with the transient flow, as presented in Fig. 9b. To overcome this issue, the swish function \(\phi (z)=z/(1+e^{-z})\), which has a form similar to the sigmoid, was proposed [87]. The swish function improves the accuracy of the AE-based order reduction for the periodic shedding example, although it does not for the transient case. These observations indicate the importance of checking the ability of each activation function to achieve an efficient order reduction. Another notable trend here is that the influence of the activation function differs depending on the target flow. In our investigation, an appropriate choice of nonlinear activation function yields significant improvement for the first three problem settings, i.e., periodic wake, transient, and sea surface temperature. In contrast, we do not see substantial differences among the nonlinear functions for the turbulence example. This implies that the range of scales contained in the flow also has a great impact on the mapping ability of an AE with nonlinear activation functions. In summary, activation functions should be chosen carefully to achieve acceptable order reduction with an AE, since their influence and abilities highly depend on the target flow.
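The vanishing-gradient argument can be quantified. During back propagation, the gradient passing through each layer is multiplied by the local derivative of the activation function; the sigmoid derivative \(\sigma(z)(1-\sigma(z))\) never exceeds 1/4, so after n layers that factor alone is at most \(4^{-n}\), whereas the swish derivative \(\sigma(z)+z\sigma(z)(1-\sigma(z))\) exceeds 1 for moderate positive z. The NumPy check below ignores the weight matrices, which also enter the product, so it is an illustration rather than a full analysis.

```python
import numpy as np

z = np.linspace(-10.0, 10.0, 20001)
sig = 1.0 / (1.0 + np.exp(-z))

d_sigmoid = sig * (1.0 - sig)        # derivative of the sigmoid
d_swish = sig + z * d_sigmoid        # derivative of swish, z * sigmoid(z)
d_relu = (z > 0).astype(float)       # derivative of ReLU (taken as 0 at z = 0)

n_layers = 10
print(f"max sigmoid slope: {d_sigmoid.max():.4f}")               # 0.2500
print(f"bound after {n_layers} layers: {0.25 ** n_layers:.2e}")  # 9.54e-07
print(f"max swish slope: {d_swish.max():.4f}")
print(f"ReLU slope for z > 0: {d_relu[z > 0].min():.1f}")        # 1.0
```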

Number of Weights

As discussed in “Number of Latent Modes”, the number of weights contained in AEs may also influence the mapping ability. Here, let us examine this point with the following two investigations:

1. by changing the number of parameters in the present AE (“Change of Parameters”)

2. by pruning weights in the present AE (“Pruning Operation”)

Change of Parameters

As mentioned above, the present AE consists of a convolutional neural network (CNN) and multi-layer perceptrons (MLP). To change the number of weights in the AE for the assessment here, we tune various parameters in both the CNN and the MLP, e.g., the number of hidden layers in the CNN and MLP, the number of units in the MLP, and the size and number of filters in the CNN. Note in passing that we focus only on the number of weights, although the way the number of weights is adjusted may also affect the AE's ability. For example, we do not distinguish between two models with the same number of weights \(n_{w,1}=n_{w,2}\), one obtained by tuning the number of layers and the other by changing the number of units, although their performance may differ depending on the tuned parameters. We consider the same AE construction and parameters as those used in the second assessment with the ReLU function, i.e., the models that achieved an \(L_2\) error norm of approximately 0.2 in the first investigation. As in the investigation of activation functions, the numbers of latent modes \(n_r\) for each example are 16 (cylinder wake), 16 (transient), 2 (sea surface temperature), and 1024 (turbulent channel flow), respectively. The dependence of the mapping ability on \(n_w\) is investigated as follows:

1. The number of weights in the MLP \(n_{w,\mathrm{MLP}}\) is fixed, and the number of weights in the CNN \(n_{w,\mathrm{CNN}}\) is changed. The \(L_2\) error is assessed against the ratio to the original number of weights in the CNN, \(n_{w,\mathrm{CNN}}/n_{w,\mathrm{CNN:original}}\).

2. The number of weights in the CNN \(n_{w,\mathrm{CNN}}\) is fixed, and the number of weights in the MLP \(n_{w,\mathrm{MLP}}\) is changed. The \(L_2\) error is assessed against the ratio to the original number of weights in the MLP, \(n_{w,\mathrm{MLP}}/n_{w,\mathrm{MLP:original}}\).

The number of weights \(n_w=n_{w,\mathrm{CNN}}+n_{w,\mathrm{MLP}}\) in each baseline model is summarized in Table 4. Recall that the CNN first reduces the dimension of the flow field by \(\mathcal{O}(10^2)\), and the MLP then maps the result into the target dimension, as stated in “Machine Learning Schemes for Construction of Autoencoder”. Accordingly, the models for the cylinder wake, transient flow, and sea surface temperature contain both a CNN and an MLP. In contrast, no MLP is used in the model for the turbulent channel flow, because the target dimension \(n_r=1024\) is already reached by the CNN alone. For this reason, only the influence of the CNN weights is considered in the turbulent case.
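The bookkeeping behind \(n_w=n_{w,\mathrm{CNN}}+n_{w,\mathrm{MLP}}\) can be sketched in Python as follows. This is a minimal illustration, not the authors' code: the layer shapes are hypothetical, biases are assumed to be trainable, and only an encoder is counted. It also shows why MLP weights grow quickly: the count of a convolutional layer depends only on the kernel size and channel numbers, whereas that of a fully connected layer is the product of its input and output sizes.

```python
def conv2d_params(kernel_h, kernel_w, in_ch, out_ch, bias=True):
    """Trainable weights of a 2-D convolution: one kernel per (input, output) channel pair.

    The count does not depend on the spatial size of the flow field.
    """
    return kernel_h * kernel_w * in_ch * out_ch + (out_ch if bias else 0)


def dense_params(n_in, n_out, bias=True):
    """Trainable weights of a fully connected layer: every input unit feeds every output unit."""
    return n_in * n_out + (n_out if bias else 0)


def count_ae_weights(conv_layers, dense_layers):
    """Return (n_w_CNN, n_w_MLP, n_w) for lists of layer specifications."""
    n_cnn = sum(conv2d_params(*spec) for spec in conv_layers)
    n_mlp = sum(dense_params(*spec) for spec in dense_layers)
    return n_cnn, n_mlp, n_cnn + n_mlp


if __name__ == "__main__":
    # Hypothetical encoder, not the paper's architecture: three 3x3 convolutions,
    # then an MLP from a flattened 4-channel 16x16 feature map (1024 values) to n_r = 16.
    conv = [(3, 3, 1, 16), (3, 3, 16, 8), (3, 3, 8, 4)]
    mlp = [(1024, 256), (256, 16)]
    print(count_ae_weights(conv, mlp))  # (1612, 266512, 268124)
    # Doubling both sizes of the first dense layer roughly quadruples its weights.
    print(dense_params(1024, 256), dense_params(2048, 512))  # 262400 1049088
```

Even in this small example the two dense layers hold about 99% of the weights, which is consistent with the caution about MLPs expressed in this section.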

Figure 10 summarizes the dependence of the error on the number of weights, tuned by changing the parameters. The expected trend is that the error decreases as the number of weights increases, which is observed for the turbulent channel flow in Fig. 10d. The same trend is observed with the parameter modification in the CNN for the periodic shedding and transient cylinder flows, as presented in Figs. 10a and b. The error curves for the parameter modification in the MLP, however, show the opposite trend in both cases: the error increases with the number of weights. This is likely because the MLP then contains too many weights, and this depth makes the weight updates difficult, even with the ReLU function. For the sea surface temperature models, the error curves for both the CNN and the MLP are valley-shaped, implying that the numbers of weights at the minima are appropriate in terms of weight updates. For the channel flow, the error converges to approximately 0.15, as shown in Fig. 10d, but note that this requires almost \(n_w/n_{w,\mathrm{original}}=10\). Such a large model incurs a heavy computational burden in terms of both time and storage. In summary, although increasing the number of weights generally improves the AE's ability, the parameters of the AE, which directly determine the number of weights, should be chosen carefully depending on the user's environment. Particular care is required when an AE includes an MLP, since the number of weights in a fully connected layer scales with the product of its input and output sizes and therefore grows much faster than that of a convolutional layer, whose weight count does not depend on the size of the input field.

Pruning Operation

Through the investigations above, we found that an appropriate choice of parameters improves the mapping ability of the AE. Although the weight-update problem reported above may arise, increasing the number of weights \(n_w\) is also essential to achieve better AE compression. However, even if an AE with a large number of weights can be trained well, the increase in \(n_w\) directly translates into a computational burden in terms of both time and storage when the trained model is used a posteriori. Our next question is therefore whether an AE trained with a large number of weights can maintain its mapping ability after pruning. Pruning removes connections (edges) in a neural network and is known to be a good candidate for reducing the computational burden while maintaining accuracy in both classification and regression tasks [88]. Here we assess whether pruning can be applied to AEs for fluid flow data. As illustrated in Fig. 11, we prune each layer using a threshold based on the maximum weight magnitude in that layer, \(|W|_\mathrm{th}=\mu |W|_\mathrm{max}\), where \(W\) denotes the weights and \(\mu\) is the sparsity threshold coefficient. The original model without pruning corresponds to \(\mu =0\). Pruning is applied to the CNN as well as to the MLP. For this assessment, we use the best models from the investigation of activation functions (“Choice of Activation Function”), summarized in Table 5.
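The per-layer threshold \(|W|_\mathrm{th}=\mu |W|_\mathrm{max}\) and the resulting sparsity factor \(\gamma =1-n_w/n_{w,\mathrm{original}}\) can be sketched with NumPy as below. This is a hedged illustration rather than the authors' implementation: we assume that weights whose magnitudes fall below the threshold are set to zero, and that each array passed in is pruned independently. The toy arrays stand in for trained layers and are not taken from the paper.

```python
import numpy as np


def prune_layer(w, mu):
    """Magnitude pruning of one layer with threshold |W|_th = mu * |W|_max.

    Weights whose magnitude falls below the threshold are set to zero (assumed rule);
    mu = 0 keeps every weight.
    """
    threshold = mu * np.max(np.abs(w))
    mask = np.abs(w) >= threshold
    return np.where(mask, w, 0.0), mask


def prune_model(layers, mu):
    """Prune every weight array separately; return the pruned arrays and gamma."""
    pruned, n_kept, n_total = [], 0, 0
    for w in layers:
        w_pruned, mask = prune_layer(w, mu)
        pruned.append(w_pruned)
        n_kept += int(mask.sum())
        n_total += w.size
    gamma = 1.0 - n_kept / n_total  # sparsity factor: 1 - n_w / n_w,original
    return pruned, gamma


if __name__ == "__main__":
    # Toy weight arrays standing in for two layers of a trained AE.
    layers = [np.array([[0.1, -0.5], [1.0, 0.05]]), np.array([0.2, -0.2, 0.4])]
    for mu in (0.0, 0.3, 0.6):
        _, gamma = prune_model(layers, mu)
        print(f"mu = {mu:.1f}  gamma = {gamma:.3f}")
```

Sweeping \(\mu\) in this way traces \(\gamma\) as a function of \(\mu\); the reconstruction error would then be evaluated by loading the pruned weights back into the AE.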

Fig. 12 Pruning for AEs with fluid flows. (a) Relationship between the sparsity threshold coefficient \(\mu\) and the sparsity factor \(\gamma =1-n_w/n_{w,\mathrm{original}}\); the number of singular modes that achieves \(80\%\) cumulative energy, \(n_{M,~e=0.8}\), is also shown. (b) Relationship between the sparsity factor \(\gamma\) and the \(L_2\) error norm \(\epsilon\). (c) Representative flow fields of each example.

Figure 12 presents the results of the pruning operation for the AEs. To examine the weight distribution of the AEs for each problem setting, the relationship between the sparsity threshold coefficient \(\mu\) and the sparsity factor \(\gamma =1-n_w/n_{w,\mathrm{original}}\) is shown in Fig. 12a. In all cases, \(\gamma\) increases with \(\mu\), as expected. Notably, the sparsity of the model for the turbulent channel flow is lower than that of the other models over the considered range of \(\mu\). This indicates a significant difference in the weight distributions of the AEs: in the turbulence example, the contribution of the weights to the reconstruction is distributed more uniformly across the entire network. In contrast, for the laminar cases, i.e., the periodic shedding and transient flows, a smaller number of weights have large magnitudes and contribute to the reconstruction. The curve for the sea surface temperature model lies between them. Strikingly, this trend is analogous to the singular value spectra in Fig. 6. The relationship between the sparsity factor \(\gamma\) and the \(L_2\) error norm is shown in Fig. 12b, and representative flow fields are shown in Fig. 12c. Reasonable reconstruction is maintained up to approximately 10–20% pruning. For reference, Fig. 12a also shows the number of singular modes that achieves 80% cumulative energy, \(n_{M,~e=0.8}\). Users can choose the sparsity factor \(\gamma\) to attain a target error depending on the target flow. In summary, efficient order reduction with AEs can be achieved by carefully considering the methods and parameters examined in the present paper.
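The quantity \(n_{M,~e=0.8}\) can be computed from a snapshot matrix with a singular value decomposition. The sketch below assumes that the energy of each mode is its squared singular value (the usual POD convention) and that the temporal mean is removed beforehand; the paper may define the cumulative energy differently, so both choices should be read as assumptions.

```python
import numpy as np


def n_modes_for_energy(snapshots, e=0.8, subtract_mean=True):
    """Smallest number of singular modes whose cumulative energy reaches the fraction e.

    snapshots: array of shape (n_grid, n_time), one flattened field per column.
    Mode energy is taken as sigma_i**2 (POD convention; an assumption here).
    """
    q = snapshots
    if subtract_mean:
        q = q - q.mean(axis=1, keepdims=True)  # remove the temporal mean field
    sigma = np.linalg.svd(q, compute_uv=False)  # singular values, descending
    energy = sigma**2
    cumulative = np.cumsum(energy) / energy.sum()
    # First index where the cumulative energy reaches e, converted to a mode count.
    return min(int(np.searchsorted(cumulative, e)) + 1, sigma.size)


if __name__ == "__main__":
    # Singular values 3, 2, 1 give cumulative energies 9/14, 13/14, 14/14.
    toy = np.diag([3.0, 2.0, 1.0])
    print(n_modes_for_energy(toy, e=0.8, subtract_mean=False))  # 2
```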

Concluding Remarks

We have assessed a neural-network-based model order reduction method, i.e., the autoencoder (AE), for fluid flows. The present AE, which comprises a convolutional neural network and multi-layer perceptrons, was applied to four data sets: the two-dimensional cylinder wake, its transient process, the NOAA sea surface temperature, and turbulent channel flow. The model was evaluated with respect to several parameters that determine the construction of the AE. The first investigation, on the number of latent modes, clearly showed that the mapping ability of the AE depends strongly on the target flow, i.e., on its complexity. This assessment also highlighted the need to investigate the choice of activation function and the effect of the number of weights contained in the AE. Motivated by these findings, we then considered the choice of activation function. We found that the activation functions at the hidden layers of the AE must be chosen carefully to achieve effective order reduction, because their influence depends strongly on the target flow. Finally, we assessed the dependence of the reconstruction accuracy of the AE on the number of weights contained in the model. We showed that care should be taken when changing the number of parameters of AE models, because a large number of weights may make the weight updates difficult. The use of pruning was also examined as a candidate for reducing the number of weights. Although retraining pruned neural networks is known to potentially improve their capability [89], this strategy is left for future work.

We should also discuss the remaining issues and possible outlooks for the present form of AE. One of them is the interpretability of the latent space, which may be regarded as a weakness of neural-network-based model order reduction due to its black-box character. Since the AE-based modes are not orthogonal to each other, it is still hard to understand the role of each latent vector in the reconstruction [44]. To tackle this issue, Murata et al. [49] proposed a customized CNN-AE that can visualize the individual modes obtained by the AE, considering a laminar cylinder wake and its transient process. However, they also reported that applications to flows requiring many spatial modes for their representation, e.g., turbulence, remain challenging with their formulation. This is because the structure of the proposed AE becomes more complicated as the complexity of the target problem increases, which eventually hampers interpretability. The difficulty of applying AEs to turbulence was also observed in the present paper, as discussed above. More recently, Fukami et al. [85] have attempted to use the concept of a hierarchical AE to handle turbulent flows efficiently, although further improvement is still needed to handle turbulent flows in a sufficiently interpretable manner. The challenges above are only examples; through such continuous efforts, we hope to establish more efficient and elegant model order reduction with new AE designs in the near future.

Furthermore, of particular interest is how a high-dimensional, nonlinear dynamical system can be controlled by exploiting the capability of AEs demonstrated in the present study, i.e., efficient order reduction of data enabled by the nonlinear operations inside neural networks. From this viewpoint, it is widely known that finding appropriate coordinates with model order reduction techniques, including the AE considered in the present study, is also important: describing the dynamics in a proper coordinate system enables us to apply a broad variety of modern control theory in a computationally efficient manner while retaining interpretability and generalizability [90]. To that end, several studies have enforced linearity in the latent space of AEs [91, 92], although their demonstrations are still limited to low-dimensional problems far from practical applications. We expect that the knowledge obtained through the present study, which addresses complex fluid flow problems, can be transferred to such linearity-enforcing AE techniques toward more efficient control of nonlinear dynamical systems in the near future.

References

Lumley JL. In: Yaglom AM, Tatarski VI, editors. Atmospheric turbulence and radio wave propagation. Nauka; 1967.

Schmid PJ. Dynamic mode decomposition of numerical and experimental data. J Fluid Mech. 2010;656:5–28.

McKeon BJ, Sharma AS. A critical-layer framework for turbulent pipe flow. J Fluid Mech. 2010;658:336–82.

Taira K, Brunton SL, Dawson STM, Rowley CW, Colonius T, McKeon BJ, Schmidt OT, Gordeyev S, Theofilis V, Ukeiley LS. Modal analysis of fluid flows: an overview. AIAA J. 2017;55(12):4013–41.

Taira K, Hemati MS, Brunton SL, Sun Y, Duraisamy K, Bagheri S, Dawson S, Yeh CA. Modal analysis of fluid flows: applications and outlook. AIAA J. 2020;58(3):998–1022.

Noack BR, Morzynski M, Tadmor G. Reduced-order modelling for flow control, vol. 528. Berlin: Springer Science & Business Media; 2011.

Noack BR, Papas P, Monkewitz PA. The need for a pressure-term representation in empirical Galerkin models of incompressible shear flows. J Fluid Mech. 2005;523:339–65.

Bagheri S. Koopman-mode decomposition of the cylinder wake. J Fluid Mech. 2013;726:596–623.

Liu Q, An B, Nohmi M, Obuchi M, Taira K. Core-pressure alleviation for a wall-normal vortex by active flow control. J Fluid Mech. 2018;853:R1.

Schmid PJ. Application of the dynamic mode decomposition to experimental data. Exp Fluids. 2011;50(4):1123–30.

Yeh CA, Taira K. Resolvent-analysis-based design of airfoil separation control. J Fluid Mech. 2019;867:572–610.

Nakashima S, Fukagata K, Luhar M. Assessment of suboptimal control for turbulent skin friction reduction via resolvent analysis. J Fluid Mech. 2017;828:496–526.

Brunton SL, Noack BR, Koumoutsakos P. Machine learning for fluid mechanics. Annu Rev Fluid Mech. 2020;52:477–508.

Brenner MP, Eldredge JD, Freund JB. Perspective on machine learning for advancing fluid mechanics. Phys Rev Fluids. 2019;4:100501.

Kutz JN. Deep learning in fluid dynamics. J Fluid Mech. 2017;814:1–4.

Duriez T, Brunton SL, Noack BR. Machine learning control: taming nonlinear dynamics and turbulence, vol. 116. Berlin: Springer; 2017.

Duraisamy K. Perspectives on machine learning-augmented Reynolds-averaged and large eddy simulation models of turbulence. Phys Rev Fluids. 2021;6(5):050504.

Duraisamy K, Iaccarino G, Xiao H. Turbulence modeling in the age of data. Annu Rev Fluid Mech. 2019;51:357–77.

Ling J, Kurzawski A, Templeton J. Reynolds averaged turbulence modelling using deep neural networks with embedded invariance. J Fluid Mech. 2016;807:155–66.

Gamahara M, Hattori Y. Searching for turbulence models by artificial neural network. Phys Rev Fluids. 2017;2(5):054604.

Maulik R, San O. A neural network approach for the blind deconvolution of turbulent flows. J Fluid Mech. 2017;831:151–81.

Maulik R, San O, Jacob JD, Crick C. Sub-grid scale model classification and blending through deep learning. J Fluid Mech. 2019;870:784–812.

Maulik R, San O, Rasheed A, Vedula P. Subgrid modelling for two-dimensional turbulence using neural networks. J Fluid Mech. 2019;858:122–44.

Yang X, Zafar S, Wang JX, Xiao H. Predictive large-eddy-simulation wall modeling via physics-informed neural networks. Phys Rev Fluids. 2019;4(3):034602.

Wu JL, Xiao H, Paterson E. Physics-informed machine learning approach for augmenting turbulence models: a comprehensive framework. Phys Rev Fluids. 2018;3(7):074602.

Fukami K, Nabae Y, Kawai K, Fukagata K. Synthetic turbulent inflow generator using machine learning. Phys Rev Fluids. 2019;4:064603.

Salehipour H, Peltier WR. Deep learning of mixing by two ‘atoms’ of stratified turbulence. J Fluid Mech. 2019;861:R4.

Cai S, Zhou S, Xu C, Gao Q. Dense motion estimation of particle images via a convolutional neural network. Exp Fluids. 2019;60:60–73.

Fukami K, Fukagata K, Taira K. Super-resolution reconstruction of turbulent flows with machine learning. J Fluid Mech. 2019;870:106–20.

Fukami K, Fukagata K, Taira K. In: 11th International Symposium on Turbulence and Shear Flow Phenomena (TSFP11). Southampton, UK; 2019. p. 208.

Huang J, Liu H, Cai W. Online in situ prediction of 3-D flame evolution from its history 2-D projections via deep learning. J Fluid Mech. 2019;875:R2.

Morimoto M, Fukami K, Fukagata K. Experimental velocity data estimation for imperfect particle images using machine learning. Phys Fluids. 2021;33:087121. https://doi.org/10.1063/5.0060760.

Fukami K, Fukagata K, Taira K. Machine-learning-based spatio-temporal super resolution reconstruction of turbulent flows. J Fluid Mech. 2021;909:A9. https://doi.org/10.1017/jfm.2020.948.

Morimoto M, Fukami K, Zhang K, Fukagata K. Generalization techniques of neural networks for fluid flow estimation. 2020. arXiv:2011.11911.

Erichson NB, Mathelin L, Yao Z, Brunton SL, Mahoney MW, Kutz JN. Shallow neural networks for fluid flow reconstruction with limited sensors. Proc Roy Soc A. 2020;476(2238):20200097.

Matsuo M, Nakamura T, Morimoto M, Fukami K, Fukagata K. Supervised convolutional network for three-dimensional fluid data reconstruction from sectional flow fields with adaptive super-resolution assistance. 2021. arXiv:2103.090205

Morimoto M, Fukami K, Zhang K, Nair AG, Fukagata K. Convolutional neural networks for fluid flow analysis: toward effective metamodeling and low-dimensionalization. Theor Comput Fluid Dyn. 2021. https://doi.org/10.1007/s00162-021-00580-0.

San O, Maulik R. Extreme learning machine for reduced order modeling of turbulent geophysical flows. Phys Rev E. 2018;97:042322.

Maulik R, Fukami K, Ramachandra N, Fukagata K, Taira K. Probabilistic neural networks for fluid flow surrogate modeling and data recovery. Phys Rev Fluids. 2020;5:104401.

Srinivasan PA, Guastoni L, Azizpour H, Schlatter P, Vinuesa R. Predictions of turbulent shear flows using deep neural networks. Phys Rev Fluids. 2019;4:054603.

Milano M, Koumoutsakos P. Neural network modeling for near wall turbulent flow. J Comput Phys. 2002;182:1–26.

Baldi P, Hornik K. Neural networks and principal component analysis: learning from examples without local minima. Neural Netw. 1989;2(1):53–8.

Fukami K, Fukagata K, Taira K. Assessment of supervised machine learning for fluid flows. Theor Comput Fluid Dyn. 2020;34(4):497–519.

Omata N, Shirayama S. A novel method of low-dimensional representation for temporal behavior of flow fields using deep autoencoder. AIP Adv. 2019;9(1):015006.

Hasegawa K, Fukami K, Murata T, Fukagata K. Data-driven reduced order modeling of flows around two-dimensional bluff bodies of various shapes. In: ASME-JSME-KSME Joint Fluids Engineering Conference; San Francisco, USA; 2019. Paper 5079.

Hasegawa K, Fukami K, Murata T, Fukagata K. Machine-learning-based reduced-order modeling for unsteady flows around bluff bodies of various shapes. Theor Comput Fluid Dyn. 2020;34(4):367–88.

Hasegawa K, Fukami K, Murata T, Fukagata K. CNN-LSTM based reduced order modeling of two-dimensional unsteady flows around a circular cylinder at different Reynolds numbers. Fluid Dyn Res. 2020;52:065501.

Nakamura T, Fukami K, Hasegawa K, Nabae Y, Fukagata K. Convolutional neural network and long short-term memory based reduced order surrogate for minimal turbulent channel flow. Phys Fluids. 2021;33:025116.

Murata T, Fukami K, Fukagata K. Nonlinear mode decomposition with convolutional neural networks for fluid dynamics. J Fluid Mech. 2020;882:A13. https://doi.org/10.1017/jfm.2019.822.

Erichson NB, Muehlebach M, Mahoney MW. Physics-informed autoencoders for Lyapunov-stable fluid flow prediction. 2019. arXiv:1905.10866.

Carlberg KT, Jameson A, Kochenderfer MJ, Morton J, Peng L, Witherden FD. Recovering missing CFD data for high-order discretizations using deep neural networks and dynamics learning. J Comput Phys. 2019;395:105–24.

Xu J, Duraisamy K. Multi-level convolutional autoencoder networks for parametric prediction of spatio-temporal dynamics. Comput Methods Appl Mech Eng. 2020;372:113379.

Maulik R, Lusch B, Balaprakash P. Reduced-order modeling of advection-dominated systems with recurrent neural networks and convolutional autoencoders. Phys Fluids. 2021;33:037106.

Liu Y, Ponce C, Brunton SL, Kutz JN. Multiresolution convolutional autoencoders. 2020. arXiv:2004.04946.

Saku Y, Aizawa M, Ooi T, Ishigami G. Spatio-temporal prediction of soil deformation in bucket excavation using machine learning. Adv Robot. 2021. https://doi.org/10.1080/01691864.2021.1943521.

Amsallem D, Farhat C. Interpolation method for adapting reduced-order models and application to aeroelasticity. AIAA J. 2008;46(7):1803–13.

Brunton SL, Kutz JN, Manohar K, Aravkin AY, Morgansen K, Klemisch J, Goebel N, Buttrick J, Poskin J, Blom-Schieber AW, et al. Data-driven aerospace engineering: reframing the industry with machine learning. AIAA J. 2021;59(8):2820–47.

Cheng TY, Li N, Conselice CJ, Aragón-Salamanca A, Dye S, Metcalf RB. Identifying strong lenses with unsupervised machine learning using convolutional autoencoder. Mon Not R Astron Soc. 2020;494(3):3750–65.

LeCun Y, Bottou L, Bengio Y, Haffner P. Gradient-based learning applied to document recognition. Proc IEEE. 1998;86(11):2278–324.

Fukami K, Maulik R, Ramachandra N, Fukagata K, Taira K. Global field reconstruction from sparse sensors with Voronoi tessellation-assisted deep learning. Nat Mach Intell. 2021. arXiv:2101.00554.

Lee S, You D. Data-driven prediction of unsteady flow fields over a circular cylinder using deep learning. J Fluid Mech. 2019;879:217–54.

Kim J, Lee C. Prediction of turbulent heat transfer using convolutional neural networks. J Fluid Mech. 2020;882:A18.

Lee S, You D. Mechanisms of a convolutional neural network for learning three-dimensional unsteady wake flow. 2019. arXiv:1909.06042.

Moriya N, Fukami K, Nabae Y, Morimoto M, Nakamura T, Fukagata K. Inserting machine-learned virtual wall velocity for large-eddy simulation of turbulent channel flows. 2021. arXiv:2106.09271.

Nakamura T, Fukami K, Fukagata K. Comparison of linear regressions and neural networks for fluid flow problems assisted with error-curve analysis. 2021. arXiv:2105.00913.

Le Q, Ngiam J, Chen Z, Chia D, Koh P. Tiled convolutional neural networks. Adv Neural Inf Proc Syst. 2010;23:1279–87.

Kingma DP, Ba J. Adam: a method for stochastic optimization. 2014. arXiv:1412.6980.

Rumelhart DE, Hinton GE, Williams RJ. Learning representations by back-propagating errors. Nature. 1986;323:533–6.

Domingos P. A few useful things to know about machine learning. Commun ACM. 2012;55(10):78–87.

Lui HFS, Wolf WR. Construction of reduced-order models for fluid flows using deep feedforward neural networks. J Fluid Mech. 2019;872:963–94.

Yu J, Hesthaven JS. Flowfield reconstruction method using artificial neural network. AIAA J. 2019;57(2):482–98.

Bergstra J, Yamins D, Cox D. Making a science of model search: hyperparameter optimization in hundreds of dimensions for vision architectures. Proc Int Conf Mach Learn. 2013;28:115–23.

Brochu E, Cora VM, de Freitas N. A tutorial on Bayesian optimization of expensive cost functions, with application to active user modeling and hierarchical reinforcement learning. Technical Report TR-2009-023. University of British Columbia; 2009.

Maulik R, Mohan A, Lusch B, Madireddy S, Balaprakash P. Time-series learning of latent-space dynamics for reduced-order model closure. Physica D. 2020;405:132368.

Prechelt L. Automatic early stopping using cross validation: quantifying the criteria. Neural Netw. 1998;11(4):761–7.

Bourlard H, Kamp Y. Auto-association by multilayer perceptrons and singular value decomposition. Biol Cybern. 1988;59(4–5):291–4.

Oja E. Simplified neuron model as a principal component analyzer. J Math Biol. 1982;15(3):267–73.

Nwankpa C, Ijomah W, Gachagan A, Marshall S. Activation functions: comparison of trends in practice and research for deep learning. 2018. arXiv:1811.03378.

Nair V, Hinton GE. Rectified linear units improve restricted Boltzmann machines. In: Proceedings of the 27th International Conference on Machine Learning; 2010. p. 807–14.

Brunton SL, Kutz JN. Data-driven science and engineering: machine learning, dynamical systems, and control. Cambridge: Cambridge University Press; 2019.

Kor H, Badri Ghomizad M, Fukagata K. A unified interpolation stencil for ghost-cell immersed boundary method for flow around complex geometries. J Fluid Sci Technol. 2017;12(1):JFST0011. https://doi.org/10.1299/jfst.2017jfst0011.

Available from https://www.esrl.noaa.gov/psd/.

Fukagata K, Kasagi N, Koumoutsakos P. A theoretical prediction of friction drag reduction in turbulent flow by superhydrophobic surfaces. Phys Fluids. 2006;18:051703.

Taira K, Nakao H. Phase-response analysis of synchronization for periodic flows. J Fluid Mech. 2018;846:R2.

Fukami K, Nakamura T, Fukagata K. Convolutional neural network based hierarchical autoencoder for nonlinear mode decomposition of fluid field data. Phys Fluids. 2020;32:095110.

Scherl I, Storm B, Shang JK, Williams O, Polagye BL, Brunton SL. Robust principal component analysis for modal decomposition of corrupt fluid flows. Phys Rev Fluids. 2020;5:054401.

Ramachandran P, Zoph B, Le QV. Searching for activation functions. 2017. arXiv:1710.05941.

Han S, Mao H, Dally WJ. Deep compression: Compressing deep neural networks with pruning, trained quantization and huffman coding. 2015. arXiv:1510.00149.

Han S, Pool J, Tran J, Dally W. Learning both weights and connections for efficient neural networks. Adv Neural Inf Process Syst. 2015;1:1135–43.

Fukami K, Murata T, Zhang K, Fukagata K. Sparse identification of nonlinear dynamics with low-dimensionalized flow representations. J Fluid Mech. 2021;926:A10. https://doi.org/10.1017/jfm.2021.697.

Lusch B, Kutz JN, Brunton SL. Deep learning for universal linear embeddings of nonlinear dynamics. Nat Commun. 2018;9(1):4950.

Champion K, Lusch B, Kutz JN, Brunton SL. Data-driven discovery of coordinates and governing equations. Proc Natl Acad Sci USA. 2019;116(45):22445–51.

Acknowledgements

This work was supported by the Japan Society for the Promotion of Science (KAKENHI Grant Numbers 18H03758 and 21H05007).

Ethics declarations

Conflict of Interest

The authors report no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Fukami, K., Hasegawa, K., Nakamura, T. et al. Model Order Reduction with Neural Networks: Application to Laminar and Turbulent Flows. SN COMPUT. SCI. 2, 467 (2021). https://doi.org/10.1007/s42979-021-00867-3


DOI: https://doi.org/10.1007/s42979-021-00867-3