Abstract

There are various fields are affected by the growth of data dimensionality. The major problems which are resulted from high dimensionality of data including high memory requirements, high computational cost, and low machine learning classifier performance. Therefore, proper selection of relevant features from the set of available features and the removal of irrelevant features will solve these problems. Therefore, to solve the feature selection problem, an improved version of Dragonfly Algorithm (DA) is proposed by combining it with Simulated Annealing (SA), where the improved algorithm named BDA-SA. To solve the local optima problem of DA and enhance its ability in selecting the best subset of features for classification problems, Simulated Annealing (SA) was applied to the best solution found by Binary Dragonfly algorithm in attempt to improve its accuracy. A set of frequently used data sets from UCI repository was utilized to evaluate the performance of the proposed FS approach. Results show that the proposed hybrid approach, named BDA-SA, has superior performance when compared to wrapper-based FS methods including a feature selection method based on the basic version of Binary Dragonfly Algorithm.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Recently, data mining field has become an active research area due to presence of the huge amount of data in digital format that needs to be transformed into useful information. The main task of data mining is to build models for the discovery of useful hidden patterns in collections of huge data. It is considered as an essential step in the knowledge discovery process [16]. Preprocessing of data is a critical step in data mining. It has a direct impact on data mining techniques such as classification. It affects the quality of discovered patterns and the accuracy of the classification models [16, 25]. Feature selection is one of the main pre-processing steps in data mining that aims to discard noisy and irrelevant features while retaining the useful and informative ones. Selecting the ideal or near ideal subset of given features leads to accurate classification results and less computational cost [6, 25]. Feature selection approaches are classified based on estimation criteria of selected subset of features into two classes filter and wrapper approaches [6]. Wrapper techniques heavily rely on their search for optimal subset of features on the accuracy of machine learning classifiers such as KNN or SVM, whereas filter techniques use scoring matrices such as chi-square and information gain to assess the goodness of the selected subset of features. More precisely, in filter approaches, attributes are ranked using a filter approach, e.g., chi-square, and then the attributes with less than a predefined threshold are removed [1, 6, 14].

Generally speaking, finding an optimal subset of features is a challenging task. FS has gained the interest of many researchers in data mining and machine learning fields [8, 14]. The literature shows that meta-heuristic techniques have been very effective in tackling many optimization problems like machine learning, engineering design, data mining, production problems and feature selection [38]. For feature selection problem, Genetic algorithm (GA) and Particle swarm optimization algorithm (PSO) have been successfully utilized for solving many feature selection problems [6, 7, 13, 17]. Moreover, many biologically inspired approaches such as simulated annealing (SA) [29], Tabe search (TS) [45], Ant Colony Optimization (ACO) [22], Binary Bat Algorithm [32]. Moth-Flame Optimization Algorithm [44], Antlion Optimization Algorithm [43], Dragonfly Optimization Algorithm [26], and Whale Optimization Algorithm (WOA) [35] have been efficiently applied to discover the best subsets of features for many classification problems.

As discussed in [37, 38], when designing and using meta-heuristic techniques, two main different criteria must be considered: diversification which refers to search space exploration, and intensification which means the exploitation of the optimal solution (e.,g., best subset of features found so far). Based on these criteria, meta-heuristic techniques can be distinguished into two branches. Population-based meta-heuristics (e.,g., PSO) and single-solution based techniques such as SA and TS which are biased towards exploitation. The performance of the search algorithm can be improved if an appropriate balance between the exploration and the exploitation is achieved. The desired balance between exploration and exploitation can be achieved via combining two techniques, e.g., population-based approach and single-solution based approach.

Various hybrid meta-heuristic approaches have been proposed for solving wide range of optimization problem. Hybrid approaches have also been popular, because they benefit from advantages of two or more algorithms [37]. In [18], to control the search procedure, local search approaches were embedded in GA algorithm. Obtained results show better performance in comparison with classical versions of GA. In [42], simulated annealing algorithm in conjunction with Genetic Algorithms were used to optimize industrial production management problem. In addition, a hybrid approach based on Markov chain and simulated annealing algorithms was designed to tackle the travel salesman problem [28]. Furthermore, in [4], Particle Swarm Optimization algorithm was hybridized with Simulated Annealing algorithm to avoid PSO in getting trapped in local optima. The designed approach was applied to deal with complex multi-dimensional optimization problem. Finally, in [3], the ACO algorithm was used in conjunction with a GA as a hybrid approach for feature selection in text classification domain. The proposed approach recorded better results in comparison with filter approaches and the classic version of ACO. Despite the effectiveness that meta-heuristic algorithms have shown to solve many optimization problems, they have some shortcomings such as their sensitivity to parameter tuning, finding the optimal parameters for difference optimization problems is very important. Furthermore, in terms of performance, many of the present optimization algorithms have difficulties in dealing with high dimension optimization problems [5]. In addition, the computational cost of using meta-heuristics in general and the hybrid meta-heuristic approaches in particular is relatively high.

In this work, SA is used to enhance the best solution found so far (subset of features) by the Binary Dragonfly Optimizer. The classic version of Binary Dragonfly Algorithm was used for feature selection [26], but to best of our knowledge, this is the first time that Binary Dragonfly hybridized with Simulated Annealing is utilized in feature selection domain. The remaining of this paper is organized as follows: related work is reviewed in section “Related Work”, whereas section “Algorithms” presents the BDA and SA Algorithms. Section “Proposed Feature Selection Method” explains the proposed FS approach. In section “Experiments”, the experimental results are presented and discussed. Conclusion and future work are shown in section “Conclusion”.

In this paper, there are number of key contributions as follows:

-

BDA-SA: an improved version of the standard binary version of BDA is proposed.

-

The main improvement includes the combination of DA with SA to solve the local optima problem of standard BDA.

-

BDA-SA wrapper feature selection model is developed in this paper.

-

We evaluated and compared BDA-SA with a number of well-known algorithms including (BDA, PSO, BGWO and BALO).

-

We compared the results with using all features, where we used 18 data sets from UCI repository which are frequently used by feature selection research. and ALO) using 18 benchmark data sets from UCI repository. From these results, it is clearly confirmed the superiority of BDA-SA in comparison to these baseline algorithms.

Related Work

Based on literature investigation, many optimization algorithms were improved by combining them with local search algorithms (LSA). For example, in a study by [11], Elgamal et al. improved Harris Hawks Optimization (HHO) Algorithm by SA and applied it for feature selection problem. In [2], the authors also improved water cycle optimization with SA and applied it for spam email detection. In work by [41], performance of Salp Algorithm (SSA) was enhanced by combining it with a new developed LSA and applied it for feature selection problem. Also, in [40], WOA was combined with new a new local search algorithm to overcome the problem of local optima, and applied it for rules selection problem. Furthermore, in [21], Jia et al. improved spotted hyena optimization using SA and applied it for feature selection problem. Also, Simulated Annealing was hybridized with GA in [27] to be utilized as feature selection method for the classification of power disturbance in the Power Quality problem. In [33], Genetic Algorithm GA was used with SA to extract features to tackle the examination timetabling problem. A local search strategy was embedded in Particle Swarm Optimization algorithm to guide the PSO during the search process for the best subset of feature in classification [31]. Mafarja and Mirjalili [25] proposed two hybrid wrapper feature selection approaches based on Whale Optimization and Simulated Annealing algorithms. The aim of using SA is to enhance the exploitation of WOA. Results showed that the proposed approaches improved the classification accuracy in comparison with other wrapper feature selection techniques.

The literature also revealed that many successful efforts were done to improve the performance of Dragonfly optimization algorithm. For instance, in [19], Sayed et al. used several chaotic maps to adjust the movement parameters of dragonflies of DA algorithm through the iterations to accelerate its convergence rate. Hammouri et al. in [15] adopted several functions such as linear, quadratic, and sinusoidal for updating the main coefficients of Binary Dragonfly algorithm. Also, a hyper learning strategy was utilized by [39] to boost the Binary Dragonfly algorithm to avoid local optima, and enhance its search behaviour for an ideal subset of features. the proposed method was applied to a coronavirus disease (COVID-19) data set. Experimental results demonstrated the ability of hyper learning based BDA in improving the classification accuracy. Finally, in [34], Qasim et al. proposed a feature selection approach in which the binary dragonfly algorithm (BDA) was hybridized with statistical dependence (SD). The proposed hybrid approach confirmed its efficiency in increasing the classification accuracy. Thus, based on the achieved results and improvements conducted in these mentioned studies, it has motivated us to combine SA as a LSA with DA to improve its search ability for feature selection problem.

Algorithms

Dragonfly Algorithm

Dragonfly Algorithm is a recent biologically inspired optimization approach which was proposed by Seyedali Mirjalili in 2015 [30]. It was found that dragonfly swarming behavior depends on two sorts of swarming behavior: hunting and migration [30, 36]. Hunting swarm of dragonflies moves in small subgroups over a restricted area to find and hunt preys. This behavior was utilized to simulate the exploration part of optimization process. In the migration behavior, in contrast with hunting swarm, dragonflies move a long one direction in bigger subgroups. This behavior was exploited to simulate the exploitation part of the optimization [30, 36]. Generally, the aim of swarm members is to co-operate to discover food places, and to protect themselves form danger of enemies. Based on these two aims, a set of factors is mathematically modeled for adjusting the positions of members in the swarm. The mathematical models for implementing the swarming behavior of dragonfly insects are given as follows [12, 26, 30]:

Separation indicates the way that flying dragonflies follow to avoid clashes between themselves. This can be mathematically written as in Eq. (1):

where X refers to the current search agent, while \(X_i\) denotes the \(j-\)th neighbor of X. N represents the number of neighbors. Alignment refers to the way of adjusting the velocity of an individual with respect to the velocity vector of other close dragonflies in the swarm. This can be mathematically written using Eq. (2):

where \(V_{j}\) refers to the j-th neighbor’s velocity vector.

Cohesion is a factor for position update of search agents that represents the desire of search agents to travel towards the mass center. It is mathematically written as in Eq. (3):

Attraction denotes the interest of search agents to travel in direction of food location. The tendency of \(i-\)th member in the swarm to move towards the food source is obtained using Eq. (4):

where \(F_{location}\) refers to the location of food source, and X refers to the current member.

Distraction refers to mechanism that dragonflies follow to flee from enemy. The distraction of \(i-\)th dragonfly is defined as in Eq. (5):

where \(E_{location}\) presents current position of the enemy, and X is the position of the current member.

To find the optimum solution for a given optimization problem, DA defines a position vector and a step vector for each search agent in the swarm. These vectors are utilized to update the positions of search agents in the search space of the given optimization problem. The step vector which refers to travelling direction of dragonflies is formulated as follow [26, 30]:

where s, a, c, f, and e are known as weighting factors for separation (\(S_i\)), alignment (\(A_i\)), cohesion (\(C_i\)), attraction (\(F_i\)) and distraction (\(E_i\)) of the i-th search agent, respectively. w refers to the inertia weight.

The obtained step vector (\(\varDelta X\)) is used to estimate the position vector of search agent X as follows:

where t indicates the current iteration.

The basic version of Dragonfly optimizer is proposed for the problems in continuous search space. The dragonflies can update their position by adding the step vector to the position vector. Feature selection is a binary optimization problem, so the update strategy as in Eq. (7) is not possible for binary search space. Mirjalili [30] utilized the following transfer function to convert the step vector values to a number restricted in [0,1].

The above transfer function is used to find the probability of updating the position of dragonflies in the swarm, and then the following equation is employed to update the positions of dragonflies (search agents):

where r is a number in the range of [0,1].

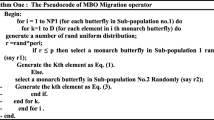

Algorithm 1 presents the pseudocode of Binary Dragonfly algorithm.

Simulated Annealing

SA is a single-solution based meta-heuristic optimization algorithm, introduced by Kirkpatrick et al. [23] in 1983. It has been widely used to tackle discrete and continuous optimization problems. SA is classified as a hill-climbing local search approach, in which a certain probability is used to decide weather to accept worse solution or not [23]. SA generates an initial solution (in our case, the best solution found so far by BDA is used as SA initial solution). A neighbour solution to the optimal one found so far is generated by SA based on fitness value of the neighbour and a specific neighbourhood structure. If the calculated fitness of neighbour is better (less or equal) than the fitness of optimal solution, then the neighbour solution is selected as the optimal one. Boltzmann probability, \(P=e^{-\frac{\Phi }{T}}\) is applied as an acceptance condition of the neighbour solution. \(\Phi\) refers to the difference between the fitness of the optimal and neighbour solutions, and T is named temperature which is gradually reduced based on cooling schedule throughout the search procedure [20, 23, 25]. In this paper, as adopted in [25], the initial temperature equals \(2*|N|\), where N is the number of features in each data set, and \(T= 0.93* T\), is applied to calculate the cooling schedule. Algorithm 2 presents the pseudocode of SA algorithm [25].

Proposed Feature Selection Method

Feature selection problem is distinguished as a binary optimization problem, so binary vectors are used to represent the solutions. In this way, if the value of a specific cell in the binary vector is set to 1, then the corresponding feature is retained. Otherwise, that feature is ignored. The size of the binary vector is equal to the number of features in the data set. Dragonfly optimization algorithm is a newly introduced optimization approach. The basic version of binary DA algorithm was used for feature selection in [26]. The main aim of this work is to improve the performance of BDA for feature solution problem. To achieve that purpose, the best solution obtained so for by BDA is passed to the SA algorithm to be used as an initial solution instead of the random generation of the initial solution. Therefore, SA will conduct a local search starting with the optimal solution found so far by BDA in attempt to find a better one. Figure 1 presents the flowchart of the proposed approach.

Feature selection is a multi-objective optimization problem, where the maximum accuracy of the classifier and least number of selected features are two related objectives need to be achieved. Eq. (10) is commonly used as fitness function for feature selection [6, 25, 26].

where er denotes the error rate of KNN machine learning classifier using the selected subset of features. \(\propto\) and \(\beta\) are two parameters used to make a balance between the classification accuracy and the size of subset of features (selected by the search agent), \(\propto\) is a number restricted in the range [0,1], and \(\beta\) equals 1 - \(\propto\). N refers to the total number of features in the data set, and m indicates the cardinality of the subset of features selected by the search agent. In this work, since we are mostly interested in getting the highest classification accuracy, \(\propto\) is set equal to 0.99 as in the previous work [26]. The value \(\propto\) is set 0.99, because in this work we improved the binary version of DA algorithm which was developed in [26], and we used the same setting in our experiments to compare our results with the results were reported in BDA [26].

In general, diversification has larger importance than intensification in exploring potentially useful areas of the feature space especially at the beginning of the search process. In later phases, exploitation has larger importance, because the search for better solutions around the best one found by the exploration phase is required [24]. Hybrid approaches such as BDA-SA in our case can be used to achieve the desired balance between exploring and exploiting the search space. However, in comparison with classic wrapper approaches, where a heuristic technique and an evaluator are used, the computational cost of utilizing hybrid approaches is higher.

Experiments

Data Sets

In this work, 18 data sets from UCI repository were used to assess the performance of the proposed feature selection approach [10]. They are the same data sets used by many researchers to evaluate various feature selection approaches. Table 1 outlines the details of applied data sets for evaluating the proposed Binary Dragonfly algorithm based feature selection approach.

Parameter Settings

As in [26], each data set is split into three equal sets: training set, validation set, and test set. In addition, K-fold-cross-validation procedure is used to evaluate KNN classifier (the parameter K of KNN classifier is set to five as adopted in [26]). Also, results of several metaheuristics-based wrapper feature selection algorithms including Binary Particle Swarm Optimization (BPSO), Binary Ant Lion Optimization (BALO), and Binary Gray Wolf Optimization (BGWO) were also used for comparison purpose. Furthermore, for Binary Dragonfly algorithm, the original paper of Dragonfly algorithm [30] comprehensively studied the appropriate values of swarming factors and the inertia weight, for that reason, the same best parameter settings reported in that paper were adopted in this work. Moreover, the same values of parameters adopted in [25] for SA algorithm were used. The parameters of BGWO, BPSO, and BALO algorithms were selected based on recommended setting reported in the original publications and related studies in feature selection domain. In all conducted experiments, common parameters were set as in Table 2. These values were set following a range of initial experiments. Comparison between approaches were made based on three criteria comprising classification accuracy, number of selected features, and best fitness. In addition, each approach was run 20 times with random initial solutions on a machine with Intel Core i5 processor 2.2 GHz and 4 GB of RAM.

Results and Discussion

This section presents all recorded results obtained from the proposed FS approach. The proposed hybrid approach BDA-SA was compared to the original version of BDA based approach. In terms of classification accuracy, it is clear from Table 3 that BDA-SA is able to classify most accurately on all data sets. It can be stated that SA succeeded to enhance the best solution found by BDA algorithm. In addition, in terms of best fitness, Table 3 reveals the averages of best fitness for the applied FS approaches on each data set. In most of the cases, BDA-SA obtained the lowest average of fitness value. BDA is slightly better than BDA-SA in only two cases (SonerEW and M-of-n dat asets). Although, we will see later that the differences are not statistically significant. It is clear from Table 3 that BDA-SA has less averages of selected features than BDA in some cases, while the averages recorded by BDA are less on other cases. We previously observed that BDA-SA is superior on all cases in terms of classification accuracy. It is evident that when the average of selected features by BDA-SA is greater than BDA, this means that BDA-SA approach managed to find informative and relevant features ignored by BDA, and when the average of selected features by BDA-SA is less than BDA, that means BDA-SA approach may removed some noisy or irrelevant features selected by BDA approach.

Since the main aim of this work is to enhance the performance of BDA algorithm by hybridizing it with SA algorithm, we conducted further statistical analysis to demonstrate that the hybrid BDA-SA approach is better than using the basic BDA alone for feature selection problem. Table 4 presents the standard deviation values of the averages of classification accuracy, best fitness, and number of selected features for BDA and BDA-SA approaches on each data set. In terms of classification accuracy, as in Table 4, it can be observed that BDA-SA approach behaves more robust than BDA based approach on almost all data sets. In terms of best fitness and number of selected features, as shown in Table 4, it can be seen that BDA-SA approach is better than BDA approach on half of the cases.

The average and standard deviation were used as measures to compare the overall results obtained form BDA and BDA-SA. To see whether the differences in the results are statistically significant or not, the non-parametric Wilcoxon test with significant level 0.05 was applied. This test is appropriate to compare the algorithms that have stochastic behaviour [9]. As in Table 5, the p values of the accuracy and the fitness show that BDA-SA recorded significantly better results than BDA on most of the data sets. In terms of selected features, the differences are statistically significant on eight cases, while on other data sets including BreastEW, CongressEW, WaveformEW, SpectEW, and IonosphereEW, p values show that the differences are not statistically significant. The superiority of BDA-SA particularly in terms of classification accuracy is expected, since it utilizes two powerful searching algorithms, DA which is efficient in exploration and SA that has a strong exploitation capability. The ability of DA is utilized in exploring the highly relevant regions in the feature space and avoid the trap of local optima, and then SA is used to intensify the nearby regions to the optimal solution (best subset of features) discovered by BDA algorithm. However, in terms of computational time, as revealed in Fig. 2, in all cases, the computation cost of BDA-SA is higher compared to BDA.

The performance of BDA-SA was also compared with three meta-heuristic-based feature selection approaches including Binary Particle Swarm Optimization, Binary Ant Lion Optimization, and Binary Gray Wolf Optimization. In terms of accuracy rates, as revealed in Table 6, BDA-SA outperformed all its competitors. In addition, in terms of best fitness rates, as presented in Table 7, BDA-SA recorded lowest averages of best fitness on fifteen out of eighteen data sets. Furthermore, in terms of lowest number of selected features, Table 8 shows that BDA-SA outperformed other algorithms on more than 50% of tested cases. Also, the average of computational time for each approach was considered. Figure 3 shows the computational cost of BDA-SA, BPSO, BGWO, and BALO. It can be observed that BPSO is the best in terms of lowest computational time.

Conclusion

This work introduced BDA-SA as a hybrid feature selection approach. The main goal was to enhance the performance of Binary Dragonfly algorithm especially in terms for classification accuracy. The best solution found so far by BDA algorithm was used as initial solution by SA algorithm to conduct a local search to find better solution than the one obtained by BDA. The proposed approach was assessed on a set of frequently used data sets from UCI machine learning repository. The performance of BDA-SA was compared to the native BDA algorithm as well as various algorithms comprising BGWO, BPSO, and BALO. Experimental results show that BDA-SA outperformed BDA and the other algorithms. In the future, it is worth to evaluate the proposed hybrid approach on more complex data sets.

References

Ahmed S, Mafarja M, Faris H, Aljarah I. Feature selection using salp swarm algorithm with chaos. In: Proceedings of the 2Nd International Conference on Intelligent Systems, Metaheuristics & Swarm Intelligence, ISMSI ’18, pp. 65–69. ACM, New York, NY, USA (2018). https://doi.org/10.1145/3206185.3206198.

Al-Rawashdeh G, Mamat R, Hafhizah BARN. Hybrid water cycle optimization algorithm with simulated annealing for spam e-mail detection. IEEE Access. 2019;7:143721–34. https://doi.org/10.1109/ACCESS.2019.2944089.

Basiri ME, Nemati S. A novel hybrid aco-ga algorithm for text feature selection. In: 2009 IEEE Congress on Evolutionary Computation, pp. 2561–2568. IEEE (2009)

Basu M, Deb P, Garai G. Hybrid of particle swarm optimization and simulated annealing for multidimensional function optimization. Int J Inform Technol. 2014;20(1).

BoussaïD I, Lepagnot J, Siarry P. A survey on optimization metaheuristics. Inf Sci. 2013;237:82–117.

Chantar HK, Corne DW Feature subset selection for arabic document categorization using BPSO-KNN. In: Nature and Biologically Inspired Computing, 2011 Third World Congress on, pp. 546–551. IEEE (2011)

Chuang LY, Yang CH, Li JC. Chaotic maps based on binary particle swarm optimization for feature selection. Appl Soft Comput. 2011;11:239–48. https://doi.org/10.1016/j.asoc.2009.11.014.

Dash M, Liu H. Feature selection for classification. Intell Data Anal. 1997;1:131–56.

Derrac J, García S, Molina D, Herrera F. A practical tutorial on the use of nonprametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithm. Swarm Evol Comput. 2011;1:3–18.

Dua D, Graff C. UCI machine learning repository (2017). http://archive.ics.uci.edu/ml

Elgamal M, Binti N, Tubishat M, Alswaitti M, Mirjalili S. An improved harris hawks optimization algorithm with simulated annealing for feature selection in the medical field. IEEE Access. 2020;8. https://doi.org/10.1109/ACCESS.2020.3029728.

Elhariri E, El-Bendary N, Hassanien A.E. Bio-inspired optimization for feature set dimensionality reduction. In: 2016 3rd International Conference on Advances in Computational Tools for Engineering Applications (ACTEA), pp. 184–189 (2016)

Ferri F.J, Kadirkamanathan V, Kittler J. Feature subset search using genetic algorithms. In: IEE/IEEE Workshop on Natural Algorithms in Signal Processing, IEE. Press (1993)

Guyon I, Elisseeff A. An introduction to variable and feature selection. J Mach Learn Res. 2003; 3,1157–1182. http://dl.acm.org/citation.cfm?id=944919.944968

Hammouri A, Mafarja M, Al-Betar M, Awadallah M, Doush I. An improved dragonfly algorithm for feature selection. Knowl-Based Syst. 2020;203:106131. https://doi.org/10.1016/j.knosys.2020.106131.

Han J, Kamber M, Pei J. Data Mining: Concepts and Techniques. Amsterdam: Elsevier; 2012.

Huang CL, Dun JF. A distributed pso-svm hybrid system with feature selection and parameter optimization. Appl Soft Comput. 2008;8:1381–91. https://doi.org/10.1016/j.asoc.2007.10.007.

Il-Seok Oh. Jin-Seon Lee, Byung-Ro Moon: Hybrid genetic algorithms for feature selection. IEEE Trans Pattern Anal Mach Intell. 2004;26(11):1424–37.

Ismail Sayed G, Tharwat A, Hassanien AE. Chaotic dragonfly algorithm: an improved metaheuristic algorithm for feature selection. Appl Intell. 2019;49. https://doi.org/10.1007/s10489-018-1261-8.

Jeong IS, Kim HK, Kim TH, Hwi LD, Kim K, Kang SH. A feature selection approach based on simulated annealing for detecting various denial of service attacks. Softw Netw 2016; 173–190 (2016)

Jia H, Li J, Song W, Peng X, Lang C, Li Y. Spotted hyena optimization algorithm with simulated annealing for feature selection. IEEE Access PP, 1–1 (2019). https://doi.org/10.1109/ACCESS.2019.2919991

Kashef S, Nezamabadi-pour H. An advanced aco algorithm for feature subset selection. Neurocomputing. 2015;147:271–9. https://doi.org/10.1016/j.neucom.2014.06.067.

Kirkpatrick S, D Jr Gelatt C, P. Jr. Vecchi M. Optimization by simulated annealing. Science (New York, N.Y.) 1983;220,671–80. https://doi.org/10.1126/science.220.4598.671

Mafarja M, Aljarah I, Heidari AA, Faris H, Fournier Viger P, Li X, Mirjalili S. Binary dragonfly optimization for feature selection using time-varying transfer functions. Knowl-Based Syst. 2018;161:185–204.

Mafarja M, Mirjalili S. Hybrid whale optimization algorithm with simulated annealing for feature selection. Neurocomputing. 2017;. https://doi.org/10.1016/j.neucom.2017.04.053.

Mafarja M.M, Eleyan D, Jaber I, Hammouri A, Mirjalili S. Binary dragonfly algorithm for feature selection. In: 2017 International Conference on New Trends in Computing Sciences (ICTCS), pp. 12–17 (2017)

Manimala K, Selvi K, Ahila R. Hybrid soft computing techniques for feature selection and parameter optimization in power quality data mining. Appl. Soft Comput. 2011;11:5485–97.

Martin OC, Otto SW. Combining simulated annealing with local search heuristics. Ann OR. 1993;63:57–75.

Meiri R, Zahavi J. Using simulated annealing to optimize feature selection problem in marketing applications. Eur J Oper Res. 2006;171:842–58. https://doi.org/10.1016/j.ejor.2004.09.010.

Mirjalili S. Dragonfly algorithm: a new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Comput Appl. 2015;27(4):1053–73.

Moradi P, Gholampour M. A hybrid particle swarm optimization for feature subset selection by integrating a novel local search strategy. Appl Soft Comput. 43(C), 117–130 (2016). https://doi.org/10.1016/j.asoc.2016.01.044.

Nakamura RYM, Pereira LAM, Costa KA, Rodrigues D, Papa JP, Yang XB. A binary bat algorithm for feature selection. In: 2012 25th SIBGRAPI Conference on Graphics, Patterns and Images, 2012; pp. 291–297

Olabiyisi Stephen O, Fagbola Temitayo M, Omidiora Elijah O, Oyeleye Akin C. Hybrid metaheuristic feature extraction technique for solving timetabling problem. Int J Sci Eng Res USA. 2012;3(8):1–6.

Qasim O, Sabah M, Alzamzum F. Hybrid binary dragonfly optimization algorithm with statistical dependence for feature selection. Int J Math Eng Manag Sci. 2020;5:1420–8. https://doi.org/10.33889/IJMEMS.2020.5.6.105.

Sharawi M, Zawbaa H.M, Emary E, Zawbaa H.M, Emary E. Feature selection approach based on whale optimization algorithm. In: 2017 Ninth International Conference on Advanced Computational Intelligence (ICACI), pp. 163–168 (2017)

Song J, Li S. Elite opposition learning and exponential function steps-based dragonfly algorithm for global optimization. In: 2017 IEEE International Conference on Information and Automation (ICIA), pp. 1178–1183 (2017)

Talbi EG. A taxonomy of hybrid metaheuristics. J Heuristics. 2002;8:541–64. https://doi.org/10.1023/A:1016540724870.

Talbi EG Metaheuristics: from design to implementation. Wiley (2009). https://hal.inria.fr/hal-00750681

Too J, Mirjalili S. A hyper learning binary dragonfly algorithm for feature selection: A covid-19 case study. Knowl-Based Syst. 2020;212:106553. https://doi.org/10.1016/j.knosys.2020.106553.

Tubishat M, Idris N, Abushariah M. Explicit aspects extraction in sentiment analysis using optimal rules combination. Future Gen Compu Syst. 2020;114. https://doi.org/10.1016/j.future.2020.08.019.

Tubishat M, Jaafar S, Alswaitti M, Mirjalili S, Idris N, Ismail MA, Omar M. Dynamic salp swarm algorithm for feature selection. Expert Syst Appl. 2020;164:113873. https://doi.org/10.1016/j.eswa.2020.113873.

Vasant P. Hybrid simulated annealing and genetic algorithms for industrial production management problems. Int J Comput Methods (IJCM) (2), 7 (2010)

Zawbaa H.M, Emary E, Parv B. Feature selection based on antlion optimization algorithm. In: 2015 Third World Conference on Complex Systems (WCCS), pp. 1–7 (2015)

Zawbaa H.M, Emary E, Parv B, Sharawi M. Feature selection approach based on moth-flame optimization algorithm. In: 2016 IEEE Congress on Evolutionary Computation (CEC), pp. 4612–4617 (2016)

Zhang H, Sun G. Feature selection using tabu search method. Pattern Recognit. 2002;35:701–11. https://doi.org/10.1016/S0031-3203(01)00046-2.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflicts of interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

Ethical standard

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

About this article

Cite this article

Chantar, H., Tubishat, M., Essgaer, M. et al. Hybrid Binary Dragonfly Algorithm with Simulated Annealing for Feature Selection. SN COMPUT. SCI. 2, 295 (2021). https://doi.org/10.1007/s42979-021-00687-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s42979-021-00687-5