Abstract

This study considers the treatment choice problem when the outcome variable is binary. We focus on statistical treatment rules that plug in fitted values from a nonparametric kernel regression, and show that the maximum regret can be calculated by maximizing over two parameters. Using this result, we propose a novel bandwidth selection method based on the minimax regret criterion. Finally, we perform a numerical exercise to compare the optimal bandwidth choices for binary and normally distributed outcomes.


1 Introduction

This study examines the problem of determining whether to treat individuals based on observed covariates. A standard approach is to employ a plug-in rule that selects the treatment according to the sign of an estimate of the conditional average treatment effect (CATE). Kernel regression is a prevalent technique for estimating the CATE, and a crucial aspect of implementing this method is bandwidth selection. Many studies have proposed bandwidth selection methods for estimation problems (see, e.g., Li and Racine, 2007). However, to the best of our knowledge, few studies investigate bandwidth selection for the treatment choice problem. We propose a novel bandwidth selection method for the treatment choice problem when the outcome is binary.

In this study, we consider a planner who wants to use experimental data to determine whether to treat individuals with a particular covariate value. Following Manski (2004, 2007), Stoye (2009, 2012), Tetenov (2012), Ishihara and Kitagawa (2021), and Yata (2021), we focus on the minimax regret criterion to solve the decision problem. When the outcome variable is binary, its conditional distribution is characterized by a conditional mean function. We assume that this conditional mean function is a Lipschitz function, and show that the maximum regret can be calculated by maximizing over two parameters. Based on these results, we propose a computationally tractable algorithm for obtaining the optimal bandwidth.

Ishihara and Kitagawa (2021) and Yata (2021) derive the minimax regret rule in a similar setting when the outcome variable is normally distributed. Using the results of Ishihara and Kitagawa (2021), the maximum regret of a nonrandomized statistical treatment rule that plugs in fitted values from a nonparametric kernel regression can be computed easily. However, this approach relies on the normality of the outcome variable and therefore cannot be applied to binary outcomes.

Stoye (2012) considers the statistical decision problem with a binary outcome. The setting of Stoye (2012) is similar to ours, but our framework differs in two respects. First, we focus on the treatment choice at a particular covariate value, whereas Stoye (2012) considers treatment assignment functions that map the covariate support into a binary treatment status. Second, our restriction on the conditional mean function differs from that of Stoye (2012). Stoye (2012) assumes that the variation of the conditional mean function is bounded. In contrast, we assume that the conditional mean function is a Lipschitz function.

The remainder of this paper is organized as follows. Section 2 describes the study setting. Section 3 defines the statistical decision problem and provides a computationally tractable algorithm to obtain the optimal bandwidth. Section 4 presents a numerical exercise where bandwidth selections for binary and normally distributed outcomes are compared. Section 5 concludes the study.

2 Setting

Suppose that we have experimental data \(\{Y_i,D_i,X_i\}_{i=1}^n\), where \(X_i \in \mathbb {R}^{d_x}\) is a vector of observable pre-treatment covariates, \(D_i \in \{0,1\}\) is a binary indicator for the treatment, and \(Y_i \in \{0,1\}\) is a binary outcome. Then \(Y_i\) satisfies

$$E\left[ Y_i \mid D_i, X_i \right] = \mu (D_i,X_i).$$

This implies \(Y_i|D_i,X_i \sim Ber \left( \mu (D_i,X_i) \right)\). Under the unconfoundedness assumption, we can view \(\mu (1,x) - \mu (0,x)\) as the CATE.

For simplicity, we assume that \(D_i\) and \(X_i\) are deterministic, \(D_i=1\) for \(i = 1, \ldots , n_1\), and \(D_i = 0\) for \(i = n_1+1, \ldots , n_1+n_0\), where \(n_1+n_0 = n\). We define \(Y_{1,i} \equiv Y_i\) for \(i = 1, \ldots , n_1\), \(Y_{0,i} \equiv Y_{n_1 + i}\) for \(i = 1, \ldots , n_0\), \(X_{1,i} \equiv X_i\) for \(i = 1, \ldots , n_1\), and \(X_{0,i} \equiv X_{n_1 + i}\) for \(i = 1, \ldots , n_0\). Let \(p_{1,i} \equiv \mu (1,X_{1,i})\) for \(i=1,\ldots ,n_1\) and \(p_{0,i} \equiv \mu (0,X_{0,i})\) for \(i=1,\ldots ,n_0\); then \(Y_{1,i} \sim Ber(p_{1,i})\) for \(i=1,\ldots ,n_1\) and \(Y_{0,i} \sim Ber(p_{0,i})\) for \(i=1,\ldots ,n_0\). Additionally, we define \(p_{1,0} \equiv \mu (1,0)\) and \(p_{0,0} \equiv \mu (0,0)\). Then, the distributions of \({\varvec{Y}}_1 \equiv (Y_{1,1},\ldots ,Y_{1,n_1})'\) and \({\varvec{Y}}_0 \equiv (Y_{0,1},\ldots ,Y_{0,n_0})'\) are determined by the parameters \({\varvec{p}}_1 \equiv (p_{1,0}, \ldots , p_{1,n_1})'\) and \({\varvec{p}}_0 \equiv (p_{0,0}, \ldots , p_{0,n_0})'\).

Throughout this paper, we consider a planner who seeks to determine whether to treat individuals with \(X_i = x_0\) based on the data \(\textbf{D} \equiv ({\varvec{Y}}_1, {\varvec{Y}}_0)\), with the parameters \({\varvec{p}}_1\) and \({\varvec{p}}_0\) unknown. Here \(x_0\) is a specific value of the covariate vector, which need not lie in the support of the covariate distribution in the data. Without loss of generality, we assume that \(x_0 = 0\).

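Following Manski (2004, 2007), Stoye (2009, 2012), Tetenov (2012), Ishihara and Kitagawa (2021), and Yata (2021), we focus on the minimax regret criterion to solve the decision problem. To this end, we assume that the true parameters \({\varvec{p}} \equiv ({\varvec{p}}_1, {\varvec{p}}_0)\) are known to belong to the parameter space \(\mathcal {P} \equiv \mathcal {P}_1 \times \mathcal {P}_0\). We impose the following restrictions on this parameter space:

$$\begin{aligned} \mathcal {P}_1\equiv & {} \left\{ {\varvec{p}}_1 \in [0,1]^{n_1+1} : |p_{1,i} - p_{1,j}| \le C \Vert X_{1,i} - X_{1,j} \Vert \ \text {for all } i,j \in \{0,1,\ldots ,n_1\} \right\} , \\ \mathcal {P}_0\equiv & {} \left\{ {\varvec{p}}_0 \in [0,1]^{n_0+1} : |p_{0,i} - p_{0,j}| \le C \Vert X_{0,i} - X_{0,j} \Vert \ \text {for all } i,j \in \{0,1,\ldots ,n_0\} \right\} , \end{aligned}$$

where \(X_{1,0} = X_{0,0} \equiv 0\) and \(\Vert \cdot \Vert\) denotes a norm on \(\mathbb {R}^{d_x}\). This assumption implies that \(\mu (1,x)\) and \(\mu (0,x)\) are Lipschitz functions with the Lipschitz constant \(C>0\).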
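To make the restriction concrete, the following minimal Python sketch (assuming NumPy, the Euclidean norm, and hypothetical function names) checks whether a vector \((p_{d,0},\ldots ,p_{d,n_d})\) satisfies the \([0,1]\) and Lipschitz constraints, and builds the smallest and largest probability profiles compatible with a given value at the target point. The first row of `X` is taken to be \(X_{d,0} = 0\); these envelopes are the least favourable profiles used in Theorem 1.

```python
import numpy as np


def _as_matrix(X):
    # Accept a 1-D array of scalar covariates or an (m, d_x) array.
    X = np.asarray(X, dtype=float)
    return X.reshape(X.shape[0], -1)


def in_parameter_space(p, X, C, tol=1e-12):
    """Check p in [0,1]^m and |p_i - p_j| <= C * ||X_i - X_j|| for all pairs (Euclidean norm)."""
    p = np.asarray(p, dtype=float)
    X = _as_matrix(X)
    if np.any(p < -tol) or np.any(p > 1.0 + tol):
        return False
    dp = np.abs(p[:, None] - p[None, :])                             # pairwise |p_i - p_j|
    dx = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=-1)      # pairwise ||X_i - X_j||
    return bool(np.all(dp <= C * dx + tol))


def envelopes(p_target, X, C):
    """Lowest and highest profiles compatible with p_0 = p_target, assuming X[0] = 0."""
    dist = np.linalg.norm(_as_matrix(X), axis=1)  # ||X_i - X_0|| with X_0 = 0
    lower = np.maximum(p_target - C * dist, 0.0)
    upper = np.minimum(p_target + C * dist, 1.0)
    return lower, upper


# Hypothetical example with scalar covariates; the first entry is x_0 = 0.
X1 = np.array([0.0, -1.0, -0.5, 0.5, 1.0])
lower, upper = envelopes(0.6, X1, C=0.4)
print(lower, upper)  # approximately [0.6 0.2 0.4 0.4 0.2] and [0.6 1. 0.8 0.8 1.]
```

Both envelopes satisfy the constraint themselves, which is why the worst case can be attained inside \(\mathcal {P}\).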

3 Main results

3.1 Welfare and regret

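Given a treatment choice action \(\delta \in [0,1]\), we define the welfare attained by \(\delta\) as

$$W(\delta ) \equiv \delta \, p_{1,0} + (1-\delta )\, p_{0,0}.$$

The optimal treatment choice action given knowledge of \({\varvec{p}}\) is

$$\delta ^{*} \equiv 1\left\{ p_{1,0} \ge p_{0,0} \right\} .$$

Then \(W(\delta ^{*})\) is the welfare that would be attained if we knew \({\varvec{p}}\).

Let \(\hat{\delta }(\textbf{D}) \in \{0,1\}\) be a statistical treatment rule that maps data \(\textbf{D}\) to a binary treatment choice decision. The welfare regret of \(\hat{\delta }(\textbf{D})\) is defined as

$$\begin{aligned} R({\varvec{p}},\hat{\delta })\equiv & {} W(\delta ^{*}) - E_{{\varvec{p}}}\left[ W(\hat{\delta }(\textbf{D})) \right] \\= & {} (p_{1,0}-p_{0,0}) \left\{ 1\left\{ p_{1,0} \ge p_{0,0} \right\} - E_{{\varvec{p}}}\left[ \hat{\delta }(\textbf{D}) \right] \right\} , \end{aligned}$$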

where \(E_{{\varvec{p}}}(\cdot )\) is the expectation with respect to the sampling distribution of \(\textbf{D}\) given the parameters \({\varvec{p}}\).

This study focuses on the following statistical treatment rule.

Assumption 1

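We consider the following class of statistical treatment rules \(\mathcal {D}\):

$$\mathcal {D} \equiv \left\{ \hat{\delta }_{\theta }(\textbf{D}) = 1\left\{ \frac{\sum _{i=1}^{n_1} K(X_{1,i}/\theta ) Y_{1,i}}{\sum _{i=1}^{n_1} K(X_{1,i}/\theta )} - \frac{\sum _{i=1}^{n_0} K(X_{0,i}/\theta ) Y_{0,i}}{\sum _{i=1}^{n_0} K(X_{0,i}/\theta )} \ge 0 \right\} : \theta > 0 \right\} , \tag{5}$$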

where \(K: \mathbb {R}^{d_x} \rightarrow \mathbb {R}_{+}\) denotes a kernel function.

Assumption 1 states that we focus on nonrandomized statistical treatment rules that plug in the fitted values from a nonparametric kernel regression. In addition, we assume that the kernel function takes non-negative values. Our results rely on this condition, because it ensures that \(\hat{\delta }_{\theta }(\textbf{D})\) is nondecreasing in each \(Y_{1,i}\) and nonincreasing in each \(Y_{0,i}\). In (5), \(\theta\) is the bandwidth of the kernel regression estimator.
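A minimal Python sketch of a rule in this class is given below. It assumes NumPy, a Gaussian kernel, and the convention that the planner treats when the estimated CATE is zero (up to a small floating-point tolerance); the function names and data are hypothetical. Because the weights are non-negative and sum to one in each arm, the estimate is a difference of two weighted averages of binary outcomes.

```python
import numpy as np


def gaussian_kernel(U):
    """K(u) = exp(-||u||^2 / 2); the normalizing constant cancels in the weights."""
    U = np.asarray(U, dtype=float)
    U = U.reshape(U.shape[0], -1)
    return np.exp(-0.5 * np.sum(U**2, axis=1))


def kernel_weights(X, theta, kernel=gaussian_kernel):
    """Nadaraya-Watson weights K(X_i / theta) / sum_j K(X_j / theta) at x_0 = 0."""
    k = kernel(np.asarray(X, dtype=float) / theta)
    return k / k.sum()  # assumes theta is not so small that every K(X_i / theta) underflows


def plug_in_rule(Y1, Y0, X1, X0, theta, kernel=gaussian_kernel, tol=1e-12):
    """delta_theta(D): treat iff the kernel estimate of the CATE at x_0 = 0 is >= 0."""
    w1 = kernel_weights(X1, theta, kernel)
    w0 = kernel_weights(X0, theta, kernel)
    cate_hat = w1 @ np.asarray(Y1, dtype=float) - w0 @ np.asarray(Y0, dtype=float)
    return int(cate_hat >= -tol)  # tol absorbs rounding in exact ties


X = np.linspace(-1.0, 1.0, 5)     # hypothetical design, same in both arms
Y1 = np.array([0, 0, 1, 0, 0])    # hypothetical binary outcomes (treated)
Y0 = np.array([1, 0, 0, 0, 1])    # hypothetical binary outcomes (control)
print(plug_in_rule(Y1, Y0, X, X, theta=0.5))    # 1: local comparison near x_0 = 0
print(plug_in_rule(Y1, Y0, X, X, theta=100.0))  # 0: close to comparing raw means
```

The two calls show that the decision can flip with the bandwidth, which is what makes bandwidth selection a decision problem here.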

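The minimax regret criterion selects a statistical treatment rule that minimizes the maximum regret:

$$\hat{\delta }^{*} \in \mathop {\textrm{arg}\,\textrm{min}}_{\hat{\delta } \in \mathcal {D}} \max _{{\varvec{p}} \in \mathcal {P}} R({\varvec{p}},\hat{\delta }).$$

Under Assumption 1, a statistical treatment rule \(\hat{\delta } \in \mathcal {D}\) is characterized by its bandwidth \(\theta\), and the optimal bandwidth can be calculated as follows:

$$\theta ^{*} \in \mathop {\textrm{arg}\,\textrm{min}}_{\theta > 0} \max _{{\varvec{p}} \in \mathcal {P}} R({\varvec{p}},\hat{\delta }_{\theta }),$$

where \(\hat{\delta }_{\theta }\) is as defined in (5). In the next subsection, we describe the calculation of the optimal bandwidth \(\theta ^{*}\).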

3.2 Minimax regret rule

The following theorem implies that we can calculate \(\max _{{\varvec{p}} \in \mathcal {P}} R({\varvec{p}},\hat{\delta }_{\theta })\) by maximizing over two parameters.

Theorem 1

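Under Assumption 1, for any \(\hat{\delta }_{\theta } \in \mathcal {D}\) we obtain

$$\begin{aligned} \max _{{\varvec{p}} \in \mathcal {P}} R({\varvec{p}},\hat{\delta }_{\theta })= & {} \max \left[ \max _{p_{1,0}>p_{0,0}} \left\{ (p_{1,0}-p_{0,0}) \left( 1 - E_{\tilde{{\varvec{p}}}^{-}(p_{1,0}, p_{0,0})} \left[ \hat{\delta }_{\theta }(\textbf{D}) \right] \right) \right\} , \right. \\{} & {} \left. \max _{p_{1,0}<p_{0,0}} \left\{ (p_{0,0}-p_{1,0}) E_{\tilde{{\varvec{p}}}^{+}(p_{1,0}, p_{0,0})} \left[ \hat{\delta }_{\theta }(\textbf{D}) \right] \right\} \right] , \end{aligned} \tag{7}$$

where \(\tilde{{\varvec{p}}}^{-}(p_{1,0}, p_{0,0}) \equiv \left( \tilde{{\varvec{p}}}_1^{-}(p_{1,0}), \tilde{{\varvec{p}}}_0^{+}(p_{0,0}) \right)\), \(\tilde{{\varvec{p}}}^{+}(p_{1,0}, p_{0,0}) \equiv \left( \tilde{{\varvec{p}}}_1^{+}(p_{1,0}), \tilde{{\varvec{p}}}_0^{-}(p_{0,0}) \right)\), and, for \(d \in \{0,1\}\) and \(p \in [0,1]\),

$$\begin{aligned} \tilde{{\varvec{p}}}_d^{-}(p)\equiv & {} \left( \tilde{p}_{d,0}^{-}(p), \ldots , \tilde{p}_{d,n_d}^{-}(p) \right) ', \ \ \tilde{p}_{d,i}^{-}(p) \equiv \max \left\{ p - C \Vert X_{d,i} \Vert , 0 \right\} , \\ \tilde{{\varvec{p}}}_d^{+}(p)\equiv & {} \left( \tilde{p}_{d,0}^{+}(p), \ldots , \tilde{p}_{d,n_d}^{+}(p) \right) ', \ \ \tilde{p}_{d,i}^{+}(p) \equiv \min \left\{ p + C \Vert X_{d,i} \Vert , 1 \right\} . \end{aligned}$$

Because \(X_{d,0} = 0\), the first component of both \(\tilde{{\varvec{p}}}_d^{-}(p)\) and \(\tilde{{\varvec{p}}}_d^{+}(p)\) equals p.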

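Theorem 1 provides a way of finding the parameters that maximize the regret of \(\hat{\delta }_{\theta } \in \mathcal {D}\). For fixed \((p_{1,0}, p_{0,0})\) with \(p_{1,0} > p_{0,0}\), the regret of \(\hat{\delta }_{\theta }\) is maximized at

$$({\varvec{p}}_1, {\varvec{p}}_0) = \left( \tilde{{\varvec{p}}}_1^{-}(p_{1,0}), \tilde{{\varvec{p}}}_0^{+}(p_{0,0}) \right) ,$$

that is, where the treated outcome probabilities are as small, and the control outcome probabilities as large, as the Lipschitz restriction allows, which makes the rule least likely to treat. Similarly, if \(p_{1,0} < p_{0,0}\), then the regret of \(\hat{\delta }_{\theta }\) is maximized at

$$({\varvec{p}}_1, {\varvec{p}}_0) = \left( \tilde{{\varvec{p}}}_1^{+}(p_{1,0}), \tilde{{\varvec{p}}}_0^{-}(p_{0,0}) \right) .$$

These results allow us to calculate the maximum regret for given values of \(p_{1,0}\) and \(p_{0,0}\), and we can then compute \(\max _{{\varvec{p}} \in \mathcal {P}} R({\varvec{p}},\hat{\delta }_{\theta })\) by maximizing over \(p_{1,0}\) and \(p_{0,0}\).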
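For very small samples, the expectations under the least favourable profiles can be computed exactly by enumerating all \(2^{n_1+n_0}\) possible data sets instead of simulating them. The Python sketch below assumes NumPy, scalar covariates, a Gaussian kernel, a tie convention of treating when the estimate is zero, a finite grid for \((p_{1,0},p_{0,0})\) in place of the continuous maximization, and hypothetical function names. It evaluates the two-parameter maximization of (7) this way and can serve as a check on a simulation-based implementation.

```python
import itertools

import numpy as np


def _weights(X, theta):
    # Gaussian-kernel weights at x_0 = 0 for scalar covariates.
    k = np.exp(-0.5 * (np.asarray(X, dtype=float) / theta) ** 2)
    return k / k.sum()


def _outcome_probs(Y_all, q):
    # Probability of each row of Y_all under independent Ber(q_i) draws.
    return np.prod(np.where(Y_all == 1, q, 1.0 - q), axis=1)


def max_regret_exact(X1, X0, theta, C, grid, tol=1e-12):
    """Maximum regret via the two-parameter reduction, with E[delta] computed exactly.

    Enumerates all 2^(n1 + n0) data sets, so it is only feasible for small samples.
    The maximization over (p_{1,0}, p_{0,0}) is approximated on `grid`.
    """
    X1, X0 = np.asarray(X1, dtype=float), np.asarray(X0, dtype=float)
    w1, w0 = _weights(X1, theta), _weights(X0, theta)
    Y1_all = np.array(list(itertools.product([0, 1], repeat=len(X1))))
    Y0_all = np.array(list(itertools.product([0, 1], repeat=len(X0))))
    # delta[a, b] = decision of the plug-in rule for treated data a and control data b.
    delta = ((Y1_all @ w1)[:, None] - (Y0_all @ w0)[None, :] >= -tol).astype(float)
    d1, d0 = np.abs(X1), np.abs(X0)

    def prob_treat(q1, q0):
        return _outcome_probs(Y1_all, q1) @ delta @ _outcome_probs(Y0_all, q0)

    worst = 0.0
    for p1 in grid:
        for p0 in grid:
            if p1 > p0:
                # Treated probabilities pushed down, control probabilities pushed up.
                pi = prob_treat(np.maximum(p1 - C * d1, 0.0), np.minimum(p0 + C * d0, 1.0))
                worst = max(worst, (p1 - p0) * (1.0 - pi))
            elif p1 < p0:
                pi = prob_treat(np.minimum(p1 + C * d1, 1.0), np.maximum(p0 - C * d0, 0.0))
                worst = max(worst, (p0 - p1) * pi)
    return worst


X = np.linspace(-1.0, 1.0, 4)   # hypothetical design with n1 = n0 = 4
grid = np.linspace(0.0, 1.0, 21)
for theta in (0.25, 0.5, 1.0, 2.0):
    print(theta, round(max_regret_exact(X, X, theta, C=1.0, grid=grid), 4))
```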

In view of Theorem 1, we can compute the optimal bandwidth \(\theta ^{*}\) using the following algorithm.

1. Fix \(\hat{\delta }_{\theta } \in \mathcal {D}\) and generate independent random variables \(\{U_{1,i,s}\}_{i=1, \ldots , n_1, \, s = 1, \ldots , S}\) and \(\{U_{0,i,s}\}_{i=1, \ldots , n_0, \, s = 1, \ldots , S}\), uniformly distributed on [0, 1], where S is a large number. For \(p \in [0,1]\), define the following variables:

$$\begin{aligned} \tilde{Y}_{1,i,s}^{-}(p)\equiv & {} 1 \left\{ U_{1,i,s} \le \tilde{p}_{1,i}^{-}(p) \right\} , \ \ \tilde{Y}_{1,i,s}^{+}(p) \equiv 1 \left\{ U_{1,i,s} \le \tilde{p}_{1,i}^{+}(p) \right\} , \\ \tilde{Y}_{0,i,s}^{-}(p)\equiv & {} 1 \left\{ U_{0,i,s} \le \tilde{p}_{0,i}^{-}(p) \right\} , \ \ \tilde{Y}_{0,i,s}^{+}(p) \equiv 1 \left\{ U_{0,i,s} \le \tilde{p}_{0,i}^{+}(p) \right\} , \\ \tilde{{\varvec{Y}}}^{-}_{1,s}(p)\equiv & {} \left( \tilde{Y}_{1,1,s}^{-}(p), \ldots , \tilde{Y}_{1,n_1,s}^{-}(p) \right) , \ \ \tilde{{\varvec{Y}}}^{+}_{1,s}(p) \equiv \left( \tilde{Y}_{1,1,s}^{+}(p), \ldots , \tilde{Y}_{1,n_1,s}^{+}(p) \right) , \\ \tilde{{\varvec{Y}}}^{-}_{0,s}(p)\equiv & {} \left( \tilde{Y}_{0,1,s}^{-}(p), \ldots , \tilde{Y}_{0,n_0,s}^{-}(p) \right) , \ \ \tilde{{\varvec{Y}}}^{+}_{0,s}(p) \equiv \left( \tilde{Y}_{0,1,s}^{+}(p), \ldots , \tilde{Y}_{0,n_0,s}^{+}(p) \right) . \end{aligned}$$

2. Approximate \(E_{\tilde{{\varvec{p}}}^{-}(p_{1,0}, p_{0,0})} [ \hat{\delta }_{\theta }(\textbf{D}) ]\) and \(E_{\tilde{{\varvec{p}}}^{+}(p_{1,0}, p_{0,0})} [ \hat{\delta }_{\theta }(\textbf{D}) ]\) by \(\pi ^{-}_{\theta }(p_{1,0},p_{0,0})\) and \(\pi ^{+}_{\theta }(p_{1,0},p_{0,0})\), respectively, where

$$\begin{aligned} \pi ^{-}_{\theta }(p_{1,0},p_{0,0})\equiv & {} \frac{1}{S} \sum _{s=1}^S \hat{\delta }_{\theta }( \tilde{{\varvec{Y}}}^{-}_{1,s}(p_{1,0}), \tilde{{\varvec{Y}}}^{+}_{0,s}(p_{0,0})), \\ \pi ^{+}_{\theta }(p_{1,0},p_{0,0})\equiv & {} \frac{1}{S} \sum _{s=1}^S \hat{\delta }_{\theta }( \tilde{{\varvec{Y}}}^{+}_{1,s}(p_{1,0}), \tilde{{\varvec{Y}}}^{-}_{0,s}(p_{0,0})). \end{aligned}$$

3. Calculate the maximum regret \(\overline{R}(\theta )\), where

$$\begin{aligned} \overline{R}(\theta )\equiv & {} \max \left[ \max _{p_{1,0}>p_{0,0}} \left\{ (p_{1,0}-p_{0,0}) \left( 1 - \pi ^{-}_{\theta }(p_{1,0},p_{0,0}) \right) \right\} , \right. \\{} & {} \left. \max _{p_{1,0}<p_{0,0}} \left\{ (p_{0,0}-p_{1,0}) \pi ^{+}_{\theta }(p_{1,0},p_{0,0}) \right\} \right] . \end{aligned}$$

4. Minimize \(\overline{R}(\theta )\) over \(\theta > 0\) and obtain the optimal bandwidth \(\theta ^{*}\).
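The four steps above can be sketched in Python as follows. This is an illustration under stated assumptions, not the author's replication code: it assumes NumPy, scalar covariates, a Gaussian kernel, treatment when the estimate is zero, a finite grid for \((p_{1,0},p_{0,0})\), and a finite set of candidate bandwidths in Step 4; all function names are hypothetical. The same uniform draws are reused for every \(\theta\), so \(\overline{R}(\theta )\) is compared across bandwidths with common random numbers.

```python
import numpy as np


def _weights(X, theta):
    # Gaussian-kernel weights at x_0 = 0 for scalar covariates.
    k = np.exp(-0.5 * (np.asarray(X, dtype=float) / theta) ** 2)
    return k / k.sum()


def simulated_max_regret(X1, X0, theta, C, U1, U0, grid, tol=1e-12):
    """Steps 1-3: Monte Carlo approximation of R_bar(theta) on a grid of (p_{1,0}, p_{0,0})."""
    X1, X0 = np.asarray(X1, dtype=float), np.asarray(X0, dtype=float)
    w1, w0 = _weights(X1, theta), _weights(X0, theta)
    d1, d0 = np.abs(X1), np.abs(X0)

    def share_treated(q1, q0):
        # Y_tilde = 1{U <= q}; average decision over the S simulated data sets.
        Y1 = (U1 <= q1).astype(float)  # shape (S, n1)
        Y0 = (U0 <= q0).astype(float)  # shape (S, n0)
        return np.mean(Y1 @ w1 - Y0 @ w0 >= -tol)

    r_bar = 0.0
    for p1 in grid:
        for p0 in grid:
            if p1 > p0:
                pi_minus = share_treated(np.maximum(p1 - C * d1, 0.0), np.minimum(p0 + C * d0, 1.0))
                r_bar = max(r_bar, (p1 - p0) * (1.0 - pi_minus))
            elif p1 < p0:
                pi_plus = share_treated(np.minimum(p1 + C * d1, 1.0), np.maximum(p0 - C * d0, 0.0))
                r_bar = max(r_bar, (p0 - p1) * pi_plus)
    return r_bar


def optimal_bandwidth(X1, X0, C, thetas, S=2000, grid_size=21, seed=0):
    """Step 4: minimize R_bar(theta) over a finite set of candidate bandwidths."""
    rng = np.random.default_rng(seed)
    U1 = rng.uniform(size=(S, len(X1)))  # reused for every theta (common random numbers)
    U0 = rng.uniform(size=(S, len(X0)))
    grid = np.linspace(0.0, 1.0, grid_size)
    thetas = np.asarray(thetas, dtype=float)
    values = np.array([simulated_max_regret(X1, X0, t, C, U1, U0, grid) for t in thetas])
    return float(thetas[np.argmin(values)]), values


X = np.linspace(-1.0, 1.0, 5)  # hypothetical design with n1 = n0 = 5 (n = 10)
theta_star, values = optimal_bandwidth(X, X, C=1.0, thetas=np.linspace(0.1, 2.0, 20))
print(theta_star)
```

Because the maximum regret is piecewise constant in \(\theta\) with binary outcomes, a grid search over candidate bandwidths is a natural choice for Step 4 rather than a gradient-based optimizer.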

This algorithm is advantageous from a computational perspective. Computing the exact minimax regret rule is often challenging in the context of statistical treatment choice. Without Theorem 1, calculating the maximum regret requires maximizing over \({\varvec{p}} \in \mathcal {P}\), an \((n+2)\)-dimensional parameter space, which is demanding when the sample size is large. Theorem 1 reduces this problem to a maximization over the two parameters \(p_{1,0}\) and \(p_{0,0}\). In the next section, we compute \(\theta ^{*}\) by using this algorithm.

Remark 1

As in Ishihara and Kitagawa (2021), when the Lipschitz constant C is unknown, it is unclear how to choose it in a theoretically justified, data-driven manner. However, Ishihara and Kitagawa (2021) and Yata (2021) propose some practical choices for C. In the empirical application of Ishihara and Kitagawa (2021), C is selected by leave-one-out cross-validation. Yata (2021) estimates a lower bound on C by using the derivative of the conditional mean function. Both methods can also be applied in our setting.

Remark 2

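When C is sufficiently large, the maximum regret does not depend on the bandwidth. If the points \(X_{1,0}, X_{1,1}, \ldots , X_{1,n_1}\) are distinct and the points \(X_{0,0}, X_{0,1}, \ldots , X_{0,n_0}\) are distinct, then for large enough C the parameter space \(\mathcal {P}\) becomes \([0,1]^{n_1+1} \times [0,1]^{n_0+1}\). From Theorem 1, if \(p_{1,0} > p_{0,0}\), then the regret of \(\hat{\delta }_{\theta }\) is maximized at \({\varvec{p}}_1 = (p_{1,0},0,\ldots ,0)'\) and \({\varvec{p}}_0 = (p_{0,0},1,\ldots ,1)'\). Similarly, if \(p_{1,0} < p_{0,0}\), then the regret of \(\hat{\delta }_{\theta }\) is maximized at \({\varvec{p}}_1 = (p_{1,0},1,\ldots ,1)'\) and \({\varvec{p}}_0 = (p_{0,0},0,\ldots ,0)'\). In the first case, \({\varvec{Y}}_1 = (0,\ldots ,0)'\) and \({\varvec{Y}}_0 = (1,\ldots ,1)'\) with probability one, so that \(\hat{\delta }_{\theta }(\textbf{D}) = 0\) for every \(\theta\); in the second case, \(\hat{\delta }_{\theta }(\textbf{D}) = 1\) for every \(\theta\). These results imply that

$$\max _{{\varvec{p}} \in \mathcal {P}} R({\varvec{p}},\hat{\delta }_{\theta }) = \max \left[ \max _{p_{1,0}>p_{0,0}} (p_{1,0}-p_{0,0}), \ \max _{p_{1,0}<p_{0,0}} (p_{0,0}-p_{1,0}) \right] = 1.$$

Hence, the maximum regret does not depend on the bandwidth \(\theta\).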
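The mechanism behind Remark 2 can be checked numerically. In the sketch below (Python with NumPy, a Gaussian kernel, a hypothetical six-point design whose points are distinct and different from \(x_0 = 0\), and a hypothetical helper `plug_in_rule`), the least favourable data are deterministic, so the plug-in decision is the same for every bandwidth, and the regret at \((p_{1,0},p_{0,0}) = (1,0)\) or \((0,1)\) equals one.

```python
import numpy as np


def plug_in_rule(Y1, Y0, X1, X0, theta, tol=1e-12):
    # Gaussian-kernel plug-in rule at x_0 = 0; treat iff the estimated CATE is >= 0.
    k1 = np.exp(-0.5 * (np.asarray(X1, dtype=float) / theta) ** 2)
    k0 = np.exp(-0.5 * (np.asarray(X0, dtype=float) / theta) ** 2)
    cate_hat = k1 @ np.asarray(Y1, dtype=float) / k1.sum() - k0 @ np.asarray(Y0, dtype=float) / k0.sum()
    return int(cate_hat >= -tol)


X = np.linspace(-1.0, 1.0, 6)  # hypothetical design: distinct points, none equal to x_0 = 0
thetas = (0.05, 0.5, 5.0, 50.0)
# Least favourable data when p_{1,0} > p_{0,0}: Y1 = 0 and Y0 = 1 almost surely.
print([plug_in_rule(np.zeros(6), np.ones(6), X, X, t) for t in thetas])  # [0, 0, 0, 0]
# Least favourable data when p_{1,0} < p_{0,0}: Y1 = 1 and Y0 = 0 almost surely.
print([plug_in_rule(np.ones(6), np.zeros(6), X, X, t) for t in thetas])  # [1, 1, 1, 1]
```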

4 Numerical exercise

In this section, we perform a numerical exercise to compare the optimal bandwidth described in the previous section with the optimal bandwidth for a normally distributed outcome. Throughout this section, we set the pre-treatment covariate values to equidistant grid points on \([-1,1]\), with \(n_1=n_0=n/2\). We consider the kernel regression class \(\mathcal {D}\) defined in (5) and use the Gaussian kernel as the kernel function. We calculate the optimal bandwidth \(\theta ^{*}\) using our proposed method.

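We compare our method with the bandwidth selection that minimizes the maximum regret when the outcome variable is normally distributed. Suppose that \(Y_{1,i} \sim N(p_{1,i}, 0.5^2)\) and \(Y_{0,i} \sim N(p_{0,i}, 0.5^2)\). Then, we set the parameter space to be \(\mathcal {P}^{N} \equiv \mathcal {P}^N_1 \times \mathcal {P}_0^N\), where

$$\begin{aligned} \mathcal {P}^N_1\equiv & {} \left\{ {\varvec{p}}_1 \in \mathbb {R}^{n_1+1} : |p_{1,i} - p_{1,j}| \le C |X_{1,i} - X_{1,j}| \ \text {for all } i,j \in \{0,1,\ldots ,n_1\} \right\} , \\ \mathcal {P}^N_0\equiv & {} \left\{ {\varvec{p}}_0 \in \mathbb {R}^{n_0+1} : |p_{0,i} - p_{0,j}| \le C |X_{0,i} - X_{0,j}| \ \text {for all } i,j \in \{0,1,\ldots ,n_0\} \right\} . \end{aligned}$$

Define \(w_{1,i,\theta } \equiv K(X_{1,i}/\theta ) / \sum _{j=1}^{n_1} K(X_{1,j}/\theta )\) and \(w_{0,i,\theta } \equiv K(X_{0,i}/\theta ) / \sum _{j=1}^{n_0} K(X_{0,j}/\theta )\). Using the results of Ishihara and Kitagawa (2021), the maximum regret can be expressed as follows:

$$\max _{{\varvec{p}} \in \mathcal {P}^N} R({\varvec{p}},\hat{\delta }_{\theta }) = s(\theta )\, \eta \left( \frac{b(\theta )}{s(\theta )} \right) , \tag{8}$$

where \(\eta (a) \equiv \max _{t>0} \{ t \cdot \Phi (-t+a) \}\) with \(\Phi (\cdot )\) being the N(0, 1) distribution function, \(s(\theta ) \equiv 0.5 \sqrt{\sum _{i=1}^{n_1} w_{1,i,\theta }^2 + \sum _{i=1}^{n_0} w_{0,i,\theta }^2}\), and

$$b(\theta ) \equiv C \left( \sum _{i=1}^{n_1} w_{1,i,\theta } |X_{1,i}| + \sum _{i=1}^{n_0} w_{0,i,\theta } |X_{0,i}| \right) .$$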

Appendix 2 provides the details of the derivation. Using this result, we calculate the bandwidth that minimizes the maximum regret when the outcome is normally distributed.
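The normal-case maximum regret is straightforward to evaluate numerically. The following Python sketch assumes NumPy, scalar covariates, a Gaussian kernel, \(\sigma = 0.5\), a hypothetical five-point design, and hypothetical function names. It writes the normal-case maximum regret as \(s(\theta )\,\eta (b(\theta )/s(\theta ))\), where \(b(\theta ) = C(\sum _i w_{1,i,\theta }|X_{1,i}| + \sum _i w_{0,i,\theta }|X_{0,i}|)\) is the worst-case bias of the kernel estimate; the label \(b(\theta )\) is an assumption of this sketch. \(\eta (a)\) is computed by a golden-section search, which is valid because \(t\,\Phi (a-t)\) is log-concave and hence unimodal in t.

```python
import math

import numpy as np


def std_normal_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def eta(a, tol=1e-10):
    """eta(a) = max_{t>0} t * Phi(a - t), by golden-section search.

    t * Phi(a - t) is log-concave in t (log t and log Phi(a - t) are concave),
    hence unimodal, and its maximizer lies below max(a, 0) + 1.
    """
    f = lambda t: t * std_normal_cdf(a - t)
    lo, hi = 0.0, max(a, 0.0) + 10.0
    g = (math.sqrt(5.0) - 1.0) / 2.0
    c, d = hi - g * (hi - lo), lo + g * (hi - lo)
    while hi - lo > tol:
        if f(c) < f(d):   # maximizer lies in [c, hi]
            lo, c = c, d
            d = lo + g * (hi - lo)
        else:             # maximizer lies in [lo, d]
            hi, d = d, c
            c = hi - g * (hi - lo)
    return f(0.5 * (lo + hi))


def normal_max_regret(X1, X0, theta, C, sigma=0.5):
    """s(theta) * eta(b(theta) / s(theta)) for scalar covariates and a Gaussian kernel."""
    X1, X0 = np.asarray(X1, dtype=float), np.asarray(X0, dtype=float)
    k1, k0 = np.exp(-0.5 * (X1 / theta) ** 2), np.exp(-0.5 * (X0 / theta) ** 2)
    w1, w0 = k1 / k1.sum(), k0 / k0.sum()
    s = sigma * math.sqrt(np.sum(w1**2) + np.sum(w0**2))        # sd of the kernel estimate
    b = C * (np.sum(w1 * np.abs(X1)) + np.sum(w0 * np.abs(X0)))  # worst-case bias
    return s * eta(b / s)


X = np.linspace(-1.0, 1.0, 5)  # hypothetical design with n1 = n0 = 5
thetas = np.linspace(0.05, 3.0, 60)
values = [normal_max_regret(X, X, t, C=1.0) for t in thetas]
print(thetas[int(np.argmin(values))])
```

With C = 0 the bias term vanishes and the criterion is minimized by the largest candidate bandwidth, while a positive C pushes the minimizer toward smaller bandwidths, which is the bias-variance trade-off the criterion encodes.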

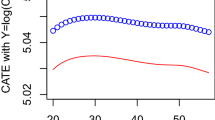

Table 1 lists the optimal bandwidth choices in the binary and normal cases. In the binary case, we calculate the optimal bandwidth using the algorithm of Sect. 3.2. In the normal case, we calculate the bandwidth that minimizes (8), that is, the bandwidth choice proposed by Ishihara and Kitagawa (2021).

In many cases, the optimal bandwidth decreases as C increases or n increases. When n is large, the optimal bandwidth with a binary outcome is close to that for a normally distributed outcome. This is due to the asymptotic normality of the kernel regression estimator. In the binary case, the maximum regret (7) is a step function of \(\theta\), because the data take finitely many values and (7) depends on \(\theta\) only through the decisions \(\hat{\delta }_{\theta }(\textbf{D})\) at those values; it approaches a continuous function as n increases. Hence, the minimizer of (7) is an interval rather than a single point, and Table 1 reports the optimal bandwidth range when \(n=10\). When n is small, the optimal bandwidth with a binary outcome can differ substantially from that for a normally distributed outcome.

5 Conclusion

This study investigated the problem of deciding whether to treat individuals based on observed covariates. The standard approach to this problem is to use a plug-in rule that determines the treatment based on the sign of an estimate of the CATE. We focused on statistical treatment rules based on nonparametric kernel regression. When the outcome variable is normally distributed, Ishihara and Kitagawa (2021) showed that the maximum regret can be calculated easily. This study demonstrated that, when the outcome variable is binary, the maximum regret can be calculated by maximizing over two parameters. Using this result, we proposed a method for selecting the optimal bandwidth in the case of a binary outcome. In addition, we performed a numerical exercise to compare the optimal bandwidth choices for binary and normally distributed outcomes.

Data availability

Replication codes for the simulation are available from the corresponding author upon request.

References

Ishihara, T., & Kitagawa, T. (2021). Evidence aggregation for treatment choice. arXiv preprint arXiv:2108.06473. Accessed 24 July 2023.

Li, Q., & Racine, J. S. (2007). Nonparametric Econometrics: Theory and Practice. Princeton University Press.

Manski, C. F. (2004). Statistical treatment rules for heterogeneous populations. Econometrica, 72, 1221–1246.

Manski, C. F. (2007). Minimax-regret treatment choice with missing outcome data. Journal of Econometrics, 139, 105–115.

Stoye, J. (2009). Minimax regret treatment choice with finite samples. Journal of Econometrics, 151, 70–81.

Stoye, J. (2012). Minimax regret treatment choice with covariates or with limited validity of experiments. Journal of Econometrics, 166, 138–156.

Tetenov, A. (2012). Statistical treatment choice based on asymmetric minimax regret criteria. Journal of Econometrics, 166, 157–165.

Yata, K. (2021). Optimal decision rules under partial identification. arXiv preprint arXiv:2111.04926. Accessed 24 July 2023.


This work was partially supported by JSPS KAKENHI Grant Number 22K13373. I would like to thank the editor and an anonymous referee for their careful reading and comments. I would also like to thank Toru Kitagawa, Masayuki Sawada, and Kohei Yata for their helpful comments and suggestions.

Appendices

Appendix 1: Proofs of Theorem 1 and Lemma 1

Proof of Theorem 1

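If \({\varvec{p}}\) satisfies \(p_{1,0} > p_{0,0}\), then we have

$$R({\varvec{p}},\hat{\delta }_{\theta }) = (p_{1,0}-p_{0,0}) \left( 1 - E_{{\varvec{p}}}\left[ \hat{\delta }_{\theta }(\textbf{D}) \right] \right) .$$

For any \(p \in [0,1]\), \(\tilde{{\varvec{p}}}_1^{-}(p)\) and \(\tilde{{\varvec{p}}}_0^{+}(p)\) are contained in \(\mathcal {P}_1\) and \(\mathcal {P}_0\), respectively. Additionally, for any \({\varvec{p}} \in \mathcal {P}\), the Lipschitz restriction implies \(p_{1,i} \ge \tilde{p}_{1,i}^{-}(p_{1,0})\) and \(p_{0,i} \le \tilde{p}_{0,i}^{+}(p_{0,0})\) for all i. Because Ber(p) first-order stochastically dominates \(Ber(\tilde{p})\) for \(p \ge \tilde{p}\), and under Assumption 1 \(\hat{\delta }_{\theta }(\textbf{D})\) is nondecreasing in each \(Y_{1,i}\) and nonincreasing in each \(Y_{0,i}\), applying Lemma 1 one coordinate at a time yields

$$E_{{\varvec{p}}}\left[ \hat{\delta }_{\theta }(\textbf{D}) \right] \ge E_{\tilde{{\varvec{p}}}^{-}(p_{1,0}, p_{0,0})} \left[ \hat{\delta }_{\theta }(\textbf{D}) \right] .$$

Hence, we obtain

$$\max _{{\varvec{p}} \in \mathcal {P}:\, p_{1,0}>p_{0,0}} R({\varvec{p}},\hat{\delta }_{\theta }) = \max _{p_{1,0}>p_{0,0}} \left\{ (p_{1,0}-p_{0,0}) \left( 1 - E_{\tilde{{\varvec{p}}}^{-}(p_{1,0}, p_{0,0})} \left[ \hat{\delta }_{\theta }(\textbf{D}) \right] \right) \right\} .$$

Similarly, we obtain

$$\max _{{\varvec{p}} \in \mathcal {P}:\, p_{1,0}<p_{0,0}} R({\varvec{p}},\hat{\delta }_{\theta }) = \max _{p_{1,0}<p_{0,0}} \left\{ (p_{0,0}-p_{1,0}) E_{\tilde{{\varvec{p}}}^{+}(p_{1,0}, p_{0,0})} \left[ \hat{\delta }_{\theta }(\textbf{D}) \right] \right\} .$$

As \(p_{1,0}=p_{0,0}\) implies \(R({\varvec{p}},\hat{\delta }_{\theta }) = 0\), we obtain (7). \(\square\)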

Lemma 1

Suppose that \(W_1, W_2, \ldots , W_n\), and \(\tilde{W}_1\) are independent random variables and \(\tilde{W}_1\) first-order stochastically dominates \(W_1\). If \(g(w_1, \ldots , w_n)\) is increasing in \(w_1\), then we have \(E\left[ g(W_1, W_2, \ldots , W_n) \right] \le E\left[ g(\tilde{W}_1, W_2, \ldots , W_n) \right]\).

Proof

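Let \(F_i\) be the distribution function of \(W_i\) and \(\tilde{F}_1\) be the distribution function of \(\tilde{W}_1\). Because \(g(w_1, \ldots , w_n)\) is increasing in \(w_1\) and \(\tilde{W}_1\) first-order stochastically dominates \(W_1\), for any fixed \((w_2, \ldots , w_n)\) we have \(\int g(w_1, \ldots , w_n) dF_1(w_1) \le \int g(w_1, \ldots , w_n) d\tilde{F}_1(w_1)\). Hence, by independence, we obtain

$$\begin{aligned} E\left[ g(W_1, W_2, \ldots , W_n) \right]= & {} \int \cdots \int \left\{ \int g(w_1, \ldots , w_n) dF_1(w_1) \right\} dF_2(w_2) \cdots dF_n(w_n) \\ \le & {} \int \cdots \int \left\{ \int g(w_1, \ldots , w_n) d\tilde{F}_1(w_1) \right\} dF_2(w_2) \cdots dF_n(w_n) \\ = & {} E\left[ g(\tilde{W}_1, W_2, \ldots , W_n) \right] . \end{aligned}$$

\(\square\)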
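Lemma 1 is the step that lets the proof of Theorem 1 replace \({\varvec{p}}\) by the least favourable profiles. The Python sketch below (assuming NumPy, arbitrary non-negative weights summing to one, three observations per arm, and a hypothetical helper `prob_treat_exact`) computes \(E[\hat{\delta }_{\theta }(\textbf{D})]\) exactly by enumeration and illustrates, without proving, that raising a treated-arm success probability never lowers the probability of treatment.

```python
import itertools

import numpy as np


def prob_treat_exact(q1, q0, w1, w0, tol=1e-12):
    """E[delta(D)] with Y1_i ~ Ber(q1_i), Y0_i ~ Ber(q0_i) independent, by full enumeration."""
    total = 0.0
    for y1 in itertools.product([0, 1], repeat=len(q1)):
        for y0 in itertools.product([0, 1], repeat=len(q0)):
            y1a, y0a = np.array(y1), np.array(y0)
            prob = np.prod(np.where(y1a == 1, q1, 1.0 - q1)) * np.prod(np.where(y0a == 1, q0, 1.0 - q0))
            total += prob * float(w1 @ y1a - w0 @ y0a >= -tol)  # plug-in decision for this data set
    return total


rng = np.random.default_rng(1)
w1 = rng.uniform(size=3)
w1 /= w1.sum()  # arbitrary non-negative weights summing to one
w0 = rng.uniform(size=3)
w0 /= w0.sum()
q1_lo = rng.uniform(size=3)
q1_hi = np.minimum(q1_lo + rng.uniform(0.0, 0.3, size=3), 1.0)  # componentwise larger
q0 = rng.uniform(size=3)
# Raising treated success probabilities cannot lower the probability of treating.
print(prob_treat_exact(q1_lo, q0, w1, w0) <= prob_treat_exact(q1_hi, q0, w1, w0) + 1e-12)  # True
```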

Appendix 2: Derivation of (8)

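In this section, we present the derivation of (8). Suppose that \(Y_{1,i} \sim N(p_{1,i}, 0.5^2)\) and \(Y_{0,i} \sim N(p_{0,i}, 0.5^2)\). Then, we set the parameter space to be \(\mathcal {P}^{N} \equiv \mathcal {P}^N_1 \times \mathcal {P}_0^N\), where

$$\begin{aligned} \mathcal {P}^N_1\equiv & {} \left\{ {\varvec{p}}_1 \in \mathbb {R}^{n_1+1} : |p_{1,i} - p_{1,j}| \le C |X_{1,i} - X_{1,j}| \ \text {for all } i,j \in \{0,1,\ldots ,n_1\} \right\} , \\ \mathcal {P}^N_0\equiv & {} \left\{ {\varvec{p}}_0 \in \mathbb {R}^{n_0+1} : |p_{0,i} - p_{0,j}| \le C |X_{0,i} - X_{0,j}| \ \text {for all } i,j \in \{0,1,\ldots ,n_0\} \right\} . \end{aligned}$$

Define \(w_{1,i,\theta } \equiv K(X_{1,i}/\theta ) / \sum _{j=1}^{n_1} K(X_{1,j}/\theta )\) and \(w_{0,i,\theta } \equiv K(X_{0,i}/\theta ) / \sum _{j=1}^{n_0} K(X_{0,j}/\theta )\), and let \(m_{\theta }({\varvec{p}}) \equiv \sum _{i=1}^{n_1} w_{1,i,\theta } p_{1,i} - \sum _{i=1}^{n_0} w_{0,i,\theta } p_{0,i}\). Because the kernel estimate \(\sum _{i=1}^{n_1} w_{1,i,\theta } Y_{1,i} - \sum _{i=1}^{n_0} w_{0,i,\theta } Y_{0,i}\) follows \(N(m_{\theta }({\varvec{p}}), s(\theta )^2)\), with \(s(\theta )\) as defined in Section 4, the regret can be written as

$$R({\varvec{p}},\hat{\delta }_{\theta }) = \left\{ \begin{array}{ll} (p_{1,0}-p_{0,0})\, \Phi \left( - m_{\theta }({\varvec{p}})/s(\theta ) \right) & \text {if } p_{1,0} \ge p_{0,0}, \\ (p_{0,0}-p_{1,0})\, \Phi \left( m_{\theta }({\varvec{p}})/s(\theta ) \right) & \text {if } p_{1,0} < p_{0,0}. \end{array} \right.$$

From the symmetry of \(\mathcal {P}^N\) (\({\varvec{p}} \in \mathcal {P}^N\) implies \(-{\varvec{p}} \in \mathcal {P}^N\)), we have

$$\max _{{\varvec{p}} \in \mathcal {P}^N} R({\varvec{p}},\hat{\delta }_{\theta }) = \max _{{\varvec{p}} \in \mathcal {P}^N:\, p_{1,0} > p_{0,0}} (p_{1,0}-p_{0,0})\, \Phi \left( - \frac{m_{\theta }({\varvec{p}})}{s(\theta )} \right) .$$

From the definitions of \(\mathcal {P}_1^N\) and \(\mathcal {P}_0^N\), and because the weights in each arm sum to one, any \({\varvec{p}} \in \mathcal {P}^N\) with \(p_{1,0} - p_{0,0} = t > 0\) satisfies \(m_{\theta }({\varvec{p}}) \ge t - b(\theta )\), where \(b(\theta ) \equiv C ( \sum _{i=1}^{n_1} w_{1,i,\theta } |X_{1,i}| + \sum _{i=1}^{n_0} w_{0,i,\theta } |X_{0,i}| )\), with equality at \(p_{1,i} = p_{1,0} - C|X_{1,i}|\) and \(p_{0,i} = p_{0,0} + C|X_{0,i}|\). Therefore, we obtain

$$\max _{{\varvec{p}} \in \mathcal {P}^N} R({\varvec{p}},\hat{\delta }_{\theta }) = \max _{t>0} \left\{ t \cdot \Phi \left( \frac{b(\theta ) - t}{s(\theta )} \right) \right\} = s(\theta )\, \eta \left( \frac{b(\theta )}{s(\theta )} \right) ,$$

where the last equality follows from the change of variables \(t = s(\theta ) u\). Hence, we obtain (8). This result is essentially the same as that of Ishihara and Kitagawa (2021).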

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ishihara, T. Bandwidth selection for treatment choice with binary outcomes. JER 74, 539–549 (2023). https://doi.org/10.1007/s42973-023-00142-5
