Abstract

For the analysis of square contingency tables with the same row and column ordinal classifications, this study proposes an index for measuring the degree of departure from the symmetry model using new cumulative probabilities. The proposed index is constructed based on the Cressie and Read’s power divergence, or the weighted average of the Patil and Taillie’s diversity index. This study derives a plug-in estimator of the proposed index and an approximate confidence interval for the proposed index. The estimator of the proposed index is expected to reduce the bias more than the estimator of the existing index, even when the sample size is not large. The proposed index is identical to the existing index under the conditional symmetry model. Therefore, assuming the probability structure in which the conditional symmetry model holds, the performances of plug-in estimators of the proposed and existing indexes can be simply compared. Through numerical examples and real data analysis, the usefulness of the proposed index compared to the existing index is demonstrated.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In two-way contingency tables, we are usually interested in whether or not there is association between the row and column variables. Therefore, for the analysis of two-way contingency tables, the independence model is commonly used. On the other hand, in square contingency tables with the same row and column classifications, we are often interested in whether or not there is symmetric or asymmetric between the row and column variables. This is because, in square contingency tables, there is a strong association. Therefore, for the analysis of square contingency tables, various models having the structure of symmetry or asymmetry are proposed, see for example, Tahata and Tomizawa (2014), Tahata (2020, 2022). The symmetry model (Bowker, 1948) is the original statistical model dedicated to the analysis of square contingency tables.

When the symmetry model does not fit the present data well, we are usually interested in (i) applying extended symmetry models (e.g., the quasi-symmetry model (Caussinus, 1965), the marginal homogeneity model (Stuart, 1955), and the sum-symmetry model (Ando, 2022; Yamamoto et al., 2013)), (ii) applying asymmetry models [e.g., the conditional symmetry model (McCullagh, 1978), the generalized quasi-symmetry model (Kateri and Papaioannou, 1997), the ordinal generalized quasi-symmetry model (Kateri and Agresti, 2007), and the m-additional parameters marginal homogeneity model (Tahata and Tomizawa, 2008)], and (iii) measuring the degree of departure from the symmetry model. This study focuses on an index for measuring the degree of departure from the symmetry model.

For the analysis of square contingency tables with nominal categories, Tomizawa (1994) proposed two kinds of indexes for measuring the degree of departure from the symmetry model. One index is constructed based on the Kullback–Leibler divergence (or the weighted average of conditional Shannon entropy), and the other is conducted based on the Pearson’s chi-squared type divergence (or the weighted average of conditional Gauss divergence). Thereafter, Tomizawa et al. (1998) considered a generalization of those indexes. The generalized index, denoted by \(\psi ^{(\lambda )}\), is constructed based on the power divergence of Cressie and Read (1984), or the weighted average of the diversity index of Patil and Taillie (1982). It must be noted that the \(\psi ^{(\lambda )}\) is the function of the cell probabilities \(\{\pi _{ij}\}\). When we want to use the information with respect to the order of listing the categories, it would not be appropriate to apply the \(\psi ^{(\lambda )}\). This is because, the \(\psi ^{(\lambda )}\) is invariant under arbitrary same permutations of row and column categories.

For the analysis of square contingency tables with ordinal categories, Tomizawa et al. (2001) proposed an index, denoted by \(\phi ^{(\lambda )}\), for measuring the degree of departure from the symmetry model. The \(\phi ^{(\lambda )}\) is also constructed based on the power divergence of Cressie and Read (1984), or the weighted average of the diversity index of Patil and Taillie (1982). It must be noted that the \(\phi ^{(\lambda )}\) is the function of the cumulative probabilities \(\{G_{ij}\}\). Since the cumulative probabilities \(\{G_{ij}\}\) can be defined for only ordinal categorical variables, it may be applicable to apply the \(\phi ^{(\lambda )}\) to square contingency tables with ordered categories. See Sect. 2 for the details of \(\{G_{ij}\}\) and the \(\phi ^{(\lambda )}\).

These indexes \(\psi ^{(\lambda )}\) and \(\phi ^{(\lambda )}\) are important to compare the degrees of departure from the symmetry model for several data as the ranges of these indexes are both zero to one, and the values of these indexes do not depend on the sample size. For the analysis of square contingency tables with ordinal categories, analysts may compare the degrees of departure from the symmetry model though estimating the asymmetry parameter of conditional symmetry model. This procedure, however, can only be used when the conditional symmetry model holds. The index \(\phi ^{(\lambda )}\) has no such limitation.

Using the first-order term in the Taylor expansion, the plug-in estimators \(\hat{\psi }^{(\lambda )}\) and \(\hat{\phi }^{(\lambda )}\) with the cell probabilities replaced by the corresponding sample proportions are approximately the unbiased estimators of \(\psi ^{(\lambda )}\) and \(\phi ^{(\lambda )}\), respectively. Moreover, using the delta method, the \(\sqrt{N}(\hat{\psi }^{(\lambda )} - \psi ^{(\lambda )})\) (or \(\sqrt{N}(\hat{\phi }^{(\lambda )} - \phi ^{(\lambda )})\)) is asymptotically distributed as a normal distribution with mean zero and variance \(\sigma ^2[\hat{\psi }^{(\lambda )}]\) (or \(\sigma ^2[\hat{\phi }^{(\lambda )}]\)), where the N is sample size. It must be noted that the asymptotic normal distribution of \(\sqrt{N}(\hat{\psi }^{(\lambda )} - \psi ^{(\lambda )})\) (or \(\sqrt{N}(\hat{\phi }^{(\lambda )} - \phi ^{(\lambda )})\)) is not applicable when \(\psi ^{(\lambda )} = 0\) and \(\psi ^{(\lambda )} = 1\) (or \(\phi ^{(\lambda )} = 0\) and \(\phi ^{(\lambda )} = 1\)). For the details of above results, see previous studies Tomizawa et al. (1998, 2001), Ando et al. (2017). When the sample size is not so large, the \(\hat{\psi }^{(\lambda )}\) and \(\hat{\phi }^{(\lambda )}\) may not be the unbiased estimators. To address this issue, using the second-order term in the Taylor expansion, Tomizawa et al. (2007) proposed the improved approximate unbiased estimators of \(\psi ^{(\lambda )}\) and \(\phi ^{(\lambda )}\) that are better than the \(\hat{\psi }^{(\lambda )}\) and \(\hat{\phi }^{(\lambda )}\). However, in this approach, it is difficult to derive the asymptotic distributions of the improved approximate unbiased estimators for \(\psi ^{(\lambda )}\) and \(\phi ^{(\lambda )}\).

For square contingency tables with ordered categories, this study takes particular note of a new cumulative probabilities different from \(\{G_{ij}\}\). The new cumulative probabilities are defined to be greater on average than \(\{G_{ij}\}\). The purposes of this study are to (i) give the equivalence constraint of the symmetry model using the new cumulative probabilities, (ii) propose an index for measuring the degree of departure from the symmetry model using the new cumulative probabilities, and (iii) derive a plug-in estimator of the proposed index and an approximate confidence interval for the proposed index. The estimator of the proposed index is expected to reduce the bias more than \(\hat{\phi }^{(\lambda )}\), even when the sample size is not large. Moreover, we discuss the situation, where the \(\phi ^{(\lambda )}\) cannot apply to the data, while the proposed index can.

The rest of this paper is organized as follows. Section 2 gives the equivalence constraint of the symmetry model using the new cumulative probabilities, and introduces the existing and proposed indexes for measuring the degree of departure from the symmetry model. In Sect. 3, we derive a plug-in estimator of the proposed index and an approximate confidence interval for the proposed index. Section 4 shows the relation between the proposed index and the conditional symmetry model. Sections 5 and 6 conduct the numerical experiment and read data analysis, respectively. In Sect. 7, we discuss the difference of the limitation between the proposed and existing indexes. Section 8 closes with the concluding remarks.

2 Proposed method

2.1 Symmetry model

Consider an \(R \times R\) square contingency table. Let \(\pi _{ij}\) be the cell probability that an observation will fall in (i, j)th cell of the square contingency table for \(i, j = 1,2,\dots ,R\).

The symmetry model (Bowker, 1948) is defined by

The symmetry model indicates the symmetric structure of cell probabilities with respect to the main diagonals cells (i.e., (i, i)th cells for \(i=1,2,\dots ,R\)) of the square contingency table.

For square contingency tables with ordered categories, let denote the cumulative probabilities \(G_{ij}\) for \(i,j=1,2,\dots ,R\) as follows:

The symmetry model can be expressed as

see McCullagh (1978).

Let

This study introduces new cumulative probabilities as follows:

The cumulative probabilities \(\Pi _{ij}\), for \(i<j\), indicate the sum of the cell probabilities of the upper triangular elements in the square contingency table, excluding the (i, j)th cell, and the \(\Pi _{ij}\), for \(i>j\), indicate the sum of the cell probabilities of the lower triangular elements, excluding the (i, j)th cell. The \(\pi _{st}\) for one \(s<t\) is always included \((R+1)(R-2)/2\) within the sum of \(\Pi _{ij}\) for all \(i<j\), while the \(\pi _{st}\) is included \((t-s)(t-s+1)/2\) within the sum of \(G_{ij}\) for all \(i<j\). The \((t-s)(t-s+1)/2\) takes the maximum value \(R(R-1)/2\) when \(t-s=R-1\) (i.e., \(\pi _{st}=\pi _{1R}\)), and it takes the minimum value 1 when \(t-s=1\). Therefore, the cumulative probabilities \(\{\Pi _{ij}\}\) tend to be greater on average than \(\{G_{ij}\}\). When the sample size is not large, it is expected to be more robust to estimate a function of \(\{\Pi _{ij}\}\) that takes on average larger values than \(\{G_{ij}\}\).

This study gives the following theorem with respect to the equivalence constraint of the symmetry model using the \(\{\Pi _{ij}\}\).

Theorem 1

The following necessary and sufficient condition with respect to the symmetry model holds.

See Appendix A for the proof of Theorem 1. From Theorem 1, the symmetry model can also be expressed as

2.2 Existing index of symmetry

Tomizawa et al. (2001) proposed the index \(\phi ^{(\lambda )}\) for measuring the degree of departure from the symmetry model using \(\{G_{ij}\}\). Assume that \(G_{ij}+G_{ji} >0\), for \(i<j\), the \(\phi ^{(\lambda )}\) is defined by

with

where

It must be noted that \(I^{(\lambda )}_G\) is the power-divergence of Cressie and Read (1984) between \(\{G^*_{ij}\}\) and \(\{Q^*_{ij}\}\), and \(G^*_{ij}=Q^*_{ij}\) for \(i \ne j\) when the symmetry model holds. The value at \(\lambda =0\) is taken to be limit as \(\lambda \rightarrow 0\), that is,

where

It must be noted that \(I^{(0)}_G\) is the Kullback–Leibler (KL) divergence between \(\{G^*_{ij}\}\) and \(\{Q^*_{ij}\}\).

The symmetry model can be expressed as

where

The \(\phi ^{(\lambda )}\) can be expressed as

where

It must be noted that \(H^{(\lambda )}_{G(ij)}\) is the Patil and Taillie (1982) diversity index of degree \(\lambda\) for \(\{G^c_{ij}, G^c_{ji}\}\). The value at \(\lambda =0\) is taken to be limit as \(\lambda \rightarrow 0\), that is,

where

It must be noted that \(H^{(0)}_{G(ij)}\) is the Shannon entropy for \(\{G^c_{ij}, G^c_{ji}\}\).

The \(\phi ^{(\lambda )}\) has the following properties: (i) the range of \(\phi ^{(\lambda )}\) is zero to one, (ii) the \(\phi ^{(\lambda )}\) is equal to zero if and only if the symmetry model holds, and (iii) the \(\phi ^{(\lambda )}\) is equal to one if and only if the degree of departure from the symmetry model is largest, that is, \(G^c_{ij}=0\) and \(G^c_{ji}=1\) or \(G^c_{ij}=1\) and \(G^c_{ji}=0\) for \(i<j\).

2.3 Proposed index of symmetry

In this section, we propose an index \(\Phi ^{(\lambda )}\) for measuring the degree of departure from the symmetry model using \(\{\Pi _{ij}\}\). Assume that \(\Pi _{ij}+\Pi _{ji} >0\), for \(i<j\), the \(\Phi ^{(\lambda )}\) is defined by

with

where

It must be noted that \(I^{(\lambda )}_\Pi\) is the power-divergence between \(\{\Pi ^*_{ij}\}\) and \(\{\Theta ^*_{ij}\}\), and \(\Pi ^*_{ij}=\Theta ^*_{ij}\) for \(i \ne j\) when the symmetry model holds. The value at \(\lambda =0\) is taken to be limit as \(\lambda \rightarrow 0\), that is,

where

It must be noted that \(I^{(0)}_\Pi\) is the KL divergence between \(\{\Pi ^*_{ij}\}\) and \(\{\Theta ^*_{ij}\}\).

When \(\lambda =0\), the \(I^{(\lambda )}_\Pi\) is identical to the minimum KL divergence between \(\{\Pi ^*_{ij}\}\) and probability distribution with the structure of the symmetry model. When \(\lambda \ne 0\), the \(I^{(\lambda )}_\Pi\) is not the minimum power-divergence divergence between \(\{\Pi ^*_{ij}\}\) and probability distribution with the structure of symmetry model. Therefore, if analysts want to measure the degree of departure from the symmetry model by using the minimum power-divergence between \(\{\Pi ^*_{ij}\}\) and probability distribution with the structure of symmetry model, then analysts can use only the \(\Phi ^{(0)}\) based on the minimum KL divergence.

The symmetry model can be expressed as

where

The \(\Phi ^{(\lambda )}\) can be expressed as

where

It must be noted that \(I^{(\lambda )}_{\Pi (ij)}\) is the power-divergence between \(\{\Pi ^*_{ij}, \Pi ^*_{ji}\}\) and \(\{1/2, 1/2\}\). The value at \(\lambda =0\) is taken to be limit as \(\lambda \rightarrow 0\), that is,

where

It must be noted that \(I^{(0)}_{\Pi (ij)}\) is the KL divergence between \(\{\Pi ^*_{ij}, \Pi ^*_{ji}\}\) and \(\{1/2, 1/2\}\).

The \(\Phi ^{(\lambda )}\) can also be expressed as

where

It must be noted that \(H^{(\lambda )}_{\Pi (ij)}\) is the Patil and Taillie’s diversity index of degree \(\lambda\) for \(\{\Pi ^c_{ij}, \Pi ^c_{ji}\}\). The value at \(\lambda =0\) is taken to be limit as \(\lambda \rightarrow 0\), that is,

where

It must be noted that \(H^{(0)}_{\Pi (ij)}\) is the Shannon entropy for \(\{\Pi ^c_{ij}, \Pi ^c_{ji}\}\).

This study gives the following theorem with respect to the properties of \(\Phi ^{(\lambda )}\).

Theorem 2

The \(\Phi ^{(\lambda )}\) has the following properties: (i) the range of \(\Phi ^{(\lambda )}\) is zero to one, (ii) the \(\Phi ^{(\lambda )}\) is equal to zero if and only if the symmetry model holds, and (iii) the \(\Phi ^{(\lambda )}\) is equal to one if and only if the degree of departure from the symmetry model is largest, that is, \(\Pi ^c_{ij}=0\) and \(\Pi ^c_{ji}=1\) for all \(i<j\) or \(\Pi ^c_{ij}=1\) and \(\Pi ^c_{ji}=0\) for all \(i<j\).

See Appendix B for the proof of Theorem 2. The definition of the maximum departure from the symmetry model in the \(\Phi ^{(\lambda )}\) (i.e., \(\Pi ^c_{ij}=0\) and \(\Pi ^c_{ji}=1\) for all \(i<j\) or \(\Pi ^c_{ij}=1\) and \(\Pi ^c_{ji}=0\) for all \(i<j\)) indicates that the cell probability \(\pi _{ij}=0\) for all \(i<j\) or \(\pi _{ji}=0\) for all \(i<j\) under the condition \(\Pi _{ij}+\Pi _{ji} >0\).

It must be noted that the \(\phi ^{(\lambda )}\) is defined under the condition \(G_{ij}+G_{ji} >0\) for \(i<j\), and the \(\Phi ^{(\lambda )}\) is defined under the condition \(\Pi _{ij}+\Pi _{ji} >0\) for \(i<j\). We will discuss the difference of the limitation between the proposed index \(\Phi ^{(\lambda )}\) and the existing index \(\phi ^{(\lambda )}\) in Sect. 7.

3 Estimator and confidence interval for proposed index

Let \(n_{ij}\) be the observed frequency in (i, j)th cell of the square contingency table for \(i, j = 1,2,\dots ,R\). Assume that a multinomial distribution applies to the square contingency table. Let \(\hat{\pi }_{ij}\) (\(=n_{ij}/N\)) be the sample proportions of \(\pi _{ij}\) for \(i, j = 1,2,\dots ,R\), where \(N=\sum \sum n_{ij}\).

The plug-in estimator \(\hat{\Phi }^{(\lambda )}\) with \(\{\pi _{ij}\}\) replaced by \(\{\hat{\pi }_{ij}\}\) is approximately the unbiased estimator of \(\Phi ^{(\lambda )}\). This is because, it is obvious from the following equation using the Taylor expansion:

where \(\hat{\varvec{\pi }}\) and \(\varvec{\pi }\) are the \(R^2 \times 1\) vectors, that is,

We will derive the asymptotic distribution of \(\hat{\Phi }^{(\lambda )}\) using the delta method (Bishop et al. 2007, Sec. 14.6). Additionally, the approximate confidence interval for \(\Phi ^{(\lambda )}\) is derived. From the central limit theorem, \(\sqrt{N}(\hat{\varvec{\pi }} - \varvec{\pi })\) is asymptotically (\(N \rightarrow \infty\)) distributed as a normal distribution with mean zero vector and the covariance matrix \(\varvec{D}(\varvec{\pi }) - \varvec{\pi }\varvec{\pi }^\top\), where \(\varvec{D}(\varvec{\pi })\) is a diagonal matrix with the kth component of \(\varvec{\pi }\) in the kth diagonal element (\(k=1,\dots ,R^2\)). Although these convergences hold when the cell probability \(\pi _{ij}\) is sufficiently large, they may not apply when the order of \(N\pi _{ij}\) is small. From the delta method and the Eq. (1), \(\sqrt{N}(\hat{\Phi }^{(\lambda )} - \Phi ^{(\lambda )})\) is asymptotically (\(N \rightarrow \infty\)) distributed as a normal distribution with mean zero and the variance \(\sigma ^2[\hat{\Phi }^{(\lambda )}]\). The detail of the variance \(\sigma ^2[\hat{\Phi }^{(\lambda )}]\) is as follows:

where for \(\lambda >-1\) and \(\lambda \ne 0\),

with

and for \(\lambda = 0\),

Since

the variance \(\sigma ^2[\hat{\Phi }^{(\lambda )}]\) can be expressed as

It must be noted that the asymptotic normal distribution of \(\sqrt{N}(\hat{\Phi }^{(\lambda )} - \Phi ^{(\lambda )})\) is not applicable when \(\Phi ^{(\lambda )} = 0\) and \(\Phi ^{(\lambda )} = 1\). This is because the variance of \(\hat{\Phi }^{(\lambda )}\) is equal to zero.

Since the variance \(\sigma ^2[\hat{\Phi }^{(\lambda )}]\) is unknown, we introduce the estimator \(\widehat{\sigma ^{2} [\hat{\Phi }^{(\lambda )}]}\) that is \(\sigma ^2[\hat{\Phi }^{(\lambda )}]\) with \(\{\pi _{ij}\}\) replaced by \(\{\hat{\pi }_{ij}\}\). Therefore, we derive an approximate \(100(1-\alpha )\%\) confidence interval for \(\Phi ^{(\lambda )}\) as follows:

where \(z_{\alpha /2}\) is the quantile of the standard normal distribution corresponding to a two-tail probability of \(\alpha\).

Let the \(\Phi ^{(\lambda )}_\text {A}\) and \(\Phi ^{(\lambda )}_\text {B}\) be the \(\Phi ^{(\lambda )}\) for independent datasets A and B, respectively. An estimator of the difference between the \(\Phi ^{(\lambda )}_\text {A}\) and \(\Phi ^{(\lambda )}_\text {B}\) is given by \(\hat{\Phi }^{(\lambda )}_\text {A} - \hat{\Phi }^{(\lambda )}_\text {B}\). When the sample sizes \(N_\text {A}\) and \(N_\text {B}\) for datasets A and B are large, this estimator \(\hat{\Phi }^{(\lambda )}_\text {A} - \hat{\Phi }^{(\lambda )}_\text {B}\) is asymptotically distributed as a normal distribution with mean \(\Phi ^{(\lambda )}_\text {A} - \Phi ^{(\lambda )}_\text {B}\) and the variance \(\sigma ^2[\hat{\Phi }^{(\lambda )}_\text {A}]/N_\text {A}+\sigma ^2[\hat{\Phi }^{(\lambda )}_\text {B}]/N_\text {B}\). Therefore, we derive an approximate \(100(1-\alpha )\%\) confidence interval for \(\Phi ^{(\lambda )}_\text {A} - \Phi ^{(\lambda )}_\text {B}\) as follows:

4 Relation between proposed index and conditional symmetry model

The conditional symmetry model (McCullagh 1978) is defined by

The conditional symmetry model indicates the asymmetric structure of cell probabilities with respect to the main diagonals cells of the square contingency table.

Under the conditional symmetry model, the proposed index \(\Phi ^{(\lambda )}\) is the function of \(\tau\) as follows: for \(\lambda >-1\),

Additionally, under the conditional symmetry model, the existing index \(\phi ^{(\lambda )}\) also is the function of \(\tau\) as follows: for \(\lambda >-1\),

The above results show that the proposed index \(\Phi ^{(\lambda )}\) is identical to the existing index \(\phi ^{(\lambda )}\) under the conditional symmetry model. Therefore, assuming the probability structure in which the conditional symmetry model holds, the performances of plug-in estimators \(\hat{\Phi }^{(\lambda )}\) and \(\hat{\phi }^{(\lambda )}\) can be simply compared. In Sect. 5, we will evaluate the performances of them.

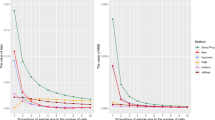

Figure 1 sketches the value of the \(\Phi ^{(\lambda )}\) corresponding to the value of \(\tau\) in the range \(0.1 \le \tau \le 10\) under the conditional symmetry model. The \(\Phi ^{(\lambda )}\) increases monotonically as \(\tau\) approaches zero or infinity.

5 Numerical examples

When the sample size is large enough, the plug-in estimator \(\hat{\Phi }^{(\lambda )}\) of the proposed index is an unbiased estimator, and the approximate confidence interval for the proposed index works. Therefore, it is necessary to evaluate the performances of those in a finite sample.

5.1 Setup

Assume that the observed frequencies \(\{n_{ij}\}\) are distributed as a multinomial distribution with probability vector \(\varvec{\pi }\). Table 1 shows the probability vectors \(\varvec{\pi }\) that satisfy the restriction of the conditional symmetry model. We will generate the random numbers based on the multinomial distribution with probability vector \(\varvec{\pi }\) shown in Table 1.

Table 2 shows the true values of the \(\Phi ^{(\lambda )}\) and \(\phi ^{(\lambda )}\) for \(\lambda =0,0.5,1,1.5\) for each scenario in Table 1. It must be noted that the \(\Phi ^{(\lambda )}\) is identical to the \(\phi ^{(\lambda )}\) under the conditional symmetry model.

Let \(\hat{\Phi }_l^{(\lambda )}\) and \(\hat{\phi }_l^{(\lambda )}\) for \(l=1,\dots ,10000\) be the estimates of \(\Phi ^{(\lambda )}\) and \(\phi ^{(\lambda )}\) in the lth iteration, respectively. The sample sizes N are set 50, 100, 200, 300, 500 and 1000. The performances of the plug-in estimators \(\hat{\Phi }^{(\lambda )}\) and \(\hat{\phi }^{(\lambda )}\) are evaluated by the Bias (i.e., \(\sum _{l=1}^{10000}(\hat{\Phi }_l^{(\lambda )}-\Phi ^{(\lambda )})/10000\) and \(\sum _{l=1}^{10000}(\hat{\phi }_l^{(\lambda )}-\phi ^{(\lambda )})/10000\)) and the mean squared error (MSE) (i.e., \(\sum _{l=1}^{10000}(\hat{\Phi }_l^{(\lambda )}-\Phi ^{(\lambda )})^2/10000\) and \(\sum _{l=1}^{10000}(\hat{\phi }_l^{(\lambda )}-\phi ^{(\lambda )})^2/10000\)). Additionally, the performances of the approximate confidence intervals for \(\Phi ^{(\lambda )}\) and \(\phi ^{(\lambda )}\) are evaluated by the coverage probability (CP) which is the proportion of the time that the approximate confidence interval contains the true value.

5.2 Results

Tables 3, 4 and 5 show the Bias, MSE of the plug-in estimators \(\hat{\Phi }^{(\lambda )}\) and \(\hat{\phi }^{(\lambda )}\), and the CP of the approximate 95\(\%\) confidence intervals for \(\Phi ^{(\lambda )}\) and \(\phi ^{(\lambda )}\). The Bias and MSE were each close to zero as the sample size increased. Additionally, the Bias and MSE of the \(\hat{\Phi }^{(\lambda )}\) were smaller in all scenarios compared to those of \(\hat{\phi }^{(\lambda )}\). Therefore, from Tables 3, 4 and 5, we conclude that the plug-in estimator \(\hat{\Phi }^{(\lambda )}\) converges to the true value \(\Phi ^{(\lambda )}\) (or \(\phi ^{(\lambda )}\)) faster than \(\hat{\phi }^{(\lambda )}\) under the conditional symmetry model. Moreover, we consider that the \(\hat{\Phi }^{(\lambda )}\) reduces the bias more than than \(\hat{\phi }^{(\lambda )}\), even when the sample size is not large.

The biases of plug-in estimator \(\hat{\Phi }^{(\lambda )}\) are positive in all scenarios. In the proposed index \(\Phi ^{(\lambda )}\), the maximum of \(H^{(\lambda )}_{\Pi (ij)}\) is \((2^\lambda -1)/(\lambda 2^\lambda )\) when \(\Pi ^c_{ij} = \Pi ^c_{ji} = 1/2\), and the minimum of \(H^{(\lambda )}_{\Pi (ij)}\) is zero when \(\Pi ^c_{ij}=0\) and \(\Pi ^c_{ji}=1\) or \(\Pi ^c_{ij}=1\) and \(\Pi ^c_{ji}=0\). Therefore, the plug-in estimator \(\hat{\Phi }^{(\lambda )}\) arise positive bias by overestimating (or underestimating) \(\Pi _{ij}\) and underestimating (or overestimating) \(\Pi _{ji}\) for \(i<j\).

The CP was close to 95% as the sample size increased. When the sample size N is 300, the CP of the approximate 95\(\%\) confidence interval for \(\Phi ^{(\lambda )}\) were over the 94% in all scenarios, although those for \(\phi ^{(\lambda )}\) were under the 94% in most scenarios. In the case of the degree of departure from the symmetry model is not large (i.e., Scenario 1), the CP of the approximate 95\(\%\) confidence interval for \(\phi ^{(\lambda )}\) were under the 94% even when \(N=500\).

6 Real data analysis

The real datasets in Table 6 were analyzed to illustrate the utility of the proposed index \(\Phi ^{(\lambda )}\). These real datasets recorded the distance vision were taken from Tan (2017, p. 78, p. 139). Such distance vision data have been analyzed with respect to the symmetry model or its related models, see for example, Bowker (1948), Stuart (1955), Caussinus (1965), Bishop et al. (2007) and Tan (2017).

From Table 7, the approximate 95% confidence intervals for \(\Phi ^{(\lambda )}\) for \(\lambda =0, 0.5, 1, 1.5\) do not include zero for the dataset in Table 6a, and include zero for the dataset in Table 6b. Additionally, for the dataset in Table 6a, the degree of departure from the symmetry model is comparable with the conditional symmetry model in which \(\tau\) is about 1.5 to 2.5, and it is fairly slight for the dataset in Table 6b.

Next, we will compare the degrees of departure from the symmetry in two datasets of Table 6. From Table 8, the approximate 95% confidence intervals for the difference of \(\Phi ^{(\lambda )}\) between Table 6a, b are positive for all \(\lambda =0, 0.5, 1, 1.5\). Therefore, we conclude that the degree of departure from the symmetry in dataset of Table 6a is greater than that in dataset of Table 6b. The above results for datasets of Table 6 can also be obtained by using the existing index \(\phi ^{(\lambda )}\).

7 Discussion

In this section, we will discuss the difference of the limitation between the proposed index \(\Phi ^{(\lambda )}\) and the existing index \(\phi ^{(\lambda )}\). The \(\phi ^{(\lambda )}\) is defined under the condition \(G_{ij}+G_{ji} >0\) for \(i<j\), and the \(\Phi ^{(\lambda )}\) is defined under the condition \(\Pi _{ij}+\Pi _{ji} >0\) for \(i<j\). When the observed frequencies concentrate on the only one pair of symmetric cells (i.e., \(n_{ab}\) and \(n_{ba}\) for \(a \ne b\)) except the main diagonals cells, the \(\Phi ^{(\lambda )}\) cannot be estimated. However, we believe that, in practice, this situation is unlikely to occur. On the other hand, we believe that the situation, where the \(\phi ^{(\lambda )}\) cannot be estimated, may occur.

The datasets in Table 9 was taken from Agresti 2010[p. 266]. These datasets show the data for the right and left eyes, stratified by the gender of the person, with the four ordered categories used to summarize retinopathy severity.

For the datasets in Table 9, the \(\hat{\phi }^{(\lambda )}\) cannot be calculated. This is because, these datasets do not satisfy the assumption of the \(\phi ^{(\lambda )}\). On the other hand, since these datasets satisfy the assumption of the \(\Phi ^{(\lambda )}\), the \(\hat{\Phi }^{(\lambda )}\) can be calculated, see Table 10. Therefore, we believe that the proposed index \(\Phi ^{(\lambda )}\) is more useful than the existing index \(\phi ^{(\lambda )}\) in these points.

8 Concluding remarks

This study proposed the new index \(\Phi ^{(\lambda )}\) that can measure the degree of departure from the symmetry model. The \(\Phi ^{(\lambda )}\) was constructed based on the power divergence of Cressie and Read 1984, or the weighted average of the diversity index of Patil and Taillie (1982). Additionally, the \(\Phi ^{(\lambda )}\) is the function of the cumulative probabilities \(\{\Pi _{ij}\}\).

Through the numerical examples, real data analysis and discussion, the usefulness of the proposed index \(\Phi ^{(\lambda )}\) compared to existing index \(\phi ^{(\lambda )}\) was demonstrated. In the numerical examples, we concluded that the plug-in estimator \(\hat{\Phi }^{(\lambda )}\) converges to the true value faster than \(\hat{\phi }^{(\lambda )}\). In the discussion, we showed that the \(\Phi ^{(\lambda )}\) can be estimated in the situation where the \(\phi ^{(\lambda )}\) cannot be estimated.

The reader may be interested in which value of \(\lambda\) is preferred for a given dataset. However, it seems difficult to discuss this. It seems to be important that, for given datasets, the analyst calculates the values of \(\hat{\Phi }^{(\lambda )}\) for various values of \(\lambda\) and discusses the degrees of departure from the symmetry model in terms of these \(\hat{\Phi }^{(\lambda )}\) values.

References

Agresti, A. (2010). Analysis of ordinal categorical data (2nd ed.). Wiley.

Ando, S. (2022). Orthogonal decomposition of symmetry model using sum-symmetry model for ordinal square contingency tables. Chilean Journal of Statistics, 13(2), 221–231.

Ando, S., Tahata, K., & Tomizawa, S. (2017). Visualized measure vector of departure from symmetry for square contingency tables. Statistics in Biopharmaceutical Research, 9(2), 212–224.

Bishop, Y. M., Fienberg, S. E., & Holland, P. W. (2007). Discrete multivariate analysis: Theory and practice. Springer.

Bowker, A. H. (1948). A test for symmetry in contingency tables. Journal of the American Statistical Association, 43(244), 572–574.

Caussinus, H. (1965). Contribution à l’analyse statistique des tableaux de corrélation. Annales de la Faculté des Sciences de l’Universitéde Toulouse, 29, 77–183.

Cressie, N., & Read, T. R. (1984). Multinomial goodness-of-fit tests. Journal of the Royal Statistical Society: Series B (Methodological), 46(3), 440–464.

Kateri, M., & Agresti, A. (2007). A class of ordinal quasi-symmetry models for square contingency tables. Statistics & Probability Letters, 77(6), 598–603.

Kateri, M., & Papaioannou, T. (1997). Asymmetry models for contingency tables. Journal of the American Statistical Association, 92(439), 1124–1131.

McCullagh, P. (1978). A class of parametric models for the analysis of square contingency tables with ordered categories. Biometrika, 65(2), 413–418.

Patil, G., & Taillie, C. (1982). Diversity as a concept and its measurement. Journal of the American Statistical Association, 77(379), 548–561.

Stuart, A. (1955). A test for homogeneity of the marginal distributions in a two-way classification. Biometrika, 42(3/4), 412–416.

Tahata, K. (2020). Separation of symmetry for square tables with ordinal categorical data. Japanese Journal of Statistics and Data Science, 3(2), 469–484.

Tahata, K. (2022). Advances in quasi-symmetry for square contingency tables. Symmetry, 14(5), 1051.

Tahata, K., & Tomizawa, S. (2008). Generalized marginal homogeneity model and its relation to marginal equimoments for square contingency tables with ordered categories. Advances in Data Analysis and Classification, 2(3), 295–311.

Tahata, K., & Tomizawa, S. (2014). Symmetry and asymmetry models and decompositions of models for contingency tables. SUT Journal of Mathematics, 50(2), 131–165.

Tan, T. K. (2017). Doubly classified model with R. Springer.

Tomizawa, S. (1994). Two kinds of measures of departure from symmetry in square contingency tables having nominal categories. Statistica Sinica, 4(1), 325–334.

Tomizawa, S., Miyamoto, N., & Hatanaka, Y. (2001). Measure of asymmetry for square contingency tables having ordered categories. Australian & New Zealand Journal of Statistics, 43(3), 335–349.

Tomizawa, S., Miyamoto, N., & Ohba, N. (2007). Improved approximate unbiased estimators of measures of asymmetry for square contingency tables. Advances and Applications in Statistics, 7(1), 47–63.

Tomizawa, S., Seo, T., & Yamamoto, H. (1998). Power-divergence-type measure of departure from symmetry for square contingency tables that have nominal categories. Journal of Applied Statistics, 25(3), 387–398.

Yamamoto, K., Tanaka, Y., & Tomizawa, S. (2013). Sum-symmetry model and its orthogonal decomposition for square contingency tables with ordered categories. SUT Journal of Mathematics, 49(2), 121–128.

Acknowledgements

The authors are grateful to two anonymous referees and the editor for their valuable suggestions on improving this article.

Funding

Open Access funding provided by Tokyo University of Science.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no Conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Proof of Theorem 1

Proof

Let denote the following \(R(R-1)/2 \times 1\) vectors:

where the symbol “\(\top\)" indicates the transpose. The \(\varvec{\Pi }_U\) and \(\varvec{\Pi }_L\) are represented as \(\varvec{A}\varvec{\pi }_U\) and \(\varvec{A}\varvec{\pi }_L\), respectively, where the \(\varvec{A}\) is the \(R(R-1)/2 \times R(R-1)/2\) matrix with diagonal elements 0 and off-diagonal elements 1. It must be noted that the matrix \(\varvec{A}\) is obviously non-singular matrix. Therefore, we obtain the following necessary and sufficient condition.

The proof is completed.

Proof of Theorem 2

Proof

Since \(\Pi ^c_{ij}+\Pi ^c_{ji}=1\) for \(i<j\), for each \(\lambda\), the \(H^{(\lambda )}_{\Pi (ij)}\) can be expressed as the function of \(\Pi ^c_{ij}\) as follows:

In the case of \(\lambda > -1 \ \text {and} \ \lambda \ne 0\), the maximum of \(H^{(\lambda )}_{\Pi (ij)}\) is \((2^\lambda -1)/(\lambda 2^\lambda )\) when \(\Pi ^c_{ij} = \Pi ^c_{ji} = 1/2\), and the minimum of \(H^{(\lambda )}_{\Pi (ij)}\) is zero when \(\Pi ^c_{ij}=0\) and \(\Pi ^c_{ji}=1\) or \(\Pi ^c_{ij}=1\) and \(\Pi ^c_{ji}=0\). In the case of \(\lambda = 0\), the maximum of \(H^{(0)}_{\Pi (ij)}\) is \(\log 2\) when \(\Pi ^c_{ij} = \Pi ^c_{ji} = 1/2\), and the minimum of \(H^{(0)}_{\Pi (ij)}\) is zero when \(\Pi ^c_{ij}=0\) and \(\Pi ^c_{ji}=1\) or \(\Pi ^c_{ij}=1\) and \(\Pi ^c_{ji}=0\). From these results, the properties (i) and (ii) hold. Additionally, we show that the property (iii) also holds. For \(1\le \alpha< \beta < \gamma \le R\), if \(\Pi ^c_{\alpha \beta }=0\) and \(\Pi ^c_{\alpha \gamma }=1\), then \(\Pi ^c_{\beta \gamma }\ne 0\) and \(\Pi ^c_{\beta \gamma }\ne 1\). Thus, for all \(i<j\), the \(H^{(\lambda )}_{\Pi (ij)}\) is zero only when \(\Pi ^c_{ij}=0\) and \(\Pi ^c_{ji}=1\) for all \(i<j\) or \(\Pi ^c_{ij}=1\) and \(\Pi ^c_{ji}=0\) for all \(i<j\) but \(\Pi ^c_{ij}=0\) and \(\Pi ^c_{ji}=1\) or \(\Pi ^c_{ij}=1\) and \(\Pi ^c_{ji}=0\) for \(i<j\).

Therefore, the \(\Phi ^{(\lambda )}\) has the following properties: (i) the range of \(\Phi ^{(\lambda )}\) is zero to one, (ii) the \(\Phi ^{(\lambda )}\) is equal to zero if and only if the symmetry model holds, and (iii) the \(\Phi ^{(\lambda )}\) is equal to one if and only if the degree of departure from the symmetry model is largest, that is, \(\Pi ^c_{ij}=0\) and \(\Pi ^c_{ji}=1\) for all \(i<j\) or \(\Pi ^c_{ij}=1\) and \(\Pi ^c_{ji}=0\) for all \(i<j\). The proof is completed.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ando, S., Momozaki, T., Masusaki, Y. et al. An index for measuring degree of departure from symmetry for ordinal square contingency tables. J. Korean Stat. Soc. (2024). https://doi.org/10.1007/s42952-024-00271-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s42952-024-00271-6