Abstract

Homeowners, first-time buyers, banks, governments and construction companies are highly interested in following the state of the property market. Currently, property price indexes are published several months out of date and hence do not offer the up-to-date information which housing market stakeholders need in order to make informed decisions. In this article, we present an enhanced version of a mix-adjusted median based property price index which uses geospatial property data and stratification in order to compare similar houses sold in different trading periods. The expansion of the algorithm to include additional parameters, enabled by both a richer dataset and the introduction of a new, efficient data structure for nearest neighbour approximation, allows for the construction of a far smoother and more robust index than the original algorithm produced.

1 Introduction

House price indexes provide vital information to the political, financial and sales markets, affecting the operation and services of lending institutions greatly and influencing important governmental decisions [1]. As one of the largest asset classes, house prices can even offer insight regarding the overall state of the economy of a nation [2]. Property value trends can predict near-future inflation or deflation and also have a considerable effect on the gross domestic product and the financial markets [3, 4].

There are a multitude of stakeholders interested in the development and availability of an algorithm which can offer an accurate picture of the current state of the housing market, including home buyers, construction companies, governments, banks and homeowners [5, 6].

Due to the recent global financial crisis, house price indexes and forecasting models play a more crucial role than ever. The key to providing a more robust and up-to-date overview of the housing market lies in machine learning and statistical analysis on sets of big data [7]. The primary aim is the improvement of currently popular algorithms for calculating and forecasting price changes, while making such indexes faster to compute and more regularly updated. Such advances could potentially play a key role in identifying price bubbles and preventing future collapses in the housing market [8, 9].

Hedging against market risk has been shown to be potentially beneficial to all stakeholders; however, it relies on up-to-date and reliable price change information, which is generally not publicly available [7, 10]. This restricts the possibility of hedging becoming a mainstream option for homeowners and small businesses.

In this article, we will expand upon previous work by Maguire et al. [5] on a stratified, mix-adjusted median property price model by applying said algorithm to a larger and richer dataset of property listings and exploring the enhancements in smoothness offered by evolving the original algorithm [11]. Such evolutions have been made possible through the introduction of a custom-tailored data structure, the GeoTree, which allows for rapid identification of a group of neighbours of any given property within fixed distance buckets [12]. This removes the time barrier which constrained the original algorithm: its results took so long to compute that the dataset could not be expanded further.

The result of our study is a flexible, highly adaptable house price index model, which can be utilised to extract an accurate house price index from datasets of varying degrees of size and richness. The model offers the ability for a layperson to construct an up-to-date property price index using publicly available data, while allowing for stakeholders with greater data resources, such as mortgage lenders or corporations, to leverage their more descriptive information sources to achieve a higher degree of model precision.

2 An overview of property price index models

In this section we will detail the three main classes of existing property price indexes. These consist of the hedonic regression, repeat-sales and central-price tendency/mix-adjusted median methods.

2.1 Hedonic regression

Hedonic regression is a method which considers all of the characteristics of a house (e.g. bedrooms, bathrooms, land size, location etc.) and calculates how much weight each of these attributes has in relation to the overall price of the house [13]. Mathematically, a semi-log hedonic regression model is typically used for house price index estimation [14]:

\[\ln p_{x} = c + \sum _{i \in I} \left( \beta _{i} \mathrm {D}_{i}^{x} + \epsilon _{i}^{x} \right)\]

where \(p_{x}\) is the price of property x sold in the period of interest. c is a constant. I is the set of property attributes on which the model is being fit. \(\beta _{i}\) is the regression co-efficient associated with attribute i. \(\mathrm {D}_{i}^{x}\) is a dummy variable, indicating the presence of characteristic i in property x, in the case of categorical attributes. For continuous attributes, this will take on the continuous value in question. \(\epsilon _{i}^{x}\) is an error term for attribute i.
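As a minimal sketch of how such a semi-log model might be fit, the following uses ordinary least squares on the logarithm of price. The toy data, the choice of attributes and the function name `fit_semilog_hedonic` are assumptions made purely for illustration. The per-attribute error terms are collapsed into a single residual, as is usual when estimating by least squares.

```python
import numpy as np

# Hypothetical toy data: one row per sold property.
# Columns: bedrooms (continuous), bathrooms (continuous), is_detached (0/1 dummy).
attributes = np.array([
    [2, 1, 0],
    [3, 1, 0],
    [3, 2, 1],
    [4, 2, 1],
    [4, 3, 1],
    [2, 1, 1],
], dtype=float)
prices = np.array([180_000, 220_000, 310_000, 365_000, 410_000, 240_000], dtype=float)


def fit_semilog_hedonic(attributes, prices):
    """Least-squares fit of ln(price) = c + sum_i beta_i * D_i.

    Returns the constant c and the vector of coefficients beta.
    """
    n = attributes.shape[0]
    # The leading column of ones estimates the constant c.
    design = np.column_stack([np.ones(n), attributes])
    coeffs, *_ = np.linalg.lstsq(design, np.log(prices), rcond=None)
    return coeffs[0], coeffs[1:]


c, betas = fit_semilog_hedonic(attributes, prices)
# Fitted prices are recovered by exponentiating the linear predictor.
predicted = np.exp(c + attributes @ betas)
```

Because the dependent variable is the log of price, each coefficient is read as an approximate proportional effect on price rather than an absolute one.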

While hedonic regression has been shown to be the most robust measure in general by Goh et al. [15], outperforming the repeat-sales and mix-adjusted median methods, it requires a vast amount of detailed data and the interpretation of an experienced statistician in order to produce a result [5, 16].

As hedonic regression rests on the assumption that the price of a property can be broken down into its integral attributes, the algorithm in theory should consider every possible characteristic of the house. However, it would be impractical to obtain all of this information. As a result, specifying a complete set of regressors is extremely difficult [17].

The great number of free parameters which require tuning in a hedonic regression model also leads to a high chance of overfitting [5]. This issue may be more pronounced in cases where the training data is sourced from a biased sample which is not representative of the property market as a whole, rather than from a complete set of property sale transactions in a given region.

2.2 Repeat-sales

The repeat-sales method is the most commonly used method of reporting housing sales in the United States and uses repeated sales of the same property over long periods of time to calculate change [18]. An enhanced, weighted version of this algorithm was explored by Case et al. [19]. The advantage of this method comes in the simplicity of constructing and understanding the index; historical sales of the same property are compared with each other and thus the attributes of each house need not be known nor considered. The trade-off for this simplicity comes at the cost of requiring enormous amounts of data stretched across long periods of time [20]. Mathematically, the standard repeat sales model takes the form [14]:

\[\ln \left( \frac{p_n^t}{p_n^s} \right) = \sum _{i \in T} \left( \beta _{i} \mathrm {D}_{i}^{n} + \epsilon _{i}^{n} \right)\]

where \(p_n^t\) is the price of property n when sold in time period t and \(p_n^s\) is its price at a previous sale in time period s. T is the set of all time periods over which the index is measuring. \(\beta _{i}\) is the regression coefficient associated with time period i. \(\mathrm {D}_{i}^{n}\) is a dummy variable taking value 1 where \(i = t\), taking value − 1 where \(i = s\) and taking value 0 otherwise. \(\epsilon _{i}^{n}\) is an error term.
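The dummy-variable construction can be illustrated with a short sketch, assuming each repeat sale is supplied as a tuple of the earlier period, the later period and the two prices. The function name `fit_repeat_sales`, the toy data and the normalisation of the first period's coefficient to zero are illustrative assumptions. The error terms are again treated as a single least-squares residual.

```python
import numpy as np


def fit_repeat_sales(pairs, n_periods):
    """Estimate one coefficient per time period from repeat-sale pairs.

    pairs: list of (s, t, price_s, price_t), with s < t as period indices.
    Period 0 is taken as the base period, so its coefficient is fixed at 0.
    """
    dummies = np.zeros((len(pairs), n_periods))
    log_ratio = np.zeros(len(pairs))
    for row, (s, t, price_s, price_t) in enumerate(pairs):
        dummies[row, t] = 1.0    # +1 in the period of the later sale
        dummies[row, s] = -1.0   # -1 in the period of the earlier sale
        log_ratio[row] = np.log(price_t / price_s)
    # Dropping column 0 imposes the base-period normalisation.
    betas, *_ = np.linalg.lstsq(dummies[:, 1:], log_ratio, rcond=None)
    return np.concatenate([[0.0], betas])


# Hypothetical repeat sales across four periods.
pairs = [
    (0, 1, 100_000, 105_000),
    (0, 2, 200_000, 220_000),
    (1, 2, 150_000, 157_500),
    (2, 3, 300_000, 294_000),
]
betas = fit_repeat_sales(pairs, n_periods=4)
index = 100 * np.exp(betas)  # index level per period, base period = 100
```

Every property that sold only once contributes no row to the regression, which is the source of the data wastage discussed in this section.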

It has also been theorised that the sample of repeat sales is not representative of the housing market as a whole. For example, in a study by Jansen et al. [21], only 7% of detached homes were resold in the study period, while 30% of apartments had multiple sales in the same dataset. It is argued that this phenomenon occurs due to the ‘starter home hypothesis’: houses which are cheaper and in worse condition generally sell more frequently due to young homeowners upgrading [21, 22, 23]. This leads to over-representation of inexpensive and poorer quality property in the repeat-sales method. Cheap houses are also sometimes purchased for renovation or are sold quickly if the homeowner becomes unsatisfied with them, which contributes to this selection bias [21]. Furthermore, newly constructed houses are under-represented in the repeat-sales model as a brand new property cannot be a repeat sale unless it is immediately sold on to a second buyer [22].

As a result of the low number of repeat transactions, an overwhelming amount of data is discarded [24]. This leads to great inefficiency of the index and its use of the data available to it. In the commonly used repeat-sales algorithm by Case et al. [19], almost 96% of the property transactions are disregarded due to incompatibility with the method [17].

2.3 Central price tendency/mix-adjusted median

Central-price tendency models have been explored as an alternative to the more commonly used methods detailed previously. The model relies on the principle that large sets of clustered data tend to exhibit a noise-cancelling effect and result in a stable, smooth output [5]. Furthermore, central price tendency models offer a greater level of simplicity than the highly-theoretical hedonic regression model. When compared to the repeat sales method, central tendency models offer more efficient use of their dataset, both in the sense of quantity and time period spread [5, 25].

According to a study of house price index models by Goh et al. [15], the central-tendency method employed by Prasad et al. [25] significantly outperforms the repeat-sales method despite utilising a much smaller dataset. However, the method is criticised as it does not consider the constituent properties of a house and is thus more prone to inaccurate fluctuations due to a differing mix of sample properties between time periods [15]. For this reason, Goh et al. [15] find that the hedonic regression model still outperforms the mix-adjusted median model used by Prasad et al. [25]. Despite this, the simplicity and high level of data utilisation that the method offers were argued to justify these drawbacks [15, 25].
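The sensitivity of a raw median to the mix of properties sold, and the way stratification dampens it, can be illustrated with a generic sketch. This is not the specific model of Prasad et al. or of Maguire et al. The stratum labels, the fixed weights and the function name `mix_adjusted_median` are all assumptions, and every stratum is assumed to have at least one sale in every period.

```python
from collections import defaultdict
from statistics import median


def mix_adjusted_median(sales, weights):
    """Weighted average of per-stratum medians for each period.

    sales: iterable of (period, stratum, price).
    weights: mapping of stratum -> fixed weight (assumed to sum to 1).
    """
    by_cell = defaultdict(list)
    for period, stratum, price in sales:
        by_cell[(period, stratum)].append(price)
    periods = sorted({period for period, _ in by_cell})
    return {
        period: sum(w * median(by_cell[(period, s)]) for s, w in weights.items())
        for period in periods
    }


# Hypothetical data: prices within each stratum never change, but period 1 is
# dominated by the cheap stratum "A" and period 2 by the expensive stratum "B".
sales = [
    (1, "A", 100), (1, "A", 100), (1, "A", 100), (1, "B", 300),
    (2, "A", 100), (2, "B", 300), (2, "B", 300), (2, "B", 300),
]
weights = {"A": 0.5, "B": 0.5}

raw = {p: median(price for q, _, price in sales if q == p) for p in (1, 2)}
adjusted = mix_adjusted_median(sales, weights)
```

In this toy case the raw median triples between the two periods purely because the mix of sales changed, while the stratified measure is identical in both periods.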

An evolution of the mix-adjusted median algorithm used by Prasad et al. was later shown to outperform the robustness of the hedonic regression model used by the Irish Central Statistics Office [5, 26]. This model is described in detail in Sect. 7.1. The primary drawback of this algorithm was long execution time and high algorithmic complexity due to brute-force geospatial search, limiting the algorithm from being further expanded, both in terms of algorithmic features and the size of the dataset [12].

3 The role of price indexes in the financial sector

Property price index algorithms are of high interest to financial institutions, particularly banks that partake in mortgage lending. We will outline the importance of these models to said institutions, as well as exploring the feasibility of implementing each of the models discussed in Sect. 2.

3.1 Importance of property price models to the financial services sector

While there are a multitude of stakeholders in the property market, perhaps the greatest of these is the financial services sector, due to lending in the form of mortgages. For the majority of people, a house is the most valuable asset they will own in their lifetime. Furthermore, almost one-third of British households are actively paying a mortgage on their house, which collectively forms the greatest source of debt for said group of people [27].

A change in the trend of house prices can have an extraordinary impact on the general strength or weakness of an economy. When property prices are high, homeowners feel secure in increasing both spending and borrowing, which in turn stimulates economic activity and increases bank revenues. However, when house prices are falling, homeowners can reduce their spending as they begin to fear that their debt burden from their mortgage will outsize the value of their property, thus restricting economic activity [27, 28].

Mortgages are a key source of revenue for banks and financial bodies, due to their long repayment length, which results in a considerable amount of interest accrued. However, they also pose a substantial risk for said financial institutions, as they involve the lending of a large principal which is often repaid over decades, during which the financial circumstances and stability of the borrower are not guaranteed to remain constant and indeed, are often influenced by the flux in property prices as an indicator of general economic stability. This makes it difficult to predict the number of borrowers who will struggle to meet their repayments during periods of economic downturn [29].

While an economic recession usually results in massive downward pressure on commercial property prices and the equities market, such a sharp drop tends not to be reflected as drastically in the residential property market. Rather, the number of transactions usually drops, as property owners no longer wish to sell their house for a lower sum of money than they would have received before. It is likely that such a drop in residential property sales volume is reflected in a reduction in new mortgage applications, hence resulting in a loss of revenue and profit for lenders. Furthermore, such an economic event signals reduced financial stability for borrowers and thus default rates on mortgages will rise, causing a greater amount of bad debt on the books [28, 29].

It is logical then that financial bodies are highly interested in tracking the movements in property prices, to inform their lending policies and risk assessment methods. A more bullish property market may lead to banks taking on slightly more risk, with a view that the property will appreciate and so too will the confidence of the borrower. Conversely, a bearish market will likely result in a tightening of the lending criteria, with institutions only taking on highly financially secure borrowers who they judge to be capable of weathering the storm of further depreciation of their newly-purchased property, in a worst-case scenario [30]. They might also be interested in comparing a mortgage application to the average property price for that region, to judge whether the price is excessively expensive when balanced with the financial circumstances of the applicant.

The untimely manner in which government statistical offices tend to release information on market movements, with a lag of 1–2 months being typical, may result in key policy decisions around lending being made later than is ideal. As a result, larger financial institutions are often interested in creating their own custom house price model which delivers up-to-date information, in order to better inform their lending criteria. We will present a suitable, performant model meeting these criteria later in this article.

3.2 Viability of models for application in the banking sector

Where a bank wishes to develop their own property price index model in order to get more up-to-date market information, there are some key considerations when choosing the appropriate methodology to employ. While the repeat sales method might at first seem tempting due to the simplicity of implementation, further thought reveals that this method is unlikely to be suitable. This algorithm relies on comparing multiple sales of the exact same house over long periods of time. If a financial body is using their historical mortgage data to fit the model, it is unlikely that the past sales of any given property were conducted using mortgages taken out at the same bank by different buyers, resulting in a low match rate for what is already a wasteful method in terms of data utilisation. Furthermore, historical data stretching back over decades is generally necessary to generate a reliable result with this method, which will likely be difficult for an institution to both source and convert into a clean, rich digital format [20].

The hedonic regression model may be a viable option, as these institutions will have property characteristic data for the properties on their loan books, which is key to the performance of this algorithm. However, the main drawback of using this method is the complexity of the model. The process of creating a hedonic regression model is very theoretically intense and generally requires the work of a number of statisticians in order to implement and interpret the index on an ongoing, regular basis. Furthermore, due to the human labour associated with maintaining a hedonic regression model, as well as the reliance on rich, detailed and well filtered data, it is difficult to produce the model on a more frequent time schedule than monthly or bi-monthly, particularly when this work must be repeated on a region-by-region basis, where an institution wants more granular measures than a national model.

Overfitting is another possible avenue of concern with regard to hedonic regression indices, as mentioned in Sect. 2.1. As hedonic regression relies on having a complete view of the property market, it may adapt poorly to financial institutions, which likely only have access to a biased sample of property sales: those which used their own lending products as the method of payment. If a particular bank were to target the middle-class working family as its intended customer base, for example, this may lead to a bias in the type of homes which are predominantly included in the model’s data pipeline. The model would then capture only the movements in a subset of the housing market, rather than the trend in the broader market.

Mix-adjusted median based property price index models may therefore prove the most effective option for a financial institution to implement. The main advantages of such an approach lie in the ease of implementation and flexibility to incorporate various data sources of differing densities. Firstly, a mix-adjusted median algorithm can usually be computed in an entirely automated way, without a great amount of tuning or manual processing, reducing the need for multiple statisticians to spend time constantly tweaking the model to produce a monthly release, particularly where results are being produced for a number of different cities or regions. This allows for the model to be recomputed very frequently; as often as daily or two-to-three times per week, if sufficient live incoming data is available for the model.

This model also does not rely on specifying a complete set of price-affecting characteristics and can work with as little as three attributes: the sale date, the address and the price. Due to this, the algorithm can use the entire set of property sale transaction data, which is published publicly in most countries (for example, by the Property Services Regulatory Authority in Ireland, or by HM Land Registry in the United Kingdom), for greater accuracy and avoidance of overfitting. Furthermore, the flexibility of the methodology allows for additional core attributes, such as the number of rooms, to be included for greater accuracy, as we will demonstrate later in this article. This means that the institution can mix their own highly detailed mortgage data together with general, unbiased but sparsely-detailed data for property sales, in order to increase the model’s perspective of the market as a whole. As a result, the mix-adjusted median model is a sensible option for large banking institutions who wish to see very regular updates on the market in order to aid them in deciding on their credit lending policies.

4 GeoTree: a data structure for rapid, approximate nearest neighbour bucket searching

In order to solve the issues surrounding the brute-force geospatial search for nearest neighbours discussed in Sect. 2.3, a fast, custom bucket solution was implemented in order to generate neighbour results for a given property in \(O\left( 1\right)\) time. This means that the execution time does not increase with the number of samples in the structure; it remains constant regardless of the size.

4.1 Naive geospatial search

The distance between two pieces of geospatial data defined using the GPS co-ordinate system is computed using the haversine formula [31]. If we wish to find the closest point in a dataset to any given point in a naive fashion, we must loop over the dataset and compute the haversine distance between each point and the given, fixed point. This is an \(O\left( n\right)\) computation. If the distances are to be stored for later use, this also requires \(O\left( n\right)\) memory consumption. Thus, if the closest point to every point in the dataset must be found, this requires an additional nested loop over the dataset, resulting in \(O\left( n^2\right)\) memory and time complexity overall (assuming the distance matrix is stored). If such a computation is applied to a large dataset, such as the 147,635 property transactions used in the house price index developed by Maguire et al. [5], an \(O\left( n^2\right)\) algorithm can run extremely slowly even on powerful modern machines.
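The naive search can be sketched as follows, assuming points are given as (latitude, longitude) pairs in degrees and taking a mean Earth radius of 6,371 km. The function names and the approximate city co-ordinates are illustrative only. For the 147,635 transactions mentioned above, the all-pairs version would evaluate the haversine formula roughly \(2.2 \times 10^{10}\) times.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres (assumed value)


def haversine(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two GPS co-ordinates (degrees)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def nearest_naive(points, index):
    """O(n) scan: index of and distance to the closest other point."""
    lat, lon = points[index]
    best, best_distance = None, math.inf
    for j, (other_lat, other_lon) in enumerate(points):
        if j == index:
            continue
        d = haversine(lat, lon, other_lat, other_lon)
        if d < best_distance:
            best, best_distance = j, d
    return best, best_distance


def all_nearest_naive(points):
    """O(n^2) overall: one full scan per point in the dataset."""
    return [nearest_naive(points, i) for i in range(len(points))]


# Approximate co-ordinates, for illustration only.
points = [
    (53.3498, -6.2603),  # Dublin
    (51.8985, -8.4756),  # Cork
    (53.2707, -9.0568),  # Galway
    (53.2944, -6.1339),  # Dun Laoghaire
]
neighbours = all_nearest_naive(points)
```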

As GPS co-ordinates are multi-dimensional objects, it is difficult to prune and cut data from the search space without performing the haversine computation. Due to this, a different approach to geospatial search will prove necessary to investigate.

4.2 GeoHash

A geohash is a string encoding for GPS co-ordinates, allowing co-ordinate pairs to be represented by a single string of characters. The publicly-released encoding method was invented by Niemeyer in 2008 [32]. The algorithm works by assigning a geohash string to a square area on the earth, usually referred to as a bucket. Every GPS co-ordinate which falls inside that bucket will be assigned that geohash. The number of characters in a geohash is user-specified and determines the size of the bucket. The more characters in the geohash, the smaller the bucket becomes, and the greater precision the geohash can resolve to. While geohashes thus do not represent points on the globe, as there is no limit to the number of characters in a geohash, they can represent an arbitrarily small square on the globe and thus can be reduced to an exact point for practical purposes. Figure 1 demonstrates parts of the geohash grid on a section of map.
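For illustration, the standard public geohash encoding can be written in a few lines: the longitude and latitude ranges are bisected alternately, starting with longitude, and each group of five resulting bits is mapped to one character of a 32-character alphabet. This is a sketch of the general technique, not the pygeohash implementation; the function name `geohash_encode` is an assumption.

```python
BASE32 = "0123456789bcdefghjkmnpqrstuvwxyz"  # geohash alphabet (no a, i, l, o)


def geohash_encode(lat, lon, precision=8):
    """Encode a latitude/longitude pair (degrees) as a geohash string."""
    lat_low, lat_high = -90.0, 90.0
    lon_low, lon_high = -180.0, 180.0
    chars = []
    bit_count, value = 0, 0
    use_lon = True  # bits alternate between longitude and latitude
    while len(chars) < precision:
        if use_lon:
            mid = (lon_low + lon_high) / 2
            if lon >= mid:
                value = (value << 1) | 1
                lon_low = mid
            else:
                value <<= 1
                lon_high = mid
        else:
            mid = (lat_low + lat_high) / 2
            if lat >= mid:
                value = (value << 1) | 1
                lat_low = mid
            else:
                value <<= 1
                lat_high = mid
        use_lon = not use_lon
        bit_count += 1
        if bit_count == 5:  # five bits select one base-32 character
            chars.append(BASE32[value])
            bit_count, value = 0, 0
    return "".join(chars)


coarse = geohash_encode(57.64911, 10.40744, precision=4)
fine = geohash_encode(57.64911, 10.40744, precision=6)
```

Requesting more characters only appends to the string, so a shorter geohash of a point is always a prefix of a longer one; this is the property on which the bucket hierarchy relies.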

Geohashes are constructed in such a way that their string similarity signifies something about their proximity on the globe. Take the longest common prefix of two geohashes (the longest run of identical characters starting at the first character of each geohash) and call this string x. Then x itself is a geohash (i.e. a bucket) with a certain area. The longer the length of x, the smaller the area of this bucket. Thus x gives an upper bound on the distance between the points. We will refer to this substring as the smallest common bucket (SCB) of a pair of geohashes. We define the length of the SCB as the length of the substring defining it. This definition can additionally be generalised to a set of geohashes of any size. Furthermore, we define the SCB of a single geohash g to be the set of all geohashes in the dataset which have g as a prefix. We can immediately assert an upper bound of 123,264 m for the distance between the geohashes in Fig. 2, as per the table of upper bounds in the pygeohash package, which was used in the implementation of this project [33].
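Computing the SCB of a set of geohashes amounts to finding their longest common prefix, which can be sketched as below. The function name `smallest_common_bucket` and the sample strings are hypothetical and chosen only for demonstration.

```python
def smallest_common_bucket(geohashes):
    """Longest common prefix of a collection of geohash strings."""
    hashes = list(geohashes)
    if not hashes:
        return ""
    prefix = hashes[0]
    for g in hashes[1:]:
        i = 0
        # Advance while both strings agree, starting from the first character.
        while i < min(len(prefix), len(g)) and prefix[i] == g[i]:
            i += 1
        prefix = prefix[:i]
    return prefix


close = smallest_common_bucket(["bh9f98", "bh9f9d"])
far = smallest_common_bucket(["bh9f98", "bd7j98"])
group = smallest_common_bucket(["bh9f98", "bh9f9d", "bh9aaj"])
```

A longer result corresponds to a smaller shared bucket and hence a tighter upper bound on the distance between the points.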

4.3 Efficiency improvement attempts

Geohashing algorithms have, over time, improved in efficiency and have been put to use in a wide variety of applications and research contexts [34, 35]. As stated by Roussopoulos et al. [36], the efficient execution of nearest neighbour computations requires the use of niche spatial data structures which are constructed with the proximity of the data points being a key consideration.

The method proposed by Roussopoulos et al. [36] makes use of R-trees, a data structure similar in nature to the GeoTree structure which we will introduce in this article [37]. They propose an efficient algorithm for the precise NN computation of a spatial point, and extend this to identify the exact k-nearest neighbours using a subtree traversal algorithm which demonstrates improved efficiency over the naive search algorithm. Arya et al. [38] further this research by introducing an approximate k-NN algorithm with time complexity of \(O\left( kd\log n\right)\) for any given value of k.

A comparison of some data structures for spatial searching and indexing was carried out by Kothuri et al. [39], with a specific focus on comparison between the aforementioned R-trees and Quadtrees, including application to large real-world GIS datasets. The results indicate that the Quadtree is superior to the R-tree in terms of build time due to expensive R-tree clustering. As a trade-off, the R-tree has faster query time. Both of these trees are designed to query for a very precise, user-defined area of geospatial data. As a result they are still relatively slow when making a very large number of queries to the tree.

Beygelzimer et al. [40] introduce another new data structure, the cover tree. Here, each level of the tree acts as a “cover” for the level directly beneath it, which allows the nearest neighbour search to be completed in time logarithmic in n.

Research has also been carried out in reducing the searching overhead when the exact k-NN results are not required, and only a spatial region around each of the nearest neighbours is desired. It is often the case that ranged neighbour queries are performed as traditional k-NN queries repeated multiple times, which results in a large execution time overhead [41]. This is an inefficient method, as the lack of precision required in a ranged query can be exploited in order to optimise the search process and increase performance and efficiency, a key feature of the GeoTree.

Muja et al. provide a detailed overview of more recently proposed data structures such as partitioning trees, hashing based NN structures and graph based NN structures designed to enable efficient k-NN search algorithms [42]. The suffix-tree, a data structure which is designed to rapidly identify substrings in a string, has also had many incarnations and variations in the literature [43]. The GeoTree follows a somewhat similar conceptual idea and applies it to geohashes, allowing very rapid identification of groups of geohashes with shared prefixes.

The common theme within this existing body of work is the sentiment that methods of speeding up k-NN search, particularly upon data of a geospatial nature, require specialised data structures designed specifically for the purpose of proximity searching [36].

4.4 GeoTree

The goal of our data structure is to allow efficient approximate ranged proximity search over a set of geohashes. For example, given a database of house data, we wish to retrieve a collection of houses in a small radius around each house without having to iterate over the entire database. In more general terms, we wish to pool all other strings in a dataset which have a maximal length SCB with respect to any given string.

4.4.1 High-level description

A GeoTree is a general tree (a tree which has an arbitrary number of children at each node) with an immutable fixed height h set by the user upon creation. Each level of the tree represents a character in the geohash, with the exception of level zero—the root node. For example, at level one, the tree contains a node for every character that occurs among the first characters of each geohash in the database. For each node in the first level, that node will contain children corresponding to each possible character present in the second position of every geohash string in the dataset sharing the same first character as represented by the parent node. The same principle applies from level three to level h of the GeoTree, using the third to hth characters of the geohash respectively.

At any node, we refer to the path to that node in the tree as the substring of that node, and represent it by the string where the ith character corresponds to the letter associated with the node in the path at depth i.

The general structure of a GeoTree is demonstrated in Fig. 3. As can be seen, the first level of the tree has a node for each possible letter in the alphabet. Only characters which are actually present in the first letters of the geohashes in our dataset will receive nodes in the constructed tree; however, we include all characters in this diagram for clarity. In the second level, the a node also has a child for each possible letter. This same principle applies to the other nodes in the tree. Formally, at the ith level, each node has a child for each of the characters present among the \((i+1)\)th position of the geohash strings which are in the SCB of the current substring of that node. A worked example of a constructed GeoTree follows in Fig. 4.

Consider the following set of geohashes which has been created for the purpose of demonstration: \(\{bh9f98, bh9f98, bd7j98, ac7j98, bh9aaj, bh9f9d, ac7j98, bd7jya, bh9aaj, ac7aaj\}\). The GeoTree generated by the insertion of the geohashes above with a fixed height of six would appear as seen in Fig. 4.

4.4.2 GeoTree data nodes

The data attributes associated with a particular geohash are added as a child of the leaf node of the substring corresponding to that geohash in the tree, as shown in Fig. 5. In the case where one geohash is associated with multiple data entries, each data entry will have its own node as a child of the geohash substring, as demonstrated in the diagram.

It is now possible to collect all data entries in the SCB of a particular geohash substring without iterating over the entire dataset. Given a particular geohash in the tree, we can move any number of levels up the tree from that geohash’s leaf nodes and explore all nearby data entries by traversing the subtree given by taking that node as the root. Thus, to compute the set of geohashes with an SCB of length m or greater with respect to the particular geohash in question, we need only explore the subtree at level m along the path corresponding to that particular geohash. Despite this improvement, we wish to remove the process of traversing the subtree altogether.
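The structure described so far, before any caching is introduced, might be sketched as follows. The class and method names (`Node`, `GeoTree`, `insert`, `collect`) and the placeholder data entries are assumptions for illustration and are not taken from the original implementation. The demonstration geohashes are those listed in Sect. 4.4.1.

```python
class Node:
    def __init__(self):
        self.children = {}  # character -> child Node
        self.data = []      # data entries; populated only at depth == height


class GeoTree:
    def __init__(self, height):
        self.height = height  # fixed upon creation
        self.root = Node()

    def insert(self, geohash, entry):
        """Walk (or create) the path for the geohash and attach the entry."""
        if len(geohash) < self.height:
            raise ValueError("geohash is shorter than the tree height")
        node = self.root
        for ch in geohash[:self.height]:
            node = node.children.setdefault(ch, Node())
        node.data.append(entry)

    def collect(self, prefix):
        """Traverse the subtree below `prefix` and gather every data entry."""
        node = self.root
        for ch in prefix:
            node = node.children.get(ch)
            if node is None:
                return []
        found, stack = [], [node]
        while stack:
            current = stack.pop()
            found.extend(current.data)
            stack.extend(current.children.values())
        return found


demo = ["bh9f98", "bh9f98", "bd7j98", "ac7j98", "bh9aaj",
        "bh9f9d", "ac7j98", "bd7jya", "bh9aaj", "ac7aaj"]
tree = GeoTree(height=6)
for i, g in enumerate(demo):
    tree.insert(g, f"entry-{i}")  # placeholder data entries

nearby = tree.collect("bh9")  # all entries whose geohash starts with "bh9"
```

The cost of `collect` grows with the size of the subtree being explored, which is the overhead that the caching step is intended to remove.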

4.4.3 Subtree data caching

In order to eliminate traversal of the subtree we must cache all data entries in the subtree at each level. To cache the subtree traversal, each non-leaf node receives an additional child node which we will refer to as the list (ls) node. The list node holds references to every data entry that has a leaf node within the same subtree as the list node itself. As a result, the list node offers an instant enumeration of every leaf node within the subtree structure in which it sits, removing the need to traverse the subtree and collect the data at the leaf nodes. The structure of the tree with list nodes added is demonstrated in Fig. 6 (some nodes and list nodes are omitted for the sake of brevity and clarity).

4.4.4 Retrieval of the subtree data

Given any geohash, we can query the tree for a set of nearby neighbouring geohashes by traversing down the GeoTree along some substring of that geohash. A longer length substring will correspond to a smaller radius in which neighbours will be returned. When the desired level is reached, the cached list node at that level can be queried for instant retrieval of the set of approximate k-NN of the geohash in question.

As a result of this structure’s design, the GeoTree does not produce a distance measure for the items in the GeoTree. Rather, it clusters groups of nearby data points. While this does not allow for fine tuning of the search radius, it allows a set of data points which are geospatially close to the specified geohash to be retrieved in constant time, which is a worthwhile trade-off for our specific purposes, as it drastically decreases the computation time of the index.
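A cached variant can be sketched in the same way. Here the list node is modelled as an attribute `ls` on each node rather than as a separate child node, which is a simplification of the structure described above. The names `CachedNode`, `CachedGeoTree` and `neighbours` are hypothetical.

```python
class CachedNode:
    def __init__(self):
        self.children = {}  # character -> child CachedNode
        self.ls = []        # cached references to every entry in this subtree


class CachedGeoTree:
    def __init__(self, height):
        self.height = height
        self.root = CachedNode()

    def insert(self, geohash, entry):
        """Append the entry to the cache of every node along its path."""
        if len(geohash) < self.height:
            raise ValueError("geohash is shorter than the tree height")
        node = self.root
        node.ls.append(entry)
        for ch in geohash[:self.height]:
            node = node.children.setdefault(ch, CachedNode())
            node.ls.append(entry)

    def neighbours(self, geohash, level):
        """Entries sharing the first `level` characters with `geohash`.

        The walk takes at most `height` steps and returns the cached list
        itself (no copy), so the cost does not depend on the dataset size.
        """
        node = self.root
        for ch in geohash[:level]:
            node = node.children.get(ch)
            if node is None:
                return []
        return node.ls


demo = ["bh9f98", "bh9f98", "bd7j98", "ac7j98", "bh9aaj",
        "bh9f9d", "ac7j98", "bd7jya", "bh9aaj", "ac7aaj"]
cached = CachedGeoTree(height=6)
for i, g in enumerate(demo):
    cached.insert(g, f"entry-{i}")  # placeholder data entries

tight = cached.neighbours("bh9f98", level=5)  # small bucket
wide = cached.neighbours("bh9f98", level=3)   # larger bucket
```

The trade-off is memory: each entry is referenced once per level of the tree, so storage grows with the height as well as with the number of entries.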

4.5 Geohash+

Extended geohashes, which we will refer to as geohash+, are geohashes which have been modified to encode additional information regarding the property at that location. Additional parameters are encoded by adding a character in front of the geohash. The value of the character at that position corresponds to the value of the parameter which that character represents. Figure 7 demonstrates the structure of a geohash+ with two additional parameters, \(p_1\) and \(p_2\).

Any number of parameters can be prepended to the geohash. In the context of properties, this includes the number of bedrooms, the number of bathrooms, an indicator of the type of property (detached house, semi-detached house, apartment etc.), a parameter representing floor size ranges and any other attribute desired for comparison.
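As a rough illustration of the encoding, the sketch below prepends one character per parameter to a geohash. The function name `to_geohash_plus`, the use of the geohash base-32 alphabet for parameter values, and the ordering of the parameters are all assumptions made for this example; the article does not prescribe a particular character mapping.

```python
BASE32 = "0123456789bcdefghjkmnpqrstuvwxyz"  # standard geohash alphabet


def to_geohash_plus(geohash: str, *params: int) -> str:
    """Prepend one character per parameter value to a geohash.

    Each value must fit in a single character, i.e. lie in
    range(len(BASE32)); this mapping is an assumption of the sketch.
    """
    prefix = ""
    for value in params:
        if not 0 <= value < len(BASE32):
            raise ValueError(f"parameter value {value} does not fit in one character")
        prefix += BASE32[value]
    return prefix + geohash


# Hypothetical property with 3 bedrooms (p_1) and 2 bathrooms (p_2).
example = to_geohash_plus("gc7x3r", 3, 2)
```

Continuous attributes such as floor size would first need to be bucketed into ranges so that each range maps to a single character.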

4.6 GeoTree Performance with geohash+

Due to the design of the GeoTree data structure, a geohash+ will be inserted into the tree in exactly the same manner as a regular geohash [12]. If the original GeoTree had a height of h for a dataset with h-length geohashes, then the GeoTree accepting that geohash extended to a geohash+ with p additional parameters prepended should have a height of \(h+p\). However, both of these are fixed, constant, user-specified parameters which are independent of the number of data points, and hence do not affect the constant-time performance of the GeoTree.

The major benefit of this design is that the ranged proximity search will interpret the additional parameters as regular geohash characters, both when constructing the common buckets upon insertion and when finding the SCB in any search, without introducing additional performance or complexity drawbacks. This means that if we wish to match properties for price comparison in our index models not only geospatially but also by bedroom count, for example, the GeoTree with geohash+ will naturally take care of this added requirement without increasing the time complexity of the computation.

5 Case study: myhome property listing data

MyHome [44] are a major player in property sale listings in Ireland. With data on property asking prices being collected since 2011, MyHome have a rich database of detailed data regarding houses which have been listed for sale. MyHome have provided access to their dataset for the purposes of this research.

5.1 Dataset overview

The data provided by MyHome includes verified GPS co-ordinates, the number of bedrooms, the type of dwelling and further information for most of its listings. It is important to note, however, that this dataset consists of asking prices, rather than the sale prices featured in the less detailed Irish Property Price Register Data (used in the original algorithm) [5].

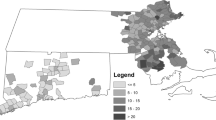

The dataset consists of a total of 718,351 property listing records over the period February 2011 to March 2019 (inclusive). This results in a mean of 7330 listings per month (with a standard deviation of 1689). However, this raw data requires some filtering for errors and outliers.

5.2 Data filtration

As with the majority of human-collected data, some pruning must be done to the MyHome dataset in order to remove outliers and erroneous data. Firstly, not all listings in the dataset include verified GPS co-ordinates or data on the number of bedrooms. These records, which account for 16.5% of the dataset, are discarded for the purpose of the enhanced version of the algorithm. Furthermore, any property listed with more than six bedrooms is not considered. Such listings amount to just 1% of the entire dataset and are not representative of a standard house on the market.

Any data entries which do not include an asking price cannot be used for house price index calculation and must be excluded. Such records amount to 3.6% of the dataset. Additionally, records with an asking price of less than €10,000 or more than €1,000,000 are also excluded. These generally consist of data entry errors (e.g. the wrong number of zeroes in a user-entered asking price), abandoned or dilapidated properties in the case of listings below the lower bound, and mansions or commercial property in the case of listings exceeding the upper bound. Properties excluded on the basis of price amount to only 2% of the dataset and thus are not representative of the market overall.

In summation, 77% of the dataset survives the pruning process. This leaves us with 5646 filtered mean listings per month.
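The exclusion rules above can be expressed as a single predicate. The following is a minimal sketch only: the `Listing` record and its field names are hypothetical and do not reflect the actual schema of the MyHome dataset, while the thresholds are those stated in this section.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Listing:
    """Hypothetical listing record; field names are assumed for illustration."""
    lat: Optional[float]
    lon: Optional[float]
    bedrooms: Optional[int]
    price: Optional[float]


MAX_BEDROOMS = 6
MIN_PRICE = 10_000      # euro
MAX_PRICE = 1_000_000   # euro


def keep_listing(listing: Listing) -> bool:
    """Return True if the listing survives every exclusion rule."""
    if listing.lat is None or listing.lon is None:
        return False  # no verified GPS co-ordinates
    if listing.bedrooms is None or listing.bedrooms > MAX_BEDROOMS:
        return False  # bedroom count missing or greater than six
    if listing.price is None:
        return False  # no asking price
    return MIN_PRICE <= listing.price <= MAX_PRICE


# Made-up records, one per rule.
sample: List[Listing] = [
    Listing(53.35, -6.26, 3, 350_000),    # kept
    Listing(None, None, 3, 350_000),      # dropped: no co-ordinates
    Listing(53.35, -6.26, 8, 900_000),    # dropped: more than six bedrooms
    Listing(53.35, -6.26, 2, None),       # dropped: no asking price
    Listing(53.35, -6.26, 2, 2_500_000),  # dropped: above upper price bound
]
filtered = [listing for listing in sample if keep_listing(listing)]
```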

5.3 Comparison with PPR dataset

The mean number of filtered monthly listings available in our dataset represents a 157% increase on the 2200 mean monthly records used in the original algorithm’s index computation [5]. Furthermore, the dataset in question is significantly more precise and accurate than the PPR dataset, owing to the ability to more effectively prune the dataset. The PPR dataset consists of address data entered by hand from written documents and does not use the Irish postcode system, meaning that addresses are often vague or ambiguous. This results in some erroneous data being factored into the model computation as there is no effective way to prune this data [5]. The MyHome dataset has been filtered to include verified addresses only, as described previously.
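As a quick check of the quoted figures, using only the monthly means stated in this article (5646 filtered MyHome listings, 7330 unfiltered MyHome listings and 2200 PPR records), the relative increase over the PPR dataset is

\[\frac{5646 - 2200}{2200} \approx 1.57,\]

that is, an increase of roughly 157%. The retained share of the MyHome data is \(5646 / 7330 \approx 0.77\), which agrees with the 77% survival rate reported in Sect. 5.2.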

The PPR dataset has no information on the number of bedrooms or any key characteristics of the property. This can result in dilapidated properties, apartment blocks, inherited properties (which have an inaccurate sale value which is used for taxation purposes) and mansions mistakenly being counted as houses [5]. Our dataset consists of only single properties and the filtration process described previously greatly reduces the number of such unrepresentative samples making their way into the index calculation.

The “sparse and frugal” PPR dataset was capable of outperforming the CSO’s hedonic regression model with a mix-adjusted median model [5]. With the larger, richer and better-pruned MyHome dataset, further algorithmic enhancements to this model are possible.

6 Performance measures

Property prices are generally assumed to change in a smooth, calm manner over time [45, 46]. According to Maguire et al. [5], the smoothest index is, in practice, the most robust index. As a result, smoothness is considered to be one of the strongest indicators of reliability for an index. However, the ‘smoothness’ of a time series is neither well defined nor immediately intuitive to measure mathematically.

The standard deviation of the time series will offer some insight into the spread of the index around the mean index value. A high standard deviation indicates that the index changes tend to be large in magnitude. While this is useful in investigating the “calmness” of the index (how dramatic its changes tend to be), it is not a reliable smoothness measure, as it is possible to have a very smooth graph with sizeable changes.

The standard deviation of the differences is a much more reliable measure of smoothness. A high standard deviation of the differences indicates that there is a high degree of variance among the differences, i.e. the change from point to point is unpredictable and somewhat wild. A low value for this metric would indicate that the changes in the graph behave in a calmer manner.
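The distinction between the two measures can be seen in a small numerical sketch. The helper names below are hypothetical, the series are invented for illustration, and the use of the population standard deviation (`statistics.pstdev`) is an assumption, as the article does not state which estimator is used.

```python
from statistics import pstdev
from typing import List, Sequence


def differences(series: Sequence[float]) -> List[float]:
    """First differences d_i = x_{i+1} - x_i of a time series."""
    return [b - a for a, b in zip(series, series[1:])]


def std_of_differences(series: Sequence[float]) -> float:
    """Standard deviation of the first differences (population form assumed)."""
    return pstdev(differences(series))


steady = [100, 110, 120, 130]  # large but perfectly regular changes
jagged = [100, 106, 100, 106]  # smaller changes that keep reversing

level_spread = (pstdev(steady), pstdev(jagged))
diff_spread = (std_of_differences(steady), std_of_differences(jagged))
```

Here the steady series has the larger standard deviation of levels but a standard deviation of differences of zero, while the jagged series shows the opposite pattern. This is why the latter metric is preferred as a smoothness measure.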

Finally, we present a metric which we have defined, the mean spike magnitude \(\mu _{\varDelta {X}}\) (MSM) of a time series X. This is intended to measure the mean value of the contrast between changes each time the trend direction of the graph flips. In other words, it is designed to measure the average size of the ‘spikes’ in the graph.

Given \(D_X = \{d_1, \ldots , d_n\}\) is the set of differences in the time series X, we say that the pair \((d_i, d_{i+1})\) is a spike if \(d_i\) and \(d_{i+1}\) have different signs. Then \(S_i = |d_{i+1} - d_i|\) is the spike magnitude of the spike \((d_i, d_{i+1})\).

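The mean spike magnitude of X is defined as:

\[\mu _{\varDelta {X}} = \frac{1}{|S_X|} \sum _{S_i \in S_X} S_i\]

where:

\[S_X = \{\, |d_{i+1} - d_i| \;:\; \mathrm {sgn}(d_i) \ne \mathrm {sgn}(d_{i+1}) \,\}\]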
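A minimal sketch of the MSM computation follows from the spike definition given above. The function name is hypothetical, and two conventions are assumed because the text does not specify them: a zero difference is not treated as a sign change, and a series with no spikes is given an MSM of zero.

```python
from typing import Sequence


def mean_spike_magnitude(series: Sequence[float]) -> float:
    """Mean of |d_{i+1} - d_i| over consecutive differences of opposite sign."""
    d = [b - a for a, b in zip(series, series[1:])]
    spikes = [
        abs(d_next - d_cur)
        for d_cur, d_next in zip(d, d[1:])
        if d_cur * d_next < 0  # strictly opposite signs (assumed convention)
    ]
    if not spikes:
        return 0.0  # assumed value when the trend never flips
    return sum(spikes) / len(spikes)


# Invented series: differences are [4, -3, 2, 3], giving spikes of 7 and 5.
msm = mean_spike_magnitude([100, 104, 101, 103, 106])
```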

7 Algorithmic evolution

7.1 Original price index algorithm

The central price tendency algorithm introduced by Maguire et al. [5] was designed around a key limitation: extremely frugal data. The only data available for each property was location, sale date and sale price. The core concept of the algorithm relies on using geographical proximity in order to match similar properties historically for the purpose of comparing sale prices. While this method is likely to match certain properties inaccurately, the key concept of central price tendency is that these mismatches should average out over large datasets and cancel noise.

The first major component of the algorithm is the voting stage. The aim of this is to remove properties from the dataset which are geographically isolated. The index relies on matching historical property sales which are close in location to a property in question. As a result, isolated properties will perform poorly as it will not be possible to make sufficiently near property matches for them.

In order to filter out such properties, each property in the dataset gives one vote to its closest neighbour, or to each of a fixed number of nearest neighbours. Once all of these votes have been cast, the total number of votes per property is counted and the segment of properties with the fewest votes is removed. In the implementation of the algorithm used by Maguire et al. [5], this amounted to ten percent of the dataset.
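The voting stage can be sketched as follows. This is an illustrative brute-force version rather than the implementation of Maguire et al. [5]: the function name and parameters are hypothetical, planar Euclidean distance is assumed in place of a geodesic distance, and ties in the vote count are broken by input order.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def voting_filter(
    points: Sequence[Point], k: int = 1, drop_fraction: float = 0.1
) -> List[Point]:
    """Drop the least-voted fraction of points after a k-nearest-neighbour vote."""
    votes = [0] * len(points)
    for i, p in enumerate(points):
        others = [j for j in range(len(points)) if j != i]
        others.sort(key=lambda j: math.dist(p, points[j]))
        for j in others[:k]:
            votes[j] += 1  # point i votes for each of its k nearest neighbours
    n_drop = int(len(points) * drop_fraction)
    ranked = sorted(range(len(points)), key=lambda i: votes[i])
    dropped = set(ranked[:n_drop])
    return [p for i, p in enumerate(points) if i not in dropped]


# Nine evenly spaced points and one isolated point (invented data).
cluster = [(float(x), 0.0) for x in range(9)]
isolated = (100.0, 0.0)
kept = voting_filter(cluster + [isolated], k=2, drop_fraction=0.1)
```

In this example the isolated point receives no votes, since it is never among the two nearest neighbours of any other point, and so it is the one record removed.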

Once the voting stage of the algorithm is complete, the next major component is the stratification stage. This is the core of the algorithm and involves stratifying average property changes on a month by month comparative basis which then serve as multiple points of reference when computing the overall monthly change. The following is a detailed explanation of the original algorithm’s implementation.

First, take a particular month in the dataset which will serve as the stratification base, \(m_b\). Then we iterate through each house sale record in \(m_b\), represented by \(h_{m_b}\). We must now find the nearest neighbour of \(h_{m_b}\) in each preceding month in the dataset, through a proximity search. For each month \(m_x\) prior to \(m_b\), refer to the nearest neighbour in \(m_x\) to the \(h_{m_b}\) in question as \(h_{m_x}\). Now we are able to compute the change between the sale price of \(h_{m_b}\) and its nearest sold neighbour in each of the months \(\{m_1, \ldots , m_n\}\) as a ratio of \(h_{m_b}\) to \(h_{m_x}\) for \(x \in \{1, \ldots , n\}\). Once this is done for every property in \(m_b\), we will have a catalogue of sale price ratios for every month prior to \(m_b\) and thus we can look at the median price difference between \(m_b\) and each historic month.
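Single-base stratification, as described above, might be sketched like this. The function name, the `(x, y, price)` record layout and the integer month keys are assumptions for illustration, and the nearest neighbour is found by brute force with planar Euclidean distance rather than by the proximity search used in the actual algorithm.

```python
import math
from statistics import median
from typing import Dict, List, Tuple

Sale = Tuple[float, float, float]  # (x, y, price); layout assumed for the sketch


def ratios_for_base(
    sales_by_month: Dict[int, List[Sale]], base: int
) -> Dict[int, float]:
    """Median price ratio between the base month and each earlier month."""
    medians: Dict[int, float] = {}
    for month, earlier_sales in sales_by_month.items():
        if month >= base or not earlier_sales:
            continue  # only months preceding the base are compared
        ratios = []
        for x, y, price in sales_by_month[base]:
            nearest = min(
                earlier_sales,
                key=lambda s: math.dist((x, y), (s[0], s[1])),
            )
            ratios.append(price / nearest[2])
        if ratios:
            medians[month] = median(ratios)
    return medians


# Invented data: two locations whose prices rise by 10% each month.
sales = {
    1: [(0.0, 0.0, 100.0), (10.0, 10.0, 200.0)],
    2: [(0.0, 1.0, 110.0), (10.0, 11.0, 220.0)],
    3: [(0.0, 0.5, 121.0), (10.0, 10.5, 242.0)],
}
base_three = ratios_for_base(sales, base=3)
```

Repeating this call with every month as the base yields the full set of reference ratios used in the multi-base stage.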

However, this is only stratification with one base, referred to as stage three in the original article [5]. We then expand the algorithm by using every month in the dataset as a stratification base. The result of this is that every month in the dataset now has price reference points to every month which preceded it and we can now use these reference points as a way to compare month to month.

Assume that \(m_x\) and \(m_{x+1}\) are consecutive months in the dataset and thus we have two sets of median ratios \(\{r_x(m_1), \dots , r_x(m_{x-1})\}\) and \(\{r_{x+1}(m_1), \dots , r_{x+1}(m_{x})\}\), where \(r_{a}(m_y)\) represents the median property sale ratio between months \(m_a\) and \(m_y\) and \(m_a\) is the chosen stratification base. In order to compute the property price index change from \(m_x\) to \(m_{x+1}\), we look at the difference between \(r_x(m_i)\) and \(r_{x+1}(m_i)\) for each \(i \in \{1, \dots , x-1\}\) and take the mean of those differences. As such, we are not directly comparing the two months. Rather, we are contrasting the relationship of both months in question to each historical month and taking an average of those comparisons.
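The month-to-month comparison can be sketched as a mean of differences over the historical months that both bases share. The function name and the dictionary representation are hypothetical, and the sign convention (later base minus earlier base) is an assumption, since the text only refers to "the difference" between the two ratios.

```python
from statistics import mean
from typing import Dict


def index_change(r_x: Dict[int, float], r_next: Dict[int, float]) -> float:
    """Mean of r_{x+1}(m_i) - r_x(m_i) over the shared historical months m_i."""
    shared = sorted(set(r_x) & set(r_next))
    if not shared:
        raise ValueError("no common historical months to compare")
    return mean(r_next[i] - r_x[i] for i in shared)


# Invented median ratios for bases m_3 and m_4 against earlier months.
r_3 = {1: 1.10, 2: 1.05}
r_4 = {1: 1.14, 2: 1.09, 3: 1.03}
change = index_change(r_3, r_4)  # month 3 is ignored: r_3 has no ratio for it
```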

This results in a central price tendency based property index that outperformed the national Irish hedonic regression based index while using a far more frugal set of data to do so.

7.2 Enhanced price index

In order to enhance our price index model, we prepend a parameter to the geohash of each property representing the number of bedrooms present within that property. As a result, when the GeoTree is performing the SCB computation, it will now only match properties which are both nearby and share the same number of bedrooms as the property in question. This allows the index model to compare the price ratio of properties which are more similar in nature during the stratification stage and thus should result in a smoother, more accurate measure of the change in property prices over time [11].

As described previously, the GeoTree sees the additional parameter no differently to any other character in the geohash and due to its placement at the start of the geohash, the search space will be instantly narrowed to properties with matching number of bedrooms, x, by taking the x branch in the tree at the first step of traversal.
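The narrowing effect of the leading bedroom character can be seen by comparing shared prefixes directly. This is a toy illustration with a hypothetical helper and made-up geohash+ strings, and it assumes a single-character bedroom count at the first position.

```python
import os


def shared_prefix_length(a: str, b: str) -> int:
    """Number of leading characters two geohash+ strings have in common."""
    return len(os.path.commonprefix([a, b]))


# Same area, same bedroom count: a deep shared prefix, so a common bucket.
same_beds = shared_prefix_length("3gc7x3r", "3gc7x9q")

# Same location but a different bedroom count: the strings diverge at once.
diff_beds = shared_prefix_length("3gc7x3r", "4gc7x3r")
```

Two properties at the identical location but with different bedroom counts share no prefix at all, so they can never fall into the same bucket below the root.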

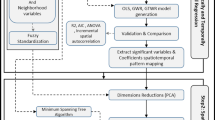

8 Results

Firstly, we will examine the performance improvement offered by the introduction of the GeoTree data structure, in addition to demonstrating the scalability of said data structure. Following this, we run the property price index algorithm on the MyHome data without factoring any additional parameters as a control step. Finally, we create a GeoTree with geohash+ entries consisting of the number of bedrooms in the house prepended to the geohash for the property, showing a comparison of each index time series.

8.1 GeoTree performance

Table 1 compares the performance of the original property price index algorithm with and without use of the GeoTree (on a database of 279,474 property sale records), including both single threaded execution time and multi-threaded execution time (running eight threads across eight CPU cores) on our test machine. The results using the GeoTree are marked with a + symbol.

Figure 8 demonstrates the high level of similarity between the original PPR index algorithm and the PPR index with GeoTree. The slight difference is due to the algorithmic change of considering a basket of neighbours for each property, rather than a single neighbour per property as in the original algorithm, which could be argued to be a positive algorithmic change, as a larger sample of properties is considered. Regardless, the difference between each time series is minimal and both are extremely highly correlated with one another (\(p=0.999\)).

8.2 GeoTree scalability testing

In order to test the scalability of the GeoTree, we obtained a dataset comprising 2,857,669 property sale records for California, and evaluated both the build and query time of the data structure. Table 2 shows mean build time and mean query time on both 10% (\(\sim\) 285,000 records) and 100% (\(\sim\)2.85 million records) of the dataset. In this context, query time refers to the total time to perform 100 sequential queries, as a single query was too fast to accurately measure.

The results demonstrate that the height of the tree has a modest effect on the build time, while dataset size has a linear effect on build time, thus supporting the claimed \(O\left( n\right)\) build time with \(O\left( 1\right)\) insertion. Furthermore, query time is shown to remain constant regardless of both tree height and dataset size, with negligible differences in all instances (see the second note in the Notes section).

8.3 Improved index model performance

Table 3 shows the performance metrics previously described applied to the algorithms discussed in this paper: Original PPR, PPR with GeoTree, MyHome without bedroom factoring and MyHome with bedroom factoring. While both the standard deviation of the differences and the MSM show that some smoothness is sacrificed by the GeoTree implementation of the PPR algorithm, the index running on MyHome’s data without bedroom factoring approximately matches the smoothness of the original algorithm. Furthermore, when bedroom factoring is introduced, the algorithm produces by far the smoothest index, with the standard deviation of the differences being 26.2% lower than the PPR (original) algorithm presented by Maguire et al. [5], while the MSM sits at 58.2% lower.

If we compare the MyHome results in isolation, we can clearly observe that the addition of bedroom matching makes a very significant impact on the index performance. While the trend of each graph is observably similar, Fig. 8 demonstrates that month-to-month changes are less erratic and appear less prone to large, spontaneous dips. Considering the smoothness metrics, the introduction of bedroom factoring generates a decrease of 26.8% in the standard deviation of the differences and a decrease of approximately 48.4% in the MSM. These results show a clear improvement from tightening the accuracy of property matching and are promising for the potential future inclusion of additional parameters, such as the number of bathrooms or the property type, should such data become available.

Figure 8 corresponds with the results of these metrics, with the MyHome data (bedrooms factored) index appearing the smoothest time series of the four which are compared. It is important to note that the PPR data is based upon actual sale prices, while the MyHome data is based on listed asking prices of properties which are up for sale and as such, may produce somewhat different results.

Properties are generally observed to sell best in spring and towards the end of the year, with spring being the most popular period for property sales. Conversely, late summer and the months shortly after tend to be the quietest periods of the year for selling property [47]. These phenomena can be observed in Fig. 8, where there is a dramatic increase in the listed asking prices of properties in the spring months and towards the end of each year, while the less popular months tend to experience a slump in price movement. The two PPR graphs and the MyHome data (bedrooms not factored) graph follow more or less the same trend in price action and meet often. However, the majority of the price action in the MyHome data graphs tends to wait for the popular selling months. The PPR graphs do not exhibit this seasonality because selling property can be a long, protracted process: due to a myriad of factors such as price bidding, paperwork, legal hurdles, mortgage applications and delays in reporting, final sale notifications can happen outside of the time period in which the sale price is agreed between buyer and seller.

9 Conclusion

9.1 Contributions

The introduction of bedroom factoring as an additional parameter in the pairing of nearby properties has been shown to have a profound impact on the smoothness of the mix-adjusted median property price index. These developments were made possible due to the acquisition of a richer data set and the introduction of the GeoTree data structure, which greatly increased the performance of the algorithm. There is also scope for the introduction of further property characteristics (such as the number of bathrooms, property type etc.) in the proximity matching part of the algorithm, should such data be acquired.

Despite this advancement, the algorithm still has great benefit to the layperson, outperforming certain implementations of hedonic regression models without having access to richer, private datasets [11]. The result is a highly flexible algorithm, which can adapt to various levels of data availability while still offering a high degree of accuracy. Examples of free, publicly available datasets which could be used with our house price index model include the Irish Property Price Register [48] (used in this analysis), or the British Price Paid dataset covering England and Wales, published monthly by HM Land Registry [49]. Stakeholders with greater exposure to the market, such as mortgage lenders, are likely to have their own rich data sources and thus will be capable of leveraging the increased accuracy offered by our model when it is fed a more descriptive dataset, as demonstrated in this study.

Furthermore, the design of the GeoTree data structure ensures that minimal computational complexity is added when considering the technical implementation of this algorithmic adjustment [12]. Any additional parameters or attributes could also be integrated with ease, without increasing the complexity of the index computation. This contribution is of great benefit to all housing market stakeholders, as it means that as soon as the property sale data for a given month becomes available, a house price index model can be produced immediately, using our algorithm. This counteracts the substantial time lag issue associated with national hedonic regression models, where house price indices typically are not published for one-to-two months after the end of the month to which the data pertains (e.g. August property price change may not be published until October).

9.2 Limitations and future work

The efficiency improvements offered by the GeoTree are such that our model could be computed rapidly enough, with full automation, to provide real-time updates (e.g. up to every 5 min) to a property price index, if a sufficiently rich stream of continuous data were available to the algorithm. Large property listing websites, such as Zillow, likely have enough live, incoming listing data that such an index would be feasible to compute at this frequency. However, this volume of data is not publicly available, so we are unable to demonstrate such an application ourselves.

Comprehensive financial institutions dealing in mortgage lending likely have enough data to produce such an index on a region-by-region basis at least as frequently as weekly, if not even more regularly. This would aid their credit departments in lending decisions by offering a live, timely view of the changing dynamics of the property market and points of reference for typical house prices in each region, which the out of date national hedonic regression indices are incapable of doing, due to the lengthy publication delay discussed in Sect. 9.1.

Despite these limitations, we believe that our index has substantial benefit to all property market stakeholders, regardless of the amount of data at hand, or the richness of said data. Our future ambitions for research in this field include expanding our house price index model to perform property market forecasting based on emerging data. We also hope to gain access to an even larger property sale dataset, so that we can benchmark our model’s performance on a higher frequency than monthly indices. Beyond this, our goal is to integrate the house price index model into a deep learning model framework which can perform individual property valuation based on a number of input characteristics. This model aims not only to show the present value of a given property, but also the historical change in the value of said property, using our house price index model as an input.

Notes

Note: The leaf nodes consisting of an integer in curly braces, \(\{x\}\), are for demonstration and indicate that x is the number of insertions to the tree with that geohash string.

Note, this analysis is not designed to provide results on our house price index. Rather, it is intended to demonstrate that our GeoTree proximity matching solution is scalable to a larger geospatial dataset than the dataset used in our model analysis.

References

Diewert WE, de Haan J, Hendriks R (2015) Hedonic regressions and the decomposition of a house price index into land and structure components. Econom Rev 34(1–2):106–126. https://doi.org/10.1080/07474938.2014.944791

Case K, Shiller R, Quigley J (2001) Comparing wealth effects: the stock market versus the housing market. Adv Macroecon. https://doi.org/10.3386/w8606

Forni M, Hallin M, Lippi M, Reichlin L (2003) Do financial variables help forecasting inflation and real activity in the euro area? J Monetary Econ 50(6):1243–1255. https://doi.org/10.1016/S0304-3932(03)00079-5

Gupta R, Hartley F (2013) The role of asset prices in forecasting inflation and output in South Africa. J Emerg Market Financ 12(3):239–291. https://doi.org/10.1177/0972652713512913

Maguire P, Miller R, Moser P, Maguire R (2016) A robust house price index using sparse and frugal data. J Prop Res 33(4):293–308. https://doi.org/10.1080/09599916.2016.1258718

Plakandaras V, Gupta R, Gogas P, Papadimitriou T (2014) Forecasting the U.S. real house price index. Working paper series 30–14, Rimini Centre for Economic Analysis. https://ideas.repec.org/p/rim/rimwps/30_14.html

Hernando JR (2018) Humanizing finance by hedging property values. Emerald Publishing Limited, Bingley, pp 183–204. https://doi.org/10.1108/S0196-382120170000034015 (Chap. 10)

Jadevicius A, Huston S (2015) Arima modelling of Lithuanian house price index. Int J Hous Mark Anal 8(1):135–147. https://doi.org/10.1108/IJHMA-04-2014-0010

Klotz P, Lin TC, Hsu SH (2016) Modeling property bubble dynamics in Greece, Ireland, Portugal and Spain. J Eur Real Estate Res 9(1):52–75. https://doi.org/10.1108/JERER-11-2014-0038

Englund P, Hwang M, Quigley JM (2002) Hedging housing risk. J Real Estate Financ Econ 24(1):167–200. https://doi.org/10.1023/A:1013942607458

Miller R, Maguire P (2020) A rapidly updating stratified mix-adjusted median property price index model. In: 2020 IEEE symposium series on computational intelligence (SSCI), IEEE, p 9–15, https://doi.org/10.1109/SSCI47803.2020.9308235

Miller R, Maguire P (2020) GeoTree: a data structure for constant time geospatial search enabling a real-time mix-adjusted median property price index. arXiv e-prints arXiv:2008.02167

Kain JF, Quigley JM (1970) Measuring the value of housing quality. J Am Stat Assoc 65(330):532–548. https://doi.org/10.1080/01621459.1970.10481102

OECD, Eurostat, Organization IL, Fund IM, Bank TW, for Europe UNEC (2013) Handbook on residential property price indices. https://doi.org/10.1787/9789264197183-en

Goh YM, Costello G, Schwann G (2012) Accuracy and robustness of house price index methods. Hous Stud 27(5):643–666. https://doi.org/10.1080/02673037.2012.697551

Bourassa S, Hoesli M, Sun J (2006) A simple alternative house price index method. J Hous Econ 15(1):80–97. https://doi.org/10.1016/j.jhe.2006.03.001

Case B, Pollakowski HO, Wachter SM (1991) On choosing among house price index methodologies. Real Estate Econ 19(3):286–307. https://doi.org/10.1111/1540-6229.00554

Bailey MJ, Muth RF, Nourse HO (1963) A regression method for real estate price index construction. J Am Stat Assoc 58(304):933–942. https://doi.org/10.1080/01621459.1963.10480679

Case KE, Shiller RJ (1987) Prices of single family homes since 1970: New indexes for four cities. Working paper 2393, National Bureau of Economic Research. https://doi.org/10.3386/w2393. http://www.nber.org/papers/w2393

de Vries P, de Haan J, van der Wal E, Mariën G (2009) A house price index based on the spar method. J Hous Econ 18(3):214–223. https://doi.org/10.1016/j.jhe.2009.07.002 (special Issue on Owner Occupied Housing in National Accounts and Inflation Measures)

Jansen S, Vries P, Coolen H, Lamain CJM, Boelhouwer P (2008) Developing a house price index for the Netherlands: a practical application of weighted repeat sales. J Real Estate Financ Econ 37:163–186. https://doi.org/10.1007/s11146-007-9068-0

Costello G, Watkins C (2002) Towards a system of local house price indices. Hous Stud 17(6):857–873. https://doi.org/10.1080/02673030216001

Dorsey RE, Hu H, Mayer WJ, Chen Wang H (2010) Hedonic versus repeat-sales housing price indexes for measuring the recent boom-bust cycle. J Hous Econ 19(2):75–93. https://doi.org/10.1016/j.jhe.2010.04.001

Dombrow J, Knight JR, Sirmans CF (1997) Aggregation bias in repeat-sales indices. J Real Estate Financ Econ 14(1):75–88. https://doi.org/10.1023/A:1007720001268

Prasad N, Richards A (2008) Improving median housing price indexes through stratification. J Real Estate Res 30(1):45–72

O’Hanlon N (2011) Constructing a national house price index for Ireland. J Stat Soc Inq Soc Ireland 40:167–196

Bank of England (2018) How does the housing market affect the economy? https://www.bankofengland.co.uk/knowledgebank/how-does-the-housing-market-affect-the-economy. Accessed 24 May 2021

Zhu H et al (2005) The importance of property markets for monetary policy and financial stability. Real Estate Indica Financ Stab 21:9–29

Bank of England (2018) What is the bank of England’s role in the housing market? https://www.bankofengland.co.uk/knowledgebank/whats-the-bank-of-englands-role-in-the-housing-market. Accessed 24 May 2021

Che X, Li B, Guo K, Wang J (2011) Property prices and bank lending: some evidence from China’s regional financial centres. Procedia Comput Sci 4:1660–1667. https://doi.org/10.1016/j.procs.2011.04.179 (proceedings of the International Conference on Computational Science, ICCS 2011)

Robusto CC (1957) The cosine-haversine formula. Am Math Mon 64(1):38–40

Niemeyer G (2008) geohash.org is public! https://blog.labix.org/2008/02/26/geohashorg-is-public. Accessed 02 May 2019

McGinnis W (2017) Pygeohash. https://github.com/wdm0006/pygeohash, [Python]

Moussalli R, Srivatsa M, Asaad S (2015) Fast and flexible conversion of Geohash codes to and from latitude/longitude coordinates. In: 2015 IEEE 23rd annual international symposium on field-programmable custom computing machines, p 179–186. https://doi.org/10.1109/FCCM.2015.18

Moussalli R, Asaad SW, Srivatsa M (2015) Enhanced conversion between geohash codes and corresponding longitude/latitude coordinates. https://patents.google.com/patent/US20160283515

Roussopoulos N, Kelley S, Vincent F (1995) Nearest neighbor queries. SIGMOD Rec 24(2):71–79. https://doi.org/10.1145/568271.223794

Guttman A (1984) R-trees: a dynamic index structure for spatial searching. SIGMOD Rec 14(2):47–57. https://doi.org/10.1145/971697.602266

Arya S, Mount DM, Netanyahu NS, Silverman R, Wu AY (1998) An optimal algorithm for approximate nearest neighbor searching fixed dimensions. J ACM 45(6):891–923. https://doi.org/10.1145/293347.293348

Kothuri RKV, Ravada S, Abugov D (2002) Quadtree and R-tree indexes in oracle spatial: a comparison using GIS data. In: Proceedings of the 2002 ACM SIGMOD international conference on Management of data, ACM, p 546–557

Beygelzimer A, Kakade S, Langford J (2006) Cover trees for nearest neighbor. In: Proceedings of the 23rd international conference on machine learning, ACM, New York, NY, USA, ICML ’06, p 97–104. https://doi.org/10.1145/1143844.1143857

Bao J, Chow C, Mokbel MF, Ku W (2010) Efficient evaluation of k-range nearest neighbor queries in road networks. In: 2010 Eleventh international conference on mobile data Management, p 115–124. https://doi.org/10.1109/MDM.2010.40

Muja M, Lowe DG (2014) Scalable nearest neighbor algorithms for high dimensional data. IEEE Trans Pattern Anal Mach Intell 36(11):2227–2240. https://doi.org/10.1109/TPAMI.2014.2321376

Apostolico A, Crochemore M, Farach-Colton M, Galil Z, Muthukrishnan S (2016) 40 years of suffix trees. Commun ACM 59(4):66–73

MyHome Ltd (2021) http://www.myhome.ie. Accessed 31 Mar 2021

McMillen DP (2003) Neighborhood house price indexes in Chicago: a Fourier repeat sales approach. J Econ Geogr 3(1):57–73. https://doi.org/10.1093/jeg/3.1.57

Clapp JM, Kim H, Gelfand AE (2002) Predicting spatial patterns of house prices using LPR and Bayesian smoothing. Real Estate Econ 30(4):505–532. https://doi.org/10.1111/1540-6229.00048

Paci L, Beamonte MA, Gelfand AE, Gargallo P, Salvador M (2017) Analysis of residential property sales using space-time point patterns. Spatial Stat 21:149–165. https://doi.org/10.1016/j.spasta.2017.06.007

PPR (2021) Property price register. https://www.propertypriceregister.ie. Accessed 25 Oct 2021

HM-Land-Registry (2021) Price paid dataset. https://www.gov.uk/government/statistical-data-sets/price-paid-data-downloads. Accessed 25 Oct 2021

Funding

Open Access funding provided by the IReL Consortium.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Miller, R., Maguire, P. A real-time mix-adjusted median property price index enabled by an efficient nearest neighbour approximation data structure. J BANK FINANC TECHNOL 6, 135–148 (2022). https://doi.org/10.1007/s42786-022-00043-y
