Abstract

This article examines the impact of Federal Open Market Committee (FOMC) statements on stock and foreign exchange markets with the use of text-mining and predictive models. We take into account a long period since March 2001 until June 2023. Unlike in most previous studies, both linear and non-linear methods were applied. We also take into account additional explanatory variables that control for the current corporate managers’ and retail customers’ assessment of the economic situation. The proposed methodology is based on calculating the FOMC statements’ tone (called sentiment) and incorporate it as a potential predictor in the modeling process. For the purpose of sentiment calculation, we utilized the FinBERT pre-trained NLP model. Fourteen event windows around the event are considered. We proved that the information content of FOMC statements is an important predictor of the financial markets’ reaction directly after the event. In the case of models explaining the reaction of financial markets in the first minute after the announcement of the FOMC statement, the sentiment score was the first or the second most important feature, after the market surprise component. We also showed that applying non-linear models resulted in better prediction of market reaction due to identified non-linearities in the relationship between the two most important predictors (surprise component and sentiment score) and returns just after the event. Last but not least, the predictive accuracy during the COVID pandemic was indeed lower than in the previous year.

Similar content being viewed by others

Explore related subjects

Find the latest articles, discoveries, and news in related topics.Avoid common mistakes on your manuscript.

1 Introduction

Predicting the financial markets’ behavior has always been an important topic to researchers. Traders can decide whether to sell or buy a particular asset with the advance of time based on a number of arbitrages among which event arbitrage is still developing. Event arbitrage is a high-frequency strategy that trades on the market movements surrounding an event. Events utilized in event arbitrage strategies can be any release of news about the condition of the economy, industry- or corporate-specific announcements, disturbances on the market, and other factors that impact market prices.

As an event of interest, this article considers the release of Federal Open Market Committee (FOMC) public statements. The FOMC meets about eight times a year at scheduled meetings to discuss monetary policy changes, decide on the federal funds target rate level, review economic conditions, and assess price stability and the employment situation. There are also conference calls and non-scheduled meetings conducted on a non-regular basis.Footnote 1 For traders, FOMC meetings are a time of increased volatility because any change in federal fund rates can affect a range of economic aspects. In addition to the announced interest rate level, the content and specific wording is broadly analyzed in order to capture the tone of communication, along with the FOMC’s views on the economy and its policy propensity.

FOMC communication includes post-meeting statements (released immediately after decisions have been made), speeches by the Chair, the Chair’s quarterly press conference, meeting minutes, a summary of Economic Projections, Governors’ speeches, congressional testimony by the Chair, speeches by bank presidents, the Fed’s semi-annual written report to Congress, and the news feed of the Fed.Footnote 2 There is a vast literature that investigates market reaction to FOMC communication, among which the most examined are meeting minutes. This paper on the other hand broadens the existing literature on market reactions to statements.

Several studies have documented the fact that FOMC decisions have a significant impact on financial markets. Works on these issues focus on analyzing the mere fact of publication of any FOMC announcement, the day of FOMC scheduled meetings, or changes in interest rate value through the whole FOMC cycle (Cieslak et al., 2019), whether on the day of an FOMC meeting (Bernanke & Kuttner, 2005; Hayo et al., 2008; Kohn and Sack, 2004) or based on intraday stock reaction (Chirinoko & Curran, 2005; Farka & Fleissig, 2013). There is also a still growing body of research that is based on linguistic analysis of communication. Prior works include both an automated approach to analysis of FOMC communication and one based on human judgement. A narrative approach or “human text-mining” (meaning assigning subjective ratings of text by researchers) was applied for instance by Bernanke et al. (2004) and Romer and Romer (2004). More recent papers, as well as this one, usually incorporate an automated approach. Researchers such as Boukus and Rosenberg (2006), Lucca and Trebbi (2009), Cannon (2015), Mazis and Tsekrekos (2017), Jegadeesh and Wu (2017), Raul Cruz Tadle (2022) and Gu et al. (2022) statistically examined the content of FOMC communication in order to measure the tone and find its relationship to market reaction.

In this paper we study whether one can accurately predict financial markets reaction to the FOMC post-meeting statements based on their contents. In order to measure the effect, we extract the tone (“sentiment”) of the statements and include it as an independent variable in estimated models. We also account for market expectations regarding the Federal Funds Target Rate using 30-day Federal Funds Futures and the direction of change of the Federal Funds Target Rate relative to the rate announced in the previous statement. As additional predictors, we examine the Purchasing Managers’ Index (PMI) and Consumer Confidence Index (CCI). Econometrically, we employ various modeling approaches, including traditional linear regression and several machine-learning algorithms—namely, LASSO regression, ridge regression, support vector regression using both polynomial and radial kernels, random forest, and XGBoost. The sample under investigation is from 2001 to 2023. In our view, this is a useful choice as it includes three global crises (the financial crisis, the coronavirus pandemic and the war in Ukraine) along with many changes regarding leading individuals within the FOMC. Review of the literature in our research area allowed us to identify various research gaps, ones we fill with this paper. First, unlike most studies, we use both linear and non-linear methods in order to account for potential non-linear relationships between returns and their predictors. Second, we use both the scheduled time of statement releases and the time the public first learns about the result of meetings (we call it ‘adjusted time’), while it is commonly assumed in many studies that statements are announced at the scheduled time. Third, we take into account additional explanatory variables that control for the current corporate managers’ and retail customers’ assessment of the economic situation. Last, but not least, we apply a simple trading strategy based on the predicted returns and assess its profitability.

We examine stock and foreign exchange market reactions to FOMC post-meeting statements release in order to validate three research hypotheses. First, the information content of FOMC statements is an important predictor of the financial markets’ reaction directly after the event. Second, applying non-linear models results in better prediction of market reaction due to explaining more of the data variability and considering potentially non-linear relationships. Third, the predictive accuracy during the COVID pandemic is lower as financial markets are more volatile due to higher uncertainty related to the future macroeconomic situation.

The rest of this paper is structured as follows. Section 1 details the FOMC statements’ information content, which is analyzed in the context of market reaction. Section 2 details data issues, including data collation, sources, features preparation, and its analysis. Section 3 presents the empirical strategy, including sentiment retrieval and modeling techniques in order to examine the relationship between market reactions to FOMC statement sentiment, market surprise component, and other indicators. Section 4 provides the results of our analyses and a discussion of the results. The last section summarizes and concludes the paper and tables ideas for further extending this research.

2 FOMC Statements and their impact on financial markets

In recent years, public statements issued by the FOMC have become an increasingly important component of US monetary policy. Since the early 1980s, the FOMC has held eight regularly scheduled meetings per year, during which members discuss the economic outlook and formulate monetary policy. The FOMC first announced the result of a meeting including a Fed Funds Target Rate decision on February 4, 1994.Footnote 3 Before 1994, the market instead deduced changes to the target of the Federal Funds Rate from open market operations. In January 2000, the Committee announced that it would issue a statement following each regularly scheduled meeting, regardless of whether or not there had been a change in monetary policy.Footnote 4 Statements have been published since then on the same day that policy decisions are made.

As to measuring FOMC communication content, a similar text-mining methodology (namely, Latent Semantic Analysis) was applied by Mazis and Tsekrekos (2017) and Boukus and Rosenberg (2006). The former analyzed the FOMC statements issued between May 2003 and December 2014 and found that they incorporate many recurring topics. In that study, the themes appear to be statistically significant in explaining the variation in 3-month, 2-year, 5-year, and 10-year Treasury yields. The study controls for monetary policy uncertainty and financial markets’ stress (variables such as the three-month Eurodollar implied volatility and the VIX from the Chicago Board Options Exchange) along with concurrent economic outlook (variables such as the S&P 500 index, the foreign exchange value of the dollar, and the price of crude oil). As to statement-related variables, only the themes and release indicators were considered. The analysis was conducted through time-series regressions. The latter researchers apply latent semantic analysis to the FOMC’s minutes. Their analysis shows that the minutes reflect complex economic information that can be useful as a source of information in predicting economic activity. Boukus and Rosenberg discover that several themes are related to current market indicators, especially three-month yields and GDP growth. As control variables they use a proxy for expected business conditions and Eurodollar implied volatility. They use correlation analysis and linear regression for statistical inference. As to FOMC-related variables, only the minutes release indicator was considered.

Jegadeesh and Wu (2017) extend the analysis of extracting the topics of FOMC minutes by measuring each topic sentiment using a lexicon-based approach. They then examine the informativeness of the overall document with special heed to the extracted topics’ meaning if market volatility increases following the minutes’ release. They find that on the document level, the market does not indicate a significant reaction to either positive sentiment or negative sentiment. As to the document topic level, they find that the market reacts significantly to monetary policy, inflation, and employment and does not pay particular attention to growth-related discussions. As an equity market approximation, they use SPDR exchange-traded fund and S&P E-Mini futures contracts, and as a bond market approximation they use Eurodollar futures contracts.

Gu, Chen, et al. (2022) also focused on analyzing FOMC meeting minutes. They calculate the sentiment using the Loughran and McDonald lexicon that they confirmed with Cloud Natural language API provided by Google. They find that stock market reacts positively when the tone of FOMC minutes is more optimistic and that it reacts negatively to pessimistic tone. They proved that the influence of tonality of FOMC minutes on stock market is more significant during periods of high monetary policy uncertainty. They investigate the returns of E-mini S&P 500 futures and 2-year, 5-year and 10-year Treasury futures from 5 min before to 5 min after the release. They also investigated the response of Risk Premium to FOMC Minutes. This research regarded the period between January 2005 and January 2018.

Tadle (2022) evaluates both the content of FOMC meeting minutes and their corresponding statement. He implements the Dictionary Method of Content Analysis based on pre-defined keywords classified to hawkish and dovish types. In his work, he investigates the fed funds futures, the U.S. and emerging market equity indices, and foreign exchange valuations against the U.S. dollar. The results indicate that fed funds futures rates tend to rise (fall) following hawkish (dovish) minutes releases and that the dollar’s valuation against several currencies also has a higher likelihood of increasing after the market observes hawkish minutes information. He works on high frequency data using tick-by-tick frequency and adjusting it to more accurately represents market activity. His research covered FOMC meetings held on days from December 14, 2004 to December 15–16, 2015.

Cannon (2015) on the other hand focuses only on sentiment extraction and in order to score the content of FOMC meeting transcripts, he uses a lexicon-based approach for sentiment extraction using a combination of finance and consumer dictionaries. He discovers that the sentiment of Committee discussions is strongly related to real economic activity approximated by the Chicago Fed National Activity Index (CFNAI) and that the relationship varies by speaker class—more specifically, the tone of Bank Presidents proved to be more positive than the tone of the Governors and staff, and the tone of the staff proved to be more positive than the tone of the Governors. His research regards the period between 1977 and 2009. For statistical inference he utilized correlation analysis and linear regression using only one factor.

The content of communication was also investigated by Lucca and Trebbi (2009). They use two automated scoring algorithms regarding FOMC statements: Google semantic orientation score and Factiva semantic orientation score. They find that for high-frequency data, short-term Treasury yields respond to unexpected policy rate decisions and that longer-dated Treasury yields react mainly to changes in the content of communication. The dependent variables they analyzed were monetary policy surprise and the change in linguistic scores. There is no clear indication as to the statements’ release time that they considered. For statistical inference they use linear regression, a univariate model, Taylor-rule and VAR specifications.

As to measuring the impact of the Fed and also the mechanism behind impact, Bernanke and Kuttner (2005) conducted an event-study analysis focused on explaining the stock market reaction to Federal Reserve policy by analyzing FOMC announcement days. They consider the equity indexes of the Center for Research in Security Prices (CRSP) as an approximation of stock market reaction. Also, they conduct an event study analysis based on daily returns. They calculate the market reaction to funds rate changes on the day of the change, taking into further consideration funds futures data to gauge market policy expectations. They find a relatively strong and consistent response of the stock market to unexpected monetary policy actions. Their research regards FOMC meetings from June 1989 to December 2002. They estimate linear regression and VAR specification.

Kutan and Neuenkirch (2008) study the effects of FOMC communication (including statements, monetary policy reports, testimonies, BOG speeches, Presidents’ speeches) on the returns and volatility of Treasury bills, Treasury notes, S&P 500 Index, and the Dollar/Euro spot rate and focus on monetary policy and economic outlook. They estimate a linear regression and GARCH(1,1) models and incorporate manual communication classification as dummy variables. They control for changes in the Federal Funds target rate and also the forecasted output and inflation level. Additionally, they also consider GDP and CPI as independent variables. They find that central bank communication has significant impact on the financial markets’ returns, in particular on bond markets, and much less on stock and foreign exchange markets. They also find that on stock and foreign exchange markets, the volatility is muted after an announcement. They observe that the reaction of the market is relatively larger the more formal the type of communication. They investigate the period between January 1998 and December 2006.

Farka and Fleissig (2013) examine the impact of FOMC statements and interest rate surprises on asset prices’ volatility, in particular on S&P 500 and 3-month, 2-year, 5-year and 10-year US Treasury securities. Variables related to FOMC statements are four binary indicators that assume a value of one if the analyzed point of time is (1) 3 h prior to the policy release, (2) during the 20-min time-frame corresponding to the policy announcement, (3) right after a policy release up until the end of the policy announcement day, or (4) during the first 4 h of the following day and zero otherwise. Moreover, interest rate surprises are considered. They find that for most securities (especially stocks, intermediate and long-term yields), FOMC statements generate larger reactions than interest rate surprises. They investigate the period between February 1994 and June 2007. The method of estimation they use is GARCH(1,1) specification.

Several studies examined the market reaction within event windows that proved significant to other researchers. For example, Boukus and Rosenberg (2006) considered only a 20-min event window and Farka and Fleissig (2013) considered a 30-min interval.

Based on the reviewed literature, we identified several research gaps which are filled by our article. Firstly, the available literature provides mainly analyses based on basic methods which assume linear relationships between variables, such as correlation or linear regression. In our research, both traditional linear regression and non-linear machine-learning algorithms are used in order to identify potential non-linearities in relationships and assess whether non-linear methods result in better prediction. In addition, we apply novel tools of explainable artificial intelligence (XAI). In order to consistently assess the importance of predictors across various modeling approaches we use model agnostic permutated feature importance (PFI). And to uncover the shape of relationships between predictors and the outcome we calculate and plot Partial Dependence Profiles (PDP). What is more, we consider additional explanatory variables that control for the current corporate managers’ and retail customers’ assessment of the economic situation, which in turn is assumed to influence the financial markets. Our analysis covers a long period including recent turbulent times—COVID pandemic and war in Ukraine. Last, but not least, we use exact statement release time when the public first learned about the result of the meeting. It is commonly assumed in the majority of studies that statements are announced at the scheduled time, which is not always the case. We follow the research by Rosa (2012) who obtained the FOMC statements announcement times by searching through several financial media sources to record the time the public first learned about a FOMC decision. The announcement times regarding the period after 22.06.2011 (the last record in Rosa research) are sourced from the investing.com platform.

The general objective of this paper is to analyze whether one can accurately predict the reaction of financial markets to the content of FOMC public statements directly after they are made public. We aim to verify three research hypotheses. First, the information content of FOMC statements is an important predictor of the financial markets’ reaction directly after the event. Second, applying models that can identify non-linear relationships results in better prediction of market reaction due to explaining more of the data variability. Third, the predictive accuracy of the financial markets’ reaction during the COVID pandemic is lower as financial markets are more volatile.

We decided to conduct research considering two financial markets—namely, the stock market and foreign exchange market, as several studies (such as Hayo et al., 2014) have proven that these markets evince significant reaction to FOMC communication. On the stock market, we analyze the returns of the S&P 500 global index, which includes 500 US companies with the highest capitalization and thus is the best single equity gauging the biggest companies’ performance. As to the foreign exchange market, we analyze EUR/USD which is the most important currency pair in the world, one that significantly reacts to both Europe and US events.

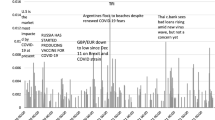

In recent years, the FOMC has improved its communication with the public. Nowadays, the Fed reports more frequently and more comprehensively on economic matters. The public statements form has evolved over the years regarding length, information provided, and wording. The period analyzed in this article covers only the newest regime (from 2001 to 2023) and thus only the changes in statements applying to that period will be described. As to the statements’ length, Fig. 1 indicates the number of words used in the statements through the whole analyzed period. Significant changes occurred after 2008 (the financial crisis), 2014 (Janet Yellen takes over the role of Chairman) and 2020 (coronavirus pandemic).

Besides the statement’s length, the content has also changed. At the beginning of the analyzed period, statements included only general information on the decided Federal Funds Target Rate, the current assessment of the economic situation, the likely future course of the economic situation, and the list of participants voting for a specific measure. Over time, the statements became more informative and transparent. The current form of the statement includes six main features. It begins with an assessment of the current state of the US economy since the previous FOMC meeting. After that, the monetary policy goals are emphasized. The following statement regarding FOMC objectives recurs in almost every statement: “Consistent with its statutory mandate, the Committee seeks to foster maximum employment and price stability”. Later in the statement, the decision regarding the Federal Funds Target Rate is presented. After the policy decision, the likely future course of the economic situation is introduced. The announcement additionally incorporates what factors the FOMC plans to consider in upcoming strategy choices. The last element of the statement is the list of participants voting for the FOMC monetary policy measure. It is important to note that the statements are rather uniform and contain identical sentences with the only difference being the assessment of the economic situation.

3 Methodology

3.1 Modeling approach

We examine the relationship between the information content of FOMC statements, market projections, changes in the Federal Funds Target Rate, the Purchasing Managers’ Index, Consumer Confidence Index, and returns of the S&P 500 Index and EUR/USD to assess the influence of FOMC measures on financial markets. In order to obtain the relation, we estimate seven modeling algorithms—namely, linear regression, LASSO regression, ridge regression, support vector regression using polynomial and radial kernels, random forest, and XGBoost. The linear regression model is a baseline method in the case of many researches (Boukus & Rosenberg, 2006; Lucca & Trebbi, 2009) and what is more, for almost each research regarding the impact of FOMC communication on financial markets. LASSO and ridge regression are OLS extensions that allow for detection of the most important predictors and reduce the impact of redundant variables. Support vector regression, random forest, and XGBoost are three non-linear methods from the area of machine-learning, though ones based on very different assumptions. SVR aims to find a hyperplane fitting the data after applying some non-linear transformations based on a selected kernel function, whereas random forest is one of the most popular ensemble tree-based methods utilizing the bootstrap averaging (bagging) approach—i.e., estimation of many independent models on different bootstrap samples drawn from the original data. XGBoost on the other hand is an implementation of the Gradient Boosting algorithm and is one of the most popular ensemble algorithms based on the boosting method—that is, sequential improvement of the model. To the best of our knowledge, machine-learning algorithms have not been applied to this research problem before.

Linear least squares regression is by far the most widely used modeling method in many different research areas. It is a simple and universal statistical method used for finding linear dependencies between a continuous dependent variable and several features called independent variables. The general objective of this approach is to minimize the error rate—more specifically, to minimize the sum of the squares of the residuals. This method enables us to estimate unknown coefficients that inform to what extent and in which direction independent variables influence the dependent variable. Based on the estimated coefficients and the actual values of independent variables, one can obtain a forecast of the dependent variable.

LASSO (Tibshirani, 1996) and ridge regression are shrinkage modeling methods. This is very similar to linear regression. However, the difference lies in the minimized value. The minimized quantity is the sum of the error rate and the shrinkage penalty that is applied for the variables’ coefficients (except intercept). LASSO regression uses \({l}_{1}\) penalty, while ridge regression uses \({l}_{2}\) penalty. As a result, parameters at less important (or redundant, e.g., highly correlated) variables will shrink towards zero; some of them will even be set equal to zero. At the expense of a certain bias, LASSO and ridge often allow us to obtain more precise forecasts on new data.

Support vectors (Smola & Schölkopf, 2004) are one of the most popular tools used for modeling both categorical (SVM) and quantitative (SVR) dependent variables. In a linear problem, SVM performs classification by finding a hyperplane that maximizes the margin between observations that belong to different classes. SVR aims to fit the best regression hyperplane within a certain threshold of error value \(\varepsilon\). Applying a kernel function results in an implicit non-linear mapping into a higher dimensional feature space, where it is guaranteed to find an appropriate hyperplane (Vapnik, 1995). Thus, one can think of SVR as a process of performing linear regression in a transformed and more dimensional space. The most popular kernel functions include: a linear kernel, a polynomial kernel, and a Gaussian radial basis function.

Random forest (Leo, 2001) is an ensemble learning method that builds a number of different decision or regression trees. The main advantage of using ensemble methods over simple tree models is that it results in better predictive performance than can be obtained from any of the trees alone, and it corrects for trees’ tendency toward overfitting. Random forest utilizes bootstrap averaging (bagging), which repeatedly selects a random sample with a replacement (bootstrap) from the training data set and independently fits trees to these samples. The final result is the average of all model predictions made on all selected subsamples. An important characteristic of random forest is that, at each split in each individual tree, it uses a random subset of predictors. This prevents individual trees from being correlated, because if one or a few features appeared to be strong predictors, they would be selected in many trees in the top split resulting in very similar trees. Averaging results of similar trees would not reduce variance as much as averaging results of uncorrelated trees. Therefore, this limited number of considered features reduces that risk. Random forest requires the optimization of hyperparameters, including in particular a number of trees to build in the forest and a number of variables randomly sampled at each split.

XGBoost (Chen & Guestrin, 2016) on the other hand is built on the basis of boosting the ensemble method. It is an implementation of the Gradient Boosting algorithm with additional technical features—namely, distributed computing, parallelization, out-of-core computing, and cache optimization. The concept of boosting is quite similar to bagging. However, in contrast to bagging where trees were built independently, boosting trees are built sequentially in a way that each tree uses information from previously obtained trees. More specifically, each model is trained with respect to the error of the whole ensemble learned so far. Similar to Random Forest, XGBoost tuning requires optimization of several hyperparameters including in particular the number of iterations, the rate at which the model learns patterns in data, maximum depth of the tree, minimum number of data required in a child node, the number of features supplied to a tree, and regularization parameters.

In our analysis, we compare the market reaction to chosen indicators with respect to eight specified event windows around the time when the post-meeting statements are released. We consider six pre-event windows, namely 10-, 5-, 4-, 3-, 2-, and 1-min before statement is released, and eight post-event windows, namely 1-, 2-, 3-, 4-, 5-, 10-, 30-, and 60-min after statement is released. Pre-event windows are considered in order to examine if there is any insider effect visible on the markets. This happens if the buying or selling decisions of insiders are followed by other investors and thus the market reacts in advance of an actual event.

Based on specified event windows, we then calculate simple returns as a difference between an asset price at the time of a FOMC statement release and an asset price at the end of event window divided by an asset price at the time of a FOMC statement release. The following formula presents the S&P 500 Index returns calculation in the case of a sample 5-min event window:

where \({Y}_{2:05}^{SP500}\) is the close price of the S&P 500 Index reported at 2:05 p.m. and \({Y}_{2:00}^{SP500}\) is the close price of the S&P 500 Index reported at 2:00 p.m.

We follow Kohn and Sack (2004), Bernanke and Kuttner (2005), and Farka and Fleissig (2013) and gauge for market policy expectations. Expectations of Fed policy measures are not directly observable. However, according to Kuttner (2001), the Fed funds’ futures prices are a proper market-based approximation for those expectations. We thus utilize the approach proposed by Kuttner (2001) and adopted also by earlier mentioned researchers. For each monetary policy announcement, we calculate the unexpected component of FOMC decisions as follows:

for all but the first and last days of the month where \(m\) is the number of days in month, \(d\) is the day of FOMC statement release and \({f}_{t}\) (\({f}_{t-1}\)) are the futures rates at time \(t\) (\(t-1\)). For the first date of the month, the surprise component is equal to the one-month futures rate on the last day of the previous month. For the last day of the month, the surprise component is on the other hand calculated as the difference in one-month futures rates.

The general model specification is as follows:

where \({Score}_{t}\) is the tone of a statement released at time \(t\), \(\Delta {r}_{t}^{u}\) is an unexpected component of FOMC decisions calculated based on 30-day Federal Funds Futures, \({FFTR}_{change}\) is the change in the Federal Funds Target Rate compared to the rate published in the previous statement, \({PMI}_{t}\) is value of the Purchasing Managers' Index holding at time \(t\), \({CCI}_{t}\) is a value of the Consumer Confidence Index holding at time \(t\) and \({\in }_{t}\) is the prediction error component. The rationale behind considering both PMI and CCI indexes is that this controls for the attitudes toward the economic situation from two perspectives—namely, business and individual customers.

For all the above-mentioned event windows, we estimate a linear regression which we consider as a baseline model, LASSO regression, ridge regression, SVR using polynomial and radial kernels, random forest, and XGBoost. For each model, the Root Mean Squared Error (RMSE) measure was used as a loss function while model tuning. What is more, to reliably compare the accuracy of applied algorithms we use cross-validation on a rolling basis. First we estimate all models on the training sample until the end of 2018 and use FOMC statements announced in 2019 as the first validation sample. Then we extend the training sample until the end of 2019 and use 2020 as the second validation sample. In the end we estimate models on the data up to the end of 2020 and use 2021 as the third validation sample. Based on the three validation samples (2019, 2020, 2021) we compare the performance of linear and non-linear models in order to verify the second research hypothesis. In addition, this distinction as to the years included in each sample was made in order to be able to confirm or reject the third research hypothesis – namely, that predictive accuracy during the COVID pandemic (2020, 2021) is lower as financial markets are more volatile due to higher uncertainty related to future macroeconomic situation. Then the models are estimated once again on the longest training sample covering the period until the end of 2021 and we use them to predict the reaction of financial markets to FOMC statements on the final test sample since January 2022 until June 2023. This final step is used to verify conclusions from earlier validation samples. Last, but not least, on the test sample we apply a simple trading strategy assuming a long position in the modelled asset for the length of the considered interval whenever the predicted return is positive and assess its total gross profit and loss.

Models are compared with the use of R squared (R2) and Root Mean Square Error (RMSE) of predictions. To assess the variables’ importance in a consistent way across models, we use Permutated Feature Importance (PFI. This is a model agnostic metric which measures the increase in model error (we use RMSE for consistency) after a particular feature is randomly permutated (we used 10 permutations in each case). Additionally, in order to assess the relationship between the outcome variable and its predictors, we analyze Partial Dependence Plots (PDP).

3.2 Initial text preprocessing

As an initial text processing the steps taken before a statement’s sentiment quantification, the following text-cleaning measures were undertaken. First, all downloaded statements were converted into the Corpus, which is a standard text representation in Natural Language Processing. After that, all words were lower-cased. Additionally, punctuation marks and numbers were removed. Later words from the list of English stop words were excluded. The list was originally sourced from tm package implemented in R Software. The list was further extended with FOMC abbreviation expansion and stop words list provided by Loughran and McDonald.Footnote 5 Then all words were subject to the process of lemmatization. The goal of lemmatization is to reduce the inflectional form of a word to a base form. Finally, tokenization took place, this being a process of separating a piece of text into smaller units. In case of our analysis these units are words.

3.3 Sentiment extraction approach

There are two primary methods for calculating text sentiment, including unsupervised and supervised methods. The major difference between these two types of algorithms is that unsupervised algorithms are based on non-tagged data—while supervised algorithms are based on labeled data. The benefit of using unsupervised learning is that no human-tagged training data is needed, something that is costly and subjective; however, it usually fails to perform as well as supervised learning. In the case of FOMC statements, no labels are officially available.

There were several attempts to label FOMC communication, including human tagging (Hayo et al., 2008), market reaction to release (Zadeh & Zollmann, 2009) and statistical methods (e.g., Boukus & Rosenberg, 2006; Cannon, 2015; Jegadeesh & Wu, 2017). There are several statistical approaches used to extract the content of FOMC statements. For example, Boukus and Rosenberg (2006) and Mazis and Tsekrekos (2017) utilize Latent Semantic Analysis in order to extract distinct topics which are covered in post-meeting statements. Topics were extracted also by Jegadeesh and Wu (2017) with the use of an algorithm called Latent Dirichlet Allocation. Besides extracting topics there were also attempts to extract the tone of the communication by using sentiment analysis. With the use of a lexicon-based sentiment analysis approach, Jegadeesh and Wu (2017) calculated sentiment for each extracted topic and Cannon (2015) calculated the sentiment of the overall document. Lucca and Trebbi (2009) on the other hand, use automatic algorithms implemented by Google and Dow Jones (Factiva tool).

In this study, in order to achieve the best possible result without the need of labeling statements, we applied the FinBERT (Yang et al., 2020), which is an NLP finance-specific pre-trained language model based on financial documents including corporate reports, earnings call transcripts, and analyst reports. The algorithm requires only text as an input. No tuning was conducted since the goal of using this tool was to omit the data labeling process while the tuning process required labels. The result is a single number assigned to each document which denotes the sentiment. The sentiment values range from – 1 to 1. Values below 0 indicate rather negative sentiment while values above 0 indicate more optimistic sentiment of the statement.

Figure 2 presents the sentiment score of each statement released between March 20, 2001 and June 14, 2023. A trend line (blue line) was added to the plot in order to better reflect the distribution of positive and negative sentiments over time. Black dots indicate FinBERT sentiment values over time. What can be seen is that before 2005 there were relatively more documents indicating positive sentiment. The trend is reversed until 2012, and after that there is again an advantage of positive statements over negative statements. Also, the sentiment trend shows the financial crisis around 2008 and to a much lesser extent the coronavirus outbreak in 2020.

The FOMC Statements sentiment score obtained using FinBERT model. The figure shows the trend of net sentiment. Analysis includes 186 FOMC statements released between March 20, 2001 and June 14, 2023. The meeting held on January 21, 2008 was omitted due to a lack of key financial data. Black dots are the values of sentiment score over time and the blue line represents the trend calculated using local regression

4 Data

4.1 FOMC statements

Our analysis is based on FOMC statements released over the period from March 2001 to June 2023. The total sample comprises 186 statements. Statements were collected from the Federal Reserve websiteFootnote 6 with the use of a web-scraping algorithm implemented in Python. In the scraping algorithm we limited the content only to FOMC announcements, omitting the names of voters listed in the last paragraph. For the purpose of our primary analysis, we consider statements released after both scheduled and unscheduled meetings, including conference calls. We additionally exclude two statements that were released during the weekend.Footnote 7

FOMC statements are initially analyzed in terms of their length and most common words. The results of the analysis of statements’ length is presented earlier in this article. As to the most common words, Fig. 3 presents a word cloud summarizing the 100 most frequent terms used by the Fed. It can be clearly noticed that the term with the highest frequency is ‘inflation’. Other common words are ‘economic’, ‘will’,’ rate’, ‘percent’, and ‘conditions’. Even though the figure was created after stop words removal, there are still terms present that do not carry any significant content, as do all of those listed above. These terms will be naturally filtered out in the process of sentiment calculation since these are not available in utilized dictionaries.

The data cleaning process reduced the number of words approximately by half. The average number of words in the statement before cleaning was 392, while the average number of words in the statement after cleaning was 195.

4.2 Financial market data

In order to gauge market reaction to FOMC statements, we use high-frequency quotations from both stock and foreign exchange markets. For the stock market, our main indicator is 1-min intraday data on the S&P 500 Index. We use the closing price of an index documented every minute. For the exchange rate market, we use data on the EUR/USD spot rate. Data is collected from Bloomberg for the period from March 1, 2001 to July 1, 2023. Figure 4 shows the reaction in terms of the volatility of asset prices measured with standard deviation around FOMC statements releases. This is obtained as an aggregated standard deviation per one-minute time interval from all analyzed FOMC meeting days. The formula is as follows:

where T is the number of observations in the sample, \({X}_{t}\) is the one-minute return, and \(\overline{X}\) is the sample mean. The number of observations is usually equal to the number of FOMC meetings in the scope of this analysis, unless for some date there is no observation available at a specific point of time.

Volatility of S&P 500 (top panel) and EUR/USD (bottom panel) around statement release. The figure shows the standard deviation of S&P 500 and EUR/USD returns around FOMC statement release. The shaded area indicates the approximated release time of the FOMC statements. Analysis includes 184 FOMC meetings from March 20, 2001 to June 14, 2023. Analyzed window of time for the plot is from 12:30 p.m. until 3 p.m.

The shaded area indicates the approximate release time of the FOMC statement. As to the S&P 500, a significant increase in index volatility is observed between 2:00 p.m. and 2:15 p.m., and this also persists after the release. Maximum volatility before approximate release is about 0.0339 while after the approximate release, maximum volatility reaches above 0.0854. In the case of EUR/USD volatility, it is in general higher than the S&P 500’s volatility for the whole event window. No significant increase around the approximate release time of FOMC statements is observed. Instead, several volatility peaks can be seen during the observed window. The empirical analysis considers 184 FOMC meeting days between March 20, 2001 and June 14, 2023. One meeting held on January 21, 2008 was omitted due to the lack of S&P 500 data on that day, most probably resulting from the fact that that day was Black Monday in worldwide stock markets.

In order to correctly analyze the impact of FOMC statements on both assets, we first needed to map the event window of the announcement with respective financial data according to Content of FOMC Statements. In this section, we analyze fourteen different event windows, namely.

10-, 5-, 4-, 3-, 2-, and 1-min before the event and 1-, 2-, 3-, 4-, 5-, 10-, 30-, and 60-min after the event. For example, assuming the statement was announced at 2 p.m., the 30-min event window covers the period from 2 p.m. to 2:30 p.m.

4.3 Market surprise component

As indicated in Sect. 2, we follow Kohn and Sack (2004), Bernanke and Kuttner (2005), and Farka and Fleissig (2013) and gauge for market policy expectations based on the transformation of 30-day Federal Funds Futures, which was proven by Kuttner (2001) to be a proper market-based approximation for those expectations. We source historical daily data from the investing.com website.Footnote 8 The transformation is obtained using Eq. (2). If there is no statement release on a particular day or the statement is released on the last day of month, the surprise component of zero is assigned.

4.4 Change in the Federal Funds Target Rate

Another variable considered was the directional change of the Federal Funds Target Rate relative to the previous FOMC statement’s release. This was obtained based on the rate that is agreed by FOMC members and then published in a statement. The FFTR was announced as a single number until 2008 and then as a range of values. The data was downloaded from the FRED website separately for the two regimes and then combined. The dependent variable obtained assumes a value of 0 if the FFTR value or range of values did not change from the previous FOMC statement, – 1 if the announced FFTR rate or its range is lower than the previous FOMC statement post and 1 if the announced FFTR rate or its range is greater than the previous FOMC statement.

4.5 Other indicators

Besides the FOMC statement quantifier, interest rate surprises, and change in the Federal Funds Target Rate, we additionally consider in the analysis two economic indicators—namely, the Purchasing Managers’ Index (PMI) and the Consumer Confidence Index (CCI). We sourced the actual monthly data from the investing.com platform.Footnote 9 PMI is calculated based on a monthly survey of senior executives from about 400 companies in 19 industries. The survey is based on five areas: new orders, inventory levels, production, supplier deliveries, and employment. Questions are related to business conditions and any changes in the business environment regarding purchases. As to the CCI, it indicates consumer confidence level with respect to economic stability. The index is based on a monthly survey of about 3000 households that are located all around the United States. The questionnaire includes five questions from two areas: current economic conditions and expectations regarding the changes in economic conditions.

5 Results and discussion

In this section, we examine the stock and foreign exchange markets’ reaction to the release of FOMC post-meeting statements in order to explore three research hypotheses. First, we state that the information content of FOMC is an important predictor of financial markets’ reaction directly after the event. Second, application of non-linear models results in a better prediction of market reaction due to explaining more of data variability. Third, the predictive accuracy during the COVID pandemic is lower as financial markets are more volatile due to higher uncertainty related to the future macroeconomic situation. We fit linear regression, LASSO regression, ridge regression, support vector regression, random forest, and XGBoost for fourteen event windows calculated on the basis of the time when statements were released, only on release days. Usage of the quantified tone of the FOMC statements allows us to verify the first research hypothesis. The second hypothesis is verified by estimating several linear and non-linear models and further by comparing them with respect to the fit and prediction error. The last hypothesis is verified by repeating the model estimation four times using various out-of-sample periods—the year 2019, 2020, 2021 and then 2022–2023. We compare the out-of-sample model performance with the use of R2 and RMSE. In the main article, we are showing the R2 metric while RMSE is presented in the Appendix. Since the greatest effect is seen closest to release time, only the results for six event windows, namely 1-min before the event and 1-, 2-, 3-, 4-, and 5-min after event are reported. It is important to note that the modeling samples consist of about a hundred observations in each event window. We focus on the adjusted statements release time, when the market first learned about a FOMC decision. However, the conclusions were not radically different for the scheduled time.

Our results (R2) for all validation samples (2019, 2020, 2021 and 2022–2023) and both assets (S&P 500 and EUR/USD) are presented in Table 1. In the case of LASSO and XGBoost, there were cases where we didn’t obtain meaningful R2 since the optimal model resulted in a constant prediction. These models are thus not reported in the table below. More specifically, LASSO was not reported for S&P 500 and EUR/USD while XGBoost was not reported for EUR/USD.

Based on the results for the S&P 500 for the 2019 validation sample, one can observe much greater explanatory power for non-linear models (random forest and SVR with a radial kernel) of the return in the first minute before the event and in the first minute after the event. In the following event windows the explanatory power decreases to increase again at the end of the observation window. In the second validation sample (2020) random forest loses its explanatory power. The performance of SVR also decreases, but its variant with a polynomial kernel consistently remains the best performing algorithm in most of the intervals. In 2021 again the random forest seems to capture the best the relation analyzed by model. Looking at all validation samples and all event windows, on average, random forest and SVR seem to perform the best. Their superiority over the linear models is corroborated by the results in the test sample covering the period 2022–2023 in the first 1–2 min immediately after the event, while later linear models have higher predictive power.

The conclusions are much less intuitive for the foreign exchange market. There is no clear intermediate reaction as in the case of the stock market—the values of R2 in the first few minutes are not as high as for S&P 500. However, again in all validation sample one can observe that non-linear algorithms (random forest or SVR) have higher explanatory power than the linear models. This observation is also confirmed by the test sample (2022–2023) where a random forest is explaining much more variability of returns directly after the FOMC statement release than any other approach. For most models, including all linear approaches, R2 is low for most of the analyzed event windows, on contrary to stock market. This may suggest that the FOMC statement introduces much greater uncertainty on the FOREX market, which thus results in much greater volatility and price changes. They are much more difficult to predict than on the stock market, especially via linear models. This result is counter-intuitive, as one might expect that the decisions on interest rates and related FOMC statements should have an immediate impact on currency rather than stock prices. But in recent years, in response to the global financial crisis and more recently to the COVID-19 recession and Russian invasion on Ukraine, central banks across the world have unleashed synchronized monetary stimulus to backstop the economy. This was done by changing the short-term and long-term rates downward. In such circumstances investing in stocks becomes more profitable when compared to bank deposits and other investments and therefore more attractive for individuals, who in turn rather do not invest in the FOREX market.

Therefore, based on the models’ fit in the validation samples and the test sample we conclude that non-linear algorithms perform better than the linear approaches for both the stock market and the foreign exchange market, which confirms our second research hypothesis.

In addition to the analysis based on R2 we also compared the predictive accuracy of all models for both types of assets with RMSE. The results are presented in Tables 4, 5, 6 show the average returns for the analyzed event windows in order to provide broader context of the analysis.

In the case of S&P 500 returns, the best accuracy of predictions is observed for 2020 validation sample for almost all event windows. On contrary, the prediction accuracy is the lowest for 2022–2023 sample, except for 1-min before event window. For EUR/USD one can clearly see higher precision of forecasts in 2019 sample compared to others regardless of the model and range. RMSE error based on 2020 sample is 1.5–4 times higher than for 2019 sample (depending on the model and range) and the difference persists in the subsequent years. The results for the FOREX market confirm our third research hypothesis that the predictive accuracy during the COVID pandemic is lower as financial markets are more volatile due to higher uncertainty related to the future macroeconomic situation. The results for S&P 500 suggest that in the stock markets the higher uncertainty might have been introduced by the Russian invasion on Ukraine in 2022.

Based on the final test sample (2022–2023), we also checked if predictions from the estimated algorithms can be applied in a simple long-only trading strategy. Whenever the predicted return at the moment of the FOMC statement release was positive, we assumed a long position in the analyzed asset (S&P500 or EUR/USD) and kept it until the end of the prediction interval. Cumulative profits and losses (in gross terms—we did not take into account transactional costs) for all algorithms and intervals are presented in Table 2.

The strategy for EUR/USD based on predictions from non-linear algorithms gives small positive profits, but only in the first minute after the announcement, which suggests quick adjustment of the FOREX market to the news. The best of the models (SVR) also gives profits up to the 4th minute after the event, but much lower than in the first one. In the case of S&P 500—it is not possible to obtain any profit even in the gross sense (without transactional costs), which is related to poor predictive power of the models in 2022–2023 mentioned earlier.

Finally, to verify our first research hypothesis about the impact of the content of the FOMC statement on financial markets’ returns we apply further in-depth analysis for the 1-min after the event window for both markets. Table 3 presents the ranking of features by their importance measured as the increase of RMSE after a random permutation of a particular feature (as an average over all non-linear models applied) for each validation sample and the test sample. One can clearly see that the content of the FOMC statement is the first or the second (after PMI) most important predictor of S&P 500 returns just after the event (with the only exception of 2021). However, it does not seem to be as important for the returns of EUR/USD. In this case the surprise component plays a major role, which is in line with expectations. The content of the FOMC statement has only marginal impact on EUR/USD returns in the last testing period. Therefore, our first research hypothesis was only partly confirmed. The value of importance does not show the direction, or more generally, the shape of the relationship between the sentiment score and the return. This can be more closely analyzed with the use of Partial Dependence Plots (PDPs). As the sentiment score is important only in the case of S&P 500 and the value of its importance is relatively high in 2019 and 2020 validation samples, we present PDPs for these periods in Fig. 5. PDPs were calculated for all non-linear algorithms applied and for a linear regression as a reference.

In case of 2019 (top panel), the linear regression indicated very weak negative impact on the sentiment values on S&P 500 returns, while the random forest, SVR radial (two best fitted models in this sample) and xgboost consistently suggest non-linear parabolic shape of relationship with the top up around 0. This seems to be in line with the common saying that “no news is good news”. Neutral sentiment results in the highest predicted returns (ceteris paribus), while with more extreme values of sentiment (either positive or negative) the expected returns are lower. In 2020 (bottom panel) the linear regression suggests lack of impact of FOMC statements on stock market returns. Random forest and xgboost algorithms indicate a non-linear, but completely inverse relationship between the sentiment score and predicted returns. The SVR with a radial kernel, that had the best fit in 2020, confirms the negatively sloping shape of the random forest. This may suggest that the news (especially negative) were reflected in the stock prices already at the moment of the announcement of FOMC statement and the event resulted in some kind of correction of the earlier (over)reaction.

6 Conclusions

The primary aim of this paper was to analyze whether one can accurately predict the reaction of financial markets to the content of FOMC public statements directly after they are made public. We also examined the impact of FOMC communication—more specifically, FOMC statements on financial markets. In our analysis the reaction of financial markets was approximated by the returns of the S&P 500 Index and EUR/USD spot price. We additionally accounted for market expectations regarding the effective Federal Funds Rate, the change in the Federal Funds Target Rate, the Purchasing Managers' Index, and the Consumer Confidence Index. The latter economic indicators are intended to control for attitudes toward the economic situation from two different perspectives—namely, business and individual customers. We proved that the information content of FOMC statements is an important predictor of the financial markets’ reaction directly after the event. In the models explaining the reaction of financial markets in the first minute after the scheduled announcement of the FOMC statement, the sentiment score was the first or the second most important feature, after the surprise component. We also showed that applying non-linear algorithms resulted in better prediction of market reaction due to explaining more of the data variability by catching non-linear relationships. In addition, with the use of XAI tools we identified clear non-linearities in the relationship between the sentiment score and returns just after the event. Last but not least, the predictive accuracy during the COVID pandemic (2020 and 2021) was indeed lower than in the previous year (2019). Taking all the above into account, we confirmed all research hypotheses stated in this article. In addition, based on the predictions we applied a simple event arbitrage strategy. The strategy was slightly profitable (in gross terms) only for EUR/USD and for predictions resulting from non-linear algorithms and only in the first minute after the announcement.

We see several possible extensions, and they are ones we will work on in future research. The first aspect regards sentiment extraction. The method we utilized, as indicated before, has several limitations. Possible improvement in results could be obtained by creating a customized dictionary for sentiment extraction from FOMC statements based on FOMC communication. Another option is to label the statements and use a classification algorithm best tailored to the FOMC’s language. The second aspect regards designing a system that will be fully efficient and enable us to obtain real time results.

Data availability

The dataset used in the empirical part is published in open repository: https://doi.org/10.6084/m9.figshare.24185505.v1.

Change history

27 November 2023

A Correction to this paper has been published: https://doi.org/10.1007/s42521-023-00100-1

Notes

These unscheduled meetings or conference calls always happen when there is a need for immediate action. For example, in 2020 there were two unscheduled meetings on March 3 (statement released at 10:00 a.m. EST) and March 15 (statement released at 5:00 p.m. EDT).

All listed forms of communications can be found on The Federal Reserve website: https://www.federalreserve.gov/newsevents.htm (access date: 26.06.2020). Summary of Economic Projections (SEP) are released four times a year followed by a press conference by the chair. The minutes of the scheduled meetings are released three weeks after the date of a policy decision (this describes the state of affairs since February 2, 2005).

Federal Reserve press release on February 4, 1994 (https://www.federalreserve.gov/fomc/19940204default.htm; access date: 26.06.2020).

Poole and Rasche (2003). The impact of changes in FOMC disclosure practices on the transparency of monetary policy: are markets and the FOMC better "synched"? Federal Reserve Bank of St. Louis Review, 85 (https://files.stlouisfed.org/files/htdocs/publications/review/03/01/PooleRasche.pdf; access date: 26.06.2020).

Available at https://sraf.nd.edu/textual-analysis/resources (access date: 26.06.2020).

FOMC Statements are available at https://www.federalreserve.gov/monetarypolicy/fomccalendars.htm (access date: 21.07.2023).

Excluded statements were released on May 9, 2010 (Sunday) and March 15, 2020 (Sunday).

Historical daily data on 30-day federal funds futures are available at https://www.investing.com/rates-bonds/cbot-30-day-federal-funds-comp-c1-futures-historical-data (access date: 21.07.2023).

Data on PMI are available at https://www.investing.com/economic-calendar/ism-manufacturing-pmi-173 and data on CCI are available at https://www.investing.com/economic-calendar/cb-consumer-confidence-48 (access date: 21.07.2023).

References

Aldridge, I. (2013). High-frequency trading: a practical guide to algorithmic strategies and trading systems (Vol. 604). John Wiley & Sons.

Bernanke, B. S., & Kuttner, K. N. (2005). What explains the stock market’s reaction to Federal Reserve policy? The Journal of Finance, 60(3), 1221–1257.

Boser, B. E., Guyon, I. M., & Vapnik, V. N. (1992, July). A training algorithm for optimal margin classifiers. In Proceedings of the fifth annual workshop on Computational learning theory (pp. 144–152).

Boukus, E., & Rosenberg, J. V. (2006). The information content of FOMC minutes. Available at SSRN 922312.

Cannon, S. (2015). Sentiment of the FOMC: Unscripted (p. 5). Economic Review-Federal Reserve Bank of Kansas City.

Chen, T., & Guestrin, C. (2016, August). Xgboost: A scalable tree boosting system. In Proceedings of the 22nd acm sigkdd international conference on knowledge discovery and data mining (pp. 785–794).

Cieslak, A., Morse, A., & Vissing-Jorgensen, A. (2019). Stock returns over the FOMC cycle. The Journal of Finance, 74(5), 2201–2248.

Correa, R., Garud, K., Londono, J. M., & Mislang, N. (2017). Constructing a dictionary for financial stability. Board of Governors of the Federal Reserve System (US), 6(7), 9.

Farka, M., & Fleissig, A. R. (2013). The impact of FOMC statements on the volatility of asset prices. Applied Economics, 45(10), 1287–1301.

Gidofalvi, G., & Elkan, C. (2001). Using news articles to predict stock price movements. University of California.

Gu, C., Chen, D., Stan, R., & Shen, A. (2022). It is not just What you say, but How you say it: Why tonality matters in central bank communication. Journal of Empirical Finance, 68(C), 216–231.

Guyon, I., Boser, B., & Vapnik, V. (1993). Automatic capacity tuning of very large VC-dimension classifiers. In Advances in neural information processing systems (pp. 147–155).

Hayo, B., Kutan, A., & Neuenkirch, M. (2008). Communicating with many tongues: The impact of FOMC Members Speeches on the US Financial Market’s Returns and Volatility. In: Paper to be presented on the 40th Money, Macro and Finance Research Group Annual Conference, September (pp. 10–12).

James, G., Witten, D., Hastie, T., & Tibshirani, R. (2013). An introduction to statistical learning. Springer, 112, 18.

Jegadeesh, N., & Wu, D. A. (2017). Deciphering Fedspeak: The information content of FOMC meetings. Available at SSRN 2939937.

Kuttner, K. N. (2001). Monetary policy surprises and interest rates: Evidence from the Fed funds futures market. Journal of Monetary Economics, 47(3), 523–544.

Leo, B. (2001). Random forests. Machine Learning, 45(1), 5–32. https://doi.org/10.1023/A:1010933404324

Loughran, T., & McDonald, B. (2016). Textual analysis in accounting and finance: A survey. Journal of Accounting Research, 54(4), 1187–1230.

Lucca, D. O., & Trebbi, F. (2009). Measuring central bank communication: an automated approach with application to FOMC statements (No. w15367). National Bureau of Economic Research.

Mazis, P., & Tsekrekos, A. (2017). Latent semantic analysis of the FOMC statements. Review of Accounting and Finance, 16(2), 179–217. https://doi.org/10.1108/raf-10-2015-0149

Robert, T. (1996). Regression shrinkage and selection via the LASSO. Journal of the Royal Statistical Society Series B (methodological), 58(1), 267–288. ISSN 00359246.

Rosa, C. (2012). How “Unconventional” are large-scale asset purchases? The Impact of Monetary Policy on Asset Prices. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.2053640

Schumaker, R. P., Zhang, Y., Huang, C. N., & Chen, H. (2012). Evaluating sentiment in financial news articles. Decision Support Systems, 53(3), 458–464.

Smola Alex, J., & Schölkopf, B. (2004). A tutorial on support vector regression. Statistics and Computing, 14(3), 199–222. https://doi.org/10.1023/B:STCO.0000035301.49549.88. ISSN 0960-3174.

Tadle, RC. (2022). FOMC minutes sentiments and their impact on financial markets. Journal of Economics and Business, Elsevier, vol. 118(C).

Vapnik, V. N. (1995). The Nature of Statistical Learning Theory. Springer-Verlag. ISBN 0387945598.

Vapnik, V., Golowich, S. E., & Smola, A. J. (1997). Support vector method for function approximation, regression estimation and signal processing. In: Advances in neural information processing systems (pp. 281–287).

Yang, Y., U. Y., M. C. S., & Huang, A. (2020). Finbert: A pretrained language model for financial communications. arXiv preprint arXiv:2006.08097.

Zadeh, R. B., & Zollmann, A. (2009). Predicting market-volatility from Federal Reserve Board meeting minutes NLP for finance.

Acknowledgements

Our research is based upon work from the Horizon 2020 FinTech project (Grant agreement ID: 825215) and from the COST Action 19130, supported by COST (European Cooperation in Science and Technology), www.cost.eu

Author information

Authors and Affiliations

Contributions

The authors confirm contribution to the paper as follows: study conception and design: Piotr Wójcik; data collection: Ewelina Osowska; analysis and interpretation of results: Piotr Wójcik and Ewelina Osowska; draft manuscript preparation: Ewelina Osowska. All authors reviewed the results and approved the final version of the manuscript.

Corresponding author

Ethics declarations

Conflict of interest

Both authors declare that they have no conflicts of interest.

Appendix

Appendix

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Osowska, E., Wójcik, P. Predicting the reaction of financial markets to Federal Open Market Committee post-meeting statements. Digit Finance 6, 145–175 (2024). https://doi.org/10.1007/s42521-023-00096-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s42521-023-00096-8