Abstract

Animal behaviour is often characterised by periodic patterns such as seasonality or diel variation. Such periodic variation can be comprehensively studied from the increasingly detailed ecological time series that are nowadays collected, e.g. using GPS tracking. Within the class of hidden Markov models (HMMs), which is a popular tool for modelling time series driven by underlying behavioural modes, periodic variation is commonly modelled by including trigonometric functions in the linear predictors for the state transition probabilities. This parametric modelling can be too inflexible to capture complex periodic patterns, e.g. featuring multiple activity peaks per day. Here, we explore an alternative approach using penalised splines to model periodic variation in the state-switching dynamics of HMMs. The challenge of estimating the corresponding complex models is substantially reduced by the expectation–maximisation algorithm, which allows us to make use of the existing machinery (and software) for nonparametric regression. The practicality and potential usefulness of our approach is demonstrated in two real-data applications, modelling the movements of African elephants and of common fruit flies.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Ecological time series data are often characterised by periodicities such as diel variation, i.e. recurrent patterns over a 24-h period. Ignoring periodic variation can invalidate statistical inference, e.g. standard errors might be underestimated due to residual autocorrelation [1]. Perhaps more importantly, adequately modelling such periodic variation is crucial to comprehensively understand behavioural dynamics, for example to identify times of day at which individuals tend to be most active, allowing inference on a species’ temporal niche [2, 3].

Fortunately, technological advances in, e.g. GPS tracking, accelerometry, and computer vision allow ecologists to study diel variation in much more detail than was previously possible [4, 5]. One popular tool for modelling ecological time series data and the periodicities therein is given by the class of hidden Markov models (HMMs), which links the observed ecological data (e.g. step lengths and turning angles in animal movement) to underlying non-observable states (e.g. resting, foraging, travelling) [6].

In the existing literature, two different approaches have been used to infer periodic variation using HMMs. First, relatively basic HMMs can be used to infer an animal’s behavioural sequence (state decoding), based on which diel variation can be investigated using simple visualisations [5, 7, 8] or an additional regression analysis [9, 10]. From the statistical perspective, such a two-stage approach will often not be ideal: the uncertainty in the state allocation is not propagated, statistical inference on the periodic effects is not straightforward, and the dimension of the state space may be overestimated due to the misspecification of the basic model (see [11] for the latter). Second, periodic variation is nowadays often directly incorporated in HMMs using trigonometric modelling, for instance by relating the state transition probabilities to the hour of the day using sine and cosine functions with 24-h periods [12,13,14,15,16]. While this will often be sufficient, such a parametric modelling of the periodic effect may lack flexibility to capture complex periodic variation, for example with multiple activity peaks over the day. In principle, this limitation can be overcome by including multiple sine and cosine basis functions, with different wavelengths [17,18,19,20]. However, this can lead to numerical instability, and it can be tedious to select an adequate order.

In this contribution, we explore a more flexible, nonparametric estimation of periodicities in the state-switching dynamics of an HMM using cyclic splines. Thereby we avoid making any a priori assumptions on the functional shape of the periodic effect, thus allowing to infer arbitrarily complex behavioural diel patterns. For inference, we devise an expectation–maximisation (EM) algorithm, thereby isolating the estimation of the nonparametric periodic effect. This allows us to exploit the powerful machinery available for nonparametric (regression) modelling, specifically P-splines or other smoothing methods implemented in existing software packages such as mgcv [21]. The feasibility of the proposed approach is illustrated in two case studies, where we investigate diel variation of African elephants (Loxodonta africana) and of common fruit flies (Drosophila melanogaster).

2 Methods

2.1 Notation and Basics

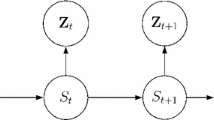

HMMs are used to model time series data \(x_1,\ldots ,x_T\) (e.g. step lengths of an animal) driven by underlying states \(s_1,\ldots ,s_T\) (e.g. the behavioural modes). In a basic HMM, the latent state process is assumed to be a Markov chain with N states, characterised by the initial state probabilities

\(j=1,\ldots ,N\), and the transition probability matrix (t.p.m.)

\(i,j=1,\ldots ,N\), \(t=2,\ldots ,T\). The state active at time t selects which of N possible state-dependent distributions \(f_1,\ldots ,f_N\) generates the observation \(x_t\):

Covariates—including time of day—can be included in either the state-dependent distributions \(f_1,\ldots ,f_N\) or the state transition probabilities \(\gamma _{ij}^{(t)}\). We focus on the latter, as in ecological applications the main interest typically lies in the state process and its drivers, including temporal effects.

2.2 Trigonometric Modelling of Time-of-Day Variation

Including covariates in the state process amounts to regression modelling within the HMM, where covariates affect the state transition probabilities and hence, the behavioural decisions made by an animal. Specifically, if at time \(t-1\) the animal is in state i, the categorical distribution of states at time t is given by the vector \((\gamma _{i1}^{(t)},\ldots ,\gamma _{iN}^{(t)})\). The covariate-dependence of this categorical distribution is typically modelled using a multinomial logistic regression, which is achieved by applying the inverse multinomial logit link to each row i of the t.p.m.,

defining \(\tau _{ii} ^{(t)}=0\) (reference category). The linear predictors \(\tau _{ij}^{(t)}\) can include, inter alia, simple linear effects, polynomial effects, interaction terms, and random effects. Without the latter (for ease of notation), the general form of the linear predictor for \(\gamma _{ij}^{(t)}\) is

where \(z_{tk}\) are covariates. When the aim is to model periodic patterns in the state-switching dynamics, the linear predictor can be extended by including trigonometric basis functions with the desired periodicity. For example, for modelling diel variation in a time series with hourly data, a possible simple form of the linear predictor is

with the additional coefficients \(\omega ^{(ij)}\) and \(\psi ^{(ij)}\) to be estimated alongside \(\varvec{\beta }^{(ij)}\). General periodicities are modelled analogously, replacing the 24 in the denominator by the period length (i.e. the number of sequential observations before completing one period).

With only two harmonics, the flexibility of the periodic component of the linear predictor is somewhat limited: for example, this formulation implies that the periodic component has only one maximum turning point (such that patterns with multiple activity peaks throughout a day may not be adequately captured). The flexibility can be increased by including additional trigonometric functions with different wavelengths:

see for example [19, 20]. By increasing K, arbitrary (smooth) modelling of the periodic effect can be achieved. However, when complex periodic patterns are to be modelled, it can be tedious to select an adequate order K, with the risk of overfitting looming. It may then be more straightforward to avoid making any assumptions on the functional shape of the periodic effect, instead using nonparametric smoothing methods. Such an approach to estimating periodic effects will be presented and explored in the following.

2.3 Cyclic Splines for Modelling Time-of-Day Variation

We now consider nonparametric modelling of the periodic effect, replacing the sum of trigonometric basis functions in (2) by a spline. Specifically, we construct this spline as a linear combination of Q basis functions,

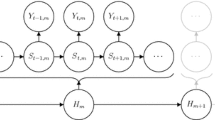

with the scaling coefficients \(a_{1}^{(ij)},\ldots ,a_Q^{(ij)}\) to be estimated. We use cubic B-spline basis functions \(B_1,..., B_Q\), which are easy to compute and yield visually smooth functions. To enforce the desired periodicity, these are wrapped at the boundaries of the support [21]; see Fig. 1 for an illustration with \(Q=8\) and period length 24 h—again general periodicities are modelled analogously.

In practice, a large Q (e.g. 20) is typically used to guarantee sufficient flexibility. Overfitting is avoided by including a penalty on the sums of squared differences between the coefficients \(a_q^{(ij)}\) associated with adjacent B-splines—an approach commonly referred to as P-spline modelling, cf. [22]. While strictly speaking, this model formulation is still parametric, it is commonly labelled as a nonparametric approach because with a large Q the modelling flexibility is effectively unlimited, and there is no meaningful interpretation of the coefficients \(a_q^{(ij)}\).

2.4 EM-Based Estimation of HMMs with Cyclic Splines

The model formulation presented in the previous section effectively involves nonparametric regression modelling within HMMs. For example, in case of \(N=2\) states, the model features a nonparametric logistic regression for each of the state-switching probabilities \(\gamma _{12}^{(t)}\) and \(\gamma _{21}^{(t)}\) (see Eq. (1)). For such nonparametric regression modelling, the inferential machinery (including software packages) is well-established. Therefore, we apply the expectation–maximisation (EM) algorithm to isolate the estimation of the logistic regression component from the estimation of the other parameters of the HMM, in particular those associated with the state-dependent process. This allows us to exploit the tools available for nonparametric logistic regression modelling.

To set up the EM algorithm, we consider the complete-data likelihood (CDLL) of the HMM, i.e. the joint log-likelihood of the observations and the states,

with \(\varvec{\theta }\) the set of parameters necessary to define \(\delta _j^{(1)}\), \(\varvec{\Gamma }^{(t)}\), and the state-dependent distributions \(f_j(x)\). Each iteration of the EM algorithm involves an E-step, replacing all functions of the unobserved states in the CDLL by their conditional expectations (given the data and the current parameter values), and an M-step, optimising the resulting CDLL with respect to \(\varvec{\theta }\). In the case of HMMs, the appeal of the EM algorithm lies in the fact that the M-step neatly splits into several separate optimisation problems—namely one for each the initial distribution, the t.p.m., and the state-dependent distributions—which we exploit below to conveniently estimate the cyclic spline component.

To apply the E-step, we define the indicator variables

and

and rewrite the CDLL as

In the E-step, the indicator variables are then replaced by their conditional expectations

and

with \(\varvec{\theta }\) the current guess of the parameter vector. These conditional expectations are calculated using the standard forward and backward recursions (see [23]).

The M-step then involves optimising the CDLL (5), with \({u}_i(t)\) and \({v}_{ij}(t)\) replaced by \({\hat{u}}_i(t)\) and \({\hat{v}}_{ij}(t)\), respectively, with respect to the parameter vector \(\varvec{\theta }\). The updated estimates of the initial state distribution as well as the state-dependent distribution are obtained as comprehensively described in [23]. For the particular model formulation considered in this contribution, the interest (and challenge) lies in the update of the parameters that affect the state transition probabilities, i.e. the second term in (5),

The first summation in (6) corresponds to the N rows of the t.p.m., each of which implies a categorical regression model for the transition to the next state. We can estimate the associated parameters of each of these regressions separately. For example, for \(N=2\), (6) becomes

Each of these two terms is the log-likelihood of a logistic regression model. For example, the first term can be rewritten as

In logistic regression terminology, the sum \(\sum _{t=2}^{T} {v}_{12}(t)\) gives the number of “successes” (here, the number of switches from state 1 to state 2), and \(\sum _{t=2}^{T} {v}_{11}(t)\) is the number of “failures” (here the number of instances when the process remains in state 1). Within EM, the indicator variables \({v}_{ij}(t)\) are replaced by their conditional expectations \({\hat{v}}_{ij}(t)\), such that (7) becomes a weighted log-likelihood.

The time-varying transition probability \(\gamma _{12}^{(t)}\) in (7) is modelled using cyclic P-splines, see (1) and (3). As described in Sect. 2.3, a wiggliness penalty is added to the weighted log-likelihood, for example

with \(\triangle ^2\) denoting the second-order difference operator and \(\lambda _i\) the (state-dependent) smoothing penalty (see [22]). The estimation of this weighted nonparametric logistic regression can conveniently be conducted using well-established machinery, including existing software. In the case studies below, we implemented this part of the M-step in the EM algorithm using the mgcv package in R [21].

The updated parameter estimates are then used in the E-step of the next iteration. The E and M steps are repeated until a convergence criterion defined by the user is reached [24], e.g. that the difference between the likelihood values obtained in two consecutive iterations is below some threshold. This iterative scheme identifies a (local) maximum of the likelihood function. To increase the chances of finding the global maximum, several initial starting values for \(\varvec{\theta }\) should be tested.

3 Case Studies

3.1 African Elephant

We consider hourly GPS data collected for an African elephant in Etosha National Park, Namibia, from October 2008 to August 2010. The data are available from the Movement Bank Repository [25, 26], cf. [27]. From the positional data, we calculate the Euclidean step lengths as well as the turning angles between consecutive compass directions. From these two metrics, we aim to investigate diel patterns in the elephant’s behaviour. The empirical step length distributions throughout the day indicate a relatively complex diel variation with two activity modes, which may be difficult to adequately model using parametric periodic effects (Fig. 2).

We model the data using 2-state HMMs with gamma and von Mises distributions for the step lengths and turning angles, respectively, assuming conditional independence of the two variables, given the states [28, 29]. For modelling diel variation in the state-switching dynamics, we consider the cyclic P-spline approach (using the default options implemented in mgcv), the trigonometric approach (2) with \(K=1,2\) and (3), and, as an additional benchmark, a model with homogeneous Markov chain (i.e. no diel variation). All fitted models feature an “encamped” state with relatively short step lengths and frequent reversals in direction (state 1) and an “exploratory” state with longer steps and higher persistence in direction (state 2)—see Fig. 6 in Appendix.

Estimated transition probabilities of the elephant as a function of time of day, for the different HMMs considered. For the P-spline model, the pointwise 95% confidence intervals (CIs) based on the Bayesian posterior covariance matrix, as provided by mgcv, are shown. The other CIs are omitted for visual clarity

Figure 3 displays the time-varying probabilities of switching states (left panel: from state 1 to state 2, right panel: vice versa) as estimated under the nonparametric as well as the parametric approach. All models detect a reduction in exploratory activity during the night. However, the flexible P-spline approach additionally captures a bimodal diel variation, with more frequent switching to the exploratory mode in the early morning hours but also in the early afternoon. In contrast, the commonly used trigonometric effect modelling with \(K=1\) (i.e. one sine and one cosine basis function) is not sufficiently flexible to identify this bimodality. When increasing the order to \(K=2\), the bimodality can be identified, however, only with \(K=3\) the parametric approach produces results similar to those obtained using splines (thus indicating that even with \(K=2\) the parametric model might be too inflexible). Furthermore, the proportion of time spent in the exploratory state, for each time of day calculated based on the Viterbi-decoded states, varies notably across the five models fitted (see Fig. 4). This underlines the importance of adequately modelling diel variation, as inflexible models can invalidate inference on the state process.

To formally compare spline-based models with parametric alternatives using trigonometric base functions, we consult the Akaike information criterion (AIC) and the Bayesian information criterion (BIC; see Table 1). The AIC and the BIC favour the trigonometric models with \(K=5\) and \(K=3\), respectively, with the spline-based approach arriving at a similarly complex model as measured by the effective degrees of freedom (edf). Notably, this demonstrates that the penalised spline approach corresponds to data-driven model selection since the choice of the wiggliness penalty \(\lambda \) aims at achieving a favourable balance between underfitting and overfitting.

3.2 Common Fruit Flies

In the second case study, we consider the locomotor activity of laboratory wild type Drosophila melanogaster (iso31) [30]. We collected 2- to 3-days-old male flies and trained them individually to a standard 12-h-light and 12-h-dark condition (LD) for 4.5 days in locomotor tubes. Subsequently, we subjected them to 6 days of constant darkness (DD). The temperature was kept constant (25\(^{\circ }\)C). During these 10 days, locomotor activity was recorded using the Drosophila Activity Monitor (DAM) system (TriKinetics Inc), by counting the times each fly interrupts the infrared beam passing the middle of the locomotor tube. We consider two time series—one under light condition LD and the other under condition DD—for each of 15 individuals. Each observation is the count of beams crossed over a period of 30 min.

The time series of half-hourly counts are modelled using a 2-state HMM with negative binomial state-dependent distributions. For the state transition probabilities, we consider the same time-varying predictors as in the elephant example, additionally allowing for different periodic effects under the two light conditions. The fitted models’ states are associated with low and high activity, with state-dependent mean counts of 2.7 and 54.9, respectively, obtained for the spline-based model (see Fig. 7 in Appendix.).

Model-implied probability of the fruit flies occupying the high-activity state for conditions LD (left) and DD (right), as implied under the different models fitted. For the P-spline model, the pointwise 95% CIs are shown, obtained via Monte Carlo simulation using the Bayesian posterior covariance matrix provided by mgcv. The other CIs are omitted for visual clarity. Horizontal bars indicate the light–dark cycle (LD) in black and white, while under constant darkness (DD), the previous times of light are indicated in grey

Figure 5 shows the time-varying probability of occupying the high-activity state as obtained for the different models considered. Note that these are effectively summary statistics implied by the time-varying t.p.m., which are shown here to facilitate the comparison between the two light conditions. The results emphasise the importance of allowing for sufficient modelling flexibility in the periodic effects, as the commonly used approach with only one sine and one cosine basis function fails to capture several key characteristics: (1) the bimodal activity pattern over the course of the day; (2) the near-certain occupancy of the high-activity state in the evening hours (and of the low-activity state during the night) in the DD condition; (3) the fact that the first activity peak is less pronounced, and the second more pronounced, in the DD condition. The other three models—i.e. those with trigonometric effect modelling and either \(K=2\) or \(K=3\) as well as the spline-based model—all yield similar results. The slight midday peak only revealed by the spline-based approach—as well as by trigonometric models with \(K\ge 4\) (not displayed in the figure)—was also found in another study on activity patterns of fruit flies, albeit under varying temperature conditions [31]. Furthermore, the model comparison shows that the spline-based approach leads to a similar fit as the trigonometric approach, with the BIC favouring the latter with \(K=4\) (see Table 2).

4 Conclusion

Periodic variation in time series data is often of key interest but can be challenging to adequately incorporate in state-switching models. To reveal potentially complex patterns, e.g. multiple activity peaks throughout the day, we explored a flexible nonparametric approach using cyclic P-splines. As illustrated in two case studies, such flexibility in the modelling of periodic variation can uncover relevant patterns that may otherwise go unnoticed, emphasising the potential usefulness of the approach, in particular in settings where periodic variation is of primary interest (cf. [32,33,34]).

We implemented the HMM with cyclic P-splines building on the mgcv functionality within the EM algorithm. However, this is not the only option for conducting inference for such a model. In particular, optimisation of the HMM’s marginal likelihood, obtained by integrating out the spline coefficients using the Laplace approximation [35], has recently been explored and is already implemented in the immensely flexible R package hmmTMB [36]. Moreover, both hmmTMB and the presented EM algorithm are not limited to P-spline modelling but could also incorporate other functional relationships within HMMs, such as random effects or more complex smoothing functions provided by mgcv.

The manifold possibilities of flexibly modelling covariate effects further complicate the already difficult task of model selection in HMMs, which is aggravated by the interplay of the number of states and the modelling of the state process [37]. Although information criteria offer some guidance in identifying an adequately complex model, additional considerations regarding interpretability and computational costs need to be taken into account. For example, even if a spline-based model fits the data slightly better than a simpler parametric one, this advantage may be outweighed by the additional computational burden. Therefore, HMMs demand a holistic approach to model selection, that is to pragmatically balance goodness of fit and study aims.

In practice, nonparametric modelling of periodic variation allows to investigate temporal patterns without making any restrictive assumptions a priori. When used as an exploratory tool, the approach may of course also show that simple trigonometric modelling is sufficient. In both case studies presented in this contribution, information criteria did indeed favour trigonometric modelling of the periodic variation, though only when using considerably more basis functions than are commonly applied in ecological modelling. Selecting an adequate number of basis functions can be tedious in practice, such that the spline-based approach, with its automated optimisation of the bias-variance trade-off via penalised likelihood, may sometimes be more convenient to implement. In any case, incorporating the required flexibility to capture periodic variation—whether using nonparametric or parametric modelling—is crucial for guaranteeing valid inference on behavioural processes.

References

Bell WR, Hillmer SC (1983) Modeling time series with calendar variation. J Am Stat Assoc 78(383):526–534

Albrecht M, Gotelli N (2001) Spatial and temporal niche partitioning in grassland ants. Oecologia 126:134–141

Hertel AG, Swenson JE, Bischof R (2017) A case for considering individual variation in diel activity patterns. Behav Ecol 28(6):1524–1531

Baktoft H, Aarestrup K, Berg S, Boel M, Jacobsen L, Jepsen N, Koed A, Svendsen JC, Skov C (2012) Seasonal and diel effects on the activity of northern pike studied by high-resolution positional telemetry. Ecol Freshw Fish 21(3):386–394

Schwarz JF, Mews S, DeRango EJ, Langrock R, Piedrahita P, Páez-Rosas D, Krüger O (2021) Individuality counts: a new comprehensive approach to foraging strategies of a tropical marine predator. Oecologia 195:313–325

McClintock BT, Langrock R, Gimenez O, Cam E, Borchers DL, Glennie R, Patterson TA (2020) Uncovering ecological state dynamics with hidden Markov models. Ecol Lett 23(12):1878–1903

van de Kerk M, Onorato DP, Criffield MA, Bolker BM, Augustine BC, McKinley SA, Oli MK (2015) Hidden semi-Markov models reveal multiphasic movement of the endangered Florida panther. J Anim Ecol 84(2):576–585

van Beest FM, Mews S, Elkenkamp S, Schuhmann P, Tsolak D, Wobbe T, Bartolino V, Bastardie F, Dietz R, von Dorrien C, Galatius A, Karlsson O, McConnell B, Nabe-Nielsen J, Olsen MT, Teilmann J, Langrock R (2019) Classifying grey seal behaviour in relation to environmental variability and commercial fishing activity—a multivariate hidden Markov model. Sci Rep 9(1):5642

Broekhuis F, Grünewälder S, McNutt JW, Macdonald DW (2014) Optimal hunting conditions drive circalunar behavior of a diurnal carnivore. Behav Ecol 25(5):1268–1275

Hart T, Mann R, Coulson T, Pettorelli N, Trathan P (2010) Behavioural switching in a central place forager: patterns of diving behaviour in the macaroni penguin (Eudyptes chrysolophus). Mar Biol 157(7):1543–1553

Li M, Bolker BM (2017) Incorporating periodic variability in hidden Markov models for animal movement. Mov Ecol 5(1):1

Towner AV, Leos-Barajas V, Langrock R, Schick RS, Smale MJ, Kaschke T, Jewell OJD, Papastamatiou YP (2016) Sex-specific and individual preferences for hunting strategies in white sharks. Funct Ecol 30(8):1397–1407

Leos-Barajas V, Photopoulou T, Langrock R, Patterson TA, Watanabe YY, Murgatroyd M, Papastamatiou YP (2017) Analysis of animal accelerometer data using hidden Markov models. Methods Ecol Evol 8(2):161–173

Bacheler NM, Michelot T, Cheshire RT, Shertzer KW (2019) Fine-scale movement patterns and behavioral states of gray triggerfish Balistes capriscus determined from acoustic telemetry and hidden Markov models. Fish Res 215:76–89

Beumer LT, Pohle J, Schmidt NM, Chimienti M, Desforges J-P, Hansen LH, Langrock R, Pedersen SH, Stelvig M, van Beest FM (2020) An application of upscaled optimal foraging theory using hidden Markov modelling: year-round behavioural variation in a large arctic herbivore. Mov Ecol 8(1):25

Farhadinia MS, Michelot T, Johnson PJ, Hunter LTB, Macdonald DW (2020) Understanding decision making in a food-caching predator using hidden Markov models. Mov Ecol 8(1):9

Damsleth E, Spjøtvoll E (1982) Estimation of trigonometric components in time series. J Am Stat Assoc 77(378):381–387

Grover JP, Chrzanowski TH (2006) Seasonal dynamics of phytoplankton in two warm temperate reservoirs: association of taxonomic composition with temperature. J Plankton Res 28(1):1–17

Langrock R, Zucchini W (2011) Hidden Markov models with arbitrary state dwell-time distributions. Comput Stat Data Anal 55(1):715–724

Papastamatiou YP, Watanabe YY, Demšar U, Leos-Barajas V, Bradley D, Langrock R, Weng K, Lowe CG, Friedlander AM, Caselle JE (2018) Activity seascapes highlight central place foraging strategies in marine predators that never stop swimming. Mov Ecol 6(1):9

Wood SN (2017) Generalized additive models: an introduction with R, 2nd edn. Chapman and Hall, Boca Raton

Eilers PHC, Marx BD (1996) Flexible smoothing with B-splines and penalties. Stat Sci 11(2):89–121

Zucchini W, MacDonald IL, Langrock R (2016) Hidden Markov models for time series: an introduction using R, 2nd edn. Chapman and Hall, Boca Raton, FL

Dempster AP, Laird NM, Rubin DB (1977) Maximum likelihood from incomplete data via the EM algorithm. J R Stat Soc B 39(1):1–22

Abrahms B (2017) Data from “Suite of simple metrics reveals common movement syndromes across vertebrate taxa’’. Movebank Data Repos. https://doi.org/10.5441/001/1.hm5nk220

Abrahms B, Seidel D, Dougherty E, Hazen E, Bograd S, Wilson A, McNutt J, Costa D, Blake S, Brashares J, Getz W (2017) Suite of simple metrics reveals common movement syndromes across vertebrate taxa. Mov Ecol 5:12

Tsalyuk M, Kilian W, Reineking B, Getz WM (2019) Temporal variation in resource selection of African elephants follows long-term variability in resource availability. Ecol Monogr 89(2):01348

Langrock R, King R, Matthiopoulos J, Thomas L, Fortin D, Morales JM (2012) Flexible and practical modeling of animal telemetry data: hidden Markov models and extensions. Ecology 93(11):2336–2342

Michelot T, Langrock R, Patterson TA (2016) moveHMM: an R package for the statistical modelling of animal movement data using hidden Markov models. Methods Ecol Evol 7(11):1308–1315

Koh K, Joiner WJ, Wu MN, Yue Z, Smith CJ, Sehgal A (2008) Identification of SLEEPLESS, a sleep-promoting factor. Science 321(5887):372–376

Vanin S, Bhutani S, Montelli S, Menegazzi P, Green EW, Pegoraro M, Sandrelli F, Costa R, Kyriacou CP (2012) Unexpected features of Drosophila circadian behavioural rhythms under natural conditions. Nature 484(7394):371–375

Kronfeld-Schor N, Dayan T (2003) Partitioning of time as an ecological resource. Annu Rev Ecol Evol Syst 34(1):153–181

Hut RA, Kronfeld-Schor N, van der Vinne V, De la Iglesia H (2012) In search of a temporal niche: environmental factors. Prog Brain Res 199:281–304

Davimes JG, Alagaili AN, Bertelsen MF, Mohammed OB, Hemingway J, Bennett NC, Manger PR, Gravett N (2017) Temporal niche switching in Arabian oryx (Oryx leucoryx): seasonal plasticity of 24 h activity patterns in a large desert mammal. Physiol Behav 177:148–154

Kristensen K, Nielsen A, Berg CW, Skaug H, Bell BM (2016) TMB: automatic differentiation and Laplace approximation. J Stat Softw 70:1–21

Michelot T (2022) hmmTMB: hidden Markov models with flexible covariate effects in R. arXiv:2211.14139

Pohle J, Langrock R, van Beest FM, Schmidt NM (2017) Selecting the number of states in hidden Markov models: pragmatic solutions illustrated using animal movement. J Agric Biol Environ Stat 22(3):270–293. https://doi.org/10.1007/s13253-017-0283-8

Acknowledgements

This research was funded by the German Research Foundation (DFG) as part of the SFB TRR 212 (NC\(^3\)); Project Numbers 316099922, 396782756 and 471672756.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article is part of the special issue "Ecological Statistics" guest edited by Tiago Marques, Charlotte Jones-Todd, Ben Stevenson, Theo Michelot, and Ben Swallow.

Supplementary Information

Below is the link to the electronic supplementary material.

Appendix A

Appendix A

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Feldmann, C.C., Mews, S., Coculla, A. et al. Flexible Modelling of Diel and Other Periodic Variation in Hidden Markov Models. J Stat Theory Pract 17, 45 (2023). https://doi.org/10.1007/s42519-023-00342-7

Accepted:

Published:

DOI: https://doi.org/10.1007/s42519-023-00342-7