Abstract

We consider the problem of designing experiments for the comparison of two regression curves describing the relation between a predictor and a response in two groups, where the data between and within the group may be dependent. In order to derive efficient designs we use results from stochastic analysis to identify the best linear unbiased estimator (BLUE) in a corresponding continuous model. It is demonstrated that in general simultaneous estimation using the data from both groups yields more precise results than estimation of the parameters separately in the two groups. Using the BLUE from simultaneous estimation, we then construct an efficient linear estimator for finite sample size by minimizing the mean squared error between the optimal solution in the continuous model and its discrete approximation with respect to the weights (of the linear estimator). Finally, the optimal design points are determined by minimizing the maximal width of a simultaneous confidence band for the difference of the two regression functions. The advantages of the new approach are illustrated by means of a simulation study, where it is shown that the use of the optimal designs yields substantially narrower confidence bands than the application of uniform designs.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The application of optimal or efficient designs can improve the accuracy of statistical analysis substantially, and meanwhile, there exists a well-established and powerful theory for the construction of (approximate) optimal designs for independent observations, see for example the monographs of Pukelsheim [28] or Fedorov and Leonov [15]. In contrast, the determination of optimal designs for efficient statistical analysis from dependent data is more challenging because the corresponding optimization problems are in general not convex and therefore the powerful tools of convex analysis are not applicable. Although design problems for correlated data have been discussed for a long time (see, for example [2, 26, 30, 31], who use asymptotic arguments to develop continuous but in general non-convex optimization problems in this context), a large part of the discussion is restricted to models with a small number of parameters and we refer to Pázman and Müller [27], Müller and Pázman [25], Dette et al. [7], Kiselak and Stehlík [19], Harman and Štulajter [17], Rodriguez-Diaz [29], Campos-Barreiro and López-Fidalgo [4] and Attia and Constantinescu [1] among others.

Recently, Dette et al. [9] suggest a more systematic approach to the problem and determine (asymptotic) optimal designs for least squares estimation, under the additional assumption that the regression functions are eigenfunctions of an integral operator associated with the covariance kernel of the error process. For more general models Dette et al. [10] propose to construct the optimal design and estimator simultaneously. More precisely, they construct a class of estimators and corresponding optimal designs with a variance converging (as the sample size increases) to the optimal variance in the continuous model. Dette et al. [6] propose an alternative strategy for this purpose. They start with the construction of the best linear unbiased estimator (BLUE) in the continuous model using stochastic calculus and determine in a second step an implementable design, which is “close” to the solution in the continuous model. By this approach these authors are able to provide an easily implementable estimator with a corresponding design which is practically non-distinguishable from the weighted least squares estimate (WLSE) with corresponding optimal design. Their results are applicable for a broad class of linear regression models with various covariance kernels and have recently been extended to the situation, where also derivatives of the process can be observed (see [11]).

Dette and Schorning [12] and Dette et al. [13] propose designs for the comparison of regression curves from two independent samples, where the latter reference also allows for dependencies within the samples. Their work is motivated by applications in drug development, where a comparison between two regression models that describe the relation between a common response and the same covariates for two groups is used to establish the non-superiority of one model to the other or to check whether the difference between two regression models can be neglected. For example, if the similarity between two regression functions describing the dose–response relationships in the groups individually has been established, subsequent inference in drug development could be based on the combined samples such that a more efficient statistical analysis is possible on the basis of the larger population. Because of its importance, several procedures for the comparison of curves have been investigated in linear and nonlinear models (see [3, 8, 16, 20, 21, 23, 24], among others). Designs minimizing the maximal width of a (simultaneous) confidence band for the difference between the regression curves calculated from two independent groups are determined by Dette and Schorning [12] and Dette et al. [13], who also demonstrate that the use of these designs yields to substantially narrower confidence bands.

While these results refer to independent groups, it is the purpose of the present paper to investigate designs for the comparison of regression curves corresponding to two groups, where the data within the groups and between the groups may be dependent. It will be demonstrated that in most cases simultaneous estimation of the parameters in the regression models using the data from both groups yields to more efficient inference than estimating the parameters in the models corresponding to the different groups separately. Moreover, the simultaneous estimation procedure can never be worse. While this property holds independently of the design under consideration, we subsequently construct efficient designs for the comparison of curves corresponding to not necessarily independent groups and demonstrate its superiority by means of a simulation study.

The remaining part of this paper is organized as follows. In Sect. 2 we introduce the basics and the design problem. Section 3 is devoted to a continuous model, which could be interpreted as a limiting experiment of the discrete model if the sample size converges to infinity. In this model we derive an explicit representation of the BLUE if estimation is performed simultaneously in both groups. In Sect. 4 we develop a discrete approximation of the continuous BLUE by determining the optimal weights for the linear estimator. Finally, the optimal design points are determined such that the maximum width of the confidence band for the difference of the two regression functions is minimal. Section 5 is devoted to a small numerical comparison of the performance of the optimal designs with uniform designs. In particular, it is demonstrated that optimal designs yield substantially narrower confidence bands. In many cases the maximal width of a confidence band from the uniform design is by a factor between 2 and 10 larger than the width of a confidence band from the optimal design.

2 Simultaneous Estimation of Two Regression Models

Throughout this paper we consider the situation of two groups of observations \(Y_{1,1} , \ldots , Y_{1,n}\) and \(Y_{2,1} , \ldots , Y_{2,n}\) at the points \(t_1, \ldots , t_n\) (\(i=1,2\)) where there may exist dependencies within and between the groups. We assume the relation between the response and the covariate t in each group is described by a linear regression model given by

Thus in each group n observations are taken at the same points \(t_1, \ldots , t_n\) which can be chosen in a compact interval, say [a, b], and observations at different points and in different groups might be dependent. The vectors of the unknown parameters \(\theta ^{(1)}\) and \(\theta ^{(2)}\) are assumed to be \(p_1\)- and \(p_2\)-dimensional, respectively, and the corresponding vectors of regression functions \(f_i(t) = (f_{i,1}(t), \ldots , f_{i,p_i}(t))^\top \), \(i=1, 2\), have continuously differentiable linearly independent components.

To address the situation of correlation between the groups, we start with a very simple covariance structure for each group, but we emphasize that all results presented in this paper are correct for more general covariance structures corresponding to Markov processes, see Remark 3.3 for more details. To be precise, let \(\{{\varepsilon }_1(t)| ~t\in [a, b]\}\) and \(\{{\varepsilon }_2(t)| ~t\in [a, b]\}\) denote two independent Brownian motions, such that

denotes the mean value and the covariance of the individual process \(\varepsilon _i\) at the points \(t_{j}\) and \(t_k\), respectively. Let \(\sigma _1, \sigma _2 >0 \), \(\varrho \in (-1, 1)\), denote by \(\varvec{\Sigma }^{1/2}\) the square root of the covariance matrix

and define for \(t\in [a,b]\) the two-dimensional process \( \{ \varvec{\eta }(t)|~ t \in [a,b] \} \) by

where \( \varvec{\varepsilon }(t) =(\varepsilon _1(t) , \varepsilon _2(t))^\top \). Note that \(\varrho \in (-1, 1)\) denotes the correlation between the observations \(Y_1(t_j)\) and \(Y_2(t_j)\) (\(j=1, \ldots , n\)), and that in general the correlation between \(Y_1(t_j)\) and \(Y_2(t_k)\) is given by

if \(t_j,t_k \in [a,b]\), for \(a>0\). If the interval is given by \([a=0, b]\) instead, the correlation between \(Y_1(t_j)\) and \(Y_2(t_k)\) is given by (2.5) if \(t_j, t_k \in (0, b]\), whereas \(\text{ Corr } (Y_1(0), Y_2(t_j))= \text{ Corr } (Y_1(t_j), Y_2(0)) = 0\) for \(t_j \in [0, b]\).

Considering the two groups individually results in proper (for example weighted least squares) estimators of the parameters \(\theta ^{(1)}\) and \(\theta ^{(2)}\). However, this procedure ignores the correlation between the two groups and estimating the parameters \(\theta ^{(1)}\) and \(\theta ^{(2)}\) simultaneously from the data of both groups might result in more precise estimates. In order to define estimators for the parameters \(\theta ^{(1)}\) and \( \theta ^{(2)}\) using the information from both groups we now consider a more general two-dimensional regression model, which on the one hand contains the situation described in the previous paragraph as a special case, but on the other hand also allows us to consider the case, where some of the components in \(\theta _1\) and \( \theta _2\) coincide, see Example 2.2 and Sect. 3.3 for details. To be precise we define the regression model

where two-dimensional observations

are available at points \(t_1, \ldots , t_n \in [a,b]\). In model (2.6) the vector \(\theta = (\vartheta _1, \ldots , \vartheta _p)^\top \) is a p-dimensional parameter and

denotes a \((2\times p)\) matrix containing continuously differentiable regression functions, where the two-dimensional functions \((F_{1,1}(t) , F_{2,1}(t) )^\top , \ldots , (F_{1,p}(t) , F_{2,p}(t) )^\top \) are assumed to be linearly independent.

Example 2.1

The individual models defined in (2.1) are contained in this two-dimensional model. More precisely, defining the \(p=(p_1+ p_2)\)-dimensional vector of parameters \(\theta \) by \(\theta = ((\theta ^{(1)})^\top , (\theta ^{(2)})^\top )^\top \) and the regression function \(\mathbf {F}^\top (t)\) in (2.7) by the rows

it follows that model (2.6) coincides with model (2.1). Moreover, this composite model takes the correlation between the groups into account. In this case the models describing the relation between the variable t and the responses \(Y_1(t)\) and \(Y_2(t)\) do not share any parameters.

Example 2.2

In this example we consider the situation where some of the parameters of the individual models in (2.1) coincide. This situation occurs, for example, if \(Y_1(t)\) and \(Y_2(t)\) represent clinical parameters (depending on time) before and after treatment, where it can be assumed that the effect at time a coincides before and after the treatment. In this case a reasonable model for average effect in the two groups is given by

More generally, we consider the situation where the vectors of the parameters are given by

where \(\theta ^{(0)} \in \mathbb {R}^{p_0}\) denotes the vector of common parameters in both models and vectors \({\tilde{\theta }}^{(1)} \in \mathbb {R}^{p_1 - p_0}\) and \({\tilde{\theta }}^{(2)} \in \mathbb {R}^{p_2 - p_0}\) contain the different parameters in the two individual models. The corresponding regression functions are given by

where the vector \(f^\top _0(t)\) contains the regression functions corresponding to the common parameters in the two models, and \({\tilde{f}_1}^\top (t)\) and \({\tilde{f}_2}^\top (t)\) denote the vectors of regression functions corresponding to the different parameters \({\tilde{\theta }}^{(1)}\) and \({\tilde{\theta }}^{(2)}\), respectively.

Defining the \(p=(p_1+p_2-p_0)\)-dimensional vector of parameters \(\theta \) by \(\theta = (\theta ^{(0)}, {{\tilde{\theta }}}^{(1)}, {{\tilde{\theta }}}^{(2)})\) and the regression function \(\mathbf {F}^\top (t)\) in (2.7) by the rows

it follows that model (2.6) contains the individual models in (2.1), where the regression functions are given by (2.8) and the parameters \(\theta ^{(1)}\) and \(\theta ^{(2)}\) share the parameter \(\theta ^{(0)}\). Moreover, this composite model takes the potential correlation between the groups into account.

3 Continuous Models

It was demonstrated by Dette et al. [6] that efficient designs for dependent data in regression problems can be derived by first considering the estimation problem in a continuous model. In this model there is no optimal design problem as the data can be observed over the full interval [a, b]. However, efficient designs can be determined in two steps. First, one derives the best linear unbiased estimator (BLUE) in the continuous model and, secondly, one determines design points (and an estimator) such that the resulting estimator from the discrete data provides a good approximation of the optimal solution in the continuous model. In this paper we will use this strategy to develop optimal designs for the comparison of regression curves from two (possible) dependent groups. In the present section we discuss a continuous model corresponding to discrete model (2.6), while the second step, the determination of an “optimal” approximation will be postponed to following Sect. 4 .

3.1 Best Linear Unbiased Estimation

To be precise, we consider the continuous version of the linear regression model in (2.6), that is,

where we assume \(0<a<b\) and the full trajectory of the process \(\{ {\varvec{Y}}(t) \mid t\in [a,b]\}\) is observed, \(\{\varvec{\varepsilon }(t)=(\varepsilon _1(t), \varepsilon _2(t))^\top \mid t\in [a,b]\}\) is a vector of independent Brownian motions as defined in (2.2), and the matrix \(\varvec{\Sigma }^{1/2}\) is the square root of the covariance matrix \(\varvec{\Sigma }\) defined in (2.3). Note that we restrict ourselves to an interval on the positive line, because in this case the notation is slightly simpler. But we emphasize that the theory developed in this section can also be applied for \(a=0\), see Remark 3.1 for more details. We further assume that the \((p \times p)\)-matrix

is non-singular.

Theorem 3.1

Consider continuous linear regression model (3.1) on the interval [a, b], \(a >0\), with a continuously differentiable matrix of regression functions \(\mathbf {F}\), a vector \(\{\varvec{\varepsilon }(t)=(\varepsilon _1(t), \varepsilon _2(t))^\top \mid t\in [a,b]\}\) of independent Brownian motions and a covariance matrix \(\varvec{\Sigma }\) defined by (2.3). The best linear unbiased estimator of the parameter \(\theta \) is given by

Moreover, the minimum variance is given by

Proof

Multiplying \( \varvec{{Y}}\) by the matrix \(\varvec{\Sigma }^{-1/2}\) yields a transformed regression model

where \(\varvec{\Sigma }^{-1/2}\) is the inverse of \(\varvec{\Sigma }^{1/2}\), the square root of the covariance matrix \(\varvec{\Sigma }\) defined in (2.3). Note that the components of the vector \(\varvec{\tilde{Y}}\) are independent, and consequently, the joint likelihood function can be obtained as the product of the individual components. Next we rewrite the components of continuous model (3.5) in terms of two stochastic differential equations, that is

where \( \mathbf {1}_{A}\) is the indicator function of the set A and \(\varvec{\Sigma }^{-1/2}_i\) denotes the i-th row of the matrix \(\varvec{\Sigma }^{-1/2}\) (\(i=1, 2\)). Since \(\{\varepsilon _i(t)| ~t\in [a, b]\}\) is a Brownian motion, its increments are independent. Consequently, the processes \(\{\tilde{Y}_i(t)| ~t\in [0,b]\}\) and the random variable \({\tilde{Y}}_i(a)\) are independent. To obtain the joint density of the processes defined by (3.6) and (3.7) it is therefore sufficient to derive the individual densities.

Let \(\mathbb {P}_\theta ^{(i)}\) and \(\mathbb {P}_0^{(i)}\) denote the measures on C([0, b]) associated with the process \({\tilde{Y}}_i = \{ Y_i(t) | \ t \in [0,b] \}\) and \(\{ \varepsilon _{i} (t) | \ t \in [0,b] \}\), respectively. It follows from Theorem 1 in Appendix II of Ibragimov and Has’minskii [18] that \(\mathbb {P}_\theta ^{(i)} \) is absolute continuous with respect to \(\mathbb {P}_0^{(i)}\) with Radon–Nikodym density

Similarly, if \(\mathbb {Q}_\theta \) denotes the distribution of the random variable \({\tilde{Y}}_i(a) \sim {{\mathcal {N}} } (\Sigma ^{-1/2}_i \mathbf {F}^\top (a)\theta , a) \) in (3.7), then the Radon–Nikodym density of \(\mathbb {Q}^{(i)}_\theta \) with respect to \(\mathbb {Q}^{(i)}_0 \) is given by

Consequently, because of independence, the joint density of \((\mathbb {P}^{(i)}_\theta ,\mathbb {Q}^{(i)}_\theta )\) with respect to \((\mathbb {P}^{(i)}_0,\mathbb {Q}^{(i)}_0)\) is obtained as

As the processes \(\tilde{Y}_1\) and \(\tilde{Y}_2\) are independent by construction, the maximum likelihood estimator in model (3.1) can be determined by solving the equation

with respect to \(\theta \). The solution coincides with the linear estimate defined in (3.3), and a straightforward calculation, using Ito’s formula and the fact that the random variables \(\int ^b_a \varvec{\dot{F}} (t) \mathrm{d} \varvec{\varepsilon } _t \) and \( \varvec{\varepsilon }_a\) are independent, gives

where the matrix \(\mathbf {M}\) is defined in (3.2). Since the covariance matrix \(\mathbf {M}^{-1}\) is the inverse of the information matrix in the continuous regression model in (3.1) (see [18], p. 81), linear estimator (3.3) is the BLUE, which completes the proof of Theorem 3.1. \(\square \)

Remark 3.1

The proof of Theorem 3.1 can easily be modified to obtain the BLUE for the continuous model on the interval \([a=0, b]\). More precisely, for \(a=0\) Eq. (3.7) becomes a deterministic equation equivalent to

and we have to distinguish three cases.

-

(1)

If the regression function \(\mathbf {F}\) satisfies \(\mathbf {F}(0) = \mathbf {0}_{p\times 2}\) (that is \(\text{ rank }(\mathbf {F}(0))= 0\))), deterministic Eq. (3.8) does not contain any further information about the parameter \(\theta \) and the maximum likelihood estimator in model (3.1) is given by

$$\begin{aligned} \hat{\theta }_\mathrm{BLUE} = \mathbf {M}_0^{-1} \Big ( \int _0^b \dot{\mathbf {F}}(t) \varvec{\Sigma }^{-1}\,\mathrm{d}\mathbf {Y} (t) \Big ) , \end{aligned}$$where the minimum variance is given by

$$\begin{aligned} \text{ Cov }(\hat{\theta }_\mathrm{BLUE} ) = \mathbf {M}_0^{-1} =\left( \int _0^b {\dot{\mathbf{F}}}(t) \varvec{\Sigma }^{-1} {\dot{\mathbf{F}}}^\top (t) \,\mathrm{d}t\right) ^{-1} \, . \end{aligned}$$ -

(2)

If the rank of the matrix \(\mathbf {F}(0)\) satisfies \(\text{ rank }(\mathbf {F}(0))=1\), deterministic Eq. (3.8) contains one informative equation about \(\theta \). In that case, we assume without loss of generality that \(F_{1,1}(0) \ne 0 \) and it follows by (3.8) that \(\theta _1\) can be reformulated by \(\theta _2, \ldots , \theta _p\) through

$$\begin{aligned} \theta _1 = \frac{Y_1(0) - \sum _{i=j}^p\theta _j F_{1,j}(0)}{F_{1,1}(0)} \, . \end{aligned}$$(3.9)Using (3.9) in combination with model (3.1), we obtain a reduced model by

$$\begin{aligned} \mathbf {Z}(t) = \mathbf {Y}(t) - \frac{Y_1(0)}{F_{1,1}(0)}\begin{pmatrix}F_{1, 1}(0) \\ F_{2, 1}(0)\end{pmatrix} = \tilde{\mathbf {F}}(t){\tilde{\theta }} + \varvec{\Sigma }^{1/2} \varvec{\varepsilon }(t) , \end{aligned}$$(3.10)where the matrix-valued function \(\tilde{\mathbf {F}}(t)\) is defined by

$$\begin{aligned} \tilde{\mathbf {F}}^T(t) = \bigl ( F_{i,j}(t) - \frac{F_{i,1}(0)}{F_{1, 1}(0)} F_{1, j}(0)\bigr )_{i=1, 2, j=2, \ldots p} \end{aligned}$$(3.11)and the reduced \((p-1)\)-dimensional parameter \({{\tilde{\theta }}}\) is given by \({{\tilde{\theta }}} = (\theta _2, \ldots , \theta _p)\). It follows by \(\text{ rank }(\mathbf {F}(0)) = 1\) that the matrix-valued function \(\tilde{\mathbf {F}}(t)\) defined in (3.11) satisfies \(\tilde{\mathbf {F}^T(0)} = 0_{2\times p}\). Consequently, the modified model given by (3.10) satisfies the condition of the case given in (1) and the best linear unbiased estimator for the reduced parameter \({{\tilde{\theta }}}\) is obtained by

$$\begin{aligned} \hat{{{\tilde{\theta }}}}_\mathrm{BLUE} = \mathbf {M}_0^{-1} \Big ( \int _0^b \dot{\tilde{\mathbf {F}}}(t) \varvec{\Sigma }^{-1}\,\mathrm{d}\mathbf {Z} (t) \Big ) , \end{aligned}$$(3.12)where the process \(\{\mathbf {Z(t)}; t\in [0, b]\}\) is defined by (3.10), the matrix \(\tilde{\mathbf {F}}(t)\) is given by (3.11), and the minimum variance is given by

$$\begin{aligned} \text{ Cov }(\hat{{{\tilde{\theta }}}}_\mathrm{BLUE} ) = \mathbf {M}_0^{-1} =\left( \int _0^b \dot{\tilde{\mathbf {F}}}(t) \varvec{\Sigma }^{-1} \dot{\tilde{\mathbf {F}}}^\top (t) \,\mathrm{d}t\right) ^{-1} \, . \end{aligned}$$The best linear unbiased estimator for the remaining parameter \(\theta _1\) is then obtained by

$$\begin{aligned} {\hat{\theta }}_1 = \frac{Y_1(0) - \sum _{i=j}^p\hat{\tilde{\theta }}_{\mathrm{BLUE},j} F_{1,j}(0)}{F_{1,1}(0)} \, . \end{aligned}$$ -

(3)

If the rank of the matrix \(\mathbf {F}(0)\) satisfies \(\text{ rank }(\mathbf {F}(0)) = 2\), Eq. (3.8) contains two informative equations about \(\theta \).

Let

$$\begin{aligned} \mathbf {A}(t) = \begin{pmatrix} F_{1,1}(t) &{} F_{1,2}(t) \\ F_{2,1}(t) &{} F_{2, 2}(t) \end{pmatrix} \end{aligned}$$(3.13)be the submatrix of \(\mathbf {F}\) which contains the first two columns of \(\mathbf {F}^T(t)\). Without loss of generality, we assume that \(\mathbf {A}(0)\) is non-singular (as \(\text{ rank }(\mathbf {F}(0)) = 2\)).

Then it follows by (3.8) that

$$\begin{aligned} \begin{pmatrix} \theta _1 \\ \theta _2 \end{pmatrix} = \mathbf {A}^{-1}(0)\Bigl (\mathbf {Y}(0) - \bigl (\sum _{j=3}^{p} F_{i,j}(0)\theta _j\bigr )_{i=1, 2} \Bigr ) \, . \end{aligned}$$(3.14)Using (3.14) in combination with (3.1) we obtain a reduced model given by

$$\begin{aligned} \mathbf {Z}(t) = \mathbf {Y}(t) - \mathbf {A}(t)\mathbf {A}^{-1}(0) \mathbf {Y}(0) = \tilde{\mathbf {F}}(t){\tilde{\theta }} + \varvec{\Sigma }^{1/2} \varvec{\varepsilon }(t) \end{aligned}$$(3.15)where the matrix-valued function \(\mathbf {A}(t)\) is given by (3.13), the matrix-valued function \(\tilde{\mathbf {F}}^T(t)\) is of the form

$$\begin{aligned} \tilde{\mathbf {F}}^T(t) = \mathbf {A}(t) \mathbf {A}^{-1}(0)\bigl (F_{i,j}(0)\bigr )_{i=1, 2; j=3, \ldots p } + \bigl (F_{i,j}(t)\bigr )_{i=1, 2; j=3, \ldots p } \end{aligned}$$(3.16)and the reduced \((p-2)\)-dimensional parameter \({{\tilde{\theta }}}\) is given by \({{\tilde{\theta }}} = (\theta _3, \ldots , \theta _p) \) .

The matrix-valued function \(\tilde{\mathbf {F}}(t)\) defined in (3.16) satisfies \(\tilde{\mathbf {F}^T}(0) = \mathbf {0}_{2\times p}\). Consequently, the modified model given by (3.15) satisfies the condition of the case given in (1) and the best linear unbiased estimator \(\hat{{{\tilde{\theta }}}}_\mathrm{BLUE}\) for the reduced \((p-2)\)-dimensional parameter \({{\tilde{\theta }}}\) is obtained by (3.12) using the process \(\{\mathbf {Z(t)}; t\in [0, b]\}\) defined by (3.15) and the matrix-valued function \(\tilde{\mathbf {F}}(t)\) given by (3.16). The best linear unbiased estimator for the remaining parameter \((\theta _1, \theta _2)^T\) is then obtained by

$$\begin{aligned} \begin{pmatrix} {\hat{\theta }}_1 \\ {\hat{\theta }}_2 \end{pmatrix} = \mathbf {A}^{-1}(0)\Bigl (\mathbf {Y}(0) - \bigl (\sum _{j=3}^{p} F_{i,j}(0)\hat{{{\tilde{\theta }}}}_\mathrm{BLUE,j}\bigr )_{i=1, 2} \Bigr ) \, . \end{aligned}$$

3.2 Model with No Common Parameters

Recall the definition of model (2.1) in Sect. 1. It was demonstrated in Example 2.1 that this case is a special case of model (2.6), where the matrix \(\mathbf {F}^\top \) is given by

and \(\theta =({\theta ^{(1)}}^\top ,{\theta ^{(2)}}^\top )^\top \). Considering both components in the vector \(\mathbf {Y}\) separately, we obtain continuous versions of the two models introduced in (2.1),that is,

where the error processes \(\{\eta (t) \mid t \in [a, b]\}\) are defined by (2.4). An application of Theorem 3.1 yields the following BLUE.

Corollary 3.1

Consider continuous linear regression model (2.6) on the interval [a, b], with continuously differentiable matrix (3.17), a vector \(\{\varvec{\varepsilon }(t)=(\varepsilon _1(t), \varepsilon _2(t))^\top \mid t\in [a,b]\}\) of independent Brownian motions and a matrix \(\varvec{\Sigma }\) defined by (2.3). The best linear unbiased estimator for the parameter \(\theta \) is given by

The minimum variance is given by \(\mathbf {M}^{-1}\), where

and

It is of interest to compare estimator (3.19) with the estimator \({\hat{\theta }}_\mathrm{sep} = (({\hat{\theta }}_\mathrm{sep}^{(1)})^\top , ({\hat{\theta }}_\mathrm{sep}^{(2)})^\top )^\top \), which is obtained by estimating the parameter in both models (3.18) separately. It follows from Theorem 2.1 in Dette et al. [6] that the best linear unbiased estimators in these models are given by

where the matrices are defined by

Moreover, the covariance matrices of the estimators \({\hat{\theta }}^{(1)}_\mathrm{sep}\) and \({\hat{\theta }}^{(2)}_\mathrm{sep}\) are the inverses of the Fisher information matrices in the individual models, that is

The following result compares the variance of two estimators (3.19) and (3.21).

Theorem 3.2

If the assumptions of Corollary 3.1 are satisfied, we have (with respect to the Loewner ordering)

for all \(\varrho \in (-1, 1)\), where the \( {\hat{\theta }}^{(i )}_\mathrm{BLUE} \) and \( {\hat{\theta }}^{(i)}_\mathrm{sep}\) are the best linear unbiased estimators of the parameter \(\theta ^{(i)}\) obtained by simultaneous estimation (see (3.19)) and separate estimation in the two groups (see (3.21)) , respectively.

Proof

Without loss of generality we consider the case \(i =1\), the proof for the index \(i=2\) is obtained by the same arguments. Let \(\mathbf {K_1}^\top = (\mathbf {I}_{p_1}, \mathbf {0}_{p_1\times p_2})\) be a \(p_1\times (p_1+ p_2)\)- matrix, where \(\mathbf {I}_{p_1}\) and \(\mathbf {0}_{p_1\times p_2}\) denote the \(p_1\)-identity matrix and a \((p_1\times p_2)\)-matrix filled with zeros. Then,

where

is the Schur complement of the block \(\mathbf {M}_{22}\) of the information matrix \(\mathbf {M}\) (see p. 74 in [28]). Observing (3.22) we now compare \(\mathbf {C}_{\mathbf {K}_1}(\mathbf {M})\) and \(\tfrac{1}{\sigma ^2}\mathbf {M}_{11}\) and obtain

where \(\mathbf {C}_{\mathbf {K}_1}({{\tilde{\mathbf {M}}}})\) is the Schur complement of the block \(\mathbf {M}_{22}\) of the matrix

Note that the matrix \({\tilde{\mathbf {M}}}\) is non-negative definite. An application of Lemma 3.12 of Pukelsheim [28] shows that the Schur complement \(\mathbf {C}_{\mathbf {K}_1}({\tilde{\mathbf {M}}})\) is also non-negative definite, that is \(\mathbf {C}_{\mathbf {K}_1}({\tilde{\mathbf {M}}})\ge 0 \) with respect to the Loewner ordering. Observing (3.24) we have

and the statement of the theorem follows. \(\square \)

Remark 3.2

If \(\varrho = 0\) we have \(\mathbf {C}_{K_1}(\mathbf {M})= \mathbf {M}_{11}\), and it follows from (3.23) that separate estimation in the individual groups does not yield less precise estimates, that is \( \text{ Cov }({\hat{\theta }}_\mathrm{sep}^{(l)}) = \text{ Cov }({\hat{\theta }}^{(1)}_\mathrm{BLUE})\) \((i =1,2)\). However, in general we have \(\text{ Cov } ({\hat{\theta }}_\mathrm{sep}^{(l)}) \ge \text{ Cov } ({\hat{\theta }}^{(1)}_\mathrm{BLUE})\). Moreover, the inequality is strict in most cases, which means that simultaneous estimation of the parameters \(\theta ^{(1)}\) and \(\theta ^{(2)}\) yields more precise estimators. A necessary condition for strict inequality (i.e., the matrix \(\text{ Cov } ({\hat{\theta }}_\mathrm{sep}^{(l)}) - \text{ Cov }({\hat{\theta }}^{(1)}_\mathrm{BLUE})\) is positive definite) is the condition \(\varrho \not = 0\). The following result shows that this condition is not sufficient. It considers the important case where the regression functions \(f_1\) and \(f_2\) in (3.17) are the same and shows that in this case the two estimators \({\hat{\theta }}_\mathrm{BLUE}\) and \({\hat{\theta }}_\mathrm{sep}\) coincide.

Corollary 3.2

If the assumptions of Corollary 3.1 hold and additionally the regression functions in model (2.6) satisfy \(f_1 = f_2\), the best linear unbiased estimator for the parameter \(\theta \) is given by

where \(\mathbf {I}_{2}\) denotes the \(2\times 2\)-identity matrix and the matrix \(\mathbf {M}_{11}\) is defined by (3.27). Moreover, the minimum variance is given by \(\text{ Cov }({\hat{\theta }}_\mathrm{BLUE})= \varvec{\Sigma } \otimes \mathbf {M}_{11}^{-1} \) and

3.3 Models with Common Parameters

Recall the definition of model (2.1) in Sect. 1. It was demonstrated in Example 2.2 that this case is a special case of model (2.6), where the matrix of regression functions is given by

and the vector of parameters is defined by

An application of Theorem 3.1 yields the BLUE in model (2.6) with the matrix \( \mathbf {F}^\top \) defined by (3.25).

Corollary 3.3

Consider continuous linear regression model (2.6) on the interval [a, b], where the matrix of regression functions \( \mathbf {F}^\top \) is continuously differentiable. The best linear unbiased estimator for the parameter \(\theta \) is given by

The minimum variance is

where

and individual blocks in this matrix are given by

for \(i, j=0, 1, 2\), where \(g_0(t) = f_0(t)\) and \(g_i(t)= \tilde{f}_i(t)\) for \(i=1, 2\) .

It is again of interest to compare estimate (3.26) with the estimate \({\hat{\theta }}_\mathrm{sep} = (({\hat{\theta }}_\mathrm{sep}^{(1)})^\top , ({\hat{\theta }}_\mathrm{sep}^{(2)})^\top )^\top \), which is obtained by estimating the parameter \(\theta ^{(i)} = ((\theta ^{(0)})^\top ,\) \(({\tilde{\theta }}^{(i)})^\top )^\top \) in both models \(i=1, 2\) (3.18) separately by using (3.21). The corresponding covariances of the estimators \({\hat{\theta }}^{(1)}_{\mathrm{sep}}\) and \({\hat{\theta }}^{(2)}_{\mathrm{sep}}\) are given by (3.22). The following result compares the variance of two estimators (3.26) and (3.21). Its proof is similar to the proof of Theorem 3.2 and therefore omitted.

Theorem 3.3

If the assumptions of Corollary 3.3 are satisfied, we have (with respect to the Loewner ordering)

for all \(\varrho \in (-1, 1)\), where the \( {\hat{\theta }}^{(i )}_\mathrm{BLUE} \) and \( {\hat{\theta }}^{(i)}_\mathrm{sep}\) are the best linear unbiased estimators of the parameter \(\theta ^{(i)}\) obtained by simultaneous and separate estimation, respectively.

Remark 3.3

The results presented so far have been derived for the case where the error process \(\{\varvec{\varepsilon }(t) = (\varepsilon _1(t), \varepsilon _2(t))^\top | ~t\in [a, b]\}\) in (2.6) consists of two independent Brownian motions. This assumption has been made to simplify the notation. Similar results can be obtained for Markov processes, and in this remark, we indicate the essential arguments.

To be precise, assume that the error processes \(\{\varvec{\varepsilon }(t) = (\varepsilon _1(t), \varepsilon _2(t))^\top | ~t\in [a, b]\}\) in model (2.6) consist of two independent centered Gaussian processes with continuous covariance kernel given by

where \(u(\cdot )\) and \(v(\cdot )\) are functions defined on the interval [a, b] such that the function \(q(\cdot ) = u(\cdot )/v(\cdot )\) is positive and strictly increasing. Kernels of form (3.28) are called triangular kernels, and a famous result in Doob [14] essentially shows that a Gaussian process is a Markov process if and only if its covariance kernel is triangular (see also [22]). In this case model (2.6) can be transformed into a model with an error process consisting of two independent Brownian motions using the arguments given in Appendix B of Dette et al. [10]. More precisely, define

and consider the stochastic process

where \(\{ \varvec{{\tilde{\varepsilon }}} (\tilde{t}) = ({\tilde{\varepsilon }}_1({\tilde{t}})^\top , \tilde{\varepsilon }_2({\tilde{t}})) | \ \tilde{t} \in [\tilde{a},\tilde{b}] \}\) consists of two independent Brownian motions on the interval \([\tilde{a},\tilde{b}]= [ q (a), q (b)]\). It now follows from Doob [14] that the process \(\{ \varvec{ \varepsilon }(t) = (\varepsilon _1(t), \varepsilon _2(t) )^\top | \ t \in [a,b] \}\) consists of two independent centered Gaussian processes on the interval [a, b] with covariance kernel (3.28). Consequently, if we consider the model

and

the results obtained so far are applicable. Thus, a ”good” estimator obtained for the parameter \(\theta \) in model (3.29) is also a ”good estimator” for the parameter \(\theta \) in model (3.1) with error process consisting of two Gaussian processes with covariance kernel (3.28). Consequently, we can derive the optimal estimator for the parameter \(\theta \) in continuous model (3.1) with covariance kernel (3.28) from the best linear unbiased estimator in the model given in (3.29) with Brownian motions by an application of Theorem 3.1. The resulting best linear unbiased estimator for \(\theta \) in model (3.1) with triangular kernel (3.28) is of the form

where the minimum variance is given by

4 Optimal Designs for Comparing Curves

In this section we will derive optimal designs for comparing curves. The first part is devoted to a discretization of the BLUE in the continuous model. In the second part we develop an optimality criterion to obtain efficient designs for the comparison of curves based on the discretized estimators.

4.1 From the Continuous to the Discrete Model

To obtain a discrete design for n observations at the points \(a=t_1, \ldots , t_n\) from the continuous design derived in Sect. 3, we use a similar approach as in Dette et al. [6] and construct a discrete approximation of the stochastic integral in (3.3). For this purpose we consider the linear estimator

where \(a= t_1< t_2< \ldots< t_{n-1}< t_n = b\), \(\varvec{\Omega }_2, \ldots , \varvec{\Omega }_n\) are \(p \times p\) weight matrices and \(\varvec{\Phi }_2=\varvec{\Omega }_2 \dot{\mathbf {F}}(t_{1}), \ldots , \varvec{\Phi }_n = \varvec{\Omega }_n \dot{\mathbf {F}}(t_{n-1})\) are \(p\times 2\) matrices, which have to be chosen in a reasonable way. The matrix \(\mathbf {M}^{-1}\) is given in (3.4). To determine these weights in an “optimal” way we first derive a representation of the mean squared error between best linear estimate (3.3) in the continuous model and its discrete approximation (4.1). The following result is a direct consequence of Ito’s formula.

Lemma 4.1

Consider continuous model (3.1). If the assumptions of Theorem 3.1 are satisfied, we have

In the following we choose optimal \(p\times 2\) matrices \(\Phi _i=\varvec{\Omega }_i \dot{\mathbf {F}}(t_{i-1})\) and design points \(t_2, \ldots , t_{n-1}\) \((t_1=a, t_n=b)\), such that linear estimate (4.1) is unbiased and the mean squared error matrix in (4.2) “becomes small.” An alternative criterion is to replace the mean squared error \(\mathbb {E}_\theta [({\hat{\theta }}_\mathrm{BLUE} - {\hat{\theta }}_n)({\hat{\theta }}_\mathrm{BLUE} - {\hat{\theta }}_n)^\top ]\) by the mean squared error

between the estimate \({\hat{\theta }}_n\) defined in (4.1) and the “true” vector of parameters. The following result shows that in the class of unbiased estimators both optimization problems yield the same solution. The proof is similar to the proof of Theorem 3.1 in Dette et al. [6].

Theorem 4.1

The estimator \(\hat{\theta }_n\) defined in (4.1) is unbiased if and only if the identity

is satisfied. Moreover, for any linear unbiased estimator of the form \(\tilde{\theta }_n = \int _a^b \mathbf {G}(s) dY_s \) we have

In order to describe a solution in terms of optimal “weights” \(\varvec{\Phi }^*_i\) and design points \(t^*_i\) we recall that the condition of unbiasedness of the estimate \({\hat{\theta }}_n\) in (4.1) is given by (4.3) and introduce the notation

It follows from Lemma 4.1 and Theorem 4.1 that for an unbiased estimator \({\hat{\theta }}_n\) of form (4.1) the mean squared error has the representation

which has to be “minimized” subject to the constraint

The following result shows that a minimization with respect to the weights \(\varvec{\Phi }_i\) (or equivalently \(\mathbf {A}_i\)) can actually be carried out with respect to the Loewner ordering.

Theorem 4.2

Assume that the assumptions of Theorem 3.1 are satisfied and that the matrix

is non-singular. Let \(\varvec{\Phi }^*_2, \ldots , \varvec{\Phi }^*_n\) denote \((p \times 2)\)-matrices satisfying the equations

then \(\varvec{\Phi }^*_2, \ldots , \varvec{\Phi }^*_n\) are optimal weight matrices minimizing \( \mathbb {E}_\theta [(\hat{\theta }_\mathrm{BLUE} - \hat{\theta }_n)(\hat{\theta }_\mathrm{BLUE} - \hat{\theta }_n)^\top ] \) with respect to the Loewner ordering among all unbiased estimators of form (4.1). Moreover, the variance of the resulting estimator \({\hat{\theta }}^*_n\) is given by

Proof

Let v denote a p-dimensional vector and consider the problem of minimizing the criterion

subject to constraint (4.6). Observing (4.5) this yields the Lagrange function

where \(\mathbf {A}_2, \ldots , \mathbf {A}_n\) are \((p\times 2)\)-matrices and \(\varvec{\Lambda } = (\lambda _{k,i})^p_{k,i =1}\) is a \((p\times p)\)-matrix of Lagrange multipliers. The function \(G_v\) is convex with respect to \(\mathbf {A}_2,\ldots ,\mathbf {A}_n\). Therefore, taking derivatives with respect to \(\mathbf {A}_j\) yields as necessary and sufficient for the extremum (here we use matrix differential calculus)

Rewriting this system of linear equations in a \((p\times 2)\)-matrix form gives

Substituting the expression in (4.6) and using the non-singularity of the matrices \(\mathbf {M}\) and \(\mathbf {B}\) yields for the matrix of Lagrangian multipliers

which gives

Note that one solution of (4.11) is given by

which does not depend on the vectors v. Therefore, the tuple of matrices \((\mathbf {A}^*_2, \ldots , \mathbf {A}^*_n)\) minimizes the convex function \(G_v\) in (4.10) for all \(v\in \mathbb {R}^{p}\).

Observing the notations in (4.4) shows that the optimal matrix weights are given by (4.8). Moreover, these weights in (4.8) do not depend on the vector v either and provide a simultaneous minimizer of the criterion defined in (4.9) for all \(v\in \mathbb {R}^p\). Consequently, the weights defined in (4.8) minimize \( \mathbb {E}_\theta [(\hat{\theta }_\mathrm{BLUE} - \hat{\theta }_n)(\hat{\theta }_\mathrm{BLUE} - \hat{\theta }_n)^\top ] \) under unbiasedness constraint (4.6) with respect to the Loewner ordering. \(\square \)

Remark 4.1

If the matrix \(\mathbf {B}\) in Theorem 4.2 is singular, the optimal weights are not uniquely determined and we propose to replace the inverse B by its Moore–Penrose inverse.

Note that for fixed design points \(t_1, \ldots , t_n \) Theorem 4.2 yields universally optimal weights \(\varvec{\Phi }^*_2,\ldots ,\varvec{\Phi }^*_n\) (with respect to the Loewner ordering) for estimators of form (4.1) satisfying (4.3). On the other hand, a further optimization with respect to the Loewner ordering with respect to the choice of the points \(t_2,\ldots ,t_{n-1}\) \((t_1=a, t_n=b)\) is not possible, and we have to apply a real-valued optimality criterion for this purpose. In the following section, we will derive such a criterion which explicitly addresses the comparison of the regression curves from the two groups introduced in Sect. 2.

4.2 Confidence Bands

We return to the practical scenario of the two groups introduced in (2.1), where we now focus on the comparison of these groups on the interval [a, b].

More precisely, consider the model introduced in (2.6) and let \(\hat{\theta }^*_n\) be estimator (4.1) with optimal weights defined by (4.8) from n observations taken at the points \(a=t_1<t_2<\ldots< t_{n-1} <t_n = b\). Then this estimator is normally distributed with mean \(\mathbb {E}[\hat{\theta }^*_n]=\theta \) and covariance matrix

where the matrices \(\mathbf {M}, \mathbf {M}_0\) and \(\mathbf {B}\) are given by (3.2), (4.6) and (4.7), respectively. Note that the covariance matrix depends on the points \(t_1, \ldots , t_n\) through the matrix \(\mathbf {B}^{-1}\). Moreover, using the estimator \(\hat{\theta }^*_n\) the prediction of the difference of a fixed point \(t\in [a,b]\) satisfies

where

We now use this result and the results of Gsteiger et al. [16] to obtain a simultaneous confidence band for the difference of the two curves. More precisely, if the interval [a, b] is the range where the two curves should be compared, the simultaneous confidence band is defined as follows. Consider the statistic

and define D as the \((1-\alpha )\)-quantile of the corresponding distribution, that is

Note that Gsteiger et al. [16] propose the parametric bootstrap for choosing the critical value D. Define

then the confidence band for the difference of the two regression functions is defined by

Consequently, good points \(t_1=a< t_2< \ldots <t_{n-1}, t_n=b\) should minimize the width

of this band at each \(t \in [a,b ]\). As this is only possible in rare circumstances, we propose to minimize an \(L_p\)-norm of the function \(h(\cdot ; t_1\ldots , t_n)\) as a design criterion, that is

where the case \(p=\infty \) corresponds to the maximal deviation

Finally, the optimal points \(a=t^*_1< t_2^*< \ldots < t_n^*=b\) (minimizing (4.13)) and the corresponding weights derived in Theorem 4.2 provide the optimal linear unbiased estimator of form (4.1) (with the corresponding optimal design).

Example 4.1

We now conclude this section by considering the cases of no common and common parameters, respectively.

-

(a)

If we are in the situation of Example 2.1 (no common parameters), the regression function \(\mathbf {F}^\top (t)\) is of form in (3.17) and the variance of the prediction of the difference at a fixed point \(t\in [a,b]\) reduces to

$$\begin{aligned} h(t; t_1, \ldots , t_n)&= (f^\top _1(t), - f^\top _2(t)) \mathbf {M}^{-1}\left\{ \mathbf {M}_0 \mathbf {B}^{-1}\mathbf {M}_0 \right. \nonumber \\&\quad \left. + \frac{1}{a} \mathbf {F}(a)\varvec{\Sigma }^{-1}\mathbf {F}^\top (a)\right\} \mathbf {M}^{-1} (f^\top _1(t), - f^\top _2(t))^\top . \end{aligned}$$The corresponding design criterion is given by

$$\begin{aligned} \Phi _p \big (t_1, \ldots , t_n\big )&= \Vert (f^\top _1, - f^\top _2) \mathbf {M}^{-1}\left\{ \mathbf {M}_0 \mathbf {B}^{-1}\mathbf {M}_0 \right. \nonumber \\&\quad \left. + \frac{1}{a} \mathbf {F}(a)\varvec{\Sigma }^{-1}\mathbf {F}^\top (a)\right\} \mathbf {M}^{-1}(f^\top _1, - f^\top _2)^\top \Vert _p \, . \end{aligned}$$(4.14) -

(b)

If we are in the situation of Example 2.2 (common parameters), the regression function \(\mathbf {F}^\top (t)\) is given by (3.25) and the variance of the prediction of the difference at a fixed point \(t\in [a, b]\) reduces to

$$\begin{aligned} h(t; t_1, \ldots , t_n)&= (0, \tilde{f}^\top _1(t), - \tilde{f}^\top _2(t)) \mathbf {M}^{-1}\left\{ \mathbf {M}_0 \mathbf {B}^{-1}\mathbf {M}_0 \right. \nonumber \\&\quad \left. + \frac{1}{a} \mathbf {F}(a)\varvec{\Sigma }^{-1}\mathbf {F}^\top (a)\right\} \mathbf {M}^{-1} (0, \tilde{f}^\top _1(t), - \tilde{f}^\top _2(t)) ^\top \, . \end{aligned}$$The corresponding design criterion is given by

$$\begin{aligned} \Phi _p \big (t_1, \ldots , t_n\big )&= \Vert (0^\top _{p_0}, \tilde{f}^\top _1, - \tilde{f}^\top _2) \mathbf {M}^{-1}\left\{ \mathbf {M}_0 \mathbf {B}^{-1}\mathbf {M}_0 \right. \nonumber \\&\quad \left. + \frac{1}{a} \mathbf {F}(a)\varvec{\Sigma }^{-1}\mathbf {F}^\top (a)\right\} \mathbf {M}^{-1}(0^\top _{p_0}, \tilde{f}^\top _1, - \tilde{f}^\top _2)^\top \Vert _p \, . \end{aligned}$$

5 Numerical Examples

In this section the methodology is illustrated in examples by means of a simulation study. To be precise, we consider regression model (2.6), where the matrix \(\mathbf {F} (t)\) is given by (3.17) corresponding to the case that the regression function does not share common parameters, see Sect. 3.2 for more details. In this case the corresponding bounds for the confidence band are given by (4.12), where

and \(\hat{\theta }^*_n = ( (\hat{\theta }^{*(1)}_n)^\top , (\hat{\theta }^{*(2)}_n)^\top )^\top \) is estimator (4.1) with optimal weights defined in (4.8). The design space is given by the interval \([a, b] = [1, 10]\), and we consider three choices for the functions \(f_1\) and \(f_2\) in matrix (3.17), that is

To model the dependence between the two groups we use the covariance matrix

in (2.6), where the correlations are chosen as \(\varrho = 0.2, 0.5, 0.7\). Following the discussion in Sect. 4.1 we focus on the comparison of the regression curves for the two groups and derive optimal designs, minimizing the criterion \( \Phi _\infty \) defined in (4.14). As a result, we obtain simultaneous confidence bands with a smaller maximal width for the difference of the curves describing the relation in the two groups. We can obtain similar results for different values \(p \in (0,\infty ) \) in (4.14), but for the sake of brevity we concentrate on the criterion \(\Phi _{\infty }\) which is probably also the easiest to interpret for practitioners.

We denote by \(\hat{\theta }_{n}^*\) the linear unbiased estimator derived in Sect. 4. For each of the combinations of regression functions containing two different functions defined in (5.1), the optimal weights have been found by Theorem 4.2 and the optimal design points \(t_{i}^*\) are determined minimizing the criterion \(\Phi _\infty \) defined in (4.14). For the numerical optimization the particle swarm optimization (PSO) algorithm is used (see, for example, [5]) assuming a sample size of four observations in each group, that is, \(n=4\). Furthermore, the uniform design used in the following calculations is the design which has four equally spaced design points in the interval [1, 10]. The \(\Phi _{\infty }\)-optimal design points minimizing the criterion criterion in (4.14) are given in Table 1 for all combinations of models and correlations under consideration. Note that for each model the corresponding optimal design points change for different values of correlation \(\varrho \).

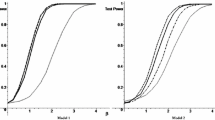

Confidence bands for the difference of the regression functions (solid gray line) on the basis of an optimal (solid lines) and uniform design (dashed lines). Left panel: \(\varrho =0.2\). Middle panel: \(\varrho = 0.5\). Right panel: \(\varrho = 0.7\). First row: model with \(f_1=f_A\) and \(f_2=f_B\). Second row: model with \(f_1=f_A\) and \(f_2=f_C\). Third row: model with \(f_1=f_B\) and \(f_2=f_C\)

In order to investigate the impact of the optimal design on the structure of the confidence bands we have performed a small simulation study simulating confidence bands for the difference of the regression functions. The vector of parameter values used for each model is \(\theta = ({\theta ^{(1)}}^\top , {\theta ^{(2)}}^\top )^\top = (1, 1, 1, 1, 1, 1)^\top \). In Fig. 1 we display the averages of uniform confidence bands defined in (4.12) under the uniform and optimal design calculated by 100 simulation runs.

The left, middle and right columns show the results for the correlations \(\varrho = 0.2\), \(\varrho =0.5\) and \(\varrho = 0.7\), respectively, while the rows correspond to different combinations for the functions \(f_1\) and \(f_2\) (first row: \(f_1=f_A\), \(f_2=f_B\), middle row: \(f_1 = f_A\), \(f_2= f_C\) and last row \(f_1= f_B\), \(f_2=f_C\)). In each graph, the confidence bands from the \(\Phi _{\infty }\)-optimal or the uniform design are plotted separately using the solid and dashed lines, respectively, along with the plot for the true difference \(f_1^\top (t)\theta ^{(1)} - f_2^\top (t)\theta ^{(2)}\) (solid gray lines).

We observe that in all cases under considerations the use of \(\Phi _{\infty }\)-optimal designs yields a clearly visible improvement compared to the uniform design. The maximal width of the confidence band is reduced substantially. Moreover, the bands from the \(\Phi _{\infty }\)-optimal designs are nearly uniformly narrower than the bands based on the uniform design (except for the confidence bands displayed in Fig. 1 in the second row of the first panel). Even more importantly, the confidence bands based on the \(\Phi _{\infty }\)-optimal design show a similar structure as the true differences, while the confidence bands from the uniform design oscillate.

A comparison of the left, middle and right columns in Fig. 1 shows that the maximum width for the confidence bands based on the optimal design decreases with increasing (absolute) correlation \(\varrho \). This effect is not visible for the confidence bands based on the uniform design. For example, for the middle row of Fig. 1, which corresponds to the case \(f_1 = f_A\) and \(f_2 = f_C\), the maximum width of the confidence bands based on the equally spaced design points even seems to increase.

Table 2 presents the values of the criterion \(\Phi _{\infty }\) in (4.14) for the different scenarios and confirms the conclusions drawn from the visual inspection of the confidence bands plots. We observe that the use of the optimal design points reduces the maximum width of the confidence bands substantially. Moreover, for the optimal design the maximum width becomes smaller with increasing (absolute) correlation. On the other hand this monotonicity cannot be observed in all cases for the uniform designs.

In an additional numerical study we investigated the amount of model robustness of the optimal designs depicted in Table 1. For the sake of brevity, we only state the main results here: We observed that the optimal designs based on model combinations, which involve the strongly oscillating function \(f_B\), still produce narrow confidence bands for the other model combinations. These bands are still thinner than the corresponding confidence bands based on the uniform design. On the other hand, if the optimal design based on the model combination \(f_A\) and \(f_C\) is used to construct confidence bands for the other model combinations, the resulting bands become wider, but still have shapes similar to the ones based on the uniform design. In general, the usage of the optimal designs even for miss-specified model combination does not result in wider confidence bands for all models under consideration.

Summarizing, the use of the proposed \(\Phi _{\infty }\)-optimal design improves statistically inference substantially reducing the maximum variance of the difference of the two estimated regression curves even if the regression curves are misspecified. Moreover, simultaneous estimation in combination with a \(\Phi _\infty \)-optimal design yields a further reduction of the maximum width of the confidence bands, thus providing a more precise inference for the difference of the curves describing the relation between t and the responses in the two groups.

References

Attia A, Constantinescu E (2020) Optimal experimental design for inverse problems in the presence of observation correlations. arXiv:2007.14476

Bickel PJ, Herzberg AM (1979) Robustness of design against autocorrelation in time I: asymptotic theory, optimality for location and linear regression. Ann Stat 7(1):77–95

Bretz F, Möllenhoff K, Dette H, Liu W, Trampisch M (2018) Assessing the similarity of dose response and target doses in two non-overlapping subgroups. Stat Med 37:722–738

Campos-Barreiro S, López-Fidalgo J (2015) \(D\)-optimal experimental designs for a growth model applied to a Holstein-Friesian dairy farm. Stat Methods Appl 24:491–505

Clerc M (2006) Particle swarm optimization. Iste Publishing Company, London

Dette H, Konstantinou M, Zhigljavsky A (2017a) A new approach to optimal designs for correlated observations. Ann Stat 45(4):1579–1608

Dette H, Kunert J, Pepelyshev A (2008) Exact optimal designs for weighted least squares analysis with correlated errors. Stat Sin 18(1):135–154

Dette H, Möllenhoff K, Volgushev S, Bretz F (2018) Equivalence of regression curves. J Am Stat Assoc 113:711–729

Dette H, Pepelyshev A, Zhigljavsky A (2013) Optimal design for linear models with correlated observations. Ann Stat 41(1):143–176

Dette H, Pepelyshev A, Zhigljavsky A (2016) Optimal designs in regression with correlated errors. Ann Stat 44(1):113–152

Dette H, Pepelyshev A, Zhigljavsky A (2019) The blue in continuous-time regression models with correlated errors. Ann Stat 47(4):1928–1959

Dette H, Schorning K (2016) Optimal designs for comparing curves. Ann Stat 44(3):1103–1130

Dette H, Schorning K, Konstantinou M (2017b) Optimal designs for comparing regression models with correlated observations. Comput Stat Data Anal 113:273–286

Doob JL (1949) Heuristic approach to the Kolmogorov-Smirnov theorems. Ann Math Stat 20(3):393–403

Fedorov VV, Leonov SL (2013) Optimal design for nonlinear response models. CRC Press, Boca Raton

Gsteiger S, Bretz F, Liu W (2011) Simultaneous confidence bands for non-linear regression models with application to population pharmacokinetic analyses. J Biopharm Stat 21(4):708–725

Harman R, Štulajter F (2010) Optimal prediction designs in finite discrete spectrum linear regression models. Metrika 72(2):281–294

Ibragimov IA, Hasminskii RZ (1981) Statistical estimation. Springer, New York

Kiselak J, Stehlík M (2008) Equidistant \(D\)-optimal designs for parameters of Ornstein-Uhlenbeck process. Stat Probab Lett 78:1388–1396

Liu W, Bretz F, Hayter AJ, Wynn HP (2009) Assessing non-superiority, non-inferiority or equivalence when comparing two regression models over a restricted covariate region. Biometrics 65:1279–1287

Liu W, Jamshidian M, Zhang Y (2011) Multiple comparison of several linear regression models. J Am Stat Assoc 99(466):395–403

Mehr CB, McFadden J (1965) Certain properties of Gaussian processes and their first-passage times. J R Stat Soc Ser B 27(3):505–522

Möllenhoff K, Bretz F, Dette H (2020) Equivalence of regression curves sharing common parameters. Biometrics 76:518–529

Möllenhoff K, Dette H, Kotzagiorgis E, Volgushev S, Collignon O (2018) Regulatory assessment of drug dissolution profiles comparability via maximum deviation. Stat Med 37:2968–2981

Müller WG, Pázman A (2003) Measures for designs in experiments with correlated errors. Biometrika 90:423–434

Näther W (1985) Effective observation of random fields. Teubner Verlagsgesellschaft, Leipzig

Pázman A, Müller WG (2001) Optimal design of experiments subject to correlated errors. Stat Probab Lett 52(1):29–34

Pukelsheim F (2006) Optimal design of experiments. SIAM, Philadelphia

Rodriguez-Diaz JM (2017) Computation of c-optimal designs for models with correlated observations. Comput Stat Data Anal 113:287–296

Sacks J, Ylvisaker ND (1966) Designs for regression problems with correlated errors. Ann Math Stat 37:66–89

Sacks J, Ylvisaker ND (1968) Designs for regression problems with correlated errors; many parameters. Ann Math Stat 39:49–69

Acknowledgements

This research was partially supported by the Collaborative Research Center “Statistical modeling of nonlinear dynamic processes” (Sonderforschungsbereich 823, Teilprojekt C2). The authors would like to thank the referees for their constructive comments on an earlier version of this paper.

Funding

Open Access funding enabled and organized by Projekt DEAL. Funding information was provided by Deutsche Forschungsgemeinschaft (Grant No. SFB 823, C2).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article is part of the topical collection “Special Issue: State of the art in research on design and analysis of experiments ” guest edited by John Stufken, Abhyuday Mandal, and Rakhi Singh.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Schorning, K., Dette, H. Optimal Designs for Comparing Regression Curves: Dependence Within and Between Groups. J Stat Theory Pract 15, 88 (2021). https://doi.org/10.1007/s42519-021-00218-8

Accepted:

Published:

DOI: https://doi.org/10.1007/s42519-021-00218-8