Abstract

For decades, regression models beyond the mean for continuous responses have attracted great attention in the literature. These models typically include quantile regression and expectile regression. However, there is little research on these regression models for discrete responses, particularly from a Bayesian perspective. By forming the likelihood function based on suitable discrete probability mass functions, this paper introduces a discrete density approach for Bayesian inference of these regression models with discrete responses. Bayesian quantile regression for discrete responses is developed first, and the method is then extended to Bayesian expectile regression for discrete responses. The posterior distribution under this approach is shown to be not only coherent irrespective of the true distribution of the response, but also proper with respect to improper priors for the unknown model parameters. The performance of the method is evaluated via extensive Monte Carlo simulation studies and one real data analysis.

1 Introduction

Regression models for dealing with responses following a non-normal distribution have been drawing significant attention in the literature. For example, quantile regression and expectile regression have been widely developed and increasingly applied to a greater variety of scientific questions. See [1,2,3,4,5], among others.

Typically, quantile regression estimates various conditional quantiles of a response or dependent random variable, including the median (the 0.5th quantile). Putting different quantile regressions together provides a more complete description of the underlying conditional distribution of the response than a simple mean regression. This is particularly useful when the conditional distribution is asymmetric, heterogeneous, fat-tailed or truncated. Quantile regression has been widely used in statistics and numerous application areas. Bayesian quantile regression for continuous responses has received increasing attention from both theoretical and empirical viewpoints. See the recent review by [6] of the first type of Bayesian quantile regression methods ([7,8,9], among others), which are based on the asymmetric Laplace distribution (ALD) likelihood function. However, in many application areas of regression models, discrete observations such as integer values (e.g., -2, -1, 0, 1, 2, 3) on a response are easily collected. In particular, many large data sets nowadays contain discrete observations such as the number of online transactions, the number of days in hospital, the number of votes and so on. Classic regression models for discrete responses include logistic, Poisson and negative binomial regression. Because discrete responses are generally skewed, a mean-based regression analysis would not be sufficient for a complete analysis ([10]).

However, quantile regression for discrete responses has received far less attention in the literature than quantile regression for continuous responses. Binary quantile regression was first introduced by [11]. Then, several authors ([12,13,14], among others) developed different smoothed estimation techniques (nonparametric or semiparametric methods) for the binary quantile regression model under frequentist approaches. Based on the idea of linking the ALD to latent variables in Bayesian Tobit quantile regression ([15]), [16, 17], among others, proposed Bayesian inference for binary quantile regression. Based on a normal-exponential mixture representation of the ALD, [18] and [19] extended Bayesian binary quantile regression to Bayesian ordinal quantile regression. Then, [20] further extended it to Bayesian inference of single-index quantile regression for ordinal data. [21, 22] and [23] applied these discrete quantile regression methods in economics, energy and education, respectively.

However, none of these methods is a direct Bayesian quantile regression approach for discrete responses, and none deals with quantile regression for general discrete responses. Similarly, there is little research on expectile regression for discrete responses, let alone from a Bayesian perspective ([24]). A semi-parametric jittering approach for general count quantile regression has been introduced ([25]), but some degree of smoothness has to be artificially imposed on the approach. Quantile regression for count data may be achieved via density regression as shown in [26], but this approach may result in a global estimation of regression coefficients. In this paper, we propose Bayesian inference for quantile regression with discrete responses by introducing a discrete version of the ALD-based likelihood function. This approach not only keeps the ‘local property’ of quantile regression, but also enjoys coherency and finite posterior moments of the posterior distribution. Along this line, we then introduce Bayesian expectile regression for discrete responses, which proceeds by forming the likelihood function based on a discrete asymmetric normal distribution (DAND). Section 2 introduces a discrete asymmetric Laplace distribution (DALD) and discusses its natural link with quantile regression for discrete responses. Sections 3 and 4 detail this Bayesian approach for quantile regression and expectile regression with discrete responses, respectively. Section 5 illustrates the numerical performance of the proposed method. Section 6 concludes with a brief discussion.

2 Discrete Asymmetric Laplace Distribution

Let Y be a real-valued random variable with \(\tau \)-th (\(0<\tau <1\)) quantile \(\mu \) (\(-\infty<\mu <\infty \)). It is well known that \(\mu \) can be found by minimizing the expected loss of Y with respect to the loss function (or check function) \(\rho _\tau (y)= y(\tau -I(y<0)),\) that is, \(\min _\mu E_{F_0(Y)}\rho _\tau (Y-\mu )\), where \(F_0(Y)\) denotes the distribution function of Y, which is usually unknown in practice.
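As a small illustration of this characterisation, the sketch below evaluates the check function and picks the sample value that minimises the empirical check loss (a sample average standing in for the expectation under \(F_0\)). The function names and the toy counts are hypothetical and serve only to show the mechanics.

```python
def check_loss(u, tau):
    """Check function rho_tau(u) = u * (tau - I(u < 0))."""
    return u * (tau - (1.0 if u < 0 else 0.0))


def sample_quantile_by_loss(ys, tau):
    """Return an observed value that minimises the total check loss.

    The empirical objective is piecewise linear in mu, so a minimiser
    can always be found among the observed values themselves.
    """
    candidates = sorted(set(ys))
    return min(candidates,
               key=lambda mu: sum(check_loss(y - mu, tau) for y in ys))


# Hypothetical count data, used only for illustration.
counts = [0, 1, 1, 2, 3, 3, 4, 7, 12]
median = sample_quantile_by_loss(counts, 0.5)
upper = sample_quantile_by_loss(counts, 0.9)
```

Changing `tau` moves the minimiser through the order statistics, which is the ‘local’ behaviour that the likelihood-based formulation below is designed to preserve.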

When Y is a continuous random variable, inference based on the loss function \(\rho _\tau (y-\mu )\) is linked to maximum likelihood inference based on an \(\mathrm {ALD}(\mu , \tau )\) with location parameter \(\mu \) and shape parameter \(\tau \):

\[f(y;\mu ,\tau ) = \tau (1-\tau )\exp \left\{ -\rho _\tau (y-\mu )\right\} , \quad -\infty< y <\infty . \qquad (1)\]

Now, if Y is a discrete random variable, let Y take integer values in \({\mathbb {Z}}\). We first derive a discrete version of ALD or a DALD and then show that the \(\tau \)th quantile \(\mu \) can also be estimated via this DALD.

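To this end, note that the corresponding cumulative distribution function (c.d.f.) of the ALD in Eq.(1) can be written as:

\[F(y;\mu ,\tau ) = {\left\{ \begin{array}{ll} \tau \exp \left\{ (1-\tau )(y-\mu )\right\} , &{} y \le \mu , \\ 1-(1-\tau )\exp \left\{ -\tau (y-\mu )\right\} , &{} y > \mu . \end{array}\right. } \qquad (2)\]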

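Let \(S(y;\mu ,\tau )\) be the survival function of this ALD, which is given by:

\[S(y;\mu ,\tau ) = {\left\{ \begin{array}{ll} 1-\tau \exp \left\{ (1-\tau )(y-\mu )\right\} , &{} y \le \mu , \\ (1-\tau )\exp \left\{ -\tau (y-\mu )\right\} , &{} y > \mu , \end{array}\right. } \qquad (3)\]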

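then, according to [27], the probability mass function (p.m.f.) of a DALD can be defined as:

\[\phi (y;\mu ,\tau ) = S(y;\mu ,\tau ) - S(y+1;\mu ,\tau ), \quad y \in {\mathbb {Z}}, \qquad (4)\]

with \(S(y;\mu ,\tau )\) in Eq.(3). Note that when \(y \ge \mu \),

\[S(y;\mu ,\tau ) - S(y+1;\mu ,\tau ) = (1-\tau )\left( 1-e^{-\tau }\right) \exp \left\{ -\tau (y-\mu )\right\} ,\]

and a similar derivation applies in the case of \(y <\mu \). It follows: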

and the loss function (or check function) is

Remark 1

One could also incorporate scale parameter \(\sigma \) in Eq.(4) to obtain

According to [6], any fixed \(\sigma \) can be utilised to obtain asymptotically valid posterior inference and make the results asymptotically invariant. Here, we simply fix \(\sigma \) as 1.
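The discretisation step behind Eq.(4) can be sketched numerically. The snippet assumes the standard ALD survival function with \(\sigma = 1\) (our reading of Eq.(3)) and takes the p.m.f. at an integer y to be the survival mass on \([y, y+1)\); the function names and parameter values are illustrative and not taken from the paper.

```python
import math


def ald_survival(y, mu, tau):
    """Survival function of the ALD with unit scale (assumed form of Eq.(3))."""
    if y <= mu:
        return 1.0 - tau * math.exp((1.0 - tau) * (y - mu))
    return (1.0 - tau) * math.exp(-tau * (y - mu))


def dald_pmf(y, mu, tau):
    """DALD probability at integer y: S(y) - S(y + 1)."""
    return ald_survival(y, mu, tau) - ald_survival(y + 1, mu, tau)


mu, tau = 2.0, 0.25
# The sum telescopes, so a wide integer window captures essentially all mass.
total = sum(dald_pmf(y, mu, tau) for y in range(-200, 400))
# Mass on the integers strictly below mu (mu is an integer here).
mass_below_mu = sum(dald_pmf(y, mu, tau) for y in range(-200, 2))
```

For integer \(\mu \), the mass strictly below \(\mu \) equals \(\tau \) under this construction, because the sum telescopes to \(1 - S(\mu ;\mu ,\tau ) = \tau \).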

Given a sample \(\varvec{Y}= (Y_1, Y_2, \cdots , Y_n)\) of the discrete response Y whose distribution \(F_0(y)\) may be unknown, consider the DALD-based likelihood function for \(\mu \):

Then, we have

This means that the estimation of the \(\tau \)-th quantile \(\mu \) of a discrete random variable Y with respect to the loss function \(\rho _\tau (\cdot )\) is equivalent to maximization of the likelihood function Eq.(6) based on the DALD. According to [28], a Bayesian inference of \(\mu \) can be developed. That is, if \(\pi (\mu )\) represents prior beliefs about the \(\tau \)-th quantile \(\mu \), and \(\varvec{Y}\) is observed data from the unknown distribution \(F_0(Y)\) of the discrete random variable Y, then a posterior \(\pi (\mu |\varvec{Y})\) which is a valid and coherent update of \(\pi (\mu )\) can be obtained via the DALD-based likelihood function Eq.(6) and is given by:

Coherency here means the following: if \(\nu \) denotes a probability measure on the space of \(\mu \), then \(\nu \) is called coherent if

for all other probability measures \(\nu _1\) on the space of \(\mu \) in terms of the expected loss of Y given by \(E_{F_0(Y)}\rho _\tau (Y-\mu )\). This coherency property aims to ensure the consistency of the posterior from the proposed inference even if the ‘working likelihood’ in Eqs.(3)–(A1) is misspecified.
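To make the working-likelihood update concrete, the sketch below evaluates a posterior for \(\mu \) on a grid under a flat prior, using a DALD log p.m.f. obtained from the survival-function difference with \(\sigma = 1\). This is a minimal illustration under those assumptions; the grid, the toy data and all function names are hypothetical.

```python
import math


def dald_log_pmf(y, mu, tau):
    """Log of S(y) - S(y + 1) for the unit-scale ALD survival function."""
    if y >= mu:  # y and y + 1 both lie to the right of mu
        return math.log((1.0 - tau) * (1.0 - math.exp(-tau))) - tau * (y - mu)
    if y + 1 <= mu:  # y and y + 1 both lie to the left of mu
        return (math.log(tau * (math.exp(1.0 - tau) - 1.0))
                + (1.0 - tau) * (y - mu))
    # mu falls strictly between y and y + 1
    return math.log(1.0 - tau * math.exp((1.0 - tau) * (y - mu))
                    - (1.0 - tau) * math.exp(-tau * (y + 1 - mu)))


def grid_posterior(ys, tau, grid):
    """Normalised posterior weights over a grid of mu values (flat prior)."""
    log_post = [sum(dald_log_pmf(y, mu, tau) for y in ys) for mu in grid]
    top = max(log_post)  # subtract the maximum to avoid underflow
    weights = [math.exp(lp - top) for lp in log_post]
    norm = sum(weights)
    return [w / norm for w in weights]


ys = [0, 1, 1, 2, 3, 3, 4, 7, 12]       # hypothetical counts
grid = [i / 10 for i in range(0, 131)]  # mu in [0, 13]
post = grid_posterior(ys, 0.5, grid)
post_mean = sum(m * w for m, w in zip(grid, post))
post_mode = grid[post.index(max(post))]
```

A grid is only practical for a scalar \(\mu \); with regression coefficients, a sampler such as Metropolis-Hastings is the natural replacement.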

3 Bayesian Quantile Regression with Discrete Responses

Generalized linear models (GLMs) extend linear modelling to scenarios that involve non-normal distributions \( f(y; \mu ) \) or heteroscedasticity, with \( f(y; \mu ) \) specified by the value of \(\mu =E[Y|\varvec{X}=\varvec{x}]\) conditional on \(\varvec{x}\) and linked to the covariates through a known link function g, \(g(\mu )=\varvec{x}^T \varvec{\beta }\). GLMs are typically built on the so-called ‘exponential’ family of distributions, a standard example being Poisson regression with a log-link function.

When we are interested in the conditional quantile \(Q_Y(\tau | \varvec{x})\) of a discrete response, according to [7], we could still cast the problem in the framework of the generalized linear model, no matter what the original distribution of the data is, by assuming that (i) \( f(y; \mu ) \) follows a DALD in the form of Eqs.(5) or (A1) and (ii) \(g(\mu )=\varvec{x}^T \varvec{\beta }(\tau ) = Q_Y(\tau | \varvec{x})\) for any \(0< \tau <1\).

When covariate information such as a covariate vector \(\varvec{X}\) is available, quantile regression denoted by \(Q_Y(\tau | \varvec{X})\) for \(\mu \) is introduced. Without loss of generality, consider a linear regression model for \(Q_Y(\tau | \varvec{X})\): \(Q_Y(\tau | \varvec{X}) = \varvec{X}^T \varvec{\beta }\), where \(\varvec{\beta }\) is the regression parameter vector.

Given observations \(\varvec{Y}= (Y_1, Y_2, \cdots , Y_n)\) of the discrete response Y, one of the aims in regression analysis is the inference of \(\varvec{\beta }\). Let \(\pi (\varvec{\beta })\) be the prior distribution of \(\varvec{\beta }\), then the posterior distribution of \(\varvec{\beta }\), \(\pi (\varvec{\beta }|\varvec{Y})\) is given by

where the likelihood function \(L(\varvec{Y}|\varvec{\beta })\) is given by:

The numerical computation of the posterior distribution can be carried out by the Metropolis-Hastings algorithm. That is, we first generate a candidate \(\varvec{\beta }^*\) according to a random walk, which gives a symmetric proposal distribution, so that the algorithm reduces to the Metropolis algorithm. Then, we accept or reject \(\varvec{\beta }^*\) as the new value of \(\varvec{\beta }\) according to the acceptance probability \(p(\varvec{\beta }^*| \varvec{\beta }) = \min \left( 1, \frac{L\left( \varvec{y}|\varvec{\beta }^*\right) }{L\left( \varvec{y}|\varvec{\beta }\right) }\right) \), with the proposal tuned so that the acceptance rate is around 30%. We suggest discarding at least 30% of the iterations at the beginning of an MCMC run (the ‘burn-in’ period) to make sure that the chain has reached stationarity.
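The sampler described above can be sketched as follows. This is a minimal random-walk Metropolis illustration under a flat prior, not the authors' R implementation: the DALD log p.m.f. assumes the survival-difference form with \(\sigma = 1\), and the simulated data, the step size and all function names are hypothetical choices.

```python
import math
import random


def dald_log_pmf(y, mu, tau):
    """Log of S(y) - S(y + 1) for the unit-scale ALD survival function."""
    if y >= mu:
        return math.log((1.0 - tau) * (1.0 - math.exp(-tau))) - tau * (y - mu)
    if y + 1 <= mu:
        return (math.log(tau * (math.exp(1.0 - tau) - 1.0))
                + (1.0 - tau) * (y - mu))
    return math.log(1.0 - tau * math.exp((1.0 - tau) * (y - mu))
                    - (1.0 - tau) * math.exp(-tau * (y + 1 - mu)))


def log_likelihood(beta, xs, ys, tau):
    """DALD working log-likelihood with linear predictor beta0 + beta1 * x."""
    return sum(dald_log_pmf(y, beta[0] + beta[1] * x, tau)
               for x, y in zip(xs, ys))


def random_walk_metropolis(xs, ys, tau, n_iter, step, rng):
    beta = [0.0, 0.0]
    current = log_likelihood(beta, xs, ys, tau)
    draws, accepted = [], 0
    for _ in range(n_iter):
        proposal = [b + rng.gauss(0.0, step) for b in beta]
        candidate = log_likelihood(proposal, xs, ys, tau)
        # Flat prior and symmetric proposal: accept with probability
        # min(1, L(proposal) / L(current)), evaluated on the log scale.
        if math.log(1.0 - rng.random()) < candidate - current:
            beta, current = proposal, candidate
            accepted += 1
        draws.append(list(beta))
    return draws, accepted / n_iter


def poisson_draw(lam, rng):
    """Knuth's multiplication method; adequate for small lam."""
    limit, count, product = math.exp(-lam), 0, rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


rng = random.Random(2021)
xs = [rng.randrange(5) for _ in range(150)]
ys = [1 + 2 * x + poisson_draw(3.0, rng) for x in xs]  # toy model, slope 2

draws, acc_rate = random_walk_metropolis(xs, ys, 0.5, 3000, 0.1, rng)
kept = draws[len(draws) // 2:]  # discard the first half as burn-in
slope_mean = sum(d[1] for d in kept) / len(kept)
```

In practice `step` would be adjusted until `acc_rate` is close to the target; with an informative prior, the log prior ratio is added to `candidate - current`.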

Besides the coherency property discussed in Sect. 2 for the posterior distribution \(\pi (\varvec{\beta }|\varvec{Y})\), it is important to verify the existence of the posterior distribution when the prior of \(\varvec{\beta }\) is improper, i.e.,

or, equivalently,

Moreover, it is preferable to check that the existence of posterior moments of the regression parameters is entirely unaffected by improper priors and the quantile index \(\tau \) ([29], among others), i.e.,

where \(r_j\) denotes the order of the moments of \(\beta _j\).

To this end, we have the following conclusion:

Theorem 3.1

Assume the posterior is given by Eq.(8) and \(\pi (\varvec{\beta }) \propto 1\), then all posterior moments of \(\varvec{\beta }\) in Eq.(9) exist.

The proof of Theorem 3.1 is available in the Supplementary Materials.

4 Bayesian Expectile Regression for Discrete Responses

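Instead of defining the \(\tau \)-th quantile of a response Y by \(\mathop {\hbox {argmin}}\limits _\mu E\left( \rho _\tau (Y-\mu )\right) \), [30] defined the \(\theta \)-th expectile of Y by

\[\mu = \mathop {\hbox {argmin}}\limits _{m} E\left( \rho _\theta ^{(E)}(Y-m)\right) \qquad (10)\]

in terms of an asymmetric quadratic loss function

\[\rho _\theta ^{(E)}(u) = u^2\, |\theta - I(u<0)|,\]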

where \(\theta \in (0,1)\) determines the degree of asymmetry of the loss function. Note that \(\theta \) is typically not equal to \(\tau \), although there is a one-to-one relationship between \(\tau \)-th quantile and \(\theta \)-th expectile ([31]).
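The asymmetric quadratic loss and the resulting sample expectile can be illustrated with a short sketch. It assumes the loss \(u^2|\theta - I(u<0)|\) of [30] and solves the first-order condition by iterating a weighted mean; the function names and toy data are hypothetical.

```python
def expectile_loss(u, theta):
    """Asymmetric quadratic loss |theta - I(u < 0)| * u**2."""
    weight = (1.0 - theta) if u < 0 else theta
    return weight * u * u


def sample_expectile(ys, theta, tol=1e-12, max_iter=1000):
    """Minimise sum_i expectile_loss(y_i - mu, theta) over mu.

    The first-order condition sum_i w_i * (y_i - mu) = 0, with weight
    theta above mu and 1 - theta otherwise, is solved by repeatedly
    recomputing the weighted mean.
    """
    mu = sum(ys) / len(ys)
    for _ in range(max_iter):
        weights = [theta if y > mu else 1.0 - theta for y in ys]
        new_mu = sum(w * y for w, y in zip(weights, ys)) / sum(weights)
        if abs(new_mu - mu) < tol:
            return new_mu
        mu = new_mu
    return mu


ys = [0, 1, 1, 2, 3, 3, 4, 7, 12]  # hypothetical counts
low, mid, high = (sample_expectile(ys, t) for t in (0.1, 0.5, 0.9))
```

With \(\theta = 0.5\) the loss is symmetric and the expectile is the sample mean, while other values of \(\theta \) shift it towards the corresponding tail.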

Corresponding to \(\rho _\theta ^{(E)}(u)\), we can define an asymmetric normal distribution (AND) whose density function is given by

\[f^{(E)}(y;\mu ,\theta ) = k \exp \left\{ -\rho _\theta ^{(E)}(y-\mu )\right\} ,\]

where \(k = \frac{2}{\sqrt{\pi }} \frac{\sqrt{\theta (1-\theta )}}{\sqrt{\theta }+\sqrt{1-\theta }}\), \(\mu \) and \(\theta \) are the location parameter and shape parameter, respectively.

The corresponding c.d.f. of the AND can be written as:

where \(\Phi (\cdot )\) denotes the c.d.f. of the standard normal distribution.

Therefore, based on the survival function \(S^{(E)}\left( y; \mu , \theta \right) \) \(= 1-F^{(E)}\left( y; \mu , \theta \right) \), we can derive the p.m.f. of the DAND by following the same procedure as in Eq.(4). In fact, note that

then, for \(y \in {\mathbb {Z}}\),

we have

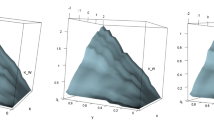

Now, if Y is a discrete random variable with unknown distribution function \(F_0(y)\), then given a sample \(\varvec{Y}= (Y_1, Y_2, \cdots , Y_n)\) of Y, the \(\theta \)-th expectile of Y is estimated by the minimization of the loss function \(\rho _\theta ^{(E)}\) or \(\mathop {\hbox {argmin}}\limits _{\mu } \sum _{i=1}^n \rho _\theta ^{(E)}\left( Y_i-\mu \right) \). Consider the DAND-based likelihood function:

We can see that the expectile \(\mu \) can also be estimated equivalently by the maximization of the likelihood function \(L^{(E)}(\varvec{Y}|\mu )\) in Eq.(14). In fact,

(According to the mean value theorem for integrals, \(\int ^b_a \phi (u) du=\phi (\xi ) (b-a)\) for some \(\xi \in [a,b]\),)

where \(\phi (\cdot )\) denotes the p.d.f. of the standard normal distribution.

Again, according to [28], a Bayesian inference of the expectile \(\mu \) can be developed. That is, a coherent posterior \(\pi (\mu |\varvec{Y})\) for the update of \(\pi (\mu )\) exists and is given by \(\pi (\mu |\varvec{Y}) \propto \pi (\mu )\, L^{(E)}(\varvec{Y}|\mu )\) with the likelihood function \(L^{(E)}\left( \varvec{Y}|\mu \right) \) in Eq.(14). Following a discussion similar to that in Sect. 3, we can prove that the posterior distribution under this Bayesian inference is proper with respect to improper priors for the regression parameter \(\varvec{\beta }\) in the expectile regression model \(\mu = \varvec{X}^T \varvec{\beta }\), if covariate information \(\varvec{X}\) is available. The corresponding proofs are available in the Supplementary Materials.
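The DAND construction can be sketched in the same way as the DALD. The snippet assumes the AND density \(k\exp \{-\rho _\theta ^{(E)}(y-\mu )\}\) with the constant k given above, from which the c.d.f. follows in closed form through \(\Phi \); the p.m.f. at an integer y is then \(F^{(E)}(y+1) - F^{(E)}(y)\), which equals the survival-function difference. Function names and parameter values are illustrative only.

```python
import math


def std_normal_cdf(z):
    """Standard normal c.d.f. expressed through the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def and_cdf(y, mu, theta):
    """C.d.f. of the asymmetric normal density k * exp(-rho_theta(y - mu))."""
    k = (2.0 / math.sqrt(math.pi)) * math.sqrt(theta * (1.0 - theta)) / (
        math.sqrt(theta) + math.sqrt(1.0 - theta))
    left_scale = k * math.sqrt(math.pi / (1.0 - theta))
    if y <= mu:
        return left_scale * std_normal_cdf(
            math.sqrt(2.0 * (1.0 - theta)) * (y - mu))
    mass_at_mu = 0.5 * left_scale  # value of the c.d.f. at y = mu
    right_scale = k * math.sqrt(math.pi / theta)
    return mass_at_mu + right_scale * (
        std_normal_cdf(math.sqrt(2.0 * theta) * (y - mu)) - 0.5)


def dand_pmf(y, mu, theta):
    """DAND probability at integer y: S(y) - S(y + 1) = F(y + 1) - F(y)."""
    return and_cdf(y + 1, mu, theta) - and_cdf(y, mu, theta)


mu, theta = 3.4, 0.3
total = sum(dand_pmf(y, mu, theta) for y in range(-60, 80))
split = and_cdf(mu, mu, theta)  # probability mass to the left of mu
```

The value `split` equals \(\sqrt{\theta }/(\sqrt{\theta }+\sqrt{1-\theta })\), which provides a quick check that the normalising constant k is applied consistently on both sides of \(\mu \).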

5 Numerical Analysis

In this section, we implement the proposed method to illustrate Bayesian quantile regression for discrete responses via Monte Carlo simulation studies and one real data analysis. In all numerical analyses, we follow standard practice by tuning the variance of the random-walk MH proposal distribution to obtain an acceptance rate of around 0.25–0.3. In every case, we discard the first 10000 of 20000 MCMC iterations and use the remaining 10000 values to summarise the posterior distribution of each of the regression coefficients in \(\varvec{\beta }\). All numerical experiments are carried out on one Intel Core i5-3470 CPU (3.20 GHz) processor with 8 GB RAM.

5.1 Simulated Example 1

Consider a simple regression model in which the sample \(Y_i (i=1, 2, \cdots , n)\) consists of counts drawn from either a Poisson distribution with parameter 3 or a Binomial distribution with parameters 20 and 1/5. 500 simulations for each case of \(\tau \in \{\)0.05, 0.25, 0.50, 0.75, 0.95\(\}\) and \(n \in \{200, 1000\}\) are performed. The quantile regression \(Q_\tau (Y)=\beta (\tau )\) is a constant depending on \(\tau \) only. Table 1 compares the posterior means with the true values of \(\beta (\tau )\) for each case under 500 simulations. Moreover, the expectile regression \(Expectile_\theta (Y) = \beta (\theta )\) is also a constant depending on \(\theta \) only. Table 2 compares the posterior means with the true values of \(\beta (\theta )\) obtained via an empirical estimation in Eq.(10) for different cases. Both Tables 1 and 2 show that the results obtained by the proposed Bayesian inference are reasonably accurate.
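For reference, the population quantiles of the two simulation distributions can be computed directly from their p.m.f.s. The sketch below uses the convention \(Q(\tau ) = \min \{y: F(y) \ge \tau \}\), which is an assumption on our part; the function names are hypothetical.

```python
import math


def poisson_pmf(k, lam):
    return math.exp(-lam) * lam ** k / math.factorial(k)


def binomial_pmf(k, n, p):
    return math.comb(n, k) * p ** k * (1.0 - p) ** (n - k)


def discrete_quantile(pmf, tau, support):
    """Smallest value in `support` whose cumulative probability reaches tau."""
    cumulative = 0.0
    for k in support:
        cumulative += pmf(k)
        if cumulative >= tau:
            return k
    raise ValueError("support does not reach the requested probability")


taus = (0.05, 0.25, 0.50, 0.75, 0.95)
poisson_q = {t: discrete_quantile(lambda k: poisson_pmf(k, 3.0), t, range(100))
             for t in taus}
binomial_q = {t: discrete_quantile(lambda k: binomial_pmf(k, 20, 0.2), t,
                                   range(21))
              for t in taus}
```

Because the c.d.f. is a step function, several values of \(\tau \) can share the same quantile, which is one reason why quantile estimation for counts is harder than in the continuous case.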

5.2 Simulated Example 2

We consider a discrete quantile linear regression:

where n and p denote the number of observations and independent variables, respectively, and \(\beta _k, k = 1,\ldots ,p\) are the regression parameters. Let the random error \(\varepsilon _i\) follow a Poisson distribution with parameter 3. 500 simulations for each case of \(\tau \in \{0.25, 0.50, 0.75\}\) and \(n \in \{300, 1500\}\) are performed.

Without loss of generality, let \(p=2\) in Eq.(15):

where covariate \(X_{1,i}\) is generated from a Geometric distribution with probability 1/4, and covariate \(X_{2,i}\) is generated from a Poisson distribution with parameter 2. We generate the training data with \((\beta _0, \beta _1, \beta _2) = (6, 2, -4)\) and \(\varepsilon _i \sim \mathrm {Pois}(3)\). 500 simulations for each case of \(\tau \in \{0.25, 0.50, 0.75\}\) and \(n_1 \in \{200, 1000\}\) are performed.
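A data-generating sketch for this design is given below. It assumes the additive form \(Y_i = \beta _0 + \beta _1 X_{1,i} + \beta _2 X_{2,i} + \varepsilon _i\) and a Geometric distribution counting failures before the first success (support 0, 1, 2, ...); both are assumptions, since the parameterisation is not spelled out here. The samplers and names are illustrative.

```python
import math
import random


def poisson_draw(lam, rng):
    """Knuth's multiplication method; adequate for small lam."""
    limit, count, product = math.exp(-lam), 0, rng.random()
    while product > limit:
        count += 1
        product *= rng.random()
    return count


def geometric_draw(p, rng):
    """Number of failures before the first success, by inversion."""
    u = 1.0 - rng.random()  # uniform on (0, 1]
    return int(math.floor(math.log(u) / math.log(1.0 - p)))


def simulate(n, beta, rng):
    """Return rows (x1, x2, y) with y = b0 + b1 * x1 + b2 * x2 + eps."""
    rows = []
    for _ in range(n):
        x1 = geometric_draw(0.25, rng)
        x2 = poisson_draw(2.0, rng)
        eps = poisson_draw(3.0, rng)
        rows.append((x1, x2, beta[0] + beta[1] * x1 + beta[2] * x2 + eps))
    return rows


rng = random.Random(15)
beta_true = (6, 2, -4)
data = simulate(1000, beta_true, rng)
residuals = [y - (6 + 2 * x1 - 4 * x2) for x1, x2, y in data]
```

Under this additive form, the conditional \(\tau \)-th quantile of Y is the linear predictor shifted by the \(\tau \)-th quantile of \(\mathrm {Pois}(3)\), so only the intercept varies with \(\tau \).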

Therefore, the corresponding discrete quantile function is of the form

Although we have chosen improper flat priors in Simulated example 1 above, one may use other priors for analysis in a relatively straightforward fashion. For example, following [32], a conditional conjugate prior distribution of Normal-Inverse Gamma form for the unknown parameters \(\varvec{\beta }\) can be obtained. Given \(\tau \in (0,1)\), for any \(a > 0\), the prior mean and covariance matrix for \(\varvec{\beta }\) are given, respectively, by

where \(\varvec{\beta }_{a}\) denotes the anticipated values, and \(g>0\) is a known scaling factor. Various values of g have been used in the context of variable selection and estimation. [33] performed variable selection using splines and suggested that the value of g be in the range \(10 \le g \le 1000\). Following the discussions in [32, 34], among others, we set \(g = 100\) in this paper. Thus, given \(\tau \) and \(\varvec{\beta }_{a\tau }\), the conditional prior distribution for \(\varvec{\beta }\) is readily available. Here, we suggest a particular form of a conjugate Normal-Inverse Gamma family for \(\varvec{\beta }\) given by

For simplicity, let \(E(\varvec{\beta })\) and \(Cov(\varvec{\beta })\) be the fitted values obtained by the semi-parametric jittering approach ([25]), as presented in Table 3.

Under the proposed Bayesian inference in Sect. 2, Table 4 reports the posterior mean, standard deviation and 95% credible interval for the regression parameters \(\beta _0(\tau ), \beta _1(\tau )\) and \(\beta _2(\tau )\), under 500 simulations with \(\varepsilon _i \sim \mathrm {Pois}(3)\), based on a conjugate Normal-Inverse Gamma prior for \(\varvec{\beta }\). Table 4 shows that, under different prior settings, the posterior estimates of the regression coefficients obtained from the working likelihood analysis are consistent.
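The kind of summary reported for each coefficient (posterior mean, standard deviation and 95% credible interval) can be computed from retained MCMC draws as sketched below. The equal-tailed interval based on order statistics is one common choice and is an assumption here; the function name and the stand-in draws are hypothetical.

```python
import math
import statistics


def summarise(draws, level=0.95):
    """Posterior mean, standard deviation and equal-tailed credible interval."""
    ordered = sorted(draws)
    n = len(ordered)
    alpha = (1.0 - level) / 2.0
    lower = ordered[math.floor(alpha * (n - 1))]
    upper = ordered[math.ceil((1.0 - alpha) * (n - 1))]
    return {
        "mean": statistics.fmean(draws),
        "sd": statistics.stdev(draws),
        "interval": (lower, upper),
    }


# Stand-in for post-burn-in draws of one coefficient (illustration only).
draws = [i / 100 for i in range(101)]
summary = summarise(draws)
```

Rounding the lower index down and the upper index up makes the reported interval slightly conservative for small numbers of draws.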

5.3 Analysis of Length of Stay (LoS) in Days

The data are extracted from the Worcester Heart Attack Study with 500 observations ([35]), which describes factors associated with trends over time in the incidence and survival rates following hospital admission for acute myocardial infarction. We aim to explore the relationship between the LoS and its associated factors such as age (years), gender, hr (initial heart rate in beats per minute), BMI (body mass index in \(kg/m^2\)), av3 (complete heart block), cvd (history of cardiovascular disease), sysbp (initial systolic blood pressure in mmHg) and diasbp (initial diastolic blood pressure in mmHg). Among the covariates, gender (0=Male, 1=Female), av3 (0=No, 1=Yes) and cvd (0=No, 1=Yes) are binary variables. The continuous covariates age, hr, BMI, sysbp and diasbp are detailed in Table 5. The distribution of LoS is skewed and one is usually more interested in long or short stays than in an average stay ([36, 37], among others). We aim to investigate how these factors affect the LoS, from short LoS to middle LoS and long LoS, so we carry out the analysis over a complete range of quantiles \(\tau \in \{0.05, 0.1, 0.25, 0.50, 0.75, 0.95\}\).

Therefore, we fit a quantile regression model for LoS of the form:

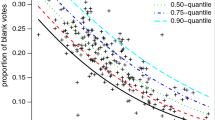

Table 6 shows the posterior mean of all regression parameters under selected quantiles. The boxplots in Fig. 1 also display the posterior mean of these regression parameters across \(\tau \)s.

The values of the posterior mean of \(\beta _1\), \(\beta _3\), \(\beta _7\) and \(\beta _8\) in Table 6 clearly indicate that age and the initial heart rate, systolic blood pressure and diastolic blood pressure have little effect on LoS (days), particularly on the low quantiles of its distribution, whereas the values of the posterior mean of \(\beta _2\), \(\beta _5\) and \(\beta _6\) show that gender, complete heart block and history of cardiovascular disease are the factors that affect the LoS most. Specifically, female patients generally tend to stay longer than male patients once admitted to hospital with these conditions. Similarly, patients with complete heart block or a history of cardiovascular disease stay much longer than patients without these problems, particularly at the upper quantiles corresponding to very long stays.

Based on the posterior mean of \(\beta _4\) in Table 6, the effect of BMI on LoS is small but generally negative, except at the median and the 95% quantile of the distribution of LoS.

If we compare the fitted median quantile regression of LoS with a Poisson mean regression below,

we can see that both the proposed median model and the Poisson mean model lead to consistent conclusions, but Poisson regression cannot explore short and long LoS.

Unlike the jittering method for counts ([25]), the proposed method in this paper is a density function based Bayesian inference. However, if we compare our findings with the results from the count model of [25] in Table 7, we can draw similar conclusions to those from Table 6. The difference is that the proposed method shows that complete heart block or a history of cardiovascular disease increases long LoS significantly, which agrees with real situations and is much more pronounced than what is found by the count model of [25].

6 Discussion

Discrete responses or count data are common in many disciplines. Regression analysis of discrete responses has been an active and promising area of research. Data with discrete responses may exhibit skewness, fat tails and leptokurtosis. Quantile regression is a more suitable tool for analysing this type of data than mean regression. We propose Bayesian quantile regression and Bayesian expectile regression for discrete responses. This is achieved by using a discrete asymmetric Laplace distribution and a discrete asymmetric normal distribution, respectively, to form the likelihood function. The method is shown to be robust numerically and coherent theoretically. The Bayesian approach is fairly easy to implement and provides complete univariate and joint posterior distributions of the parameters of interest. The posterior distributions of the unknown model parameters are obtained by using the M-H algorithm implemented in R. We have shown promising results through Monte Carlo simulation studies and one real data analysis.

References

Efron B (1991) Regression percentiles using asymmetric squared error loss. Stat Sinica 1:93–125

Koenker R (2005) Quantile regression. Cambridge University Press, Cambridge

Waltrup LS, Sobotka F, Kneib T et al (2015) Expectile and quantile regression: David and Goliath? Stat Modell 15(5):433–456

Ehm W, Gneiting T, Jordan A et al (2016) Of quantiles and expectiles: consistent scoring functions, Choquet representations and forecast rankings. J R Stat Soc Ser B 78(3):505–562

Yu K, Lu Z, Stander J (2003) Quantile regression: applications and current research areas. J R Stat Soc Ser D (The Statistician) 52(3):331–350

Yang Y, Wang HJ, He X (2016) Posterior inference in Bayesian quantile regression with asymmetric Laplace likelihood. Int Stat Rev 84(3):327–344

Yu K, Moyeed RA (2001) Bayesian quantile regression. Stat Probab Lett 54(4):437–447

Chen C, Yu K (2009) Automatic Bayesian quantile regression curve fitting. Stat Comput 19(3):271–281

Yu K (2002) Quantile regression using RJMCMC algorithm. Comput Stat Data Anal 40(2):303–315

Klakattawi HS, Vinciotti V, Yu K (2018) A simple and adaptive dispersion regression model for count data. Entropy 20(2):142

Manski CF (1985) Semiparametric analysis of discrete response: asymptotic properties of the maximum score estimator. J Econ 27(3):313–333

Kordas G (2006) Smoothed binary regression quantiles. J Appl Econ 21(3):387–407

Horowitz JL (1992) A smoothed maximum score estimator for the binary response model. Econ J Econ Soc 60:505–531

Aristodemou K, He J, Yu K (2019) Binary quantile regression and variable selection: a new approach. Econ Rev 38(6):679–694

Yu K, Stander J (2007) Bayesian analysis of a Tobit quantile regression model. J Econ 137(1):260–276

Benoit DF, Van den Poel D (2012) Binary quantile regression: a Bayesian approach based on the asymmetric Laplace distribution. J Appl Econ 27(7):1174–1188

Benoit DF, Alhamzawi R, Yu K (2013) Bayesian lasso binary quantile regression. Comput Stat 28(6):2861–2873

Rahman MA (2016) Bayesian quantile regression for ordinal models. Bayesian Anal 11(1):1–24

Rahman MA, Karnawat S (2019) Flexible Bayesian quantile regression in ordinal models. In: Jeliazkov I, Tobias JL (eds) Topics in identification, limited dependent variables, partial observability, experimentation, and flexible modeling: part b. Emerald Publishing Limited, Bingley

Alhamzawi R, Ali HTM (2018) Bayesian quantile regression for ordinal longitudinal data. J Appl Stat 45(5):815–828

Rahman MA, Vossmeyer A (2019) Estimation and applications of quantile regression for binary longitudinal data. In: Jeliazkov I, Tobias JL (eds) Topics in identification, limited dependent variables, partial observability, experimentation, and flexible modeling: part b. Emerald Publishing Limited, Bingley

Omata Y, Katayama H, Arimura TH (2017) Same concerns, same responses? A Bayesian quantile regression analysis of the determinants for supporting nuclear power generation in Japan. Environ Econ Policy Stud 19(3):581–608

Ojha M, Rahman MA (2020) Do online courses provide an equal educational value compared to in-person classroom teaching? Evidence from US survey data using quantile regression (July 14, 2020)

Kneib T (2013) Beyond mean regression. Stat Modell 13(4):275–303

Machado JAF, Silva JS (2005) Quantiles for counts. J Am Stat Assoc 100(472):1226–1237

Canale A, Dunson DB (2011) Bayesian kernel mixtures for counts. J Am Stat Assoc 106(496):1528–1539

Roy D (1993) Reliability measures in the discrete bivariate set-up and related characterization results for a bivariate geometric distribution. J Multivar Anal 46(2):362–373

Bissiri PG, Holmes C, Walker SG (2016) A general framework for updating belief distributions. J R Stat Soc Ser B (Stat Methodol) 78(5):1103–1130

Fernández C, Steel MF (1998) On Bayesian modeling of fat tails and skewness. J Am Stat Assoc 93(441):359–371

Newey WK, Powell JL (1987) Asymmetric least squares estimation and testing. Econometrica 55:819–847

Yao Q, Tong H (1996) Asymmetric least squares regression estimation: a nonparametric approach. J Nonparametr Stat 6(2–3):273–292

Alhamzawi R, Yu K (2013) Conjugate priors and variable selection for Bayesian quantile regression. Comput Stat Data Anal 64:209–219

Smith M, Kohn R (1996) Nonparametric regression using Bayesian variable selection. J Econ 75(2):317–343

Chen RB, Chu CH, Lai TY et al (2011) Stochastic matching pursuit for Bayesian variable selection. Stat Comput 21(2):247–259

Hosmer DW, Lemeshow S, May S (2008) Model development. In: Applied survival analysis: regression modeling of time-to-event data, 2nd edn, pp 132–168

Borghans I, Hekkert KD, den Ouden L et al (2014) Unexpectedly long hospital stays as an indicator of risk of unsafe care: an exploratory study. BMJ Open 4(6):e004773

Wolkewitz M, Zortel M, Palomar-Martinez M et al (2017) Landmark prediction of nosocomial infection risk to disentangle short-and long-stay patients. J Hosp Infect 96(1):81–84

Appendices

Appendix A. Proof of Theorem 3.1

A parametrization of the DALD in Eq.(5) leads to the following alternative form,

where the parameters p and q (\( 0< p,q <1\)) are related to \(\tau \) via the relationships \(p=\exp \left\{ -\tau \right\} \) and \(q=\exp \left\{ -(1-\tau )\right\} \).

Lemma A.1

The p.m.f. \(\phi (t)\) defined in Eq.(A1) is bounded by \( p^{|t|}(1-q)\log p \) and \(q^{|t|}(1-p)\log q\).

Proof of Lemma A.1

Expand \(\phi (t)\) as a mixture of g, consider \( 0< q \le p < 1\),

Also,

Now, it is known that \(g(t;a) = a^{|t|}(a-1)\log a\) with \(0<a<1\) is an increasing function of t. Therefore, \(\phi (t)\) has upper bound \(h(p,q)q^{|t|}\) and lower bound \(h(q,p)p^{|t|}\). The same procedure may be easily adapted to \(q \ge p\). \(\square \)

Lemma A.2

For any constant \(a (0<a <1)\) and sample size \(n > m\),

Proof of Lemma A.2

Without loss of generality, consider \(m = 1\) for simplicity; then \(\varvec{X}_i^T \varvec{\beta }= \beta _0 + \beta _1 X_{1i}\),

Since the double integral \(\int _{{\mathbb {R}}^2} |U|^{r_0} \exp (-|U+V+c_1|) |V_1|^{r_1} \exp (-|U+V+c_2|) dU dV\) is finite for any constants \(c_1, c_2\), \(r_0 \ge 0\) and \(r_1 \ge 0\), Lemma A.2 is proved. \(\square \)

Theorem 3.1 below establishes that in the absence of any realistic prior information we could legitimately use an improper uniform prior distribution for all the components of \(\varvec{\beta }\).

Theorem 3.1 Assume the posterior is given by Eq.(8) and \(\pi (\varvec{\beta }) \propto 1\), then all posterior moments of \(\varvec{\beta }\) in Eq.(9) exist.

Proof of Theorem 3.1

We need to prove that

is finite. This follows from Lemma A.1 and Lemma A.2. \(\square \)

Appendix B. Property in Sect. 4

Under Bayesian inference of the expectile \(\mu \) for the discrete random variable Y, it can be proved that the posterior distribution is proper with respect to improper priors for the unknown parameters.

Similar to Lemma A.1, the p.m.f \(\phi ^{(E)}(t)\) defined in Eq.(13) is bounded. Consider \(\theta \le 0.5\),

Also,

where \(k = \frac{2}{\sqrt{\pi }} \frac{\sqrt{\theta (1-\theta )}}{\sqrt{\theta }+\sqrt{1-\theta }}\), \(\Phi (\cdot )\) denotes the c.d.f. of the standard normal distribution.

Now, it is known that \(\sqrt{\frac{\theta }{1-\theta }} \le \sqrt{\frac{1-\theta }{\theta }}\) for \(\theta \le 0.5\). Therefore, \(\phi ^{(E)}(t)\) has upper bound \(k \sqrt{\frac{2\pi (1-\theta )}{\theta }} \Phi '(t)\) and lower bound \(k \sqrt{\frac{2\pi \theta }{1-\theta }} \Phi '(t)\) . The same procedure may be easily adapted to \(\theta \ge 0.5\).

Then, for \(n > m\), we also have

Similarly, consider \(m = 1\) for simplicity, then \(\varvec{X}_i^T \varvec{\beta }= \beta _0 + \beta _1 X_{1i}\),

It is well known that this double integral is finite for any constants.

Therefore, assume the likelihood is given by Eq.(8) and \(\pi (\varvec{\beta }) \propto 1\), then it can be proved that all posterior moments of \(\varvec{\beta }\) in Eq.(9) exist.

Appendix C. Worcester Heart Attack Study Data Set

The data set used to illustrate the proposed method in Sect. 5.3 is available in the R package smoothHR.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liu, X., Hu, X. & Yu, K. A Discrete Density Approach to Bayesian Quantile and Expectile Regression with Discrete Responses. J Stat Theory Pract 15, 73 (2021). https://doi.org/10.1007/s42519-021-00203-1
