Abstract

In this manuscript, the authors investigate and test in depth a modern technique that identifies and locates damage in a structure starting from a photo, rapidly and non-destructively, without any physical interaction with the analyzed structure. The suitability of the technique is tested on four statically deformed beams, in which notches represent the defects. The core of the proposed method is the correlation between the curvature that each beam exhibits under load and its flexural stiffness. The proposed methodology consists of taking a photo of the deflected beam; the acquired photo is then processed with specifically designed image processing tools, and the second derivative of the beam axis is estimated using two distinct numerical differentiator filters (the Lanczos filter and Gaussian wavelets), along with suitable processing to reduce border distortions. The tests demonstrate that, with an appropriate static deflection amplitude, the proposed procedure can accurately detect the position of the notch; a sensitivity analysis is also conducted by testing the procedure with different beam thicknesses, notch positions, and static deflection amplitudes. Although the authors recognize that the technique can generally require considerably large displacements, the results seem promising.

1 Introduction

Structural health monitoring (SHM) is crucial to ensuring the safety and reliability of various engineering structures, such as buildings and bridges. Detecting, assessing, and managing structural degradation or damage is essential for preventing catastrophic failures and minimizing risks. SHM techniques generally consist of collecting data on structural behavior, load distribution, vibrations, and material properties, which are then analyzed with sophisticated algorithms and models to detect any abnormalities or signs of deterioration [1].

The spread of computer vision has revolutionized the field by providing advanced tools for dense data acquisition, real-time monitoring, and assessment of structural integrity. The growing interest in this approach is reflected in the proliferation of review articles in the literature over the years [2,3,4,5,6,7].

These techniques are widely applied in civil engineering, where structural displacements are relatively large and natural frequencies are low; this makes it easier to acquire valuable data via computer vision. Vision-based algorithms are employed for motion tracking of large structures [8,9,10,11] (even in real time [12]), modal analysis [13, 14], system identification and model updating [15], and damage detection [16].

Computer vision offers several advantages for SHM [2, 4]: vision-based techniques are generally inexpensive, contactless, and non-destructive, and they allow structural integrity to be assessed without installing sensors directly on the structure, unlike traditional techniques that rely on data collected through accelerometers or strain gauges. Given these advantages, it is not surprising that vision-based SHM techniques are not limited to civil engineering structures but can also be extended to mechanical components (such as beam-type structures) whose operating conditions differ significantly from those in civil engineering, for example small displacements and high vibration frequencies.

A static approach is adopted in [17, 18], where a computer vision method for measuring the in-plane displacement field of cantilever beams is presented. The purpose of these articles is to assess structural health starting from the static deformed shape of the beam (acquired by the vision-based algorithm) by exploiting different damage detection techniques: the second-derivative, line-segment, and voting algorithms in [17], and the fractal dimension, wavelet transform, and roughness methods in [18]. The authors also used a static approach in [19] to carry out damage detection on an epoxy glass beam by acquiring its statically deformed axis from a photo and then processing it with the wavelet method.

The possibility of performing vision-based modal analysis for structural health monitoring has also been extensively tested. In [20], the authors introduce and test in laboratory experiments a novel approach to assess structural vibration using high-resolution images and sub-pixel edge identification. In [21, 22], a data fusion approach combining accelerometer data and photo acquisition is implemented to obtain mode shapes. In [23], a 2D projective method is employed to acquire structural dynamic data, including mode shapes, natural frequencies, and damping ratios. In [11], an algorithm for dense full-field displacement measurement is developed, and its applicability to modal identification is tested on a set of simply supported beams; damage detection is then performed via an MSC-based index. Finally, in [24, 25], a wavelet-based damage detection technique is applied to the elastic line of a vibrating beam extracted by edge detection.

In this study, the authors aim to further explore the potential of the vision-based method for SHM and damage detection introduced in [19], testing the technique on four beam specimens with different characteristics (e.g., notch position and thickness). To this end, an algorithm was first developed to extract the deformed axis of a deflected beam from a photo. The coordinates of the extracted axis points were then used as input to the damage detection procedure, which is based on the correlation between the curvature that the beam exhibits under load and its flexural stiffness [26]: damage causes a localized change in the flexibility of the beam, which manifests as an abrupt variation in the second derivative of the transverse deflection. The proposed methodology can therefore be summarized in two parts: first, the acquisition and processing of the photo to extract the axis of the deformed beam; second, damage detection through the estimation of the second derivative. Finally, the effectiveness of the proposed method was tested in the laboratory on beams whose structural integrity had already been evaluated in a previous study [27]. This allowed a comparison between a time-consuming data acquisition method (a PSD-triangular laser sensor) and a quick vision-based one, as well as several sensitivity analyses giving an overview of how the method behaves depending on the structure to which it is applied, since the characteristics of the beams under examination may influence damage detectability.
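The link between flexural stiffness and curvature can be illustrated with a minimal sketch. It assumes an Euler-Bernoulli cantilever clamped at x = 0 and loaded by a tip force P, with a rectangular b × h section; as a simplifying assumption, the notch is modelled as a short segment of width d in which the depth drops from h to h − a. All parameter values are hypothetical and are not taken from Table 1. Since \(\kappa(x)=M(x)/(EI(x))\), the curvature inside the notch is amplified by the factor \((h/(h-a))^3\), which is the kind of localized variation the second-derivative estimate is meant to reveal.

```python
import numpy as np


def notched_cantilever_curvature(x, L, P, E, b, h, x_notch, d, a):
    """Curvature kappa(x) = M(x) / (E I(x)) of a tip-loaded cantilever.

    Hypothetical, simplified notch model: over a segment of width d centred
    at x_notch the section depth drops from h to h - a.
    """
    M = P * (L - x)                      # bending moment, clamp at x = 0
    I_intact = b * h**3 / 12.0           # second moment of area, intact section
    I_notch = b * (h - a)**3 / 12.0      # reduced section inside the notch
    inside = np.abs(x - x_notch) <= d / 2.0
    I = np.where(inside, I_notch, I_intact)
    return M / (E * I)


# Hypothetical aluminium-like strip, 500 mm long, notch at 0.53 L with a/h = 0.2
L, P, E, b, h = 0.5, 1.0, 70e9, 0.02, 0.002
x = np.linspace(0.0, L, 1001)
kappa = notched_cantilever_curvature(x, L, P, E, b, h,
                                     x_notch=0.53 * L, d=0.001, a=0.2 * h)

i = np.argmin(np.abs(x - 0.53 * L))                  # sample at the notch centre
kappa_intact = P * (L - x[i]) / (E * b * h**3 / 12.0)
print(kappa[i] / kappa_intact)  # close to 1 / (1 - 0.2)**3 = 1.953125
```

Outside the notch the curvature varies linearly with x, so the notch appears as a narrow spike on a smooth trend; real notches also introduce local stress concentrations that this beam-theory model ignores.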

2 Materials and Methods

The experimental setup (Fig. 1) consisted of a frame in which the beam was clamped at one end, two operating screws to impose the deflected shape, and a consumer-grade camera (Canon EOS 1200D) mounted on a tripod. Black cardboard was used as the background to make the beam more visible and to improve segmentation.

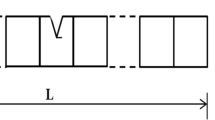

The proposed methodology was tested on four different beams (Fig. 1), whose characteristics are summarized in Table 1. In beams 1, 3, and 4, a single notch (as specified in Table 1) was made at a different distance from the clamped section to simulate damage. In beam 2, on the other hand, three notches were made to carry out a sensitivity analysis aimed at identifying the most sensitive damage location, thus evaluating the influence of the beam curvature on damage detectability. Furthermore, as indicated in Table 1, each beam had a distinct thickness, which made it possible to explore the link between thickness and damage detectability.

To evaluate the suitability of the vision-based data acquisition method compared with the PSD-triangular laser sensor (whose results are available in [27]), the beams were statically deformed to emulate their second mode shape as measured in [27] and displayed in Fig. 2. The static shape obtained in this way does not perfectly match the dynamic one, because inertial effects are neglected. However, what matters is that the two techniques are compared on deflected shapes with comparable displacements.

The deflection was emulated by setting the maximum beam displacements equal to those measured in [27]; this initial deflection was then amplified by factors of 2, 4, 6, and 10 to test the sensitivity of the method to the displacement magnitude.

As can be seen in Table 1, the depth (a/h) and the width (d) of the notch are the same for all the analyzed beams. Although the position of the defect appears to differ from beam to beam, this is true only geometrically: the notch position is modally the same for all the beams, since it is located at a distance of 0.53L (L being the beam length) from the clamped section, which corresponds to the point where the curvature of the second mode shape is maximum.
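The 0.53L figure can be checked against Euler-Bernoulli theory for a uniform, undamaged clamped-free beam; this is a minimal sketch under that assumption (the static shapes used in the tests only emulate this mode). The second mode shape is \(\phi(x)=\cosh\beta x-\cos\beta x-\sigma(\sinh\beta x-\sin\beta x)\), with \(\beta_2 L\approx 4.694\) from \(\cos\beta L\cosh\beta L=-1\), so its curvature is proportional to \(\cosh\beta x+\cos\beta x-\sigma(\sinh\beta x+\sin\beta x)\). The script finds the interior extremum of this curvature by bisection on its derivative. At the clamp the curvature magnitude (\(2\beta^2\)) is larger, so "maximum" is read here as the extremum away from the clamped section.

```python
import math


def bisect(f, lo, hi, tol=1e-12):
    """Plain bisection; f(lo) and f(hi) must have opposite signs."""
    f_lo = f(lo)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Second root of the clamped-free frequency equation cos(bL) cosh(bL) = -1 (L = 1)
beta = bisect(lambda z: math.cos(z) * math.cosh(z) + 1.0, 4.0, 5.0)
sigma = (math.cosh(beta) + math.cos(beta)) / (math.sinh(beta) + math.sin(beta))


def curvature(z):
    """phi''(x) / beta**2 for the second mode, with z = beta * x."""
    return math.cosh(z) + math.cos(z) - sigma * (math.sinh(z) + math.sin(z))


def curvature_slope(z):
    """d/dz of curvature(z); it vanishes at the curvature extrema."""
    return math.sinh(z) - math.sin(z) - sigma * (math.cosh(z) + math.cos(z))


z_peak = bisect(curvature_slope, 1.0, 3.5)  # interior extremum, away from the clamp
x_peak = z_peak / beta                       # position as a fraction of L
print(f"beta2*L = {beta:.4f}, interior curvature extremum at x = {x_peak:.3f} L")
print(f"curvature there: {curvature(z_peak):.3f}, at the clamp: {curvature(0.0):.3f}")
```

The script should report \(\beta_2 L\approx 4.694\) and an interior extremum close to 0.53L, consistent with the notch placement described above.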

To obtain undistorted photos, camera calibration was carried out with the Matlab Camera Calibrator (Note 1).

The segmentation algorithm was implemented in Matlab. Its output is a mask (Fig. 3) in which the beam is the foreground.
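The segmentation algorithm itself is not specified here. As a hypothetical stand-in, and not necessarily the authors' method, a global Otsu threshold is a natural choice when a bright beam is photographed against a dark background such as the black cardboard used in this setup. The sketch assumes an 8-bit grayscale image and returns a boolean mask in which the beam is the foreground; the function names are illustrative.

```python
import numpy as np


def otsu_threshold(gray):
    """Otsu's threshold for an 8-bit grayscale image (class 0: values <= t)."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    p = hist / hist.sum()
    omega = np.cumsum(p)                  # probability of class 0
    mu = np.cumsum(p * np.arange(256))    # cumulative mean of class 0
    mu_total = mu[-1]
    with np.errstate(divide="ignore", invalid="ignore"):
        between_var = (mu_total * omega - mu) ** 2 / (omega * (1.0 - omega))
    between_var[~np.isfinite(between_var)] = 0.0  # empty classes
    return int(np.argmax(between_var))


def segment_beam(gray):
    """Boolean mask with the (bright) beam as foreground."""
    return gray > otsu_threshold(gray)


# Synthetic example: dark background with a bright horizontal strip
img = np.full((60, 200), 15, dtype=np.uint8)
img[28:33, :] = 210
mask = segment_beam(img)
print(mask.sum(), "foreground pixels")
```

On real photos, uneven lighting or reflections may call for a local threshold or morphological clean-up before the axis extraction step.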

The beam axis extraction algorithm was applied to this mask; the procedure employs a 2\({\Delta }_{m}\) × 2\({\Delta }_{n}\) pixel window (the green box in Fig. 3) that moves along the beam profile by one pixel per step, where 2\({\Delta }_{m}\) and 2\({\Delta }_{n}\) are the window width and height, respectively. At every iteration, the axis point coordinates (in pixels) are calculated with the following equation:
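
\[ {m}_{c}=\frac{{m}_{\mathrm{max}}+{m}_{\mathrm{min}}}{2},\qquad {n}_{c}=\frac{\sum_{i}{I}_{i}\,{n}_{i}}{\sum_{i}{I}_{i}} \tag{1} \]
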
where \({m}_{\mathrm{max}}\) and \({m}_{\mathrm{min}}\) are the coordinates, along the beam axis direction, of the extreme pixels of the window, \({n}_{i}\) is the coordinate of the \(i\)th pixel in the transversal direction, and \({I}_{i}\) is its intensity. A scale factor S [8] then converts pixel coordinates into physical units; for each beam, S was calculated from the beam length in millimeters and its length in pixels as \(S={l}_{\mathrm{mm}}/{l}_{\mathrm{pixel}}\). For example, photos with a resolution of 5184 × 3456 pixels yielded a scale factor of approximately S ≈ 0.1 mm/pixel for the 500 mm long beam. The scale factor is linked to the sensitivity of the visual sensor: a higher resolution leads to a smaller scale factor, thereby increasing the sensitivity of the sensor. In other words, the performance of the technique is likely to depend on the choice of camera.
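A minimal sketch of the window-based axis extraction, assuming the image is stored with rows as the transversal coordinate n and columns as the axial coordinate m, and assuming that the window is re-centred vertically on the previous axis estimate at each step (the source does not say how the window follows the beam). The function names, the starting row, and the half-sizes `dm` and `dn` (standing for \({\Delta }_{m}\) and \({\Delta }_{n}\)) are hypothetical. Each step takes the midpoint of the window columns as the axial coordinate and the intensity-weighted mean row as the transversal one; \(S={l}_{\mathrm{mm}}/{l}_{\mathrm{pixel}}\) then converts pixels to millimetres.

```python
import numpy as np


def extract_axis(img, n_start, dm=5, dn=20):
    """Track the beam axis with a 2*dm x 2*dn window moving one pixel per step.

    img: 2D array, rows = transversal coordinate n, columns = axial coordinate m.
    Returns axial (m) and transversal (n) axis coordinates in pixels.
    """
    rows, cols = img.shape
    n_prev = float(n_start)
    ms, ns = [], []
    for m_min in range(cols - 2 * dm + 1):
        m_max = m_min + 2 * dm - 1
        r0 = max(int(round(n_prev)) - dn, 0)
        r1 = min(int(round(n_prev)) + dn, rows)
        window = img[r0:r1, m_min:m_max + 1].astype(float)
        weights = window.sum(axis=1)          # total intensity I_i per row n_i
        if weights.sum() == 0:                # no foreground in the window
            continue
        n_c = np.dot(weights, np.arange(r0, r1)) / weights.sum()
        ms.append(0.5 * (m_max + m_min))
        ns.append(n_c)
        n_prev = n_c                          # re-centre the next window
    return np.array(ms), np.array(ns)


def scale_factor(l_mm, l_pixel):
    """S = l_mm / l_pixel, in mm per pixel."""
    return l_mm / l_pixel


# Synthetic mask: a gently sloping beam, 3 pixels thick
mask = np.zeros((80, 300))
for m in range(300):
    n0 = 30 + m // 20
    mask[n0:n0 + 3, m] = 1.0
m_px, n_px = extract_axis(mask, n_start=31)
S = scale_factor(500.0, 5000.0)  # hypothetical: 500 mm beam spanning 5000 px
y_mm = (n_px - n_px[0]) * S      # deflection relative to the first axis point
```

With a 500 mm beam spanning about 5000 pixels, S = 0.1 mm/pixel, matching the order of magnitude quoted above; a sub-pixel centroid is what makes such a coarse scale usable for curvature estimation.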

The procedure illustrated above allowed the extraction of the bent beam axes y(x) (Fig. 4), which were subsequently processed to estimate their second derivatives.

In particular, Figs. 5, 6, 7, and 8 show the second derivatives estimated with two distinct differentiator filters: the left panels show the estimate obtained with the Lanczos filter [28, 29], while the right panels show the estimate obtained with the continuous wavelet transform [29]. In both cases, the second derivative in Eq. (2) was estimated by convolving (operator \(*\) in Eq. 2) the bent beam axis y(x) twice with the respective first-derivative filter (h1 in Eq. 2). Before each convolution step, the physical signal was extended using the Rotation option to reduce distortions at the edges [30]:

\[ {y}^{\prime\prime}(x)\approx {h}_{1}*\left({h}_{1}*y\right)(x) \tag{2} \]

The lengths of the filters were calibrated through their dilation parameters so that both filters provided similar rejection of experimental and numerical noise; a dilation parameter a = 5 was chosen to process the data.
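The double-convolution scheme can be sketched as follows. This is a minimal sketch, not the authors' implementation: the first-derivative filter h1 is taken to be the Lanczos low-noise (least-squares) differentiator over 2n + 1 samples, \(f'(x_i)\approx 3\sum_{k=1}^{n}k\,(f_{i+k}-f_{i-k})/(\Delta x\,n(n+1)(2n+1))\), with the half-width n standing in for the dilation parameter (n = 5 here, a hypothetical mapping). The Rotation extension is interpreted as a point reflection of the signal about each end value, which NumPy provides through `np.pad(..., mode="reflect", reflect_type="odd")`. The Gaussian-wavelet variant is not shown.

```python
import numpy as np


def lanczos_d1_kernel(n, dx):
    """Lanczos low-noise first-derivative filter with 2n + 1 taps."""
    k = np.arange(-n, n + 1)
    c = 3.0 / (dx * n * (n + 1) * (2 * n + 1))
    # np.convolve flips the kernel, so -c*k yields c * sum_k k * y[i + k]
    return -c * k


def rotation_extend(y, n):
    """Extend y by n samples per side via point reflection about the end values."""
    return np.pad(y, n, mode="reflect", reflect_type="odd")


def second_derivative(y, dx, n=5):
    """Convolve twice with the first-derivative filter, extending before each pass."""
    h1 = lanczos_d1_kernel(n, dx)
    dy = np.convolve(rotation_extend(y, n), h1, mode="valid")
    return np.convolve(rotation_extend(dy, n), h1, mode="valid")


# Usage on a synthetic parabola, whose exact second derivative is 2
x = np.linspace(0.0, 1.0, 201)
dx = x[1] - x[0]
d2 = second_derivative(x**2, dx, n=5)
print(d2[100])
```

The point reflection preserves the end value and slope, so straight segments are differentiated exactly up to the borders, while the first and last 2n samples of a curved signal remain the most affected by the extension. On an axis extracted from a photo, a notch would appear as a local peak superimposed on the smooth curvature trend.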

3 Results

The results of the experimental investigations were analyzed beam by beam and compared with those in [27]. It was observed that, at the reference deformation, the displacements were too small for the proposed method to detect the damage, at least with the experimental setup used.

Intuitively, the larger the deformation, and therefore the curvature, the more readily the damage can be detected. To verify this, a sensitivity analysis was conducted, and the results were similar for all the beams: for beams 2, 3, and 4, detectability improved as the amplification factor increased. For these three beams, the damage could not be detected with an amplification factor lower than 6, with one exception: in beam 2 (Fig. 6), the first notch (located 99 mm from the clamped end) could be identified with an amplification factor of 1 (this result is discussed further below). The aluminum beam (beam 1, Fig. 5) is also an exception, since its damage is not detectable for any amplification factor.

This confirms the correlation between beam thickness and damage detectability already evaluated in [26], which becomes even more evident with the method illustrated in this paper. Indeed, comparing all the beams, the peak in the second derivative was largest for beam 2 (Fig. 6), the thickest one, while it could not even be singled out for the thinnest beam (beam 1, Fig. 5). Moreover, a comparison between beam 3 and beam 4 (Figs. 7 and 8, respectively), which are made of the same material and differ only in thickness, showed that the peak was more visible in beam 4, which is twice as thick as beam 3.

Finally, beam 2 demonstrates the influence of curvature on damage detectability. As can be seen in Fig. 6 for an amplification factor of 10 (chosen because it allows all three notches to be clearly located), the third notch is the most visible, while the other two are located where the curvature is smaller. This confirms the findings of [27], which show that the capability of the second derivative to detect damage is modulated by the curvature of the system under test, and that damage located near points of smaller curvature can be poorly identified. Nonetheless, with the method illustrated in this work, the notch located near the point of smallest curvature is the only one that is always visible, even with an amplification factor of 1.

4 Conclusions

The purpose of this work was to evaluate the suitability of a vision-based data acquisition method for damage detection in comparison with previous analyses. The study carried out so far has involved only the specimens already available in the laboratory from the previous study; indeed, the objective of this preliminary study was to understand the potential of the technique and to evaluate whether it is worth investigating further.

The results showed that the proposed vision-based method needs larger displacements than a PSD-triangular laser sensor to acquire valuable data. Nevertheless, the results are rather encouraging, because this technique acquires a dense full-field displacement with minimal effort compared with [27], which involved the time-consuming acquisition of a large number of discrete points: a complete acquisition with the PSD-triangular laser sensor took almost 10 h, against a few minutes for photo acquisition and processing.

Moreover, as in [27], the curvature and the thickness of the beam influence the detectability of the damage: the larger the curvature and the thickness, the easier it is to locate the damage, with the exception of the first notch on beam 2.

Future research will consider the dynamic shapes of the beam excited by a shaker or even by environmental forces. Moreover, the authors will investigate why the first notch on beam 2, located at the position of smallest curvature, can be detected starting from the lowest amplification factor.

Data availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Notes

MATLAB 9.13.0 (The MathWorks, Natick, MA, USA 2022).

References

Li, H.-N., Ren, L., Jia, Z.-G., Yi, T.-H., Li, D.-S.: State-of-the-art in structural health monitoring of large and complex civil infrastructures. J. Civ. Struct. Health Monit. 6, 3–16 (2016). https://doi.org/10.1007/s13349-015-0108-9

Dong, C.-Z., Catbas, F.N.: A review of computer vision–based structural health monitoring at local and global levels. Struct. Health Monit. 20, 692–743 (2021). https://doi.org/10.1177/1475921720935585

Spencer, B.F., Hoskere, V., Narazaki, Y.: Advances in computer vision-based civil infrastructure inspection and monitoring. Engineering 5, 199–222 (2019). https://doi.org/10.1016/j.eng.2018.11.030

Feng, D., Feng, M.Q.: Computer vision for SHM of civil infrastructure: from dynamic response measurement to damage detection—a review. Eng. Struct. 156, 105–117 (2018). https://doi.org/10.1016/j.engstruct.2017.11.018

Beberniss, T.J., Ehrhardt, D.A.: High-speed 3D digital image correlation vibration measurement: recent advancements and noted limitations. Mech. Syst. Signal Process. 86, 35–48 (2017). https://doi.org/10.1016/j.ymssp.2016.04.014

Baqersad, J., Poozesh, P., Niezrecki, C., Avitabile, P.: Photogrammetry and optical methods in structural dynamics—a review. Mech. Syst. Signal Process. 86, 17–34 (2017). https://doi.org/10.1016/j.ymssp.2016.02.011

Zona, A.: Vision-based vibration monitoring of structures and infrastructures: an overview of recent applications. Infrastructures 6, 4 (2020). https://doi.org/10.3390/infrastructures6010004

Khaloo, A., Lattanzi, D.: Pixel-wise structural motion tracking from rectified repurposed videos. Struct. Control. Health Monit. (2017). https://doi.org/10.1002/stc.2009

Nie, G.-Y., Bodda, S.S., Sandhu, H.K., Han, K., Gupta, A.: Computer-vision-based vibration tracking using a digital camera: a sparse-optical-flow-based target tracking method. Sensors 22, 6869 (2022). https://doi.org/10.3390/s22186869

Feng, D., Feng, M., Ozer, E., Fukuda, Y.: A vision-based sensor for noncontact structural displacement measurement. Sensors 15, 16557–16575 (2015). https://doi.org/10.3390/s150716557

Feng, D., Feng, M.Q.: Experimental validation of cost-effective vision-based structural health monitoring. Mech. Syst. Signal Process. 88, 199–211 (2017). https://doi.org/10.1016/j.ymssp.2016.11.021

Santos, C.A., Costa, C.O., Batista, J.: A vision-based system for measuring the displacements of large structures: simultaneous adaptive calibration and full motion estimation. Mech. Syst. Signal Process. 72–73, 678–694 (2016). https://doi.org/10.1016/j.ymssp.2015.10.033

Yang, Y., Dorn, C., Mancini, T., Talken, Z., Kenyon, G., Farrar, C., Mascareñas, D.: Blind identification of full-field vibration modes from video measurements with phase-based video motion magnification. Mech. Syst. Signal Process. 85, 567–590 (2017). https://doi.org/10.1016/j.ymssp.2016.08.041

Khuc, T., Catbas, F.N.: Computer vision-based displacement and vibration monitoring without using physical target on structures. Struct. Infrastruct. Eng. 13, 505–516 (2017). https://doi.org/10.1080/15732479.2016.1164729

Yoon, H., Elanwar, H., Choi, H., Golparvar-Fard, M., Spencer, B.F.: Target-free approach for vision-based structural system identification using consumer-grade cameras. Struct. Control. Health Monit. 23, 1405–1416 (2016). https://doi.org/10.1002/stc.1850

Rinaldi, C., Ciambella, J., Gattulli, V.: Image-based operational modal analysis and damage detection validated in an instrumented small-scale steel frame structure. Mech. Syst. Signal Process. 168, 108640 (2022). https://doi.org/10.1016/j.ymssp.2021.108640

Dworakowski, Z., Kohut, P., Gallina, A., Holak, K., Uhl, T.: Vision-based algorithms for damage detection and localization in structural health monitoring. Struct. Control. Health Monit. 23, 35–50 (2016). https://doi.org/10.1002/stc.1755

Shi, J., Xu, X., Wang, J., Li, G.: Beam damage detection using computer vision technology. Nondestr. Test. Eval. 25, 189–204 (2010). https://doi.org/10.1080/10589750903242525

De Nunzio, A.V., Faraco, G., Giannoccaro, N.I., Messina, A.: Crack localization on a statically deflected beam by high-resolution photos. In: Proceedings of AIDAA XXVII International Congress, September 4–7, 2023 (accepted)

Poudel, U.P., Fu, G., Ye, J.: Structural damage detection using digital video imaging technique and wavelet transformation. J. Sound Vib. 286, 869–895 (2005). https://doi.org/10.1016/j.jsv.2004.10.043

Javh, J., Slavič, J., Boltežar, M.: High frequency modal identification on noisy high-speed camera data. Mech. Syst. Signal Process. 98, 344–351 (2018). https://doi.org/10.1016/j.ymssp.2017.05.008

Havaran, A., Mahmoudi, M., Ebrahimpour, R.: Extraction of the structural mode shapes utilizing image processing method and data fusion. Mech. Syst. Signal Process. 151, 107380 (2021). https://doi.org/10.1016/j.ymssp.2020.107380

Havaran, A., Mahmoudi, M.: Extracting structural dynamic properties utilizing close photogrammetry method. Measurement 150, 107092 (2020). https://doi.org/10.1016/j.measurement.2019.107092

Kumar, R., Singh, S.K.: Crack detection near the ends of a beam using wavelet transform and high resolution beam deflection measurement. Eur. J. Mech. A. Solids 88, 104259 (2021). https://doi.org/10.1016/j.euromechsol.2021.104259

Patsias, S., Staszewski, W.J.: Damage detection using optical measurements and wavelets. Struct. Health Monit. 1, 5–22 (2002). https://doi.org/10.1177/147592170200100102

Andreaus, U., Casini, P.: Identification of multiple open and fatigue cracks in beam-like structures using wavelets on deflection signals. Continuum Mech. Thermodyn. 28, 361–378 (2016). https://doi.org/10.1007/s00161-015-0435-4

Trentadue, B., Messina, A., Giannoccaro, N.I.: Detecting damage through the processing of dynamic shapes measured by a PSD-triangular laser sensor. Int. J. Solids Struct. 44, 5554–5575 (2007). https://doi.org/10.1016/j.ijsolstr.2007.01.018

Lanczos, C.: Applied Analysis. Dover Publications, New York (1956)

Messina, A.: Detecting damage in beams through digital differentiator filters and continuous wavelet transforms. J. Sound Vib. 272, 385–412 (2004). https://doi.org/10.1016/j.jsv.2003.03.009

Messina, A.: Refinements of damage detection methods based on wavelet analysis of dynamical shapes. Int. J. Solids Struct. 45, 4068–4097 (2008). https://doi.org/10.1016/j.ijsolstr.2008.02.015

Funding

Open access funding provided by Università del Salento within the CRUI-CARE Agreement.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

De Nunzio, A.V., Faraco, G., Giannoccaro, N.I. et al. Sensitivity Analysis of a Damage Detection Method Through High-Resolution Photos on Various Statically Deflected Beams. Aerotec. Missili Spaz. 103, 129–135 (2024). https://doi.org/10.1007/s42496-023-00182-5

DOI: https://doi.org/10.1007/s42496-023-00182-5