Abstract

It is challenging to obtain extensive annotated data for under-resourced languages, so we investigate whether it is beneficial to train models using multi-task learning. Sentiment analysis and offensive language identification share similar discourse properties. The selection of these tasks is motivated by the lack of large labelled data for user-generated code-mixed datasets. This paper works with code-mixed YouTube comments in Tamil, Malayalam, and Kannada. Our framework is applicable to other sequence classification problems irrespective of the size of the datasets. Experiments show that our multi-task learning model achieves higher results than single-task learning while reducing the time and space required to train models on the individual tasks. Analysis of the fine-tuned models indicates that multi-task learning is preferable to single-task learning, yielding a higher weighted F1-score on all three languages. We apply two multi-task learning approaches to three Dravidian languages: Kannada, Malayalam, and Tamil. The best scores on Kannada and Malayalam were achieved by mBERT trained with cross-entropy loss and hard parameter sharing. The best scores on Tamil were achieved by DistilBERT trained with cross-entropy loss and soft parameter sharing. The best-performing models achieved weighted F1-scores of (66.8%, 90.5%), (59%, 70%), and (62.1%, 75.3%) on (sentiment analysis, offensive language identification) for Kannada, Malayalam, and Tamil, respectively.

1 Introduction

The internet is today essential for accessing information and communicating with others. Easier access to the internet has resulted in the majority of the population using social media to amplify their communication and connections throughout the world. Social media platforms such as Facebook, YouTube, and Twitter have paved the way for users to express any sentiment about posted content in any language (Severyn et al. 2014; Clarke and Grieve 2017; Tian et al. 2017). These texts are informal, as they are written in a spoken tone that does not follow strict grammatical rules. Understanding social media content has lately attracted much attention from the Natural Language Processing (NLP) community (Barman et al. 2014), owing to the growing amount of data and active users. Sentiment Analysis (SA) refers to the extraction of subjectivity and polarity from text (Pang and Lee 2008). The lack of moderation on social media has resulted in the persistent use of offensive language towards other users on these platforms. Offensive language identification (OLI) is the task of identifying whether a comment contains offensive language or not. Traditionally, SA and OLI have been approached as monolingual, single-task classification problems. Such approaches work well for high-resourced languages. However, they fail for languages with limited resources, and they also fail on code-mixed text (Chakravarthi et al. 2020).

Multi-Task Learning (MTL) is a practical approach for utilising shared characteristics of tasks to improve system performance (Caruana 1997). In MTL, the objective is to learn multiple tasks simultaneously in order to improve the performance of the system (Martínez Alonso and Plank 2017). Since SA and OLI are both essentially sequence classification tasks, we were motivated to apply MTL to them. To utilise MTL on Dravidian languages, we experimented with several recent pretrained transformer-based natural language models on Tamil (ISO 639-3: tam), Malayalam (ISO 639-3: mal), and Kannada (ISO 639-3: kan).

Kannada and Malayalam are among the Dravidian languages predominantly spoken in South India and are also official languages in the states of Karnataka and Kerala, respectively (Reddy and Sharoff 2011). The Tamil language has official status in Tamil Nadu of India and countries like Sri Lanka, Singapore, Malaysia and other parts of the world. Dravidian languages are morphologically rich; along with code-mixing, it becomes even more challenging to process these languages (Bhat 2012), and they are under-resourced (Prabhu et al. 2020).

We propose a method to perform MTL to address two main challenges arising when creating a system for user-generated comments from social media. The challenges are:

1. Code-mixing: In multilingual countries like India, Sri Lanka and Singapore, speakers are likely to be polyglottic and often switch between multiple languages. This phenomenon is called code-mixing, and these code-mixed texts are even written in non-native scripts (Das and Gambäck 2014; Bali et al. 2014; Chakravarthi et al. 2020). Code-mixing can be described as a blend of two or more languages in a single sentence or conversation, and it is prevalent on social media platforms such as Facebook and YouTube.

2. Scarcity of Data: Although there is an enormous number of speakers of Tamil, Kannada, and Malayalam, these languages are widely considered under-resourced. One of the main reasons is the lack of user-generated content extracted from social media applications. One of the ways to tackle the problem is to annotate the data on several tasks.

We address 1 by using pretrained multilingual natural language models and 2 by using the MTL approach.

The rest of the paper is organised as follows. Section 2 reviews previous work on SA, OLI, and MTL in NLP. Section 3 gives a detailed description of the datasets used. Section 4 describes the proposed single-task models and their loss functions. Popular MTL frameworks are described in Section 4.2. We describe the experimental results and analysis in Section 5.4 and conclude our work in Section 6.

2 Related Work

In this section, we briefly review previous work related to (i) sentiment analysis, (ii) offensive language identification, and (iii) MTL in NLP.

2.1 Sentiment Analysis

Sentiment analysis is one of the leading research domains devoted to analysing people’s sentiments and opinions on any given entity. Due to its broad applications, there has been a plethora of research performed in several languages. However, the same is not true for the Dravidian languages. As stated earlier, there is a significant lack of data for conducting experiments on code-mixed data in the Dravidian languages. SA is one of the most prominent downstream tasks of NLP, as it is essential for obtaining people’s opinions, which has several business applications in the e-commerce market.

A dataset was created as a part of a shared task on SA of Indian Languages (SAIL), which consisted of around 1,663 code-mixed tweets extracted from Twitter in Tamil (Patra et al. 2015), where SentiWordNet outperformed all of the other systems (Phani et al. 2016; Das and Bandyopadhyay 2010). There has been a plethora of research performed on several downstream tasks in other languages, primarily due to the abundance of user-generated data on social media, which has stirred interest in people’s opinions and emotions with respect to a specific target. Existing research on code-mixed text is comparatively scarce due to the lack of data. SA is one of the downstream tasks on which natural language models are commonly evaluated. The large amount of crowd-sourced data available on social media applications such as Twitter and YouTube has resulted in the development of several code-mixed datasets for the task.

To our knowledge, very few Kannada-English code-mixed datasets exist for SA. A Kannada-English code-mixed dataset for emotion prediction was created (Appidi et al. 2020). A probabilistic approach was employed to classify Parts of Speech (POS) tags (Sowmya Lakshmi and Shambhavi 2017). Several studies have addressed SA in Tamil (Se et al. 2016; Thilagavathi and Krishnakumari 2016). A recursive neural network approach was adopted to improve accuracy on Tamil texts (Padmamala and Prema 2017). A dynamic mode decomposition (DMD) method with random mapping was developed for SA on the SAIL 2015 dataset (Kumar et al. 2020). A lexicon-based approach was employed along with several feature representation approaches for analysing the sentiments of Tamil texts (Thavareesan and Mahesan 2019). When it comes to Malayalam, several supervised machine learning and rule-based approaches were used to analyse the sentiments of Malayalam movie reviews (Nair et al. 2014, 2015; Soumya and Pramod 2020). A fuzzy logic-based hybrid approach was also used to analyse movie reviews in Malayalam (Anagha et al. 2015).

2.2 Offensive Language Identification

A surge in the popularity of social media platforms has resulted in a rise of trolling, aggression, hostility, and abusive language, which is a concerning issue given the positive or negative impact a message can have on an individual or a group of people (Tontodimamma et al. 2021; del Arco et al. 2021). This issue has led several researchers to work on identifying offensive language and posts on social media, so that content can be moderated to promote positivity (Chakravarthi 2020). Offensive language can be defined as any text entailing certain forms of unacceptable language, which may include insults, threats, or bad words (del Arco et al. 2021). Hate speech is closely related to offensive language and can seem indistinguishable from it; hate speech detection aims to detect ‘abusive’ words, which are considered a type of degradation (Nobata et al. 2016; Djuric et al. 2015).

There are several ways to detect offensive language. A supervised learning technique was used to tackle cyberbullying (Dadvar et al. 2013), based on three types of features: content-based, cyberbullying-based, and user-based. A multi-level classification system was developed which extracts features at different conceptual levels and applies pattern recognition for detecting flames (rants, taunts, and squalid phrases) in sentences (Razavi et al. 2010). Offensive language can also be detected by ferreting out offensive and toxic spans in the texts. A toxic span detection system was developed by leveraging token classification and span prediction techniques based on BERT (Chhablani et al. 2021). MUDES, a multilingual system to detect offensive spans in texts, was also developed (Ranasinghe and Zampieri 2021). Several systems were developed to identify offensive language as a part of shared tasks conducted to stimulate research in this domain for Arabic, Danish, English, Greek, and Turkish (Zampieri et al. 2019; Zampieri et al. 2020). Consequently, several NLP researchers have worked on developing systems to detect hate speech on social media (Kumar et al. 2020; Zampieri et al. 2019). However, most of the work done on OLI is language-specific, focusing on monolingual users rather than multilingual users, whose social media posts often contain code-mixed text (Bali et al. 2014).

For code-mixed sentences, some researchers analysed existing state-of-the-art hate speech detection on Hindi-English (Rani et al. 2020), while others compared existing pretrained embeddings for CNN networks (Banerjee et al. 2020). When it comes to OLI, several systems were developed as a part of shared tasks on OLI in Dravidian languages (Chakravarthi et al. 2021; Ghanghor et al. 2021; Yasaswini et al. 2021) and in Indo-European languages (Mandl et al. 2020).

2.3 Multi-Task Learning

The main objective of a multi-task learning (MTL) model is to improve the learning of a model for a given task by utilising the knowledge encompassed in other tasks, where all or a subset of the tasks are related (Zhang and Yang 2018). The essence of MTL is that solving many tasks together provides a shared inductive bias that leads to more robust and generalisable systems (Changpinyo et al. 2018). MTL has long been studied in the domain of machine learning, and it has applications to neural networks in the NLP domain (Caruana 1997). However, to the best of our knowledge, MTL models have not yet been developed for the Dravidian languages.

There is a considerable variety of unique model designs in the field of computer vision, since the requirements in this domain are vast. Tasks like image classification, object localisation, object segmentation (Mou and Zhu 2018), and object tracking are widespread. Additionally, multi-tasking in videos for real-time applications (Ouyang et al. 2019) plays a vital role in day-to-day life. Multi-task models have also been used for medical images (Zhai et al. 2020).

MTL models are usually designed with shared encoders which can be customised for the preferred tasks. A multi-scale approach was used to combine LSTM and CNN for better results, as it adds up the benefits of both CNN (nearby sentiments) and LSTM (sentiments which are further away) (Jin et al. 2020). Attention-based mechanisms can also be used in the encoder, such as a multi-layer bidirectional transformer encoder and a knowledge encoder which injects knowledge into the language expressions (Zhang et al. 2020). Besides LSTMs, Gated Recurrent Units (GRUs) can also be used as a part of a multi-task model for specific tasks; although GRUs have a much simpler architecture than LSTMs, they are capable of forecasting future values based on previous values (Zhang et al. 2020). Such model choices can be adopted in the domain of NLP in order to make the model lighter and faster. A more recent development applies multi-task learning to semi-supervised learning (Li et al. 2020): by stacking recurrent neural networks and utilising a sliding window algorithm, the sentiments are transferred on to the next item. An empirical study of MTL models based on BERT for a variety of biomedical and clinical NLP tasks was also proposed (Peng et al. 2020).

MTL models for the domain of NLP are few in number. Ideas that were applied in other domains, such as computer vision, time series, and semi-supervised learning, can be borrowed to improve models in NLP. As such models are rarely seen, this paper introduces MTL for SA and OLI, incorporating ideas from other domains of deep learning.

3 Dataset

We make use of the multilingual dataset DravidianCodeMix (Chakravarthi et al. 2021), consisting of over 60,000 manually annotated YouTube comments. The dataset comprises sentences from three code-mixed Dravidian languages: Kannada (Hande et al. 2020), Malayalam (Chakravarthi et al. 2020), and Tamil (Chakravarthi et al. 2020; Chakravarthi et al. 2021). Each comment is annotated for the tasks of sentiment analysis and offensive language identification. The code-mixed Kannada dataset consists of 7,273 comments, while the corresponding Malayalam and Tamil code-mixed datasets consist of 12,711 and 43,349 comments, respectively. After removing repetitive sentences, the class-wise distribution of the datasets is specified in Table 1; the datasets are then split into train, validation, and test sets.

The class labels in the dataset are as follows:

Sentiment Analysis:

- Positive state: Comment contains an explicit or implicit clue in the text suggesting that the speaker is in a positive state.

- Negative state: Comment contains an explicit or implicit clue in the text suggesting that the speaker is in a negative state.

- Mixed feelings: Comment contains an explicit or implicit clue of both positive and negative feelings.

- Neutral state: Comment does not contain an explicit or implicit indicator of the speaker’s emotional state.

- Not in intended language: For Kannada, if the sentence does not contain Kannada written in Kannada script or Latin script, then it is not Kannada.

Offensive language identification:

- Not Offensive: Comment does not contain offence or profanity.

- Offensive Untargeted: Comment contains offence or profanity without any target. These are comments which contain unacceptable language that does not target anyone.

- Offensive Targeted Individual: Comment contains offence or profanity which targets an individual.

- Offensive Targeted Group: Comment contains offence or profanity which targets a group.

- Offensive Targeted Other: Comment contains offence or profanity which does not belong to any of the previous two categories (e.g., a situation, an issue, an organization or an event).

- Not in intended language: Comment not in the Kannada language.

The overall class types are similar in all languages. The code-mixed datasets of Kannada and Tamil consist of six classes in OLI, while Malayalam consists of five classes. The Offensive Targeted Other (OTO) class is absent from the Malayalam dataset.
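As an illustration of the label scheme above, the following minimal Python sketch builds label-to-index maps for the two tasks. The label strings, the language names used as keys, and the helper `build_label_maps` are assumptions made for this example; the released dataset files may encode the classes differently.

```python
# Label names follow the class descriptions above; exact strings are assumed.
SENTIMENT_LABELS = [
    "Positive",
    "Negative",
    "Mixed feelings",
    "Neutral",
    "Not in intended language",
]

OFFENSIVE_LABELS = [
    "Not Offensive",
    "Offensive Untargeted",
    "Offensive Targeted Individual",
    "Offensive Targeted Group",
    "Offensive Targeted Other",
    "Not in intended language",
]


def build_label_maps(language):
    """Return label-to-index maps for both tasks (hypothetical helper)."""
    offensive = list(OFFENSIVE_LABELS)
    # The Malayalam dataset has no Offensive Targeted Other (OTO) class.
    if language == "Malayalam":
        offensive.remove("Offensive Targeted Other")
    return {
        "sentiment": {label: i for i, label in enumerate(SENTIMENT_LABELS)},
        "offensive": {label: i for i, label in enumerate(offensive)},
    }


kannada_maps = build_label_maps("Kannada")
malayalam_maps = build_label_maps("Malayalam")
```

With these maps, Kannada and Tamil have six OLI classes and Malayalam has five, while all three languages share the same five sentiment classes.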

4 Methodology

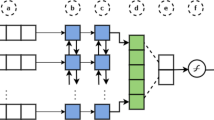

We explore the suitability of several NLP models for the task of sequence classification. Several pretrained multilingual transformer models are investigated to find the best fit for the code-mixed datasets of Tamil, Kannada, and Malayalam. For our purpose, we define Single Task Learning (STL) models as transformer-based models trained on each of the two tasks separately. In this section, we discuss several pretrained transformer-based models that have been used for both STL and MTL. Figure 1 represents the outline of the approaches undertaken.

4.1 Transformer-Based Models

Recurrent models such as LSTMs and GRUs struggle to achieve state-of-the-art results on longer sequences, owing to memory limitations while batching. While factorization and conditional computation approaches (Shazeer et al. 2017; Kuchaiev and Ginsburg 2017) have improved the efficiency of such models, the underlying issue of sequential computation persists. To overcome this, the Transformer was proposed (Vaswani et al. 2017), an architecture that completely shuns recurrence and resorts to attention mechanisms. Architectures based on attention mechanisms have proven to be much more efficient than recurrent architectures. The transformer follows a stacked encoder-decoder architecture with multi-headed attention and feed forward layers. Self-attention is an attention mechanism relating different positions of a single sequence to compute a representation of that sequence.

Scaled dot-product attention is computed using three vectors derived from each of the encoder’s input vectors: the Query, Key, and Value vectors. Keys and Values have dimensions d_k and d_v, respectively. A softmax function is applied to the scaled dot products of the queries and keys in order to compute the weights on the values. In practice, the attention function is computed on a set of queries simultaneously, packed into a matrix Q. The Keys and Values are likewise packed into matrices K and V. The matrix of outputs is computed as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{T}}{\sqrt{d_k}}\right)V$$

The above dot-product attention is preferred over additive attention owing to its practical efficiency in both space and time. Self-attention is computed several times in parallel in the transformer architecture, which is why it is referred to as multi-head attention. This approach jointly attends to information from different representations at different positions.

Consider the phrase "Ask Powerful Questions". To calculate the self-attention of the first word, Ask, a score is computed for every word in the phrase with respect to Ask; these scores determine how much importance is placed on the other words while Ask is being encoded. The score of the first word is calculated using dot-product attention, as the dot product of the query vector q_1 with the keys k_1, k_2, k_3 of all words in the input sentence. The scores are divided by 8 (2^3), which is the square root of the dimension of the key vector, and are then normalized using the softmax activation. The normalized scores are then multiplied by the value vectors v_1, v_2, v_3 and summed up to obtain the self-attention vector z_1, which is then passed to the feed forward network as input. The vectors for the other words are calculated in the same way.
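The walk-through for the three-word phrase can be sketched in NumPy as follows. This is a minimal illustration, assuming randomly generated query, key, and value vectors with d_k = d_v = 64 (so that the scaling factor is 8); in a trained model these vectors come from learned projections of the token embeddings, which are omitted here.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the maximum for numerical stability before exponentiating.
    shifted = x - np.max(x, axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=axis, keepdims=True)


def scaled_dot_product_attention(Q, K, V):
    d_k = K.shape[-1]
    # One row of scores per query: dot products with all keys, scaled by sqrt(d_k).
    scores = Q @ K.T / np.sqrt(d_k)
    weights = softmax(scores, axis=-1)
    # Each output row is the weighted sum of the value vectors.
    return weights @ V, weights


# Toy stand-ins for the three tokens "Ask", "Powerful", "Questions".
rng = np.random.default_rng(0)
d_k = d_v = 64
Q = rng.normal(size=(3, d_k))
K = rng.normal(size=(3, d_k))
V = rng.normal(size=(3, d_v))

Z, weights = scaled_dot_product_attention(Q, K, V)
z1 = Z[0]  # self-attention vector for the first word, "Ask"
```

Each row of `weights` sums to one, and `z1` is the weighted sum of the three value vectors using the weights in the first row.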

Softmax is an activation function that transforms a vector of numbers into a vector of probabilities. We use the softmax function when we want to represent a probability distribution over a discrete variable with n possible values. It is a generalization of the sigmoid activation function, which is used to represent the probability distribution over a binary variable. The softmax activation function over K classes is given by:

$$\mathrm{softmax}(z)_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}, \quad i = 1, \dots, K$$

The sigmoid, in contrast, is the binary special case of the softmax activation function. It is given by:

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$
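The relationship between the two activation functions can be checked numerically. The sketch below is illustrative only; the logit values are arbitrary, and the two-class comparison fixes the second logit to zero, which is one common way of showing that the sigmoid is the binary case of the softmax.

```python
import numpy as np


def softmax(z):
    z = np.asarray(z, dtype=float)
    exp = np.exp(z - z.max())  # shift by the maximum for numerical stability
    return exp / exp.sum()


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


# Softmax over K = 3 classes turns arbitrary scores into probabilities.
logits = np.array([2.0, 0.5, -1.0])
probs = softmax(logits)

# With two classes and the second logit fixed at 0,
# the first softmax probability equals sigmoid(x).
x = 1.3
two_class = softmax([x, 0.0])
```

Here `probs` sums to one, and `two_class[0]` agrees with `sigmoid(x)` up to floating-point error.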

Along with the attention sub-layers in the transformer block, each layer of the encoder and decoder contains a fully connected feed forward network (FN), which is applied to each position separately. It consists of two linear transformations with a ReLU activation in between.

ReLU, the rectified linear activation function, is a piece-wise linear function that outputs the input directly if it is positive and outputs zero otherwise. ReLU helps to overcome the vanishing gradient problem, thus allowing models to learn faster and perform better. It is computed as follows:

$$\mathrm{ReLU}(x) = \max(0, x)$$
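A minimal NumPy sketch of ReLU and of a position-wise feed forward network built from it is given below. The layer sizes and the random weights are toy values chosen for the example, and the two-linear-layer structure follows the description in Vaswani et al. (2017); it is not taken from the models trained in this work.

```python
import numpy as np


def relu(x):
    # Outputs the input where it is positive and zero elsewhere.
    return np.maximum(0.0, x)


def feed_forward(x, W1, b1, W2, b2):
    # Two linear transformations with a ReLU in between,
    # applied independently to every position (row) of x.
    return relu(x @ W1 + b1) @ W2 + b2


rng = np.random.default_rng(0)
d_model, d_ff = 8, 32  # toy sizes, assumed for illustration
x = rng.normal(size=(3, d_model))  # three token positions
W1 = rng.normal(size=(d_model, d_ff))
b1 = np.zeros(d_ff)
W2 = rng.normal(size=(d_ff, d_model))
b2 = np.zeros(d_model)

out = feed_forward(x, W1, b1, W2, b2)
```

Because the same weights are applied to every row, feeding a single position through `feed_forward` gives the same result as the corresponding row of `out`.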

While the attention mechanism is a major improvement over recurrence-based seq2seq models, it has its own limitations. As attention can only deal with fixed-length inputs, the text has to be split into a number of chunks before being fed into the model. This chunking inadvertently results in context fragmentation: the text is split without regard to sentence or other semantic boundaries, so a sentence that is split in the middle loses a significant amount of context.

Due to the absence of recurrent or convolutional layers, some information about the relative or absolute position of the tokens must be supplied so that the model can make use of the sequence order. Thus, positional encodings are added to the input embeddings, as shown in Fig. 2. Most state-of-the-art language models use the transformer block as the fundamental building block of each layer.

Fig. 2 The transformer architecture (Vaswani et al. 2017)
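The text does not state which form of positional encoding is used. As one concrete possibility, the sketch below implements the fixed sinusoidal encoding from Vaswani et al. (2017) and adds it to a matrix of toy input embeddings; BERT-style models instead learn their position embeddings, so this should be read as an assumption made purely for illustration. The function name and the sizes are hypothetical.

```python
import numpy as np


def sinusoidal_positional_encoding(max_len, d_model):
    """Fixed sinusoidal encoding; d_model is assumed to be even."""
    positions = np.arange(max_len)[:, None]   # shape (max_len, 1)
    dims = np.arange(0, d_model, 2)[None, :]  # even dimension indices 2i
    angles = positions / np.power(10000.0, dims / d_model)
    pe = np.zeros((max_len, d_model))
    pe[:, 0::2] = np.sin(angles)  # even dimensions use sine
    pe[:, 1::2] = np.cos(angles)  # odd dimensions use cosine
    return pe


rng = np.random.default_rng(0)
seq_len, d_model = 13, 16  # toy sizes
embeddings = rng.normal(size=(seq_len, d_model))
pe = sinusoidal_positional_encoding(seq_len, d_model)
inputs = embeddings + pe  # positional information is added, not concatenated
```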

4.1.1 BERT

Computer vision researchers have repeatedly demonstrated the advantages of transfer learning, that is, pretraining a neural network model on a known task (Deng et al. 2009).

Bidirectional Encoder Representations from Transformers (BERT) is a language representation model designed to pretrain deep bidirectional representations from unlabeled text by jointly conditioning on both left and right context in all layers (Devlin et al. 2019). It obtained state-of-the-art results on eleven NLP tasks.

BERT is pretrained on two unsupervised tasks:

- Masked Language Modeling (MLM): Standard LMs (Radford 2018) can only be trained left-to-right or right-to-left, because bidirectional conditioning would allow each word to indirectly see itself. To pretrain deep bidirectional representations, a certain percentage of the input tokens is masked at random, and the model then predicts those masked tokens. This procedure is referred to as “Masked Language Modeling” and was previously described as the Cloze task (Taylor 1953). Before feeding word sequences into BERT, 15% of the words in each sequence are replaced with a [MASK] token. The model then attempts to predict the original value of the masked words, based on the context provided by the other, non-masked words in the sequence.

- Next Sentence Prediction (NSP): One of the main drawbacks of language modeling is its inability to directly capture the relationship between two sentences. However, important downstream tasks such as Question Answering (QA) and Natural Language Inference (NLI) are based on the relationship between two sentences. To overcome this obstacle, BERT is also pretrained on a binarized Next Sentence Prediction task. Specifically, when choosing the sentences A and B for each pretraining example, 50% of the time B is the actual next sentence that follows A (labeled as Is Next), and 50% of the time it is a random sentence from the corpus (labeled as Not Next).

In Fig. 3, the input consists of two Tamil sentences. After tokenizing the sentences, we observe that there are 13 input tokens, inclusive of the [CLS] and [SEP] tokens. Tokens T1, T2, ..., T13 represent the positional embeddings, while TA and TB represent the tokens for whether a given sentence follows the other.

Fig. 3 Illustration of BERT input representations. The Tamil sentence translates to “This is a Flower. It is blue in colour.” The input embeddings are the sum of token, segmentation and positional embeddings (Devlin et al. 2019)
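The two pretraining tasks described above can be sketched in plain Python. This is a simplified illustration that follows the description in the text: every selected position is replaced by [MASK], whereas the original BERT recipe additionally leaves some selected tokens unchanged or swaps them for random tokens. The helper names `mask_tokens` and `make_nsp_example`, the toy tokens, and the toy sentences are all hypothetical.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Replace roughly mask_prob of the tokens with [MASK]; return masked tokens and targets."""
    rng = random.Random(seed)
    n_to_mask = max(1, round(len(tokens) * mask_prob))
    positions = sorted(rng.sample(range(len(tokens)), n_to_mask))
    masked = list(tokens)
    labels = {}
    for pos in positions:
        labels[pos] = tokens[pos]  # the model must recover the original token
        masked[pos] = MASK
    return masked, labels


def make_nsp_example(sentences, index, seed=0):
    """Pair sentence A with its true successor half of the time, else with a random sentence."""
    rng = random.Random(seed)
    sent_a = sentences[index]
    if rng.random() < 0.5:
        return sent_a, sentences[index + 1], "Is Next"
    candidates = [s for i, s in enumerate(sentences) if i not in (index, index + 1)]
    return sent_a, rng.choice(candidates), "Not Next"


tokens = [f"w{i}" for i in range(20)]  # placeholder tokens
masked, labels = mask_tokens(tokens)

sentences = ["This is a flower.", "It is blue in colour.", "The sky is clear.", "We went home."]
sent_a, sent_b, nsp_label = make_nsp_example(sentences, index=0)
```

With 20 tokens and a masking rate of 15%, three positions are masked, and `labels` records the original token at each masked position.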

We plug the task-specific inputs and outputs for sequence classification into BERT and fine-tune all parameters end-to-end. At the input, sentence A and sentence B from pretraining are equivalent to a degenerate text pair, in which the second text is empty. The [CLS] representation is fed into an output layer for classification, as observed in Fig. 4.

Fig. 4 Illustration of fine-tuning BERT on single-sentence classification tasks like SA and OLI (Devlin et al. 2019)
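The classification step on top of the [CLS] representation can be sketched as a single linear layer followed by a softmax. The NumPy example below uses random numbers in place of real BERT hidden states and an untrained weight matrix; the hidden size of 768 and the five output classes are assumptions for illustration, and the function name `classify_from_cls` is hypothetical.

```python
import numpy as np


def classify_from_cls(hidden_states, W, b):
    """Map the [CLS] vector (position 0) to class probabilities."""
    cls_vector = hidden_states[0]
    logits = cls_vector @ W + b
    exp = np.exp(logits - logits.max())  # stable softmax
    return exp / exp.sum()


rng = np.random.default_rng(0)
hidden_size, num_classes = 768, 5  # assumed sizes, e.g. five sentiment classes
hidden_states = rng.normal(size=(13, hidden_size))  # stand-in for encoder output
W = rng.normal(scale=0.02, size=(hidden_size, num_classes))
b = np.zeros(num_classes)

probs = classify_from_cls(hidden_states, W, b)
predicted_class = int(np.argmax(probs))
```

During fine-tuning, the output layer and all encoder parameters would be updated jointly; the sketch only shows the forward pass.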

4.1.2 DistilBERT

DistilBERT was proposed (Sanh et al. 2019) as a method to pretrain a smaller language representation model which serves the same purpose as other large-scale models like BERT. DistilBERT is 60% faster and has 40% fewer parameters than BERT. It also preserves 97% of BERT’s performance on several downstream tasks by leveraging the knowledge distillation approach. Knowledge distillation is a compression approach in which a smaller model (the student) is trained to reproduce the behaviour of a larger model (the teacher). DistilBERT is trained on a triple loss with student initialization.

The triple loss is a linear combination of the distillation loss L_ce, the supervised training loss (the masked language modeling loss L_mlm), and a cosine embedding loss L_cos, which aligns the directions of the student and teacher hidden-state vectors, where

$$L_{ce} = \sum_i t_i \log(s_i)$$

and t_i (resp. s_i) is a probability estimated by the teacher (resp. the student) (Sanh et al. 2019).
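The three components of the triple loss can be sketched in NumPy as follows. This is an illustrative sketch rather than the DistilBERT training code: the temperature, the weights `alpha_ce`, `alpha_mlm`, and `alpha_cos`, and the toy logits are placeholder assumptions, and the distillation term is written with the conventional minus sign so that a lower value means the student matches the teacher more closely.

```python
import numpy as np


def softmax(z, temperature=1.0):
    z = np.asarray(z, dtype=float) / temperature
    exp = np.exp(z - z.max())
    return exp / exp.sum()


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    # Cross-entropy between teacher probabilities t_i and student probabilities s_i.
    t = softmax(teacher_logits, temperature)
    s = softmax(student_logits, temperature)
    return -np.sum(t * np.log(s))


def cosine_loss(h_teacher, h_student):
    # Zero when the two hidden-state vectors point in the same direction.
    cos = np.dot(h_teacher, h_student) / (
        np.linalg.norm(h_teacher) * np.linalg.norm(h_student)
    )
    return 1.0 - cos


def triple_loss(l_ce, l_mlm, l_cos, alpha_ce=1.0, alpha_mlm=1.0, alpha_cos=1.0):
    # Linear combination of the three terms; the weights are placeholders.
    return alpha_ce * l_ce + alpha_mlm * l_mlm + alpha_cos * l_cos


teacher_logits = [2.0, 1.0, 0.1]
matching = distillation_loss(teacher_logits, teacher_logits)
mismatched = distillation_loss(teacher_logits, [0.1, 1.0, 2.0])
```

The distillation term is smallest when the student reproduces the teacher distribution exactly, so `mismatched` is larger than `matching`.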

For our purpose, we use the pretrained multilingual DistilBERT model distilbert-base-multilingual-cased from the Hugging Face Transformers library, which is distilled from the mBERT model checkpoint.

4.1.3 ALBERT

Present state-of-the-art (SoTA) LMs consist of hundreds of millions, if not billions, of parameters. Scaling such models further is restricted by the memory limitations of compute hardware such as GPUs or TPUs. It has also been found that increasing the hidden size of the BERT-large model (340M parameters) can lead to worse performance. Several parameter reduction techniques have been attempted to reduce the size of models without affecting their performance. Thus, ALBERT: A Lite BERT for Self-supervised Learning of Language Representations was proposed. ALBERT (Lan et al. 2019) overcomes the large memory consumption by incorporating several techniques: factorized embedding parameterization, cross-layer parameter sharing, and a sentence order prediction objective.

- Factorized Embedding Parameterization: WordPiece embeddings are designed to learn context-independent representations, whereas hidden-layer embeddings are designed to learn context-dependent representations. BERT relies heavily on learning context-dependent representations with the hidden layers. Because the embedding size E is tied to the hidden layer size H, the embedding matrix must scale with H, and this results in models having billions of parameters. However, these parameters are rarely updated during training, indicating that many of them are not useful. Thus, ALBERT reduces memory consumption by factorizing the original embedding parameter count P (the product of the vocabulary size V and the hidden layer size H):

$$V \times H = P \rightarrow V \times E + E \times H = P$$ (7)

E represents the size of the low-dimensional embedding space. In BERT, E = H, while in ALBERT, H ≫ E, so the number of parameters is greatly reduced.

- Cross-layer parameter sharing: ALBERT aims to improve parameter efficiency by sharing all parameters across all layers. Hence, the feed forward network and attention parameters are shared across all layers, as shown in Fig. 5.

- Sentence Order Prediction (SOP): ALBERT uses masked language modeling, similar to BERT, with n-gram masking of up to three words (max(n_gram) = 3). ALBERT also uses SOP to compute an inter-sentence coherence loss. Consider two consecutive segments from the same document. The positive case is the two segments in their correct order, while the negative case is the same two segments with their order swapped. SOP results in the model learning finer-grained distinctions about coherence properties, while additionally solving the Next Sentence Prediction (NSP) task to a reasonable degree. Thus, ALBERT models are more effective at improving downstream performance on multi-sentence encoding tasks.

By incorporating these features and loss functions, ALBERT requires far fewer parameters than the base and large versions of the BERT models proposed in Devlin et al. (2019), without hurting performance.
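The effect of the two parameter reduction techniques can be illustrated with simple arithmetic. In the sketch below, the vocabulary size, hidden size, embedding size, per-layer parameter count, and number of layers are illustrative values assumed for the example, not figures reported in this work, and both helper functions are hypothetical.

```python
def embedding_params(vocab_size, hidden_size, embedding_size=None):
    """Embedding parameter count, with optional factorization through size E."""
    if embedding_size is None:
        # BERT-style: E = H, a single V x H matrix.
        return vocab_size * hidden_size
    # ALBERT-style: a V x E matrix followed by an E x H projection.
    return vocab_size * embedding_size + embedding_size * hidden_size


def encoder_params(per_layer_params, num_layers, share_across_layers):
    """Encoder parameter count with or without cross-layer sharing."""
    if share_across_layers:
        return per_layer_params  # one set of weights reused by every layer
    return per_layer_params * num_layers


V, H, E = 30_000, 768, 128  # assumed sizes for illustration
bert_style = embedding_params(V, H)       # 30,000 * 768
albert_style = embedding_params(V, H, E)  # 30,000 * 128 + 128 * 768

per_layer = 7_000_000  # placeholder parameter count for one transformer layer
unshared = encoder_params(per_layer, num_layers=12, share_across_layers=False)
shared = encoder_params(per_layer, num_layers=12, share_across_layers=True)
```

Under these assumed sizes, the factorization reduces the embedding parameters from 23,040,000 to 3,938,304, and sharing reduces the encoder parameters by a factor equal to the number of layers.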

4.1.4 RoBERTa

RoBERTa (Liu et al. 2019) is a robustly optimised BERT-based model. One key difference lies in the masking technique. BERT performs masking once during data preprocessing, which is essentially a static mask, so the resulting model tends to see the same masks throughout the training phases. RoBERTa instead uses dynamic masking, in which a new masking pattern is generated every time the input sequence is fed to the model, and this plays a critical role during pretraining. The encoding used was Byte-Pair Encoding (BPE) (Sennrich et al. 2016), a hybrid between character-level and word-level encoding that allows easy handling of huge text corpora, meaning that it relies on subwords instead of full words. BERT was trained with an auxiliary NSP loss, and it was observed that removing this loss hurt performance, with significant degradation of results on QNLI, MNLI, and SQuAD. RoBERTa revisits this choice and is pretrained without the NSP loss (Liu et al. 2019).
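The difference between static and dynamic masking can be sketched in plain Python. The example below only tracks which token positions are masked in each epoch; the masking rate of 15%, the sequence length, and the helper names are assumptions made for illustration.

```python
import random


def sample_mask_positions(num_tokens, mask_prob, rng):
    n = max(1, round(num_tokens * mask_prob))
    return sorted(rng.sample(range(num_tokens), n))


def static_masks(num_tokens, epochs, mask_prob=0.15, seed=0):
    # The mask is sampled once during preprocessing and reused in every epoch.
    rng = random.Random(seed)
    positions = sample_mask_positions(num_tokens, mask_prob, rng)
    return [positions for _ in range(epochs)]


def dynamic_masks(num_tokens, epochs, mask_prob=0.15, seed=0):
    # A fresh mask is sampled every time the sequence is fed to the model.
    rng = random.Random(seed)
    return [sample_mask_positions(num_tokens, mask_prob, rng) for _ in range(epochs)]


static = static_masks(num_tokens=40, epochs=10)
dynamic = dynamic_masks(num_tokens=40, epochs=10)
```

With static masking the model sees the same masked positions in every epoch, whereas dynamic masking exposes it to many different masked versions of the same sequence.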

4.1.5 XLM

The XLM model was proposed in Cross-lingual Language Model Pretraining (Dai et al. 2019a). This model uses a shared vocabulary for different languages. For tokenizing the text corpus, Byte-Pair Encoding (BPE) was used. Causal Language Modelling (CLM) is designed to maximize the probability of a token x_t appearing at the t-th position in a given sequence, given the preceding tokens. Masked Language Modelling (MLM) maximizes the probability of a given masked token x_t appearing at the t-th position in a given sequence. Both CLM and MLM perform well on monolingual data. To exploit parallel data, XLM additionally uses Translation Language Modelling (TLM): sequences are taken from translation data, and tokens are randomly masked in both the source and the target sentence. Similar to other transformer-based monolingual models, XLM was fine-tuned on the XNLI dataset for cross-lingual classification. The downstream tasks on which it was evaluated include cross-lingual classification, Neural Machine Translation (NMT), and LMs for low-resource languages.

4.1.6 XLNet

BERT performed extremely well on almost every language modelling task. It was a revolutionary model, as it could be fine-tuned for any downstream task. But even BERT came with a few flaws of its own. BERT replaces random words in the sentences with a special [MASK] token and attempts to predict what the original word was. XLNet (Yang et al. 2019) pointed out certain major issues with this process. The [MASK] token used during pretraining does not appear when fine-tuning the model on downstream tasks, which creates a discrepancy between pretraining and fine-tuning. Moreover, the model finds it difficult to train when there are no [MASK] tokens in the input sentence. BERT also generates its predictions independently, meaning that it does not model the dependencies between the tokens it predicts.

XLNet uses autoregressive (AR) language modelling, which aims to estimate the probability distribution of a text corpus without using the [MASK] token and without parallel independent predictions. AR modelling achieves this because it provides a natural way to apply the product rule when factorizing the joint probability of the predicted tokens. XLNet uses a particular type of language modelling called “permutation language modelling”, in which the tokens of a sentence are predicted in random order rather than sequential order. The model is thus forced to learn bidirectional dependencies between all combinations of the input. It is important to note that only the factorization order is permuted and not the sequence order; the original order is retained through the positional embeddings. For sequence classification, the model is fine-tuned as a sentence classifier: it does not predict tokens but predicts the sentiment from the embedding. The architecture of XLNet uses Transformer-XL as its backbone (Dai et al. 2019b). Transformer-XL adds recurrence at the segment level instead of the word level. Hence, fine-tuning is carried out by caching the hidden states of the previous segments and passing them as keys and values when processing the current segment. Transformer-XL also uses relative positional embeddings instead of absolute ones, encoding the relative distance between words.

4.1.7 XLM-RoBERTa

XLM-RoBERTa (XLM-R) was proposed as an unsupervised cross-lingual representation approach (Conneau et al. 2020), and it significantly outperformed multilingual BERT on a variety of cross-lingual benchmarks. XLM-R was trained on CommonCrawl data covering 100 languages and fine-tuned on different downstream tasks for evaluation and inference. It was evaluated on cross-lingual natural language inference using the XNLI dataset, including settings in which the data are machine-translated from English to other languages and vice versa, as well as on Named Entity Recognition (NER) and cross-lingual question answering. It also achieved competitive results on the standard GLUE benchmark and state-of-the-art results on several tasks.

4.1.8 CharacterBERT

The success of BERT has led many language representation models to adopt the transformer architecture as their main building block, consequently inheriting its wordpiece tokenization. For specialized domains, this can result in an intricate model that focuses on subwords rather than words. CharacterBERT is a variant of BERT that addresses this by dropping the wordpiece system completely and using a Character-CNN module instead, which represents entire words by consulting their characters (El Boukkouri et al. 2020), as shown in Fig. 6.

Comparison of the context-independent representation systems and tokenization approach in BERT and CharacterBERT (El Boukkouri et al. 2020)

CharacterBERT is based on the “base-uncased” version of BERT (L = 12, H = 768, A = 12, total parameters 109.5M). The resulting CharacterBERT architecture has 104.6M parameters. Using the Character-CNN results in a smaller overall model in spite of the more complex character module, because BERT’s wordpiece matrix holds 30K × 768-D vectors, while CharacterBERT uses 16-D character embeddings together with mostly small CNNs. Seven 1-D CNNs with the following filters are used: [1, 32], [2, 32], [3, 64], [4, 128], [5, 256], [6, 512] and [7, 1024]. Figure 6 uses the Kannada word chennagide, written in Latin script, which can be translated as “great”, to compare how CharacterBERT attends to the entire word rather than to subwords as in BERT.

4.2 Multi-Task Learning (MTL)

Models are usually optimized for a particular metric in order to score well on a certain benchmark. To this end, a single model or an ensemble of models is trained to perform the desired task and fine-tuned until its performance no longer improves noticeably. The downside is that we lose out on information which could have helped the model to train better. If there are multiple related tasks, we can devise an MTL model that improves its performance on several tasks by learning shared representations between them. We aim to achieve better F1-Scores by leveraging this approach. Since both SA and OLI are sequence classification tasks, we believe that an MTL model trained on these two related tasks will achieve better results than models trained separately on each task. Most MTL methodologies in NLP are based on the success of MTL models in Computer Vision. We use two approaches for MTL in NLP, which are discussed in the subsequent sections.

4.2.1 Hard Parameter Sharing

This is one of the most common approaches to MTL. Hard parameter sharing of hidden layers (Caruana 1997) shares the hidden layers between the tasks while keeping the task-specific output layers separate, as represented in Fig. 7. As the two tasks are very similar, each task is expected to benefit from the representations learned for the other. The more tasks we learn simultaneously, the more general the representation the model has to capture, which reduces the chance of overfitting (Ruder 2017). As shown in Fig. 7, the model has two outputs. We use different loss functions to optimize the performance of the models.

A loss function takes an (output, target) pair as input and computes a value that estimates how far the output is from the target. Before back-propagating the errors, we first clear the gradients to prevent them from accumulating across iterations. In this method, we do not back-propagate the losses separately, which would make the optimizer update the parameters for each task in turn. Instead, after the loss function has calculated the losses, we add the losses of the two tasks, and the optimizer then updates the parameters for both tasks simultaneously:

$$Loss = Loss_{SA} + Loss_{OLI}$$(8)

where $Loss_{SA}$ and $Loss_{OLI}$ are the losses for SA and OLI.
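
The joint objective can be sketched in a few lines. The following is a minimal, framework-free illustration rather than the training code used in the experiments: a single shared layer stands in for the shared encoder, each task has its own output head, and the two cross-entropy losses are summed as in Formula (8). The names `shared_layer`, `head_sa` and `head_oli`, the layer sizes, and all weight values are assumptions made for the example.

```python
import math

def linear(x, weights):
    """Multiply a vector by a weight matrix given as a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, target):
    """Cross-entropy of the softmax distribution against one target index."""
    return -math.log(softmax(logits)[target])

# Toy weights (assumed values): one shared layer and two task-specific heads.
shared_layer = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]   # 3 features -> 2 hidden units
head_sa = [[1.0, -1.0], [0.2, 0.4], [-0.7, 0.9]]      # 2 hidden units -> 3 SA classes
head_oli = [[0.6, 0.1], [-0.4, 0.5]]                  # 2 hidden units -> 2 OLI classes

def joint_loss(x, sa_label, oli_label):
    # Both heads read the same shared representation (ReLU activation).
    hidden = [max(0.0, h) for h in linear(x, shared_layer)]
    loss_sa = cross_entropy(linear(hidden, head_sa), sa_label)
    loss_oli = cross_entropy(linear(hidden, head_oli), oli_label)
    # Equal-weight sum of the two task losses.
    return loss_sa + loss_oli, loss_sa, loss_oli

total, loss_sa, loss_oli = joint_loss([1.0, 0.5, -1.0], sa_label=0, oli_label=1)
```

Because a single scalar is back-propagated in an actual training loop, the gradients of both heads flow into the shared layer in the same update, which is what couples the two tasks.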

4.2.2 Soft Parameter Sharing

This is another approach to MTL, in which constraints are added between layers to encourage related parameters to resemble each other. Unlike hard parameter sharing, each task has its own model with its own parameters, and the distance between the parameters of the different models is regularized in order to encourage them to be similar. This gives the tasks more adaptability by only loosely coupling the representations of the shared space. We use the Frobenius norm as the additional loss term in our experiments (Fig. 8).

1. Additional Loss Term

This approach to soft parameter sharing imposes similarity between corresponding parameters by augmenting the loss function with an additional loss term, as we have to learn two tasks. Let $W_i^{(j)}$ denote the parameters of the $i$-th layer for task $j$, with $j \in \{S, O\}$ for SA and OLI.

$$L=l+\sum\limits_{S(L)}\lambda_i\Vert W_i^{(S)}-W_i^{(O)}\Vert_{F}^2$$(9)

where $l$ is the original loss function for both tasks and $\Vert\cdot\Vert_F^2$ is the squared Frobenius norm. A similar approach was used for low-resource dependency parsing, employing cross-lingual parameter sharing whose learning objective involved regularization with the Frobenius norm (Duong et al. 2015).
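
As a rough illustration of the additional loss term, the sketch below adds a weighted squared Frobenius distance between corresponding layers of two task-specific models to a given task loss. It is a plain-Python toy under stated assumptions: the matrices, the single regularization weight in `lambdas`, and the function names are all hypothetical and not taken from the experiments.

```python
def squared_frobenius_distance(w_a, w_b):
    """Squared Frobenius norm of the difference of two same-shaped matrices."""
    return sum((a - b) ** 2
               for row_a, row_b in zip(w_a, w_b)
               for a, b in zip(row_a, row_b))

def soft_sharing_loss(task_loss, sa_layers, oli_layers, lambdas):
    """Task loss plus one weighted distance penalty per pair of layers."""
    penalty = sum(lam * squared_frobenius_distance(w_s, w_o)
                  for lam, w_s, w_o in zip(lambdas, sa_layers, oli_layers))
    return task_loss + penalty

# One coupled layer per model (assumed values).
sa_layers = [[[1.0, 2.0], [3.0, 4.0]]]
oli_layers = [[[1.0, 1.0], [3.0, 2.0]]]
loss = soft_sharing_loss(task_loss=0.5, sa_layers=sa_layers,
                         oli_layers=oli_layers, lambdas=[0.1])
```

In this toy case the two matrices differ by 1 and 2 in two entries, so the penalty is 0.1 × (1 + 4) = 0.5 and the regularized loss is 1.0. Larger values in `lambdas` pull the two models closer together, approaching hard sharing in the limit.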

2. Trace Norm

We intend to penalize the trace norm of the tensor that results from stacking $W_i^{(S)}$ and $W_i^{(O)}$. Such a penalty shrinks the singular values and thus encourages a low-rank solution, which results in dimension reduction (Yang and Hospedales 2017). The trace norm is defined as the sum of the singular values:

$$\Vert\mathbf{W}\Vert_{*} = {\sum}_{i} \sigma_{i}$$(10)

4.3 Loss Functions

A loss function evaluates how well a model fits the given data. If the predictions deviate from the expected results, the loss function outputs a high value, and its output decreases as the predictions start to match the expected results. An optimization function uses the loss to reduce the error in the predictions. In this section, we discuss the loss functions used in our experiments.

4.3.1 Cross Entropy Loss

This is one of the most commonly used loss functions for classification tasks. It derives from information theory and is calculated as the difference between two probability distributions for a given random variable. Entropy can be defined as the number of bits required to transmit a randomly selected event from a probability distribution. “Cross Entropy is the average number of bits needed to encode data from a source coming from a distribution p when we use a model q” (Murphy 2012). The intuition behind this definition becomes clear if we consider the target as an underlying probability distribution P and the approximation of the target distribution as Q. Let the cross-entropy of Q from P be stated as H(P,Q). It is calculated as follows:

$$H(P,Q) = -\sum\limits_{x} P(x)\log Q(x)$$

For multi-class classification, it is computed as follows:

$$CE = -\sum\limits_{i}^{C} t_i \log(s_i)$$

where $t_i$ and $s_i$ are the ground truth and the predicted score for each class $i$ in $C$.

Considering Class Imbalance

As specified earlier, the datasets have class imbalances and are not equally distributed, as shown in Table 1. We therefore compute a weight for each class and pass the weights as a tensor to the loss function. The class weights are inversely related to the class frequencies, so that errors on underrepresented classes are penalized more heavily.

where C is the number of classes. The resultant tensor is $[w_1, w_2, \ldots, w_{C-1}, w_C]$.
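
To make the effect of class weights concrete, the sketch below computes a weighted cross-entropy for a single example. The weighting rule used here, $w_i = N / (C \cdot n_i)$ with $N$ the number of samples and $n_i$ the count of class $i$, is a common "balanced" heuristic and is an assumption of this example, not necessarily the exact rule used in the experiments. The class counts are likewise made up.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def inverse_frequency_weights(class_counts):
    """Assumed heuristic: w_i = N / (C * n_i); rarer classes get larger weights."""
    total = sum(class_counts)
    num_classes = len(class_counts)
    return [total / (num_classes * n) for n in class_counts]

def weighted_cross_entropy(logits, target, weights):
    """Cross-entropy for one example, scaled by the weight of its true class."""
    probs = softmax(logits)
    return -weights[target] * math.log(probs[target])

# Hypothetical two-class dataset with an 80:20 imbalance.
weights = inverse_frequency_weights([80, 20])
loss_minority = weighted_cross_entropy([0.0, 0.0], target=1, weights=weights)
loss_majority = weighted_cross_entropy([0.0, 0.0], target=0, weights=weights)
```

With identical predictions, an error on the minority class costs four times as much as one on the majority class here, which is the intended counterweight to the imbalance.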

4.3.2 Multi-class Hinge Loss

The hinge loss function was initially proposed as an alternative to cross-entropy for binary classification and was primarily developed for use with SVM models. The target values are in the set {-1, 1}. The main purpose of hinge loss is to maximize the margin of the decision boundary between the two groups that are to be discriminated, i.e., a binary classification problem. For a linear classifier with weights w and input x, producing the score $y = w \cdot x$ for a target $t$, hinge loss is computed as follows:

$$\ell(y) = \max(0, 1 - t \cdot y)$$

The squared hinge loss function was developed as an extension, which computes the square of the hinge loss. It smooths the surface of the error function, making it easier to work with numerically.

Categorical hinge loss, or multi-class hinge loss, is computed as follows: “For a prediction y, take all values unequal to t, and compute the loss. Eventually, sum them all together to find the multiclass hinge loss” (Weston and Watkins 1999; Zhang et al. 2014; Rakhlin 2016; Shalev-Shwartz and Ben-David 2014). The regular targets are converted into categorical data and the loss function is calculated as follows:

$$\ell(y) = \sum\limits_{y \ne t} \max(0, 1 + w_y x - w_t x)$$
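
The three hinge variants described above can be written down directly. The sketch below is a small plain-Python illustration that assumes the Weston and Watkins style multi-class form, in which every non-target class contributes the amount by which it violates a margin of 1. The function names and example scores are hypothetical.

```python
def hinge_loss(score, target):
    """Binary hinge loss; target must be -1 or +1."""
    return max(0.0, 1.0 - target * score)

def squared_hinge_loss(score, target):
    """Square of the binary hinge loss."""
    return hinge_loss(score, target) ** 2

def multiclass_hinge_loss(scores, target_index):
    """Sum of margin violations of all non-target classes."""
    target_score = scores[target_index]
    return sum(max(0.0, 1.0 + s - target_score)
               for i, s in enumerate(scores) if i != target_index)

# A correct but low-margin prediction is still penalized.
print(hinge_loss(0.5, 1))
# A confident correct prediction incurs no loss.
print(hinge_loss(2.0, 1))
# Only the class whose score comes within the margin contributes.
print(multiclass_hinge_loss([2.0, 1.5, -1.0], target_index=0))
```

In the multi-class call, the second class scores 1.5 against a target score of 2.0 and so violates the margin by 0.5, while the third class is far enough away to contribute nothing.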

4.3.3 Focal Loss

Focal loss was initially proposed for the dense object detection task as an alternative to CE loss. It enables training highly accurate dense object detectors on highly imbalanced datasets (Lin et al. 2017). For imbalanced text datasets, it handles class imbalance by calculating the ratio of each class and assigning weights based on whether an example is hard or easy (an inlier) (Tula et al. 2021; Ma et al. 2020).

In CE, even easily classified examples incur a loss of non-trivial magnitude. We define focal loss by adding a modulating factor to the cross entropy loss, with a tunable focusing parameter γ ≥ 0 (Lin et al. 2017), as:

$$FL(p_t) = -(1 - p_t)^{\gamma}\log(p_t)$$

where p ∈ [0,1] is the estimated probability of the model for a given class and $p_t$ is the probability that the model assigns to the true class. When γ = 0, the above formula reduces to CE. If γ > 1, the modulating factor reduces the loss for easily classified examples ($p_t$ ≥ 0.5), so that training concentrates on correcting misclassified examples.
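
A short numerical sketch shows how the modulating factor behaves. It assumes the unweighted form of focal loss from Lin et al. (2017) applied to the probability `p_t` of the true class. The default `gamma=2.0` is chosen for illustration only and is not a value reported for these experiments.

```python
import math

def cross_entropy(p_t):
    """Cross-entropy for the probability assigned to the true class."""
    return -math.log(p_t)

def focal_loss(p_t, gamma=2.0):
    """Focal loss: cross-entropy scaled by the modulating factor (1 - p_t)^gamma."""
    return -((1.0 - p_t) ** gamma) * math.log(p_t)

# Fraction of the cross-entropy loss that remains after modulation.
easy_ratio = focal_loss(0.9) / cross_entropy(0.9)   # well-classified example
hard_ratio = focal_loss(0.1) / cross_entropy(0.1)   # misclassified example
```

With γ = 2, the well-classified example keeps about 1% of its cross-entropy loss while the misclassified one keeps about 81%, so the hard examples dominate the gradient.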

4.3.4 Kullback Leibler Divergence Loss

The Kullback-Leibler Divergence (KLD) measures the difference in the probability distribution of two random variables (Kullback and Leibler 1951). Theoretically, a KLD score of 0 indicates that the two distributions are identical.

It is defined as “the average number of extra bits needed to encode the data, due to the fact that we used the distribution q to encode the data instead of the true distribution p” (Murphy 2012), and it is also known as relative entropy. It is generally used for models that have complex functions, such as Variational Autoencoders (VAEs) for text generation, rather than for simple multi-class classification tasks (Prokhorov et al. 2019; Asperti and Trentin 2020). Consider two probability distributions, P and Q. The main intuition behind KLD is that if the probability of an event under Q is small but that under P is large, then there is a large divergence. It can be used to compute the divergence between both discrete and continuous probability distributions (Brownlee 2019). For discrete distributions, it is computed as follows:

$$D_{KL}(P \Vert Q) = \sum\limits_{x} P(x)\log\frac{P(x)}{Q(x)}$$
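
For discrete distributions the divergence is a one-line sum. The sketch below is a minimal illustration that assumes both distributions are given as equal-length lists of probabilities and that `q` is non-zero wherever `p` is non-zero. The example distributions are made up.

```python
import math

def kl_divergence(p, q):
    """D_KL(P || Q) in nats for two discrete distributions.

    Terms with p_i == 0 contribute nothing; q_i must be positive elsewhere.
    """
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

peaked = [0.9, 0.1]
uniform = [0.5, 0.5]

same = kl_divergence(uniform, uniform)      # identical distributions
forward = kl_divergence(peaked, uniform)
backward = kl_divergence(uniform, peaked)
```

The two directions give different values, which reflects that KLD is not symmetric and therefore not a distance in the strict sense.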

5 Experiments and Evaluation

5.1 Experiments Setup

We use the pretrained models available on Huggingface Transformers (Wolf et al. 2020; see footnote 2), fine-tune them with PyTorch (Paszke et al. 2019; see footnote 3), a Python-based deep learning framework, and use Scikit-Learn (Pedregosa et al. 2011; see footnote 4) to evaluate the performance of the models. For MTL experiments, we take the naive weighted sum by assigning equal weights to the losses (Eigen and Fergus 2015). The hyperparameters we experimented with are listed in Table 7. The experiments were conducted by dividing the dataset into three parts: 80% for training, 10% for validation, and 10% for testing. The class-wise support of the test set is shown in Fig. 2. The experiments for all models were conducted on Google Colab (Bisong 2019).

- Epoch: As the pretrained LMs consist of hundreds of millions of parameters, we limit training to 5 epochs, owing to memory limitations (Pan and Yang 2009).

- Batch Size: Different batch sizes were used among [16, 32, 64].

- Optimizer: We use the Adam optimizer with weight decay (Loshchilov and Hutter 2019).

- Loss weights: We give equal importance to the two tasks and add the losses as shown in Formula (8).
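
The 80/10/10 partition described in this setup can be sketched as follows. This is an illustrative helper only: it assumes a plain random shuffle with a fixed seed, whereas the source does not state whether the split was stratified by class or how it was seeded. The function name and the seed value are hypothetical.

```python
import random

def split_dataset(examples, train_frac=0.8, val_frac=0.1, seed=42):
    """Shuffle once, then cut into train / validation / test partitions."""
    items = list(examples)
    random.Random(seed).shuffle(items)
    n_train = int(len(items) * train_frac)
    n_val = int(len(items) * val_frac)
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]          # remainder goes to the test set
    return train, val, test

# Integers stand in for (comment, label) pairs.
train, val, test = split_dataset(range(1000))
```

With heavily imbalanced classes, a stratified split would keep the class ratios comparable across the three partitions. That is a design choice this sketch deliberately leaves out.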

5.2 Text Preprocessing

Preprocessing is one of the important steps in any NLP system, especially for text classification tasks (Uysal and Gunal 2014). Comments on YouTube are grammatically inconsistent and contain emojis, mentions, hashtags, elongated words, expressions and URLs, which makes the process of tokenization quite strenuous (del Arco et al. 2021). To overcome these challenges we follow these steps:

- Converting the comments to lower case.

- The emojis are replaced by the words that they represent, such as happy or sad, among other emotions depicted by emojis. As emojis mainly depict the intention of a user, replacing them with their meanings allows the model to pick up these cues. We consider this necessary because the majority of the models are pretrained only on unlabelled text.

- URLs and other links are replaced by the word ‘URL’.

- Multiple spaces in a sentence and other special characters are removed, as they do not contribute significantly to the overall intention of a comment.
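
The steps above can be sketched as a single function. This is a minimal illustration under several assumptions: the two-entry `EMOJI_WORDS` map is a hypothetical stand-in for a full emoji lexicon, "special characters" is taken to mean ASCII punctuation only (so that combining vowel signs in Tamil, Malayalam or Kannada script are left intact), and the URL pattern is a simple heuristic.

```python
import re
import string

# Hypothetical emoji-to-word map; a real pipeline needs a far larger lexicon.
EMOJI_WORDS = {"\U0001F600": "happy", "\U0001F622": "sad"}
URL_PATTERN = re.compile(r"(https?://\S+|www\.\S+)")
# Map every ASCII punctuation character to a space.
PUNCT_TABLE = str.maketrans(string.punctuation, " " * len(string.punctuation))

def preprocess(comment):
    text = comment.lower()                      # 1. lower-case
    text = URL_PATTERN.sub("URL", text)         # 3. replace links by 'URL'
    for emoji, word in EMOJI_WORDS.items():     # 2. replace emojis by words
        text = text.replace(emoji, f" {word} ")
    text = text.translate(PUNCT_TABLE)          # 4. drop special characters
    text = re.sub(r"\s+", " ", text)            # 4. collapse repeated spaces
    return text.strip()

cleaned = preprocess("Super movie \U0001F600 https://youtu.be/abc   !!!")
```

Links are replaced before punctuation is stripped. Otherwise the URL would be broken into fragments that no longer match the pattern.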

5.3 Transfer Learning Fine-Tuning

We have implemented a wide range of pretrained language models by fine-tuning them for text classification. All of the models we use are pretrained on large corpora of unlabelled text. As we are dealing with code-mixed text, it is interesting to see how the models perform on Kannada, Malayalam, and Tamil: since all models are pretrained on either monolingual or multilingual corpora, the fine-tuned models are likely to have difficulty classifying code-mixed sentences. For the optimizer, we use Adam with decoupled weight decay (AdamW), which decouples the weight decay from the gradient update (Loshchilov and Hutter 2019; Kingma and Ba 2014). The first step is to use the pretrained tokenizer to cleave each sentence into tokens. Then, we add the special tokens needed for sentence classification ([CLS] at the first position and [SEP] at the end of the sentence, as shown in Fig. 3). In the figure, tokens T1, T2, ..., Tn represent the tokens obtained after tokenizing. After the special tokens are added, the tokenizer replaces each token with its id from the embedding table, which is a component we obtain from the pretrained model.
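
The encoding step can be illustrated with a toy example. The sketch below is not a pretrained tokenizer: whitespace splitting stands in for subword tokenization, and the six-entry `VOCAB` is a hypothetical vocabulary, whereas a real tokenizer ships its own vocabulary of tens of thousands of entries. It shows only where the special tokens go and how tokens are mapped to ids.

```python
# Hypothetical toy vocabulary, for illustration only.
VOCAB = {"[PAD]": 0, "[UNK]": 1, "[CLS]": 2, "[SEP]": 3, "padam": 4, "super": 5}

def encode(comment, vocab=VOCAB):
    """Wrap the tokens in [CLS] ... [SEP] and look up their ids."""
    tokens = ["[CLS]"] + comment.lower().split() + ["[SEP]"]
    ids = [vocab.get(tok, vocab["[UNK]"]) for tok in tokens]
    return tokens, ids

tokens, ids = encode("Padam super aayirukku")
```

The word that is missing from the toy vocabulary falls back to the [UNK] id. A subword tokenizer would instead break it into smaller known pieces, which matters for romanized code-mixed words that rarely appear whole in a pretrained vocabulary.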

BERT (Devlin et al. 2019) was originally pretrained on English texts and was later extended to mBERT (Pires et al. 2019), a language model pretrained on the Wikipedia dumps of the top 104 languages. mBERT accepts up to 512 input tokens, represents its output as a 768-dimensional vector, and uses 12 attention heads. It is worth noting that, as we are dealing with code-mixed texts, we have used both multilingual and monolingual models (pretrained on English) to analyse the improvements. Of the two mBERT models that are available, we use the BERT-base, Multilingual Cased checkpoint (see footnote 5) for our task. In MTL, we use distilbert-base-multilingual-cased for Tamil and bert-base-multilingual-cased for the Malayalam and Kannada datasets, based on their STL performance on the DravidianCodeMix dataset.

5.4 Results Analysis

This section presents a comprehensive analysis of the capabilities of several pretrained LMs. We have employed the popular metrics in NLP tasks, including Precision (P), Recall (R), F1-Score (F), weighted average, and macro-average. F1-Score is the harmonic mean of recall and precision, taking values between 0 and 1. The metrics are computed as follows,

Where c is the number of classes and:

- TP: True Positive examples are predicted to be positive and are positive;

- TN: True Negative examples are predicted to be negative and are negative;

- FP: False Positive examples are predicted to be positive but are negative;

- FN: False Negative examples are predicted to be negative but are positive.

The weighted-Average and Macro-Average are computed as follows:
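
As a worked illustration of these metrics, the sketch below computes per-class precision, recall and F1 from the TP, FP and FN counts, and then the macro and weighted averages of F1. It is a plain-Python sketch under assumptions: scores with a zero denominator are set to 0.0, and the five-sample label lists are invented to mimic a classifier that always predicts the majority class.

```python
def per_class_scores(y_true, y_pred, label):
    """Precision, recall and F1 for one class, treated as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1

def macro_and_weighted_f1(y_true, y_pred):
    """Unweighted mean of class F1 scores, and mean weighted by class support."""
    labels = sorted(set(y_true))
    f1s = [per_class_scores(y_true, y_pred, lab)[2] for lab in labels]
    supports = [y_true.count(lab) for lab in labels]
    macro = sum(f1s) / len(labels)
    weighted = sum(f * s for f, s in zip(f1s, supports)) / len(y_true)
    return macro, weighted

y_true = ["NO", "NO", "NO", "NO", "OU"]
y_pred = ["NO", "NO", "NO", "NO", "NO"]
macro, weighted = macro_and_weighted_f1(y_true, y_pred)
```

Here the minority class has an F1 of 0.0, yet the weighted F1 is about 0.71 while the macro F1 is about 0.44. This shows how a weighted average can remain high even when a low-support class is never predicted.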

We have evaluated the performance of the models on various metrics such as precision, recall, and F1-Score. Accuracy gives more importance to TP and TN, while disregarding FN and FP. The F1-Score is the harmonic mean of precision and recall and therefore takes both FP and FN into account. Due to the persistent class imbalance in our datasets, we use the weighted F1-Score as the evaluation metric. The benefits of MTL are manifold, as the two tasks are related to each other. MTL offers several advantages, such as improved data efficiency, reduced overfitting, and faster learning by leveraging auxiliary representations (Ruder 2017). As a result, MTL allows us to have a single shared model in lieu of training independent models per task (Dobrescu et al. 2020). Hard parameter sharing involves sharing model weights between multiple tasks, which are trained jointly to minimize multiple losses. In soft parameter sharing, by contrast, the individual task-specific models have different weights, and the distance between the parameters of the task-specific models is added to the objective that has to be optimized (Crawshaw 2020). We intend to experiment with MTL to achieve a slight improvement in the performance of the model, along with reduced time and space requirements in hard parameter sharing (Table 2).

Apart from the pretrained LMs shown in Tables 3, 4, and 5, we have tried other domain-specific LMs. IndicBERT (Kakwani et al. 2020) is an ALBERT-based LM, released alongside fastText-based word embeddings, that is trained on 11 Indian languages along with English and is a multilingual model similar to mBERT (Pires et al. 2019). IndicBERT was pretrained on IndicCorp (Kakwani et al. 2020), the sentences of which were tokenized using a SentencePiece tokenizer (Kudo and Richardson 2018). However, when IndicBERT was fine-tuned for sentiment analysis and offensive language identification, the model performed very poorly, despite being pretrained on 12 languages including Kannada, Malayalam, Tamil, and English. We believe that one of the main reasons for its poor performance, despite being pretrained on a large corpus, lies in the architecture of the model, an issue that was also encountered by Puranik et al. (2021). Even though IndicBERT was pretrained in a multilingual setting, it follows the architecture of ALBERT with the standard masked language modeling objective. We believe that the cross-layer parameter sharing of ALBERT hinders its performance in a multilingual setting (when it is pretrained on more than one language), since ALBERT is designed primarily to reduce spatial complexity and training time (Lan et al. 2019).

We have also experimented with Multilingual Representations for Indian Languages (MuRIL), an LM that, like IndicBERT, was pretrained on Indian languages but differs in its pretraining strategy and the corpus used (Khanuja et al. 2021). It follows the architecture of the BERT-base encoder; however, it is trained on two objectives, masked language modeling and transliterated language modeling (TLM). Unlike the former objective, TLM leverages parallel data. In spite of having a TLM objective, the model performed worse than BERT-base, which is the base encoder model for MuRIL. Hence, we have not tabulated the classification reports of MuRIL and IndicBERT. We analyse the performance of the models on the different datasets separately.

5.4.1 Kannada

Tables 3 and 6 present the classification reports of the models on the Kannada dataset. We opted to train the STL models with CE loss, which is a popular choice among loss functions for classification (De Boer et al. 2005). We observe that mBERT (multilingual BERT) achieves the highest weighted F1-Score among the models, as shown in Table 3.

mBERT achieved a weighted F1-Score of 0.591 for sentiment analysis and 0.686 for offensive language identification. In spite of the highest weighted average F1-Score, some classes performed very poorly, with an F1-Score of 0.0. In offensive language identification, most of the models perform poorly on three classes: Offensive Untargeted, Offensive Targeted Group, and Offensive Targeted Others. One of the main reasons could be the low support of these classes in the test set. Offensive Untargeted has a support of 27, Offensive Targeted Group has 44, and Offensive Targeted Others has a mere 14, out of a total test set support of 728. The ratio of the majority class to the minority class is very severe (407:14), which is essentially why the F1-Scores of Not Offensive and Offensive Targeted Individual are much higher than those of the low-resourced classes. Among monolingual LMs, CharacterBERT performs on par with mBERT. It is interesting to note that we use general-character-bert (see footnote 6), an LM that was pretrained only on English Wikipedia and OpenWebText (Liu and Curran 2006). We believe that the approach employed in CharacterBERT, which attends to the characters to obtain a single word embedding as opposed to the wordpiece tokenization in BERT (illustrated in Fig. 6), is one of the reasons why CharacterBERT outperforms other monolingual models such as RoBERTa, ALBERT, and XLNet, even though those models are pretrained on more data and with better strategies.

To our surprise, XLM-RoBERTa, a multilingual LM pretrained on the top 100 languages, performed the worst among the models. One of the main reasons for the low performance of XLM-RoBERTa (-20%) could be the low support of the test set. However, due to the nature of the language, it would be hard to collect more data. Another reason for the poor performance lies in the architecture of the RoBERTa models, upon which XLM-R is based. Unlike the conventional wordpiece (Schuster and Nakajima 2012) and Unigram tokenizers (Kudo 2018), RoBERTa uses a byte-level BPE tokenizer (Sennrich et al. 2016). However, BPE tends to have poor morphological alignment with the original text (Jain et al. 2020). As Dravidian languages are morphologically rich (Tanwar and Majumder 2020), this approach results in poor performance on sentiment analysis and offensive language identification. DistilBERT scores higher than the other multilingual models such as XLM and XLM-R, in spite of having far fewer parameters than large LMs such as XLM-R base (66M vs 270M). Among all models, however, mBERT had the best overall scores in both tasks. Hence, we used mBERT for the MTL models, training it with hard parameter sharing and soft parameter sharing.

We have fine-tuned mBERT with these loss functions, as it outperformed the other pretrained LMs when trained with CE. We have employed four loss functions. The highest weighted F1-Score for sentiment analysis was 0.602, achieved when mBERT was trained with the soft parameter sharing strategy and CE as the loss function, an increase of 0.011 over STL. However, the second task scored 0.672, which is lower than the STL score (-0.014 F1-Score). As we take the naive sum of the losses when training in a multi-task setting, the loss of one task suppresses that of the other, so that only one task benefits substantially from the approach (Maninis and Radosavovic 2019). It is nevertheless worth noting that the approach achieved a competitive weighted F1-Score on the second task, even if it did not exceed the STL score. The highest F1-Score for offensive language identification was 0.700, achieved by training mBERT with the hard parameter sharing strategy and CE as its loss function. However, the same suppression of performance on the other task was observed, as it scored 0.590. It can be observed that only two of the classes scored 0.0 in MTL, in contrast to three in STL. When both models were trained with KLD as the loss function, the performance was abysmal, and they were only able to classify a single class in the respective tasks (Positive and Not Offensive). When trained with Hinge Loss and Focal Loss, the models attend to the class imbalance. Overall, we observe that MTL frameworks tend to perform slightly better than models trained on the individual tasks.

Figure 9 represents the training accuracy of sentiment analysis and offensive language identification in an MTL scenario. It can be observed from the graph that there is a bigger jump for sentiment analysis after the first epoch, in contrast to offensive language identification, where the accuracy increases steadily. Figure 10a and b show the confusion matrices of the best performing model for the respective tasks. For sentiment analysis, we observe that many labels are misclassified. The same trend can be observed in offensive language identification, where the samples are classified into only four classes (instead of six), with no samples being classified as OTO or OU. The samples of OTO and OU are misclassified into other classes, mainly due to the low support of these classes in the test set (Table 7).

5.4.2 Malayalam

Tables 4 and 8 present the classification reports of the models for STL and MTL, respectively. Among the STL models, fine-tuning mBERT with CE gave a weighted F1-Score of 0.635 for sentiment analysis and 0.902 for offensive language identification. DistilBERT outperforms mBERT on offensive language identification; however, it scores poorly on sentiment analysis. DistilBERT reduces the occurrence of misclassification, as it is able to classify four out of the five classes, unlike mBERT, which correctly classifies two out of five classes in offensive language identification. Even though XLM has higher class-wise F1-Scores for both tasks, it performed very poorly when trained in an MTL setting. This could be due to the pretraining strategy of XLM, as it was only pretrained on Wikipedia, which does not improve the performance on low-resource languages. In addition, acquiring parallel data for TLM during pretraining can be very challenging (Grégoire and Langlais 2018). Several classes have scored 0.0 for class-wise F1-Scores due to low support in the test set (class imbalance). Since mBERT is the strongest model overall, we use it for the MTL experiments.

We observe that mBERT fine-tuned with the hard parameter sharing strategy and CE as its loss achieved the highest score of 0.668 (+3.3% over STL) in sentiment analysis, while its secondary task scored 0.905 (+0.3%). Although the latter is not the best weighted F1-Score for that task, it outperformed the STL result of mBERT. When mBERT is trained using soft parameter sharing with Focal Loss as its loss function, it attains the highest weighted F1-Score for offensive language identification (+0.6%), while scoring 0.651 (+1.6%) on sentiment analysis. We also observe that the performance of KLD is very poor, which could be due to the nature of the loss function. KLD is likely to treat this multi-class classification problem as a regression problem, hence predicting the single class that has the highest support. Although it has previously been used for classification problems (Nakov et al. 2016), in our case the loss performs worse than the baseline algorithms (Chakravarthi et al. 2020). It is to be noted that the increase in performance is larger for sentiment analysis than for offensive language identification. The time required for training, along with the memory constraints, are among the reasons why we opt for MTL.

Figure 11 represents the training accuracy of both tasks for the best performing model. After the first epoch, there is a steady increase for both tasks. However, after the third epoch, we do not see any improvement in the performance of either task. Figure 12 reports the confusion matrices of the best performing models (in MTL). For sentiment analysis, we observe that most of the samples of Mixed Feelings are misclassified, which is mainly due to the low support in the training and test sets, as observed in Fig. 12a. For offensive language identification, the model tends to classify all of the samples in the test set into two classes, NO and OL. As stated previously, the low support of the other classes during training is why the model misclassifies their labels.

5.4.3 Tamil

Table 2 gives an insight into the support of the classes in the test set. The support of Offensive Targeted Others is quite low in contrast to Not Offensive (52:3148). As shown in Table 5, DistilmBERT is the best performing model. In spite of being a smaller, distilled form of BERT, it performs better than the parent model. Even though DistilBERT was reported to retain 97% of BERT’s accuracy, it has previously been observed to outperform BERT (Maslej-Krešňáková et al. 2020), mainly due to the custom triple loss function and fewer parameters (Lan et al. 2019). Its weighted average F1-Score is 0.614 for sentiment analysis and 0.743 for offensive language identification, both trained with CE. Even though BERT achieves competitive performance, three out of six classes have an F1-Score of 0.0 due to misclassification. Hence, we train DistilmBERT on MTL. We observe that XLM has also performed better than BERT and XLM-RoBERTa.

It can be observed from Table 9 that DistilmBERT, when trained using the hard parameter sharing strategy with CE loss, yields the best results for offensive language identification, with a weighted F1-Score of 0.753 (+1.0%), and scores 0.614 on sentiment analysis (+/-0.0%). However, the best score for sentiment analysis was achieved by training DistilmBERT with the soft parameter sharing strategy and Focal Loss. It achieved a score of 0.625 (+1.1%) on sentiment analysis and 0.745 (+0.2%) on offensive language identification. It is to be noted that training with both CE and FL achieves better results than the best scores set by the STL model. The Hinge Loss function helps achieve better results for precision and recall. During training, the accuracy of sentiment analysis increases at a greater rate than that of offensive language identification, as displayed in Fig. 13. Figure 14 displays the class-wise predictions of DistilmBERT trained with hard parameter sharing and CE. Most of the samples of the NO class are predicted correctly, with the majority of the rest being mislabeled as OU. Due to low support in the training and test sets, no samples of OTO have been predicted correctly. Most of the samples of the other classes are incorrectly predicted as OU, which is mainly due to the close interconnection among the classes. In sentiment analysis, a significant number of samples of the Positive and Negative classes have been predicted as Neutral. This is also observed for Mixed Feelings (MF), where most of the samples have been misclassified as Positive or Negative. The misclassification is mainly due to the nature of the class, as Mixed Feelings (MF) samples can resemble either of the two classes.

6 Conclusion

Despite the rising popularity of social media, code-mixed data in Dravidian languages remains scarce, which motivated us to develop MTL frameworks for sentiment analysis and offensive language identification in three code-mixed Dravidian corpora, namely Kannada, Malayalam, and Tamil. The proposed approach of fine-tuning multilingual BERT with hard parameter sharing and cross entropy loss yields the best performance on both tasks in Kannada and Malayalam, achieving competitive scores compared with treating the two tasks separately. For Tamil, our approach of fine-tuning multilingual DistilBERT with soft parameter sharing and cross entropy loss scores better than the other models, mainly due to the triple loss function employed during its pretraining. The performance of these models highlights the advantages of using an MTL model to attend to two tasks at a time: it reduces the training time and the space required compared with training the models separately. For future work, we intend to use uncertainty weighting to calculate the impact of one loss function on the other during MTL.
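As a rough illustration of the uncertainty weighting mentioned as future work, the sketch below uses one commonly used formulation in which each task loss L_i is scaled by exp(-s_i) and regularized by s_i, where s_i = log(sigma_i^2) would be a learnable parameter. This is an assumption about the form such weighting might take, not a description of the authors' planned method; the function name and all loss values are hypothetical.

```python
import math


def uncertainty_weighted_loss(task_losses, log_vars):
    """Combine task losses as sum_i exp(-s_i) * L_i + s_i, with s_i = log(sigma_i^2)."""
    return sum(math.exp(-s) * loss + s for loss, s in zip(task_losses, log_vars))


# Hypothetical per-batch losses for sentiment analysis and offensive language identification.
sa_loss, oli_loss = 0.9, 0.4

# With s_i = 0 for both tasks, the objective reduces to the plain sum of the losses.
equal = uncertainty_weighted_loss([sa_loss, oli_loss], [0.0, 0.0])

# Raising s for the first task down-weights its loss but pays the additive penalty s.
down_weighted = uncertainty_weighted_loss([sa_loss, oli_loss], [1.0, 0.0])

print(f"equal weights: {equal:.4f}, first task down-weighted: {down_weighted:.4f}")
```

In an actual training loop the s_i values would be optimized jointly with the model weights rather than fixed by hand as they are here.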

References

Anagha M, Kumar RR, Sreetha K, Raj PR (2015) Fuzzy logic based hybrid approach for sentiment analysis of Malayalam movie reviews. In: 2015 IEEE International conference on signal processing, informatics, communication and energy systems (SPICES), IEEE, pp 1–4

Appidi AR, Srirangam VK, Suhas D, Shrivastava M (2020) Creation of corpus and analysis in code-mixed Kannada-English Twitter data for emotion prediction. In: Proceedings of the 28th international conference on computational linguistics, International Committee on Computational Linguistics, Barcelona, Spain (Online), pp 6703–6709. https://www.aclweb.org/anthology/2020.coling-main.587

del Arco FMP, Molina-González MD, Ureña-López LA, Martín-Valdivia MT (2021) Comparing pre-trained language models for Spanish hate speech detection. Expert Syst Appl 166:114120. https://doi.org/10.1016/j.eswa.2020.114120

Asperti A, Trentin M (2020) Balancing reconstruction error and Kullback-Leibler divergence in variational autoencoders. IEEE Access 8:199440–199448. https://doi.org/10.1109/ACCESS.2020.3034828

Bali K, Sharma J, Choudhury M, Vyas Y (2014) “I am borrowing ya mixing ?” an analysis of English-Hindi code mixing in Facebook. In: Proceedings of the First workshop on computational approaches to code switching, Association for Computational Linguistics, Doha, Qatar, pp 116–126. https://doi.org/10.3115/v1/W14-3914, https://www.aclweb.org/anthology/W14-3914

Banerjee S, Chakravarthi BR, McCrae JP (2020) Comparison of pretrained embeddings to identify hate speech in Indian code-mixed text. In: 2020 2nd international conference on advances in computing, communication control and networking (ICACCCN), IEEE, pp 21–25

Barman U, Das A, Wagner J, Foster J (2014) Code mixing: A challenge for language identification in the language of social media. In: Proceedings of the First workshop on computational approaches to code switching, Association for Computational Linguistics, Doha, Qatar, pp 13–23. https://doi.org/10.3115/v1/W14-3902, https://www.aclweb.org/anthology/W14-3902

Bhat S (2012) Morpheme segmentation for Kannada standing on the shoulder of giants. In: Proceedings of the 3rd workshop on south and southeast asian natural language processing, The COLING 2012 Organizing Committee, Mumbai, India, pp 79–94. https://www.aclweb.org/anthology/W12-5007

Bisong E (2019) Google Colaboratory. Apress, Berkeley, CA, pp 59–64. https://doi.org/10.1007/978-1-4842-4470-8_7

Brownlee J (2019) How to calculate the KL divergence for machine learning. Available at http://machinelearningmastery.com/divergence-between-probability-distributions/l

Caruana R (1997) Multitask learning. Mach Learn 28(1):41–75. https://doi.org/10.1023/A:1007379606734

Chakravarthi BR (2020) HopeEDI: A multilingual hope speech detection dataset for equality, diversity, and inclusion. In: Proceedings of the Third workshop on computational modeling of people’s opinions, personality, and emotion’s in social media, Association for Computational Linguistics, Barcelona, Spain (Online), pp 41–53. https://www.aclweb.org/anthology/2020.peoples-1.5

Chakravarthi BR, Jose N, Suryawanshi S, Sherly E, McCrae JP (2020) A sentiment analysis dataset for code-mixed Malayalam-English. In: Proceedings of the 1st joint workshop on spoken language technologies for under-resourced languages (SLTU) and collaboration and computing for under-resourced languages (CCURL), European Language Resources association, Marseille, France, pp 177–184. https://www.aclweb.org/anthology/2020.sltu-1.25

Chakravarthi BR, Muralidaran V, Priyadharshini R, McCrae JP (2020) Corpus creation for sentiment analysis in code-mixed Tamil-English text. In: Proceedings of the 1st joint workshop on spoken language technologies for under-resourced languages (SLTU) and collaboration and computing for under-resourced languages (CCURL), European Language Resources association, Marseille, France, pp 202–210. https://www.aclweb.org/anthology/2020.sltu-1.28

Chakravarthi BR, Priyadharshini R, Jose NMAK, Mandl T, Kumaresan PK, Ponnusamy RVH, McCrae JP, Sherly E (2021) Findings of the shared task on Offensive Language Identification in Tamil, Malayalam, and Kannada. In: Proceedings of the First workshop on speech and language technologies for Dravidian languages. Association for Computational Linguistics

Chakravarthi BR, Priyadharshini R, Muralidaran V, Jose N, Suryawanshi S, Sherly E, McCrae JP (2021) DravidianCodeMix: Sentiment analysis and offensive language identification dataset for Dravidian languages in code-mixed text. Language Resources and Evaluation

Changpinyo S, Hu H, Sha F (2018) Multi-task learning for sequence tagging: An empirical study. In: Proceedings of the 27th international conference on computational linguistics, Association for Computational Linguistics, Santa Fe, New Mexico, USA, pp 2965–2977. https://www.aclweb.org/anthology/C18-1251

Chhablani G, Bhartia Y, Sharma A, Pandey H, Suthaharan S (2021) NLRG at SemEval-2021 task 5: Toxic spans detection leveraging BERT-based token classification and span prediction techniques

Clarke I, Grieve J (2017) Dimensions of abusive language on Twitter. In: Proceedings of the First workshop on abusive language online, Association for Computational Linguistics, Vancouver, BC, Canada, pp 1–10. https://doi.org/10.18653/v1/W17-3001, https://www.aclweb.org/anthology/W17-3001

Conneau A, Khandelwal K, Goyal N, Chaudhary V, Wenzek G, Guzmán F, Grave E, Ott M, Zettlemoyer L, Stoyanov V (2020) Unsupervised cross-lingual representation learning at scale. In: Proceedings of the 58th annual meeting of the association for computational linguistics, Association for Computational Linguistics, Online, pp 8440–8451. https://doi.org/10.18653/v1/2020.acl-main.747, https://www.aclweb.org/anthology/2020.acl-main.747

Crawshaw M (2020) Multi-task learning with deep neural networks: A survey. arXiv:2009.09796

Dadvar M, Trieschnigg D, Ordelman R, de Jong F (2013) Improving cyberbullying detection with user context. In: Serdyukov P, Braslavski P, Kuznetsov SO, Kamps J, Rüger S, Agichtein E, Segalovich I, Yilmaz E (eds) Advances in information retrieval. Springer, Berlin, Heidelberg, pp 693–696

Dai Z, Yang Z, Yang Y, Carbonell J, Le Q, Salakhutdinov R (2019) Transformer-XL: Attentive language models beyond a fixed-length context. In: Proceedings of the 57th annual meeting of the association for computational linguistics, Association for Computational Linguistics, Florence, Italy, pp 2978–2988. https://doi.org/10.18653/v1/P19-1285, https://www.aclweb.org/anthology/P19-1285

Dai Z, Yang Z, Yang Y, Carbonell JG, Le QV, Salakhutdinov R (2019) Transformer-XL: Attentive language models beyond a fixed-length context. arXiv:1901.02860

Das A, Bandyopadhyay S (2010) SentiWordNet for Indian languages. In: Proceedings of the Eighth workshop on asian language resouces, Coling 2010 Organizing Committee, Beijing, China, pp 56–63. https://www.aclweb.org/anthology/W10-3208

Das A, Gambäck B (2014) Identifying languages at the word level in code-mixed Indian social media text. In: Proceedings of the 11th international conference on natural language processing, NLP Association of India, Goa, India, pp 378–387. https://www.aclweb.org/anthology/W14-5152

De Boer PT, Kroese DP, Mannor S, Rubinstein RY (2005) A tutorial on the cross-entropy method. Annals of Operations Research 134(1):19–67

Deng J, Dong W, Socher R, Li LJ, Li K, Fei-Fei L (2009) ImageNet: A large-scale hierarchical image database. In: 2009 IEEE Conference on computer vision and pattern recognition, pp 248–255. https://doi.org/10.1109/CVPR.2009.5206848

Devlin J, Chang MW, Lee K, Toutanova K (2019) BERT: Pre-training of deep bidirectional transformers for language understanding. In: Proceedings of the 2019 conference of the North American chapter of the association for computational linguistics: human language technologies, Volume 1 (Long and Short Papers), Association for Computational Linguistics, Minneapolis, Minnesota, pp 4171–4186. https://doi.org/10.18653/v1/N19-1423, https://www.aclweb.org/anthology/N19-1423

Djuric N, Zhou J, Morris R, Grbovic M, Radosavljevic V, Bhamidipati N (2015) Hate speech detection with comment embeddings. In: Proceedings of the 24th international conference on world wide web, pp 29–30

Dobrescu A, Giuffrida MV, Tsaftaris SA (2020) Doing more with less: a multitask deep learning approach in plant phenotyping. Frontiers in plant science 11

Duong L, Cohn T, Bird S, Cook P (2015) Low resource dependency parsing: Cross-lingual parameter sharing in a neural network parser. In: Proceedings of the 53rd annual meeting of the association for computational linguistics and the 7th international joint conference on natural language processing (Volume 2: Short Papers), Association for Computational Linguistics, Beijing, China, pp 845–850. https://doi.org/10.3115/v1/P15-2139, https://www.aclweb.org/anthology/P15-2139

Eigen D, Fergus R (2015) Predicting depth, surface normals and semantic labels with a common multi-scale convolutional architecture. In: 2015 IEEE International conference on computer vision (ICCV), pp 2650–2658

El Boukkouri H, Ferret O, Lavergne T, Noji H, Zweigenbaum P, Tsujii J (2020) CharacterBERT: Reconciling ELMo and BERT for word-level open-vocabulary representations from characters. In: Proceedings of the 28th international conference on computational linguistics, International Committee on Computational Linguistics, Barcelona, Spain (Online), pp 6903–6915. https://www.aclweb.org/anthology/2020.coling-main.609

Ghanghor NK, Krishnamurthy P, Thavareesan S, Priyadharshini R, Chakravarthi BR (2021) IIITK@DravidianLangTech-EACL2021: Offensive Language Identification and Meme Classification in Tamil, Malayalam and Kannada. In: Proceedings of the First workshop on speech and language technologies for Dravidian languages. Association for Computational Linguistics, Online

Grégoire F, Langlais P (2018) Extracting parallel sentences with bidirectional recurrent neural networks to improve machine translation. In: Proceedings of the 27th international conference on computational linguistics, Association for Computational Linguistics, Santa Fe, New Mexico, USA, pp 1442–1453. https://www.aclweb.org/anthology/C18-1122

Hande A, Priyadharshini R, Chakravarthi BR (2020) KanCMD: Kannada CodeMixed dataset for sentiment analysis and offensive language detection. In: Proceedings of the Third workshop on computational modeling of people’s opinions, personality, and emotion’s in social media, Association for Computational Linguistics, Barcelona, Spain (Online), pp 54–63. https://www.aclweb.org/anthology/2020.peoples-1.6

Jain K, Deshpande A, Shridhar K, Laumann F, Dash A (2020) Indic-transformers: An analysis of transformer language models for Indian languages

Jin N, Wu J, Ma X, Yan K, Mo Y (2020) Multi-task learning model based on multi-scale CNN and LSTM for sentiment classification. IEEE Access 8:77060–77072. https://doi.org/10.1109/ACCESS.2020.2989428

Kakwani D, Kunchukuttan A, Golla SNCG, Bhattacharyya A, Khapra MM, Kumar P (2020) IndicNLPSuite: Monolingual corpora, evaluation benchmarks and pre-trained multilingual language models for Indian languages. In: Findings of the association for computational linguistics: EMNLP 2020, Association for Computational Linguistics, Online, pp 4948–4961. https://doi.org/10.18653/v1/2020.findings-emnlp.445, https://www.aclweb.org/anthology/2020.findings-emnlp.445

Khanuja S, Bansal D, Mehtani S, Khosla S, Dey A, Gopalan B, Margam DK, Aggarwal P, Nagipogu RT, Dave S et al (2021) MuRIL: Multilingual representations for Indian languages. arXiv:2103.10730

Kingma DP, Ba J (2014) Adam: A method for stochastic optimization. arXiv:1412.6980

Kuchaiev O, Ginsburg B (2017) Factorization tricks for LSTM networks. arXiv:1703.10722